Empowering Smart Soybean Farming with Deep Learning: Progress, Challenges, and Future Perspectives

Abstract

1. Introduction

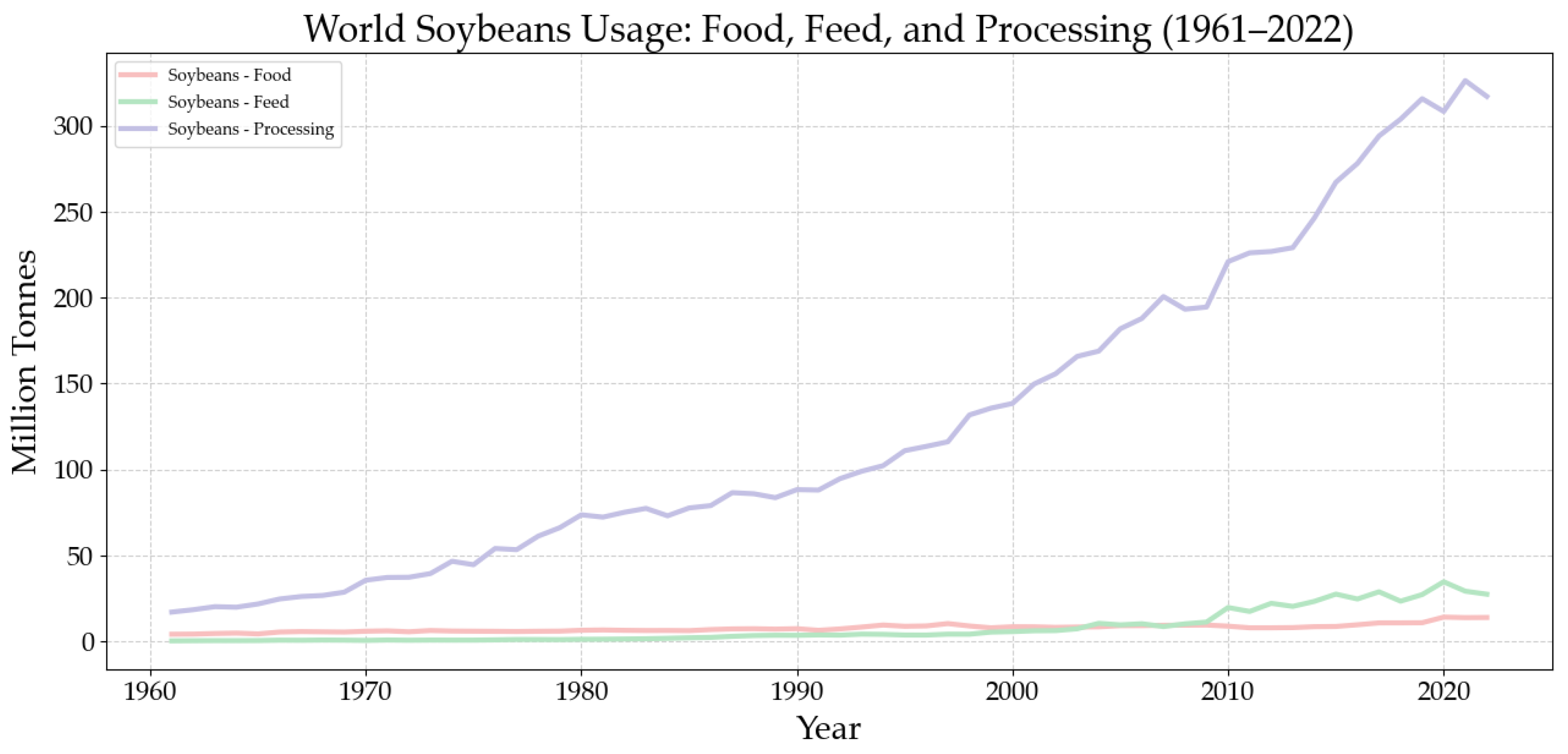

2. Literature Search and Selection Strategy Based on PRISMA

3. Soybean Industry Chain and the Demand for Intelligent Development

- High-throughput agriculture relies on phenotyping platforms that combine multi-source sensing (e.g., UAVs, LiDAR, multispectral imaging) with AI algorithms to automate the acquisition and analysis of crop and environmental data. Examples include LQ-FieldPheno [46], HT3P [47], and UIISScan 1.1 [48], which have improved the efficiency and precision of crop monitoring.

- Automation seeks to minimize human intervention in agricultural tasks through deep learning-based autonomous systems, thereby reducing labor costs and improving management efficiency and decision accuracy [49].

- Precision decision-making is driven by high-resolution, real-time agricultural data analyzed through advanced deep learning models. Techniques such as convolutional neural networks, combined with IoT, remote sensing, and transfer learning, have been employed to optimize machinery operations and decision-making processes [50,51].
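As an illustration of the kind of automated analysis such platforms perform, the sketch below computes the normalized difference vegetation index (NDVI), a widely used multispectral index, from near-infrared (NIR) and red reflectance, then flags pixels whose NDVI falls below a threshold. NDVI, the 0.4 threshold, the toy reflectance values, and the function names are assumptions chosen for illustration; they are not taken from LQ-FieldPheno, HT3P, or UIISScan.

```python
from typing import List

Grid = List[List[float]]

def ndvi(nir: Grid, red: Grid, eps: float = 1e-9) -> Grid:
    """Per-pixel NDVI = (NIR - Red) / (NIR + Red); eps guards against division by zero."""
    return [
        [(n - r) / (n + r + eps) for n, r in zip(nir_row, red_row)]
        for nir_row, red_row in zip(nir, red)
    ]

def low_vigor_mask(index: Grid, threshold: float = 0.4) -> List[List[bool]]:
    """Flag pixels whose index is below a hypothetical vigor threshold."""
    return [[v < threshold for v in row] for row in index]

# Toy 2x2 reflectance patches (hypothetical values in [0, 1]).
nir = [[0.60, 0.55], [0.30, 0.50]]
red = [[0.08, 0.10], [0.20, 0.05]]

vi = ndvi(nir, red)
mask = low_vigor_mask(vi)
print([[round(v, 3) for v in row] for row in vi])
print(mask)
```

In a real pipeline, such hand-crafted indices are often used as inputs to, or baselines for, the deep learning models discussed in this review.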

4. The Applications of Deep Learning in Soybean Production

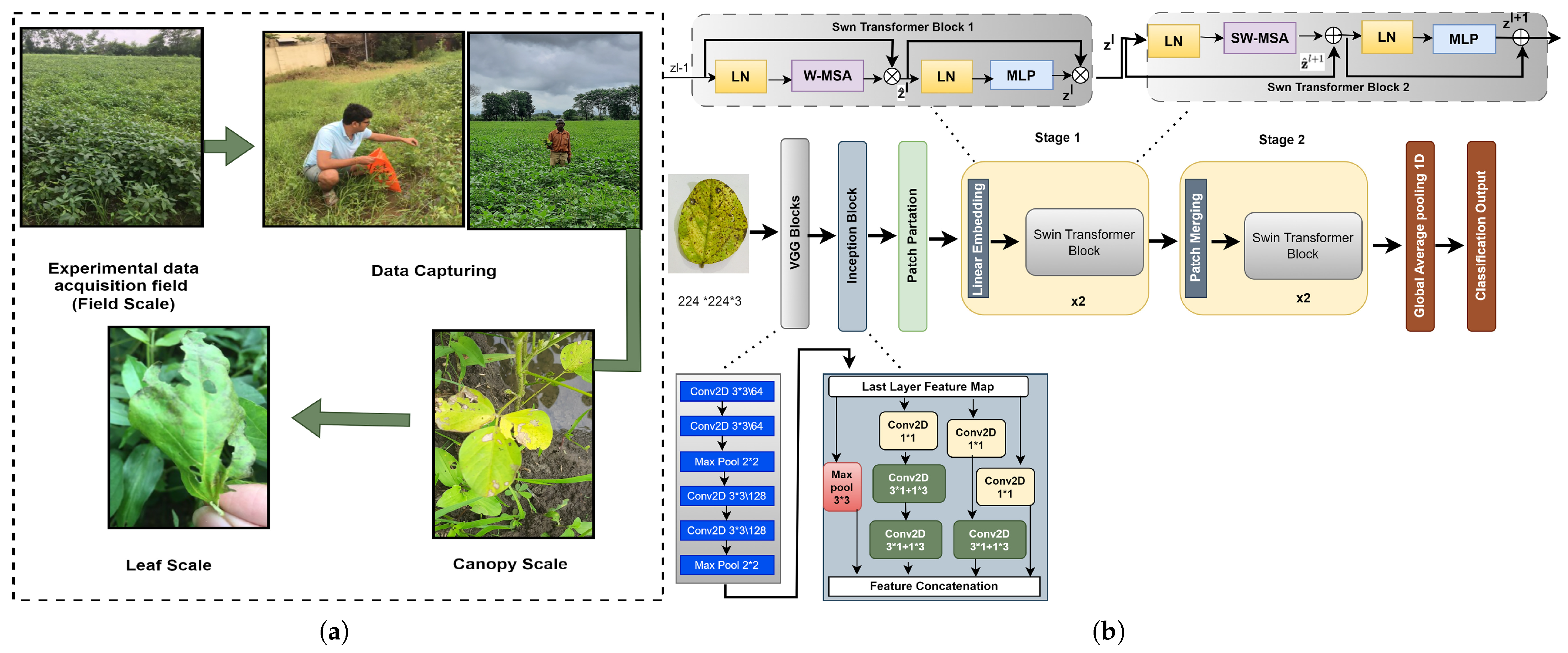

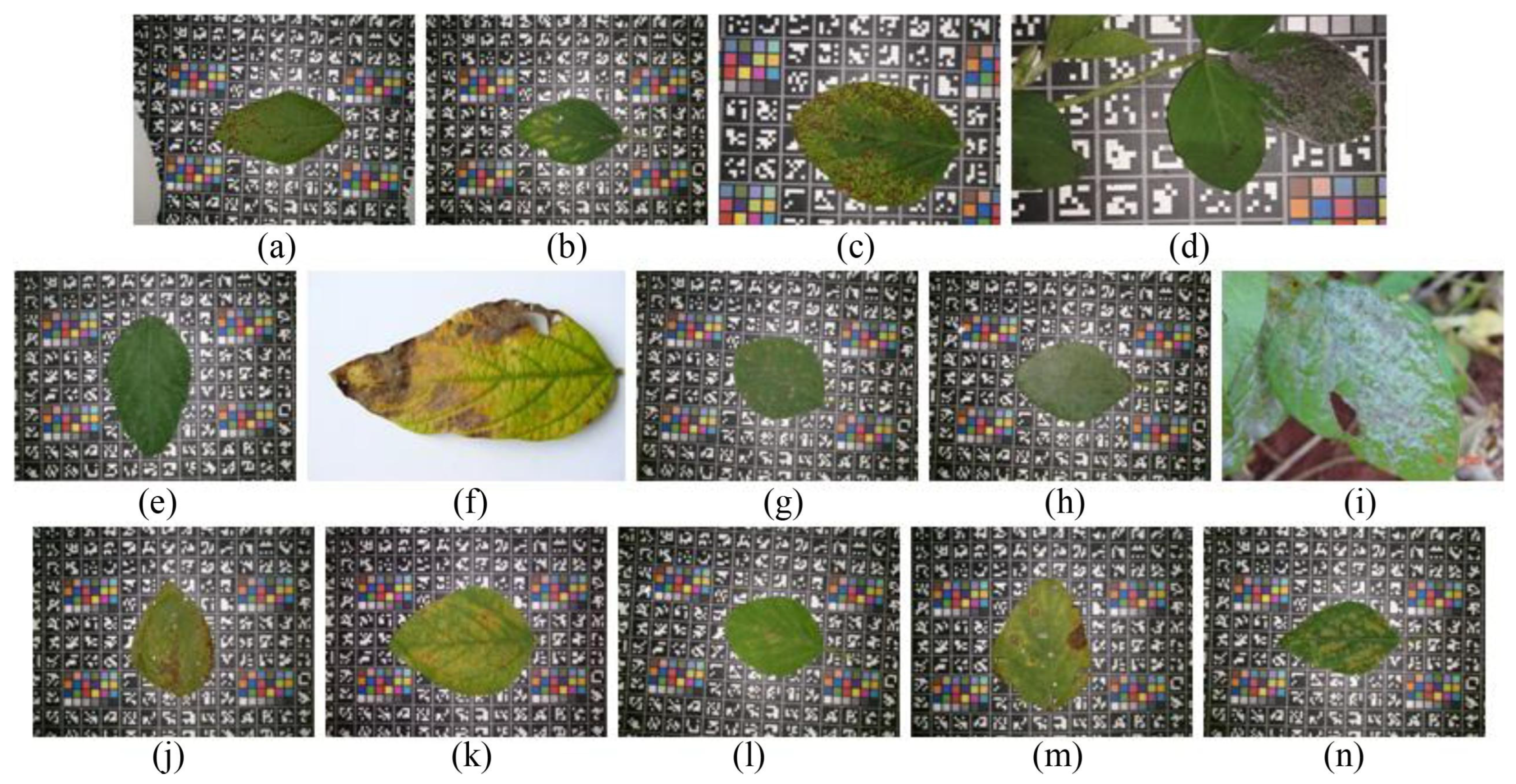

4.1. Diagnosis of Soybean Diseases and Pests

- Limited model generalizability, which hampers adaptability to variations in region, climate, and field conditions;

- Underdeveloped multimodal data fusion frameworks, with an over-reliance on RGB imagery and insufficient integration of hyperspectral, thermal infrared, and environmental sensor data;

- Lack of open-access, high-quality, and standardized image repositories for soybean pest and disease detection, restricting model reproducibility and comparative evaluation;

- Insufficiently optimized trade-offs between deployment requirements and energy consumption, which limit practicality and scalability.

- Development of multimodal recognition frameworks that integrate RGB, hyperspectral, thermal imaging, and LiDAR data to enhance model adaptability across diverse agricultural scenarios;

- Incorporation of multi-temporal remote sensing and UAV imagery to enable dynamic modeling and early warning of pest and disease progression over time;

- Advancement of lightweight models for edge deployment, tailored to meet the agricultural sector’s requirements for low cost, low energy consumption, and minimal latency;

- Construction of standardized and diversified soybean pest and disease image databases to improve model transferability and robustness across different environments;

- Promotion of cross-disciplinary research, such as integrating generative adversarial networks (GANs), digital twins, simulation-to-real (sim2real) transfer, and domain adaptation techniques into agricultural vision tasks, to mitigate high annotation costs and sample imbalance.
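One simple way to combine modalities, shown here only as a sketch, is decision-level (late) fusion: each modality-specific model outputs class scores, which are converted to probabilities and averaged with per-modality weights. The class names, logits, and weights below are hypothetical placeholders; feature-level fusion inside a single network is a common alternative.

```python
import math
from typing import Dict, List

def softmax(logits: List[float]) -> List[float]:
    """Numerically stable softmax (subtracts the max logit before exponentiating)."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def late_fusion(logits_by_modality: Dict[str, List[float]],
                weights: Dict[str, float]) -> List[float]:
    """Weighted average of per-modality class probabilities (weights are renormalized)."""
    total_w = sum(weights[m] for m in logits_by_modality)
    n_classes = len(next(iter(logits_by_modality.values())))
    fused = [0.0] * n_classes
    for modality, logits in logits_by_modality.items():
        w = weights[modality] / total_w
        for i, p in enumerate(softmax(logits)):
            fused[i] += w * p
    return fused

classes = ["healthy", "rust", "frogeye_leaf_spot"]  # hypothetical label set
logits = {  # hypothetical outputs of three modality-specific models
    "rgb": [2.0, 1.5, 0.1],
    "hyperspectral": [0.5, 2.5, 0.2],
    "thermal": [1.0, 1.2, 0.3],
}
weights = {"rgb": 0.4, "hyperspectral": 0.4, "thermal": 0.2}

fused = late_fusion(logits, weights)
print(classes[fused.index(max(fused))])
```

Here the RGB model alone would favor "healthy", while the fused decision favors "rust" because the hyperspectral and thermal models agree; this is the kind of complementarity that multimodal frameworks aim to exploit.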

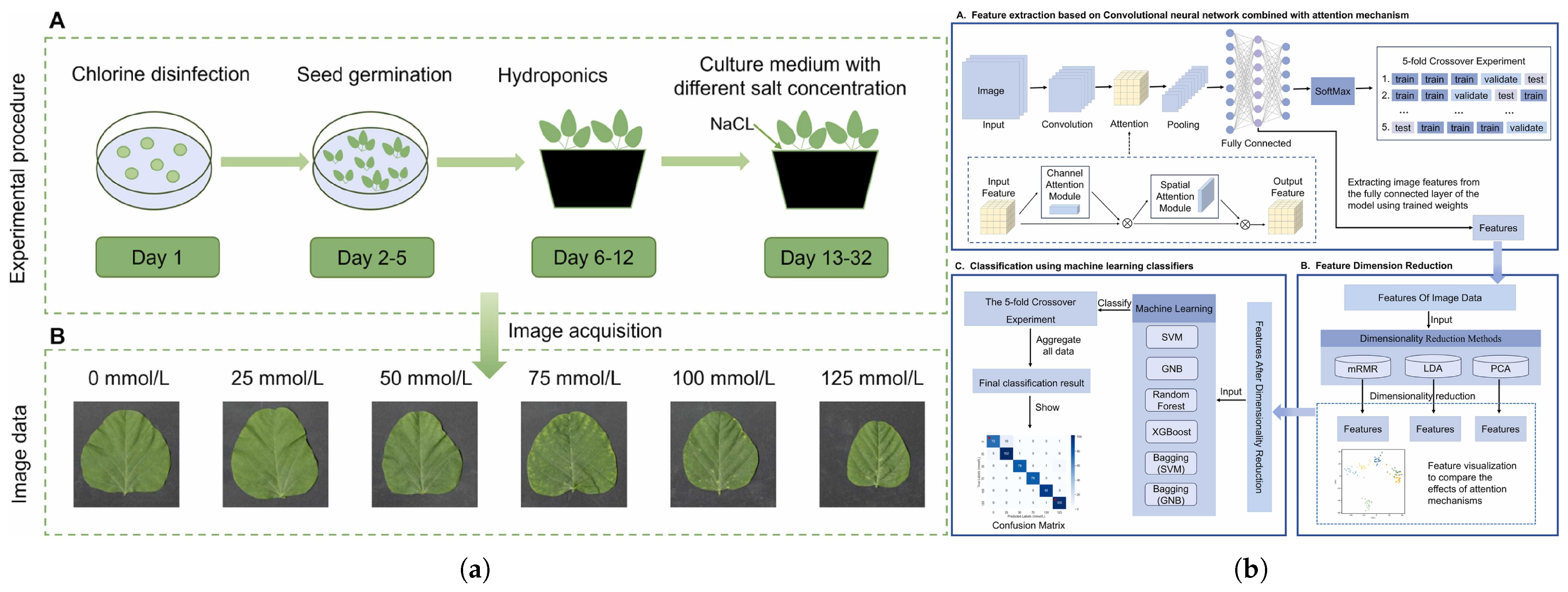
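To make the lightweight-model direction more concrete: many edge-oriented architectures (MobileNet-style networks, for example) replace standard convolutions with depthwise separable ones. Ignoring biases, a standard k×k convolution from C_in to C_out channels has k·k·C_in·C_out weights, whereas a depthwise k×k convolution followed by a 1×1 pointwise convolution has k·k·C_in + C_in·C_out. The layer size below is an illustrative assumption, not a figure from the reviewed models.

```python
def standard_conv_params(k: int, c_in: int, c_out: int) -> int:
    """Weights of a standard k x k convolution (biases ignored)."""
    return k * k * c_in * c_out

def depthwise_separable_params(k: int, c_in: int, c_out: int) -> int:
    """Depthwise k x k conv (one filter per input channel) plus a 1 x 1 pointwise conv."""
    return k * k * c_in + c_in * c_out

k, c_in, c_out = 3, 128, 256  # illustrative layer size
std = standard_conv_params(k, c_in, c_out)
dws = depthwise_separable_params(k, c_in, c_out)
print(std, dws, round(std / dws, 2))
```

The parameter ratio (depthwise separable over standard) equals 1/C_out + 1/k², so the saving approaches k·k for wide layers. Parameter count is only a first proxy: actual latency and energy use on edge hardware also depend on memory access patterns and operator support.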

4.2. Soybean Growth Status and Phenotypic Trait Detection

- Limited model generalizability, particularly under complex and dynamic field conditions;

- Insufficient multimodal fusion, as most current models rely on single-modality inputs (e.g., RGB or hyperspectral images), limiting their ability to leverage diverse sources of information;

- Scarcity of high-quality, multi-annotated public datasets, which hampers model training and benchmarking.

- Multimodal data fusion: Integrate RGB, hyperspectral, LiDAR, and thermal images to improve model adaptability and robustness in complex field scenarios.

- Multi-temporal remote sensing modeling: Use UAV imagery to dynamically monitor the full soybean growth cycle from sowing to harvest, enabling comprehensive modeling of growth stages, stress responses, and yield fluctuations.

- Lightweight model design and deployment optimization: Develop models suitable for edge computing to ensure efficient deployment in resource-constrained farm environments.

- Construction of standardized large-scale phenotyping databases: Promote the creation of open-access, high-quality soybean image datasets to enhance model comparability and reproducibility.
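Multi-temporal modeling can start from something as simple as a per-plot time series of a UAV-derived index. The sketch below estimates the day on which a declining index (e.g., canopy greenness during senescence) first drops below a threshold, using linear interpolation between flight dates. The flight days, index values, and the 0.5 threshold are hypothetical; learned models typically replace such a fixed rule.

```python
from typing import List, Optional

def first_crossing_day(days: List[float], values: List[float],
                       threshold: float) -> Optional[float]:
    """Interpolated day on which a declining index first drops below threshold.

    Returns days[0] if the series starts below the threshold, and None if it never crosses.
    """
    if values[0] < threshold:
        return days[0]
    for (d0, v0), (d1, v1) in zip(zip(days, values), zip(days[1:], values[1:])):
        if v0 >= threshold > v1:
            # Linear interpolation between the two flights that bracket the crossing.
            frac = (v0 - threshold) / (v0 - v1)
            return d0 + frac * (d1 - d0)
    return None

# Hypothetical flights (days after sowing) and plot-mean index values.
days = [80, 90, 100, 110, 120]
greenness = [0.82, 0.80, 0.71, 0.45, 0.30]
print(first_crossing_day(days, greenness, threshold=0.5))
```

Denser flight schedules narrow the interpolation interval, which is one practical reason multi-temporal UAV campaigns improve growth-stage estimates.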

4.3. Weed Detection and Management

- Insufficient real-time multimodal data fusion: Most current systems rely on single-sensor inputs and lack efficient mechanisms for integrating multimodal data, limiting their adaptability to environmental changes.

- Limited model generalization and deployment adaptability: Deep learning models often struggle to generalize across diverse field conditions, regions, and growth stages due to limited training data and transfer difficulties.

- Lack of dynamic decision-making in weeding strategies: Many weeding robots still follow preset paths or fixed parameters, lacking the flexibility to adjust strategies based on real-time conditions.

- Balancing system complexity and economic feasibility: Achieving large-scale deployment requires managing hardware complexity and costs without compromising system performance.

- Develop lightweight, multi-task learning models optimized for real-time execution on edge devices;

- Introduce self-supervised and reinforcement learning mechanisms to enhance model adaptability in complex environments;

- Build open-access, multimodal field datasets for soybean to support high-quality model training and benchmarking;

- Explore multi-robot cooperative weeding systems, integrating coordination protocols to improve field-level management efficiency;

- Integrate active disturbance rejection control (ADRC) with deep learning-based navigation systems to build intelligent platforms with high stability and dynamic control capability.
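The core idea of ADRC can be sketched in its common linear form with bandwidth-based tuning. An extended state observer (ESO) estimates the plant output together with a "total disturbance" term (unmodeled dynamics plus external disturbances), and the control law cancels that estimate before applying simple proportional control. The first-order plant, gains, and step disturbance below are illustrative assumptions, not a model of any weeding robot from the reviewed studies.

```python
from typing import Tuple

def simulate_ladrc(r: float = 1.0, a: float = 1.0, b: float = 2.0, b0: float = 2.0,
                   wc: float = 10.0, wo: float = 40.0, dist: float = 0.5,
                   t_dist: float = 2.0, t_end: float = 5.0,
                   dt: float = 1e-3) -> Tuple[float, float]:
    """Simulate dy/dt = -a*y + b*u + d under linear ADRC; return final (y, z2).

    The controller knows only b0 (its estimate of b); the term -a*y and the
    disturbance d are lumped into the total disturbance estimated by z2.
    """
    l1, l2 = 2.0 * wo, wo ** 2      # ESO gains: both observer poles at -wo
    kp = wc                          # proportional gain: closed-loop pole at -wc
    y = z1 = z2 = 0.0                # plant output, estimated output, estimated disturbance
    for k in range(int(round(t_end / dt))):
        t = k * dt
        d = dist if t >= t_dist else 0.0          # unmeasured step disturbance
        # Control law: cancel the estimated disturbance, then apply P control on z1.
        u = (kp * (r - z1) - z2) / b0
        # Extended state observer driven by the measured output y.
        e = y - z1
        dz1 = z2 + b0 * u + l1 * e
        dz2 = l2 * e
        # Plant dynamics (unknown to the controller apart from b0).
        dy = -a * y + b * u + d
        # Forward-Euler integration step for plant and observer together.
        y, z1, z2 = y + dt * dy, z1 + dt * dz1, z2 + dt * dz2
    return y, z2

y_final, f_hat = simulate_ladrc()
print(round(y_final, 4), round(f_hat, 4))
```

Because the observer's integrator keeps adjusting z2 until the estimated output matches the measurement, a constant disturbance is rejected without steady-state error; here z2 settles near the true total disturbance -a·y + d. Choosing the observer bandwidth wo a few times larger than the controller bandwidth wc is a common rule of thumb.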

5. Mainstream Models and Optimization Strategies

5.1. Mainstream Models

5.2. Lightweight Architectures, Transfer Learning, and Model Optimization Strategies

6. Discussion

6.1. Challenges

6.2. Future Perspectives

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Wang, X.; Komatsu, S. Improvement of soybean products through the response mechanism analysis using proteomic technique. Adv. Food Nutr. Res. 2017, 82, 117–148.

- El-Shemy, H. Soybean and Nutrition; BoD–Books on Demand: London, UK, 2011.

- Pagano, M.C.; Miransari, M. The importance of soybean production worldwide. In Abiotic and Biotic Stresses in Soybean Production; Elsevier: Amsterdam, The Netherlands, 2016; pp. 1–26.

- Shea, Z.; Singer, W.M.; Zhang, B. Soybean production, versatility, and improvement. In Legume Crops-Prospects, Production and Uses; IntechOpen: London, UK, 2020; pp. 29–50.

- Kofsky, J.; Zhang, H.; Song, B.H. The untapped genetic reservoir: The past, current, and future applications of the wild soybean (Glycine soja). Front. Plant Sci. 2018, 9, 949.

- Colussi, J.; Schnitkey, G.; Janzen, J.; Paulson, N. The United States, Brazil, and China Soybean Triangle: A 20-Year Analysis. Farmdoc Dly. 2024, 14, 1–4.

- Yang, Z.Y.; Xia, W.K.; Chu, H.Q.; Su, W.H.; Wang, R.F.; Wang, H. A Comprehensive Review of Deep Learning Applications in Cotton Industry: From Field Monitoring to Smart Processing. Plants 2025, 14, 1481.

- Zhang, X.; Li, Q. A Brief Introduction of Main Diseases and Insect Pests in Soybean Production in the Global Top Five Soybean Producing Countries. Plant Dis. Pests 2018, 9, 17–21.

- Wang, Z.; Wang, R.; Wang, M.; Lai, T.; Zhang, M. Self-supervised transformer-based pre-training method with General Plant Infection dataset. In Proceedings of the Chinese Conference on Pattern Recognition and Computer Vision (PRCV), Xi’an, China, 25–28 October 2024; pp. 189–202.

- Yu, S.F.; Wang, C.L.; Hu, Y.F.; Wen, Y.C.; Sun, Z.B. Biocontrol of three severe diseases in soybean. Agriculture 2022, 12, 1391.

- Cheng, R.; Mei, R.; Yan, R.; Chen, H.; Miao, D.; Cai, L.; Fan, J.; Li, G.; Xu, R.; Lu, W.; et al. A new distinct geminivirus causes soybean stay-green disease. Mol. Plant 2022, 15, 927–930.

- O’Neal, M.E.; Johnson, K.D. Insect pests of soybean and their management. In The Soybean: Botany, Production and Uses; CABI: Wallingford, UK, 2010; pp. 300–324.

- Hesler, L.S.; Allen, K.C.; Luttrell, R.G.; Sappington, T.W.; Papiernik, S.K. Early-season pests of soybean in the United States and factors that affect their risk of infestation. J. Integr. Pest Manag. 2018, 9, 19.

- Gaur, N.; Mogalapu, S. Pests of soybean. In Pests and Their Management; Springer: Singapore, 2018; pp. 137–162.

- Staniak, M.; Szpunar-Krok, E.; Kocira, A. Responses of soybean to selected abiotic stresses—Photoperiod, temperature and water. Agriculture 2023, 13, 146.

- Wang, X.; Wu, Z.; Zhou, Q.; Wang, X.; Song, S.; Dong, S. Physiological response of soybean plants to water deficit. Front. Plant Sci. 2022, 12, 809692.

- Destro, D.; Carpentieri-Pípolo, V.; de Souza Kiihl, R.A.; de Almeida, L.A. Photoperiodism and genetic control of the long juvenile period in soybean: A review. Crop Breed. Appl. Biotechnol. 2001, 1, 72–92.

- Bagale, S. Nutrient management for soybean crops. Int. J. Agron. 2021, 2021, 3304634.

- Yao, M.; Huo, Y.; Ran, Y.; Tian, Q.; Wang, R.; Wang, H. Neural radiance field-based visual rendering: A comprehensive review. arXiv 2024, arXiv:2404.00714.

- Guan, R.; Liu, T.; Tu, W.; Tang, C.; Luo, W.; Liu, X. Sampling Enhanced Contrastive Multi-View Remote Sensing Data Clustering with Long-Short Range Information Mining. IEEE Trans. Knowl. Data Eng. 2025, 1–15.

- Cao, Z.; Lu, Y.; Yuan, J.; Xin, H.; Wang, R.; Nie, F. Tensorized Graph Learning for Spectral Ensemble Clustering. IEEE Trans. Circuits Syst. Video Technol. 2025, 35, 2662–2674.

- Saleem, M.H.; Potgieter, J.; Arif, K.M. Automation in agriculture by machine and deep learning techniques: A review of recent developments. Precis. Agric. 2021, 22, 2053–2091.

- Qin, Y.M.; Tu, Y.H.; Li, T.; Ni, Y.; Wang, R.F.; Wang, H. Deep Learning for Sustainable Agriculture: A Systematic Review on Applications in Lettuce Cultivation. Sustainability 2025, 17, 3190.

- Li, Z.; Sun, C.; Wang, H.; Wang, R.F. Hybrid Optimization of Phase Masks: Integrating Non-Iterative Methods with Simulated Annealing and Validation via Tomographic Measurements. Symmetry 2025, 17, 530.

- Guan, R.; Li, Z.; Tu, W.; Wang, J.; Liu, Y.; Li, X.; Tang, C.; Feng, R. Contrastive multiview subspace clustering of hyperspectral images based on graph convolutional networks. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5510514.

- Wani, J.A.; Sharma, S.; Muzamil, M.; Ahmed, S.; Sharma, S.; Singh, S. Machine learning and deep learning based computational techniques in automatic agricultural diseases detection: Methodologies, applications, and challenges. Arch. Comput. Methods Eng. 2022, 29, 641–677.

- Wang, R.F.; Su, W.H. The application of deep learning in the whole potato production chain: A comprehensive review. Agriculture 2024, 14, 1225.

- Cui, K.; Shao, Z.; Larsen, G.; Pauca, V.; Alqahtani, S.; Segurado, D.; Pinheiro, J.; Wang, M.; Lutz, D.; Plemmons, R.; et al. PalmProbNet: A Probabilistic Approach to Understanding Palm Distributions in Ecuadorian Tropical Forest via Transfer Learning. In Proceedings of the 2024 ACM Southeast Conference, Tampa, FL, USA, 18–20 April 2024; pp. 272–277.

- Durai, S.K.S.; Shamili, M.D. Smart farming using machine learning and deep learning techniques. Decis. Anal. J. 2022, 3, 100041.

- Zhou, G.; Wang, R.F.; Cui, K. A Local Perspective-based Model for Overlapping Community Detection. arXiv 2025, arXiv:2503.21558.

- Zhang, W.; Ma, M.; Jiang, Y.; Lian, R.; Wu, Z.; Cui, K.; Ma, X. Center-guided Classifier for Semantic Segmentation of Remote Sensing Images. arXiv 2025, arXiv:2503.16963.

- Cui, K.; Tang, W.; Zhu, R.; Wang, M.; Larsen, G.D.; Pauca, V.P.; Alqahtani, S.; Yang, F.; Segurado, D.; Fine, P.; et al. Efficient Localization and Spatial Distribution Modeling of Canopy Palms Using UAV Imagery. IEEE Trans. Geosci. Remote Sens. 2025, 63, 4413815.

- Kumari, S.; Dambale, A.S.; Samantara, R.; Jincy, M.; Bains, G. Introduction, history, geographical distribution, importance, and uses of soybean (Glycine max L.). In Soybean Production Technology: Physiology, Production and Processing; Springer: Singapore, 2025; pp. 1–17.

- Jarecki, W.; Bobrecka-Jamro, D. Effect of sowing date on the yield and seed quality of soybean (Glycine max (L.) Merr.). J. Elem. 2021, 26, 7–18.

- Huang, Z.; Wang, R.; Cao, Y.; Zheng, S.; Teng, Y.; Wang, F.; Wang, L.; Du, J. Deep learning based soybean seed classification. Comput. Electron. Agric. 2022, 202, 107393.

- Barbedo, J.G.A. Deep learning for soybean monitoring and management. Seeds 2023, 2, 340–356.

- Chandel, N.S.; Chakraborty, S.K.; Rajwade, Y.A.; Dubey, K.; Tiwari, M.K.; Jat, D. Identifying crop water stress using deep learning models. Neural Comput. Appl. 2021, 33, 5353–5367.

- Tetila, E.C.; Machado, B.B.; Astolfi, G.; de Souza Belete, N.A.; Amorim, W.P.; Roel, A.R.; Pistori, H. Detection and classification of soybean pests using deep learning with UAV images. Comput. Electron. Agric. 2020, 179, 105836.

- Razfar, N.; True, J.; Bassiouny, R.; Venkatesh, V.; Kashef, R. Weed detection in soybean crops using custom lightweight deep learning models. J. Agric. Food Res. 2022, 8, 100308.

- Attri, I.; Awasthi, L.K.; Sharma, T.P.; Rathee, P. A review of deep learning techniques used in agriculture. Ecol. Inform. 2023, 77, 102217.

- Zhang, S.; Feng, H.; Han, S.; Shi, Z.; Xu, H.; Liu, Y.; Feng, H.; Zhou, C.; Yue, J. Monitoring of soybean maturity using UAV remote sensing and deep learning. Agriculture 2022, 13, 110.

- Abed, S.H.; Al-Waisy, A.S.; Mohammed, H.J.; Al-Fahdawi, S. A modern deep learning framework in robot vision for automated bean leaves diseases detection. Int. J. Intell. Robot. Appl. 2021, 5, 235–251.

- Shaheen, F.; Verma, B.; Asafuddoula, M. Impact of automatic feature extraction in deep learning architecture. In Proceedings of the 2016 International Conference on Digital Image Computing: Techniques and Applications (DICTA), Gold Coast, Australia, 30 November–2 December 2016; pp. 1–8.

- Zhu, G.; Huang, X.; Peng, X.; Xu, J.; Guo, S.; Zhang, H. A deep learning-based quantitative prediction model for the processing potentials of soybeans as soymilk raw materials. Food Chem. 2024, 453, 139671.

- Sharma, R. Artificial intelligence in agriculture: A review. In Proceedings of the 2021 5th International Conference on Intelligent Computing and Control Systems (ICICCS), Madurai, India, 6–8 May 2021; pp. 937–942.

- Fan, J.; Li, Y.; Yu, S.; Gou, W.; Guo, X.; Zhao, C. Application of internet of things to agriculture—The LQ-FieldPheno platform: A high-throughput platform for obtaining crop phenotypes in field. Research 2023, 6, 0059.

- Li, D.; Quan, C.; Song, Z.; Li, X.; Yu, G.; Li, C.; Muhammad, A. High-throughput plant phenotyping platform (HT3P) as a novel tool for estimating agronomic traits from the lab to the field. Front. Bioeng. Biotechnol. 2021, 8, 623705.

- Mukherjee, S.; Pal, S.; Pal, A.; Ghosh, D.; Sarkar, S.; Bhand, S.; Sarkar, P.; Bhattacharyya, N. UIISScan 1.1: A Field portable high-throughput platform tool for biomedical and agricultural applications. J. Pharm. Biomed. Anal. 2019, 174, 70–80.

- Tian, H.; Wang, T.; Liu, Y.; Qiao, X.; Li, Y. Computer vision technology in agricultural automation—A review. Inf. Process. Agric. 2020, 7, 1–19.

- Ghazal, S.; Munir, A.; Qureshi, W.S. Computer vision in smart agriculture and precision farming: Techniques and applications. Artif. Intell. Agric. 2024, 13, 64–83.

- Coulibaly, S.; Kamsu-Foguem, B.; Kamissoko, D.; Traore, D. Deep learning for precision agriculture: A bibliometric analysis. Intell. Syst. Appl. 2022, 16, 200102.

- Zhou, G.; Wang, R.F. The Heterogeneous Network Community Detection Model Based on Self-Attention. Symmetry 2025, 17, 432.

- Shlezinger, N.; Whang, J.; Eldar, Y.C.; Dimakis, A.G. Model-based deep learning. Proc. IEEE 2023, 111, 465–499.

- Dong, S.; Wang, P.; Abbas, K. A survey on deep learning and its applications. Comput. Sci. Rev. 2021, 40, 100379.

- El Sakka, M.; Ivanovici, M.; Chaari, L.; Mothe, J. A Review of CNN Applications in Smart Agriculture Using Multimodal Data. Sensors 2025, 25, 472.

- Gupta, A.; Singh, A. Agri-GNN: A novel genotypic-topological graph neural network framework built on GraphSAGE for optimized yield prediction. arXiv 2023, arXiv:2310.13037.

- Lu, Y.; Chen, D.; Olaniyi, E.; Huang, Y. Generative adversarial networks (GANs) for image augmentation in agriculture: A systematic review. Comput. Electron. Agric. 2022, 200, 107208.

- Jozdani, S.; Chen, D.; Pouliot, D.; Johnson, B.A. A review and meta-analysis of generative adversarial networks and their applications in remote sensing. Int. J. Appl. Earth Obs. Geoinf. 2022, 108, 102734.

- Li, P.; Zhou, J.; Sun, H.; Zeng, J. RDRM-YOLO: A High-Accuracy and Lightweight Rice Disease Detection Model for Complex Field Environments Based on Improved YOLOv5. Agriculture 2025, 15, 479.

- Park, Y.H.; Choi, S.H.; Kwon, Y.J.; Kwon, S.W.; Kang, Y.J.; Jun, T.H. Detection of soybean insect pest and a forecasting platform using deep learning with unmanned ground vehicles. Agronomy 2023, 13, 477.

- Nguyen, C.; Sagan, V.; Maimaitiyiming, M.; Maimaitijiang, M.; Bhadra, S.; Kwasniewski, M.T. Early detection of plant viral disease using hyperspectral imaging and deep learning. Sensors 2021, 21, 742.

- Sharma, V.; Tripathi, A.K.; Mittal, H.; Nkenyereye, L. SoyaTrans: A novel transformer model for fine-grained visual classification of soybean leaf disease diagnosis. Expert Syst. Appl. 2025, 260, 125385.

- Farah, N.; Drack, N.; Dawel, H.; Buettner, R. A deep learning-based approach for the detection of infested soybean leaves. IEEE Access 2023, 11, 99670–99679.

- Omole, O.J.; Rosa, R.L.; Rodriguez, D.Z. Soybean disease detection by deep learning algorithms. In Proceedings of the 2023 International Conference on Software, Telecommunications and Computer Networks (SoftCOM), Split, Croatia, 21–23 September 2023; pp. 1–5.

- Goshika, S.; Meksem, K.; Ahmed, K.R.; Lakhssassi, N. Deep learning model for classifying and evaluating soybean leaf disease damage. Int. J. Mol. Sci. 2023, 25, 106.

- Yu, M.; Ma, X.; Guan, H.; Liu, M.; Zhang, T. A recognition method of soybean leaf diseases based on an improved deep learning model. Front. Plant Sci. 2022, 13, 878834.

- Yu, M.; Ma, X.; Guan, H. Recognition method of soybean leaf diseases using residual neural network based on transfer learning. Ecol. Inform. 2023, 76, 102096.

- Karlekar, A.; Seal, A. SoyNet: Soybean leaf diseases classification. Comput. Electron. Agric. 2020, 172, 105342.

- Jin, Y.; Xia, X.; Gao, Q.; Yue, Y.; Lim, E.G.; Wong, P.; Ding, W.; Zhu, X. Deep learning in produce perception of harvesting robots: A comprehensive review. Appl. Soft Comput. 2025, 174, 112971.

- Li, Z.; Xu, R.; Li, C.; Munoz, P.; Takeda, F.; Leme, B. In-field blueberry fruit phenotyping with a MARS-PhenoBot and customized BerryNet. Comput. Electron. Agric. 2025, 232, 110057.

- Tan, C.; Sun, J.; Song, H.; Li, C. A customized density map model and segment anything model for cotton boll number, size, and yield prediction in aerial images. Comput. Electron. Agric. 2025, 232, 110065.

- Cui, K.; Zhu, R.; Wang, M.; Tang, W.; Larsen, G.D.; Pauca, V.P.; Alqahtani, S.; Yang, F.; Segurado, D.; Lutz, D.; et al. Detection and Geographic Localization of Natural Objects in the Wild: A Case Study on Palms. arXiv 2025, arXiv:2502.13023.

- Huo, Y.; Wang, R.F.; Zhao, C.T.; Hu, P.; Wang, H. Research on Obtaining Pepper Phenotypic Parameters Based on Improved YOLOX Algorithm. AgriEngineering 2025, 7, 209.

- Zhang, Q.Y.; Fan, K.J.; Tian, Z.; Guo, K.; Su, W.H. High-Precision Automated Soybean Phenotypic Feature Extraction Based on Deep Learning and Computer Vision. Plants 2024, 13, 2613.

- Cui, K.; Li, R.; Polk, S.L.; Lin, Y.; Zhang, H.; Murphy, J.M.; Plemmons, R.J.; Chan, R.H. Superpixel-based and spatially-regularized diffusion learning for unsupervised hyperspectral image clustering. IEEE Trans. Geosci. Remote Sens. 2024, 62, 4405818.

- Sun, G.; Zhang, Y.; Wang, L.; Zhou, L.; Fei, S.; Han, S.; Xiao, S.; Che, Y.; Yan, L.; Xu, Y.; et al. Bridging the gap between hyperspectral imaging and crop breeding: Soybean yield prediction and lodging classification with prototype contrastive learning. Comput. Electron. Agric. 2025, 230, 109859.

- Araus, J.L.; Cairns, J.E. Field high-throughput phenotyping: The new crop breeding frontier. Trends Plant Sci. 2014, 19, 52–61.

- Zhou, W.; Chen, Y.; Li, W.; Zhang, C.; Xiong, Y.; Zhan, W.; Huang, L.; Wang, J.; Qiu, L. SPP-extractor: Automatic phenotype extraction for densely grown soybean plants. Crop J. 2023, 11, 1569–1578.

- Yang, H.; Fei, L.; Wu, G.; Deng, L.; Han, Z.; Shi, H.; Li, S. A novel deep learning framework for identifying soybean salt stress levels using RGB leaf images. Ind. Crop. Prod. 2025, 228, 120874.

- Xu, W.; Ma, X.; Guan, H.; Meng, Y.; Zhang, X. MSAFNet: A multi-scale data fusion-based drought recognition method for three-dimensional images of the soybean plant. Earth Sci. Inform. 2025, 18, 391.

- Grinblat, G.L.; Uzal, L.C.; Larese, M.G.; Granitto, P.M. Deep learning for plant identification using vein morphological patterns. Comput. Electron. Agric. 2016, 127, 418–424.

- Wang, B.; Li, H.; You, J.; Chen, X.; Yuan, X.; Feng, X. Fusing deep learning features of triplet leaf image patterns to boost soybean cultivar identification. Comput. Electron. Agric. 2022, 197, 106914.

- Lin, W.; Shu, L.; Zhong, W.; Lu, W.; Ma, D.; Meng, Y. Online classification of soybean seeds based on deep learning. Eng. Appl. Artif. Intell. 2023, 123, 106434.

- Li, Y.; Jia, J.; Zhang, L.; Khattak, A.M.; Sun, S.; Gao, W.; Wang, M. Soybean seed counting based on pod image using two-column convolution neural network. IEEE Access 2019, 7, 64177–64185.

- Liu, F.; Wang, S.; Pang, S.; Han, Z.; Zhao, L. SmartPod: An Automated Framework for High-Precision Soybean Pod Counting in Field Phenotyping. Agronomy 2025, 15, 791.

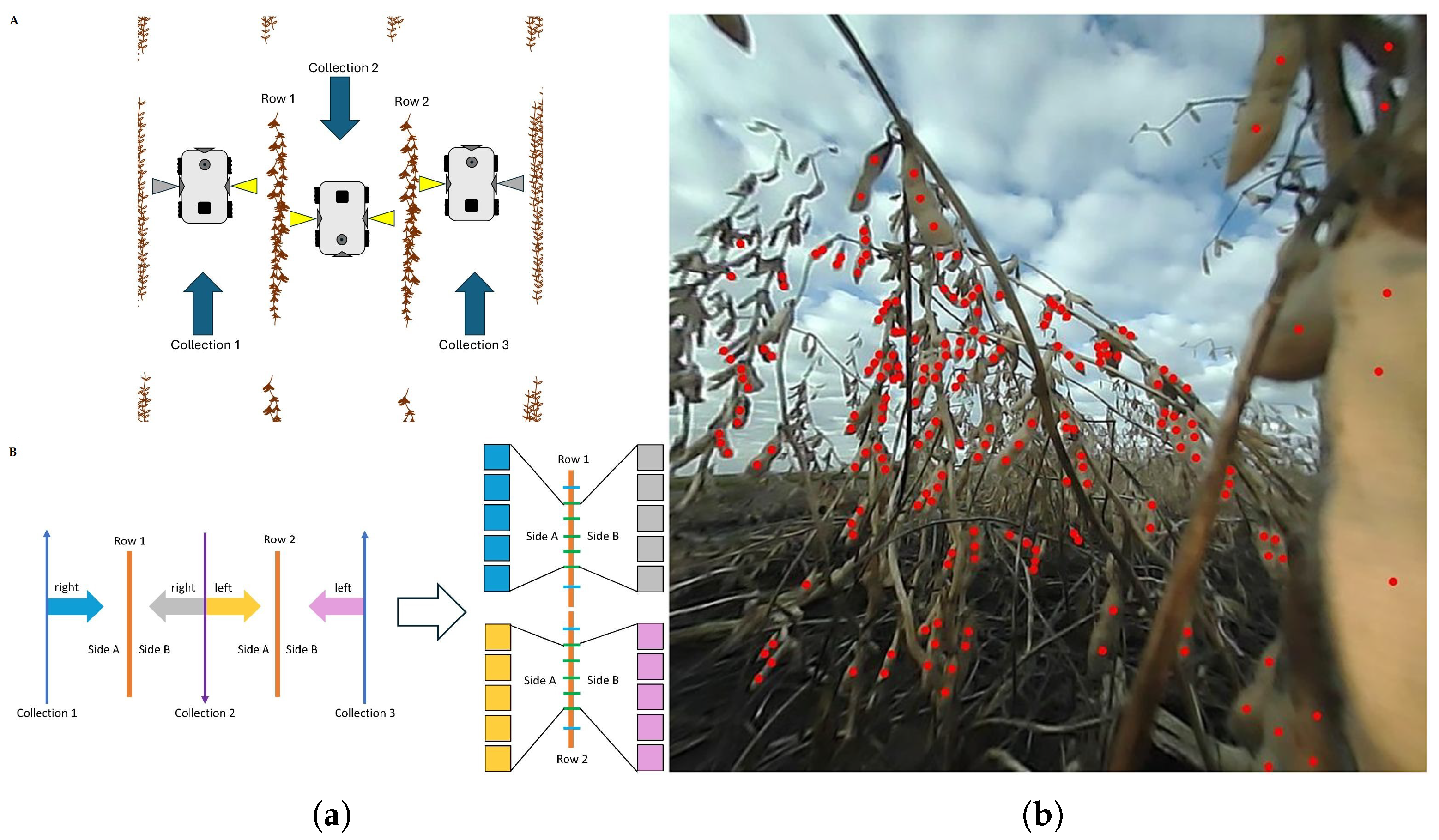

- Feng, J.; Blair, S.W.; Ayanlade, T.T.; Balu, A.; Ganapathysubramanian, B.; Singh, A.; Sarkar, S.; Singh, A.K. Robust soybean seed yield estimation using high-throughput ground robot videos. Front. Plant Sci. 2025, 16, 1554193.

- Wang, R.F.; Tu, Y.H.; Li, X.C.; Chen, Z.Q.; Zhao, C.T.; Yang, C.; Su, W.H. An Intelligent Robot Based on Optimized YOLOv11l for Weed Control in Lettuce. In Proceedings of the 2025 ASABE Annual International Meeting, Toronto, ON, Canada, 13–16 July 2025; p. 1.

- Wang, R.F.; Tu, Y.H.; Chen, Z.Q.; Zhao, C.T.; Su, W.H. A Lettpoint-Yolov11l Based Intelligent Robot for Precision Intra-Row Weeds Control in Lettuce. Available at SSRN 5162748. 2025. Available online: https://ssrn.com/abstract=5162748 (accessed on 2 June 2025).

- Jiang, B.; Zhang, H.Y.; Su, W.H. Automatic localization of soybean seedlings based on crop signaling and multi-view imaging. Sensors 2024, 24, 3066.

- Su, W.H.; Sheng, J.; Huang, Q.Y. Development of a three-dimensional plant localization technique for automatic differentiation of soybean from intra-row weeds. Agriculture 2022, 12, 195.

- dos Santos Ferreira, A.; Freitas, D.M.; da Silva, G.G.; Pistori, H.; Folhes, M.T. Weed detection in soybean crops using ConvNets. Comput. Electron. Agric. 2017, 143, 314–324.

- Fletcher, R.S. Using vegetation indices as input into random forest for soybean and weed classification. Am. J. Plant Sci. 2016, 7, 2186–2198.

- Ye, S.; Xue, X.; Si, S.; Xu, Y.; Le, F.; Cui, L.; Jin, Y. Design and testing of an elastic comb reciprocating a soybean plant-to-plant seedling avoidance and weeding device. Agriculture 2023, 13, 2157.

- Yao, M.; Huo, Y.; Tian, Q.; Zhao, J.; Liu, X.; Wang, R.; Xue, L.; Wang, H. FMRFT: Fusion Mamba and DETR for query time sequence intersection fish tracking. arXiv 2024, arXiv:2409.01148.

- Bonin-Font, F.; Ortiz, A.; Oliver, G. Visual navigation for mobile robots: A survey. J. Intell. Robot. Syst. 2008, 53, 263–296.

- Xu, R.; Li, C. A review of high-throughput field phenotyping systems: Focusing on ground robots. Plant Phenomics 2022, 2022, 9760269.

- Kazerouni, I.A.; Fitzgerald, L.; Dooly, G.; Toal, D. A survey of state-of-the-art on visual SLAM. Expert Syst. Appl. 2022, 205, 117734.

- Tu, Y.H.; Wang, R.F.; Su, W.H. Active Disturbance Rejection Control—New Trends in Agricultural Cybernetics in the Future: A Comprehensive Review. Machines 2025, 13, 111.

- Wu, A.Q.; Li, K.L.; Song, Z.Y.; Lou, X.; Hu, P.; Yang, W.; Wang, R.F. Deep Learning for Sustainable Aquaculture: Opportunities and Challenges. Sustainability 2025, 17, 5084.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Wu, W.; Huo, L.; Yang, G.; Liu, X.; Li, H. Research into the application of ResNet in soil: A review. Agriculture 2025, 15, 661.

- Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4700–4708.

- Hou, Y.; Wu, Z.; Cai, X.; Zhu, T. The application of improved DenseNet algorithm in accurate image recognition. Sci. Rep. 2024, 14, 8645.

- Yogabalajee, V.; Sundaram, K.; Kanagaraj, K. Soybean Leaf Disease Classification using Enhanced DenseNet121. In Proceedings of the 2024 10th International Conference on Advanced Computing and Communication Systems (ICACCS), Coimbatore, India, 21–23 March 2024; Volume 1, pp. 1515–1520.

- Bhujade, V.G.; Sambhe, V. Multi-disease classification and severity estimation of cotton and soybean plants using DenseNet. In Proceedings of the International Conference on Advanced Network Technologies and Intelligent Computing, Varanasi, India, 17–19 December 2023; pp. 20–41.

- Tan, M.; Le, Q. EfficientNet: Rethinking model scaling for convolutional neural networks. In Proceedings of the International Conference on Machine Learning, Long Beach, CA, USA, 9–15 June 2019; pp. 6105–6114.

- Chawla, S.; Gupta, D.; Pippal, S.K. Review on Architectures of Convolutional Neural Network. In Proceedings of the 2025 3rd International Conference on Disruptive Technologies (ICDT), Greater Noida, India, 7–8 March 2025; pp. 442–448.

- Wang, Z.; Zhang, H.W.; Dai, Y.Q.; Cui, K.; Wang, H.; Chee, P.W.; Wang, R.F. Resource-Efficient Cotton Network: A Lightweight Deep Learning Framework for Cotton Disease and Pest Classification. Plants 2025, 14, 2082. [Google Scholar] [CrossRef]

- Huo, Y.; Yao, M.; Tian, Q.; Wang, T.; Wang, R.; Wang, H. FA-YOLO: Research on Efficient Feature Selection YOLO Improved Algorithm Based on FMDS and AGMF Modules. arXiv 2024, arXiv:2408.16313. [Google Scholar]

- Zhao, C.-T.; Wang, R.-F.; Tu, Y.-H.; Pang, X.-X.; Su, W.-H. Automatic lettuce weed detection and classification based on optimized convolutional neural networks for robotic weed control. Agronomy 2024, 14, 2838. [Google Scholar] [CrossRef]

- Varghese, R.; Sambath, M. Yolov8: A novel object detection algorithm with enhanced performance and robustness. In Proceedings of the 2024 International Conference on Advances in Data Engineering and Intelligent Computing Systems (ADICS), Chennai, India, 8–10 August 2024; pp. 1–6. [Google Scholar]

- Terven, J.; Córdova-Esparza, D.M.; Romero-González, J.A. A comprehensive review of yolo architectures in computer vision: From yolov1 to yolov8 and yolo-nas. Mach. Learn. Knowl. Extr. 2023, 5, 1680–1716. [Google Scholar] [CrossRef]

- Wang, C.Y.; Yeh, I.H.; Mark Liao, H.Y. Yolov9: Learning what you want to learn using programmable gradient information. In Proceedings of the European Conference on Computer Vision, Milan, Italy, 29 September–4 October 2024; pp. 1–21. [Google Scholar]

- Wang, A.; Chen, H.; Liu, L.; Chen, K.; Lin, Z.; Han, J. Yolov10: Real-time end-to-end object detection. Adv. Neural Inf. Process. Syst. 2024, 37, 107984–108011. [Google Scholar]

- Di, X.; Cui, K.; Wang, R.F. Toward Efficient UAV-Based Small Object Detection: A Lightweight Network with Enhanced Feature Fusion. Remote Sens. 2025, 17, 2235. [Google Scholar] [CrossRef]

- Tian, Y.; Ye, Q.; Doermann, D. Yolov12: Attention-centric real-time object detectors. arXiv 2025, arXiv:2502.12524. [Google Scholar]

- Li, X.; Zhang, T.; Yu, M.; Yan, P.; Wang, H.; Dong, X.; Wen, T.; Xie, B. A YOLOv8-based method for detecting tea disease in natural environments. Agron. J. 2025, 117, e70043. [Google Scholar] [CrossRef]

- Zhang, L.; Yu, S.; Yang, B.; Zhao, S.; Huang, Z.; Yang, Z.; Yu, H. YOLOv8 forestry pest recognition based on improved re-parametric convolution. Front. Plant Sci. 2025, 16, 1552853. [Google Scholar] [CrossRef] [PubMed]

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster r-cnn: Towards real-time object detection with region proposal networks. Adv. Neural Inf. Process. Syst. 2015, 28, 91–99. [Google Scholar] [CrossRef]

- Guleria, A.; Varshney, K.; Jindal, S. A systematic review: Object detection. AI Soc. 2025, 1–18. [Google Scholar] [CrossRef]

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. Ssd: Single shot multibox detector. In Computer Vision—ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, October 11–14, 2016, Proceedings, Part I; Springer: Cham, Switzerland, 2016; pp. 21–37. [Google Scholar]

- Hemamalini, P.; Chandraprakash, M.; Laxman, R.; Rathinakumari, C.; Senthil Kumaran, G.; Suneetha, K. Thermal canopy segmentation in tomato plants: A novel approach with integration of YOLOv8-C and FastSAM. Smart Agric. Technol. 2025, 10, 100806.

- Gao, Y.; Jiang, Y.; Peng, Y.; Yuan, F.; Zhang, X.; Wang, J. Medical Image Segmentation: A Comprehensive Review of Deep Learning-Based Methods. Tomography 2025, 11, 52.

- Ingole, V.S.; Kshirsagar, U.A.; Singh, V.; Yadav, M.V.; Krishna, B.; Kumar, R. A Hybrid Model for Soybean Yield Prediction Integrating Convolutional Neural Networks, Recurrent Neural Networks, and Graph Convolutional Networks. Computation 2025, 13, 4.

- Zhao, K.; Zhang, Q.; Wan, C.; Pan, Q.; Qin, Y. Visual Mamba UNet fusion multi-scale attention and detail infusion for unsound corn kernels segmentation. Sci. Rep. 2025, 15, 10933.

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2961–2969.

- Chen, L.C.; Zhu, Y.; Papandreou, G.; Schroff, F.; Adam, H. Encoder-decoder with atrous separable convolution for semantic image segmentation. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 801–818.

- Wang, Y.; Deng, Y.; Zheng, Y.; Chattopadhyay, P.; Wang, L. Vision Transformers for Image Classification: A Comparative Survey. Technologies 2025, 13, 32.

- Haruna, Y.; Qin, S.; Chukkol, A.H.A.; Yusuf, A.A.; Bello, I.; Lawan, A. Exploring the synergies of hybrid convolutional neural network and Vision Transformer architectures for computer vision: A survey. Eng. Appl. Artif. Intell. 2025, 144, 110057.

- Zhang, J.; Zhou, H.; Liu, K.; Xu, Y. ED-Swin Transformer: A Cassava Disease Classification Model Integrated with UAV Images. Sensors 2025, 25, 2432.

- Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-end object detection with transformers. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; pp. 213–229.

- Jin, C.; Zhou, L.; Pu, Y.; Zhang, C.; Qi, H.; Zhao, Y. Application of deep learning for high-throughput phenotyping of seed: A review. Artif. Intell. Rev. 2025, 58, 76.

- Zhou, L.; Han, D.; Sun, G.; Liu, Y.; Yan, X.; Jia, H.; Yan, L.; Feng, P.; Li, Y.; Qiu, L.; et al. Soybean yield estimation and lodging discrimination based on lightweight UAV and point cloud deep learning. Plant Phenomics 2025, 7, 100028.

- Howard, A.G. MobileNets: Efficient convolutional neural networks for mobile vision applications. arXiv 2017, arXiv:1704.04861.

- Mehta, S.; Rastegari, M. MobileViT: Light-weight, general-purpose, and mobile-friendly vision transformer. arXiv 2021, arXiv:2110.02178.

- Mehta, S.; Rastegari, M. Separable self-attention for mobile vision transformers. arXiv 2022, arXiv:2206.02680.

- Wadekar, S.N.; Chaurasia, A. MobileViTv3: Mobile-friendly vision transformer with simple and effective fusion of local, global and input features. arXiv 2022, arXiv:2209.15159.

- Jaquier, N.; Welle, M.C.; Gams, A.; Yao, K.; Fichera, B.; Billard, A.; Ude, A.; Asfour, T.; Kragic, D. Transfer learning in robotics: An upcoming breakthrough? A review of promises and challenges. Int. J. Robot. Res. 2025, 44, 465–485.

- Liu, D.; Li, Z.; Wu, Z.; Li, C. Digital Twin/MARS-CycleGAN: Enhancing Sim-to-Real Crop/Row Detection for MARS Phenotyping Robot Using Synthetic Images. J. Field Robot. 2025, 42, 625–640.

- Chen, T.; Kornblith, S.; Norouzi, M.; Hinton, G. A simple framework for contrastive learning of visual representations. In Proceedings of the International Conference on Machine Learning, Virtual, 13–18 July 2020; pp. 1597–1607.

- He, K.; Fan, H.; Wu, Y.; Xie, S.; Girshick, R. Momentum contrast for unsupervised visual representation learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 9729–9738.

- He, K.; Chen, X.; Xie, S.; Li, Y.; Dollár, P.; Girshick, R. Masked autoencoders are scalable vision learners. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 19–24 June 2022; pp. 16000–16009.

- Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125.

- Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

- Hou, Q.; Zhou, D.; Feng, J. Coordinate attention for efficient mobile network design. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 13713–13722.

- Jiang, L.; Rodriguez-Sanchez, J.; Snider, J.L.; Chee, P.W.; Fu, L.; Li, C. Mapping of cotton bolls and branches with high-granularity through point cloud segmentation. Plant Methods 2025, 21, 66.

- Tan, C.; Sun, J.; Paterson, A.H.; Song, H.; Li, C. Three-view cotton flower counting through multi-object tracking and RGB-D imagery. Biosyst. Eng. 2024, 246, 233–247.

- Tan, C.; Li, C.; Sun, J.; Song, H. Three-View Cotton Flower Counting through Multi-Object Tracking and Multi-Modal Imaging. In Proceedings of the 2023 ASABE Annual International Meeting, Omaha, NE, USA, 9–12 July 2023; p. 1.

- Yang, Z.X.; Li, Y.; Wang, R.F.; Hu, P.; Su, W.H. Deep Learning in Multimodal Fusion for Sustainable Plant Care: A Comprehensive Review. Sustainability 2025, 17, 5255.

- Cao, Z.; Xin, H.; Wang, R.; Nie, F. Superpixel-Based Bipartite Graph Clustering Enriched with Spatial Information for Hyperspectral and LiDAR Data. IEEE Trans. Geosci. Remote Sens. 2025, 63, 5505115.

- Gupta, D.; Golder, A.; Zhu, R.; Cui, K.; Tang, W.; Yang, F.; Csillik, O.; Alaqahtani, S.; Pauca, V.P. MoSAiC: Multi-Modal Multi-Label Supervision-Aware Contrastive Learning for Remote Sensing. arXiv 2025, arXiv:2507.08683.

- Wang, H.; Zhu, M.; Li, L.; Wang, L.; Zhao, H.; Mei, S. Regional weed identification method from wheat field based on unmanned aerial vehicle image and shearlets. Trans. Chin. Soc. Agric. Eng. 2017, 33, 99–106.

- Li, L.; Li, J.; Wang, H.; Georgieva, T.; Ferentinos, K.; Arvanitis, K.; Sygrimis, N. Sustainable energy management of solar greenhouses using open weather data on MACQU platform. Int. J. Agric. Biol. Eng. 2018, 11, 74–82.

- Yuan, H.; Li, L.; Wang, J.; Wang, H.; Sigrimis, N.A. Design and test of regulation and control equipment for nutrient solution of water and fertilizer integration in greenhouse. Trans. Chin. Soc. Agric. Eng. 2016, 32, 27–32.

- Li, Z.; Xu, R.; Li, C.; Fu, L. Simulation of an In-field Phenotyping Robot: System Design, Vision-based Navigation and Field Mapping. In Proceedings of the 2022 ASABE Annual International Meeting, Houston, TX, USA, 17–20 July 2022; p. 1.

| Model Type | Representative Models | Application Tasks | Advantages | Limitations | Future Directions |
|---|---|---|---|---|---|
| Image Classification | ResNet, DenseNet, EfficientNet | Disease classification | High accuracy, clear architecture | No lesion localization | Multi-modal and multi-temporal integration |
| Object Detection | YOLO, Faster R-CNN, SSD | Lesion and pest detection, seed detection, yield estimation | Real-time performance, versatile | Poor performance on small targets | Multi-scale detection, small object enhancement |
| Image Segmentation | U-Net, Mask R-CNN, DeepLabV3+ | Lesion segmentation | Pixel-level output | Labeling-intensive, high resource demand | Lightweight architectures, self-supervised learning |
| Transformer-Based | ViT, Swin Transformer, DETR | Remote sensing, dynamic modeling | Strong global modeling capability | High computational cost | Multi-modal fusion, lightweight optimization |
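The table lists poor performance on small targets as a limitation of detectors such as YOLO, Faster R-CNN, and SSD. One commonly cited contributing factor is that overlap-based matching is far less forgiving for small boxes: a localization error of a few pixels that barely affects a large lesion can push a small pest below the usual intersection-over-union (IoU) threshold of 0.5. The following is a minimal Python sketch of that effect. The box sizes (8×8 and 64×64 pixels), the 3-pixel horizontal offset, and the helper names `iou` and `shifted` are hypothetical and chosen only for illustration; the sketch does not reproduce any model or dataset from the reviewed studies.

```python
"""Toy illustration: the same pixel offset costs small boxes far more IoU.

Boxes are hypothetical (x1, y1, x2, y2) tuples in pixels; no real dataset is used.
"""
from typing import Tuple

Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    # Corners of the overlapping region (may be empty).
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def shifted(box: Box, dx: float) -> Box:
    """Translate a box horizontally by dx pixels to mimic a localization error."""
    return (box[0] + dx, box[1], box[2] + dx, box[3])


if __name__ == "__main__":
    small_pest = (0.0, 0.0, 8.0, 8.0)      # hypothetical 8x8 px insect
    large_lesion = (0.0, 0.0, 64.0, 64.0)  # hypothetical 64x64 px lesion
    for name, gt in [("8x8", small_pest), ("64x64", large_lesion)]:
        # Same 3-pixel error applied to both ground-truth boxes.
        score = iou(gt, shifted(gt, 3.0))
        verdict = "match" if score >= 0.5 else "miss"
        print(f"{name}: IoU={score:.3f} -> {verdict} at IoU>=0.5")
```

Run as a script, it reports an IoU of about 0.455 for the 8×8 box (a miss at the 0.5 threshold) and about 0.910 for the 64×64 box. This sensitivity is part of why the multi-scale detection and small-object enhancement directions listed in the table focus specifically on small targets.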

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Sun, H.; Chu, H.-Q.; Qin, Y.-M.; Hu, P.; Wang, R.-F. Empowering Smart Soybean Farming with Deep Learning: Progress, Challenges, and Future Perspectives. Agronomy 2025, 15, 1831. https://doi.org/10.3390/agronomy15081831
