Abstract

Accurate counting of cucumber flowers using intelligent algorithms to monitor their sex ratio is essential for intelligent facility agriculture management. However, complex greenhouse environments impose higher demands on the precision and efficiency of counting algorithms. This study proposes a dual-area counting algorithm based on an improved YOLOv8n-Track (YOLOv8n-T) and ByteTrack cascaded framework. The method counts cucumber flowers by detecting flower targets, tracking them frame by frame, and validating each count through dual-area counting. YOLOv8n-T incorporates a Coordinate Attention (CA) mechanism and lightweight modules while optimizing the loss function, thereby improving floral feature extraction and reducing computational complexity. Integrating the ByteTrack tracking algorithm with a dual-area counting strategy strengthens the robustness of flower counting in dynamic environments. Experimental results show that the improved YOLOv8n-T achieves mAP and F1 scores of 86.9% and 82.1%, surpassing YOLOv8n by 3.0% and 2.6%, respectively, with a 0.3 M reduction in model parameters. The integrated framework achieves a mean average counting precision (mAPc) of 82.4% for cucumber flower counting. This research provides a new method for monitoring cucumber flower sex ratios in facility agriculture, promoting the development of intelligent agricultural management.

1. Introduction

As a globally cultivated continuously harvested crop, cucumber exhibits significant dynamic distribution patterns of pistillate and staminate flowers that critically influence fruit yield and commercial quality [1,2]. Growers can enhance both the productivity and marketability of cucumber fruits through the precise regulation of floral sex ratios. However, traditional manual sampling methods suffer from substantial statistical errors and low efficiency, while comprehensive census approaches require excessive labor and temporal resources.

The development of computer vision-based intelligent counting algorithms has emerged as a pivotal technical pathway for achieving accurate sex ratio regulation [3]. The cucumber flower counting algorithm is based on a cascaded framework combining object detection and tracking. Early flower identification methods leveraged the distinctive color features of blossoms. Thorp et al. [4] proposed floral pixel segmentation through HSV (Hue, Saturation, Value) color space conversion, subsequently estimating total flower counts via the coverage percentage of segmented pixels across image frames. However, this color-based method is easily disturbed by the varying illumination and cluttered backgrounds of complex environments.

With the gradual advancement of agriculture toward precision farming, deep learning algorithms have been extensively researched and applied in intelligent agricultural perception. The You Only Look Once (YOLO) series demonstrates strengths including high detection accuracy, rapid inference speed, and broad applicability [5]. To achieve dense apple inflorescence detection, Xue Xia et al. [6] proposed a novel transformer-based CNN model named MTYOLOX, achieving AP and AR values of 0.834 and 0.933, respectively. Guo Li et al. [7] applied transfer learning with YOLOv3 and YOLOv4 for kiwifruit bud detection, where experimental results demonstrated that YOLOv4 achieved a significantly higher mAP than YOLOv3. Guo Li et al. [8] also used YOLOv5l for further classification and distribution studies on kiwifruit flowers, again with a significant improvement in mAP.

Traditional object tracking methods, including optical flow [9] and frame differencing [10], suffer from poor real-time performance, high computational complexity, and environmental sensitivity. Conversely, modern approaches such as ByteTrack [11] and BoT-SORT [12] achieve superior tracking performance [13]. Praveen Kumar Sekharamantry et al. [14] developed a modified YOLOv7 integrated with ByteTrack for apple tracking and counting tasks. Xinquan Ye et al. [15] introduced an enhanced YOLOv5 integrated with StrongSORT for the dynamic visual tracking and counting of Pine Wilt Disease (PWD)-infected pine trees. These studies show that cascaded frameworks pairing a YOLO detector with a multi-object tracking algorithm perform well in conventional scenarios. However, in complex greenhouse conditions, reliance solely on detection-tracking cascades may induce trajectory fragmentation or ID misassignment, consequently leading to undercounting and duplicate counting.

Kai Yuan et al. [16] proposed an FA–ByteTrack method to track and count flowering kale in specific regions. Shuqin Tu et al. [17] presented a computer vision algorithm integrating YOLOv8n, OC-SORT, and the Central Region Counting Method (CRCM) for passionfruit yield estimation. Although these studies use cascaded frameworks with detection, tracking, and counting strategies, each relies on a single counting region, and the counting strategy itself receives little analysis.

To address these challenges, this study introduces an improved tracking-detection cascade framework with dual-region counting for the accurate counting of cucumber flowers. The work comprises the following:

- Collect cucumber flower images in complex greenhouse environments, create a dataset, and conduct visual analysis;

- Improve the YOLOv8n model to enhance the accuracy of the cucumber flower detection model and achieve model lightweighting in response to the complex greenhouse environment;

- Develop a dual-region counting algorithm and integrate it with the tracking and detection cascade framework to achieve dynamic detection of cucumber flowers.

2. Materials and Methods

2.1. Dataset Construction

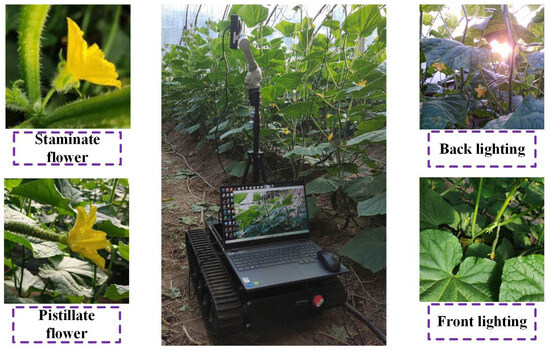

In September 2023, this study collected image data of cucumber flowers in a greenhouse environment. As cucumbers are monoecious plants, both staminate and pistillate flower images were systematically collected. The acquired images were classified into front lighting and back lighting conditions based on illumination direction, as shown in Figure 1.

Figure 1.

Cucumber flower image acquisition in complex greenhouse environments.

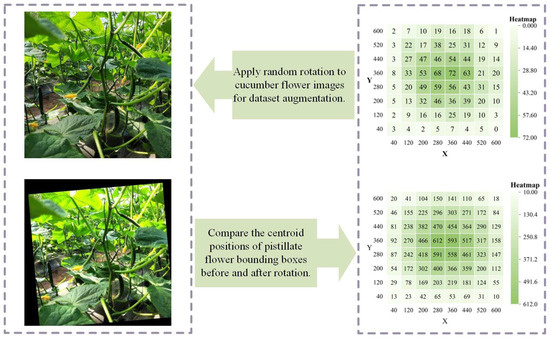

The collected images were filtered to retain 2000 cucumber flower images, and the dataset was then expanded to 10,000 images through data augmentation, including random image rotation. Using the LabelImg annotation tool, pistillate flowers were labeled as “m” and staminate flowers as “fm”. The original XML annotation files were converted into the normalized TXT format used by YOLO models. The final dataset was partitioned into training, validation, and test sets at a 7:2:1 ratio.
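The annotation conversion and the 7:2:1 split described above can be illustrated with a short sketch. It is not the authors' script: the function names, the class-index order (`m` as 0, `fm` as 1), and the example box are assumptions chosen only for illustration. It shows how a corner-format pixel box, as stored in LabelImg XML files, maps to the normalized center-format line that YOLO label files use.

```python
# Hypothetical class-index mapping; the paper does not state the index order.
CLASS_IDS = {"m": 0, "fm": 1}


def voc_to_yolo(xmin, ymin, xmax, ymax, img_w, img_h):
    """Convert a corner-format pixel box to normalized (x_center, y_center, w, h)."""
    x_center = (xmin + xmax) / 2.0 / img_w
    y_center = (ymin + ymax) / 2.0 / img_h
    width = (xmax - xmin) / img_w
    height = (ymax - ymin) / img_h
    return x_center, y_center, width, height


def yolo_label_line(label, box, img_w, img_h):
    """Format one annotation as a YOLO TXT line: class id followed by the box."""
    values = voc_to_yolo(*box, img_w, img_h)
    return f"{CLASS_IDS[label]} " + " ".join(f"{v:.6f}" for v in values)


def split_counts(n_images, ratios=(7, 2, 1)):
    """Return (train, val, test) image counts for an integer ratio split."""
    total = sum(ratios)
    n_train = n_images * ratios[0] // total
    n_val = n_images * ratios[1] // total
    n_test = n_images - n_train - n_val  # remainder goes to the test set
    return n_train, n_val, n_test


if __name__ == "__main__":
    print(yolo_label_line("fm", (100, 200, 300, 400), 640, 640))
    print(split_counts(10000))
```

With the 10,000 augmented images reported above, the split yields 7000 training, 2000 validation, and 1000 test images.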

This study visualized the center coordinates of bounding boxes for pistillate flowers within the dataset. As shown in Figure 2, compared with the original distribution, the bounding-box centers after data augmentation cover the image more uniformly, indicating that augmentation reduces positional bias in the dataset.

Figure 2.

Visualization of image data augmentation.

2.2. Research Methodology

2.2.1. Model Optimization

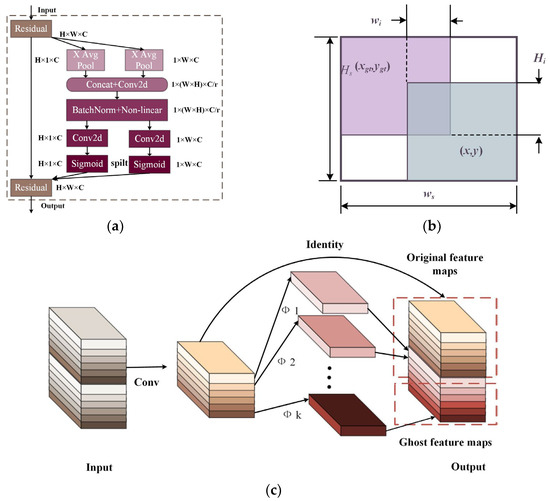

Attention mechanisms derive from human visual perception studies. Owing to information processing limitations, humans selectively focus on salient information while filtering irrelevant perceptible data. Common attention mechanisms include Squeeze-and-Excitation (SE) [18], Convolutional Block Attention Module (CBAM) [19], Simple Attention Mechanism (SimAM) [20], and Coordinate Attention (CA) [21]. The CA module enhances spatial information modeling in convolutional networks, improving target localization. By integrating coordinate-aware channel attention into YOLOv8, it boosts small target detection while preserving model efficiency. The CA module serves as a computational unit to enhance feature representation in neural networks. To improve cucumber flower detection accuracy, this study integrates the CA module into the YOLOv8 architecture, with its structural diagram shown in Figure 3a.

Figure 3.

Schematic diagram of the enhanced modules and loss function used in YOLOv8n-T. (a) Diagram of the CA structure; (b) WIoU; (c) the convolution process of GhostConv.

In computer vision, loss functions quantify discrepancies between predictions and ground truth annotations, serving as critical components in neural network training. The selection of loss functions depends on task-specific requirements. This work employs the Wise-IoU (WIoU) loss function [22], which prioritizes medium-quality cucumber flower samples to enhance model generalization capability and overall performance. The WIoU mechanism is depicted in Figure 3b.

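$R_{WIoU} \in [1, e)$ significantly amplifies $L_{IoU}$ for average-quality anchor boxes.

$L_{IoU} \in [0, 1]$ substantially reduces $R_{WIoU}$ for high-quality anchor boxes. When an anchor box exhibits strong overlap with the target bounding box, the loss function prioritizes minimizing the distance between their centroids. The formal definitions are given in Equations (1) and (2), which are as follows:

$$L_{WIoUv1} = R_{WIoU} \, L_{IoU} \tag{1}$$

$$R_{WIoU} = \exp\left(\frac{(x - x_{gt})^2 + (y - y_{gt})^2}{\left(W_g^2 + H_g^2\right)^{*}}\right) \tag{2}$$

where $(x, y)$ and $(x_{gt}, y_{gt})$ are the centers of the anchor box and the target box, and $W_g$ and $H_g$ denote the dimensions of the minimum enclosing box. To prevent $R_{WIoU}$ from generating convergence-impeding gradients, $W_g$ and $H_g$ are detached from the computational graph (denoted by superscript ∗).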
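To make the behavior of the loss concrete, the following is a minimal sketch of the first version of Wise-IoU as it is commonly stated in the WIoU paper [22]: the IoU loss multiplied by an exponential penalty on the center distance, normalized by the squared size of the minimum enclosing box. The function names and the `(x1, y1, x2, y2)` box layout are assumptions. Because the sketch uses plain floats rather than an autodiff framework, the detachment of the enclosing-box term has no counterpart here.

```python
import math


def iou(box_a, box_b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def wiou_v1(pred, target):
    """Sketch of WIoU v1: IoU loss scaled by a center-distance factor.

    Assumes both boxes have positive width and height.
    """
    l_iou = 1.0 - iou(pred, target)
    # Box centers.
    cx, cy = (pred[0] + pred[2]) / 2.0, (pred[1] + pred[3]) / 2.0
    cx_gt, cy_gt = (target[0] + target[2]) / 2.0, (target[1] + target[3]) / 2.0
    # Width and height of the minimum box enclosing both boxes.
    w_g = max(pred[2], target[2]) - min(pred[0], target[0])
    h_g = max(pred[3], target[3]) - min(pred[1], target[1])
    r_wiou = math.exp(((cx - cx_gt) ** 2 + (cy - cy_gt) ** 2) / (w_g ** 2 + h_g ** 2))
    return r_wiou * l_iou


if __name__ == "__main__":
    print(wiou_v1((0, 0, 2, 2), (0, 0, 2, 2)))  # identical boxes
    print(wiou_v1((0, 0, 2, 2), (1, 1, 3, 3)))  # partially overlapping boxes
```

For identical boxes the IoU loss is zero, so the product vanishes regardless of the distance factor. For offset boxes the factor exceeds 1 and enlarges the loss.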

GhostNet [23] (Huawei Noah’s Ark Lab, 2020) is a lightweight convolutional network whose core module reduces feature redundancy in two stages: a small number of standard convolutions first generate the initial (intrinsic) features, cheap linear operations then produce supplementary “ghost” features from them, and the two feature sets are concatenated to form the output. Liu et al. [24] and Pan et al. [25] embedded GhostConv modules into detection models, significantly reducing computational complexity. To meet the lightweight deployment requirements of cucumber flower detection, this study optimizes feature extraction using GhostConv to balance accuracy and computational cost, as illustrated in Figure 3c.
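A rough parameter count illustrates why the two-stage design is lighter. The sketch below is an illustration under stated assumptions, not a description of the exact layers in YOLOv8n-T: it assumes half of the output channels come from a standard convolution and the other half from a depthwise 5 × 5 operation, and it ignores bias and normalization parameters. The function names and channel sizes are hypothetical.

```python
def conv_params(c_in, c_out, k):
    """Weight count of a standard k x k convolution (bias ignored)."""
    return c_in * c_out * k * k


def ghost_conv_params(c_in, c_out, k, cheap_k=5, ratio=2):
    """Weight count of a Ghost-style convolution (bias ignored).

    A standard convolution produces c_out / ratio intrinsic channels; a
    depthwise cheap_k x cheap_k operation produces the remaining ghost channels.
    """
    primary = c_out // ratio
    ghost = primary * (ratio - 1)
    return c_in * primary * k * k + ghost * cheap_k * cheap_k


if __name__ == "__main__":
    standard = conv_params(64, 128, 3)
    ghost = ghost_conv_params(64, 128, 3)
    print(standard, ghost, round(ghost / standard, 3))
```

Under these assumptions a 64-to-128-channel 3 × 3 layer drops from 73,728 to 38,464 weights, roughly half.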

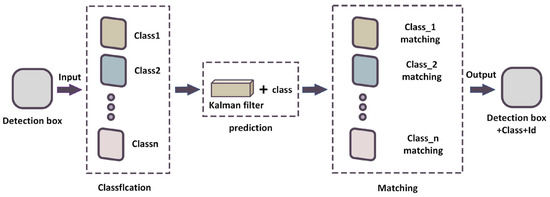

2.2.2. ByteTrack Integration

Multiple Object Tracking (MOT) [26] aims to continuously track multiple targets in video sequences while maintaining consistent identities across frames. This involves associating detected targets with existing tracked objects to address challenges such as occlusions and identity switches.

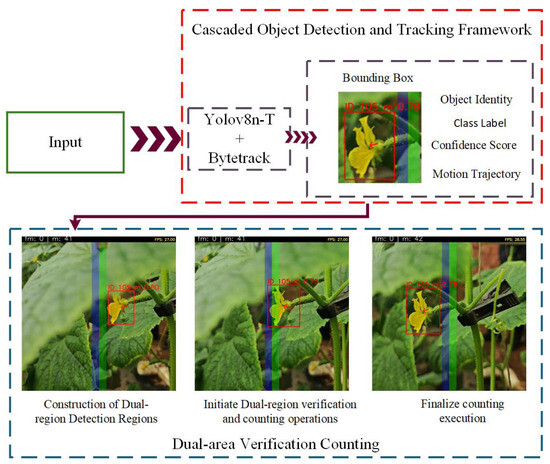

YOLOv8 incorporates the BYTE (ByteTrack) algorithm [11], an innovative data association method designed to enhance MOT accuracy and trajectory consistency. The cucumber flower tracking–counting framework proposed in this study incorporates the ByteTrack tracking algorithm as the tracker, as shown in Figure 4. Following target detection input, bounding boxes are first graded by confidence score. The Kalman filter then predicts states of existing trajectories. A two-stage data association is performed: prioritizing high-confidence box matching, followed by low-confidence box reassociation of unmatched trajectories. Finally, updated tracking targets and their IDs are output.

Figure 4.

ByteTrack.
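The two-stage association can be sketched in a simplified form. The version below is an assumption-laden illustration rather than the ByteTrack implementation: it takes already-predicted track boxes as input (standing in for the Kalman filter step), uses greedy IoU matching where ByteTrack solves an assignment problem, and uses placeholder thresholds (`high`, `low`, `iou_thresh`). It shows the key idea of matching high-confidence boxes first and then giving unmatched trajectories a second chance with low-confidence boxes.

```python
def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def greedy_match(tracks, boxes, iou_thresh):
    """Greedily pair track ids with box indices by descending IoU."""
    candidates = sorted(
        ((iou(tbox, dbox), tid, j)
         for tid, tbox in tracks.items()
         for j, dbox in enumerate(boxes)),
        reverse=True,
    )
    matches, used = {}, set()
    for score, tid, j in candidates:
        if score < iou_thresh:
            break  # remaining candidates overlap even less
        if tid in matches or j in used:
            continue
        matches[tid] = j
        used.add(j)
    unmatched_tracks = [tid for tid in tracks if tid not in matches]
    unmatched_boxes = [j for j in range(len(boxes)) if j not in used]
    return matches, unmatched_tracks, unmatched_boxes


def byte_associate(tracks, detections, high=0.5, low=0.1, iou_thresh=0.3):
    """Two-stage association; detections is a list of (box, score) pairs."""
    high_dets = [d for d in detections if d[1] >= high]
    low_dets = [d for d in detections if low <= d[1] < high]
    # Stage 1: match all tracks against high-confidence boxes.
    m1, left_tracks, new_idx = greedy_match(tracks, [d[0] for d in high_dets], iou_thresh)
    # Stage 2: match the still-unmatched tracks against low-confidence boxes.
    remaining = {tid: tracks[tid] for tid in left_tracks}
    m2, lost, _ = greedy_match(remaining, [d[0] for d in low_dets], iou_thresh)
    assigned = {tid: high_dets[j][0] for tid, j in m1.items()}
    assigned.update({tid: low_dets[j][0] for tid, j in m2.items()})
    new_tracks = [high_dets[j][0] for j in new_idx]  # unmatched high boxes start tracks
    return assigned, sorted(lost), new_tracks
```

In this sketch, unmatched low-confidence boxes are simply dropped, and only unmatched high-confidence boxes start new trajectories.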

2.2.3. Dual-Region Counting Framework

To achieve accurate cucumber flower counting, we integrate three trigger-based counting methods—line-based counting (LC), area range-based counting (AC), and dual-area counting (DC)—into the detection-tracking cascaded framework. The methods are detailed as follows:

Line-based counting (LC): This algorithm establishes a virtual detection line within the monitoring scene. When a target bounding box spatially intersects with this line (as shown in Figure 5a), the system captures the target’s ID and category to execute a single counting operation. The primary limitation of LC lies in its temporal sensitivity to linear detection zones, frequently resulting in missed detections due to transient line interactions.

Figure 5.

Three distinct counting methodologies. (a) Line-based counting; (b) area range-based counting; (c) dual-area counting.

Area range-based counting (AC): The algorithm defines a polygonal detection area. Targets intersecting with this region trigger category/ID recording and single-count operations (Figure 5b). While spatial dimension expansion improves detection fault tolerance through area coverage, AC inherently suffers from duplicate counting when persistent ID drift occurs within the detection zone.

Dual-area counting (DC): The algorithm constructs two spatiotemporally correlated detection zones (Figure 5c). When a target enters Zone 1, its ID and category are logged. A counting operation is triggered only if the same target (verified via ID consistency) subsequently enters Zone 2; otherwise, trajectory mismatches are discarded. This dual-validation mechanism expands detection coverage (through inter-zone span) while eliminating duplicate counts via spatiotemporal consistency constraints.
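The dual-area rule can be expressed in a few lines. The sketch below is one possible reading of it, with several assumptions that the text does not specify: zones are axis-aligned rectangles, a target "enters" a zone when its bounding-box center lies inside it, and the class logged in Zone 1 is the one that is counted. The class name `DualAreaCounter` and its methods are hypothetical.

```python
class DualAreaCounter:
    """Count a tracked target once: seen in zone 1 first, then in zone 2."""

    def __init__(self, zone1, zone2):
        self.zone1 = zone1          # (x1, y1, x2, y2) rectangle
        self.zone2 = zone2
        self.seen_in_zone1 = {}     # track id -> class logged in zone 1
        self.counted = set()        # track ids already counted
        self.counts = {}            # class -> cumulative count

    @staticmethod
    def _inside(point, zone):
        x, y = point
        return zone[0] <= x <= zone[2] and zone[1] <= y <= zone[3]

    def update(self, track_id, label, box):
        """Process one tracked box from the current frame and return the counts."""
        center = ((box[0] + box[2]) / 2.0, (box[1] + box[3]) / 2.0)
        if self._inside(center, self.zone1):
            # Log ID and class on first entry into zone 1.
            self.seen_in_zone1.setdefault(track_id, label)
        if (self._inside(center, self.zone2)
                and track_id in self.seen_in_zone1
                and track_id not in self.counted):
            # Same ID reached zone 2: count it exactly once.
            self.counted.add(track_id)
            logged = self.seen_in_zone1[track_id]
            self.counts[logged] = self.counts.get(logged, 0) + 1
        return dict(self.counts)


if __name__ == "__main__":
    counter = DualAreaCounter(zone1=(0, 0, 100, 50), zone2=(0, 60, 100, 110))
    counter.update(7, "fm", (40, 10, 60, 30))         # enters zone 1
    print(counter.update(7, "fm", (40, 70, 60, 90)))  # reaches zone 2
```

A target whose ID first appears in Zone 2, for example after an ID switch, is never counted under this rule, which is how the scheme suppresses duplicates at the cost of discarding mismatched trajectories.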

The video stream is processed through a cascaded YOLOv8n-T and ByteTrack framework for object tracking. Each cucumber flower target is assigned a unique ID, with detection results (bounding boxes, class labels, and confidence scores) overlaid on the video frames. A dual-zone counting strategy is then applied to quantify yellow cucumber flowers, with cumulative counts displayed in the detection window. The workflow of the counting methodology is illustrated in Figure 6.

Figure 6.

Cucumber flower counting algorithm integrating YOLOv8n-T with ByteTrack cascaded framework and dual-region counting.

2.3. Evaluation Indicators

This study utilizes performance and complexity as evaluation metrics for the target detection model. The performance metrics include precision ($P$), recall ($R$), mean average precision ($mAP$), and $F1$. The $F1$ serves as a comprehensive measure of overall model performance, calculated as the harmonic mean of $P$ and $R$ over the interval [0, 1], where $P$ and $R$ independently assess model quality. The formal definitions are given in Equations (3)–(7), which are as follows:

$$P = \frac{TP}{TP + FP} \tag{3}$$

$$R = \frac{TP}{TP + FN} \tag{4}$$

$$AP = \int_{0}^{1} P(R)\, dR \tag{5}$$

$$mAP = \frac{1}{N} \sum_{i=1}^{N} AP_i \tag{6}$$

$$F1 = \frac{2 \times P \times R}{P + R} \tag{7}$$

where $TP$, $FP$, and $FN$ denote true positive, false positive, and false negative counts, respectively. $N$ represents the object category count.
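As a quick numerical illustration of these detection metrics, the sketch below computes precision, recall, and F1 from true positive, false positive, and false negative counts, and averages per-class AP values into mAP. The function names and the example counts are made up for illustration and are not results from this study.

```python
def precision_recall_f1(tp, fp, fn):
    """Precision, recall, and their harmonic mean (F1) from detection counts."""
    p = tp / (tp + fp) if (tp + fp) else 0.0
    r = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = 2 * p * r / (p + r) if (p + r) else 0.0
    return p, r, f1


def mean_average_precision(ap_per_class):
    """Mean of the per-class average precision values."""
    return sum(ap_per_class) / len(ap_per_class)


if __name__ == "__main__":
    # Hypothetical counts: 80 correct detections, 20 false alarms, 20 misses.
    print(precision_recall_f1(tp=80, fp=20, fn=20))
    # Hypothetical per-class AP values for two flower classes.
    print(mean_average_precision([0.9, 0.8]))
```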

Model complexity is quantified through three metrics: parameter count (Params), floating-point operations (FLOPs), and model size. Their computational formulations are given in Equations (8)–(10) as follows:

where , , and denote input dimensions, kernel size, and output dimensions, respectively. are the output feature map dimensions. is the kernel size. is the stride. and are the input/output channels.
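For orientation, the sketch below computes these quantities for a single convolutional layer using common textbook conventions. These conventions are assumptions and may differ from the formulations used in this study: parameters are counted as weights plus one bias per output channel, FLOPs as two operations per multiply-accumulate with bias ignored, and model size as 4 bytes per parameter. The function name and the example layer are hypothetical.

```python
def conv_layer_cost(c_in, c_out, k, h_in, w_in, stride=1, padding=0):
    """Return (params, flops, size_mb, (h_out, w_out)) for one conv layer."""
    # Output feature map dimensions.
    h_out = (h_in + 2 * padding - k) // stride + 1
    w_out = (w_in + 2 * padding - k) // stride + 1
    # Weights plus one bias per output channel.
    params = (c_in * k * k + 1) * c_out
    # Multiply-accumulates over every output position (bias ignored).
    macs = c_in * k * k * c_out * h_out * w_out
    flops = 2 * macs  # assumption: 1 MAC = 2 FLOPs
    size_mb = params * 4 / 1024 ** 2  # assumption: float32 storage
    return params, flops, size_mb, (h_out, w_out)


if __name__ == "__main__":
    # Hypothetical first layer: 3 -> 16 channels, 3 x 3 kernel, stride 2, 640 x 640 input.
    print(conv_layer_cost(3, 16, 3, 640, 640, stride=2, padding=1))
```

Summing such per-layer figures over a network gives totals comparable in kind to the Params and FLOPs reported for the models, although tools differ on whether a multiply-accumulate counts as one or two operations.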

Counting performance is evaluated through counting precision ($P_c$), average counting precision ($AP_c$), and mean average counting precision ($mAP_c$), defined in Equations (11)–(13) as follows:

where and denote automated and ground-truth counts, respectively, and is the total number of test videos.

2.4. Experimental Configuration and Hyperparameters

YOLOv8 (You Only Look Once version 8) [27], as an upgraded iteration of single-stage object detection algorithms, achieves end-to-end detection through a single forward propagation. Its architecture consists of the following three parts: the Backbone (a CSPDarknet-based feature extraction network), the Neck (a feature pyramid fusion module), and the Head (a decoupled detection head). YOLOv8 improves detection accuracy while maintaining real-time performance, even in complex multi-object scenarios.

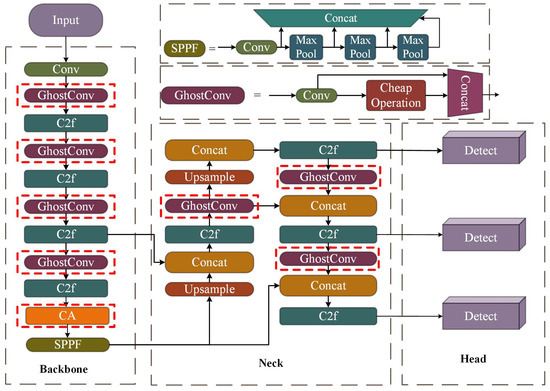

This study adopts YOLOv8n for object detection tasks, incorporating CA (Coordinate Attention) detection layers and Ghost modules into the YOLOv8n architecture, with the WIoU (Wise Intersection over Union) loss function. Except for the input layer, all standard convolutional layers are replaced with Ghost convolution modules to reduce the number of parameters through feature redundancy compression; the CA module is embedded at the end of the backbone to enhance spatial position perception. The structure of the modified YOLOv8n-T is illustrated in Figure 7.

Figure 7.

Structure of the YOLOv8n-T. (The content in the red dashed box is the modified module in the YOLOv8n-T network structure).

Model training and system development were executed on a unified hardware platform, with detailed specifications of the computational environment provided in Table 1. The collected dataset served as the input data, with images resized to a resolution of 640 × 640 pixels. Training hyperparameters were configured as follows: initial learning rate set to 0.01 and a batch size of 16. The model converged by epoch 100; therefore, this epoch was selected for evaluation.

Table 1.

Information on hardware and environment.

3. Experiments and Analysis

This section presents the experimental analysis of this study. First, a systematic investigation into the enhanced YOLOv8n-T model is detailed, incorporating attention mechanisms, ablation studies, multi-model comparisons, and visual analytics. These experiments validate the model’s efficacy for cucumber flower detection. Second, cucumber flower counting experiments were conducted using a YOLOv8n-T and ByteTrack cascaded framework integrated with three counting strategies. The analysis evaluates the effectiveness of the proposed counting methodology.

3.1. Attention Mechanism Evaluation

Three attention modules—Squeeze-and-Excitation (SE), Convolutional Block Attention Module (CBAM), and Simple Attention Mechanism (SimAM)—were selected to replace the CA module in the YOLOv8n-T model for comparative experiments. The performance of different attention mechanisms in cucumber flower detection tasks was evaluated, with experimental results presented in Table 2.

Table 2.

Results of a comparative trial of five attention mechanisms.

The Coordinate Attention (CA) module enhances channel relationship modeling and long-range dependency capture through spatial coordinate encoding, significantly improving feature localization accuracy for cucumber flowers. Experimental results demonstrate that the CA module outperforms the other attention mechanisms in both mAP and F1-score. Compared to the parameter-free SimAM module, CA improves mAP and F1 by 0.4% and 0.1%, respectively. Relative to SE and CBAM, CA achieves a 0.9% higher mAP, with F1-score advantages of 0.2% and 1.1%, respectively. The integration of parametric attention modules (SE, CBAM, CA) introduces no significant changes to the parameter count (Params), computational cost (FLOPs), or model size. In contrast, the parameter-free SimAM module, despite its feature extraction capability, increases Params, FLOPs, and model size in these experiments, indicating that non-parametric architectures may still introduce additional computational overhead.

3.2. Ablation Study

In sophisticated neural networks, ablation studies elucidate network behavior through selective component removal. To validate the modified model’s performance gains, we sequentially removed three enhancement modules in ablation experiments. The notation “√” indicates the inclusion of a specific improvement, while “×” denotes its exclusion, with experimental results detailed in Table 3.

Table 3.

Findings of ablation experiments.

Seven ablation experiments (Improvement versions, IPV-1 to IPV-7) were performed. As shown in the table, incorporating GhostConv reduces the parameter count (Params), computational complexity (FLOPs), and model size for the IPV-2, IPV-4, and IPV-6 variants. This demonstrates that the module effectively compresses redundant computations through feature reuse, providing structural optimization for lightweight deployment. Concurrently, the improved mAP of IPV-3, IPV-4, and IPV-5 confirms that the WIoU loss function addresses localization deviations in complex scenes by suppressing background noise interference and enhancing spatial feature learning of floral targets, thereby directly improving detection robustness. The three improvements enhance YOLOv8n-T's extraction of cucumber flower features and raise the model's mAP and F1.

Combined in IPV-7, the three improvements raise mAP and F1 by 3.0% and 2.6%, respectively, compared with YOLOv8n. The parameter count, FLOPs, and model size of IPV-7 are reduced by 0.3 × 10⁶, 0.6 G, and 0.52 MB, respectively, compared with YOLOv8n.

3.3. Comparative Analysis of Different Models

YOLOv3-tiny, YOLOv7-tiny, YOLOv5n, and YOLOv10n are all lightweight models. Comparative experiments with these models validate the performance superiority of YOLOv8n-T in cucumber flower detection, with results presented in Table 4.

Table 4.

Results of various one-stage detection algorithms for cucumber flower detection.

As the table shows, YOLOv8n-T attains the highest F1 and ties for the highest mAP. Among the other lightweight models, YOLOv7-tiny performs best, with an mAP of 86.9%, equal to that of YOLOv8n-T; however, the F1 of YOLOv8n-T is 0.8% higher than that of YOLOv7-tiny. The model with the smallest parameter count, FLOPs, and model size is YOLOv5n, which is 0.9 × 10⁶, 3.4 G, and 1.55 MB lower than YOLOv8n-T, respectively. However, the mAP and F1 of YOLOv8n-T are 15.7% and 11.5% higher than those of YOLOv5n, respectively.

3.4. Test Set Comparison

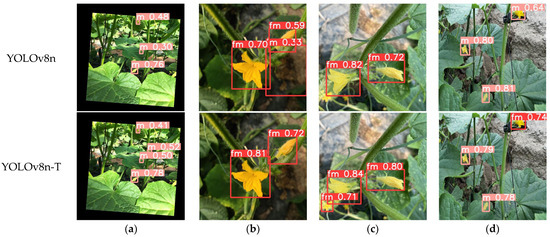

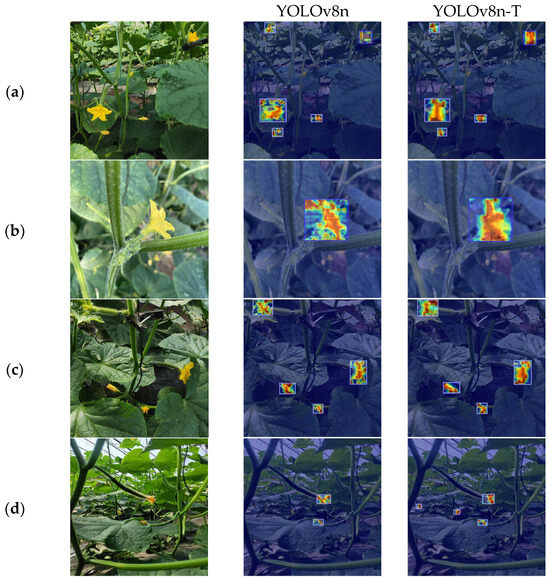

To evaluate and visually compare the efficacy of the YOLOv8n-T and YOLOv8n models in real-world detection scenarios, object detection was performed on the test set using each model, respectively, and the detection results were visualized. The test results are shown in Figure 8.

Figure 8.

Detection results of the different object detection models. ((a,c) compare missed detections, while (b,d) compare false detections).

The results reveal that YOLOv8n suffers from missed and false detections due to background interference. In contrast, YOLOv8n-T demonstrates superior performance with higher confidence scores and no missed/false detections. The GhostConv module in YOLOv8n-T effectively addresses missed detections (Figure 8a,c), while the CA module reduces classification errors and false positives (Figure 8b,d). The WIoU loss enhances bounding box regression by dynamically adjusting regression weights, as shown in Figure 8b.

3.5. Heatmap Analysis

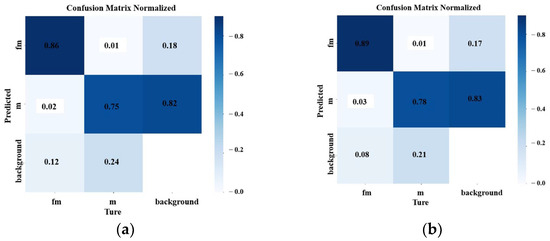

To compare the performance of YOLOv8n and YOLOv8n-T in the recognition of cucumber staminate flowers (fm), pistillate flowers (m), and background, the normalized confusion matrices of the two models were analyzed using the test set, as shown in Figure 9.

Figure 9.

Confusion matrices. ((a) YOLOv8n; (b) YOLOv8n-T).

The figure compares the normalized confusion matrices of the YOLOv8n and YOLOv8n-T models for staminate flowers, pistillate flowers, and the background. The accuracy of YOLOv8n-T in recognizing staminate flowers increased from 86% to 89%, and the recognition rate of pistillate flowers increased from 75% to 78%, both outperforming YOLOv8n. The proportion of background misidentified as pistillate flowers decreased from 24% to 21%, indicating that the resistance of YOLOv8n-T to background interference has slightly improved. However, the proportion of background misidentified as staminate flowers increased by 1%, suggesting that there is still room for improvement in distinguishing small targets from the background.

Overall, YOLOv8n-T has a stronger ability to distinguish staminate flowers, pistillate flowers, and background, with more stable recognition, and has greater application potential in complex greenhouse environments.

Gradient-weighted Class Activation Mapping (Grad-CAM) visualizes model attention via heatmaps to interpret deep neural network focus. Grad-CAM heatmaps visualize region-specific attention in YOLOv8n-T, where deeper red hues indicate higher activation intensity on cucumber flower targets, and uniform color distribution signifies desirable model performance with robust feature learning across cucumber flower targets, as illustrated in Figure 10.

Figure 10.

Grad-CAM. ((a–d) represent the different gradient response values of the YOLOv8n and YOLOv8n-T models in detecting cucumber flowers. Red indicates high gradient response values, while blue indicates low response values).

The heatmaps demonstrate that YOLOv8n-T exhibits stronger attention concentration on cucumber flowers in complex environments compared to YOLOv8n. YOLOv8n-T shows higher activation in floral center regions and enhanced edge response intensity (Figure 10a–c). Its robustness ensures reliable detection of small targets without missed cases (Figure 10b). This interpretability analysis confirms that YOLOv8n-T achieves improved detection accuracy by amplifying feature responses in target regions, validating the mechanism of enhanced attention distribution.

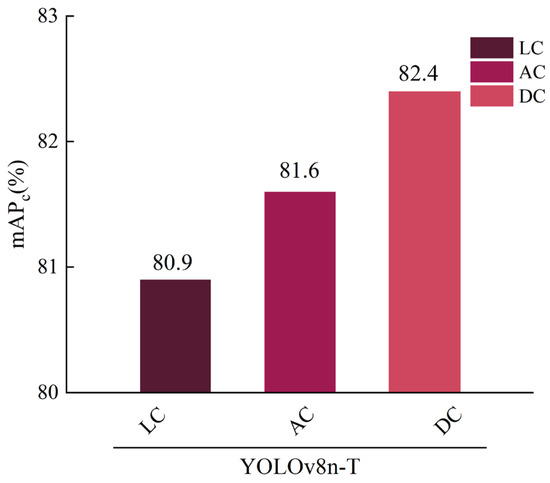

3.6. Counting System Evaluation

To validate the accuracy of the three counting methods, this study evaluates the integrated framework combining YOLOv8n-T with ByteTrack using recorded videos. First, we manually counted the total number of cucumber flowers in each video (the ground-truth count), then applied each of the three counting strategies to obtain the automated count, and finally calculated the mAPc.

Key frames were also extracted to show how each counting method behaves in practice and to expose the problems that arise during detection. The dual-area counting (DC) method achieves the highest mAPc of 82.4%, outperforming line-based counting (LC) and area range-based counting (AC) by 1.5% and 0.8%, respectively, as shown in Figure 11. Practical detection results extracted from video frames (Figure 12) reveal that LC and AC exhibit missed counts, whereas DC accurately tracks and counts cucumber flowers. These results confirm that DC enhances counting robustness by expanding detection coverage while eliminating duplicate counts through spatiotemporal consistency constraints.

Figure 11.

Performance comparison of three counting methodologies.

Figure 12.

Real-world counting results across algorithms. (Purple: missed targets; red: successfully counted targets).

4. Discussion

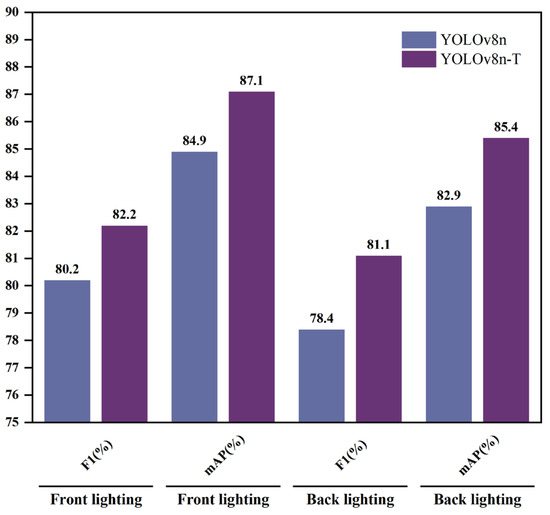

4.1. Impact of Front Lighting and Back Lighting on Detection

Lighting conditions critically influence visual recognition outcomes. By partitioning the test set into front lighting and back lighting subsets (Figure 13), this study evaluates YOLOv8n-T’s robustness. Experimental results indicate superior performance under frontlit conditions: YOLOv8n-T achieves 1.7% higher mAP and 1.1% higher F1 compared to backlit scenarios. Regardless of lighting, YOLOv8n-T outperforms YOLOv8n, with mAP improvements of 2.2% (front lighting) and 2.5% (back lighting), and F1 gains of 2.0% and 2.2%, respectively.

Figure 13.

Target detection results under different light intensities.

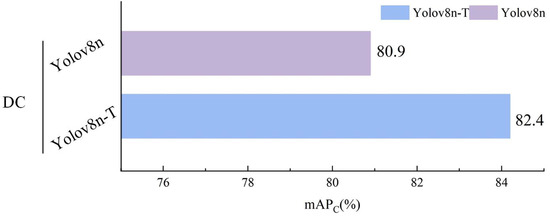

4.2. Detector Impact on Tracking Performance

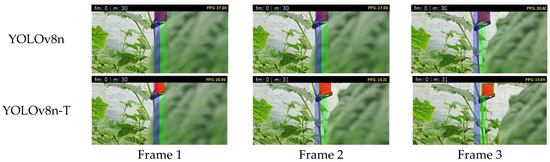

The dual-area counting method was tested using YOLOv8n and YOLOv8n-T as detectors on cucumber flower videos. First, we manually counted the total number of cucumber flowers in each video (the ground-truth count), then used YOLOv8n and YOLOv8n-T as detectors to obtain the automated counts, and finally calculated the mAPc.

The mAPc values obtained with the YOLOv8n and YOLOv8n-T detectors are shown in Figure 14. The mAPc of YOLOv8n-T is 1.5% higher than that of YOLOv8n. The actual keyframe detection results are shown in Figure 15. Sampled frames demonstrate that YOLOv8n fails to detect foliage-occluded flowers, while YOLOv8n-T accurately identifies them, enabling DC-based counting.

Figure 14.

Comparison of counting accuracy between YOLOv8n-T and YOLOv8n.

Figure 15.

Counting performance comparison between YOLOv8n and YOLOv8n-T. (Purple: missed targets; red: successfully counted targets).

The experimental results show that the accuracy of the detector directly affects the accuracy of the counting method. YOLOv8n-T exhibits stronger robustness in detecting occluded targets, effectively preventing missed counts and improving overall counting accuracy.

4.3. Technical Advancements and Future Directions in Video-Based Crop Counting

This study proposes a cascaded framework integrating YOLOv8n-T, ByteTrack, and a dual-region counting strategy for dynamic cucumber flower counting in video streams. Prior research by Juan Ballena-Ruiz et al. [28] employed convolutional neural networks for boll counting, yet their image-based approach lacked dynamic video monitoring capabilities. While Mengcheng Wu et al. [29] developed the lightweight Cabbage-YOLO model for Chinese cabbage counting, and Dongxuan Cao et al. [30] proposed an improved YOLOv7-Tiny-PDE network for apple counting—both integrating detection models with tracking algorithms for video analysis—their research inadequately addressed counting strategies. Notably, Aichen Wang et al. [31] introduced an optimized region-tracking method with a lightweight YOLO11n network to estimate tomato counts across maturity stages, crucially incorporating Particle Swarm Optimization (PSO) to enhance detection regions and counting precision. This work provides valuable insights for our future research direction; we will further optimize detection region design to enhance the precision of our counting methodology.

5. Conclusions

This study constructed a specialized cucumber flower dataset through image collection in a greenhouse environment, followed by screening and data augmentation, with visual comparative analysis validating its effectiveness in supporting cucumber flower target detection requirements.

This study proposes a dual-region counting algorithm integrating YOLOv8n-T with a ByteTrack cascaded framework for cucumber flower quantification. The YOLOv8n-T detection model improves flower feature extraction and reduces the computational load by introducing the CA module and a lightweight module and by replacing the loss function. The integration of ByteTrack tracking with dual-region counting enhances robustness in occlusion scenarios, enabling precise flower counting in complex greenhouse environments.

The lightweight YOLOv8n-T achieves 86.9% mAP and 82.1% F1-score metrics, outperforming YOLOv8n by 3.0% and 2.6%, respectively. With 2.7 million parameters, 7.5 GFLOPs, and a 5.45 MB model size, YOLOv8n-T reduces resource requirements by 0.3 M parameters, 0.6 GFLOPs, and 0.52 MB compared to YOLOv8n. The integrated framework attains 82.4% mAPc, surpassing LC and AC methods by 1.5% and 0.8%, respectively.

This work provides a novel solution for intelligent cucumber flower counting in greenhouse settings, empowering growers to monitor floral sex ratios in real-time, reduce production costs, enhance efficiency, and advance precision agriculture management.

Author Contributions

Conceptualization, L.S.; methodology, L.S. and X.H.; software, L.S., H.Z. and X.M.; investigation, X.M. and H.Z.; resources, X.H.; data curation, S.Z.; writing—original draft, L.S.; writing—review and editing, L.S., X.H. and S.Z. All authors have read and agreed to the published version of the manuscript.

Funding

Supported by the 2115 talent development program of China Agricultural University, and the earmarked fund for the China Agriculture Research System (CARS-28).

Data Availability Statement

The original contributions presented in the study are included in the article; further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Zhang, S.; Tan, F.; Chung, C.; Slavkovic, F.; Devani, R.S.; Troadec, C.; Marcel, F.; Morin, H.; Camps, C.; Gomez Roldan, M.V.; et al. The control of carpel determinacy pathway leads to sex determination in cucurbits. Science 2022, 378, 543–549.

- Chen, H.; Bai, Z.; Chu, Z.; Wen, Y. Study on the effect of the chiral herbicide imazethapyr on flowering initiation in Arabidopsis thaliana. Ecotoxicol. Environ. Saf. 2025, 293, 118049.

- An, Q.; Cui, Y.; Tong, W.; Liu, Y.; Zhao, B.; Wei, L. StraTracker: A dynamic counting method for growing strawberries based on multi-target tracking. Comput. Electron. Agric. 2024, 227, 109564.

- Thorp, K.R.; Dierig, D.A. Color image segmentation approach to monitor flowering in lesquerella. Ind. Crops Prod. 2011, 34, 1150–1159.

- Lin, P.; Lee, W.S.; Chen, Y.M.; Peres, N.; Fraisse, C. A deep-level region-based visual representation architecture for detecting strawberry flowers in an outdoor field. Precis. Agric. 2019, 21, 387–402.

- Xia, X.; Chai, X.; Li, Z.; Zhang, N.; Sun, T. MTYOLOX: Multi-transformers-enabled YOLO for tree-level apple inflorescences detection and density mapping. Comput. Electron. Agric. 2023, 209, 107803.

- Li, G.; Suo, R.; Zhao, G.; Gao, C.; Fu, L.; Shi, F.; Dhupia, J.; Li, R.; Cui, Y. Real-time detection of kiwifruit flower and bud simultaneously in orchard using YOLOv4 for robotic pollination. Comput. Electron. Agric. 2022, 193, 106641.

- Li, G.; Fu, L.; Gao, C.; Fang, W.; Zhao, G.; Shi, F.; Dhupia, J.; Zhao, K.; Li, R.; Cui, Y. Multi-class detection of kiwifruit flower and its distribution identification in orchard based on YOLOv5l and Euclidean distance. Comput. Electron. Agric. 2022, 201, 107342.

- Wang, Z.; Yang, X. Moving Target Detection and Tracking Based on Pyramid Lucas-Kanade Optical Flow. In Proceedings of the 2018 IEEE 3rd International Conference on Image, Vision and Computing (ICIVC), Chongqing, China, 27–29 June 2018.

- Singla, N. Motion Detection Based on Frame Difference Method. Int. J. Inf. Comput. Technol. 2014, 4, 1559–1565.

- Zhang, Y.; Sun, P.; Jiang, Y.; Yu, D.; Weng, F.; Yuan, Z.; Luo, P.; Liu, W.; Wang, X. Bytetrack: Multi-object tracking by associating every detection box. In Proceedings of the European Conference on Computer Vision (ECCV), Tel Aviv, Israel, 23–27 October 2022.

- Nir, A.; Orfaig, R.; Bobrovsky, B.-Z. Bot-sort: Robust associations multi-pedestrian tracking. arXiv 2022, arXiv:2206.14651.

- Li, X.; Wang, X.; Ong, P.; Yi, Z.; Ding, L.; Han, C. Fast Recognition and Counting Method of Dragon Fruit Flowers and Fruits Based on Video Stream. Sensors 2023, 23, 8444.

- Sekharamantry, P.K.; Melgani, F.; Malacarne, J.; Ricci, R.; de Almeida Silva, R.; Marcato Junior, J. A Seamless Deep Learning Approach for Apple Detection, Depth Estimation, and Tracking Using YOLO Models Enhanced by Multi-Head Attention Mechanism. Computers 2024, 13, 83.

- Ye, X.; Pan, J.; Shao, F.; Liu, G.; Lin, J.; Xu, D.; Liu, J. Exploring the potential of visual tracking and counting for trees infected with pine wilt disease based on improved YOLOv5 and StrongSORT algorithm. Comput. Electron. Agric. 2024, 218, 108671.

- Yuan, K.; Wang, Q.; Mi, Y.; Luo, Y.; Zhao, Z. Improved Feature Fusion in YOLOv5 for Accurate Detection and Counting of Chinese Flowering Cabbage (Brassica campestris L. ssp. chinensis var. utilis Tsen et Lee) Buds. Agronomy 2024, 14, 42.

- Tu, S.; Huang, Y.; Huang, Q.; Liu, H.; Cai, Y.; Lei, H. Estimation of passion fruit yield based on YOLOv8n+OC-SORT+CRCM algorithm. Comput. Electron. Agric. 2025, 229, 109727.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-Excitation Networks. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018.

- Xianghe, Z.; Chongyang, W.; Hongen, L.; Zhangwei, Y. Improved ResNet-50 model for identifying defects on wood surfaces. Signal Image Video Process. 2023, 17, 3119–3126.

- Zhang, L.-Q.; Liu, Z.-T.; Jiang, C.-S. An Improved SimAM Based CNN for Facial Expression Recognition. In Proceedings of the 41st Chinese Control Conference (CCC), Hefei, China, 25–27 July 2022; pp. 582–586.

- Qibin, H.; Daquan, Z.; Jiashi, F. Coordinate Attention for Efficient Mobile Network Design. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020.

- Tong, Z.; Chen, Y.; Xu, Z.; Yu, R. Wise-IoU: Bounding Box Regression Loss with Dynamic Focusing Mechanism. arXiv 2023.

- Han, K.; Wang, Y.; Tian, Q.; Guo, J.; Xu, C.; Xu, C. GhostNet: More Features From Cheap Operations. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 1577–1586.

- Jun, L.; Qiqin, C.; Fumin, Z.; Yintian, Z.; Lyuchao, L.; Guo, F. BiGA-YOLO: A Lightweight Object Detection Network Based on YOLOv5 for Autonomous Driving. Electronics 2023, 12, 2745.

- Pan, L.; Duan, Y.; Zhang, Y.; Xie, B.; Rui, Z. A lightweight algorithm based on YOLOv5 for relative position detection of hydraulic support at coal mining faces. J. Real-Time Image Process. 2023, 20, 40.

- Luo, W.; Xing, J.; Zhang, X.; Zhao, X.; Kim, T.K. Multiple Object Tracking: A Literature Review. Artif. Intell. 2021, 293, 103448.

- Ultralytics. YOLOv8. 2023. Available online: https://github.com/ultralytics/ultralytics (accessed on 17 January 2024).

- Ballena-Ruiz, J.; Arcila-Diaz, J.; Tuesta-Monteza, V. Automated Detection and Counting of Gossypium barbadense Fruits in Peruvian Crops Using Convolutional Neural Networks. AgriEngineering 2025, 7, 152.

- Wu, M.; Yuan, K.; Shui, Y.; Wang, Q.; Zhao, Z. A Lightweight Method for Ripeness Detection and Counting of Chinese Flowering Cabbage in the Natural Environment. Agronomy 2024, 14, 1835.

- Cao, D.; Luo, W.; Tang, R.; Liu, Y.; Zhao, J.; Li, X.; Yuan, L. Research on Apple Detection and Tracking Count in Complex Scenes Based on the Improved YOLOv7-Tiny-PDE. Agriculture 2025, 15, 483.

- Wang, A.; Xu, Y.; Hu, D.; Zhang, L.; Li, A.; Zhu, Q.; Liu, J. Tomato Yield Estimation Using an Improved Lightweight YOLO11n Network and an Optimized Region Tracking-Counting Method. Agriculture 2025, 15, 1353.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).