2.3.1. Principles of Sorghum Tracking Process Based on DeepSort Algorithm

In video detection, sorghum spikes frequently reappear in consecutive frames, resulting in high similarity between adjacent frames. To eliminate duplicate counting, the system needs to predict the position of the target in subsequent frames based on the current frame, and then perform continuous tracking through trajectory computation, as shown in

Figure 5. The DeepSort algorithm primarily consists of three key stages: feature extraction, feature matching, and position prediction.

First, an image preprocessing pipeline is constructed to enhance the quality of the input data. Then, target detection is performed through the improved YOLOv8s-GOLD-LSKA network architecture, followed by the development of a dynamic prediction and data association mechanism. This ultimately forms a complete closed-loop tracking system. This study focuses on the sorghum spike phenotype observation scenario and highlights the algorithmic principles behind the two core modules: state prediction and target matching.

- (1)

State estimation and Kalman filtering

DeepSort’s state estimation involves predicting and correcting the motion trajectory of each tracked object. The object’s trajectory is represented by an 8-dimensional state vector [u, v, y, h, ., ., ]. where u, v are the coordinates of the detection box’s center in the current frame, y is the aspect ratio (width-to-height) of the predicted bounding box, h is the height of that box, and ., ., and encode the temporal velocities of u, v, y, and h, once the current state vector is obtained, a Kalman filter—a highly efficient autoregressive filter—is applied to predict the object’s next state. Its basic operations are as follows:

Here, U(k) denotes the value of the system input at time k; A is the state transition matrix; B is the control matrix; X(k − 1|k − 1) is the system’s estimate at the previous time step; and X(k|k − 1) is the predicted state at time k based on the preceding state.

where

P (

k − 1|

k − 1) and

P (

k|k − 1) are the covariances of the previous states

X (

k − 1|

k − 1) and

X (

k|k − 1), respectively.

Q is the covariance matrix of system noise.

- (c)

Kalman gain calculation

K is the Kalman gain value; R is the covariance matrix of noise; and H is the observation matrix.

- (d)

Status update

Z(k) denotes the noisy measurement at time k; and X(k|k) is the optimal state estimate at time k, computed from the measurement at k, the prior estimate, and the Kalman gain.

- (e)

Covariance update

I denotes the unit matrix; P (k − 1|k − 1) denotes the updated covariance matrix.

When labeling multiple targets in video tracking, three scenarios can arise. Correct tracking: a target detected in the previous frame is successfully associated—via its predicted position—with a detection in the current frame, and the tracking loop continues. Target loss: a target detected in the previous frame fails to match any detections for the next

n frames, at which point it is removed from the tracking list. New target appearance: A detection in the current frame does not match any existing tracks, but continues to be matched in subsequent frames, and is therefore initialized as a new target. Flowchart of the tracking system as shown in

Figure 6.

- (2)

Feature matching and similarity measure

The matching problem is the core component of the DeepSort algorithm, whose goal is to correctly associate detection bounding boxes in the current frame with existing tracks. Building on the Sort framework, DeepSort uses the IoU between current frame detections and tracker-predicted boxes as association information and employs two key similarity metrics—Mahalanobis distance and appearance features—to resolve matching from the perspectives of motion consistency and appearance similarity, respectively.

Mahalanobis distance accounts for uncertainty in the state by using the Kalman filter to predict a track’s state, such as its position and velocity, and then computing the motion similarity between a detection bounding box and that predicted state. This approach effectively handles sensor noise and inaccuracies in the motion model. The formula is given as follows:

where

d is the state vector of the detection frame,

t is the state vector of the predicted tracking trajectory, and (

)

T is the transpose of both deviation vectors.

Appearance similarity matching addresses the problem of identity maintenance when tracked targets are occluded, deformed, or cross paths. It extracts feature vectors for both detection bounding boxes and trajectories using the detection model, then computes the cosine similarity between the detection features and the track features. Even if a target temporarily disappears or its motion trajectory changes, appearance features still provide a stable basis for correct association. The formula is as follows:

where

fd and

ft denote the appearance feature vectors of the detection frame and tracking trajectory, respectively.

- (3)

Cascade matching optimization mechanism

To address the high false detection rate and frequent identity switches of the Sort algorithm, DeepSort introduces a cascade matching strategy, as shown in

Figure 7. This mechanism establishes a layered processing priority based on the duration of trajectory loss: short-term lost trajectories are prioritized for matching, followed by long-term lost trajectories, thereby enhancing the continuity of high-frequency target tracking. The Hungarian algorithm is used to perform optimal matching assignments between detection boxes and predicted trajectories, outputting three types of states: unmatched trajectories, unmatched detections, and matched trajectories. This effectively improves the robustness of trajectory association in complex scenes.

The cascade matching strategy assigns an initial timer value of 0 to each trajectory. When a trajectory fails to be matched, the timer value is incremented by 1; otherwise, it is reset to 0. This process records the time elapsed since the last successful match for each trajectory. After a trajectory is successfully matched consecutively for n times, its status is changed from unconfirmed to confirmed in order to reduce the impact of false detections by the detector. Unconfirmed trajectories are then matched using the IoU method, while confirmed trajectories are subject to cascade matching. In cascade matching, unmatched trajectories are no longer directly discarded. Instead, a default save time of 30 frames is set, during which the prediction and updating of unmatched trajectories are maintained. If a trajectory is not successfully re-matched after 30 frames, it is then deleted. This approach provides better detection and tracking performance when the target undergoes occlusion and can effectively preserve the target’s ID. In this study, during video detection, occlusion is commonly observed between sorghum spikes. The use of cascade matching significantly enhances tracking performance and improves detection accuracy. Since the camera moves slowly during video capture, after careful consideration, the parameters n and max_age are set to 3 and 40, respectively, to achieve better tracking results.

2.3.2. Improved Sorghum Spike Detection Model Based on DeepSort Algorithm

This study, based on the improved YOLOv8s-GOLD-LSKA object detection model, integrates the DeepSort multi-object tracking algorithm to build a real-time detection and counting system for sorghum spikes. As shown in

Figure 8, this technical approach consists of a dual processing flow: first, the pre-trained YOLOv8s-GOLD-LSKA model is used for frame-by-frame object detection on the video sequence to extract the spatial feature information of sorghum spikes; then, the DeepSort algorithm is employed to build a spatiotemporal association model, enabling continuous tracking and identity preservation of the target trajectories across frames. In the target tracking process, by setting the trajectory matching threshold as the condition for successful association across three consecutive frames, this approach effectively overcomes the target occlusion problem in complex scenarios, ensuring the reliability of the counting process.

- (1)

Removal of heavily shaded sorghum spikes

As the shooting angle changes, the occlusion between sorghum spikes also changes. Even if severely occluded sorghum ears are detected, the detection effect is not ideal, making it difficult to track and count them in the future. Additionally, during video capture, sorghum spikes in the background area are inevitably recorded, leading to false detections. Experimental research has shown that sorghum spikes with severe occlusion or those in the background typically have a lower confidence threshold. In this study, sorghum spikes in a 200 cm × 50 cm plot were randomly selected as detection targets. Through multiple sets of control experiments, the absolute difference between the number of detected sorghum spikes and the actual number was calculated for different threshold values, as shown in

Figure 9. The results indicate that when the confidence threshold is set to 0.46, the detection error is minimized. Therefore, the confidence threshold of the detection model was adjusted to 0.46 for this experiment.

- (2)

Video Interval Counting

During the video capture process, a total of ten video segments were recorded within a 200 cm × 50 cm area, with a steady walking speed of approximately 0.3 m/s. The height of the stabilizer was kept constant throughout the recording, and the frame rate was set to 30 frames per second. If the camera movement speed is too fast, the captured video will be unclear. On the other hand, if the camera moves too slowly, there will be little change in the target positions between adjacent frames, and performing detection and counting on every frame will increase the computational load of the model. To improve the model’s detection speed, this study adopts a frame-skipping counting method for processing the video frames. The video was frame extracted using Adobe Premiere Pro software (23.6.0), and different frame rate settings were applied when exporting the video sequence. After analyzing all the output results and considering the actual conditions during filming, it was decided to count every fifth frame in the video (in the subsequent sections of the paper, the frames referenced are processed frames; for example, Frame 2 actually represents Frame 6, and so on). An example of the video frames after applying the frame-skipping counting method is shown in

Figure 10.

- (3)

DeepSort sorghum spike tracking

To achieve accurate sorghum spike counting, it is necessary to track the movement trajectories of the sorghum spikes, as the video essentially consists of a series of continuous images. Therefore, without tracking and labeling the detected sorghum spikes, the same target may be detected multiple times, leading to duplicate counting. Based on the possible situations that may arise during the tracking process, the results are categorized into four types: currently tracked sorghum spikes, newly detected sorghum spikes, disappeared sorghum spikes, and sorghum spikes that disappear and then reappear. Cross-frame tracking, as the core technology of the tracking algorithm, plays a crucial role in sorghum spike tracking. In the tracking process, the system will only consider a target as successfully tracked and add it to the tracking list for counting if it is successfully matched in three consecutive frames. If the target is matched in three consecutive frames but was not detected in previous frames, the system will treat it as a new target and continue tracking.

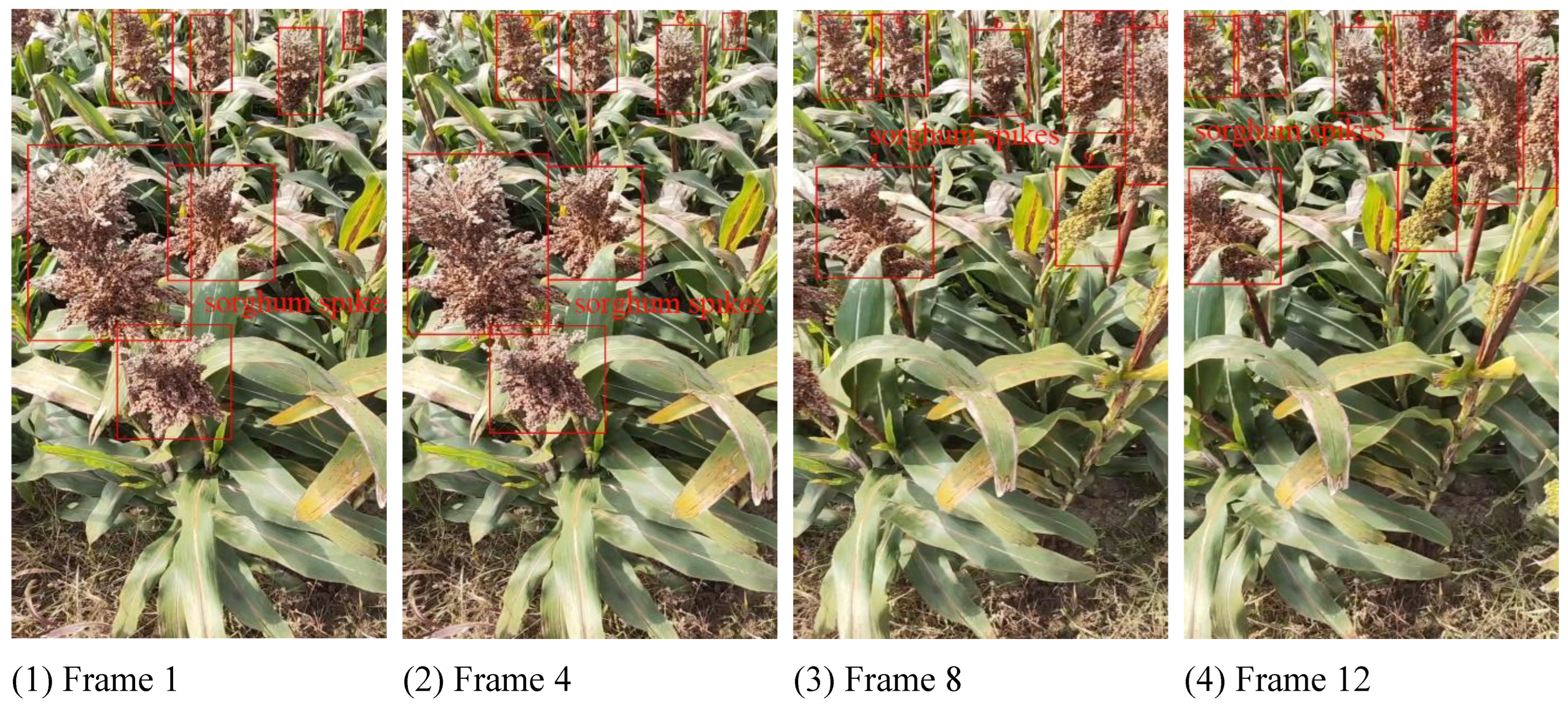

As shown in

Figure 11, the sorghum spike is detected by the YOLOv8s-GOLD-LSKA model in Frame 1, but it cannot be tracked by the DeepSort algorithm because the system requires three consecutive frame matches to confirm tracking. By Frame 4, the DeepSort algorithm completes cross-frame tracking, and the seven sorghum spikes detected by YOLOv8s-GOLD-LSKA have all been successfully tracked and recorded in the tracking list. By Frame 9, sorghum spikes 1 and 3 exit the frame. By Frame 12, two new sorghum spikes appear on the right side of the frame and have not yet been matched or tracked by the system, thus being considered as new targets.

In actual shooting, it is also possible for a previously detected target to leave the frame and then reappear in subsequent frames, or for a confirmed target to reappear due to the disappearance of occlusion in earlier frames caused by a change in shooting angle. The cascade matching method used by the DeepSort algorithm allows the system to retain the trajectory state from the last frame before the target disappeared and preserve its original ID, even after the target has left the frame.