Enhancing Dense-Scene Millet Appearance Quality Inspection Based on YOLO11s with Overlap-Partitioning Strategy for Procurement

Abstract

1. Introduction

2. Materials and Methods

2.1. Image Acquisition

2.2. Data Augmentation

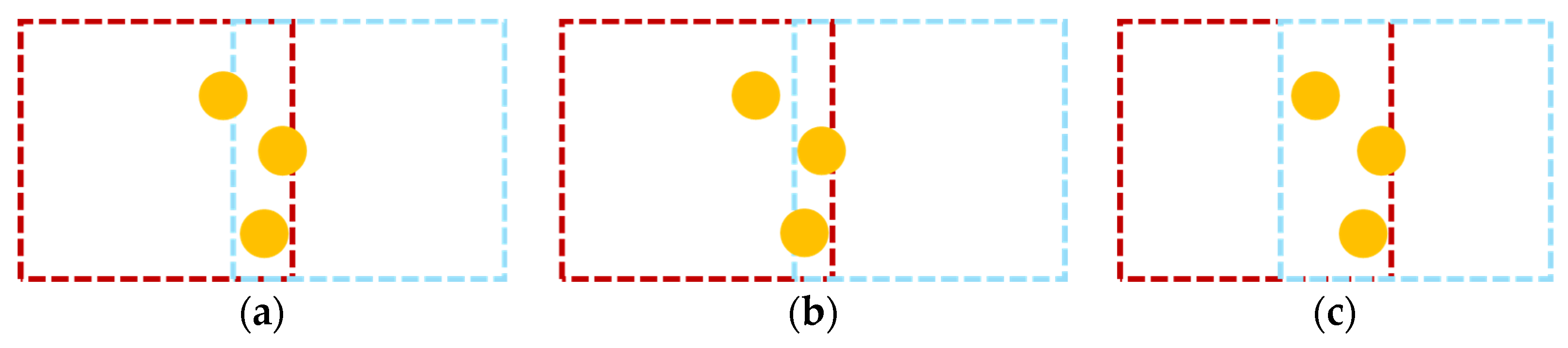

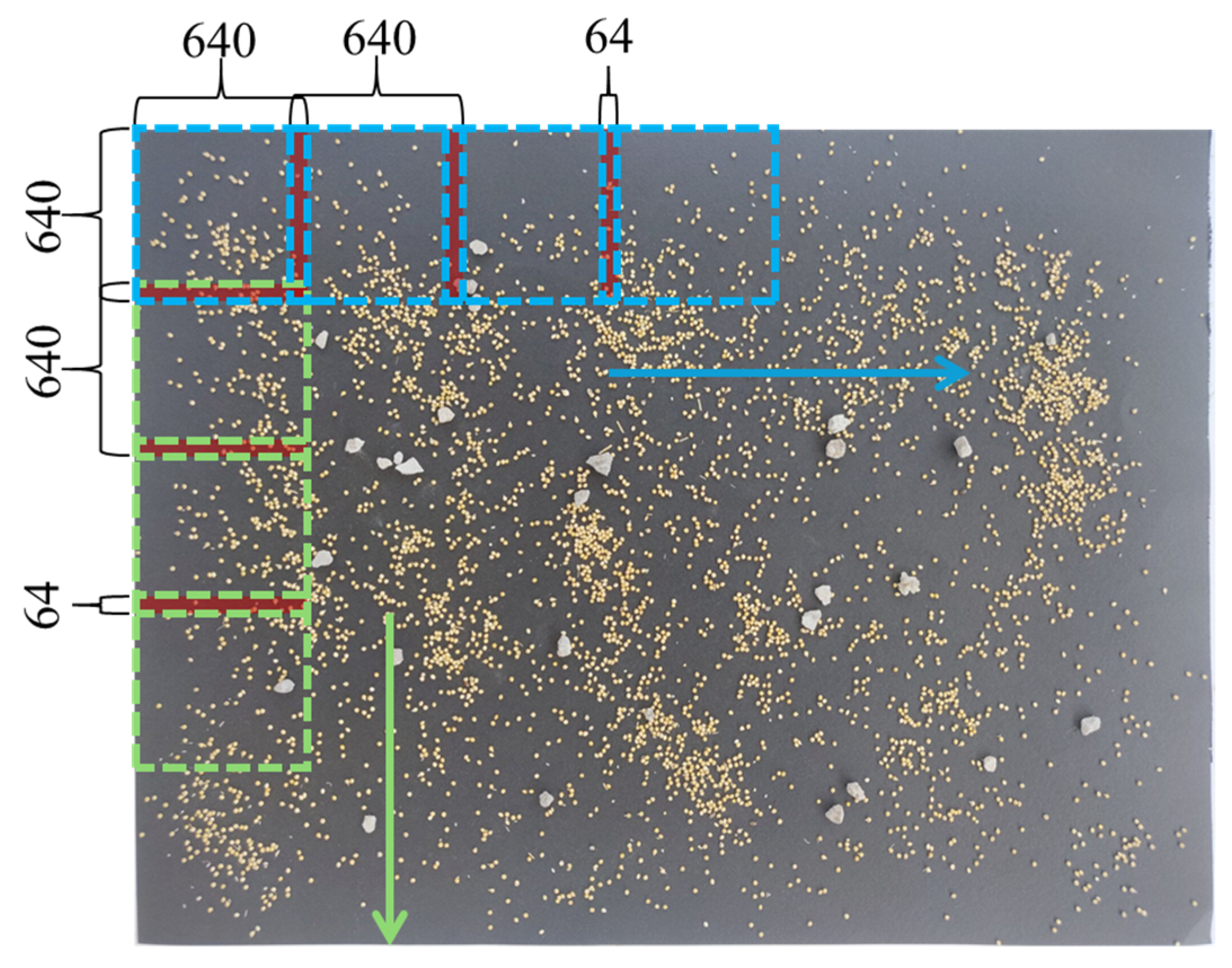

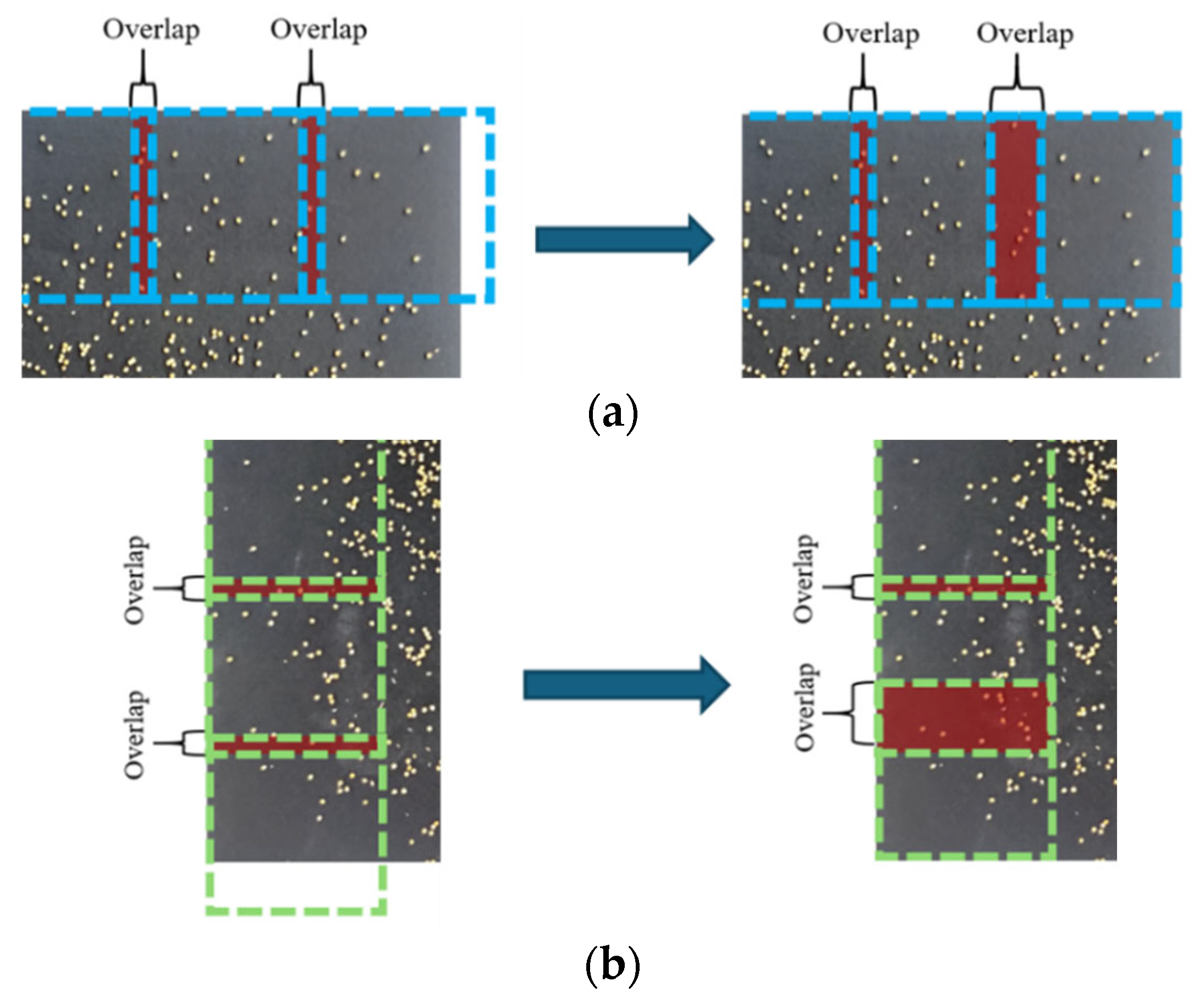

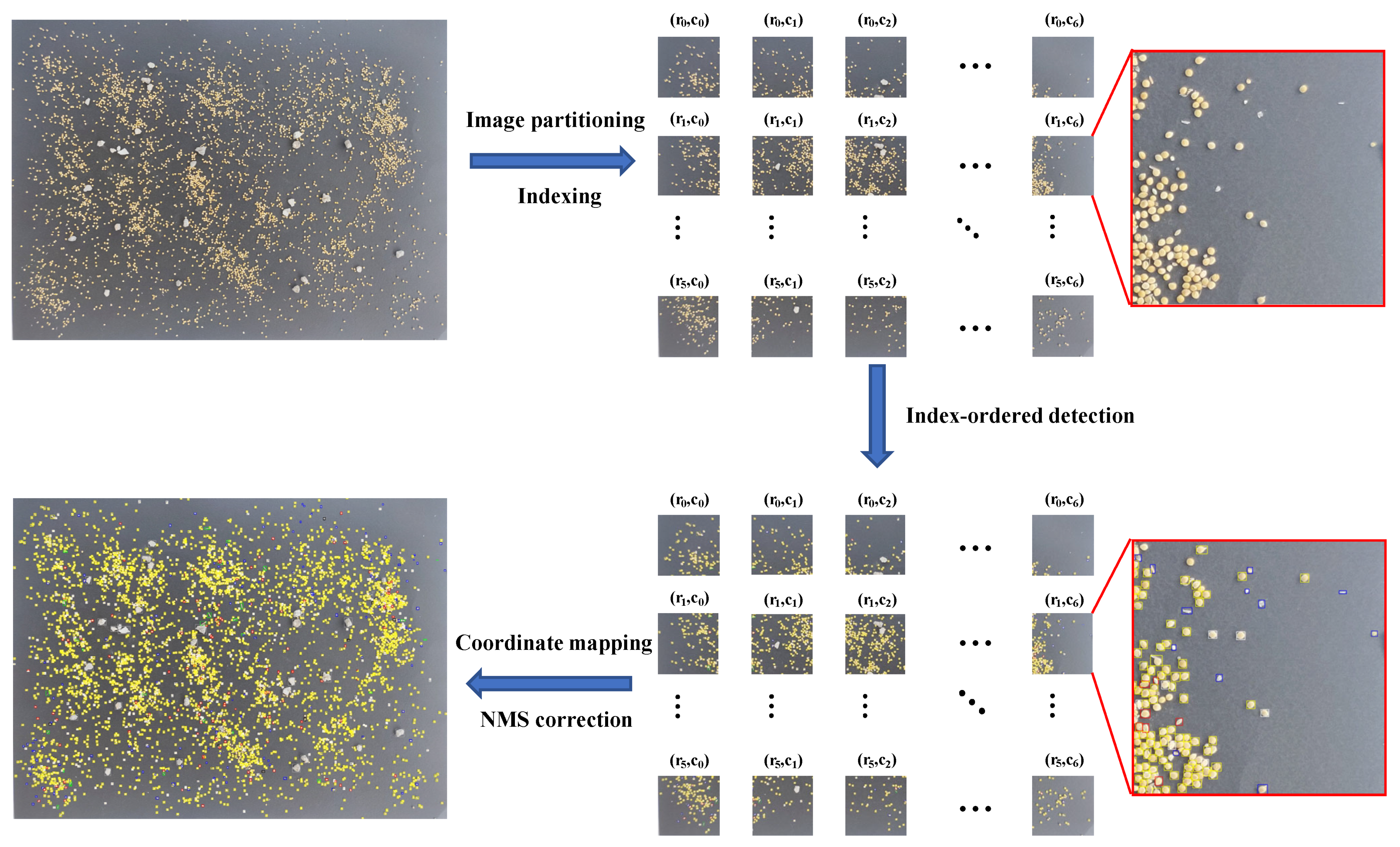

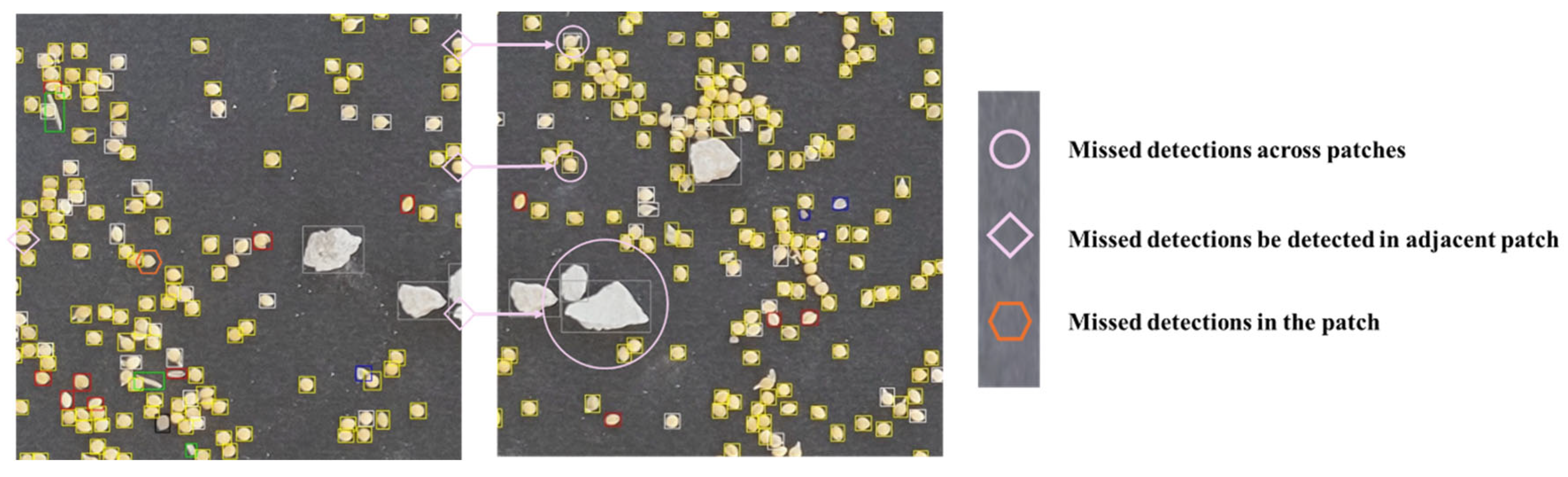

2.3. Overlap-Partitioning Strategy

2.4. Millet Detection Based on Deep Learning

2.4.1. YOLO11 Model

2.4.2. Network Training

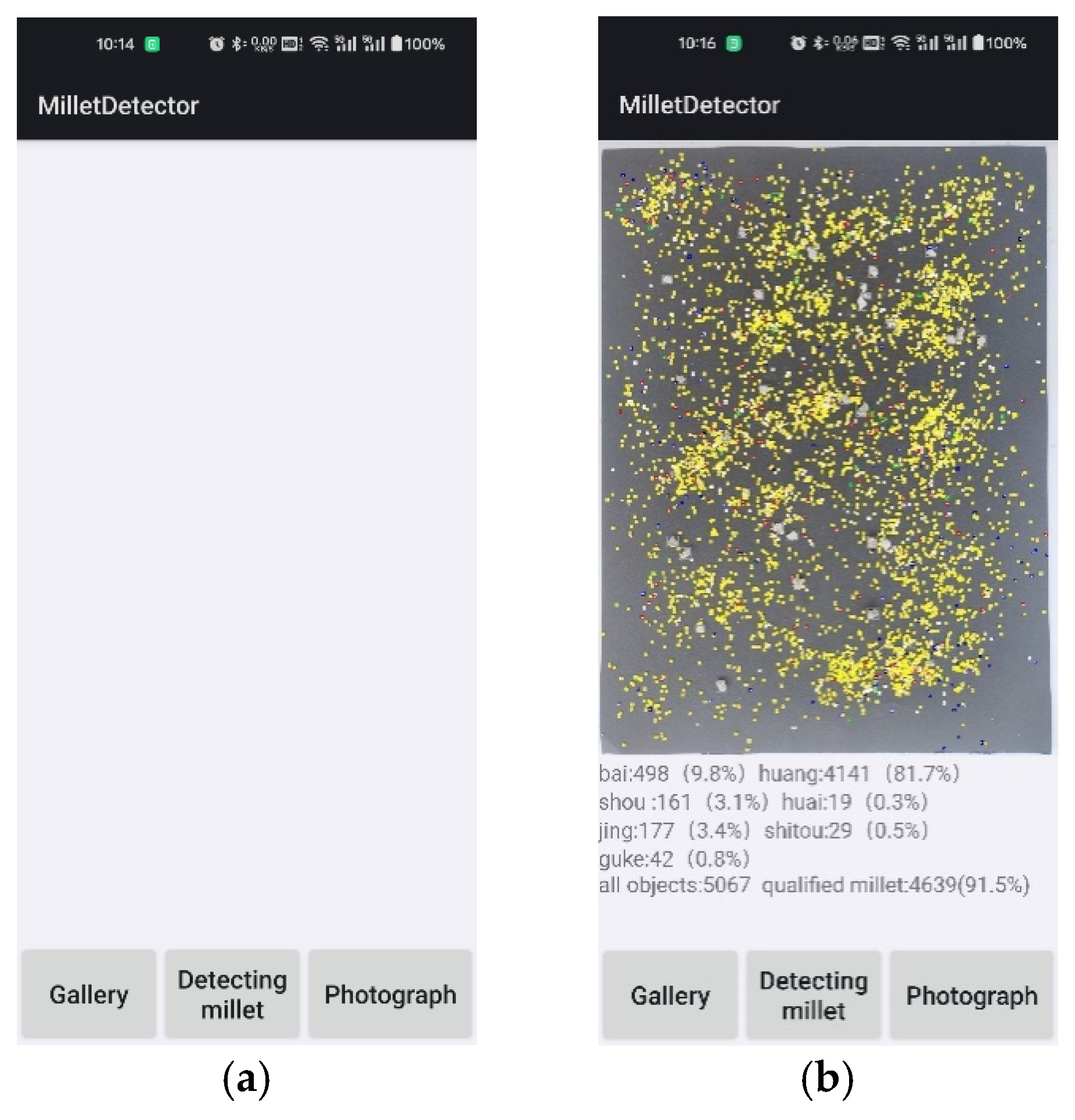

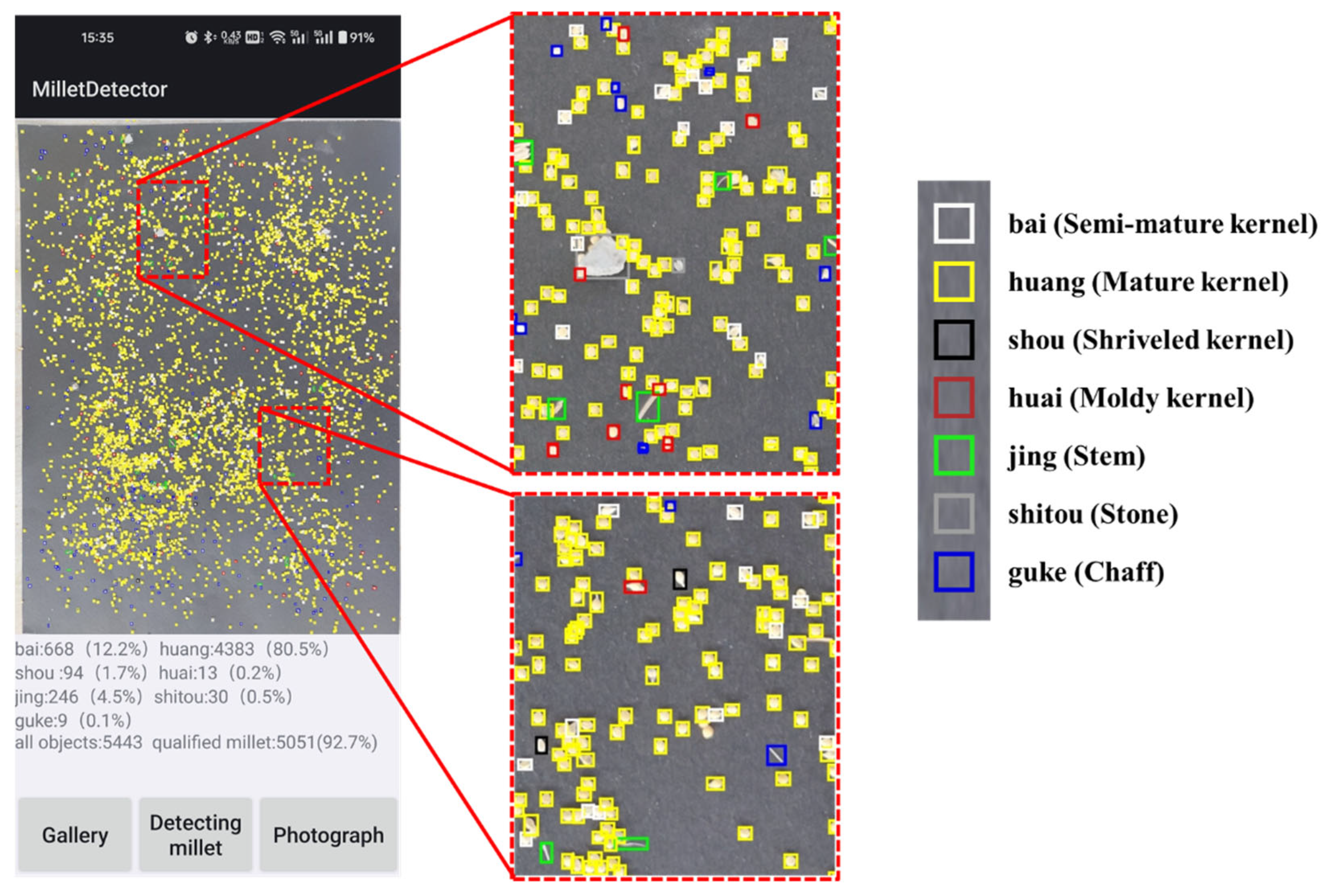

2.5. Design for Android Platform

2.5.1. NCNN Framework

2.5.2. App Development for Millet Appearance Inspection

2.6. Evaluation Indicators

3. Results and Discussion

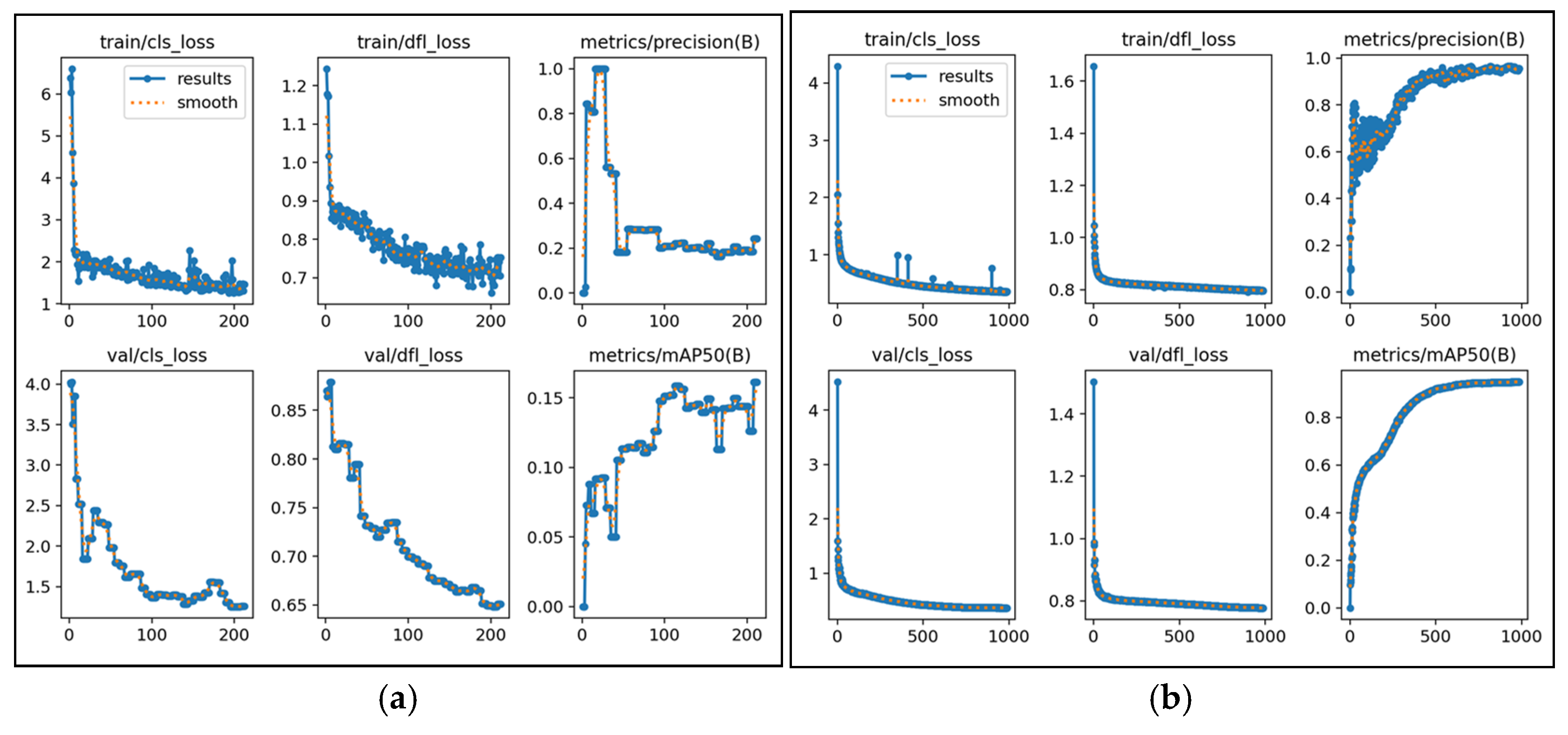

3.1. Training Evaluation of HRDM and OPSDM

3.2. Overall Performance of OPSDM

3.3. Comparison with Different Grain Inspection Systems

3.4. Further Works

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Li, Y.; Wu, S. Traditional maintenance and multiplication of foxtail millet (Setaria italica (L.) P.Beauv.) landraces in China. Euphytica 1996, 87, 33–38.

2. Liu, J.; Chang, L.; Hong, Y.; Zhang, D.; Sun, H.; Duan, X. Correlation between the porridge eating quality, kernel sensory quality and nutrients of milled foxtail millet. J. Chin. Inst. Food Sci. Technol. 2023, 23, 406–416.

3. Feng, H.; Li, L.; Wang, D.; Zhang, K.; Feng, M.; Song, H.; Li, R.; Han, P. Progress of the application of MIR and NIR spectroscopies in quality testing of minor coarse cereals. Spectrosc. Spectr. Anal. 2023, 43, 16–24.

4. Liu, Y.; Zhang, J.; Yuan, H.; Song, M.; Zhu, Y.; Cao, W.; Jiang, X.; Ni, J. Non-Destructive quality-detection techniques for cereal grains: A systematic review. Agronomy 2022, 12, 3187.

5. Chen, J.; Lin, W.; Cheng, H.; Hung, C.; Lin, C.; Chen, S. A smartphone-based application for scale pest detection using multiple-object detection methods. Electronics 2021, 10, 372.

6. Nadimi, M.; Divyanth, L.G.; Paliwal, J. Automated detection of mechanical damage in flaxseeds using radiographic imaging and machine learning. Food Bioprocess Technol. 2023, 16, 526–536.

7. Wang, Y.; Su, W. Convolutional neural networks in computer vision for grain crop phenotyping: A Review. Agronomy 2022, 12, 2659.

8. Wan, Y.; Lin, M.; Chiou, J. Rice quality classification using an automatic grain quality inspection system. Trans. ASAE 2002, 45, 379–387.

9. Kaur, H.; Singh, B. Classification and grading rice using multi-class SVM. Int. J. Sci. Res. Publ. 2013, 3, 624–628.

10. Chen, X.; Ke, S.; Wang, L.; Xu, H.; Chen, W. Classification of rice appearance quality based on LS-SVM using machine vision. In Information Computing and Applications; ICICA 2012; Communications in Computer and Information Science; Springer: Berlin/Heidelberg, Germany, 2021; Volume 307, pp. 104–109.

11. Harini, S.; Chakrasali, S.; Krishnamurthy, G.N. Analysis of Indian Rice Quality Using Multi-Class Support Vector Machine; Springer Nature: Singapore, 2023; Volume 968, ISBN 9789811973451.

12. Kundu, N.; Rani, G.; Dhaka, V.S. Seeds classification and quality testing using deep learning and YOLOv5. In Proceedings of the International Conference on Data Science, Machine Learning and Artificial Intelligence, Windhoek, Namibia, 9–12 August 2021; Association for Computing Machinery: New York, NY, USA, 2021; pp. 153–160.

13. Zhao, W.; Liu, S.; Li, X.; Han, X.; Yang, H. Fast and accurate wheat grain quality detection based on improved YOLOv5. Comput. Electron. Agric. 2022, 202, 107426.

14. Fan, X.; Wang, L.; Liu, J.; Zhou, Y.; Zhang, J.; Suo, X. Corn seed appearance quality estimation based on improved YOLOv4. Trans. Chin. Soc. Agric. Mach. 2022, 53, 226–233.

15. Wonggasem, K.; Chakranon, P.; Wongchaisuwat, P. Automated quality inspection of baby corn using image processing and deep learning. Artif. Intell. Agric. 2024, 11, 61–69.

16. Li, X.; Niu, W.; Yan, Y.; Ma, S.; Huang, J.; Wang, Y.; Chang, R.; Song, H. Detection of broken hongshan buckwheat seeds based on improved YOLOv5s Model. Agronomy 2024, 14, 37.

17. Andrew, J.; Eunice, J.; Popescu, D.E.; Chowdary, M.K.; Hemanth, J. Deep learning-based leaf disease detection in crops using images for agricultural applications. Agronomy 2022, 12, 2395.

18. Wang, P.; Tan, J.; Yang, Y.; Zhang, T.; Wu, P.; Tang, X.; Li, H.; He, X.; Chen, X. Efficient and accurate identification of maize rust disease using deep learning model. Front. Plant Sci. 2024, 15, 1490026.

19. Deng, J.; Yang, C.; Huang, K.; Lei, L.; Ye, J.; Zeng, W.; Zhang, J.; Lan, Y.; Zhang, Y. Deep-learning-based rice disease and insect pest detection on a mobile phone. Agronomy 2023, 13, 2139.

20. Liang, J.; Chen, J.; Zhou, M.; Li, H.; Xu, Y.; Xu, F.; Yin, L.; Chai, X. An intelligent detection system for wheat appearance quality. Agronomy 2024, 14, 1057.

21. Andrianto, H.; Suhardi; Faizal, A.; Armandika, F. Smartphone Application for Deep learning-based rice plant disease detection. In Proceedings of the 2020 International Conference on Information Technology Systems and Innovation (ICITSI), Bandung, Indonesia, 19–23 October 2020; IEEE: New York, NY, USA, 2020; pp. 387–392.

22. Zhou, Z.; Song, Z.; Fu, L.; Gao, F.; Li, R.; Cui, Y. Real-time kiwifruit detection in orchard using deep learning on Android™ smartphones for yield estimation. Comput. Electron. Agric. 2020, 179, 105856.

23. Suharjito; Asrol, M.; Utama, D.N.; Junior, F.A.; Marimin. Real-time oil palm fruit grading system using smartphone and modified YOLOv4. IEEE Access 2023, 11, 59758–59773.

24. Wu, W.; Zhou, L.; Chen, J.; Qiu, Z.; He, Y. GaintKW: A measurement system of thousand kernel weight based on the Android platform. Agronomy 2018, 8, 178.

25. Li, J.; Shi, L.; Mo, X.; Hu, X.; Su, C.; Han, J.; Deng, X.; Du, S.; Li, S. Self-correcting deep learning for estimating rice leaf nitrogen concentration with mobile phone images. Comput. Electron. Agric. 2024, 227, 109497.

26. Dang, H.; He, L.; Shi, Y.; Janneh, L.L.; Liu, X.; Chen, C.; Li, R.; Ye, H.; Chen, J.; Majeed, Y.; et al. Growth characteristics based multi-class kiwifruit bud detection with overlap-partitioning algorithm for robotic thinning. Comput. Electron. Agric. 2025, 229, 109715.

27. Liu, X.; Jing, X.; Jiang, H.; Younas, S.; Wei, R.; Dang, H.; Wu, Z.; Fu, L. Performance evaluation of newly released cameras for fruit detection and localization in complex kiwifruit orchard environments. J. Field Robot. 2024, 41, 881–894.

28. Jocher, G.; Chaurasia, A.; Qiu, J. Ultralytics YOLO11; GitHub: San Francisco, CA, USA, 2024; Available online: https://Github.Com/Ultralytics/Ultralytics (accessed on 5 November 2024).

29. Geng, D.; Wang, Q.; Li, H.; He, Q.; Yue, D.; Ma, J.; Wang, Y.; Xu, H. Online detection technology for broken corn kernels based on deep learning. Trans. Chin. Soc. Agric. Eng. 2023, 39, 270–278.

30. Shen, R.; Zhen, T.; Li, Z. Segmentation of unsound wheat kernels based on improved Mask RCNN. Sensors 2023, 23, 3379.

31. Fan, L.; Fan, D.; Ding, Y.; Wu, Y.; Chu, H.; Pagnucco, M.; Song, Y. AV4GAInsp: An efficient dual-camera system for identifying defective kernels of cereal grains. IEEE Robot. Autom. Lett. 2024, 9, 851–858.

32. Fu, L.; Liu, Z.; Majeed, Y.; Cui, Y. Kiwifruit yield estimation using image processing by an Android mobile phone. IFAC-PapersOnLine 2018, 51, 185–190.

| Property | Categories | Labels | Features |
|---|---|---|---|
| Millet kernels | Semi-mature kernel | bai | White, ellipsoid |
| Millet kernels | Mature kernel | huang | Yellow, ellipsoid |
| Millet kernels | Shriveled kernel | shou | White or yellow, crackled |
| Millet kernels | Moldy kernel | huai | Brown, ellipsoid |
| Impurities | Stem | jing | Yellow, rectangular |
| Impurities | Stone | shitou | Gray, irregular |
| Impurities | Chaff | guke | White, semi-transparent, irregular |

| Model | Image Pixel Size (Pixels) | Model Input Resize (Pixels) | P (%) | R (%) | mAP (%) | Average Speed (ms) |
|---|---|---|---|---|---|---|
| HRDM | 4608 × 3456 | 640 × 640 | 22.2 | 9.7 | 15.9 | 15.5 |
| OPSDM | 640 × 640 | 640 × 640 | 95.5 | 89.5 | 94.8 | 13.5 |
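
The table contrasts a 4608 × 3456 image resized to 640 × 640 (a reduction of 7.2× horizontally and 5.4× vertically) with 640 × 640 sub-images fed to the network at native resolution. As a rough illustration only, the following Python sketch shows one generic way to split a large image into overlapping 640 × 640 windows. The 128-pixel overlap and the function names `tile_starts` and `partition` are hypothetical choices made for this example, not values or identifiers taken from the article, and the actual overlap-partitioning strategy may differ.

```python
def tile_starts(length, tile, overlap):
    """Start offsets of windows of size `tile` that cover [0, length).

    Neighbouring windows share at least `overlap` pixels (assumed value).
    """
    if not 0 <= overlap < tile:
        raise ValueError("overlap must satisfy 0 <= overlap < tile")
    if tile >= length:
        return [0]
    stride = tile - overlap
    starts = list(range(0, length - tile + 1, stride))
    # Clamp one extra window to the far edge if the regular grid falls short.
    if starts[-1] + tile < length:
        starts.append(length - tile)
    return starts


def partition(width, height, tile=640, overlap=128):
    """Return (x0, y0, x1, y1) boxes of overlapping square windows."""
    return [
        (x, y, x + tile, y + tile)
        for y in tile_starts(height, tile, overlap)
        for x in tile_starts(width, tile, overlap)
    ]


# Image size from the table; overlap of 128 px is an assumption.
tiles = partition(4608, 3456)
print(len(tiles), "windows; first:", tiles[0], "last:", tiles[-1])
```

Detections from neighbouring windows would still need to be mapped back to full-image coordinates and de-duplicated in the overlap zones; that step is omitted here.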

| Categories | P (%) | R (%) | AP (%) | mAP (%) |
|---|---|---|---|---|
| Semi-mature kernel | 96.6 | 96.1 | 98.4 | |
| Mature kernel | 97.6 | 96.3 | 98.2 | |
| Shriveled kernel | 95.3 | 82.6 | 91.6 | |
| Moldy kernel | 95.9 | 84.6 | 91.7 | |
| Stem | 93.9 | 92.4 | 97.1 | |
| Stone | 91.1 | 76.4 | 87.2 | |
| Chaff | 98.6 | 98.3 | 99.5 | |
| All | 95.5 | 89.5 | - | 94.8 |
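
The overall mAP in the table is consistent with the unweighted mean of the seven per-class AP values. A minimal Python check, assuming mAP is computed as a plain average over classes (the function name `mean_average_precision` is introduced here for illustration):

```python
# Per-class AP values (%) copied from the table above.
ap_percent = {
    "Semi-mature kernel": 98.4,
    "Mature kernel": 98.2,
    "Shriveled kernel": 91.6,
    "Moldy kernel": 91.7,
    "Stem": 97.1,
    "Stone": 87.2,
    "Chaff": 99.5,
}


def mean_average_precision(ap_by_class):
    """Unweighted mean of per-class AP values (assumed definition of mAP)."""
    return sum(ap_by_class.values()) / len(ap_by_class)


map_percent = mean_average_precision(ap_percent)
print(f"mAP = {map_percent:.1f}%")
```

The three lowest AP values (stone, shriveled kernel, moldy kernel) also have the lowest recall in the table.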

| Model or Equipment | Grains | Devices of the Computing Platform | Average Number per Inspection | Average Inspection Time (s) |
|---|---|---|---|---|
| MDApp | Millet | Smartphone (CPU: Qualcomm Snapdragon 845; GPU: Adreno 630) | 5326 | 6.8 |
| AV4GAInsp [31] | Wheat; Sorghum; Rice | Nvidia Jetson Xavier NX (CPU: ARM Cortex-A57; GPU: Nvidia Volta) | 1500 | 151.7 |
| Improved Mask RCNN [30] | Wheat | Desktop computer (CPU: -; GPU: 2 × Tesla T4) | 200 | 7.8 |
| BCK-CNN [29] | Corn | Desktop computer (CPU: i7-1165G7; GPU: RTX3060) | 700 | 12.0 |
| WGNet [13] | Wheat | Desktop computer (CPU: -; GPU: GTX1070) | 2500 | 10.0 |
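
One way to read the comparison is as throughput, that is, the average number of items per inspection divided by the average inspection time. The short Python sketch below derives that figure from the table values only; the variable names are illustrative, and because the systems run on different hardware and inspect different grains, the result is an indicative ratio rather than a controlled benchmark.

```python
# (average number per inspection, average inspection time in seconds),
# copied from the table above.
systems = {
    "MDApp": (5326, 6.8),
    "AV4GAInsp": (1500, 151.7),
    "Improved Mask RCNN": (200, 7.8),
    "BCK-CNN": (700, 12.0),
    "WGNet": (2500, 10.0),
}

# Items inspected per second for each system.
throughput = {name: count / seconds for name, (count, seconds) in systems.items()}

for name, rate in sorted(throughput.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name:<20} {rate:8.1f} items/s")
```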

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

He, L.; Wei, R.; Ding, Y.; Huang, J.; Wei, X.; Li, R.; Wang, S.; Fu, L. Enhancing Dense-Scene Millet Appearance Quality Inspection Based on YOLO11s with Overlap-Partitioning Strategy for Procurement. Agronomy 2025, 15, 1284. https://doi.org/10.3390/agronomy15061284
