2.5.2. Algorithm Improvement

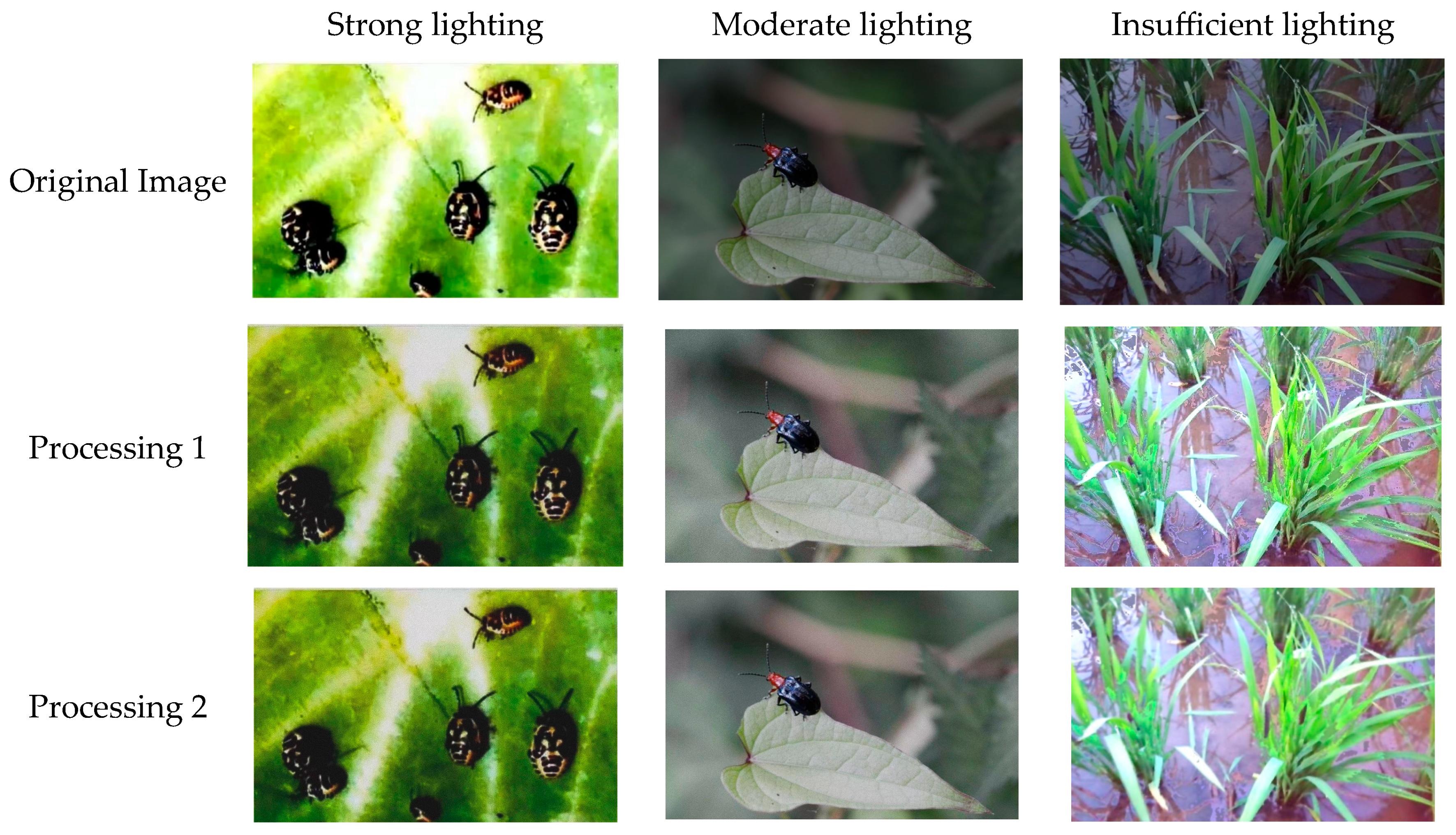

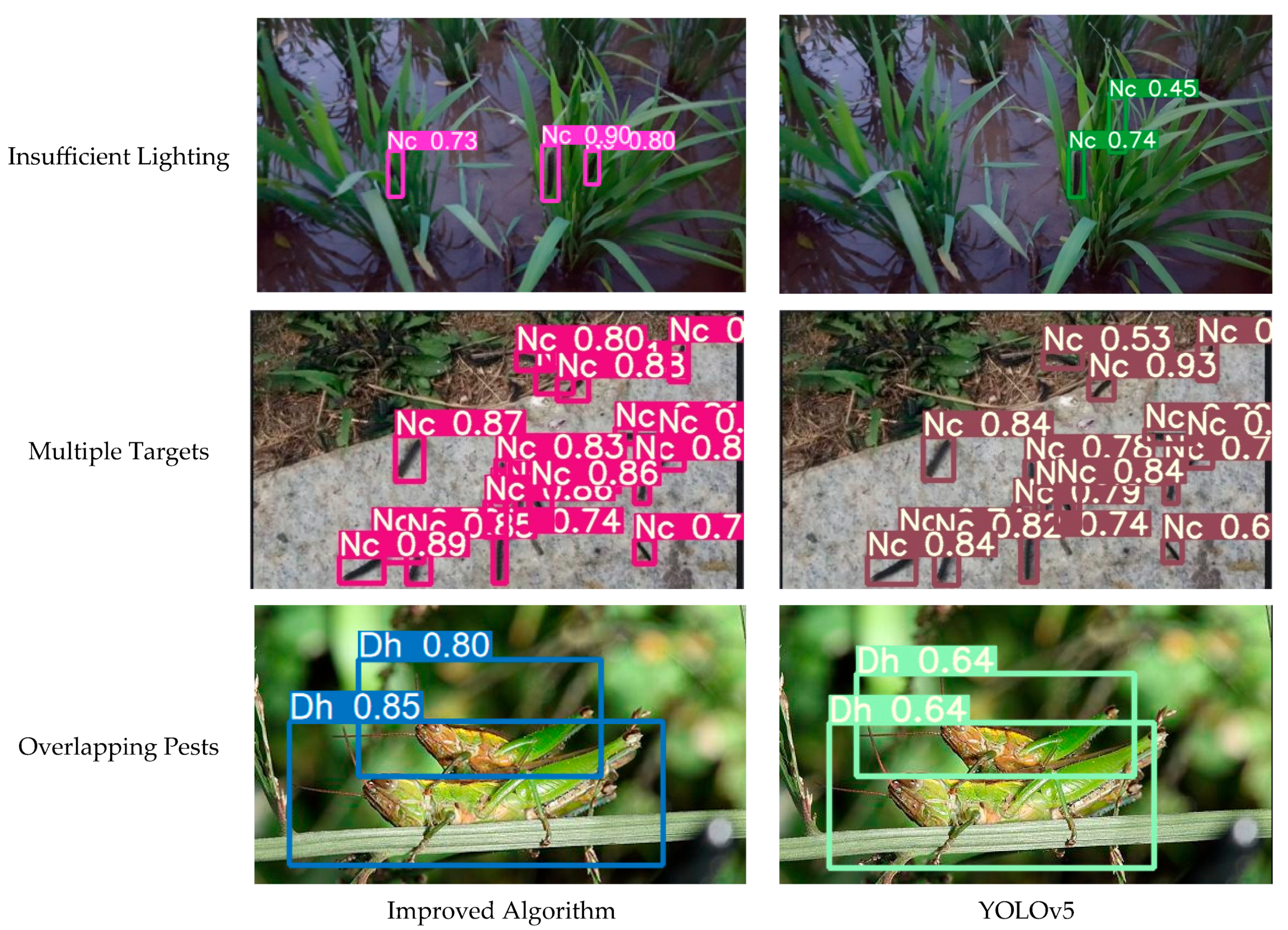

Although the MSRCP algorithm corrected the effect of uneven lighting on the collected overlapping pest images, near-field interference sources such as soil particles and crop residues remain. In addition, because the texture and appendage features of the pests are locally similar, features can be confused, which leads to false detections. Furthermore, the limited shooting angle and depth-of-field variation of the smartphone, together with the 3D stacking of aggregated pests, break the morphological continuity of partially occluded pests, so traditional methods risk missing them.

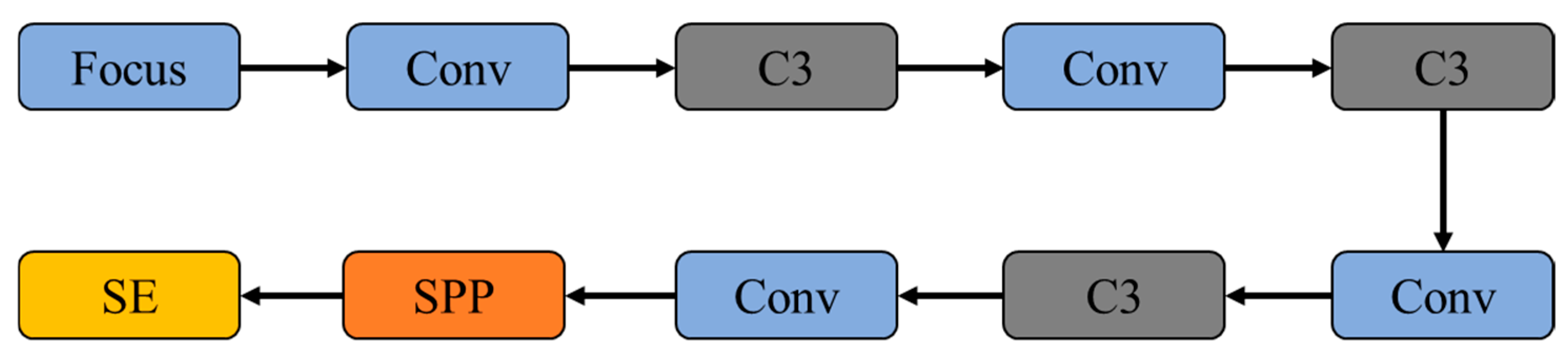

To address these issues, this study replaced the YOLOv5 backbone network with EfficientNetv2-S while retaining the Spatial Pyramid Pooling-Fast (SPPF) structure. The Convolutional Block Attention Module (CBAM) was introduced as a hybrid attention mechanism to enhance the response weights of pest-related features, such as antenna textures and intersegmental connections. Additionally, the Adaptive Feature Pyramid Network (AFPN) was employed to construct multi-scale adaptive fusion pathways, improving the representation of overlapping targets through adaptive cross-scale feature interactions.

(1) Replacing the Backbone Network.

Because the NVIDIA Jetson Nano development board has limited computational power, the model was made lighter by replacing the YOLOv5 backbone with EfficientNetv2-S, a lightweight network.

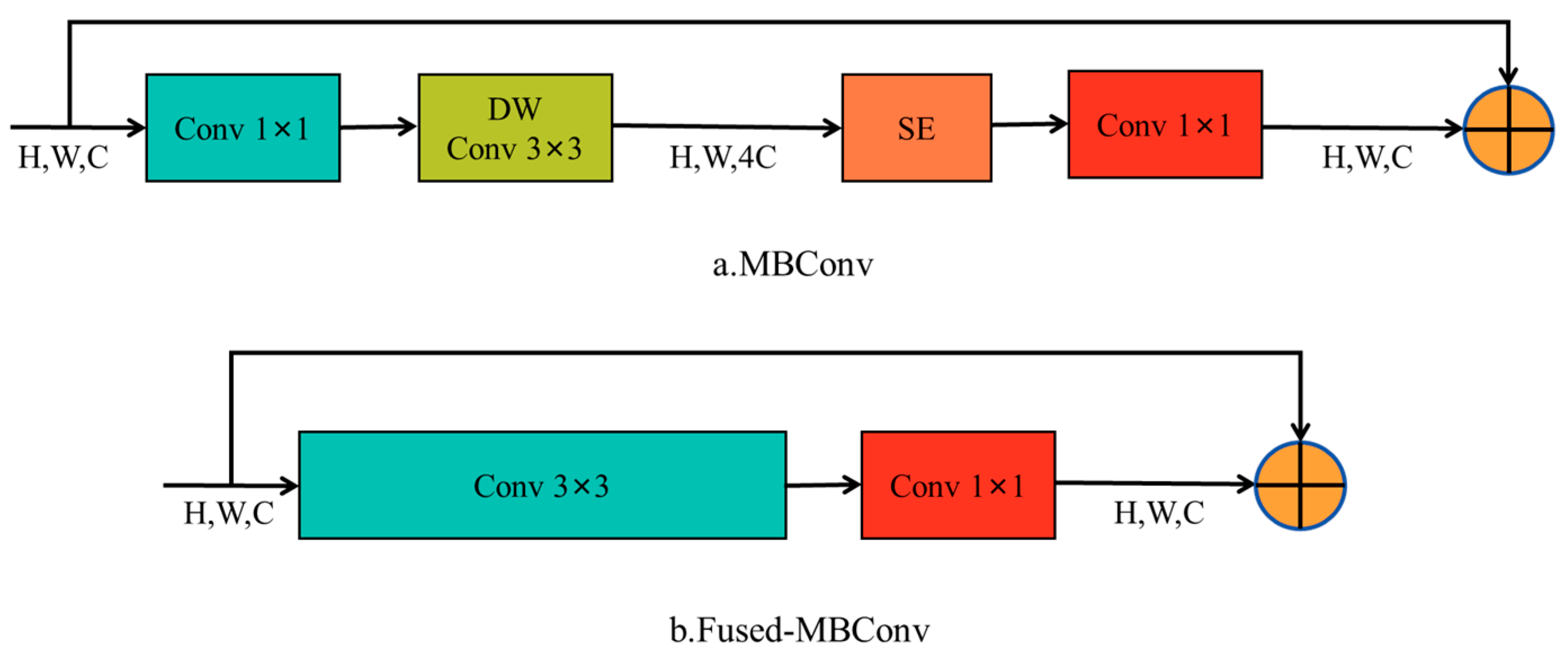

Compared with EfficientNetv1, EfficientNetv2 replaces the MBConv blocks in the shallow stages with Fused-MBConv, which merges the 1 × 1 expansion convolution and the 3 × 3 depthwise convolution of MBConv into a single standard 3 × 3 convolution. Because depthwise convolutions make poor use of GPU hardware, this change lets the shallow layers exploit GPU parallelism more fully. EfficientNetv2 also introduces a progressive learning strategy and optimized training methods, which significantly improve training speed and inference efficiency.
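The trade-off behind Fused-MBConv can be checked with simple weight counting. The sketch below is illustrative only: the helper names and the 24-channel, expansion-ratio-4 block are hypothetical, and bias, batch normalization, and SE weights are ignored.

```python
def conv_params(c_in: int, c_out: int, k: int, groups: int = 1) -> int:
    """Weight count of a k x k convolution (bias, batch norm, and SE are ignored)."""
    return (c_in // groups) * c_out * k * k


def mbconv_params(c_in: int, c_out: int, expand: int, k: int = 3) -> int:
    """MBConv: 1x1 expansion -> k x k depthwise -> 1x1 projection."""
    c_mid = c_in * expand
    return (conv_params(c_in, c_mid, 1)
            + conv_params(c_mid, c_mid, k, groups=c_mid)  # depthwise: one k x k filter per channel
            + conv_params(c_mid, c_out, 1))


def fused_mbconv_params(c_in: int, c_out: int, expand: int, k: int = 3) -> int:
    """Fused-MBConv: expansion and depthwise conv merged into one standard k x k conv."""
    if expand == 1:
        # With no expansion, the block is a single k x k conv straight to c_out.
        return conv_params(c_in, c_out, k)
    c_mid = c_in * expand
    return conv_params(c_in, c_mid, k) + conv_params(c_mid, c_out, 1)


if __name__ == "__main__":
    # Hypothetical block: 24 -> 24 channels, expansion ratio 4.
    print(mbconv_params(24, 24, 4))        # 5472
    print(fused_mbconv_params(24, 24, 4))  # 23040
```

In this example the fused block has roughly four times as many weights, so its speed advantage comes from replacing a memory-bound depthwise convolution with a dense convolution that GPUs execute efficiently, not from having fewer parameters. This is also why EfficientNetV2 uses Fused-MBConv only in its early stages, where channel counts are small.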

The EfficientNetv2 series includes three model variants: S, M, and L. The S version serves as the base architecture, while the M and L variants gain accuracy by increasing structural complexity at the cost of more computational resources. Balancing model efficiency against the computational capacity of the device, this study selected EfficientNetv2-S, the variant with the lowest computational cost, as the backbone network. The detailed structure of EfficientNetv2 is shown in Figure 6.

The squeeze-and-excitation (SE) module was used to strengthen the network's channel feature extraction. In the actual network, however, the Fused-MBConv blocks in the shallow layers do not use the SE module, whereas the MBConv blocks in the deeper layers do. This is because, in shallow layers with fewer channels, the computational overhead of the SE module is relatively high while its benefit is limited.
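A minimal NumPy sketch of an SE block, assuming the original squeeze-and-excitation formulation (global average pooling, a bottleneck fully connected layer with ReLU, then a sigmoid gate). The function name, the reduction ratio r, and the random weights are hypothetical; EfficientNet implementations typically use SiLU instead of ReLU in the bottleneck. The block adds about 2C²/r weights and a global pooling pass over the full H × W map, which is the overhead the text refers to.

```python
import numpy as np


def squeeze_excitation(x, w1, b1, w2, b2):
    """SE block on one feature map x of shape (C, H, W).

    w1: (C // r, C) and w2: (C, C // r) are the two fully connected layers,
    where r is the channel reduction ratio.
    """
    z = x.mean(axis=(1, 2))                        # squeeze: global average pooling -> (C,)
    s = np.maximum(w1 @ z + b1, 0.0)               # excitation FC 1 + ReLU -> (C // r,)
    a = 1.0 / (1.0 + np.exp(-(w2 @ s + b2)))       # FC 2 + sigmoid -> channel weights in (0, 1)
    return x * a[:, None, None], a                 # rescale every channel by its weight


rng = np.random.default_rng(0)
C, r = 16, 4                                       # hypothetical channel count and reduction ratio
x = rng.standard_normal((C, 8, 8))
w1, b1 = rng.standard_normal((C // r, C)), np.zeros(C // r)
w2, b2 = rng.standard_normal((C, C // r)), np.zeros(C)
y, a = squeeze_excitation(x, w1, b1, w2, b2)
```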

(2) Adding the CBAM Attention Mechanism.

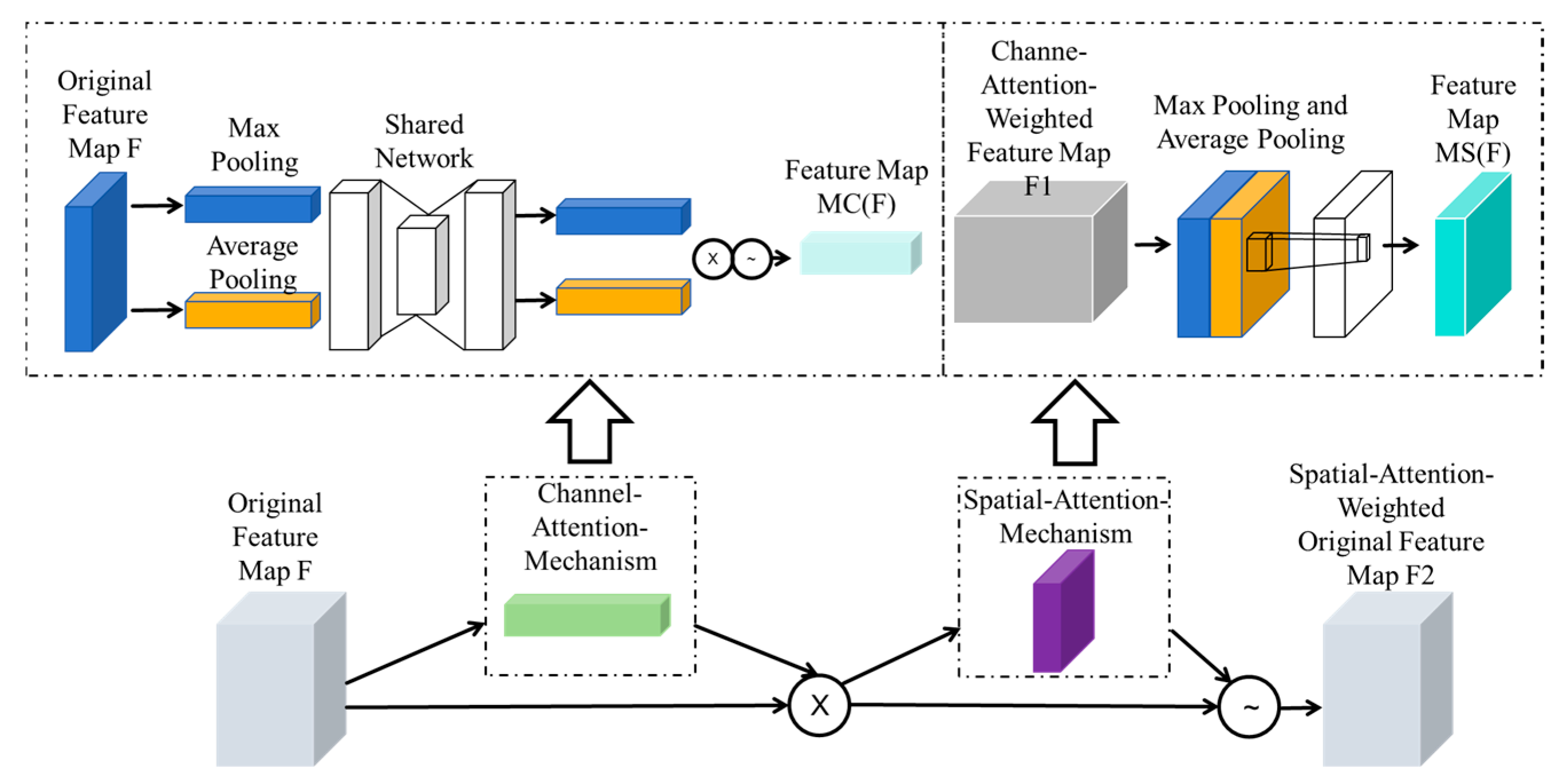

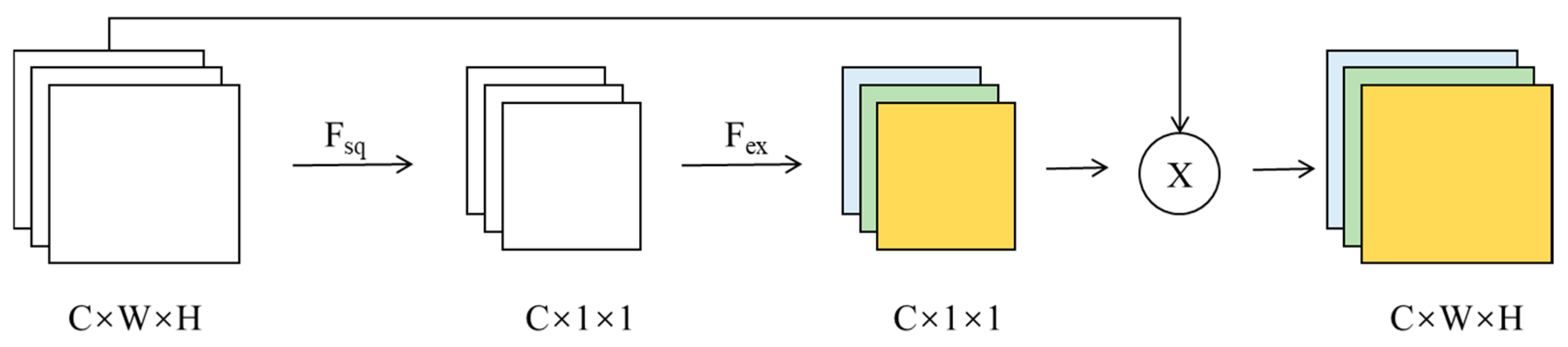

Overlapping pest detection is hindered by overlapping body shapes, low-contrast features, and multi-scale interference. To improve detection performance, the CBAM (Convolutional Block Attention Module) attention mechanism, which recalibrates feature weights through a channel branch and a spatial branch, was added before the SPPF module.

The channel attention module applies global average pooling and global max pooling to the feature map and passes both descriptors through a shared multi-layer perceptron to generate a channel weight vector. This selectively enhances the channels corresponding to the body morphology and high-frequency textures of overlapping pests while suppressing background channels with similar colors. The spatial attention module pools the channel-refined features along the channel axis (average and max pooling) and applies a convolution to build a spatial weight matrix, strengthening the spatial activation of key pest features (e.g., the base of the antenna and intersegmental connections) in overlapping areas. This spatial recalibration enhances the gradient response of these critical features.
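The two CBAM branches can be sketched in NumPy as follows. This follows the original CBAM formulation, in which both branches use average and max pooling, the channel branch shares one MLP between the two descriptors, and the spatial branch applies a 7 × 7 convolution to the stacked pooled maps. Biases are omitted, and all sizes and weights are hypothetical; this is not the exact implementation used in this study.

```python
import numpy as np


def sigmoid(v):
    return 1.0 / (1.0 + np.exp(-v))


def shared_mlp(v, w1, w2):
    """Two-layer MLP with ReLU, shared by the average- and max-pooled descriptors."""
    return w2 @ np.maximum(w1 @ v, 0.0)


def channel_attention(x, w1, w2):
    """x: (C, H, W) -> channel weights (C,)."""
    avg = x.mean(axis=(1, 2))
    mx = x.max(axis=(1, 2))
    return sigmoid(shared_mlp(avg, w1, w2) + shared_mlp(mx, w1, w2))


def spatial_attention(x, kernel):
    """x: (C, H, W); kernel: (2, k, k) with odd k -> spatial weights (H, W)."""
    pooled = np.stack([x.mean(axis=0), x.max(axis=0)])   # channel-wise avg and max maps
    k = kernel.shape[-1]
    p = k // 2
    padded = np.pad(pooled, ((0, 0), (p, p), (p, p)))      # zero padding keeps H x W
    h, w = x.shape[1:]
    out = np.empty((h, w))
    for i in range(h):
        for j in range(w):
            out[i, j] = np.sum(padded[:, i:i + k, j:j + k] * kernel)
    return sigmoid(out)


def cbam(x, w1, w2, kernel):
    """Channel attention first, then spatial attention on the refined map."""
    x = x * channel_attention(x, w1, w2)[:, None, None]
    return x * spatial_attention(x, kernel)[None, :, :]


rng = np.random.default_rng(0)
C, r, k = 8, 2, 7                                   # hypothetical sizes; the CBAM paper uses k = 7
x = rng.standard_normal((C, 10, 10))
w1 = 0.1 * rng.standard_normal((C // r, C))
w2 = 0.1 * rng.standard_normal((C, C // r))
kernel = 0.1 * rng.standard_normal((2, k, k))
y = cbam(x, w1, w2, kernel)
```

Applying the channel branch before the spatial branch matches the sequential order recommended in the CBAM paper; with all-zero weights both gates reduce to a constant 0.5, so the module initially scales features uniformly rather than distorting them.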

The structure of the CBAM attention mechanism is illustrated in Figure 7.

- (3)

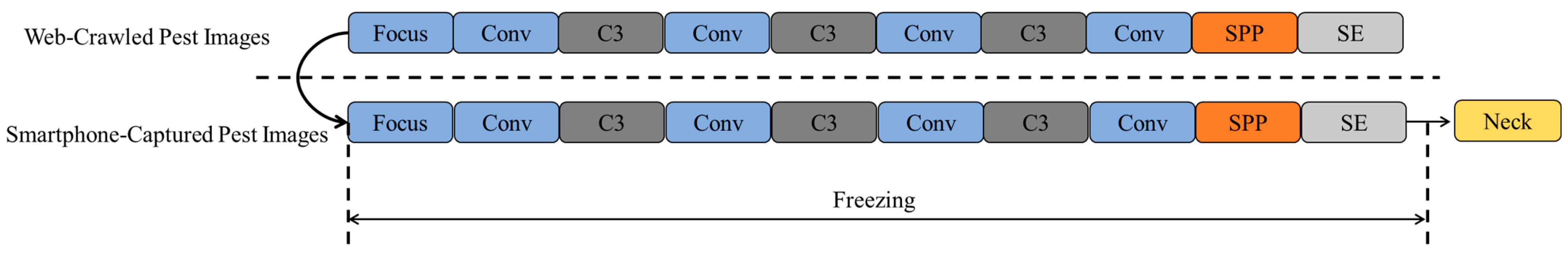

Replacing PANet with the AFPN Structure.

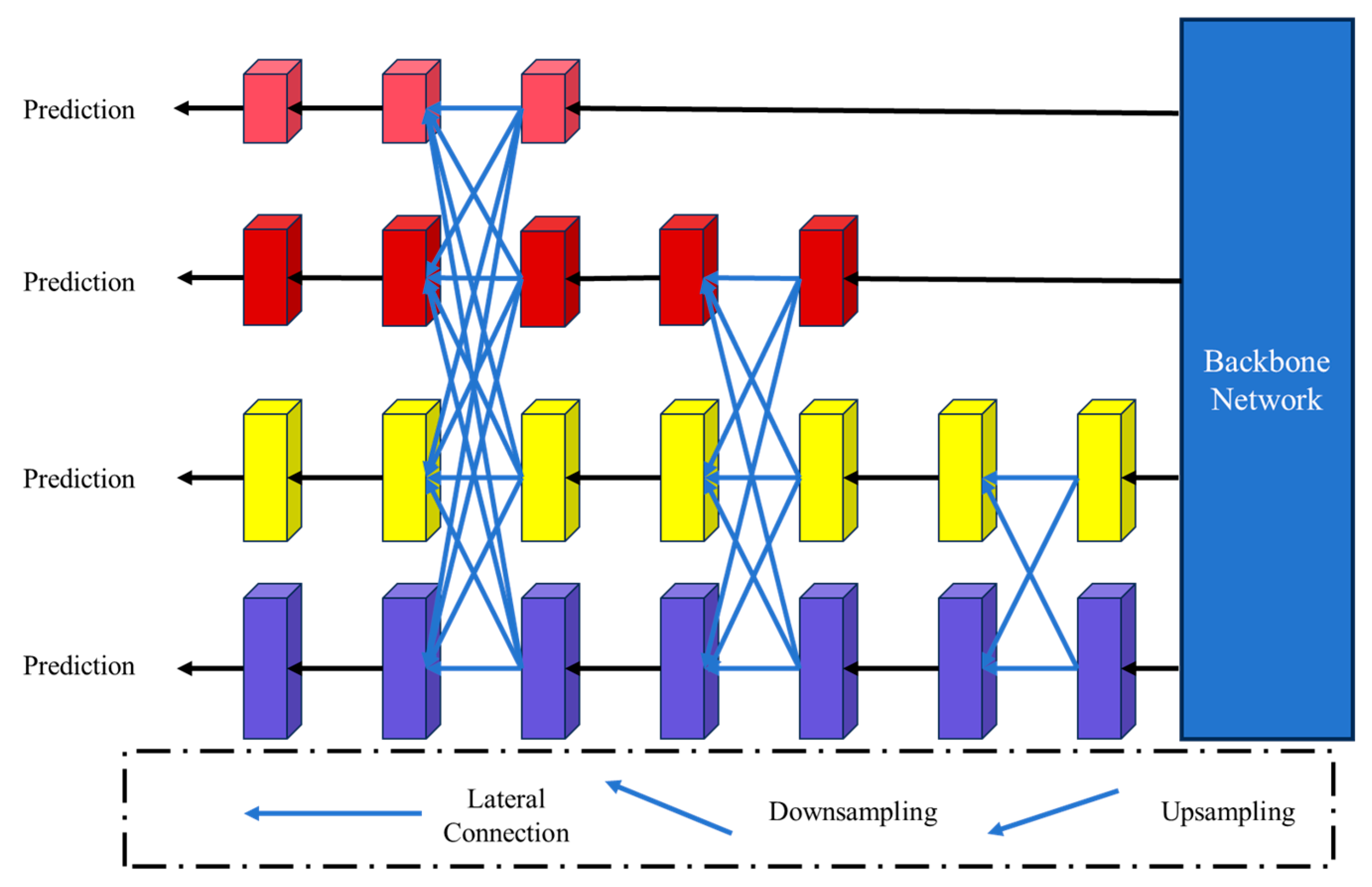

In overlapping pest detection, densely accumulated pest bodies, large scale differences, and blurred anatomical details cause feature confusion. To address this issue, the Neck of YOLOv5 was topologically restructured by replacing the original PANet with the Adaptive Feature Pyramid Network (AFPN). The core improvement of AFPN is an asymmetric interaction mechanism among multi-level features, as shown in Figure 8.

Traditional FPN/PANet uses a linear bidirectional fusion strategy that ignores the differing contributions of features at different resolutions to overlapping pest detection. This was especially problematic for the low-resolution images in the dataset, where the high-frequency texture features of small pests are easily overwhelmed by large-scale background noise. As a result, PANet suffers from inadequate feature fusion, loss of semantic information, and degradation of fine details, which ultimately reduce detection accuracy and robustness. To overcome this limitation, this study replaced PANet in YOLOv5 with AFPN, as illustrated in Figure 9.

ASFF2 and ASFF3 denote the adaptive spatial feature fusion (ASFF) modules that fuse two and three feature levels, respectively. During multilevel feature fusion, ASFF learns spatial weights for the features at each level and adjusts each level's contribution, so that key levels are emphasized and information conflicts between levels are reduced.
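A minimal NumPy sketch of the ASFF weighting step, assuming the inputs have already been resized to a common resolution. As in the ASFF paper, each pixel gets one weight per level from a softmax over per-level scores, y_ij = Σ_l α^l_ij · x^l_ij with α^l_ij = exp(λ^l_ij) / Σ_m exp(λ^m_ij), so the weights sum to 1 at every location. In a real network the scores come from 1 × 1 convolutions; here they are passed in directly, and the shapes and random values are hypothetical.

```python
import numpy as np


def asff_fuse(features, logits):
    """Fuse L feature levels that were already resized to a common resolution.

    features: (L, C, H, W); logits: (L, H, W) per-pixel level scores
    (in ASFF these come from 1x1 convolutions on each level).
    """
    logits = logits - logits.max(axis=0, keepdims=True)      # stabilize the softmax
    e = np.exp(logits)
    alpha = e / e.sum(axis=0, keepdims=True)                  # weights sum to 1 at every pixel
    fused = (alpha[:, None, :, :] * features).sum(axis=0)     # (C, H, W)
    return fused, alpha


rng = np.random.default_rng(0)
feats = rng.standard_normal((3, 4, 5, 5))       # hypothetical: 3 levels, 4 channels, 5 x 5 map
fused, alpha = asff_fuse(feats, rng.standard_normal((3, 5, 5)))
```

Because the weights are per pixel, one level can dominate where it carries fine pest contours while another dominates elsewhere; a level whose scores are much higher than the rest effectively passes through unchanged.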

As shown in Figure 8, AFPN optimizes multi-scale information fusion through hierarchical feature integration. As the backbone generates features from bottom to top, AFPN fuses them incrementally: shallow high-resolution features are integrated first, middle-level semantic features are fused next, and high-level features are incorporated last.
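The incremental fusion order can be sketched as follows. This is a simplified illustration under stated assumptions: all levels share one channel count, resampling uses nearest-neighbour repetition and average pooling, and a plain mean stands in for ASFF weighting (the original AFPN uses learned resampling layers and ASFF weights). The strides and input size are hypothetical, and the function names are illustrative.

```python
import numpy as np


def resize(x, src_stride, dst_stride):
    """Resample a (C, H, W) map between strides whose ratio is an integer.

    Upsampling repeats pixels (nearest neighbour); downsampling uses average pooling.
    """
    if dst_stride < src_stride:
        f = src_stride // dst_stride
        return x.repeat(f, axis=1).repeat(f, axis=2)
    if dst_stride > src_stride:
        f = dst_stride // src_stride
        c, h, w = x.shape
        return x.reshape(c, h // f, f, w // f, f).mean(axis=(2, 4))
    return x


def fuse_levels(feats, strides, out_stride):
    """Stand-in for ASFF: resample every level to out_stride and take a plain mean."""
    return np.mean([resize(f, s, out_stride) for f, s in zip(feats, strides)], axis=0)


def progressive_fusion(feats, strides):
    """Fuse the two shallowest levels first, then bring in one deeper level per stage.

    feats are ordered shallow (high resolution) to deep (low resolution).
    """
    current = [feats[0]]
    for n in range(1, len(feats)):
        current = current + [feats[n]]           # add the next deeper backbone level
        used = strides[:n + 1]
        # Every output scale sees every input level, including non-adjacent ones.
        current = [fuse_levels(current, used, s) for s in used]
    return current


rng = np.random.default_rng(0)
strides = (8, 16, 32)                            # hypothetical P3-P5 strides for a 64 x 64 input
feats = [rng.standard_normal((4, 64 // s, 64 // s)) for s in strides]
outs = progressive_fusion(feats, strides)
print([o.shape for o in outs])                   # [(4, 8, 8), (4, 4, 4), (4, 2, 2)]
```

The first loop iteration corresponds to a two-level fusion (ASFF2) and the second to a three-level fusion (ASFF3), so the deepest, most abstract level only joins after the shallower levels have already been merged.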

Because non-adjacent layers (e.g., the bottom and top layers) differ greatly in semantics, cross-layer connections in traditional pyramid networks often cause conflicts between features. AFPN therefore introduces a dynamic spatial weight allocation module (indicated by the blue connection lines in the figure), which builds a feature importance evaluation matrix through a learnable spatial attention mechanism and achieves optimization in three aspects:

Differentiated spatial weight allocation: High weight coefficients are assigned to low-level detail features (e.g., pest appendage contours), while the weight of high-level abstract features is adaptively adjusted according to background complexity.

Conflicting signal suppression: A channel-space dual-dimension calibration is used to eliminate response conflicts between cross-layer feature maps.

Key feature enhancement: Spatial pyramid pooling is used to strengthen the structural significance of pest bodies.

Through the coordinated operation of lateral connections (cross-resolution feature bridging), downsampling (spatial information compression), and upsampling (detail restoration), this design significantly improves the feature separability of overlapping pest targets.