Yield Estimation in Banana Orchards Based on DeepSORT and RGB-Depth Images

Abstract

1. Introduction

2. Materials and Methods

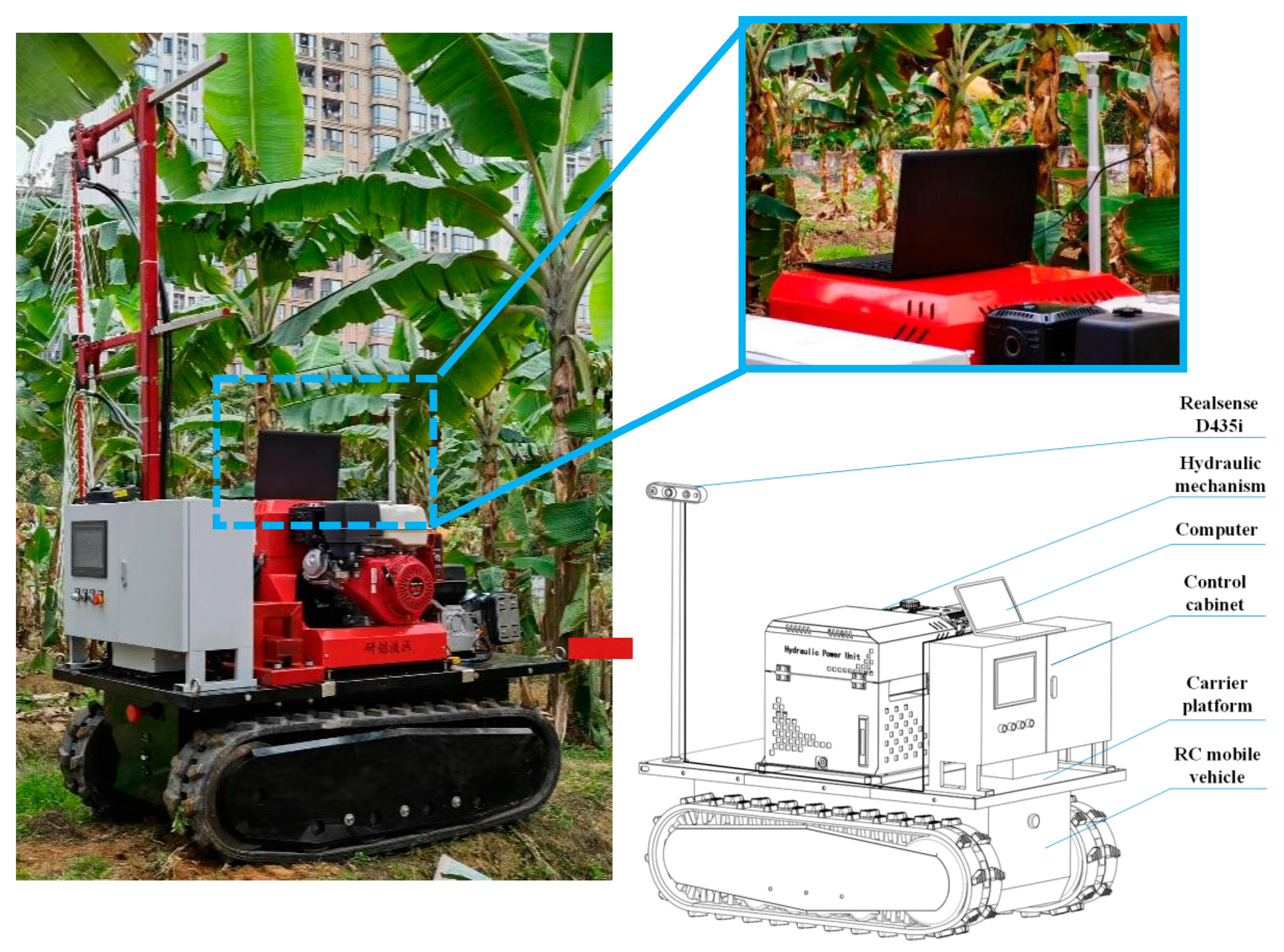

2.1. Sensor System and Data Collection

2.2. Weight Estimation of a Bunch of Bananas

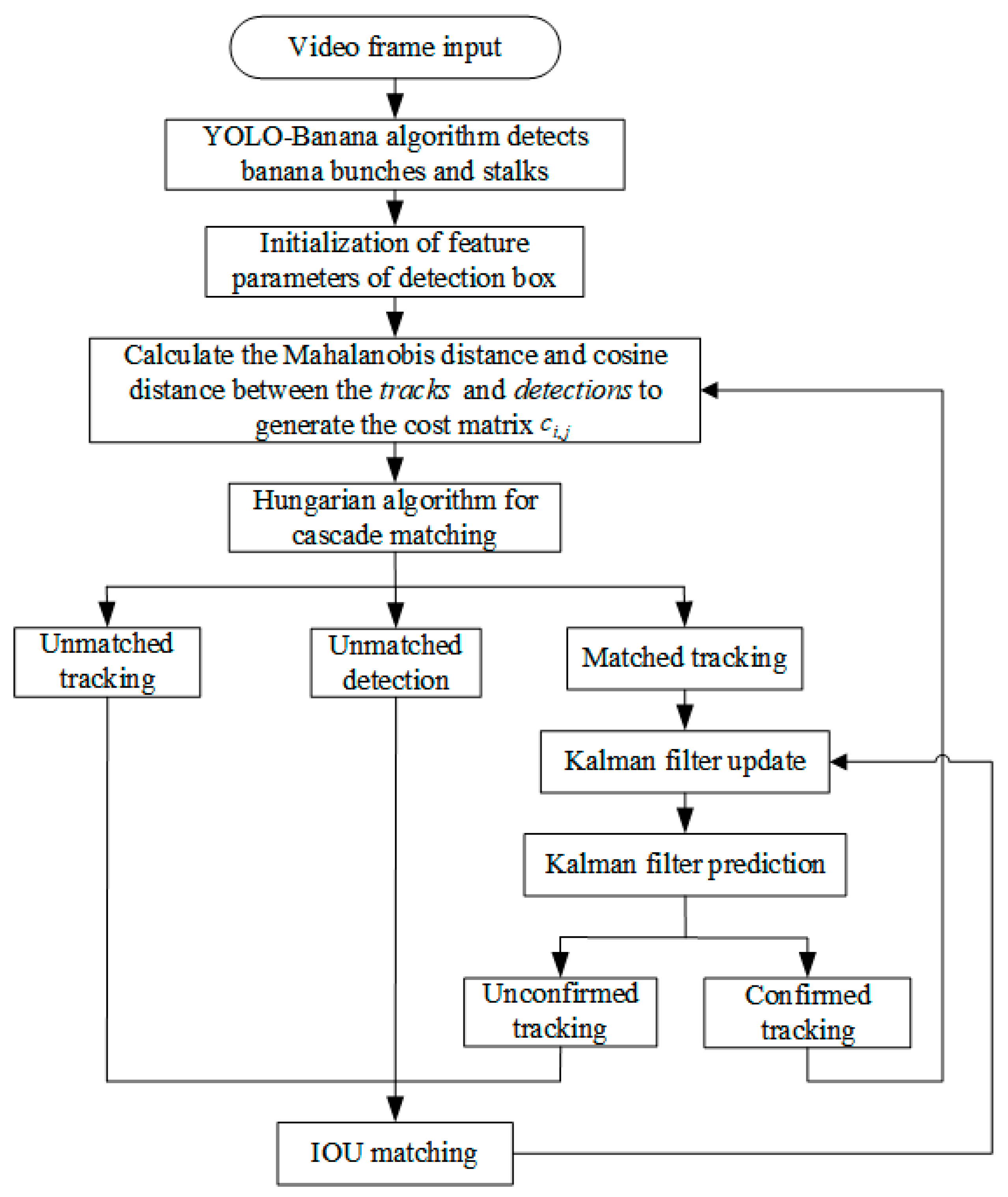

2.3. Banana Bunches Tracking and Counting

2.4. Yield Estimation Process in Banana Orchards

3. Results and Discussion

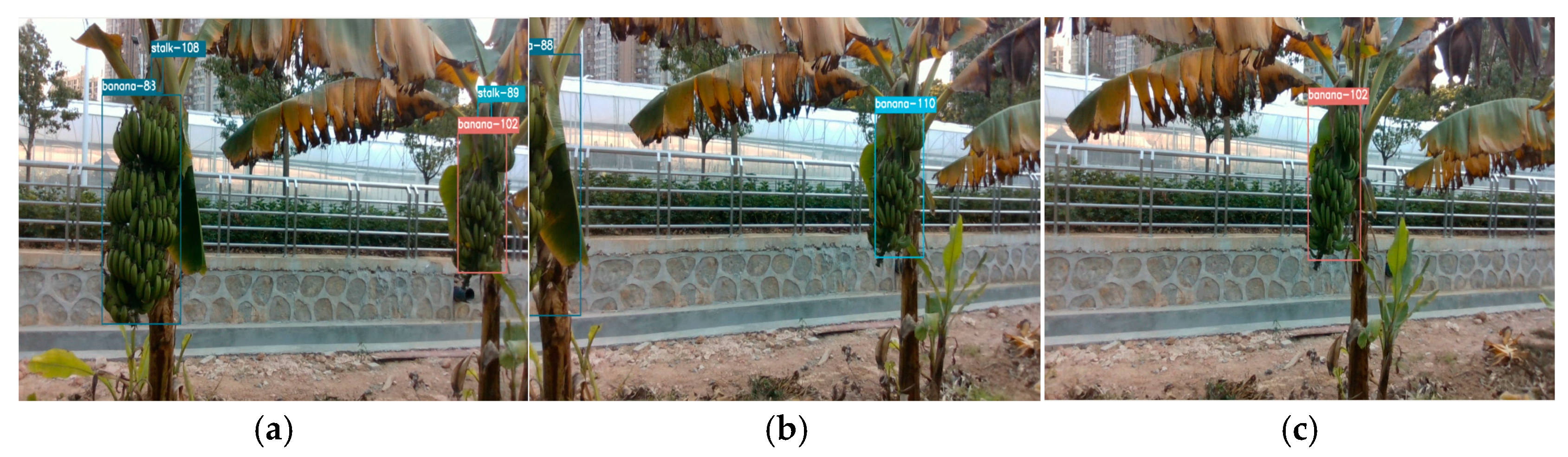

3.1. Tracking Results

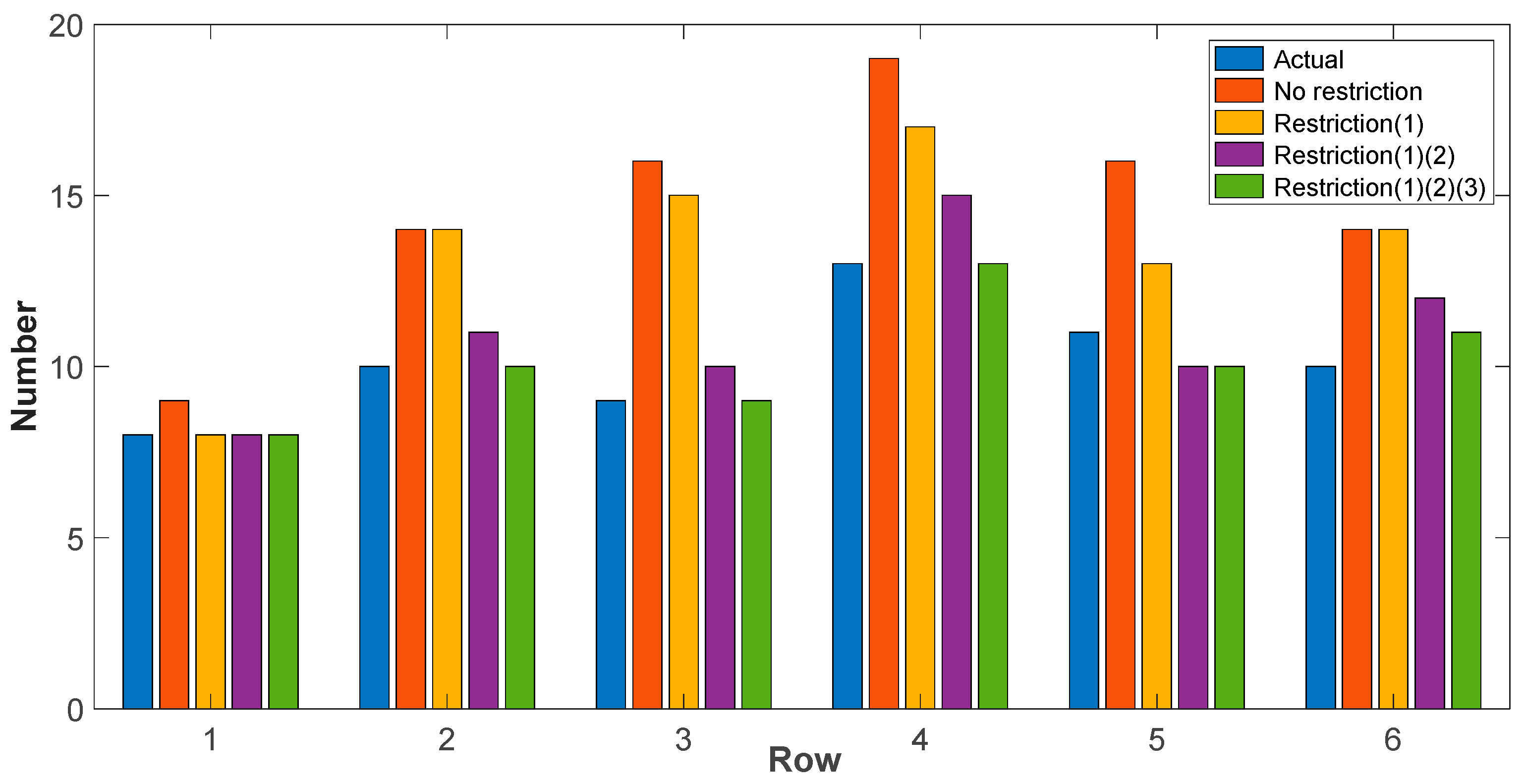

3.2. Contribution of Restrictions in Counting

3.3. Banana Bunch Counting Results

3.4. Yield Estimation Results

3.5. Discussions

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. He, L.; Fang, W.; Zhao, G.; Wu, Z.; Fu, L.; Li, R.; Majeed, Y.; Dhupia, J. Fruit yield prediction and estimation in orchards: A state-of-the-art comprehensive review for both direct and indirect methods. Comput. Electron. Agric. 2022, 195, 106812.

2. Huang, Y.; Qian, Y.; Wei, H.; Lu, Y.; Ling, B.; Qin, Y. A survey of deep learning-based object detection methods in crop counting. Comput. Electron. Agric. 2023, 215, 108425.

3. Noor, S.; Tajik, O.; Golzar, J. Simple random sampling. Int. J. Educ. Lang. Stud. 2022, 1, 78–82.

4. Tang, Y.; Qiu, J.; Zhang, Y.; Wu, D.; Cao, Y.; Zhao, K.; Zhu, L. Optimization strategies of fruit detection to overcome the challenge of unstructured background in field orchard environment: A review. Precis. Agric. 2023, 24, 1183–1219.

5. Chen, M.; Chen, Z.; Luo, L.; Tang, Y.; Cheng, J.; Wei, H.; Wang, J. Dynamic visual servo control methods for continuous operation of a fruit harvesting robot working throughout an orchard. Comput. Electron. Agric. 2024, 219, 108774.

6. Mekhalfi, M.L.; Nicolò, C.; Ianniello, I.; Calamita, F.; Goller, R.; Barazzuol, M.; Melgani, F. Vision system for automatic on-tree kiwifruit counting and yield estimation. Sensors 2020, 20, 4214.

7. Roy, P.; Kislay, A.; Plonski, P.A.; Luby, J.; Isler, V. Vision-based preharvest yield mapping for apple orchards. Comput. Electron. Agric. 2019, 164, 104897.

8. Méndez, V.; Pérez-Romero, A.; Sola-Guirado, R.; Miranda-Fuentes, A.; Manzano-Agugliaro, F.; Zapata-Sierra, A.; Rodríguez-Lizana, A. In-field estimation of orange number and size by 3D laser scanning. Agronomy 2019, 9, 885.

9. Uribeetxebarria, A.; Martínez-Casasnovas, J.A.; Tisseyre, B.; Guillaume, S.; Escolà, A.; Rosell-Polo, J.R.; Arnó, J. Assessing ranked set sampling and ancillary data to improve fruit load estimates in peach orchards. Comput. Electron. Agric. 2019, 164, 104931.

10. Apolo-Apolo, O.E.; Martínez-Guanter, J.; Egea, G.; Raja, P.; Pérez-Ruiz, M. Deep learning techniques for estimation of the yield and size of citrus fruits using a UAV. Eur. J. Agron. 2020, 115, 126030.

11. Li, D.; Sun, X.; Jia, Y.; Yao, Z.; Lin, P.; Chen, Y.; Zhou, H.; Zhou, Z.; Wu, K.; Shi, L.; et al. A longan yield estimation approach based on UAV images and deep learning. Front. Plant Sci. 2023, 14, 1132909.

12. Mathew, J.; Delavarpour, N.; Miranda, C.; Stenger, J.; Zhang, Z.; Aduteye, J.; Flores, P. A novel approach to pod count estimation using a depth camera in support of soybean breeding applications. Sensors 2023, 23, 6506.

13. Wu, Y.; Chen, J.; He, L.; Gui, J.; Jia, J. An RGB-D object detection model with high-generalization ability applied to tea harvesting robot for outdoor cross-variety tea shoots detection. J. Field Robot. 2024, 41, 1167–1186.

14. Yang, L.; Noguchi, T.; Hoshino, Y. Development of a pumpkin fruits pick-and-place robot using an RGB-D camera and a YOLO based object detection AI model. Comput. Electron. Agric. 2024, 227, 109625.

15. Palacios, F.; Diago, M.P.; Melo-Pinto, P.; Tardaguila, J. Early yield prediction in different grapevine varieties using computer vision and machine learning. Precis. Agric. 2023, 24, 407–435.

16. Fu, L.; Wu, F.; Zou, X.; Jiang, Y.; Lin, J.; Yang, Z.; Duan, J. Fast detection of banana bunches and stalks in the natural environment based on deep learning. Comput. Electron. Agric. 2022, 194, 106800.

17. Fu, L.; Yang, Z.; Wu, F.; Zou, X.; Lin, J.; Cao, Y.; Duan, J. YOLO-Banana: A lightweight neural network for rapid detection of banana bunches and stalks in the natural environment. Agronomy 2022, 12, 391.

18. Zhou, L.; Yang, Z.; Deng, F.; Zhang, J.; Xiao, Q.; Fu, L.; Duan, J. Banana bunch weight estimation and stalk central point localization in banana orchards based on RGB-D images. Agronomy 2024, 14, 1123.

19. Wu, F.; Yang, Z.; Mo, X.; Wu, Z.; Tang, W.; Duan, J.; Zou, X. Detection and counting of banana bunches by integrating deep learning and classic image-processing algorithms. Comput. Electron. Agric. 2023, 209, 107827.

20. Stein, M.; Bargoti, S.; Underwood, J. Image based mango fruit detection, localisation and yield estimation using multiple view geometry. Sensors 2016, 16, 1915.

21. Santos, T.T.; de Souza, K.X.; Neto, J.C.; Koenigkan, L.V.; Moreira, A.S.; Ternes, S. Multiple orange detection and tracking with 3-D fruit relocalization and neural-net based yield regression in commercial sweet orange orchards. Comput. Electron. Agric. 2024, 224, 109199.

22. Tan, C.; Sun, J.; Paterson, A.H.; Song, H.; Li, C. Three-view cotton flower counting through multi-object tracking and RGB-D imagery. Biosyst. Eng. 2024, 246, 233–247.

23. Hu, J.; Fan, C.; Wang, Z.; Ruan, J.; Wu, S. Fruit detection and counting in apple orchards based on improved YOLOv7 and multi-object tracking methods. Sensors 2023, 23, 5903.

24. Tu, S.; Huang, Y.; Liang, Y.; Liu, H.; Cai, Y.; Lei, H. A passion fruit counting method based on the lightweight YOLOv5s and improved DeepSORT. Precis. Agric. 2024, 25, 1731–1750.

25. Wojke, N.; Bewley, A.; Paulus, D. Simple online and realtime tracking with a deep association metric. In Proceedings of the 2017 IEEE International Conference on Image Processing (ICIP), Beijing, China, 17 September 2017.

26. Escamilla, L.D.V.; Gómez-Espinosa, A.; Cabello, J.A.E.; Cantoral-Ceballos, J.A. Maturity recognition and fruit counting for sweet peppers in greenhouses using deep learning neural networks. Agriculture 2024, 14, 331.

27. Wang, C.; Wang, C.; Wang, L.; Li, Y.; Lan, Y. Real-time tracking based on improved YOLOv5 detection in orchard environment for dragon fruit. J. ASABE 2023, 66, 1109–1124.

28. Cao, D.; Luo, W.; Tang, R.; Liu, Y.; Zhao, J.; Li, X.; Yuan, L. Research on apple detection and tracking count in complex scenes based on the improved YOLOv7-Tiny-PDE. Agriculture 2025, 15, 483.

29. Zheng, Z.; Xiong, J.; Wang, X.; Li, Z.; Huang, Q.; Chen, H.; Han, Y. An efficient online citrus counting system for large-scale unstructured orchards based on the unmanned aerial vehicle. J. Field Robot. 2023, 40, 552–573.

30. Feng, Y.; Ma, W.; Tan, Y.; Yan, H.; Qian, J.; Tian, Z.; Gao, A. Approach of dynamic tracking and counting for obscured citrus in smart orchard based on machine vision. Appl. Sci. 2024, 14, 1136.

31. Du, P.; Chen, S.; Li, X.; Hu, W.; Lan, N.; Lei, X.; Xiang, Y. Green pepper fruits counting based on improved DeepSORT and optimized YOLOv5s. Front. Plant Sci. 2024, 15, 1417682.

32. Wu, M.; Yuan, K.; Shui, Y.; Wang, Q.; Zhao, Z. A lightweight method for ripeness detection and counting of Chinese flowering cabbage in the natural environment. Agronomy 2024, 14, 1835.

33. Qi, Z.; Zhang, T.; Yuan, T.; Zhou, W.; Zhang, W. Assessment of the tomato cluster yield estimation algorithms via tracking-by-detection approaches. Inf. Process. Agric. 2025, in press.

34. Hernandez, B.; Medeiros, H. Multi-object tracking in agricultural applications using a vision transformer for spatial association. Comput. Electron. Agric. 2024, 226, 109379.

35. Saraceni, L.; Motoi, I.M.; Nardi, D.; Ciarfuglia, T.A. AgriSORT: A simple online real-time tracking-by-detection framework for robotics in precision agriculture. In Proceedings of the 2024 IEEE International Conference on Robotics and Automation (ICRA), Yokohama, Japan, 13 May 2024.

36. Tan, S.; Kuang, Z.; Jin, B. AppleYOLO: Apple yield estimation method using improved YOLOv8 based on Deep OC-SORT. Expert Syst. Appl. 2025, 272, 126764.

37. Vemuru, S.; Ankit, K.; Venkatesan, S.P.; Ghose, D.; Kolathaya, S. Yield prediction and counting using world coordinates. SSRN 2024, 4829514.

38. Shui, Y.; Yuan, K.; Wu, M.; Zhao, Z. Improved multi-size, multi-target and 3D position detection network for flowering Chinese cabbage based on YOLOv8. Plants 2024, 13, 2808.

39. Rong, J.; Zhou, H.; Zhang, F.; Yuan, T.; Wang, P. Tomato cluster detection and counting using improved YOLOv5 based on RGB-D fusion. Comput. Electron. Agric. 2023, 207, 107741.

40. Abeyrathna, R.R.D.; Nakaguchi, V.M.; Minn, A.; Ahamed, T. Recognition and counting of apples in a dynamic state using a 3D camera and deep learning algorithms for robotic harvesting systems. Sensors 2023, 23, 3810.

41. Villacrés, J.; Viscaino, M.; Delpiano, J.; Vougioukas, S.; Cheein, F.A. Apple orchard production estimation using deep learning strategies: A comparison of tracking-by-detection algorithms. Comput. Electron. Agric. 2023, 204, 107513.

42. Zhou, X.; Zhang, Y.; Jiang, X.; Riaz, K.; Rosenbaum, P.; Lefsrud, M.; Sun, S. Advancing tracking-by-detection with multimap: Towards occlusion-resilient online multiclass strawberry counting. Expert Syst. Appl. 2024, 255, 124587.

43. Jianping, Q.; Bin, X.; Wu, X.; Chen, M.; Wang, Y. A smartphone-based apple yield estimation application using imaging features and the ANN method in mature period. Sci. Agric. 2018, 75, 273–280.

44. Hondo, T.; Kobayashi, K.; Aoyagi, Y. Real-time prediction of growth characteristics for individual fruits using deep learning. Sensors 2022, 22, 6473.

45. Devanna, R.P.; Romeo, L.; Reina, G.; Milella, A. Yield estimation in precision viticulture by combining deep segmentation and depth-based clustering. Comput. Electron. Agric. 2025, 232, 110025.

46. Sarron, J.; Malézieux, É.; Sané, C.; Faye, É. Mango yield mapping at the orchard scale based on tree structure and land cover assessed by UAV. Remote Sens. 2018, 10, 1900.

47. Wittstruck, L.; Kühling, I.; Trautz, D.; Kohlbrecher, M.; Jarmer, T. UAV-based RGB imagery for Hokkaido pumpkin (Cucurbita max.) detection and yield estimation. Sensors 2021, 21, 118.

48. Mokria, M.; Gebrekirstos, A.; Said, H.; Hadgu, K.; Hagazi, N.; Dubale, W.; Bräuning, A. Fruit weight and yield estimation models for five avocado cultivars in Ethiopia. Environ. Res. Commun. 2022, 4, 75013.

49. Bishop, G.; Welch, G. An introduction to the Kalman filter. Proc. SIGGRAPH Course 2001, 8, 41.

50. Kuhn, H.W. The Hungarian method for the assignment problem. Nav. Res. Logist. Q. 1955, 2, 83–97.

51. Jing, T.; Xie, J.; Zhou, D. Banana Cultivation and Pest and Disease Control, 1st ed.; China Agriculture Press: Beijing, China, 2022; pp. 31–87.

| Row Number | Number of Banana Bunches | Actual Weight (kg) | Empirical Prediction: Predicted Weight (kg) | Empirical Prediction: Accuracy (%) | Model Prediction: Predicted Weight (kg) | Model Prediction: Accuracy (%) |
|---|---|---|---|---|---|---|
| 1 | 8 | 176.72 | 195 | 89.66 | 167.5 | 94.78 |
| 2 | 10 | 192.85 | 211 | 90.59 | 206.13 | 93.11 |
| 3 | 9 | 176.34 | 163 | 92.44 | 189.36 | 92.62 |
| 4 | 13 | 249.91 | 277 | 89.16 | 263.51 | 94.59 |
| 5 | 11 | 169.2 | 178 | 94.8 | 154.25 | 91.16 |
| 6 | 10 | 198.67 | 182 | 91.61 | 214.94 | 91.81 |
| Total | 61 | 1163.69 | 1206 | 96.36 | 1195.69 | 97.25 |
| Average Accuracy | -- | -- | -- | 91.37 | -- | 93.01 |
| RMSE | -- | -- | 17.91 kg | -- | 12.81 kg | -- |
| 95% confidence interval | -- | -- | [−18.80, 4.02] kg | -- | [−10.19, 6.15] kg | -- |
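The accuracy columns in the yield table are consistent with a relative-error definition, accuracy = (1 − |predicted − actual| / actual) × 100. This formula is an assumption inferred from the tabulated numbers and is not stated in this excerpt. The sketch below recomputes the per-row, total, and average accuracies under that assumption. The helper names `accuracy` and `summarize` are hypothetical.

```python
"""Recompute the accuracy columns of the banana yield table (a sketch).

Assumption: accuracy = (1 - |predicted - actual| / actual) * 100. This
definition is inferred from the tabulated values, not quoted from the paper.
"""

# (actual kg, empirical prediction kg, model prediction kg) per orchard row,
# copied from the table above.
ROWS = {
    1: (176.72, 195.0, 167.5),
    2: (192.85, 211.0, 206.13),
    3: (176.34, 163.0, 189.36),
    4: (249.91, 277.0, 263.51),
    5: (169.2, 178.0, 154.25),
    6: (198.67, 182.0, 214.94),
}


def accuracy(predicted: float, actual: float) -> float:
    """Relative accuracy in percent under the assumed definition."""
    return (1.0 - abs(predicted - actual) / actual) * 100.0


def summarize(rows):
    """Return per-row accuracies, total-weight accuracies, and mean per-row accuracies."""
    per_row = {
        r: (accuracy(emp, act), accuracy(mod, act))
        for r, (act, emp, mod) in rows.items()
    }
    # Accuracy of the summed (whole-orchard) weight, as in the "Total" row.
    total_act = sum(act for act, _, _ in rows.values())
    total_emp = sum(emp for _, emp, _ in rows.values())
    total_mod = sum(mod for _, _, mod in rows.values())
    totals = (accuracy(total_emp, total_act), accuracy(total_mod, total_act))
    # Unweighted mean of the per-row accuracies, as in the "Average Accuracy" row.
    n = len(per_row)
    means = (
        sum(e for e, _ in per_row.values()) / n,
        sum(m for _, m in per_row.values()) / n,
    )
    return per_row, totals, means


if __name__ == "__main__":
    per_row, totals, means = summarize(ROWS)
    for r, (emp, mod) in per_row.items():
        print(f"row {r}: empirical {emp:.2f}%  model {mod:.2f}%")
    print(f"total: empirical {totals[0]:.2f}%  model {totals[1]:.2f}%")
    print(f"mean:  empirical {means[0]:.2f}%  model {means[1]:.2f}%")
```

Under this definition the recomputed values match the table to two decimals in every cell except one. Row 4 of the model prediction recomputes to 94.56% against the tabulated 94.59%. The "Total" accuracy is taken on summed weights, which lets row-level over- and under-estimates cancel. This is why both totals (96.36% and 97.25%) exceed the corresponding per-row averages. The RMSE and confidence-interval rows are not recomputed here, because this excerpt does not state how they were derived.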

| Fruit | Sensor | Detector | Tracker | Yield Estimation Type | Results |
|---|---|---|---|---|---|
| Grapevine [15] | RGB | SVR | -- | Counting-based (image-based) | NRMSE of 29.77% |
| Longan [11] | RGB | SF-YD model | -- | Counting-based (image-based) | Average error rate of 2.99% |
| Passion fruit [24] | RGB | YOLOv5s | DeepSORT | Counting-based (video-based) | Average accuracy of 95.1% |
| Strawberry [42] | RGB | YOLOv5s | DeepSORT | Counting-based (video-based) | Lowest error rate of 8.7% |
| Cotton flower [22] | RGB-D | YOLOv8 | RAFT | Counting-based (video-based) | Mean absolute percentage error of 6.22% |
| Tomato cluster [39] | RGB-D | YOLOv5 | ByteTrack | Counting-based (video-based) | Average accuracy of 95.1% |
| Grape cluster [45] | RGB-D | MANet | -- | Weight-based (volume and image) | Average error of 12% |
| Banana (this work) | RGB-D | YOLO-Banana | DeepSORT | Weight-based | Average accuracy of 93.01% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhou, L.; Yang, Z.; Fu, L.; Duan, J. Yield Estimation in Banana Orchards Based on DeepSORT and RGB-Depth Images. Agronomy 2025, 15, 1119. https://doi.org/10.3390/agronomy15051119

Zhou L, Yang Z, Fu L, Duan J. Yield Estimation in Banana Orchards Based on DeepSORT and RGB-Depth Images. Agronomy. 2025; 15(5):1119. https://doi.org/10.3390/agronomy15051119

Chicago/Turabian Style: Zhou, Lei, Zhou Yang, Lanhui Fu, and Jieli Duan. 2025. "Yield Estimation in Banana Orchards Based on DeepSORT and RGB-Depth Images" Agronomy 15, no. 5: 1119. https://doi.org/10.3390/agronomy15051119

APA Style: Zhou, L., Yang, Z., Fu, L., & Duan, J. (2025). Yield Estimation in Banana Orchards Based on DeepSORT and RGB-Depth Images. Agronomy, 15(5), 1119. https://doi.org/10.3390/agronomy15051119