1. Introduction

The

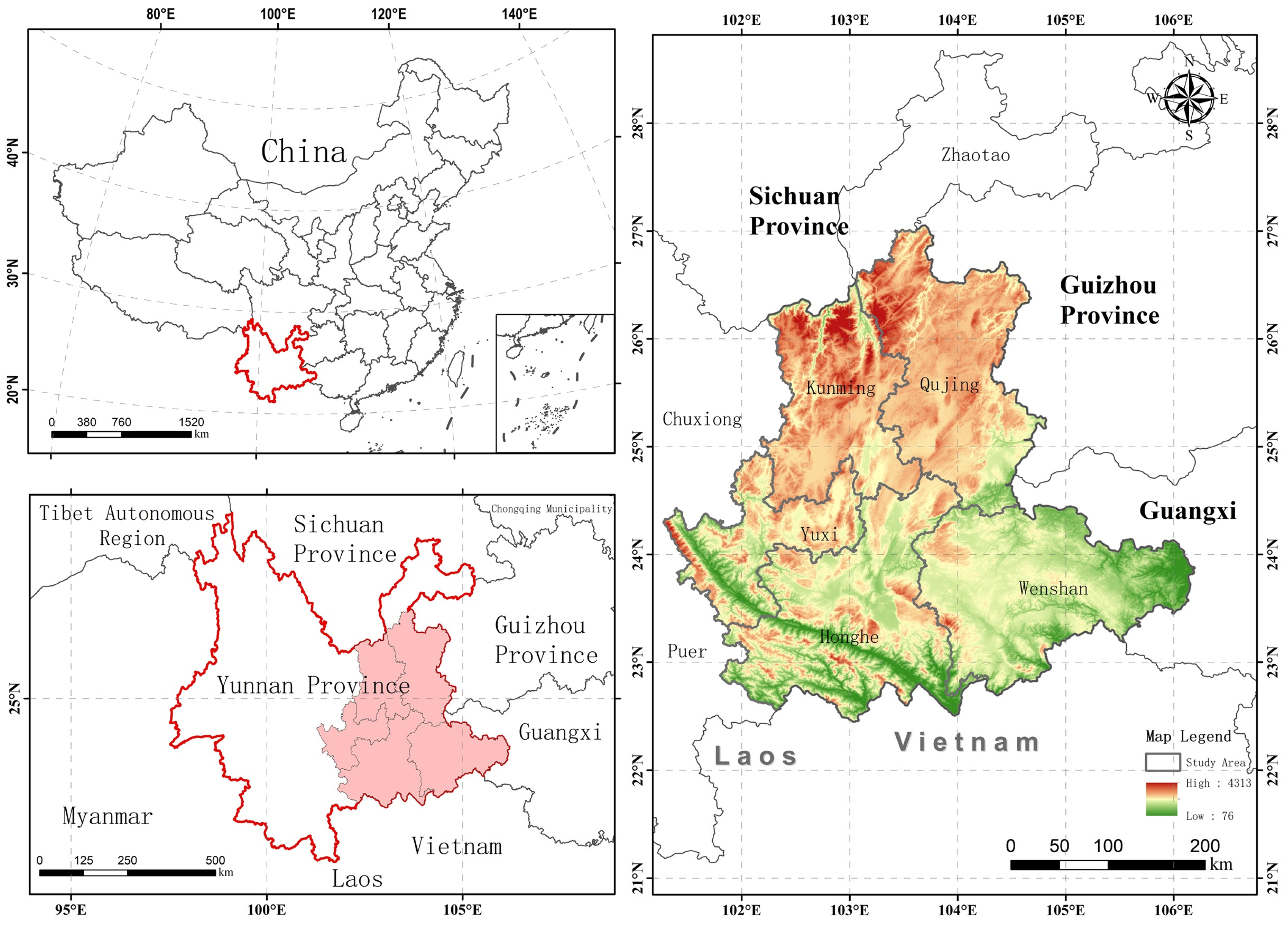

Spodoptera frugiperda (fall armyworm), listed by the Food and Agriculture Organization (FAO) of the United Nations as a major transboundary migratory pest of global concern, has spread from the Americas to nearly one hundred countries and regions worldwide [

1,

2,

3]. Its larvae are highly voracious and destructive, often causing severe yield losses or even total crop failure. The estimated annual economic loss exceeds USD 10 billion, posing a significant threat to the safe production of staple crops such as maize and rice, as well as to global food security and the livelihoods of smallholders [

4,

5,

6].

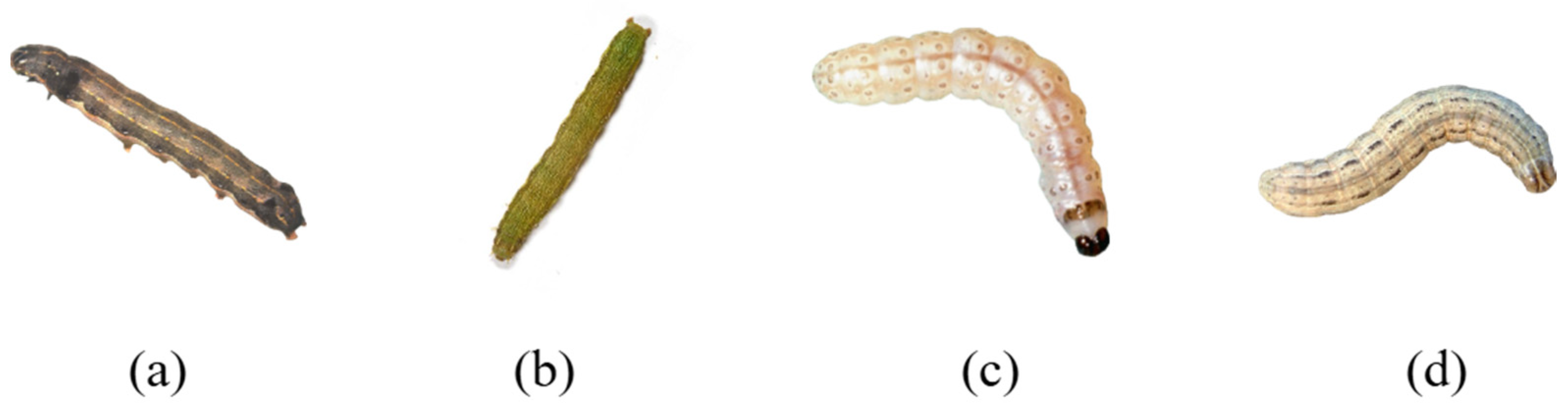

The larvae of S. frugiperda typically undergo six instars, each with distinct behavioral patterns and damage characteristics. Early-instar larvae (1st–3rd instars) tend to cluster on leaf surfaces, producing characteristic “window-pane” feeding symptoms. Although their feeding capacity is limited, this stage is a critical window for effective chemical control, before the larvae adopt the concealed, boring feeding habits of later instars [7,8]. In contrast, late-instar larvae (4th–6th instars, especially the 5th and 6th) are more dispersed, and their feeding intensity increases dramatically, accounting for approximately 80–90% of total larval consumption. They can bore into stems and ears, causing lodging and severe yield reduction. Studies have shown that larval tolerance to insecticides increases significantly with development, and older larvae exhibit strong resistance, which diminishes the effectiveness of chemical control [9]. Differences in susceptibility to natural enemies among instars have also been reported [10,11,12]. Therefore, accurate identification of larval instars, particularly the timely detection of early instars, is of great importance for precision pest management and for minimizing economic losses.

Currently, a major challenge in field control is the real-time, accurate identification of larval instars, especially distinguishing the easily controlled early instars from the highly destructive and resistant late instars. Specific difficulties include the following: (1) early instars are small, cryptically colored, and often hidden on the undersides or in the whorls of leaves, which makes early detection difficult; (2) larval coloration varies with environmental conditions and host plants [13], and although late instars show more distinct morphological traits, they are still easily confused with other noctuid larvae such as Mythimna separata, leading to misidentification by inexperienced personnel; (3) traditional morphological identification relies heavily on expert experience and is time-consuming, which limits its use in large-scale monitoring [14], whereas molecular diagnostic techniques [15] are highly accurate but costly and technically demanding, which restricts their field deployment. Timeliness is a further constraint: in the field, larvae of multiple instars often coexist and develop asynchronously [16]. Missing the early-instar control window allows larvae to progress into more resistant, stem-boring stages, which reduces control efficacy, aggravates economic losses, and increases environmental and pesticide-residue risks.

In recent years, rapid advances in deep learning have driven remarkable progress in agricultural pest image recognition. Researchers have developed a range of deep learning–based models tailored to different crops and pest species, achieving high accuracy and efficiency in classification tasks [17,18,19,20,21,22]. For example, Chiranjeevi et al. [23] proposed an end-to-end framework named InsectNet for high-precision recognition of multiple insect categories in agroecosystems, including pollinators, parasitoids, predators, and pests. Zhang et al. [24] developed a Vision Transformer-based method for crop disease and pest recognition, achieving superior performance to conventional CNNs on two datasets comprising 10 and 15 pest categories. Dharmasasth et al. [25] introduced a CNN ensemble model optimized by a genetic algorithm to improve generalization ability, outperforming both single models and average ensemble strategies on a 10-class insect dataset. An et al. [26] proposed a feature fusion network integrating ResNet, Vision Transformer, and Swin Transformer architectures and employed Grad-CAM-based attention selection for enhanced interpretability. Their model achieved state-of-the-art accuracy on both 20-class subsets and the full IP102 dataset, demonstrating strong robustness under augmented image conditions.

Although deep learning has been widely applied to pest identification, most studies address species-level or disease-type classification, and automatic identification of the larval instars of S. frugiperda remains largely unexplored: to date, few studies have systematically modeled and classified its developmental stages with deep learning. This study aims to fill that gap by proposing a deep learning-based approach for accurate instar recognition of this globally significant pest.

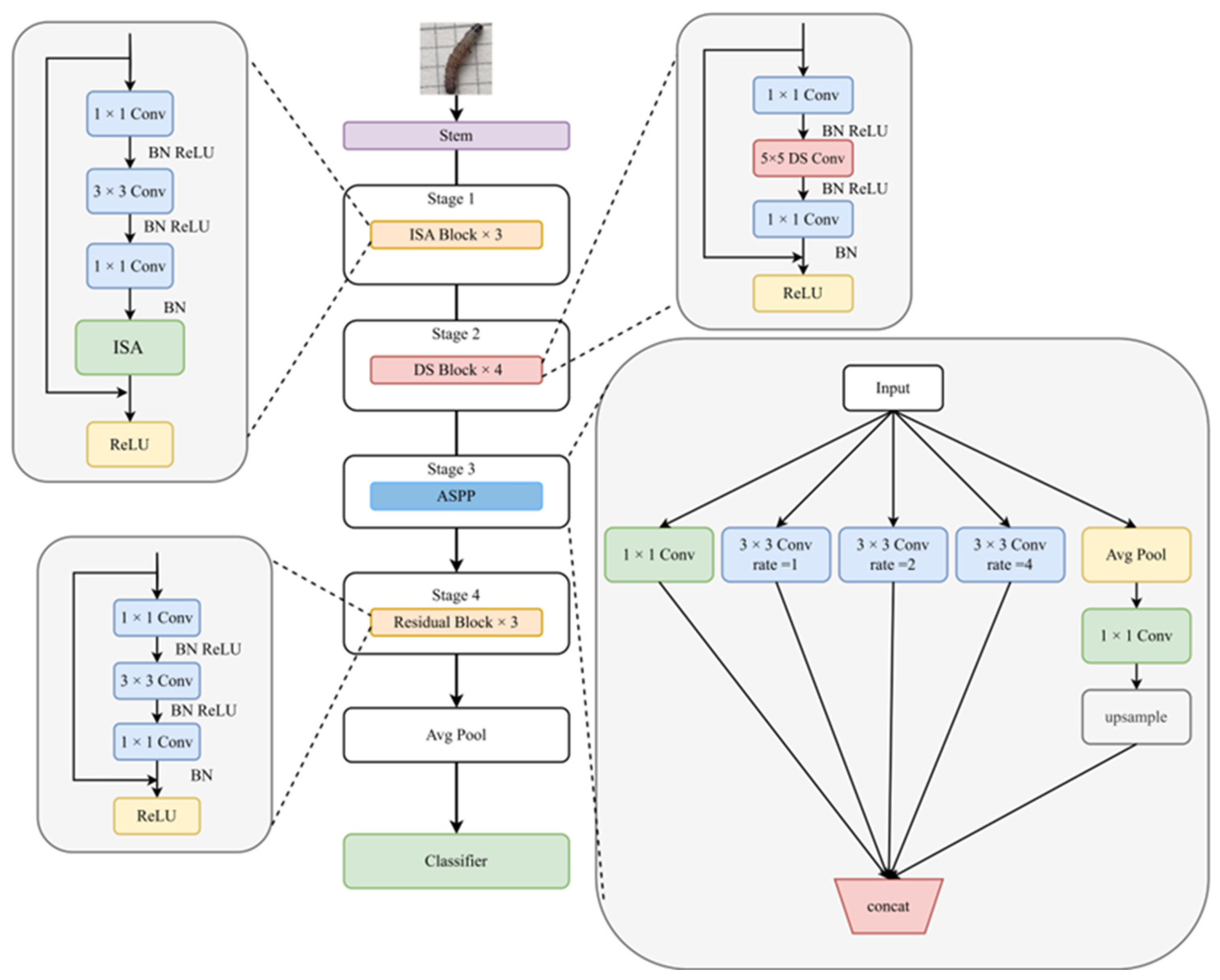

To overcome the aforementioned limitations, this study constructs a high-resolution image dataset of S. frugiperda larvae and, on that basis, develops a structurally improved version of the classical ResNet50 architecture. The resulting recognition model, termed Multi-Scale and Self-Attention ResNet (MSA-ResNet), integrates multi-scale perception and attention mechanisms. It not only improves classification accuracy but also substantially reduces both missed and false detections. The main improvements are as follows:

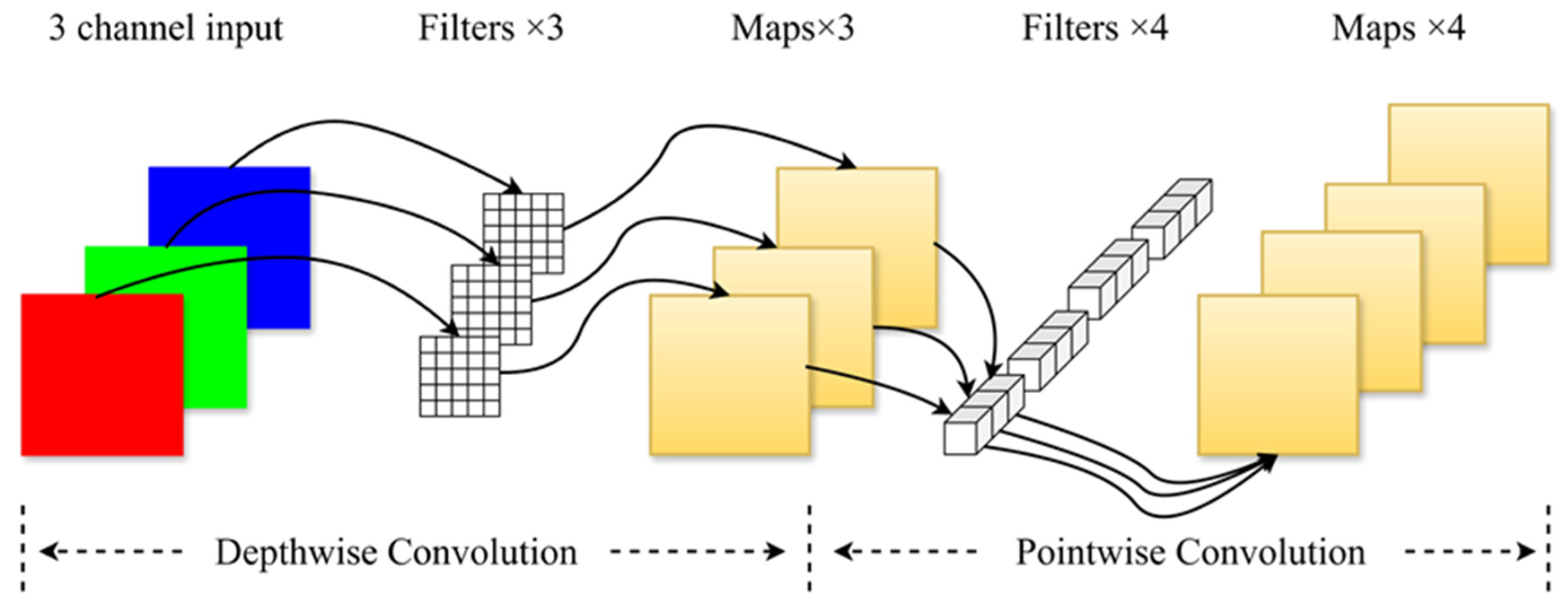

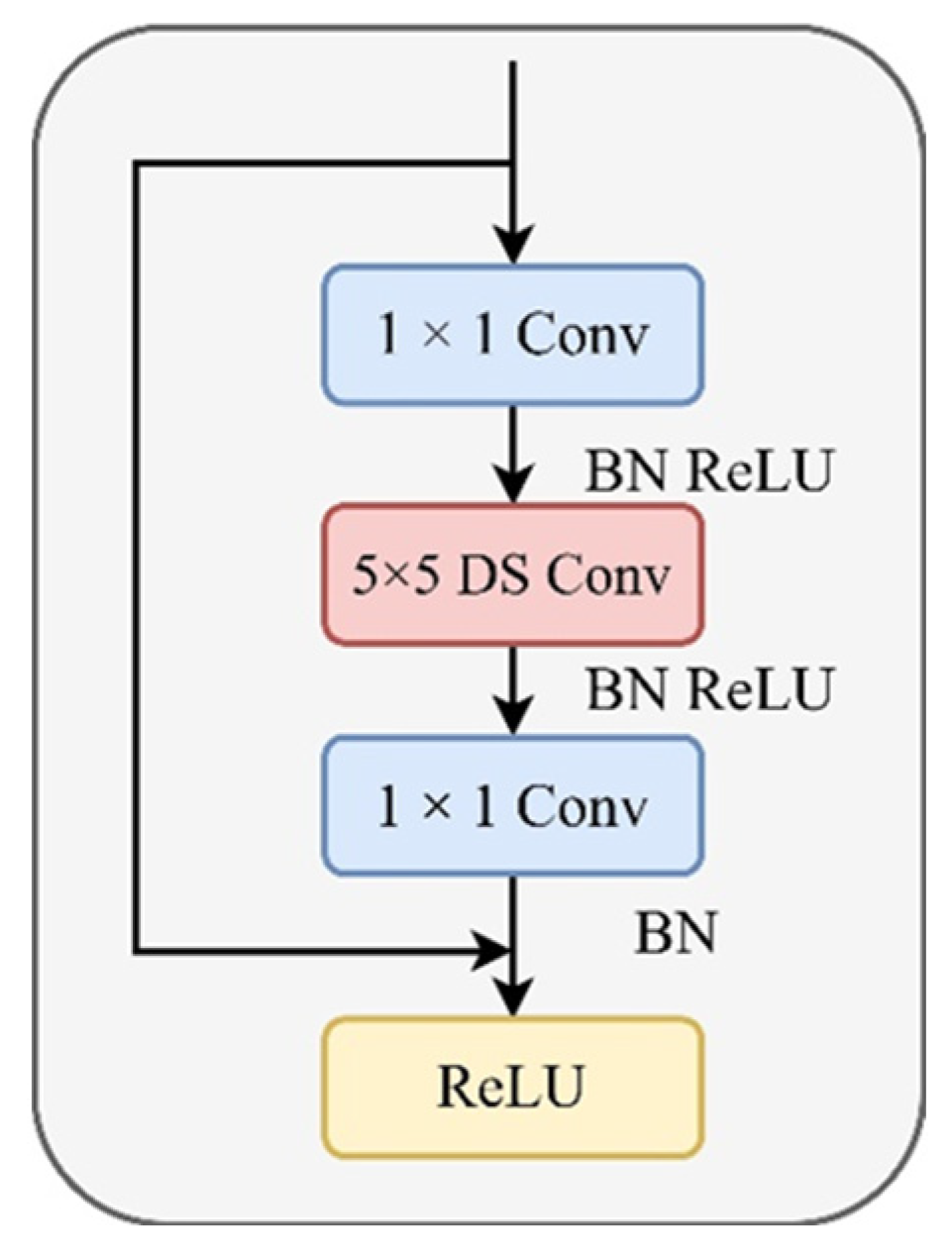

Large convolutional kernels for expanding shallow receptive fields: In the shallow feature extraction module, 5 × 5 depthwise separable convolutions are employed to replace traditional convolution operations. This design significantly enlarges the receptive field, enabling the network to capture richer texture and edge information while effectively reducing the number of parameters and computational complexity.

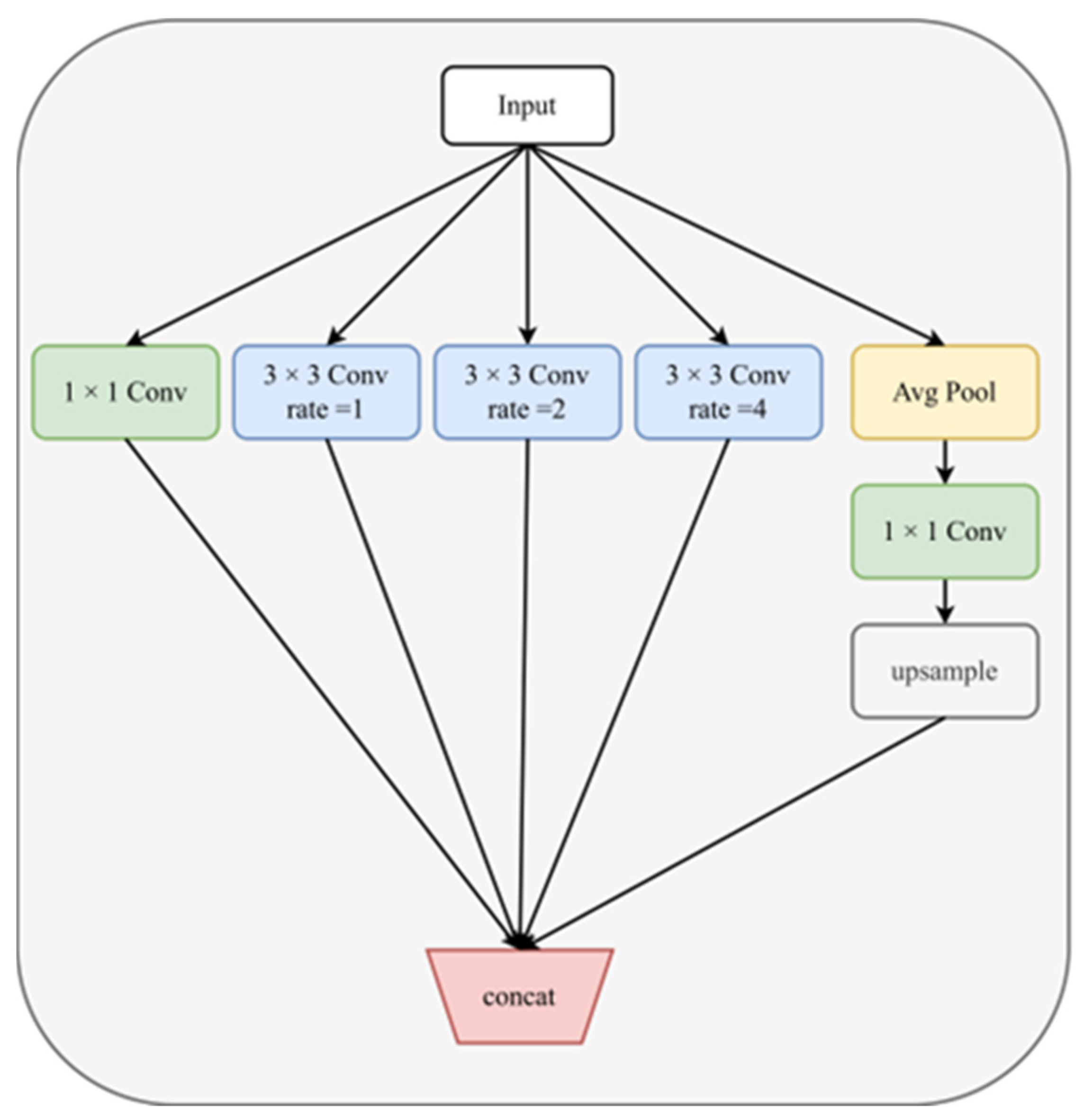

Atrous convolution for enhanced multi-scale perception: In the deep feature extraction stage, atrous (dilated) convolutions are introduced using a spatial pyramid structure with multiple dilation rates (e.g., r = 1, 2, 4). This multi-scale aggregation strengthens the joint modeling of local and global morphological features, thereby improving the model’s ability to distinguish subtle differences between adjacent instar stages.

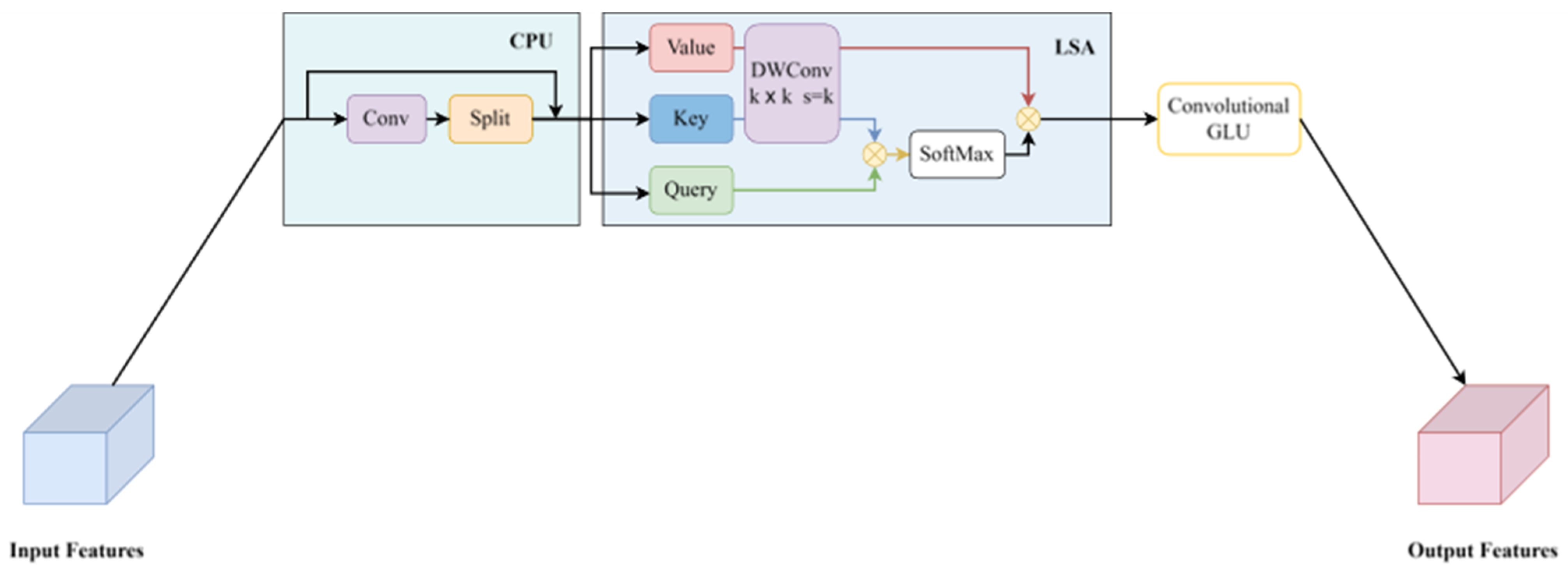

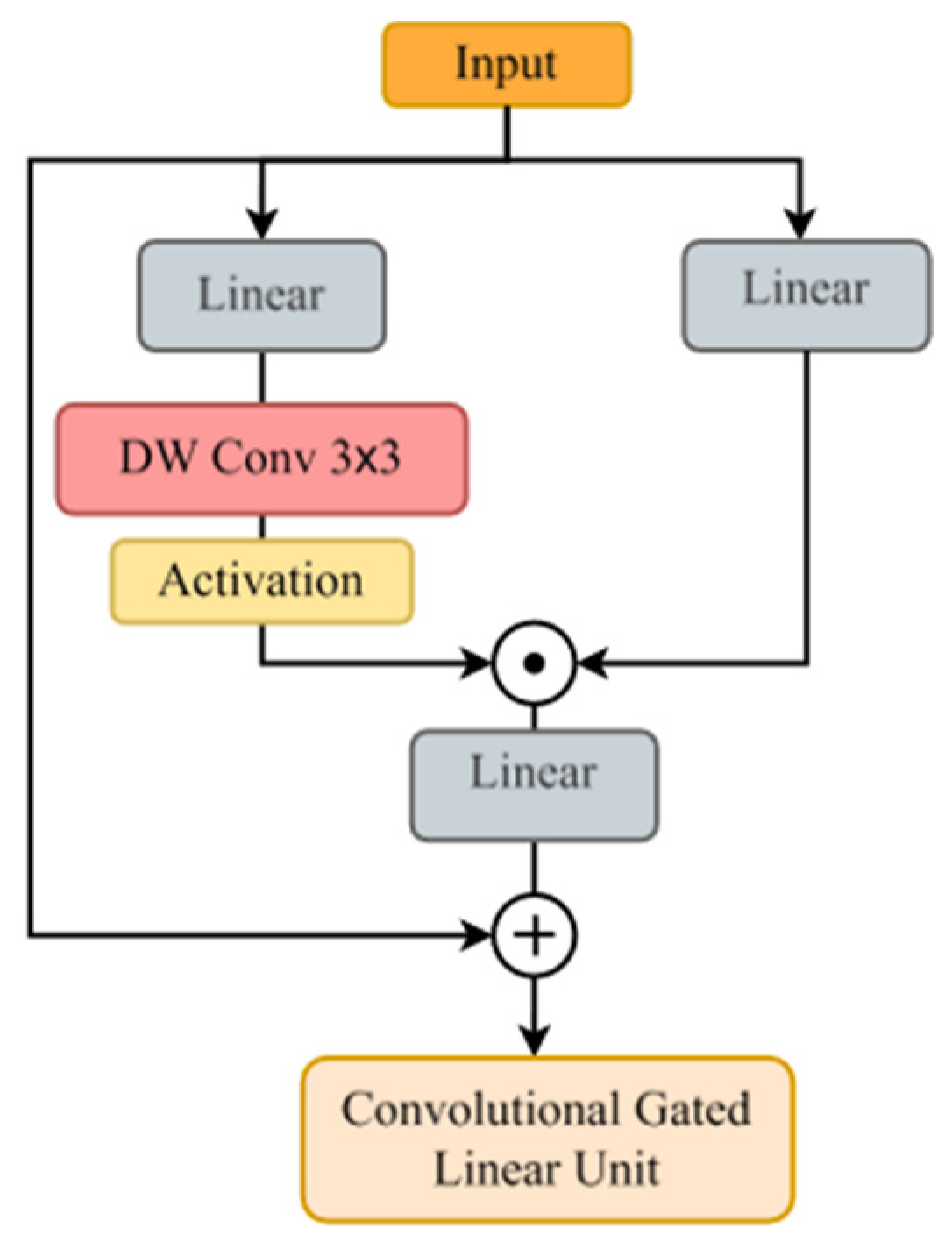

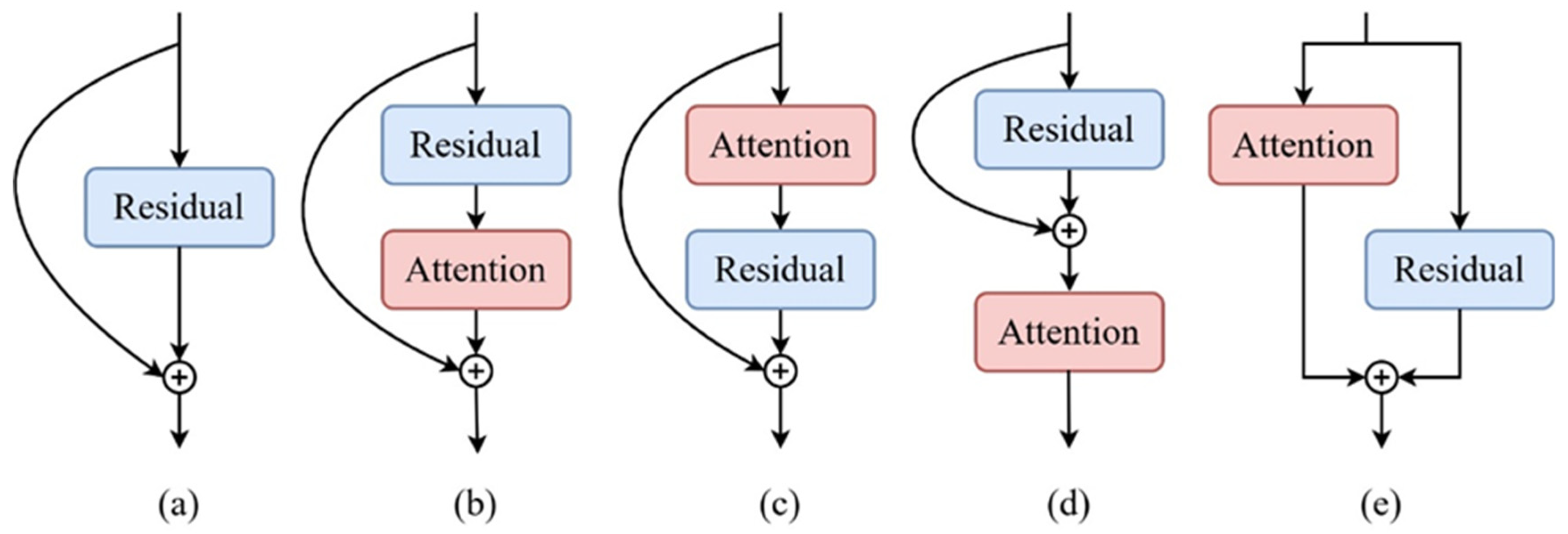

Improved self-attention for key-region modeling: An efficient self-attention module is incorporated into the modified residual blocks to achieve dynamic focusing on key feature regions. This enhancement increases the representational power and spatial modeling depth of the network while maintaining high inference efficiency.
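The attention idea in the last item can be illustrated with a minimal sketch. It uses plain scaled dot-product self-attention over a handful of spatial positions, which is only an assumed stand-in: this section does not specify the form of the improved module, and the identity query/key/value projections, the function names, and the toy feature values are all hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(features):
    """Scaled dot-product self-attention over a list of feature vectors.

    Queries, keys, and values are the features themselves (identity
    projections). This is an assumption made to keep the sketch short;
    a trained module would learn separate projections.
    """
    dim = len(features[0])
    attended = []
    for query in features:
        # Similarity of this position to every position, scaled by sqrt(dim).
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in features
        ]
        weights = softmax(scores)
        # Each output is a weighted average of all value vectors.
        attended.append([
            sum(w * value[j] for w, value in zip(weights, features))
            for j in range(dim)
        ])
    return attended


# Four hypothetical spatial positions with 2-channel features.
positions = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
attended = self_attention(positions)
```

Each output vector is a convex combination of the inputs, weighted toward positions with similar features, which is the sense in which attention "focuses" on related regions.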

Building upon the classical convolutional neural network framework, this study proposes a structural optimization pathway that integrates large convolutional kernels, atrous convolutions, and an improved self-attention mechanism to address issues such as limited receptive fields, insufficient multi-scale feature utilization, and weak responses to critical regions in image classification. The proposed MSA-ResNet model enables high-precision automatic recognition of larval instar stages of S. frugiperda, providing an efficient and stable intelligent tool for pest monitoring. It facilitates accurate developmental-stage identification and early warning, thereby supporting rational pesticide application, reducing chemical use and costs, mitigating environmental pollution, and promoting a shift from experience-based to data-driven decision making in pest management.
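The receptive-field and parameter claims behind the large-kernel and atrous modules can be checked with simple arithmetic. The sketch below counts weights for a standard convolution versus a depthwise separable one, and computes the spatial extent of a dilated kernel. The channel width of 64 and the 3 × 3 base kernel for the dilated branch are assumptions chosen for illustration; neither is stated in this section.

```python
def conv_params(c_in, c_out, k):
    """Weight count of a standard k x k convolution (bias omitted)."""
    return c_in * c_out * k * k


def depthwise_separable_params(c_in, c_out, k):
    """One k x k depthwise filter per input channel plus a 1 x 1 pointwise mix."""
    return c_in * k * k + c_in * c_out


def effective_kernel(k, rate):
    """Spatial extent covered by a k x k kernel with dilation `rate`."""
    return k + (k - 1) * (rate - 1)


# Hypothetical channel width, used only to make the comparison concrete.
CHANNELS = 64
standard_3x3 = conv_params(CHANNELS, CHANNELS, 3)
standard_5x5 = conv_params(CHANNELS, CHANNELS, 5)
separable_5x5 = depthwise_separable_params(CHANNELS, CHANNELS, 5)

# Assumed 3 x 3 base kernel with the dilation rates quoted in the text.
extents = {rate: effective_kernel(3, rate) for rate in (1, 2, 4)}

print(standard_3x3, standard_5x5, separable_5x5)  # 36864 102400 5696
print(extents)  # {1: 3, 2: 5, 4: 9}
```

Under these assumptions, the 5 × 5 depthwise separable layer covers a larger window than a standard 3 × 3 layer with fewer weights, and the three dilation rates span 3, 5, and 9 pixels without adding parameters.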

As green and precision agriculture develop rapidly, the findings of this study have broad application potential. The proposed framework can be applied to large-scale field monitoring systems and agricultural informatization platforms, and it also serves as a transferable architectural reference for other fine-grained visual tasks, such as instar identification in other insects and plant disease classification.

The remainder of this paper is organized as follows.

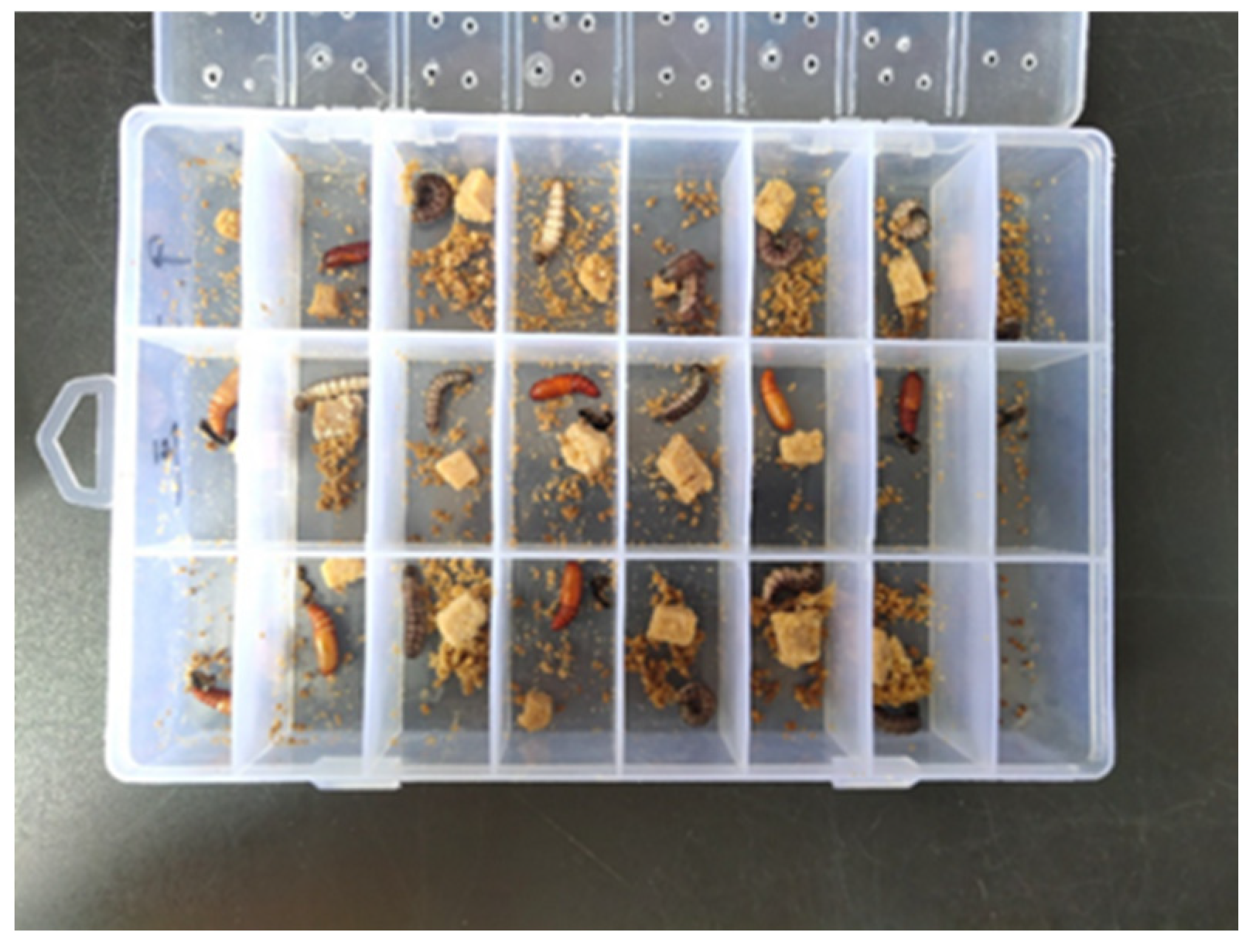

Section 2 provides a detailed description of the data acquisition and preprocessing procedures used in this study, including data sources, larval image collection methods, preprocessing strategies, and dataset partitioning. It also elaborates on the construction of the proposed model, covering the ResNet50 backbone, large-kernel convolution module, dilated convolution, the improved self-attention mechanism, and the overall network architecture.

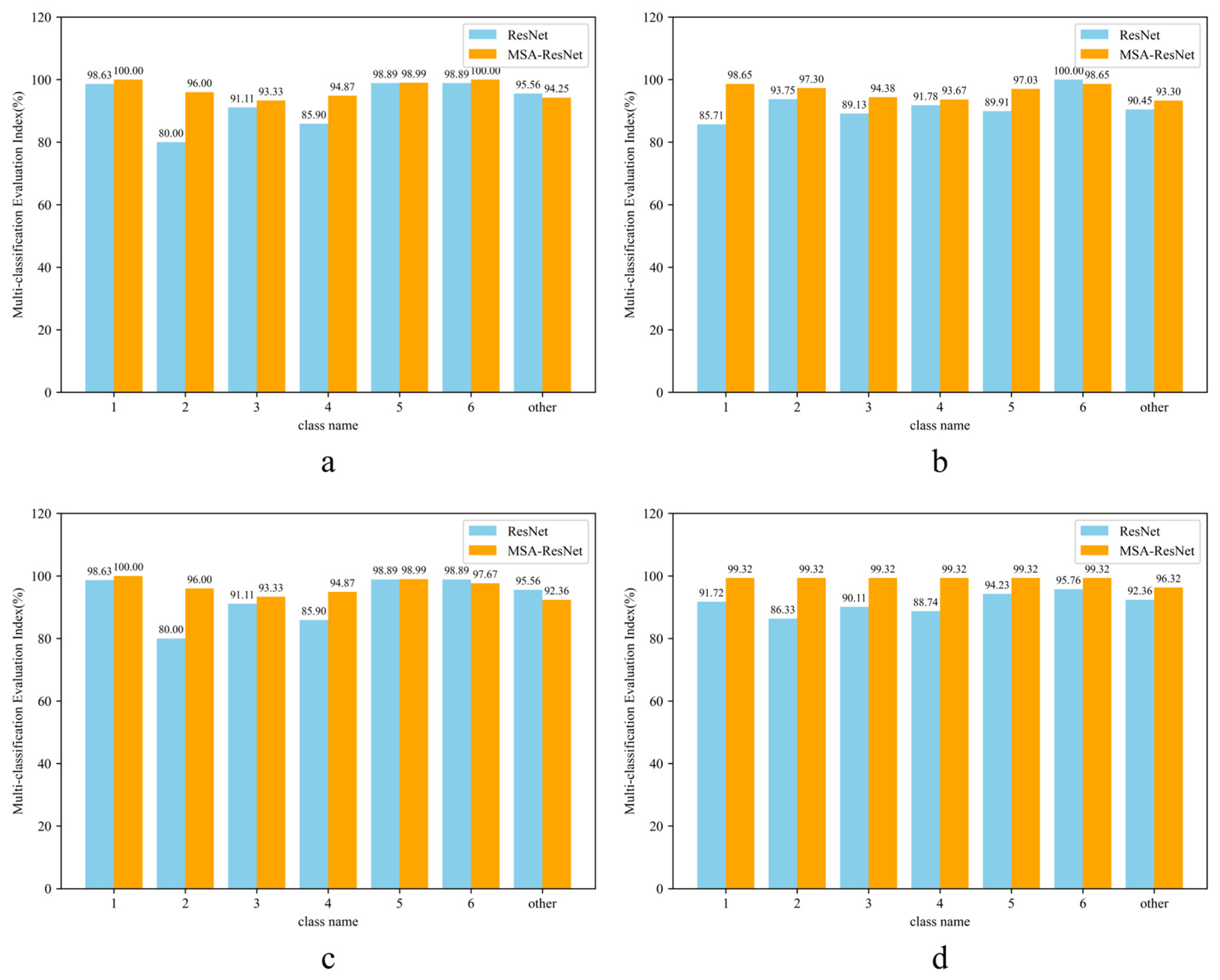

Section 3 presents comparative experiments and ablation studies to validate the model’s performance, analyzes the contribution of each improved module, and completes the performance evaluation.

Section 4 discusses the advantages of the proposed improvements, the underlying mechanisms of each module, and potential directions for future research.

Section 5 summarizes the main findings and conclusions of the entire study.