A Multi-Level Segmentation Method for Mountainous Camellia oleifera Plantation with High Canopy Closure Using UAV Imagery

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Area

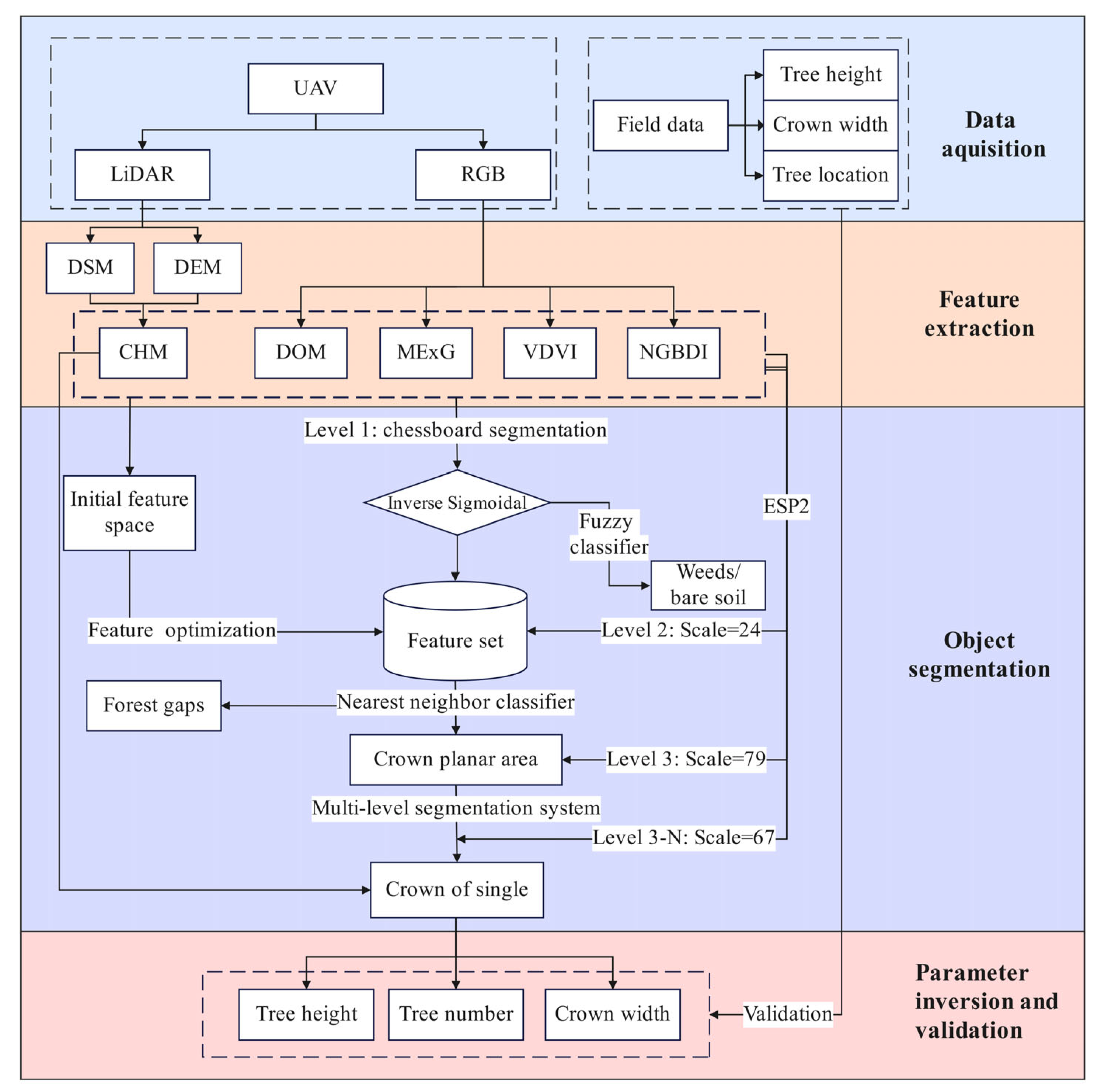

2.2. Technical Workflow

- (1) Data acquisition

- (2) Feature extraction

- (3) Object-based fuzzy classification (first-level segmentation)

- (4) Object-based nearest-neighbor classification (second-level segmentation)

- (5) Multi-level segmentation system (third-level segmentation)

- (6) Parameter extraction and accuracy validation

2.3. Data Acquisition

2.3.1. UAV Mission Configuration

2.3.2. Field Data Acquisition

2.4. Data Processing and Feature Analysis

2.4.1. Canopy Height Model (CHM) Generation

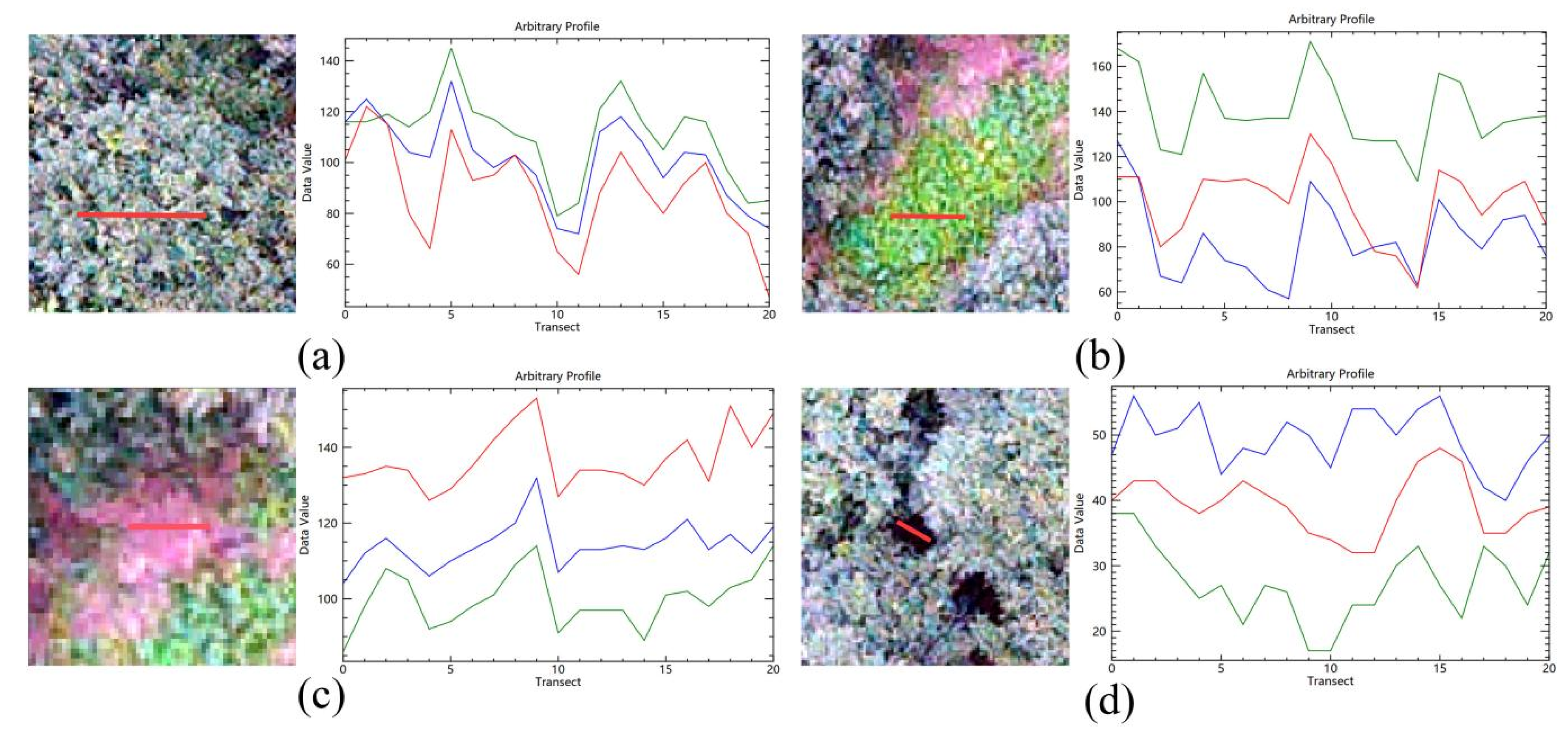

2.4.2. RGB and Vegetation Index Analysis

2.5. Object-Based Fuzzy Classification

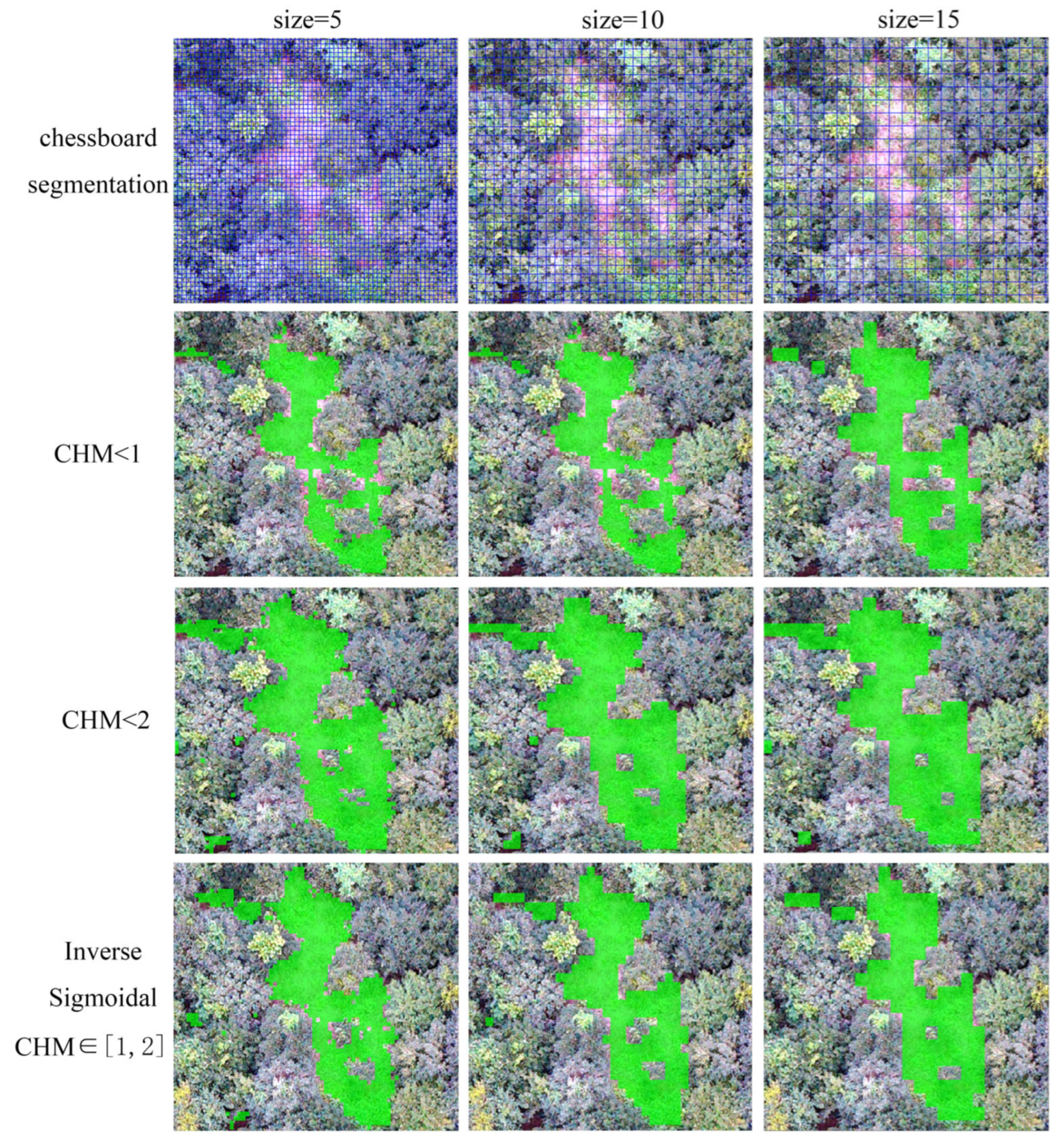

2.5.1. Chessboard Segmentation

2.5.2. Fuzzy Classification

2.6. Object-Oriented Nearest-Neighbor Classification

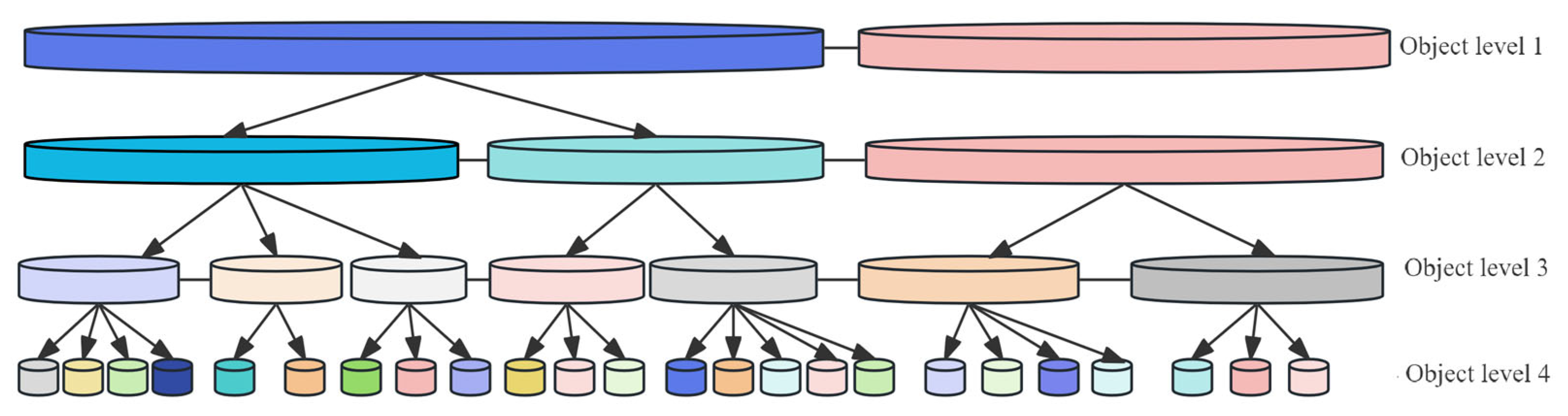

2.6.1. Multi-Resolution Segmentation System

2.6.2. Multi-Level Segmentation System

2.6.3. Initial Feature Construction and Optimization

2.6.4. Nearest-Neighbor Classification

2.7. Parameter Extraction and Accuracy Evaluation

2.7.1. Extraction of Tree Height and Crown Width Parameters

2.7.2. Accuracy Evaluation

3. Results

3.1. Spectral Characteristic Analysis of Ground Features

3.2. Construction of the Image Feature Space

3.2.1. Vegetation Indices

3.2.2. Canopy Height Model

3.3. Identification of Bare Soil/Weeds

3.4. Determination of Optimal Segmentation Parameters

3.4.1. Optimal Segmentation Scale

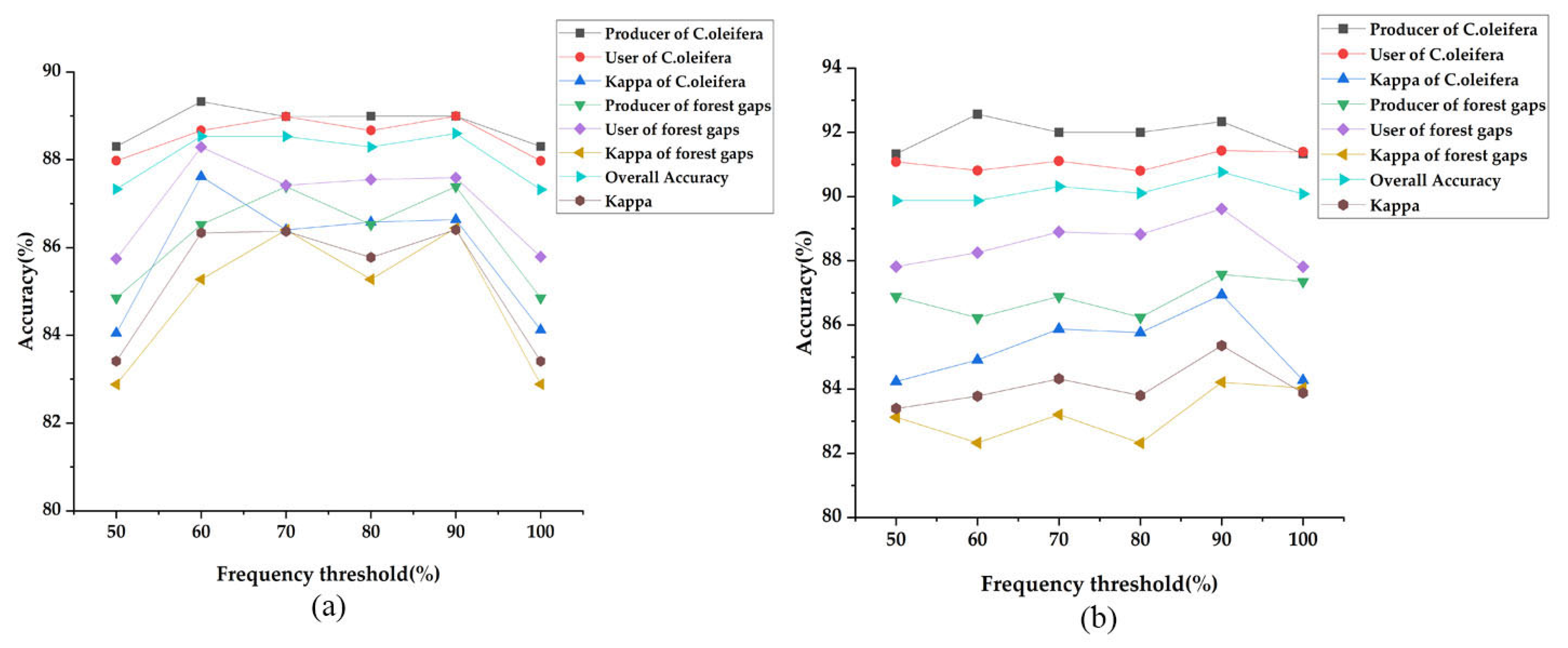

3.4.2. Parameterization for Forest Gap Segmentation

3.4.3. Parameterization for C. oleifera Segmentation

3.5. Nearest-Neighbor Classification with Multi-Feature Fusion

3.5.1. Feature Optimization and Frequency Statistics

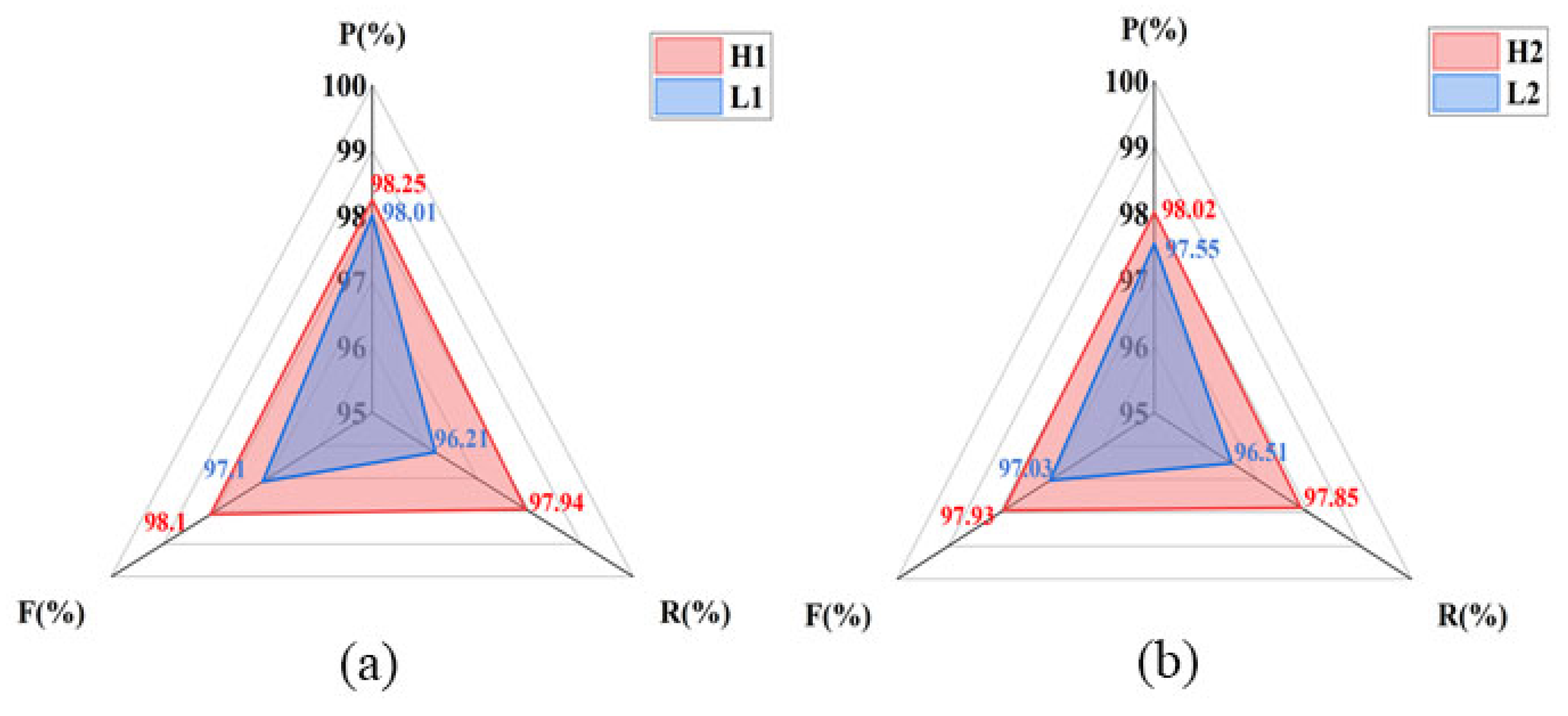

3.5.2. Feature Subset Evaluation and Generalization Validation

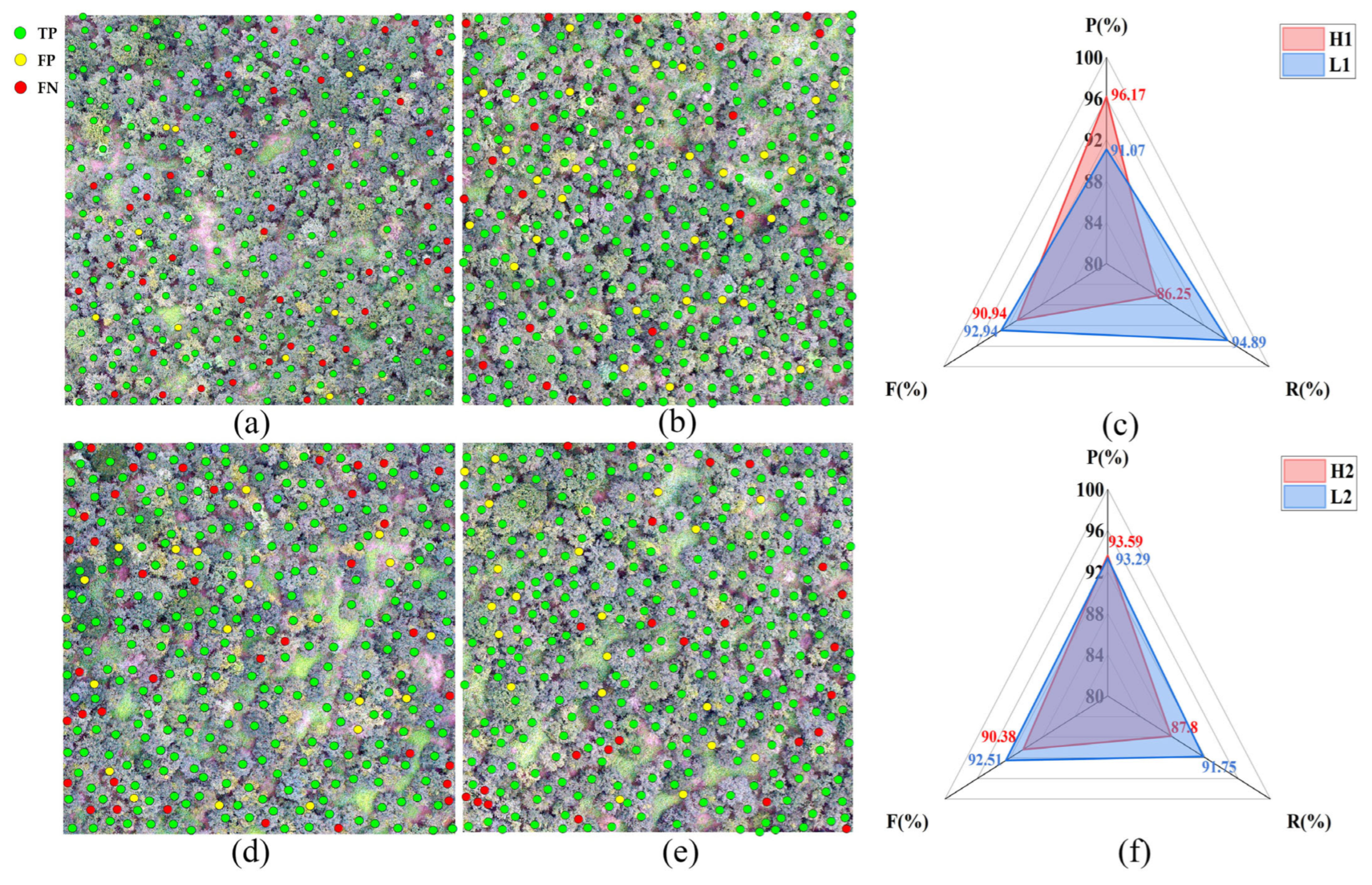

3.6. Accuracy Assessment of Canopy Identification Results

3.7. Object-Oriented Crown Segmentation

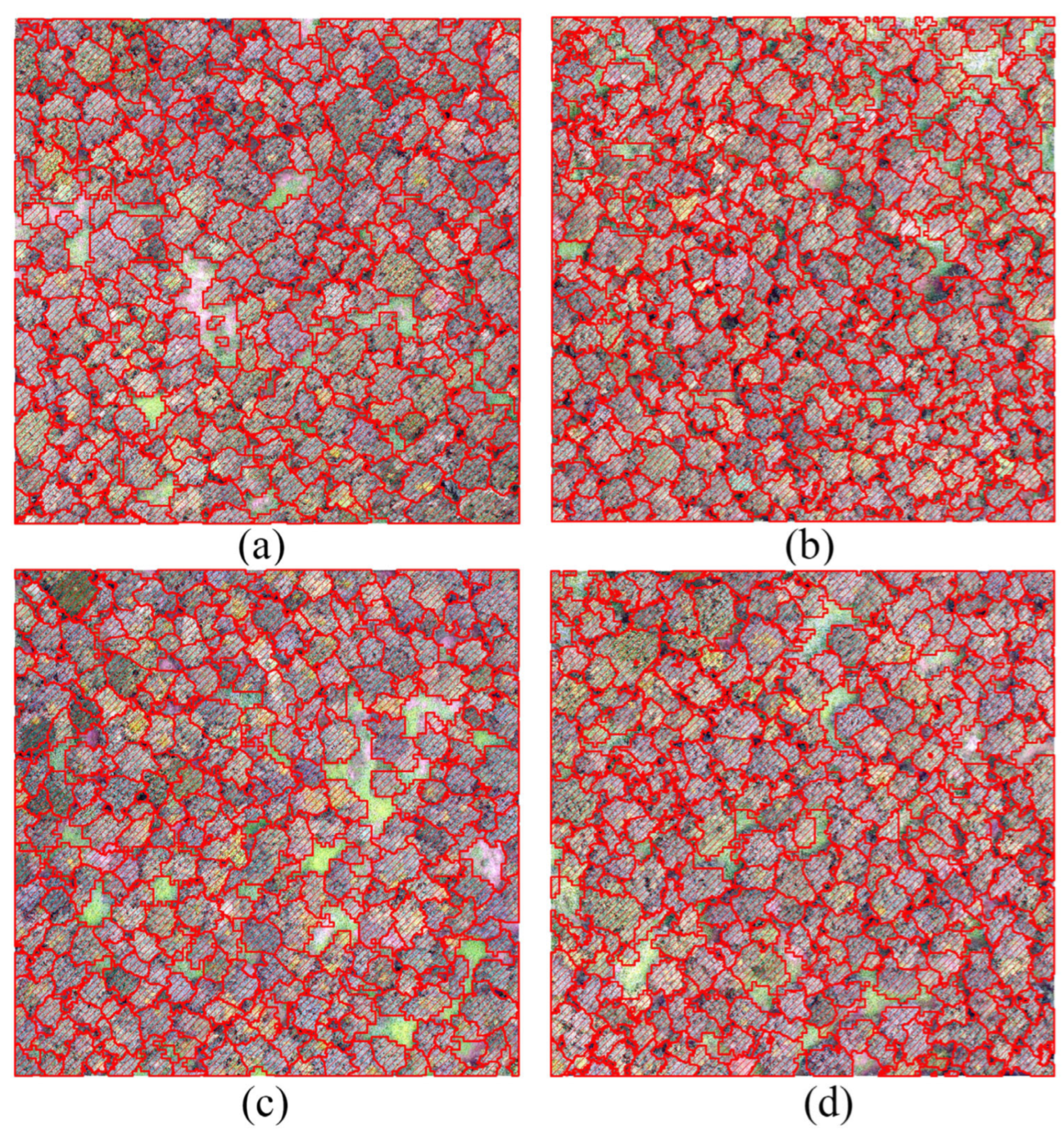

3.7.1. Single-Level Crown Segmentation

3.7.2. Multi-Level Segmentation Strategy and Accuracy Improvement

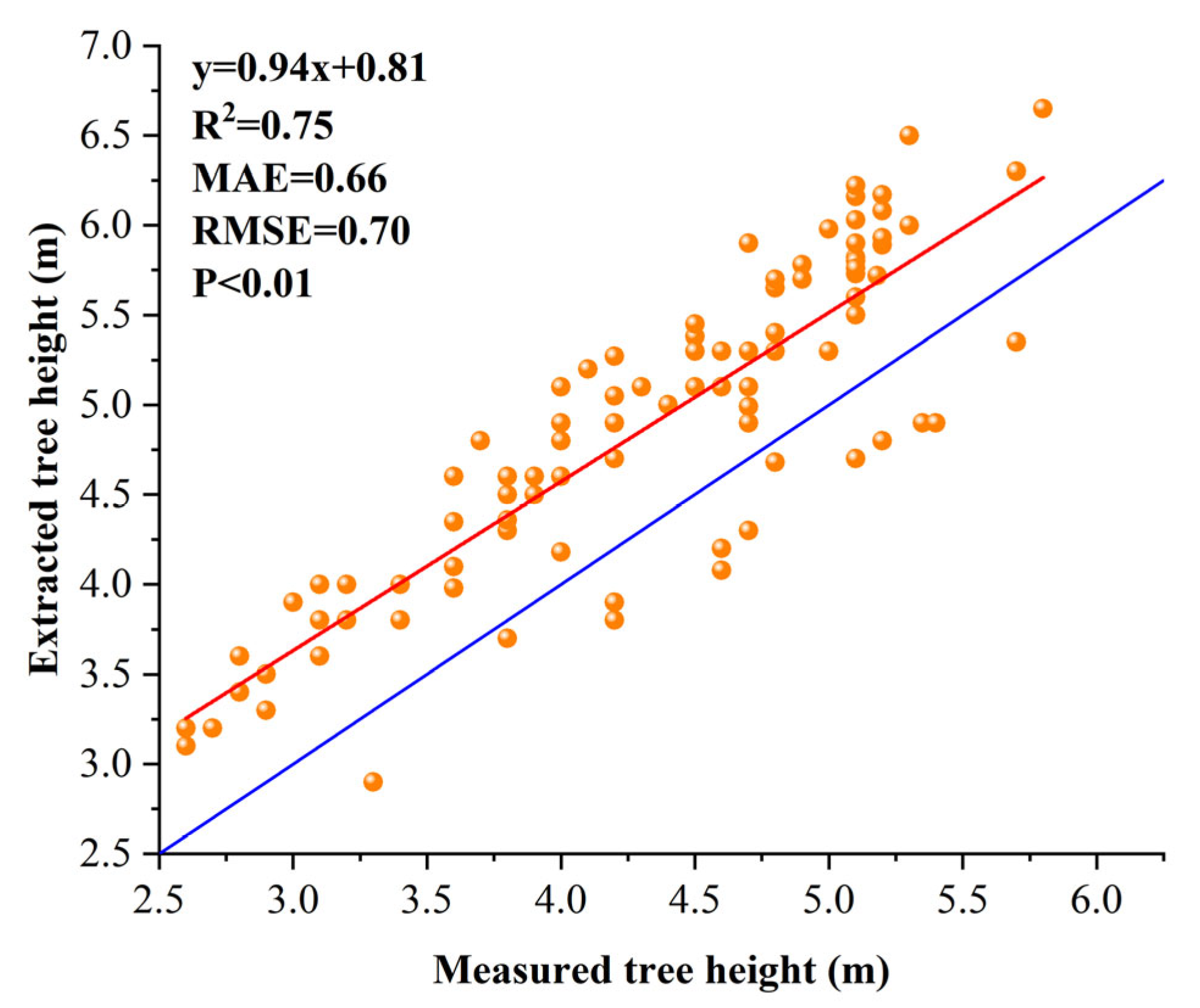

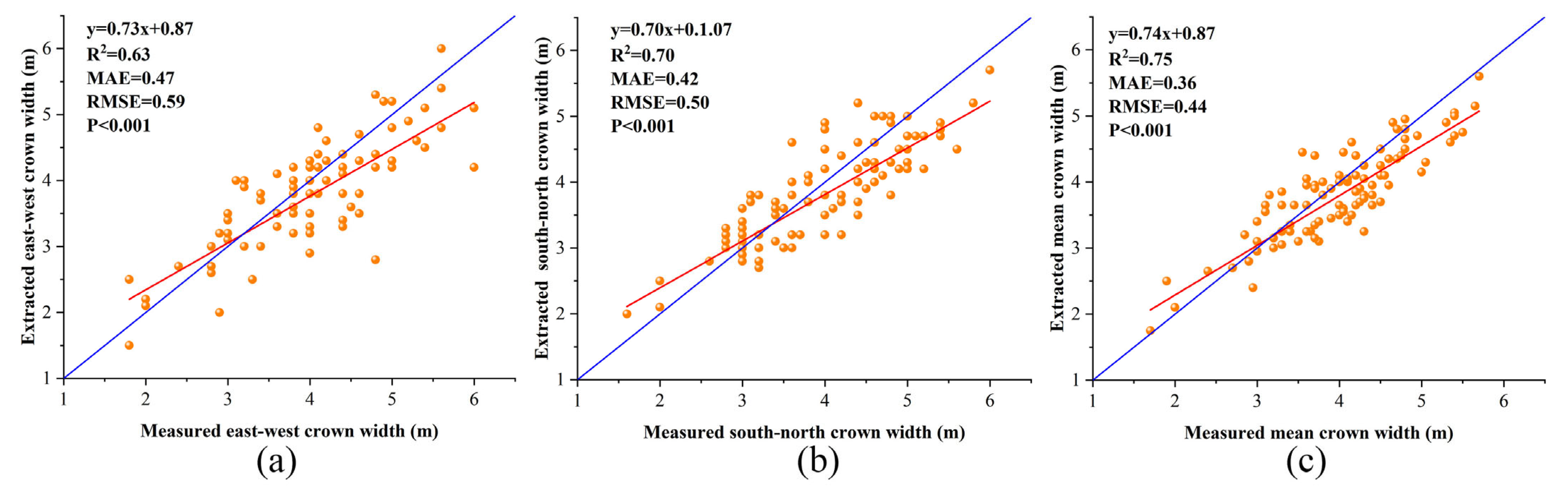

3.8. Extraction and Validation of Tree Height and Crown Width Parameters

- (1)

- Tree Height Extraction

- (2)

- Crown Width Parameter Extraction

- (3)

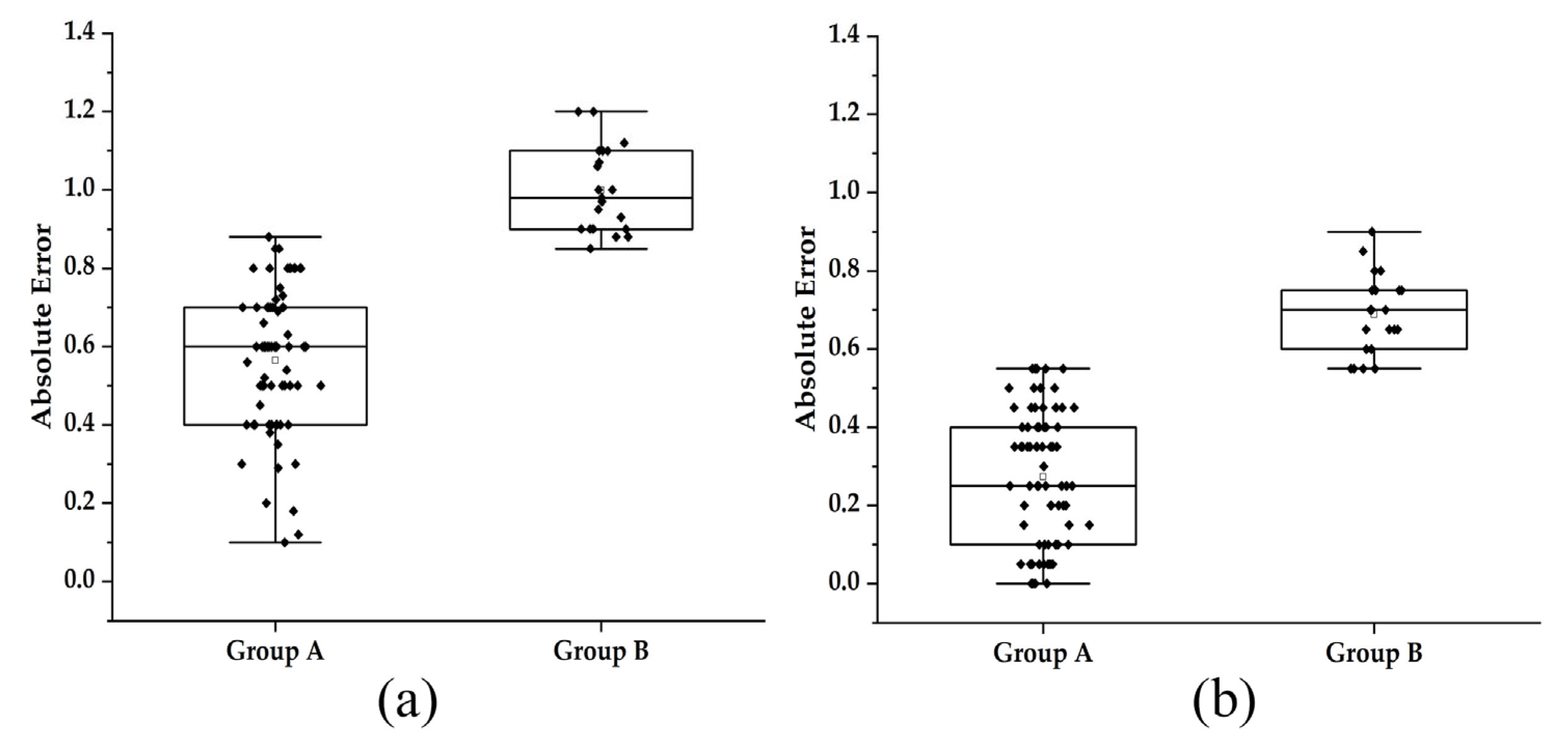

- Impact of Segmentation Errors on Forest Parameter Estimation

4. Discussion

4.1. Effectiveness and Adaptability of the Method in Mountainous Environments

- (1) Effectiveness of Background Removal and Parameter Sensitivity

- (2) Effectiveness of Multi-Feature Fusion and Optimization for Object Separation

4.2. Advantages of Multi-Level Segmentation for High-Canopy-Closure Stands

4.3. Accuracy Limitations and Error Sources

4.4. Limitations and Future Work

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- He, X.S.; Zhou, W.C.; Qiu, F.Y.; Gong, C.; Xu, L.C.; Xiao, X.X.; Wang, Y.J. Responses of different Camellia oleifera varieties to drought stress and the comprehensive evaluation of their drought resistance. J. Cent. South Univ. For. Technol. 2023, 43, 1–14.

- Zhang, D.S.; Jin, Q.Z.; Xue, Y.L.; Zhang, D.; Wang, X.G. Nutritional value and adulteration identification of oil-tea Camellia seed oil. China Oils Fats 2013, 38, 47–50.

- National Forestry and Grassland Administration National Park Administration. Available online: https://www.forestry.gov.cn/lyj/1/ggjjlcy/20250422/622027.html (accessed on 10 September 2025).

- The People’s Government of Guangxi Zhuang Autonomous Region. Available online: http://www.gxzf.gov.cn/mlgxi/gxrw/zrdl/t20886294.shtml (accessed on 10 September 2025).

- Zhou, X.C.; Wang, P.; Tan, F.L.; Chen, C.C.; Huang, H.Y.; Lin, Y. Biomass estimation of high-density forest harvesting based on Multi-temporal UAV Images. Trans. Chin. Soc. Agric. Mach. 2023, 54, 168–177.

- He, B.K.; Zhu, W.Q.; Shi, P.J.; Zhang, H.; Liu, R.Y.; Yang, X.Y.; Zhao, C.L. A Fine-Scale Remote Sensing Estimation Method for Fractional Vegetation Cover in Complex Terrain Areas: A Case Study in the Qinghai-Tibet Plateau Mountainous Regions. Acta Ecol. Sin. 2024, 44, 9039–9052.

- Zhang, H.L.; Wang, Y.; Hu, M.; Zhang, Y.Z.; Zhang, J.; Zhan, B.S.; Liu, X.M.; Luo, W. Application of multispectral index features based on sigmoid function normalization in remote sensing identification and sample migration study of Camellia oleifera Forest. Spectrosc. Spectr. Anal. 2025, 45, 1159–1167.

- Chen, Z.J.; Cheng, G.; Pu, Y.K.; Huang, W.; Chen, J.H.; Li, W.Z. Single tree parameters extraction of broad-leaved forest based on UAV tilting photography. For. Resour. Manag. 2022, 1, 132–141.

- Li, R.; Sun, Z.; Xie, Y.H.; Li, W.H.; Zhang, Y.L.; Sun, Y.J. Extraction of tree crown parameters of high-density pure Cunninghamia lanceolata plantations by combining the U-net model and watershed algorithm. Chin. J. Appl. Ecol. 2023, 34, 1024–1034.

- Pourreza, M.; Moradi, F.; Khosravi, M.; Deljouei, A.; Vanderhoof, M.K. GCPs-free photogrammetry for estimating tree height and crown diameter in Arizona Cypress plantation using UAV-Mounted GNSS RTK. Forests 2022, 13, 1905.

- Panagiotidis, D.; Abdollahnejad, A.; Peter, S.; Chiteculo, V. Determining tree height and crown diameter from high-resolution UAV imagery. Int. J. Remote Sens. 2017, 38, 2392–2410.

- Wu, Y.D.; Han, H. Extraction of forest canopy height and width using UAV aerial survey data in the Gannan Plateau. J. Gansu Agric. Univ. 2024, 59, 268–276.

- Wang, J.; Zhang, C.; Chen, Q.; Li, Y.H.; Peng, X.; Bai, M.X.; Xu, Z.Y.; Liu, H.D.; Chen, Y.F. The method of extracting information of Cunninghamia lanceolata crown combined with RGB and LiDAR based on UAV. J. Southwest For. Univ. 2022, 42, 133–141.

- Jin, Z.M.; Cao, S.S.; Wang, L.; Sun, W. A method for individual tree-crown extraction from UAV remote sensing image based on U-net and water shed algorithm. J. Northwest For. Univ. 2020, 35, 194–204.

- Li, Y.D.; Cao, M.L.; Li, C.Q.; Feng, Z.K.; Jia, S.H. Crown segmentation from UAV visible light DOM based on level set method. Trans. Chin. Soc. Agric. Eng. 2021, 37, 60–65.

- Bai, M.X.; Zhang, C.; Chen, Q.; Wang, J.; Li, Y.H.; Shi, X.R.; Tian, X.Y.; Zhang, Y.W. Study on the extraction of individual tree height based on UAV visual spectrum remote sensing. For. Resour. Manag. 2021, 1, 164–172.

- Wang, W.Y.; Li, F.; Hasituya; Hashengaowa. Information extraction of agricultural greenhouses types based on object-oriented multi-level multi-scale segmentation. J. China Agric. Univ. 2024, 29, 223–236.

- Li, X.C.; Long, J.P. Research on UAV Image Enhancement Based on Visible Light Vegetation Index. Cent. South For. Inventory Plan. 2024, 43, 38–43.

- Gao, F.; Shi, H.J.; Shui, J.F.; Zhang, Y.; Guo, M.H.; Wen, Z.M. Structural parameter extraction of artificial forest in northern Shaanxi based on UAV high-resolution image. Sci. Soil Water Conserv. 2021, 19, 1–12.

- Wu, N.S.; Wang, J.X.; Zhang, Y.; Yuan, M.T.; Zhang, Q.; Gao, C.Y. Determining tree species and crown width from unmanned aerial vehicle imagery in hilly loess region of west Shanxi, China: A case study from Caijiachuan watershed. Acta Agric. Zhejiangensis 2021, 33, 1505–1518.

- Li, L.; Mu, X.; Chianucci, F.; Qi, J.; Jiang, J.; Zhou, J.; Chen, L.; Huang, H.; Yan, G.; Liu, S. Ultrahigh-resolution boreal forest canopy mapping: Combining UAV imagery and photogrammetric point clouds in a deep-learning-based approach. Int. J. Appl. Earth Obs. 2022, 107, 102686.

- Ferro, M.V.; Sørensen, C.G.; Catania, P. Comparison of different computer vision methods for vineyard canopy detection using UAV multispectral images. Comput. Electron. Agric. 2024, 225, 109277.

- Hao, Z.; Lin, L.; Post, C.J.; Mikhailova, E.A.; Li, M.; Chen, Y.; Yu, K.; Liu, J. Automated tree-crown and height detection in a young forest plantation using mask region-based convolutional neural network (Mask R-CNN). ISPRS J. Photogramm. 2021, 178, 112–123.

- Puletti, N.; Guasti, M.; Innocenti, S.; Cesaretti, L.; Chiavetta, U. A semi-automatic approach for tree crown competition indices assessment from UAV LiDAR. Remote Sens. 2024, 16, 2576.

- Zhu, B.D.; Luo, H.B.; Jin, J.; Yue, C.R. Optimization of Individual Tree Segmentation Methods for High Canopy Density Plantation Based on UAV LiDAR. Sci. Silvae Sin. 2022, 58, 48–59.

- Li, P.H.; Shen, X.; Dai, J.S.; Cao, L. Comparisons and Accuracy Assessments of LiDAR-Based Tree Segmentation Approaches in Planted Forests. Sci. Silvae Sin. 2018, 54, 127–136.

- Fu, Y.; Niu, Y.; Wang, L.; Li, W. Individual-tree segmentation from UAV–LiDAR data using a region-growing segmentation and supervoxel-weighted fuzzy clustering approach. Remote Sens. 2024, 16, 608.

- Quan, Y.; Li, M.; Zhen, Z.; Hao, Y.; Wang, B. The feasibility of modelling the crown profile of Larix olgensis using Unmanned Aerial Vehicle laser scanning data. Sensors 2020, 20, 5555.

- Wei, X.B.; Li, Z.Q.; Chen, S.G.; Liu, R. Single tree segmentation of mangrove trees of different species based on UAV-LiDAR. Sci. Technol. Eng. 2024, 24, 963–969.

- Wang, X.Y.; Huang, Y.; Xing, Y.Q.; Li, D.J.; Zhao, X.W. The single tree segmentation of UAV high-density LiDAR point cloud data based on coniferous plantations. J. Cent. South Univ. For. Technol. 2022, 42, 66–77.

- Huang, B.Q.; Cao, B.; Yue, C.R.; Zhou, Q. Research on a method for individual tree segmentation for mountains coniferous forests using UAV-LiDAR technology. Cent. South For. Inventory Plan. 2024, 43, 34–39+48.

- Li, H.K.; Wang, J.; Zhou, Y.B.; Long, B.P. Extraction of Camellia oleifera planting areas in southern hilly area by combining multi-features of time-series Sentinel data. Trans. Chin. Soc. Agric. Mach. 2024, 55, 241–251.

- Meng, H.R.; Li, C.J.; Zheng, X.Y.; Gong, Y.S.; Liu, Y.; Pan, Y.C. Research on extraction Camellia oleifera by integrating spectral, texture and time sequence remote sensing information. Spectrosc. Spectr. Anal. 2023, 43, 1589–1597.

- Gao, X.X.; Wang, T.H.; Chen, L.S.; Liu, S.H.; Shuai, X.Q.; Xie, A. Study on remote sensing extracting Camellia oleifera forest based on GF-6 multispectral imagery. Guizhou Agric. Sci. 2024, 52, 117–125.

- He, C.R.; Fan, Y.L.; Tan, B.X.; Yu, H.; Shen, M.T.; Huang, Y.F. Extraction and identification of Camellia oleifera plantations based on multi-feature combination of “Zhuhai-1” satellite images. J. Northwest For. Univ. 2024, 39, 61–70.

- Liu, J.Y.; Gao, Z.C.; Liu, H.Y.; Yin, J.Q.; Luo, Y.Y. Research on sorting task of Camellia oleifera fruit based on YOLOv8 improved algorithm. J. For. Eng. 2025, 10, 120–127.

- Xiao, S.P.; Zhao, Q.Y.; Zeng, J.Y.; Peng, Z.R. Camellia oleifera fruits occlusion detection and counting in complex environments based on improved YOLO–DCL. Trans. Chin. Soc. Agric. Mach. 2024, 55, 318–326+480.

- Li, Q.S.; Kang, L.C.; Yao, H.H.; Li, Z.F.; Liu, M.H. Recognition method of Camellia oleifera fruit in natural environment based on improved YOLOv4–Tiny. J. Chin. Agric. Mech. 2023, 44, 224–230.

- Yin, X.M.; Peng, S.F.; Cheng, J.Y.; Chen, Q.M.; Zhang, R.Q.; Mo, D.K.; Wei, W.; Yan, E.P. Identification of Camellia osmantha fruit based on deep learning. Non-Wood For. Res. 2023, 41, 70–81.

- Chen, F.J.; Chen, C.; Zhu, X.Y.; Shen, D.Y.; Zhang, X.W. Detection of Camellia oleifera fruit maturity based on improved YOLOv7. Trans. Chin. Soc. Agric. Eng. 2024, 40, 177–186.

- Yu, J.; Yan, E.; Yin, X.; Song, Y.; Wei, W.; Mo, D. Automated extraction of Camellia oleifera crown using unmanned aerial vehicle visible images and the ResU-Net deep learning model. Front. Plant Sci. 2022, 13, 958940.

- Wu, J.; Peng, S.F.; Jiang, F.G.; Tang, J.; Sun, H. Extraction of Camellia oleifera crown width based on the method of optimized watershed with multi-scale markers. Chin. J. Appl. Ecol. 2021, 32, 2449–2457.

- Gao, S.; Yuan, X.P.; Gan, S.; Yang, Y.F.; Li, X. Application of airborne LiDAR and UAV image fusion for complicated terrain. Bull. Surv. Mapp. 2021, 7, 65–69.

- Hu, Z.Y.; Shan, L.; Chen, X.Y.; Yu, K.Y.; Liu, J. Individual Tree Segmentation of UAV-LiDAR Based on the Combination of CHM and DSM. Sci. Silvae Sin. 2024, 60, 14–24.

- Sun, G.X.; Li, Y.B.; Wang, X.C.; Hu, G.Y.; Zhang, Y. Image segmentation algorithm for greenhouse cucumber canopy under various natural lighting conditions. Int. J. Agr. Bio. Eng. 2016, 9, 130–138.

- Wang, X.Q.; Wang, M.M.; Wang, S.Q.; Wu, Y.D. Extraction of vegetation information from visible unmanned aerial vehicle images. Trans. Chin. Soc. Agric. Eng. 2015, 31, 152–159.

- Li, P.F.; Guo, X.P.; Gu, Q.M.; Zhang, X.; Feng, C.D.; Guo, G. Vegetation coverage information extraction of mine dump slope in Wuhai City of Inner Mongolia based on visible vegetation index. J. Beijing For. Univ. 2020, 42, 102–112.

- Zong, H.L.; Yuan, X.P.; Gan, S.; Yang, M.L.; Lv, J.; Zhang, X.L. Spatial Distribution Mapping of Debris Flow Site in Xiaojiang River Basin Based on the GEE Platform. Spectrosc. Spectr. Anal. 2025, 45, 1045–1060.

- Zheng, X.; Qiu, C.X.; Li, C.J.; Zhou, J.P.; Huai, H.J.; Wang, J.Y.; Zhang, Q.Y. Object-oriented subfield boundary extraction from UAV remote sensing images. Sci. Technol. Innov. 2022, 3, 1–3.

- Goutte, C.; Gaussier, E. A probabilistic interpretation of precision, recall and F-score, with implication for evaluation. In Advances in Information Retrieval; Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2005; p. 952.

- Sokolova, M.; Japkowicz, N.; Szpakowicz, S. Beyond accuracy, F-score and ROC: A family of discriminant measures for performance evaluation. In AI 2006: Advances in Artificial Intelligence; Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2006; pp. 1015–1021.

- Zheng, M.; Bao, Y.Y.; Li, J.X.; Li, X.L.; Wang, L.; Zhang, J. Research and application of identification of patchily degraded alpine meadows based on UAV imagery. Acta Ecol. Sin. 2026, 1, 1–15, Online First.

- Sun, Z.; Pan, L.; Sun, Y.J. Extraction of tree crown parameters from high-density pure Chinese fir plantations based on UAV image. J. Beijing For. Univ. 2020, 42, 20–26.

- Xie, Y.H.; Jing, X.H.; Sun, Z.; Ding, Z.D.; Li, R.; Li, H.W.; Sun, Y.J. Tree crown extraction of UAV remote sensing high canopy density stand based on instance segmentation. For. Res. 2022, 35, 14–21.

- Yan, P.F.; Ming, D.P. Segmentation of high spatial resolution remotely sensed data using watershed with self-adaptive parameterization. Remote Sens. Technol. Appl. 2018, 33, 321–330.

- Quan, Y.; Li, M.Z.; Zhen, Z.; Hao, Y.S. Modeling crown characteristic attributes and profile of Larix olgensis using UAV-borne LiDAR. J. Northeast For. Univ. 2019, 47, 52–58.

- Harders, L.O.; Ufer, T.; Wrede, A.; Hussmann, S.H. UAV-based real-time weed detection in horticulture using edge processing. J. Electron. Imaging 2023, 32, 052405.

- Long, K.Y.; Long, J.P.; Lin, H.; Sun, H.; Xu, C.; Huang, Z.J. Single-tree volume estimation using UAV-based crown segmentation and multi-source feature fusion. Trans. Chin. Soc. Agric. Eng. 2025, 41, 221–230.

| Research Area | Number in Figure 1 | Standard Plot | Crown Size (m²) | Canopy Density | Mean Elevation (m) | Slope (°) | Number of Trees |
|---|---|---|---|---|---|---|---|
| Experimental area | Figure 1d | H1 | 3182.94 | 0.88 | 189.08 | 29.45 | 320 |
| Experimental area | Figure 1e | L1 | 2969.65 | 0.82 | 140.95 | 31.38 | 333 |
| Verification area | Figure 1f | H2 | 3049.05 | 0.85 | 168.63 | 30.61 | 301 |
| Verification area | Figure 1g | L2 | 2985.67 | 0.83 | 139.25 | 29.32 | 303 |

| Vegetation Index | Equation | Advantage | Reference |
|---|---|---|---|
| Modified Excess Vegetation Index (MExG) | | It improves soil-background resistance. | [45] |
| Visible-band Difference Vegetation Index (VDVI) | | It achieves high-precision vegetation extraction. | [46] |
| Normalized Green-Blue Difference Index (NGBDI) | | It enables near-binary segmentation for species identification. | [47] |
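The Equation column of the table above did not survive extraction. As a rough sketch only, the three indices can be computed with their commonly published definitions: MExG = 1.262g − 0.884r − 0.311b, VDVI = (2G − R − B)/(2G + R + B), and NGBDI = (G − B)/(G + B). It is an assumption here that MExG is evaluated on chromatic coordinates (each band divided by R + G + B); the function names and the zero-denominator convention are hypothetical and not taken from the article.

```python
def _safe_ratio(numerator, denominator):
    """Return numerator/denominator, or 0.0 when the denominator is zero
    (a convention chosen for this sketch, not one stated in the article)."""
    return numerator / denominator if denominator != 0 else 0.0


def visible_band_indices(r, g, b):
    """Compute MExG, VDVI and NGBDI for one pixel or one object mean.

    r, g, b are band values on any common scale (e.g. 0-255 digital numbers).
    """
    total = r + g + b
    # Chromatic coordinates (assumed normalisation for MExG).
    rn = _safe_ratio(r, total)
    gn = _safe_ratio(g, total)
    bn = _safe_ratio(b, total)

    mexg = 1.262 * gn - 0.884 * rn - 0.311 * bn
    vdvi = _safe_ratio(2 * g - r - b, 2 * g + r + b)
    ngbdi = _safe_ratio(g - b, g + b)
    return {"MExG": mexg, "VDVI": vdvi, "NGBDI": ngbdi}


if __name__ == "__main__":
    # Mean R, G, B of C. oleifera as tabulated in the spectral statistics.
    for name, value in visible_band_indices(93.72, 105.50, 95.15).items():
        print(f"{name}: {value:.3f}")
```

Applied to the tabulated mean R, G, B values of C. oleifera, this sketch gives values close to the tabulated index means, although an index of band means is not in general identical to the mean of per-pixel indices.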

| Type | Feature Variable | Total |
|---|---|---|
| Mean | Max. diff., brightness, mean of every band | 9 |
| Standard deviation | Standard deviation of every band | 7 |
| HSI | Hue, saturation, intensity | 3 |
| Geometry Extent | Area, border length, length, length/width, number of pixels, rel. border to image border, width | 7 |
| Geometry Shape | Asymmetry, border index, compactness, density, elliptic fit, main direction, radius of largest enclosed ellipse, radius of smallest enclosing ellipse, rectangular fit, roundness, shape index | 11 |
| Texture | GLCM Ang. 2nd moment, GLCM contrast, GLCM correlation, GLCM dissimilarity, GLCM entropy, GLCM homogeneity, GLCM mean, GLCM stdDev | 8 |

| Ground Object | R Mean | R Std | R CV (%) | R CRD (%) | G Mean | G Std | G CV (%) | G CRD (%) | B Mean | B Std | B CV (%) | B CRD (%) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| C. oleifera | 93.72 | 29.74 | 31.73 | - | 105.50 | 29.02 | 27.50 | - | 95.15 | 25.34 | 26.63 | - |
| Bare soil | 140.01 | 37.50 | 26.78 | 123.45 | 104.32 | 34.07 | 32.66 | 3.46 | 117.24 | 33.45 | 28.53 | 66.04 |
| Weeds | 97.06 | 28.29 | 29.15 | 11.78 | 137.99 | 25.22 | 18.27 | 128.85 | 93.17 | 19.87 | 21.33 | 9.98 |
| Forest gaps | 56.81 | 27.26 | 47.98 | 135.40 | 58.35 | 28.18 | 48.29 | 167.35 | 62.81 | 23.39 | 37.24 | 138.25 |
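The definitions of CV and CRD are not preserved in this extract. The sketch below assumes CV is the coefficient of variation (Std / Mean × 100) and that CRD measures each class's distance from C. oleifera as |Mean − Mean of C. oleifera| / Std × 100. The CRD form is inferred from the tabulated R, G, B values rather than quoted from the article, and the function names are hypothetical.

```python
def cv_percent(mean, std):
    """Coefficient of variation in percent: spread relative to the mean."""
    return abs(std / mean) * 100.0


def crd_percent(mean, std, reference_mean):
    """Assumed CRD in percent: distance of a class mean from the reference
    class mean (here C. oleifera), scaled by the class's own std."""
    return abs(mean - reference_mean) / std * 100.0


if __name__ == "__main__":
    camellia_r_mean, camellia_r_std = 93.72, 29.74  # R band, C. oleifera
    bare_soil_r_mean, bare_soil_r_std = 140.01, 37.50  # R band, bare soil

    print(f"CV  (C. oleifera, R): {cv_percent(camellia_r_mean, camellia_r_std):.2f}")
    print(
        "CRD (bare soil, R):   "
        f"{crd_percent(bare_soil_r_mean, bare_soil_r_std, camellia_r_mean):.2f}"
    )
```

Under this reading, a large CRD together with a small CV marks a band in which a class is both well separated from C. oleifera and internally uniform, for example weeds in the G band.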

| Ground Object | MExG Mean | MExG Std | MExG CV (%) | MExG CRD (%) | VDVI Mean | VDVI Std | VDVI CV (%) | VDVI CRD (%) | NGBDI Mean | NGBDI Std | NGBDI CV (%) | NGBDI CRD (%) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| C. oleifera | 0.07 | 0.05 | 55.26 | - | 0.05 | 0.05 | 83.97 | - | 0.05 | 0.07 | 132.6 | - |
| Bare soil | −0.08 | 0.03 | 57.60 | 202.84 | −0.11 | 0.04 | 52.05 | 298.20 | −0.11 | 0.04 | 101.37 | 231.26 |
| Weeds | 0.18 | 0.06 | 32.41 | 167.56 | 0.18 | 0.06 | 31.72 | 282.90 | 0.18 | 0.06 | 40.99 | 414.63 |
| Forest gaps | −0.06 | 0.10 | 113.92 | 230.64 | −0.13 | 0.11 | 71.61 | 493.49 | −0.16 | 0.12 | 55.28 | 747.05 |

| Frequency (%) | Feature | Number |
|---|---|---|
| 100 | Brightness, mean B, mean G, mean MExG, std CHM, std MExG, std VDVI | 7 |
| [90,100) | HSV hue, main direction, mean CHM, mean VDVI, HSV value | 5 |
| [80,90) | Max. diff. | 1 |
| [70,80) | HSV saturation | 1 |
| [60,70) | Width, mean R | 2 |
| [50,60) | Radius of largest enclosed ellipse, radius of smallest enclosing ellipse, std NGBDI | 3 |

| Experiment Area | Segmentation Level | Filter Condition (m²) |
|---|---|---|
| H1 | Level 3-1 | S ≤ 15.75 |
| H1 | Level 3-2 | 15.75 < S ≤ 25.37 |
| H1 | Level 3-3 | 25.37 < S ≤ 35.08 |
| H1 | Level 3-4 | 35.08 < S ≤ 44.79 |
| H1 | Level 3-5 | 44.79 < S ≤ 54.50 |
| H1 | Level 3-6 | 54.50 < S ≤ 64.21 |
| L1 | Level 3-1 | S ≤ 11.09 |
| L1 | Level 3-2 | 11.09 < S ≤ 17.15 |
| L1 | Level 3-3 | 17.15 < S ≤ 23.21 |
| L1 | Level 3-4 | 23.21 < S ≤ 29.27 |
| L1 | Level 3-5 | 29.27 < S ≤ 35.33 |
| L1 | Level 3-6 | 35.33 < S ≤ 41.39 |
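The area filters above can be read as a lookup from an object's area S to a third-level segmentation sub-level. The following is a minimal sketch of that lookup, assuming the intervals are contiguous so that each sub-level is identified by its upper bound alone. The dictionary, the function name, and returning `None` for areas above the last bound are illustrative choices, not part of the article.

```python
from bisect import bisect_left

# Upper area bounds (m^2) of Level 3-1 ... Level 3-6, per experimental plot.
UPPER_BOUNDS_M2 = {
    "H1": [15.75, 25.37, 35.08, 44.79, 54.50, 64.21],
    "L1": [11.09, 17.15, 23.21, 29.27, 35.33, 41.39],
}


def segmentation_level(plot, area_m2):
    """Return the sub-level label whose filter condition contains area_m2,
    or None when the area exceeds the largest tabulated bound."""
    bounds = UPPER_BOUNDS_M2[plot]
    # bisect_left keeps an area equal to a bound in the lower level (S <= bound).
    index = bisect_left(bounds, area_m2)
    if index == len(bounds):
        return None
    return f"Level 3-{index + 1}"


if __name__ == "__main__":
    for plot, area in [("H1", 15.75), ("H1", 20.0), ("L1", 30.0), ("L1", 50.0)]:
        print(plot, area, segmentation_level(plot, area))
```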

| Parameter | Group | Sample | RMSE | MAE |
|---|---|---|---|---|
| Tree Height | Group A | 75 | 0.59 | 0.56 |
| Tree Height | Group B | 21 | 1.01 | 0.99 |
| Tree Height | Total | 96 | 0.70 | 0.66 |
| Mean Crown Width | Group A | 75 | 0.32 | 0.27 |
| Mean Crown Width | Group B | 21 | 0.70 | 0.69 |
| Mean Crown Width | Total | 96 | 0.44 | 0.36 |
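As a plausibility check on the Total rows, group-level errors can be pooled by sample size: RMSE combines through the sample-weighted mean of squared errors, and MAE through the sample-weighted mean. The sketch below is illustrative only; the function names are hypothetical, and because the inputs are the rounded tabulated values, the pooled results can differ from the reported totals by about 0.01.

```python
import math


def pooled_rmse(groups):
    """Pool per-group RMSE values; groups is a list of (sample_count, rmse)."""
    total_n = sum(n for n, _ in groups)
    sum_squared_error = sum(n * rmse ** 2 for n, rmse in groups)
    return math.sqrt(sum_squared_error / total_n)


def pooled_mae(groups):
    """Pool per-group MAE values; groups is a list of (sample_count, mae)."""
    total_n = sum(n for n, _ in groups)
    return sum(n * mae for n, mae in groups) / total_n


if __name__ == "__main__":
    # (sample count, error) for Group A and Group B, tree height rows.
    height_rmse = pooled_rmse([(75, 0.59), (21, 1.01)])
    height_mae = pooled_mae([(75, 0.56), (21, 0.99)])
    print(f"Tree height: pooled RMSE {height_rmse:.2f}, pooled MAE {height_mae:.2f}")
```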

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Lai, S.; Li, Z.; Ming, D.; Long, J.; Wei, Y.; Zhang, J. A Multi-Level Segmentation Method for Mountainous Camellia oleifera Plantation with High Canopy Closure Using UAV Imagery. Agronomy 2025, 15, 2522. https://doi.org/10.3390/agronomy15112522
