Key Technologies of Robotic Arms in Unmanned Greenhouse

Abstract

1. Introduction

| Reference Paper | Year | Primary Focus | Scope and Limitations | Distinction & Our Contribution |
|---|---|---|---|---|
| Jin & Han [17] | 2024 | Precision agriculture (general) | Broadest scope, covering greenhouses, open fields, and orchards; analysis of greenhouse-specific challenges is not centralized. | Offers breadth but lacks depth on unique greenhouse issues. Our work is exclusively focused on the greenhouse ecosystem for a more in-depth analysis. |
| Bac et al. [18] | 2014 | High-value crop harvesting | A classic review, but focuses on the single task of ‘harvesting’ and lacks coverage of the last decade’s advancements. | Single-task focus. Our work covers the entire workflow from monitoring to operation and integrates the latest progress. |
| Zhao et al. [11] | 2016 | Vision-based control for harvesting robots | Technology-specific focus, providing a deep dive into the ‘vision’ module. | Perspective is limited to a single technology. Our work emphasizes the systemic integration of modules like vision, control, and mobility. |
| Zhang et al. [16] | 2020 | End-effectors for agricultural robots | Component-specific focus, offering a comprehensive overview of ‘gripper’ design and control. | Perspective is limited to a single hardware component. Our work discusses the gripper as part of a larger, integrated system. |
| This Review | 2025 | The unmanned greenhouse as a unique, self-contained operational ecosystem | A focused, in-depth, and systematic exploration of robotic technologies, integration frameworks, and future paradigms specifically for the greenhouse context. | 1. Exclusive focus; 2. Systemic perspective; 3. Forward-looking blueprint. |

2. Robotic Arms Used in Greenhouses

2.1. Types of Robotic Arms Used in Greenhouses

2.2. Mobile Platforms for Robotic Arms

2.2.1. Rail-Mounted Mobile Platforms

2.2.2. UGV Mobile Platforms

3. Key Technologies of the Robotic Arm

3.1. End-Effector for Specific Tasks

| End-Effector Type | Key Features | Application Cases | Developer(s) |
|---|---|---|---|
| Suction Cup | Uses negative pressure to hold the fruit surface without squeezing it. | Sweet pepper harvesting | Lehnert et al. [54] |
| Gripper (Rigid) | Mechanical grasping with rigid fingers; simple structure. | Harvesting of harder fruits such as apples and pears | Yoshida et al. [33] |
| Gripper (Soft) | Uses compliant materials; adaptively envelops the target and distributes contact pressure. | Low-damage harvesting of various fruits and vegetables, such as plums | Brown and Sukkarieh [56] |
| Integrated Grasping & Cutting | Integrates a grasping function with a cutting tool. | Harvesting of sweet peppers, strawberries, and tomatoes | Arad et al. [57] |
| Task-Specific | The end-effector is a specialized tool, e.g., a nozzle or a brush. | Precision spraying, flower pollination | Vatavuk et al. [59]; Yang et al. [23] |
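The suction-cup entry relies on a simple force balance: ideally, the holding force equals the pressure differential across the cup times its effective area, and it should exceed the load on the fruit (its weight plus any pull needed to detach it) by a safety margin. The sketch below is a minimal illustration under stated assumptions: a circular cup, a perfect seal, a quasi-static pull, and a user-chosen safety factor. The function names and numeric values are hypothetical and are not taken from the cited studies.

```python
import math


def suction_holding_force(vacuum_kpa: float, cup_diameter_mm: float) -> float:
    """Ideal holding force in newtons for a circular cup: F = dP * A.

    Assumes a perfect seal and ignores cup deformation and friction,
    so the result is an upper bound on what a real cup can hold.
    """
    area_m2 = math.pi * (cup_diameter_mm / 1000.0) ** 2 / 4.0
    return vacuum_kpa * 1000.0 * area_m2


def min_cup_diameter_mm(load_n: float, vacuum_kpa: float, safety_factor: float = 2.0) -> float:
    """Smallest cup diameter (mm) whose ideal force covers safety_factor * load_n."""
    if vacuum_kpa <= 0 or load_n <= 0:
        raise ValueError("vacuum and load must be positive")
    required_area_m2 = safety_factor * load_n / (vacuum_kpa * 1000.0)
    return 1000.0 * math.sqrt(4.0 * required_area_m2 / math.pi)


if __name__ == "__main__":
    # Hypothetical values: 40 kPa vacuum, 20 mm cup, 5 N combined weight and pull-off load.
    print(f"Holding force: {suction_holding_force(40.0, 20.0):.2f} N")
    print(f"Minimum cup diameter: {min_cup_diameter_mm(5.0, 40.0):.1f} mm")
```

With these hypothetical inputs, a 20 mm cup at 40 kPa gives an ideal force of about 12.6 N. Real cups on curved, waxy fruit skin seal imperfectly, so the ideal figure should be read as an upper bound.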

3.2. Perception Algorithms for Plants and Fruits

3.2.1. Two-Dimensional Target Identification and Localization

3.2.2. Three-Dimensional Spatial Perception and Occlusion Handling

3.2.3. Multi-Modal Perception and Biomimetic Interaction

4. Framework for Integrating Robotic Arms into Unmanned Greenhouses

5. Applications of Robotic Arms in Unmanned Greenhouses

5.1. Robotic Arms for Plant Protection

5.1.1. Robotic Arms for Plant Status Monitoring

5.1.2. Robotic Arms for Targeted Spraying

5.2. Robotic Arms for Fruit and Vegetable Harvesting

| Crop | Robotic Arm Used | DOF | End-Effector | Test Results | Developer(s) |
|---|---|---|---|---|---|
| Strawberry | UR3e | 6 | Pneumatic soft gripper | Success rate: 78%; damage rate: 23% | Ren et al. [22] |
| Strawberry | Denso VS-6556G | 6 | Suction cup + thermal cutter | Success rate: 86%; average time: 31.3 s | Feng et al. [58] |
| Strawberry | Mitsubishi RV-2AJ | 5 | Enclosing gripper | Success rate: 53.6%; average time: 7.5 s | Xiong et al. [75] |
| Strawberry | Noronn | 3 | Enclosing gripper | High-precision mode: 89.2–89.9%; high-density mode: 98.9% | Ge et al. [20] |
| Strawberry | Octinion | 6 | Soft gripper | Average time: 4 s | Octinion Company [76] |

| Crop | Robotic Arm Used | DOF | End-Effector | Test Results | Developer(s) |
|---|---|---|---|---|---|
| Tomato | AUBO i5 | 6 | Pneumatically controlled nylon fingers | Average time: 6.4 s; highest success rate: 84% (right); lowest success rate: 69.4% (front) | Gao et al. [77] |
| Tomato | UR5 | 6 | Three-jaw gripper | Average time: 23 s | Yaguchi et al. [78] |
| Tomato | HRP2W | 7 | Custom shears | Demonstrated the feasibility of humanoid robot harvesting | Chen et al. [32] |
| Tomato | Motoman | 6 | Suction cup + mechanical claw + air-puff solenoid valve | Alternating mode: 70%; composite mode: 83.3% | Liu et al. [79] |
| Tomato | Denso VS-6556G | 6 | Integrated clamping-shearing | Success rate: 83%; average time: 8 s | Feng et al. [80] |
| Tomato | — | 6 | Soft gripper | First-attempt success rate: 86%; second-attempt success rate: 96% | Yu et al. [81] |

| Crop | Robotic Arm Used | DOF | End-Effector | Test Results | Developer(s) |
|---|---|---|---|---|---|
| Sweet Pepper | UR5 | 6 | Suction cup + vibrating blade | Success rate: 76.5% | Lehnert et al. [54] |
| Sweet Pepper | Fanuc LR Mate 200iD | 6 | Mechanical claw + vibrating blade | Average time: 24 s; success rate: 61% | Arad et al. [57] |
| Cucumber | Mitsubishi RV-E2 | 7 | Gripper and suction cup + thermal cutting device | Success rate: 80%; average time: 45 s | Van Henten et al. [82] |
| Cucumber | — | 4 | Soft gripper + cutter | Success rate: 85%; average time: 28.6 s | Ji et al. [83] |
| Raspberry | UR5 | 6 | Silicone gripper | Harvesting success rate: 80% | Junge et al. [84] |
| Eggplant | — | 4 | Mechanical claw | Success rate: 89%; average time: 37.4 s | Song et al. [85] |
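Because the harvesting tables report success rate and cycle time separately, a rough way to compare systems is to combine them into successful picks per hour. The sketch below assumes every attempt takes the reported average time whether or not it succeeds and that failed fruits are not retried; this is an illustrative simplification, not a metric defined by the cited studies. Cycle-time definitions may also differ between studies, so the resulting figures are indicative only. The class and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HarvestTrial:
    label: str
    success_rate: float  # fraction of attempts that succeeded, 0 to 1
    cycle_time_s: float  # reported average time per attempt, in seconds


def picks_per_hour(trial: HarvestTrial) -> float:
    """Successful picks per hour, assuming every attempt costs one average cycle."""
    if trial.cycle_time_s <= 0:
        raise ValueError("cycle time must be positive")
    return 3600.0 * trial.success_rate / trial.cycle_time_s


# Success rates and average times copied from the harvesting tables above;
# rows without both a single success rate and an average time are left out.
TRIALS = [
    HarvestTrial("Strawberry, Feng et al. [58]", 0.86, 31.3),
    HarvestTrial("Strawberry, Xiong et al. [75]", 0.536, 7.5),
    HarvestTrial("Tomato, Feng et al. [80]", 0.83, 8.0),
    HarvestTrial("Sweet pepper, Arad et al. [57]", 0.61, 24.0),
    HarvestTrial("Cucumber, Van Henten et al. [82]", 0.80, 45.0),
    HarvestTrial("Cucumber, Ji et al. [83]", 0.85, 28.6),
    HarvestTrial("Eggplant, Song et al. [85]", 0.89, 37.4),
]

if __name__ == "__main__":
    for trial in sorted(TRIALS, key=picks_per_hour, reverse=True):
        print(f"{trial.label:<34} {picks_per_hour(trial):6.1f} successful picks/h")
```

Under these assumptions, the tomato system of Feng et al. [80] ranks highest at about 373.5 successful picks per hour, largely because of its 8 s cycle time.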

5.2.1. Strawberry Harvesting Robotic Arms

5.2.2. Tomato Harvesting Robotic Arms

5.2.3. Harvesting Robotic Arms for Other Greenhouse Crops

6. Discussion

6.1. Key Challenges in the Application of Robotic Arms

6.2. Development of Multi-Robot Systems and Swarm Robotics

6.3. Integrated Air–Ground Framework with Unmanned Aerial Vehicles (UAVs)

6.4. The Path to Commercialization: From Research to Application

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Wang, T.; Xu, X.; Wang, C.; Li, Z.; Li, D. From Smart Farming towards Unmanned Farms: A New Mode of Agricultural Production. Agriculture 2021, 11, 145.

2. Gnauer, C.; Pichler, H.; Tauber, M.; Schmittner, C.; Christl, K.; Knapitsch, J.; Parapatits, M. Towards a Secure and Self-Adapting Smart Indoor Farming Framework. Elektrotechnik Informationstechnik 2019, 136, 341–344.

3. Daoliang, L.; Zhen, L. System Analysis and Development Prospect of Unmanned Farming. Trans. Chin. Soc. Agric. Mach. 2020, 51, 1–12.

4. Luo, X.; Liao, J.; Hu, L.; Zhou, Z.; Zhang, Z.; Zang, Y.; Wang, P.; He, J. Research progress of intelligent agricultural machinery and practice of unmanned farm in China. J. South China Agric. Univ. 2021, 42, 8–17.

5. Shamshiri, R.R.; Kalantari, F.; Ting, K.C.; Thorp, K.R.; Hameed, I.A.; Weltzien, C.; Ahmad, D.; Shad, Z.M. Advances in Greenhouse Automation and Controlled Environment Agriculture: A Transition to Plant Factories and Urban Agriculture. Int. J. Agric. Biol. Eng. 2018, 11, 1–22.

6. Hemming, J.; Bac, C.W.; van Tuijl, B.A.J.; Barth, R.; Bontsema, J.; Pekkeriet, E. A Robot for Harvesting Sweet-Pepper in Greenhouses. In Proceedings of the International Conference on Agricultural Engineering 2014, Zurich, Switzerland, 6–10 July 2014; pp. 1–8.

7. Kang, H.; Chen, C. Fruit Detection, Segmentation and 3D Visualisation of Environments in Apple Orchards. Comput. Electron. Agric. 2020, 171, 105302.

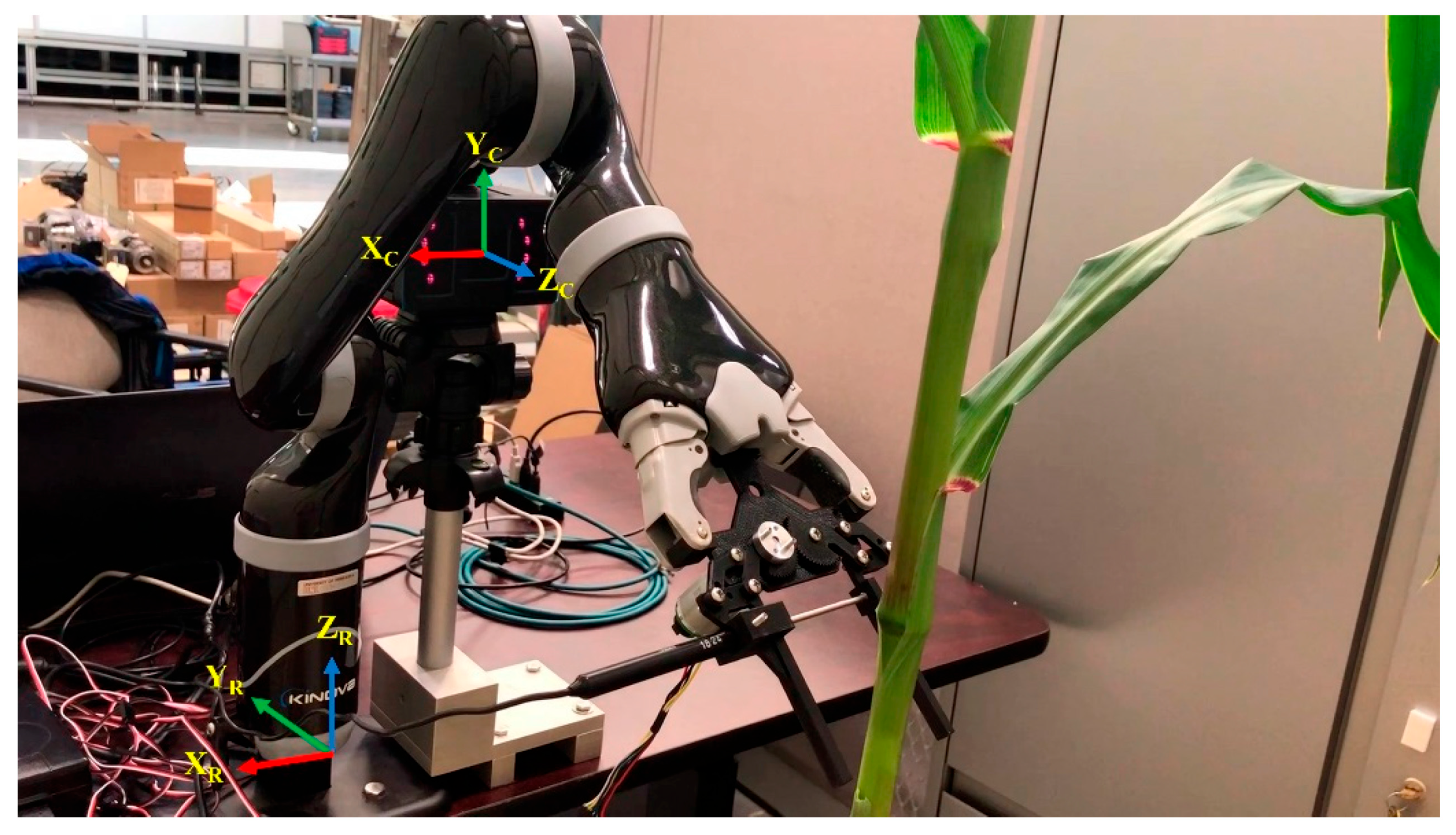

8. Atefi, A.; Ge, Y.; Pitla, S.; Schnable, J. Robotic Detection and Grasp of Maize and Sorghum: Stem Measurement with Contact. Robotics 2020, 9, 58.

9. Qian, C.; Li, X.; Zhu, J.; Liu, T.; Li, R.; Li, B.; Hu, M.; Xin, Y.; Xu, Y. A Bionic Manipulator Based on Multi-Sensor Data Fusion. Integr. Ferroelectr. 2018, 192, 10–15.

10. Yang, Y.; Han, Y.; Li, S.; Yang, Y.; Zhang, M.; Li, H. Vision Based Fruit Recognition and Positioning Technology for Harvesting Robots. Comput. Electron. Agric. 2023, 213, 108258.

11. Zhao, Y.; Gong, L.; Huang, Y.; Liu, C. A Review of Key Techniques of Vision-Based Control for Harvesting Robot. Comput. Electron. Agric. 2016, 127, 311–323.

12. Rapado-Rincón, D.; van Henten, E.J.; Kootstra, G. Development and Evaluation of Automated Localisation and Reconstruction of All Fruits on Tomato Plants in a Greenhouse Based on Multi-View Perception and 3D Multi-Object Tracking. Biosyst. Eng. 2023, 231, 78–91.

13. Oliveira, L.F.P.; Moreira, A.P.; Silva, M.F. Advances in Agriculture Robotics: A State-of-the-Art Review and Challenges Ahead. Robotics 2021, 10, 52.

14. Kurtser, P.; Castro-Alves, V.; Arunachalam, A.; Sjöberg, V.; Hanell, U.; Hyötyläinen, T.; Andreasson, H. Development of Novel Robotic Platforms for Mechanical Stress Induction, and Their Effects on Plant Morphology, Elements, and Metabolism. Sci. Rep. 2021, 11, 23876.

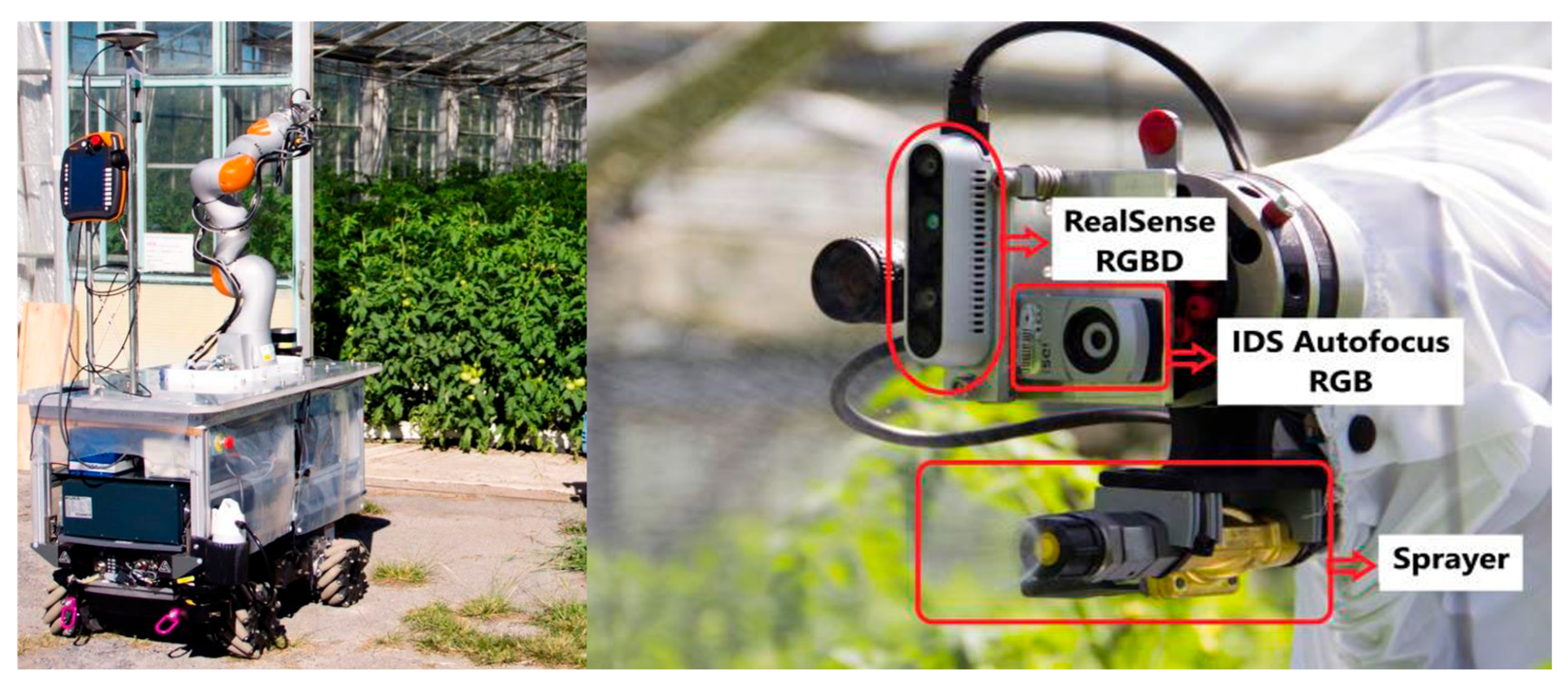

15. Martin, J.; Ansuategi, A.; Maurtua, I.; Gutierrez, A.; Obregon, D.; Casquero, O.; Marcos, M. A Generic ROS-Based Control Architecture for Pest Inspection and Treatment in Greenhouses Using a Mobile Manipulator. IEEE Access 2021, 9, 94981–94995.

16. Zhang, B.; Xie, Y.; Zhou, J.; Wang, K.; Zhang, Z. State-of-the-Art Robotic Grippers, Grasping and Control Strategies, as Well as Their Applications in Agricultural Robots: A Review. Comput. Electron. Agric. 2020, 177, 105694.

17. Jin, T.; Han, X. Robotic Arms in Precision Agriculture: A Comprehensive Review of the Technologies, Applications, Challenges, and Future Prospects. Comput. Electron. Agric. 2024, 221, 108938.

18. Bac, C.W.; van Henten, E.J.; Hemming, J.; Edan, Y. Harvesting Robots for High-value Crops: State-of-the-art Review and Challenges Ahead. J. Field Robot. 2014, 31, 888–911.

19. Roshanianfard, A.; Mengmeng, D.; Nematzadeh, S. 4-DOF SCARA Robotic Arm for Various Farm Applications: Designing, Kinematic Modelling, and Parameterization. Acta Technol. Agric. 2021, 24, 61–66.

20. Ge, Y.; Xiong, Y.; From, P.J. Three-Dimensional Location Methods for the Vision System of Strawberry-Harvesting Robots: Development and Comparison. Precis. Agric. 2022, 24, 764–782.

21. Ge, Y.; Xiong, Y.; Tenorio, G.L.; From, P.J. Fruit Localization and Environment Perception for Strawberry Harvesting Robots. IEEE Access 2019, 7, 147642–147652.

22. Ren, G.; Wu, T.; Lin, T.; Yang, L.; Chowdhary, G.; Ting, K.C.; Ying, Y. Mobile Robotics Platform for Strawberry Sensing and Harvesting within Precision Indoor Farming Systems. J. Field Robot. 2024, 41, 2047–2065.

23. Yang, M.; Lyu, H.; Zhao, Y.; Sun, Y.; Pan, H.; Sun, Q.; Chen, J.; Qiang, B.; Yang, H. Delivery of Pollen to Forsythia Flower Pistils Autonomously and Precisely Using a Robot Arm. Comput. Electron. Agric. 2023, 214, 108274.

24. Oberti, R.; Marchi, M.; Tirelli, P.; Calcante, A.; Iriti, M.; Tona, E.; Hočevar, M.; Baur, J.; Pfaff, J.; Schütz, C.; et al. Selective Spraying of Grapevines for Disease Control Using a Modular Agricultural Robot. Biosyst. Eng. 2016, 146, 203–215.

25. Oberti, R.; Marchi, M.; Tirelli, P.; Calcante, A.; Iriti, M.; Hočevar, M.; Baur, J.; Pfaff, J.; Schütz, C.; Ulbrich, H. Selective Spraying of Grapevine’s Diseases by a Modular Agricultural Robot. J. Agric. Eng. 2013, 44, 1–5.

26. Chen, Z.; Wang, J.; Wang, T.; Song, Z.; Li, Y.; Huang, Y.; Wang, L.; Jin, J. Automated In-Field Leaf-Level Hyperspectral Imaging of Corn Plants Using a Cartesian Robotic Platform. Comput. Electron. Agric. 2021, 183, 105996.

27. Erick, M.; Fiestas, S.; Sixto, R.; Prado, G. Modeling and Simulation of Kinematics and Trajectory Planning of a Farmbot Cartesian Robot. In Proceedings of the 2018 IEEE XXV International Conference on Electronics, Electrical Engineering and Computing (INTERCON), Lima, Peru, 8–10 August 2018; pp. 1–4.

28. Xiong, Y.; Ge, Y.; Grimstad, L.; From, P.J. An Autonomous Strawberry-harvesting Robot: Design, Development, Integration, and Field Evaluation. J. Field Robot. 2019, 37, 202–224.

29. Au, C.; Barnett, J.; Lim, S.H.; Duke, M. Workspace Analysis of Cartesian Robot System for Kiwifruit Harvesting. Ind. Robot Int. J. Robot. Res. Appl. 2020, 47, 503–510.

30. Barnett, J.; Duke, M.; Au, C.K.; Lim, S.H. Work Distribution of Multiple Cartesian Robot Arms for Kiwifruit Harvesting. Comput. Electron. Agric. 2020, 169, 105202.

31. Ling, X.; Zhao, Y.; Gong, L.; Liu, C.; Wang, T. Dual-Arm Cooperation and Implementing for Robotic Harvesting Tomato Using Binocular Vision. Robot. Auton. Syst. 2019, 114, 134–143.

32. Chen, X.; Chaudhary, K.; Tanaka, Y. Reasoning-Based Vision Recognition for Agricultural Humanoid Robot toward Tomato Harvesting. In Proceedings of the International Conference on Intelligent Robots and Systems (IROS), Hamburg, Germany, 28 September–2 October 2015; pp. 1–8.

33. Yoshida, T.; Onishi, Y.; Kawahara, T.; Fukao, T. Automated Harvesting by a Dual-Arm Fruit Harvesting Robot. ROBOMECH J. 2022, 9, 19.

34. Li, T.; Xie, F.; Zhao, Z.; Zhao, H.; Guo, X.; Feng, Q. A Multi-Arm Robot System for Efficient Apple Harvesting: Perception, Task Plan and Control. Comput. Electron. Agric. 2023, 211, 107979.

35. Wang, F.; Lever, P. Cell Mapping Method for General Optimum Trajectory Planning of Multiple Robotic Arms. Robot. Auton. Syst. 1994, 12, 15–27.

36. Liu, C.; Gao, J.; Bi, Y.; Shi, X.; Tian, D. A Multitasking-Oriented Robot Arm Motion Planning Scheme Based on Deep Reinforcement Learning and Twin Synchro-Control. Sensors 2020, 20, 3515.

37. Kawasaki, H.; Ueki, S.; Ito, S. Decentralized Adaptive Coordinated Control of Multiple Robot Arms without Using a Force Sensor. Automatica 2006, 42, 481–488.

38. Tian, S.; Liu, G.; Xing, D.; Sun, Z. Design and Experiment on Greenhouse Multi-function Rail Vehicle. J. Agric. Mech. Res. 2017, 39, 116–121.

39. Feng, Q.; Wang, X.; Wang, G.; Li, Z. Design and test of tomatoes harvesting robot. In Proceedings of the 2015 IEEE International Conference on Information and Automation, Lijiang, China, 8–10 August 2015.

40. Liang, L.; Wenai, Z.; Qingchun, F.; Xiu, W. System Design for Rail Spraying Robot in Greenhouse. J. Agric. Mech. Res. 2016, 38, 109–112.

41. Qi, L.; Wang, H.; Zhang, J.; Ji, R.; Wang, J. 3D Numerical Simulation and Experiment of Air-velocity Distribution of Greenhouse Air-assisted Sprayer. Trans. Chin. Soc. Agric. Mach. 2013, 44, 69–74.

42. Alinezhad, E.; Gan, V.; Chang, V.W.-C.; Zhou, J. Unmanned Ground Vehicles (UGVs)-Based Mobile Sensing for Indoor Environmental Quality (IEQ) Monitoring: Current Challenges and Future Directions. J. Build. Eng. 2024, 88, 109169.

43. Munasinghe, I.; Perera, A.; Deo, R.C. A Comprehensive Review of UAV-UGV Collaboration: Advancements and Challenges. J. Sens. Actuator Netw. 2024, 13, 81.

44. Farella, A.; Paciolla, F.; Quartarella, T.; Pascuzzi, S. Agricultural Unmanned Ground Vehicle (UGV): A Brief Overview; Springer Nature: Cham, Switzerland, 2024; pp. 137–146.

45. Zhang, T.; Zhou, W.; Meng, F.; Li, Z. Efficiency Analysis and Improvement of an Intelligent Transportation System for the Application in Greenhouse. Electronics 2019, 8, 946.

46. Yang, Q. Design and Development of AGV-Based IoT Solution for Greenhouse Environmental Monitoring. Master’s Thesis, Swinburne University of Technology, Sarawak, Malaysia, 2025.

47. Zimmer, D.; Šumanovac, L.; Jurišić, M.; Čosić, A.; Lucić, P. Automatically Guided Vehicles (AGV) in Agriculture. Teh. Glas. 2024, 18, 666–672.

48. Lynch, L.; Newe, T.; Clifford, J.; Coleman, J.; Walsh, J.; Toal, D. Automated Ground Vehicle (AGV) and Sensor Technologies—A Review. In Proceedings of the 2018 12th International Conference on Sensing Technology (ICST), Limerick, Ireland, 4–6 December 2018; pp. 347–352.

49. Saike, J.; Meina, Z.; Xue, L.; Yannan, Q.; Xiaolan, L. Development of navigation and control technology for autonomous mobile equipment in greenhouse. J. Chin. Agric. Mech. 2022, 43, 159–169.

50. Xiang, L.; Nolan, M.; Bao, Y.; Elmore, M.; Tuel, T.; Gai, J.; Shah, D.; Wang, P.; Huser, M.; Hurd, M. Robotic Assay for Drought (RoAD): An Automated Phenotyping System for Brassinosteroid and Drought Responses. Plant J. 2021, 107, 1837–1853.

51. Roure, F.; Moreno, G.; Soler, M.; Faconti, D.; Serrano, D.; Astolfi, P.; Bardaro, G.; Gabrielli, A.; Bascetta, L.; Matteucci, M. GRAPE: Ground Robot for Vineyard Monitoring and Protection. In Iberian Robotics Conference; Springer: Berlin/Heidelberg, Germany, 2017; pp. 249–260.

52. Mohamed, A.; El-Gindy, M.; Ren, J. Advanced Control Techniques for Unmanned Ground Vehicle: Literature Survey. Int. J. Veh. Perform. 2018, 4, 46–73.

53. Kim, K.; Deb, A.; Cappelleri, J. P-AgBot: In-Row & under-Canopy Agricultural Robot for Monitoring and Physical Sampling. IEEE Robot. Autom. Lett. 2022, 7, 7942–7949.

54. Lehnert, C.; McCool, C.; Sa, I.; Perez, T. Performance Improvements of a Sweet Pepper Harvesting Robot in Protected Cropping Environments. J. Field Robot. 2020, 37, 1197–1223.

55. Zhuang, Y.; Guo, Y.; Li, J.; Shen, L.; Wang, Z.; Sun, M.; Wang, J. Analysis of Mechanical Characteristics of Stereolithography Soft-Picking Manipulator and Its Application in Grasping Fruits and Vegetables. Agronomy 2023, 13, 2481.

56. Brown, J.; Sukkarieh, S. Design and Evaluation of a Modular Robotic Plum Harvesting System Utilizing Soft Components. J. Field Robot. 2020, 38, 289–306.

57. Arad, B.; Balendonck, J.; Barth, R.; Ben-Shahar, O.; Edan, Y.; Hellström, T.; Hemming, J.; Kurtser, P.; Ringdahl, O.; Tielen, T.; et al. Development of a Sweet Pepper Harvesting Robot. J. Field Robot. 2020, 37, 1027–1039.

58. Feng, Q.C.; Wang, X.; Zheng, W.G.; Qiu, Q.; Jiang, K. New Strawberry Harvesting Robot for Elevated-Trough Culture. Int. J. Agric. Biol. Eng. 2011, 5, 1–8.

59. Vatavuk, I.; Vasiljević, G.; Kovačić, Z. Task Space Model Predictive Control for Vineyard Spraying with a Mobile Manipulator. Agriculture 2022, 12, 381.

60. Benavides, M.; Cantón-Garbín, M.; Sánchez-Molina, J.A.; Rodríguez, F. Automatic Tomato and Peduncle Location System Based on Computer Vision for Use in Robotized Harvesting. Appl. Sci. 2020, 10, 5887.

61. Lv, J.; Wang, Y.; Ni, H.; Wang, Q.; Rong, H.; Ma, Z.; Yang, B.; Xu, L. Method for Discriminating of the Shape of Overlapped Apple Fruit Images. Biosyst. Eng. 2019, 186, 118–129.

62. He, B.; Zhang, Y.; Gong, J.; Fu, G.; Zhao, Y.; Wu, R. Fast Recognition of Tomato Fruit in Greenhouse at Night Based on Improved YOLO v5. Trans. Chin. Soc. Agric. Mach. 2022, 53, 201–208.

63. Feng, Q.; Cheng, W.; Li, Y.; Wang, B.; Chen, L. Method for identifying tomato plants pruning point using Mask R-CNN. Trans. Chin. Soc. Agric. Eng. 2022, 38, 128–135.

64. Lehnert, C.; Sa, I.; McCool, C.; Upcroft, B.; Perez, T. Sweet pepper pose detection and grasping for automated crop harvesting. In Proceedings of the 2016 IEEE International Conference on Robotics and Automation (ICRA), Stockholm, Sweden, 16–21 May 2016.

65. Ubina, N.; Cheng, S. A Review of Unmanned System Technologies with Its Application to Aquaculture Farm Monitoring and Management. Drones 2022, 6, 12.

66. Chu, L. Study the Operation Process of Factory Greenhouse Robot Based on Intelligent Dispatching Method. In Proceedings of the 2022 IEEE International Conference on Electrical Engineering, Big Data and Algorithms (EEBDA), Changchun, China, 25–27 February 2022; pp. 291–293.

67. Thomopoulos, V.; Bitas, D.; Papastavros, K.; Tsipianitis, D.; Kavga, A. Development of an Integrated IoT-Based Greenhouse Control Three-Device Robotic System. Agronomy 2021, 11, 405.

68. Ferreira, B.; Petrović, T.; Bogdan, S. Distributed Mission Planning of Complex Tasks for Heterogeneous Multi-Robot Teams. arXiv 2021, arXiv:2109.10106.

69. Ma, Y.; Feng, Q.; Sun, Y.; Guo, X.; Zhang, W.; Wang, B.; Chen, L. Optimized Design of Robotic Arm for Tomato Branch Pruning in Greenhouses. Agriculture 2024, 14, 359.

70. Kaljaca, D.; Vroegindeweij, B.; Henten, E.J. Coverage Trajectory Planning for a Bush Trimming Robot Arm. J. Field Robot. 2019, 37, 283–308.

71. Nadafzadeh, M.; Banakar, A.; Mehdizadeh, S.A.; Bavani, M.Z.; Minaei, S.; Hoogenboom, G. Design, Fabrication and Evaluation of a Robot for Plant Nutrient Monitoring in Greenhouse (Case Study: Iron Nutrient in Spinach). Comput. Electron. Agric. 2024, 217, 108579.

72. Schor, N.; Berman, S.; Dombrovsky, A.; Elad, Y.; Ignat, T.; Bechar, A. Development of a Robotic Detection System for Greenhouse Pepper Plant Diseases. Precis. Agric. 2017, 18, 394–409.

73. Ebrahimi, M.A.; Khoshtaghaza, M.H.; Minaei, S.; Jamshidi, B. Vision-Based Pest Detection Based on SVM Classification Method. Comput. Electron. Agric. 2017, 137, 52–58.

74. Cho, S.; Kim, T.; Jung, D.H.; Park, S.H.; Na, Y.; Ihn, Y.S.; Kim, K. Plant Growth Information Measurement Based on Object Detection and Image Fusion Using a Smart Farm Robot. Comput. Electron. Agric. 2023, 207, 107703.

75. Xiong, Y.; Peng, C.; Grimstad, L.; From, P.J.; Isler, V. Development and Field Evaluation of a Strawberry Harvesting Robot with a Cable-Driven Gripper. Comput. Electron. Agric. 2019, 157, 392–402.

76. Preter, A.D.; Anthonis, J.; Baerdemaeker, J.D. Development of a Robot for Harvesting Strawberries. IFAC-Pap. 2018, 51, 14–19.

77. Gao, J.; Zhang, F.; Zhang, J.; Yuan, T.; Yin, J.; Guo, H.; Yang, C. Development and Evaluation of a Pneumatic Finger-like End-Effector for Cherry Tomato Harvesting Robot in Greenhouse. Comput. Electron. Agric. 2022, 197, 106879.

78. Yaguchi, H.; Nagahama, K.; Hasegawa, T.; Inaba, M. Development of An Autonomous Tomato Harvesting Robot with Rotational Plucking Gripper. In Proceedings of the 2016 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Daejeon, Republic of Korea, 9–14 October 2016; pp. 1–6.

79. Liu, J.; Li, Z.; Wang, F.; Li, P.; Xi, N. Hand-Arm Coordination for a Tomato Harvesting Robot Based on Commercial Manipulator. In Proceedings of the 2013 IEEE International Conference on Robotics and Biomimetics (ROBIO), Shenzhen, China, 12–14 December 2013; pp. 2715–2720.

80. Feng, Q.; Zou, W.; Fan, P.; Zhang, C.; Wang, X. Design and Test of Robotic Harvesting System for Cherry Tomato. Int. J. Agric. Biol. Eng. 2016, 11, 96–100.

81. Yu, F.; Zhou, C.; Yang, X.; Guo, Z.; Chen, C. Design and Experiment of Tomato Picking Robot in Solar Greenhouse. Trans. Chin. Soc. Agric. Mach. 2022, 53, 41–49.

82. Henten, E.J.V.; Hemming, J.; van Tuijl, B.A.J.; Kornet, J.G.; Meuleman, J.; Bontsema, J.; van Os, E.A. An Autonomous Robot for Harvesting Cucumbers in Greenhouses. Auton. Robot. 2002, 13, 241–258.

83. Ji, C.; Feng, Q.; Yuan, T.; Tan, Y.; Li, W. Development and Performance Analysis on Cucumber Harvesting Robot System in Greenhouse. Robot 2011, 33, 726–730.

84. Junge, K.; Pires, C.; Hughes, J. Lab2Field Transfer of a Robotic Raspberry Harvester Enabled by a Soft Sensorized Physical Twin. Commun. Eng. 2023, 2, 40.

85. Song, J.; Sun, X.; Zhang, T.; Zhang, B.; Xu, L. Design and Experiment of Opening Picking Robot for Eggplant. Trans. Chin. Soc. Agric. Mach. 2009, 40, 143–147.

86. Qingchun, F.; Wengang, Z.; Kai, J.; Quan, Q.; Rui, G. Design of Strawberry Harvesting Robot on Table-top Culture. J. Agric. Mech. Res. 2012, 34, 122–126.

87. Feng, Q.; Chen, J.; Zhang, M.; Wang, X. Design and Test of Harvesting Robot for Table-top Cultivated Strawberry. In Proceedings of the 2019 WRC Symposium on Advanced Robotics and Automation (WRC SARA), Beijing, China, 21–22 August 2019.

88. Liu, J. Research Progress Analysis of Robotic Harvesting Technologies in Greenhouse. Trans. Chin. Soc. Agric. Mach. 2017, 48, 1–18.

89. Liu, J. Analysis and Optimal Control of Vacuum Suction System for Tomato Harvesting Robots. Ph.D. Thesis, Jiangsu University, Zhenjiang, China, 2010.

90. Li, Z.; Liu, J.; Li, P.; Li, W. Analysis of Workspace and Kinematics for a Tomato Harvesting Robot. In Proceedings of the 2008 International Conference on Intelligent Computation Technology and Automation (ICICTA), Changsha, China, 20–22 October 2008; pp. 823–827.

91. Henten, E.J.V.; Tuijl, B.A.J.V.; Hemming, J.; Kornet, J.G.; Bontsema, J.; Os, E.A.V. Field Test of an Autonomous Cucumber Picking Robot. Biosyst. Eng. 2003, 86, 305–313.

92. Feng, Q.; Ji, C.; Zhang, J.; Li, W. Optimization Design and Kinematic Analysis of Cucumber Harvesting Robot Manipulator. Trans. Chin. Soc. Agric. Mach. 2010, 41, 244–248.

93. Jian, S. Optimization design and simulation on structure parameter of eggplant picking robot. Mach. Des. Manuf. 2008, 6, 166–168.

94. Ju, C.; Kim, J.; Seol, J.; Son, H.I. A Review on Multirobot Systems in Agriculture. Comput. Electron. Agric. 2022, 202, 107336.

95. Rizk, Y.; Awad, M.; Tunstel, W.E. Cooperative Heterogeneous Multi-Robot Systems: A Survey. ACM Comput. Surv. 2019, 52, 1–31.

96. Ren, Z.; Zheng, H.; Chen, J.; Chen, T.; Xie, P.; Xu, Y.; Deng, J.; Wang, H.; Sun, M.; Jiao, W. Integrating UAV, UGV and UAV-UGV Collaboration in Future Industrialized Agriculture: Analysis, Opportunities and Challenges. Comput. Electron. Agric. 2024, 227, 109631.

97. Polic, M.; Ivanovic, A.; Maric, B.; Arbanas, B.; Tabak, J.; Orsag, M. Structured Ecological Cultivation with Autonomous Robots in Indoor Agriculture. In Proceedings of the 2021 16th International Conference on Telecommunications (ConTEL), Zagreb, Croatia, 30 June–2 July 2021; pp. 189–195.

98. Bhadoriya, A.S.; Rathinam, S.; Darbha, S.; Casbeer, D.; Manyam, S. Assisted Path Planning for a UGV–UAV Team Through a Stochastic Network. J. Indian Inst. Sci. 2024, 104, 691–710.

| Robotic Arm Type | Key Technical Features | Suitable Applications | Advantages | Disadvantages | Relative Cost |
|---|---|---|---|---|---|
| SCARA | 4 DOF; fast horizontal motion; high vertical rigidity. | Seedling tasks, sorting, simple picking. | Fast, precise, simple structure, lower cost. | Limited flexibility; poor at complex 3D tasks. | Low to Medium |
| Articulated | 4 to 7 DOF; human-like motion; spherical workspace. | Complex harvesting, pruning, pollination, targeted spraying. | Highly flexible; good obstacle avoidance; large reach. | Complex control; high cost and integration difficulty. | High |
| Cartesian (Gantry) | 3 linear axes (X, Y, Z); rectangular workspace; high rigidity. | Large-area tasks, monitoring, imaging. | High accuracy and rigidity; large work area; simple control. | Inflexible; limited speed; large footprint. | Medium to High |
| Multi-arm Collaborative | Dual- or multi-arm coordination; shared workspace. | Harvesting of large or fragile items; tasks requiring two hands. | High dexterity for complex tasks. | Highly complex control; very high cost; mainly at the research stage. | Very High |
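The SCARA entry's fast horizontal motion and simple control follow from its kinematics: in the common RRPR layout, two revolute joints position the tool in the horizontal plane, a prismatic joint sets its height, and a final revolute joint sets its rotation about the vertical axis, so both forward and inverse kinematics have short closed forms. The sketch below assumes that layout, a downward-positive prismatic stroke, and hypothetical link lengths that are not taken from any arm cited in this review; the function names are illustrative.

```python
import math
from typing import Tuple

Quad = Tuple[float, float, float, float]


def scara_fk(theta1: float, theta2: float, d3: float, theta4: float,
             l1: float, l2: float, z_base: float) -> Quad:
    """Forward kinematics of an RRPR SCARA; returns (x, y, z, yaw).

    theta1, theta2: shoulder and elbow angles in the horizontal plane (rad).
    d3: downward stroke of the vertical prismatic axis (m).
    theta4: wrist rotation about the vertical axis (rad).
    z_base: tool height when d3 = 0 (m).
    """
    x = l1 * math.cos(theta1) + l2 * math.cos(theta1 + theta2)
    y = l1 * math.sin(theta1) + l2 * math.sin(theta1 + theta2)
    z = z_base - d3
    yaw = theta1 + theta2 + theta4
    return x, y, z, yaw


def scara_ik(x: float, y: float, z: float, yaw: float,
             l1: float, l2: float, z_base: float,
             positive_elbow: bool = True) -> Quad:
    """Closed-form inverse kinematics; returns (theta1, theta2, d3, theta4).

    The planar two-link part has two mirror solutions; positive_elbow
    selects the one with theta2 >= 0.
    """
    c2 = (x * x + y * y - l1 * l1 - l2 * l2) / (2.0 * l1 * l2)
    if abs(c2) > 1.0 + 1e-12:
        raise ValueError("target lies outside the horizontal workspace")
    c2 = max(-1.0, min(1.0, c2))  # clamp tiny floating-point overshoot
    s2 = math.sqrt(1.0 - c2 * c2)
    if not positive_elbow:
        s2 = -s2
    theta2 = math.atan2(s2, c2)
    theta1 = math.atan2(y, x) - math.atan2(l2 * s2, l1 + l2 * c2)
    d3 = z_base - z
    theta4 = yaw - theta1 - theta2
    return theta1, theta2, d3, theta4


if __name__ == "__main__":
    # Hypothetical geometry: 0.25 m and 0.20 m links, flange 0.40 m above the base.
    L1, L2, Z0 = 0.25, 0.20, 0.40
    pose = scara_fk(0.3, 0.8, 0.05, -0.2, L1, L2, Z0)
    joints = scara_ik(*pose, L1, L2, Z0)
    print("pose (x, y, z, yaw):", [round(v, 4) for v in pose])
    print("recovered joints:", [round(v, 4) for v in joints])
```

The `positive_elbow` flag reflects the two mirror-image arm configurations that reach the same horizontal point; a planner has to choose one, for example to keep the elbow clear of a plant row.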

| Platform Type | Navigation/Movement Method | Suitable Environment | Advantages | Disadvantages | Relative Complexity/Cost |
|---|---|---|---|---|---|
| Fixed Platform | None (fixed position). | Fixed workstations for processing conveyed items. | High stability and accuracy; simple; low cost. | Limited workspace; inflexible; requires material transport. | Low |
| Rail-Mounted Platform | Moves along pre-installed physical rails. | Structured, row-based environments. | High accuracy and repeatability; simple, stable navigation. | Inflexible; confined to the track; high installation cost. | Medium |
| UGV | Autonomous or marker-guided navigation. | Complex, unstructured environments; cross-row tasks. | Highly flexible and adaptable; full greenhouse coverage. | Complex navigation; environment-dependent accuracy; requires flat ground. | High |

| Aspect | Industrial Robotic Arm | Agricultural Robotic Arm |
|---|---|---|
| Environment | Fixed layout, constant lighting, clean, predictable. | Variable lighting, changing plant growth, dust, humidity, unpredictable. |
| Task Nature | High-speed, high-precision repetition of the same motion. | Each task is unique and requires real-time perception and planning. |
| Target Object | Standardized parts with known geometry and properties. | Living organisms with varied shapes, sizes, ripeness, and fragility. |
| Primary Technical Challenge | Maximizing speed, precision, and repeatability; minimizing cycle time. | Robust object detection in clutter, gentle handling, real-time decision-making. |
| End-Effector | Simple, task-specific, often rigid grippers designed for one object. | Complex, adaptive, often “soft” grippers with force/tactile sensing to avoid damage. |
| Mobility | Typically stationary, bolted to the floor. | Often mobile; requires integration with a platform and robust navigation capabilities. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, S.; Liu, T.; Li, X.; Cai, C.; Chang, C.; Xue, X. Key Technologies of Robotic Arms in Unmanned Greenhouse. Agronomy 2025, 15, 2498. https://doi.org/10.3390/agronomy15112498
