RipenessGAN: Growth Day Embedding-Enhanced GAN for Stage-Wise Jujube Ripeness Data Generation

Abstract

1. Introduction

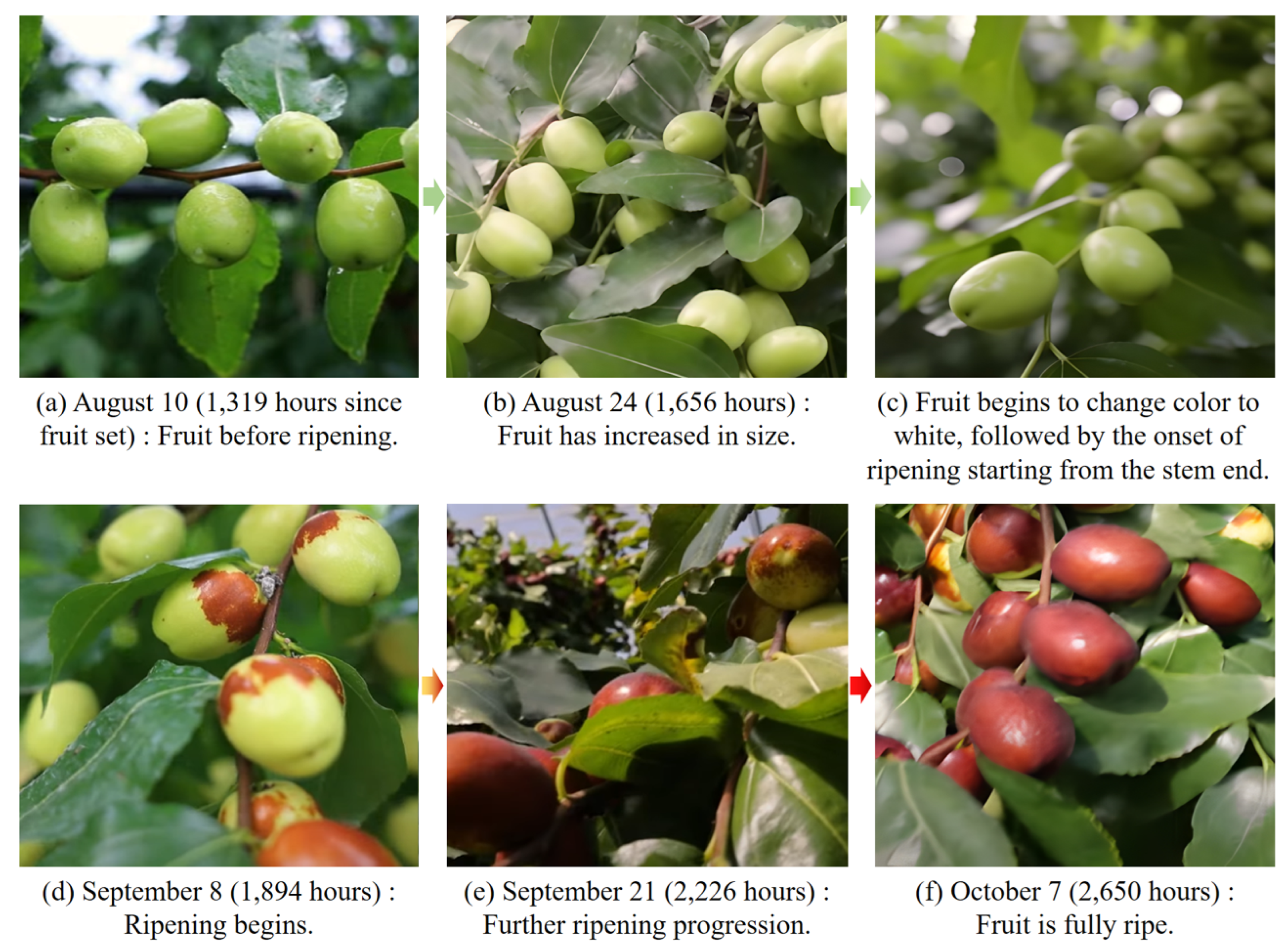

1.1. Research Purpose and Background

1.2. Key Contributions

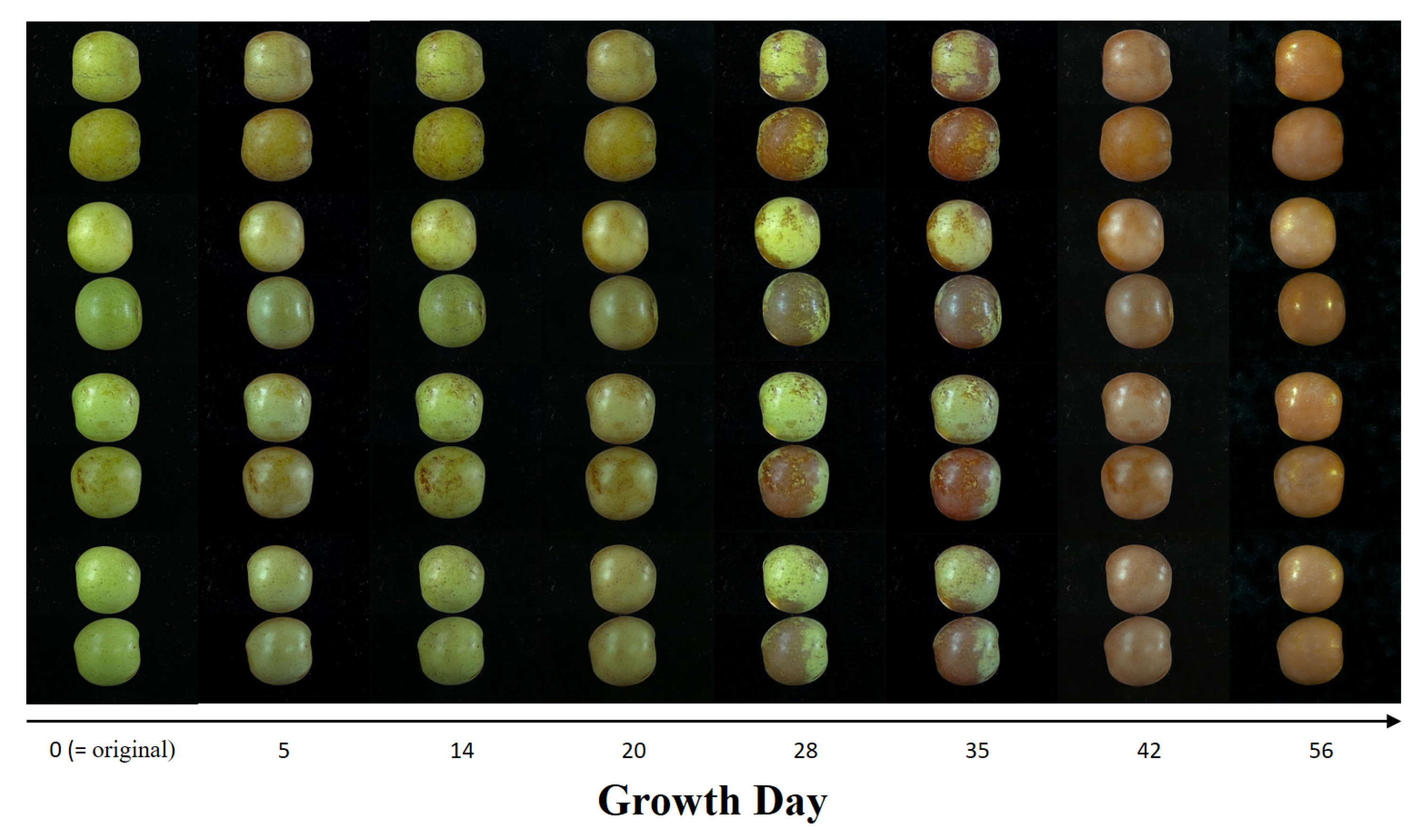

- Growth day embedding architecture: We introduce a Growth Day Embedding mechanism, enabling fine-grained temporal control over jujube ripeness generation, modeling continuous growth (0–56 days) within discrete class boundaries (0–3), and exceeding the expressiveness of existing GANs and diffusion model frameworks.

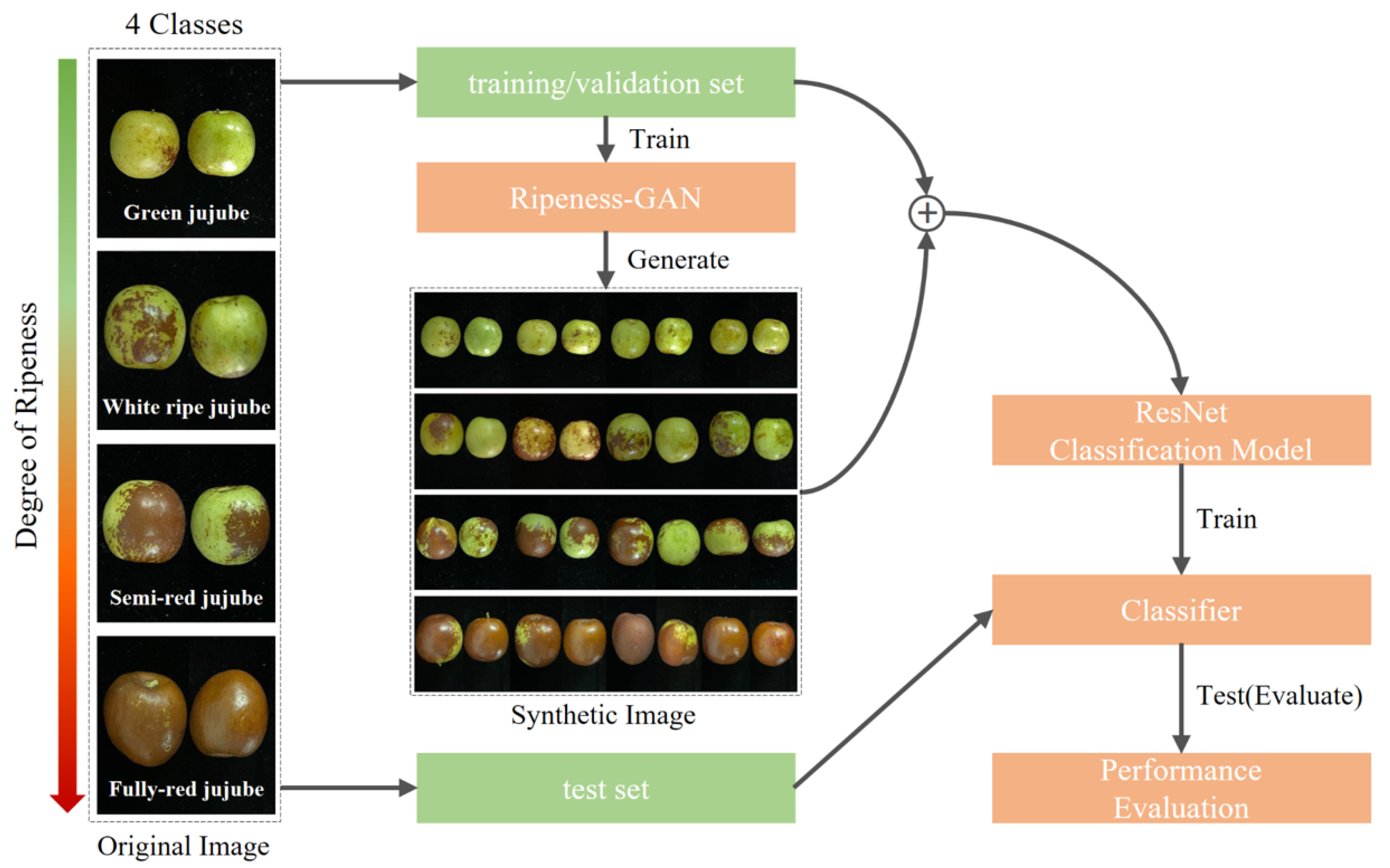

- RipenessGAN framework: We propose RipenessGAN, a generative model incorporating Growth Day Embedding to synthesize realistic, temporally controlled images, demonstrating superior diversity and consistency across all ripeness stages compared to CycleGAN.

- Temporal consistency loss: We develop a temporal consistency loss function that enforces smooth transitions between adjacent growth days while preserving class-specific characteristics, providing explicit progression modeling that neither CycleGAN’s cycle-consistency loss nor a diffusion model’s denoising objective offers.

- Addressing data imbalance through temporal augmentation: RipenessGAN’s synthetic data mitigates class imbalance by generating intermediate temporal states, providing more comprehensive augmentation than domain translation approaches.

- Comprehensive, three-way comparison: We implemented a controlled comparison methodology, ensuring fair evaluation between RipenessGAN, CycleGAN, and DDPM using an equivalent computational budget, an identical training dataset, and comparable model complexity.
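The temporal consistency idea in the contributions above can be illustrated with a small sketch. The exact loss is not given in this part of the text, so the form below (mean squared difference between images generated one growth day apart) is an assumption, and `temporal_consistency_loss`, `smooth_generator`, and `abrupt_generator` are hypothetical names used only for illustration.

```python
from typing import Callable, List, Sequence

Image = List[float]  # a flattened toy image; real code would use tensors


def temporal_consistency_loss(
    generate: Callable[[int], Image],
    days: Sequence[int],
    step: int = 1,
) -> float:
    """Mean squared difference between images generated `step` days apart.

    Assumed form, not the paper's definition: a small value means the
    output changes gradually as the growth day advances.
    """
    total = 0.0
    for day in days:
        a = generate(day)
        b = generate(day + step)
        total += sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)
    return total / len(days)


# Toy generators over the 0-56 day range named in the text.
def smooth_generator(day: int) -> Image:
    # "Redness" ramps up linearly with the growth day.
    return [day / 56.0] * 4


def abrupt_generator(day: int) -> Image:
    # "Redness" jumps at an arbitrary day (28 is a placeholder).
    return [0.0 if day < 28 else 1.0] * 4


days = list(range(0, 56))  # pairs (d, d + 1) for d = 0..55
smooth = temporal_consistency_loss(smooth_generator, days)
abrupt = temporal_consistency_loss(abrupt_generator, days)
print(f"smooth: {smooth:.6f}  abrupt: {abrupt:.6f}")
```

Under this assumed form, the generator that jumps between two appearances is penalized more heavily than the one that changes gradually, which is the behavior the contribution describes.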

2. Materials and Methods

2.1. Dataset Acquisition and Preprocessing

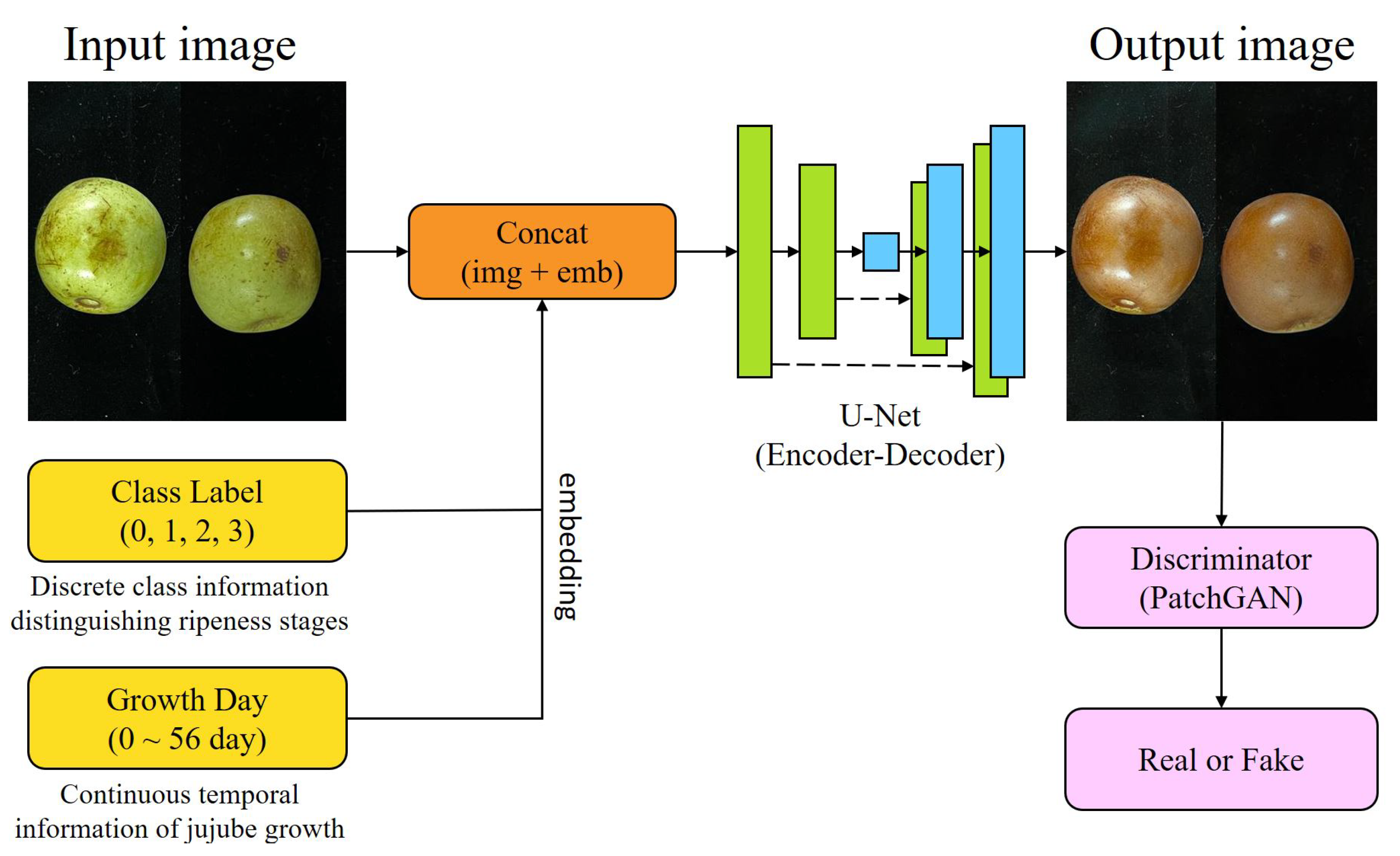

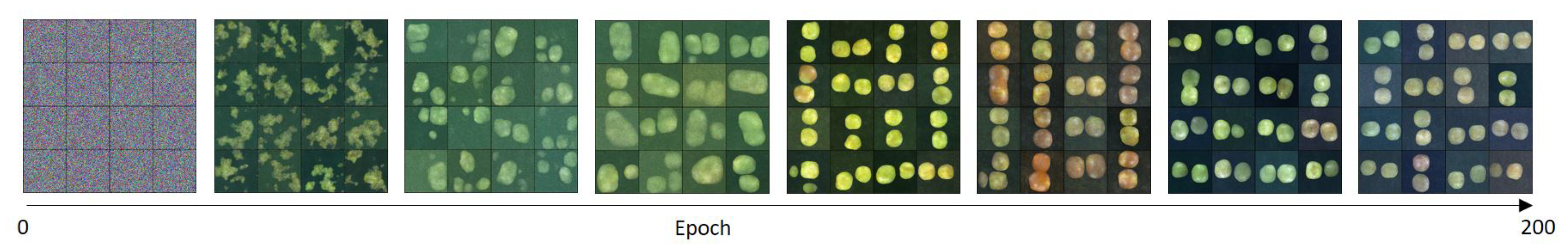

2.2. RipenessGAN Framework with Growth Day Embedding

2.2.1. Growth Day Embedding Architecture

Algorithm 1. RipenessGAN Training with Hinge Adversarial and Temporal Consistency Loss
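The hinge adversarial loss named in the Algorithm 1 caption has a standard form, sketched below on plain lists of discriminator scores. How the temporal consistency term is weighted is not stated here, so `lambda_temporal` and `total_generator_loss` are hypothetical and show only one plausible way of combining the two terms.

```python
from typing import Sequence


def d_hinge_loss(real_scores: Sequence[float], fake_scores: Sequence[float]) -> float:
    """Standard discriminator hinge loss on raw (unbounded) scores."""
    real_term = sum(max(0.0, 1.0 - s) for s in real_scores) / len(real_scores)
    fake_term = sum(max(0.0, 1.0 + s) for s in fake_scores) / len(fake_scores)
    return real_term + fake_term


def g_hinge_loss(fake_scores: Sequence[float]) -> float:
    """Standard generator hinge loss: raise the scores of generated samples."""
    return -sum(fake_scores) / len(fake_scores)


def total_generator_loss(
    fake_scores: Sequence[float],
    temporal_term: float,
    lambda_temporal: float,
) -> float:
    """Assumed combination: adversarial term plus a weighted temporal term."""
    return g_hinge_loss(fake_scores) + lambda_temporal * temporal_term


# Toy scores: positive means "looks real" to the discriminator.
d_loss = d_hinge_loss(real_scores=[2.0, 0.5], fake_scores=[-2.0, 0.0])
g_loss = g_hinge_loss(fake_scores=[-2.0, 0.0])
g_total = total_generator_loss([-2.0, 0.0], temporal_term=0.2, lambda_temporal=10.0)
print(d_loss, g_loss, g_total)
```

Real samples scored above +1 and generated samples scored below -1 contribute nothing to the discriminator loss, so training effort concentrates on samples near the decision margin.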

2.2.2. Generator and Discriminator Structure

Generator:

- Encoder: Five convolutional blocks (kernel size , stride 2) progressively downsampling to features.

- Class/Day embedding:

- Decoder: Five transposed convolutional blocks (kernel size , stride 2) with skip connections, progressively upsampling to .

- Self-attention [23]: A self-attention layer is inserted at a resolution to capture long-range dependencies.

Discriminator:

- Input: Image + concatenated embeddings .

- Backbone: Six convolutional blocks (kernel size ; stride 2) with spectral normalization.

- Output: feature map, where each element represents the real/fake probability for a patch.

- Attention mechanism: Self-attention layer at resolution.
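The conditioning and resolution bookkeeping in the lists above can be sketched as follows. The embedding dimensions, kernel sizes, and image resolution are not recoverable from this text, so everything numeric below apart from the 0–3 class range and the 0–56 day range is a placeholder: `DAY_DIM`, the sinusoidal day encoding (the paper's embedding may well be learned), and the 256-pixel input are assumptions for illustration only.

```python
import math
from typing import List

NUM_CLASSES = 4   # class indices 0-3, as given in the text
MAX_DAY = 56      # growth days 0-56, as given in the text
DAY_DIM = 8       # hypothetical width of the day encoding


def class_one_hot(label: int) -> List[float]:
    """One-hot vector for a ripeness class index."""
    if not 0 <= label < NUM_CLASSES:
        raise ValueError(f"class label must be in 0..{NUM_CLASSES - 1}")
    return [1.0 if i == label else 0.0 for i in range(NUM_CLASSES)]


def day_encoding(day: int, dim: int = DAY_DIM) -> List[float]:
    """Sinusoidal encoding of the growth day (an assumed stand-in)."""
    if not 0 <= day <= MAX_DAY:
        raise ValueError(f"growth day must be in 0..{MAX_DAY}")
    t = day / MAX_DAY
    features: List[float] = []
    for k in range(dim // 2):
        angle = (k + 1) * math.pi * t
        features.extend([math.sin(angle), math.cos(angle)])
    return features


def condition_vector(label: int, day: int) -> List[float]:
    """Concatenate class and day encodings into one conditioning vector."""
    return class_one_hot(label) + day_encoding(day)


def downsampled_size(size: int, num_blocks: int) -> int:
    """Spatial size after `num_blocks` stride-2 blocks, assuming each halves it."""
    for _ in range(num_blocks):
        size //= 2
    return size


cond = condition_vector(label=2, day=30)
bottleneck = downsampled_size(256, num_blocks=5)  # 256 is a placeholder input size
print(len(cond), bottleneck)
```

With padding chosen so that each stride-2 block halves the spatial size, five encoder blocks reduce the side length by a factor of 32, and five transposed blocks in the decoder restore it.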

2.2.3. Growth Day-Based Loss Functions

2.3. Fair Comparison Methodology for RipenessGAN vs. CycleGAN vs. DDPM (Diffusion)

2.4. CycleGAN Implementation

2.5. DDPM Implementation

2.6. Classifier Training Protocol

3. Results

3.1. Evaluation Metrics

3.2. Three-Way Comparison Results: RipenessGAN vs. CycleGAN vs. DDPM (Diffusion)

3.3. Computational Efficiency Analysis

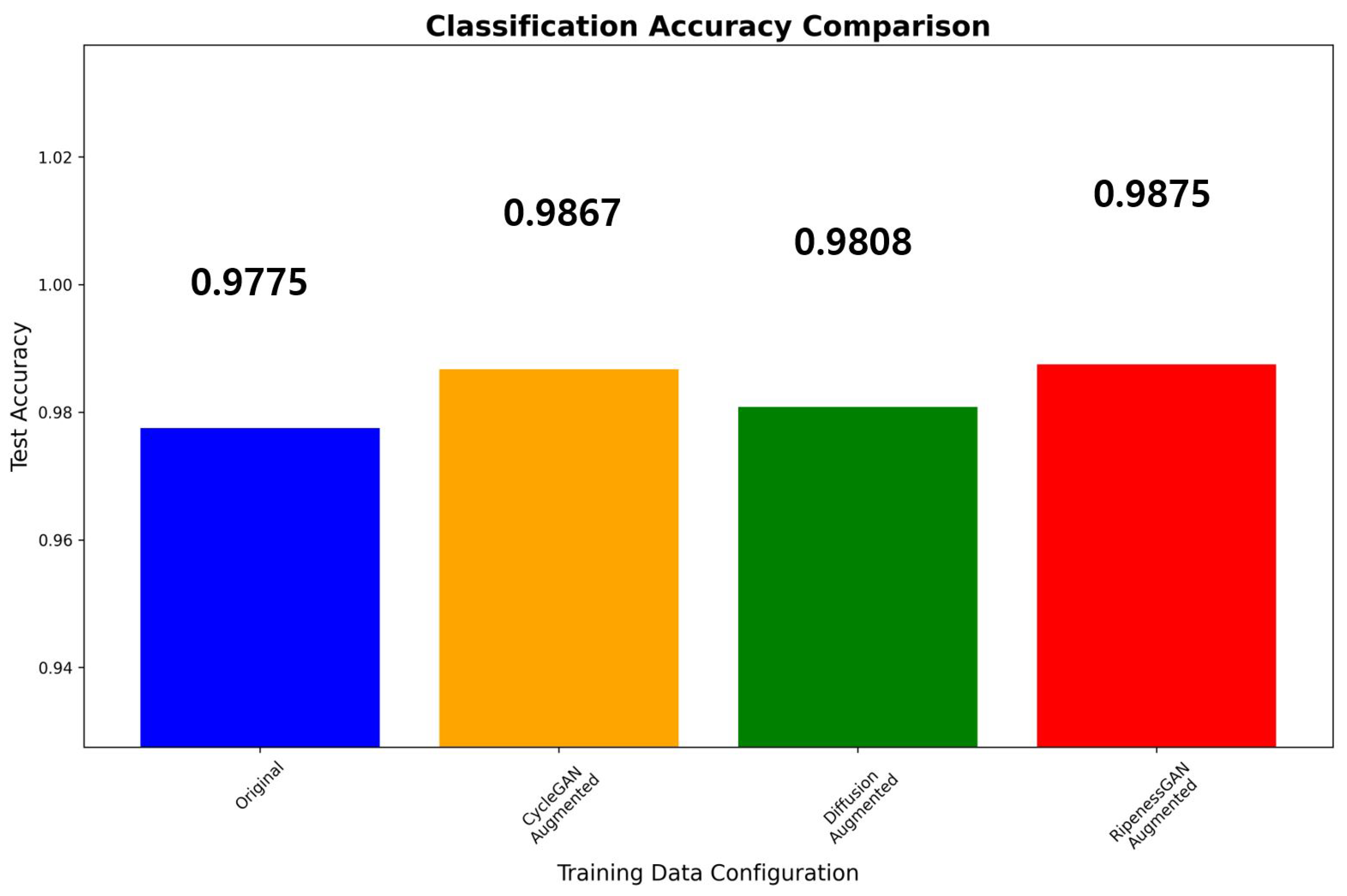

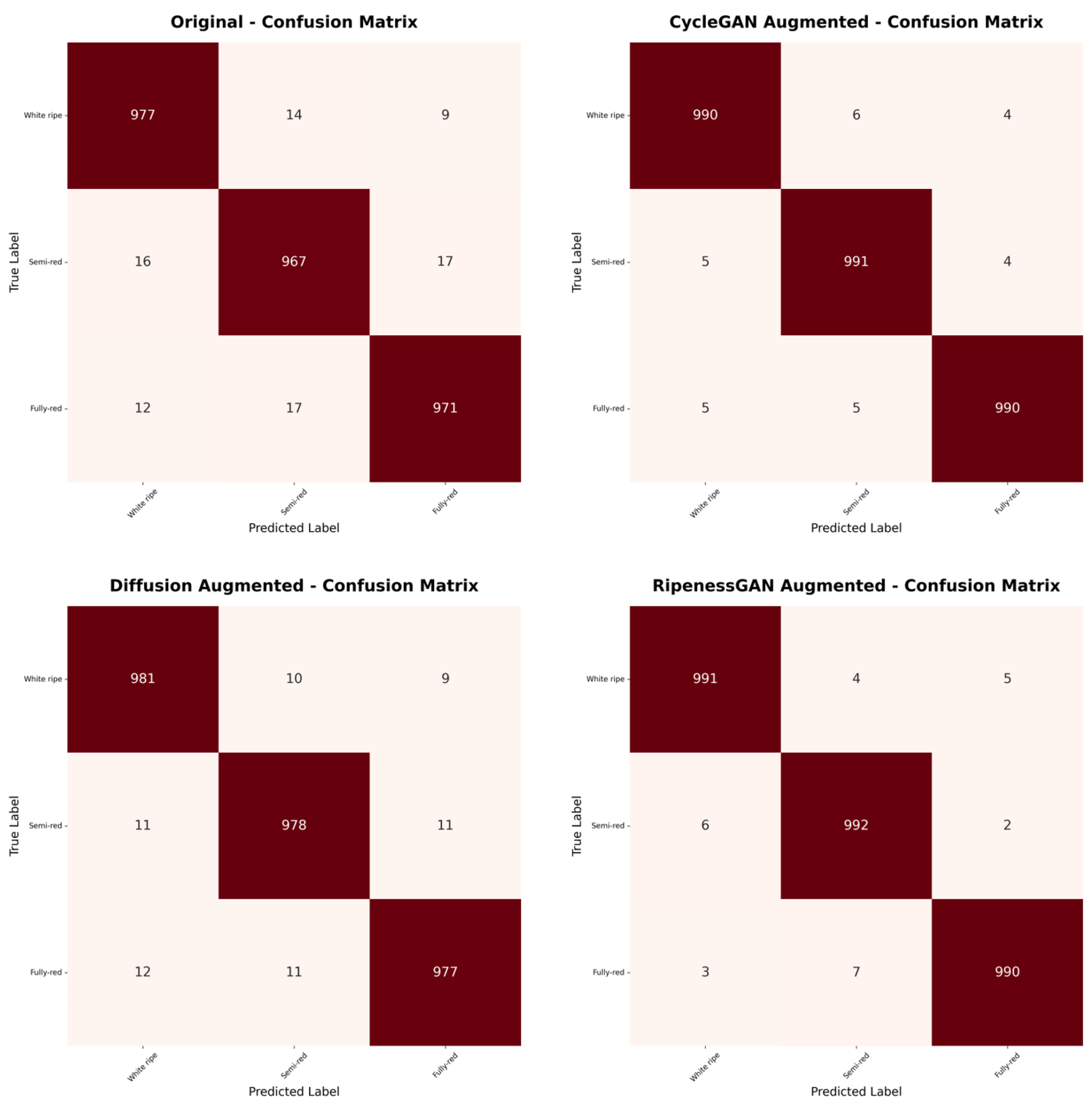

3.4. Ablation Study: Effect of Synthetic Data Augmentation

3.5. Stage-Wise Ripeness Transformation Experiments

4. Discussion

Limitations and Generalizability

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial nets. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 8–13 December 2014; pp. 2672–2680.

2. Ho, J.; Jain, A.; Abbeel, P. Denoising Diffusion Probabilistic Models. In Proceedings of the Advances in Neural Information Processing Systems, Online, 6–12 December 2020; Volume 33, pp. 6840–6851.

3. Nichol, A.Q.; Dhariwal, P. Improved Denoising Diffusion Probabilistic Models. In Proceedings of the International Conference on Machine Learning, Virtual, 18–24 July 2021; pp. 8162–8171.

4. Bird, J.J.; Barnes, C.M.; Manso, L.J.; Ekárt, A.; Faria, D.R. Fruit quality and defect image classification with conditional GAN data augmentation. Sci. Hortic. 2022, 293, 110684.

5. Cang, H.; Yan, T.; Duan, L.; Yan, J.; Zhang, Y.; Tan, F.; Lv, X.; Gao, P. Jujube quality grading using a generative adversarial network with an imbalanced data set. Biosyst. Eng. 2023, 236, 224–237.

6. Isola, P.; Zhu, J.Y.; Zhou, T.; Efros, A.A. Image-to-image translation with conditional adversarial networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1125–1134.

7. Zhu, J.Y.; Park, T.; Isola, P.; Efros, A.A. Unpaired image-to-image translation using cycle-consistent adversarial networks. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2223–2232.

8. Shorten, C.; Khoshgoftaar, T.M. A survey on image data augmentation for deep learning. J. Big Data 2019, 6, 60.

9. Adegbenjo, A.O.; Ngadi, M.O. Handling the imbalanced problem in agri-food data analysis. Foods 2024, 13, 3300.

10. Waldner, F.; Chen, Y.; Lawes, R.; Hochman, Z. Needle in a haystack: Mapping rare and infrequent crops using satellite imagery and data balancing methods. Remote Sens. Environ. 2019, 233, 111375.

11. Hu, X.; Chen, H.; Duan, Q.; Ahn, C.K.; Shang, H.; Zhang, D. A Comprehensive Review of Diffusion Models in Smart Agriculture: Progress, Applications, and Challenges. arXiv 2025, arXiv:2507.18376.

12. Liu, D.; Cao, Y.; Yang, J.; Wei, J.; Zhang, J.; Rao, C.; Wu, B.; Zhang, D. SM-CycleGAN: Crop image data enhancement method based on self-attention mechanism CycleGAN. Sci. Rep. 2024, 14, 9277.

13. Wang, L.; Wang, L.; Chen, S. ESA-CycleGAN: Edge feature and self-attention based cycle-consistent generative adversarial network for style transfer. IET Image Process. 2022, 16, 176–190.

14. Muhammad, A.; Salman, Z.; Lee, K.; Han, D. Harnessing the power of diffusion models for plant disease image augmentation. Front. Plant Sci. 2023, 14, 1280496.

15. Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

16. Liu, Y.; Nezami, F.R.; Edelman, E.R. A transformer-based pyramid network for coronary calcified plaque segmentation in intravascular optical coherence tomography images. Comput. Med. Imaging Graph. 2024, 113, 102347.

17. Zhang, J.; Huang, J.; Jin, S.; Lu, S. Vision-language models for vision tasks: A survey. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 46, 5625–5644.

18. Huang, M.; Gu, C.; Bai, Y. Effect of fertilization on methane and nitrous oxide emissions and global warming potential on agricultural land in China: A meta-analysis. Agriculture 2023, 14, 34.

19. Chawla, N.V.; Bowyer, K.W.; Hall, L.O.; Kegelmeyer, W.P. SMOTE: Synthetic minority over-sampling technique. J. Artif. Intell. Res. 2002, 16, 321–357.

20. Cui, Y.; Jia, M.; Lin, T.Y.; Song, Y.; Belongie, S. Class-balanced loss based on effective number of samples. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 9268–9277.

21. Shahhosseini, M.M. Optimized Ensemble Learning and Its Application in Agriculture. Ph.D. Thesis, Iowa State University, Ames, IA, USA, 2021.

22. Ban, Z.; Fang, C.; Liu, L.; Wu, Z.; Chen, C.; Zhu, Y. Detection of fundamental quality traits of winter jujube based on computer vision and deep learning. Agronomy 2023, 13, 2095.

23. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. arXiv 2017, arXiv:1706.03762.

24. Lucic, M.; Kurach, K.; Michalski, M.; Gelly, S.; Bousquet, O. Are GANs created equal? A large-scale study. arXiv 2018, arXiv:1711.10337.

25. Maryam, M.; Biagiola, M.; Stocco, A.; Riccio, V. Benchmarking Generative AI Models for Deep Learning Test Input Generation. In Proceedings of the 2025 IEEE Conference on Software Testing, Verification and Validation (ICST), Napoli, Italy, 31 March–4 April 2025; pp. 174–185.

26. Morande, S. Benchmarking Generative AI: A Comparative Evaluation and Practical Guidelines for Responsible Integration into Academic Research. 2023. Available online: https://papers.ssrn.com/sol3/papers.cfm?abstract_id=4571867 (accessed on 14 October 2025).

27. Rosenkranz, M.; Kalina, K.A.; Brummund, J.; Kästner, M. A comparative study on different neural network architectures to model inelasticity. Int. J. Numer. Methods Eng. 2023, 124, 4802–4840.

28. Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional networks for biomedical image segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Munich, Germany, 5–9 October 2015; Springer: Berlin/Heidelberg, Germany, 2015; pp. 234–241.

29. Gao, Z.; Sun, Y. Statistical Inference for Generative Model Comparison. arXiv 2025, arXiv:2501.18897.

30. Lesort, T.; Stoian, A.; Goudou, J.F.; Filliat, D. Training discriminative models to evaluate generative ones. In Proceedings of the International Conference on Artificial Neural Networks, Munich, Germany, 17–19 September 2019; Springer: Berlin/Heidelberg, Germany, 2019; pp. 604–619.

31. Joshi, A.; Guevara, D.; Earles, M. Standardizing and centralizing datasets for efficient training of agricultural deep learning models. Plant Phenomics 2023, 5, 0084.

32. Heusel, M.; Ramsauer, H.; Unterthiner, T.; Nessler, B.; Hochreiter, S. GANs trained by a two time-scale update rule converge to a local Nash equilibrium. arXiv 2017, arXiv:1706.08500.

33. Hore, A.; Ziou, D. Image quality metrics: PSNR vs. SSIM. In Proceedings of the 2010 20th International Conference on Pattern Recognition, Istanbul, Turkey, 23–26 August 2010; pp. 2366–2369.

34. Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

35. Zhang, R.; Isola, P.; Efros, A.A.; Shechtman, E.; Wang, O. The unreasonable effectiveness of deep features as a perceptual metric. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 586–595.

36. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

37. Davis, J.; Goadrich, M. The relationship between Precision-Recall and ROC curves. In Proceedings of the 23rd International Conference on Machine Learning, Pittsburgh, PA, USA, 25–29 June 2006; pp. 233–240.

38. Buttcher, S.; Clarke, C.L.; Cormack, G.V. Information Retrieval: Implementing and Evaluating Search Engines; MIT Press: Cambridge, MA, USA, 2016.

39. Stehman, S.V. Selecting and interpreting measures of thematic classification accuracy. Remote Sens. Environ. 1997, 62, 77–89.

40. Yoo, T.K.; Choi, J.Y.; Kim, H.K. CycleGAN-based deep learning technique for artifact reduction in fundus photography. Graefe’s Arch. Clin. Exp. Ophthalmol. 2020, 258, 1631–1637.

41. Karras, T.; Laine, S.; Aila, T. A style-based generator architecture for generative adversarial networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 4401–4410.

42. Jazbec, M.; Wong-Toi, E.; Xia, G.; Zhang, D.; Nalisnick, E.; Mandt, S. Generative Uncertainty in Diffusion Models. arXiv 2025, arXiv:2502.20946.

| Model | Class | FID (↓) | PSNR (dB, ↑) | SSIM (↑) | LPIPS (↓) |
|---|---|---|---|---|---|
| CycleGAN | White ripe | 125.3880 | 15.0891 | 0.1906 | 0.4015 |
| | Semi-red | 139.2082 | 15.5606 | 0.1715 | 0.4293 |
| | Fully red | 92.2703 | 16.7293 | 0.2924 | 0.3997 |
| | Average | 118.9555 | 15.7930 | 0.2182 | 0.4102 |
| DDPM (Diffusion) | White ripe | 60.9517 | 15.6192 | 0.4692 | 0.4210 |
| | Semi-red | 58.0850 | 16.0710 | 0.4636 | 0.4423 |
| | Fully red | 100.6835 | 17.1673 | 0.4765 | 0.4756 |
| | Average | 73.2400 | 16.2858 | 0.4698 | 0.4463 |
| RipenessGAN (Proposed method) | White ripe | 22.9409 | 15.6279 | 0.4413 | 0.3538 |
| | Semi-red | 58.2432 | 15.8139 | 0.3576 | 0.3543 |
| | Fully red | 84.4496 | 17.0849 | 0.4780 | 0.3897 |
| | Average | 55.2112 | 16.1756 | 0.4256 | 0.3659 |
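PSNR, one of the metrics reported in the table above, follows directly from the mean squared error between a reference and a generated image. The sketch below uses the standard definition on flattened pixel lists; the 8-bit peak value of 255 is an assumption, since the pixel range used in the evaluation is not stated here, and `psnr` is an illustrative helper rather than the authors' evaluation code.

```python
import math
from typing import Sequence


def psnr(reference: Sequence[float], generated: Sequence[float],
         max_value: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB: 10 * log10(max_value^2 / MSE)."""
    if len(reference) != len(generated) or not reference:
        raise ValueError("images must be non-empty and the same size")
    mse = sum((r - g) ** 2 for r, g in zip(reference, generated)) / len(reference)
    if mse == 0.0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


# A uniform error of 25.5 gray levels (10% of the assumed 8-bit range).
reference = [0.0, 0.0, 0.0, 0.0]
generated = [25.5, 25.5, 25.5, 25.5]
value = psnr(reference, generated)
print(f"{value:.2f} dB")
```

Under the same 8-bit assumption, a PSNR of about 16 dB corresponds to a root-mean-square pixel error of roughly 40 gray levels, which gives a sense of scale for the values in the table.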

| Model | Class | Precision | Recall | F1 | Accuracy (%) |
|---|---|---|---|---|---|
| Original | White ripe | 0.9689 | 0.9777 | 0.9732 | 97.75 |
| | Semi-red | 0.9621 | 0.9670 | 0.9646 | |
| | Fully red | 0.9723 | 0.9710 | 0.9716 | |
| Original + CycleGAN | White ripe | 0.9900 | 0.9900 | 0.9900 | 98.67 |
| | Semi-red | 0.9900 | 0.9910 | 0.9905 | |
| | Fully red | 0.9920 | 0.9900 | 0.9910 | |
| Original + DDPM (Diffusion) | White ripe | 0.9761 | 0.9810 | 0.9785 | 98.08 |
| | Semi-red | 0.9777 | 0.9780 | 0.9778 | |
| | Fully red | 0.9784 | 0.9770 | 0.9777 | |
| Original + RipenessGAN (Proposed method) | White ripe | 0.9901 | 0.9910 | 0.9905 | 98.75 |
| | Semi-red | 0.9871 | 0.9920 | 0.9896 | |
| | Fully red | 0.9949 | 0.9900 | 0.9924 | |
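The F1 column in the table above is the harmonic mean of precision and recall, so it can be recomputed from the other two columns. The sketch below does this for three rows copied from the table; `f1_score` and the `rows` dictionary are illustrative helpers, and small differences in the fourth decimal are expected because the published precision and recall are themselves rounded.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall."""
    if precision + recall == 0.0:
        return 0.0
    return 2.0 * precision * recall / (precision + recall)


# (precision, recall, reported F1) copied from the table above.
rows = {
    "Original / White ripe": (0.9689, 0.9777, 0.9732),
    "Original + CycleGAN / Semi-red": (0.9900, 0.9910, 0.9905),
    "Original + RipenessGAN / Fully red": (0.9949, 0.9900, 0.9924),
}

for name, (p, r, reported) in rows.items():
    recomputed = f1_score(p, r)
    print(f"{name}: recomputed {recomputed:.4f}, reported {reported:.4f}")
```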

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Kang, J.-S.; Yoon, J.; Park, B.-J.; Kim, J.; Jee, S.C.; Song, H.-Y.; Chung, H.-J. RipenessGAN: Growth Day Embedding-Enhanced GAN for Stage-Wise Jujube Ripeness Data Generation. Agronomy 2025, 15, 2409. https://doi.org/10.3390/agronomy15102409
