1. Introduction

China is a major producer of broccoli, accounting for over 30% of the global cultivation area [1]. However, pests and diseases directly affect its yield and quality [2]. At present, chemical pesticide spraying remains the most effective method of controlling pests and diseases [3]. Traditional uniform application of pesticides [4] leads to pesticide waste: data show that only about 30% of the pesticide sprayed in the field adheres to broccoli plants, while the remaining 70% is deposited in the soil or dispersed into the atmosphere [5], causing soil and groundwater pollution. Precision-targeted pesticide spraying can reduce pesticide usage, improve utilization efficiency [6], and minimize environmental pollution, making it an increasingly important technology.

The prerequisite for precise pesticide application on broccoli is accurate identification of the target, that is, obtaining precise information such as the target's species and location [7]. In practice, however, complex backgrounds can impair the model's feature extraction [8]. For instance, some weeds resemble broccoli in shape, color, and texture, which can prevent the model from extracting key features and lead to misclassification [9]. Furthermore, edge devices offer far less computational power than workstations, and these constrained computing resources frequently limit practical field operations [10,11], resulting in slow processing and reduced accuracy during real-time recognition.

To date, numerous researchers have studied lightweight detection models. Yuan et al. [12] proposed a lightweight object detection model named CES-YOLO, which incorporates three key components: a C3K2-Ghost module, an EMA attention mechanism, and a SEAM detection head. This architecture significantly reduces computational complexity while maintaining high detection accuracy. On a blueberry ripeness detection task, the model achieved an mAP of 91.22% with only 2.1 million parameters, and it was deployed on edge devices for real-time detection, offering an efficient and practical solution for automated fruit maturity identification with strong potential for smart agriculture. Qiu et al. [13] addressed potato seed tuber detection with the DCS-YOLOv5s model, which replaces standard CBS convolution with DP_Conv to reduce the number of parameters and introduces Ghost convolution into the C3 module to reduce redundant features. DCS-YOLOv5s achieved an mAP of 97.1%, a frame rate of 65 FPS, and a computational cost of only 10.7 GFLOPs, 33.1% less than YOLOv5s. Tang et al. [14], targeting automated tea recognition and grading, introduced MobileNetV3 as the backbone of YOLOv5n. They achieved an mAP of 89.56% for four tea categories and 93.17% for three tea categories, with a model size of only 4.98 MB, 2 MB smaller than the original model. Chen et al. [15] proposed a lightweight detection model based on YOLOv5s that uses ODConv (Omni-Dimensional Dynamic Convolution) for feature extraction. Compared with the original model, its mAP increased by 7.21% to 98.58%, while parameters and FLOPs decreased by 70.8% and 28.3%, respectively, providing an effective solution for recognizing densely packed, tightly adhering rice grains.

The aforementioned studies confirm the feasibility of lightweight techniques in agricultural object detection. However, existing research often focuses on static optimization for a single scenario or a specific background, which leaves the resulting models less robust in complex environments. Consequently, many researchers have investigated object detection under complex background conditions. Li et al. [16] proposed a crop pest recognition method based on convolutional neural networks (CNNs) for diverse natural scenes: by fine-tuning a GoogLeNet model on a manually verified dataset, they classified 10 common pest types with an accuracy of 98.91%, providing an efficient solution to the challenges that complex natural environments pose for crop pest and disease monitoring. Zhang et al. [17] proposed an enhanced detection method based on the YOLOX model that incorporates an Efficient Channel Attention (ECA) mechanism to improve focus on diseased regions, employs Focal Loss to mitigate sample imbalance, and uses the hard-Swish activation function to increase detection speed. On a cotton pest and disease image dataset with complex backgrounds, the model achieved an mAP of 94.60% and a real-time speed of 74.21 FPS, significantly outperforming mainstream algorithms, and it was deployed on mobile devices, demonstrating practical applicability in real-world scenarios. Ulukaya and Deari [18] proposed a Vision Transformer-based approach for rice disease recognition in complex field environments. By integrating transfer learning, categorical focal loss, and data augmentation, the model effectively addressed class imbalance and background noise, achieving 88.57% accuracy in classifying five disease types and outperforming conventional CNNs. Peng et al. [19] proposed the RiceDRA-Net model for rice leaf disease detection, integrating a Res-Attention module to reduce information loss. Its recognition accuracy reached 99.71% on simple backgrounds and 97.86% on complex backgrounds, outperforming other mainstream models and demonstrating stable, accurate performance in complex scenarios.

In practical field operations, intertwined factors such as dynamic crop changes, soil morphology, and weeds make it harder for lightweight designs to maintain both high-speed inference and high accuracy, and feature interference from complex backgrounds further exacerbates the trade-off between robustness and accuracy. Therefore, meeting the lightweight requirements of high speed and high accuracy in field broccoli recognition, while enhancing model robustness and accuracy in complex backgrounds, remains a significant challenge.

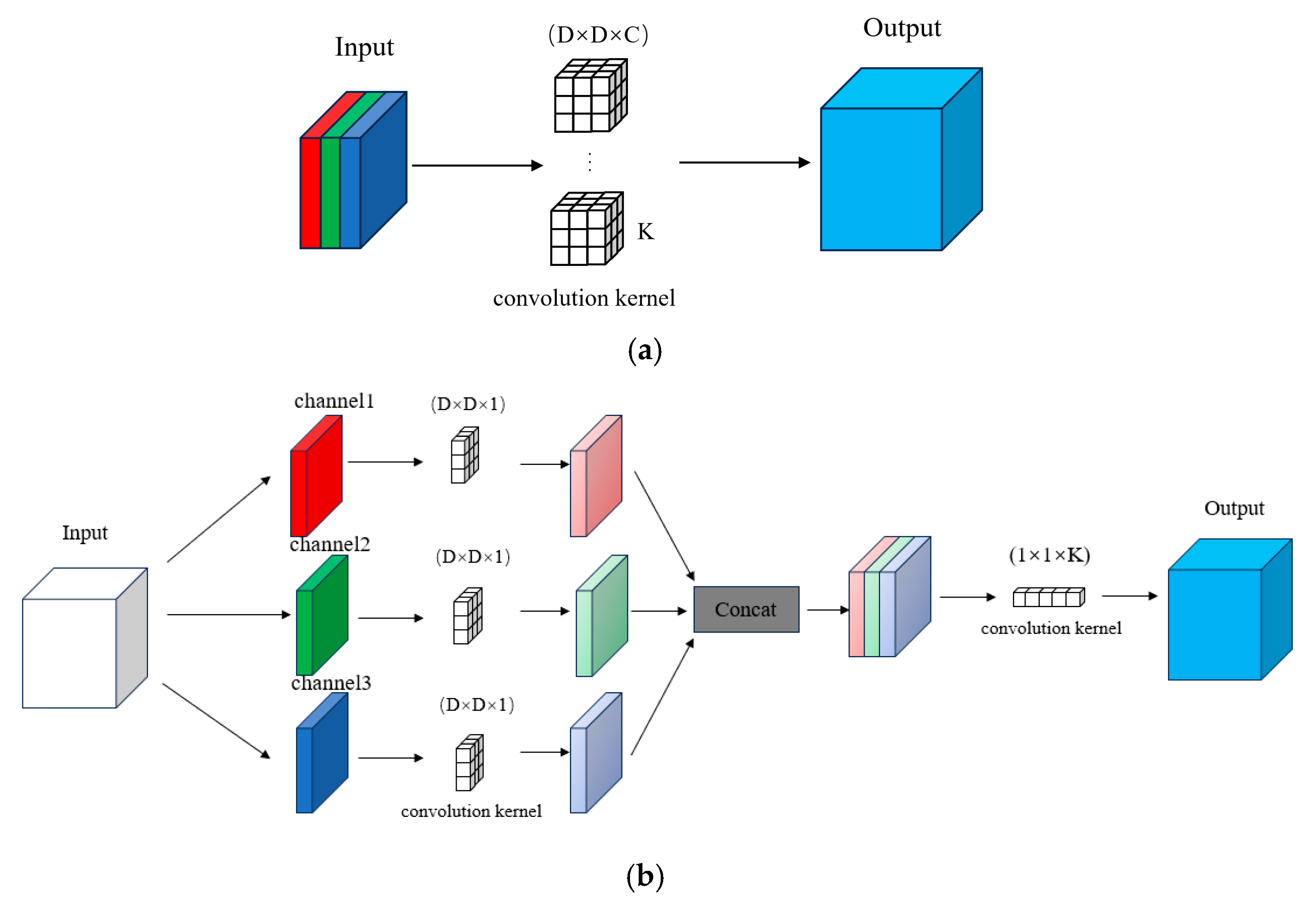

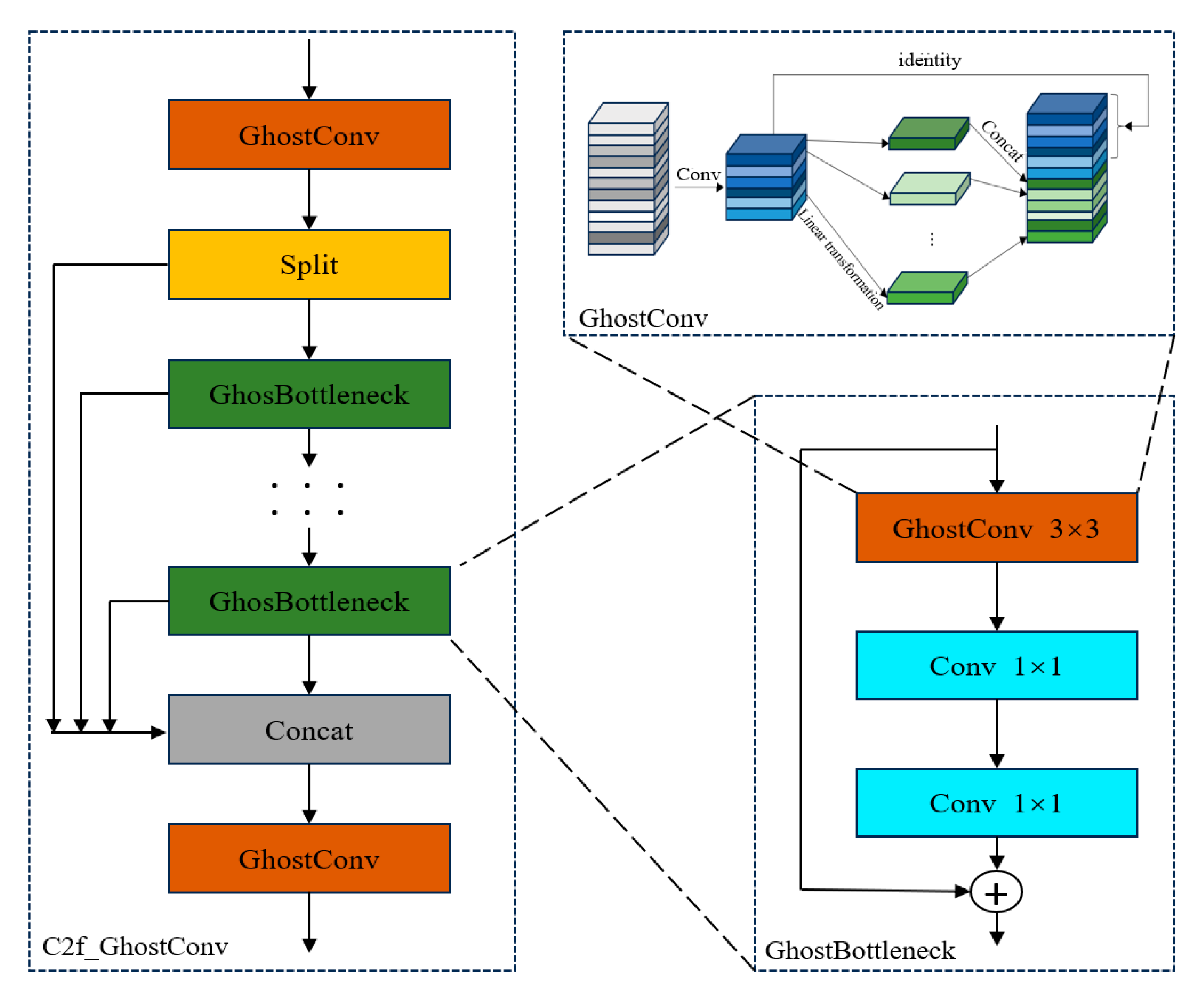

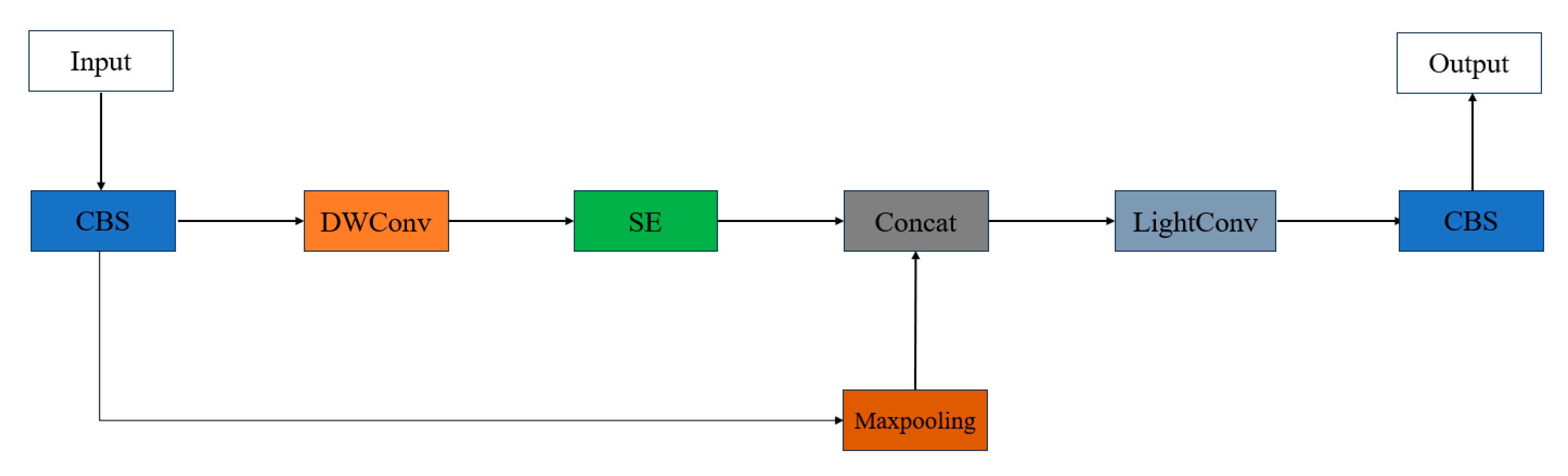

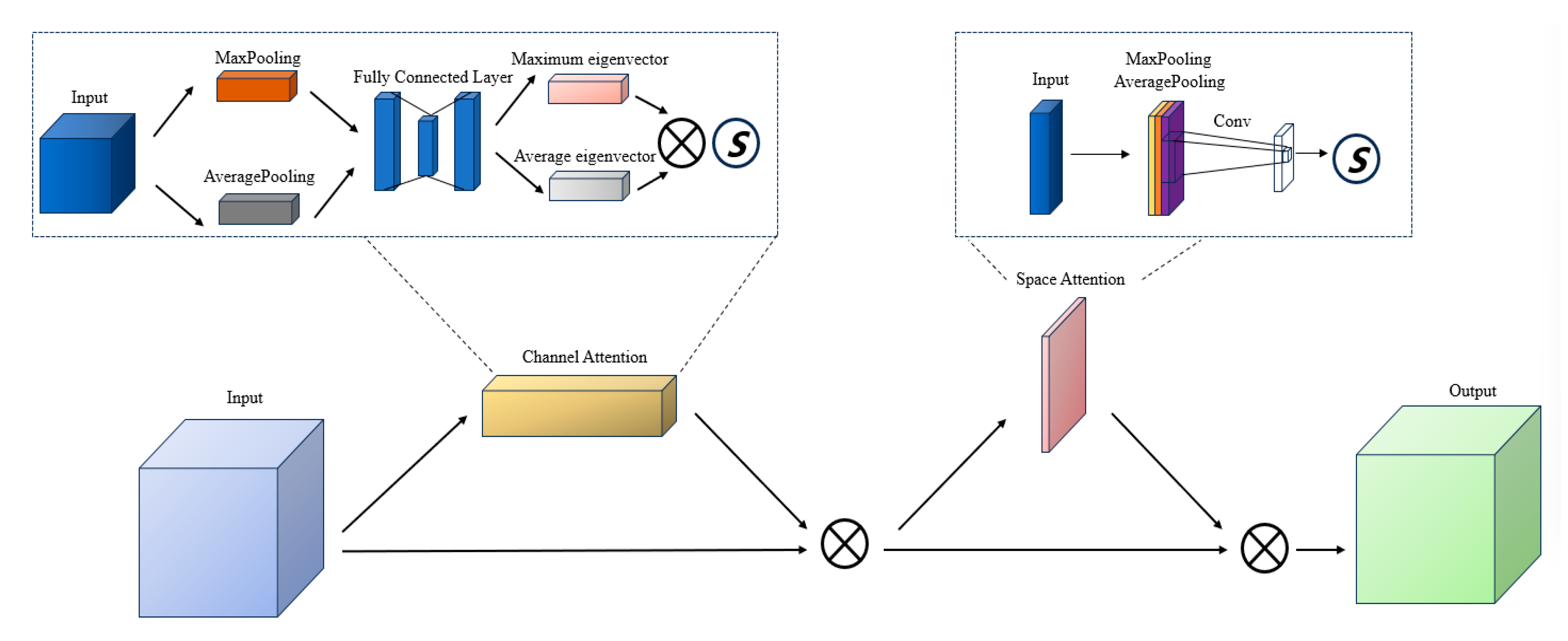

To address these problems, this research proposes an improved model based on YOLOv8s for more effective broccoli detection. First, the standard Conv module is replaced with the DWConv [20] module, and GhostConv [21] is introduced into the C2f module to form a C2f_GhostConv module, which reduces computational cost and the number of parameters while improving detection speed. Second, a CDSL module is designed to enhance the model's ability to extract features from the original images, and the CBAM [22] attention module is introduced to focus the model's feature extraction on the target, suppress feature learning in non-target regions, and improve feature extraction in both the channel and spatial dimensions. Finally, the WIoU loss function [23] is adopted to accelerate convergence and reduce training loss.
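The cost savings expected from DWConv and GhostConv follow from simple weight counting. The sketch below is a generic, hypothetical illustration rather than the DWG-YOLOv8 configuration: it assumes a stride-1 3×3 layer with 128 input and output channels on an 80×80 feature map, ignores bias and batch-norm terms, treats DWConv as a depthwise k×k convolution followed by a 1×1 pointwise convolution, and models GhostConv with ratio s = 2 and a 3×3 cheap depthwise operation as in the GhostNet formulation. All function names are illustrative.

```python
# Back-of-the-envelope weight counts for one k x k, stride-1 conv layer.
# Illustrative assumptions: bias/BN ignored; GhostConv ratio s and cheap-op kernel d
# follow the GhostNet formulation, not necessarily the DWG-YOLOv8 settings.


def standard_conv_params(c_in: int, c_out: int, k: int) -> int:
    """Dense conv: each of the c_out filters spans all c_in channels."""
    return k * k * c_in * c_out


def dw_separable_params(c_in: int, c_out: int, k: int) -> int:
    """Depthwise k x k (one filter per input channel) + 1 x 1 pointwise projection."""
    return k * k * c_in + c_in * c_out


def ghost_conv_params(c_in: int, c_out: int, k: int, s: int = 2, d: int = 3) -> int:
    """GhostConv: a dense conv makes c_out/s intrinsic maps; cheap d x d depthwise
    operations derive the remaining (s - 1) * c_out/s 'ghost' maps from them."""
    intrinsic = c_out // s
    return k * k * c_in * intrinsic + d * d * intrinsic * (s - 1)


def macs(params: int, h: int, w: int) -> int:
    """For stride 1 with 'same' padding, every weight is applied once per output pixel."""
    return params * h * w


if __name__ == "__main__":
    c_in = c_out = 128
    k, h, w = 3, 80, 80  # assumed: an 80 x 80 feature map at 640 x 640 input
    base = standard_conv_params(c_in, c_out, k)
    for name, p in [
        ("standard", base),
        ("dw-separable", dw_separable_params(c_in, c_out, k)),
        ("ghost (s=2)", ghost_conv_params(c_in, c_out, k)),
    ]:
        print(f"{name:>13}: {p:>7} params, {macs(p, h, w) / 1e6:7.1f} M MACs, {p / base:.2f}x")
```

Under these assumptions the depthwise-separable layer needs about 0.12× and the Ghost layer about 0.50× the weights (and MACs) of the dense layer. In a full network the overall saving is smaller, because only some layers are replaced.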

3. Results

3.1. Ablation Study Results

To further evaluate the performance gains of the individual improvements and verify their feasibility, an ablation study was conducted using YOLOv8s as the baseline model. The aforementioned metrics were used as evaluation criteria, and the results are shown in Table 3.

As shown in the table, replacing standard convolution with Depthwise Separable Convolution and modifying the C2f module by incorporating GhostConv reduced the model’s FLOPs and size, making the model more lightweight and improving inference speed. Using the CDSL module for initial feature extraction and adding the CBAM attention mechanism in the neck improved model accuracy but also increased model complexity and inference time. Replacing the CIoU loss function with WIoU improved the model’s recall, mAP, and processing speed, among other metrics.

Analyzing the table data, compared with the original YOLOv8s (Experiment 1), lightweighting the backbone with DWConv and the improved C2f_GhostConv module (Experiment 2) reduced FLOPs and model size by 46.4% and 54.7%, respectively, and reduced image processing time by 1.9 ms. However, precision and recall decreased slightly. A likely reason is that the C2f_GhostConv module uses fewer convolution kernels and concentrates on extracting features from the primary channels, potentially losing some information carried by the omitted channels and causing a slight overall drop in accuracy. Replacing the initial Conv with the CDSL module for feature extraction and introducing CBAM in the neck (Experiment 3 vs. Experiment 1) increased FLOPs by 7.4% but improved recall and mAP0.5 by 0.9% and 1.0%, respectively, while precision remained essentially unchanged. Changing the loss function to WIoU (Experiment 4 vs. Experiment 1) increased mAP0.5 by 0.6% and recall by 0.7%. The performance of the final improved model is shown in Experiment 8. Compared with the baseline network (Experiment 1), its precision increased by 1.7 percentage points, recall by 0.9 percentage points, and mAP0.5 by 3.4 percentage points, while FLOPs decreased by 38.8%, model size by 35.6%, and inference time by 2.2 ms. Compared with YOLOv8s, DWG-YOLOv8 therefore improves recognition accuracy and processing speed for field broccoli while reducing computational cost and model size.

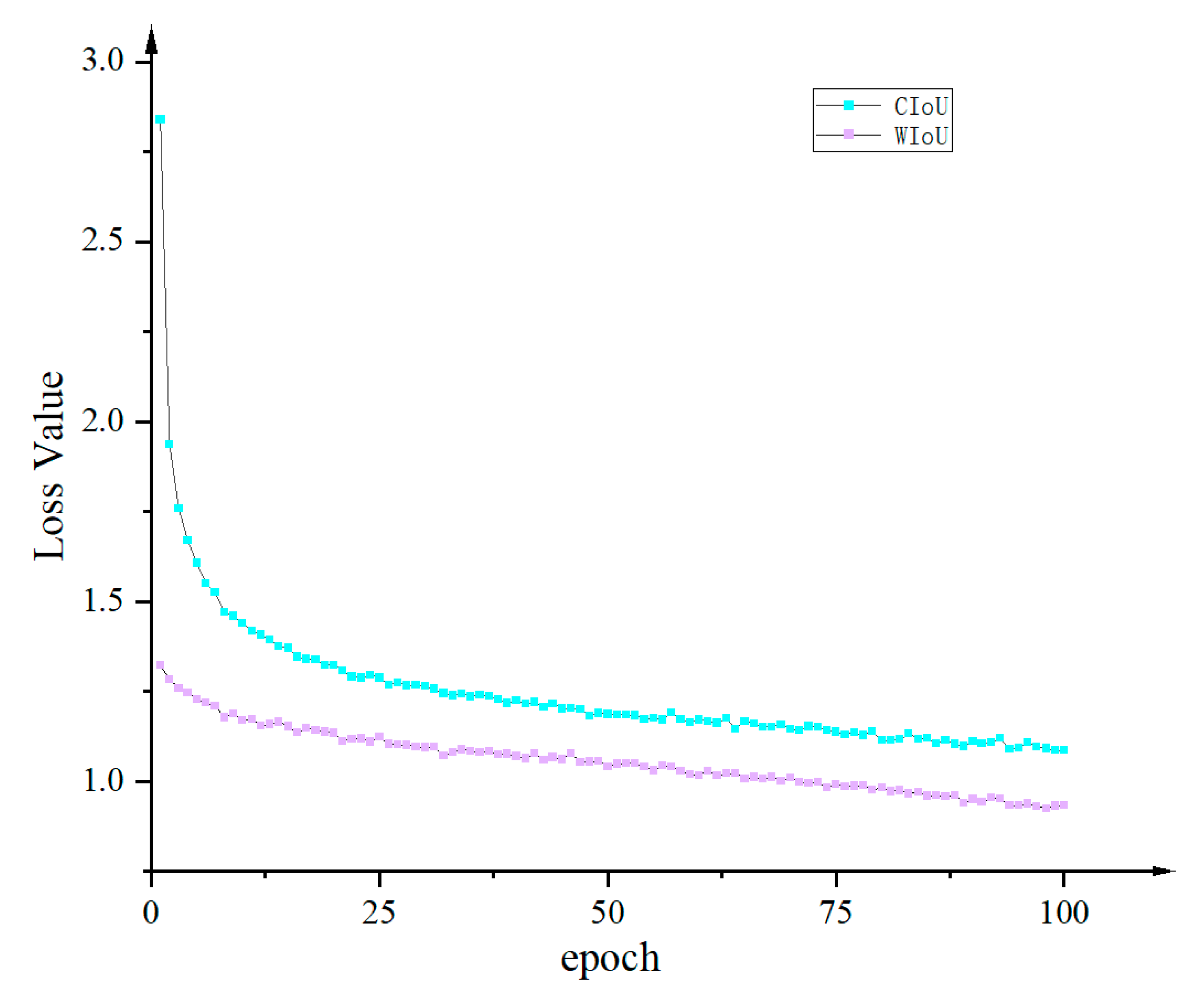

Figure 9 compares the loss curves of the models from Experiment 1 (CIoU) and Experiment 4 (WIoU) over 100 epochs. Replacing the CIoU loss function with WIoU accelerated convergence and lowered the loss. With CIoU, the loss dropped from 2.84 to 1.13 by the 75th epoch, after which it decreased more slowly, reaching about 1.08 at convergence. In contrast, with WIoU the loss converged at around the 50th epoch, having dropped from 1.32 to 1.03, and finally stabilized at about 0.8.
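For reference, the WIoU family builds on a distance-based attention term. The plain-Python sketch below implements WIoU v1 for a single box pair, assuming the formulation of the original WIoU paper: L = R·(1 − IoU), with R = exp(ρ²/(W_g² + H_g²)), where ρ is the distance between the box centres and W_g, H_g are the width and height of the smallest enclosing box. Which WIoU version (v1, v2, or v3 with its dynamic focusing coefficient) DWG-YOLOv8 uses is not stated in this section, so this is an illustration only; the function names are hypothetical.

```python
import math
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2), with x2 > x1 and y2 > y1


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def wiou_v1(pred: Box, gt: Box) -> float:
    """WIoU v1 = R_WIoU * (1 - IoU); forward value only (no gradient handling)."""
    l_iou = 1.0 - iou(pred, gt)
    # Offset between box centres.
    dx = (pred[0] + pred[2] - gt[0] - gt[2]) / 2
    dy = (pred[1] + pred[3] - gt[1] - gt[3]) / 2
    # Width and height of the smallest box enclosing both boxes.
    wg = max(pred[2], gt[2]) - min(pred[0], gt[0])
    hg = max(pred[3], gt[3]) - min(pred[1], gt[1])
    # In training code this denominator is detached from the gradient graph.
    r_wiou = math.exp((dx * dx + dy * dy) / (wg * wg + hg * hg))
    return r_wiou * l_iou
```

For example, `wiou_v1((0, 0, 2, 2), (1, 1, 3, 3))` equals (6/7)·e^(1/9), about 0.958. Unlike CIoU, there is no aspect-ratio penalty; the factor R lies in [1, e) and amplifies the IoU loss of predictions whose centres are far from the target.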

3.2. Effect of Lightweight Methods

This paper employs DWConv and the C2f_GhostConv module for lightweight model design. According to the experimental results in Table 3, from Experiment 1 to Experiment 2 the model's mAP remained unchanged, while FLOPs decreased from 28.4 G to 15.2 G, model size from 22.5 MB to 10.2 MB, and image processing time from 10.8 ms to 8.9 ms. From Experiment 3 to Experiment 5, mAP0.5 increased from 87.9% to 88.4%, FLOPs decreased from 30.5 G to 17.3 G, and model size from 23.3 MB to 14.5 MB. From Experiment 7 to Experiment 8, recall increased from 87.8% to 88.5% and mAP0.5 from 89.6% to 90.3%, while FLOPs decreased from 30.5 G to 17.4 G, weight size from 23.3 MB to 14.5 MB, and single-image inference time from 13.1 ms to 8.6 ms. These results indicate that using DWConv and C2f_GhostConv for model lightweighting is feasible: it reduces computational cost and model size and accelerates inference, and in combination with the other improvements it also improves detection accuracy.

3.3. Effect of CDSL Feature Extraction Module

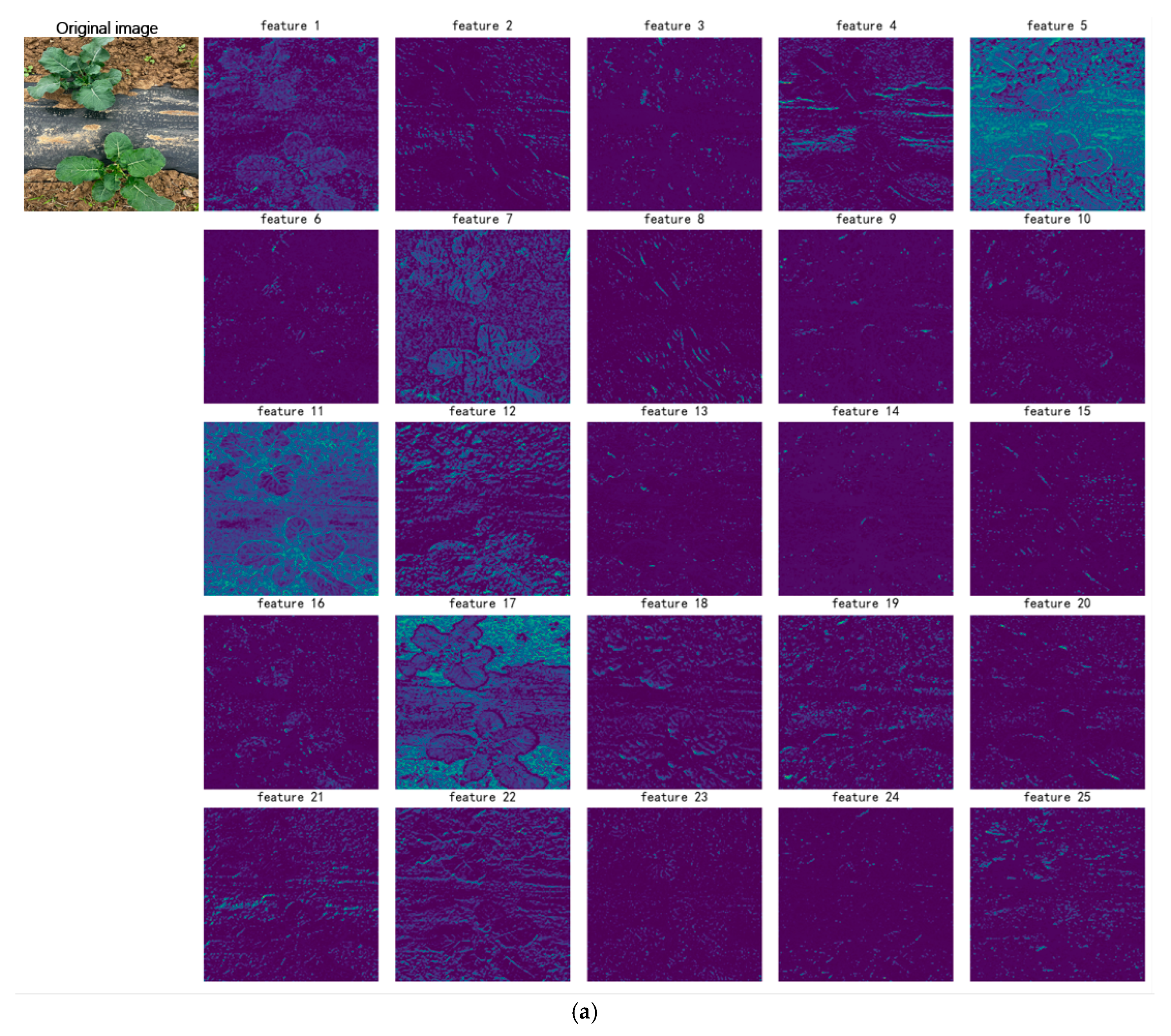

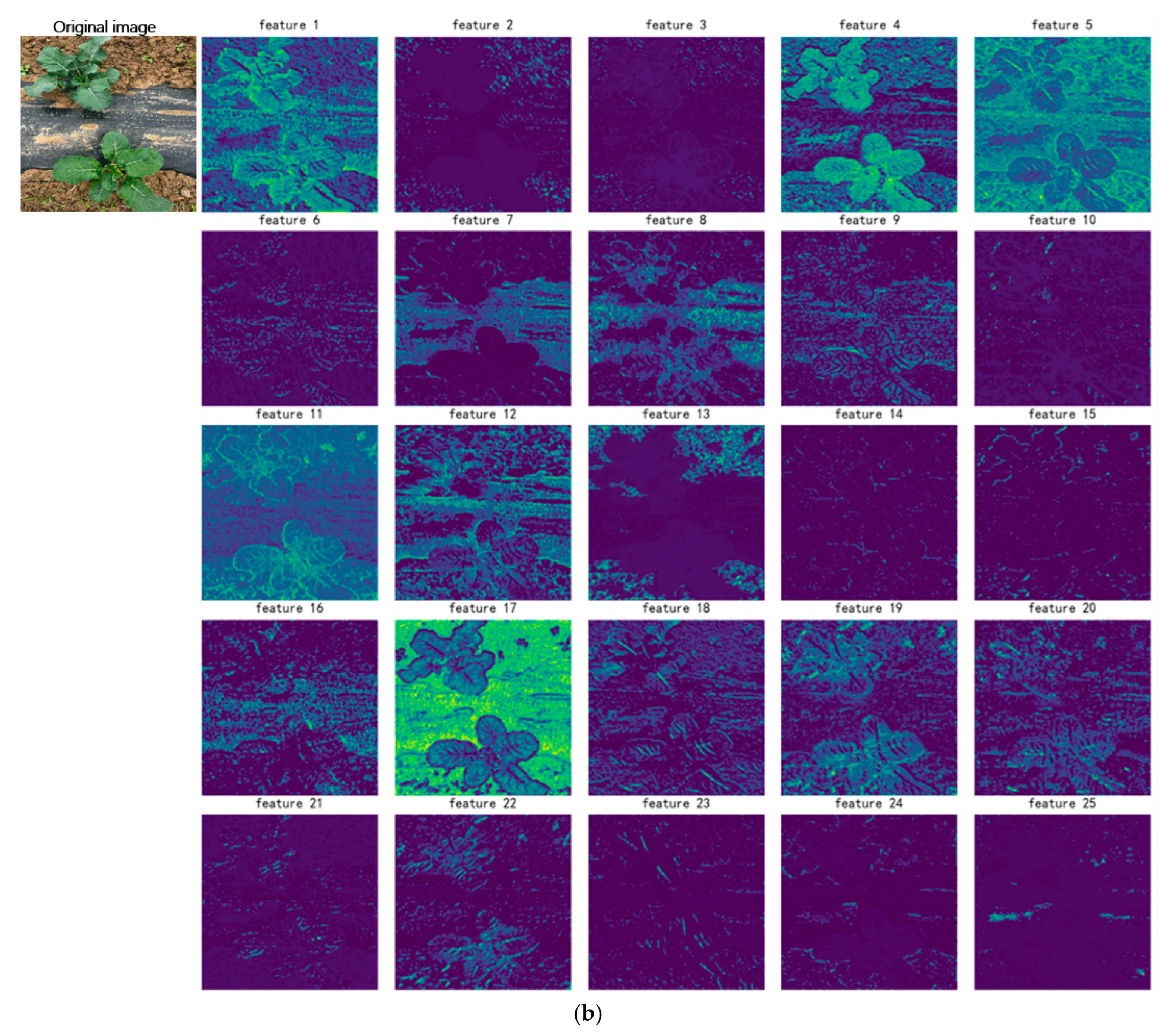

This paper designs the CDSL module to replace the initial CBS module for extracting features from the original image. To demonstrate that this module enhances feature extraction, feature maps from the model's intermediate layers were visualized [27]. Feature maps are the outputs of convolution kernels applied to the input image and reflect the features learned by a specific layer, such as edges, textures, and colors. Yellow areas in the feature maps indicate high activation values, meaning the model detected features relevant to that layer's task in the region; blue areas indicate low activation values, that is, features of lower relevance to the layer's task. Feature maps from standard convolution and from the CDSL module are compared in Figure 10.

Compared with Figure 10a, the bright areas in many feature maps in Figure 10b are more pronounced and their details clearer. For example, in feature maps 1, 4, 5, and 11, the target contours and regions are more distinct, and CDSL shows clearer contours and higher activation intensity in edge extraction. In feature maps 8, 9, and 19, the CDSL feature maps contain more target details, including vein information and leaf texture. This indicates that, at the same depth, the CDSL module captures finer and richer feature information, which is beneficial for detection accuracy.
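Intermediate feature maps such as those in Figure 10 are commonly captured with a PyTorch forward hook. The sketch below assumes the model is an ordinary `torch.nn.Module` and that the caller passes in the layer of interest (for example the first CBS or CDSL layer); the helper names are hypothetical, and the viridis colormap is assumed because it renders high activations yellow and low ones blue, matching the description above.

```python
import torch
import torch.nn as nn


def capture_feature_maps(model: nn.Module, layer: nn.Module, image: torch.Tensor) -> torch.Tensor:
    """Run one forward pass and return the output of `layer` (shape N x C x H x W)."""
    captured = {}

    def hook(_module, _inputs, output):
        captured["fmap"] = output.detach()

    handle = layer.register_forward_hook(hook)
    try:
        with torch.no_grad():
            model(image)
    finally:
        handle.remove()  # always detach the hook so later passes are unaffected
    return captured["fmap"]


def normalise_channel(fmap: torch.Tensor, channel: int) -> torch.Tensor:
    """Min-max scale one channel of the first image to [0, 1] for display."""
    ch = fmap[0, channel]
    return (ch - ch.min()) / (ch.max() - ch.min() + 1e-8)


def save_channel_grid(fmap: torch.Tensor, channels, path: str, cols: int = 4) -> None:
    """Plot selected channels with the viridis colormap (yellow = high, blue = low)."""
    import matplotlib.pyplot as plt  # imported lazily; only needed for plotting

    rows = (len(channels) + cols - 1) // cols
    fig, axes = plt.subplots(rows, cols, figsize=(2 * cols, 2 * rows), squeeze=False)
    for ax in axes.flat:
        ax.axis("off")
    for ax, c in zip(axes.flat, channels):
        ax.imshow(normalise_channel(fmap, c).cpu().numpy(), cmap="viridis")
        ax.set_title(str(c))
    fig.savefig(path, bbox_inches="tight")
    plt.close(fig)
```

Calling `capture_feature_maps` once with a standard-convolution model and once with a CDSL model, on the same image, yields the kind of side-by-side comparison shown in Figure 10.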

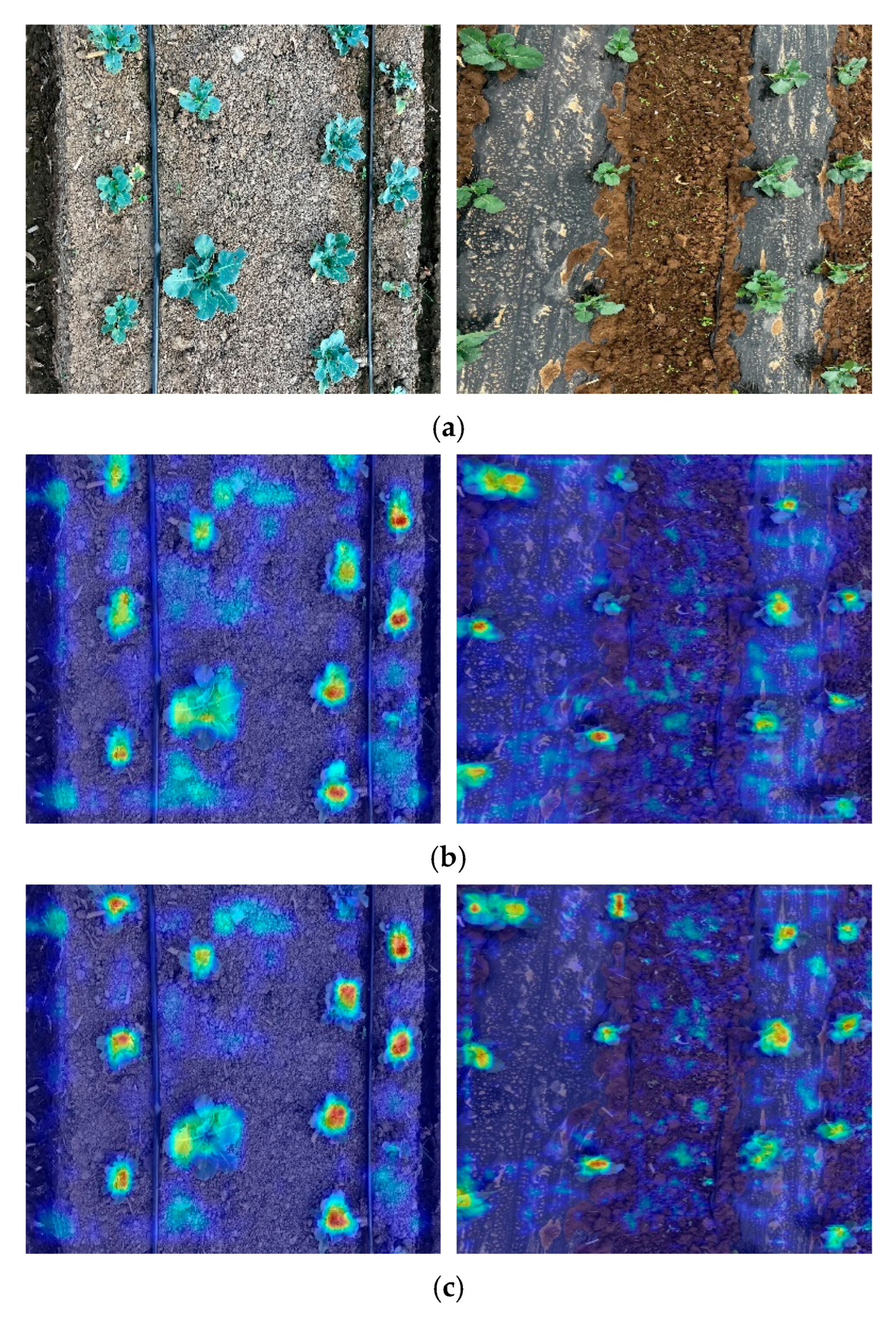

3.4. Effect of CBAM Attention Mechanism

To observe more intuitively how the CBAM attention mechanism improves recognition, heatmaps were plotted. Heatmaps represent how strongly the model attends to different locations during prediction, visually reflecting its regions of interest in the image [28]. Red areas are highly important to the model's prediction, whereas blue areas are less important. Heatmaps before and after adding the CBAM attention mechanism are shown in Figure 11.

Compared with Figure 11b, the broccoli target area in Figure 11c is brighter and more fully covered, while the brightness of incorrectly detected areas is reduced. The CBAM attention mechanism strengthens the model's feature extraction, allowing more complete extraction of target feature information. By increasing the model's attention to channel and spatial information, it enhances perception of the correct target and suppresses the influence of non-target regions on the overall prediction.
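For readers unfamiliar with CBAM [22], the module applies channel attention followed by spatial attention. The PyTorch sketch below follows the standard formulation (a shared MLP over average- and max-pooled channel descriptors, then a convolution over channel-wise average and max maps). The reduction ratio of 16 and kernel size of 7 are the commonly used defaults and are assumptions here, since the exact settings used in the DWG-YOLOv8 neck are not given in this section.

```python
import torch
import torch.nn as nn


class ChannelAttention(nn.Module):
    """Channel weights from a shared MLP applied to global average- and max-pooled features."""

    def __init__(self, channels: int, reduction: int = 16):
        super().__init__()
        hidden = max(channels // reduction, 1)
        self.mlp = nn.Sequential(
            nn.Conv2d(channels, hidden, 1, bias=False),  # 1x1 convs act as a per-channel MLP
            nn.ReLU(inplace=True),
            nn.Conv2d(hidden, channels, 1, bias=False),
        )

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        avg = self.mlp(x.mean(dim=(2, 3), keepdim=True))
        mx = self.mlp(x.amax(dim=(2, 3), keepdim=True))
        return torch.sigmoid(avg + mx)  # N x C x 1 x 1


class SpatialAttention(nn.Module):
    """Spatial weights from a conv over channel-wise average and max maps."""

    def __init__(self, kernel_size: int = 7):
        super().__init__()
        self.conv = nn.Conv2d(2, 1, kernel_size, padding=kernel_size // 2, bias=False)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        avg = x.mean(dim=1, keepdim=True)
        mx = x.amax(dim=1, keepdim=True)
        return torch.sigmoid(self.conv(torch.cat([avg, mx], dim=1)))  # N x 1 x H x W


class CBAM(nn.Module):
    """Channel attention first, then spatial attention, as in the original CBAM design."""

    def __init__(self, channels: int, reduction: int = 16, kernel_size: int = 7):
        super().__init__()
        self.channel = ChannelAttention(channels, reduction)
        self.spatial = SpatialAttention(kernel_size)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        x = x * self.channel(x)
        return x * self.spatial(x)
```

The output has the same shape as the input, so a block like `CBAM(128)` can be inserted after a neck layer with 128 channels without changing the surrounding architecture; the sigmoid weights in (0, 1) rescale features rather than add new ones.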

3.5. Performance Comparison of DWG-YOLOv8 with Other Network Models

The improved DWG-YOLOv8 model was compared with the current mainstream models SSD [29], Faster R-CNN [30], YOLOv5s [31], YOLOv8n, YOLOv8s, YOLOv8m, and YOLOv11s [32]. The experimental results are shown in Table 4.

As shown in the table, DWG-YOLOv8 has advantages over the other models in precision, recall, and mAP0.5. On the broccoli dataset, its mAP0.5 exceeds that of SSD, Faster R-CNN, YOLOv5s, YOLOv8n, YOLOv8s, YOLOv8m, and YOLOv11s by 11.1, 2.6, 3.1, 6.2, 3.4, 2.7, and 1.3 percentage points, respectively. DWG-YOLOv8 also requires fewer floating-point operations and has a smaller model size than most of the compared models. Compared with SSD, Faster R-CNN, YOLOv5s, YOLOv8s, YOLOv8m, and YOLOv11s, its model size is reduced by 83.9%, 86.6%, 25.3%, 35.6%, 72.1%, and 24.5%, respectively, and its single-frame processing time is reduced by 28.0 ms, 104.7 ms, 5.6 ms, 2.2 ms, 8.5 ms, and 1.7 ms. Although YOLOv8n is smaller and faster than DWG-YOLOv8, DWG-YOLOv8 leads it by 6.2 percentage points in mAP0.5 and lags by only 1.0 ms in single-image processing time. Overall, DWG-YOLOv8 detects broccoli in multi-scene environments better than the other mainstream models, supporting the choice of YOLOv8s as the baseline network.

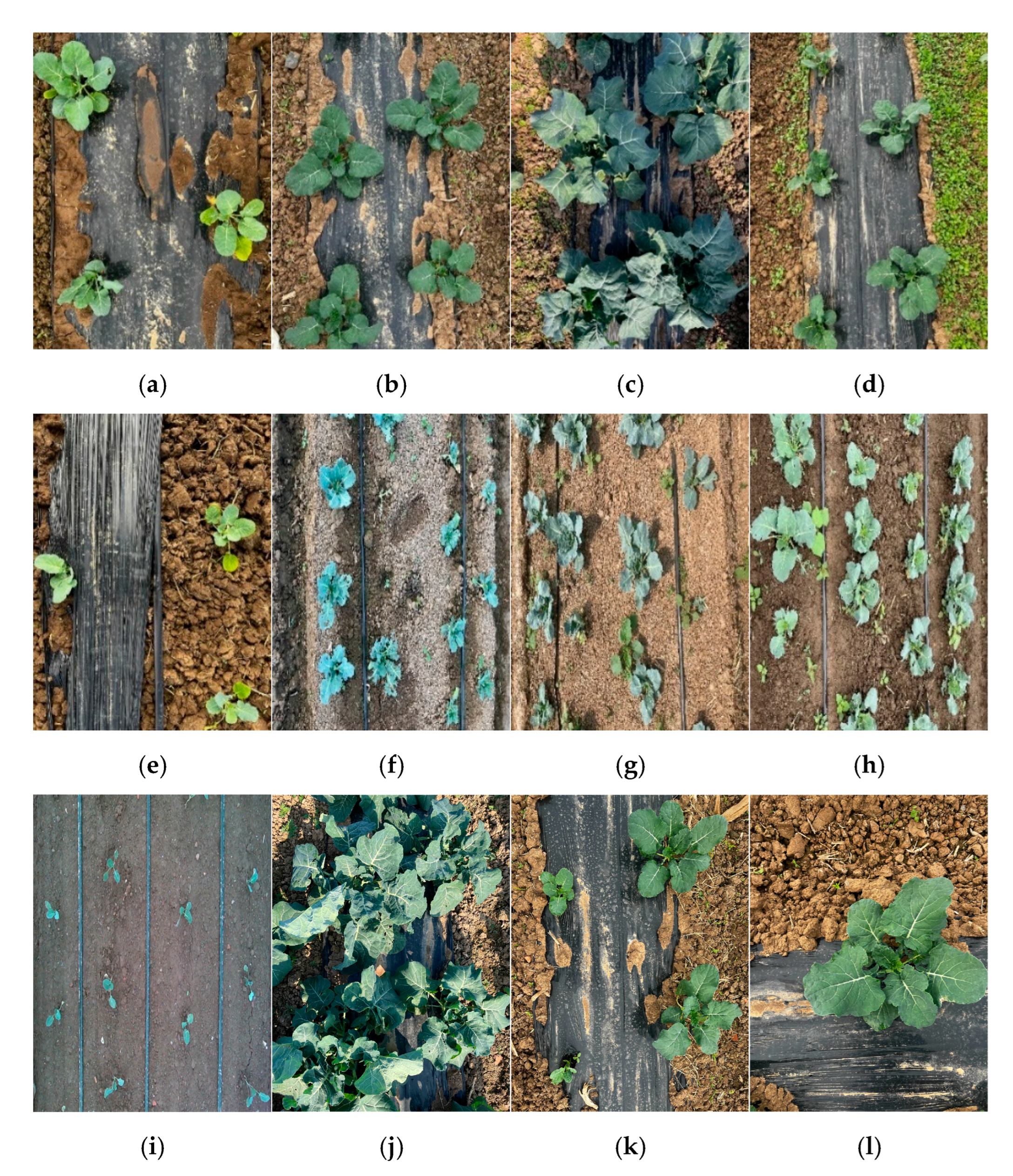

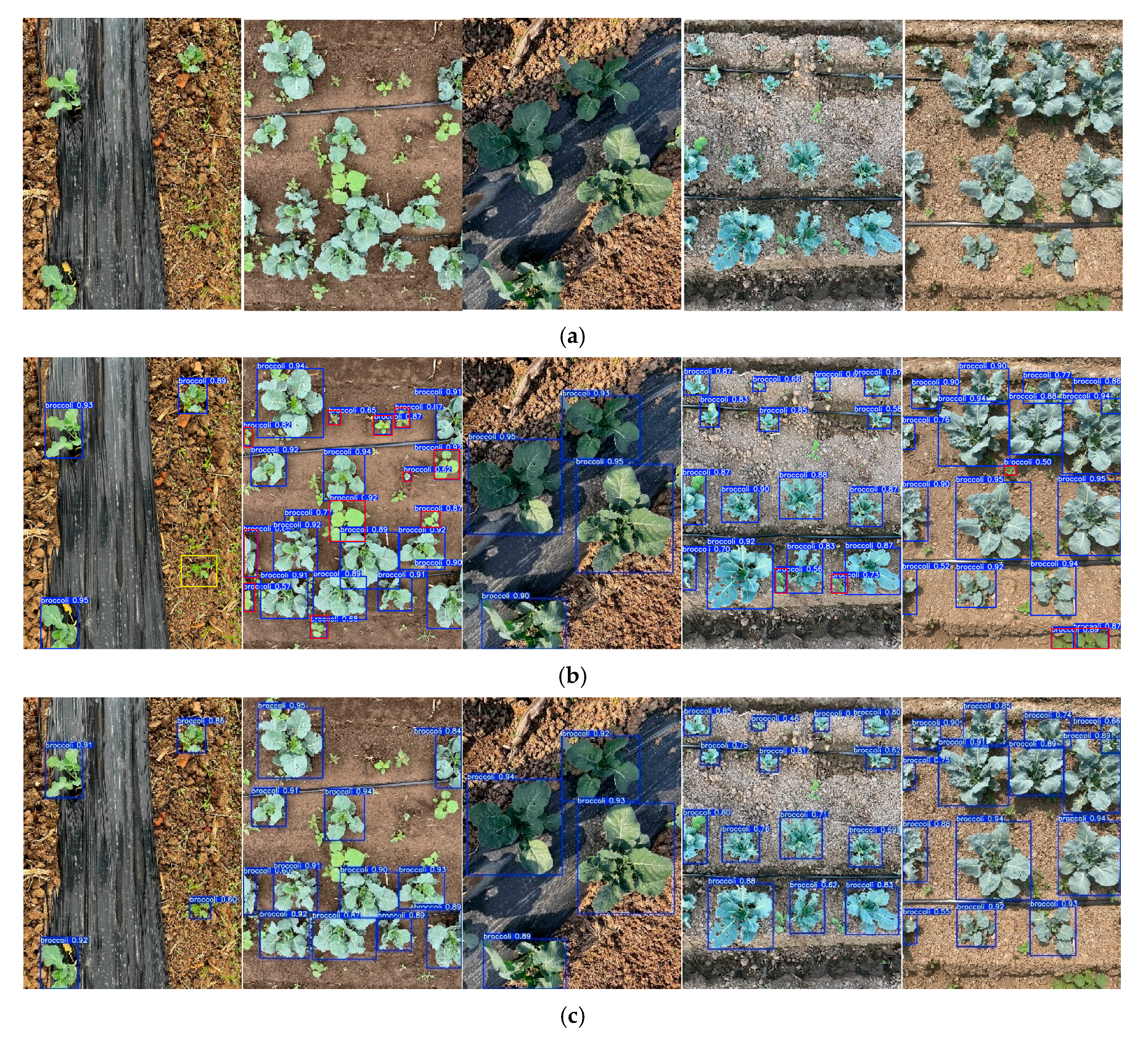

3.6. Complex Background Detection Effect Comparison

To evaluate DWG-YOLOv8 for broccoli detection under different complex background conditions, the trained DWG-YOLOv8 and YOLOv8s models were compared on images selected from the test set. The selected images covered conditions such as backgrounds with or without mulch, wet or dry soil, the presence of weeds, and different lighting. The numbers of correctly identified targets, false positives, and missed detections were counted for each model; the results are shown in Table 5.

Table 5 shows the recognition performance of the models before and after improvement on the complex-background images. Across 663 targets, the original YOLOv8s produced 21 missed detections and 181 false positives, giving a miss rate of 3.2% and a false positive rate of 21.9% (of detected objects). The improved DWG-YOLOv8 produced only 12 missed detections and 36 false positives, giving rates of 1.8% and 5.3%, reductions of 1.4 and 16.6 percentage points, respectively. The improved model thus outperforms the original YOLOv8s in both miss rate and false positive rate. Across the different background conditions, DWG-YOLOv8 is more robust and accurate, and under weedy conditions in particular it misidentifies far fewer weeds as broccoli targets.

Some of the recognition results are compared in Figure 12. YOLOv8s is prone to missed detections in complex environments and, when weeds are abundant, often misidentifies weeds as broccoli targets. The main reasons for these false detections by YOLOv8s are as follows: (1) certain weeds closely resemble broccoli in morphological structure and texture, causing confusion during feature extraction; and (2) YOLOv8s lacks dedicated channel and spatial attention mechanisms, which limits its ability to filter discriminative features. In the channel dimension, the model fails to sufficiently amplify feature channels relevant to broccoli discrimination; in the spatial dimension, it struggles to focus on key target regions, leaving it insufficiently sensitive to subtle differences.

DWG-YOLOv8 extracts finer texture features through the CDSL module and strengthens attention to target regions through CBAM, giving it strong feature extraction and robust inference even in multi-scene environments. Under weedy conditions, it significantly reduces the number of weeds misclassified as broccoli. Consequently, the improved DWG-YOLOv8 is more robust than the original YOLOv8s across diverse background conditions.

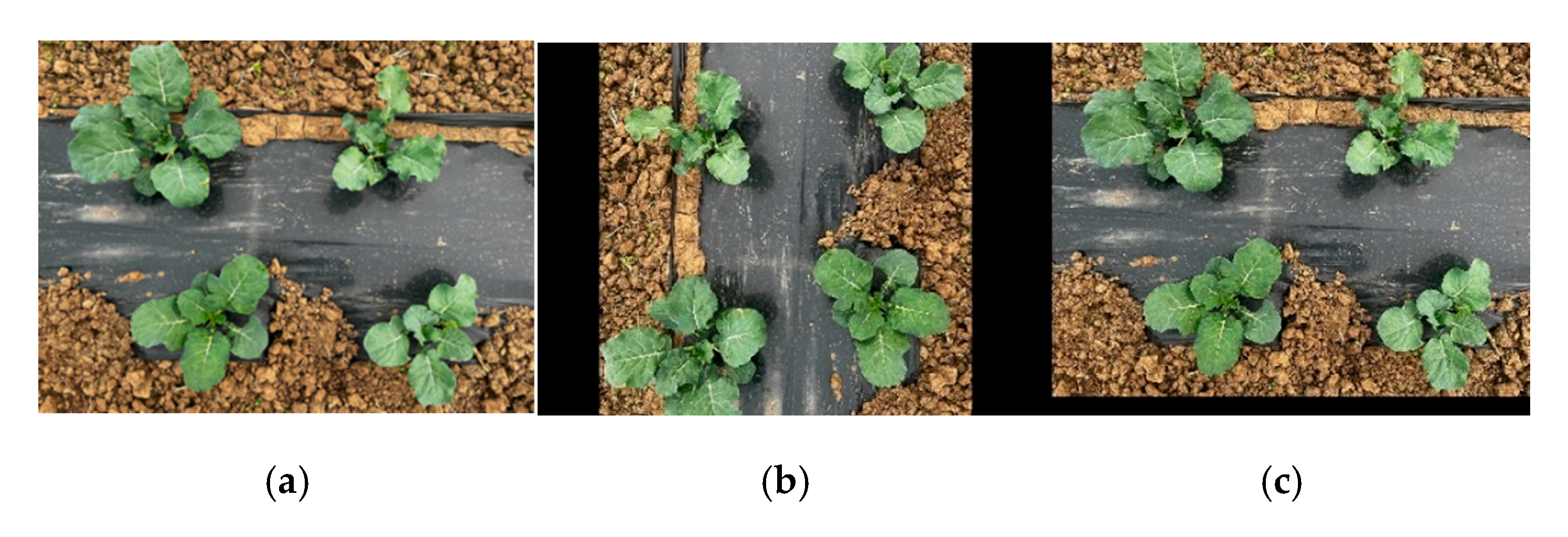

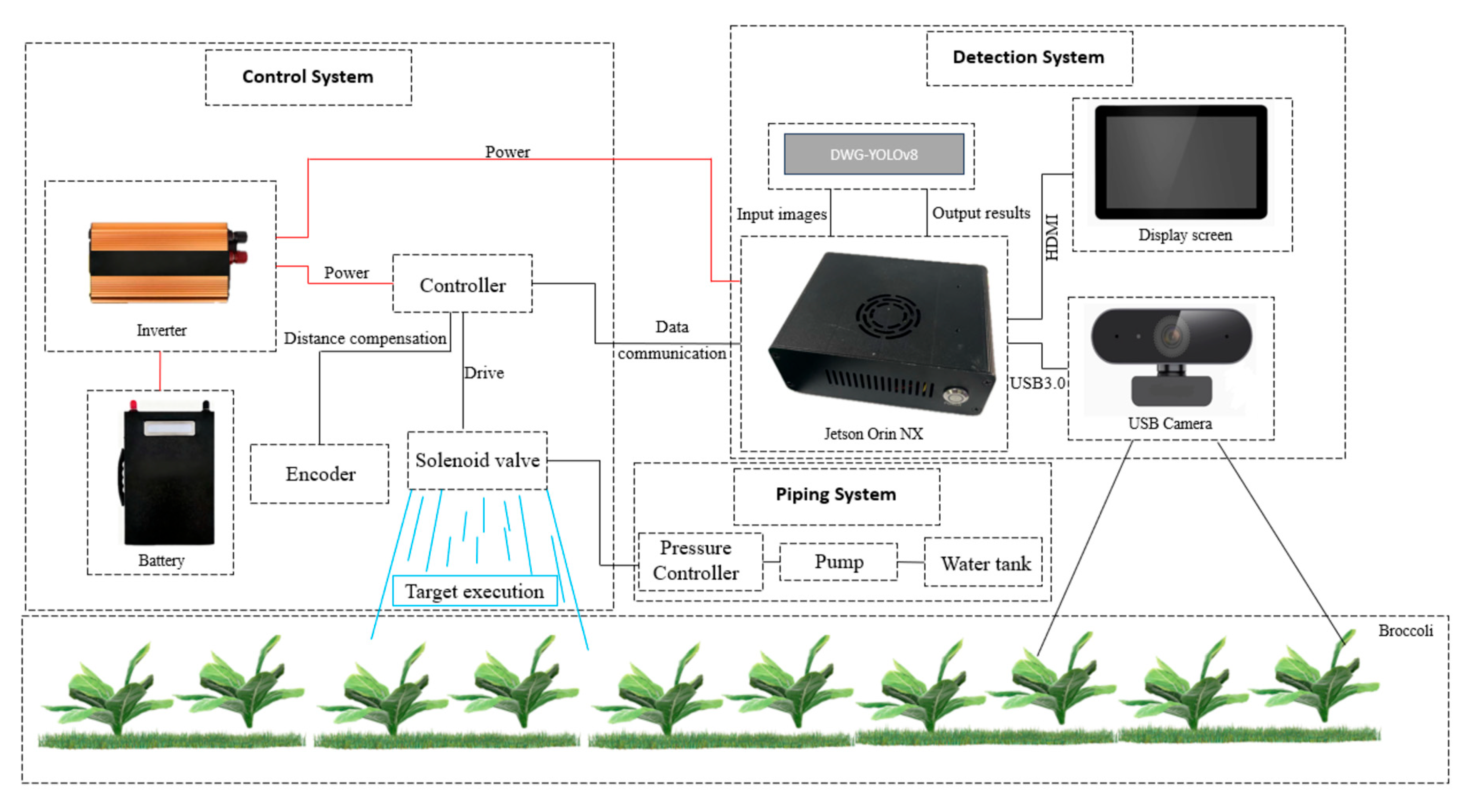

3.7. Edge Deployment

Edge devices typically have less computational power than workstations. To verify how DWG-YOLOv8 runs on an edge device and to accelerate its inference there, this study used the TensorRT library [33]. TensorRT reduces data transfer and computational overhead, increasing inference speed and reducing inference latency.

First, the .pt weight file of the trained DWG-YOLOv8 model was converted on the workstation to the framework-independent .onnx format, which TensorRT can parse. The .onnx file was then transferred to the Jetson Orin NX, where TensorRT optimized the model structure and compiled it into an .engine inference engine. At run time, the .engine file is deserialized to perform accelerated inference. The frame rates of the models deployed on the device are shown in Table 6.

As shown in the table, without TensorRT acceleration the limited computational power of the edge device kept its processing speed well below that of the workstation, giving a low real-time detection frame rate (only 13.7 FPS for DWG-YOLOv8). After acceleration, the frame rate increased to 34.1 FPS, about 2.5 times the original, corresponding to a single-image processing time of 29.33 ms.
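The .pt to .onnx to .engine workflow described above can be sketched in Python as follows. This is a minimal sketch, not the authors' scripts: it assumes the Ultralytics exporter for the first step (loading DWG-YOLOv8 weights requires the custom modules such as CDSL to be importable) and the TensorRT Python API (8.4 or later, for `set_memory_pool_limit`) on the Jetson. The FP16 flag, 1 GiB workspace, 640 input size, and ONNX opset 12 are illustrative choices, and all function names are hypothetical. Two small helpers relate frame rate to per-image latency.

```python
from pathlib import Path


def export_onnx(weights: str, imgsz: int = 640) -> str:
    """Workstation: convert trained .pt weights to .onnx; returns the exported file path."""
    from ultralytics import YOLO  # custom DWG-YOLOv8 modules must be importable

    return YOLO(weights).export(format="onnx", imgsz=imgsz, opset=12)


def build_engine(onnx_path: str, engine_path: str, fp16: bool = True,
                 workspace_bytes: int = 1 << 30) -> None:
    """Jetson: parse the ONNX graph, let TensorRT optimise it, and serialise an .engine."""
    import tensorrt as trt

    logger = trt.Logger(trt.Logger.WARNING)
    builder = trt.Builder(logger)
    flag_enum = trt.NetworkDefinitionCreationFlag
    # The ONNX parser needs an explicit-batch network on TensorRT 8.x (the only mode in 10.x).
    flags = 1 << int(flag_enum.EXPLICIT_BATCH) if hasattr(flag_enum, "EXPLICIT_BATCH") else 0
    network = builder.create_network(flags)
    parser = trt.OnnxParser(network, logger)
    if not parser.parse(Path(onnx_path).read_bytes()):
        errors = "\n".join(str(parser.get_error(i)) for i in range(parser.num_errors))
        raise RuntimeError(f"ONNX parsing failed:\n{errors}")

    config = builder.create_builder_config()
    config.set_memory_pool_limit(trt.MemoryPoolType.WORKSPACE, workspace_bytes)
    if fp16 and getattr(builder, "platform_has_fast_fp16", True):
        config.set_flag(trt.BuilderFlag.FP16)

    serialized = builder.build_serialized_network(network, config)
    if serialized is None:
        raise RuntimeError("TensorRT engine build failed")
    with open(engine_path, "wb") as f:
        f.write(serialized)


def load_engine(engine_path: str):
    """Run time: deserialise the .engine file into a TensorRT ICudaEngine."""
    import tensorrt as trt

    runtime = trt.Runtime(trt.Logger(trt.Logger.WARNING))
    return runtime.deserialize_cuda_engine(Path(engine_path).read_bytes())


def latency_ms(fps: float) -> float:
    """Per-image latency implied by a sustained frame rate (single stream, no batching)."""
    return 1000.0 / fps


def speedup(fps_after: float, fps_before: float) -> float:
    """Ratio of accelerated to unaccelerated frame rate."""
    return fps_after / fps_before
```

For example, `latency_ms(34.1)` is about 29.33 ms and `speedup(34.1, 13.7)` about 2.49, consistent with the figures above. Ultralytics can also export directly with `format="engine"` when run on the Jetson itself, and TensorRT's `trtexec` tool (`--onnx=... --saveEngine=... --fp16`) performs the same build from the command line.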

To further test the practical feasibility of DWG-YOLOv8 for broccoli recognition on edge devices, broccoli image data from actual field environments were selected for testing. The recognition results are shown in Table 7, and some of the recognition results are compared in Figure 13. Under cloudy weather, broccoli and weeds have similar colors but distinct textures. Under sunny weather, high light intensity makes the images bright, and severe glare on some broccoli veins causes a loss of texture features. Under rainy weather, reduced light intensity lowers image contrast, and the texture information of some broccoli becomes blurred.

As shown in the table, on the edge device DWG-YOLOv8 outperforms YOLOv8s on broccoli image data from field environments. For DWG-YOLOv8, 918 of 951 detected targets were correctly identified, with 22 missed detections and 33 false positives. For YOLOv8s, only 884 of 983 detected targets were correctly identified, with 56 missed detections and 99 false positives. The recognition accuracy of DWG-YOLOv8 was 96.53%, 6.6 percentage points higher than that of YOLOv8s (89.93%).
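The accuracy figures can be recomputed from the detection counts. The sketch below assumes, as the counts imply, that recognition accuracy is the share of detections that are correct, correct / (correct + false positives), and that the ground-truth total is correct + missed; the class and method names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DetectionCounts:
    correct: int          # true positives
    missed: int           # false negatives
    false_positives: int

    @property
    def detected(self) -> int:
        return self.correct + self.false_positives

    @property
    def targets(self) -> int:
        return self.correct + self.missed

    def accuracy(self) -> float:
        """Share of detections that are correct (the 'recognition accuracy' above)."""
        return self.correct / self.detected

    def miss_rate(self) -> float:
        """Share of ground-truth targets that were not detected."""
        return self.missed / self.targets


dwg = DetectionCounts(correct=918, missed=22, false_positives=33)
v8s = DetectionCounts(correct=884, missed=56, false_positives=99)
for name, c in [("DWG-YOLOv8", dwg), ("YOLOv8s", v8s)]:
    print(f"{name}: {c.detected} detected / {c.targets} targets, "
          f"accuracy {100 * c.accuracy():.2f}%, miss rate {100 * c.miss_rate():.2f}%")
# DWG-YOLOv8: 951 detected / 940 targets, accuracy 96.53%, miss rate 2.34%
# YOLOv8s: 983 detected / 940 targets, accuracy 89.93%, miss rate 5.96%
```

Both models face the same 940 ground-truth targets, so the accuracy gap of 96.53% versus 89.93% amounts to about 6.6 percentage points.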

Figure 13 compares the recognition results under different weather conditions. Under rainy conditions, YOLOv8s incorrectly identified 4 targets, while DWG-YOLOv8 had no missed or false detections. Under sunny weather, YOLOv8s had 2 false detections and 1 missed detection, while DWG-YOLOv8 had 1 missed detection. This is likely because the high light intensity in sunny weather causes small broccoli targets to lose some texture features, reducing the feature information available to the model and leading to the missed detection. Under cloudy conditions, neither YOLOv8s nor DWG-YOLOv8 had missed or false detections. Overall, DWG-YOLOv8 performs better than YOLOv8s when deployed on the edge device.

3.8. Analysis of Failure Cases

Although the DWG-YOLOv8 model shows strong recognition accuracy and robustness in conventional detection scenarios, it can still fail under certain extreme physical conditions. As shown in Figure 14, when test samples exhibit severe motion blur, the model is prone to missed detections. The main reasons are analyzed as follows:

Under the influence of motion blur, object edges in images become indistinct, and texture details become difficult to discern. This degradation in image quality makes it challenging for the model to accurately distinguish foreground targets from complex backgrounds during feature extraction, ultimately leading to increased classification error rates and reduced localization accuracy.

This phenomenon indicates that existing models still have limitations in feature robustness when confronted with complex physical interference. It also points to a key direction for future research aimed at enhancing the model’s adaptability to extreme environments.
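The loss of edge contrast under motion blur can be illustrated numerically. The NumPy sketch below is a synthetic illustration, not part of the reported experiments: it assumes a simple linear (box) motion-blur kernel of odd length and measures the strongest horizontal intensity step across an ideal vertical edge before and after blurring. The function names are hypothetical.

```python
import numpy as np


def motion_blur_kernel(length: int, horizontal: bool = True) -> np.ndarray:
    """Linear motion-blur kernel: a normalised line of `length` ones (odd length assumed)."""
    k = np.zeros((length, length))
    if horizontal:
        k[length // 2, :] = 1.0
    else:
        k[:, length // 2] = 1.0
    return k / length


def apply_kernel(image: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Same-size 2-D filtering with edge padding (the kernel is symmetric, so no flip needed)."""
    kh, kw = kernel.shape
    padded = np.pad(image, ((kh // 2, kh // 2), (kw // 2, kw // 2)), mode="edge")
    out = np.zeros(image.shape, dtype=float)
    for i in range(kh):
        for j in range(kw):
            out += kernel[i, j] * padded[i:i + image.shape[0], j:j + image.shape[1]]
    return out


def max_horizontal_gradient(image: np.ndarray) -> float:
    """Strongest intensity change between horizontally adjacent pixels."""
    return float(np.abs(np.diff(image, axis=1)).max())


edge = np.zeros((32, 32))
edge[:, 16:] = 1.0  # ideal vertical edge: dark left half, bright right half
blurred = apply_kernel(edge, motion_blur_kernel(9))
print(max_horizontal_gradient(edge), round(max_horizontal_gradient(blurred), 3))  # 1.0 0.111
```

A horizontal blur of length 9 spreads the unit step over 9 pixels, so the strongest edge response drops from 1.0 to 1/9. Contour cues weakened in this way are what make separating foreground targets from the background harder.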

4. Discussion

This research proposes DWG-YOLOv8, a lightweight detection model tailored for broccoli recognition. Based on the YOLOv8s architecture, it incorporates systematic enhancements across three key dimensions:

First, for lightweight design, standard convolutions in the backbone network are replaced with Depthwise Separable Convolution (DWConv), and Ghost Convolution is incorporated into the C2f module to form the C2f_GhostConv structure, significantly reducing computational complexity and parameter count. Second, to enhance feature extraction, the CDSL module was designed for initial feature extraction, and the CBAM attention mechanism was embedded in the neck, enabling the model to adaptively focus on key channels and spatial regions and improving its ability to extract discriminative target features. Finally, the bounding box regression loss function was replaced with the WIoU loss to improve convergence speed and stability during training.

In the experimental section, ablation studies validated the effectiveness and synergistic effects of each improved module. Model comparison experiments demonstrated that DWG-YOLOv8 outperforms existing mainstream methods in detection accuracy and robustness for broccoli in complex field environments. Edge deployment experiments further confirmed the model’s high efficiency and feasibility in practical applications.

According to the edge deployment results, DWG-YOLOv8 performed well across various field scenarios in terms of timeliness, accuracy, and stability. Beyond performance metrics, the practical significance of this study lies in the model's value when deployed in precision application systems. The success of precision application depends not only on detection accuracy but also on inference speed, model size, and stability under typical variable field conditions, such as soil moisture fluctuations and the presence of weeds. DWG-YOLOv8 substantially reduces model size and computational cost, which makes it particularly suitable for the embedded devices commonly used in agricultural robots, such as the Jetson Orin NX. Its robustness to diverse field variations supports reliable operation in real-world environments, and integration with TensorRT further raises its frame rate to meet the real-time demands of automated spraying platforms. Future work will focus on integrating this detection model with robotic execution systems to conduct closed-loop, end-to-end precision spraying trials. Overall, DWG-YOLOv8 combines low computational resource consumption with strong scene adaptability and detection performance, providing a viable technical approach for automated crop identification in field settings.

Compared with existing agricultural object detection methods, this study achieves significant progress in model lightweight design and adaptability to complex scenes. However, several issues remain to be explored in depth:

1. Robustness to Motion Blur

   Under certain levels of motion blur, recognition performance degrades and the false negative rate rises significantly. Subsequent research will therefore explore image restoration techniques that reduce blur before images are input to the model. In addition, modeling and filtering target states based on inter-frame motion continuity will be used to mitigate the effects of blur caused by rapid relative motion. These measures are expected to improve detection stability and trajectory continuity in dynamic scenes, enabling the model to identify targets reliably and accurately under motion-blurred conditions.

2. Cross-Crop, Multi-Scenario Generalization Validation

   Subsequent research will enhance the model's generalization so that it can recognize and detect other high-value crops such as tomatoes, cabbages, and cucumbers. Its application scenarios will be expanded to achieve robustness in diverse environments, including open fields, greenhouses, and orchards, and its applicability will be evaluated under various lighting conditions for tasks such as pesticide application guidance, crop monitoring, and yield estimation, thereby advancing the development of intelligent agricultural algorithms.

3. Long-Term Deployment Validation for Targeted Application Scenarios

   The model will be integrated into an automated application robot platform for targeted application research, and large-scale, long-term field trials will be performed in actual broccoli fields. These trials will validate the model's overall performance under complex lighting, variable weather, and different growth stages, with the goal of achieving precise spraying in complex field environments and accelerating the translation of research outcomes into practical applications.

Through continuous exploration in the aforementioned directions, we are committed to further enhancing the practicality, adaptability, and scalability of this model in complex agricultural environments. This will enable precise and targeted spraying at crop locations in field settings, providing reliable technical support for high-precision, low-cost plant protection and production management.