Monitoring the Maize Canopy Chlorophyll Content Using Discrete Wavelet Transform Combined with RGB Feature Fusion

Abstract

1. Introduction

2. Materials and Methods

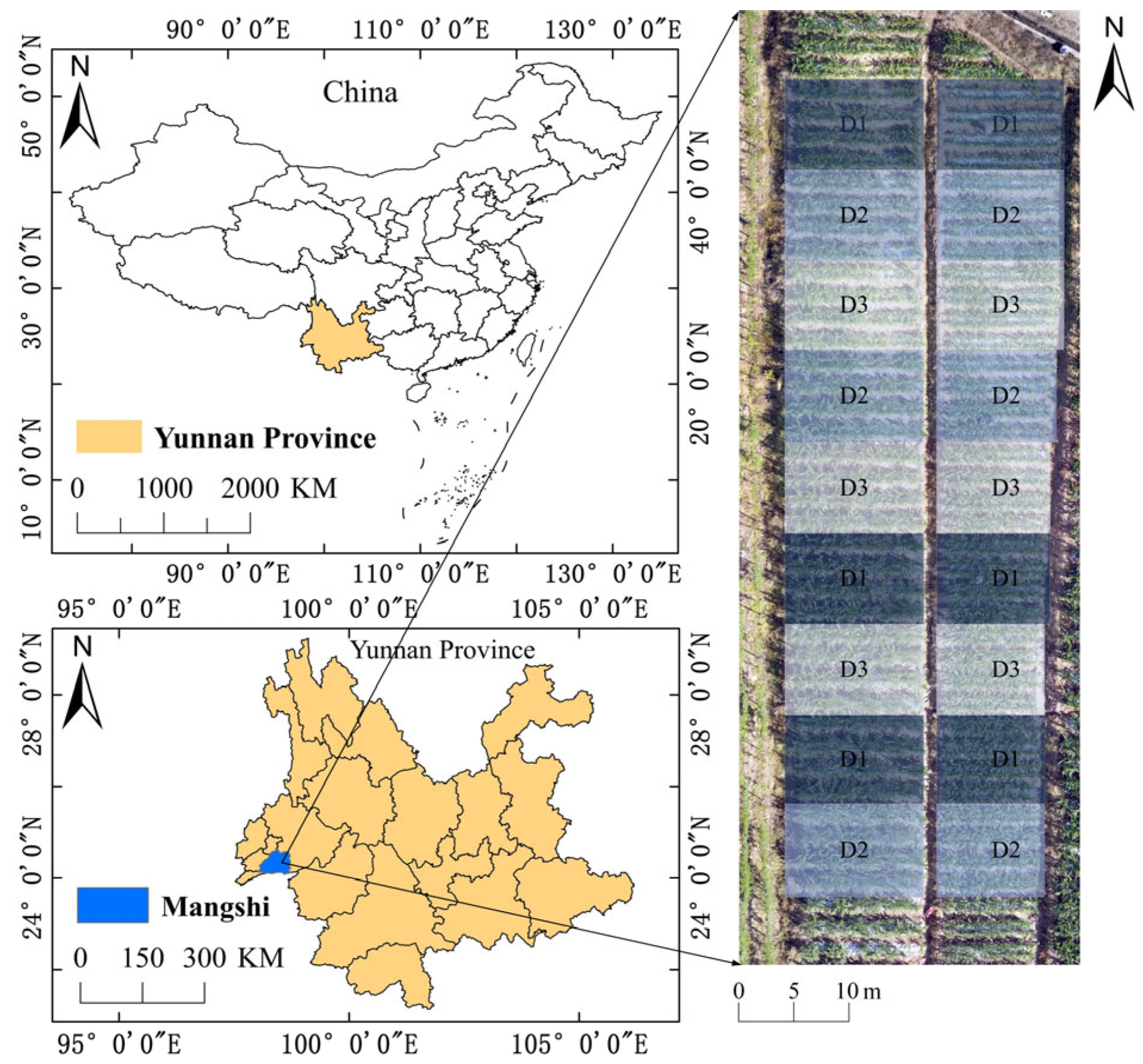

2.1. Study Area and Experimental Design

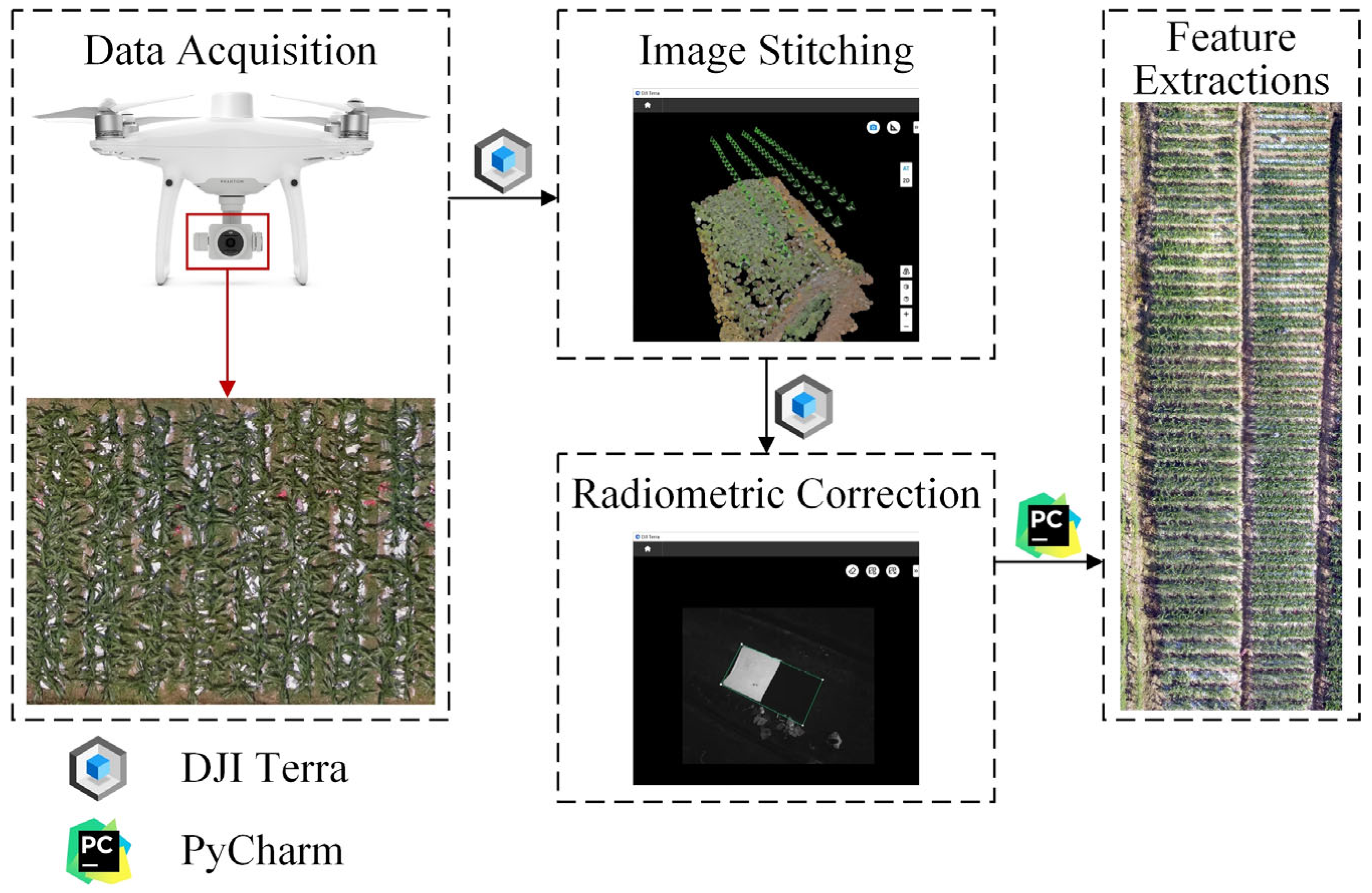

2.2. Acquisition and Processing of Aerial Images of Maize Canopy

2.3. Measurement of Chlorophyll Content

2.4. Research Methods

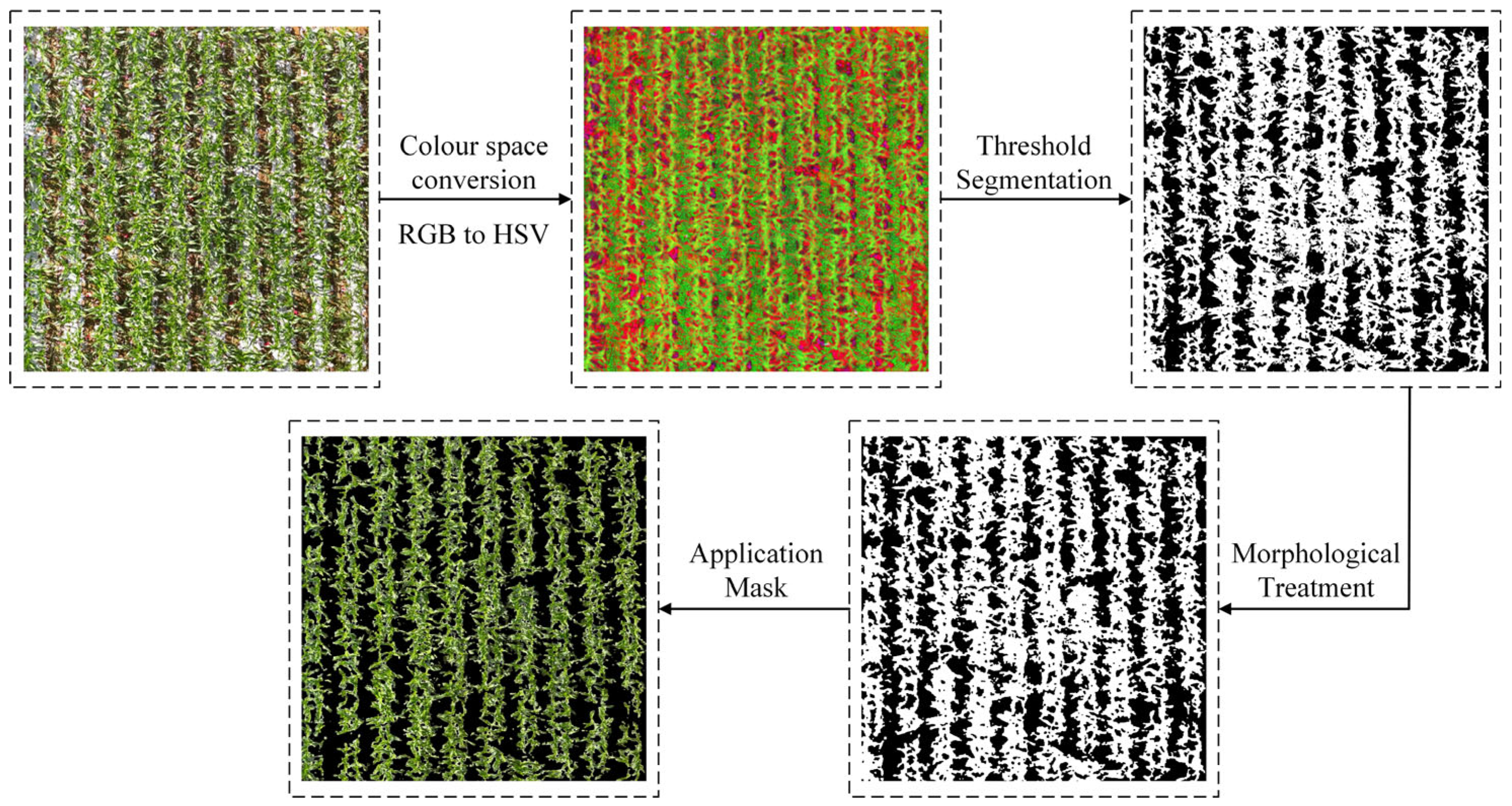

2.4.1. Color and Texture Feature Extraction

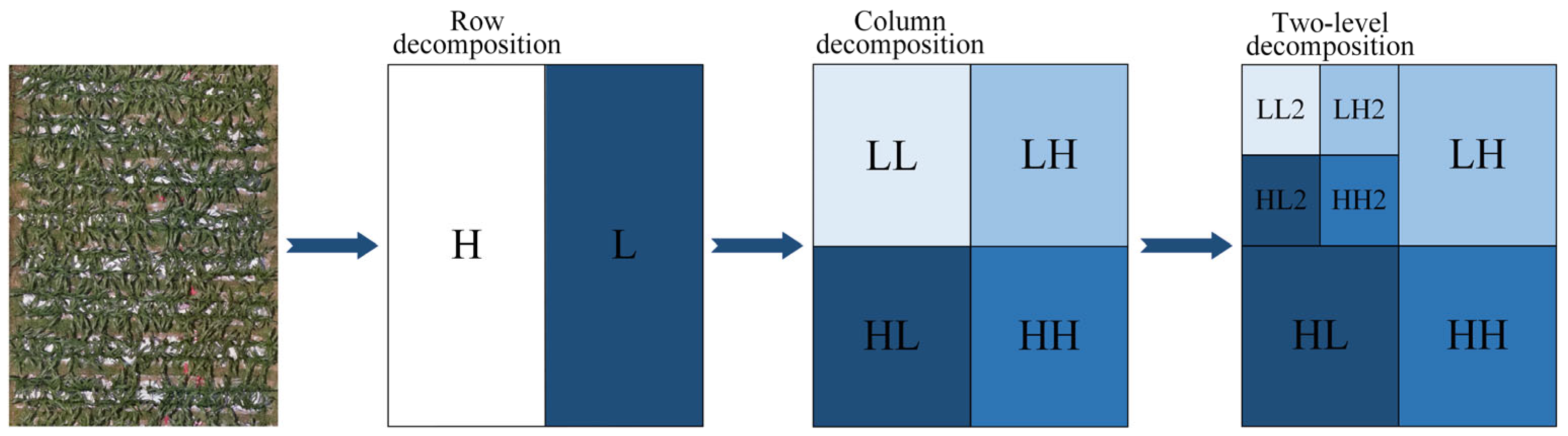

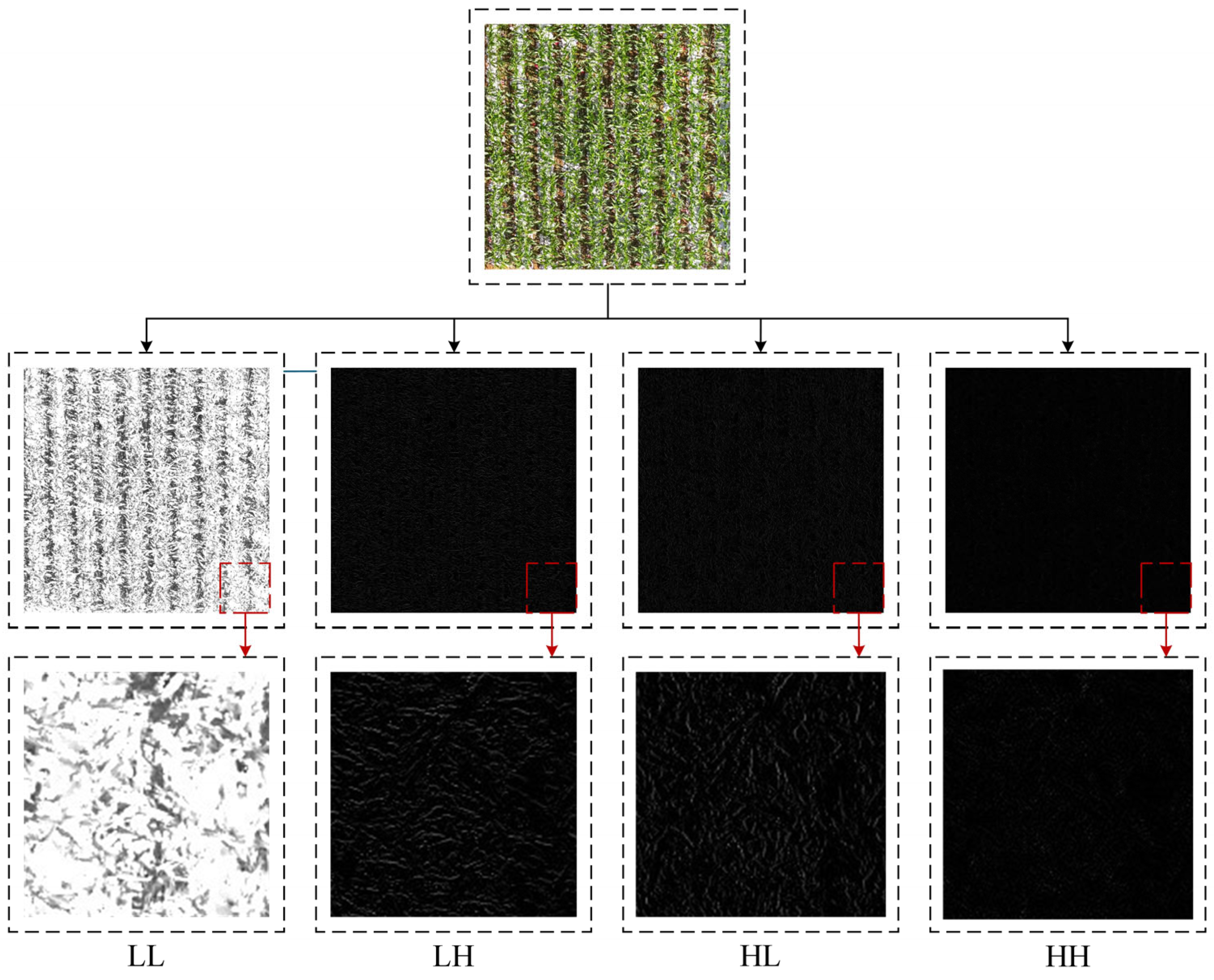

2.4.2. Discrete Wavelet Transform (DWT)

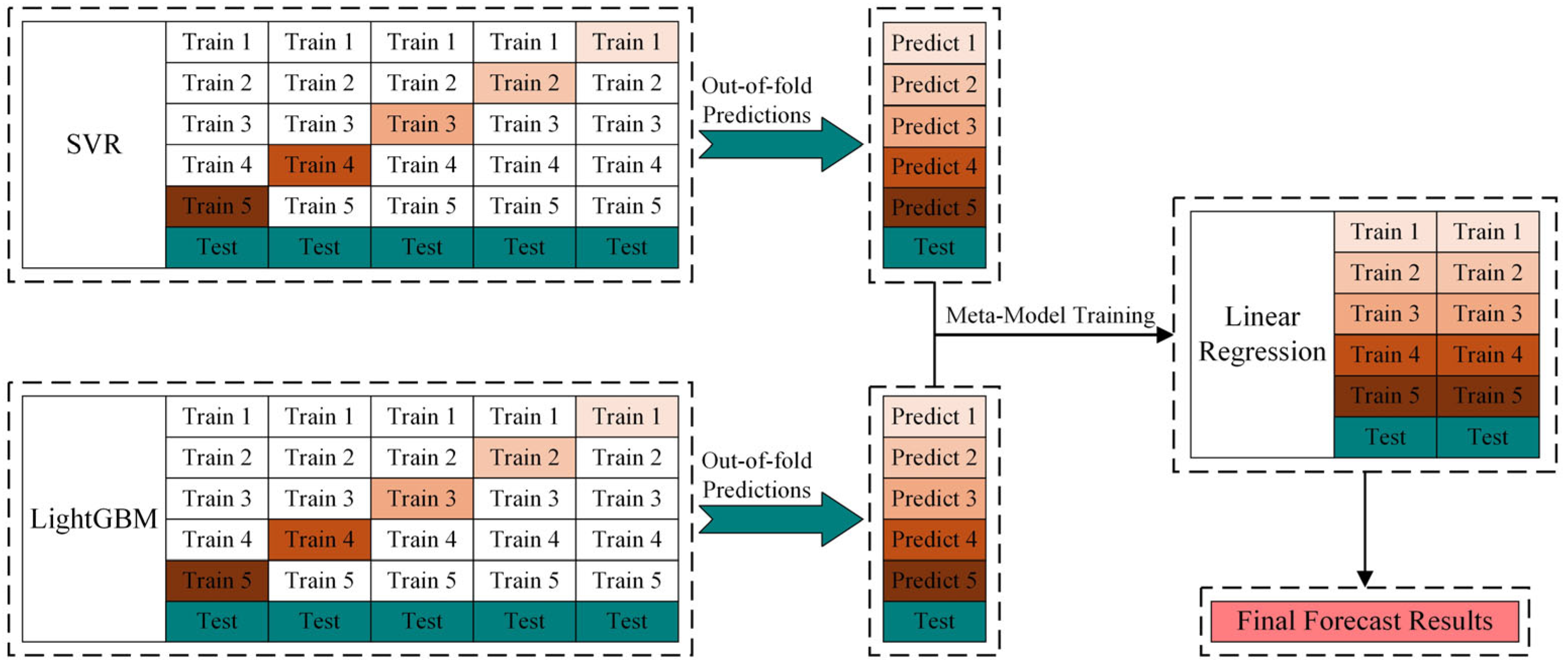

2.4.3. Machine Learning Model Selection

2.4.4. Model Accuracy Verification

3. Results

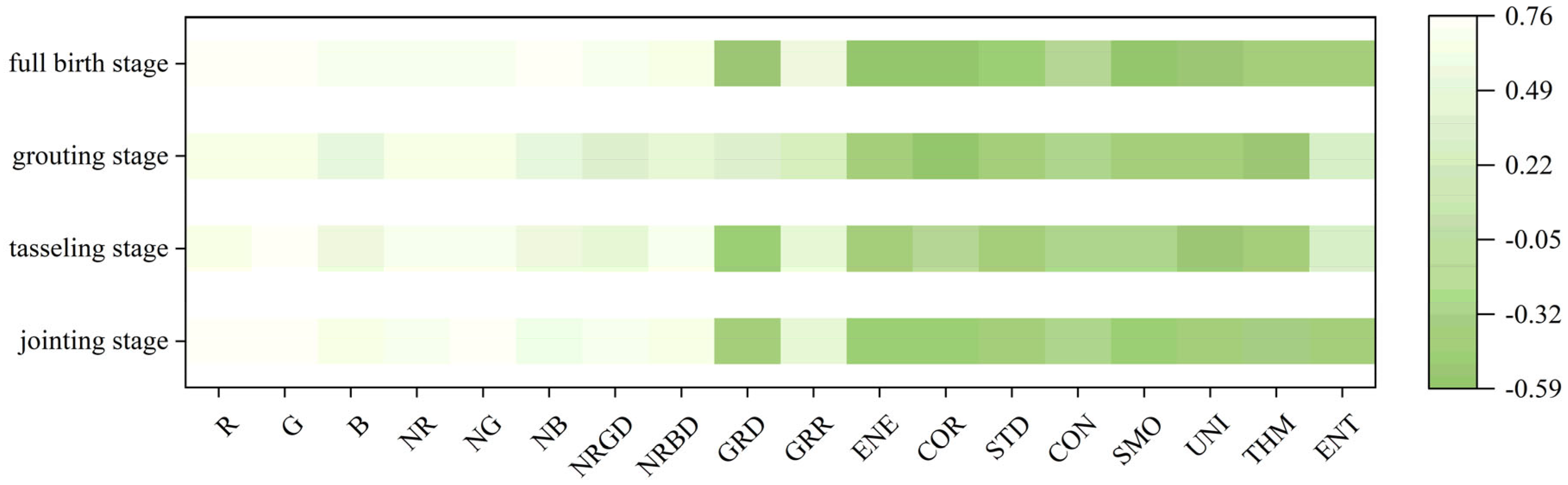

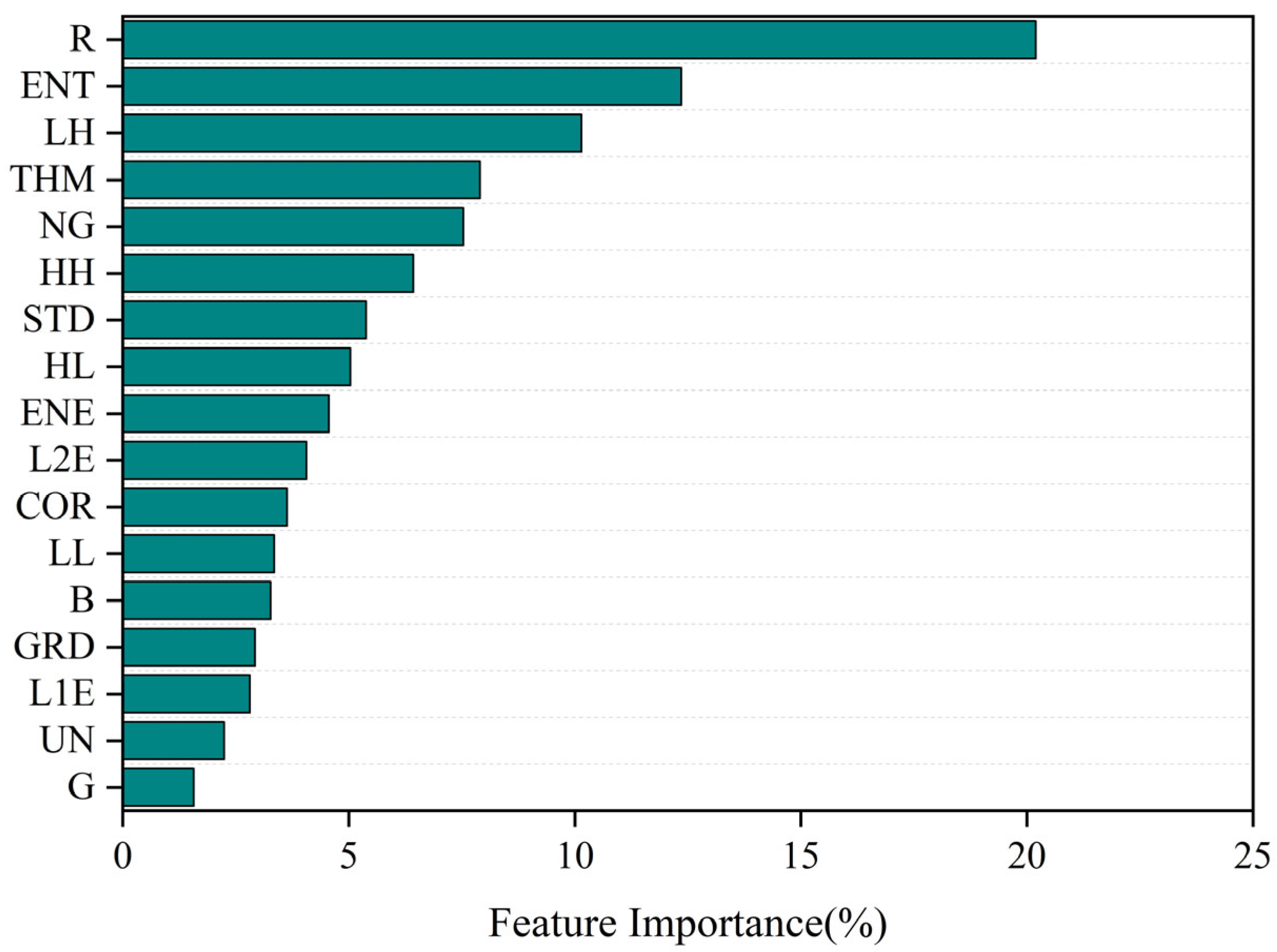

3.1. Correlation Analysis of Chlorophyll Content with Color and Textural Features

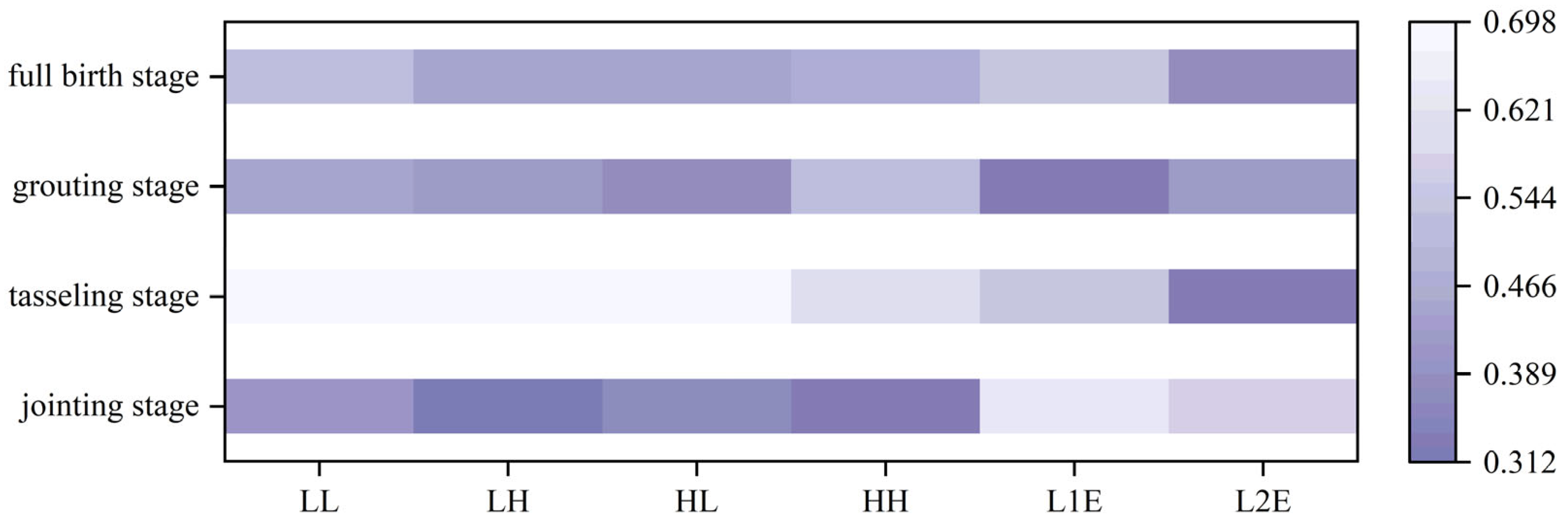

3.2. Correlation Analysis of Chlorophyll Content with Wavelet Characteristics

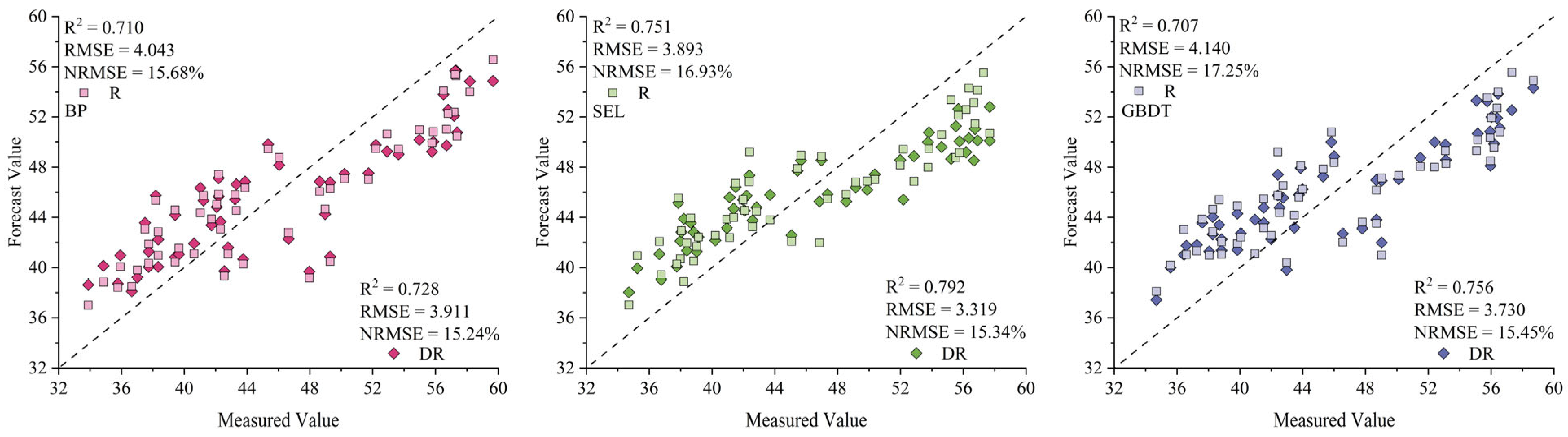

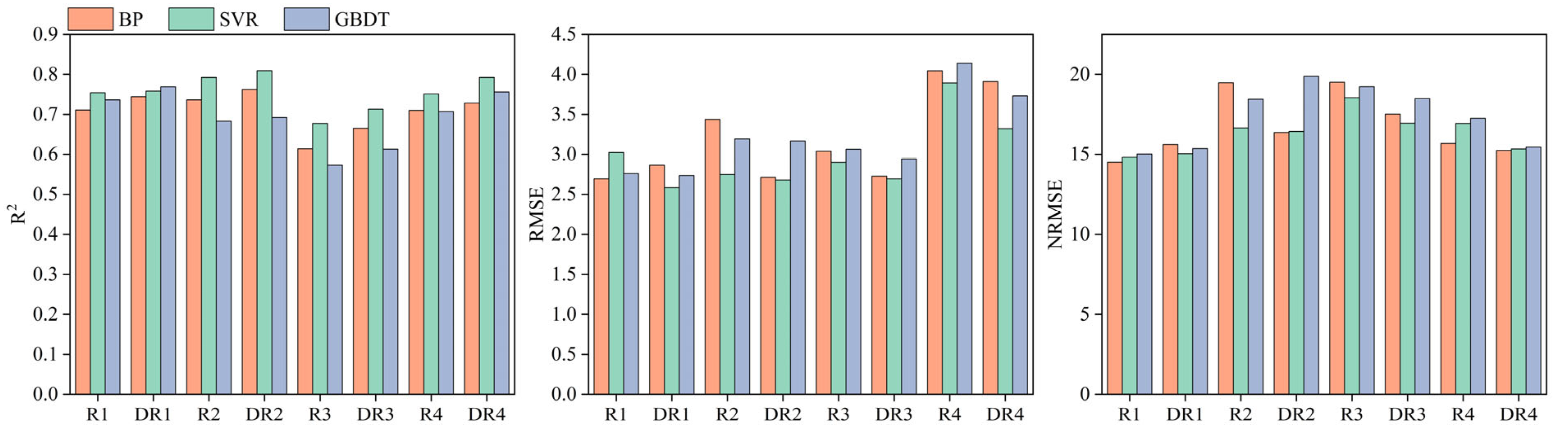

3.3. Inverse Modeling of Maize Canopy Chlorophyll Content

3.4. Comparison of Model Accuracy by Growth Stage

4. Discussion

4.1. Analysis of Feature Fusion Potential

4.2. Analysis of Discrete Wavelet Transform Potential

4.3. Limitations and Future Prospects

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Kasim, N.; Sawut, R.; Abliz, A.; Qingdong, S.; Maihmuti, B.; Yalkun, A.; Kahaer, Y. Estimation of the relative chlorophyll content in spring wheat based on an optimized spectral index. Photogramm. Eng. Remote Sens. 2018, 84, 801–811.

2. Li, J.; Wijewardane, N.K.; Ge, Y.; Shi, Y. Improved chlorophyll and water content estimations at leaf level with a hybrid radiative transfer and machine learning model. Comput. Electron. Agric. 2023, 206, 107669.

3. Liu, Y.; Hatou, K.; Aihara, T.; Kurose, S.; Akiyama, T.; Kohno, Y.; Lu, S.; Omasa, K. A robust vegetation index based on different UAV RGB images to estimate SPAD values of naked barley leaves. Remote Sens. 2021, 13, 686.

4. Steele, M.R.; Gitelson, A.A.; Rundquist, D.C. A comparison of two techniques for nondestructive measurement of chlorophyll content in grapevine leaves. Agron. J. 2008, 100, 779–782.

5. Teshome, F.T.; Bayabil, H.K.; Hoogenboom, G.; Schaffer, B.; Singh, A.; Ampatzidis, Y. Unmanned aerial vehicle (UAV) imaging and machine learning applications for plant phenotyping. Comput. Electron. Agric. 2023, 212, 108064.

6. Colomina, I.; Molina, P. Unmanned aerial systems for photogrammetry and remote sensing: A review. ISPRS J. Photogramm. Remote Sens. 2014, 92, 79–97.

7. Xie, Q.; Dash, J.; Huete, A.; Jiang, A.; Yin, G.; Ding, Y.; Peng, D.; Hall, C.C.; Brown, L.; Shi, Y. Retrieval of crop biophysical parameters from Sentinel-2 remote sensing imagery. Int. J. Appl. Earth Obs. Geoinf. 2019, 80, 187–195.

8. Candiago, S.; Remondino, F.; De Giglio, M.; Dubbini, M.; Gattelli, M. Evaluating multispectral images and vegetation indices for precision farming applications from UAV images. Remote Sens. 2015, 7, 4026–4047.

9. Gevaert, C.M.; Suomalainen, J.; Tang, J.; Kooistra, L. Generation of spectral–temporal response surfaces by combining multispectral satellite and hyperspectral UAV imagery for precision agriculture applications. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2015, 8, 3140–3146.

10. Hunt, E.R., Jr.; Daughtry, C.S. What good are unmanned aircraft systems for agricultural remote sensing and precision agriculture? Int. J. Remote Sens. 2018, 39, 5345–5376.

11. Sun, Q.; Sun, L.; Shu, M.; Gu, X.; Yang, G.; Zhou, L. Monitoring maize lodging grades via unmanned aerial vehicle multispectral image. Plant Phenomics 2019, 2019, 5704154.

12. Gautam, D.; Ostendorf, B.; Pagay, V. Estimation of grapevine crop coefficient using a multispectral camera on an unmanned aerial vehicle. Remote Sens. 2021, 13, 2639.

13. Njane, S.N.; Tsuda, S.; van Marrewijk, B.M.; Polder, G.; Katayama, K.; Tsuji, H. Effect of varying UAV height on the precise estimation of potato crop growth. Front. Plant Sci. 2023, 14, 1233349.

14. Li, B.; Xu, X.; Han, J.; Zhang, L.; Bian, C.; Jin, L.; Liu, J. The estimation of crop emergence in potatoes by UAV RGB imagery. Plant Methods 2019, 15, 15.

15. Zhang, Y.; Li, S.; Wang, S.; Wang, X.; Duan, H. Distributed bearing-based formation maneuver control of fixed-wing UAVs by finite-time orientation estimation. Aerosp. Sci. Technol. 2023, 136, 108241.

16. Zhao, J.; Gao, F.; Jia, W.; Yuan, W.; Jin, W. Integrated sensing and communications for UAV communications with jittering effect. IEEE Wirel. Commun. Lett. 2023, 12, 758–762.

17. Istiak, M.A.; Syeed, M.M.; Hossain, M.S.; Uddin, M.F.; Hasan, M.; Khan, R.H.; Azad, N.S. Adoption of Unmanned Aerial Vehicle (UAV) imagery in agricultural management: A systematic literature review. Ecol. Inform. 2023, 78, 102305.

18. Delavarpour, N.; Koparan, C.; Nowatzki, J.; Bajwa, S.; Sun, X. A technical study on UAV characteristics for precision agriculture applications and associated practical challenges. Remote Sens. 2021, 13, 1204.

19. Kou, J.; Duan, L.; Yin, C.; Ma, L.; Chen, X.; Gao, P.; Lv, X. Predicting leaf nitrogen content in cotton with UAV RGB images. Sustainability 2022, 14, 9259.

20. Aiazzi, B.; Alparone, L.; Baronti, S.; Garzelli, A. Context-driven fusion of high spatial and spectral resolution images based on oversampled multiresolution analysis. IEEE Trans. Geosci. Remote Sens. 2002, 40, 2300–2312.

21. Shi, W.; Zhu, C.; Zhu, C.; Yang, X. Multi-band wavelet for fusing SPOT panchromatic and multispectral images. Photogramm. Eng. Remote Sens. 2003, 69, 513–520.

22. Liu, H.; Sun, H.; Li, M.; Iida, M. Application of color featuring and deep learning in maize plant detection. Remote Sens. 2020, 12, 2229.

23. Cheng, T.; Riaño, D.; Ustin, S.L. Detecting diurnal and seasonal variation in canopy water content of nut tree orchards from airborne imaging spectroscopy data using continuous wavelet analysis. Remote Sens. Environ. 2014, 143, 39–53.

24. Xu, X.; Li, Z.; Yang, X.; Yang, G.; Teng, C.; Zhu, H.; Liu, S. Predicting leaf chlorophyll content and its nonuniform vertical distribution of summer maize by using a radiation transfer model. J. Appl. Remote Sens. 2019, 13, 034505.

25. Zhai, W.; Li, C.; Cheng, Q.; Ding, F.; Chen, Z. Exploring multisource feature fusion and stacking ensemble learning for accurate estimation of maize chlorophyll content using unmanned aerial vehicle remote sensing. Remote Sens. 2023, 15, 3454.

26. Hnizil, O.; Baidani, A.; Khlila, I.; Nsarellah, N.; Laamari, A.; Amamou, A. Integrating NDVI, SPAD, and Canopy Temperature for Strategic Nitrogen and Seeding Rate Management to Enhance Yield, Quality, and Sustainability in Wheat Cultivation. Plants 2024, 13, 1574.

27. Zhu, X.; Yang, Q.; Chen, X.; Ding, Z. An approach for joint estimation of grassland leaf area index and leaf chlorophyll content from UAV hyperspectral data. Remote Sens. 2023, 15, 2525.

28. Zhao, X.; Li, Y.; Chen, Y.; Qiao, X.; Qian, W. Water chlorophyll a estimation using UAV-based multispectral data and machine learning. Drones 2022, 7, 2.

29. Zhou, L.; Nie, C.; Su, T.; Xu, X.; Song, Y.; Yin, D.; Liu, S.; Liu, Y.; Bai, Y.; Jia, X. Evaluating the canopy chlorophyll density of maize at the whole growth stage based on multi-scale UAV image feature fusion and machine learning methods. Agriculture 2023, 13, 895.

30. Yin, H.; Huang, W.; Li, F.; Yang, H.; Li, Y.; Hu, Y.; Yu, K. Multi-temporal UAV imaging-based mapping of chlorophyll content in potato crop. PFG–J. Photogramm. Remote Sens. Geoinf. Sci. 2023, 91, 91–106.

31. Ban, S.; Liu, W.; Tian, M.; Wang, Q.; Yuan, T.; Chang, Q.; Li, L. Rice leaf chlorophyll content estimation using UAV-based spectral images in different regions. Agronomy 2022, 12, 2832.

32. Kandhway, P. A novel adaptive contextual information-based 2D-histogram for image thresholding. Expert Syst. Appl. 2024, 238, 122026.

33. Woebbecke, D.M.; Meyer, G.E.; Von Bargen, K.; Mortensen, D.A. Color indices for weed identification under various soil, residue, and lighting conditions. Trans. ASAE 1995, 38, 259–269.

34. Qian, B.; Shao, W.; Gao, R.; Zheng, W.; Hua, D.; Li, H. The extended digital image correlation based on intensity change model. Measurement 2023, 221, 113416.

35. Huang, Y.; Ma, Q.; Wu, X.; Li, H.; Xu, K.; Ji, G.; Qian, F.; Li, L.; Huang, Q.; Long, Y. Estimation of chlorophyll content in Brassica napus based on unmanned aerial vehicle images. Oil Crop Sci. 2022, 7, 149–155.

36. Gamon, J.; Surfus, J. Assessing leaf pigment content and activity with a reflectometer. New Phytol. 1999, 143, 105–117.

37. Dong, Y.; Fu, Z.; Peng, Y.; Zheng, Y.; Yan, H.; Li, X. Precision fertilization method of field crops based on the Wavelet-BP neural network in China. J. Clean. Prod. 2020, 246, 118735.

38. Zhao, Z.; Feng, G.; Zhang, J. The simplified hybrid model based on BP to predict the reference crop evapotranspiration in Southwest China. PLoS ONE 2022, 17, e0269746.

39. Li, L.; Dai, S.; Cao, Z.; Hong, J.; Jiang, S.; Yang, K. Using improved gradient-boosted decision tree algorithm based on Kalman filter (GBDT-KF) in time series prediction. J. Supercomput. 2020, 76, 6887–6900.

40. Zhang, Z.; Jung, C. GBDT-MO: Gradient-boosted decision trees for multiple outputs. IEEE Trans. Neural Netw. Learn. Syst. 2020, 32, 3156–3167.

41. Yao, H.; Huang, Y.; Wei, Y.; Zhong, W.; Wen, K. Retrieval of chlorophyll-a concentrations in the coastal waters of the Beibu Gulf in Guangxi using a gradient-boosting decision tree model. Appl. Sci. 2021, 11, 7855.

42. Yuan, Z.; Ye, Y.; Wei, L.; Yang, X.; Huang, C. Study on the optimization of hyperspectral characteristic bands combined with monitoring and visualization of pepper leaf SPAD value. Sensors 2021, 22, 183.

43. Li, Y.; Sun, H.; Yan, W.; Zhang, X. Multi-output parameter-insensitive kernel twin SVR model. Neural Netw. 2020, 121, 276–293.

44. Sun, Y.; Ding, S.; Zhang, Z.; Jia, W. An improved grid search algorithm to optimize SVR for prediction. Soft Comput. 2021, 25, 5633–5644.

45. Verma, B.; Prasad, R.; Srivastava, P.K.; Yadav, S.A.; Singh, P.; Singh, R. Investigation of optimal vegetation indices for retrieval of leaf chlorophyll and leaf area index using enhanced learning algorithms. Comput. Electron. Agric. 2022, 192, 106581.

46. Yang, H.; Hu, Y.; Zheng, Z.; Qiao, Y.; Zhang, K.; Guo, T.; Chen, J. Estimation of potato chlorophyll content from UAV multispectral images with stacking ensemble algorithm. Agronomy 2022, 12, 2318.

47. Liu, T.; Li, R.; Zhong, X.; Jiang, M.; Jin, X.; Zhou, P.; Liu, S.; Sun, C.; Guo, W. Estimates of rice lodging using indices derived from UAV visible and thermal infrared images. Agric. For. Meteorol. 2018, 252, 144–154.

48. Sorbelli, F.B.; Palazzetti, L.; Pinotti, C.M. YOLO-based detection of Halyomorpha halys in orchards using RGB cameras and drones. Comput. Electron. Agric. 2023, 213, 108228.

49. Acorsi, M.G.; das Dores Abati Miranda, F.; Martello, M.; Smaniotto, D.A.; Sartor, L.R. Estimating biomass of black oat using UAV-based RGB imaging. Agronomy 2019, 9, 344.

50. Barbosa, B.D.S.; Araújo e Silva Ferraz, G.; Mendes dos Santos, L.; Santana, L.S.; Bedin Marin, D.; Rossi, G.; Conti, L. Application of RGB images obtained by UAV in coffee farming. Remote Sens. 2021, 13, 2397.

51. Qiu, Z.; Ma, F.; Li, Z.; Xu, X.; Ge, H.; Du, C. Estimation of nitrogen nutrition index in rice from UAV RGB images coupled with machine learning algorithms. Comput. Electron. Agric. 2021, 189, 106421.

52. Zhang, Y.; Hong, G. An IHS and wavelet integrated approach to improve pan-sharpening visual quality of natural colour IKONOS and QuickBird images. Inf. Fusion 2005, 6, 225–234.

53. Yocky, D.A. Image merging and data fusion by means of the discrete two-dimensional wavelet transform. J. Opt. Soc. Am. A 1995, 12, 1834–1841.

54. Zhou, J.; Civco, D.L.; Silander, J.A. A wavelet transform method to merge Landsat TM and SPOT panchromatic data. Int. J. Remote Sens. 1998, 19, 743–757.

55. Ranchin, T.; Wald, L. Fusion of high spatial and spectral resolution images: The ARSIS concept and its implementation. Photogramm. Eng. Remote Sens. 2000, 66, 49–61.

56. Vaidya, S.P.; Mouli, P.C. Robust digital color image watermarking based on compressive sensing and DWT. Multimed. Tools Appl. 2024, 83, 3357–3371.

57. Tabassum, F.; Islam, M.I.; Khan, R.T.; Amin, M.R. Human face recognition with combination of DWT and machine learning. J. King Saud Univ.-Comput. Inf. Sci. 2022, 34, 546–556.

58. Hasan, S.; Jahan, S.; Islam, M.I. Disease detection of apple leaf with combination of color segmentation and modified DWT. J. King Saud Univ.-Comput. Inf. Sci. 2022, 34, 7212–7224.

59. Bendjillali, R.I.; Beladgham, M.; Merit, K.; Taleb-Ahmed, A. Improved facial expression recognition based on DWT feature for deep CNN. Electronics 2019, 8, 324.

| Abbreviation | Feature | Formula | Abbreviation | Feature | Formula |
|---|---|---|---|---|---|
| R | R | | GRD [27] | Green–Red Difference | G − R |
| G | G | | ENT [28] | Entropy | |
| B | B | | ENE [29] | Energy | |
| NR | Normalized Red | R/(R + B + G) | COR [30] | Correlation | |
| NG | Normalized Green | G/(R + B + G) | CON [31] | Contrast | |
| NB | Normalized Blue | B/(R + B + G) | UNI [32] | Uniformity | |
| NRGD [33] | Normalized Red–Green Difference | | THM [34] | Third-Order Moment | |
| NRBD [33] | Normalized Red–Blue Difference | | SMO [35] | Smoothness | |
| GRR [27] | Green/Red Ratio | G/R | STD [36] | Standard Deviation | |
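
The color features whose formulas survive in the table above (NR, NG, NB, GRR, GRD) can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: the function name `rgb_color_features` is hypothetical, and it assumes the features are computed from the mean R, G, and B values of all pixels in a plot, which the table does not state. Features whose formulas are missing from the table (NRGD, NRBD, and the texture features) are left out rather than guessed.

```python
def rgb_color_features(pixels):
    """Compute plot-level color features from an iterable of (R, G, B) pixels.

    Assumption (not stated in the source table): each feature is derived
    from the channel means over the whole plot, not computed per pixel.
    """
    pixels = list(pixels)
    if not pixels:
        raise ValueError("at least one pixel is required")

    n = len(pixels)
    r = sum(p[0] for p in pixels) / n  # mean red
    g = sum(p[1] for p in pixels) / n  # mean green
    b = sum(p[2] for p in pixels) / n  # mean blue

    total = r + g + b
    if total == 0 or r == 0:
        # NR, NG, NB divide by R + G + B; GRR divides by R.
        raise ValueError("features are undefined when R or R + G + B is zero")

    return {
        "R": r,
        "G": g,
        "B": b,
        "NR": r / total,   # R/(R + B + G)
        "NG": g / total,   # G/(R + B + G)
        "NB": b / total,   # B/(R + B + G)
        "GRR": g / r,      # G/R
        "GRD": g - r,      # G - R
    }


if __name__ == "__main__":
    # Two made-up pixels standing in for a segmented canopy plot.
    sample_plot = [(50, 100, 50), (70, 140, 70)]
    for name, value in rgb_color_features(sample_plot).items():
        print(f"{name}: {value:.3f}")
```

By construction, the three normalized features sum to 1, so in a regression model any one of them is determined by the other two.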

| Number | Growth stage | Letter | Data type |
|---|---|---|---|
| 1 | Jointing stage | R | Non-fusion data |
| 2 | Tasseling stage | DR | Fusion data |
| 3 | Grain-filling stage | | |
| 4 | Whole growth period | | |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Li, W.; Pan, K.; Huang, Y.; Fu, G.; Liu, W.; He, J.; Xiao, W.; Fu, Y.; Guo, J. Monitoring the Maize Canopy Chlorophyll Content Using Discrete Wavelet Transform Combined with RGB Feature Fusion. Agronomy 2025, 15, 212. https://doi.org/10.3390/agronomy15010212
