1. Introduction

Maize is a major grain crop in China, and improving maize yield depends on breeding excellent new varieties. Because planting density and yield are positively correlated during maize growth [1], breeding varieties suited to high-density planting requires maize phenotypic information from large-scale population trials at different planting densities to assess the performance of different varieties [2]. The male tassel is an important agronomic trait in maize breeding [3,4], so accurate detection and density assessment of maize tassels are of wide significance. They are a prerequisite for the research and development of new agricultural technologies [5] and are key to profiling the performance of different maize varieties and predicting their yield. They help to manage the impact of environmentally controllable factors on maize growth and development and to develop strategies that mitigate the effects of climate change and optimize crop management practices. They also contribute to precision agriculture by allowing farmers to tailor inputs to the specific needs of the crop, reducing waste and increasing efficiency.

Current maize tassel detection mainly relies on manual counting. This approach, however, is subjective, time-consuming, and inefficient, and it cannot scale to the quantitative analysis of crop traits and phenotypes in large trials. In recent years, with the development of drone and computer vision technology, researchers in China and abroad have carried out extensive research on acquiring and recognizing field maize tassels using drones and deep learning. For example, Lu et al. [6] proposed the TasselNet convolutional neural network, built a new dataset, MTC, from visible-light images captured by a high-resolution camera, counted maize tassels accurately and efficiently with a local regression network, and released the tassel dataset publicly. Yu et al. [7] proposed TasselLFANet, a novel lightweight neural network, to accurately and efficiently detect and count maize tassels in the high-temporal-resolution image sequences of the MrMT dataset. Khaki et al. [8] proposed a YOLOv4-based computer vision method to detect wheat heads. Buzzy et al. [9] used the Tiny-YOLOv3 network for accurate real-time localization of leaves. Kurtulmus et al. [10] used support vector machines (SVMs) to detect maize tassels: they manually collected 46 high-resolution RGB images of maize canopies as a small dataset, used SVMs on color information to classify each pixel as tassel or non-tassel, and reached a detection accuracy of 81.6%. Liu et al. [11] built on the Faster R-CNN network, modified the anchor sizes, and detected maize tassels with different backbone feature extraction networks; they concluded that a residual neural network (ResNet) is a better feature extractor for maize tassels than a Visual Geometry Group (VGG) network, but the large number of parameters and FLOPs makes Faster R-CNN slow at detection. Zhang et al. [12] targeted maize seedlings and proposed a lightweight convolutional neural network for seedling detection, which enabled high-throughput acquisition of maize seedlings and supported yield prediction. Liang et al. [13] trained several mainstream detection models, including Faster R-CNN [14], SSD [15], and YOLOv3 [16], on a labeled maize tassel dataset and compared their predictions. Yang et al. [17] improved the CenterNet detection model [18], which raised both the accuracy and the speed of maize tassel detection; however, because field conditions are complex, with strong light and severe leaf occlusion, some small tassel targets cannot be detected, which increases the number of missed and false detections. Zadjali et al. [19] used Faster R-CNN as the base model and modified the intersection-over-union (IoU) threshold between predicted and ground-truth boxes, which improved the precision and recall of the model; however, this did not address the large parameter count of Faster R-CNN. Ji et al. proposed a coarse-to-fine maize tassel detection mechanism based on continuous image acquisition, which widens the range of applications and provides a new approach to maize tassel detection [20]. Mirnezami et al. captured close-up images of maize tassels and used deep learning algorithms to detect, classify, and segment them [21]; they then used image processing techniques to isolate the main spikelets on the tassel to track reproductive development. Falahat et al. proposed a maize tassel detection and counting technique based on an improved YOLOv5n network, which applies an attention mechanism in the backbone and depthwise convolution in the neck so that the model learns more complex features for tassel detection; the improved model raised mAP@0.5 by 6.67% [22].

Planting density and yield are positively correlated during maize growth. Applying density-map estimation to tassel counting allows densely planted tassels to be labeled with points, which greatly reduces the workload of manual annotation, while the generated density maps help researchers understand the spatial distribution of tassels, make it possible to count closely planted maize in a plot, and support quantitative processing in production. However, existing density-assessment methods struggle to perform adequately on field tassel counting tasks. Most current algorithms for maize tassel density estimation generate ground-truth density maps by applying Gaussian kernels to the point-labeled images. Multi-column CNN [23] uses convolution kernels of multiple sizes to adaptively process targets of different sizes and obtain a density map, but its shallow network structure does not learn the varied morphologies of maize tassels well, which limits the achievable accuracy. To further improve counting accuracy, Li et al. [24] proposed a dilated convolutional network (CSRNet) for target recognition in highly congested scenes. This network performs well in target counting, but its linear structure cannot effectively handle variation among tassels, and the fixed dilation rate of its back-end network results in slow inference. Stack-pool (STP) [25] addresses scale invariance through a stacked pooling structure but lacks an efficient back-end network to aggregate and exploit the extracted multi-scale features. Dense Scale Network (DSNet) [26] uses cascaded dilated convolutions with different dilation rates to enhance feature fusion, but its single-column structure limits its accuracy in scale-varying scenes. Multi-task Point Supervision (MPS) [27] uses three VGG16 encoders to obtain feature maps at three scales, which are fed into a dilated convolutional network to improve its handling of scale variation. This method, however, has a high computational cost, and its complex multi-column structure slows inference.
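The Gaussian-kernel step that density-map methods share can be illustrated with a short sketch. It assumes point annotations given as (x, y) pixel coordinates and a fixed kernel width `sigma`; the function name `gaussian_density_map` and the default values are hypothetical, and published counting methods often use adaptive, geometry-aware kernels instead. Each annotated tassel contributes a normalized Gaussian, so the density map sums to the number of tassels.

```python
import numpy as np


def gaussian_density_map(points, height, width, sigma=4.0):
    """Turn point annotations into a density map whose sum equals the point count.

    points: iterable of (x, y) pixel coordinates (hypothetical annotation format).
    sigma: fixed Gaussian width in pixels (an assumed value, not taken from the paper).
    """
    radius = int(3 * sigma)  # truncate the kernel at about 3 sigma
    density = np.zeros((height, width), dtype=np.float64)
    for x, y in points:
        # Clip the annotation to a valid pixel position.
        cx = min(max(int(round(x)), 0), width - 1)
        cy = min(max(int(round(y)), 0), height - 1)
        y0, y1 = max(cy - radius, 0), min(cy + radius + 1, height)
        x0, x1 = max(cx - radius, 0), min(cx + radius + 1, width)
        ys = np.arange(y0, y1)[:, None]
        xs = np.arange(x0, x1)[None, :]
        patch = np.exp(-((ys - cy) ** 2 + (xs - cx) ** 2) / (2.0 * sigma ** 2))
        # Renormalize the (possibly border-truncated) kernel so each tassel adds exactly 1.
        density[y0:y1, x0:x1] += patch / patch.sum()
    return density


# Three annotated tassels, one of them at the image corner.
dm = gaussian_density_map([(10, 10), (50, 30), (0, 0)], height=64, width=64)
print(dm.shape, round(float(dm.sum()), 6))
```

Renormalizing each truncated kernel keeps the count exact even for tassels near the image border, at the cost of slightly distorting the kernel shape there.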

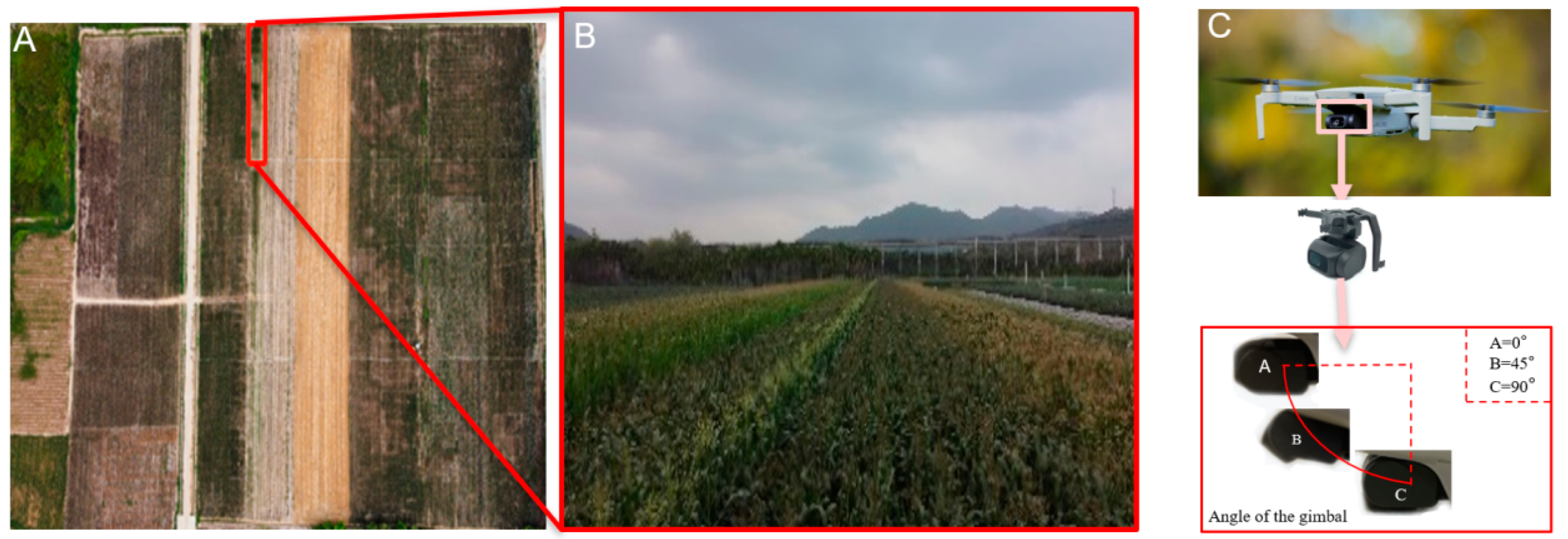

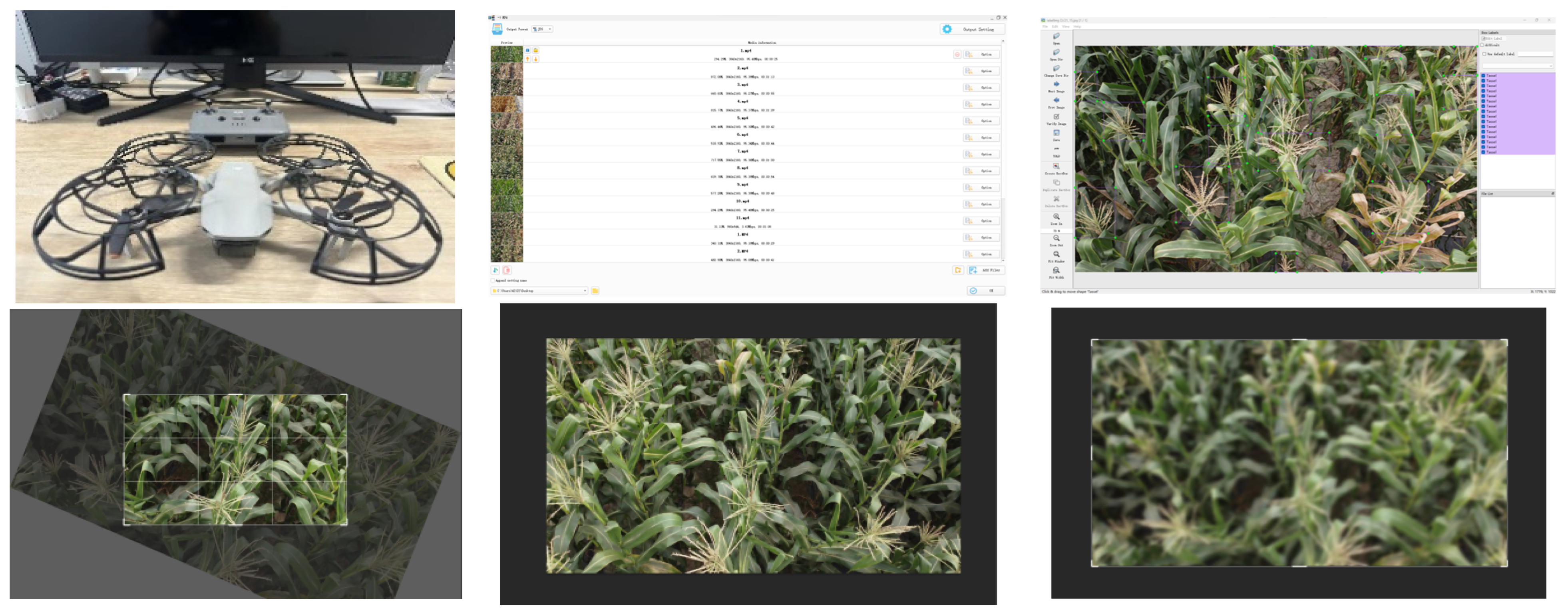

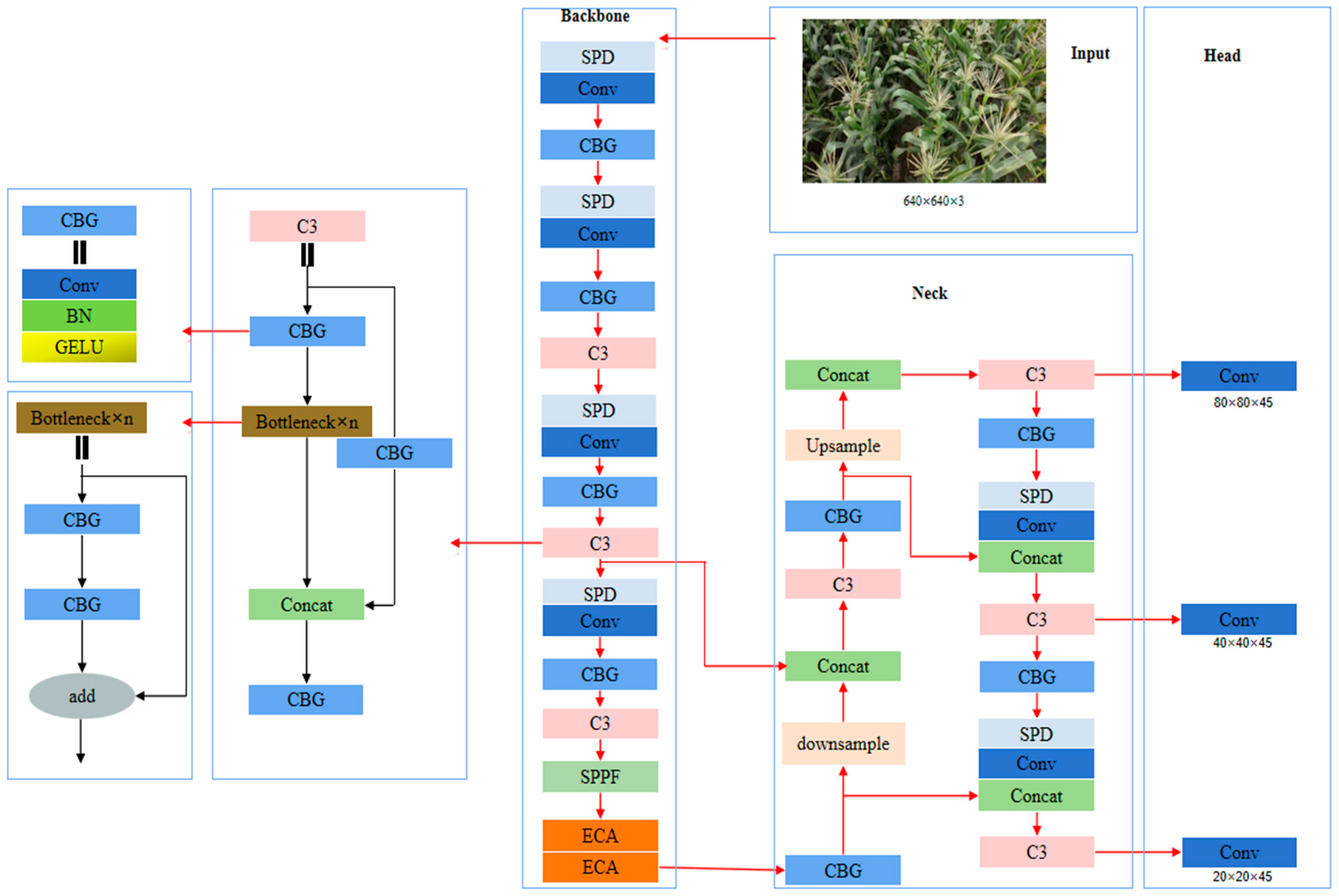

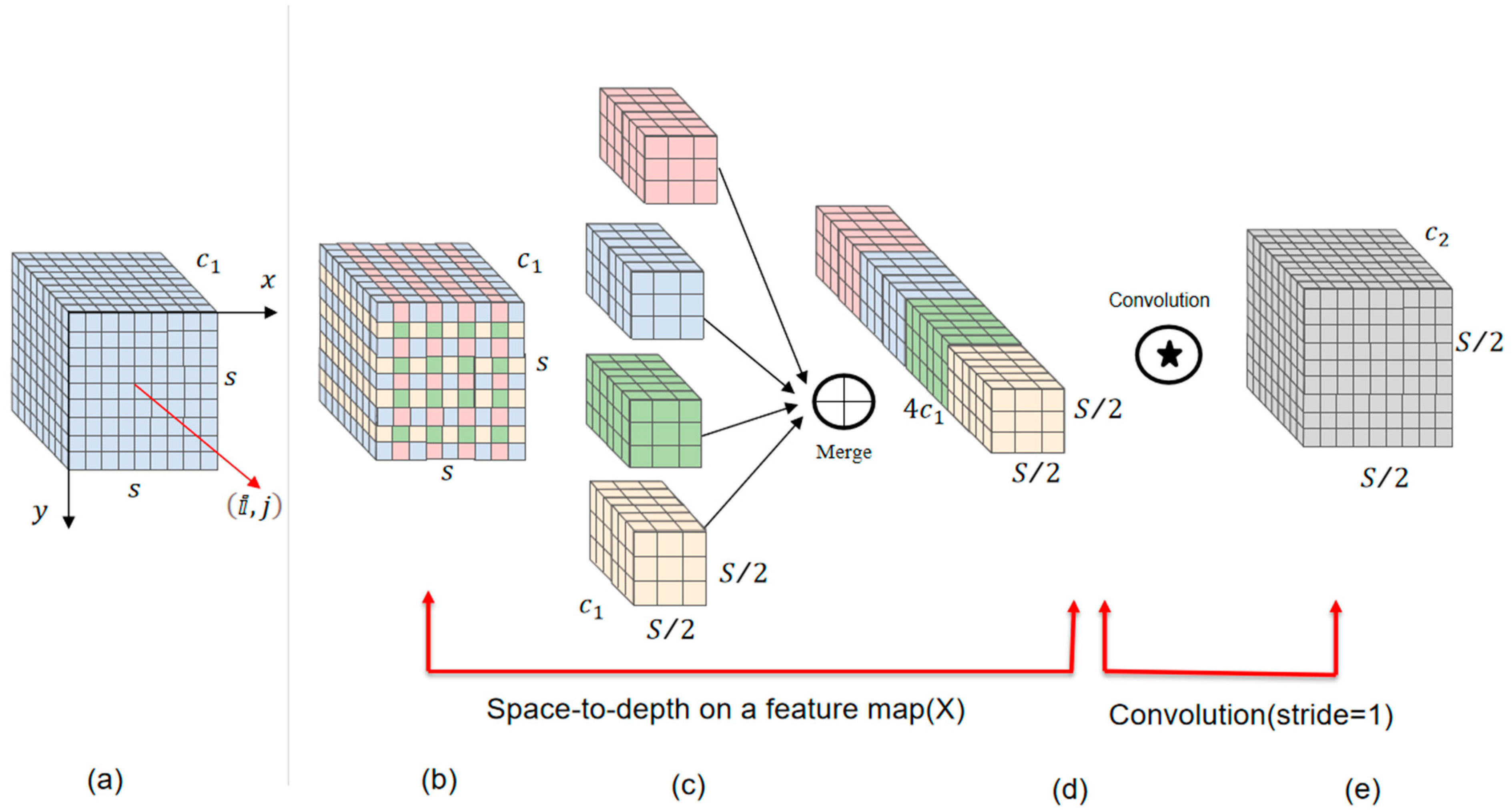

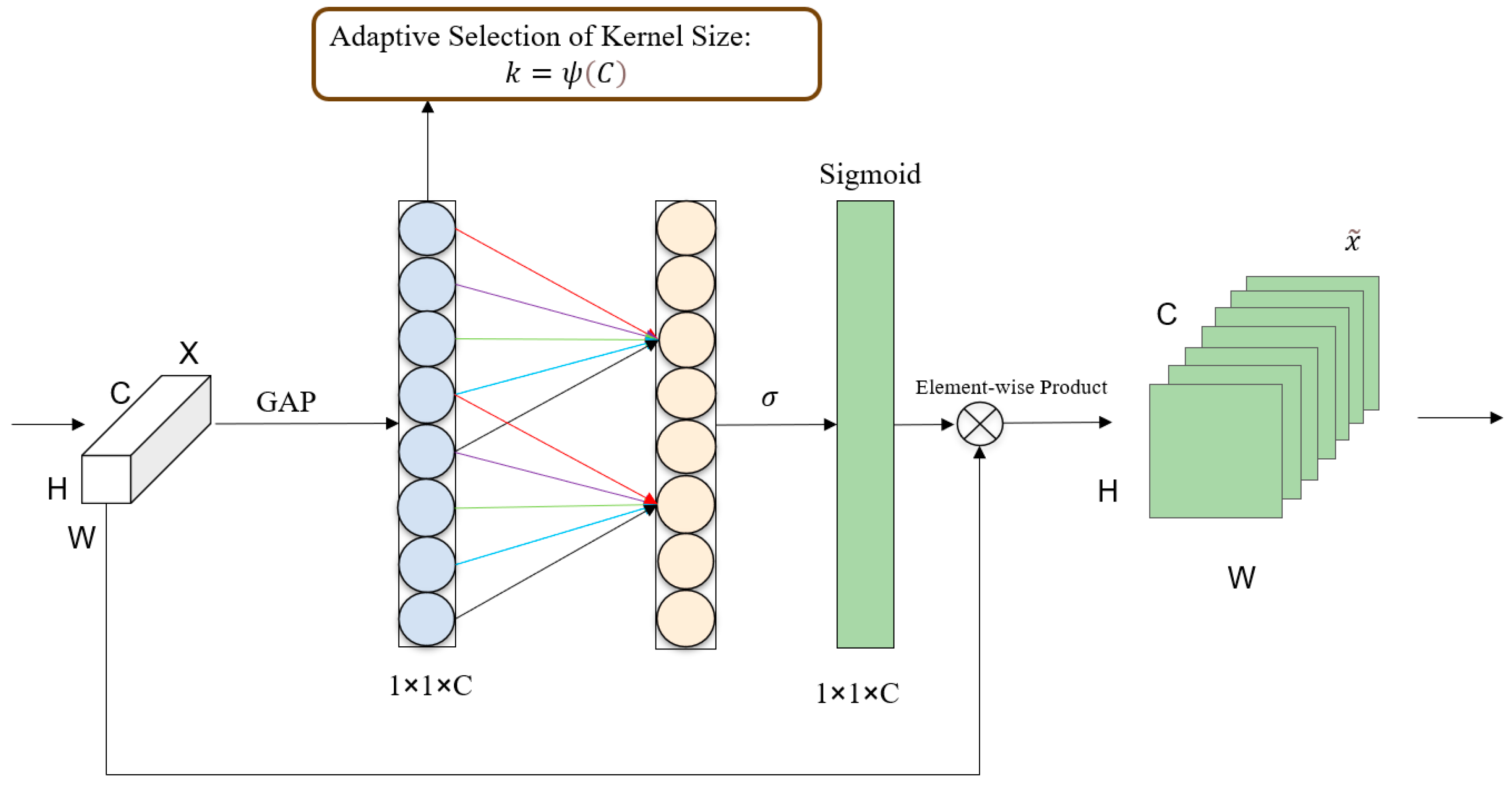

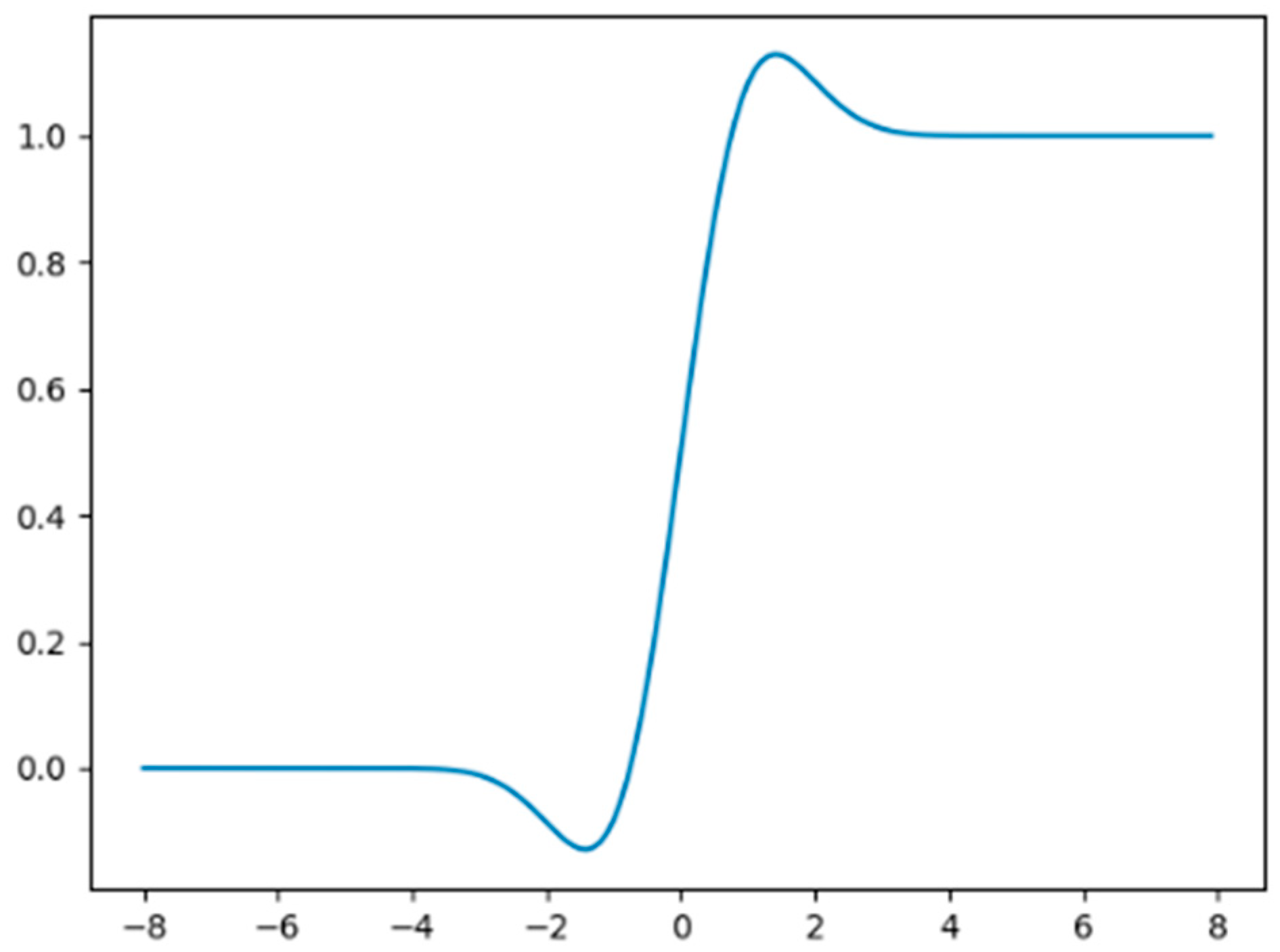

In summary, applying density-map-based methods directly to maize tassels may lead to serious errors for three reasons. First, tassels differ in size across growth stages, and capturing images at different distances from the tassels changes their appearance considerably, so tassels appear with different shapes and sizes. Second, the complex field background contains many uncontrollable objects that occlude tassels and interfere with counting. Third, achieving fast, direct assessment while maintaining counting accuracy is very challenging, and existing networks have difficulty reconciling these two goals. To address scale variation in maize tassel images, this study adds SPD-Conv to the feature extraction network. SPD-Conv (space-to-depth convolution) replaces strided convolution and pooling with a space-to-depth layer followed by a non-strided convolution, so downsampling discards no fine-grained information, which makes it effective for low-resolution images and small object detection. To address complex background interference, this study adds a dual ECA attention module that suppresses ambiguous features by emphasizing salient ones. This module applies a fast one-dimensional convolution directly after global average pooling instead of fully connected layers, which avoids dimensionality reduction and captures cross-channel interactions efficiently. To balance efficiency and accuracy, this study uses the GELU activation function, which provides relatively stable detection that is not prone to large errors.
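A minimal NumPy sketch of the space-to-depth idea behind SPD-Conv follows. It assumes a single (C, H, W) feature map with H and W divisible by the scale, and, for brevity, replaces the general non-strided convolution that follows the space-to-depth step with a 1 × 1 (pointwise) projection. The names `space_to_depth` and `spd_conv` are illustrative and are not the ESG-YOLO code.

```python
import numpy as np


def space_to_depth(x, scale=2):
    """Rearrange a (C, H, W) map into (C * scale**2, H // scale, W // scale) without dropping pixels."""
    _, h, w = x.shape
    if h % scale or w % scale:
        raise ValueError("H and W must be divisible by scale")
    # Each sub-map x[:, i::scale, j::scale] keeps every scale-th pixel starting at offset (i, j);
    # together the sub-maps cover every input pixel exactly once.
    subs = [x[:, i::scale, j::scale] for i in range(scale) for j in range(scale)]
    return np.concatenate(subs, axis=0)


def spd_conv(x, weight, scale=2):
    """Space-to-depth followed by a stride-1 pointwise (1 x 1) convolution.

    weight: (C_out, C * scale**2) matrix; a 1 x 1 convolution is a per-pixel matrix product.
    SPD-Conv itself uses a general non-strided convolution; 1 x 1 is a simplification here.
    """
    z = space_to_depth(x, scale)
    return np.einsum("oc,chw->ohw", weight, z)


x = np.arange(2 * 4 * 4, dtype=np.float64).reshape(2, 4, 4)
z = space_to_depth(x)
y = spd_conv(x, np.ones((3, 8)))
print(z.shape, y.shape)
```

Unlike a stride-2 convolution, which skips pixels between kernel positions, the space-to-depth step moves every pixel into the channel dimension, so small tassels covering only a few pixels are not lost during downsampling.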
In this study, the ESG-YOLO model was constructed on the basis of YOLOv7 by replacing the SiLU activation function with GELU and adding the dual ECA attention module and the SPD-Conv module, for the detection of maize tassels in complex field environments. The method accurately identifies and counts maize tassels against complex backgrounds. Combined with effective determination of the tasseling stage, it can support field management of maize growth and yield estimation, provide useful feedback for future breeding selection and agricultural robot operations, and improve the accuracy and efficiency of maize tassel phenotype detection.
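To make the activation swap concrete, the sketch below evaluates GELU and SiLU (YOLOv7's original activation) on a few inputs using only Python's standard library. It uses the exact erf-based form of GELU, which is the default in PyTorch's `nn.GELU`; a tanh approximation also exists, and which form ESG-YOLO uses is not stated in the text.

```python
import math


def gelu(x: float) -> float:
    """Exact GELU: x * Phi(x), where Phi is the standard normal CDF."""
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))


def silu(x: float) -> float:
    """SiLU (Swish): x * sigmoid(x), written to avoid overflow for large negative x."""
    if x >= 0:
        return x / (1.0 + math.exp(-x))
    e = math.exp(x)
    return x * e / (1.0 + e)


for v in (-3.0, -1.0, 0.0, 1.0, 3.0):
    print(f"x={v:+.1f}  GELU={gelu(v):+.4f}  SiLU={silu(v):+.4f}")
```

Both functions are smooth and approach the identity for large positive inputs; they differ mainly in how they weight small negative inputs, where GELU gates by the Gaussian CDF and SiLU by the logistic sigmoid.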

4. Experimental Results and Analysis

4.1. Experimental Equipment and Parameter Settings

The experiments were run on a Dell Tower Workstation (Dell, Inc.; Round Rock, TX, USA) with the Windows 11 operating system, a 12th Gen Intel(R) Core(TM) i5-12500 processor at 3.00 GHz, 32 GB of RAM, and a 1 TB SSD. The graphics card is an NVIDIA GeForce RTX 3080 with 10 GB of video memory for GPU-accelerated computing. The software environment consisted of Python 3.9.13, PyTorch 1.7.0, Torchvision 0.8.2, and CUDA 11.0.

The model was trained iteratively on the constructed training set, and the training curves of the optimized model track two kinds of loss: box loss and objectness loss. Box loss measures how well the predicted box covers the labeled ground-truth box, and objectness loss measures the predicted probability that a target exists in the region of interest. Both losses decrease rapidly in the early stages of training. In addition, Figure 7 shows the precision, recall, and average precision of the model during training. All three improve rapidly, the curves begin to flatten after 300 iterations, and training stops after 500 iterations.
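Box loss, and the matching of detections to ground truth used later for mAP@0.5, both rest on the intersection over union (IoU) between a predicted and a ground-truth box. The sketch below computes plain IoU for corner-format boxes and a simple `1 - IoU` loss. YOLOv7's reference implementation uses a CIoU-based box loss, which adds center-distance and aspect-ratio terms not shown here, so `simple_box_loss` is a hypothetical simplification.

```python
def iou(box_a, box_b):
    """Intersection over union of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    # Overlap width and height are clamped at zero for disjoint boxes.
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def simple_box_loss(pred, target):
    """Illustrative IoU loss: 0 for a perfect match, 1 for no overlap."""
    return 1.0 - iou(pred, target)


# Two 2 x 2 boxes overlapping in a 1 x 1 square: IoU = 1 / (4 + 4 - 1).
print(iou((0, 0, 2, 2), (1, 1, 3, 3)))
```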

In this paper, the performance of the model is evaluated in terms of precision, recall, F1 score, and average precision, as shown in Equations (3)–(7), where TP denotes the number of positive samples correctly predicted as positive, FP the number of negative samples incorrectly predicted as positive, and FN the number of positive samples incorrectly predicted as negative.

Precision is the proportion of samples predicted as positive that are truly positive, calculated as in Equation (3):

$$P = \frac{TP}{TP + FP} \tag{3}$$

Recall is the proportion of actual positive samples that are correctly predicted as positive, calculated as in Equation (4):

$$R = \frac{TP}{TP + FN} \tag{4}$$

The F1 score is the harmonic mean of precision and recall, combining the two into a single measure, and is calculated as in Equation (5):

$$F1 = \frac{2 \times P \times R}{P + R} \tag{5}$$
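Equations (3)–(5) translate directly into code. The sketch below computes precision, recall, and F1 from detection counts; the function name and the example counts are hypothetical, not results from this study.

```python
def precision_recall_f1(tp: int, fp: int, fn: int):
    """Precision, recall and F1 from detection counts, guarding against empty denominators."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# Hypothetical counts: 90 correct detections, 10 false alarms, 30 missed tassels.
p, r, f1 = precision_recall_f1(tp=90, fp=10, fn=30)
print(f"P={p:.3f} R={r:.3f} F1={f1:.3f}")
```

Because F1 is a harmonic mean, it is pulled toward the smaller of the two values: here it is about 0.818, below the arithmetic mean of 0.825.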

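Average precision (AP) is the area under the precision-recall (PR) curve, plotted with precision on the y-axis and recall on the x-axis, and the mean average precision (mAP) averages AP over the N detected classes, as in Equations (6) and (7):

$$AP = \int_{0}^{1} P(R)\,dR \tag{6}$$

$$mAP = \frac{1}{N}\sum_{i=1}^{N} AP_i \tag{7}$$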
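The area under the PR curve can be computed as below, assuming each detection has already been marked TP or FP by IoU matching against the ground truth (for example, at IoU ≥ 0.5 for mAP@0.5). This is the all-point interpolation used in PASCAL VOC; COCO-style evaluation and many YOLO codebases instead sample 101 recall points, so values may differ slightly from those reported in the paper. The function names are hypothetical.

```python
def average_precision(scores, is_tp, num_gt):
    """All-point interpolated AP (area under the PR curve) for one class.

    scores: confidence of each detection; is_tp: whether that detection matched a
    not-yet-matched ground-truth box at the chosen IoU threshold; num_gt: ground-truth count.
    """
    if num_gt == 0:
        return 0.0
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    tp = fp = 0
    recalls, precisions = [], []
    for i in order:  # sweep the confidence threshold from high to low
        if is_tp[i]:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_gt)
        precisions.append(tp / (tp + fp))
    # Sentinel points at recall 0 and recall 1.
    mrec = [0.0] + recalls + [1.0]
    mpre = [0.0] + precisions + [0.0]
    # Precision envelope: make precision non-increasing from right to left.
    for k in range(len(mpre) - 2, -1, -1):
        mpre[k] = max(mpre[k], mpre[k + 1])
    # Sum rectangle areas wherever recall increases (zero-width steps add nothing).
    return sum((mrec[k] - mrec[k - 1]) * mpre[k] for k in range(1, len(mrec)))


def mean_average_precision(ap_per_class):
    """mAP: mean of per-class AP values (a single tassel class gives mAP = AP)."""
    return sum(ap_per_class) / len(ap_per_class)


# Three detections for two ground-truth tassels: TP, FP, TP in descending confidence.
ap = average_precision([0.9, 0.8, 0.7], [True, False, True], num_gt=2)
print(round(ap, 4))
```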

Precision and recall are complementary evaluation metrics for binary classification problems: they measure the correctness and the coverage of the model's predictions and directly reflect how well the model performs in the experiments. Their harmonic mean, the F1 score, combines the two and gives a comprehensive evaluation of the model, especially on imbalanced datasets. Average precision is a key metric in model evaluation; it is related to precision but also accounts for the ranking quality of predictions across confidence thresholds.

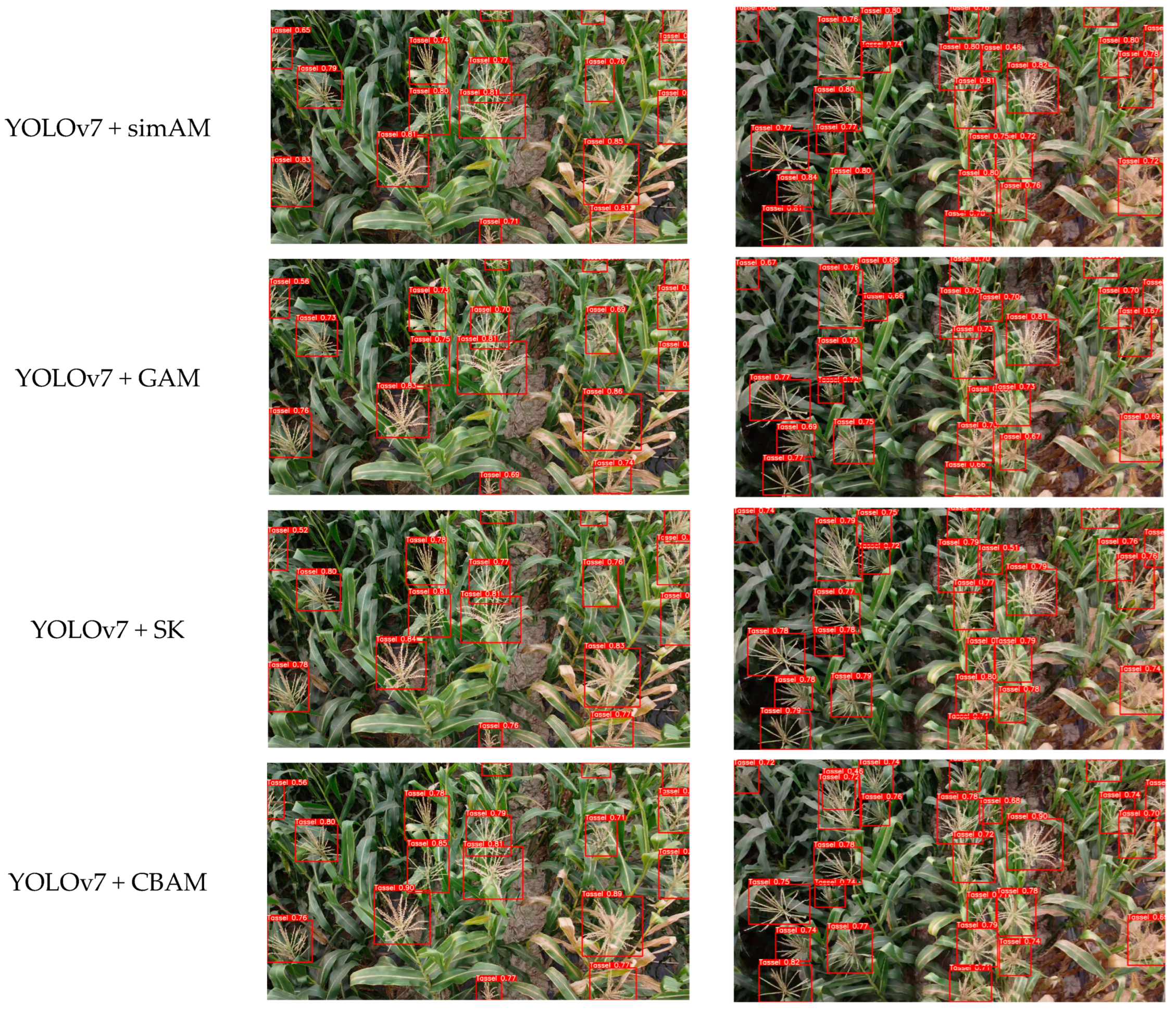

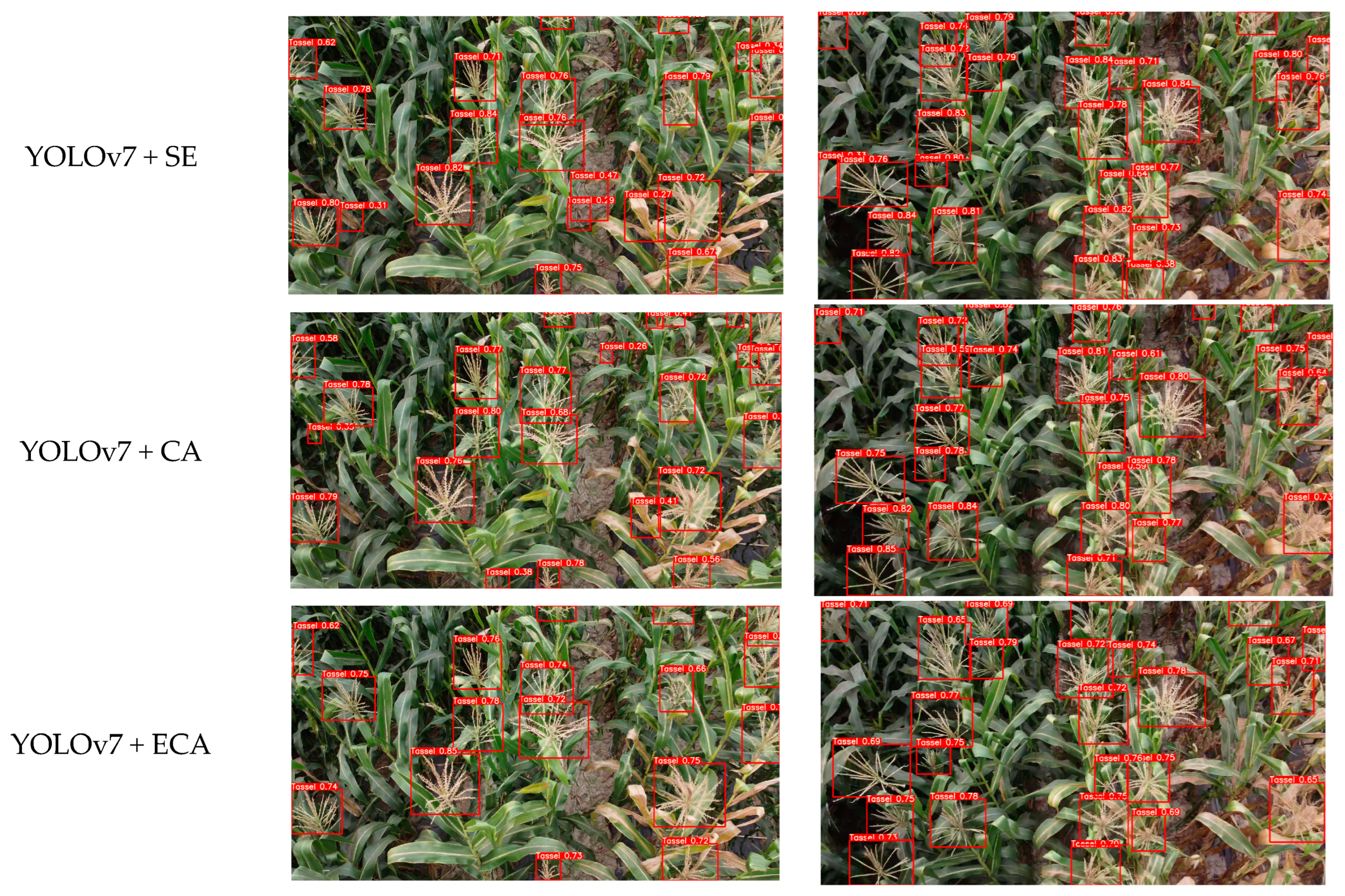

4.2. Comparison of Attention Mechanism Training

To verify the optimization effect of different attention modules on ESG-YOLO, seven attention modules were selected: ECA, simAM [35], CBAM [36], GAM [37], SK [38], SE [39], and CA [40]. The ECA, SE, CA, simAM, CBAM, GAM, and SK modules were each added to the backbone feature network of YOLOv7 to obtain the YOLOv7 + ECA, YOLOv7 + SE, YOLOv7 + CA, YOLOv7 + simAM, YOLOv7 + CBAM, YOLOv7 + GAM, and YOLOv7 + SK networks. The precision, recall, F1, and AP values under the different attention mechanisms are compared in Table 1.

As Table 1 shows, introducing an attention mechanism improves most metrics, and the ECA module contributes most to the network. The average precision of the original YOLOv7 network is 90.8%, and that of YOLOv7 + ECA is 92%, an improvement of 1.2 percentage points; its precision, recall, and F1 change by 3.3, 1, and −1 percentage points, respectively. Although F1 decreases slightly, precision and recall (correctness and coverage) both improve, resulting in a clear increase in average precision. The average precision of YOLOv7 + simAM is 91.4%, an increase of 0.6 percentage points; its precision, recall, and F1 change by 7.2, 0, and 0 percentage points, respectively, so this network improves precision but not coverage. The average precision of YOLOv7 + CBAM is 91.9%, an increase of 1.1 percentage points; its precision, recall, and F1 change by 2.9, 2, and 0 percentage points, respectively, so both precision and coverage improve and average precision rises accordingly. The average precision of YOLOv7 + GAM is 90.9%, an increase of 0.1 percentage points; its precision, recall, and F1 change by 4.6, 0, and 0 percentage points, respectively, so only precision improves. The average precision of YOLOv7 + SK is 90.7%, a decrease of 0.1 percentage points; its precision, recall, and F1 change by 7.6, −1, and 0 percentage points, respectively, so precision improves significantly but coverage decreases, which lowers average precision. YOLOv7 + SE and YOLOv7 + CA perform poorly. These results show that, except for the SK, CA, and SE modules, introducing an attention mechanism improves the extraction of maize tassel feature information, and they confirm the effectiveness of adding the ECA attention mechanism to YOLOv7.

Heat-map visualizations of the detection process for the network models with each of the seven attention mechanisms are shown in Table 2. After the attention mechanisms are added, the attention of each network model becomes broader and deeper. In single-plant heat maps, SK, CA, and SE are slightly less effective than ECA, SimAM, CBAM, and GAM. In two-plant heat maps, GAM is slightly worse than ECA, SimAM, and CBAM, while under dense, sparse, and weak-light conditions ECA is more effective than SimAM and CBAM. The experimental results and visualizations show that adding the ECA attention mechanism to the network model effectively improves the overall detection accuracy of the network.

As shown in Figure 8, the detection results differ significantly once attention modules are added, and in the cluttered field environment the network with the ECA module detects tassels best.

4.3. Ablation Experiment

Ablation experiments were performed on the constructed dataset, and the results are shown in Table 3. YOLOv7 serves as the baseline model and is compared with the following improved models: YOLOv7 with the SPD-Conv module; YOLOv7 with ECA attention; YOLOv7 with GELU replacing its original activation function, SiLU; and YOLOv7 with GELU, the SPD-Conv module, and ECA combined. These comparisons verify the contribution of each module. As shown in the table, adding the SPD-Conv module to the backbone increased mAP@0.5 by 0.6 percentage points compared with YOLOv7, and adding the attention mechanism to YOLOv7 improved mAP@0.5 by 1.2 percentage points. The ECA attention module enhances the discriminative information in the image features and makes feature extraction more robust, while increasing the number of parameters by less than 0.01, because the ECA module itself is lightweight. Replacing the activation function of YOLOv7 with GELU improved recall. Replacing the activation function with GELU and adding both the SPD-Conv backbone module and the ECA attention module improved mAP@0.5 by 2.3 percentage points. Finally, a second ECA attention module was added on top of YOLOv7 + ECA + SPD + GELU to obtain the final model with dual ECA attention, which is 7.3 percentage points more accurate than the single-ECA model and offers greater fault tolerance for future lightweight applications. Overall, the improved model outperforms the other models.
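The ECA idea can be sketched in NumPy for a single (C, H, W) feature map: global average pooling, a one-dimensional convolution of odd size k across neighbouring channels, and a sigmoid gate. The kernel-size rule below (gamma = 2, b = 1) follows the adaptive formula of the ECA-Net paper; the function names and the random example weights are hypothetical, and in a trained network the k weights are learned. The sketch also shows why the parameter increase reported above is so small: one ECA block holds only k weights (no bias, no fully connected layers).

```python
import math

import numpy as np


def eca_kernel_size(channels: int, gamma: int = 2, b: int = 1) -> int:
    """ECA-Net kernel size: truncate |(log2(C) + b) / gamma|, then bump to the next odd value if even."""
    t = int(abs((math.log2(channels) + b) / gamma))
    return t if t % 2 else t + 1


def eca(x: np.ndarray, weight: np.ndarray) -> np.ndarray:
    """Efficient Channel Attention on a (C, H, W) map; `weight` is the 1D kernel of odd length k."""
    c = x.shape[0]
    k = weight.shape[0]
    pooled = x.mean(axis=(1, 2))      # global average pooling -> (C,)
    padded = np.pad(pooled, k // 2)   # zero padding keeps C outputs
    # Cross-channel 1D convolution (cross-correlation, as in deep-learning frameworks);
    # no channel dimensionality reduction takes place.
    logits = np.array([weight @ padded[i:i + k] for i in range(c)])
    gate = 1.0 / (1.0 + np.exp(-logits))  # sigmoid -> per-channel weights in (0, 1)
    return x * gate[:, None, None]


C = 64
k = eca_kernel_size(C)  # the number of learnable parameters in this ECA block
rng = np.random.default_rng(0)
features = rng.standard_normal((C, 8, 8))
out = eca(features, rng.standard_normal(k))
print(k, out.shape)
```

A dual-ECA design would simply apply two such blocks at different points in the network, adding 2k weights in total.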

4.4. Comparison of Different Algorithms

To evaluate the ESG-YOLO model, it was compared with four advanced target detection models: YOLOv5, YOLOv7, MobileOne-YOLOv7 [41], and Ghostnet-YOLOv7 [42]. The detection results of each model are shown in Table 4. Compared with these models, the improved model has advantages in mAP@0.5 and in the number of parameters. In particular, the added SPD-Conv module makes the overall model more accurate in the small-target detection layer. The comparison shows that the proposed ESG-YOLO is the most accurate and stable model for detecting maize tassels in complex environments.

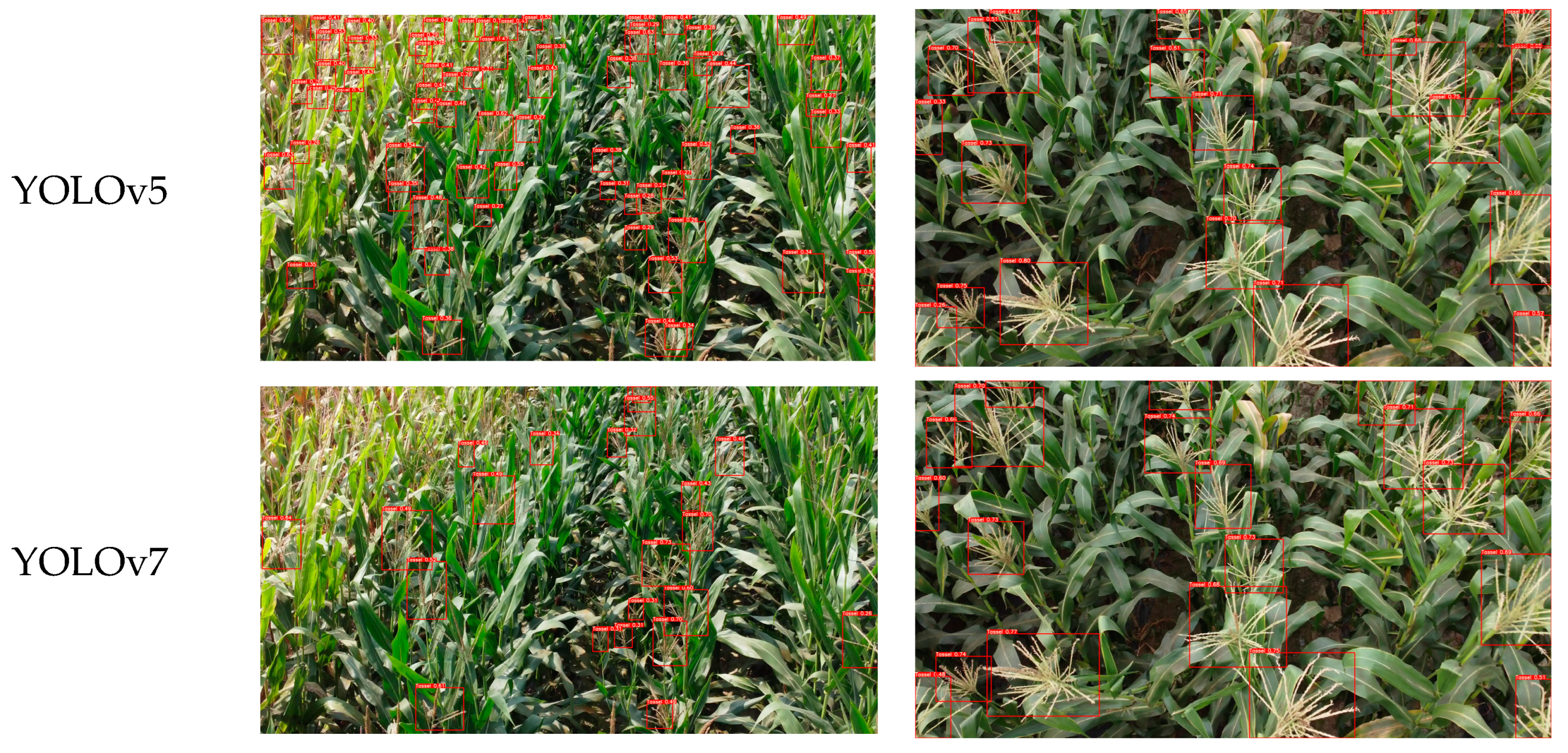

As Figure 9 shows, YOLOv5 and YOLOv7 have slightly lower detection performance than the other models, while the two lightweight YOLO variants, Ghostnet-YOLOv7 and MobileOne-YOLOv7, produce some false detections. Overall, ESG-YOLO gives the best detection results.

4.5. Test Results of Variety and Density

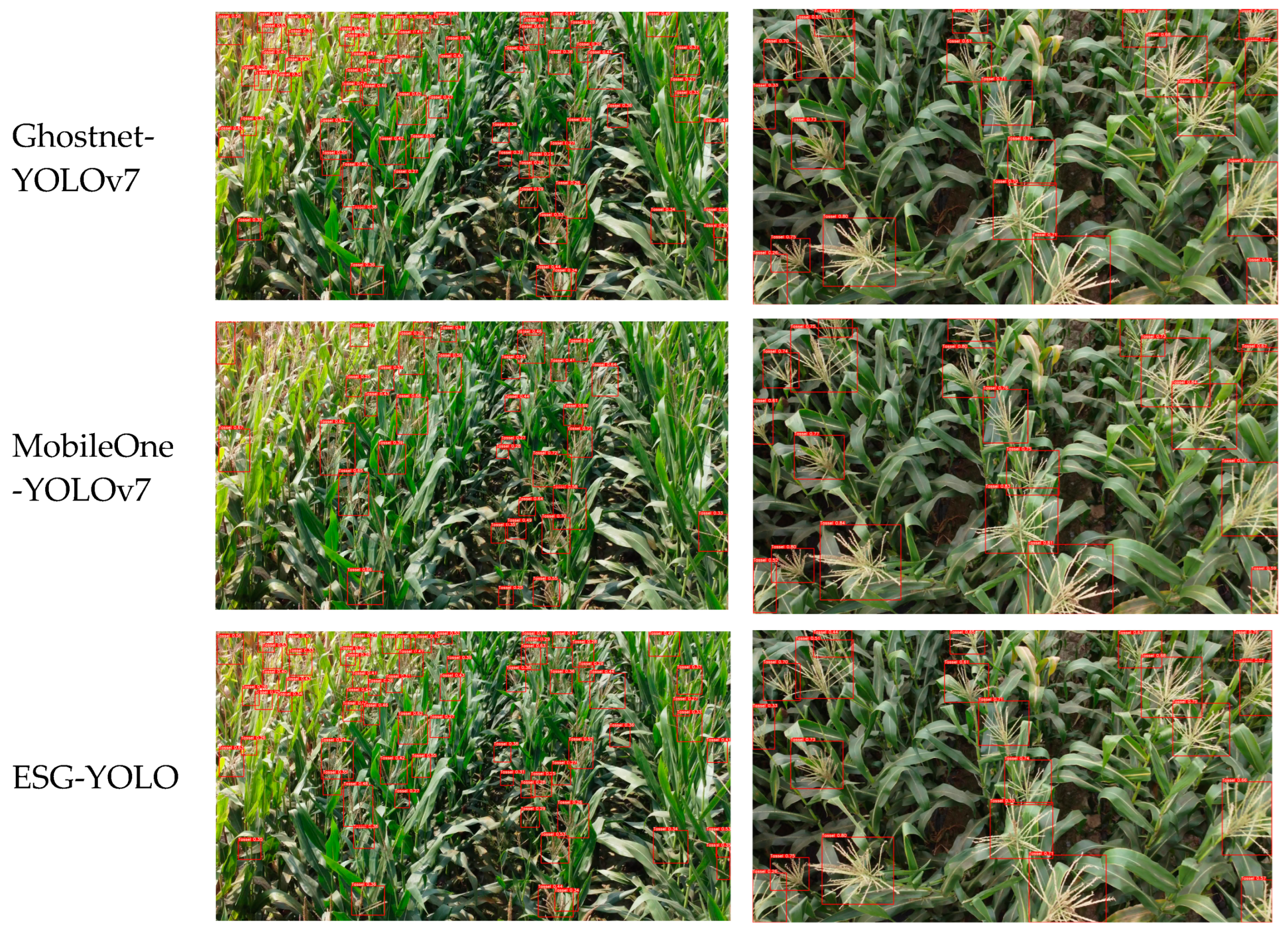

The field environment is complex and varied, and maize tassel detection often encounters problems such as occlusion, strong light, dense growth, immature tassels, and missed detections. ESG-YOLO was applied under these complex field conditions and, as shown in Figure 10, detected maize tassels very well.

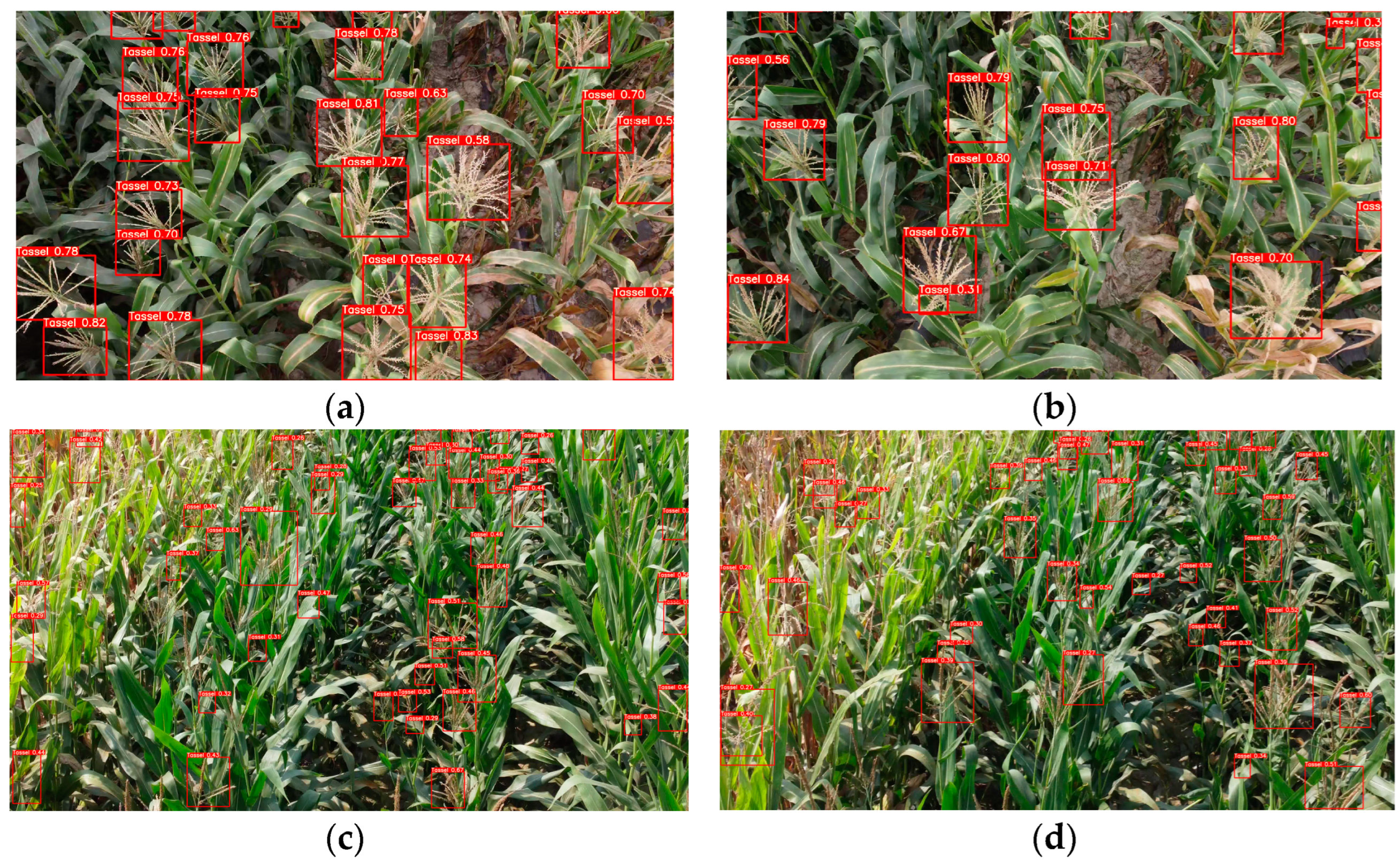

As shown in Figure 11, five models (YOLOv5, YOLOv7, Ghostnet-YOLOv7, MobileOne-YOLOv7, and ESG-YOLO) were used to detect the tassels of each maize variety, and linear regression analyses were carried out between the number of manually labeled ground-truth boxes and the model detection results. For Tongfeng 162, the coefficients of determination R² were 0.898, 0.8057, 0.9168, 0.8973, and 0.9823 for YOLOv5, YOLOv7, Ghostnet-YOLOv7, MobileOne-YOLOv7, and ESG-YOLO, respectively, making it the variety to which the models adapted worst among the three. For Kimberly 15, R² was 0.8999, 0.9741, 0.9807, 0.8986, and 0.9879, respectively, making it the variety to which the models adapted best. Among the five models, ESG-YOLO achieved R² values of 0.9828, 0.9831, and 0.9879 for the three varieties, the best detection performance of all models, which also provides a prerequisite for later maize yield assessment and prediction.
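The regression analysis can be sketched in plain Python: fit a least-squares line between manually labeled and detected per-image counts and report R² = 1 − SS_res / SS_tot. For a simple linear fit with an intercept, R² equals the squared Pearson correlation, so it does not matter which count is placed on which axis. The function name and the example counts are hypothetical.

```python
def linear_fit_r2(manual, detected):
    """Least-squares line detected = a + b * manual, and its coefficient of determination R^2."""
    n = len(manual)
    if n != len(detected) or n < 2:
        raise ValueError("need two equal-length sequences with at least two points")
    mx = sum(manual) / n
    my = sum(detected) / n
    sxx = sum((x - mx) ** 2 for x in manual)
    syy = sum((y - my) ** 2 for y in detected)  # total sum of squares, SS_tot
    if sxx == 0 or syy == 0:
        raise ValueError("counts must vary for R^2 to be defined")
    sxy = sum((x - mx) * (y - my) for x, y in zip(manual, detected))
    slope = sxy / sxx
    intercept = my - slope * mx
    ss_res = sum((y - (intercept + slope * x)) ** 2 for x, y in zip(manual, detected))
    return slope, intercept, 1.0 - ss_res / syy


# Hypothetical per-image counts (not data from the paper).
manual = [12, 18, 25, 31, 40]
detected = [11, 18, 24, 30, 41]
print(linear_fit_r2(manual, detected))
```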

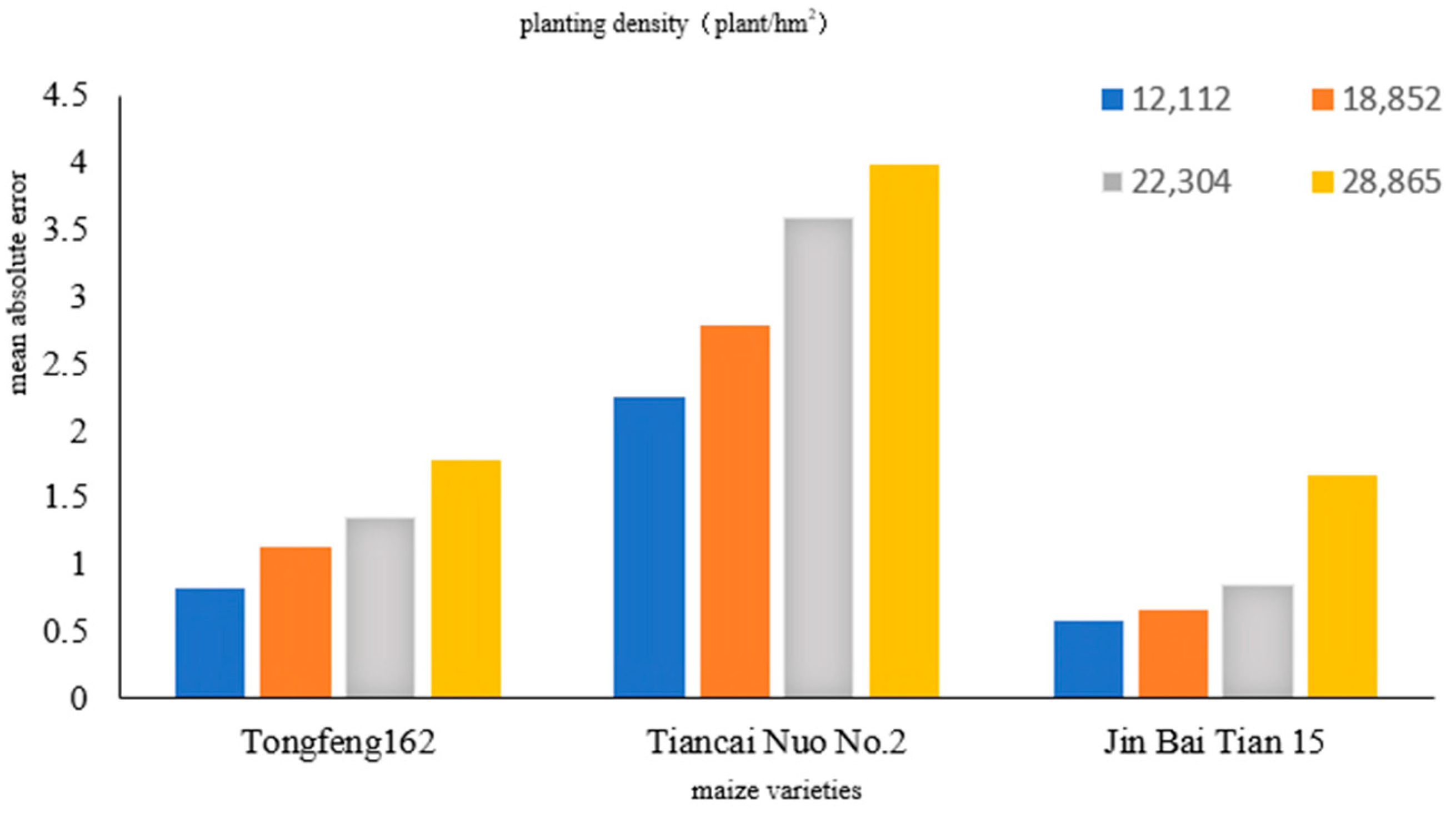

To verify how well the ESG-YOLO model recognizes maize tassels at different planting densities, tassel images of the same variety at different planting densities were detected, and the mean absolute error (MAE) was calculated as in Formula (8):

$$MAE = \frac{1}{N}\sum_{i=1}^{N}\left|\hat{y}_i - y_i\right| \tag{8}$$

where N is the number of images, $\hat{y}_i$ is the number of tassels detected by the model in the i-th image, and $y_i$ is the corresponding manually labeled number of tassels.
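As a sketch, assuming the per-image error is the difference between the number of tassels the model detects and the number counted manually (consistent with how the MAE values are interpreted in the results that follow), MAE can be computed as below. The function name and the counts are hypothetical.

```python
def mean_absolute_error(detected_counts, manual_counts):
    """Average of |detected - manual| over N images."""
    if len(detected_counts) != len(manual_counts) or not detected_counts:
        raise ValueError("need two non-empty sequences of equal length")
    total = sum(abs(d - m) for d, m in zip(detected_counts, manual_counts))
    return total / len(detected_counts)


# Hypothetical per-image counts for one variety at one planting density (not data from the paper).
detected = [10, 12, 8, 15]
manual = [11, 12, 10, 15]
print(mean_absolute_error(detected, manual))
```

Because the absolute value is taken per image, over-counting in one image cannot cancel under-counting in another, which is why MAE rises as occlusion at higher densities causes more miscounts in either direction.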

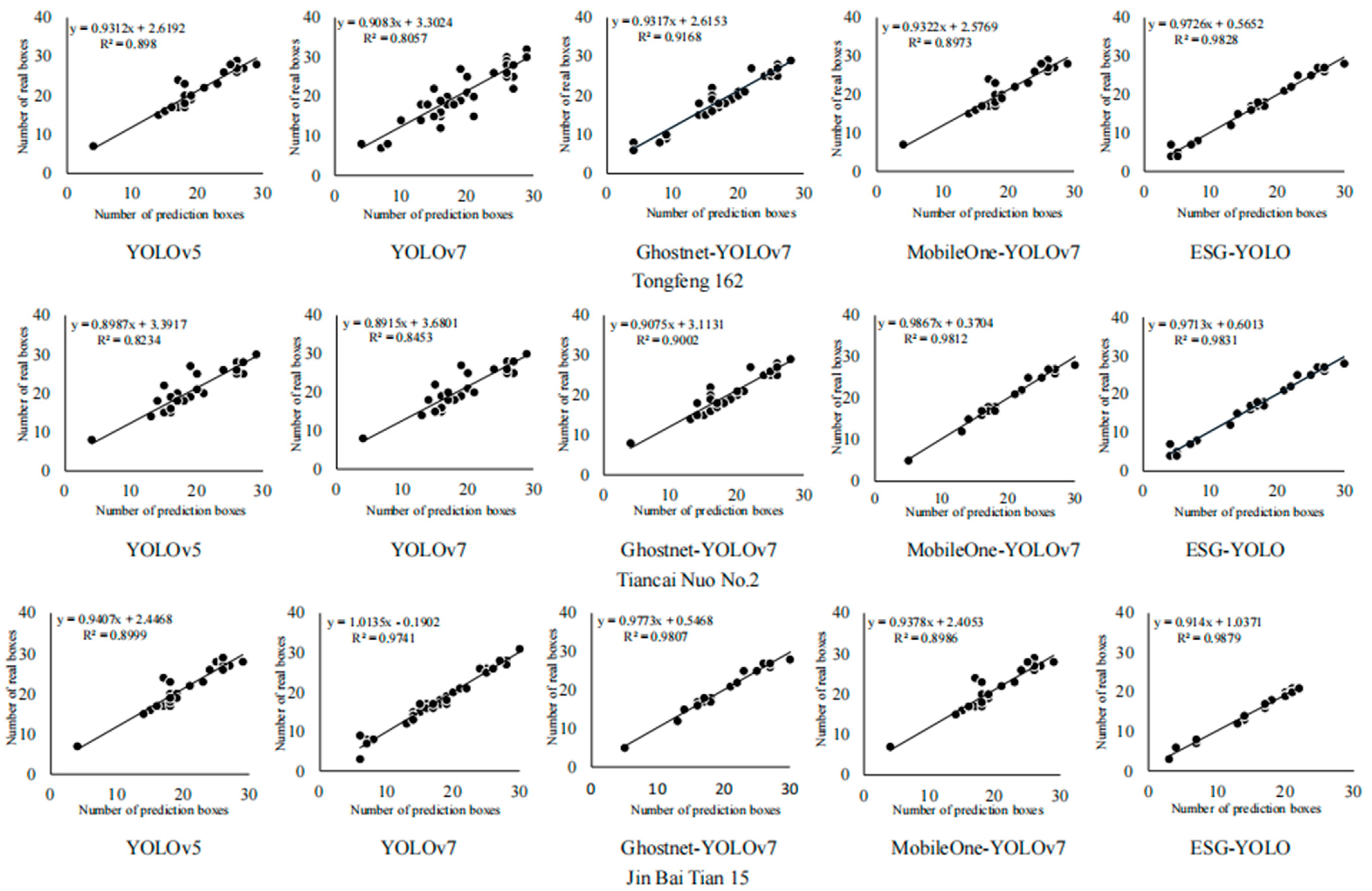

The results are shown in Figure 12. MAE values varied greatly between varieties and also differed between planting densities. At planting densities of 12,112, 18,852, 22,304, and 28,865 plants/hm², the model's MAEs were 0.82, 1.13, 1.35, and 1.78 for Tongfeng 162; 2.24, 2.78, 3.58, and 3.98 for Sweet Colored Glutinous No.2; and 0.57, 0.66, 0.84, and 1.67 for Jinbaitan 15, respectively. For the same variety, the greater the planting density, the greater the MAE. The results show that the detection accuracy of ESG-YOLO for maize tassels depends on both planting density and variety, and the main trend is that detection error grows as planting density increases. The main reason is that higher planting density causes increasingly severe overlap and occlusion between tassels, which interferes with detection.

In summary, ESG-YOLO-based detection of maize tassels enables more intuitive and faster automatic counting of tassels and assessment of tassel density. The method offers reasonable accuracy and scientific rigor, and it provides a theoretical basis and reference for target detection and tassel recognition using top-view images from UAV platforms and high-throughput field crop phenotyping platforms.

5. Conclusions and Future Prospects

5.1. Conclusions

Detection of maize tassels is a basic means of supporting maize yield assessment. To achieve accurate recognition and detection of maize tassels in complex fields, this study constructed a maize tassel image dataset covering complex field conditions with different degrees of occlusion, light intensities, and densities. In the ESG-YOLO model, GELU replaces the original SiLU activation function, and the SPD-Conv module and the ECA attention module are added; the model was then trained and evaluated on the dataset. The impact of these improvements on detection performance was analyzed, and the following conclusions can be drawn.

- (1) The ESG-YOLO model achieves an mAP@0.5 of 93.1%, 2.3 percentage points higher than YOLOv7. Considering precision, recall, F1 score, average frame rate, number of parameters, and computational cost together, the model outperforms the comparison models and has the best overall detection performance.

- (2) The SPD-Conv module added to ESG-YOLO replaces strided downsampling with a space-to-depth operation followed by a non-strided convolution, so no fine-grained information is discarded. This makes it effective for low-resolution images and small-object detection, and therefore well suited to detecting small, numerous, low-resolution targets such as maize tassels in complex fields.

- (3) The mAP of ESG-YOLO on the test set from the complex maize field phenotyping platform reached 93.1%. On this basis, the model was applied to heat-map visualization of the detected maize tassels, which allowed the growth status of tassels in different environments to be examined more efficiently.

- (4) ESG-YOLO makes counting maize tassels and automatically identifying tassel density more intuitive and faster.

This method offers reasonable accuracy and scientific rigor and provides a theoretical basis and reference for target detection and tasseling-stage identification using top-view images from UAV platforms and high-throughput field crop phenotyping platforms. Intelligent detection and density assessment of low-resolution maize tassels with ESG-YOLO in complex field environments lays a foundation for the research and development of new agricultural technologies and is key to evaluating the performance of different maize varieties and predicting yield. By providing information on maize plant growth, leaf color, and morphology, it helps control the impact of environmentally controllable factors on maize growth and development; this information in turn supports assessment of soil fertility and health, guides farmers in rational fertilizer application and soil management, improves the sustainable use of land, and helps formulate strategies to mitigate the effects of climate change and optimize sustainable land management. It also contributes to precision agriculture by enabling real-time monitoring of maize growth and yield forecasting, providing decision support to farmers and the government, optimizing the allocation of agricultural resources, improving the efficiency of agricultural production, and promoting the sustainable development of the rural economy.

5.2. Future Prospects

There are two future directions for the detection and density assessment of maize tassels. First, this paper proposes ESG-YOLO, an improved and more complex model based on YOLOv7 that significantly improves detection accuracy. However, to raise accuracy, this study uses a dual ECA attention module, which increases the model's detection time. To build an integrated intelligent agriculture management platform and enable precision agriculture (helping farmers customize inputs to the specific needs of the crop and intelligently control counting and planting density in maize production, thereby saving costs, reducing waste, and improving production efficiency), ESG-YOLO will need to be further optimized and deployed in field intelligent systems. Loading the model into such systems requires a more lightweight design, which is the goal of our continued research.

Second, this model should be linked to later related applications. Deep learning models generalize across related applications, such as deep neural networks and transfer learning for remote object detection by drones, maize disease prediction frameworks based on the Internet of Things and interpretable machine learning, and deep convolutional neural networks for predicting plant leaf diseases. We will also extend ESG-YOLO to disease detection and prediction and to other deep convolutional neural network tasks, to help farmers and agricultural experts detect problems such as pests and diseases in time and take appropriate control measures, thereby safeguarding maize yield and quality and ensuring the security of the food supply.