1. Introduction

Pests cause serious damage to crops throughout the year, leading to significant losses in the agricultural economy. Timely measures must be taken in areas where pests occur in order to suppress pest populations economically and effectively and to ensure high crop yields [1]. It is therefore necessary to control pests at the source and to detect them accurately so that targeted control measures can be applied [2].

Early pest detection relied on agricultural experts or farmers identifying crop pests from their own experience. This approach is time-consuming and inefficient. Moreover, misjudgments by inspectors are unavoidable and lead to the indiscriminate use of pesticides, which not only pollutes the environment but also causes unnecessary economic losses. The application of computer vision technology in pest detection has therefore become increasingly popular [3], and its ability to identify pest types accurately has provided the basis for automated and accurate pest detection [4]. However, traditional machine learning techniques are not robust in complex scenes and struggle to meet the demands of such scenarios.

With the development of deep learning, many deep learning network models have gradually been applied to pest detection. Deep-learning-based object detection algorithms are divided into one-stage and two-stage algorithms. Two-stage algorithms, such as R-CNN [5], Fast R-CNN [6], and Faster R-CNN [7], first generate candidate regions (proposals) and then classify them, which makes them slower. One-stage algorithms, such as SSD [8] and the YOLO series, skip the proposal step and directly predict object classes and bounding boxes over the whole image. Some research has already applied deep learning to pest detection, but this area has not been fully explored. Kangshun Li et al. [9] proposed a fast and lightweight detection algorithm for passion fruit pests based on an improved YOLOv5. The algorithm uses the mix-up method to augment the dataset at the input side to improve the robustness of the model, adds the CBAM module to the neck network, and adds the PLDIoU loss function at the output side to improve detection speed and the detection capability for small target pests, achieving a final detection accuracy of 96.51%. Zijia Yang et al. [10] designed a pest detection and recognition model for tea plantations based on the Yolov7-tiny algorithm. The algorithm incorporated deformable convolution, the BiFormer dynamic attention mechanism, a non-maximum suppression module, and a novel implicit decoupled head, achieving a recognition accuracy of 93.23%.

Existing algorithms suffer from several problems: their large number of parameters makes the computational cost high, they cannot be deployed effectively on mobile devices for real-time detection, and most of them detect only a single pest species. The specific objective of this study was to propose a lightweight crop pest detection algorithm based on an improved Yolov5s that is capable of the automatic and accurate detection of eight common pests. The model was also deployed on the mobile embedded device Jetson Nano [11] for real-time detection. However, this deployment has so far been evaluated only in the laboratory and has not yet been tested in the field. The main contributions of this paper are as follows.

2. Related Work

2.1. Spectral Image Analysis Technology

With the development of science and technology, the combination of information technology and agricultural production has given rise to precision agriculture. Recent studies have examined insect identification by applying spectral analysis methods [19] to spectral images of crops [20]. Y.Y. Li et al. [21] differentiated insect species by combining wing-beat frequency analysis with spectral features obtained from reflectance and fluorescence spectral monitoring. Fernando Vanegas et al. [22] combined airborne RGB, multispectral, and hyperspectral image data with ground-acquired data to extract the average spectral features of grapevines for the detection of phylloxera.

2.2. Deep Learning Techniques

With the rapid advancement of deep learning [24] and the arrival of the modern era of agricultural information technology [25], pest datasets are growing [23], and the use of deep learning to identify pests [26] has attracted considerable interest. The emergence of convolutional neural networks (CNNs) has improved both the detection rate and the accuracy of image recognition. Of the two families of deep-learning-based object detectors, two-stage algorithms generally have higher accuracy but slower detection speed than one-stage algorithms. Wang Fangyuan et al. [27] proposed a CNN-based rice planthopper search network that extracts the features of rice planthopper regions and further improves classification and bounding box regression using a sensitivity score matrix, with an average accuracy of 81.42%. Chengkang Liu et al. [28] used the ResNetV2 network model to extract pest features and built a pest forecasting device for the automatic collection and transmission of cotton field pest information, with an identification accuracy of 85.7%.

Two-stage detection algorithms are computationally expensive and cannot meet the demand for real-time pest detection. The Yolo family is representative of one-stage detection algorithms that balance detection accuracy and speed. Pang Haitong et al. [29] improved the Yolov4 algorithm and upgraded the standard NMS algorithm [30] to DIoU-NMS [31], achieving real-time detection of orchard pests with an average accuracy of 92.86%. Fang Qi et al. [32] proposed an improved pest detection model based on Yolov5. The model uses GhostNet, a lightweight feature extraction network, as the backbone; introduces an efficient channel attention mechanism to extract features more efficiently; introduces BiFPN to enhance the representation of small pest features; and proposes the FFARF module to dynamically assign weights to each receptive field. Tested on the Pest24 dataset, the improved model achieved a detection accuracy of 74.1%. Wei Zhang et al. [33] proposed a lightweight pest detection model, AgriPest-YOLO, to achieve a good balance between pest detection efficiency, accuracy, and model size. The model proposes a CLA mechanism, designs a GSPPF module, and introduces Soft-NMS in the prediction layer to optimize the final predictions for overlapping pests. It obtains a richer multi-scale representation of pest features and, in experiments on a large-scale multi-pest image dataset containing 24 classes and 25k images, achieved an mAP of 71.3%. Qiuchi Xiang et al. [34] investigated improvements to convolutional neural network models on the Teddy Cup pest dataset and proposed a lightweight and effective agricultural pest detection model for small target pests. This model incorporates the ConvNext module and the SE attention mechanism and proposes a residual feature extraction structure with controlled channels, which limits the stacking of residual blocks to avoid feature loss or redundancy. The model achieved 91.9% mAP on the Teddy Cup pest dataset. Shuai Yang et al. [35] proposed a Yolov7-based real-time method for the detection of corn pests. The authors inserted the CSPResNeXt-50 module and the VoVGSCSP module into the model to improve the network's detection accuracy and speed, and they achieved 76.3% mAP in experiments on 4533 images in 13 categories.

3. Materials and Methods

3.1. Yolov5 Introduction

Yolov5 is a one-stage target detection algorithm that was proposed by Ultralytics in 2020, which mainly has Yolov5s, Yolov5n, Yolov5m, Yolov5l, and Yolov5x network models with increasing depth, width, and required computation. Yolov7 [

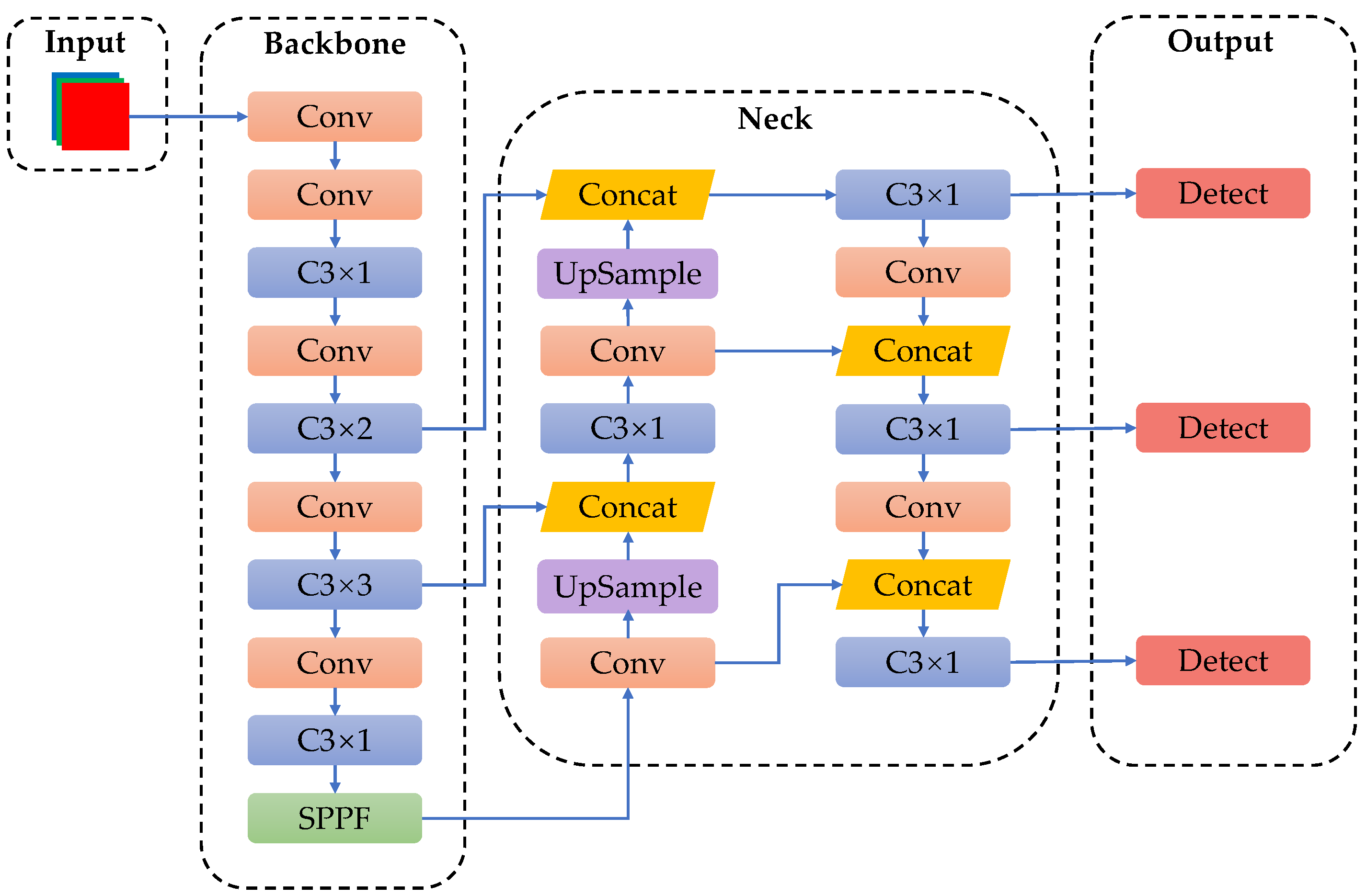

36] is a newer version of the Yolo series. Yolov7 uses new techniques and optimizations based on the Yolov5 algorithm. However, the advantage of Yolov5 is its speed. Yolov5 is much faster in training and inference than Yolov7 and can run on mobile devices. Yolov7 has the advantage of high accuracy but runs slowly and is less suitable for use on mobile devices. For better deployment on the Jetson Nano development board for real-time detection, we used Yolov5 as a benchmark algorithm. Yolov5s has the least number of layers and the smallest computational complexity compared to other models, and its model size is also the smallest. Therefore, we chose Yolov5s as the benchmark model. The network structure of the Yolov5s model is shown in

Figure 1 and can be divided into four parts: Input, Backbone, Neck, and Output.

The input side includes Mosaic data augmentation [37], adaptive image scaling [38], and adaptive anchor boxes [39]. Mosaic data augmentation randomly crops and scales four images and then randomly arranges and stitches them into a single image, which enriches the dataset, increases the number of small targets, and improves the training speed of the network. Adaptive image scaling resizes each original image to a standard size while preserving its aspect ratio, adaptively adding minimal padding borders, before the image is fed into the detection network. Adaptive anchor boxes are the best anchor box values for the training set, computed adaptively at the start of each training run.
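The adaptive image scaling step can be sketched as follows. This is a simplified, hypothetical helper (`letterbox_params` is our name, not a Yolov5 function) that scales the image by the largest factor that fits the target size while preserving the aspect ratio and then pads it. The `minimal=True` branch assumes minimal padding, in which each side is padded only up to the next multiple of an assumed network stride of 32 instead of all the way to a square.

```python
def letterbox_params(h, w, new_size=640, stride=32, minimal=True):
    """Hypothetical helper: compute the resize scale, resized shape, and padding.

    h, w      -- original image height and width in pixels
    new_size  -- target side length (640 in this paper's training setup)
    stride    -- assumed network stride; minimal padding keeps sides divisible by it
    minimal   -- if True, pad only up to the next multiple of `stride`
    """
    # Largest scale that fits the image inside new_size x new_size, keeping aspect ratio
    r = min(new_size / h, new_size / w)
    new_h, new_w = round(h * r), round(w * r)

    # Padding needed to reach the full square target
    pad_h, pad_w = new_size - new_h, new_size - new_w
    if minimal:
        # Keep only the remainder so each padded side becomes a multiple of the stride
        pad_h, pad_w = pad_h % stride, pad_w % stride

    # Split the padding evenly between opposite sides
    top, bottom = pad_h // 2, pad_h - pad_h // 2
    left, right = pad_w // 2, pad_w - pad_w // 2
    return r, (new_h, new_w), (top, bottom, left, right)


scale, resized, padding = letterbox_params(1080, 1920)
print(resized, padding)  # (360, 640) (12, 12, 0, 0)
```

With minimal padding, a 1080 × 1920 photo becomes a 384 × 640 network input rather than 640 × 640, so less computation is spent on padding.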

C3 [40] and Spatial Pyramid Pooling (SPP) [41] modules make up the backbone network. The creators of Yolov5 replaced the BottleneckCSP module with the C3 module, which plays a structurally similar role to the CSP (Cross Stage Partial) architecture and differs mainly in the choice of activation (rectification) units. The C3 module contains three standard convolutional layers and several Bottleneck modules. In contrast to the BottleneckCSP module, C3 changes the activation function of the standard convolution module after concatenation from LeakyReLU [42] to SiLU (Sigmoid Linear Unit) [43] and removes the Conv module applied to the residual output. The SPP module first halves the number of input channels with a standard convolution module and then performs max-pooling [44] with kernel sizes of 5, 9, and 13 (the padding is adapted to each kernel size so that the spatial size is unchanged). The outputs of the three max-pooling operations are concatenated with the un-pooled features, so the number of channels after the final merge is twice the original number.
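The SPP shape and channel arithmetic above can be checked with a small pure-Python sketch. It operates on a single channel stored as a nested list rather than on a real tensor (a simplification for readability; Yolov5 itself uses PyTorch max-pooling layers with stride 1 and padding k // 2), and the helper names are hypothetical.

```python
def max_pool_same(x, k):
    """Stride-1 max pooling over a 2D grid with padding k // 2.

    Out-of-range positions are ignored, which is equivalent to padding with -inf,
    so the output has the same height and width as the input.
    """
    h, w, p = len(x), len(x[0]), k // 2
    return [
        [
            max(
                x[a][b]
                for a in range(max(0, i - p), min(h, i + p + 1))
                for b in range(max(0, j - p), min(w, j + p + 1))
            )
            for j in range(w)
        ]
        for i in range(h)
    ]


def spp_channel(channel, kernels=(5, 9, 13)):
    """SPP on one (already channel-halved) feature map: identity branch + pooled branches."""
    return [channel] + [max_pool_same(channel, k) for k in kernels]


def spp_out_channels(c_in, n_kernels=3):
    """Channels after the SPP concatenation: halve first, then stack 1 + n_kernels branches."""
    c_hidden = c_in // 2
    return c_hidden * (1 + n_kernels)


grid = [[(i * 7 + j * 3) % 11 for j in range(20)] for i in range(20)]
branches = spp_channel(grid)
print(len(branches), len(branches[3]), len(branches[3][0]))  # 4 20 20
print(spp_out_channels(512))  # 1024
```

Because every branch keeps the 20 × 20 spatial size, the four branches can be concatenated along the channel axis, giving 4 × (C/2) = 2C channels.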

The neck network consists of the FPN + PAN structure. The Feature Pyramid Network (FPN) [45] uses a bottom-up pathway, a top-down pathway, and lateral connections to propagate and fuse high-level feature information, improving the feature representation available at the final network layers. However, FPN mainly strengthens the transfer of semantic information and is weak at transferring shallow localization information. PAN addresses this by adding a bottom-up path, built from 3 × 3 convolutions, after the FPN, which enhances the transfer of location information.

The output side uses NMS (Non-Maximum Suppression) to filter redundant overlapping boxes, which improves multi-target detection, and uses the CIoU (Complete IoU) loss [46] as the bounding box regression loss.
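For illustration, the greedy NMS step can be sketched in plain Python. This is a generic single-class sketch, not the Ultralytics implementation (which operates on tensors and keeps classes apart); the 0.45 IoU threshold is an assumed value.

```python
def box_iou(a, b):
    """IoU of two boxes in (x1, y1, x2, y2) format."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, iou_thres=0.45):
    """Greedy NMS: keep the highest-scoring box, drop boxes that overlap it above
    iou_thres, and repeat. Returns the kept indices, highest score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    while order:
        best = order.pop(0)
        keep.append(best)
        order = [i for i in order if box_iou(boxes[best], boxes[i]) <= iou_thres]
    return keep


boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
scores = [0.9, 0.8, 0.7]
print(nms(boxes, scores))  # [0, 2]
```

The second box overlaps the first with an IoU of 81/119 ≈ 0.68, so it is suppressed, while the distant third box survives.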

3.2. Improved Network Implementation

To meet the practical needs of pest detection, the Yolov5s model was improved as follows.

3.2.1. Backbone Network Improvement

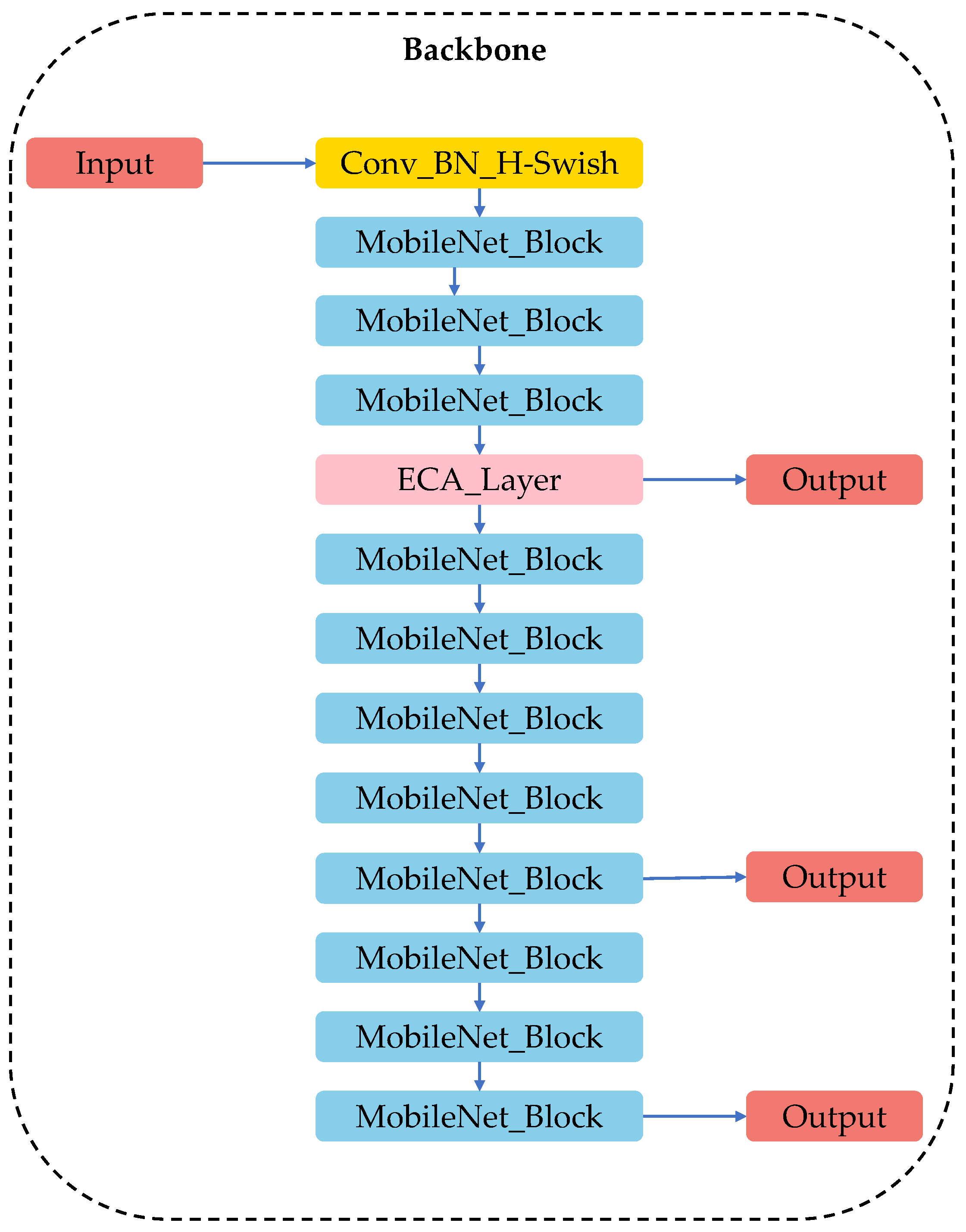

To meet the demand for real-time monitoring, a lightweight feature extraction network can effectively reduce the computational complexity of the network model and improve detection speed. Existing lightweight networks, namely ShuffleNets, GhostNet, and MobileNets, were each used to rebuild the backbone network of the Yolov5 model. Considering model complexity and accuracy, MobileNetV3 was finally selected as the backbone network for the pest detection model.

Focusing on lightweight CNNs for mobile terminals and embedded devices, MobileNetV1 [47] introduced the depthwise separable convolution [48] as an efficient replacement for the standard convolution layer, significantly reducing computation. The depthwise separable convolution factorizes a standard convolution by separating spatial filtering (a per-channel depthwise convolution) from feature generation (a 1 × 1 pointwise convolution that combines channels). MobileNetV2 [49] has a smaller model and higher accuracy than MobileNetV1. Exploiting the low-rank nature of the problem, it introduced a linear bottleneck [50] and an inverted residual structure [51] to make the layer structure more efficient. MobileNetV3 builds on the previous two versions by adding neural architecture search (NAS) [52] and the h-swish activation function [53] and by introducing the squeeze-and-excitation (SE) channel attention mechanism [54], giving excellent performance and speed.
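The h-swish activation mentioned above replaces the sigmoid in swish with a piecewise-linear function, which is cheaper to compute on mobile hardware. The following minimal scalar sketch is for illustration only and is not the paper's implementation.

```python
import math


def relu6(x):
    """ReLU clipped at 6."""
    return min(max(0.0, x), 6.0)


def h_sigmoid(x):
    """Piecewise-linear approximation of the sigmoid: ReLU6(x + 3) / 6."""
    return relu6(x + 3.0) / 6.0


def h_swish(x):
    """h-swish(x) = x * ReLU6(x + 3) / 6, a cheap approximation of swish."""
    return x * h_sigmoid(x)


def swish(x):
    """swish(x) = x * sigmoid(x), the function h-swish approximates."""
    return x / (1.0 + math.exp(-x))


for v in (-4.0, -1.0, 0.0, 1.0, 3.0):
    print(f"x={v:+.1f}  h-swish={h_swish(v):+.4f}  swish={swish(v):+.4f}")
```

h-swish is exactly zero for x ≤ -3 and equals x for x ≥ 3, and it stays close to swish in between, using only additions, clipping, and one multiplication.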

The C3 module in the original Yolov5s backbone is composed of relatively complex components, such as the Bottleneck. To avoid the redundant computation caused by these structures, we made the backbone lightweight. Combining the performance and speed advantages of MobileNetV3, we rebuilt the backbone network with the MobileNet_Block module, which is derived from the high-performance lightweight network MobileNetV3 and contains a depthwise separable convolution, replacing the C3 modules of the original backbone. This drastically reduces the amount of computation in the network model.

Figure 2 displays the MobileNet_Block module structural diagram.
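To see why replacing C3 with depthwise-separable MobileNet_Block modules cuts computation, compare the weight counts of one standard convolution and its depthwise separable factorization. The channel sizes below are illustrative assumptions, not values from Table 1, and bias and BN parameters are ignored.

```python
def standard_conv_params(k, c_in, c_out):
    """Weights of a k x k standard convolution (bias ignored)."""
    return k * k * c_in * c_out


def depthwise_separable_params(k, c_in, c_out):
    """Depthwise k x k conv (one filter per input channel) + 1 x 1 pointwise conv."""
    depthwise = k * k * c_in  # spatial filtering, channel by channel
    pointwise = c_in * c_out  # feature generation: mixes channels
    return depthwise + pointwise


k, c_in, c_out = 3, 128, 128
std = standard_conv_params(k, c_in, c_out)
dws = depthwise_separable_params(k, c_in, c_out)
print(std, dws, round(dws / std, 4))  # 147456 17536 0.1189
```

The ratio equals 1/c_out + 1/k², so for 3 × 3 kernels and many output channels the separable form needs roughly 8 to 9 times fewer weights.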

However, the large reduction in the number of parameters brought by the MobileNet_Block module weakens the network's ability to extract image features. To enhance feature extraction, the ECA attention mechanism was introduced into the shallow layers of MobileNetV3. Using a local cross-channel interaction strategy without dimensionality reduction and an adaptively selected one-dimensional convolution kernel size, the ECA module improves the performance of the network model while adding only a very small number of parameters.
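The two ingredients of ECA described above, the adaptive kernel size and the channel-wise 1D convolution without dimensionality reduction, can be sketched in plain Python. The kernel-size rule k = |log2(C)/γ + b/γ|_odd with γ = 2 and b = 1 follows the ECA-Net paper; the nested-list tensors, the zero-padded convolution, and the explicitly passed weights (which would be learned in practice) are simplifying assumptions.

```python
import math


def eca_kernel_size(channels, gamma=2, b=1):
    """Adaptive 1D kernel size: |(log2(C) + b) / gamma|, truncated, then bumped to odd."""
    t = int(abs((math.log2(channels) + b) / gamma))
    return t if t % 2 else t + 1


def eca(feature_map, weights):
    """Apply ECA-style channel attention.

    feature_map -- list of C channels, each a 2D list (H x W)
    weights     -- 1D convolution kernel of odd length k, shared across channels
    """
    k, c = len(weights), len(feature_map)
    p = k // 2
    # 1) Global average pooling: one descriptor per channel, no dimensionality reduction
    desc = [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in feature_map]
    # 2) Local cross-channel interaction: 1D conv over neighboring channels (zero padding)
    attn = []
    for i in range(c):
        s = sum(weights[j] * desc[i + j - p] for j in range(k) if 0 <= i + j - p < c)
        attn.append(1.0 / (1.0 + math.exp(-s)))  # sigmoid gate
    # 3) Rescale every channel by its attention weight
    return [[[v * attn[i] for v in row] for row in ch] for i, ch in enumerate(feature_map)]


print([eca_kernel_size(c) for c in (16, 64, 256, 512)])  # [3, 3, 5, 5]
```

The only learnable parameters are the k weights of the 1D kernel, which is why ECA adds so few parameters compared with the SE block's two fully connected layers.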

Figure 3 shows the structure of the improved backbone network. In it, the Conv_BN_H-Swish module consists of a convolution layer merged with a batch normalization (BN) layer, followed by the H-Swish activation function. Input images are fed into this first layer of the backbone for convolution and normalization; merging the convolution and BN layers speeds up forward inference, and the H-Swish activation function improves the network's accuracy.

The improved backbone network structure and its parameter configuration are shown in Table 1.

In the table, n is the number of times a module is repeated, Params is the number of parameters of the module at each position (serial number) in the network, Module is the module name, and Configuration is the module's parameter configuration. The three configuration parameters of the Conv_BN_H-Swish module are the number of input channels, the number of output channels, and the stride of the convolution. The seven configuration parameters of MobileNet_Block are the number of input channels, the number of output channels, the number of expansion channels in the inverted residual structure, the convolution kernel size, the stride, whether the SE attention mechanism is used, and whether the H-Swish activation function is used. The ECA_Layer module has two configuration parameters: the convolution kernel size and the number of input channels. Assuming an input image of size 3 × 640 × 640, the output feature map column shows that, layer by layer, the spatial size of the feature map decreases while the number of channels increases, so that features are expressed progressively from shallow to deep.

3.2.2. Neck Network Improvement

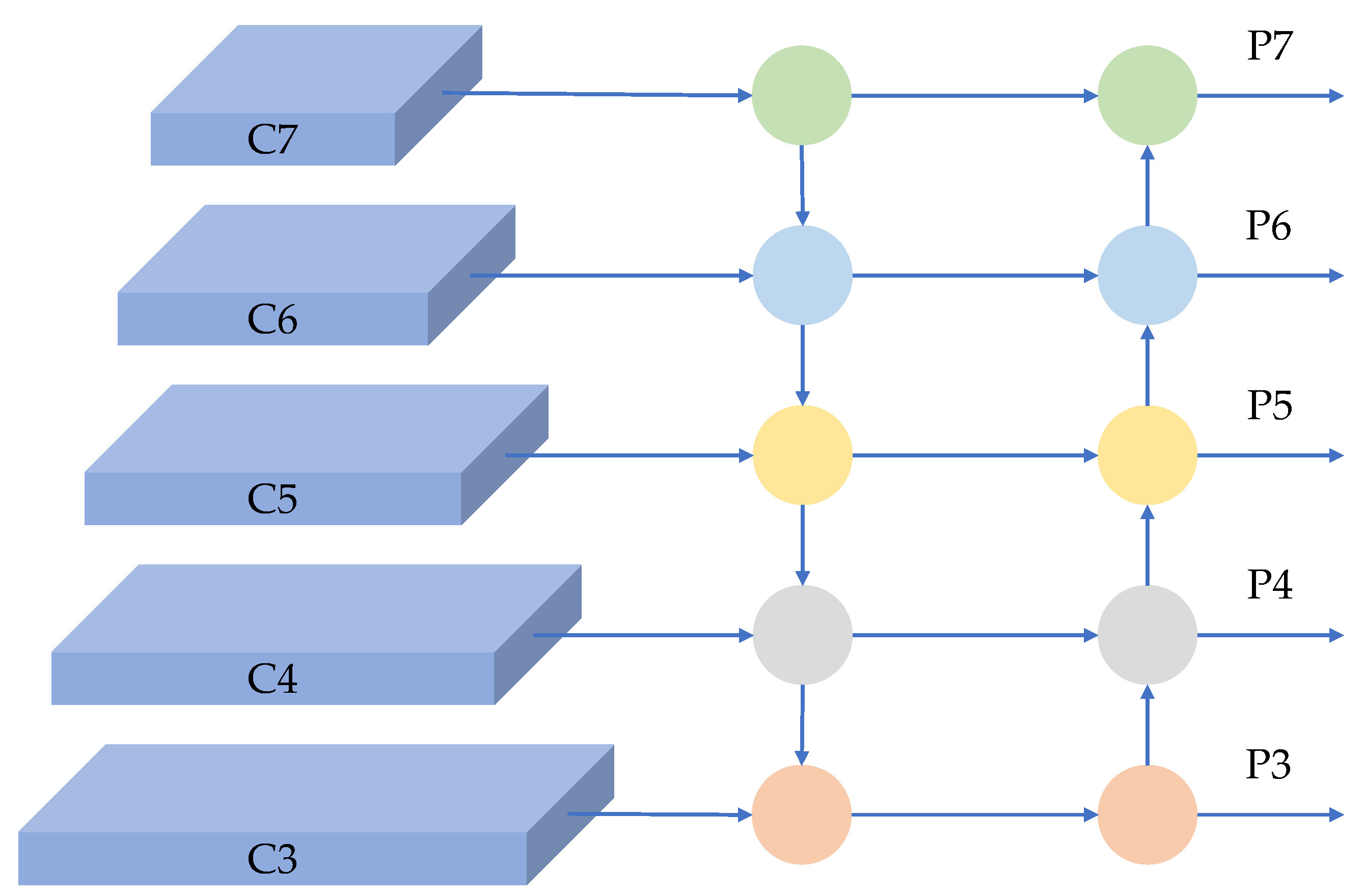

Our analysis of the pest dataset showed that most pest targets are small to medium-sized. To increase the lightweight model's detection precision for small targets, the PANet in the original model was enhanced using BiFPN.

Figure 4 depicts the structure of PANet, where C denotes an input feature map and P an output feature map.

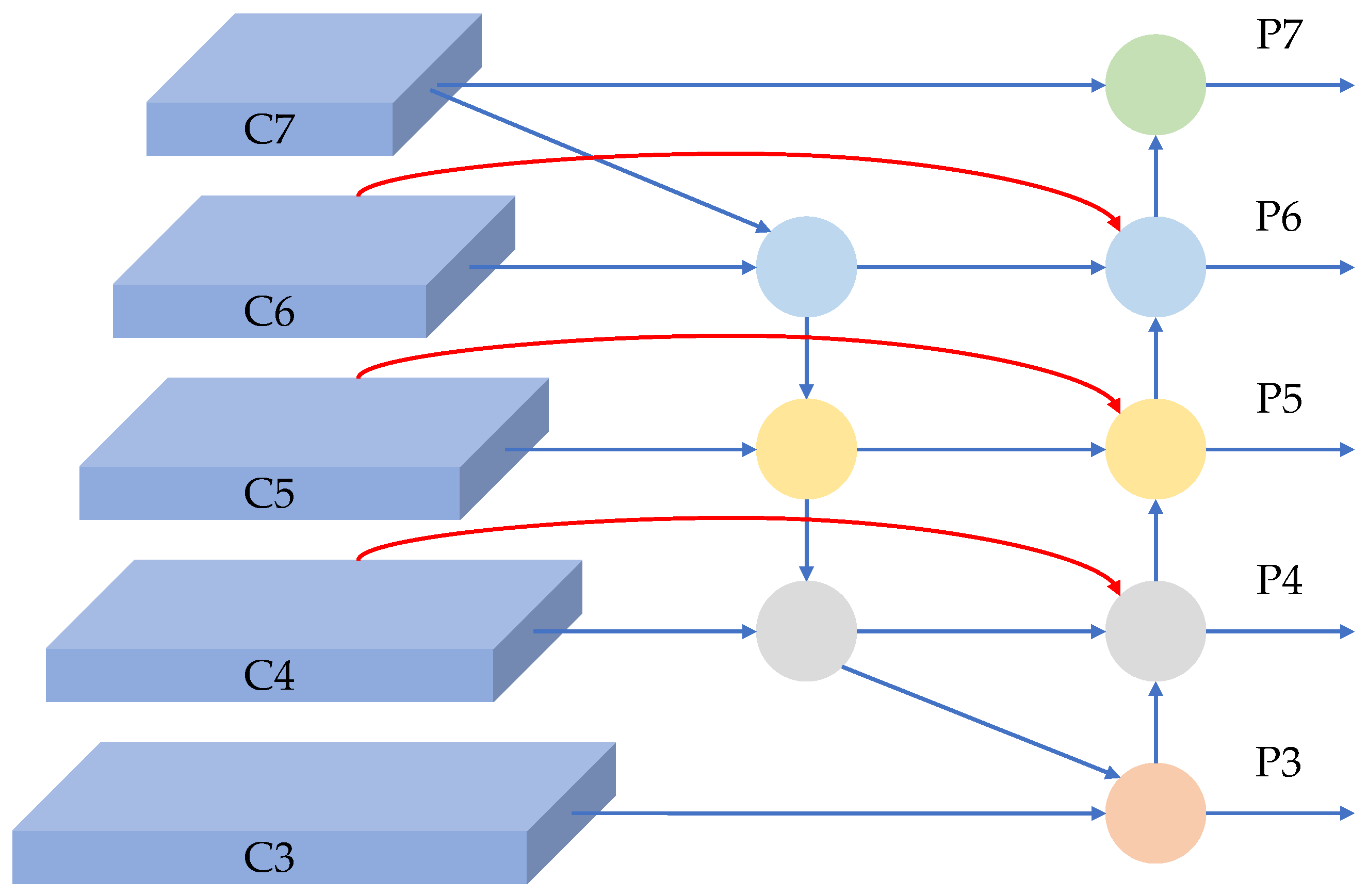

BiFPN performs weighted feature fusion with bidirectional cross-scale connections; its structure is depicted in Figure 5. The intermediate nodes between C3 and P3 and between C7 and P7 perform no feature fusion and contribute little, so they are removed from the network. In addition, extra edges from the original input node to the output node at the same level (C4 to P4, C5 to P5, and C6 to P6) are added, with each input multiplied by a corresponding weight. This efficiently fuses low- and middle-level features with high-level features, so that the fused features carry strong semantic information while retaining a strong perception of details, which improves the model's accuracy for small target detection.

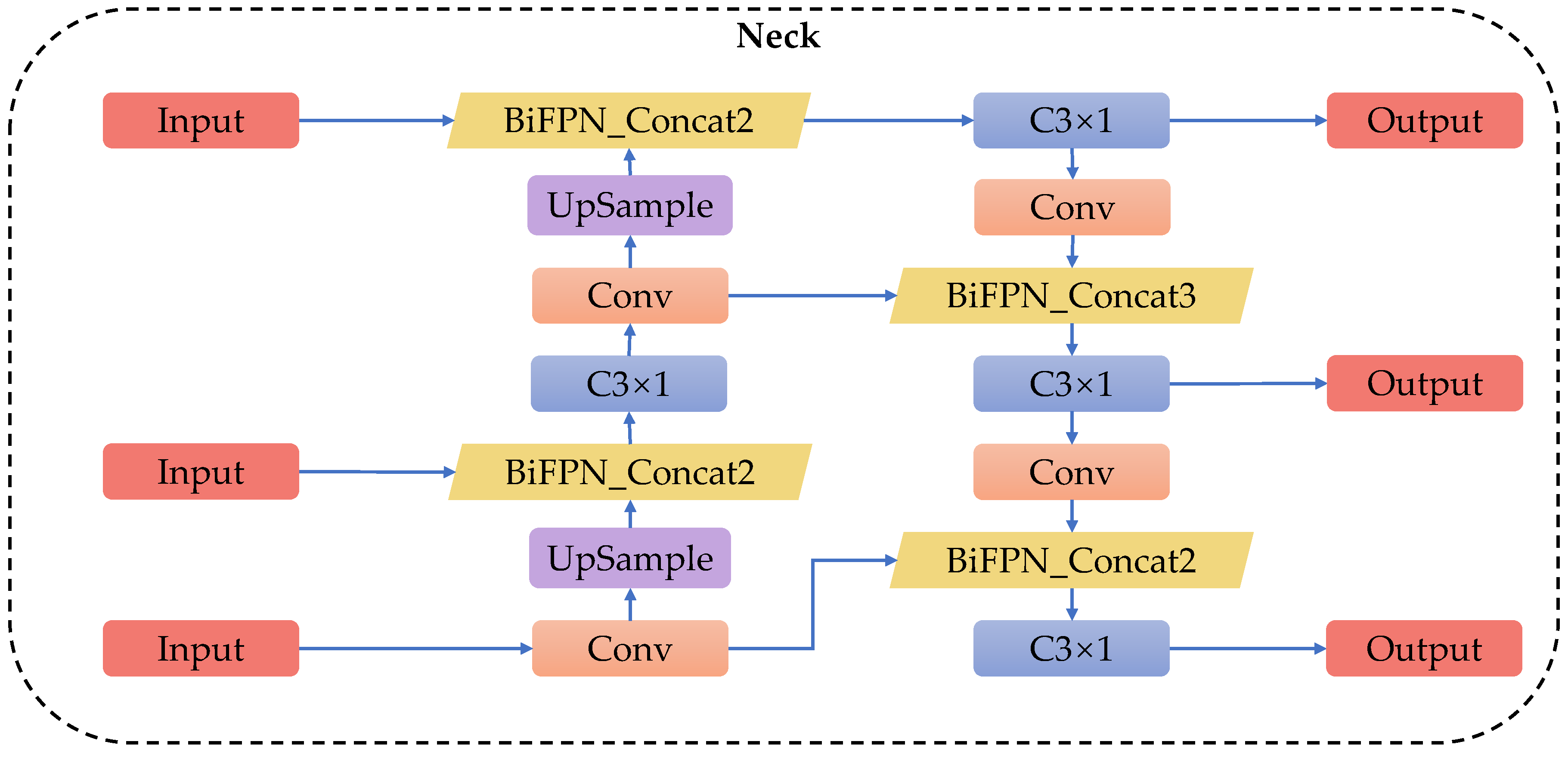

Figure 6 shows the structure of the improved neck network. In the improved neck, the BiFPN_Concat2 and BiFPN_Concat3 modules replace the Concat modules of the original model: BiFPN_Concat2 concatenates two branches, and BiFPN_Concat3 concatenates three branches.
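The weighted fusion used by BiFPN can be illustrated with EfficientDet's fast normalized fusion, O = Σ_i w_i · I_i / (ε + Σ_j w_j), where a ReLU keeps each learnable weight non-negative. The sketch below uses flattened feature maps stored as Python lists, and ε = 1e-4 follows EfficientDet. The concatenation variant, in which each branch is scaled by its normalized weight and the branches are then concatenated, is our assumption about how modules such as BiFPN_Concat2 and BiFPN_Concat3 might combine weighting with concatenation; the paper does not describe their internals.

```python
def normalized_weights(raw_weights, eps=1e-4):
    """ReLU each learnable weight, then normalize so the weights sum to just under 1."""
    w = [max(0.0, x) for x in raw_weights]
    total = sum(w) + eps
    return [x / total for x in w]


def fast_normalized_fusion(inputs, raw_weights, eps=1e-4):
    """EfficientDet-style weighted sum of same-shape (flattened) feature maps."""
    w = normalized_weights(raw_weights, eps)
    return [sum(wi * feat[k] for wi, feat in zip(w, inputs)) for k in range(len(inputs[0]))]


def weighted_concat(inputs, raw_weights, eps=1e-4):
    """Hypothetical concat-style variant: scale each branch, then concatenate them."""
    w = normalized_weights(raw_weights, eps)
    out = []
    for wi, feat in zip(w, inputs):
        out.extend(wi * v for v in feat)
    return out


p4_td = [1.0, 2.0]  # illustrative top-down feature at one level (flattened)
c4_in = [3.0, 4.0]  # illustrative same-level input arriving via the extra BiFPN edge
print(fast_normalized_fusion([p4_td, c4_in], [1.0, 1.0]))
print(weighted_concat([p4_td, c4_in], [2.0, -1.0]))
```

Normalizing by the sum rather than with a softmax avoids exponentials, and a negative raw weight simply switches its branch off.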

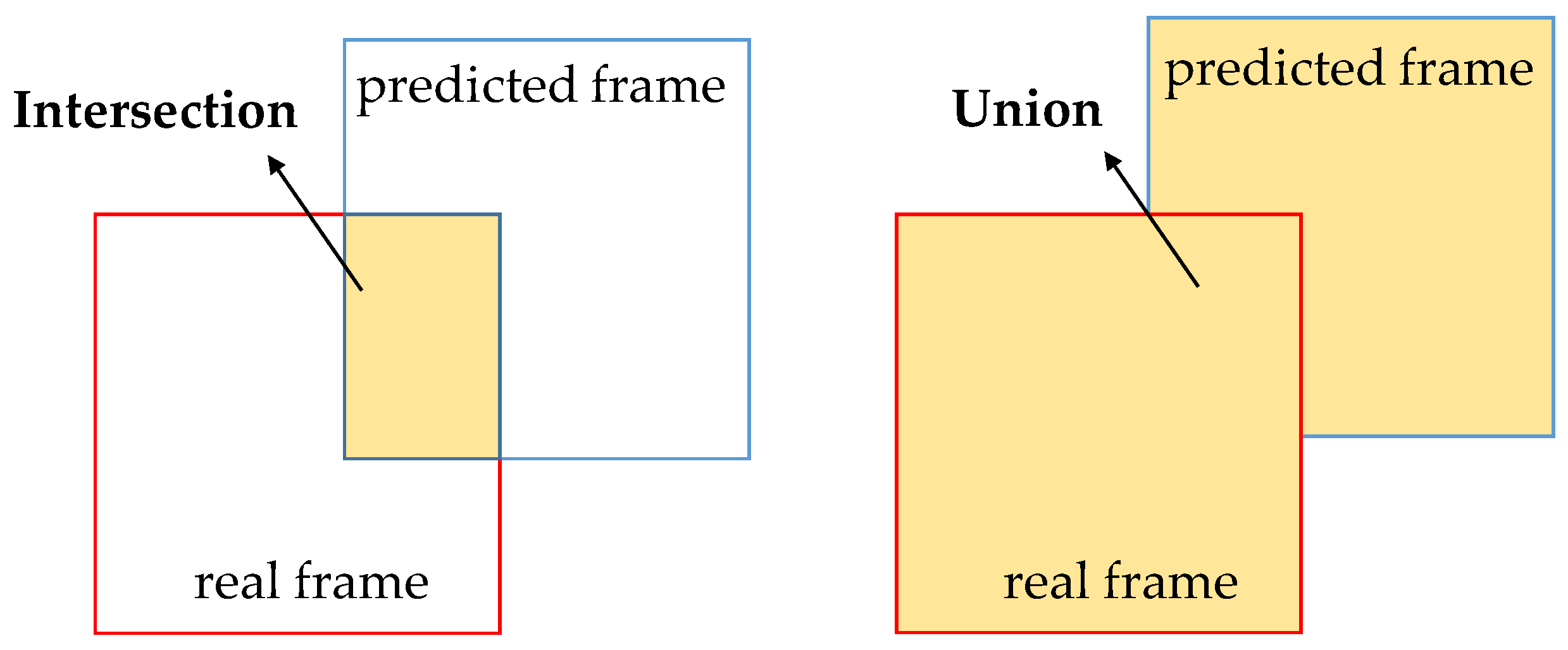

3.2.3. Output Improvement

Yolov5 uses the CIoU loss, which accounts for the overlap area, the center point distance, and the aspect ratio in bounding box regression. However, its aspect-ratio term reflects only the difference in aspect ratio rather than the actual differences in width and height, which can slow convergence and impede model optimization. The SIoU loss function was therefore introduced to replace the CIoU loss. SIoU additionally considers the vector angle between the ground-truth box and the predicted box and redefines four penalty terms, namely angle loss, distance loss, shape loss, and IoU loss [55], which can increase the convergence speed of the predicted box and the accuracy of inference.

The angle loss function, AL, is defined as follows:

$$\mathrm{AL} = 1 - 2\sin^{2}\left(\arcsin\left(\frac{c_h}{\sigma}\right) - \frac{\pi}{4}\right)$$

In this formula, $c_h$ is the height difference between the center points of the ground-truth box and the predicted box, and $\sigma$ is the distance between these two center points.

The distance loss function, DL, is defined as follows:

$$\mathrm{DL} = \sum_{t = x, y}\left(1 - e^{-\gamma \rho_t}\right), \qquad \rho_x = \left(\frac{b_{c_x}^{gt} - b_{c_x}}{C_w}\right)^{2}, \qquad \rho_y = \left(\frac{b_{c_y}^{gt} - b_{c_y}}{C_h}\right)^{2}, \qquad \gamma = 2 - \mathrm{AL}$$

In these formulas, $b_{c_x}^{gt}$ and $b_{c_y}^{gt}$ are the x- and y-coordinates of the center point of the ground-truth box, and $b_{c_x}$ and $b_{c_y}$ are the x- and y-coordinates of the center point of the predicted box. $C_w$ and $C_h$ are the width and height of the minimum enclosing rectangle of the ground-truth box and the predicted box. The factor $\gamma = 2 - \mathrm{AL}$ links the distance loss to the angle loss.

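The shape loss function, SL, is defined as follows:

$$\mathrm{SL} = \sum_{t = w, h}\left(1 - e^{-\omega_t}\right)^{\theta}, \qquad \omega_w = \frac{\lvert w - w^{gt} \rvert}{\max\left(w, w^{gt}\right)}, \qquad \omega_h = \frac{\lvert h - h^{gt} \rvert}{\max\left(h, h^{gt}\right)}$$

In these formulas, $w^{gt}$ and $h^{gt}$ are the width and height of the ground-truth box, $w$ and $h$ are the width and height of the predicted box, and $\theta$ controls the degree of attention paid to the shape loss.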

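The IoU term is defined as follows:

$$\mathrm{IoU} = \frac{\lvert B \cap B^{gt} \rvert}{\lvert B \cup B^{gt} \rvert}$$

where $B$ is the predicted box and $B^{gt}$ is the ground-truth box. Figure 7 shows the intersection and union.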

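The SIoU loss function is defined as follows:

$$L_{\mathrm{SIoU}} = 1 - \mathrm{IoU} + \frac{\mathrm{DL} + \mathrm{SL}}{2}$$

In this formula, IoU denotes the ratio of the intersection to the union of the predicted and ground-truth boxes, DL is the distance loss, and SL is the shape loss.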
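The following scalar sketch computes the SIoU loss for a single pair of boxes, term by term: angle, distance, shape, and IoU. Boxes are given as (center x, center y, width, height). The values θ = 4 and the small ε guarding the divisions are assumptions, and `siou_loss` is a hypothetical name rather than the paper's code, which would operate on batched tensors.

```python
import math


def siou_loss(pred, gt, theta=4.0, eps=1e-7):
    """SIoU loss for one predicted box and one ground-truth box, both (cx, cy, w, h)."""
    px, py, pw, ph = pred
    gx, gy, gw, gh = gt

    # Corner coordinates of both boxes
    p1x, p1y, p2x, p2y = px - pw / 2, py - ph / 2, px + pw / 2, py + ph / 2
    g1x, g1y, g2x, g2y = gx - gw / 2, gy - gh / 2, gx + gw / 2, gy + gh / 2

    # IoU term: intersection area / union area
    inter = max(0.0, min(p2x, g2x) - max(p1x, g1x)) * max(0.0, min(p2y, g2y) - max(p1y, g1y))
    iou = inter / (pw * ph + gw * gh - inter + eps)

    # Width C_w and height C_h of the minimum enclosing rectangle
    enc_w = max(p2x, g2x) - min(p1x, g1x) + eps
    enc_h = max(p2y, g2y) - min(p1y, g1y) + eps

    # Angle loss: AL = 1 - 2 sin^2(arcsin(c_h / sigma) - pi / 4)
    sigma = math.hypot(gx - px, gy - py) + eps  # distance between the centers
    c_h = abs(gy - py)  # height difference between the centers
    sin_alpha = min(c_h / sigma, 1.0)
    angle = 1.0 - 2.0 * math.sin(math.asin(sin_alpha) - math.pi / 4) ** 2

    # Distance loss with gamma = 2 - AL
    gamma = 2.0 - angle
    rho_x = ((gx - px) / enc_w) ** 2
    rho_y = ((gy - py) / enc_h) ** 2
    dist = (1.0 - math.exp(-gamma * rho_x)) + (1.0 - math.exp(-gamma * rho_y))

    # Shape loss
    omega_w = abs(pw - gw) / max(pw, gw)
    omega_h = abs(ph - gh) / max(ph, gh)
    shape = (1.0 - math.exp(-omega_w)) ** theta + (1.0 - math.exp(-omega_h)) ** theta

    return 1.0 - iou + (dist + shape) / 2.0


print(siou_loss((5.0, 5.0, 2.0, 2.0), (5.0, 5.0, 2.0, 2.0)))  # ~0 for identical boxes
print(siou_loss((0.0, 0.0, 2.0, 2.0), (10.0, 0.0, 2.0, 2.0)))  # > 1 for disjoint boxes
```

Unlike a pure 1 - IoU loss, the distance term still produces a useful gradient when the boxes do not overlap, and the angle term makes the center offset cheapest to correct along one axis first.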

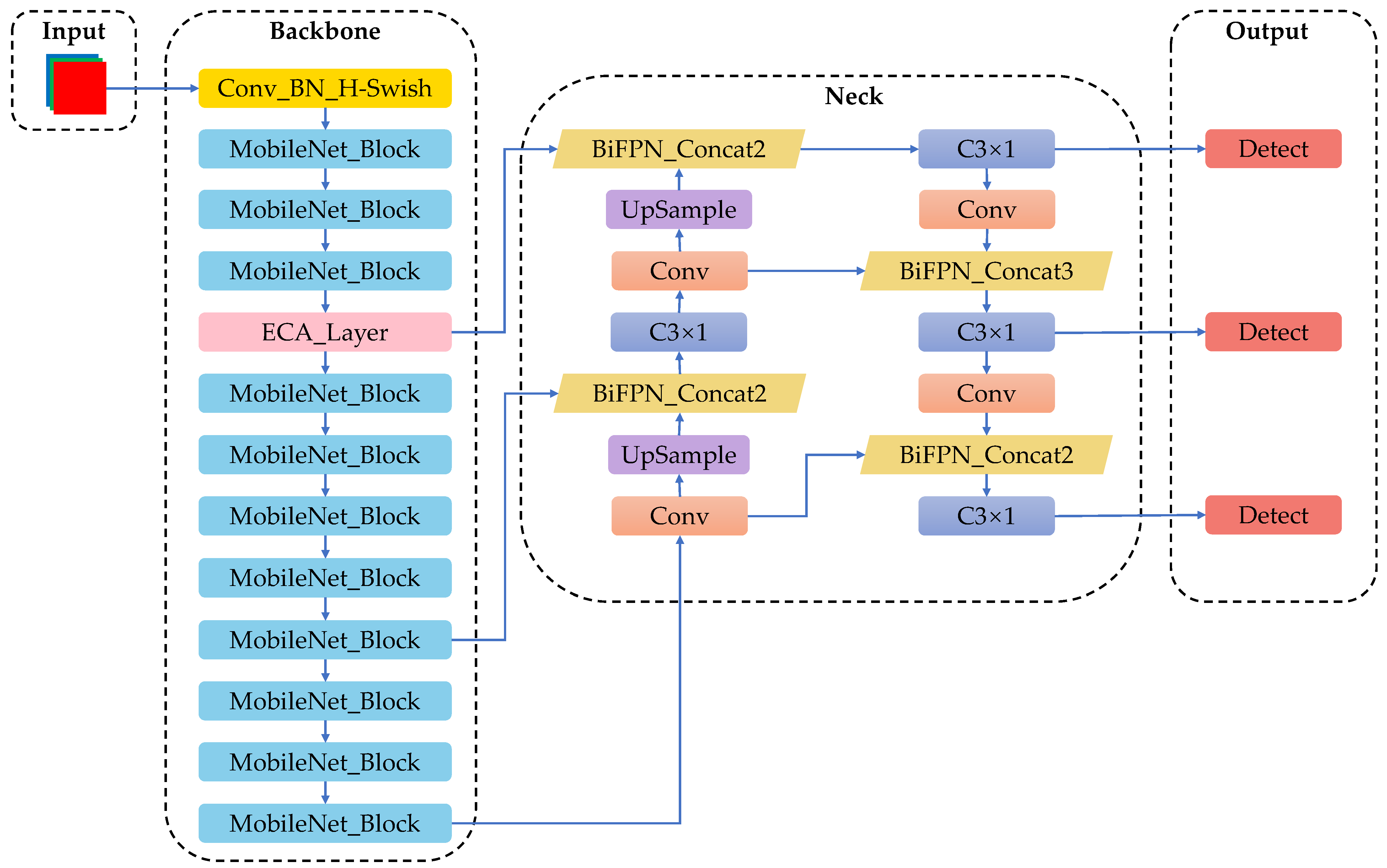

Figure 8 displays the network topology of the final, enhanced ECMB-Yolov5 model.

4. Results

4.1. Experimental Data and Evaluation Indexes

4.1.1. Experimental Data

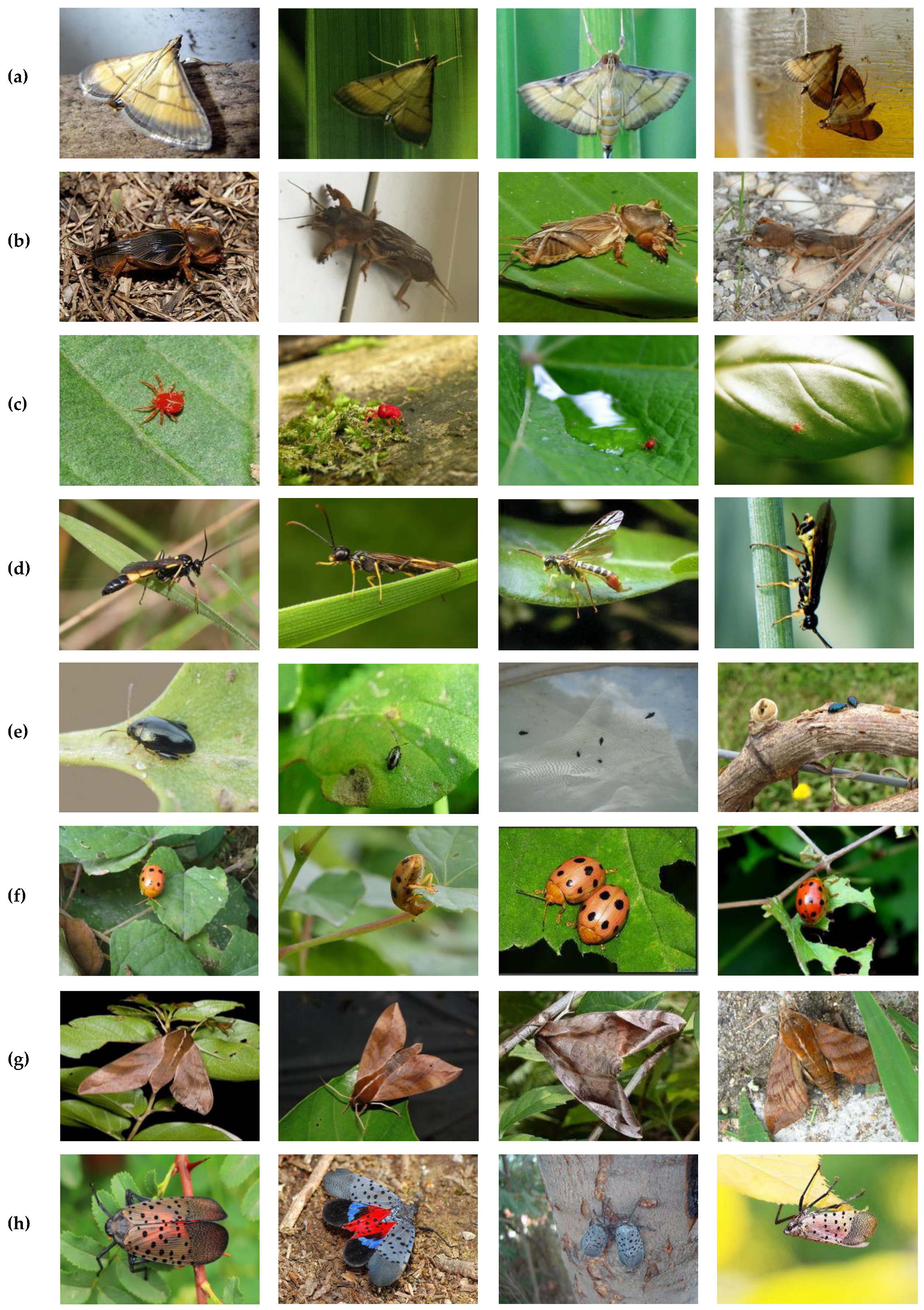

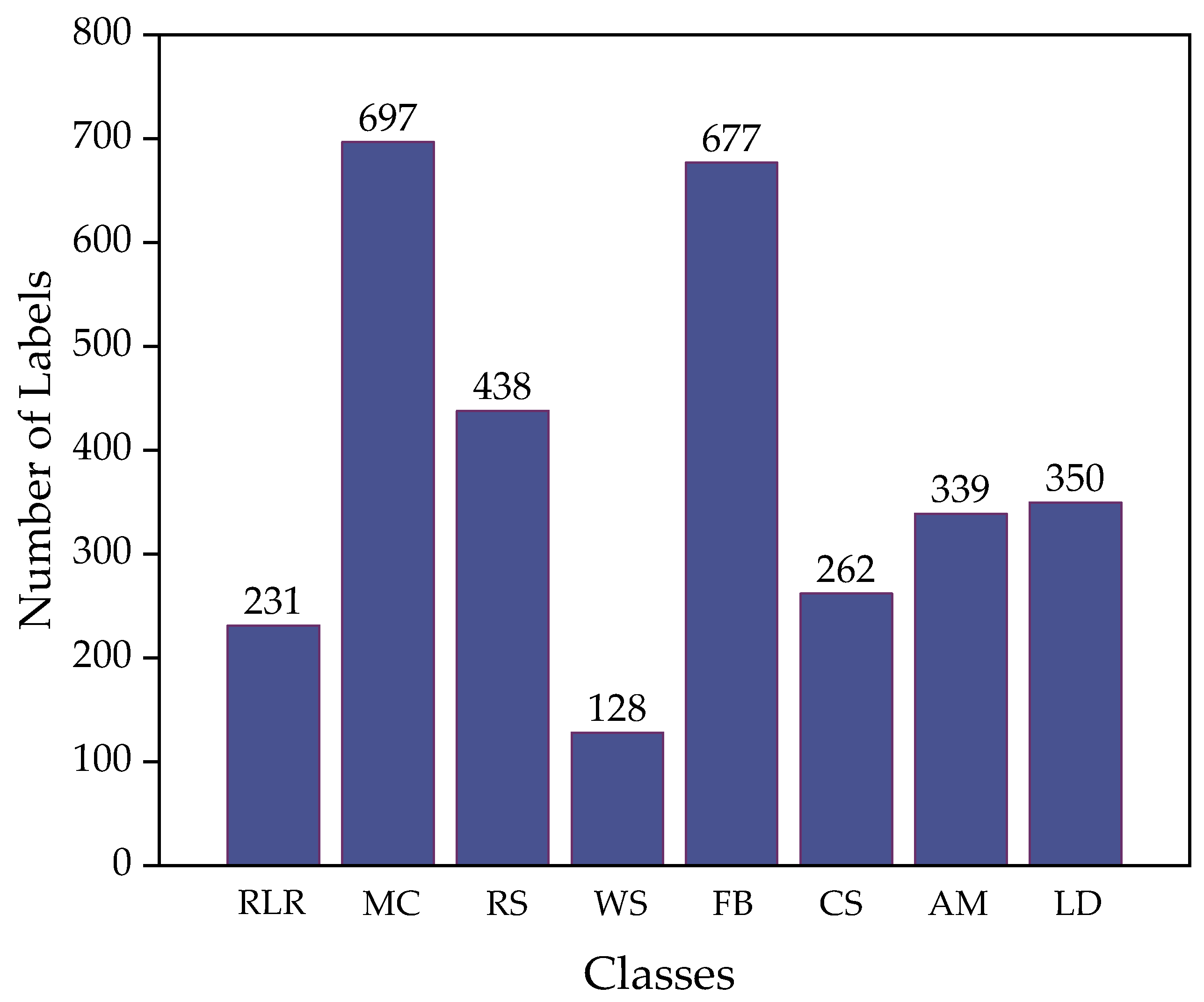

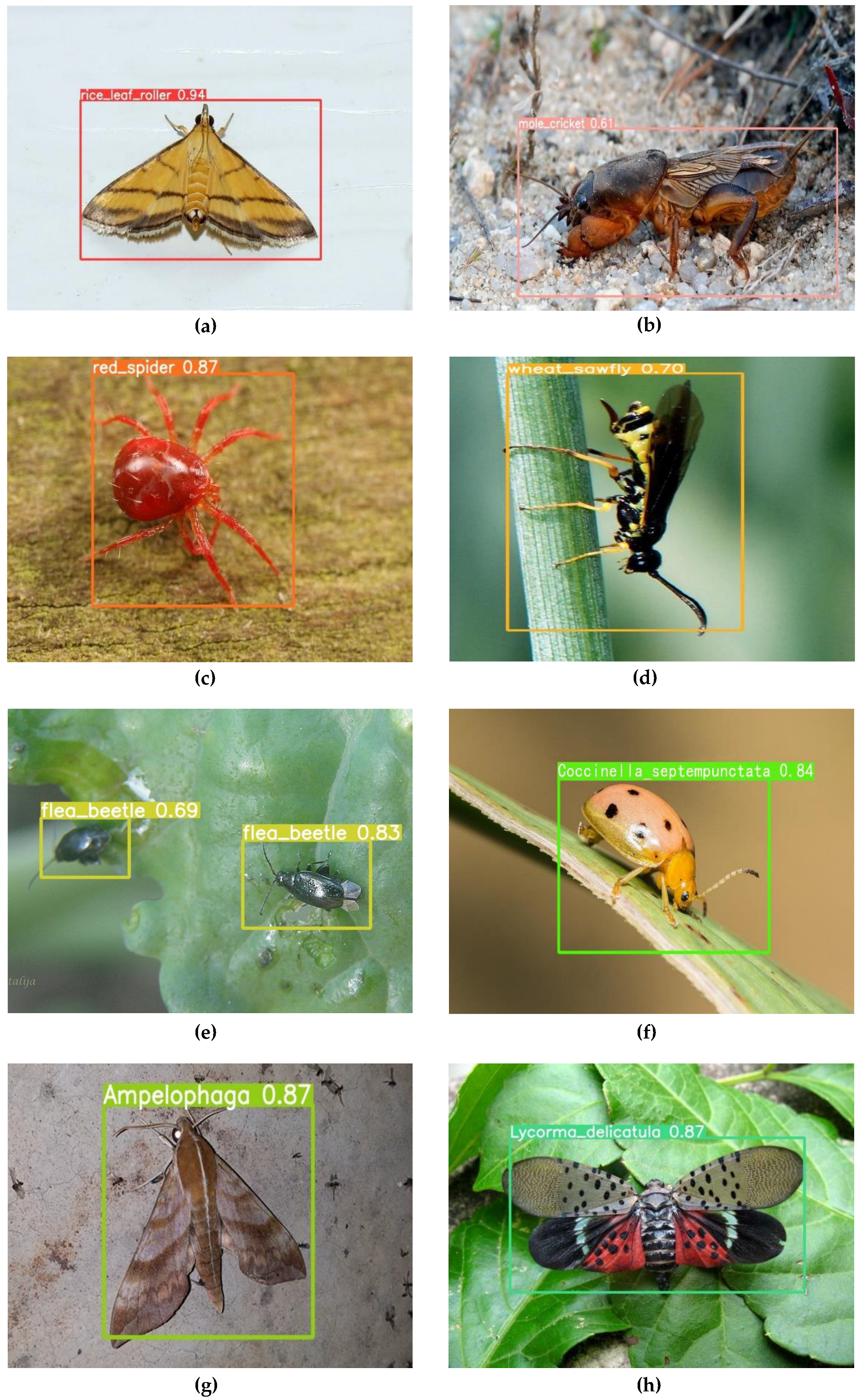

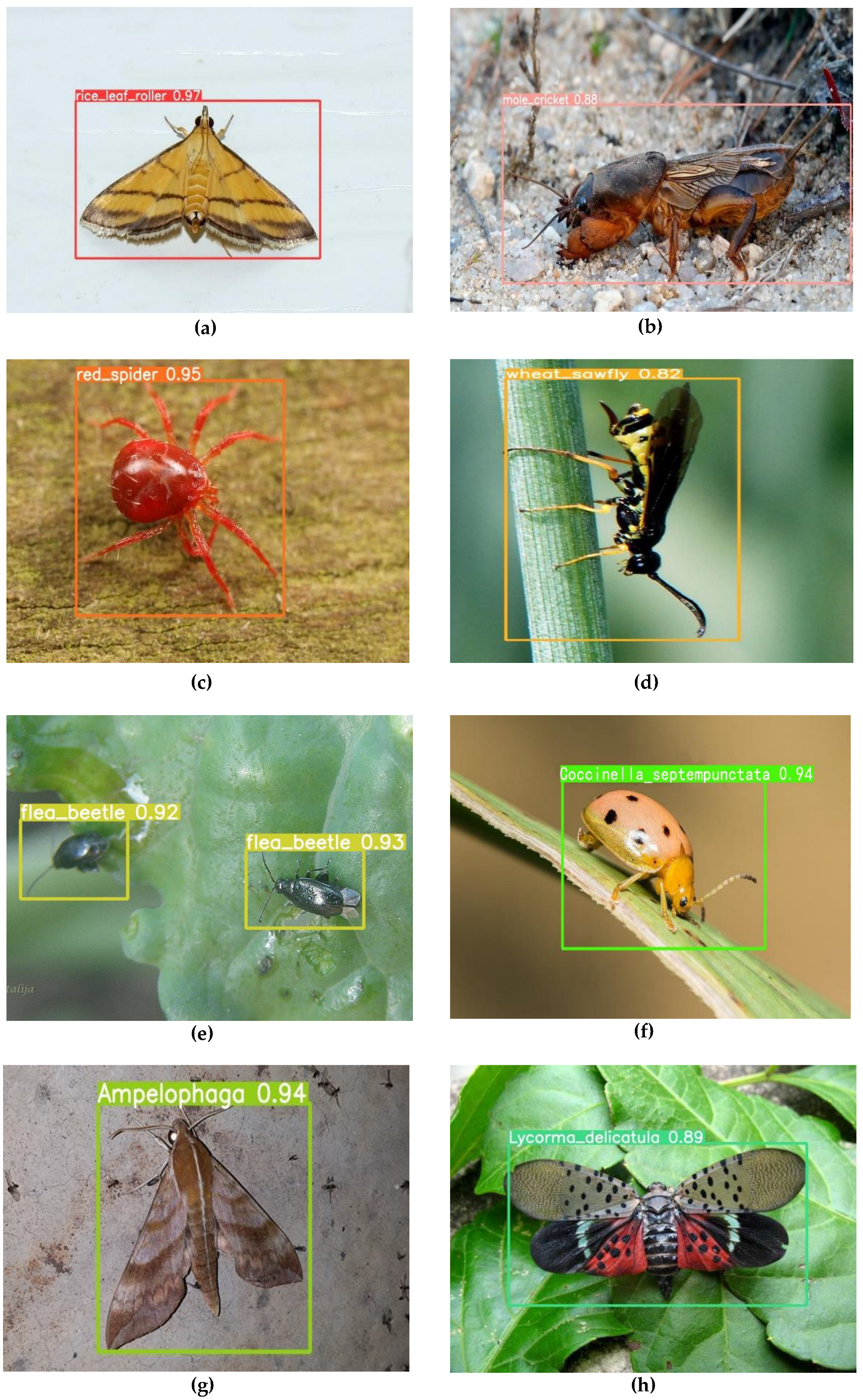

The dataset used in this study was partly derived from the large-scale open-source dataset IP102 and partly photographed with a cell phone. In total, 2570 images were collected, covering eight categories: Rice Leaf Roller (RLR), Mole Cricket (MC), Red Spider (RS), Wheat Sawfly (WS), Flea Beetle (FB), Coccinella Septempunctata (CS), Ampelophaga (AM), and Lycorma Delicatula (LD). The images were labeled with the LabelImg software, and the dataset was then randomly split into training, validation, and test sets in a ratio of 8:1:1. Representative samples of each pest class are shown in Figure 9.
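A random 8:1:1 split like the one described above can be reproduced with the standard library. The file names and the fixed seed are hypothetical, and integer division assigns any rounding remainder to the test set.

```python
import random


def split_dataset(items, ratios=(8, 1, 1), seed=0):
    """Shuffle items reproducibly and split them into train/val/test by integer ratios."""
    items = list(items)
    random.Random(seed).shuffle(items)  # fixed seed (an assumption) for reproducibility
    total = sum(ratios)
    n_train = len(items) * ratios[0] // total
    n_val = len(items) * ratios[1] // total
    return (
        items[:n_train],
        items[n_train:n_train + n_val],
        items[n_train + n_val:],  # the remainder goes to the test set
    )


images = [f"img_{i:04d}.jpg" for i in range(2570)]  # hypothetical file names
train, val, test = split_dataset(images)
print(len(train), len(val), len(test))  # 2056 257 257
```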

The number of labels for each category in the dataset is shown in Figure 10.

4.1.2. Evaluation Indexes

To select the optimal model, several metrics were used to evaluate model performance: precision (P), recall (R), mean average precision (mAP), preprocessing time (Preprocess), network inference time (Inference), postprocessing time (NMS), floating-point operations (GFLOPs), and frames per second (FPS). mAP@0.5 is the mean average precision over all categories at an IoU threshold of 0.5, and mAP@0.5:0.95 is the mean average precision over all categories averaged across IoU thresholds from 0.5 to 0.95 in steps of 0.05.

$$P = \frac{TP}{TP + FP}, \qquad R = \frac{TP}{TP + FN}$$

$$AP = \int_{0}^{1} P(r)\,dr, \qquad mAP = \frac{1}{N}\sum_{i=1}^{N} AP_i$$

In these formulas, TP is the number of positive samples correctly identified as positive, FP is the number of negative samples mistakenly identified as positive, and FN is the number of positive samples mistakenly identified as negative. N is the number of sample categories, and P(r) denotes the precision-recall (PR) curve. The average precision (AP) is the area under the PR curve, with recall on the horizontal axis and precision on the vertical axis.
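The AP and mAP definitions above can be made concrete with an all-point-interpolated AP computation. The sketch assumes each detection has already been matched against the ground truth at a given IoU threshold (the `is_tp` flags) and uses the precision envelope common in VOC/COCO-style evaluators; it is not the exact evaluation code used in the paper.

```python
def precision_recall(tp, fp, fn):
    """P = TP / (TP + FP) and R = TP / (TP + FN); 0.0 for an empty denominator."""
    p = tp / (tp + fp) if tp + fp else 0.0
    r = tp / (tp + fn) if tp + fn else 0.0
    return p, r


def average_precision(scores, is_tp, num_gt):
    """All-point-interpolated AP for one class at one IoU threshold."""
    if num_gt == 0:
        return 0.0
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    tp = fp = 0
    recalls, precisions = [], []
    for i in order:  # sweep the confidence threshold from high to low
        if is_tp[i]:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_gt)
        precisions.append(tp / (tp + fp))

    # Add sentinels, then make precision non-increasing from right to left (the envelope)
    mrec = [0.0] + recalls + [1.0]
    mpre = [0.0] + precisions + [0.0]
    for k in range(len(mpre) - 2, -1, -1):
        mpre[k] = max(mpre[k], mpre[k + 1])

    # Area under the step-shaped PR curve
    return sum((mrec[k] - mrec[k - 1]) * mpre[k] for k in range(1, len(mrec)))


def mean_ap(ap_per_class):
    """mAP = (1 / N) * sum of per-class AP values."""
    return sum(ap_per_class) / len(ap_per_class)


# IoU thresholds averaged in mAP@0.5:0.95
IOU_THRESHOLDS = [round(0.5 + 0.05 * i, 2) for i in range(10)]

ap = average_precision([0.9, 0.8, 0.7], [True, False, True], num_gt=2)
print(round(ap, 4), IOU_THRESHOLDS[-1])  # 0.8333 0.95
```

For mAP@0.5:0.95, the same AP computation is repeated at each of the ten thresholds (re-matching detections each time) and the results are averaged.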

4.2. Experimental Results and Analysis

4.2.1. Experimental Environment and Configuration

The models were trained with the following hardware and software configuration: an Intel i5-11400H CPU, an NVIDIA GeForce RTX 3050 graphics card with 4 GB of video memory, the Windows operating system, CUDA 11.6, PyTorch 1.11.0, and Python 3.9.12. Training settings: a batch size of 16, an input image resolution of 640 × 640, and 300 epochs.

4.2.2. Backbone Network Performance Comparison

Three lightweight feature extraction networks, ShuffleNetV2, GhostNet, and MobileNetV3, were selected to rebuild the original Yolov5 backbone network and reduce the number of parameters. The effects of the different backbone networks were compared, and the experimental results are shown in Table 2. The results show that the number of parameters of the Yolov5 model with a MobileNetV3 backbone was about one-third of that of the models using the other two networks. In addition, on our dataset, the Yolov5s-MobileNetV3 network achieved the highest accuracy of the three, at 98.1%. Therefore, considering both computational cost and accuracy, we chose MobileNetV3 to rebuild the backbone network.

4.2.3. Ablation Experiments

Five ablation experiments were designed to study the improvements to different parts of the network structure: (1) the original Yolov5s model; (2) the model with the neck network improvement; (3) the model with the backbone network improvement; (4) the model with the backbone and neck network improvements; and (5) the model with the backbone, neck, and loss function improvements, which is the final improved model, ECMB-Yolov5.

The results of the ablation experiments over all categories are shown in Table 3. In the second experiment, which improved the neck network of the original Yolov5s, the number of parameters increased by only 0.9% while mAP@0.5 increased by 0.1%, indicating that BiFPN improves detection accuracy. The third, fourth, and fifth experiments used MobileNetV3 as the backbone network, and P, R, and mAP@0.5 improved progressively across them. The ECMB-Yolov5 model has 80.3% fewer parameters than the original Yolov5s and requires only 21.5% of its memory, so both its parameter count and its memory footprint are much smaller than those of the original model. Although the P and R of the final improved model were slightly lower than those of the original model and mAP@0.5 decreased by 0.8%, a lightweight model is essential for real-time detection on an embedded device such as the Jetson Nano. The ECMB-Yolov5 model sacrifices very little detection accuracy while significantly reducing the number of parameters and the required memory, which substantially improves the real-time detection speed, as shown in Section 4.2.5.

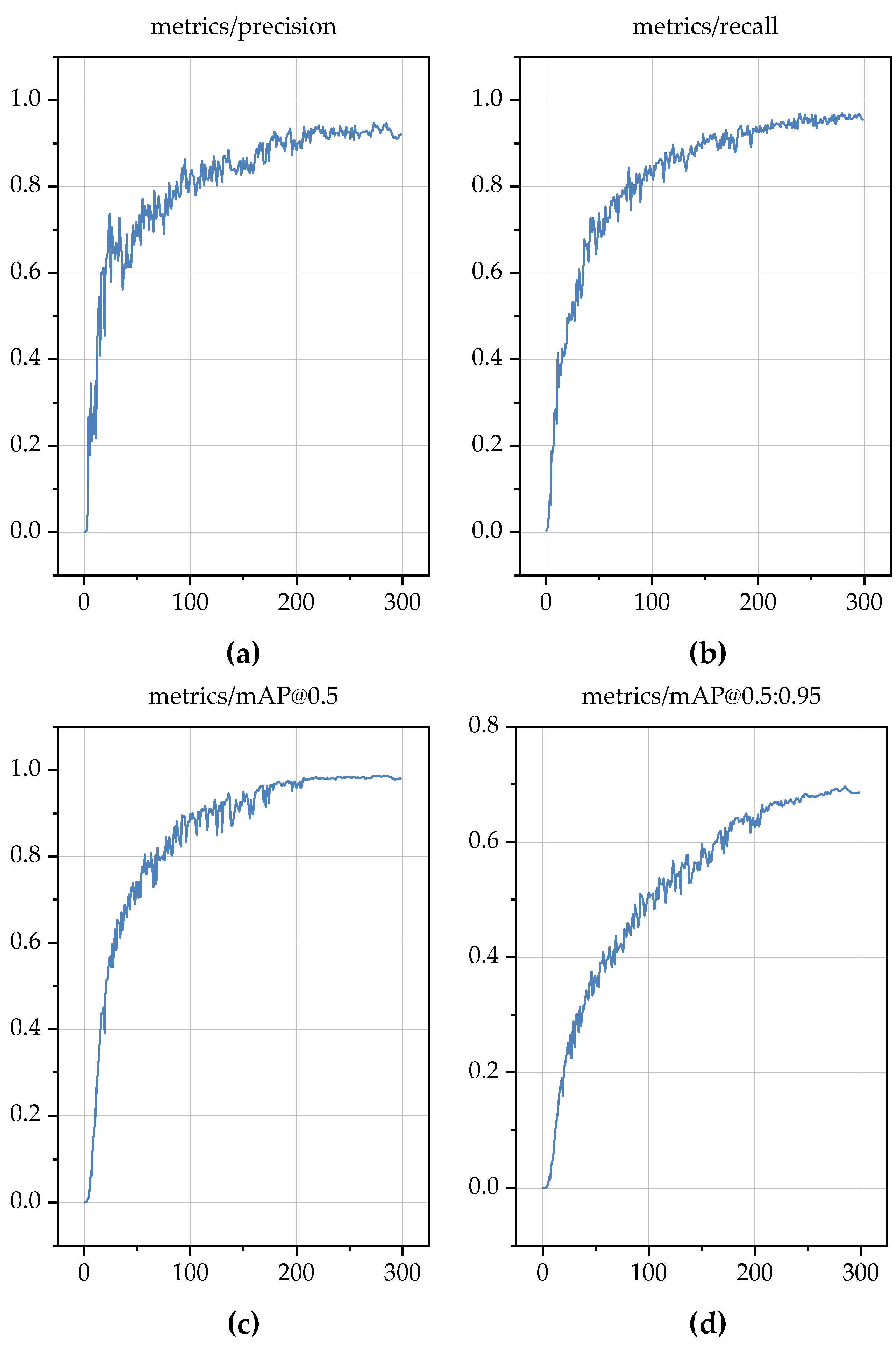

We compared the models whose backbone was rebuilt with MobileNetV3. The convergence of the mAP@0.5 metric for the Yolov5s-MobileNetV3 model, the Yolov5s-MobileNetV3-BiFPN model, and the ECMB-Yolov5 model is shown in Figure 11. The final accuracy of the ECMB-Yolov5 model was better than that of the other two models.

Figure 12 depicts the convergence of precision, recall, mAP@0.5, and mAP@0.5:0.95 during the training of the ECMB-Yolov5 model, showing that the improvements lead to faster convergence of the network.

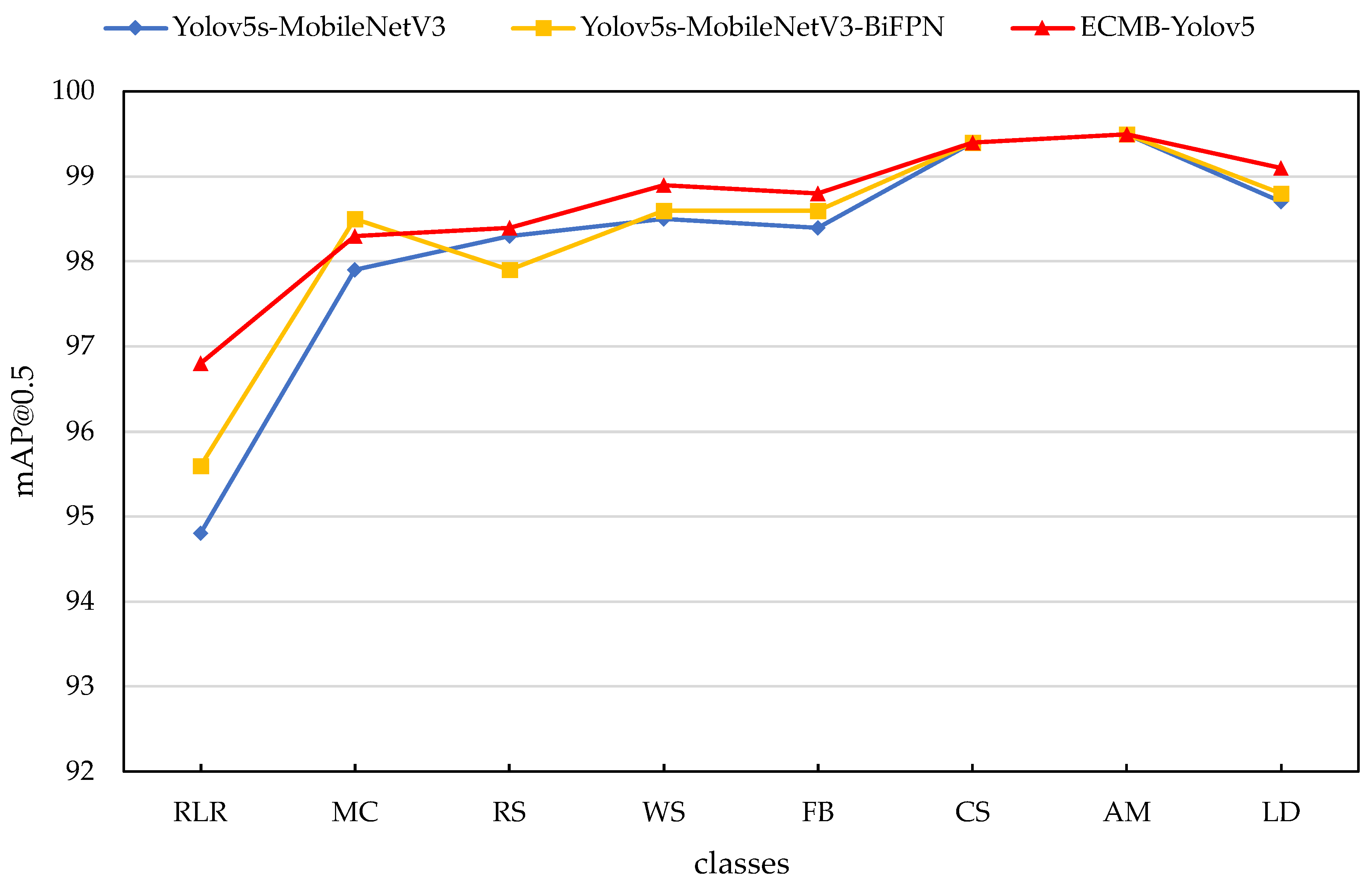

The per-category ablation results for the models whose backbone was rebuilt with MobileNetV3, that is, the mAP of each pest category in each model, are shown in Figure 13. Compared with the other two models, the ECMB-Yolov5 model increased mAP@0.5 for all categories except for a slight decrease in the Mole Cricket category.

4.2.4. Comparison Experiments with Other Common Models

To select the baseline network and to make a more comprehensive comparison, we compared several other common object detection models. The experimental environment and configuration are described in Section 4.2.1, and the results are shown in Table 4. The results show that the number of parameters and the memory size of the Yolov5s model were much lower than those of Faster R-CNN, Yolov3, Yolov4, and Yolov7, while its P, R, and mAP@0.5 were higher. The Yolov5s model therefore has the advantages of a small size and higher accuracy. Yolov4-tiny is a lightweight version of Yolov4; compared with it, the improved model has only 23.5% of its parameters and 13.8% of its memory, and its average accuracy is 8.4% higher. Weighing the number of parameters against average accuracy, the improved model is therefore the more advantageous choice for real-time insect detection.

4.2.5. Speed Comparison Experiments Deployed on Jetson Nano

The hardware configuration of the Jetson Nano is shown in Table 5.

The Jetson Nano environment was built as follows: the Ubuntu 18.04 operating system was installed; the model runtime environment was configured with JetPack 4.6, Python 3.6.9, PyTorch 1.8.0, Torchvision 0.9.0, CUDA 10.2, and cuDNN 8.2; the graphics memory was expanded from 3.9 GB to 7.7 GB; and a CSI camera was attached. The Jetson Nano device is shown in Figure 14.

The experimental results are shown in Table 6. The ECMB-Yolov5 model has a shorter forward time than the original model, a 37.5% improvement, where the forward time comprises the preprocessing time, the network forward (inference) time, and the postprocessing time. The floating-point operations of the improved model are much lower than those of the original model, and the final FPS increased by 5.7. Our improved model can therefore be deployed effectively on embedded devices for real-time detection.
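As a quick consistency check, if FPS is taken as the reciprocal of the per-frame forward time (an assumption; the per-stage timings of Table 6 are not reproduced here), the 9.5 FPS and 15.2 FPS reported for Yolov5s and ECMB-Yolov5 on the Jetson Nano correspond to the 37.5% reduction in forward time and the 5.7 FPS gain stated in the text.

```python
def frame_time_ms(fps):
    """Per-frame time in milliseconds, assuming FPS = 1000 / frame time."""
    return 1000.0 / fps


base_fps, ecmb_fps = 9.5, 15.2  # Yolov5s and ECMB-Yolov5 on the Jetson Nano (from the text)
t_base, t_ecmb = frame_time_ms(base_fps), frame_time_ms(ecmb_fps)

time_reduction = 1.0 - t_ecmb / t_base  # equals 1 - 9.5 / 15.2
fps_gain = ecmb_fps - base_fps
speedup = ecmb_fps / base_fps

print(f"{t_base:.1f} ms -> {t_ecmb:.1f} ms, reduction {time_reduction:.1%}, "
      f"+{fps_gain:.1f} FPS, {speedup:.2f}x")
```

A 37.5% shorter frame time is the same as a 1.6× higher frame rate, which is why a modest-sounding time saving translates into a large FPS gain.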

The detection results of the ECMB-Yolov5 model and the Yolov5s model on the Jetson Nano for the eight pest categories are shown in Figure 15 and Figure 16, respectively. These results show that the detection accuracy of the ECMB-Yolov5 model was marginally lower than that of the Yolov5s model, while its real-time detection speed was 15.2 FPS compared with 9.5 FPS for the Yolov5s model. Considering both detection accuracy and real-time speed, the ECMB-Yolov5 model maintained good detection accuracy while detecting considerably faster.

5. Discussion

Pests cause serious damage to crops throughout the year, resulting in serious losses to the agricultural economy. Because pests must be controlled at their source and detected accurately so that control measures can be targeted, the study of pest detection algorithms is of great practical importance. The shape and size of different pest species vary widely [56], which makes pest targets difficult to detect. In addition, when deployed on a mobile embedded device for outdoor real-time detection, the Yolov5s model falls short of the performance required for subsequent studies. We therefore improved the Yolov5s model in several ways.

First, because the model must be deployed on mobile embedded devices with limited computing power and the Yolov5s model has a large number of parameters, we made the model lightweight. We rebuilt the backbone network using the MobileNet_Block module from MobileNetV3, which significantly reduced the number of network parameters. However, this weakened feature extraction and reduced mAP@0.5. To compensate, the ECA attention mechanism was added to the shallow layers of MobileNetV3. The ECA module uses a local cross-channel interaction strategy without dimensionality reduction and an adaptively selected one-dimensional convolution kernel size to enhance the performance of the network model. The revised model has only 19.7% as many parameters as the Yolov5s model.

Second, we found that most pest targets in the dataset were small or medium-sized; we therefore used BiFPN to improve the PANet of the original model and increase the detection accuracy of the lightweight model for small targets. The experimental results showed that the number of parameters increased by only 0.9%, while detection accuracy increased by 0.1%. Yolov5 uses the CIoU loss at the output, which can slow convergence and hinder model optimization. We therefore replaced it with the SIoU loss function, which additionally considers the vector angle between the ground-truth box and the predicted box and redefines four penalty terms: angle loss, distance loss, shape loss, and IoU loss. As a result, the convergence speed of the predicted box and the accuracy of inference were improved.

The resulting ECMB-Yolov5 model has a size of just 3.1 MB, a detection accuracy of 98.6%, and a real-time detection speed of 15.2 FPS on the Jetson Nano. In follow-up work, we need to improve ECMB-Yolov5 further. First, there is still room to improve the model's ability to identify tiny targets; we aim to analyze the reasons for this and improve it. Second, real-time detection has so far been tested only in the laboratory; in future research, we will deploy the model on unmanned carts for field detection. In addition, we aim to build a pest detection system that supports practical agricultural pest control.

6. Conclusions

In this paper, we addressed two problems in pest detection. First, existing methods often have low detection accuracy and cover only a single category of crop pests. Second, they are difficult to deploy on mobile embedded devices for real-time detection. Our lightweight detection model is based on an enhanced Yolov5s. On the Jetson Nano, the final model has a size of just 3.1 MB, a detection accuracy (mAP@0.5) of 98.6%, and a real-time detection speed of 15.2 FPS, compared with 9.5 FPS for Yolov5s. The model therefore runs substantially faster than Yolov5s at the cost of a slight decrease in accuracy.

In this study, the backbone network of the Yolov5s model was rebuilt using the MobileNetV3 network, reducing the model's parameter count to just 19.7% of the original model. The reduced number of parameters weakened the feature extraction capability of the backbone. To compensate, the ECA attention mechanism was incorporated into MobileNetV3; it adds only a small number of parameters while effectively improving the network's performance. Replacing the PAnet structure with BiFPN enhanced the network's ability to extract features of small targets. Replacing the CIoU loss function with SIoU improved the convergence speed and accuracy of the predicted box.
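
What distinguishes BiFPN from PAnet is its learnable weighted feature fusion, introduced with EfficientDet [16]. The sketch below shows EfficientDet's "fast normalized fusion", O = Σ_i w_i·I_i / (ε + Σ_j w_j), where a ReLU keeps each w_i non-negative and ε = 1e-4 as in EfficientDet. It operates on feature maps that are flattened to lists and already resized to a common shape. The article does not state whether the ECMB-Yolov5 neck fuses inputs by weighted sum or by weighted concatenation. Treat this as an illustration of the technique, not as the authors' code.

```python
def fast_normalized_fusion(features, weights, eps=1e-4):
    """Fuse same-shaped feature maps (flattened to lists) with learnable scalar weights."""
    if len(features) != len(weights):
        raise ValueError("expected one weight per input feature map")
    w = [max(0.0, x) for x in weights]  # ReLU keeps every weight non-negative
    total = sum(w) + eps                # eps avoids division by zero
    norm = [x / total for x in w]       # normalised weights sum to (almost) 1
    length = len(features[0])
    return [sum(n * f[i] for n, f in zip(norm, features)) for i in range(length)]

# Two inputs with equal weights: the output is close to their element-wise mean
print(fast_normalized_fusion([[1.0, 1.0], [3.0, 3.0]], [1.0, 1.0]))
```

Compared with a softmax over the weights, this normalisation avoids exponentials. EfficientDet adopted it for speed, which also suits a lightweight detector.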

These experimental results show that the proposed model is effective for real-time pest detection. Next, we will investigate the causes of the accuracy loss in the lightweight model, improve its accuracy while maintaining detection speed, and eventually build a real-time pest detection system.

Author Contributions

Conceptualization, J.Z.; methodology, J.Z.; software, J.Z.; validation, J.Z.; formal analysis, J.W.; investigation, J.Z.; resources, J.W.; data curation, J.Z.; writing—original draft preparation, J.Z.; writing—review and editing, J.W.; visualization, J.Z.; supervision, M.Z.; project administration, M.Z.; funding acquisition, J.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Jiangsu Overseas Visiting Scholar Program for University Prominent Young & Middle-aged Teachers and Presidents (grant number 2019070).

Data Availability Statement

All data generated or presented in this study are available upon request from the corresponding author. However, the models and code used during this study cannot be shared, as the data also form part of an ongoing study.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Naba, K. Recent problems in pest management technologies on crop field—Ideals, realities and prospects of integrated pest management in Japan. J. Pestic. Sci. 2001, 26, 399–407.

2. Hugo, D.G. Economic analysis of pest problems in agriculture and food chains in Africa. Curr. Opin. Insect Sci. 2022, 54, 100969.

3. Deng, L.; Wang, Z.; Zhou, H. Application of Image Segmentation Technology in Crop Disease Detection and Recognition. IFIP Adv. Inf. Commun. Technol. 2019, 545, 365–374.

4. Dimililer, K.; Zarrouk, S. ICSPI: Intelligent Classification System of Pest Insects Based on Image Processing and Neural Arbitration. Appl. Eng. Agric. 2017, 33, 453–460.

5. Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich Feature Hierarchies for Accurate Object Detection and Semantic Segmentation. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

6. Girshick, R. Fast R-CNN. In Proceedings of the 2015 IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

7. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 39, 1137–1149.

8. Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single Shot MultiBox Detector. Computer Vision—ECCV 2016; Springer International Publishing: Cham, Switzerland, 2016; pp. 21–37.

9. Li, K.S.; Wang, J.C.; Jalil, H.; Wang, H. A fast and lightweight detection algorithm for passion fruit pests based on improved YOLOv5. Comput. Electron. Agric. 2023, 204, 107534.

10. Yang, Z.; Feng, H.; Ruan, Y.; Weng, X. Tea Tree Pest Detection Algorithm Based on Improved Yolov7-Tiny. Agriculture 2023, 13, 1031.

11. Suo, J.S.; Zhang, X.Z.; Zhang, S.L.; Zhou, W.; Shi, W. Feasibility Analysis of Machine Learning Optimization on GPU-based Low-cost Edges. In Proceedings of the 2021 IEEE SmartWorld, Ubiquitous Intelligence & Computing, Advanced & Trusted Computing, Scalable Computing & Communications, Internet of People and Smart City Innovation (SmartWorld/SCALCOM/UIC/ATC/IOP/SCI), Atlanta, GA, USA, 18–21 October 2021; pp. 89–96.

12. Wu, X.P.; Zhan, C.; Lai, Y.K.; Cheng, M.M.; Yang, J. IP102: A Large-Scale Benchmark Dataset for Insect Pest Recognition. In Proceedings of the 32nd IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019; pp. 8779–8788.

13. Ultralytics-Yolov5. Available online: https://github.com/ultralytics/yolov5 (accessed on 1 October 2022).

14. Howard, A.; Sandler, M.; Chu, G.; Chen, L.C.; Chen, B.; Tan, M.; Wang, W.; Zhu, Y.; Pang, R.; Vasudevan, V.; et al. Searching for MobileNetV3. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 1314–1324.

15. Wang, Q.L.; Wu, B.G.; Zhu, P.F.; Li, P.; Zuo, W.; Hu, Q. ECA-Net: Efficient Channel Attention for Deep Convolutional Neural Networks. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 11531–11539.

16. Tan, M.X.; Pang, R.M.; Le, Q.V. EfficientDet: Scalable and Efficient Object Detection. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 10778–10787.

17. Liu, S.; Qi, L.; Qin, H.F.; Shi, J.; Jia, J. Path Aggregation Network for Instance Segmentation. In Proceedings of the 31st IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 8759–8768.

18. Gevorgyan, Z. SIoU Loss: More Powerful Learning for Bounding Box Regression. arXiv 2022, arXiv:2205.12740.

19. Bioucas-Dias, J.M.; Plaza, A.; Camps-Valls, G.; Scheunders, P.; Nasrabadi, N.; Chanussot, J. Hyperspectral Remote Sensing Data Analysis and Future Challenges. IEEE Geosci. Remote Sens. Mag. 2013, 1, 6–36.

20. Deborah, H.; Richard, N.; Hardeberg, J.Y. On The Quality Evaluation of Spectral Image Processing Algorithms. In Proceedings of the 2014 Tenth International Conference on Signal-Image Technology and Internet-Based Systems, Marrakech, Morocco, 23–27 November 2014; pp. 133–140.

21. Li, Y.Y.; Zhang, H.; Duan, Z.; Lian, M.; Zhao, G.Y.; Sun, X.H.; Hu, J.D.; Gao, L.N.; Feng, H.Q.; Svanberg, S. Optical characterization of agricultural pest insects: A methodological study in the spectral and time domains. Appl. Phys. B-Lasers Opt. 2016, 122, 213.

22. Vanegas, F.; Bratanov, D.; Powell, K.; Weiss, J.; Gonzalez, F. A Novel Methodology for Improving Plant Pest Surveillance in Vineyards and Crops Using UAV-Based Hyperspectral and Spatial Data. Sensors 2018, 18, 260.

23. Wang, Q.J.; Zhang, S.Y.; Dong, S.F.; Zhang, G.-C.; Yang, J.; Li, R.; Wang, H.-Q. Pest24: A large-scale very small object data set of agricultural pests for multi-target detection. Comput. Electron. Agric. 2020, 175, 105585.

24. Zhang, W.J.; Yang, G.S.; Lin, Y.Z.; Ji, C.; Gupta, M.M. On Definition of Deep Learning. In Proceedings of the 2018 World Automation Congress (WAC), Stevenson, WA, USA, 3–6 June 2018; pp. 232–236.

25. Liu, J.F.; Wu, J.Z.; Zhang, J.H.; Liu, J. Status and Prospects of Agricultural Information Technology. In Proceedings of the 2016 International Conference on Computer Science and Electronic Technology, Zhengzhou, China, 13–14 August 2016; pp. 322–327.

26. Lee, Y.J.; Yoo, S.; Gu, Y.H.; Piao, Z.; Yi, H.; Park, J.H. An Integrated Image Retrieval and Recognition System for Detecting Diseases and Insect Pests. J. Korean Inst. Next Gener. Comput. 2017, 13, 100–111.

27. Wang, F.Y.; Wang, R.J.; Xie, C.J.; Zhang, J.; Li, R.; Liu, L. Convolutional neural network based automatic pest monitoring system using hand-held mobile image analysis towards non-site-specific wild environment. Comput. Electron. Agric. 2021, 187, 106268.

28. Liu, C.K.; Zhai, Z.Q.; Zhang, R.Y.; Bai, J.; Zhang, M. Field pest monitoring and forecasting system for pest control. Front. Plant Sci. 2022, 13, 990965.

29. Pang, H.T.; Zhang, Y.T.; Cai, W.M.; Li, B.; Song, R. A real-time object detection model for orchard pests based on improved YOLOv4 algorithm. Sci. Rep. 2022, 12, 13557.

30. Gong, M.L.; Wang, D.; Zhao, X.X.; Guo, H.; Luo, D.; Song, M. A review of non-maximum suppression algorithms for deep learning target detection. In Proceedings of the 7th Symposium on Novel Photoelectronic Detection Technology and Applications, Kunming, China, 5–7 November 2020; p. 133.

31. Liang, T.J.; Bao, H. A optimized YOLO method for object detection. In Proceedings of the 2020 16th International Conference on Computational Intelligence and Security (CIS), Nanning, China, 27–30 November 2020; pp. 30–34.

32. Qi, F.; Wang, Y.; Tang, Z.; Chen, S. Real-time and effective detection of agricultural pest using an improved YOLOv5 network. J. Real-Time Image Process. 2023, 20, 33.

33. Zhang, W.; Huang, H.; Sun, Y.; Wu, X. AgriPest-YOLO: A rapid light-trap agricultural pest detection method based on deep learning. Front. Plant Sci. 2022, 13, 1079384.

34. Xiang, Q.; Huang, X.; Huang, Z.; Chen, X.; Cheng, J.; Tang, X. Yolo-Pest: An Insect Pest Object Detection Algorithm via CAC3 Module. Sensors 2023, 23, 3221.

35. Yang, S.; Xing, Z.; Wang, H.; Dong, X.; Gao, X.; Liu, Z.; Zhang, X.; Li, S.; Zhao, Y. Maize-YOLO: A New High-Precision and Real-Time Method for Maize Pest Detection. Insects 2023, 14, 278.

36. Wang, C.Y.; Bochkovskiy, A.; Liao, H.Y.M. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. arXiv 2022, arXiv:2207.02696.

37. Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934.

38. Jiang, W.; Xu, H.F.; Chen, Y.; Zhao, W.; Xu, W. An Improved Edge-Adaptive Image Scaling Algorithm. In Proceedings of the 2009 IEEE 8th International Conference on ASIC, Changsha, China, 20–23 October 2009; pp. 895–897.

39. Gao, M.Y.; Du, Y.J.; Yang, Y.X.; Zhang, J. Adaptive anchor box mechanism to improve the accuracy in the object detection system. Multimed. Tools Appl. 2019, 78, 27383–27402.

40. Park, H.; Yoo, Y.; Seo, G.; Han, D.; Yun, S.; Kwak, N. C3: Concentrated-Comprehensive Convolution and its application to semantic segmentation. arXiv 2018, arXiv:1812.04920.

41. He, K.M.; Zhang, X.Y.; Ren, S.Q.; Sun, J. Spatial Pyramid Pooling in Deep Convolutional Networks for Visual Recognition. Lect. Notes Comput. Sci. 2015, 37, 346–361.

42. Xu, B.; Wang, N.Y.; Chen, T.Q.; Li, M. Empirical Evaluation of Rectified Activations in Convolution Network. arXiv 2015, arXiv:1505.00853.

43. Elfwing, S.; Uchibe, E.; Doya, K. Sigmoid-weighted linear units for neural network function approximation in reinforcement learning. Neural Netw. 2018, 107, 3–11.

44. Okada, M.; Yanagimoto, H.; Hashimoto, K. Sentiment Classification with Gated CNN and Spatial Pyramid Pooling. In Proceedings of the 2018 7th International Congress on Advanced Applied Informatics (IIAI-AAI), Yonago, Japan, 8–13 July 2018; pp. 133–138.

45. Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature Pyramid Networks for Object Detection. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 936–944.

46. Zheng, Z.H.; Wang, P.; Liu, W.; Li, J.; Ye, R.; Ren, D. Distance-IoU Loss: Faster and Better Learning for Bounding Box Regression. In Proceedings of the 34th AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; pp. 12993–13000.

47. Howard, A.G.; Zhu, M.L.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications. arXiv 2017, arXiv:1704.04861.

48. Xiong, H.Y.; Lu, T.W.; Zhang, H.Z. Real-Time Efficient Facial Landmark Detection Algorithms. In Proceedings of the 2020 3rd International Conference on Artificial Intelligence and Pattern Recognition, Xiamen, China, 25–27 September 2020; pp. 191–195.

49. Sandler, M.; Howard, A.; Zhu, M.L.; Zhmoginov, A.; Chen, L.C. MobileNetV2: Inverted Residuals and Linear Bottlenecks. In Proceedings of the 31st IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 4510–4520.

50. Cheng, H.Y.; Ding, Y.C.; Yang, L. Real-Time Motion Detection Network Based on Single Linear Bottleneck and Pooling Compensation. Appl. Sci. 2022, 12, 8645.

51. Yae, S.; Ikehara, M. Inverted Residual Fourier Transformation for Lightweight Single Image Deblurring. IEEE Access 2023, 11, 29175–29182.

52. Li, C.L.; Peng, J.F.; Yuan, L.C.; Wang, G.; Liang, X.; Lin, L.; Chang, X. Block-wisely Supervised Neural Architecture Search with Knowledge Distillation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 14–19 June 2020; pp. 1986–1995.

53. Avenash, R.; Viswanath, P. Semantic Segmentation of Satellite Images using a Modified CNN with Hard-Swish Activation Function. In Proceedings of the 14th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISAPP), Prague, Czech Republic, 25–27 February 2019; pp. 413–420.

54. Hu, J.; Shen, L.; Albanie, S. Squeeze-and-Excitation Networks. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.

55. Rezatofighi, H.; Tsoi, N.; Gwak, J.; Sadeghian, A.; Reid, I.; Savarese, S. Generalized Intersection Over Union: A Metric and a Loss for Bounding Box Regression. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 658–666.

56. Maino, J.L.; Kearney, M.R. Ontogenetic and interspecific scaling of consumption in insects. Oikos 2015, 124, 1564–1570.

Figure 1.

Network structure of the Yolov5s model.

Figure 2.

MobileNet_Block module structure.

Figure 3.

Improved backbone network structure.

Figure 4.

PAnet structure.

Figure 5.

BiFPN structure.

Figure 6.

Neck network structure of the improved model.

Figure 7.

Intersection and union.

Figure 8.

ECMB-Yolov5 network structure.

Figure 9.

Representative sample images of each pest type. (a) Rice Leaf Roller. (b) Mole Cricket. (c) Red Spider. (d) Wheat Sawfly. (e) Flea Beetle. (f) Coccinella septempunctata. (g) Ampelophaga. (h) Lycorma delicatula.

Figure 10.

Number of labels for each category in the dataset.

Figure 11.

mAP@0.5 of the three models.

Figure 12.

Convergence curves of the training metrics. (a) Precision. (b) Recall. (c) mAP@0.5. (d) mAP@0.5:0.95.

Figure 13.

Comparison of per-category mAP in the ablation experiments.

Figure 14.

Jetson Nano device.

Figure 15.

ECMB-Yolov5 model detection results. (a) Rice Leaf Roller. (b) Mole Cricket. (c) Red Spider. (d) Wheat Sawfly. (e) Flea Beetle. (f) Coccinella septempunctata. (g) Ampelophaga. (h) Lycorma delicatula.

Figure 16.

Yolov5s model detection results. (a) Rice Leaf Roller. (b) Mole Cricket. (c) Red Spider. (d) Wheat Sawfly. (e) Flea Beetle. (f) Coccinella septempunctata. (g) Ampelophaga. (h) Lycorma delicatula.

Table 1.

Backbone network structure and parameter configuration.

| Serial Number | n | Params | Module | Configuration | Output Size (C × H × W) |
|---|---|---|---|---|---|
| 0 | 1 | 232 | Conv_BN_H-Swish | [3, 8, 2] | 8 × 320 × 320 |
| 1 | 1 | 628 | MobileNet_Block | [8, 8, 16, 3, 2, 1, 0] | 8 × 160 × 160 |
| 2 | 1 | 2,696 | MobileNet_Block | [8, 16, 72, 3, 2, 0, 0] | 16 × 80 × 80 |
| 3 | 1 | 3,992 | MobileNet_Block | [16, 16, 88, 3, 1, 0, 0] | 16 × 80 × 80 |
| 4 | 1 | 3 | ECA_Layer | [16, 3] | 16 × 80 × 80 |
| 5 | 1 | 11,400 | MobileNet_Block | [16, 24, 96, 5, 2, 1, 1] | 24 × 40 × 40 |
| 6 | 1 | 47,628 | MobileNet_Block | [24, 24, 240, 5, 1, 1, 1] | 24 × 40 × 40 |
| 7 | 1 | 47,628 | MobileNet_Block | [24, 24, 240, 5, 1, 1, 1] | 24 × 40 × 40 |
| 8 | 1 | 16,638 | MobileNet_Block | [24, 24, 120, 5, 1, 1, 1] | 24 × 40 × 40 |
| 9 | 1 | 21,684 | MobileNet_Block | [24, 24, 144, 5, 1, 1, 1] | 24 × 40 × 40 |
| 10 | 1 | 71,016 | MobileNet_Block | [24, 48, 288, 5, 2, 1, 1] | 48 × 20 × 20 |
| 11 | 1 | 238,704 | MobileNet_Block | [48, 48, 576, 5, 1, 1, 1] | 48 × 20 × 20 |
| 12 | 1 | 238,704 | MobileNet_Block | [48, 48, 576, 5, 1, 1, 1] | 48 × 20 × 20 |
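
The Params column of Table 1 can be cross-checked against the Configuration column. The sketch below assumes a reading of the configuration that the table does not spell out: [c_in, c_out, hidden, kernel, stride, use_se, use_hs]. It also assumes that each MobileNet_Block is an inverted residual built from these parts: a 1×1 expansion conv, a k×k depthwise conv, an optional squeeze-and-excitation (SE) unit with reduction 4 and biased layers, and a 1×1 projection conv. Each conv is bias-free and followed by BatchNorm with two learnable parameters per channel. Under these assumptions, the sketch reproduces every value in the Params column. This is consistent with that reading but does not confirm the authors' implementation. All helper names are hypothetical.

```python
# Sketch: recompute the Params column of Table 1 from each row's Configuration.
# ASSUMPTION: MobileNet_Block config = [c_in, c_out, hidden, kernel, stride, use_se, use_hs].

def bn(c):
    # BatchNorm learnable parameters: one weight and one bias per channel
    return 2 * c

def conv_bn_hswish(c_in, c_out, stride, k=3):
    # k x k convolution without bias + BatchNorm; stride and H-Swish add no parameters
    return k * k * c_in * c_out + bn(c_out)

def squeeze_excite(c, reduction=4):
    # Two biased 1x1 layers: c -> c // reduction -> c
    r = c // reduction
    return (c * r + r) + (r * c + c)

def mobilenet_block(c_in, c_out, hidden, k, stride, use_se, use_hs):
    n = 0
    if hidden != c_in:  # assumed convention: no expansion conv when hidden == c_in
        n += c_in * hidden + bn(hidden)  # 1x1 expansion conv + BN
    n += k * k * hidden + bn(hidden)     # depthwise k x k conv + BN
    if use_se:
        n += squeeze_excite(hidden)      # squeeze-and-excitation
    n += hidden * c_out + bn(c_out)      # 1x1 linear projection conv + BN
    return n

def eca_layer(channels, k):
    # A single 1-D convolution kernel of size k shared by all channels, no bias
    return k

BACKBONE = [
    (conv_bn_hswish, (3, 8, 2)),
    (mobilenet_block, (8, 8, 16, 3, 2, 1, 0)),
    (mobilenet_block, (8, 16, 72, 3, 2, 0, 0)),
    (mobilenet_block, (16, 16, 88, 3, 1, 0, 0)),
    (eca_layer, (16, 3)),
    (mobilenet_block, (16, 24, 96, 5, 2, 1, 1)),
    (mobilenet_block, (24, 24, 240, 5, 1, 1, 1)),
    (mobilenet_block, (24, 24, 240, 5, 1, 1, 1)),
    (mobilenet_block, (24, 24, 120, 5, 1, 1, 1)),
    (mobilenet_block, (24, 24, 144, 5, 1, 1, 1)),
    (mobilenet_block, (24, 48, 288, 5, 2, 1, 1)),
    (mobilenet_block, (48, 48, 576, 5, 1, 1, 1)),
    (mobilenet_block, (48, 48, 576, 5, 1, 1, 1)),
]

counts = [fn(*cfg) for fn, cfg in BACKBONE]
print(counts)
print("backbone total:", sum(counts))
```

Under this reading, the SE units dominate the deeper rows. In rows 11 and 12, the SE unit accounts for 166,608 of the 238,704 parameters.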

Table 2.

Experimental data results of different backbone networks.

| Models | Parameters | Memory (MB) | P (%) | R (%) | mAP@0.5 (%) | mAP@0.5:0.95 (%) |
|---|---|---|---|---|---|---|
| Yolov5s-ShuffleNetV2 | 3,807,605 | 8.0 | 88.2 | 83.5 | 87.5 | 56.9 |
| Yolov5s-GhostNet | 3,694,605 | 7.8 | 91.6 | 85.4 | 89.9 | 63.9 |
| Yolov5s-MobileNetV3 | 1,384,203 | 3.1 | 93.9 | 94.5 | 98.1 | 68.2 |

Table 3.

Ablation experiment results for each model.

| Models | Parameters | Memory (MB) | P (%) | R (%) | mAP@0.5 (%) | mAP@0.5:0.95 (%) |
|---|---|---|---|---|---|---|
| Yolov5s | 7,031,701 | 14.4 | 99.4 | 99.9 | 99.4 | 89.6 |
| Yolov5s-BiFPN | 7,097,246 | 14.6 | 99.5 | 99.9 | 99.5 | 89.9 |
| Yolov5s-MobileNetV3 | 1,384,203 | 3.1 | 93.9 | 94.5 | 98.1 | 68.2 |
| Yolov5s-MobileNetV3-BiFPN | 1,387,284 | 3.1 | 94.6 | 94.8 | 98.2 | 68.5 |
| ECMB-Yolov5 | 1,387,287 | 3.1 | 94.6 | 95.7 | 98.6 | 69.6 |
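
The parameter percentages quoted in the Discussion can be rechecked against Table 3 with a few lines of arithmetic. The sketch below only recomputes them from the published parameter counts; the dictionary keys are the model names from the table, and nothing here comes from the authors' code.

```python
# Parameter counts copied from Table 3
PARAMS = {
    "Yolov5s": 7_031_701,
    "Yolov5s-BiFPN": 7_097_246,
    "Yolov5s-MobileNetV3": 1_384_203,
    "Yolov5s-MobileNetV3-BiFPN": 1_387_284,
    "ECMB-Yolov5": 1_387_287,
}

def pct(part, whole):
    # Express part as a percentage of whole
    return 100.0 * part / whole

share_of_baseline = pct(PARAMS["ECMB-Yolov5"], PARAMS["Yolov5s"])
bifpn_overhead = pct(PARAMS["Yolov5s-BiFPN"] - PARAMS["Yolov5s"], PARAMS["Yolov5s"])
# SIoU is a loss function and adds no parameters, so this difference is the ECA layer
eca_params = PARAMS["ECMB-Yolov5"] - PARAMS["Yolov5s-MobileNetV3-BiFPN"]

print(f"ECMB-Yolov5 parameters relative to Yolov5s: {share_of_baseline:.1f}%")  # 19.7%
print(f"Extra parameters from BiFPN on Yolov5s: {bifpn_overhead:.1f}%")          # 0.9%
print(f"Parameters added by ECA: {eca_params}")                                  # 3
```

The 3-parameter difference matches the ECA_Layer row of Table 1.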

Table 4.

Experimental results for comparison with common models.

| Models | Parameters (× 10⁶) | Memory (MB) | P (%) | R (%) | mAP@0.5 (%) |
|---|---|---|---|---|---|
| Faster R-CNN | 28.32 | 108.4 | 55.2 | 96.1 | 91.5 |
| Yolov3 | 61.53 | 235.2 | 96.7 | 83.6 | 91.3 |
| Yolov4 | 63.36 | 244.5 | 88.8 | 85.3 | 89.4 |
| Yolov4-tiny | 5.92 | 22.5 | 92.3 | 84.1 | 90.2 |
| Yolov5s | 7.03 | 14.4 | 99.6 | 99.9 | 99.5 |
| Yolov7 | 36.5 | 74.9 | 95.2 | 95.1 | 97.7 |
| ECMB-Yolov5 | 1.39 | 3.1 | 94.6 | 95.7 | 98.6 |

Table 5.

Jetson Nano hardware configuration.

| Component | Specifications |
|---|---|
| CPU | Quad-Core ARM® Cortex®-A57 MPCore Processor |
| GPU | NVIDIA Maxwell™ architecture with 128 NVIDIA CUDA® cores |
| Memory | 4 GB 64-bit LPDDR4 |

Table 6.

Comparison of experimental results on Jetson Nano.

| Models | Preprocess (ms) | Inference (ms) | NMS (ms) | GFLOPs | FPS |
|---|---|---|---|---|---|
| ECMB-Yolov5 | 2.3 | 55.2 | 8.5 | 2.3 | 15.2 |
| Yolov5s | 2.4 | 94.3 | 8.9 | 15.8 | 9.5 |
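
The FPS column of Table 6 appears to follow from the three per-image timings. The sketch below assumes, without confirmation from the text, that FPS is the reciprocal of the summed preprocessing, inference, and NMS latency. Under that assumption it reproduces both reported values.

```python
# Per-image latencies in milliseconds copied from Table 6
TIMINGS_MS = {
    "ECMB-Yolov5": (2.3, 55.2, 8.5),
    "Yolov5s": (2.4, 94.3, 8.9),
}

def fps(preprocess_ms, inference_ms, nms_ms):
    # ASSUMPTION: FPS is the reciprocal of the summed per-image latency
    return 1000.0 / (preprocess_ms + inference_ms + nms_ms)

for name, stages in TIMINGS_MS.items():
    print(f"{name}: {sum(stages):.1f} ms per image -> {fps(*stages):.1f} FPS")
# ECMB-Yolov5: 66.0 ms per image -> 15.2 FPS
# Yolov5s: 105.6 ms per image -> 9.5 FPS
```

Preprocessing and NMS cost roughly the same for both models, so the speed-up comes almost entirely from the shorter inference time.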

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).