Utilisation of Deep Learning with Multimodal Data Fusion for Determination of Pineapple Quality Using Thermal Imaging

Abstract

1. Introduction

2. Materials and Methods

2.1. Experimental Design

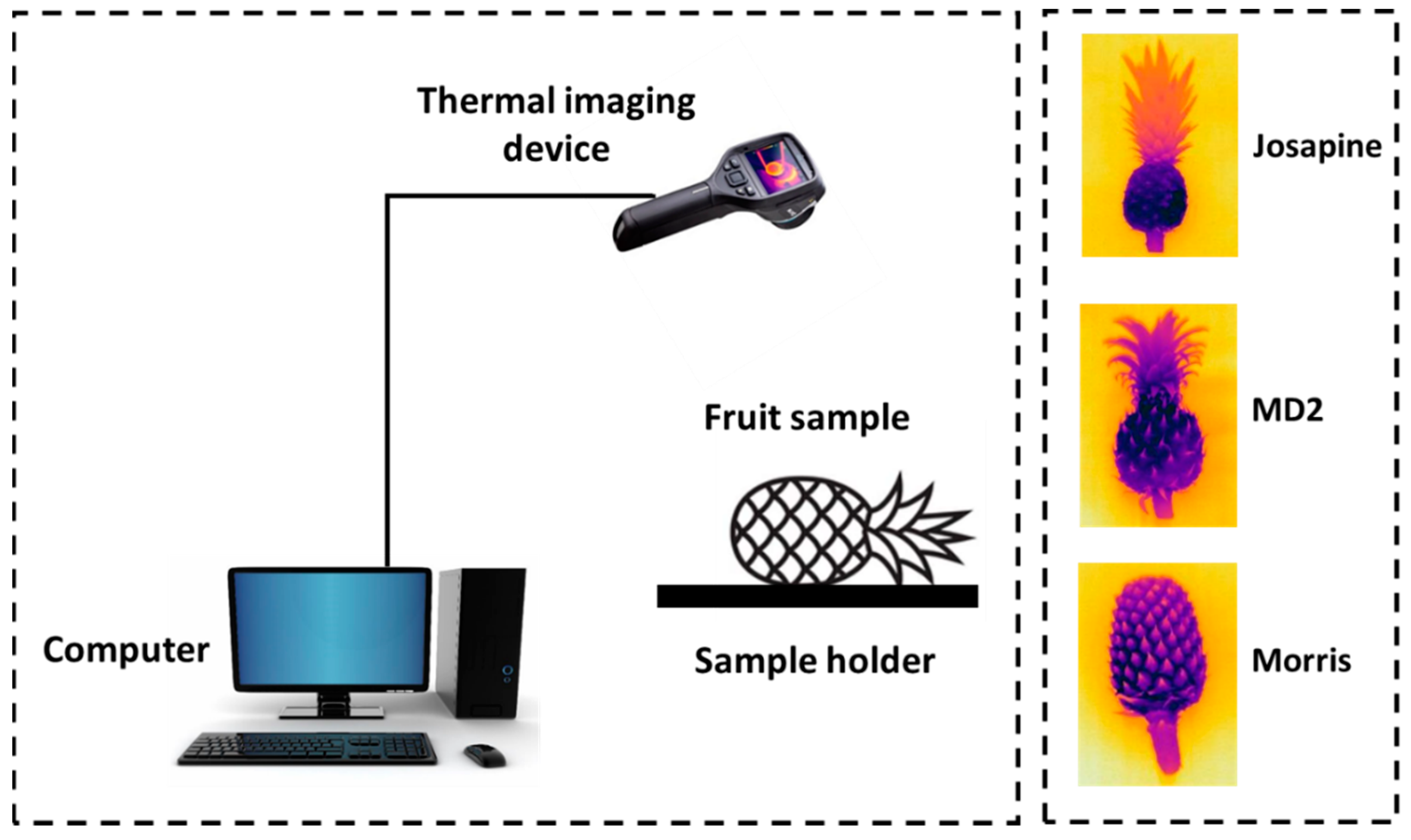

2.2. Data Acquisition

2.3. Fruit Quality Determination

2.4. Multimodal Data Fusion

2.5. Data Analysis

3. Results and Discussion

3.1. Measurement of Fruit Quality

3.2. Evaluation of Trained Networks

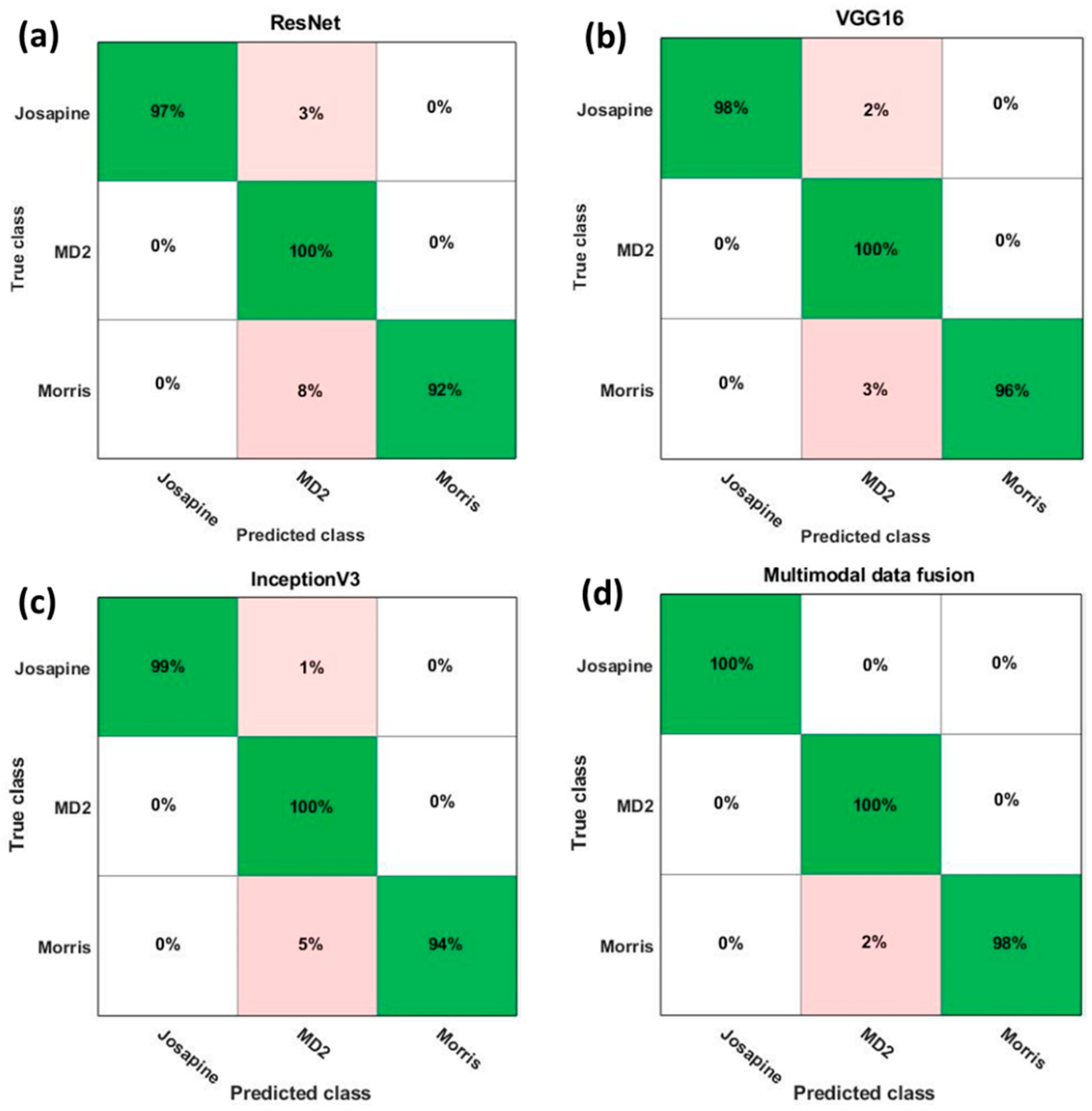

3.3. Modal Comparison of Different Deep Learning Models

3.4. Performance of Multimodal Data Fusion

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Network Architecture | Number of Parameters (Million) | Depth |
|---|---|---|
| ResNet | 11.5 | 18 |
| VGG16 | 138.4 | 16 |
| InceptionV3 | 23.9 | 48 |
| Multimodal data fusion | 173.8 | 82 |
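
The tabulated figures for the fused model match the sum of the three single-model rows, for both parameter count and depth. The sketch below only checks that arithmetic from the values in the table. The source does not state how the fused figures were derived, and the names `BACKBONES`, `FUSION_ROW`, and `summed_backbones` are illustrative.

```python
# Values copied from the architecture table; all names here are illustrative.
BACKBONES = {
    "ResNet": {"params_million": 11.5, "depth": 18},
    "VGG16": {"params_million": 138.4, "depth": 16},
    "InceptionV3": {"params_million": 23.9, "depth": 48},
}
FUSION_ROW = {"params_million": 173.8, "depth": 82}


def summed_backbones(backbones):
    """Sum parameter counts (rounded to one decimal place) and depths."""
    total_params = round(sum(b["params_million"] for b in backbones.values()), 1)
    total_depth = sum(b["depth"] for b in backbones.values())
    return {"params_million": total_params, "depth": total_depth}


totals = summed_backbones(BACKBONES)
print(totals, totals == FUSION_ROW)
```

If the fused network simply runs the three backbones in parallel and combines their features, a summed parameter count is what one would expect, although any additional fusion layers would add to it.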

| Variety | Dataset | SSC (%) | Firmness (N) | Moisture Content (%) |
|---|---|---|---|---|
| Josapine | Training set | 12.30 ± 1.16 | 1.01 ± 0.06 | 90.76 ± 0.68 |
| Josapine | Testing set | 12.50 ± 0.95 | 0.63 ± 0.52 | 87.27 ± 0.39 |
| MD2 | Training set | 13.50 ± 1.48 | 1.43 ± 0.58 | 85.72 ± 0.84 |
| MD2 | Testing set | 12.10 ± 0.26 | 1.01 ± 0.05 | 88.78 ± 0.89 |
| Morris | Training set | 8.20 ± 0.54 | 1.47 ± 0.93 | 91.75 ± 0.01 |
| Morris | Testing set | 9.70 ± 0.42 | 2.47 ± 0.15 | 70.72 ± 1.94 |

| Hyperparameters | Values |
|---|---|
| Classes | 3 |
| Batch size | 25 |
| Learning rate | 0.0001 |
| Epochs | 100 |
| Loss function | Cross-entropy |
| Momentum | 0.9 |
| Weight decay | 0.0005 |
| Optimizer | Stochastic gradient descent with momentum |
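
To illustrate how the learning rate, momentum, and weight decay in the table interact, here is a minimal pure-Python sketch of a single stochastic-gradient-descent-with-momentum update. The update convention (weight decay added to the gradient before the momentum step) is an assumption, since the source does not specify the formulation or the framework used. `TrainConfig` and `sgd_momentum_step` are hypothetical names, and the weights and gradients are toy values.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainConfig:
    # Values taken from the hyperparameter table.
    num_classes: int = 3
    batch_size: int = 25
    learning_rate: float = 1e-4
    epochs: int = 100
    momentum: float = 0.9
    weight_decay: float = 5e-4


def sgd_momentum_step(weights, grads, velocity, cfg):
    """One SGD-with-momentum update with L2 weight decay.

    Assumed convention (not stated in the source):
        g <- grad + weight_decay * w
        v <- momentum * v + g
        w <- w - learning_rate * v
    """
    new_weights, new_velocity = [], []
    for w, g, v in zip(weights, grads, velocity):
        g = g + cfg.weight_decay * w      # L2 penalty folded into the gradient
        v = cfg.momentum * v + g          # running velocity
        new_velocity.append(v)
        new_weights.append(w - cfg.learning_rate * v)
    return new_weights, new_velocity


cfg = TrainConfig()
w, v = [1.0, -2.0], [0.0, 0.0]            # toy weights and zero initial velocity
w, v = sgd_momentum_step(w, [0.5, -0.5], v, cfg)
print(w, v)
```

With a momentum of 0.9, most of the previous step's velocity carries over into the next update, which is why the small learning rate of 0.0001 can still make steady progress over 100 epochs.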

| Deep Learning Models | Precision | Recall | F1-Score | Accuracy |
|---|---|---|---|---|
| ResNet | 0.8932 | 0.8812 | 0.9205 | 0.8385 |
| VGG16 | 0.9110 | 0.8555 | 0.9299 | 0.8999 |
| InceptionV3 | 0.9049 | 0.8963 | 0.9258 | 0.9256 |
| Multimodal data fusion | 0.9495 | 0.9580 | 0.9473 | 0.9687 |
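
For reference, the four metrics in the table can be computed from a multi-class confusion matrix as sketched below. The source does not state which averaging scheme was used, so macro-averaging over the three classes is an assumption. The function name `classification_metrics` is hypothetical and the confusion matrix is made-up example data, not results from the study.

```python
def classification_metrics(confusion):
    """Macro-averaged precision, recall, F1 and overall accuracy.

    confusion[i][j] is the number of samples of true class i
    that were predicted as class j.
    """
    n = len(confusion)
    total = sum(sum(row) for row in confusion)
    correct = sum(confusion[k][k] for k in range(n))
    precisions, recalls, f1s = [], [], []
    for k in range(n):
        tp = confusion[k][k]
        predicted_k = sum(confusion[i][k] for i in range(n))  # column sum
        actual_k = sum(confusion[k])                          # row sum
        p = tp / predicted_k if predicted_k else 0.0
        r = tp / actual_k if actual_k else 0.0
        f1 = 2 * p * r / (p + r) if (p + r) else 0.0          # harmonic mean
        precisions.append(p)
        recalls.append(r)
        f1s.append(f1)
    return {
        "precision": sum(precisions) / n,
        "recall": sum(recalls) / n,
        "f1": sum(f1s) / n,
        "accuracy": correct / total if total else 0.0,
    }


# Hypothetical 3-class confusion matrix (rows: true class, columns: predicted).
example = [
    [10, 0, 0],
    [2, 8, 0],
    [0, 2, 8],
]
metrics = classification_metrics(example)
print({name: round(value, 4) for name, value in metrics.items()})
```

Under this definition each per-class F1 is the harmonic mean of that class's precision and recall, so it always lies between the two.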


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Mohd Ali, M.; Hashim, N.; Abd Aziz, S.; Lasekan, O. Utilisation of Deep Learning with Multimodal Data Fusion for Determination of Pineapple Quality Using Thermal Imaging. Agronomy 2023, 13, 401. https://doi.org/10.3390/agronomy13020401
