In-Season Prediction of Corn Grain Yield through PlanetScope and Sentinel-2 Images

Abstract

1. Introduction

2. Materials and Methods

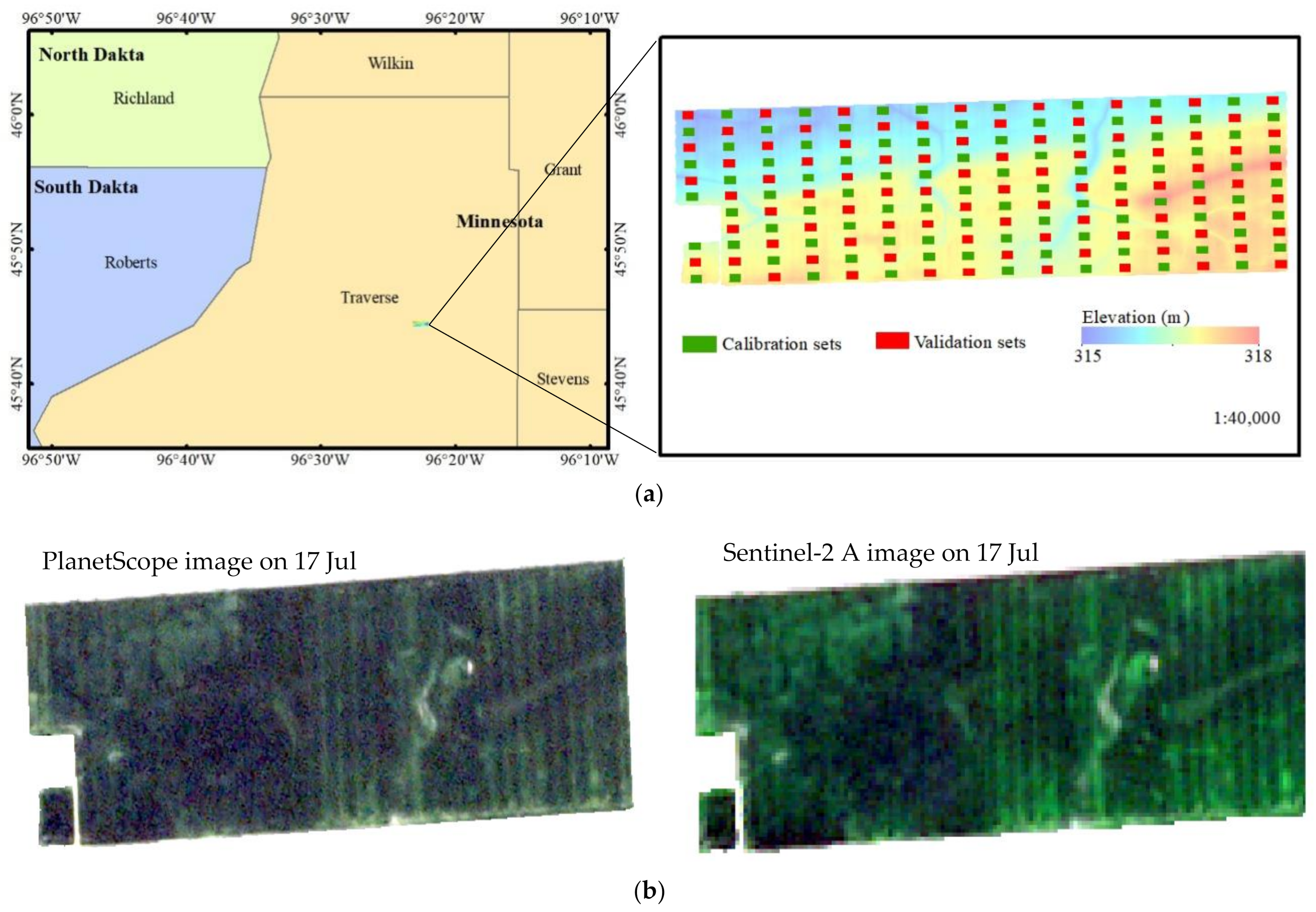

2.1. Field Site and Grain Yield Acquisition

2.2. Satellite Data Collection and Preprocessing

2.2.1. PlanetScope Image Processing

2.2.2. Sentinel-2A Image Processing

2.3. Vegetation Indices

2.4. Data Analysis

2.5. Calibration and Validation Datasets

3. Results

3.1. Description of the Yield Data

3.2. Correlation between Yield and Environment Variables

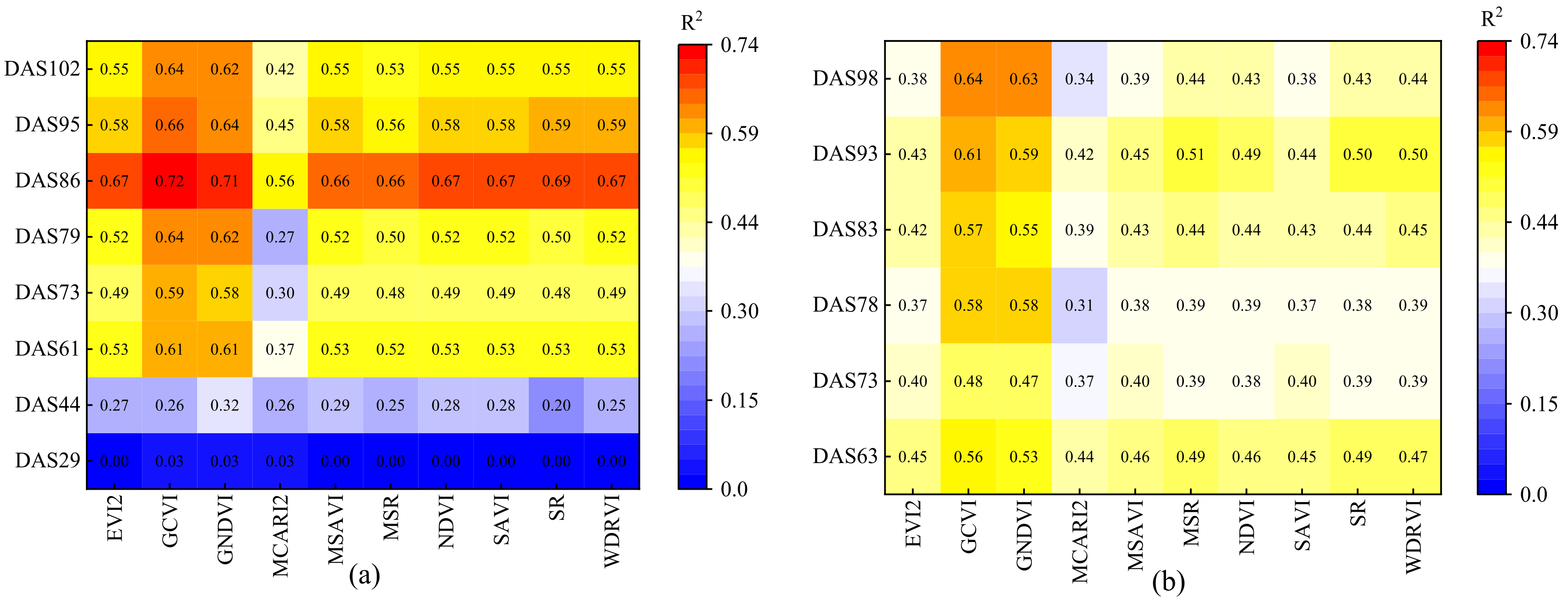

3.3. Correlation between Yield and Vegetation Index

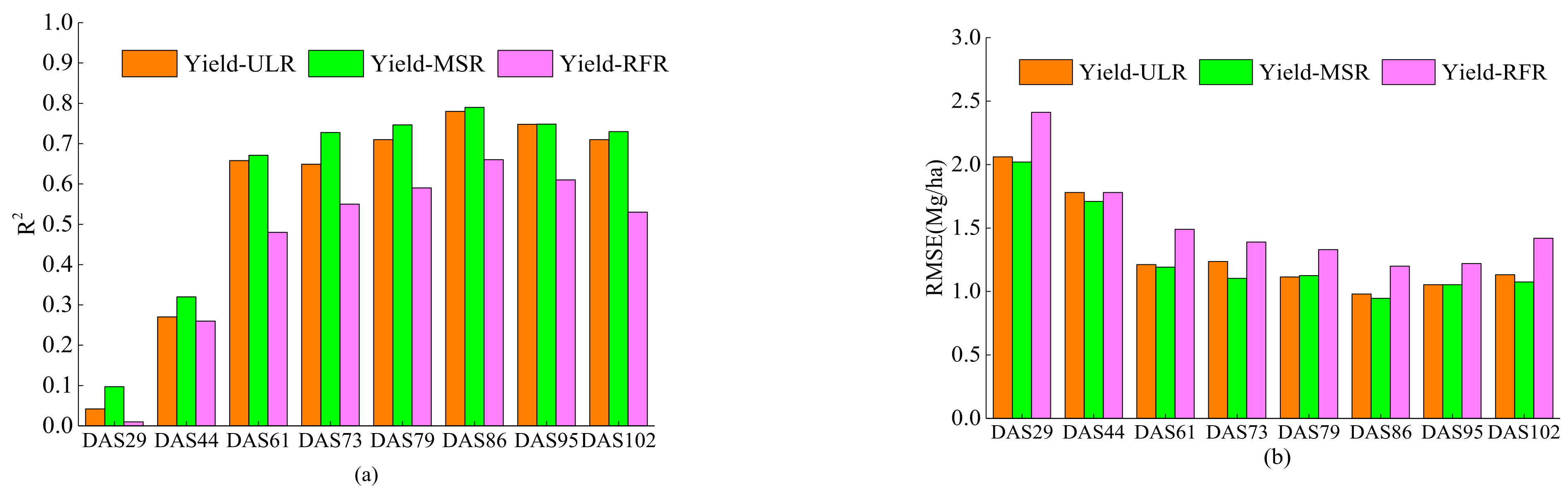

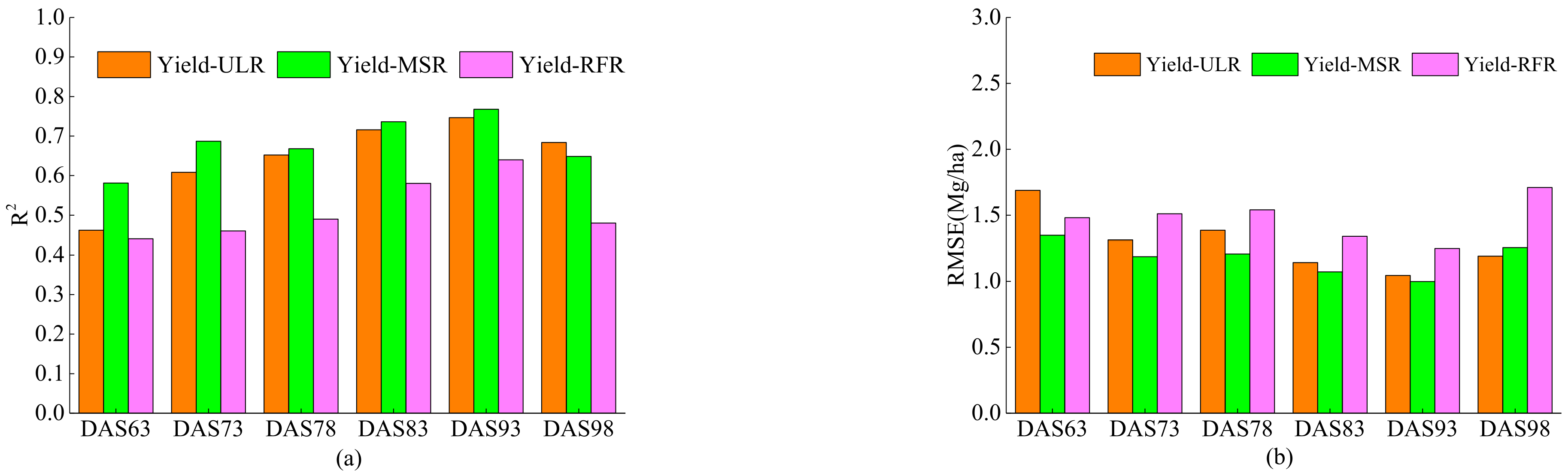

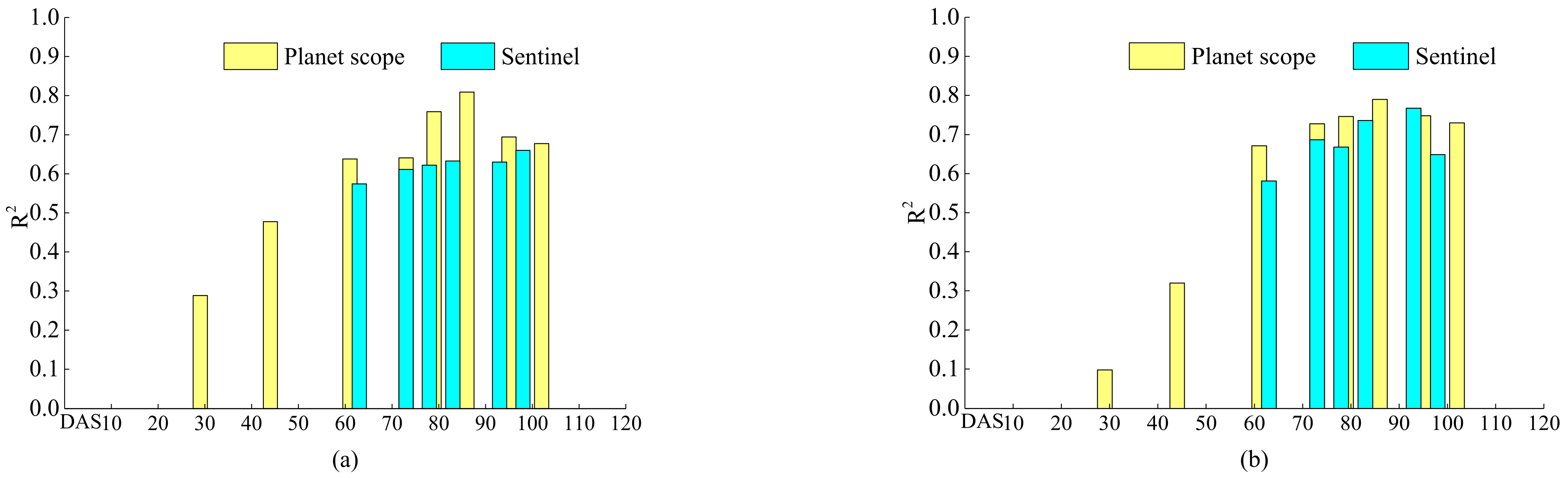

3.4. Yield Estimation and Validation

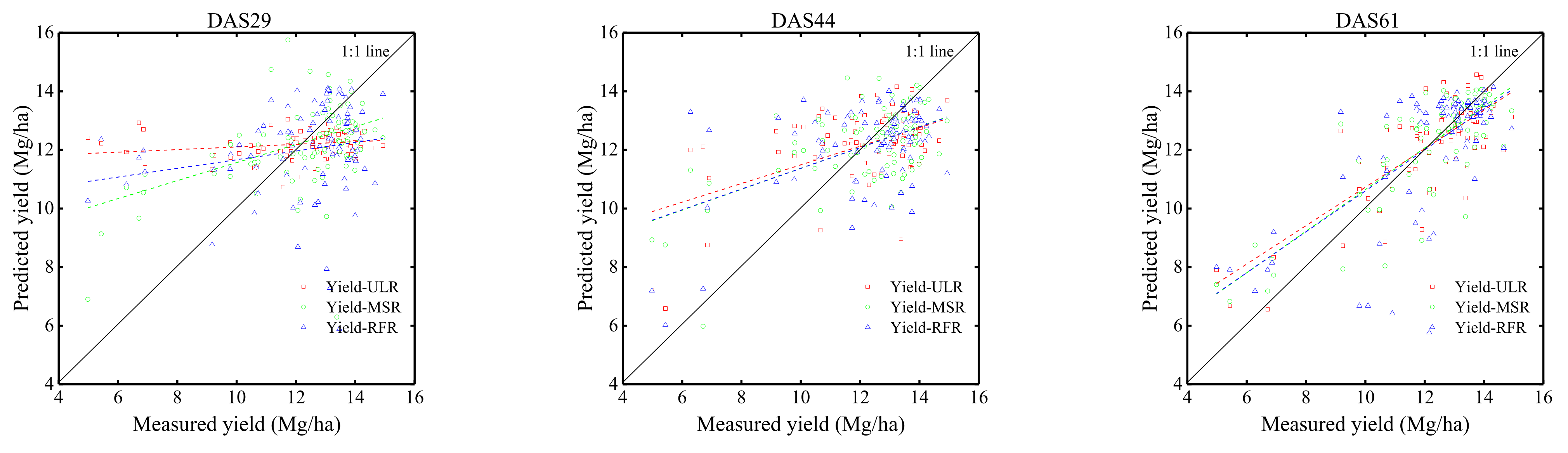

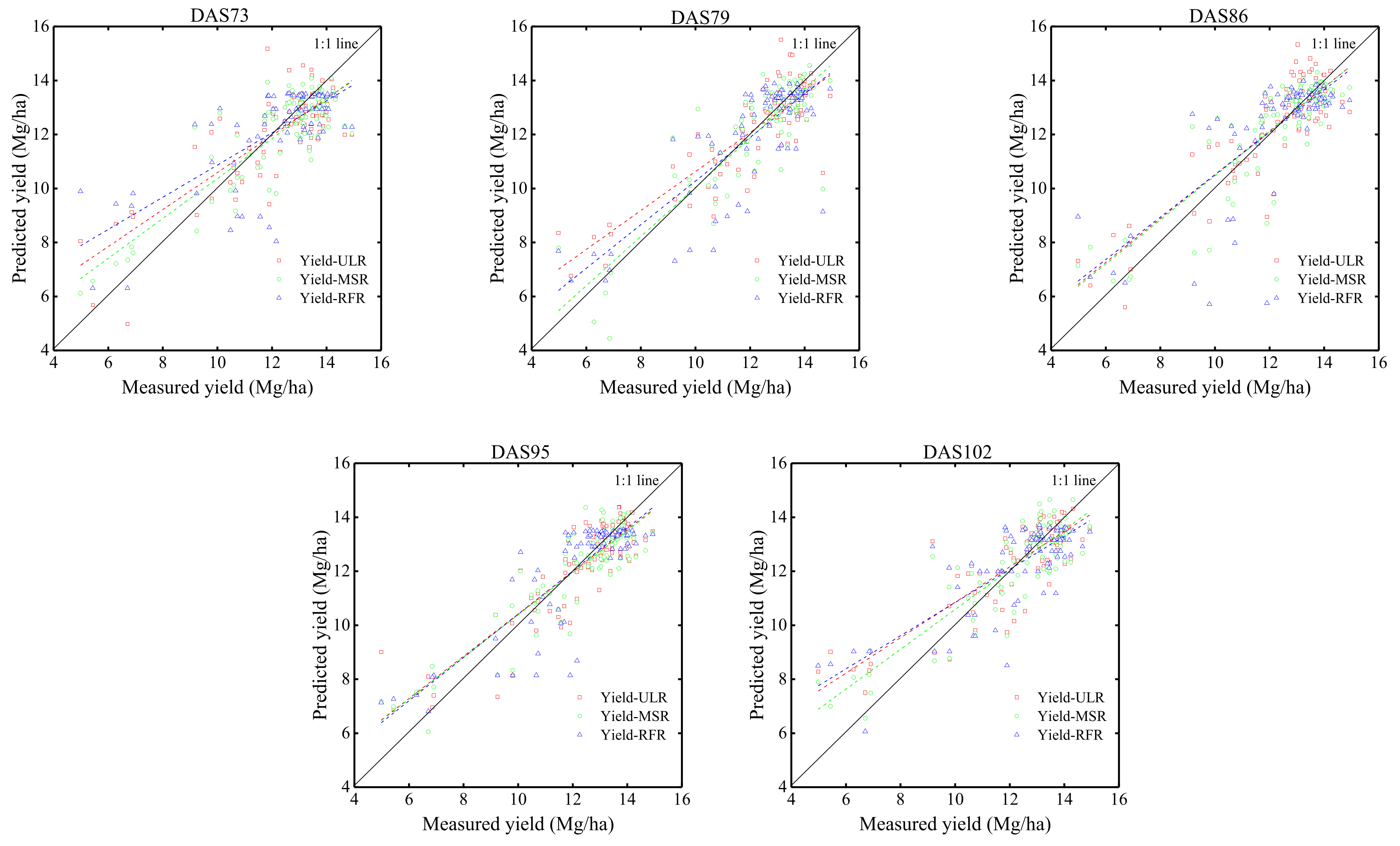

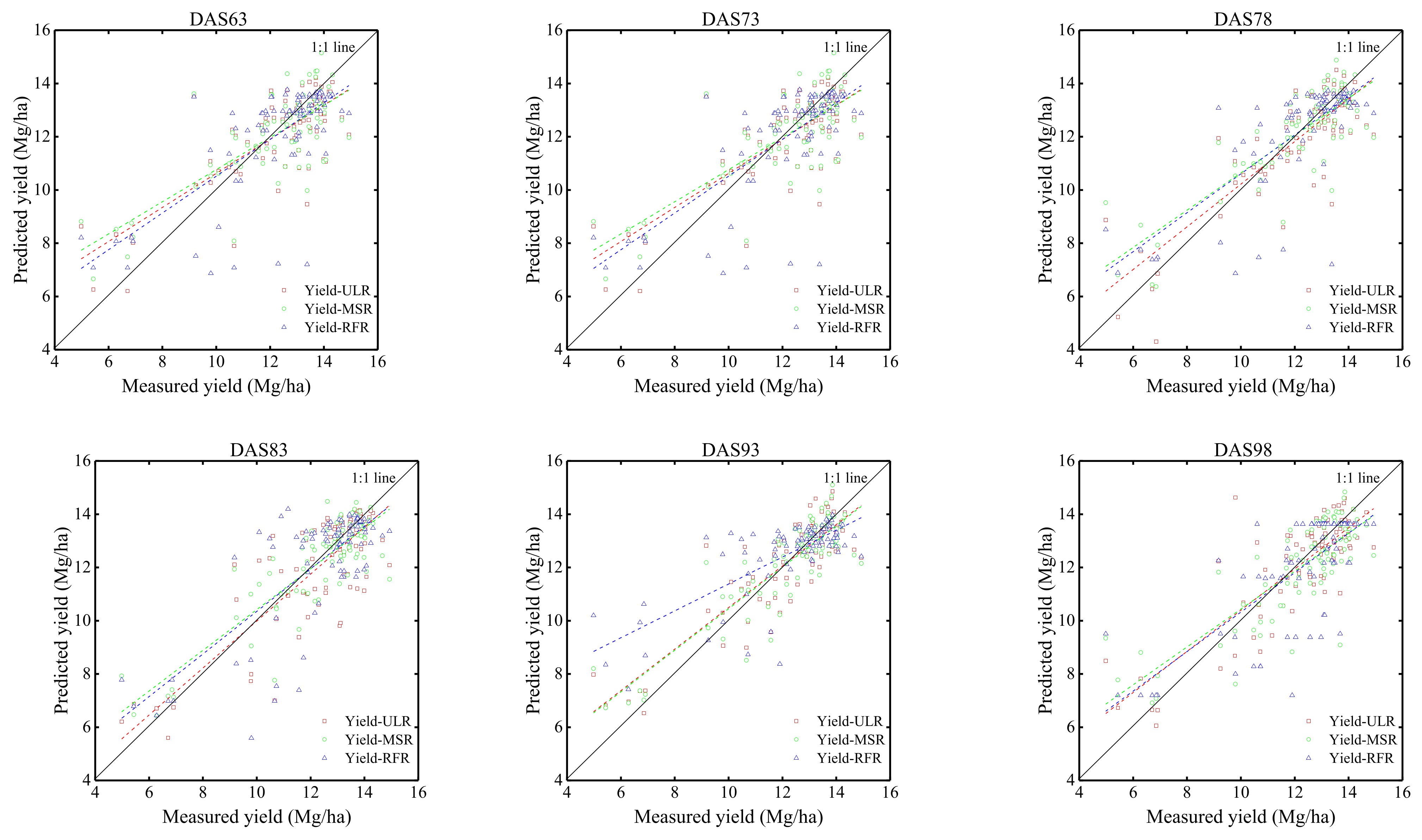

3.4.1. Yield-ULR Model

3.4.2. Yield-SMLR Model

3.4.3. Yield-RFR Model

3.4.4. Model Comparison and Validation

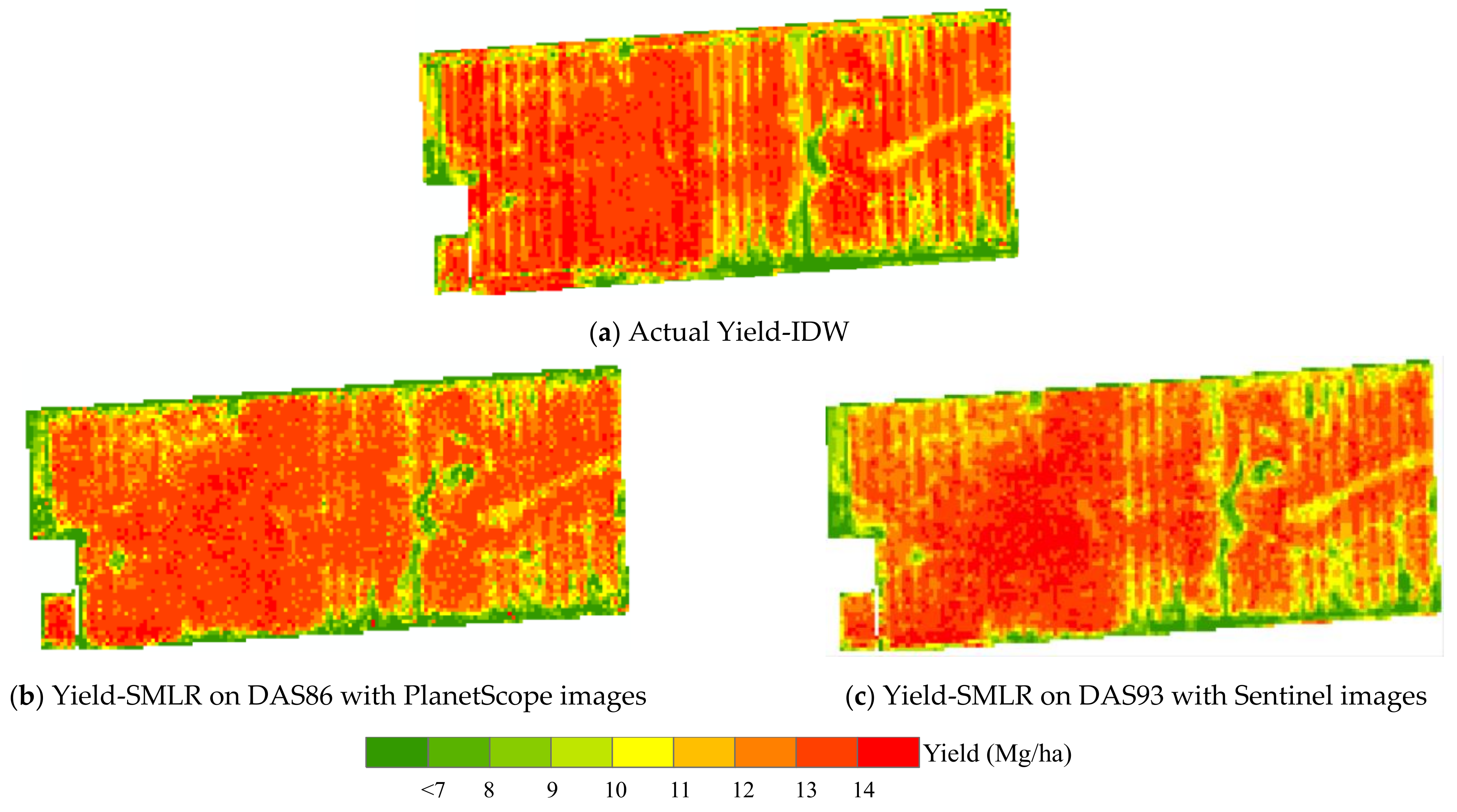

3.4.5. Corn Yield Mapping on Key Growth Stages with SMLR Method

4. Discussion

4.1. Vegetation Index for Corn Yield Estimation

4.2. Model Selection for Corn Yield Estimation

4.3. Remote Sensing Data Selection for Yield Estimation

4.4. Timing for Corn Yield Estimation by Remotely Sensed Data

4.5. Research Challenge

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Medina, H.; Tian, D.; Abebe, A. On optimizing a MODIS-based framework for in-season corn yield forecast. Int. J. Appl. Earth Obs. 2021, 95, 102258.
2. Tian, H.; Wang, P.; Tansey, K.; Han, D.; Zhang, J.; Zhang, S.; Li, H. A deep learning framework under attention mechanism for wheat yield estimation using remotely sensed indices in the Guanzhong Plain, PR China. Int. J. Appl. Earth Obs. 2021, 102, 102375.
3. Zhao, Y.; Potgieter, A.B.; Zhang, M.; Wu, B.; Hammer, G.L. Predicting wheat yield at the field scale by combining high-resolution Sentinel-2 satellite imagery and crop modelling. Remote Sens. 2020, 12, 1024.
4. Chahbi, A.; Zribi, M.; Lili-Chabaane, Z.; Duchemin, B.; Shabou, M.; Mougenot, B.; Boulet, G. Estimation of the dynamics and yields of cereals in a semi-arid area using remote sensing and the SAFY growth model. Int. J. Remote Sens. 2014, 35, 1004–1028.
5. Wu, S.; Yang, P.; Ren, J.; Chen, Z.; Li, H. Regional winter wheat yield estimation based on the WOFOST model and a novel VW-4DEnSRF assimilation algorithm. Remote Sens. Environ. 2021, 255, 112276.
6. Dhakar, R.; Sehgal, V.K.; Chakraborty, D.; Sahoo, R.N.; Mukherjee, J.; Ines, A.V.M.; Shirasth, P.B.; Roy, S.B. Field scale spatial wheat yield forecasting system under limited field data availability by integrating crop simulation model with weather forecast and satellite remote sensing. Agric. Syst. 2022, 195, 103299.
7. Hunt, M.L.; Blackburn, G.A.; Carrasco, L.; Redhead, J.W.; Rowland, C.S. High resolution wheat yield mapping using Sentinel-2. Remote Sens. Environ. 2019, 233, 111410.
8. Johnson, D.M. An assessment of pre- and within-season remotely sensed variables for forecasting corn and soybean yields in the United States. Remote Sens. Environ. 2014, 141, 116–128.
9. Liaqat, M.U.; Cheema, M.J.M.; Huang, W.; Mahmood, T.; Zaman, M.; Khan, M.M. Evaluation of MODIS and Landsat multiband vegetation indices used for wheat yield estimation in irrigated Indus Basin. Comput. Electron. Agric. 2017, 138, 39–47.
10. Wang, J.; Dai, Q.; Shang, J.; Jin, X.; Sun, Q.; Zhou, G.; Dai, Q. Field-scale rice yield estimation using Sentinel-1A synthetic aperture radar (SAR) data in coastal saline region of Jiangsu Province, China. Remote Sens. 2019, 11, 2274.
11. Segarra, J.; González-Torralba, J.; Aranjuelo, Í.; Araus, J.L.; Kefauver, S.C. Estimating wheat grain yield using Sentinel-2 imagery and exploring topographic features and rainfall effects on wheat performance in Navarre, Spain. Remote Sens. 2020, 12, 2278.
12. Azadbakht, M.; Ashourloo, D.; Aghighi, H.; Homayouni, S.; Shahrabi, H.S.; Matkan, A.; Radiom, S. Alfalfa yield estimation based on time series of Landsat 8 and PROBA-V images: An investigation of machine learning techniques and spectral-temporal features. Remote Sens. Appl. Soc. Environ. 2022, 25, 100657.
13. Bendig, J.; Bolten, A.; Bennertz, S.; Broscheit, J.; Eichfuss, S.; Bareth, G. Estimating biomass of barley using crop surface models (CSMs) derived from UAV-based RGB imaging. Remote Sens. 2014, 6, 10395–10412.
14. Johnson, M.D.; Hsieh, W.W.; Cannon, A.J.; Davidson, A.; Bédard, F. Crop yield forecasting on the Canadian Prairies by remotely sensed vegetation indices and machine learning methods. Agric. For. Meteorol. 2016, 218, 74–84.
15. Zhang, M.; Zhou, J.; Sudduth, K.A.; Kitchen, N.R. Estimation of maize yield and effects of variable-rate nitrogen application using UAV-based RGB imagery. Biosyst. Eng. 2020, 189, 24–35.
16. Yang, W.; Nigon, T.; Hao, Z.; Paiao, G.D.; Fernández, F.G.; Mulla, D.; Yang, C. Estimation of corn yield based on hyperspectral imagery and convolutional neural network. Comput. Electron. Agric. 2021, 184, 106092.
17. Wan, L.; Cen, H.; Zhu, J.; Zhang, J.; Zhu, Y.; Sun, D.; Du, X.; Zhai, L.; Weng, H.; Li, Y.; et al. Grain yield prediction of rice using multi-temporal UAV-based RGB and multispectral images and model transfer–a case study of small farmlands in the South of China. Agric. For. Meteorol. 2020, 291, 108096.
18. Franch, B.; Bautista, A.S.; Fita, D.; Rubio, C.; Tarrazó-Serrano, D.; Sánchez, A.; Skakun, S.; Vermote, E.; Becker-Reshef, I.; Uris, A. Within-Field Rice Yield Estimation Based on Sentinel-2 Satellite Data. Remote Sens. 2021, 13, 4095.
19. Fortin, J.G.; Anctil, F.; Parent, L.É.; Bolinder, M.A. Site-specific early season potato yield forecast by neural network in Eastern Canada. Precis. Agric. 2011, 12, 905–923.
20. Feng, A.; Zhou, J.; Vories, E.D.; Sudduth, K.A.; Zhang, M. Yield estimation in cotton using UAV-based multi-sensor imagery. Biosyst. Eng. 2020, 193, 101–114.
21. Ashapure, A.; Jung, J.; Chang, A.; Oh, S.; Yeom, J.; Maeda, M.; Maeda, A.; Dube, N.; Landivar, J.; Hague, S.; et al. Developing a machine learning based cotton yield estimation framework using multi-temporal UAS data. ISPRS J. Photogramm. Remote Sens. 2020, 169, 180–194.
22. Xu, W.; Chen, P.; Zhan, Y.; Chen, S.; Zhang, L.; Lan, Y. Cotton yield estimation model based on machine learning using time series UAV remote sensing data. Int. J. Appl. Earth Obs. 2021, 104, 102511.
23. Gutiérrez, S.; Wendel, A.; Underwood, J. Ground based hyperspectral imaging for extensive mango yield estimation. Comput. Electron. Agric. 2019, 157, 126–135.
24. Apolo-Apolo, O.E.; Martínez-Guanter, J.; Egea, G.; Raja, P.; Pérez-Ruiz, M. Deep learning techniques for estimation of the yield and size of citrus fruits using a UAV. Eur. J. Agron. 2020, 115, 126030.
25. Sumesh, K.C.; Ninsawat, S.; Som-ard, J. Integration of RGB-based vegetation index, crop surface model and object-based image analysis approach for sugarcane yield estimation using unmanned aerial vehicle. Comput. Electron. Agric. 2021, 180, 105903.
26. Doraiswamy, P.C.; Hatfield, J.L.; Jackson, T.J.; Akhmedov, B.; Prueger, J.; Stern, A. Crop condition and yield simulations using Landsat and MODIS. Remote Sens. Environ. 2004, 92, 548–559.
27. Guan, K.; Wu, J.; Kimball, J.S.; Anderson, M.C.; Frolking, S.; Li, B.; Hain, C.R.; Lobell, D.B. The shared and unique values of optical, fluorescence, thermal and microwave satellite data for estimating large-scale crop yields. Remote Sens. Environ. 2017, 199, 333–349.
28. Sakamoto, T. Incorporating environmental variables into a MODIS-based crop yield estimation method for United States corn and soybeans through the use of a random forest regression algorithm. ISPRS J. Photogramm. Remote Sens. 2020, 160, 208–228.
29. Siyal, A.A.; Dempewolf, J.; Becker-Reshef, I. Rice yield estimation using Landsat ETM+ data. J. Appl. Remote Sens. 2015, 9, 095986.
30. Claverie, M.; Ju, J.; Masek, J.G.; Dungan, J.L.; Vermote, E.F.; Roger, J.C.; Skakun, S.V.; Justice, C. The Harmonized Landsat and Sentinel-2 surface reflectance data set. Remote Sens. Environ. 2018, 219, 145–161.
31. Lambert, M.J.; Traoré, P.C.S.; Blaes, X.; Baret, P.; Defourny, P. Estimating smallholder crops production at village level from Sentinel-2 time series in Mali’s cotton belt. Remote Sens. Environ. 2018, 216, 647–657.
32. Schwalbert, R.A.; Amado, T.J.C.; Nieto, L.; Varela, S.; Corassa, G.M.; Horbe, T.A.N.; Rice, C.W.; Peralta, N.R.; Ciampitti, I.A. Forecasting maize yield at field scale based on high-resolution satellite imagery. Biosyst. Eng. 2018, 171, 179–192.
33. Skakun, S.; Kalecinski, N.I.; Brown, M.G.L.; Johnson, D.M.; Vermote, E.F.; Roger, J.; Franch, B. Assessing within-field corn and soybean yield variability from WorldView-3, Planet, Sentinel-2, and Landsat 8 satellite imagery. Remote Sens. 2021, 13, 872.
34. Kayad, A.; Sozzi, M.; Gatto, S.; Marinello, F.; Pirotti, F. Monitoring within-field variability of corn yield using Sentinel-2 and machine learning techniques. Remote Sens. 2019, 11, 2873.
35. Tomíček, J.; Mišurec, J.; Lukeš, P. Prototyping a Generic Algorithm for Crop Parameter Retrieval across the Season Using Radiative Transfer Model Inversion and Sentinel-2 Satellite Observations. Remote Sens. 2021, 13, 3659.
36. Mudereri, B.T.; Dube, T.; Abdel-Rahman, E.M.; Niassy, S.; Kimathi, E.; Landmann, T. A comparative analysis of PlanetScope and Sentinel-2 space-borne sensors in mapping Striga weed using Guided Regularised Random Forest classification ensemble. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2019, 42, 701–708.
37. Sadeh, Y.; Zhu, X.; Dunkerley, D.; Walker, J.P.; Zhang, Y.; Rozenstein, O.; Manivasagam, V.S.; Chenu, K. Fusion of Sentinel-2 and PlanetScope time-series data into daily 3 m surface reflectance and wheat LAI monitoring. Int. J. Appl. Earth Obs. 2021, 96, 102260.
38. Pantazi, X.E.; Moshou, D.; Alexandridis, T.; Whetton, R.L.; Mouazen, A.M. Wheat yield prediction using machine learning and advanced sensing techniques. Comput. Electron. Agric. 2016, 121, 57–65.
39. Gaso, D.V.; Berger, A.G.; Ciganda, V.S. Predicting wheat grain yield and spatial variability at field scale using a simple regression or a crop model in conjunction with Landsat images. Comput. Electron. Agric. 2019, 159, 75–83.
40. Řezník, T.; Pavelka, T.; Herman, L.; Lukas, V.; Širucek, P.; Leitgeb, S.; Leitner, F. Prediction of yield productivity zones from Landsat 8 and Sentinel-2A/B and their evaluation using farm machinery measurements. Remote Sens. 2020, 12, 1917.
41. Jaafar, H.H.; Ahmad, F.A. Crop yield prediction from remotely sensed vegetation indices and primary productivity in arid and semi-arid lands. Int. J. Remote Sens. 2015, 36, 4570–4589.
42. Battude, M.; Bitar, A.A.; Morin, D.M.; Cros, J.; Huc, M.; Sicre, C.M.; Dantec, V.L.; Demarez, V. Estimating maize biomass and yield over large areas using high spatial and temporal resolution Sentinel-2 like remote sensing data. Remote Sens. Environ. 2016, 184, 668–681.
43. Shanahan, J.F.; Schepers, J.S.; Francis, D.D.; Varvel, G.E.; Wilhelm, W.W.; Tringe, J.M.; Schlemmer, M.R.; Major, D.J. Use of remote-sensing imagery to estimate corn grain yield. Agron. J. 2001, 93, 583–589.
44. Panda, S.S.; Ames, D.P.; Panigrahi, S. Application of vegetation indices for agricultural crop yield prediction using neural network techniques. Remote Sens. 2010, 2, 673–696.
45. Unganai, L.S.; Kogan, F.N. Drought monitoring and corn yield estimation in Southern Africa from AVHRR data. Remote Sens. Environ. 1998, 63, 219–232.
46. Chlingaryan, A.; Sukkarieh, S.; Whelan, B. Machine learning approaches for crop yield prediction and nitrogen status estimation in precision agriculture: A review. Comput. Electron. Agric. 2018, 151, 61–69.
47. Bierman, P.M.; Rosen, C.J.; Venterea, R.T.; Lamb, J.A. Survey of nitrogen fertilizer use on corn in Minnesota. Agric. Syst. 2012, 109, 43–52.
48. Planet Team. Planet Imagery Product Specifications; Planet Labs Inc.: San Francisco, CA, USA, 2018; Available online: https://www.planet.com/products/satellite-imagery/files/Planet_Combined_Imagery_Product_Specs_December2017.pdf (accessed on 12 April 2018).
49. Jordan, C.F. Derivation of leaf-area index from quality of light on the forest floor. Ecology 1969, 50, 663–666.
50. Chen, J.M. Evaluation of vegetation indices and a modified simple ratio for boreal applications. Can. J. Remote Sens. 1996, 22, 229–242.
51. Rouse, J.W.; Haas, R.H.; Schell, J.A.; Deering, D.W. Monitoring vegetation systems in the great plains with ERTS. Third Earth Resour. Technol. Satell. Symp. 1973, 1, 309–317.
52. Gitelson, A.A.; Kaufman, Y.J.; Merzlyak, M.N. Use of a green channel in remote sensing of global vegetation from EOS-MODIS. Remote Sens. Environ. 1996, 58, 289–298.
53. Baret, F.; Jacquemoud, S.; Hanocq, J.F. The soil line concept in remote sensing. Remote Sens. Rev. 1993, 7, 65–82.
54. Jiang, Z.; Huete, A.R.; Didan, K.; Miura, T. Development of a two-band enhanced vegetation index without a blue band. Remote Sens. Environ. 2008, 112, 3833–3845.
55. Haboudane, D.; Miller, J.R.; Pattey, E.; Zarco-Tejada, P.J.; Strachan, I.B. Hyperspectral vegetation indices and novel algorithms for predicting green LAI of crop canopies: Modeling and validation in the context of precision agriculture. Remote Sens. Environ. 2004, 90, 337–352.
56. Qi, J.; Chehbouni, A.; Huete, A.R.; Kerr, Y.H.; Sorooshian, S. A modified soil adjusted vegetation index. Remote Sens. Environ. 1994, 48, 119–126.
57. Gitelson, A.A. Wide dynamic range vegetation index for remote quantification of biophysical characteristics of vegetation. J. Plant Physiol. 2004, 161, 165–173.
58. Gitelson, A.A.; Viña, A.; Arkebauer, T.J.; Rundquist, D.C.; Keydan, G.; Leavitt, B. Remote estimation of leaf area index and green leaf biomass in maize canopies. Geophys. Res. Lett. 2003, 30, 1248.
59. R Core Team. R: A Language and Environment for Statistical Computing. R Foundation for Statistical Computing, Vienna, Austria. 2017. Available online: https://www.R-project.org (accessed on 12 April 2018).
60. Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32.
61. Lobell, D.B.; Thau, D.; Seifert, C.; Engle, E.; Little, B. A scalable satellite-based crop yield mapper. Remote Sens. Environ. 2015, 164, 324–333.
62. Belgiu, M.; Drăguţ, L. Random forest in remote sensing: A review of applications and future directions. ISPRS J. Photogramm. Remote Sens. 2016, 114, 24–31.
63. Mkhabela, M.S.; Bullock, P.; Raj, S.; Wang, S.; Yang, Y. Crop yield forecasting on the Canadian Prairies using MODIS NDVI data. Agric. For. Meteorol. 2011, 151, 385–393.
64. Gopal, P.M.; Bhargavi, R. Performance evaluation of best feature subsets for crop yield prediction using machine learning algorithms. Appl. Artif. Intell. 2019, 33, 621–642.
65. Al-Gaadi, K.A.; Hassaballa, A.A.; Tola, E.K.; Kayad, A.G.; Madugundu, R.; Alblewi, B.; Assiri, F. Prediction of potato crop yield using precision agriculture techniques. PLoS ONE 2016, 11, e0162219.
66. Nagy, A.; Szabó, A.; Adeniyi, O.D.; Tamás, J. Wheat Yield Forecasting for the Tisza River Catchment Using Landsat 8 NDVI and SAVI Time Series and Reported Crop Statistics. Agronomy 2021, 11, 652.
67. Kayad, A.; Sozzi, M.; Paraforos, D.S.; Rodrigues, F.A., Jr.; Cohen, Y.; Fountas, S.; Francisco, M.; Pezzuolo, A.; Grigolato, S.; Marinello, F. How many gigabytes per hectare are available in the digital agriculture era? A digitization footprint estimation. Comput. Electron. Agric. 2022, 198, 107080.

| Image | Acquisition Date | Days after Seeding (DAS) | Growth Stage | Product Level |
|---|---|---|---|---|
| PlanetScope | 3 Jun., 18 Jun., 5 Jul., 17 Jul., 23 Jul., 30 Jul., 8 Aug., 15 Aug. | 29, 44, 61, 73, 79, 86, 95, 102 | V6, V10, V17, VT, R1, R2, R3, R4 | Level 3B |
| Sentinel-2A | 7 Jul., 17 Jul., 22 Jul., 27 Jul., 6 Aug., 11 Aug. | 63, 73, 78, 83, 93, 98 | V17, VT, R1, R2, R3, R3 | Level 1C |

| Vegetation Index | Equation | References |
|---|---|---|
| SR (Simple Ratio) | | [49] |
| MSR (Modified Simple Ratio) | | [50] |
| NDVI (Normalized Difference Vegetation Index) | | [51] |
| GNDVI (Green Normalized Difference Vegetation Index) | | [52] |
| SAVI (Optimized Soil-Adjusted Vegetation Index) | | [53] |
| EVI2 (Enhanced Vegetation Index) | | [54] |
| MCARI2 (Modified Chlorophyll Absorption Reflectivity Index) | | [55] |
| MSAVI (Modified Soil-Adjusted Vegetation Index) | | [56] |
| WDRVI (Wide Dynamic Range Vegetation Index) | | [57] |
| GCVI (Green Chlorophyll Vegetation Index) | | [58] |
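
The equation column of the vegetation index table did not survive extraction. As a rough guide only, the sketch below computes the ten indices from near-infrared, red, and green reflectance using the forms commonly given in the cited references [49–58]. The function name, the default `alpha=0.1` for WDRVI, and the default `soil_l=0.5` for SAVI are assumptions for illustration; the exact variants and coefficients used in this study cannot be confirmed from the text available here.

```python
import math


def vegetation_indices(nir, red, green, alpha=0.1, soil_l=0.5):
    """Return common vegetation indices from reflectances in the range 0-1.

    alpha (WDRVI weighting) and soil_l (SAVI soil factor) are assumed
    defaults, not values taken from the article.
    """
    sr = nir / red
    # MCARI2 denominator follows the commonly cited Haboudane et al. form.
    mcari2_den = math.sqrt((2 * nir + 1) ** 2 - (6 * nir - 5 * math.sqrt(red)) - 0.5)
    return {
        "SR": sr,
        "MSR": (sr - 1.0) / math.sqrt(sr + 1.0),
        "NDVI": (nir - red) / (nir + red),
        "GNDVI": (nir - green) / (nir + green),
        "SAVI": (1.0 + soil_l) * (nir - red) / (nir + red + soil_l),
        "EVI2": 2.5 * (nir - red) / (nir + 2.4 * red + 1.0),
        "MCARI2": 1.5 * (2.5 * (nir - red) - 1.3 * (nir - green)) / mcari2_den,
        "MSAVI": 0.5 * (2 * nir + 1 - math.sqrt((2 * nir + 1) ** 2 - 8 * (nir - red))),
        "WDRVI": (alpha * nir - red) / (alpha * nir + red),
        "GCVI": nir / green - 1.0,
    }


# Hypothetical reflectances for a dense green canopy.
indices = vegetation_indices(nir=0.45, red=0.05, green=0.09)
for name, value in indices.items():
    print(f"{name}: {value:.3f}")
```

Applied per pixel to a band stack, the same arithmetic yields one index layer per acquisition date.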

| Yield-ULR Model Based on PlanetScope Images | | | | Yield-ULR Model Based on Sentinel-2 Images | | | |
|---|---|---|---|---|---|---|---|
| Growth Stage | Models | R² | RMSE | Growth Stage | Models | R² | RMSE |
| DAS29 | −11.06MCARI2 + 16.15 | 0.03 | 2.27 | | | | |
| DAS44 | 34.68GNDVI − 9.40 | 0.32 | 1.91 | | | | |
| DAS61 | 1.99GCVI − 4.99 | 0.61 | 1.45 | DAS63 | 1.46GCVI + 1.10 | 0.56 | 1.53 |
| DAS73 | 1.69GCVI − 5.44 | 0.60 | 1.46 | DAS73 | 2.76GCVI − 1.28 | 0.48 | 1.65 |
| DAS79 | 1.69GCVI − 4.93 | 0.64 | 1.38 | DAS78 | 1.54GCVI − 1.03 | 0.58 | 1.49 |
| DAS86 | 2.20GCVI − 6.78 | 0.72 | 1.22 | DAS83 | 1.32GCVI + 0.31 | 0.57 | 1.51 |
| DAS95 | 1.81GCVI − 3.85 | 0.65 | 1.37 | DAS93 | 1.27GCVI + 1.64 | 0.61 | 1.45 |
| DAS102 | 2.21GCVI − 4.71 | 0.64 | 1.39 | DAS98 | 1.01GCVI + 3.06 | 0.64 | 1.39 |
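
To make the univariate models in the table above concrete, the sketch below applies a few of the PlanetScope GCVI equations to a hypothetical GCVI value. The coefficients are copied from the table; the dictionary layout, the function name, and the example GCVI of 6.0 are assumptions for illustration, and the yield unit is not stated in the extracted tables.

```python
# (slope, intercept) for yield = slope * GCVI + intercept, keyed by days
# after seeding (DAS). Values copied from the PlanetScope columns of the
# Yield-ULR table; only a subset of the GCVI-based stages is included.
PLANETSCOPE_ULR = {
    61: (1.99, -4.99),
    73: (1.69, -5.44),
    86: (2.20, -6.78),
    95: (1.81, -3.85),
    102: (2.21, -4.71),
}


def predict_yield(gcvi, das):
    """Apply the univariate linear model fitted for the given DAS."""
    slope, intercept = PLANETSCOPE_ULR[das]
    return slope * gcvi + intercept


# Hypothetical pixel with GCVI = 6.0 on the DAS86 image.
print(round(predict_yield(6.0, 86), 2))
```

Because each date has its own slope and intercept, a GCVI value has to be paired with the model for the same acquisition date.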

| Growth Stage (PlanetScope) | Independent Variables | AIC | R² | RMSE |
|---|---|---|---|---|
| DAS29 | GCVI, GNDVI, MCARI2, SAVI, SR, WDRVI | 129.97 | 0.29 | 1.95 |
| DAS44 | MSAVI, NDVI, SAVI, SR, WDRVI | 101.10 | 0.48 | 1.67 |
| DAS61 | EVI2, GCVI, GNDVI, MCARI2, NDVI | 69.20 | 0.64 | 1.39 |
| DAS73 | EVI2, GCVI, GNDVI, MCARI2, SR, WDRVI | 70.48 | 0.64 | 1.38 |
| DAS79 | EVI2, GCVI, GNDVI, NDVI, SR, WDRVI | 35.79 | 0.76 | 1.13 |
| DAS86 | EVI2, GCVI, GNDVI, MCARI2, SR, WDRVI | 15.51 | 0.81 | 1.01 |
| DAS95 | EVI2, GCVI, GNDVI, NDVI, SR, WDRVI | 56.52 | 0.69 | 1.28 |
| DAS102 | EVI2, GCVI, GNDVI, MCARI2, SR, WDRVI | 61.24 | 0.68 | 1.31 |

| Growth Stage (Sentinel-2) | Independent Variables | AIC | R² | RMSE |
|---|---|---|---|---|
| DAS63 | MCARI2, MSR, SAVI, SR, WDRVI | 83.33 | 0.57 | 1.51 |
| DAS73 | EVI2, GCVI, GNDVI, MCARI2, MSAVI, NDVI, SAVI, SR, WDRVI | 83.36 | 0.61 | 1.44 |
| DAS78 | EVI2, MCARI2, NDVI, SAVI, WDRVI | 73.00 | 0.62 | 1.43 |
| DAS83 | EVI2, GCVI, GNDVI, MCARI2, MSAVI, NDVI, SAVI, SR, WDRVI | 78.20 | 0.63 | 1.40 |
| DAS93 | EVI2, GNDVI, MCARI2, MSR, SAVI, SR, WDRVI | 75.13 | 0.63 | 1.41 |
| DAS98 | EVI2, MCARI2, MSAVI, MSR, SAVI, WDRVI | 54.51 | 0.66 | 1.26 |
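
The AIC column in the stepwise tables above ranks candidate variable subsets, with lower values preferred. The sketch below shows one common way such a value is computed for a least-squares fit, assuming the form used by R's `extractAIC` for linear models (n·ln(RSS/n) + 2k). Whether the study used exactly this form is an assumption, and the function names and example numbers are hypothetical.

```python
import math


def aic_least_squares(rss, n_obs, n_coef):
    """AIC for a least-squares fit: n * ln(RSS / n) + 2 * k.

    rss    : residual sum of squares of the fitted model
    n_obs  : number of observations
    n_coef : number of estimated coefficients, including the intercept
    """
    return n_obs * math.log(rss / n_obs) + 2 * n_coef


def best_by_aic(candidates, n_obs):
    """Return the name of the candidate model with the lowest AIC.

    candidates maps a model name to a (rss, n_coef) tuple.
    """
    return min(candidates, key=lambda name: aic_least_squares(*candidates[name], n_obs))


# Hypothetical comparison: three extra predictors barely reduce the RSS,
# so the penalty term favours the smaller model.
models = {"small": (12.0, 2), "large": (11.9, 5)}
print(best_by_aic(models, n_obs=30))
```

A stepwise search repeats this comparison, adding or dropping one index at a time until no change lowers the AIC.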

| Yield-RFR Model with PlanetScope Images | | | | Yield-RFR Model with Sentinel-2 Images | | | |
|---|---|---|---|---|---|---|---|
| Growth Stage | Parameters | R² | RMSE | Growth Stage | Parameters | R² | RMSE |
| DAS29 | 10, 70%, 5 | 0.75 | 1.10 | | | | |
| DAS44 | 10, 80%, 8 | 0.82 | 0.96 | | | | |
| DAS61 | 7, 70%, 6 | 0.85 | 0.86 | DAS63 | 9, 90%, 3 | 0.81 | 1.01 |
| DAS73 | 3, 60%, 10 | 0.79 | 1.05 | DAS73 | 5, 100%, 4 | 0.78 | 1.07 |
| DAS79 | 5, 80%, 7 | 0.86 | 0.84 | DAS78 | 7, 50%, 3 | 0.82 | 0.97 |
| DAS86 | 9, 100%, 10 | 0.90 | 0.73 | DAS83 | 5, 50%, 10 | 0.85 | 0.86 |
| DAS95 | 3, 100%, 12 | 0.80 | 1.05 | DAS93 | 9, 70%, 12 | 0.89 | 0.75 |
| DAS102 | 3, 50%, 7 | 0.78 | 1.09 | DAS98 | 7, 50%, 3 | 0.85 | 0.88 |
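
All of the model tables above report R2 and RMSE. The sketch below shows how these two statistics are commonly computed from observed and predicted yields. It assumes R2 is the coefficient of determination (1 − SS_res/SS_tot); the extracted text does not confirm whether the study used this or the squared correlation, and the sample values are hypothetical.

```python
import math


def rmse(observed, predicted):
    """Root mean square error between paired observations and predictions."""
    squared_errors = [(o - p) ** 2 for o, p in zip(observed, predicted)]
    return math.sqrt(sum(squared_errors) / len(squared_errors))


def r_squared(observed, predicted):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_obs = sum(observed) / len(observed)
    ss_res = sum((o - p) ** 2 for o, p in zip(observed, predicted))
    ss_tot = sum((o - mean_obs) ** 2 for o in observed)
    return 1.0 - ss_res / ss_tot


# Hypothetical observed and predicted yields for four plots.
obs = [8.0, 10.0, 12.0, 14.0]
pred = [9.0, 10.0, 11.0, 14.0]
print(f"RMSE = {rmse(obs, pred):.3f}, R2 = {r_squared(obs, pred):.2f}")
```

RMSE is expressed in the same unit as yield, so it can be read directly against the range of measured yields, while R2 is unitless.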

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Li, F.; Miao, Y.; Chen, X.; Sun, Z.; Stueve, K.; Yuan, F. In-Season Prediction of Corn Grain Yield through PlanetScope and Sentinel-2 Images. Agronomy 2022, 12, 3176. https://doi.org/10.3390/agronomy12123176