Litchi Detection in a Complex Natural Environment Using the YOLOv5-Litchi Model

Abstract

1. Introduction

2. Materials and Methods

2.1. Materials

2.1.1. Data Collection

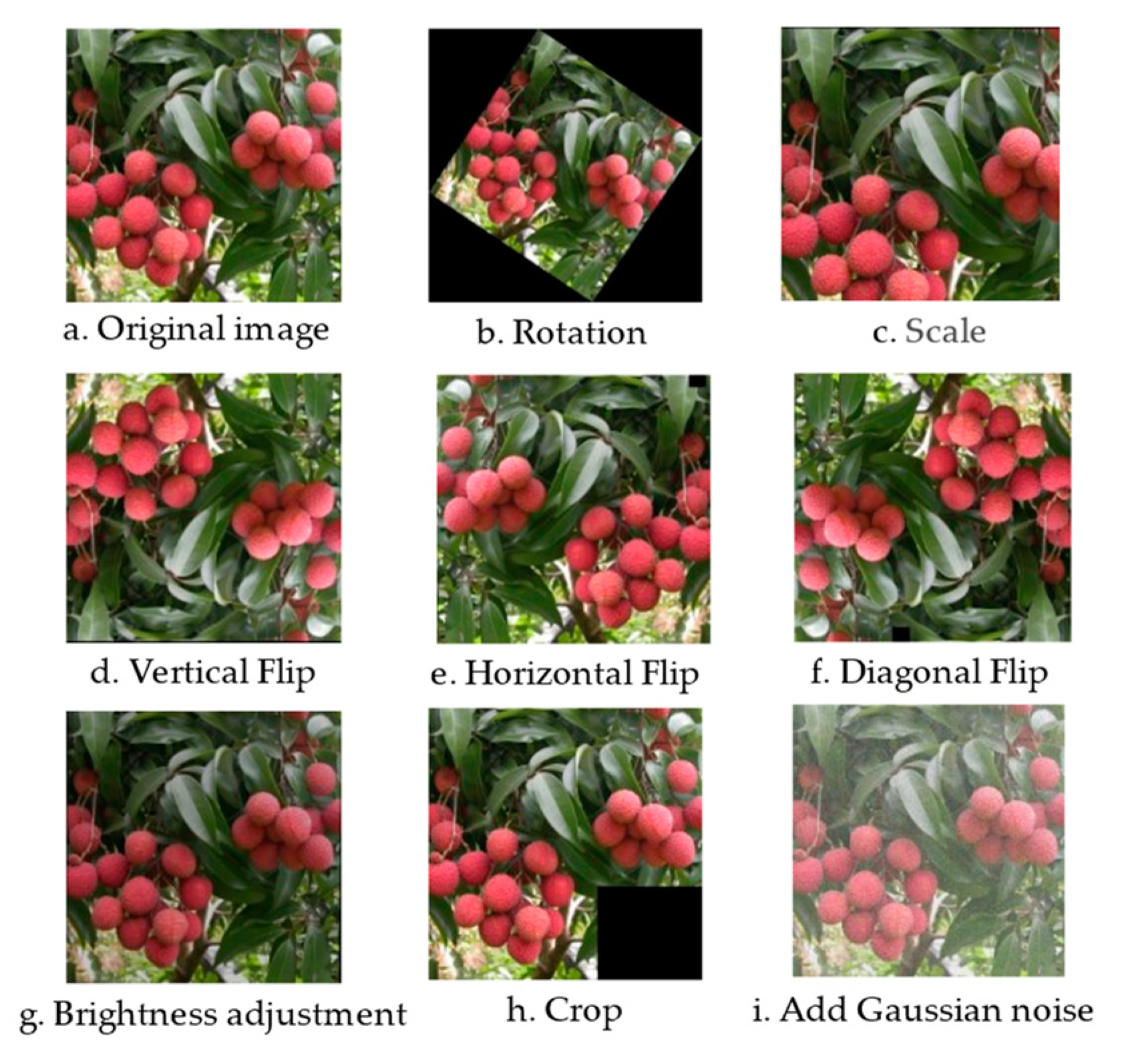

2.1.2. Data Augmentation

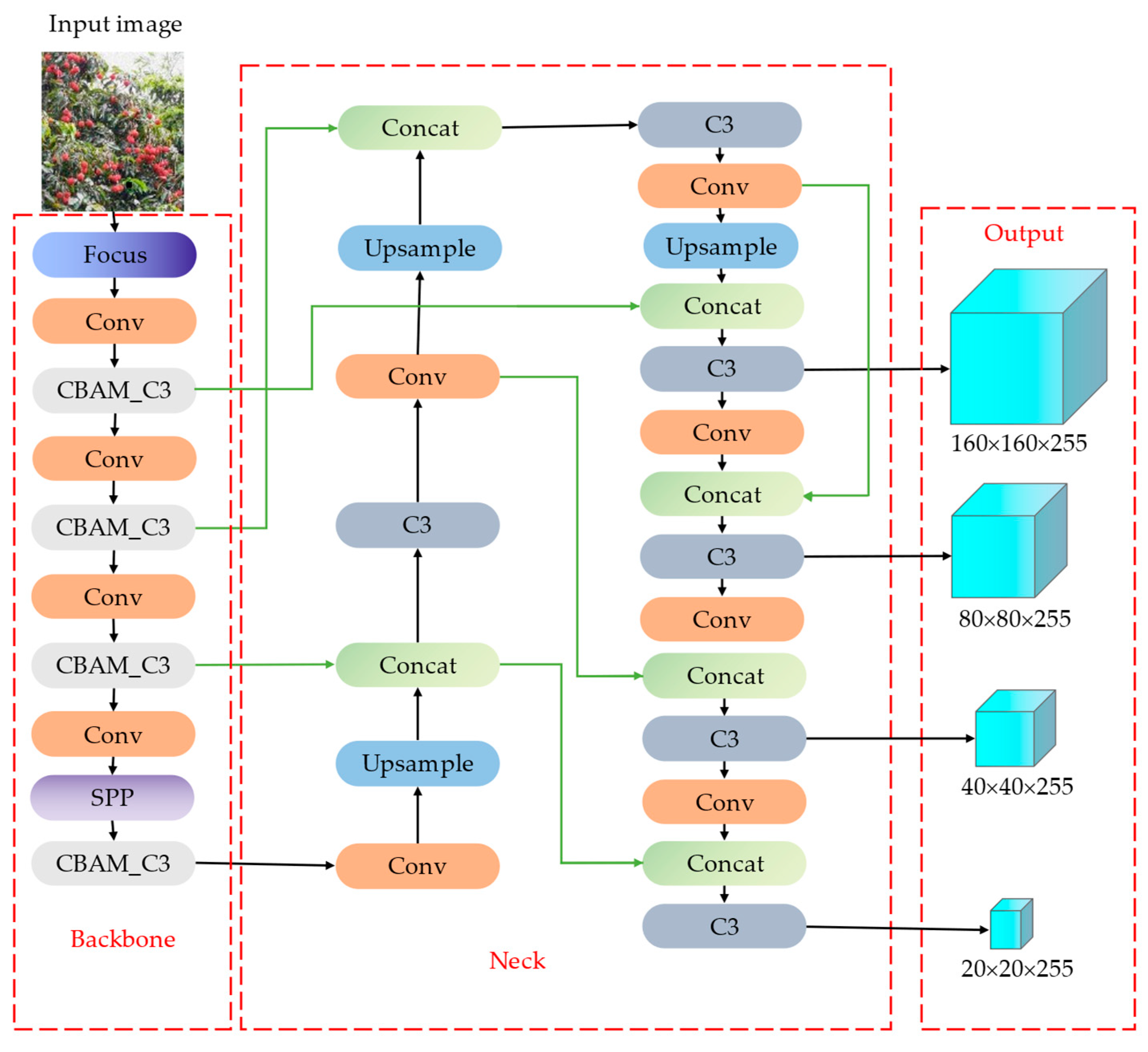

2.2. YOLOv5-Litchi Construction

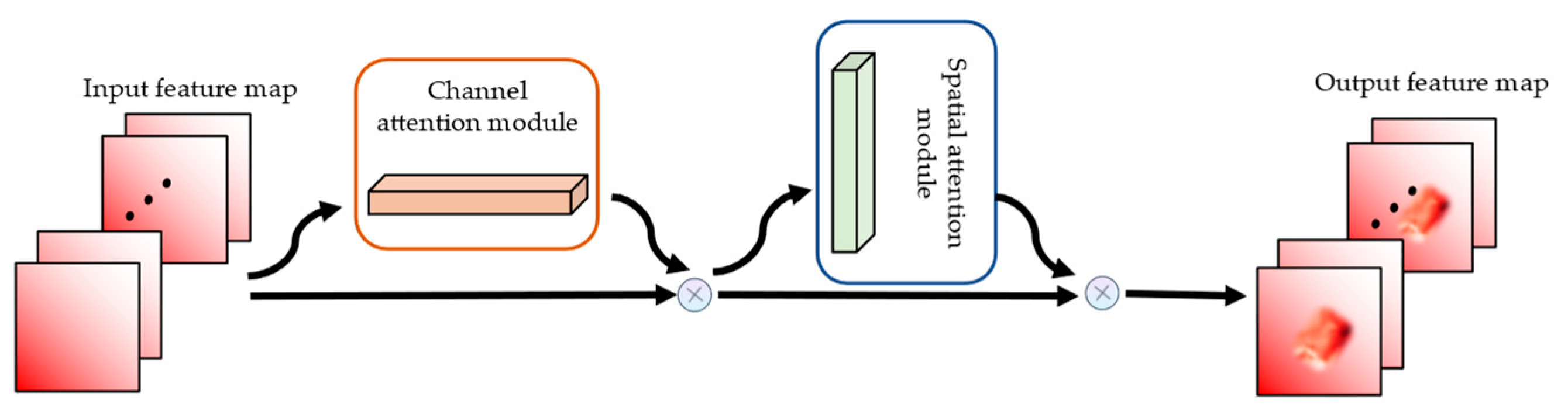

2.2.1. Convolutional Block Attention Module

2.2.2. Small-Object Detection Layer

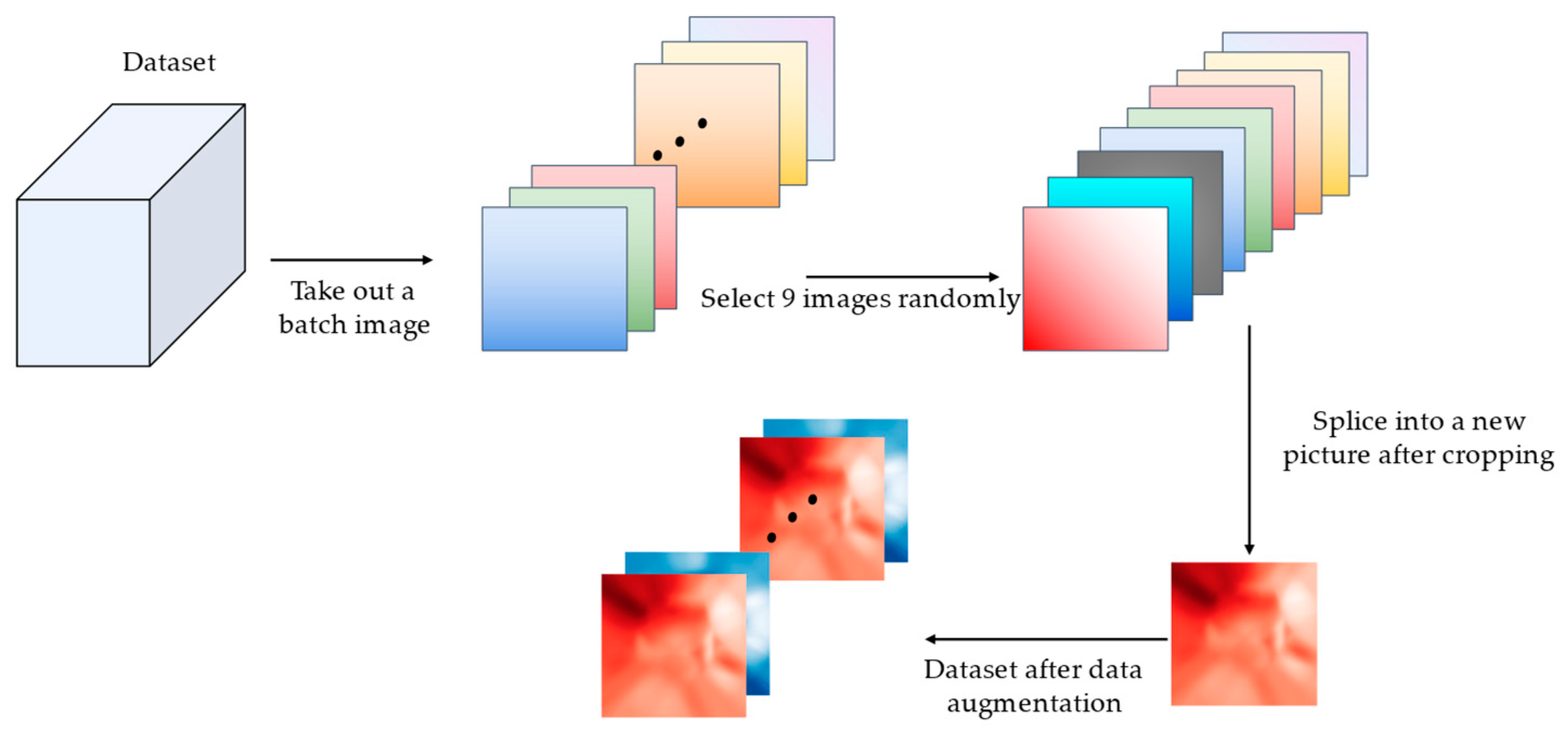

2.2.3. Mosaic-9 Data Enhancement

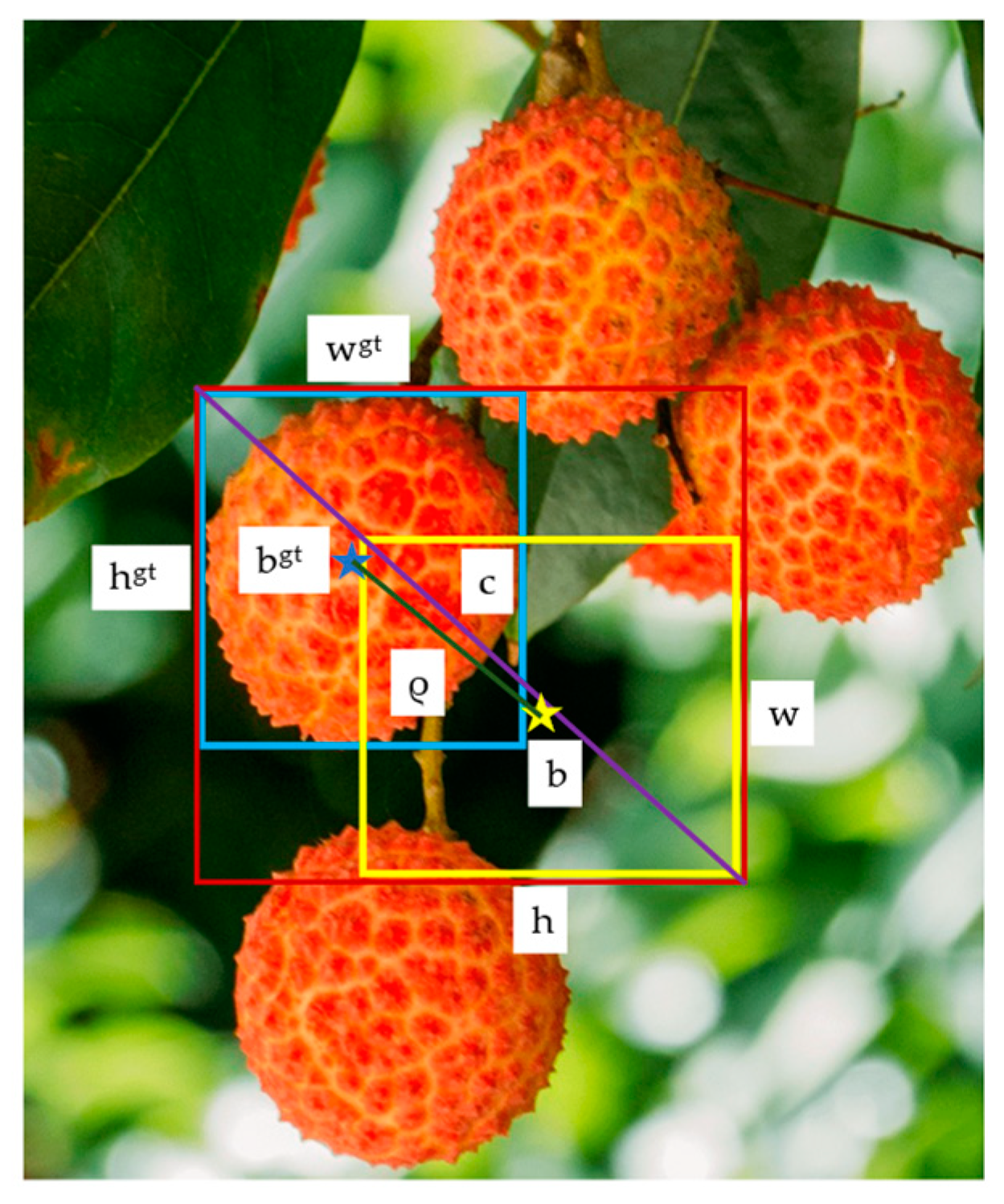

2.2.4. Complete Intersection over Union Loss Function

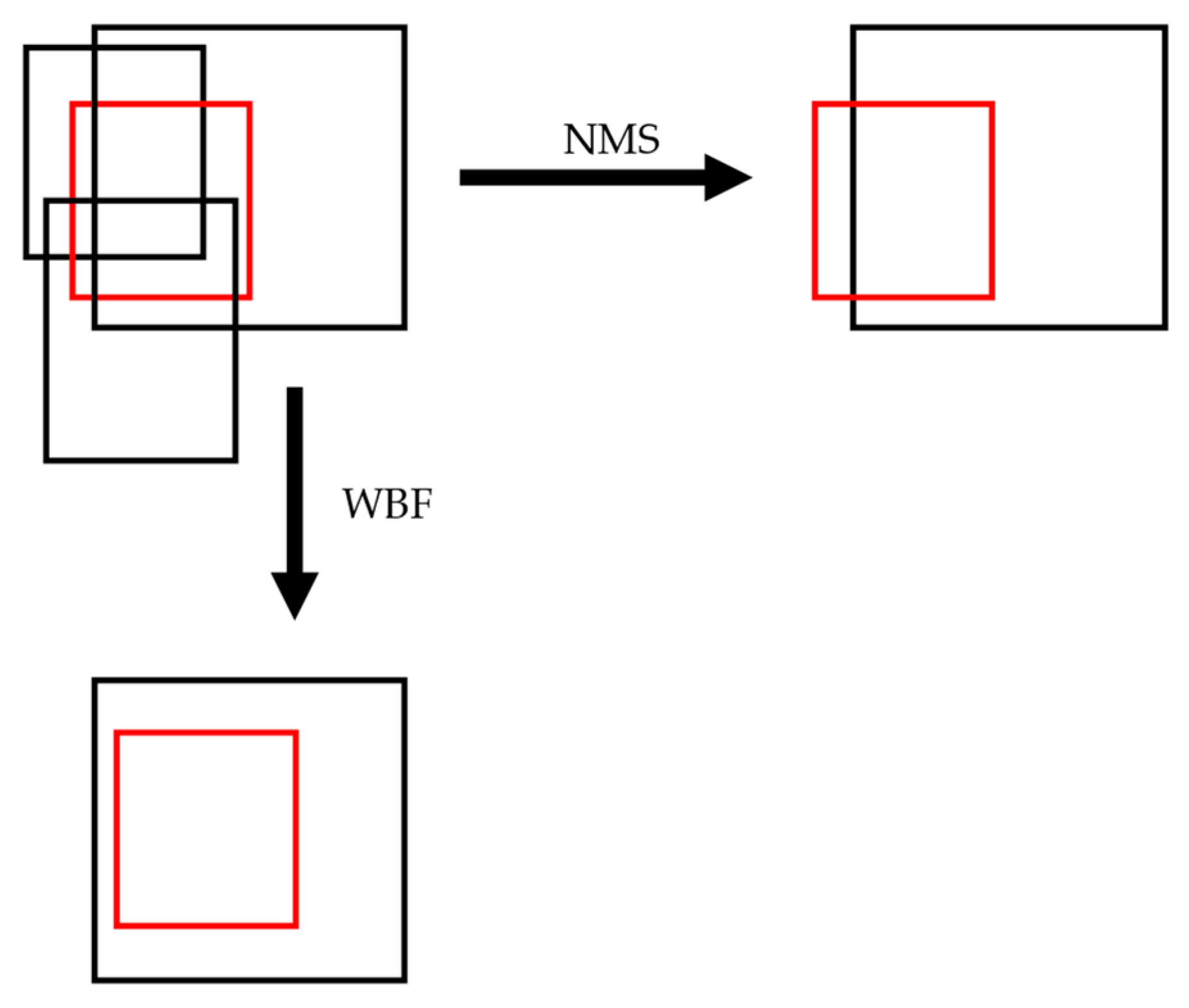

2.2.5. Weighted-Boxes Fusion

2.3. Training Equipment and Methods

3. Results

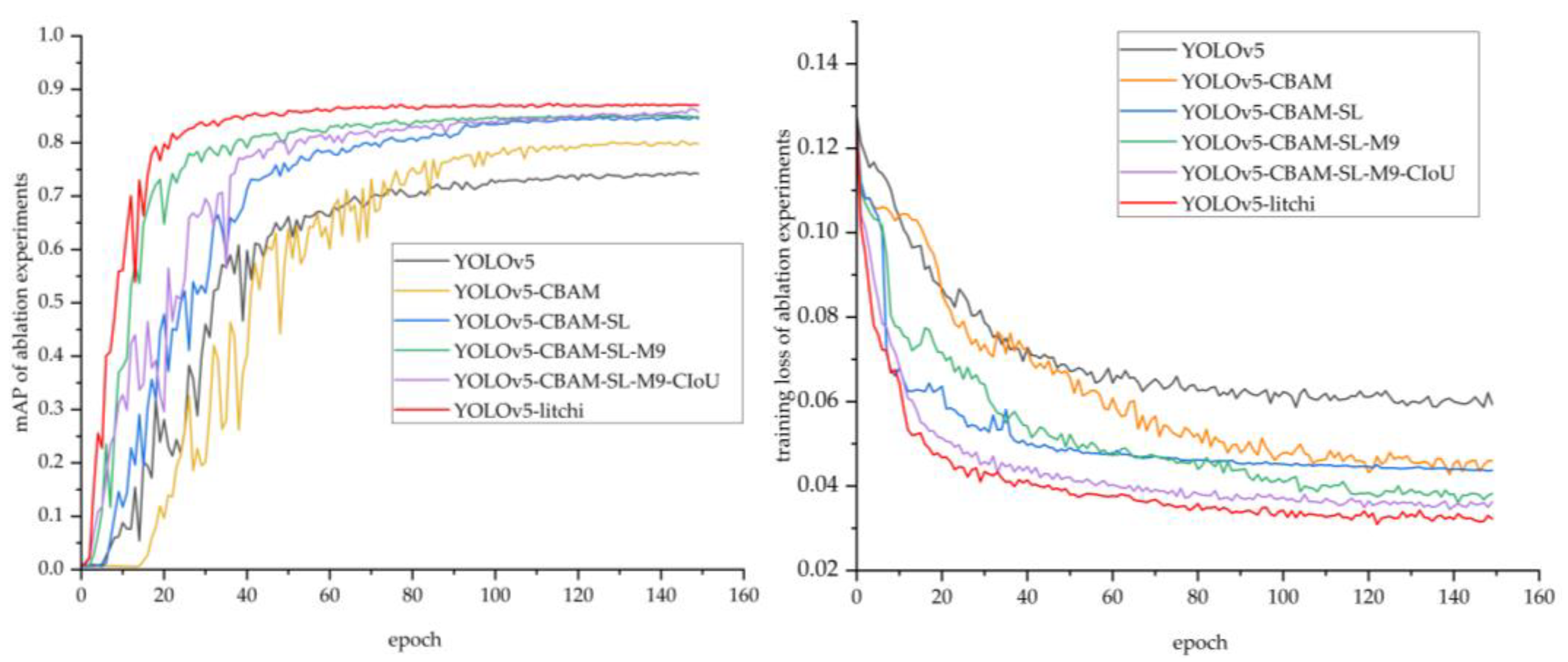

3.1. Ablation Experiments

3.2. Comparison of Different Network Models

3.3. Comparison of Different Amounts of Litchis

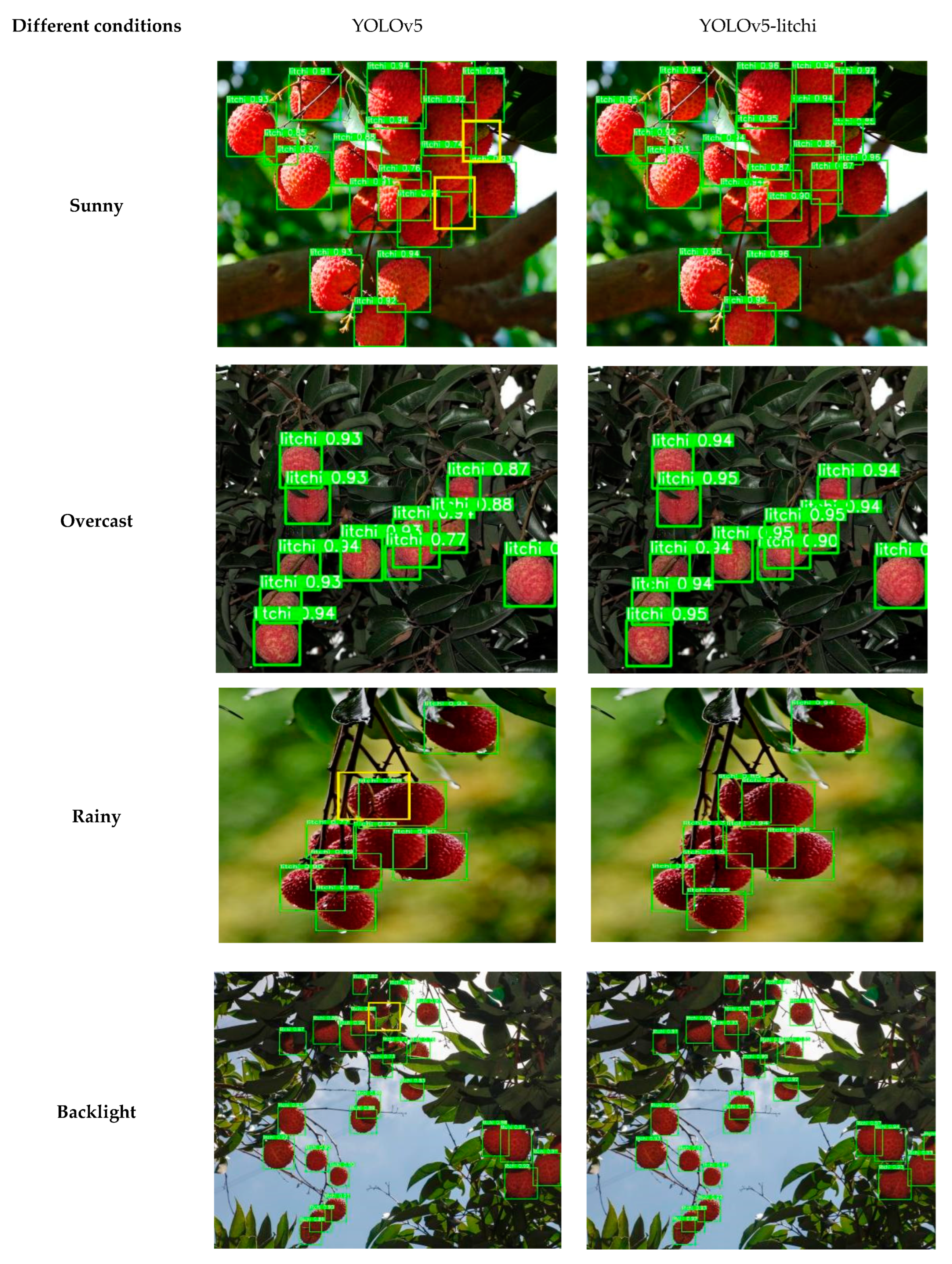

3.4. Comparison of Different Weather Conditions

4. Discussion

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Configuration | Parameter |
|---|---|
| CPU | Intel(R) Xeon(R) Gold 6240 CPU @ 2.60 GHz |
| GPU | 2 × NVIDIA RTX 3090 |
| Operating system | Windows 10 |
| Development environment | PyCharm Professional Edition |
| Library | Python 3.8, PyTorch 1.7 |

| Model | Precision (%) | F1 Score | Training Loss | Recall (%) | mAP (%) |
|---|---|---|---|---|---|
| YOLOv5 | 84.6 | 0.74 | 0.059 | 66.1 | 74.2 |
| YOLOv5-M9 | 84.7 | 0.75 | 0.057 | 67.9 | 75.7 |
| YOLOv5-CIoU | 86.7 | 0.77 | 0.056 | 68.9 | 77.3 |
| YOLOv5-WBF | 85.0 | 0.77 | 0.054 | 70.0 | 77.5 |
| YOLOv5-CBAM | 87.2 | 0.80 | 0.046 | 72.8 | 80.1 |
| YOLOv5-SL | 87.3 | 0.80 | 0.044 | 73.1 | 80.5 |
| YOLOv5-SL-CBAM | 87.7 | 0.83 | 0.041 | 78.4 | 84.5 |
| YOLOv5-SL-CBAM-M9 | 88.8 | 0.83 | 0.039 | 78.3 | 84.9 |
| YOLOv5-SL-CBAM-M9-CIoU | 89.5 | 0.84 | 0.036 | 79.8 | 85.9 |
| YOLOv5-litchi | 90.9 | 0.86 | 0.032 | 81.1 | 87.1 |
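The F1 scores in the ablation table can be cross-checked against the precision and recall columns. The following is a minimal sketch, assuming F1 is the usual harmonic mean of precision and recall. The table does not state the confidence threshold at which each metric was read, so small rounding differences on some rows are possible. The function name `f1_score` and the `rows` dictionary are illustrative and not taken from the paper.

```python
def f1_score(precision_pct: float, recall_pct: float) -> float:
    """Harmonic mean of precision and recall, both given in percent."""
    p, r = precision_pct / 100.0, recall_pct / 100.0
    if p + r == 0:
        return 0.0
    return 2 * p * r / (p + r)


# (precision %, recall %, reported F1) copied from three rows of the ablation table
rows = {
    "YOLOv5": (84.6, 66.1, 0.74),
    "YOLOv5-SL-CBAM": (87.7, 78.4, 0.83),
    "YOLOv5-litchi": (90.9, 81.1, 0.86),
}

for name, (p, r, reported) in rows.items():
    computed = f1_score(p, r)
    print(f"{name}: computed F1 = {computed:.2f}, reported F1 = {reported:.2f}")
```

For these three rows the recomputed value agrees with the reported one to two decimal places, which suggests the F1 column was derived from the listed precision and recall.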

| Detection Model | Backbone Network | Model Size (MB) | mAP (%) | Recall (%) | Detection Time (ms) |
|---|---|---|---|---|---|
| SSD | VGG16 | 157.0 | 70.1 | 59.8 | 14.5 |
| Faster-RCNN | ResNet-50 | 315.0 | 76.5 | 76.2 | 93.8 |
| YOLOv4 | CSPDarknet53 | 244.0 | 72.6 | 65.2 | 43.2 |
| YOLOv5 | CSPDarknet53 | 40.4 | 74.2 | 66.1 | 15.8 |
| YOLOv5-litchi | CSPDarknet53 | 44.8 | 87.1 | 81.1 | 25.0 |

| Weather Condition | Detection Algorithm | Actual Count | Predicted Count | Average Confidence (%) |
|---|---|---|---|---|
| Sunny | YOLOv5 | 21 | 18 | 89.5 |
| Sunny | YOLOv5-litchi | 21 | 20 | 92.7 |
| Overcast | YOLOv5 | 11 | 11 | 90.8 |
| Overcast | YOLOv5-litchi | 11 | 11 | 94.1 |
| Rainy | YOLOv5 | 9 | 8 | 89.0 |
| Rainy | YOLOv5-litchi | 9 | 9 | 91.1 |
| Backlight | YOLOv5 | 28 | 24 | 86.3 |
| Backlight | YOLOv5-litchi | 28 | 26 | 90.7 |
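The per-weather counts can be summarized as the ratio of predicted to actual litchis for each model. The following is a minimal sketch under one assumption: the table reports only total counts, so this ratio is not a true recall, because false positives cannot be separated from correct detections. The names `counts`, `count_ratio`, and `overall_ratio` are illustrative and do not come from the paper.

```python
# (actual count, predicted count) per weather condition, copied from the table
counts = {
    "YOLOv5": {
        "Sunny": (21, 18),
        "Overcast": (11, 11),
        "Rainy": (9, 8),
        "Backlight": (28, 24),
    },
    "YOLOv5-litchi": {
        "Sunny": (21, 20),
        "Overcast": (11, 11),
        "Rainy": (9, 9),
        "Backlight": (28, 26),
    },
}


def count_ratio(actual: int, predicted: int) -> float:
    """Predicted count divided by actual count (a rough proxy, not recall)."""
    return predicted / actual


def overall_ratio(per_condition: dict) -> float:
    """Ratio of summed predicted counts to summed actual counts."""
    actual_total = sum(a for a, _ in per_condition.values())
    predicted_total = sum(p for _, p in per_condition.values())
    return count_ratio(actual_total, predicted_total)


for model, per_condition in counts.items():
    for weather, (actual, predicted) in per_condition.items():
        print(f"{model:14s} {weather:10s} {count_ratio(actual, predicted):.1%}")
    print(f"{model:14s} {'Overall':10s} {overall_ratio(per_condition):.1%}")
```

Across the four sample images, YOLOv5 finds 61 of 69 litchis and YOLOv5-litchi finds 66 of 69. The largest gaps between the two models are in the sunny and backlit images.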

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Xie, J.; Peng, J.; Wang, J.; Chen, B.; Jing, T.; Sun, D.; Gao, P.; Wang, W.; Lu, J.; Yetan, R.; Li, J. Litchi Detection in a Complex Natural Environment Using the YOLOv5-Litchi Model. Agronomy 2022, 12, 3054. https://doi.org/10.3390/agronomy12123054