Deep Learning-Based Weed Detection in Turf: A Review

Abstract

1. Introduction

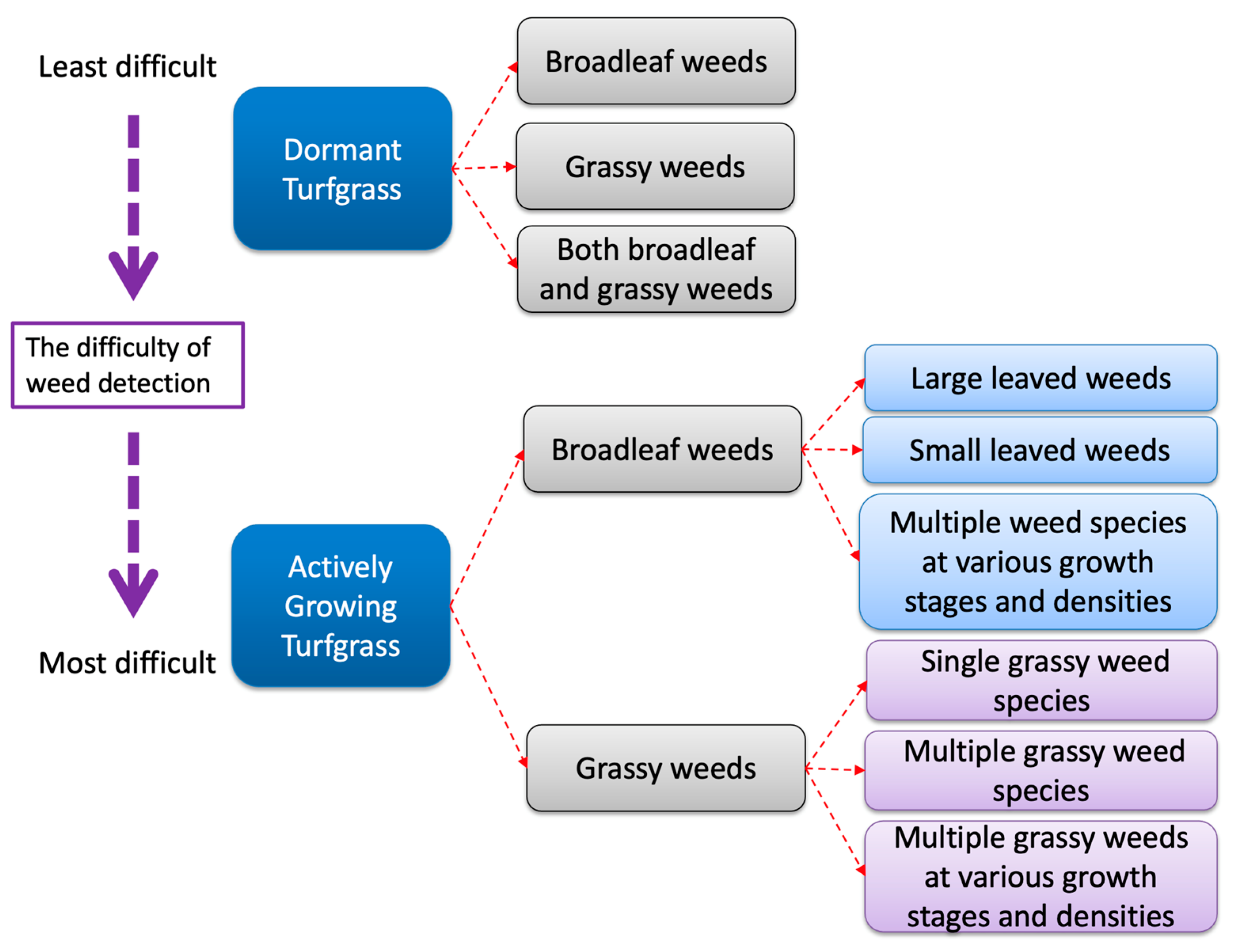

2. Detection of Weeds Growing in Turf

2.1. Image Classification versus Object Detection

2.2. Detection of Weeds in Dormant Turfgrass

2.3. Detection of Broadleaf Weeds in Actively Growing Turfgrass

2.4. Detection of Grass or Grass-Like Weeds in Actively Growing Turfgrass

2.5. Weed Localization

2.6. Detection of Weeds Growing in Various Turfgrass Surface Conditions

2.7. Detection of Weeds at Various Densities and Growth Stages

2.8. Detection of Weeds Based on Herbicide Weed Control Spectrum

3. Future Research Directions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Milesi, C.; Elvidge, C.; Dietz, J.; Tuttle, B.; Nemani, R.; Running, S. A strategy for mapping and modeling the ecological effects of US lawns. J. Turfgrass Manag. 2005, 1, 83–97.

2. Beditz, J. The development and growth of the US golf market. In Science and Golf II; Taylor & Francis: Abingdon, UK, 2002; pp. 678–686.

3. Stier, J.C.; Steinke, K.; Ervin, E.H.; Higginson, F.R.; McMaugh, P.E. Turfgrass benefits and issues. Turfgrass Biol. Use Manag. 2013, 56, 105–145.

4. Busey, P. Cultural management of weeds in turfgrass: A review. Crop Sci. 2003, 43, 1899–1911.

5. Hao, Z.; Bagavathiannan, M.; Li, Y.; Qu, M.; Wang, Z.; Yu, J. Wood vinegar for control of broadleaf weeds in dormant turfgrass. Weed Technol. 2021, 35, 901–907.

6. McElroy, J.; Martins, D. Use of herbicides on turfgrass. Planta Daninha 2013, 31, 455–467.

7. Gómez de Barreda, D.; Yu, J.; McCullough, P.E. Seedling tolerance of cool-season turfgrasses to metamifop. HortScience 2013, 48, 1313–1316.

8. McCullough, P.E.; Yu, J.; de Barreda, D.G. Seashore paspalum (Paspalum vaginatum) tolerance to pronamide applications for annual bluegrass control. Weed Technol. 2012, 26, 289–293.

9. Tate, T.M.; Meyer, W.A.; McCullough, P.E.; Yu, J. Evaluation of mesotrione tolerance levels and [14C] mesotrione absorption and translocation in three fine fescue species. Weed Sci. 2019, 67, 497–503.

10. McCullough, P.E.; Yu, J.; Raymer, P.L.; Chen, Z. First report of ACCase-resistant goosegrass (Eleusine indica) in the United States. Weed Sci. 2016, 64, 399–408.

11. Pearsaul, D.G.; Leon, R.G.; Sellers, B.A.; Silveira, M.L.; Odero, D.C. Evaluation of verticutting and herbicides for tropical signalgrass (Urochloa subquadripara) control in turf. Weed Technol. 2018, 32, 392–397.

12. Balogh, J.C.; Anderson, J.L. Environmental Impacts of Turfgrass Pesticides; Golf Course Management & Construction: Boca Raton, FL, USA, 2020; pp. 221–353.

13. Tappe, W.; Groeneweg, J.; Jantsch, B. Diffuse atrazine pollution in German aquifers. Biodegradation 2002, 13, 3–10.

14. Nitschke, L.; Schüssler, W. Surface water pollution by herbicides from effluents of waste water treatment plants. Chemosphere 1998, 36, 35–41.

15. Starrett, S.; Christians, N.; Al Austin, T. Movement of herbicides under two irrigation regimes applied to turfgrass. Adv. Environ. Res. 2000, 4, 169–176.

16. Petrovic, A.M.; Easton, Z.M. The role of turfgrass management in the water quality of urban environments. Int. Turfgrass Soc. Res. J. 2005, 10, 55–69.

17. Yu, J.; McCullough, P.E. Triclopyr reduces foliar bleaching from mesotrione and enhances efficacy for smooth crabgrass control by altering uptake and translocation. Weed Technol. 2016, 30, 516–523.

18. Pimentel, D.; Zuniga, R.; Morrison, D. Update on the environmental and economic costs associated with alien-invasive species in the United States. Ecol. Econ. 2005, 52, 273–288.

19. Mahoney, D.J.; Gannon, T.W.; Jeffries, M.D.; Matteson, A.R.; Polizzotto, M.L. Management considerations to minimize environmental impacts of arsenic following monosodium methylarsenate (MSMA) applications to turfgrass. J. Environ. Manag. 2015, 150, 444–450.

20. Mulligan, C.; Yong, R.; Gibbs, B. Remediation technologies for metal-contaminated soils and groundwater: An evaluation. Eng. Geol. 2001, 60, 193–207.

21. Busey, P. Managing goosegrass II. Removal. Golf Course Manag. 2004, 72, 132–136.

22. Shi, J.; Li, Z.; Zhu, T.; Wang, D.; Ni, C. Defect detection of industry wood veneer based on NAS and multi-channel Mask R-CNN. Sensors 2020, 20, 4398.

23. He, T.; Liu, Y.; Yu, Y.; Zhao, Q.; Hu, Z. Application of deep convolutional neural network on feature extraction and detection of wood defects. Measurement 2020, 152, 107357.

24. Zhou, H.; Zhuang, Z.; Liu, Y.; Liu, Y.; Zhang, X. Defect Classification of Green Plums Based on Deep Learning. Sensors 2020, 20, 6993.

25. LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

26. Le, V.N.T.; Ahderom, S.; Alameh, K. Performances of the LBP based algorithm over CNN models for detecting crops and weeds with similar morphologies. Sensors 2020, 20, 2193.

27. Liu, B.; Bruch, R. Weed Detection for Selective Spraying: A Review. Curr. Robot. Rep. 2020, 1, 19–26.

28. Sharpe, S.M.; Schumann, A.W.; Boyd, N.S. Detection of Carolina geranium (Geranium carolinianum) growing in competition with strawberry using convolutional neural networks. Weed Sci. 2019, 67, 239–245.

29. Jin, X.; Che, J.; Chen, Y. Weed identification using deep learning and image processing in vegetable plantation. IEEE Access 2021, 9, 10940–10950.

30. Zhuang, J.; Li, X.; Bagavathiannan, M.; Jin, X.; Yang, J.; Meng, W.; Li, T.; Li, L.; Wang, Y.; Chen, Y. Evaluation of different deep convolutional neural networks for detection of broadleaf weed seedlings in wheat. Pest Manag. Sci. 2022, 78, 521–529.

31. Jin, X.; Sun, Y.; Che, J.; Bagavathiannan, M.; Yu, J.; Chen, Y. A novel deep learning-based method for detection of weeds in vegetables. Pest Manag. Sci. 2022, 78, 1861–1869.

32. Chostner, B. See & Spray: The next generation of weed control. Resour. Mag. 2017, 24, 4–5.

33. Dyrmann, M.; Karstoft, H.; Midtiby, H.S. Plant species classification using deep convolutional neural network. Biosyst. Eng. 2016, 151, 72–80.

34. Ghosal, S.; Blystone, D.; Singh, A.K.; Ganapathysubramanian, B.; Singh, A.; Sarkar, S. An explainable deep machine vision framework for plant stress phenotyping. Proc. Natl. Acad. Sci. USA 2018, 115, 4613–4618.

35. Schmidhuber, J. Deep learning in neural networks: An overview. Neural Netw. 2015, 61, 85–117.

36. Wang, A.; Zhang, W.; Wei, X. A review on weed detection using ground-based machine vision and image processing techniques. Comput. Electron. Agric. 2019, 158, 226–240.

37. Yu, J.; Sharpe, S.M.; Schumann, A.W.; Boyd, N.S. Deep learning for image-based weed detection in turfgrass. Eur. J. Agron. 2019, 104, 78–84.

38. Yu, J.; Sharpe, S.M.; Schumann, A.W.; Boyd, N.S. Detection of broadleaf weeds growing in turfgrass with convolutional neural networks. Pest Manag. Sci. 2019, 75, 2211–2218.

39. Xie, S.; Hu, C.; Bagavathiannan, M.; Song, D. Toward Robotic Weed Control: Detection of Nutsedge Weed in Bermudagrass Turf Using Inaccurate and Insufficient Training Data. arXiv 2021, arXiv:2106.08897.

40. Medrano, R. Feasibility of Real-Time Weed Detection in Turfgrass on an Edge Device. Master’s Thesis, The California State University, Camarillo, CA, USA, 2021.

41. Sharpe, S.M.; Schumann, A.W.; Boyd, N.S. Goosegrass detection in strawberry and tomato using a convolutional neural network. Sci. Rep. 2020, 10, 9548.

42. Jin, X.; Bagavathiannan, M.; McCullough, P.E.; Chen, Y.; Yu, J. A deep learning-based method for classification, detection, and localization of weeds in turfgrass. Pest Manag. Sci. 2022, 78, 4809–4821.

43. Jin, X.; Bagavathiannan, M.; Maity, A.; Chen, Y.; Yu, J. Deep learning for detecting herbicide weed control spectrum in turfgrass. Plant Methods 2022, 18, 94.

44. Yu, J.; Schumann, A.W.; Cao, Z.; Sharpe, S.M.; Boyd, N.S. Weed Detection in Perennial Ryegrass With Deep Learning Convolutional Neural Network. Front. Plant Sci. 2019, 10, 1422.

45. Yu, J.; Schumann, A.W.; Sharpe, S.M.; Li, X.; Boyd, N.S. Detection of grassy weeds in bermudagrass with deep convolutional neural networks. Weed Sci. 2020, 68, 545–552.

46. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. arXiv 2015, arXiv:1506.01497.

47. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

48. Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.-Y.; Berg, A.C. SSD: Single shot multibox detector. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; pp. 21–37.

49. He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2961–2969.

50. Zhuang, J.; Jin, X.; Chen, Y.; Meng, W.; Wang, Y.; Yu, J.; Muthukumar, B. Drought stress impact on the performance of deep convolutional neural networks for weed detection in Bahiagrass. Grass Forage Sci. 2022, early view.

51. Reed, T.V.; Yu, J.; McCullough, P.E. Aminocyclopyrachlor efficacy for controlling Virginia buttonweed (Diodia virginiana) and smooth crabgrass (Digitaria ischaemum) in tall fescue. Weed Technol. 2013, 27, 488–491.

52. Tate, T.M.; McCullough, P.E.; Harrison, M.L.; Chen, Z.; Raymer, P.L. Characterization of mutations conferring inherent resistance to acetyl coenzyme A carboxylase-inhibiting herbicides in turfgrass and grassy weeds. Crop Sci. 2021, 61, 3164–3178.

53. Hasan, A.M.; Sohel, F.; Diepeveen, D.; Laga, H.; Jones, M.G. A survey of deep learning techniques for weed detection from images. Comput. Electron. Agric. 2021, 184, 106067.

54. Quan, L.; Feng, H.; Lv, Y.; Wang, Q.; Zhang, C.; Liu, J.; Yuan, Z. Maize seedling detection under different growth stages and complex field environments based on an improved Faster R-CNN. Biosyst. Eng. 2019, 184, 1–23.

55. Yang, J.; Bagavathiannan, M.; Wang, Y.; Chen, Y.; Yu, J. A comparative evaluation of convolutional neural networks, training image sizes, and deep learning optimizers for weed detection in Alfalfa. Weed Technol. 2022, 36, 512–522.

56. Kerr, R.A.; Zhebentyayeva, T.; Saski, C.; McCarty, L.B. Comprehensive phenotypic characterization and genetic distinction of distinct goosegrass (Eleusine indica L. Gaertn.) ecotypes. J. Plant Sci. Phytopathol. 2019, 3, 95–100.

57. Saidi, N.; Kadir, J.; Hong, L.W. Genetic diversity and morphological variations of goosegrass [Eleusine indica (L.) Gaertn] ecotypes in Malaysia. Weed Turf. Sci. 2016, 5, 144–154.

58. Zhu, X.; Goldberg, A.B. Introduction to semi-supervised learning. Synth. Lect. Artif. Intell. Mach. Learn. 2009, 3, 1–130.

59. Bochkovskiy, A.; Wang, C.-Y.; Liao, H.-Y.M. YOLOv4: Optimal speed and accuracy of object detection. arXiv 2020, arXiv:2004.10934.

60. Wang, C.-Y.; Bochkovskiy, A.; Liao, H.-Y.M. Scaled-YOLOv4: Scaling cross stage partial network. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 13029–13038.

61. Ultralytics. YOLOv5. Available online: https://github.com/ultralytics/yolov5 (accessed on 15 November 2022).

| Turfgrass Species | Turfgrass Conditions | Weeds | Deep Learning Models | Brief Summary | Reference |
|---|---|---|---|---|---|
| Bermudagrass | Dormant | Annual bluegrass alone, or annual bluegrass growing in proximity to various broadleaf weeds | DetectNet, GoogLeNet, and VGGNet | DetectNet exhibited high F1 scores (≥0.99) for detecting annual bluegrass, broadleaf weeds, or annual bluegrass growing together with broadleaf weeds. VGGNet reliably detected various broadleaf weeds (≥0.96). | Yu et al. [37,38] |
| Bermudagrass | Actively growing | Dollarweed, old world diamond-flower, and Florida pusley | DetectNet, GoogLeNet, and VGGNet | VGGNet outperformed GoogLeNet and achieved high F1 scores (≥0.95) with high recall (0.99) for detecting all three weed species growing in bermudagrass turf. | Yu et al. [37] |
| Bermudagrass | Actively growing | Crabgrass species, doveweed, dallisgrass, and tropical signalgrass | AlexNet, GoogLeNet, and VGGNet | VGGNet achieved high F1 scores (1.00) for detecting all four weed species regardless of weed density. | Yu et al. [45] |
| Bermudagrass | Actively growing | A mix of yellow and purple nutsedge | Mask R-CNN | Mask R-CNN trained with synthetic data (generated with a nutsedge skeleton-based probabilistic map) plus raw data reduced labeling time by 95% compared with Mask R-CNN trained with the raw data. | Xie et al. [39] |
| Bermudagrass | Actively growing | Common dandelion, dallisgrass, purple nutsedge, and white clover | DenseNet, EfficientNetV2, ResNet, RegNet, and VGGNet | Custom software was built to generate grid cell maps on the input images. Used in conjunction with this software, the image classification neural networks effectively detected grid cells containing weeds and discriminated them from cells containing turfgrass only. | Jin et al. [42] |
| Bermudagrass | Actively growing | Crabgrass, dallisgrass, dollarweed, goosegrass, old world diamond-flower, tropical signalgrass, Virginia buttonweed, and white clover | GoogLeNet, MobileNet-v3, ShuffleNet-v2, and VGGNet | The study evaluated the feasibility of using image classification neural networks to detect and discriminate weed species according to their susceptibility to ACCase-inhibiting and synthetic auxin herbicides. ShuffleNet-v2 outperformed GoogLeNet, MobileNet-v3, and VGGNet in both overall accuracy and image processing speed. | Jin et al. [43] |
| Bermudagrass | Actively growing | Dandelion | YOLOv4, YOLOv4-tiny, and YOLOv5 | YOLOv5 achieved 97% precision, 91% recall, and 41.2 frames per second for detecting dandelion using DeepStream on an NVIDIA Jetson Nano 4GB. | Medrano [40] |
| Bahiagrass | Drought-stressed or actively growing | Florida pusley | YOLOv3, Faster R-CNN, VFNet, AlexNet, GoogLeNet, and VGGNet | The object detection neural networks (YOLOv3, Faster R-CNN, and VFNet) did not effectively detect Florida pusley growing in drought-stressed or unstressed bahiagrass, whereas the image classification neural networks (AlexNet, GoogLeNet, and VGGNet) did. | Zhuang et al. [50] |
| Perennial ryegrass | Actively growing | Dandelion, ground ivy, and spotted spurge | AlexNet, DetectNet, GoogLeNet, and VGGNet | DetectNet exhibited high F1 scores (≥0.98) for detecting dandelion. VGGNet achieved high F1 scores (≥0.92) with high recall (≥0.99) for detecting all three weed species. | Yu et al. [44] |
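Most studies in the table above report performance as precision, recall, and their harmonic mean, the F1 score, and Medrano [40] additionally reports throughput in frames per second. As a minimal sketch (the function names are illustrative and not taken from any cited study), the helpers below compute these quantities. Applying the F1 formula to Medrano's reported 97% precision and 91% recall gives an implied F1 of about 0.94; this is derived arithmetic only, not a figure reported in the review.

```python
from typing import Tuple


def f1_from(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall; 0.0 when both are zero."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def precision_recall_f1(tp: int, fp: int, fn: int) -> Tuple[float, float, float]:
    """Return (precision, recall, F1) from true-positive, false-positive
    and false-negative detection counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall, f1_from(precision, recall)


def ms_per_frame(fps: float) -> float:
    """Convert throughput in frames per second to average latency in milliseconds."""
    return 1000.0 / fps


if __name__ == "__main__":
    # Implied F1 for the YOLOv5 dandelion detector (precision 0.97, recall 0.91).
    print(round(f1_from(0.97, 0.91), 3))  # 0.939
    # Average time budget per frame at 41.2 frames per second.
    print(round(ms_per_frame(41.2), 1))  # 24.3
```

Because F1 is a harmonic mean, it is pulled toward the weaker of the two metrics, which is why several rows in the table report recall separately alongside F1.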
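Jin et al. [42] pair image classification networks with custom software that overlays a grid cell map on each input image, so a classifier's per-cell decision also serves as coarse weed localization. The review does not describe that software's internals, so the following is only a minimal sketch under stated assumptions: the image is split into a fixed rows x cols grid, and a stand-in `classify` callable (hypothetical, standing in for a trained CNN applied to the cropped cell) labels each cell "weed" or "turf". The names `grid_cells` and `weed_cells` are likewise hypothetical.

```python
from typing import Callable, List, Tuple

# (x0, y0, x1, y1) in pixel coordinates; x1 and y1 are exclusive.
Box = Tuple[int, int, int, int]


def grid_cells(width: int, height: int, rows: int, cols: int) -> List[Box]:
    """Split a width x height image into rows x cols cells (row-major order).
    Integer division makes the cells tile the image exactly, even when the
    size is not divisible by the grid dimensions."""
    cells: List[Box] = []
    for r in range(rows):
        y0, y1 = r * height // rows, (r + 1) * height // rows
        for c in range(cols):
            x0, x1 = c * width // cols, (c + 1) * width // cols
            cells.append((x0, y0, x1, y1))
    return cells


def weed_cells(width: int, height: int, rows: int, cols: int,
               classify: Callable[[Box], str]) -> List[Box]:
    """Keep only the cells the (hypothetical) classifier labels 'weed';
    cells labeled 'turf' are discarded."""
    return [box for box in grid_cells(width, height, rows, cols)
            if classify(box) == "weed"]


if __name__ == "__main__":
    # Toy stand-in classifier: pretend weeds occupy the top-left region.
    def toy_classifier(box: Box) -> str:
        return "weed" if box[0] < 640 and box[1] < 540 else "turf"

    hits = weed_cells(1920, 1080, rows=4, cols=6, classify=toy_classifier)
    print(len(hits), hits)  # 4 cells, each 320 x 270 pixels
```

In a real pipeline the classifier would receive the pixel crop for each box rather than the box coordinates; the returned boxes then indicate where a sprayer would need to act.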

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Jin, X.; Liu, T.; Chen, Y.; Yu, J. Deep Learning-Based Weed Detection in Turf: A Review. Agronomy 2022, 12, 3051. https://doi.org/10.3390/agronomy12123051
