Extraction of Winter-Wheat Planting Areas Using a Combination of U-Net and CBAM

Abstract

1. Introduction

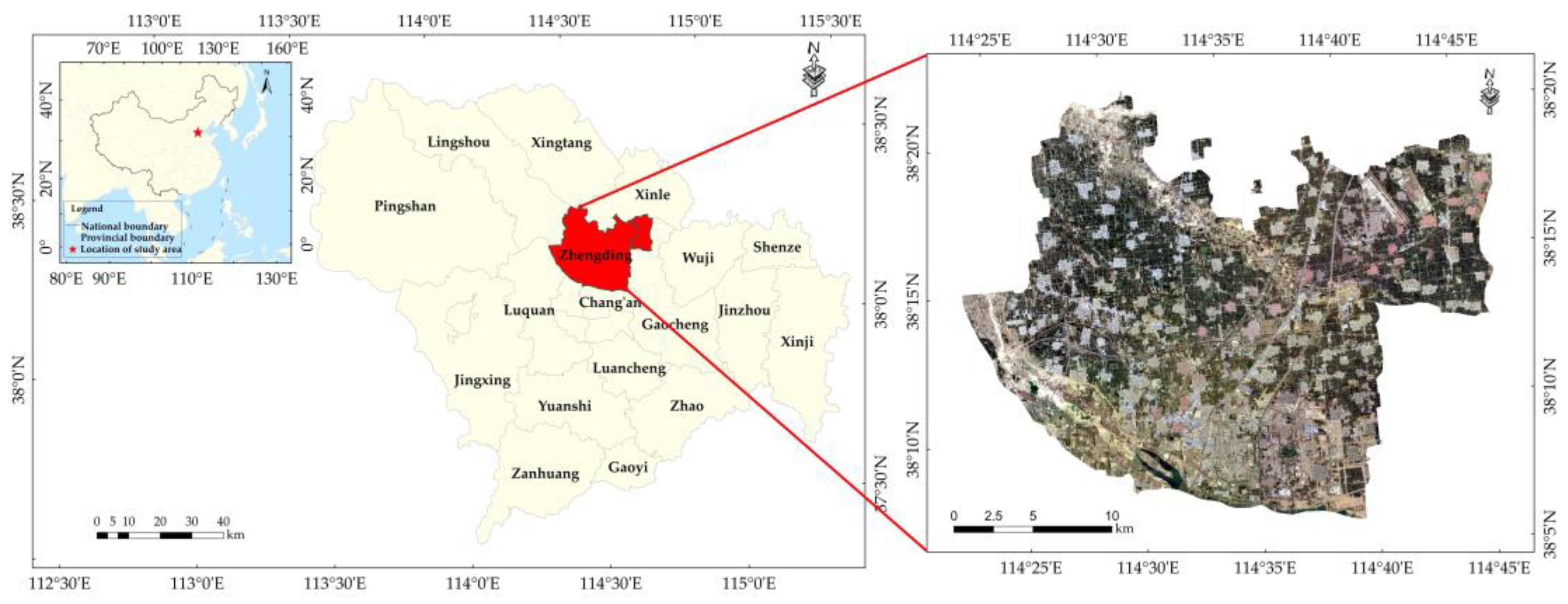

2. Study Area and Data Sources

2.1. Study Area

2.2. Growth Cycles of Winter Wheat in the Study Area

2.3. Remote Sensing Imagery

2.3.1. Sentinel-2 Data

2.3.2. GF-6 Data

3. Methodology

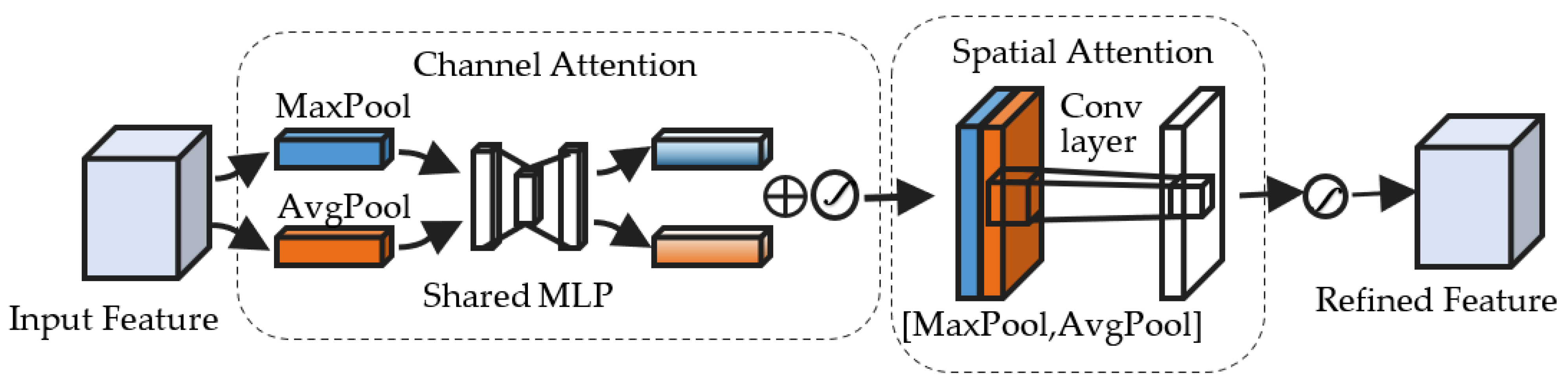

3.1. Convolutional Block Attention Module

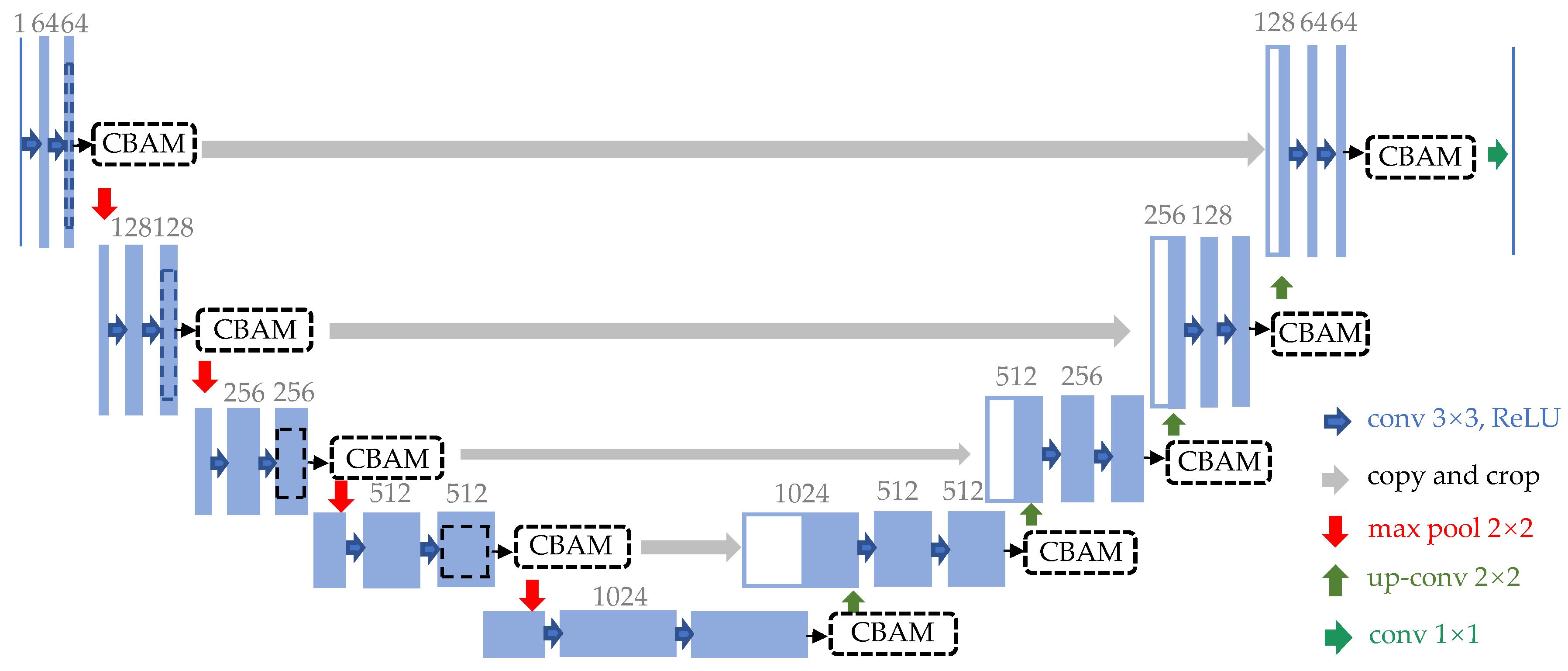
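The module named in this section follows the CBAM design of Woo et al. (ECCV 2018). That design first applies channel attention: a two-layer MLP, shared between the global average-pooled and global max-pooled channel descriptors, followed by a sigmoid. It then applies spatial attention: a 7 × 7 convolution over the stacked channel-wise average and max maps, followed by a sigmoid. Below is a minimal NumPy sketch of that forward pass under stated assumptions. The weights are random placeholders rather than trained values. The reduction ratio r = 4 is chosen only to suit the tiny example (Woo et al. use 16), and bias terms are omitted. Where and how U-Net-CBAM inserts the module into U-Net is not reproduced here.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def channel_attention(feat, w0, w1):
    """Channel attention map Mc, shape (C, 1, 1), for a feature map of shape (C, H, W).

    w0: (C // r, C) and w1: (C, C // r) are the weights of the MLP shared by both
    pooled descriptors (biases omitted in this sketch).
    """
    def shared_mlp(v):
        return w1 @ np.maximum(w0 @ v, 0.0)  # FC -> ReLU -> FC

    avg_desc = feat.mean(axis=(1, 2))  # global average pooling -> (C,)
    max_desc = feat.max(axis=(1, 2))   # global max pooling -> (C,)
    return sigmoid(shared_mlp(avg_desc) + shared_mlp(max_desc))[:, None, None]


def spatial_attention(feat, kernel):
    """Spatial attention map Ms, shape (1, H, W); kernel has shape (2, k, k)."""
    pooled = np.stack([feat.mean(axis=0), feat.max(axis=0)])  # (2, H, W)
    k = kernel.shape[-1]
    pad = k // 2
    padded = np.pad(pooled, ((0, 0), (pad, pad), (pad, pad)))  # zero "same" padding
    _, h, w = pooled.shape
    out = np.empty((h, w))
    for i in range(h):
        for j in range(w):
            # k x k convolution (cross-correlation, as in CNN frameworks), no bias
            out[i, j] = np.sum(padded[:, i:i + k, j:j + k] * kernel)
    return sigmoid(out)[None, :, :]


def cbam(feat, w0, w1, kernel):
    """Sequential CBAM: refine channels first, then spatial positions."""
    refined = feat * channel_attention(feat, w0, w1)
    return refined * spatial_attention(refined, kernel)


# Toy usage with random weights (placeholders, not trained values).
rng = np.random.default_rng(0)
C, H, W, r = 8, 16, 16, 4
feat = rng.standard_normal((C, H, W))
w0 = 0.1 * rng.standard_normal((C // r, C))
w1 = 0.1 * rng.standard_normal((C, C // r))
kernel = 0.1 * rng.standard_normal((2, 7, 7))
out = cbam(feat, w0, w1, kernel)
print(out.shape)  # (8, 16, 16)
```

Both attention maps take values in (0, 1) and are multiplied element-wise with the features. The refined output therefore keeps the input shape, so a block like this can be dropped between existing convolution layers without changing tensor sizes.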

3.2. Structure of the U-Net-CBAM

4. Training of U-Net-CBAM and Evaluation Metrics

4.1. Image Label Datasets

4.2. Model Training

- (1) Set the hyperparameters of the training process and initialize the parameters of the U-Net-CBAM model;

- (2) Feed the images and labels of the training set of the GF-6 image dataset and of the Sentinel-2 image dataset into the U-Net-CBAM model, separately for each dataset;

- (3) Perform forward propagation on the current training data with the U-Net-CBAM model;

- (4) Calculate the loss and back-propagate it through the U-Net-CBAM model;

- (5) Use the Adam optimizer to update the parameters of the U-Net-CBAM model based on the loss, then return to step (2) and repeat the cycle until the loss falls below a predetermined threshold.
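The five training steps above can be sketched as a generic mini-batch loop. This is a minimal NumPy illustration under several assumptions, not the authors' code. A hypothetical per-pixel logistic-regression model stands in for U-Net-CBAM. Binary cross-entropy is assumed as the loss, since the excerpt does not name one. The default arguments mirror the hyperparameter table (batch size 4, learning rate 0.0001, β1 = 0.5, β2 = 0.999, 100 epochs). Step (5) stops on a loss threshold while the table lists a fixed epoch count, so the sketch assumes training ends at whichever comes first. The threshold value is not given in the source and is left as a parameter.

```python
import numpy as np


def adam_step(params, grads, state, lr, beta1, beta2, eps=1e-8):
    """Apply one Adam update (Kingma & Ba) in place to every array in `params`."""
    state["t"] += 1
    t = state["t"]
    for name, g in grads.items():
        state["m"][name] = beta1 * state["m"][name] + (1 - beta1) * g       # 1st moment
        state["v"][name] = beta2 * state["v"][name] + (1 - beta2) * g ** 2  # 2nd moment
        m_hat = state["m"][name] / (1 - beta1 ** t)  # bias-corrected estimates
        v_hat = state["v"][name] / (1 - beta2 ** t)
        params[name] -= lr * m_hat / (np.sqrt(v_hat) + eps)


def predict(params, x):
    """Forward pass of the stand-in model: per-pixel logistic regression."""
    return 1.0 / (1.0 + np.exp(-(x @ params["w"] + params["b"])))


def bce(p, y):
    """Binary cross-entropy, clipped to avoid log(0)."""
    p = np.clip(p, 1e-7, 1 - 1e-7)
    return float(-np.mean(y * np.log(p) + (1 - y) * np.log(1 - p)))


def train(x, y, lr=1e-4, beta1=0.5, beta2=0.999, batch_size=4,
          max_epochs=100, loss_threshold=None, seed=0):
    rng = np.random.default_rng(seed)
    # Step (1): hyperparameters arrive as arguments; initialize the parameters.
    params = {"w": rng.normal(0.0, 0.01, x.shape[1]), "b": np.zeros(1)}
    state = {"t": 0,
             "m": {k: np.zeros_like(v) for k, v in params.items()},
             "v": {k: np.zeros_like(v) for k, v in params.items()}}
    history = []
    for _ in range(max_epochs):
        order = rng.permutation(len(x))
        for start in range(0, len(x), batch_size):
            idx = order[start:start + batch_size]
            xb, yb = x[idx], y[idx]  # step (2): feed a batch
            p = predict(params, xb)  # step (3): forward propagation
            # Step (4): p - y is the BCE gradient w.r.t. the logit; the chain
            # rule turns it into gradients for w and b.
            err = p - yb
            grads = {"w": xb.T @ err / len(idx), "b": np.array([err.mean()])}
            adam_step(params, grads, state, lr, beta1, beta2)  # step (5)
        history.append(bce(predict(params, x), y))
        if loss_threshold is not None and history[-1] < loss_threshold:
            break  # stop once the loss falls below the threshold
    return params, history


# Toy usage with synthetic data (hypothetical): 200 "pixels" with 4 band values.
data_rng = np.random.default_rng(1)
x = data_rng.normal(size=(200, 4))
y = (x[:, 3] > x[:, 2]).astype(float)  # synthetic label, not real wheat data
params, history = train(x, y, lr=0.05, loss_threshold=0.2)
print(f"epochs run: {len(history)}, final loss: {history[-1]:.3f}")
```

The toy run raises the learning rate to 0.05 only because the tiny stand-in model would barely move at 0.0001 within a few epochs. With a real segmentation network, the forward and backward passes would come from a deep-learning framework rather than the hand-written gradient above.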

4.3. Evaluation Metrics

4.4. Comparison Models

5. Results and Discussion

5.1. Identification of Winter Wheat

5.2. Comparison of Identified Results

6. Conclusions

Author Contributions

Funding

Informed Consent Statement

Acknowledgments

Conflicts of Interest


| Growth Stage | Time |
|---|---|
| Seed sowing | Early October |
| Seedling emergence | Mid- to late October |
| Tillering | November |
| Wintering | January to early March of the following year |
| Reviving | Mid-March to early April of the following year |
| Elongation | Mid- to late April of the following year |
| Heading | Early May of the following year |
| Milk-ripening | Mid-May to early June of the following year |
| Maturing | Mid- to late June of the following year |

| Satellite | Band | Band Name | Spectral Range (μm) | Spatial Resolution (m) |
|---|---|---|---|---|
| Sentinel-2A | B1 | Coastal Aerosol | 0.433–0.453 | 60 |
| | B2 | Blue | 0.458–0.523 | 10 |
| | B3 | Green | 0.543–0.578 | 10 |
| | B4 | Red | 0.65–0.68 | 10 |
| | B5 | Vegetation Red Edge | 0.698–0.713 | 20 |
| | B6 | Vegetation Red Edge | 0.733–0.748 | 20 |
| | B7 | Vegetation Red Edge | 0.773–0.793 | 20 |
| | B8 | NIR | 0.785–0.9 | 10 |
| | B8A | Narrow NIR | 0.855–0.875 | 20 |
| | B9 | Water Vapor | 0.935–0.955 | 60 |
| | B10 | SWIR-Cirrus | 1.36–1.39 | 60 |
| | B11 | SWIR | 1.565–1.655 | 20 |
| | B12 | SWIR | 2.1–2.28 | 20 |
| GF-6 | P | Panchromatic | 0.45–0.90 | 2 |
| | B1 | Blue | 0.45–0.52 | 8 |
| | B2 | Green | 0.52–0.60 | 8 |
| | B3 | Red | 0.63–0.69 | 8 |
| | B4 | NIR | 0.76–0.90 | 8 |

| Hyperparameter | Value |
|---|---|
| Batch size | 4 |
| Learning rate | 0.0001 |
| Beta1 for Adam | 0.5 |
| Beta2 for Adam | 0.999 |
| Epochs | 100 |
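For reference, here is Adam's update rule as given by Kingma and Ba, with the table's settings substituted. Here $g_t$ is the gradient at step $t$ and $\theta$ denotes the model parameters. The table does not list $\epsilon$, so the customary default $10^{-8}$ is an assumption.

$$
\begin{aligned}
m_t &= \beta_1 m_{t-1} + (1-\beta_1)\, g_t, && \beta_1 = 0.5\\
v_t &= \beta_2 v_{t-1} + (1-\beta_2)\, g_t^2, && \beta_2 = 0.999\\
\hat{m}_t &= \frac{m_t}{1-\beta_1^{\,t}}, \qquad \hat{v}_t = \frac{v_t}{1-\beta_2^{\,t}} &&\\
\theta_t &= \theta_{t-1} - \alpha\, \frac{\hat{m}_t}{\sqrt{\hat{v}_t} + \epsilon}, && \alpha = 10^{-4},\ \epsilon = 10^{-8}
\end{aligned}
$$

Treating $1/(1-\beta)$ as the rough averaging horizon of each moving average, $\beta_1 = 0.5$ gives a first-moment memory of about $1/(1-0.5) = 2$ steps. This is much shorter than the roughly 10 steps implied by the default $\beta_1 = 0.9$ suggested by Kingma and Ba, while $\beta_2 = 0.999$ keeps a second-moment horizon of about 1000 steps.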

| Datasets | Approach | Predicted | Winter Wheat | Nonwinter Wheat |
|---|---|---|---|---|
| GF-6 dataset | SegNet | Winter wheat | 0.772 | 0.021 |
| | | Nonwinter wheat | 0.103 | 0.104 |
| | DeepLabV3+ | Winter wheat | 0.759 | 0.033 |
| | | Nonwinter wheat | 0.075 | 0.133 |
| | U-Net | Winter wheat | 0.766 | 0.025 |
| | | Nonwinter wheat | 0.078 | 0.131 |
| | U-Net-CBAM | Winter wheat | 0.761 | 0.033 |
| | | Nonwinter wheat | 0.055 | 0.151 |
| Sentinel-2 dataset | SegNet | Winter wheat | 0.764 | 0.029 |
| | | Nonwinter wheat | 0.062 | 0.145 |
| | DeepLabV3+ | Winter wheat | 0.766 | 0.026 |
| | | Nonwinter wheat | 0.046 | 0.162 |
| | U-Net | Winter wheat | 0.765 | 0.029 |
| | | Nonwinter wheat | 0.048 | 0.158 |
| | U-Net-CBAM | Winter wheat | 0.762 | 0.031 |
| | | Nonwinter wheat | 0.035 | 0.172 |
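Each model's four confusion-matrix entries in the table above sum to 1.000, so they read as shares of all evaluated pixels. The following is a minimal sketch of how per-class precision, recall, F1, IoU and overall accuracy (OA) follow from such a matrix. It assumes rows are predicted classes (per the "Predicted" column) and columns are reference classes. This layout, and the function name, are assumptions for illustration. The excerpt does not state how the averaged scores in the metrics table were computed, so values derived this way are not guaranteed to match that table.

```python
import numpy as np


def segmentation_metrics(cm):
    """Per-class metrics from a square confusion matrix.

    Assumed layout: cm[i, j] = share of pixels predicted as class i whose
    reference (label) class is j; class 0 = winter wheat, 1 = nonwinter wheat.
    """
    cm = np.asarray(cm, dtype=float)
    tp = np.diag(cm)
    predicted_totals = cm.sum(axis=1)  # row sums
    reference_totals = cm.sum(axis=0)  # column sums
    precision = tp / predicted_totals
    recall = tp / reference_totals
    return {
        "precision": precision,
        "recall": recall,
        "f1": 2 * precision * recall / (precision + recall),
        "iou": tp / (predicted_totals + reference_totals - tp),
        "oa": tp.sum() / cm.sum(),
    }


# U-Net-CBAM on the Sentinel-2 dataset, values copied from the table above.
m = segmentation_metrics([[0.762, 0.031],
                          [0.035, 0.172]])
print("OA:", round(float(m["oa"]), 3))
print("IoU per class:", np.round(m["iou"], 3), "mean:", round(float(m["iou"].mean()), 3))
```

Because the shares are normalized, OA is simply the sum of the diagonal. Mean IoU is the unweighted average of the two per-class IoU values.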

| Datasets | Approach | Precision | MIoU | Recall | OA | F1 |
|---|---|---|---|---|---|---|
| GF-6 dataset | SegNet | 0.740 | 0.667 | 0.873 | 0.883 | 0.782 |
| | DeepLabV3+ | 0.806 | 0.725 | 0.865 | 0.899 | 0.831 |
| | U-Net | 0.807 | 0.735 | 0.884 | 0.905 | 0.837 |
| | U-Net-CBAM | 0.849 | 0.771 | 0.882 | 0.916 | 0.864 |
| Sentinel-2 dataset | SegNet | 0.838 | 0.766 | 0.889 | 0.915 | 0.860 |
| | DeepLabV3+ | 0.881 | 0.815 | 0.910 | 0.934 | 0.894 |
| | U-Net | 0.871 | 0.802 | 0.903 | 0.929 | 0.886 |
| | U-Net-CBAM | 0.900 | 0.831 | 0.907 | 0.939 | 0.905 |
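To make the comparison in the metrics table concrete, the short script below computes, for each dataset and metric, the margin between U-Net-CBAM and the best of the three comparison models. The scores are transcribed directly from the table. The helper name is illustrative only.

```python
# Scores transcribed from the metrics table: (Precision, MIoU, Recall, OA, F1).
METRICS = ("Precision", "MIoU", "Recall", "OA", "F1")
TABLE = {
    "GF-6": {
        "SegNet": (0.740, 0.667, 0.873, 0.883, 0.782),
        "DeepLabV3+": (0.806, 0.725, 0.865, 0.899, 0.831),
        "U-Net": (0.807, 0.735, 0.884, 0.905, 0.837),
        "U-Net-CBAM": (0.849, 0.771, 0.882, 0.916, 0.864),
    },
    "Sentinel-2": {
        "SegNet": (0.838, 0.766, 0.889, 0.915, 0.860),
        "DeepLabV3+": (0.881, 0.815, 0.910, 0.934, 0.894),
        "U-Net": (0.871, 0.802, 0.903, 0.929, 0.886),
        "U-Net-CBAM": (0.900, 0.831, 0.907, 0.939, 0.905),
    },
}


def gain_over_best_baseline(table, proposed="U-Net-CBAM"):
    """Difference between `proposed` and the best other model, per dataset and metric."""
    gains = {}
    for dataset, rows in table.items():
        baselines = [scores for name, scores in rows.items() if name != proposed]
        gains[dataset] = {
            metric: round(rows[proposed][i] - max(s[i] for s in baselines), 3)
            for i, metric in enumerate(METRICS)
        }
    return gains


for dataset, gain in gain_over_best_baseline(TABLE).items():
    print(dataset, gain)
```

U-Net-CBAM leads on Precision, MIoU, OA and F1 for both datasets; for example, its MIoU margin is 0.036 on GF-6 and 0.016 on Sentinel-2. The small negative Recall margins reflect that U-Net (GF-6, 0.884) and DeepLabV3+ (Sentinel-2, 0.910) score slightly higher recall than U-Net-CBAM.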

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhao, J.; Wang, J.; Qian, H.; Zhan, Y.; Lei, Y. Extraction of Winter-Wheat Planting Areas Using a Combination of U-Net and CBAM. Agronomy 2022, 12, 2965. https://doi.org/10.3390/agronomy12122965
