Detection Method and Experimental Research of Leafy Vegetable Seedlings Transplanting Based on a Machine Vision

Abstract

1. Introduction

2. Materials and Methods

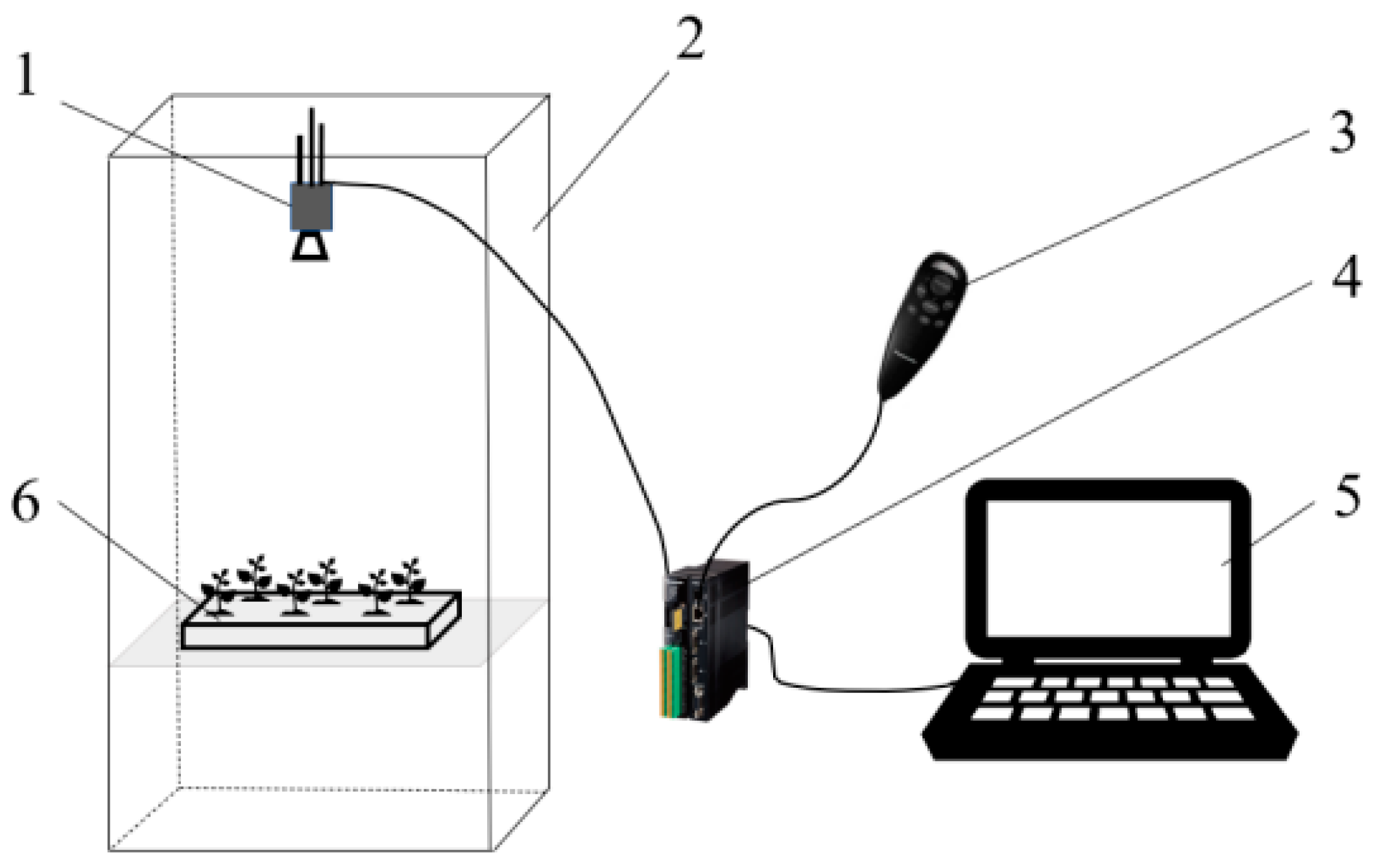

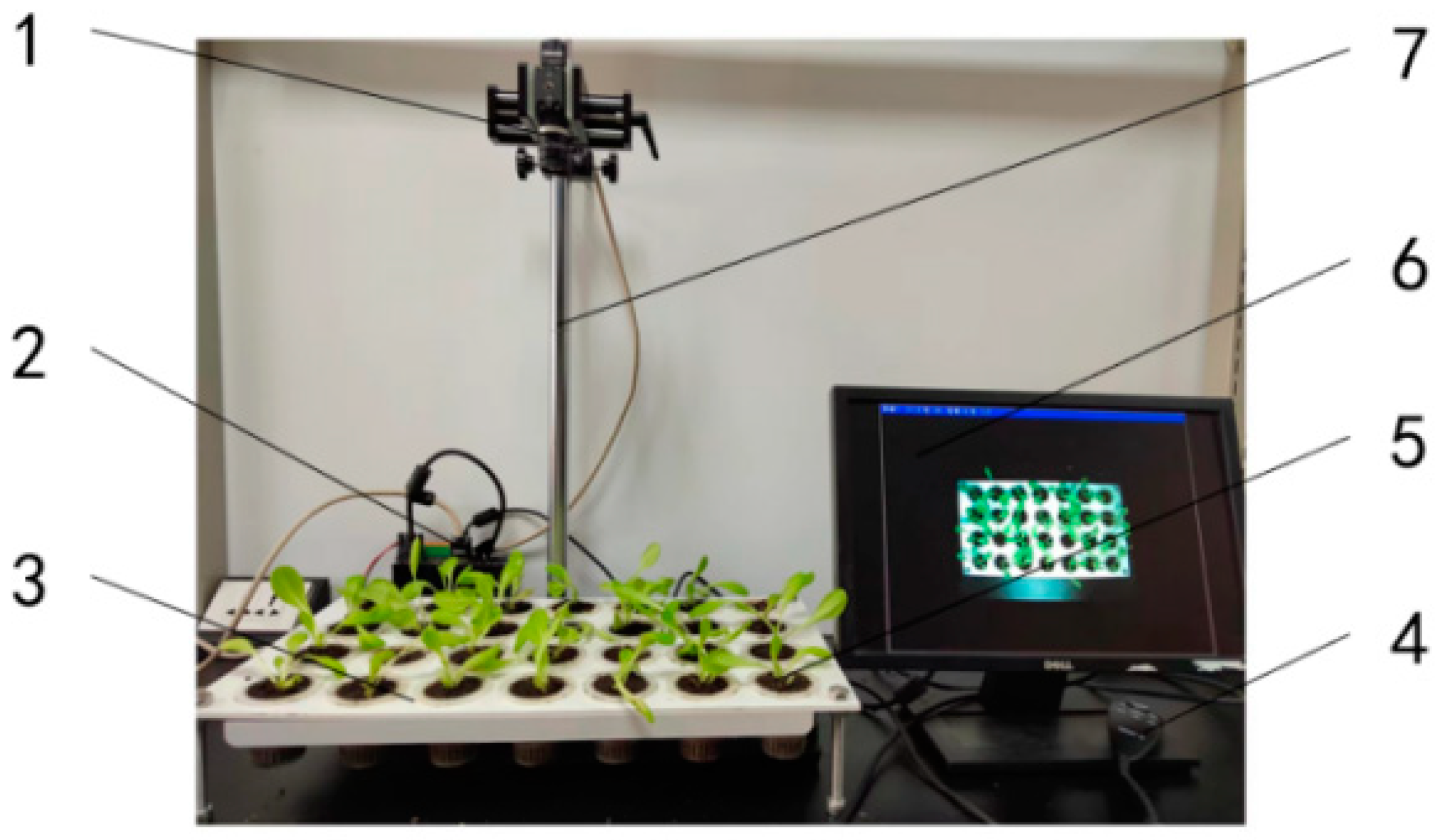

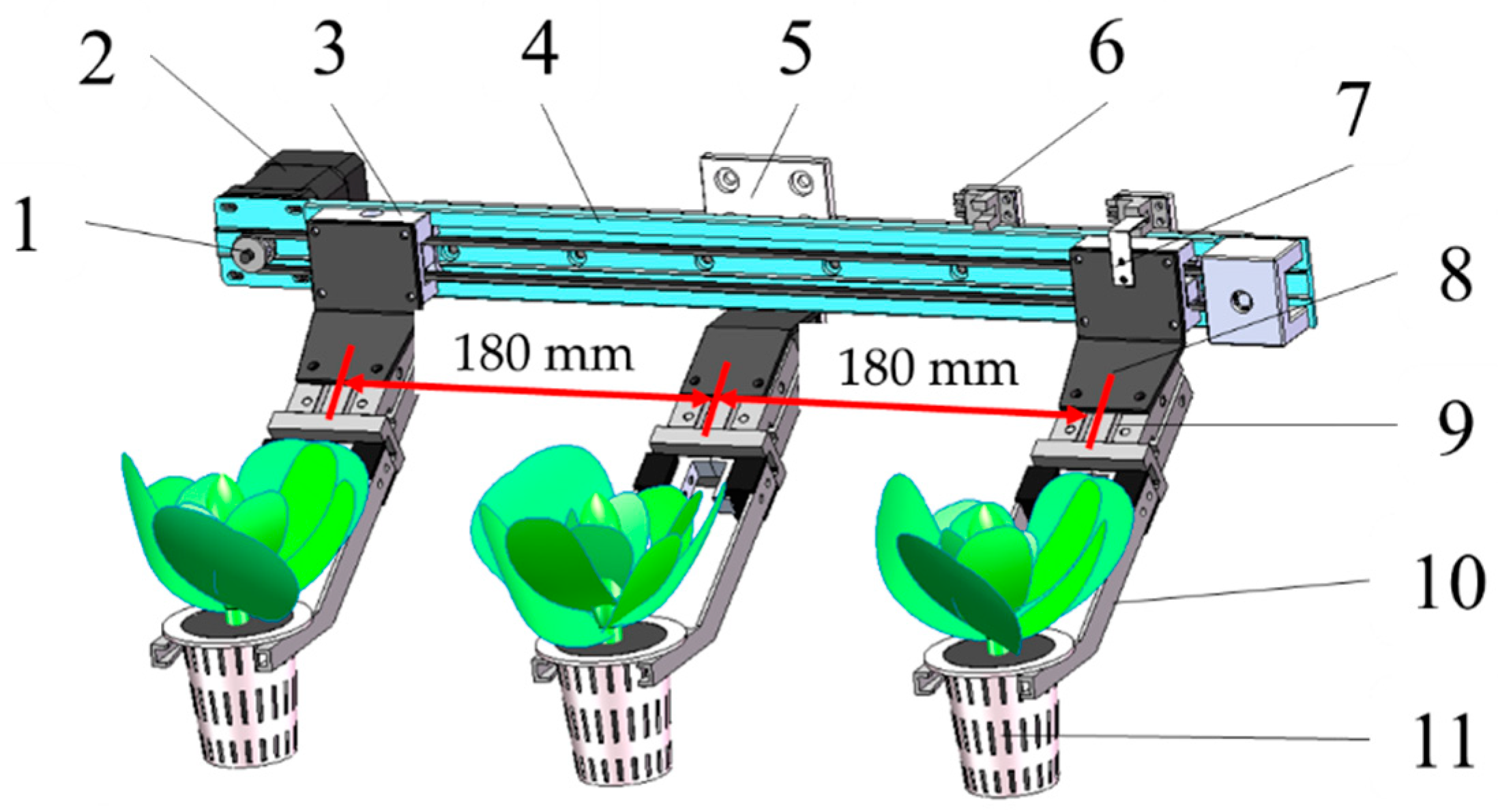

2.1. Hardware System

2.2. Software System

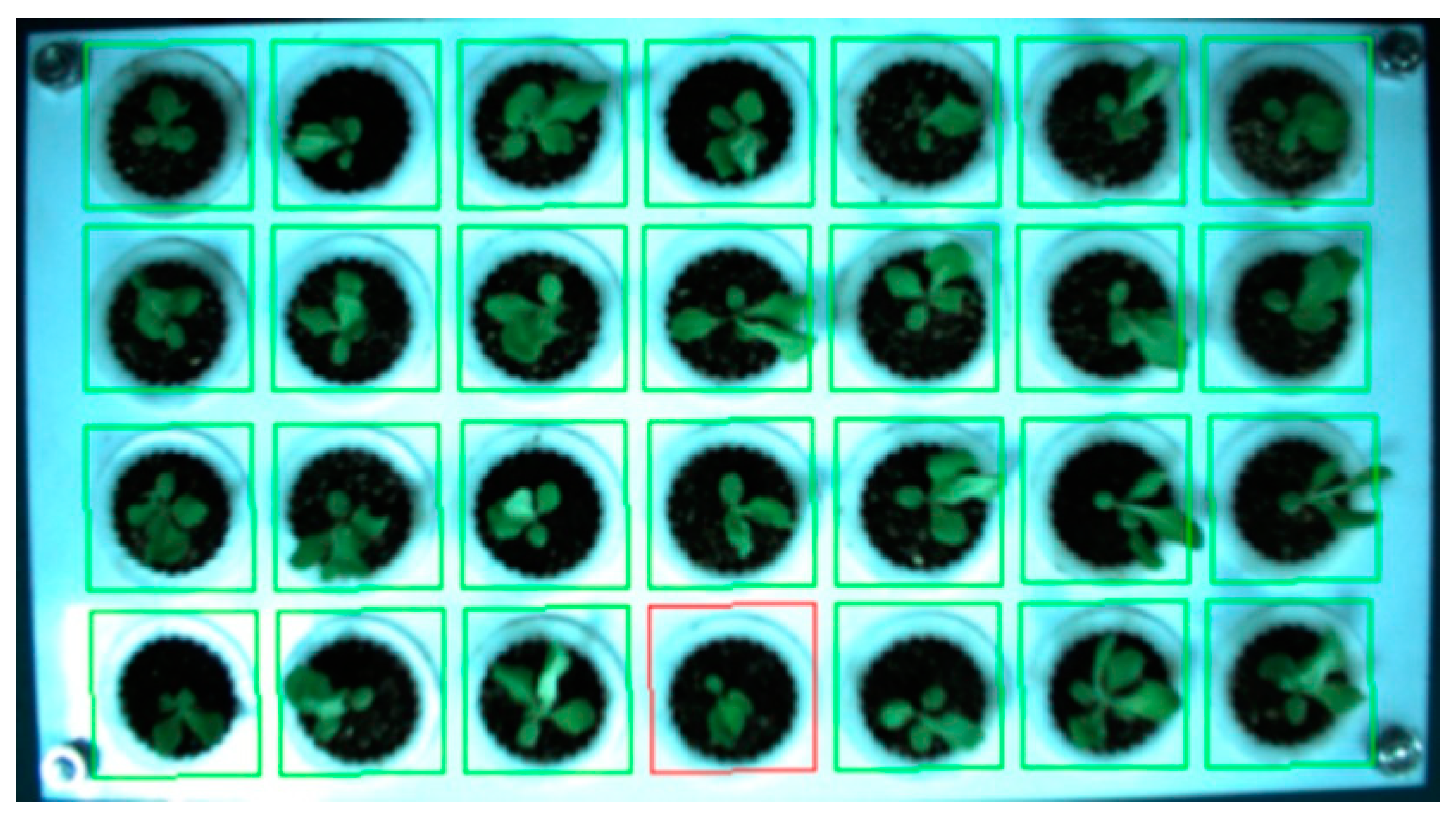

2.3. Image Detection Method

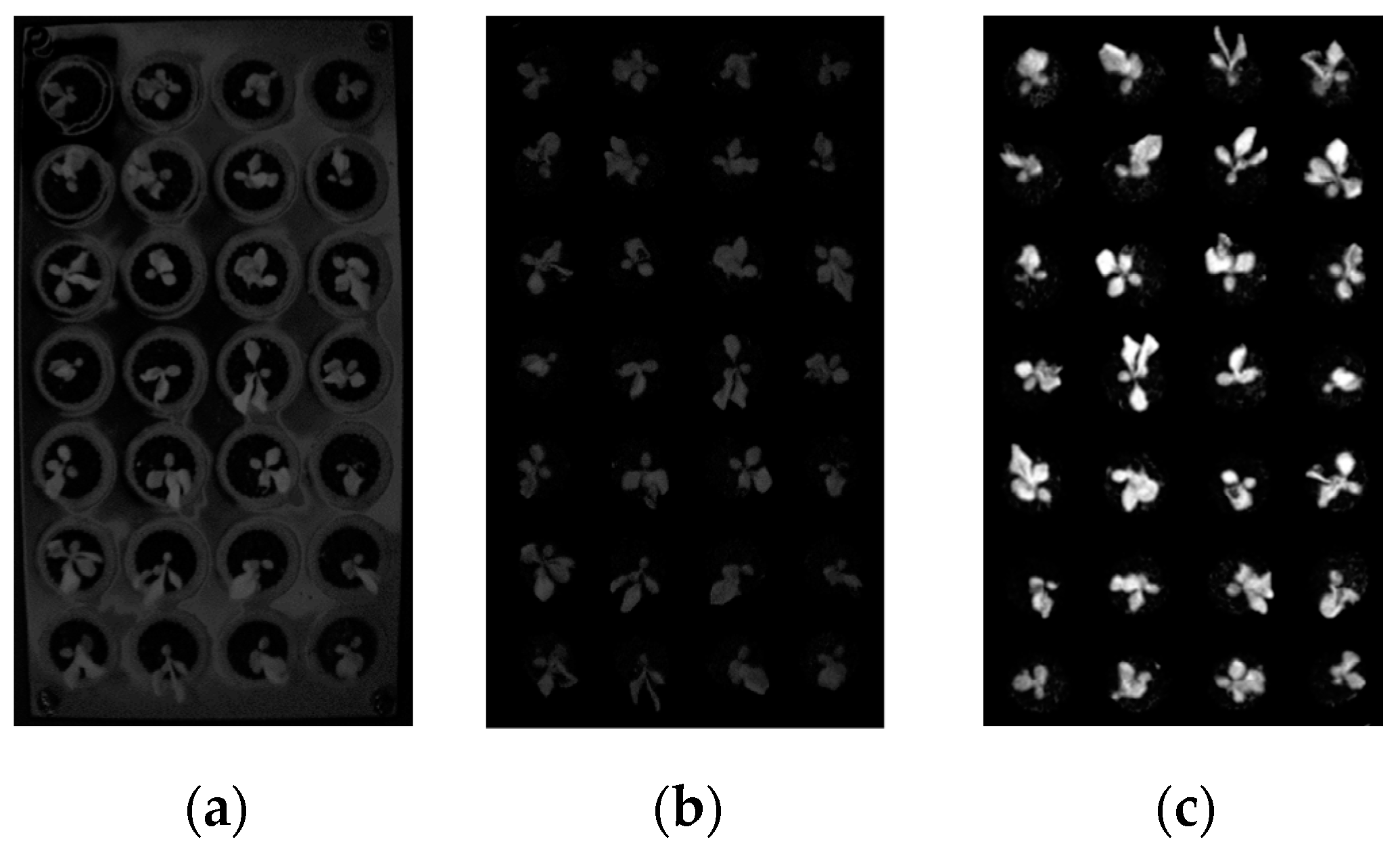

2.3.1. Grayscale Image

2.3.2. Threshold Segmentation

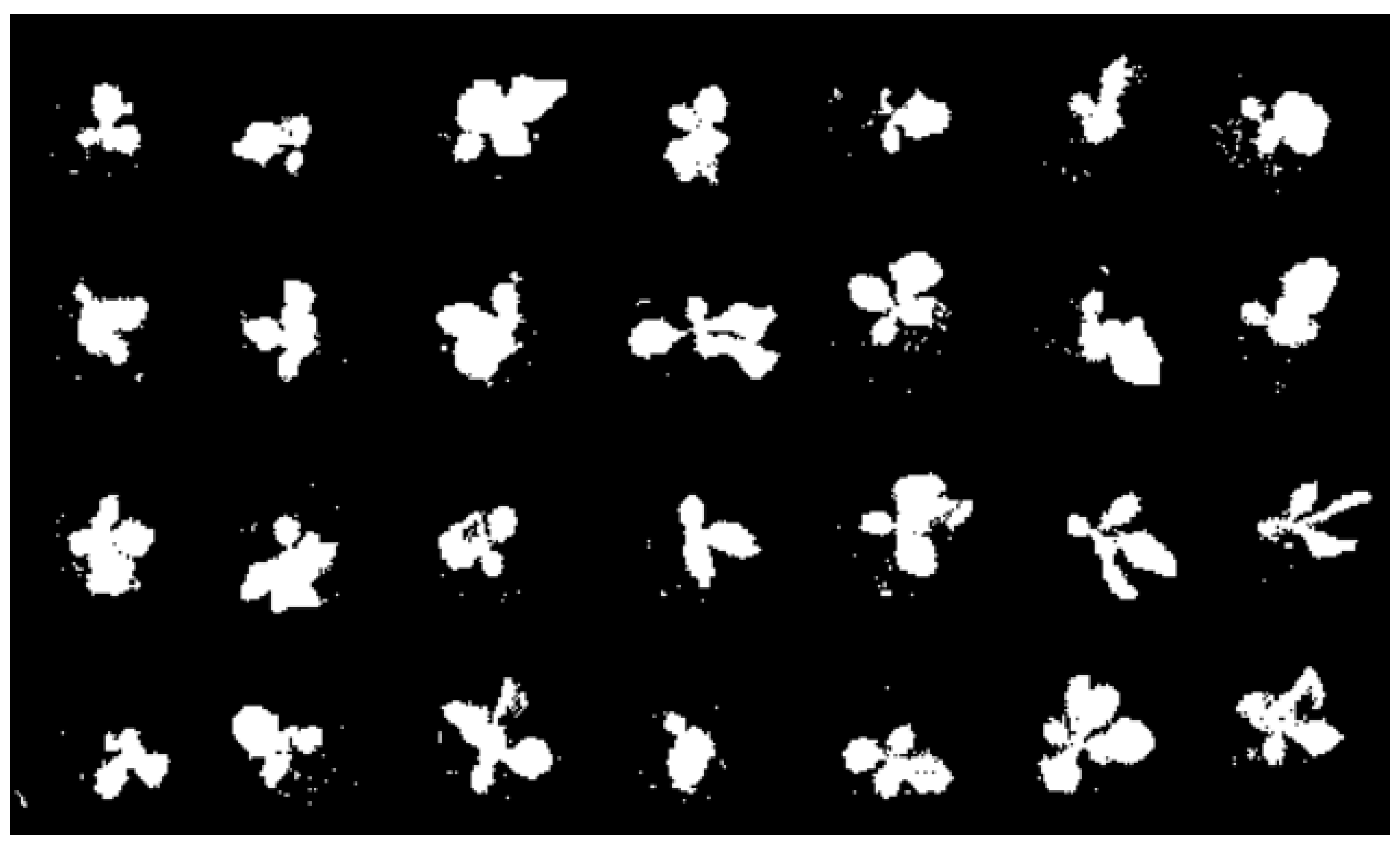

2.3.3. Erosion and Dilation

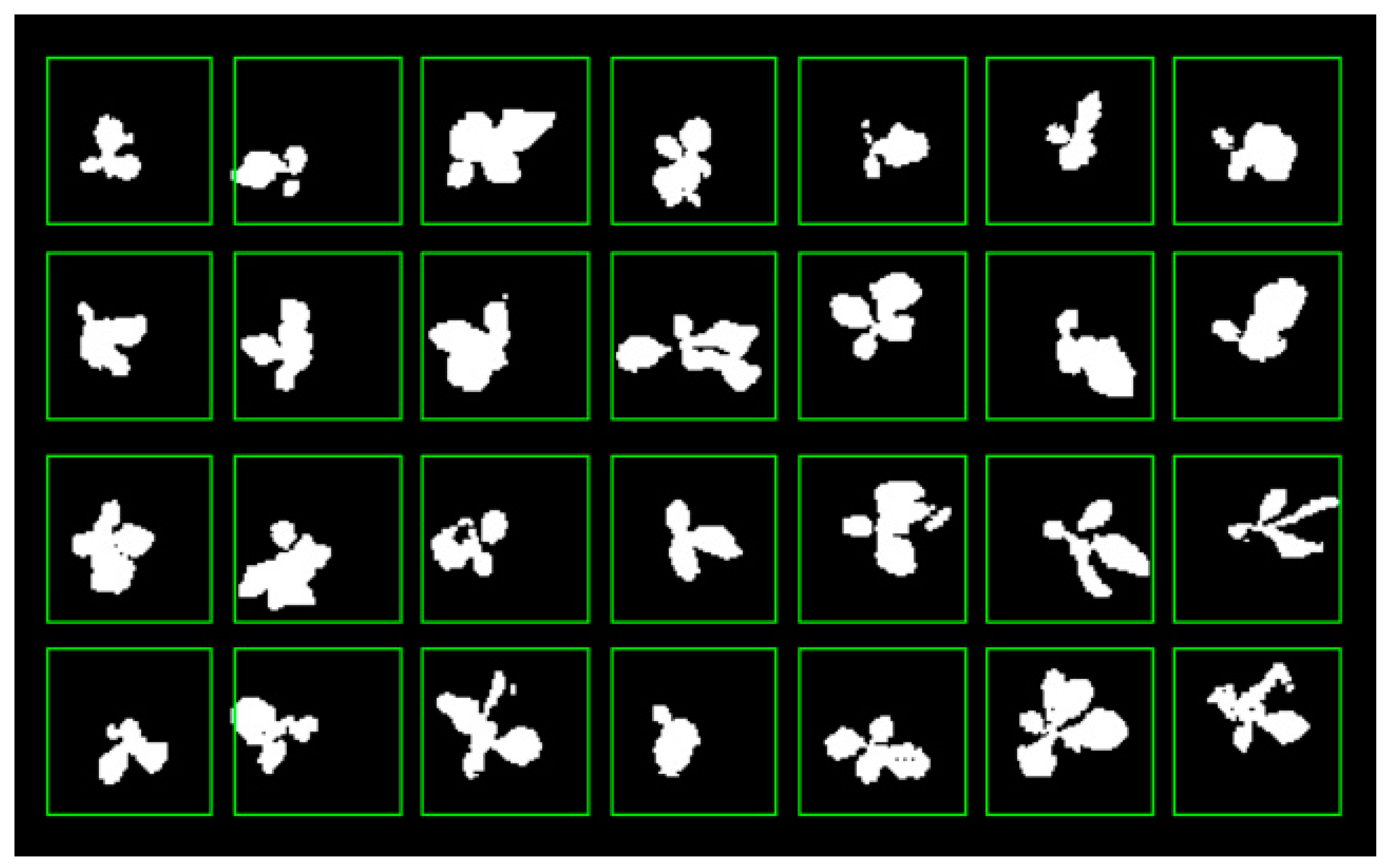

2.3.4. Localization

2.3.5. Judgment and Output

2.4. Test Content

3. Results and Discussion

3.1. Test Results

- An empty cell is judged as an unqualified seedling when a small number of leaves from a neighboring detection area cover the empty cell;

- An unqualified seedling is judged as an empty cell when the seedling is particularly small, or when it sits at the edge of its detection area and most of its leaves extend into the adjacent detection area;

- An empty cell is judged as a qualified seedling when a large number of leaves from an adjacent detection area cover the empty cell;

- A qualified seedling is judged as an empty cell when it sits at the edge of its detection area and most of its leaves extend into the adjacent detection area;

- An unqualified seedling is judged as a qualified seedling when an unqualified seedling in an adjacent detection area covers an unqualified seedling that has no leaves, or when two unqualified seedlings grow into the same detection area;

- A qualified seedling is judged as an unqualified seedling when it sits near the edge of its detection area and its leaves extend into the adjacent detection area.
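The misjudgment cases listed above all come from leaves crossing the boundary between neighboring detection areas. The following minimal sketch illustrates that mechanism under stated assumptions: the seedling tray is a binary leaf mask split into a regular grid of detection areas, and each area is judged by its leaf pixel count. The threshold values `EMPTY_MAX` and `QUALIFIED_MIN` and the function names are hypothetical and are not taken from the paper.

```python
from typing import List

# Hypothetical thresholds (leaf pixels per detection area); not from the paper.
EMPTY_MAX = 50        # at or below this count the cell is treated as empty
QUALIFIED_MIN = 400   # at or above this count the seedling is treated as qualified


def leaf_pixels_per_cell(mask: List[List[int]], rows: int, cols: int) -> List[List[int]]:
    """Count foreground (1) pixels inside each cell of a rows x cols grid."""
    height, width = len(mask), len(mask[0])
    cell_h, cell_w = height // rows, width // cols
    counts = [[0] * cols for _ in range(rows)]
    for y in range(cell_h * rows):
        for x in range(cell_w * cols):
            if mask[y][x]:
                counts[y // cell_h][x // cell_w] += 1
    return counts


def judge(count: int) -> str:
    """Map a leaf pixel count to one of the three classes."""
    if count <= EMPTY_MAX:
        return "empty"
    if count >= QUALIFIED_MIN:
        return "qualified"
    return "unqualified"


# Two detection areas side by side, each 30 x 30 pixels.
# A single seedling with 400 leaf pixels grows at the right edge of the
# left area, so half of its leaves spill into the (actually empty) right area.
mask = [[0] * 60 for _ in range(30)]
for y in range(5, 25):
    for x in range(20, 40):
        mask[y][x] = 1

counts = leaf_pixels_per_cell(mask, rows=1, cols=2)
print(counts)                           # leaf pixels seen in each area
print([judge(c) for c in counts[0]])    # class assigned to each area
```

In this toy case the seedling would be qualified if all 400 of its leaf pixels were counted together, but the boundary splits them, so the left area is judged unqualified and the empty right area is also judged unqualified. These correspond to the last and the first cases in the list above.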

3.2. Discussion

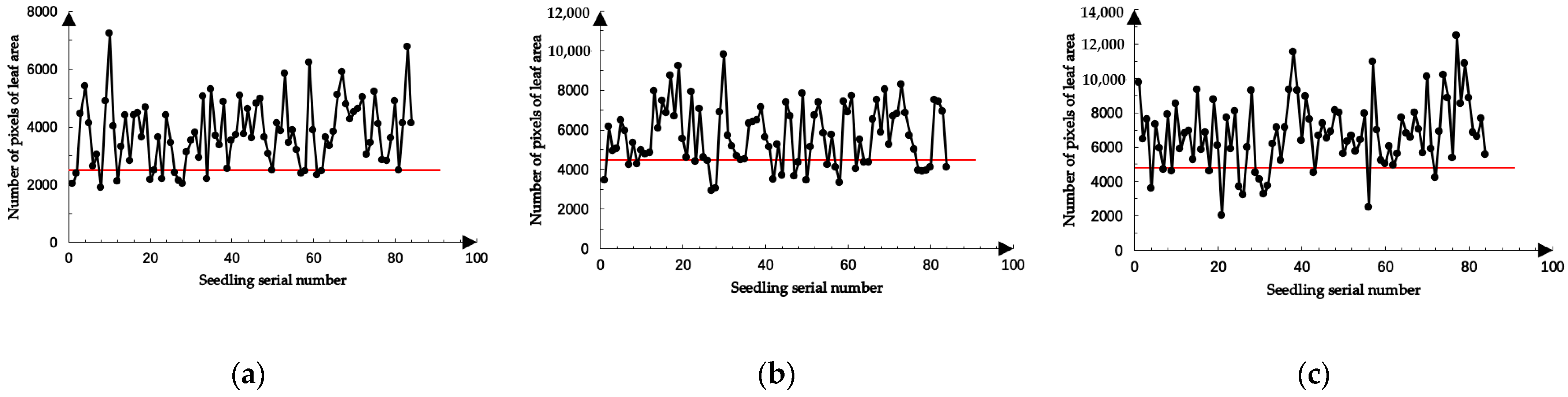

3.2.1. The Effect of Seedling Age on Detection Accuracy

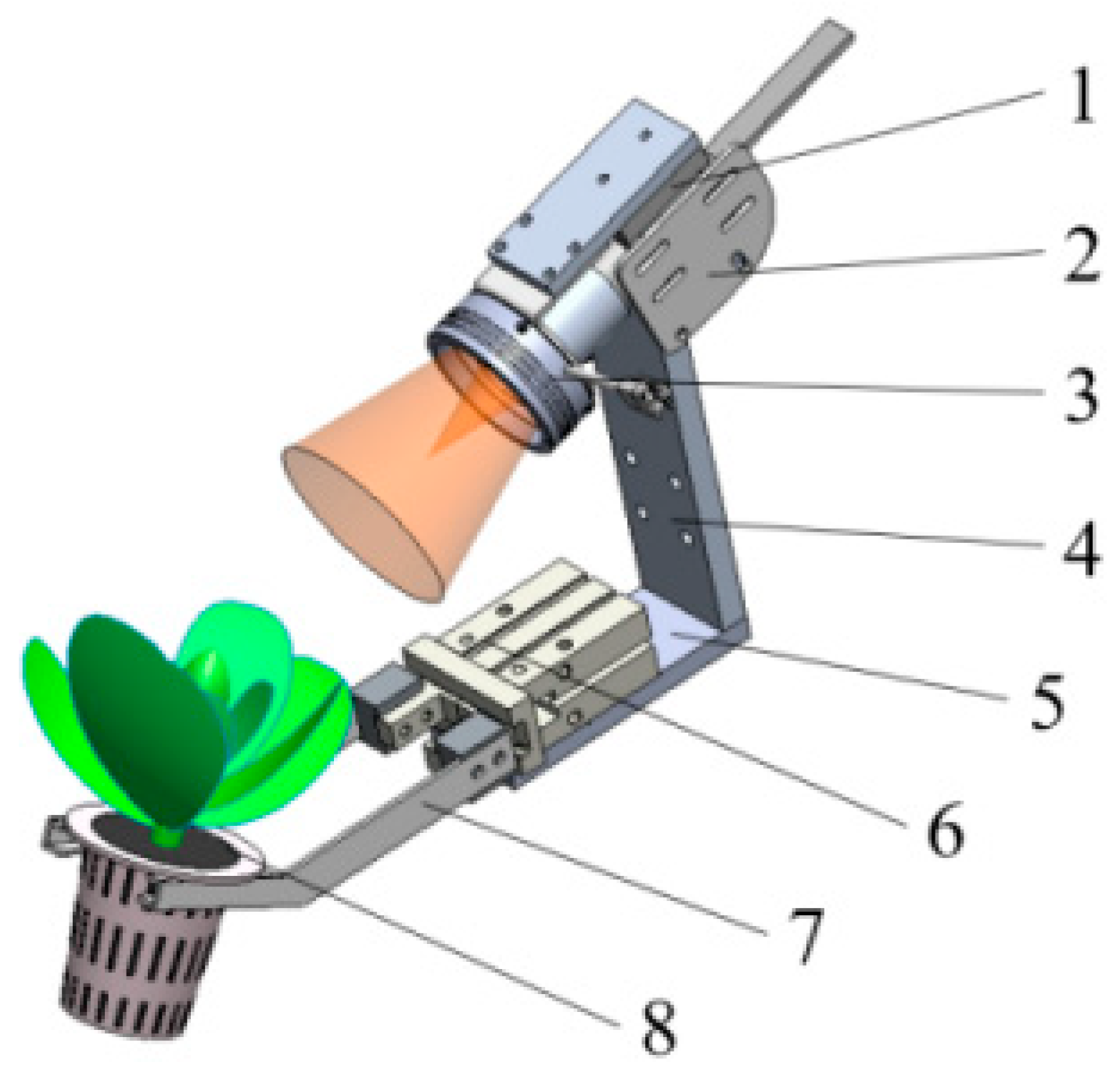

3.2.2. Transplanting Actuator Scheme Design

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Row No. | Column 1 | Column 2 | Column 3 | Column 4 | Column 5 | Column 6 | Column 7 |
|---|---|---|---|---|---|---|---|
| 1 | 2019 | 2376 | 4443 | 5396 | 4120 | 2620 | 3043 |
| 2 | 1889 | 4877 | 7218 | 4013 | 2102 | 3312 | 4383 |
| 3 | 2815 | 4408 | 4474 | 3638 | 4667 | 2163 | 2489 |
| 4 | 3644 | 2184 | 4400 | 3450 | 2407 | 2134 | 2032 |
| 5 | 3122 | 3533 | 3806 | 2916 | 5040 | 2199 | 5281 |
| 6 | 3689 | 3350 | 4855 | 2555 | 3525 | 3724 | 5073 |
| 7 | 3741 | 4624 | 3604 | 4817 | 4975 | 3641 | 3049 |
| 8 | 2480 | 4124 | 3851 | 5835 | 3451 | 3872 | 3209 |
| 9 | 2375 | 2470 | 6226 | 3890 | 2335 | 2464 | 3642 |
| 10 | 3341 | 3835 | 5111 | 5903 | 4765 | 4254 | 4503 |
| 11 | 4609 | 5015 | 3025 | 3438 | 5222 | 4096 | 2854 |
| 12 | 2817 | 3606 | 4873 | 2479 | 4131 | 6755 | 4135 |

| Seedling Age (d) | Unqualified Seedlings (Plants) | Unqualified Seedlings Detected (Plants) | Qualified Seedlings (Plants) | Qualified Seedlings Detected (Plants) | Detection Accuracy Rate of Unqualified Seedlings (%) | Detection Accuracy Rate of Qualified Seedlings (%) | Comprehensive Detection Accuracy Rate (%) | Cross-Border Leaves |
|---|---|---|---|---|---|---|---|---|
| 17 | 17 | 17 | 67 | 67 | 100.00 | 100.00 | 100.00 | none |
| 20 | 17 | 17 | 67 | 60 | 100.00 | 89.55 | 91.67 | a few |
| 22 | 8 | 5 | 76 | 67 | 62.50 | 88.16 | 85.71 | many |
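
The three accuracy columns in the table above can be reproduced from the four count columns. The sketch below assumes that each per-class rate is the detected count divided by the actual count, and that the comprehensive rate is the sum of both detected counts divided by the total number of cells (84 in each trial). These formulas are inferred from the table values rather than quoted from the paper, and the names `Trial`, `rate`, and `comprehensive` are illustrative.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Trial:
    age_days: int
    unqualified: int
    unqualified_detected: int
    qualified: int
    qualified_detected: int


def rate(detected: int, actual: int) -> float:
    """Per-class detection accuracy in percent, rounded to two decimals."""
    return round(100.0 * detected / actual, 2)


def comprehensive(t: Trial) -> float:
    """Accuracy over all cells of the trial (assumed definition)."""
    detected = t.unqualified_detected + t.qualified_detected
    total = t.unqualified + t.qualified
    return round(100.0 * detected / total, 2)


# Count columns copied from the table, one entry per seedling age.
TRIALS: List[Trial] = [
    Trial(17, 17, 17, 67, 67),
    Trial(20, 17, 17, 67, 60),
    Trial(22, 8, 5, 76, 67),
]

for t in TRIALS:
    print(
        t.age_days,
        rate(t.unqualified_detected, t.unqualified),
        rate(t.qualified_detected, t.qualified),
        comprehensive(t),
    )
```

Under these assumed definitions the computed values match the table at every seedling age, which supports reading the "detected" columns as counts of correctly identified seedlings.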


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Fu, W.; Gao, J.; Zhao, C.; Jiang, K.; Zheng, W.; Tian, Y. Detection Method and Experimental Research of Leafy Vegetable Seedlings Transplanting Based on a Machine Vision. Agronomy 2022, 12, 2899. https://doi.org/10.3390/agronomy12112899
