1. Introduction

The planting structure of wheat fields reflects the spatial distribution of wheat fields within an area or production unit [1]. Obtaining planting-structure information for wheat fields efficiently and accurately is therefore important for wheat yield estimation, agricultural condition monitoring, and agricultural structure adjustment [2]. In scientific research, however, experts in different fields pursue very different research objectives, wheat experimental fields have complex planting structures, and the many wheat varieties grown in them differ greatly in their traits. As a result, ground object classification methods based on traditional machine learning struggle to meet current needs. With the rapid development of UAV remote sensing technology and deep learning, semantic segmentation of UAV remote sensing images with fully convolutional neural networks is increasingly applied to farmland object classification [3,4,5,6,7]. Such deep learning methods can classify ground objects quickly and accurately in complex wheat planting areas and thus provide technical support for wheat yield estimation, agricultural condition monitoring, and agricultural structure adjustment.

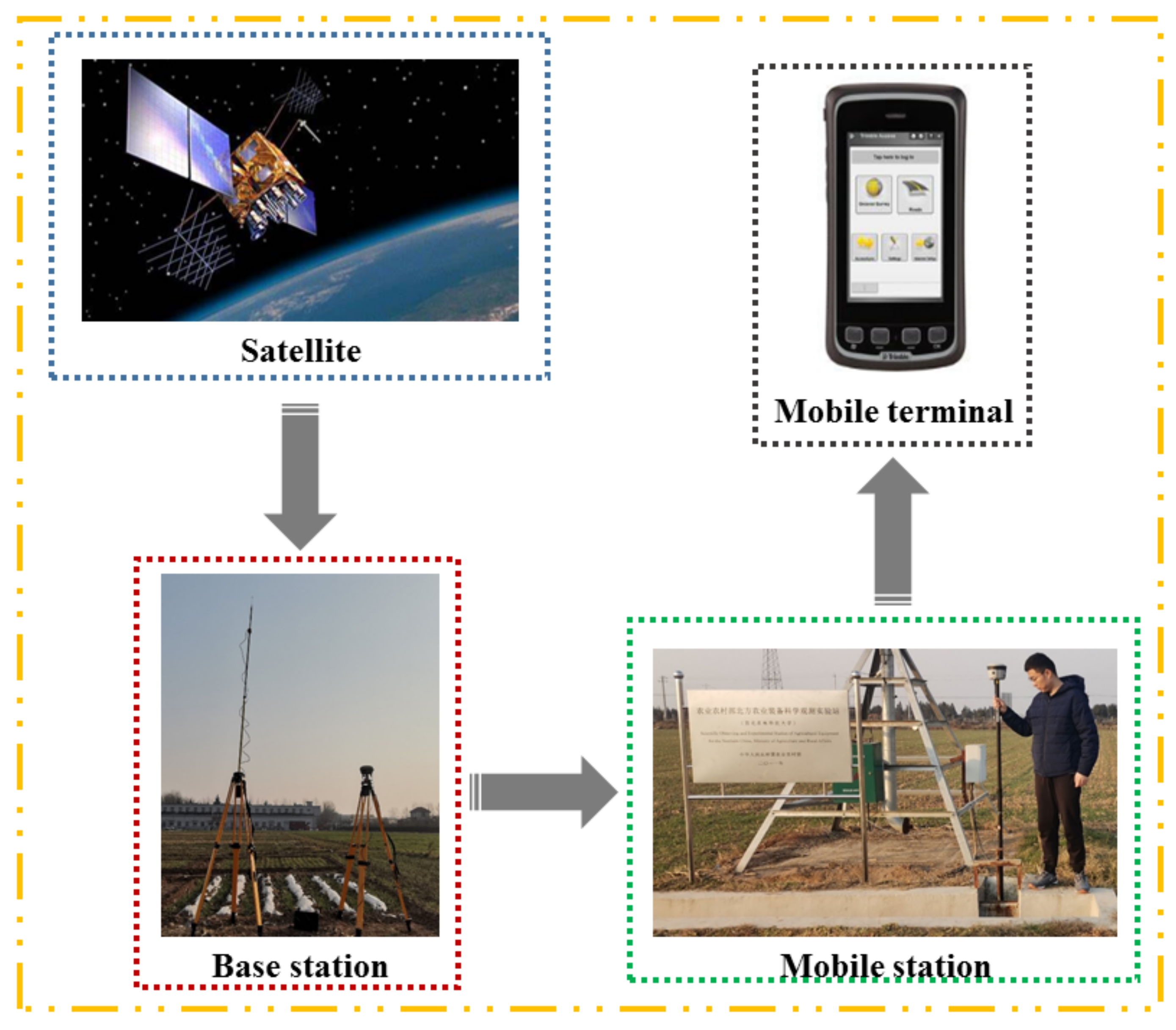

Remote sensing technologies include satellite remote sensing and UAV remote sensing. Satellite remote sensing can acquire images covering large land parcels, whereas UAV remote sensing acquires images of smaller areas at higher spatial resolution [8]. Many studies have applied traditional machine learning methods and deep learning-based semantic segmentation methods to farmland satellite remote sensing images to classify farmland objects, with good results. For example, Al Awar B et al. [9] studied a planting area in the Bekaa Valley of Lebanon, where the main crops are wheat and potato. Using Sentinel-1 and Sentinel-2 satellite images as the dataset, they classified crops with Support Vector Machine (SVM), Random Forest (RF), Classification and Regression Tree (CART), and Back Propagation Network (BPN) models, and the overall accuracy (OA) of all four models exceeded 95%. Zheng Wenhui et al. [10] selected a mulched dryland farming area on the Loess Plateau as the study area and, using data from the Google Earth Engine cloud platform and Landsat-8 reflectance data, classified farmland objects with RF, SVM, Decision Tree (DT), and Minimum Distance (MD) classifiers. With manual feature engineering, the machine learning classifiers reached an OA of 95.5%. Song Tingqiang et al. [11] selected a crop planting area in Gaomi City, Shandong Province, and used high spatial resolution panchromatic and multispectral images from the GF-2 satellite with an improved Multi-temporal Spatial Segmentation Network (MSSN) to classify farmland objects. On the test set, the model achieved a Pixel Accuracy (PA) of 95%, an F1 score of 0.92, and an Intersection over Union (IoU) of 0.93. Xu Lu et al. [12] studied farmland in Guangping County, Hebei Province, and Luobei County, Heilongjiang Province, using high spatial resolution Gaofen-2 (GF-2) imagery. They first segmented the entire image with a Depthwise Separable Convolution U-Net (DSCU-net) and then used an extended Multi-channel Rich Convolutional Feature network (RCF) to further delineate the boundaries of cultivated land plots, achieving an OA of about 90% in both regions. In summary, both traditional machine learning algorithms and semantic segmentation algorithms based on fully convolutional neural networks achieve satisfactory results when classifying agricultural satellite remote sensing images.
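The studies above report their results as overall accuracy (OA), pixel accuracy (PA), F1 score, and intersection over union (IoU), all of which can be derived from a pixel-level confusion matrix. The Python sketch below assumes the common definitions: rows are reference classes, columns are predicted classes, and PA is computed like OA as the share of correctly labeled pixels. The cited studies may define or average these metrics differently, and the function names and toy label maps are hypothetical. The sketch also assumes every class occurs in both the reference and the prediction; otherwise the per-class divisions produce NaN.

```python
import numpy as np

def confusion_matrix(y_true, y_pred, num_classes):
    """Pixel counts: rows are reference classes, columns are predicted classes."""
    y_true = np.asarray(y_true).ravel()
    y_pred = np.asarray(y_pred).ravel()
    # Encode each (reference, predicted) pair as one index, then count occurrences
    idx = num_classes * y_true + y_pred
    return np.bincount(idx, minlength=num_classes ** 2).reshape(num_classes, num_classes)

def overall_accuracy(cm):
    """Share of all pixels whose predicted class matches the reference (OA, i.e. PA)."""
    return np.trace(cm) / cm.sum()

def per_class_iou(cm):
    tp = np.diag(cm).astype(float)
    fp = cm.sum(axis=0) - tp  # pixels predicted as the class but belonging to another class
    fn = cm.sum(axis=1) - tp  # pixels of the class that were predicted as another class
    return tp / (tp + fp + fn)

def per_class_f1(cm):
    tp = np.diag(cm).astype(float)
    precision = tp / cm.sum(axis=0)
    recall = tp / cm.sum(axis=1)
    return 2 * precision * recall / (precision + recall)

# Toy 2x3 reference and predicted label maps with three classes (hypothetical data)
reference = np.array([[0, 0, 1],
                      [1, 2, 2]])
prediction = np.array([[0, 1, 1],
                       [1, 2, 0]])

cm = confusion_matrix(reference, prediction, num_classes=3)
print(cm)
print("OA:", overall_accuracy(cm))
print("IoU per class:", per_class_iou(cm), "mean IoU:", per_class_iou(cm).mean())
print("F1 per class:", per_class_f1(cm))
```

In the toy example four of the six pixels are labeled correctly, so OA is 4/6, while the per-class IoU and F1 values show which classes account for the errors.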

Compared with satellite remote sensing, UAV remote sensing offers high flexibility, short acquisition cycles, less environmental interference, and easy access to small-scale agricultural remote sensing data [13]. In addition, satellite remote sensing generally provides images with meter-level spatial resolution, whereas UAV remote sensing can provide images with centimeter-level spatial resolution. UAV agricultural remote sensing images therefore contain more detailed information, which makes misclassification more likely and poses considerable challenges for agricultural land classification. In such highly detailed images, ground objects and their surroundings are complex and fragmented at high spatial resolution, so the accuracy of traditional classification methods no longer meets the requirements of agricultural applications. Classification methods based on convolutional neural networks, by contrast, can effectively learn image features related to the target categories [14]. Consequently, most current research on high spatial resolution UAV agricultural remote sensing images uses fully convolutional neural networks for ground object classification. For example, Chen Yuqing et al. [15] used a remote sensing image of farmland in Kaifeng City, Henan Province, as a dataset containing farmland and two other types of ground objects, and classified land with an improved Deeplab V3+ model. The model reached a Mean Pixel Accuracy (MPA) of 97.16% and effectively improved the extraction accuracy for farmland edges and small farmland plots. Qinchen Yang et al. [16] used UAV remote sensing images of an agricultural planting area in the Hetao Irrigation District of Inner Mongolia as a dataset containing five types of ground objects: roads, green plants, cultivated land, wasteland, and others. They compared two semantic segmentation algorithms, SegNet and FCN, with a traditional SVM. SegNet and FCN were significantly better than the SVM in both accuracy and speed, with MPAs of 89.62% and 90.6%, respectively. Yang Shuqin et al. [17] used UAV multispectral remote sensing images of the Shahao Canal Irrigation Area in the Hetao Irrigation District, Inner Mongolia Autonomous Region, as a dataset containing four types of ground objects, including sunflower, zucchini, and corn, and classified farmland objects with an improved Deeplab V3+ and an SVM. The improved Deeplab V3+ achieved an MPA of 93.06%, 17.75% higher than the SVM. To sum up, for high spatial resolution UAV agricultural remote sensing images with rich detail, deep learning-based semantic segmentation models classify farmland objects satisfactorily.
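The UAV studies above mostly report Mean Pixel Accuracy (MPA). Under its usual definition, MPA first computes the pixel accuracy of each class (correctly labeled pixels of the class divided by all reference pixels of that class) and then averages over classes, so every class counts equally regardless of its area. The short Python sketch below assumes that definition; the function name and the confusion matrix are hypothetical and do not come from the cited studies.

```python
import numpy as np

def mean_pixel_accuracy(cm):
    """MPA: average over classes of correct pixels / reference pixels of that class.
    Assumes rows are reference classes and columns are predicted classes."""
    cm = np.asarray(cm, dtype=float)
    class_accuracy = np.diag(cm) / cm.sum(axis=1)
    return class_accuracy.mean()

# Hypothetical two-class confusion matrix with a large majority class
cm = np.array([[90, 10],   # majority class: 90 of 100 pixels correct
               [5, 5]])    # minority class: 5 of 10 pixels correct

oa = np.trace(cm) / cm.sum()   # 95 / 110, dominated by the majority class
mpa = mean_pixel_accuracy(cm)  # (0.9 + 0.5) / 2
print(f"OA = {oa:.4f}, MPA = {mpa:.4f}")
```

Here OA is about 0.86 while MPA is 0.70, because MPA exposes the poorly classified minority class that OA largely hides.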

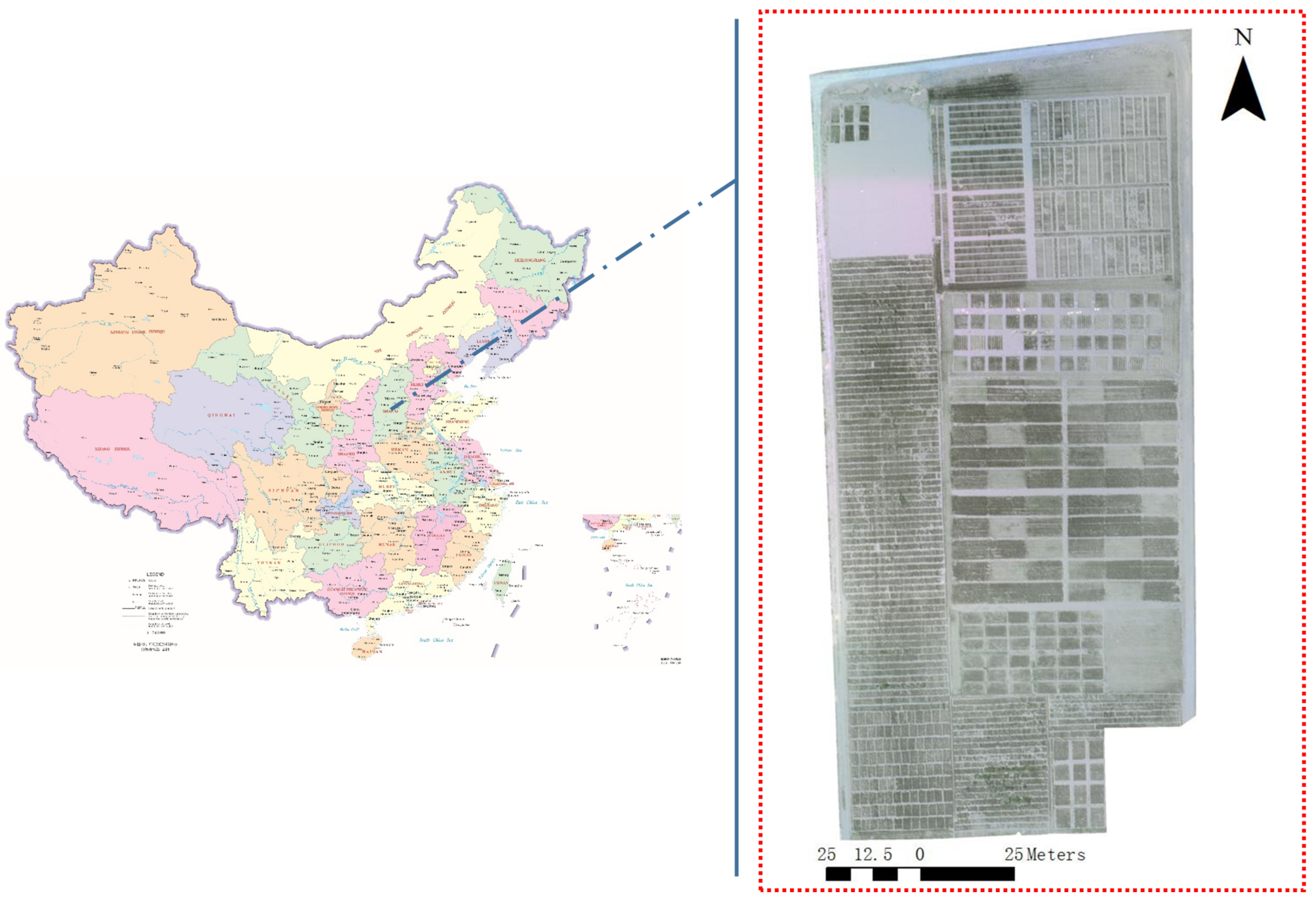

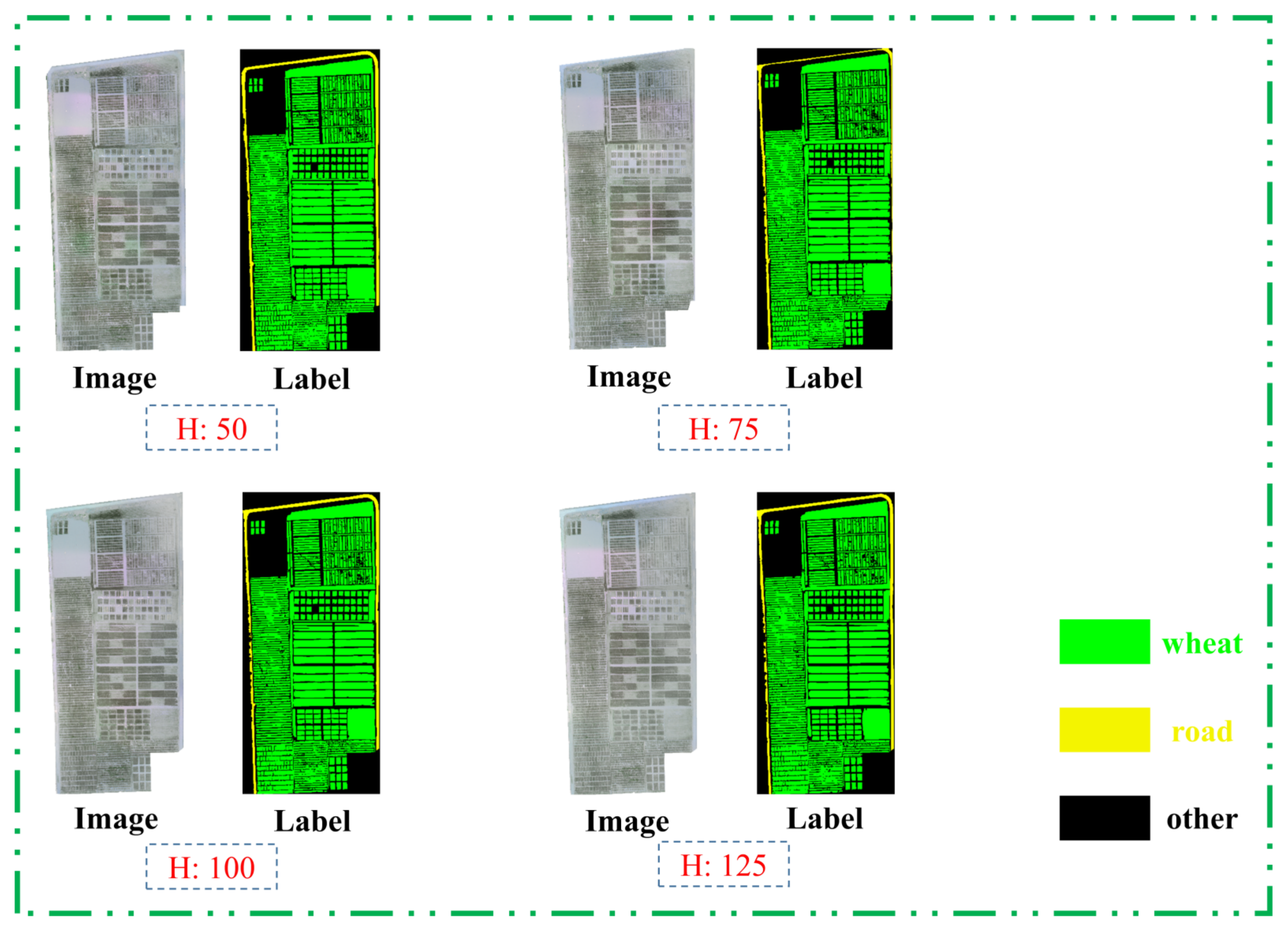

The farmland object classification studies above, whether based on satellite or UAV remote sensing, all distinguished between different types of objects. So far, few studies have addressed the classification of crops of the same type with different traits. As breeding work advances, different varieties of the same crop may be planted in the same area, and some varieties differ greatly in their traits. In high spatial resolution UAV remote sensing images in particular, fields of the same crop whose traits differ greatly are easily misclassified. Based on UAV remote sensing technology and a deep learning classification method with multi-scale feature fusion, this study classified ground objects in complex wheat fields planted with different wheat varieties, some of which differ greatly in their traits.

5. Conclusions

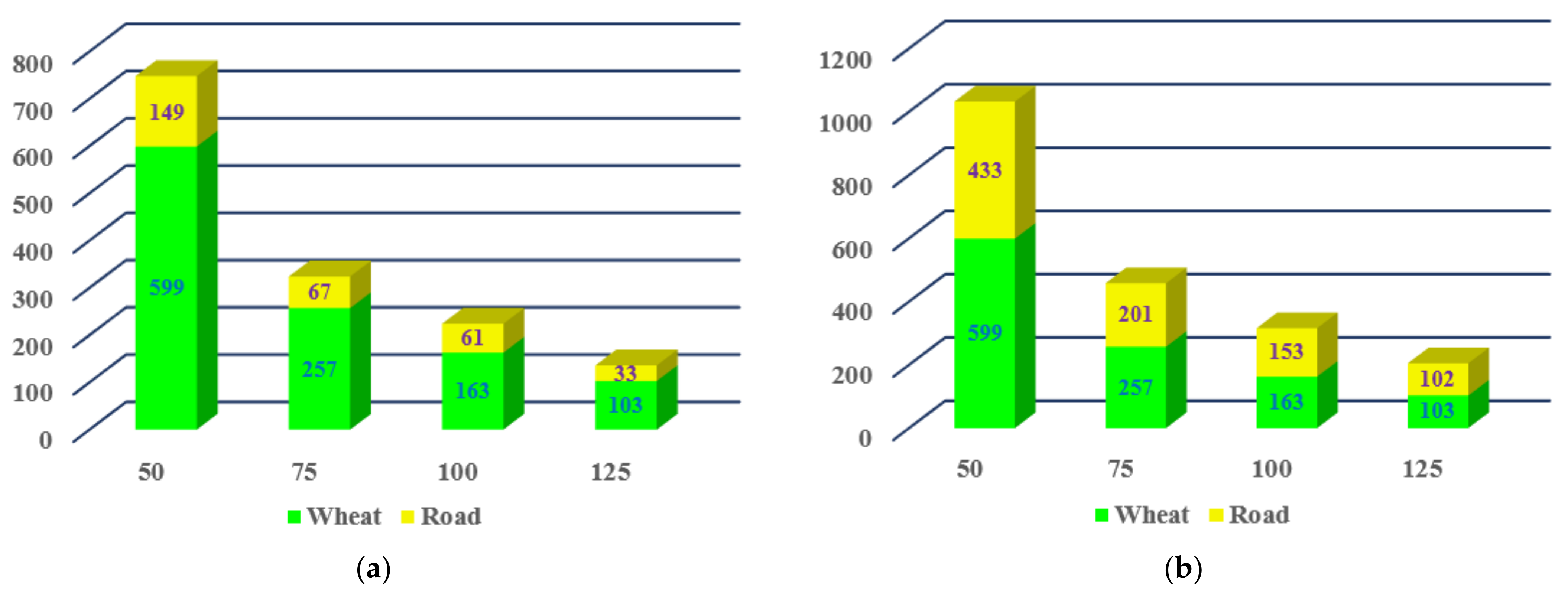

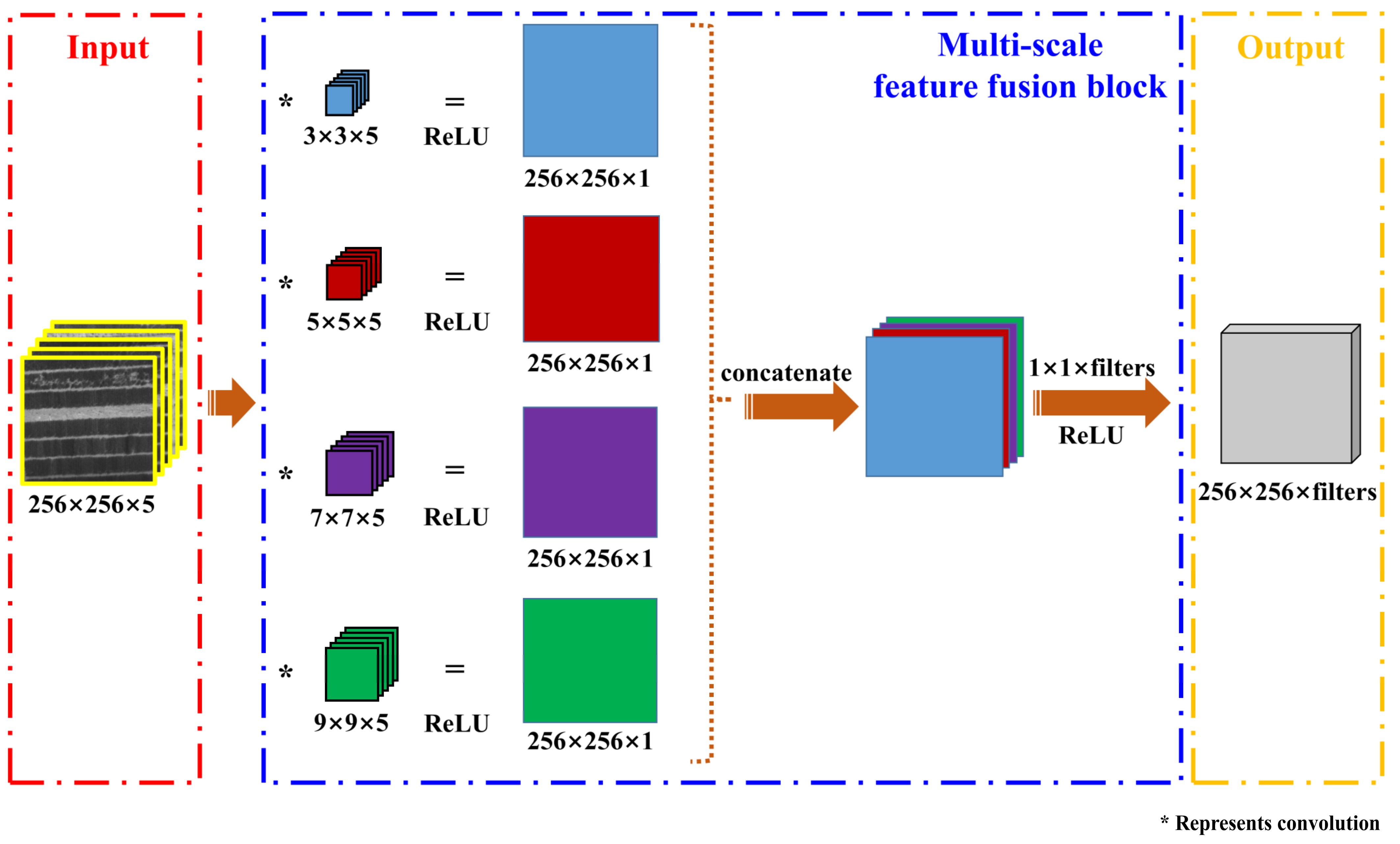

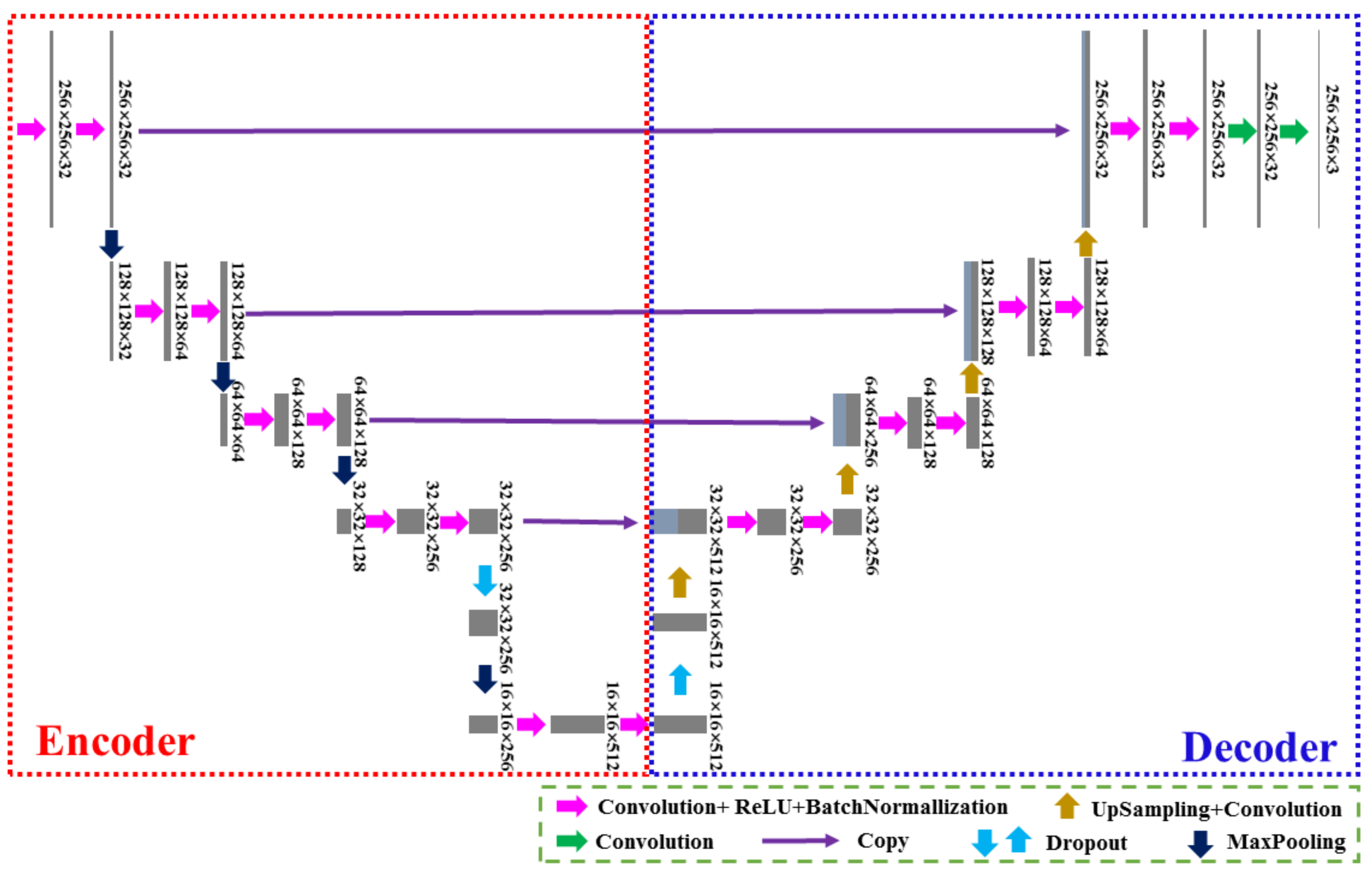

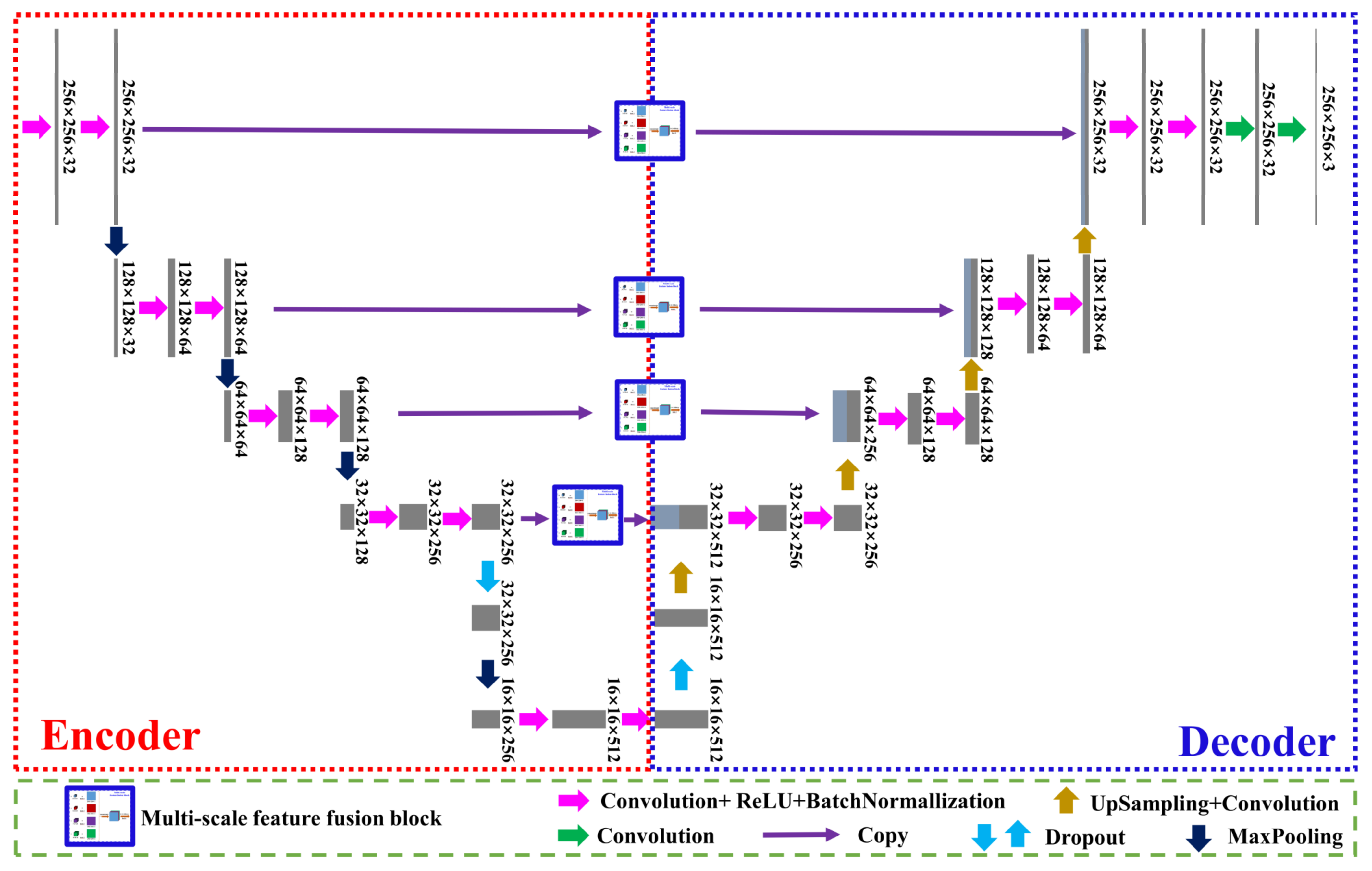

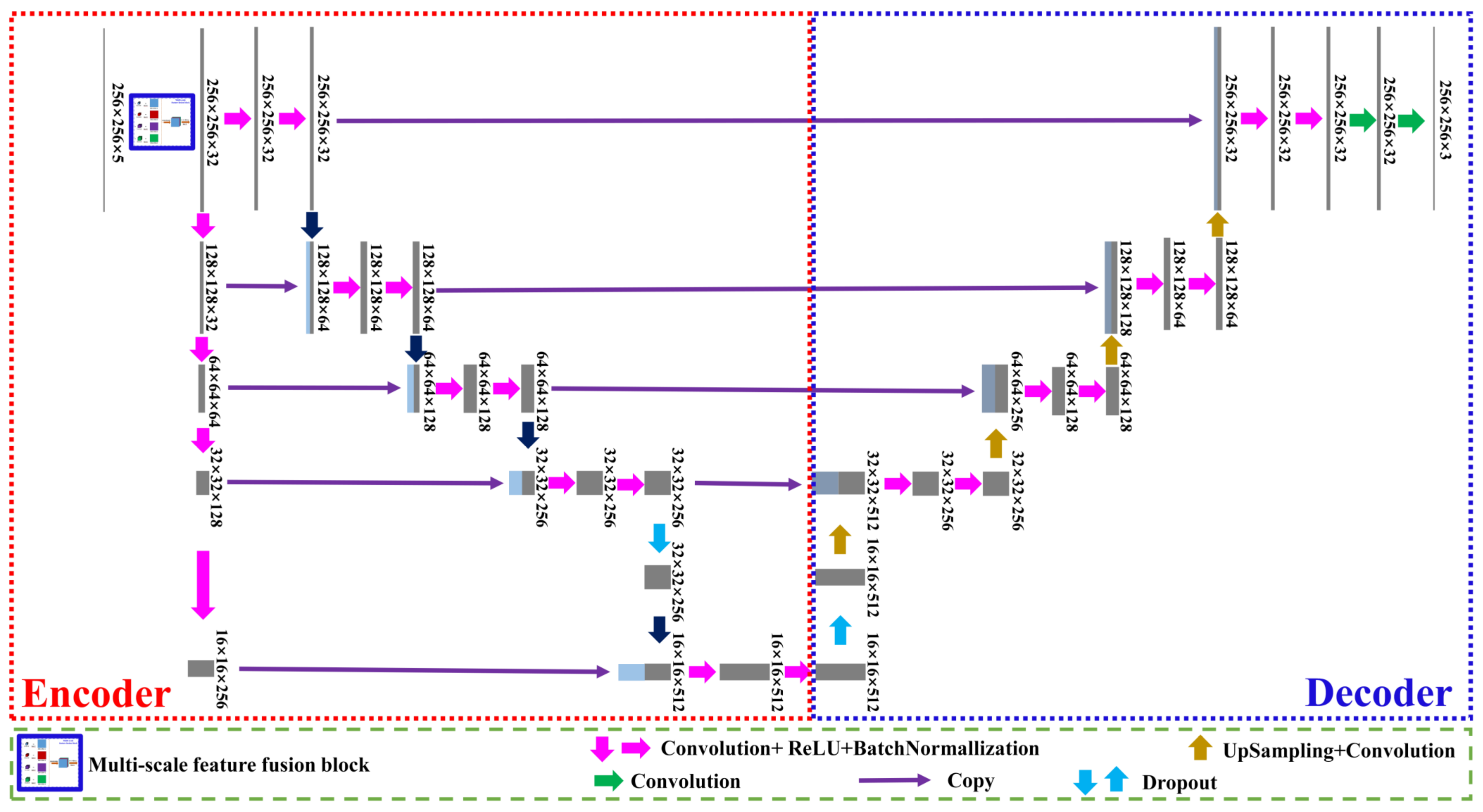

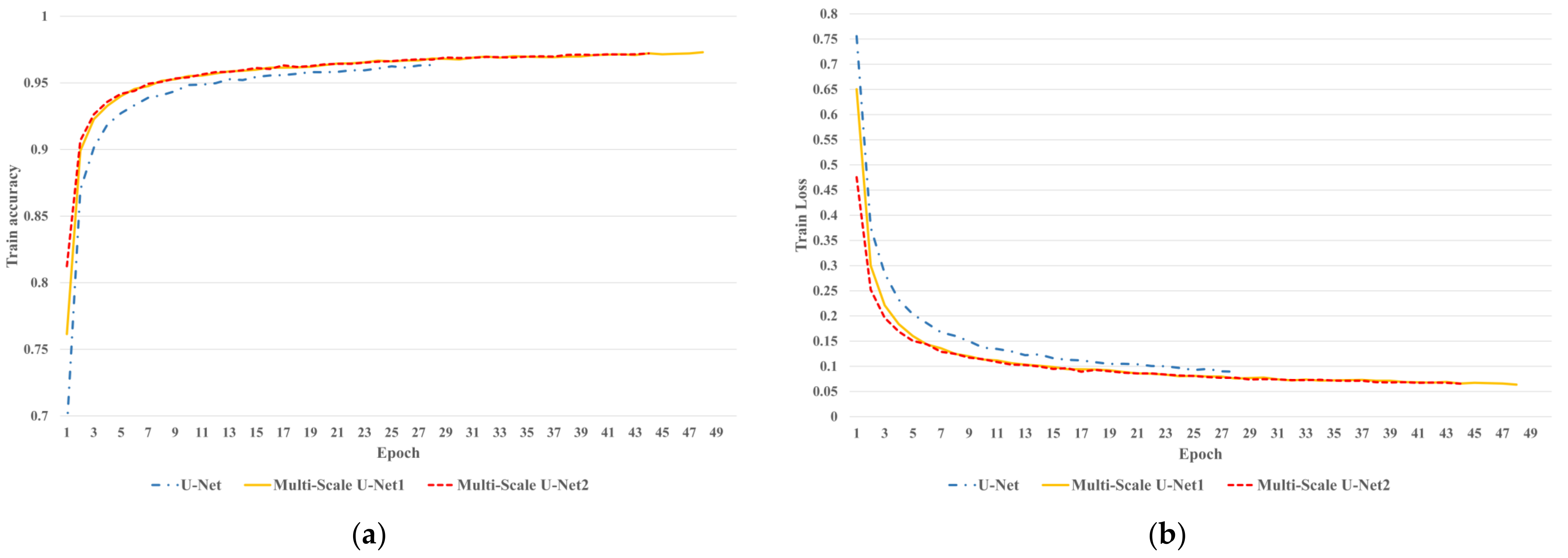

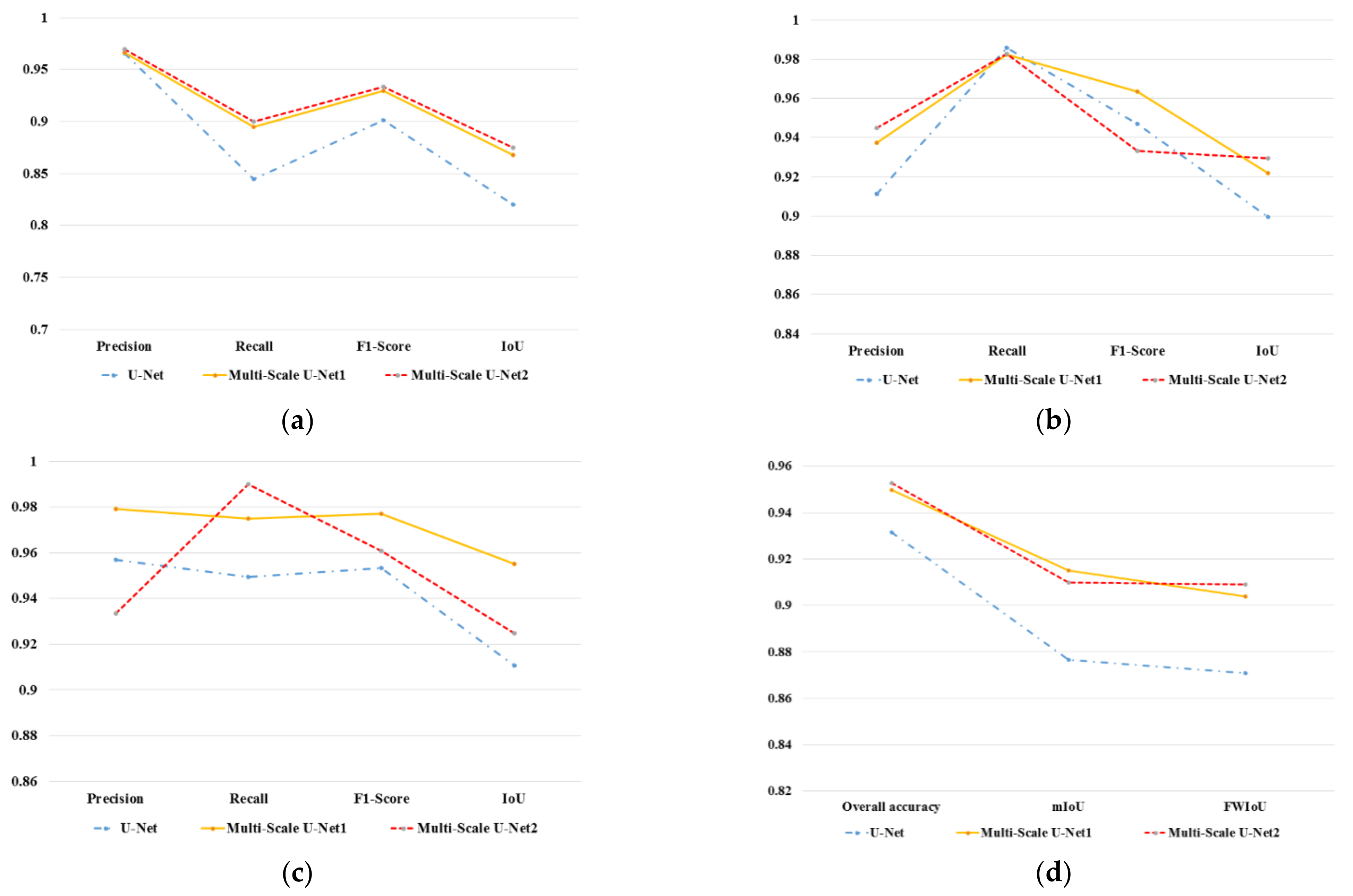

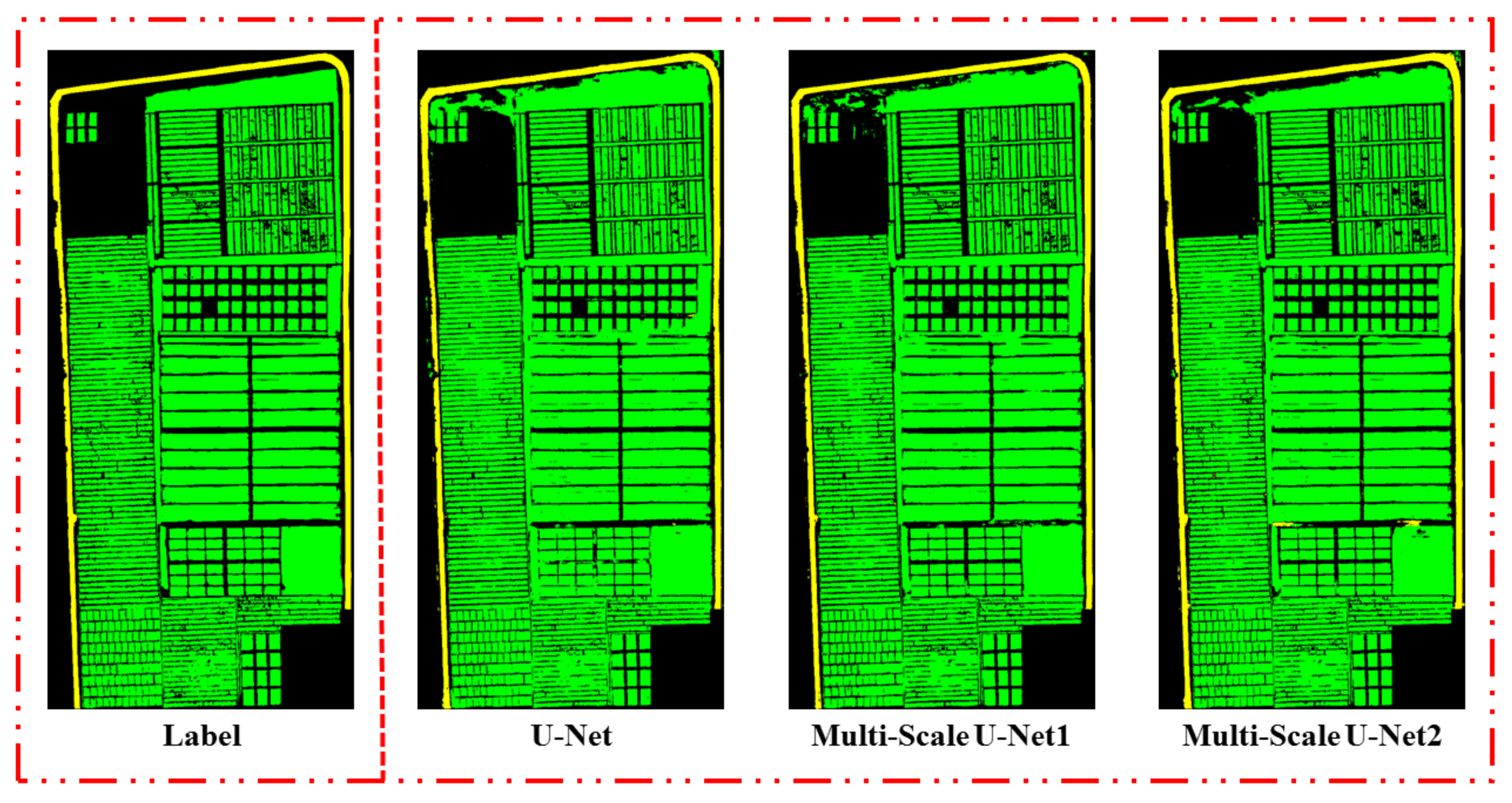

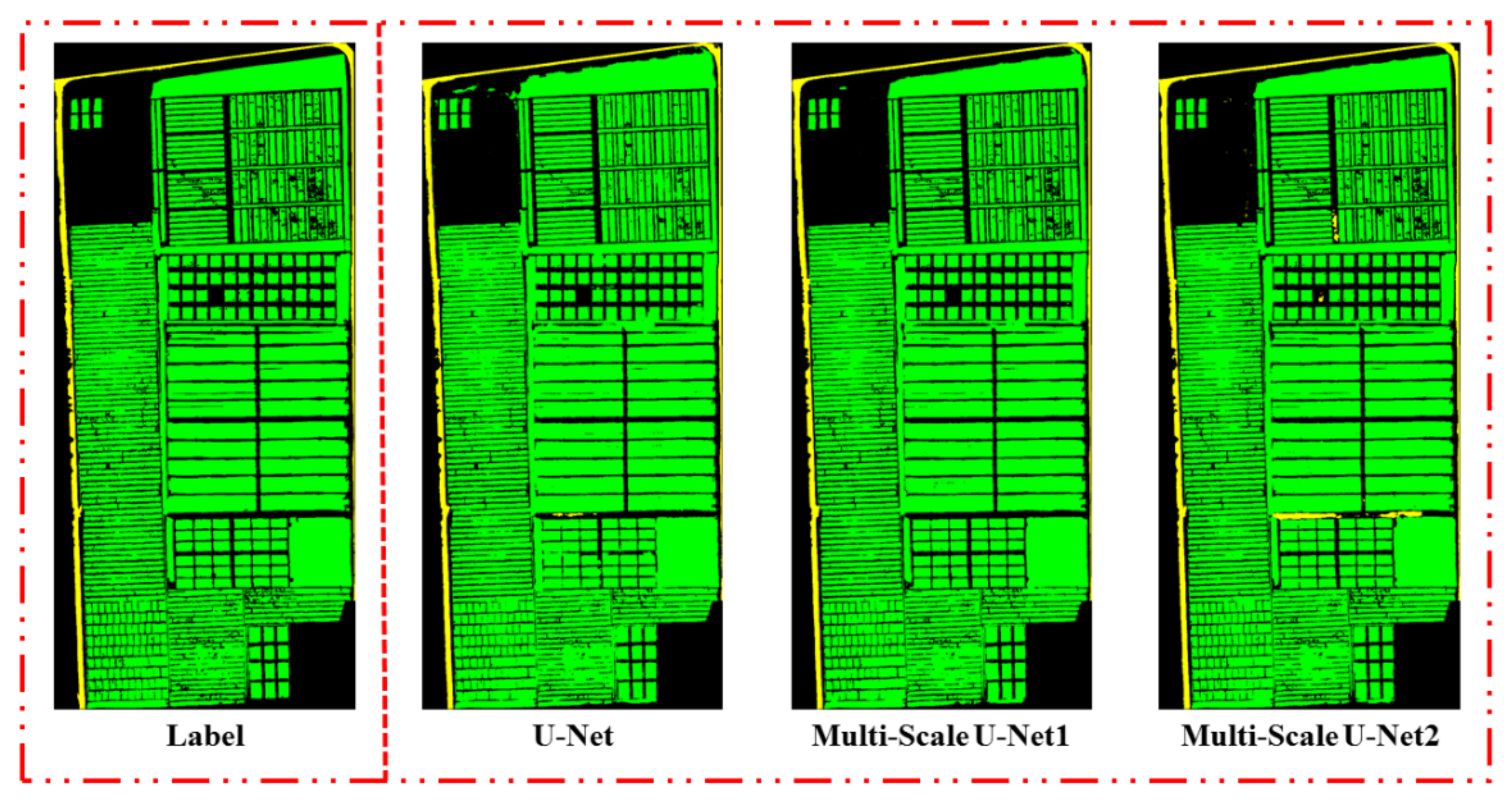

In this study, a U-Net-based multi-scale feature fusion method effectively improved the classification accuracy for high spatial resolution UAV remote sensing images and achieved satisfactory classification results in complex wheat fields with diverse varieties. The overall classification accuracies of Multi-Scale U-Net1 and Multi-Scale U-Net2 in complex wheat fields reached 94.97% and 95.26%, respectively.

This study used a UAV to acquire remote sensing images of complex wheat fields at several high spatial resolutions and tested Multi-Scale U-Net1 and Multi-Scale U-Net2, both equipped with a multi-scale feature fusion block, on these images. Both models achieved classification accuracies above 94%, higher than the baseline U-Net model. Adding a multi-scale feature fusion block to U-Net therefore enables high-precision classification of UAV remote sensing images in complex wheat field scenes. The predictions on images of different spatial resolutions further showed that the model's classification accuracy decreased as spatial resolution increased, which indicates that ground object classification of UAV remote sensing images in complex wheat field scenes with diverse varieties remains challenging. Although the study has certain limitations, the proposed multi-scale feature fusion method effectively improves the model's classification accuracy and accurately classifies UAV remote sensing images in complex wheat field scenes.
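The introduction and conclusions do not specify the internal design of the multi-scale feature fusion block used in Multi-Scale U-Net1 and Multi-Scale U-Net2. To illustrate the general idea only, the NumPy sketch below implements one common form of multi-scale fusion, pyramid pooling in the spirit of PSPNet: a feature map is average-pooled into grids of several sizes, each pooled map is upsampled back to the input size, and all branches are concatenated along the channel axis. It is not the authors' block; the function names, the scales (1, 2, 4), and the nearest-neighbor upsampling are assumptions, and the sketch requires the height and width to be divisible by every scale.

```python
import numpy as np

def avg_pool_bins(x, bins):
    """Average-pool a (C, H, W) feature map into a (C, bins, bins) grid.
    Assumes H and W are divisible by bins."""
    c, h, w = x.shape
    return x.reshape(c, bins, h // bins, bins, w // bins).mean(axis=(2, 4))

def upsample_nearest(x, h, w):
    """Nearest-neighbor upsampling of a (C, bh, bw) map back to (C, h, w)."""
    _, bh, bw = x.shape
    return np.repeat(np.repeat(x, h // bh, axis=1), w // bw, axis=2)

def multi_scale_fusion(x, scales=(1, 2, 4)):
    """Concatenate the input with context pooled at several grid sizes.
    Output has C * (1 + len(scales)) channels and the same H and W as the input."""
    _, h, w = x.shape
    branches = [x]  # keep the original fine-scale features
    for s in scales:
        pooled = avg_pool_bins(x, s)                     # coarser context at scale s
        branches.append(upsample_nearest(pooled, h, w))  # restore full resolution
    return np.concatenate(branches, axis=0)

# Hypothetical single-channel 4x4 feature map
features = np.arange(16, dtype=float).reshape(1, 4, 4)
fused = multi_scale_fusion(features)
print(fused.shape)
```

In a trained network, the concatenated output would normally pass through a learned convolution (often 1×1) that mixes the scales; the sketch omits learned weights so that the fusion step itself stays visible.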

Although the proposed multi-scale feature fusion method effectively improves the ground object classification accuracy of UAV remote sensing images in complex wheat field scenes, the model has a large number of parameters, and the study covered only one wheat growth cycle. Future research could therefore apply lightweight models to ground object classification in complex wheat field scenes and extend the analysis to multiple growth cycles.