An Overview of Cooperative Robotics in Agriculture

Abstract

1. Introduction

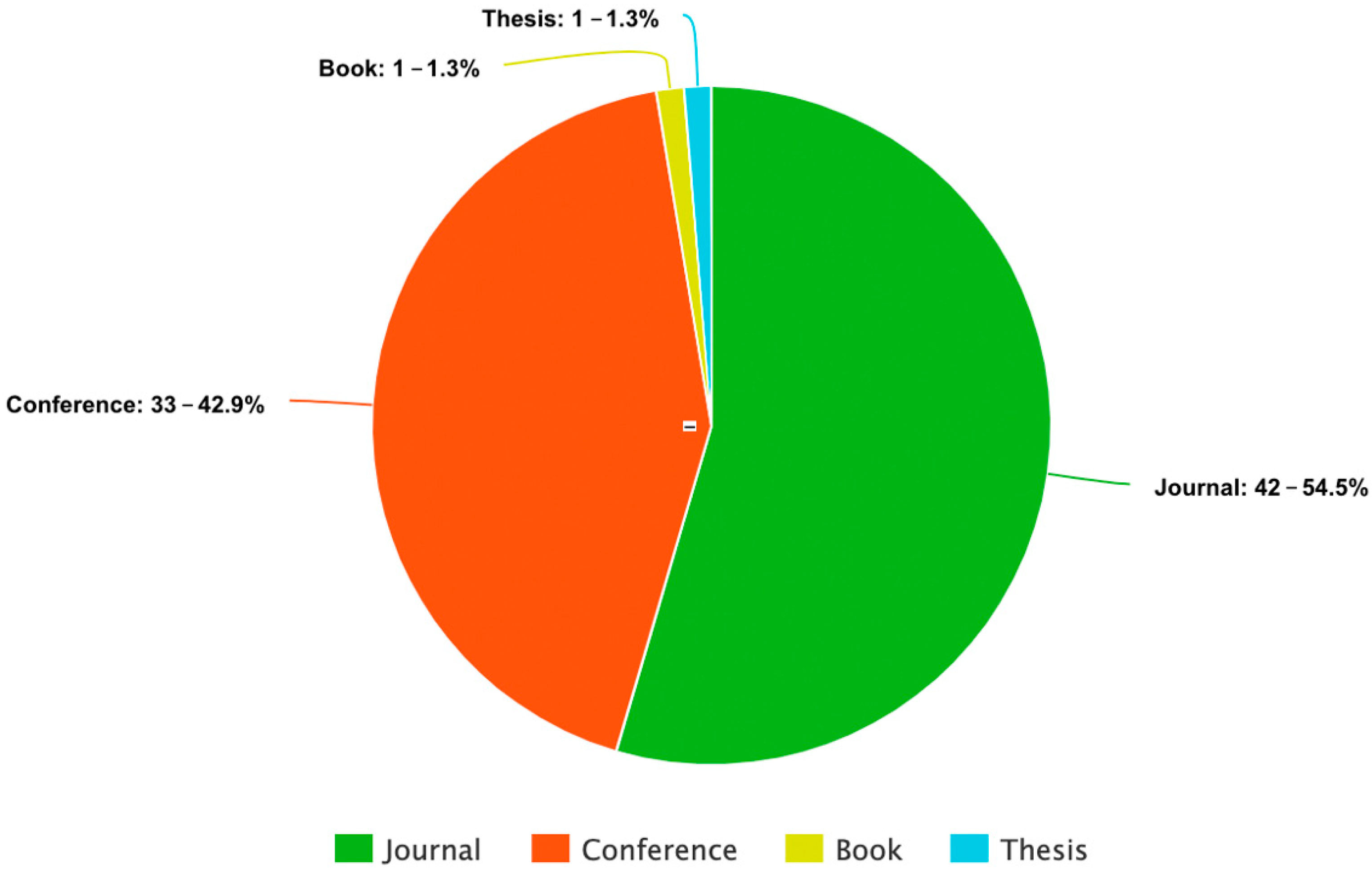

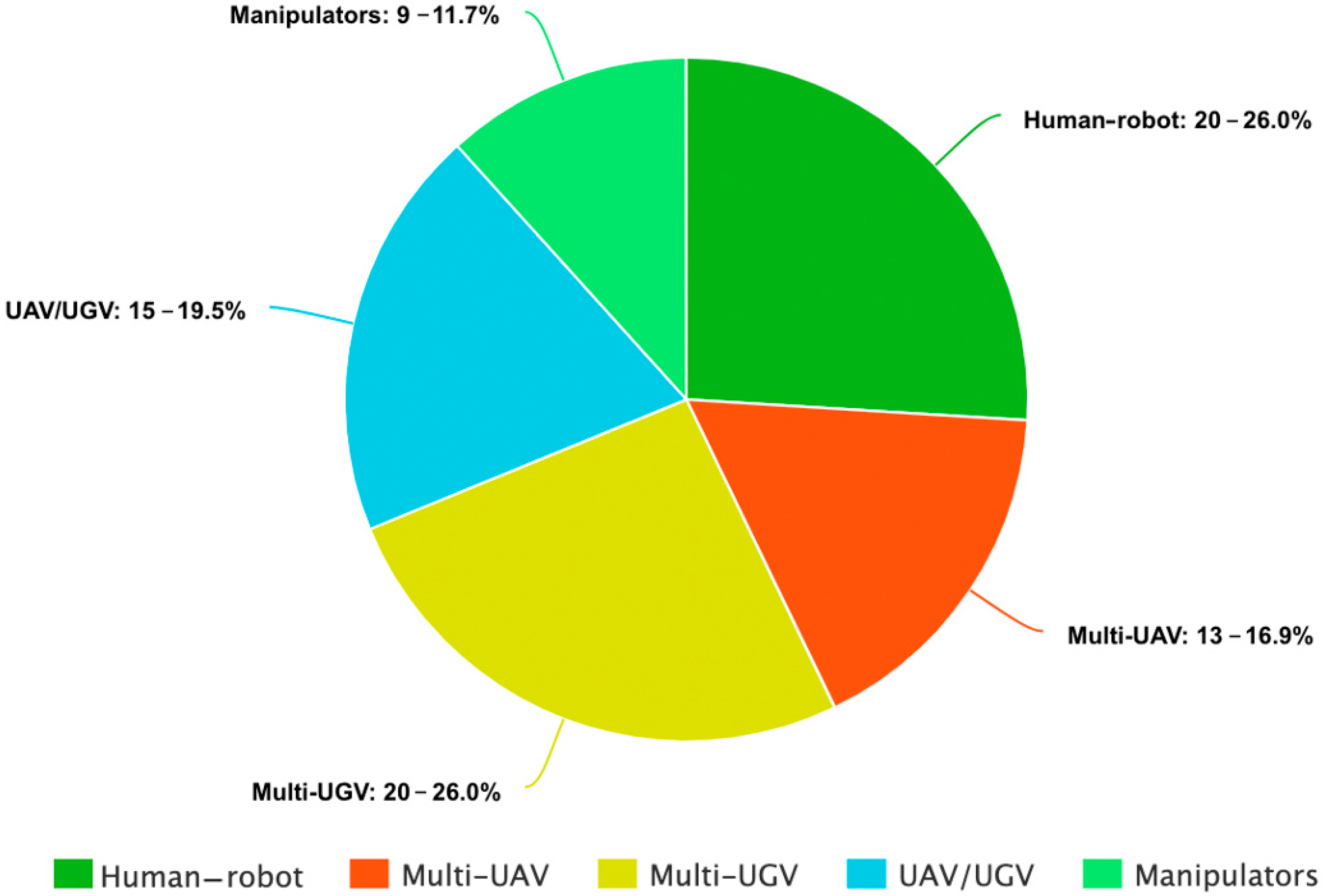

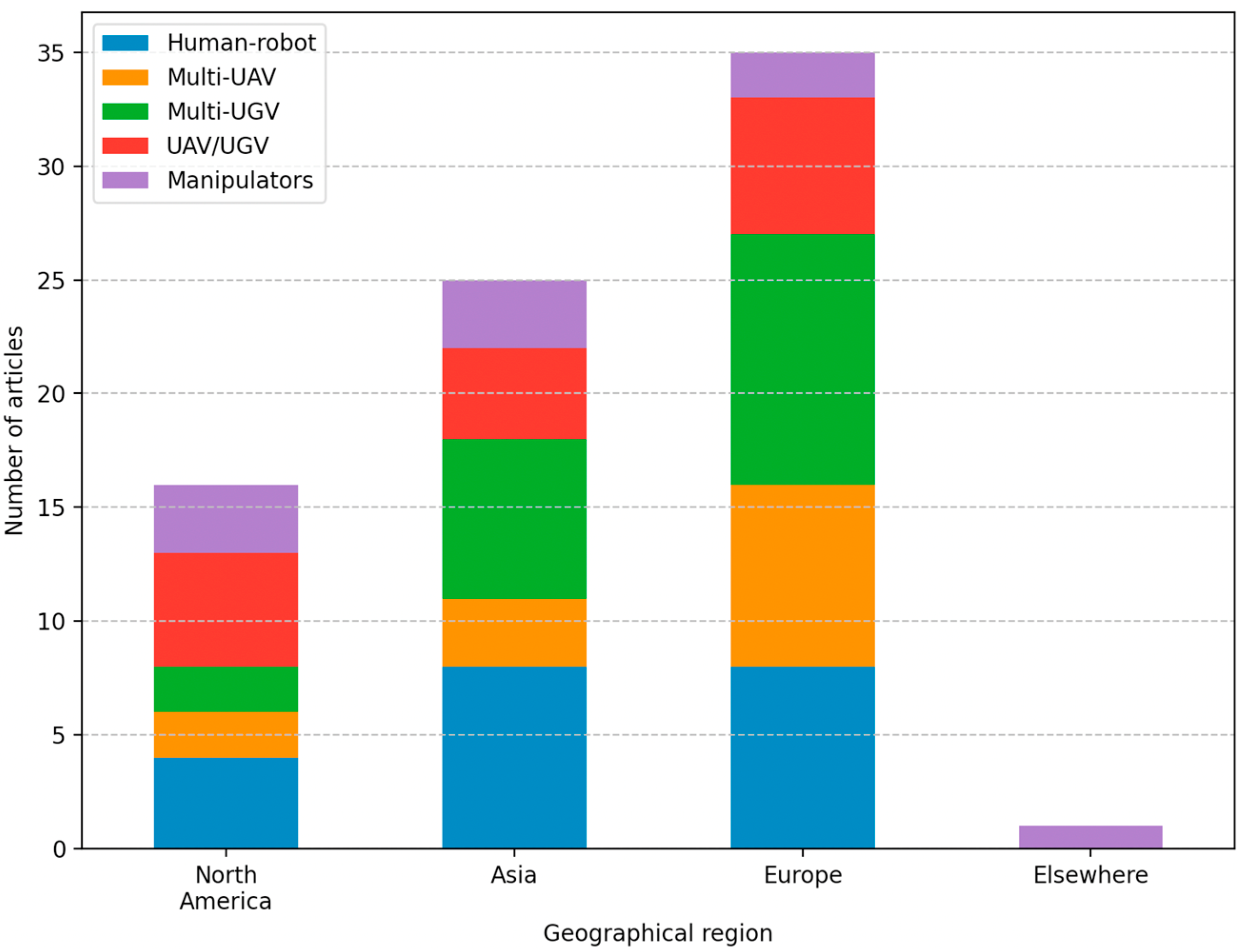

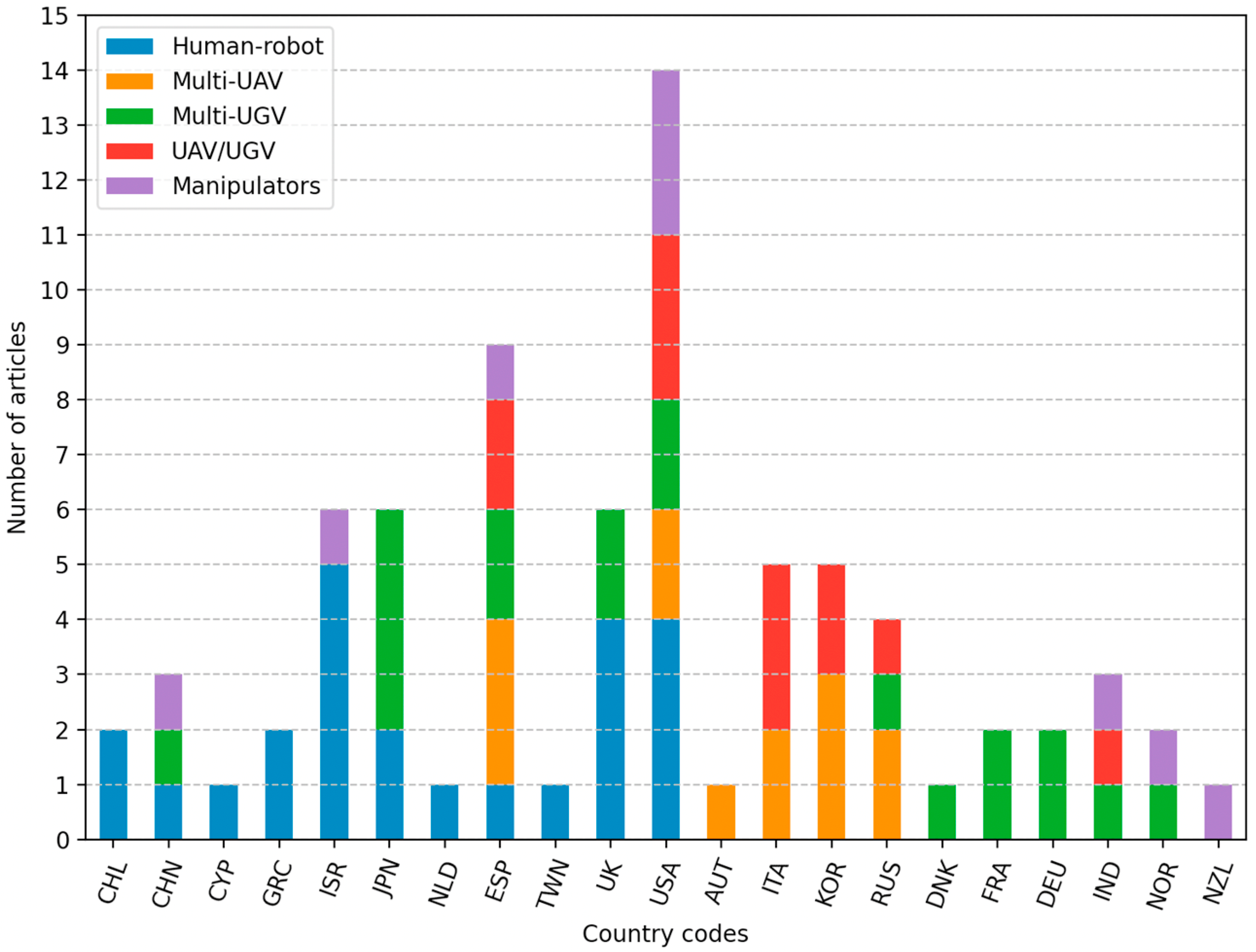

2. Materials and Methods

3. Cooperative Agricultural Robotics

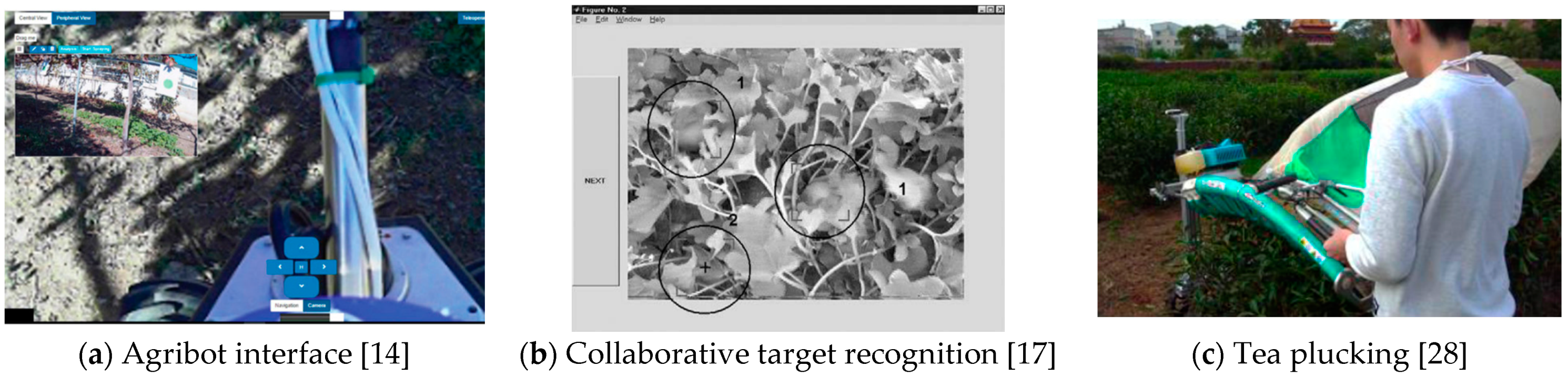

3.1. Human–Robot Cooperation (Human–Robot)

3.2. UAV Robot Teams (Multi-UAV)

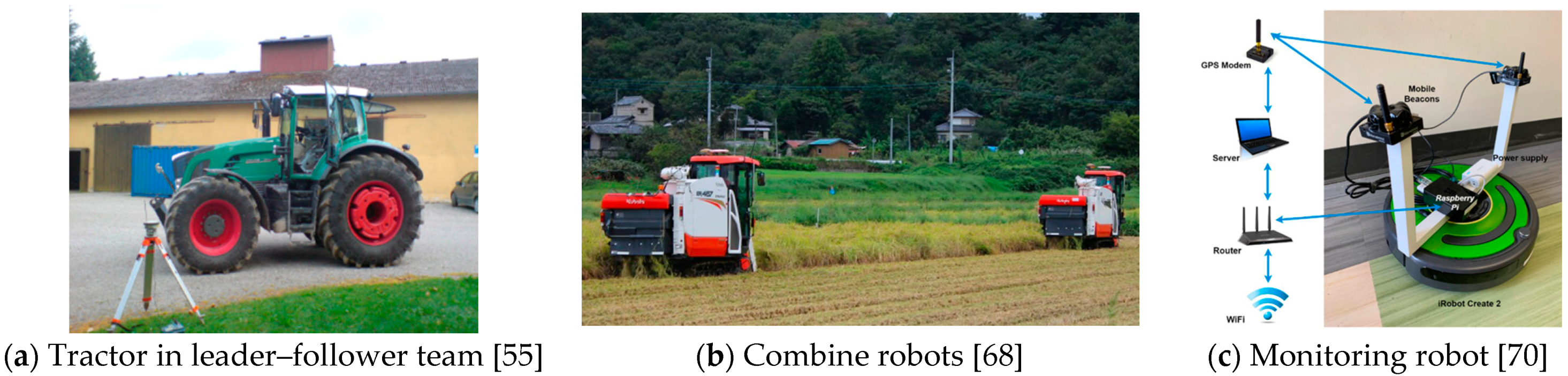

3.3. UGV Robot Teams (Multi-UGV)

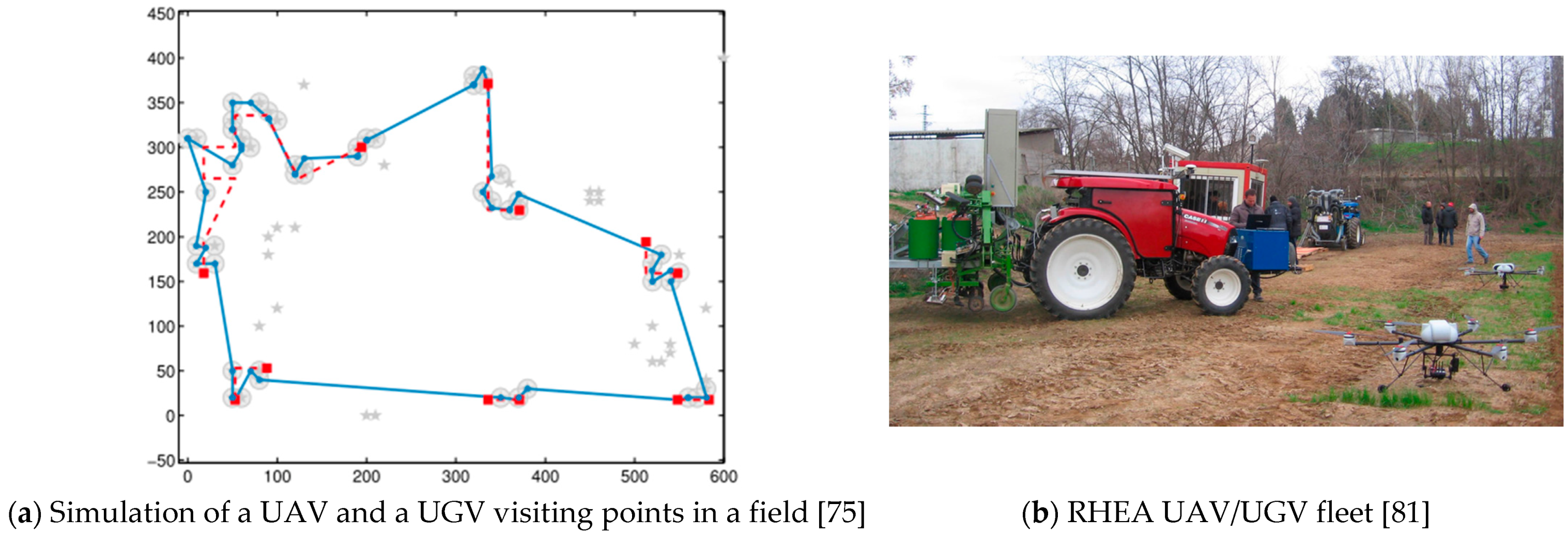

3.4. UGV and UAV Teams (UAV/UGV)

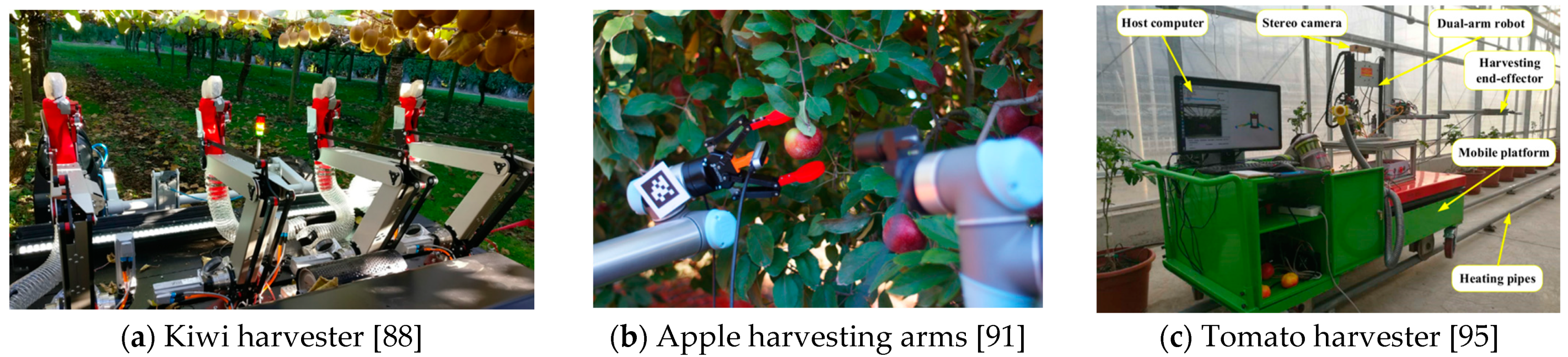

3.5. Cooperative Manipulation (Manipulators)

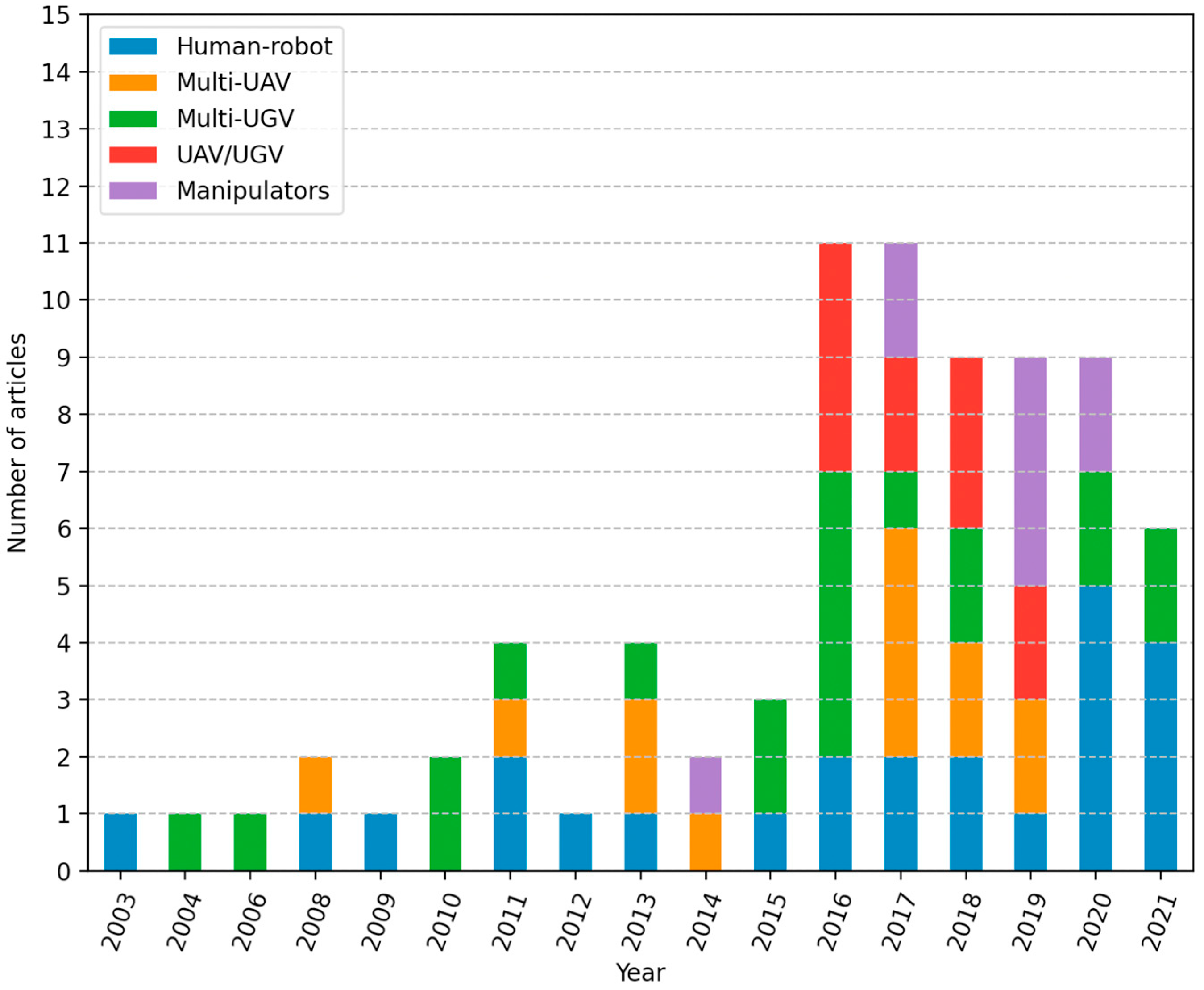

3.6. Trends

4. Discussion and Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Conflicts of Interest

References

1. Lowenberg-DeBoer, J.; Erickson, B. Setting the Record Straight on Precision Agriculture Adoption. Agron. J. 2019, 111, 1552–1569.

2. Marinoudi, V.; Sørensen, C.G.; Pearson, S.; Bochtis, D. Robotics and labour in agriculture. A context consideration. Biosyst. Eng. 2019, 184, 111–121.

3. Bac, C.W.; van Henten, E.J.; Hemming, J.; Edan, Y. Harvesting Robots for High-value Crops: State-of-the-art Review and Challenges Ahead. J. Field Robot. 2014, 31, 888–911.

4. Fountas, S.; Mylonas, N.; Malounas, I.; Rodias, E.; Hellmann Santos, C.; Pekkeriet, E. Agricultural Robotics for Field Operations. Sensors 2020, 20, 2672.

5. Oliveira, L.F.P.; Moreira, A.P.; Silva, M.F. Advances in Agriculture Robotics: A State-of-the-Art Review and Challenges Ahead. Robotics 2021, 10, 52.

6. Skillful Viniculture Technology (SVTECH), Action “Reinforcement of the Research and Innovation Infrastructure”, Operational Programme “Competitiveness, Entrepreneurship and Innovation”, NSRF (National Strategic Reference Framework) 2014–2020. Available online: http://evtar.eu/en/home_en/ (accessed on 10 May 2021).

7. Vrochidou, E.; Tziridis, K.; Nikolaou, A.; Kalampokas, T.; Papakostas, G.A.; Pachidis, T.P.; Mamalis, S.; Koundouras, S.; Kaburlasos, V.G. An Autonomous Grape-Harvester Robot: Integrated System Architecture. Electronics 2021, 10, 1056.

8. Welfare, K.S.; Hallowell, M.R.; Shah, J.A.; Riek, L.D. Consider the Human Work Experience When Integrating Robotics in the Workplace. In Proceedings of the 2019 14th ACM/IEEE International Conference on Human-Robot Interaction (HRI), Daegu, Korea, 11–14 March 2019; pp. 75–84.

9. Vasconez, J.P.; Kantor, G.A.; Auat Cheein, F.A. Human–robot interaction in agriculture: A survey and current challenges. Biosyst. Eng. 2019, 179, 35–48.

10. Cheein, F.A.; Herrera, D.; Gimenez, J.; Carelli, R.; Torres-Torriti, M.; Rosell-Polo, J.R.; Escola, A.; Arno, J. Human-robot interaction in precision agriculture: Sharing the workspace with service units. In Proceedings of the 2015 IEEE International Conference on Industrial Technology (ICIT), Seville, Spain, 17–19 March 2015; pp. 289–295.

11. van Henten, E.J.; Bac, C.W.; Hemming, J.; Edan, Y. Robotics in protected cultivation. IFAC Proc. Vol. 2013, 46, 170–177.

12. Bechar, A.; Vigneault, C. Agricultural robots for field operations: Concepts and components. Biosyst. Eng. 2016, 149, 94–111.

13. Sheridan, T.B. Human–Robot Interaction. Hum. Factors J. Hum. Factors Ergon. Soc. 2016, 58, 525–532.

14. Adamides, G.; Katsanos, C.; Constantinou, I.; Christou, G.; Xenos, M.; Hadzilacos, T.; Edan, Y. Design and development of a semi-autonomous agricultural vineyard sprayer: Human–robot interaction aspects. J. Field Robot. 2017, 34, 1407–1426.

15. Gomez-Gil, J.; San-Jose-Gonzalez, I.; Nicolas-Alonso, L.F.; Alonso-Garcia, S. Steering a Tractor by Means of an EMG-Based Human-Machine Interface. Sensors 2011, 11, 7110–7126.

16. Murakami, N.; Ito, A.; Will, J.D.; Steffen, M.; Inoue, K.; Kita, K.; Miyaura, S. Development of a teleoperation system for agricultural vehicles. Comput. Electron. Agric. 2008, 63, 81–88.

17. Bechar, A.; Edan, Y. Human-robot collaboration for improved target recognition of agricultural robots. Ind. Robot Int. J. 2003, 30, 432–436.

18. Tkach, I.; Bechar, A.; Edan, Y. Switching Between Collaboration Levels in a Human–Robot Target Recognition System. IEEE Trans. Syst. Man Cybern. Part C (Appl. Rev.) 2011, 41, 955–967.

19. Bechar, A.; Meyer, J.; Edan, Y. An Objective Function to Evaluate Performance of Human–Robot Collaboration in Target Recognition Tasks. IEEE Trans. Syst. Man Cybern. Part C (Appl. Rev.) 2009, 39, 611–620.

20. Rysz, M.W.; Mehta, S.S. A risk-averse optimization approach to human-robot collaboration in robotic fruit harvesting. Comput. Electron. Agric. 2021, 182, 106018.

21. Anagnostis, A.; Benos, L.; Tsaopoulos, D.; Tagarakis, A.; Tsolakis, N.; Bochtis, D. Human Activity Recognition through Recurrent Neural Networks for Human–Robot Interaction in Agriculture. Appl. Sci. 2021, 11, 2188.

22. Yang, L.; Noguchi, N. Human detection for a robot tractor using omni-directional stereo vision. Comput. Electron. Agric. 2012, 89, 116–125.

23. Berenstein, R.; Edan, Y. Human-robot collaborative site-specific sprayer. J. Field Robot. 2017, 34, 1519–1530.

24. Huang, Z.; Miyauchi, G.; Gomez, A.S.; Bird, R.; Kalsi, A.S.; Jansen, C.; Liu, Z.; Parsons, S.; Sklar, E. An Experiment on Human-Robot Interaction in a Simulated Agricultural Task. In Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer: Berlin/Heidelberg, Germany, 2020; Volume 12228 LNAI, pp. 221–233. ISBN 9783030634858.

25. Huang, Z.; Gomez, A.; Bird, R.; Kalsi, A.; Jansen, C.; Liu, Z.; Miyauchi, G.; Parsons, S.; Sklar, E. Understanding human responses to errors in a collaborative human-robot selective harvesting task. In Proceedings of the UKRAS20 Conference: “Robots into the Real World” Proceedings, Lincoln, England, 17 April 2020; EPSRC UK-RAS Network, 2020; pp. 89–91.

26. Kim, M.; Koh, I.; Jeon, H.; Choi, J.; Min, B.C.; Matson, E.T.; Gallagher, J. A HARMS-based heterogeneous human-robot team for gathering and collecting. Adv. Robot. Res. 2018, 3, 201–217.

27. Zhou, X.; He, J.; Chen, D.; Li, J.; Jiang, C.; Ji, M.; He, M. Human-robot skills transfer interface for UAV-based precision pesticide in dynamic environments. Assem. Autom. 2021, 41, 345–357.

28. Lai, Y.-L.; Chen, P.-L.; Yen, P.-L. A Human-Robot Cooperative Vehicle for Tea Plucking. In Proceedings of the 2020 7th International Conference on Control, Decision and Information Technologies (CoDIT), Prague, Czech Republic, 29 June–2 July 2020; Volume 2, pp. 217–222.

29. Baylis, L.C. Organizational Culture and Trust within Agricultural Human-Robot Teams. Ph.D. Thesis, Grand Canyon University, Phoenix, AZ, USA, 2020.

30. Rose, D.C.; Lyon, J.; de Boon, A.; Hanheide, M.; Pearson, S. Responsible development of autonomous robotics in agriculture. Nat. Food 2021, 2, 306–309.

31. Benos, L.; Bechar, A.; Bochtis, D. Safety and ergonomics in human-robot interactive agricultural operations. Biosyst. Eng. 2020, 200, 55–72.

32. Baxter, P.; Cielniak, G.; Hanheide, M.; From, P. Safe Human-Robot Interaction in Agriculture. In Proceedings of the Companion of the 2018 ACM/IEEE International Conference on Human-Robot Interaction, Chicago, IL, USA, 5–8 March 2018; ACM: New York, NY, USA, 2018; pp. 59–60.

33. Alsalam, B.H.Y.; Morton, K.; Campbell, D.; Gonzalez, F. Autonomous UAV with vision based on-board decision making for remote sensing and precision agriculture. In Proceedings of the 2017 IEEE Aerospace Conference, Big Sky, MT, USA, 4–11 March 2017; pp. 1–12.

34. Santesteban, L.G.; Di Gennaro, S.F.; Herrero-Langreo, A.; Miranda, C.; Royo, J.B.; Matese, A. High-resolution UAV-based thermal imaging to estimate the instantaneous and seasonal variability of plant water status within a vineyard. Agric. Water Manag. 2017, 183, 49–59.

35. Torres-Sánchez, J.; López-Granados, F.; Serrano, N.; Arquero, O.; Peña, J.M. High-throughput 3-D monitoring of agricultural-tree plantations with Unmanned Aerial Vehicle (UAV) technology. PLoS ONE 2015, 10, e0130479.

36. Long, D.; McCarthy, C.; Jensen, T. Row and water front detection from UAV thermal-infrared imagery for furrow irrigation monitoring. In Proceedings of the 2016 IEEE International Conference on Advanced Intelligent Mechatronics (AIM), Banff, AB, Canada, 12–15 July 2016; pp. 300–305.

37. Faiçal, B.S.; Freitas, H.; Gomes, P.H.; Mano, L.Y.; Pessin, G.; de Carvalho, A.C.P.L.F.; Krishnamachari, B.; Ueyama, J. An adaptive approach for UAV-based pesticide spraying in dynamic environments. Comput. Electron. Agric. 2017, 138, 210–223.

38. Doering, D.; Benenmann, A.; Lerm, R.; de Freitas, E.P.; Muller, I.; Winter, J.M.; Pereira, C.E. Design and Optimization of a Heterogeneous Platform for multiple UAV use in Precision Agriculture Applications. IFAC Proc. Vol. 2014, 47, 12272–12277.

39. del Cerro, J.; Cruz Ulloa, C.; Barrientos, A.; de León Rivas, J. Unmanned Aerial Vehicles in Agriculture: A Survey. Agronomy 2021, 11, 203.

40. Vu, Q.; Nguyen, V.; Solenaya, O.; Ronzhin, A. Group Control of Heterogeneous Robots and Unmanned Aerial Vehicles in Agriculture Tasks. In Proceedings of the International Conference on Interactive Collaborative Robotics (ICR 2017), Hatfield, UK, 12–16 September 2017; Ronzhin, A., Rigoll, G., Meshcheryakov, R., Eds.; Springer: Cham, Switzerland, 2017; Volume 10459, pp. 260–267. ISBN 978-3-319-66470-5.

41. Chao, H.; Baumann, M.; Jensen, A.; Chen, Y.; Cao, Y.; Ren, W.; McKee, M. Band-reconfigurable Multi-UAV-based Cooperative Remote Sensing for Real-time Water Management and Distributed Irrigation Control. IFAC Proc. Vol. 2008, 41, 11744–11749.

42. Albani, D.; Manoni, T.; Arik, A.; Nardi, D.; Trianni, V. Field Coverage for Weed Mapping: Toward Experiments with a UAV Swarm. In Lecture Notes of the Institute for Computer Sciences, Social-Informatics and Telecommunications Engineering, LNICST; Springer: Berlin/Heidelberg, Germany, 2019; Volume 289, pp. 132–146. ISBN 9783030242015.

43. Albani, D.; IJsselmuiden, J.; Haken, R.; Trianni, V. Monitoring and mapping with robot swarms for agricultural applications. In Proceedings of the 2017 14th IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS), Lecce, Italy, 29 August–1 September 2017; pp. 1–6.

44. Del Cerro, J.; Barrientos, A.; Sanz, D.; Valente, J. Aerial Fleet in RHEA Project: A High Vantage Point Contributions. In ROBOT2013: First Iberian Robotics Conference; Armada, M.A., Sanfeliu, A., Ferre, M., Eds.; Advances in Intelligent Systems and Computing; Springer: Cham, Switzerland, 2014; Volume 252. ISBN 978-3-319-03412-6.

45. Valente, J.; Del Cerro, J.; Barrientos, A.; Sanz, D. Aerial coverage optimization in precision agriculture management: A musical harmony inspired approach. Comput. Electron. Agric. 2013, 99, 153–159.

46. Ju, C.; Son, H.I. A distributed swarm control for an agricultural multiple unmanned aerial vehicle system. Proc. Inst. Mech. Eng. Part I J. Syst. Control Eng. 2019, 233, 1298–1308.

47. Skobelev, P.; Budaev, D.; Gusev, N.; Voschuk, G. Designing Multi-agent Swarm of UAV for Precise Agriculture. In Highlights of Practical Applications of Agents, Multi-Agent Systems, and Complexity: The PAAMS Collection; Bajo, J., Corchado, J.M., Navarro Martínez, E.M., Osaba Icedo, E., Mathieu, P., Hoffa-Dąbrowska, P., del Val, E., Giroux, S., Castro, A.J.M., Sánchez-Pi, N., et al., Eds.; Communications in Computer and Information Science; Springer: Cham, Switzerland, 2018; Volume 887, pp. 47–59. ISBN 978-3-319-94778-5.

48. Barrientos, A.; Colorado, J.; del Cerro, J.; Martinez, A.; Rossi, C.; Sanz, D.; Valente, J. Aerial remote sensing in agriculture: A practical approach to area coverage and path planning for fleets of mini aerial robots. J. Field Robot. 2011, 28, 667–689.

49. Drenjanac, D.; Tomic, S.D.K.; Klausner, L.; Kühn, E. Harnessing coherence of area decomposition and semantic shared spaces for task allocation in a robotic fleet. Inf. Process. Agric. 2014, 1, 23–33.

50. Ju, C.; Son, H.I. Multiple UAV Systems for Agricultural Applications: Control, Implementation, and Evaluation. Electronics 2018, 7, 162.

51. Ju, C.; Park, S.; Park, S.; Son, H.I. A Haptic Teleoperation of Agricultural Multi-UAV. In Proceedings of the Workshop on Agricultural Robotics: Learning from Industry 4.0 and Moving into the Future at the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vancouver, BC, Canada, 28 September 2017; pp. 1–6.

52. Nolan, P.; Paley, D.A.; Kroeger, K. Multi-UAS path planning for non-uniform data collection in precision agriculture. In Proceedings of the 2017 IEEE Aerospace Conference, Big Sky, MT, USA, 4–11 March 2017; pp. 1–12.

53. Bochtis, D.D.; Sørensen, C.G.; Green, O.; Moshou, D.; Olesen, J. Effect of controlled traffic on field efficiency. Biosyst. Eng. 2010, 106, 14–25.

54. Noguchi, N.; Will, J.; Reid, J.; Zhang, Q. Development of a master-slave robot system for farm operations. Comput. Electron. Agric. 2004, 44, 1–19.

55. Zhang, X.; Geimer, M.; Noack, P.O.; Grandl, L. Development of an intelligent master-slave system between agricultural vehicles. In Proceedings of the 2010 IEEE Intelligent Vehicles Symposium, La Jolla, CA, USA, 21–24 June 2010; pp. 250–255.

56. Zhang, C.; Noguchi, N.; Yang, L. Leader–follower system using two robot tractors to improve work efficiency. Comput. Electron. Agric. 2016, 121, 269–281.

57. Noguchi, N.; Barawid, O.C. Robot Farming System Using Multiple Robot Tractors in Japan Agriculture. IFAC Proc. Vol. 2011, 44, 633–637.

58. Li, N.; Remeikas, C.; Xu, Y.; Jayasuriya, S.; Ehsani, R. Task Assignment and Trajectory Planning Algorithm for a Class of Cooperative Agricultural Robots. J. Dyn. Syst. Meas. Control 2015, 137, 1–9.

59. Conesa-Muñoz, J.; Bengochea-Guevara, J.M.; Andujar, D.; Ribeiro, A. Route planning for agricultural tasks: A general approach for fleets of autonomous vehicles in site-specific herbicide applications. Comput. Electron. Agric. 2016, 127, 204–220.

60. Blender, T.; Buchner, T.; Fernandez, B.; Pichlmaier, B.; Schlegel, C. Managing a Mobile Agricultural Robot Swarm for a seeding task. In Proceedings of the IECON 2016-42nd Annual Conference of the IEEE Industrial Electronics Society, Florence, Italy, 23–26 October 2016; pp. 6879–6886.

61. Anil, H.; Nikhil, K.S.; Chaitra, V.; Sharan, B.S.G. Revolutionizing Farming Using Swarm Robotics. In Proceedings of the 2015 6th International Conference on Intelligent Systems, Modelling and Simulation, Kuala Lumpur, Malaysia, 9–12 February 2015; pp. 141–147.

62. Janani, A.; Alboul, L.; Penders, J. Multi Robot Cooperative Area Coverage, Case Study: Spraying. In Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2016; Volume 9716, pp. 165–176. ISBN 9783319403786.

63. Janani, A.; Alboul, L.; Penders, J. Multi-agent cooperative area coverage: Case study ploughing. In Proceedings of the 2016 International Conference on Autonomous Agents & Multiagent Systems, AAMAS, Singapore, Singapore, 9–13 May 2016; pp. 1397–1398.

64. Tourrette, T.; Deremetz, M.; Naud, O.; Lenain, R.; Laneurit, J.; De Rudnicki, V. Close Coordination of Mobile Robots Using Radio Beacons: A New Concept Aimed at Smart Spraying in Agriculture. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; pp. 7727–7734.

65. Hameed, I.A. A Coverage Planner for Multi-Robot Systems in Agriculture. In Proceedings of the 2018 IEEE International Conference on Real-time Computing and Robotics (RCAR), Kandima, Maldives, 1–5 August 2018; pp. 698–704.

66. Arguenon, V.; Bergues-Lagarde, A.; Rosenberger, C.; Bro, P.; Smari, W. Multi-Agent Based Prototyping of Agriculture Robots. In Proceedings of the International Symposium on Collaborative Technologies and Systems (CTS’06), Las Vegas, NV, USA, 14–17 May 2006; Volume 2006, pp. 282–288.

67. Emmi, L.; Paredes-Madrid, L.; Ribeiro, A.; Pajares, G.; Gonzalez-de-Santos, P. Fleets of robots for precision agriculture: A simulation environment. Ind. Robot Int. J. 2013, 40, 41–58.

68. Iida, M.; Harada, S.; Sasaki, R.; Zhang, Y.; Asada, R.; Suguri, M.; Masuda, R. Multi-Combine Robot System for Rice Harvesting Operation. In Proceedings of the 2017 ASABE Annual International Meeting, Spokane, WA, USA, 16–19 July 2017; American Society of Agricultural and Biological Engineers: St. Joseph, MI, USA, 2017; pp. 1–5.

69. Teslya, N.; Smirnov, A.; Ionov, A.; Kudrov, A. Multi-robot Coalition Formation for Precision Agriculture Scenario Based on Gazebo Simulator. In Smart Innovation, Systems and Technologies; Springer: Berlin/Heidelberg, Germany, 2021; pp. 329–341. ISBN 9789811555794.

70. Davoodi, M.; Faryadi, S.; Velni, J.M. A Graph Theoretic-Based Approach for Deploying Heterogeneous Multi-agent Systems with Application in Precision Agriculture. J. Intell. Robot. Syst. 2021, 101, 10.

71. Wu, C.; Chen, Z.; Wang, D.; Song, B.; Liang, Y.; Yang, L.; Bochtis, D.D. A Cloud-Based In-Field Fleet Coordination System for Multiple Operations. Energies 2020, 13, 775.

72. Kim, J.; Son, H.I. A Voronoi Diagram-Based Workspace Partition for Weak Cooperation of Multi-Robot System in Orchard. IEEE Access 2020, 8, 20676–20686.

73. Vu, Q.; Raković, M.; Delic, V.; Ronzhin, A. Trends in Development of UAV-UGV Cooperation Approaches in Precision Agriculture. In Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer: Berlin/Heidelberg, Germany, 2018; Volume 11097 LNAI, pp. 213–221. ISBN 9783319995816.

74. Menendez-Aponte, P.; Garcia, C.; Freese, D.; Defterli, S.; Xu, Y. Software and hardware architectures in cooperative aerial and ground robots for agricultural disease detection. In Proceedings of the 2016 International Conference on Collaboration Technologies and Systems (CTS), Orlando, FL, USA, 31 October–4 November 2016; pp. 354–358.

75. Tokekar, P.; Hook, J.V.; Mulla, D.; Isler, V. Sensor Planning for a Symbiotic UAV and UGV System for Precision Agriculture. IEEE Trans. Robot. 2016, 32, 1498–1511.

76. Ni, J.; Wang, X.; Tang, M.; Cao, W.; Shi, P.; Yang, S.X. An Improved Real-Time Path Planning Method Based on Dragonfly Algorithm for Heterogeneous Multi-Robot System. IEEE Access 2020, 8, 140558–140568.

77. Li, J.; Deng, G.; Luo, C.; Lin, Q.; Yan, Q.; Ming, Z. A Hybrid Path Planning Method in Unmanned Air/Ground Vehicle (UAV/UGV) Cooperative Systems. IEEE Trans. Veh. Technol. 2016, 65, 9585–9596.

78. Peterson, J.; Li, W.; Cesar-Tondreau, B.; Bird, J.; Kochersberger, K.; Czaja, W.; McLean, M. Experiments in unmanned aerial vehicle/unmanned ground vehicle radiation search. J. Field Robot. 2019, 36, 818–845.

79. Wang, Z.; McDonald, S.T. Convex relaxation for optimal rendezvous of unmanned aerial and ground vehicles. Aerosp. Sci. Technol. 2020, 99, 105756.

80. Conesa-Muñoz, J.; Valente, J.; del Cerro, J.; Barrientos, A.; Ribeiro, A. A Multi-Robot Sense-Act Approach to Lead to a Proper Acting in Environmental Incidents. Sensors 2016, 16, 1269.

81. Gonzalez-de-Santos, P.; Ribeiro, A.; Fernandez-Quintanilla, C.; Lopez-Granados, F.; Brandstoetter, M.; Tomic, S.; Pedrazzi, S.; Peruzzi, A.; Pajares, G.; Kaplanis, G.; et al. Fleets of robots for environmentally-safe pest control in agriculture. Precis. Agric. 2017, 18, 574–614.

82. Potena, C.; Khanna, R.; Nieto, J.; Nardi, D.; Pretto, A. Collaborative UAV-UGV Environment Reconstruction in Precision Agriculture. In Proceedings of the IEEE/RSJ IROS Workshop “Vision-Based Drones: What’s Next”, Madrid, Spain, 1–5 October 2018; pp. 1–6.

83. Potena, C.; Khanna, R.; Nieto, J.; Siegwart, R.; Nardi, D.; Pretto, A. AgriColMap: Aerial-Ground Collaborative 3D Mapping for Precision Farming. IEEE Robot. Autom. Lett. 2019, 4, 1085–1092.

84. Bhandari, S.; Raheja, A.; Green, R.L.; Do, D. Towards collaboration between unmanned aerial and ground vehicles for precision agriculture. In Proceedings of the Autonomous Air and Ground Sensing Systems for Agricultural Optimization and Phenotyping II, Anaheim, CA, USA, 10–11 April 2017; Thomasson, J.A., McKee, M., Moorhead, R.J., Eds.; International Society for Optics and Photonics: Bellingham, WA, USA, 2017; Volume 10218, p. 1021806.

85. Grassi, R.; Rea, P.; Ottaviano, E.; Maggiore, P. Application of an Inspection Robot Composed by Collaborative Terrestrial and Aerial Modules for an Operation in Agriculture. In Mechanisms and Machine Science; Springer: Berlin/Heidelberg, Germany, 2018; Volume 49, pp. 539–546. ISBN 9783319612751.

86. Vasudevan, A.; Kumar, D.A.; Bhuvaneswari, N.S. Precision farming using unmanned aerial and ground vehicles. In Proceedings of the 2016 IEEE Technological Innovations in ICT for Agriculture and Rural Development (TIAR), Chennai, India, 15–16 July 2016; pp. 146–150.

87. Ju, C.; Son, H.I. Hybrid Systems based Modeling and Control of Heterogeneous Agricultural Robots for Field Operations. In Proceedings of the 2019 ASABE Annual International Meeting, Boston, MA, USA, 7–10 July 2019; American Society of Agricultural and Biological Engineers: St. Joseph, MI, USA, 2019; pp. 3–15.

88. Williams, H.A.M.; Jones, M.H.; Nejati, M.; Seabright, M.J.; Bell, J.; Penhall, N.D.; Barnett, J.J.; Duke, M.D.; Scarfe, A.J.; Ahn, H.S.; et al. Robotic kiwifruit harvesting using machine vision, convolutional neural networks, and robotic arms. Biosyst. Eng. 2019, 181, 140–156.

89. Zion, B.; Mann, M.; Levin, D.; Shilo, A.; Rubinstein, D.; Shmulevich, I. Harvest-order planning for a multiarm robotic harvester. Comput. Electron. Agric. 2014, 103, 75–81.

90. Xiong, Y.; Ge, Y.; Grimstad, L.; From, P.J. An autonomous strawberry-harvesting robot: Design, development, integration, and field evaluation. J. Field Robot. 2020, 37, 202–224.

91. Sarabu, H.; Ahlin, K.; Hu, A.-P. Graph-Based Cooperative Robot Path Planning in Agricultural Environments. In Proceedings of the 2019 IEEE/ASME International Conference on Advanced Intelligent Mechatronics (AIM), Hong Kong, China, 8–12 July 2019; pp. 519–525.

92. Ahlin, K.J.; Hu, A.-P.; Sadegh, N. Apple Picking Using Dual Robot Arms Operating Within an Unknown Tree. In Proceedings of the 2017 ASABE Annual International Meeting, Spokane, WA, USA, 16–19 July 2017; American Society of Agricultural and Biological Engineers: St. Joseph, MI, USA, 2017; pp. 1–11.

93. Sepulveda, D.; Fernandez, R.; Navas, E.; Armada, M.; Gonzalez-De-Santos, P. Robotic Aubergine Harvesting Using Dual-Arm Manipulation. IEEE Access 2020, 8, 121889–121904.

94. Davidson, J.R.; Hohimer, C.J.; Mo, C.; Karkee, M. Dual robot coordination for apple harvesting. In Proceedings of the 2017 ASABE Annual International Meeting, Spokane, WA, USA, 16–19 July 2017; pp. 1–9.

95. Ling, X.; Zhao, Y.; Gong, L.; Liu, C.; Wang, T. Dual-arm cooperation and implementing for robotic harvesting tomato using binocular vision. Rob. Auton. Syst. 2019, 114, 134–143.

96. Pramod, A.S.; Jithinmon, T.V. Development of mobile dual PR arm agricultural robot. J. Phys. Conf. Ser. 2019, 1240, 012034.

97. BACCHUS: Mobile Robotic Platforms for Active Inspection & Harvesting in Agricultural Areas. European Union’s Horizon 2020 research and innovation programme under grant agreement No 871704. Available online: https://bacchus-project.eu/ (accessed on 10 May 2021).

98. Kaburlasos, V.G. The Lattice Computing (LC) Paradigm. In Proceedings of the Fifteenth International Conference on Concept Lattices and Their Applications (CLA 2020), Tallinn, Estonia, 29 June–1 July 2020; Volume 2668, pp. 1–7.

99. Bazinas, C.; Vrochidou, E.; Lytridis, C.; Kaburlasos, V.G. Time-Series of Distributions Forecasting in Agricultural Applications: An Intervals’ Numbers Approach. Eng. Proc. 2021, 5, 12.

| Ref. | Task | Objective | Type of Study | Cooperation Strategy |
|---|---|---|---|---|
| [14] | Spraying | Vineyard | Field trial | User confirmation of machine vision |
| [15] | Driving | N/A | Field trial | EMG interface |
| [16] | Driving | N/A | Field trial | Teleoperation platform |
| [17,18,19] | Target recognition | Melon | Lab experiments | User confirmation of machine vision |
| [20] | Harvesting | Citrus | Simulation | Risk-averse collaboration |
| [21] | Transportation | N/A | Field trial | Activity recognition |
| [22] | Human detection | N/A | Field trial | Stereo vision |
| [23] | Spraying | Vineyard | Field trial | User confirmation of machine vision |
| [24,25] | Harvesting | Strawberry | Simulation | User confirmation of machine vision |
| [26] | Harvesting | N/A | Lab experiments | Layered task selection |
| [27] | Spraying | Canola | Simulation and field trial | Skills transfer interface |
| [28] | Harvesting | Tea | Field trial | Motion coordination |
| [29] | N/A | N/A | Correlational study | Acceptance issues |
| [30] | N/A | N/A | Design principles | Safety issues |
| [31] | N/A | N/A | Design principles | Safety and ergonomics issues |
| [32] | Transportation | Strawberry | Field trial | Safety issues |
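
Several entries in the table above rely on user confirmation of machine vision. One simple way to split the work is to let the robot act alone on high-confidence detections and discard low-confidence ones, while referring only the ambiguous middle band to the operator. The sketch below assumes the detector outputs a confidence score in [0, 1]. The `Detection` type, the `ask_human` callback and the 0.9/0.3 thresholds are hypothetical illustrations. They are not parameters or methods taken from the cited studies.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Detection:
    target_id: int
    confidence: float  # hypothetical detector score in [0, 1]


def triage(
    detections: List[Detection],
    ask_human: Callable[[Detection], bool],
    accept_above: float = 0.9,
    reject_below: float = 0.3,
) -> List[int]:
    """Return the IDs of targets selected for action (e.g. spraying).

    Detections at or above `accept_above` are accepted by the robot alone,
    detections below `reject_below` are discarded by the robot alone, and
    everything in between is referred to the human operator via `ask_human`.
    """
    if not 0.0 <= reject_below <= accept_above <= 1.0:
        raise ValueError("need 0 <= reject_below <= accept_above <= 1")
    selected: List[int] = []
    for det in detections:
        if det.confidence >= accept_above:
            selected.append(det.target_id)  # robot is confident: act autonomously
        elif det.confidence < reject_below:
            continue  # robot is confident it is not a target: skip it
        elif ask_human(det):
            selected.append(det.target_id)  # ambiguous case confirmed by the operator
    return selected


# Usage: the "operator" here is a stand-in callback that confirms target 2 only.
dets = [Detection(1, 0.95), Detection(2, 0.55), Detection(3, 0.10), Detection(4, 0.40)]
print(triage(dets, ask_human=lambda d: d.target_id == 2))  # [1, 2]
```

Raising `accept_above` or lowering `reject_below` widens the band sent to the operator. This trades more operator workload for fewer autonomous decisions.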

| Ref. | Task | Objective | Type of Study | Robot Team | Cooperation Strategy |
|---|---|---|---|---|---|
| [40] | N/A | N/A | Formal description | Variable number of UAVs | N/A |
| [41] | Irrigation control | N/A | Field tests | Variable number of UAVs | Coverage control |
| [42] | Weed mapping | N/A | Simulation | Variable number of UAVs | Coverage control |
| [43] | Field monitoring | N/A | Simulation | Variable number of UAVs | Individual random walk |
| [44,45] | Pest control | Maize | Architecture design | N/A | Central robot management system |
| [46] | Disease detection | N/A | Simulation | Four UAVs | Formation control |
| [47] | Surveying | N/A | Simulation and field tests | Up to 10 UAVs | Distributed mission planning |
| [48] | Mapping | Vineyard | Field tests | Three UAVs | Centralized path planning |
| [49] | Weed control | N/A | Simulation | Three UAVs | Centralized area decomposition |
| [50] | N/A | N/A | Field tests | Three UAVs | Formation control |
| [51] | Remote sensing | N/A | Simulation | Four UAVs | Formation control |
| [52] | Crop health surveying | N/A | Simulation and field tests | Three UAVs | Centralized path planning |
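
Coverage control and centralized area decomposition recur in the multi-UAV entries above. The sketch below shows the simplest centralized version. It assumes an obstacle-free rectangular field, split into equal-width strips with one strip per UAV. Each strip is covered with back-and-forth (boustrophedon) passes spaced one camera swath (`spacing`) apart. The function names and parameters are illustrative assumptions. Practical planners must also handle irregular field boundaries, turning manoeuvres, no-fly zones and battery endurance, and this sketch ignores all of them.

```python
from typing import List, Tuple

Waypoint = Tuple[float, float]  # (x, y) in metres, field frame


def sweep_strip(x_min: float, x_max: float, length: float, spacing: float) -> List[Waypoint]:
    """Back-and-forth passes over one strip, each pass parallel to the y-axis."""
    if spacing <= 0:
        raise ValueError("spacing must be positive")
    path: List[Waypoint] = []
    x = x_min + spacing / 2  # first pass centred half a swath in from the strip edge
    going_up = True
    while x < x_max:
        ends = [(x, 0.0), (x, length)]
        path.extend(ends if going_up else ends[::-1])
        going_up = not going_up  # alternate direction on each pass
        x += spacing
    return path


def plan_fleet(width: float, length: float, n_uavs: int, spacing: float) -> List[List[Waypoint]]:
    """Centrally split a width x length rectangle into equal strips, one per UAV."""
    if n_uavs < 1:
        raise ValueError("need at least one UAV")
    strip = width / n_uavs
    return [sweep_strip(i * strip, (i + 1) * strip, length, spacing) for i in range(n_uavs)]


plans = plan_fleet(width=60.0, length=100.0, n_uavs=3, spacing=10.0)
print(plans[0])  # [(5.0, 0.0), (5.0, 100.0), (15.0, 100.0), (15.0, 0.0)]
```

Because the strips are disjoint, the UAVs need no further coordination during flight. That independence is the main appeal of centralized decomposition over formation control.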

| Ref. | Task | Objective | Type of Study | Robot Team | Cooperation Strategy |
|---|---|---|---|---|---|
| [53] | N/A | N/A | Simulation | An application unit and a refilling unit | Leader–follower |
| [54] | N/A | N/A | Simulation | A master and a slave vehicle | Master–slave |
| [55,56] | N/A | N/A | Field trials | A master and a slave tractor | Master–slave |
| [57] | Planting, seeding, transplanting, and harvesting | Rice | Architecture design | A robot for data acquisition and two robot tractors for farming operations | Central robot management system |
| [58] | Harvesting | Citrus | Simulation | A virtual leader robot and three follower robots | Formation selection or individual trajectory selection |
| [59] | Herbicide application | N/A | Simulation | Multiple heterogeneous robots | Route planning |
| [60] | Seeding | N/A | Simulation and field tests | Variable number of robots | Central robot management system |
| [61] | Ploughing, irrigation, seeding, and harvesting | N/A | Lab experiments | Multiple heterogeneous robots | Central robot management system |
| [62,63] | Spraying, ploughing | N/A | Simulation | Variable number of robots | Use of information stored at checkpoints |
| [64] | Spraying | N/A | Lab experiments | A leader robot and a follower robot | Formation control |
| [65] | N/A | N/A | Simulation | Variable number of robots | Central robot management system |
| [66] | Harvesting, transport | Grapes | Simulation | One harvesting robot and two transport robots | Central robot management system |
| [67] | Weed management | N/A | Simulation | Variable number of robots | Central robot management system |
| [68] | Harvesting | Rice | Field trials | Two combine robots | Leader–follower |
| [69] | Harvesting | N/A | Simulation | Variable number of heterogeneous robots | Central robot management system |
| [70] | Monitoring | N/A | Simulation and field trials | Two robots | Route planning |
| [71] | Coordination | N/A | Simulation and field trials | Three robot tractors | Central robot management system |
| [72] | Spraying | N/A | Simulation with real data | 2 to 10 robots | Central robot management system |
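
The leader–follower and master–slave entries share one core idea: the follower tracks a point defined relative to the leader's pose. Below is a minimal sketch for planar ground vehicles. It assumes the follower receives the leader's pose (x, y, heading) over some communication link. It then steers toward the offset point with a simple proportional go-to-point controller. The offsets, gains and speed limit are hypothetical values. The cited systems use their own, more elaborate controllers.

```python
import math
from typing import Tuple

Pose = Tuple[float, float, float]  # (x, y, heading) in metres and radians


def follower_target(leader: Pose, along: float, lateral: float) -> Tuple[float, float]:
    """Target point for the follower, given as an offset in the leader's body frame.

    along < 0 places the follower behind the leader; lateral > 0 places it to the left.
    """
    x, y, th = leader
    tx = x + along * math.cos(th) - lateral * math.sin(th)
    ty = y + along * math.sin(th) + lateral * math.cos(th)
    return tx, ty


def follower_command(follower: Pose, target: Tuple[float, float],
                     k_v: float = 0.5, k_w: float = 1.5,
                     v_max: float = 2.0) -> Tuple[float, float]:
    """Proportional (v, omega) command steering a differential-drive follower to the target."""
    x, y, th = follower
    dx, dy = target[0] - x, target[1] - y
    distance = math.hypot(dx, dy)
    heading_error = math.atan2(dy, dx) - th
    # Wrap the heading error to [-pi, pi] so the robot turns the short way round.
    heading_error = math.atan2(math.sin(heading_error), math.cos(heading_error))
    v = min(k_v * distance, v_max)  # slow down as the gap closes
    omega = k_w * heading_error
    return v, omega


leader = (10.0, 0.0, 0.0)  # leader driving along +x
target = follower_target(leader, along=-5.0, lateral=3.0)
print(target)  # (5.0, 3.0): 5 m behind and 3 m to the left of the leader
print(follower_command((0.0, 3.0, 0.0), target))  # (2.0, 0.0): full speed, straight ahead
```

Recomputing `follower_target` at every control step from the latest leader pose gives the formation-keeping behaviour. Changing `lateral` alone switches the follower from trailing directly behind the leader to driving in an adjacent row.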

| Ref. | Task | Objective | Type of Study | Robot Team | Cooperation Strategy |
|---|---|---|---|---|---|
| [74] | Disease detection | Strawberry | Architecture design | One UAV and one UGV | UGV visiting locations identified by the UAV |
| [75] | Fertilization | Not specified | Simulation | One UAV and one UGV | UGV visiting locations identified by the UAV |
| [80,81] | Pest control | Winter cereal | Field trials | Two six-rotor drones and three tractors | UGVs visiting locations identified by the UAVs |
| [82,83] | Mapping | Not specified | Simulation with real data | One UAV and one simulated UGV | Map data fusion |
| [84] | Crop management | Lettuce | Architecture design | One UAV and one UGV | UGV visiting locations identified by the UAV |
| [85] | Inspection | Not specified | Architecture design | One UAV and one UGV | Transportation of UAV by the UGV |
| [86] | Crop status mapping | Not specified | Architecture design | One UAV and one UGV | Crop data fusion |
| [87] | N/A | N/A | Simulation | Three UAVs and one UGV | Leader–follower formation control |
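
Most UAV/UGV entries above follow the same division of labour. The UAV surveys the field quickly and flags locations of interest, and the UGV then travels to them to act or to take close-range measurements. The sketch below covers the ground-side step. It assumes the flagged locations arrive as planar coordinates in a shared field frame and that the UGV travels in straight lines between them. The greedy nearest-neighbour ordering is a fast heuristic chosen for illustration. It does not guarantee the shortest route, and it is not claimed to be the planner of any cited work.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]  # (x, y) in metres, shared field frame


def plan_ugv_visits(start: Point, flagged: List[Point]) -> List[Point]:
    """Order UAV-flagged locations for a UGV with the greedy nearest-neighbour rule."""
    remaining = list(flagged)
    route: List[Point] = []
    current = start
    while remaining:
        nearest = min(remaining, key=lambda p: math.dist(current, p))
        remaining.remove(nearest)
        route.append(nearest)
        current = nearest  # continue the search from the point just visited
    return route


def route_length(start: Point, route: List[Point]) -> float:
    """Total straight-line travel distance from start along the route."""
    total, current = 0.0, start
    for p in route:
        total += math.dist(current, p)
        current = p
    return total


# Hypothetical hotspots reported by a UAV survey.
hotspots = [(10.0, 0.0), (1.0, 0.0), (5.0, 0.0)]
route = plan_ugv_visits((0.0, 0.0), hotspots)
print(route)                            # [(1.0, 0.0), (5.0, 0.0), (10.0, 0.0)]
print(route_length((0.0, 0.0), route))  # 10.0
```

`math.dist` requires Python 3.8 or later. For larger sets of flagged locations, the greedy route can be improved afterwards with a local search such as 2-opt.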

| Ref. | Task | Objective | Type of Study | Mode of Operation | Manipulators |
|---|---|---|---|---|---|
| [88] | Harvesting | Kiwi | Field trials | Arm coordination | Four 3-DoF arms |
| [89] | Harvesting | Melon | Simulation | Arm coordination | Variable number of 3-DoF arms |
| [90] | Harvesting | Strawberry | Field trials | Arm coordination | Two single-rail 5-DoF arms |
| [91] | Harvesting | Apple | Simulation and lab experiments | Arm collaboration | Two 6-DoF arms |
| [92] | Harvesting | Apple | Simulation | Arm collaboration | Two 6-DoF arms |
| [93] | Harvesting | Aubergine | Lab experiments | Arm collaboration and coordination | Two 6-DoF arms |
| [94] | Harvesting | Apple | Lab experiments | Arm collaboration | An 8-DoF arm and a 2-DoF arm |
| [95] | Harvesting | Tomato | Field experiments | Arm collaboration | Two mirrored 3-DoF arms |
| [96] | Planting and watering | N/A | Field experiments | Arm coordination | Two 2-DoF arms |
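
For the arm coordination mode, each arm works through its own set of targets. One simple way to avoid conflicts between arms is to partition the canopy into disjoint horizontal ranges and give each arm only the fruits inside its range. The sketch below assumes fruit positions are already known from the vision system. The data layout and the ranges are hypothetical. Ordering each arm's queue along the row is an illustrative choice, not the harvest-order planning of any cited work.

```python
from typing import Dict, List, Tuple

Fruit = Tuple[int, float]  # (fruit id, x position along the row in metres)


def assign_to_arms(
    fruits: List[Fruit], arm_ranges: List[Tuple[float, float]]
) -> Tuple[Dict[int, List[int]], List[int]]:
    """Give each fruit to the arm whose horizontal range [lo, hi) contains it.

    Each arm's queue is ordered by x, so picking progresses along the row.
    Fruits outside every range are returned separately as unreachable.
    """
    plan: Dict[int, List[int]] = {arm: [] for arm in range(len(arm_ranges))}
    unreachable: List[int] = []
    for fruit_id, x in sorted(fruits, key=lambda f: f[1]):
        for arm, (lo, hi) in enumerate(arm_ranges):
            if lo <= x < hi:
                plan[arm].append(fruit_id)
                break
        else:  # no arm's range contained this fruit
            unreachable.append(fruit_id)
    return plan, unreachable


fruits = [(1, 0.2), (2, 0.8), (3, 0.4), (4, 1.5)]
plan, missed = assign_to_arms(fruits, arm_ranges=[(0.0, 0.5), (0.5, 1.0)])
print(plan)    # {0: [1, 3], 1: [2]}
print(missed)  # [4]
```

Keeping the ranges disjoint means the two arms never reach for the same fruit. A real system would still need collision checking near the shared boundary, where the arm links themselves can come close.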

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Lytridis, C.; Kaburlasos, V.G.; Pachidis, T.; Manios, M.; Vrochidou, E.; Kalampokas, T.; Chatzistamatis, S. An Overview of Cooperative Robotics in Agriculture. Agronomy 2021, 11, 1818. https://doi.org/10.3390/agronomy11091818
