In this section, the asymptotic properties (as the number of measurements tends to infinity) of the optimal regularized model are analyzed. The rate of convergence of the optimal identified model to the optimal model that does not depend on the measurement data is examined. The choice of the regularization parameter for the nonlinear Tikhonov regularization scheme is discussed, and the guaranteed model approximation rule is introduced. The resulting identification algorithm is described, and the applicability of the concept of sampling-instant-independent identification to two classes of polymer relaxation modulus models is analyzed. Next, the compactness assumption concerning the set of admissible model parameters, which is natural in the context of viscoelastic models of polymers and convenient when analyzing the mathematical properties of the method, is weakened by omitting the upper parameter constraints, which simplifies the numerical search for the optimal model parameters. Finally, the analytical properties of the presented identification method are verified by numerical studies. It is assumed that the “real” material is described by a bimodal Gauss-like relaxation spectrum, which is often used to describe the rheological properties of various polymers [

15,

16,

17] and polymers used in food technology [

18,

21,

22]. Generalized Maxwell and KWW models are determined using the noise-corrupted data from the randomized experiment. Both the asymptotic properties and the influence of the measurement noises on the solution have been studied.

3.1. Convergence Analysis

Now, we wish to investigate the stochastic-type asymptotic properties of the regularized approximation task given by Equation (13). Since for any

and, by (7), the expected value

Property 2 from [

14] directly implies the next proposition.

Proposition 1. Let . When Assumptions 1–7 are satisfied, then

where

means “with probability one”.

The result (17) means that the regularized identification index (10) is arbitrarily close, uniformly in over the set , to its expected value, c.f., Equation (16).

Now we can proceed to the main results. Proposition 1 enables us to relate the relaxation modulus model parameter

, solving the regularized task expressed by Equation (13) for the empirical index

to the parameter

that minimizes the regularized deterministic function

in the optimization task (15). Namely, from the uniform in

convergence of the regularized index

in Equation (17) for any

, we conclude immediately the following, c.f., Assertion in [

14] or Equation (3.5) in [

13]:

Proposition 2. Let . Assume that Assumptions 1–7 are in force, are independently and randomly selected from , each according to probability distributions with density . If the minima of the optimization tasks (15) and (13) are unique, then and for all . If the parameters solving the optimization tasks (15) and (13) are not unique, then for any convergent subsequence with , and for all and some , the asymptotic property (19) holds. By the compactness of (Assumption 2), for any , the existence of a convergent subsequence so that (20) holds is guaranteed.

Thus, for any fixed

, under the taken assumptions, the regularized parameter

of the relaxation modulus model is a strongly consistent estimate of some parameter

. Moreover, since the model

,

is Lipschitz on

uniformly in

, then the almost-sure convergence of

to the respective parameter

in Equation (18) implies that, c.f., ([

14]: Remark 2):

i.e., that

is a strongly uniformly consistent estimate of the best model

in the assumed class of models defined by Equation (2) for

.

Summarizing, when Assumptions 1–7 are satisfied, an arbitrarily precise approximation of the optimal relaxation modulus model (with the regularized parameter ) can be obtained (almost everywhere) as the number of measurements grows large, despite the fact that the real description of the relaxation modulus is completely unknown.
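The consistency property summarized above can be illustrated numerically. The following is a minimal sketch, not the identification algorithm of this paper: it assumes a hypothetical one-parameter model g·exp(−t), a hypothetical two-mode "true" modulus lying outside the model class, uniform random sampling on [0, T] with T = 5, and a quadratic regularizer λg². All names and numerical values are illustrative assumptions.

```python
import math
import random

T = 5.0        # hypothetical experiment horizon
LAM = 1e-3     # hypothetical regularization parameter
SIGMA = 0.1    # hypothetical noise standard deviation

def true_modulus(t):
    # Hypothetical "real" modulus, deliberately outside the model class.
    return 0.7 * math.exp(-t) + 0.3 * math.exp(-3.0 * t)

def basis(t):
    # The model g * basis(t) is linear in the single parameter g.
    return math.exp(-t)

def empirical_minimizer(n, rng):
    # Closed-form minimizer of (1/n) * sum (y_i - g*basis(t_i))^2 + LAM * g^2
    # for n sampling instants drawn uniformly from [0, T].
    num = den = 0.0
    for _ in range(n):
        t = rng.uniform(0.0, T)
        y = true_modulus(t) + rng.gauss(0.0, SIGMA)
        num += y * basis(t)
        den += basis(t) ** 2
    return (num / n) / (den / n + LAM)

def integral_minimizer(steps=200_000):
    # Minimizer of the measurement-independent index
    # (1/T) * int_0^T (G(t) - g*basis(t))^2 dt + LAM * g^2 (midpoint rule).
    h = T / steps
    num = den = 0.0
    for k in range(steps):
        t = (k + 0.5) * h
        num += true_modulus(t) * basis(t) * h
        den += basis(t) ** 2 * h
    return (num / T) / (den / T + LAM)

rng = random.Random(0)
g_star = integral_minimizer()
errors = {n: abs(empirical_minimizer(n, rng) - g_star) for n in (100, 1_000, 100_000)}
```

In this toy setting, `errors` collects the distance between the empirical and the measurement-independent parameter for growing numbers of measurements; the zero-mean noise only adds a constant to the expected index and therefore does not shift its minimizer.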

3.2. Rate of Convergence

Taking into account the convergence in Equations (18) and (20), the question immediately arises of how fast tends to some as grows large. The distance between the model parameters and will be estimated in terms of the regularized integral identification index (14), i.e., in the sense of the difference . We will examine how fast, for a given , the probability tends to zero as increases.

In

Appendix A.1, for any

and any

, using the well-known Hoeffding’s inequality [

24], the following upper bound is derived:

where

with some positive constant

defined through inequality (A4) and the constants

and

defined in Assumption 1 and Equation (3), respectively.

The inequality (22) shows connections between the convergence rate and the number of measurements

and the measurement noises. In particular, if

is fixed, then the bounds for

tend to zero at an exponential rate as

increases. The rate of convergence is higher for lower values of

,

and

, defined through inequality (A4) and Assumptions 5 and 6, respectively, i.e., when the measurement noises are weaker. Conversely, inspection of (22) shows that stronger measurement disturbances reduce the convergence rate. The decrease in the rate is greater the larger

and

are. This is not surprising, since with large noises, the measurements represent the true relaxation modulus poorly. Notice, however, that for a fixed

,

with

still tends to zero as

tends to infinity at a quasi-exponential rate.
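To make the exponential decay concrete, the sketch below evaluates the classical two-sided Hoeffding bound for the mean of N independent random variables with values in [a, b]. It only illustrates the inequality from [24]; the bound (22) has its own constants, which are not reproduced here, and the function names and numerical values are assumptions.

```python
import math

def hoeffding_bound(n, eps, a, b):
    # P(|mean - E[mean]| >= eps) <= 2 * exp(-2 * n * eps^2 / (b - a)^2)
    return min(1.0, 2.0 * math.exp(-2.0 * n * eps ** 2 / (b - a) ** 2))

def samples_needed(eps, delta, a, b):
    # Smallest n for which the bound above does not exceed delta.
    return math.ceil((b - a) ** 2 * math.log(2.0 / delta) / (2.0 * eps ** 2))

# The bound shrinks exponentially with the number of measurements n.
bounds = [hoeffding_bound(n, eps=0.1, a=0.0, b=1.0) for n in (100, 1_000, 10_000)]
n_min = samples_needed(eps=0.1, delta=0.05, a=0.0, b=1.0)
```

A wider range [a, b], which in the present context corresponds to stronger disturbances, enlarges the denominator of the exponent and therefore slows the decay for a fixed accuracy `eps`.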

3.3. Choice of the Regularization Parameter

Tikhonov regularization has been investigated extensively for the solution of both linear and nonlinear ill-posed problems (see [

11,

12,

22] for a survey on continuous regularization methods and references therein). For the minimization of the Tikhonov functional

for nonlinear ill-posed problems, usually, iterative methods are used. In [

22], the regularization schemes based on different iteration methods, e.g., nonlinear Landweber iteration, level set methods, multilevel methods and Newton-type methods, are presented. An analysis of the convexity of the Tikhonov functional, which guarantees global convergence of a wide class of numerical methods, has been carried out by Chavent and Kunisch [

25,

26].

There are different ways to decide on a suitable choice of the regularization parameter

[

12,

27]. Here, we apply the guaranteed model approximation rule, which does not depend on a priori knowledge about the noise variance. The idea of this rule was first applied by Stankiewicz [

28] for the identification of the relaxation time spectrum, and next, it has been successfully used for the Maxwell model identification task [

29].

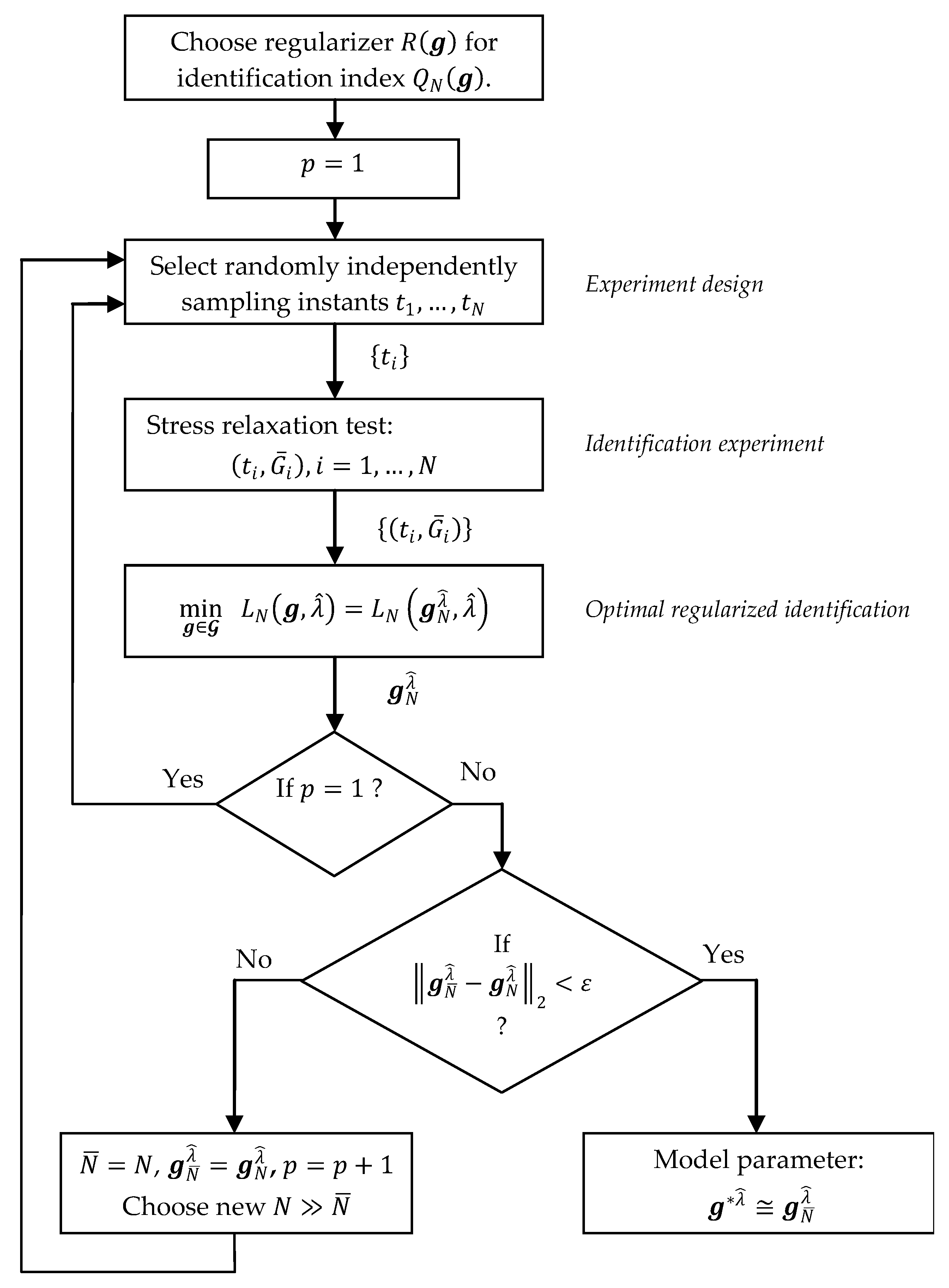

Guaranteed Model Approximation (GMA) Rule

Suppose that

is the optimal model parameter (usually not unique) minimizing the original empirical model approximation index

(8) without regularization. The GMA technique when applied to a regularized task (13) relies on choosing as the regularization parameter the

for which the assumed quality

of the model approximation index so that

is achieved for the minimizer

in the regularized optimization task (13), i.e.,

Thus, as a result, the vector of the optimal regularized model parameters

is determined. This rule is a quite natural strategy in the context of the relaxation modulus model approximation task, since the value of the mean-square index (8) is directly taken into account. A certain interpretation of the GMA rule is given by the following result; for the proof, see

Appendix A.2.

Proposition 3. Assume . The regularized solution , defined by (24), is the solution of the following optimization task

By Theorem 1, the GMA rule, Equation (24), relies on such a selection of the relaxation modulus model that the regularizer for the parameter

is the smallest among all admissible models, so that

. Therefore, the best smoothness (in the sense of the assumed regularizer

) of the model parameter vector

is achieved. The effectiveness of this approach in the context of Maxwell model identification has been verified in the earlier paper [

29], where the functional

(10) with the quadratic regularizer

was applied in the regularized optimization task (13).
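The GMA rule can be sketched as a one-dimensional search for the regularization parameter. The example below is a simplified illustration under stated assumptions, not the procedure used in the numerical studies: the model is a hypothetical one-parameter exponential g·exp(−t) with the regularizer λg², so the regularized minimizer has a closed form, and the assumed quality level is set 5% above the minimal empirical index. The data generator and all names are hypothetical.

```python
import math
import random

def make_data(n, rng, horizon=5.0, sigma=0.05):
    # Hypothetical noisy relaxation data sampled at random time instants.
    ts = [rng.uniform(0.0, horizon) for _ in range(n)]
    ys = [0.7 * math.exp(-t) + 0.3 * math.exp(-3.0 * t) + rng.gauss(0.0, sigma)
          for t in ts]
    return ts, ys

def fit(ts, ys, lam):
    # Closed-form minimizer of (1/N) * sum (y_i - g*exp(-t_i))^2 + lam * g^2.
    n = len(ts)
    num = sum(y * math.exp(-t) for t, y in zip(ts, ys)) / n
    den = sum(math.exp(-2.0 * t) for t in ts) / n
    return num / (den + lam)

def index(ts, ys, g):
    # Empirical mean-square approximation index without regularization.
    return sum((y - g * math.exp(-t)) ** 2 for t, y in zip(ts, ys)) / len(ts)

def gma_lambda(ts, ys, q_target, lam_hi=1.0, iters=200):
    # The index at the regularized minimizer grows with lam in this toy model,
    # so bisection finds the lam at which it reaches the assumed level q_target.
    lam_lo = 0.0
    while index(ts, ys, fit(ts, ys, lam_hi)) < q_target:
        lam_hi *= 2.0  # enlarge the bracket until the target is exceeded
    for _ in range(iters):
        mid = 0.5 * (lam_lo + lam_hi)
        if index(ts, ys, fit(ts, ys, mid)) < q_target:
            lam_lo = mid
        else:
            lam_hi = mid
    return 0.5 * (lam_lo + lam_hi)

rng = random.Random(1)
ts, ys = make_data(500, rng)
q_min = index(ts, ys, fit(ts, ys, 0.0))  # minimal index, no regularization
q_target = 1.05 * q_min                  # assumed quality level
lam_gma = gma_lambda(ts, ys, q_target)
g_gma = fit(ts, ys, lam_gma)
```

For nonlinear models such as those considered here, `fit` would be replaced by a numerical solver of the regularized task, while the outer search over the regularization parameter could stay the same as long as the index at the regularized minimizer is monotone in that parameter.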

3.5. Applicability to Polymer Relaxation Modulus Models

The exponential Maxwell model and the exponential stretched Kohlrausch–Williams–Watts model are the best known linear rheological models of polymers [

35]. To approximate nonexponential relaxation, inverse power laws were also used [

36,

37], especially the fractional Scott–Blair model [

38]. Fractional viscoelasticity, described for example by the fractional Maxwell model, appears to be an appealing tool to describe the relaxation processes in polymers exhibiting both exponential and nonexponential types [

38]. However, the applicability of the idea of identification that is asymptotically independent of the time instants used in the stress relaxation experiment to the fractional order model determination will be the subject of a separate paper.

The generalized Maxwell model, with relaxation modulus described by a linear combination of exponential terms, is still one of the most widely used rheological models of polymers. Application examples from just the last few years include studies on the long-term behavior of semi-crystalline bio-based fibers [

39]; modeling the stress relaxation in stress-induced polymer crystallization [

40]; a description of the stress relaxation after low- and high-rate deformation of polyurethanes [

41]; and studying viscoelastic properties of hydroxyl-terminated polybutadiene (HTPB)-based composite propellants [

42]. The Maxwell model has been successfully applied to stress relaxation predictions of many polymer composites [

5]. For example, to the modeling of the viscoelastic properties of barium titanate (BTO)-elastomer (Ecoflex) composites [

43]; modern photocurable MED610 resin, which is used mainly in medicine and dentistry [

44]; perfluorosulfonic acid-based materials (applied in proton exchange membrane fuel cells) [

45]; and the viscoelastic behavior of virgin EPDM/reclaimed rubber blends [

46].

The Kohlrausch–Williams–Watts (KWW) relaxation function has been widely used to describe the relaxation behavior of glass-forming liquids and complex systems [

47]. Despite the ongoing debate on the application of the KWW function to relaxation phenomena in different polymers [

48,

49], in particular to liquids and glasses, this model is widely used. Examples of research published this year alone include modeling of the mechanical properties of highly elastic and tough polymer binders with interweaving polyacrylic acid (PAA) with a poly(urea-urethane) (PUU) elastomer [

50]; modeling of the relaxation curve describing the local dynamics of ions and hydration water near the RNA interface [

51]; studying the relaxation processes for bulk antipsychotic API aripiprazole (APZ) and the active pharmaceutical ingredients (API) incorporating anodic aluminum oxide (AAO) or silica (SiO

2) systems that are collected during the “slow-heating” procedure [

52]; description of the rheological properties of the cross-linked blends of Xanthan gum and polyvinylpyrrolidone-based solid polymer electrolyte [

53]; studies concerning the stress relaxation process in annealed metallic and polymer glasses [

54]; and the stress relaxation behavior of glass-fiber-reinforced thermoplastic composites [

55].

Although these models are continuous and differentiable with respect to the parameters (Assumption 3) and although the parameters are positive or non-negative due to the physical interpretation, the satisfaction of Assumptions 2–5, which are related to the models and the sets of their admissible parameters, is not obvious. We analyze them separately for the two classes of models.

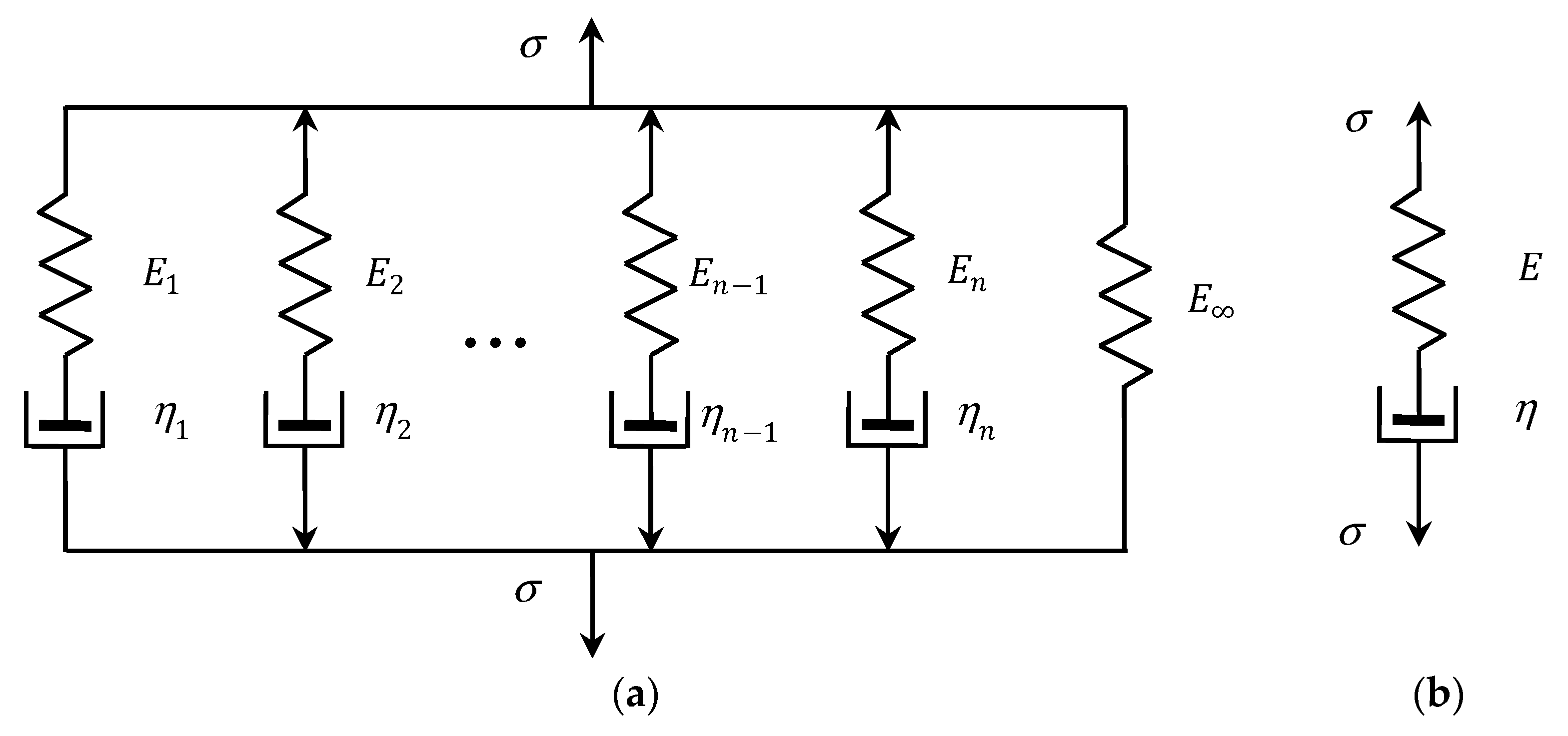

3.5.1. Generalized Maxwell Model

The generalized discrete Maxwell model, which is used to describe the relaxation modulus

of linear viscoelastic materials, consists of a spring and

Maxwell units that are connected in parallel, as illustrated in

Figure 2a. A Maxwell unit is a series arrangement of Hooke and Newton’s elements: an ideal spring in a series with a dashpot, c.f.,

Figure 2b. This model presents a relaxation of an exponential type given by a finite Dirichlet–Prony series [

3]:

with the vector of the model parameters defined as

where

,

and

are the parameters representing the elastic modulus (relaxation strengths), relaxation frequencies and equilibrium modulus (long-term modulus), respectively. The elastic modulus

and the partial viscosity

associated with the

Maxwell mode determine the relaxation frequency

and the relaxation time

. The restriction that these parameters are non-negative and bounded must be given to satisfy the physical meaning. Thus,

, where

is an arbitrary compact subset of

.

For the function

(26), for any

, we have

, and by Equations (26) and (27), the gradient

In view of the boundedness of the function for any (whether is bounded or not), Assumptions 2–4 are satisfied for any , provided that is a compact subset of the subspace .
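As an illustration, the finite Dirichlet–Prony series and its gradient can be coded directly. This is a minimal sketch; the ordering of the parameters (relaxation strengths, then relaxation frequencies, then the equilibrium modulus) and the numerical values are assumptions made for the example only.

```python
import math

def maxwell_modulus(t, strengths, frequencies, e_inf):
    # Finite Dirichlet-Prony series: E_inf + sum_j E_j * exp(-v_j * t)
    return e_inf + sum(e * math.exp(-v * t) for e, v in zip(strengths, frequencies))

def maxwell_gradient(t, strengths, frequencies):
    # Partial derivatives with respect to E_j, v_j and E_inf, in that order.
    d_strengths = [math.exp(-v * t) for v in frequencies]
    d_frequencies = [-t * e * math.exp(-v * t) for e, v in zip(strengths, frequencies)]
    return d_strengths + d_frequencies + [1.0]

# Hypothetical five-parameter model: two Maxwell modes plus the equilibrium modulus.
E, V, E_INF = [2.0, 1.0], [0.5, 5.0], 0.3
values = [maxwell_modulus(t, E, V, E_INF) for t in (0.0, 1.0, 10.0, 100.0)]
```

The derivatives with respect to the relaxation frequencies contain the factor t·exp(−vt), which stays bounded in t for any positive frequency v.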

By (26), the following inequalities hold

whence, by virtue of the inequality

[

56] of the vector norm equivalence, where

is the 1-norm (also called the Taxicab norm or Manhattan norm) in the space

, we immediately obtain the following property:

Property 1. For the relaxation modulus (26) of the Maxwell model, the estimation holds for an arbitrary and arbitrary vector (27) of non-negative model parameters.

Property 1 and similar properties for subsequent models allow us to omit the upper constraints imposed on the model parameters in the optimization task (13) when the smoothing functions (11) or (12) are used.

3.5.2. KWW Model

The exponential stretched Kohlrausch–Williams–Watts (KWW) model [

8,

9] describes the relaxation modulus as follows:

where an adjustable parameter

is the initial relaxation modulus, a stretching parameter

is the exponent-spread factor, which quantitatively characterizes the non-Debye (nonexponential) character of the relaxation function, and

and

are, respectively, the characteristic relaxation time and frequency. Thus, the vector of the KWW model’s non-negative parameters is defined as

For any

function,

(28) is continuous and differentiable with respect to

; the gradient is as follows:

and

. The boundedness of the first gradient element is obvious. By the inequality

, which holds for any

, we have

The right constraint in (30) is bounded whenever the relaxation frequency

, where

is a small positive constant. However, for any positive

and

, the derivative

becomes unbounded when

. The third derivative

for any positive

and

tends to zero through negative values when

and is bounded for any bounded parameter

. Summarizing, the compact set of admissible model parameters is such that

for an arbitrary

. By assumption, a stretching parameter

; however, from the model identification point of view, it is convenient to expand this set to

. For

, the relaxation modulus model is trivial, and

for any

, i.e., its boundedness, differentiability and continuity are preserved.

The next property follows directly from the inequality , yielded by (28).

Property 2. For the relaxation modulus (28) of the KWW model, the estimation holds for an arbitrary and arbitrary vector (29) of non-negative model parameters.
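The stretched exponential and its partial derivatives can be sketched as follows. The code assumes the common form G0·exp(−(vt)^β) with the relaxation frequency v and the parameter order (G0, β, v); both the order and the numerical values are illustrative assumptions.

```python
import math

def kww_modulus(t, g0, beta, v):
    # Stretched exponential: G0 * exp(-(v * t) ** beta)
    return g0 * math.exp(-((v * t) ** beta))

def kww_gradient(t, g0, beta, v):
    # Partial derivatives with respect to G0, beta and v (valid for t > 0, v > 0).
    x = (v * t) ** beta
    decay = math.exp(-x)
    d_g0 = decay
    d_beta = -g0 * x * math.log(v * t) * decay
    d_v = -g0 * beta * x / v * decay
    return [d_g0, d_beta, d_v]

# Hypothetical parameter values; the modulus never exceeds its initial value G0.
curve = [kww_modulus(t, 1.5, 0.6, 0.4) for t in (0.0, 1.0, 10.0, 100.0)]
```

Because the exponential factor never exceeds one for non-negative arguments, every value in `curve` is bounded from above by the initial modulus.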

3.6. Unconstrained Optimization

The compactness of the set of admissible model parameters

was significant for the convergence results (18)–(21). Due to the compactness of

, the existence of the optimal solutions to regularized tasks (13) and (15) is obvious. However, if the quadratic regularizers expressed by Equations (11) or (12) are applied, the upper constraints on the model parameters can be neglected, provided that they are not motivated by the parameters’ physical meaning. The following two lemmas, proved in

Appendix A.3 and

Appendix A.4, are instrumental:

Lemma 1. Let . If Assumptions 1–3 and 6 hold, the weight matrix of the regularizer (11) is positive definite and the relaxation modulus model (2) is such that for any , where , then a compact subset of exists: where so that where is the minimal eigenvalue of .

Lemma 2. Let . If Assumptions 1–3 are satisfied, the weight matrix of the regularizer (11) is positive definite, and the relaxation modulus model (2) is such that for any , where , then a compact subset of exists: where so that

Letting , we obtain a regularizer (12) instead of that expressed by (11), and therefore, the above lemmas also hold for the regularizer when applied during numerical studies.

Since the quality indices and are continuous with respect to , and the sets (34) and (31) are compact in the space , in view of the above lemmas, for any there exist solutions to the upper-constrained regularized optimal approximation tasks given by the right-hand sides of Equations (33) and (36) as well as to the upper-unconstrained optimal approximation tasks expressed by the left-hand sides of these equations. Since , further, we have . Therefore, both the optimization tasks (33) and (36) can be reduced to the set . If the previously defined set of admissible model parameters is such that , then the upper constraints in the optimization tasks (15) and (13) can be neglected in view of Lemmas 1 and 2. If is not a subset of , then, for example, expanding the set of model parameters to can be used to simplify numerical optimization tasks.

3.7. Numerical Studies

We now present the results of the numerical studies of the asymptotic properties of the identification algorithm and the influence of the measurement noises on the optimal model. In the context of an ill-posed problem, simulation studies allow us, apart from the theoretical analysis carried out above, to demonstrate the validity and effectiveness of the proposed identification method.

It is assumed that material viscoelastic properties are described by the double-mode Gauss-like relaxation spectrum. The Gaussian-like distributions were used to describe the rheological properties of many polymers, e.g., poly(methyl methacrylate) [

16], polyethylene [

15], native starch gels [

20], polyacrylamide gels [

17], glass [

57] and carboxymethylcellulose [

19]. The spectra of a Gaussian character were determined for bimodal polyethylene by Kwakye-Nimo et al. [

15] and for soft polyacrylamide gels by Pérez-Calixto et al. [

17]. Also, the spectra of various biopolymers determined by many researchers are Gaussian in nature, for example, cold gel-like emulsions stabilized with bovine gelatin [

18], fresh egg white-hydrocolloids foams [

19], some (wheat, potato, corn and banana) native starch gels [

20], xanthan gum water solution [

19], carboxymethylcellulose (CMC) [

19], wood [

58,

59], and fresh egg white-hydrocolloids [

19]. Three-, five- and seven-parameter Maxwell models (26) were determined. Next, the KWW model (28) was considered.

The “real” material and all the models were simulated in MATLAB R2023b, using the special function erfc for the Gauss-like distribution.

3.8. Simulated Material

Consider viscoelastic material of a relaxation spectrum, described by the double-mode Gauss-like distribution considered in [

15,

60,

61]:

inspired by polyethylene data from [

15], especially the HDPE 1 sample from [

15] (Table 1 and Figure 8b), where the parameters are as follows: [

60,

61]:

,

,

,

,

and

. It is shown in [

60] that the related real relaxation modulus is

Following [

60,

61], the time interval

seconds is assumed for numerical simulations. Therefore, the weighting function in the index

(5) is

.

In the simulated stress relaxation experiment, sampling instants were selected randomly according to the uniform distribution on . Additive measurement noises were generated independently by random choice with a normal distribution, with zero mean value and variance . For the analysis of the asymptotic properties of the scheme has been used. In order to study the influence of the noises on the parameters of the optimal regularized models, the noises have been generated with the standard deviation .

For every class of models (Maxwell, KWW) and for any pair

, the simulated experiment was performed. Next, for any class of models and any

, the regularization parameter

was selected according to the GMA rule, using the experiment data for the smallest

. For this purpose, the parameter vectors

, minimizing the mean quadratic identification nonregularized index

(8) in the optimization task (9), were determined for

. Through the inspection of the relation between elements of the vector

, the diagonal positive definite weight matrices

were selected for the regularizer

(12). Next, the model approximation indices

for the GMA rule (24) were assumed, and optimal regularization parameters

related to the noises of the standard deviations

were found, so that the GMA condition (24) holds. For successive models (three-, five- and seven-parameter Maxwell models and the KWW model), the vectors

, indices

and assumed

and the regularization parameters

are given in

Table A1,

Table A2,

Table A3 and

Table A4 in

Appendix B. In the same tables, the elements of the vectors

which solve the optimization task (15) were presented, together with the related integral model approximation indices

. In the last rows of these tables the diagonal elements

,

, of the weight matrices

(38) were also given.

3.9. Asymptotic Properties

Then, for every class of models and any pair

, the optimal model parameter

was determined by solving the regularized identification task (13) for

. The elements of the parameter vectors

, the indices

and

, as well as the relative percentage errors of the approximation of the measurement-independent parameters

, defined as

are given in

Table 1,

Table 2,

Table 3 and

Table 4 for successive classes of models, and the weakest noises

.
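A relative percentage error of this kind can be computed as sketched below. Since the exact form of definition (39) is not reproduced here, the sketch assumes the Euclidean norm of the parameter difference divided by the Euclidean norm of the measurement-independent parameter; the function name is hypothetical.

```python
import math

def relative_percentage_error(g_est, g_ref):
    # 100 * ||g_est - g_ref||_2 / ||g_ref||_2, with the Euclidean norm assumed.
    diff = math.sqrt(sum((a - b) ** 2 for a, b in zip(g_est, g_ref)))
    ref = math.sqrt(sum(b ** 2 for b in g_ref))
    return 100.0 * diff / ref
```

For example, an estimate that deviates from the reference vector by one tenth of its length in the same direction gives an error of 10%.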

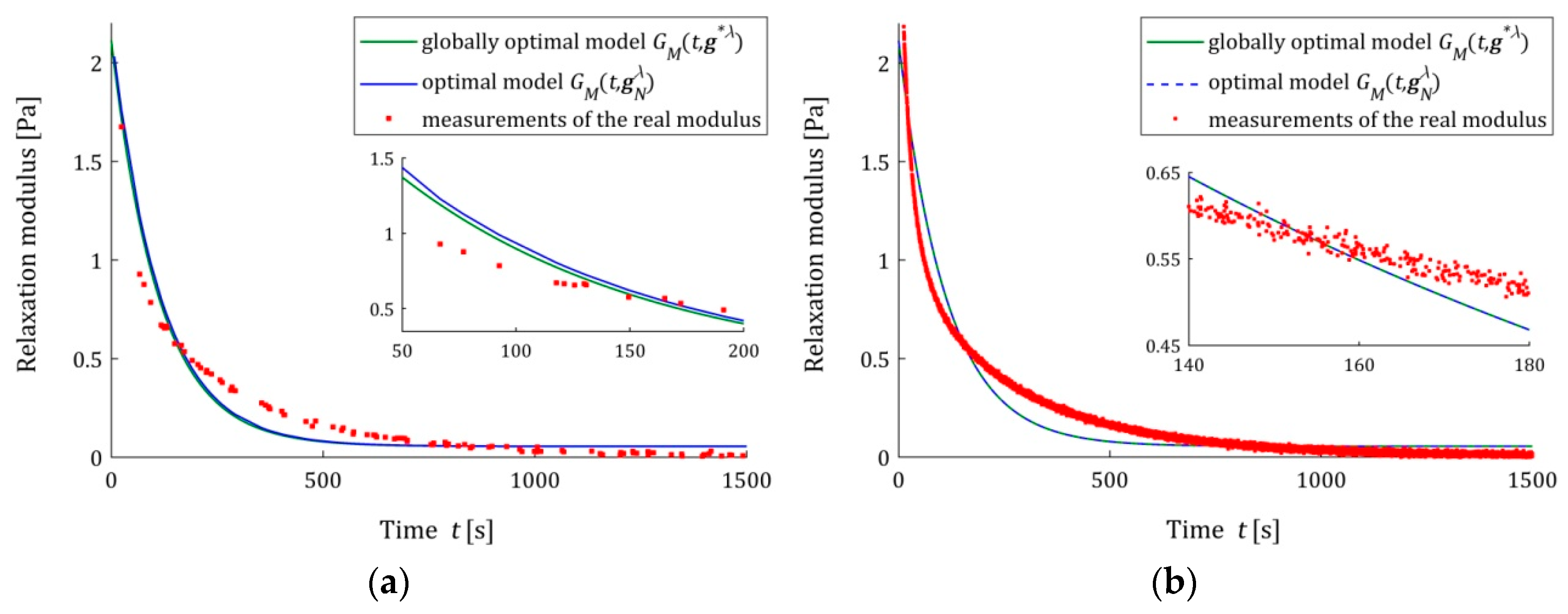

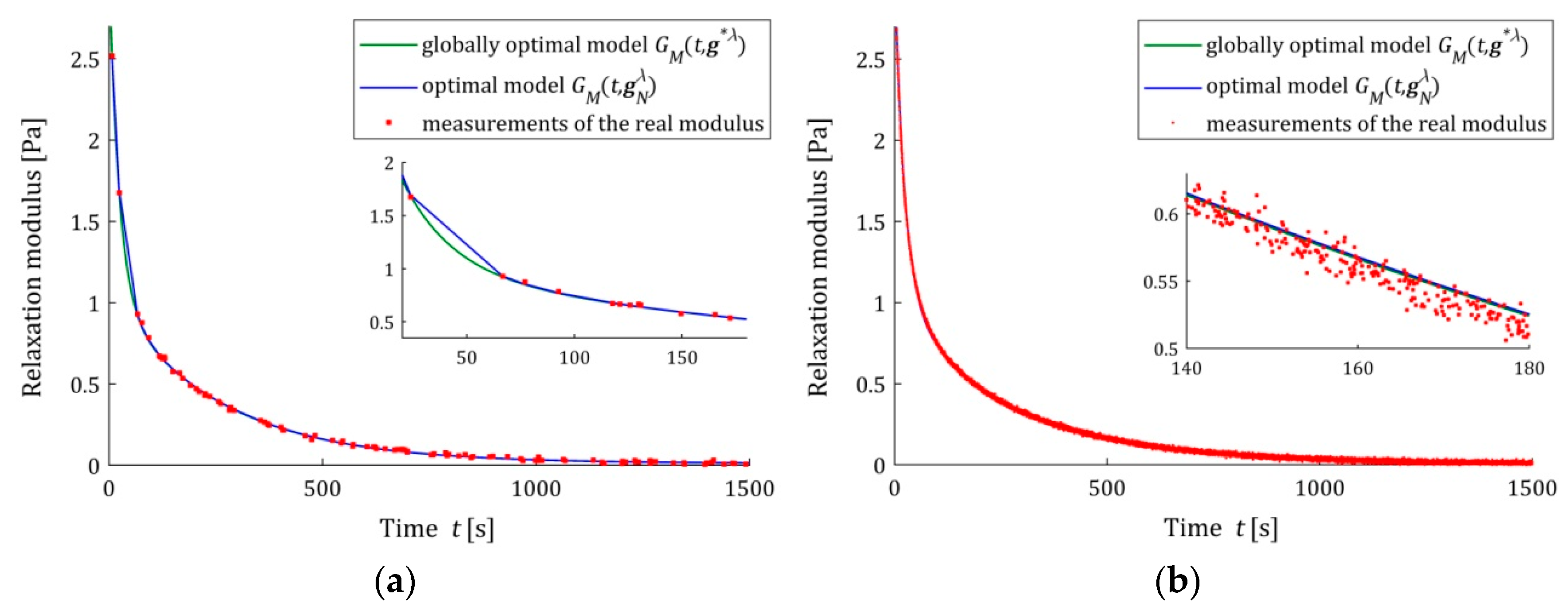

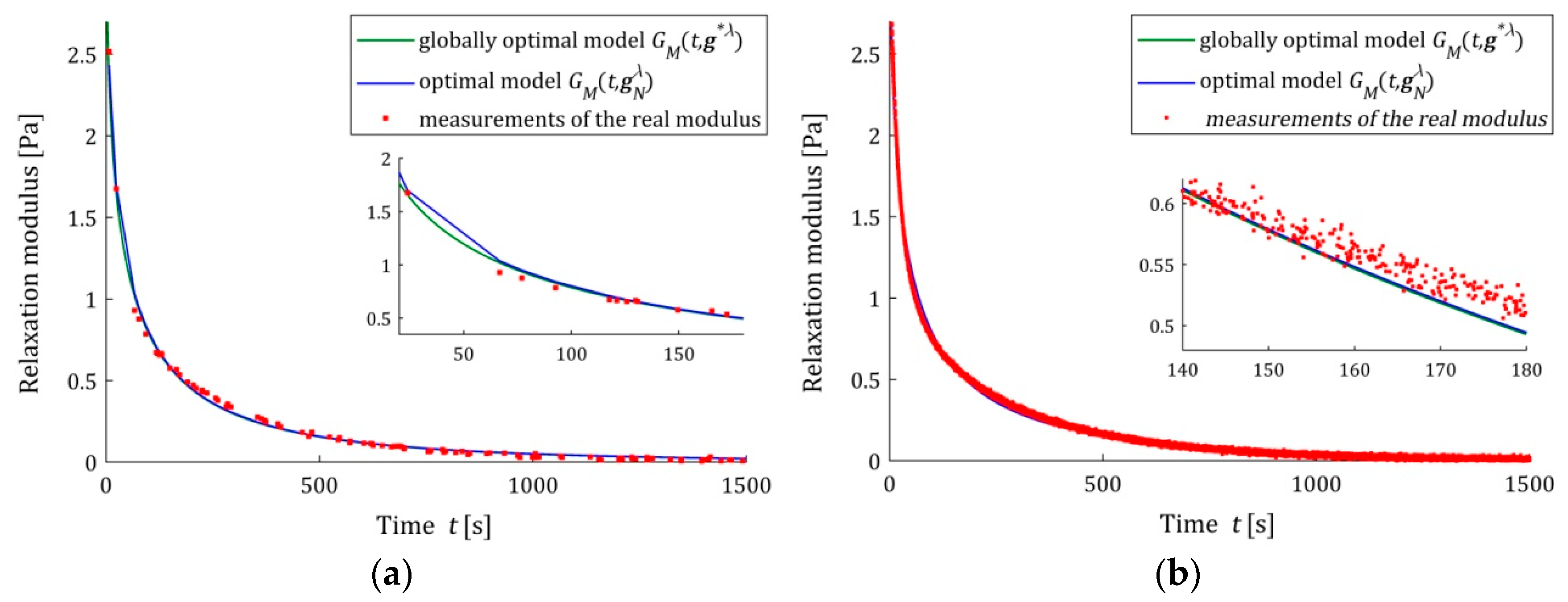

The analysis of the identification indices

and

indicates that the five- and seven-parameter Maxwell models provide a much better approximation of the measurement data and the real relaxation modulus

(37) than the three-parameter model and a better approximation than the KWW model. This is illustrated in

Figure 3,

Figure 4 and

Figure 5, where for the successive classes of model measurements, the

of the real modulus

fitted by the optimal models

are plotted for two numbers of measurements (

and

) and the strongest noises. The plots for the seven-parameter Maxwell model being visually almost identical to that for the five-parameter model and providing an excellent data fit, especially for

measurements, are omitted here; compare also indices

and

from

Table 2 and

Table 3. Although for

measurements, the models

and

differ in the initial time interval (see small subplots), for

measurements, they are practically identical. This applies to the five-parameter Maxwell model with an almost excellent fitting (

Figure 4b), as well as to the KWW (

Figure 5b) and three-parameter Maxwell (

Figure 3b) models with lower quality of the measurement data fit.

We see that also for the strongest noises, the model

tends to

as

increases, even when the accuracy of the measurement data approximation is not excellent for a given class of models. Not only for

, but also for smaller numbers of measurements, these models coincide, which is confirmed by the

(39) values from

Table 1,

Table 2,

Table 3 and

Table 4. The relative percentage errors

(39) of the parameters

and

discrepancy is smaller than 0.1% for

for the three-parameter Maxwell model with the worst fit to the measurement data and for

for the KWW model. However, for the models that are more accurate in terms of the

and

indices, namely the five- and seven-parameter Maxwell models,

(39) does not exceed for

measurements, respectively, 0.02% and 0.001%.

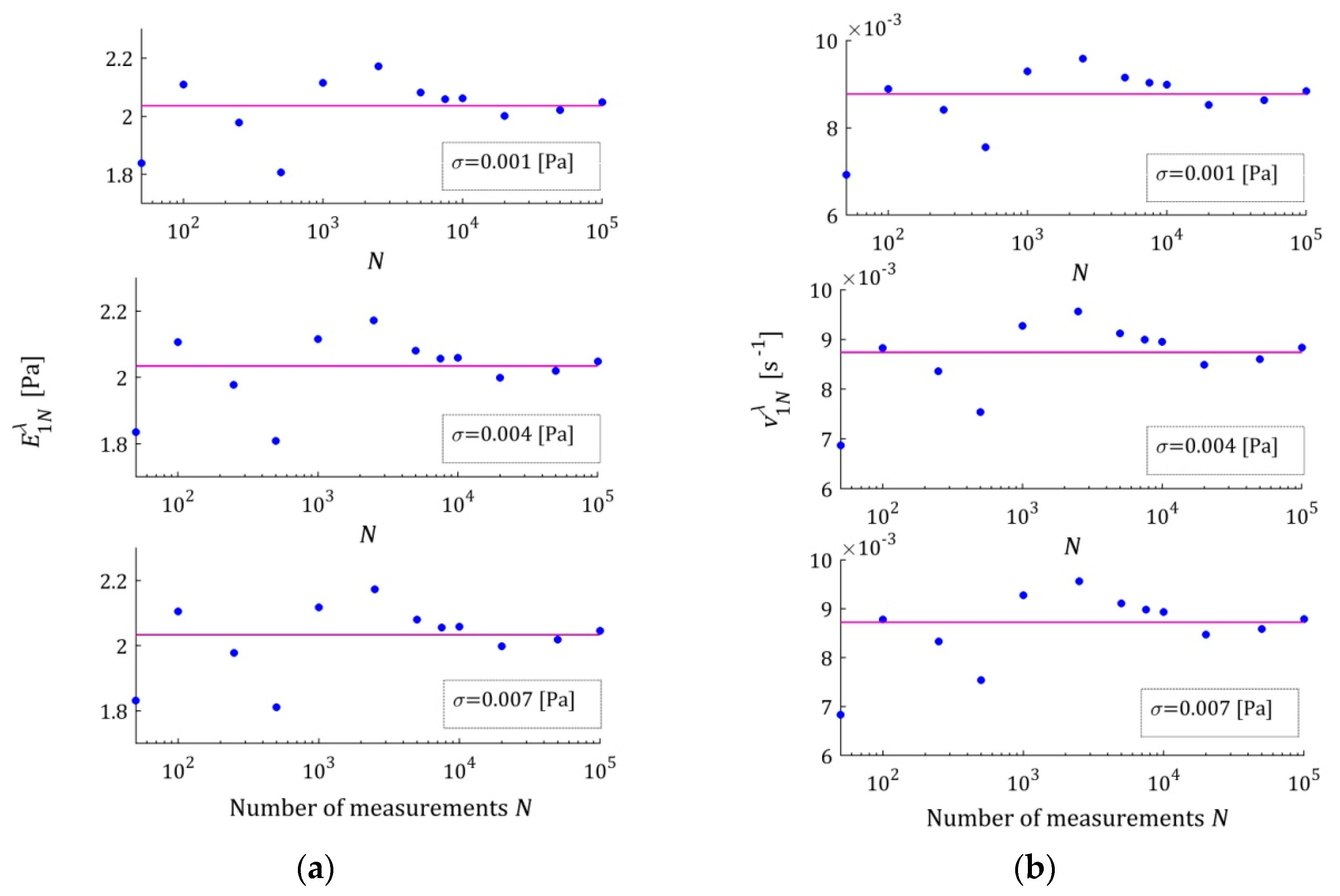

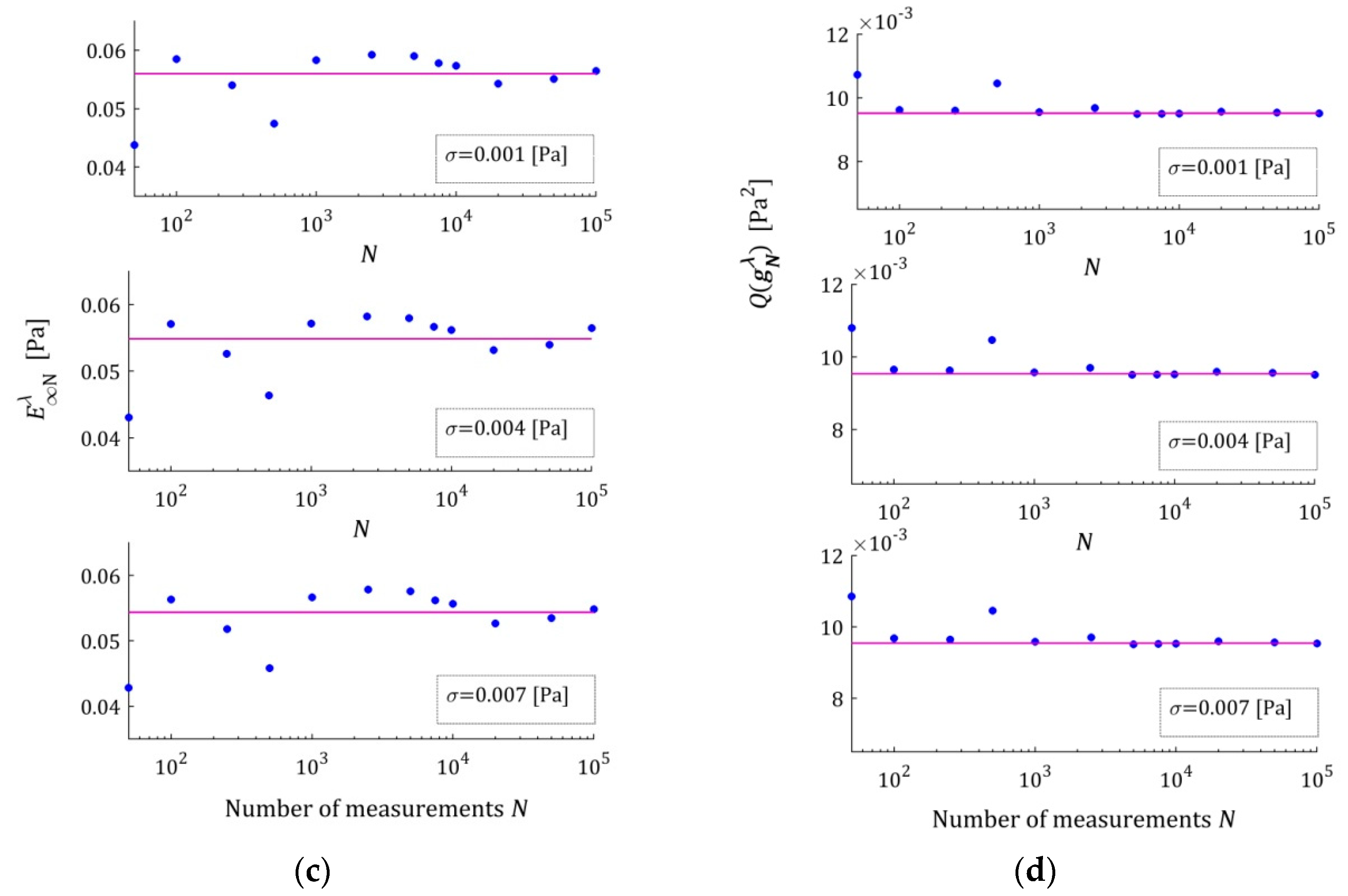

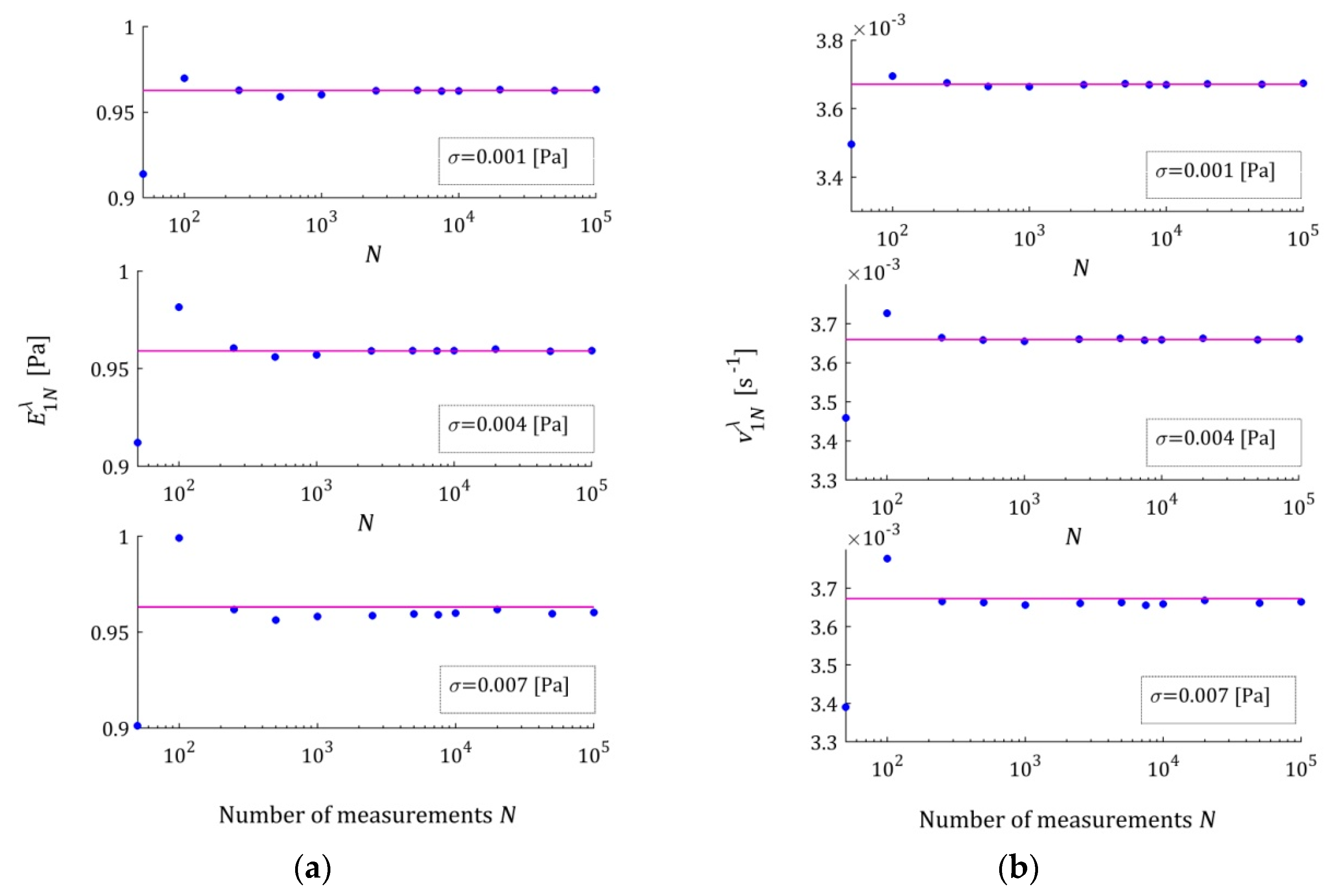

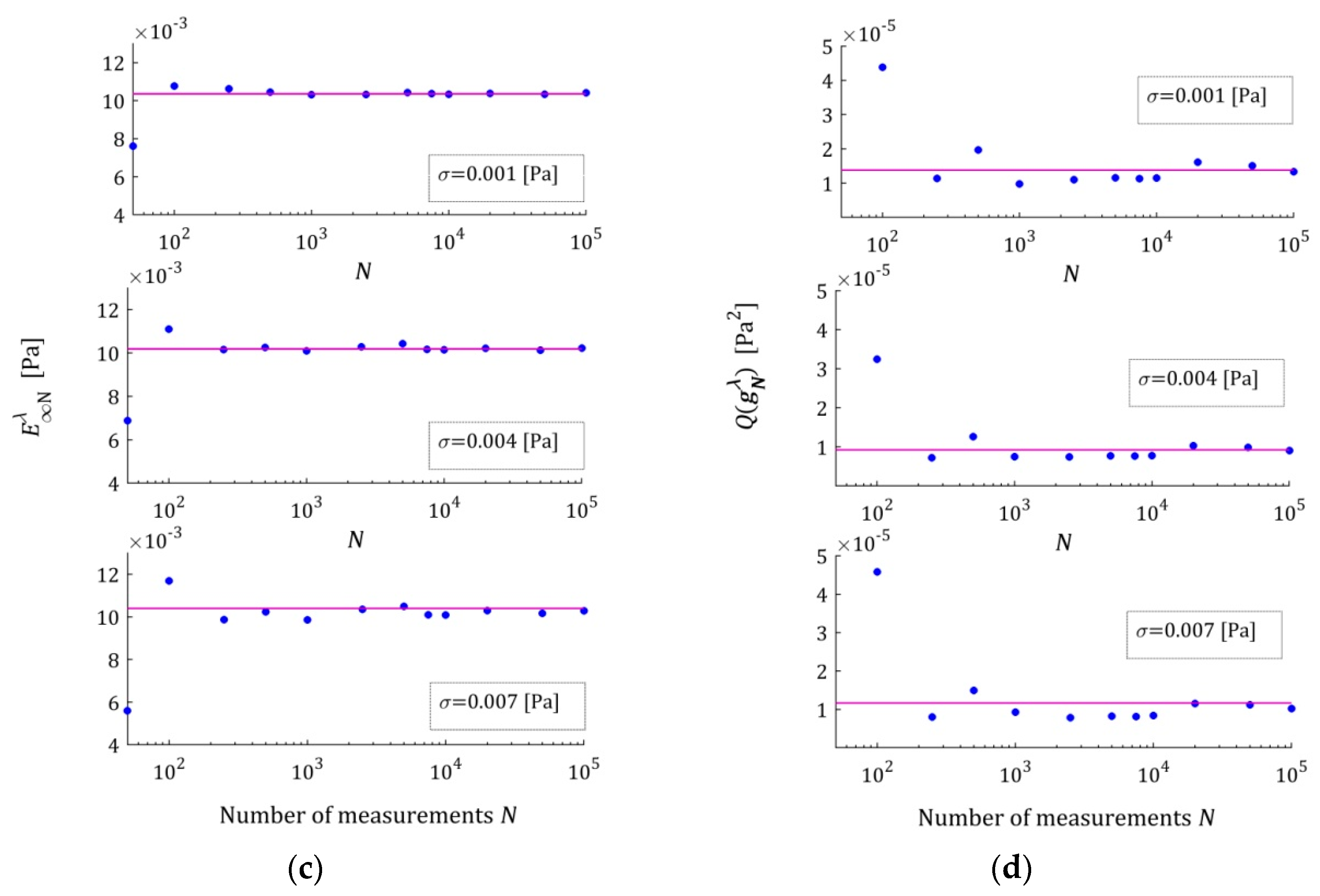

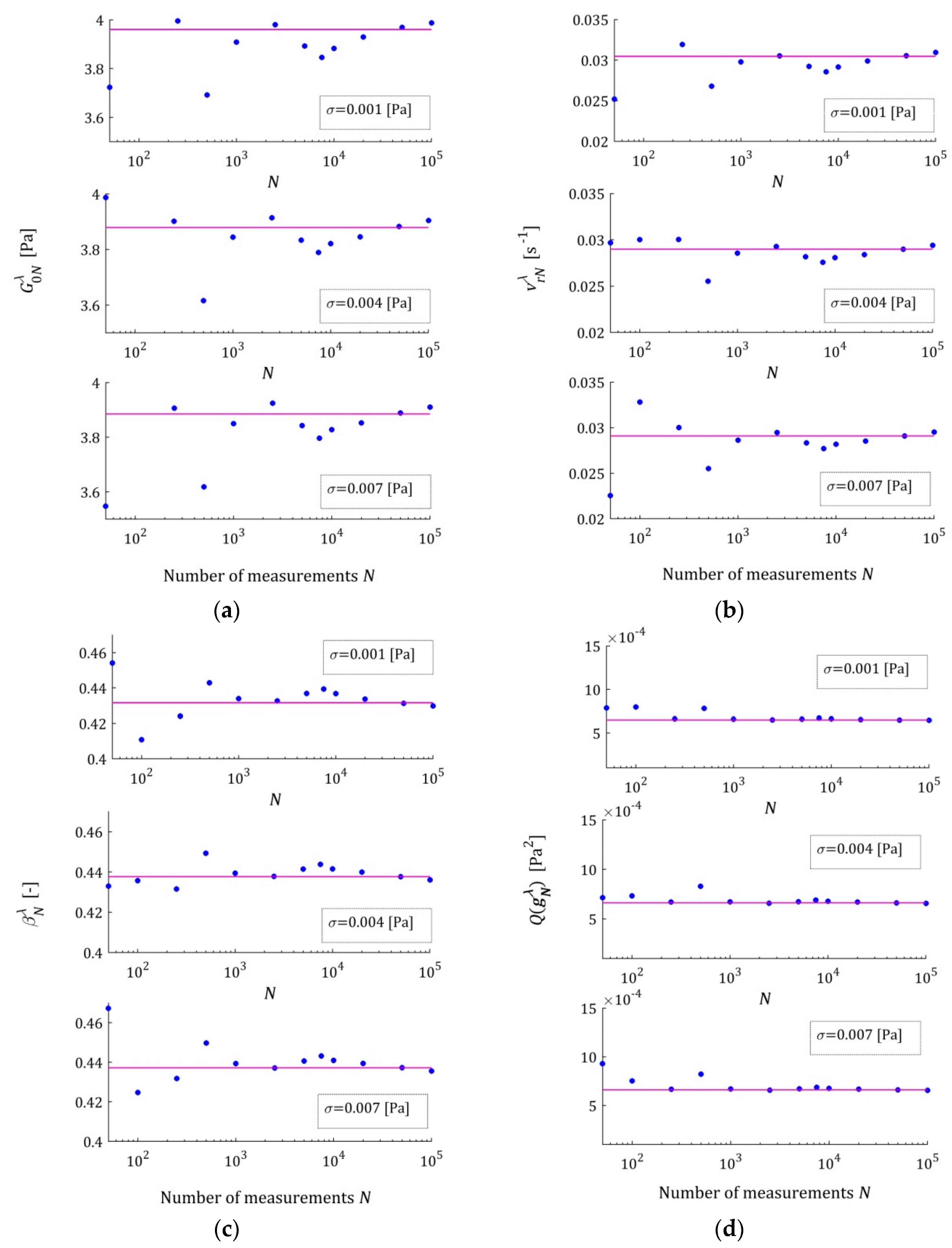

The dependence of the optimal model parameters

on the number of measurements

for the noises of

for the three-parameter Maxwell model is illustrated by

Figure A1a–c in

Appendix B.1. For the five-parameter Maxwell model, the elements of the optimal parameters

as the functions of

are illustrated by

Figure A2a–c,

Appendix B.2. Only extreme—the strongest and weakest—disturbances are considered here to limit the size of the figure. For all the noises of

, the elements of

for the seven-parameter Maxwell model are depicted in

Figure A3 and

Figure A4a–c in

Appendix B.3, while for the KWW model, they are depicted in

Figure A5a–c,

Appendix B.4. In any subplot, the values of the respective parameters of the globally optimal model

are plotted with horizontal violet lines. In these figures, a logarithmic scale is used on the horizontal axes. The asymptotic properties are also illustrated by

Figure A1d,

Figure A2d,

Figure A4d and

Figure A5d juxtaposing, for respective models, the integral index

as a function of

with the index

, marked with horizontal lines. In these figures, the caret for the

variable has been omitted to simplify the description of the plot axes.

These plots confirm the asymptotic properties of the proposed identification algorithm. A better model fit, for the five- and seven-parameter Maxwell models , implies smaller fluctuations in the estimates of its parameters and their faster convergence to the parameters of the sampling-point-independent model . This property translates into the speed of the convergence into .

3.10. Noise Robustness

Now, we are interested in the noise robustness properties of the regularized identification algorithm when the concept of experiment randomization is applied to determine the standard relaxation modulus models.

To examine the impact of the measurement noises for every pair , the experiment (simulated stress relaxation test) was repeated times, generating the measurement noises independently by random choice with a normal distribution, with zero mean value and variance .

Next, to estimate the approximation error of the relaxation modulus measurements for the

-element sample, the mean optimal relaxation modulus approximation error was determined:

where

is the vector of optimal model parameters determined for

the j-th experiment repetition for a given pair

,

.

The mean optimal integral error of the true relaxation modulus approximation

was also computed.
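The averaging over repeated experiments can be sketched as a small Monte Carlo loop. The example is a simplified illustration under stated assumptions: a hypothetical two-mode "true" modulus, a one-parameter model g·exp(−t) with the regularizer λg² (so that each fit has a closed form), uniform sampling on a hypothetical interval, and 50 repetitions. None of these values are taken from the study.

```python
import math
import random

HORIZON = 5.0  # hypothetical experiment horizon

def true_modulus(t):
    # Hypothetical "real" relaxation modulus.
    return 0.7 * math.exp(-t) + 0.3 * math.exp(-3.0 * t)

def fit_once(n, sigma, lam, rng):
    # One randomized experiment followed by the closed-form regularized fit
    # of the one-parameter model g * exp(-t).
    num = den = 0.0
    for _ in range(n):
        t = rng.uniform(0.0, HORIZON)
        y = true_modulus(t) + rng.gauss(0.0, sigma)
        num += y * math.exp(-t)
        den += math.exp(-2.0 * t)
    return (num / n) / (den / n + lam)

def integral_error(g, steps=2_000):
    # Midpoint-rule approximation of (1/HORIZON) * int (G(t) - g*exp(-t))^2 dt.
    h = HORIZON / steps
    total = 0.0
    for k in range(steps):
        t = (k + 0.5) * h
        total += (true_modulus(t) - g * math.exp(-t)) ** 2
    return total * h / HORIZON

def mean_integral_error(n, sigma, repetitions=50, lam=1e-3, seed=0):
    # Mean of the integral error over independent repetitions of the experiment.
    rng = random.Random(seed)
    errors = [integral_error(fit_once(n, sigma, lam, rng)) for _ in range(repetitions)]
    return sum(errors) / repetitions

table = {(n, s): mean_integral_error(n, s) for n in (50, 5_000) for s in (0.01, 0.2)}
```

The dictionary `table` plays the role of a small error surface over the number of measurements and the noise standard deviation, analogous in spirit to the mean indices defined here.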

By generalization of the distance between the regularized vector of model parameters

and the measurement-independent vector

(for noise-free measurements), estimated by relative error

(39), for the

element sample, the mean relative error of the parameter

approximation was defined as

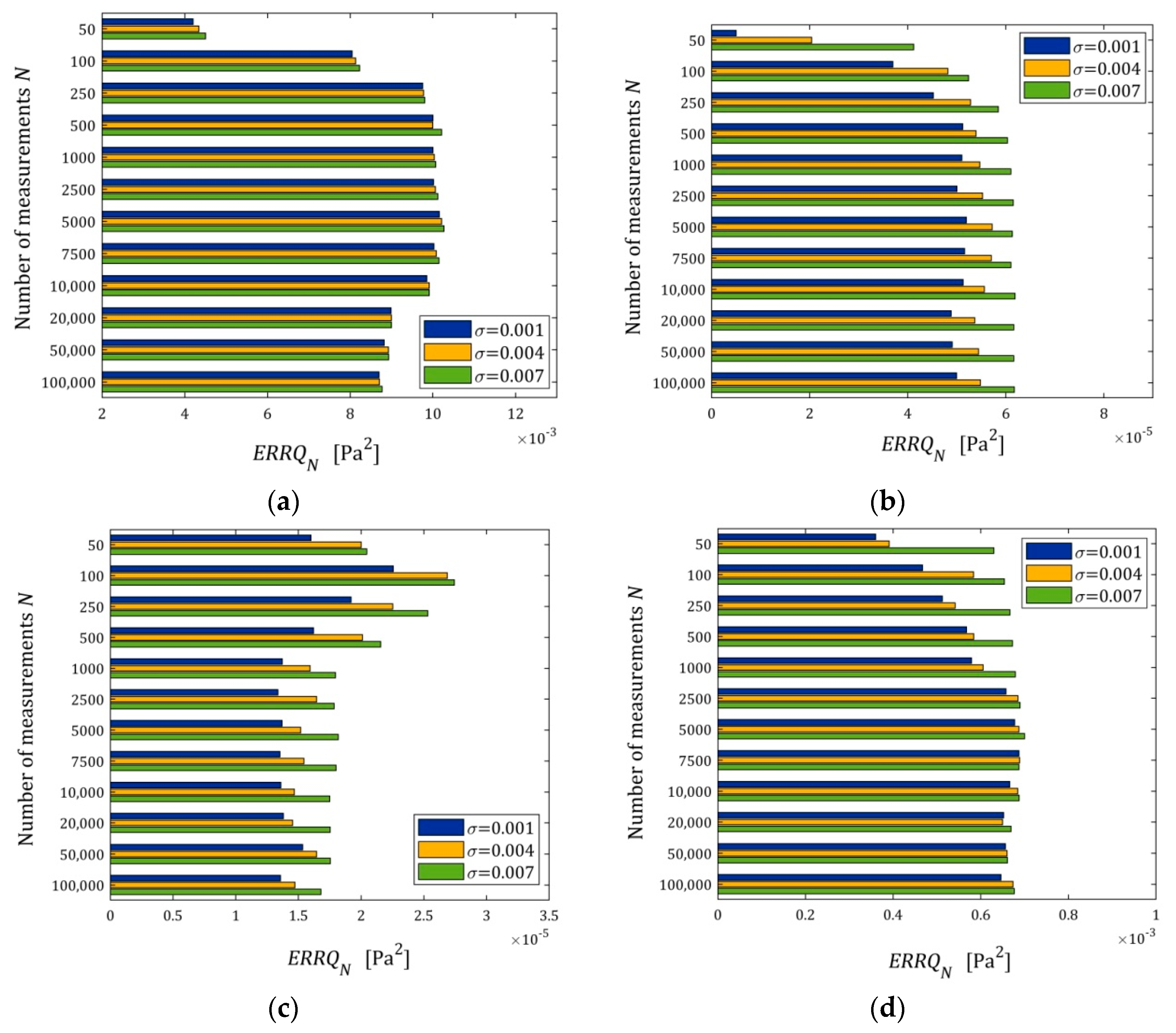

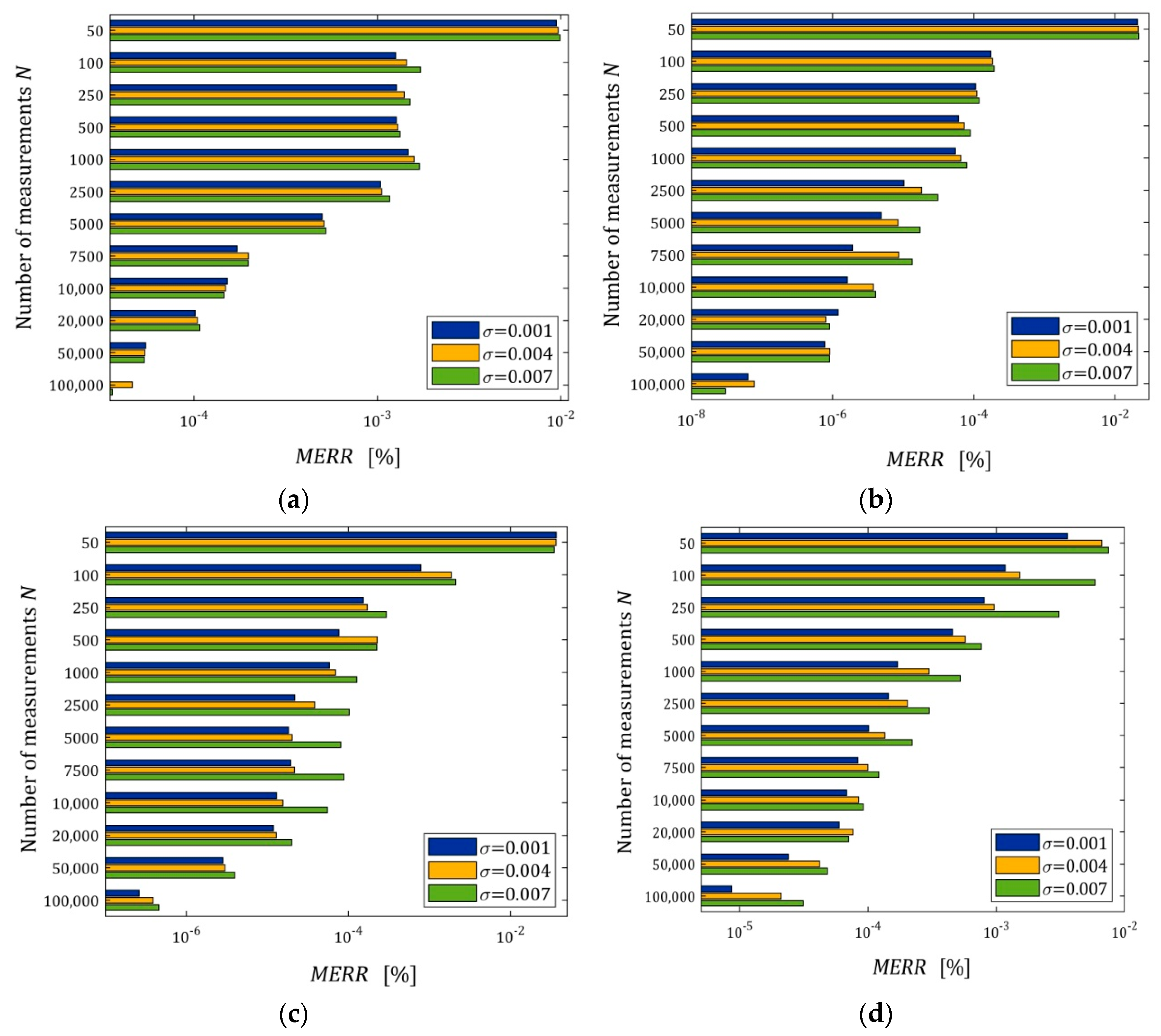

The index

(40) as a function of

and

is depicted in the bar plots in

Figure 6; linear scales are used for the index

axis.

We can see that for does not depend essentially on the number of measurements, for either small or large noises. For the five- and seven-parameter Maxwell models, the algorithm ensures very good quality of the measurement approximation even for large noises. For the three-parameter Maxwell model and the KWW model, the fit to the measurement data is ten times worse than for the five-parameter Maxwell model, but it is still a good approximation. For these models, with a poorer approximation quality, the impact of noises on the approximation quality is weaker than for the five- and seven-parameter Maxwell models, for which the approximation error comes primarily from measurement noises, especially for the seven-parameter model. Although the impact of noises is slightly larger for the five- and seven-parameter Maxwell models, their indices, of the order , are very small.

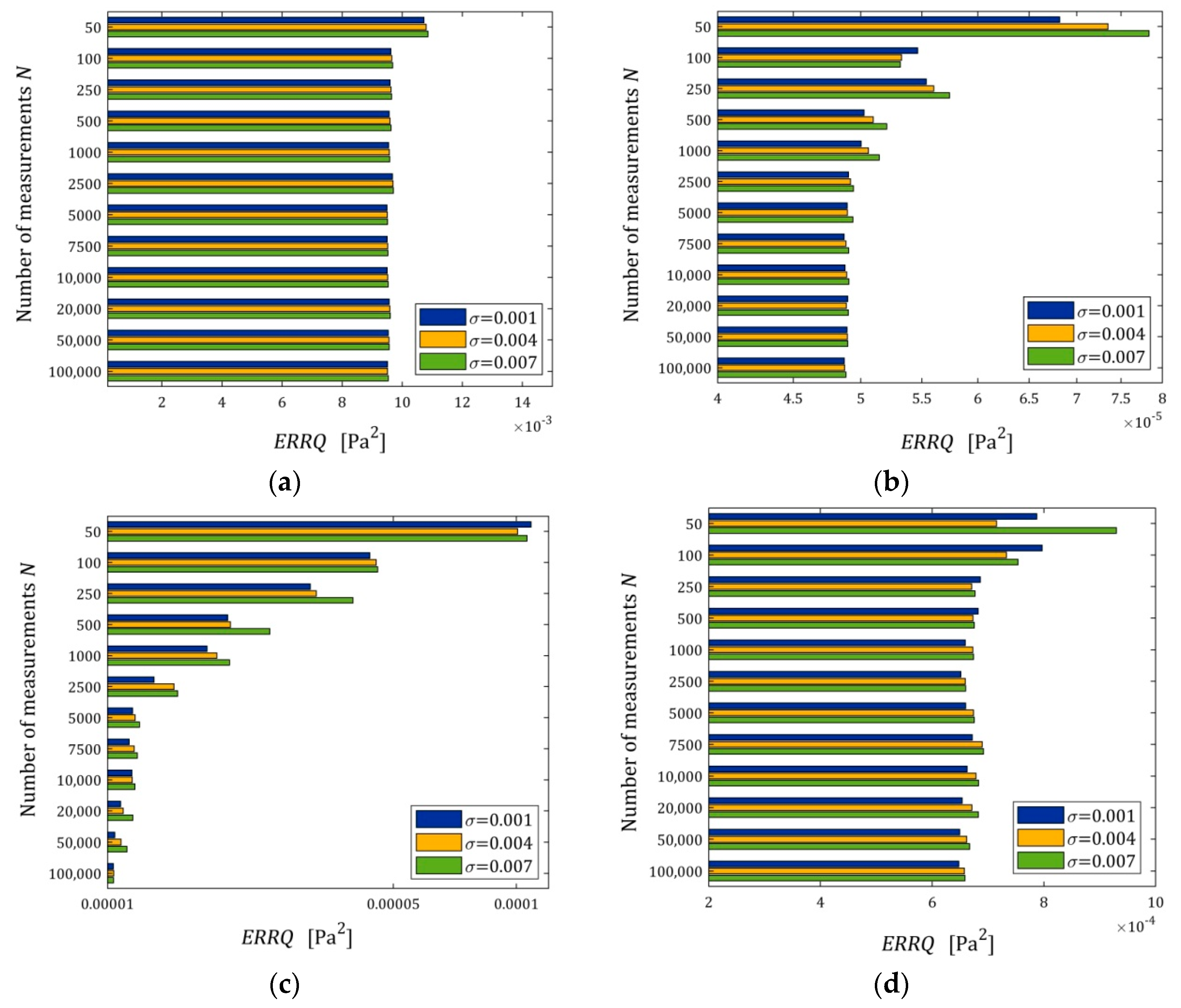

Figure 7 illustrates the dependence of the index

(41) on

and

; for the three-parameter Maxwell model and the KWW model, linear scales are used for the

axis, while for the five- and seven-parameter Maxwell models, a logarithmic scale is applied.

The mean integral error

is, generally, a decreasing function of the number of sampling points and an increasing function of the noise standard deviation, as depicted in

Figure 7. This is particularly visible in

Figure 7c for the seven-parameter Maxwell model, which has an excellent fit to the measurement data; compare also

Figure 6c. The interpretation of

Figure 7c becomes quite clear when we take into account the convergence analysis conducted above. As we have shown, the global integral index

(strictly the function

(14)) converges exponentially both with the increase in the number of measurements

and with the decrease in the noise variance

; compare the inequality in Equation (22) and the definition of

(23).

The relationships of the mean relative errors

(42) with

and

are depicted in

Figure 8; a logarithmic scale is used for the

axis in all subfigures. We can see that

decreases exponentially with the increasing number of measurements (logarithmic scale). This index is particularly small for more accurate models (five- and seven-parameter Maxwell models). It is of the order of

for

and even for the strongest disturbances, which practically means determining the globally optimal parameter

. The characteristics from

Figure 8 also confirm the noise robustness of the estimators

of the optimal parameter

, also for models that approximate the measurement data less well.

To sum up, it is not so much the dependence of the empirical index (40) as the courses of the indices (42) and (41) as the functions of that indicate the asymptotic independence of the model from the sampling points. The five- or even seven-parameter Maxwell models are necessary for an almost excellent fit to the data, if the sampling time instants are chosen in an appropriate way.