Abstract

We study the solution concepts of partial cooperative Cournot-Nash equilibria and partial cooperative Stackelberg equilibria. The partial cooperative Cournot-Nash equilibrium is axiomatically characterized by using notions of rationality, consistency and converse consistency with regard to reduced games. We also establish sufficient conditions for which partial cooperative Cournot-Nash equilibria and partial cooperative Stackelberg equilibria exist in supermodular games. Finally, we provide an application to strategic network formation where such solution concepts may be useful.

1. Introduction

The questions of coalition formation and cooperation are central to the theory of strategic behavior. When a subset of the agents forms a coalition, they often behave “cooperatively” in the sense that they choose and implement a joint course of action. Nevertheless, the players across those coalitions do not proceed jointly: their actions are chosen independently and non-cooperatively. Examples of such situations are the formation of a cartel of firms on a market, the signing of an environmental agreement across countries, the cooperation in R&D between firms and the formation of a coalition of political parties. All these examples share a joint cooperate/compete feature that has a long and checkered history within the framework of non-cooperative games. In this literature, it is assumed that coalitions are given, and each coalition, rather than letting its members maximize their individual payoffs, maximizes a group payoff function, which can range from a simple sum of individual payoff functions (Mallozzi and Tijs [1,2,3]) to a vector-valued function choosing points on the Pareto frontier of the individual payoffs of the members of the coalition (Ray and Vohra [4], Ray [5]). In order to allow for payoff transfers among the players, we choose the sum of payoffs as the cooperators’ objective function rather than a point on the Pareto frontier.

The first way to analyze equilibria in these games is to assume that coalitions play in the spirit of the Nash equilibrium, namely an equilibrium is defined as a set of mutual best responses. The only difference is that individual players are now replaced by coalitions. Each coalition chooses a strategy vector to maximize the group payoff function. This has been the theme in articles such as Chakrabarti et al. [6], Chander and Tulkens [7], Deneckere and Davidson [8] and Ray and Vohra [4]. Other articles that have similar themes are Duggan [9], Salant et al. [10], Beaudry et al. [11], Carraro and Siniscalco [12,13] and Funaki and Yamato [14].

One can also look at a sequential structure, where coalitions do not proceed simultaneously but there is a sequence of moves and one coalition chooses its action followed by another coalition. Of course, the game becomes quite complicated and an open question would be: what is the order of moves? Due to this, one can analyze only very simple settings. The setting that has been analyzed here and also in Chakrabarti et al. [6] is that there is one non-singleton coalition and the rest of the players are not part of any coalition. The coalition moves first, and the singleton players move subsequently. The issue in such games is how to define an equilibrium. Generally, while solving this game by backward induction, the coalition assumes that a Nash equilibrium will result in the second stage. If there is a unique Nash equilibrium for every choice made by the coalition, then the coalition can solve the game easily by backward induction. If, on the other hand, there is more than one Nash equilibrium in the second stage of the game, the trend has been to focus on a certain focal Nash equilibrium. Two such focal equilibria are the one that is best from the point of view of the coalition, that is, the one that maximizes its group payoff function (the coalition is optimistic in its mental outlook), and the worst Nash equilibrium, namely the one that minimizes the coalition’s group payoff function (the coalition is pessimistic or risk averse in its mental outlook). These ideas have been explored in Mallozzi and Tijs [1,2,3] and Chakrabarti et al. [6]. Other articles that have similar themes are Barrett [15], D’Aspremont et al. [16] and Diamantoudi and Sartzetakis [17].

In this article, we study the two types of sequences of moves: simultaneous moves (Cournot-Nash), where the group of cooperators and the remaining set of independent players choose their strategies simultaneously, and sequential moves (Stackelberg), where the group of cooperators moves first and the independent players choose their strategies subsequently. In these settings, the associated equilibrium concepts are called partial cooperative Cournot-Nash equilibrium and partial cooperative Stackelberg equilibrium respectively.

The relevance of such new solution concepts is highlighted in two directions. Firstly, we point out the importance of partial cooperation in strategic environments by considering a model of strategic network formation. This model has the structure of a social dilemma in the sense that the private interests are at odds with collective efficiency. The consequence is that the only Nash equilibrium corresponds to the Pareto-dominated empty network. On the other hand, whenever some players are allowed to cooperate, there is a partial cooperative Cournot-Nash equilibrium that results in the formation of a Bentham-efficient network, provided that the size of the set of cooperators is large enough compared to the cost of creating a link. Even though we focus on the partial cooperative Cournot-Nash equilibrium for the sake of exposition, a similar result can be obtained with the partial cooperative Stackelberg equilibrium.

Secondly, we characterize the concept of partial cooperative Cournot-Nash equilibrium using axioms that are similar in spirit to Peleg and Tijs [18]. Peleg and Tijs [18] have characterized the Nash equilibrium of a non-cooperative game using three axioms: one-person rationality, consistency and converse consistency. One-person rationality is the basic notion of a player being a utility maximizer. For consistency, Peleg and Tijs [18] require that an equilibrium of a game is also an equilibrium of a reduced game, where the reduced game with respect to a certain coalition and a certain strategy tuple consists in fixing the strategies of players outside the coalition to be consistent with the strategy tuple. The remaining players are free to choose their strategies to maximize their reduced payoff function, which is in fact the original payoff function given the strategies of players outside the coalition. The interpretation is that if some players decide to choose their equilibrium strategies and leave, the members of the remaining coalition have no interest in reconsidering their strategies. Converse consistency says that if a strategy profile is an equilibrium in each reduced game, then it is also an equilibrium of the original game. The adaptation of consistency and converse consistency to our setting requires us to modify the reduction operator in order to account for the existence of cooperators. The basic idea is the following: if some cooperators choose their strategies and then leave the game, they transfer the sum of their payoffs to the remaining cooperators. In a sense, these transfers enable the remaining cooperators to evaluate the impact of their strategic choices on the whole group of cooperators. In other words, the group of cooperators never really breaks up when some of its members have left the game, which reflects the fact that it can be seen as having signed a binding agreement of cooperation.
Payoff transfers can be found in other articles that mix cooperation and competition, such as Jackson and Wilkie [19] and Kalai and Kalai [20]. In case the set of cooperators is empty, the reduced game considered in Peleg and Tijs [18] and ours coincide. However, we have not found an axiomatic characterization of the partial cooperative Stackelberg equilibrium.

The application to strategic network formation and the characterization of the partial cooperative Cournot-Nash equilibrium conceal the fact that a partial cooperative equilibrium may fail to exist. In this article we also provide sufficient conditions for the existence of such equilibria by restricting ourselves to the class of supermodular games introduced by Topkis [21] and subsequently studied by Milgrom and Roberts [22], Vives [23], and Zhou [24], among others. The interesting feature of these games is that they allow existence of a Nash equilibrium while doing away with the assumptions of convexity of the strategy sets and quasi-concavity of the payoff functions as was the case in the analysis of Nash [25]. We prove the existence of partial cooperative Cournot-Nash and Stackelberg equilibria in supermodular games. In case these games are not supermodular, we show that a partial cooperative Stackelberg equilibrium exists if the strategy sets are compact, payoff functions are continuous and there exists a Nash equilibrium at the second stage of the game.

The rest of the article proceeds as follows. Section 2 introduces the notation and terminology. In Section 3, we present a model of network formation and discuss the effect of introducing a set of cooperators on the set of stable and efficient networks. In Section 4, we provide the axiomatic characterization of the partial cooperative Cournot-Nash equilibrium. In Section 5, we explore existence of a partial cooperative equilibrium in supermodular games. Finally, Section 6 concludes.

2. Preliminaries

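Weak set inclusion is denoted by ⊆, proper set inclusion by ⊂. A game in strategic form is a system $\Gamma = (I, (X_i)_{i \in I}, (u_i)_{i \in I})$, where I is a nonempty and finite set of players, $X_i$ is the non-empty set of strategies of player i, and $u_i : X \to \mathbb{R}$ is the payoff function of player i, where $X = \prod_{i \in I} X_i$. Typical elements of X are strategy profiles denoted by x and y. For any nonempty subset S of I, we let $X_S = \prod_{i \in S} X_i$, with typical element $x_S$, and use X and $X_{-i}$ instead of $X_I$ and $X_{I \setminus \{i\}}$ respectively. For any $x \in X$ and any S, let $x_S = (x_i)_{i \in S}$. A strategy profile $x^* \in X$ is a Nash equilibrium of Γ if $u_i(x^*) \geq u_i(x_i, x^*_{-i})$ for any $i \in I$ and any $x_i \in X_i$, where $x^*_{-i}$ stands for $x^*_{I \setminus \{i\}}$. We denote by G the set of all games in strategic form.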

A group of cooperators for a game with player set I consists of a (possibly empty) subset C of I. A game in strategic form with partial cooperation is a pair $(\Gamma, C)$ where $\Gamma \in G$ is a game in strategic form and $C \subseteq I$ is a group of cooperators. Cooperators are assumed to choose their strategies by maximizing the joint payoff of the group members given by

$$u_C(x) = \sum_{i \in C} u_i(x) \qquad (1)$$

for each $x \in X$. Non-cooperators play as singletons and maximize their individual payoff functions. There are two main assumptions regarding the sequence of moves in the above set-up that are popular in the literature: (a) simultaneous moves (Cournot-Nash), where all the players choose their strategies simultaneously and (b) sequential moves (Stackelberg), where the cooperators enjoy a first mover advantage and non-cooperators choose their strategies subsequently.

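We begin with the first. A strategy profile $x^* \in X$ is a partial cooperative Cournot-Nash equilibrium of $(\Gamma, C)$ if

$$u_i(x^*) \geq u_i(x_i, x^*_{-i})$$

for any $i \in I \setminus C$ and any $x_i \in X_i$, and

$$u_C(x^*) \geq u_C(x_C, x^*_{-C})$$

for any $x_C \in X_C$, where $u_C$ denotes the joint payoff of the cooperators.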
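For a finite game, the two conditions of this definition can be checked by brute force. The following Python sketch is only an illustration under stated assumptions: the function name `partial_cooperative_cournot_nash`, the encoding of a game as lists of strategies plus a payoff function, and the prisoner's dilemma payoffs are all hypothetical and not taken from the article.

```python
from itertools import product


def partial_cooperative_cournot_nash(strategy_sets, payoffs, cooperators):
    """Enumerate the pure partial cooperative Cournot-Nash equilibria.

    strategy_sets[i] lists the strategies of player i, payoffs(x) returns the
    tuple of individual payoffs at profile x, cooperators is the group C.
    """
    coop = sorted(cooperators)
    outsiders = [i for i in range(len(strategy_sets)) if i not in cooperators]

    def group_payoff(x):
        # Joint payoff of the cooperators: the sum of their individual payoffs.
        return sum(payoffs(x)[i] for i in coop)

    equilibria = []
    for x in product(*strategy_sets):
        # Every non-cooperator must play an individual best response.
        ok = all(payoffs(x)[i] >= payoffs(x[:i] + (y,) + x[i + 1:])[i]
                 for i in outsiders for y in strategy_sets[i])
        # The group must play a joint best response for the summed payoff.
        if ok and coop:
            for y_c in product(*(strategy_sets[i] for i in coop)):
                z = list(x)
                for i, y in zip(coop, y_c):
                    z[i] = y
                if group_payoff(tuple(z)) > group_payoff(x):
                    ok = False
                    break
        if ok:
            equilibria.append(x)
    return equilibria


# Hypothetical prisoner's dilemma: "C" = cooperate, "D" = defect.
PD = {("C", "C"): (3, 3), ("C", "D"): (0, 4),
      ("D", "C"): (4, 0), ("D", "D"): (1, 1)}
sets = [["C", "D"], ["C", "D"]]

nash = partial_cooperative_cournot_nash(sets, PD.get, set())
full_group = partial_cooperative_cournot_nash(sets, PD.get, {0, 1})
```

With an empty group the function returns the Nash equilibria of the game, while with both players in the group it returns the profiles that maximize the sum of payoffs.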

Coming to the second, there are two stages in the game. In the first stage, the cooperators choose a joint strategy . In the second stage, the players in play the reduced game , i.e., the -person game in strategic form where each has strategy space and payoff function being given by for each . By , we denote the correspondence mapping to the set of Nash equilibrium profiles of game .

First, let us consider the class of games where the correspondence is non-empty and single-valued. Then has a unique Nash equilibrium denoted by for any . The cooperators maximize the joint payoff defined in (1) and solve the problem

A vector such that solves (2) is called a partial cooperative Stackelberg equilibrium of .

Next, let us consider the case where the correspondence is a non-empty valued correspondence that is not necessarily single-valued. Then, given the choice of the cooperators, the game may have multiple Nash equilibria. If the group of cooperators has a pessimistic view about the choice of the non-cooperators, the cooperators will maximize the function

On the other hand if the group members are optimistic, the cooperators will maximize the function

The equilibria that result are called the pessimistic and optimistic partial cooperative Stackelberg equilibria of respectively. Therefore, a vector such that

and is called a pessimistic partial cooperative Stackelberg equilibrium of . Similarly, a vector such that

and is called an optimistic partial cooperative Stackelberg equilibrium of . For simplicity, we will write instead of . Also, for future reference, define correspondences and where for any arbitrary ,

and

These notions of equilibria, in the order in which they are presented, are described in Chakrabarti et al. [6], Mallozzi and Tijs [1], Chakrabarti et al. [6] and Mallozzi and Tijs [2], respectively. Mallozzi and Tijs [1] investigate a class of games in which a partial cooperative Stackelberg equilibrium always exists, namely the class of symmetric potential games with a strictly concave potential function. Mallozzi and Tijs [3] show that the joint payoff of the cooperators under an optimistic partial cooperative Stackelberg equilibrium is greater than or equal to that under a partial cooperative Cournot-Nash equilibrium. Chakrabarti et al. [6] have investigated the existence of the partial cooperative Cournot-Nash equilibrium and the pessimistic partial cooperative Stackelberg equilibrium in symmetric games under standard assumptions for the existence of a Nash equilibrium.
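The optimistic and pessimistic Stackelberg notions can be illustrated on a small finite game. The sketch below is a minimal illustration, not the authors' procedure: it assumes pure strategies only, treats the group as a single leader with a list of joint strategies, and uses a hypothetical example in which two non-cooperators play a coordination game with two second-stage equilibria. All function and strategy names are assumptions.

```python
from itertools import product


def second_stage_equilibria(x_c, follower_sets, follower_payoffs):
    """Pure Nash equilibria of the non-cooperators' game induced by x_c."""
    equilibria = []
    for x_f in product(*follower_sets):
        u = follower_payoffs(x_c, x_f)
        if all(u[j] >= follower_payoffs(x_c, x_f[:j] + (y,) + x_f[j + 1:])[j]
               for j in range(len(follower_sets)) for y in follower_sets[j]):
            equilibria.append(x_f)
    return equilibria


def stackelberg(group_strategies, follower_sets, group_payoff,
                follower_payoffs, optimistic):
    """Return (value, x_c, x_f) for the optimistic or pessimistic notion."""
    # The group expects its best (optimistic) or worst (pessimistic)
    # second-stage equilibrium, then maximizes over its own joint strategy.
    select = max if optimistic else min
    best = None
    for x_c in group_strategies:
        equilibria = second_stage_equilibria(x_c, follower_sets,
                                             follower_payoffs)
        if not equilibria:
            raise ValueError("no pure Nash equilibrium in the second stage")
        x_f = select(equilibria, key=lambda z: group_payoff(x_c, z))
        value = group_payoff(x_c, x_f)
        if best is None or value > best[0]:
            best = (value, x_c, x_f)
    return best


# Hypothetical example: two followers are paid 1 each when they coordinate.
def follower_payoffs(x_c, x_f):
    match = 1 if x_f[0] == x_f[1] else 0
    return (match, match)


def group_payoff(x_c, x_f):
    if x_c == "safe":
        return 2
    return 5 if x_f == (1, 1) else 0


opt = stackelberg(["safe", "risky"], [[0, 1], [0, 1]],
                  group_payoff, follower_payoffs, optimistic=True)
pes = stackelberg(["safe", "risky"], [[0, 1], [0, 1]],
                  group_payoff, follower_payoffs, optimistic=False)
```

In this example the optimistic group plays the risky strategy because it counts on the favorable second-stage equilibrium, whereas the pessimistic group plays the safe strategy.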

Before we end this section, we would like to compare these notions to the notion of coalitional equilibrium of Ray [5].1 A vector is called a coalitional equilibrium of the game , if for all ,

for all , and there does not exist any such that

for all .

The principal difference between the coalitional equilibrium and the partial cooperative Cournot-Nash equilibrium is that the former assumes that no transfers are possible among the group members. So, the group of cooperators chooses a payoff vector that lies in the Pareto frontier of the set of payoff vectors that are attainable by the group of cooperators given the strategies chosen by the non-cooperators.

3. Network Formation with Consent

In order to highlight the impact of partial cooperation on strategic interaction, we consider the non-cooperative model of costly network formation with consent proposed by Myerson [26]. Firstly, let be a finite player set. The bilateral communication possibilities between the players are represented by an undirected graph on I, denoted by , where the set of nodes coincides with the set of players I, and the set of links L is a subset of the set of unordered pairs of elements of I. For simplicity, we write to represent the link . For each player , the set denotes the neighborhood of i in . Player i is a leaf in if . A sequence of distinct players is a path in if for . Let be the set of players j such that there exists a path between i and j.

Formally, the non-cooperative model of costly network formation with consent is described by a game with the following features. Each player has an action set . Player i seeks contact with player j if . Link forms if both players seek contact. Each profile induces a network such that

If link forms then both players support a cost . We assume that the value of network for player i depends on the number of players to whom i is connected but not on the distance between two connected players. Player i’s payoff is equal to his value of the network minus the cost c for any created link as in the connection model without decay in Jackson and Wolinsky [27]:

A network is an equilibrium network if is a partial cooperative equilibrium of for some . A network is (Bentham) efficient if for each

We assume , that is, the cost of creating a link is larger than the direct value of that link. We also impose the upper bound , which ensures the existence of a nonempty efficient network in which each player obtains a positive payoff. Given these assumptions, it is easy to see that every Nash equilibrium of game Γ induces the formation of the empty network.

The absence of nonempty networks arising from Nash equilibria can be avoided by several mechanisms. One of them consists in repeating the game over finitely many periods and assuming that the players have limited capacities to implement repeated game strategies (see Béal and Quérou [28]). In this paragraph, we show that the partial cooperation of a group yields an even stronger result: equilibrium networks can be not only nonempty but also efficient. In other words, partial cooperation can eliminate the tension between stability and efficiency of networks.

Proposition 1 Assume .

- (i) Every Nash equilibrium of Γ induces the empty network.

- (ii) For each group such that , there exists a partial cooperative Cournot-Nash equilibrium of that results in the formation of an efficient nonempty network.

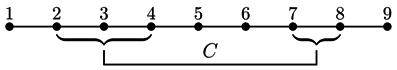

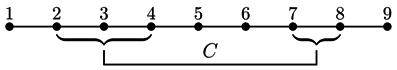

The basic idea of the proof given in the appendix is as follows. For each group C such that , we construct a line network in which the members of the group have strategic locations. More specifically, we can assume that the players on the line are ordered from 1 to n. If , the members of C are the neighbors, 2 and , of the two leaves. If , the group consists of players 2, 3 and . If , the group consists of players 2, 3, and , and so on until . An illustration of the locations of group members on a line network is given below for the case where and :

In the proof of Proposition 1, we show that an action profile that induces such a network is a partial cooperative Cournot-Nash equilibrium. Observe that a neighbor of a leaf, say player 2, would increase his own payoff by cutting his link with player 1 since he would lose one connection and reduce his cost by . This is the reason why such a player has to belong to the group: taking into account the sum of the payoffs of the group members, it is not worthwhile for player 2 to delete link 12 since connections (with player 1) would be lost among group members while only player 2 reduces his cost by c. In other words, cutting link 12 affects the connections of each group member and this total loss is larger than the cost reduction of c provided that .
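The argument can be checked by brute force on a four-player example. The Python sketch below rests on several assumptions that are not taken from the article: a player's value of a network is taken to be the number of players he is connected to, each player pays the cost for each of his own links, the cost is set to 1.5, and players are indexed from 0, so that the group `(1, 2)` corresponds to the neighbors of the two leaves on the line. The function names are hypothetical.

```python
from functools import lru_cache
from itertools import combinations, product

COST = 1.5  # assumed link cost, above the value 1 of one direct connection


def action_sets(n):
    """Player i may seek contact with any subset of the other players."""
    return [[frozenset(s) for r in range(n)
             for s in combinations([j for j in range(n) if j != i], r)]
            for i in range(n)]


def induced_links(profile):
    """Link ij forms only if i seeks contact with j and j with i."""
    n = len(profile)
    return {(i, j) for i, j in combinations(range(n), 2)
            if j in profile[i] and i in profile[j]}


@lru_cache(maxsize=None)
def payoffs(profile):
    """Assumed payoff: number of players reached minus COST per own link."""
    n = len(profile)
    nbrs = {i: set() for i in range(n)}
    for i, j in induced_links(profile):
        nbrs[i].add(j)
        nbrs[j].add(i)
    result = []
    for i in range(n):
        seen, stack = {i}, [i]
        while stack:  # depth-first search for the players connected to i
            k = stack.pop()
            for m in nbrs[k] - seen:
                seen.add(m)
                stack.append(m)
        result.append(len(seen) - 1 - COST * len(nbrs[i]))
    return tuple(result)


def is_equilibrium(profile, group, actions):
    """Partial cooperative Cournot-Nash test; group=() gives the Nash test."""
    base = payoffs(profile)
    for i in range(len(profile)):
        if i in group:
            continue
        for a in actions[i]:  # unilateral deviations of non-cooperators
            dev = profile[:i] + (a,) + profile[i + 1:]
            if payoffs(dev)[i] > base[i]:
                return False
    members = sorted(group)
    base_sum = sum(base[i] for i in members)
    for joint in product(*(actions[i] for i in members)):  # joint deviations
        dev = list(profile)
        for i, a in zip(members, joint):
            dev[i] = a
        if sum(payoffs(tuple(dev))[i] for i in members) > base_sum:
            return False
    return True


actions = action_sets(4)
# Line network 0 - 1 - 2 - 3: each player seeks contact with his neighbors.
line = (frozenset({1}), frozenset({0, 2}), frozenset({1, 3}), frozenset({2}))
nash_networks = {frozenset(induced_links(x)) for x in product(*actions)
                 if is_equilibrium(x, (), actions)}
```

Under these assumptions, `nash_networks` contains only the empty network, while `is_equilibrium(line, (1, 2), actions)` confirms that the line is supported once the two neighbors of the leaves cooperate.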

4. Axiomatization of the Partial Cooperative Cournot-Nash Equilibrium

The relevance of the partial cooperative equilibria can also be defended axiomatically. Peleg and Tijs [18] have characterized the Nash equilibrium of a non-cooperative game in terms of three properties: one-person rationality, consistency and converse consistency. In this section we show that a similar characterization is possible for the notion of partial cooperative Cournot-Nash equilibrium. To embark on such an endeavour, we need to define an appropriate concept of reduced game.

4.1. More Definitions

In this section, let denote the universe of players. For any game and any subset K of , denotes the unique game with partial cooperation associated to Γ and K, where the group of cooperators consists in those players of I who belong to K, that is . Recall that can be empty. We denote by the set of all games with partial cooperation induced by K and defined by:

Because game is associated to game in a unique way, we will henceforth refer to as Γ in order to save on notations. A solution concept for is a function ϕ which assigns to every a subset of X. Let PCE denote the solution concept on which assigns to every game the set PCE of strategy profiles such that x is a partial cooperative Cournot-Nash equilibrium of Γ with group . We shall refer to PCE as the partial equilibrium correspondence.

We adapt the notion of a reduced game used in Peleg and Tijs [18] in order to account for the existence of a group of cooperators. Given a game , any non-empty and any , the reduced game of Γ with respect to S and x, denoted by , is the system where

for each .2 Note that the class of games with partial cooperation is closed under the reduction operation, that is, if game belongs to , then , , and imply with set of cooperators .

The interpretation of the reduced game is as follows. In the reduced game , each player in S obtains his payoff in the original game with partial cooperation Γ given that the members of play in accordance with x. In addition, if some but not all cooperators belong to , they equally transfer the sum of the payoffs they would have received with in Γ to the cooperators who play the reduced game . The group can be seen as a single entity interrelated by a binding agreement. In the reduced game, the departed members do not actively participate anymore in the choice of a strategy profile. Still they passively participate by transferring a part of their payoff to each of the remaining members. Thus the remaining members are able to measure the consequences of their choices on the whole group.
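The transfer mechanism described in this interpretation can be sketched in code. The Python fragment below is an illustration under assumptions: it supposes that each remaining cooperator receives an equal share of the sum of the departed cooperators' payoffs, evaluated at the profile in which the departed players keep their strategies from x. The function name `reduced_payoffs` and the three-player payoff function are hypothetical.

```python
def reduced_payoffs(payoffs, x, S, C):
    """Payoff function of the reduced game with respect to S and x.

    payoffs(z) returns the tuple of individual payoffs at the full profile z,
    S is the set of players who stay, C is the group of cooperators.
    """
    S, C = sorted(S), set(C)
    staying = [i for i in S if i in C]        # cooperators who remain
    departed = [j for j in C if j not in S]   # cooperators who have left

    def reduced(y_S):
        # Players outside S keep their strategies from x.
        full = list(x)
        for i, y in zip(S, y_S):
            full[i] = y
        u = payoffs(tuple(full))
        # Assumed rule: departed cooperators' payoffs are shared equally.
        share = sum(u[j] for j in departed) / len(staying) if staying else 0.0
        return {i: u[i] + (share if i in C else 0.0) for i in S}

    return reduced


# Hypothetical three-player game with numeric strategies.
def example_payoffs(z):
    return (z[0] * z[1], z[1] * z[2] + z[0], z[2] - z[0])


x = (1, 2, 3)
reduced = reduced_payoffs(example_payoffs, x, S={0, 2}, C={0, 1})
outcome = reduced((4, 5))  # players 0 and 2 deviate to 4 and 5
```

The design choice to check is that the reduced payoffs of the remaining cooperators add up to the joint payoff of the whole group, so that the remaining members still evaluate their choices on behalf of all cooperators.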

4.2. Axioms

We provide axioms of solution concepts on the class of games . In order to take into account the particular structure of a game with partial cooperation, we consider variations of several axioms defined in Peleg and Tijs [18]. Throughout this section, ϕ is an arbitrary solution concept on .

Group rationality: For any game such that or , it holds that .

Group consistency: For each such that , each and each , , .

For each such that and , we define

Group converse consistency: For each such that ,

Observe that if , then group rationality, group consistency and group converse consistency are equivalent to the axioms one-person rationality, consistency and converse consistency defined in Peleg and Tijs [18] respectively.

4.3. Characterization

The three axioms that we defined in the previous section uniquely characterize the partial cooperative Cournot-Nash equilibrium correspondence on the class of games .

Theorem 1 The PCE correspondence is the unique solution on that satisfies group rationality, group consistency and group converse consistency.

[Existence] We show that PCE satisfies the three axioms on .

Group rationality: Consider any game such that or . In case , then all players in Γ belong to the group of cooperators associated with Γ. Together with the definition of the payoff function of the group of cooperators in Γ, this means that the set of best responses of cooperators in Γ coincides with the set of maximizers of the sum of the payoff functions of players in I. Hence, if and only if . In case but , arguments are similar.

Group consistency: Consider any game such that , any and any , . First, pick a player if such a player exists. By construction, player i’s payoff in the reduced game is for each . Because , it holds that for any . Hence, for any . Second, in case , consider the subset of the group of cooperators who play in . We have

for each since it is assumed that . For the particular case when players in play , this inequality is

for each , which is equivalent to

for each . We proved that , and because S was chosen arbitrarily, we conclude that PCE satisfies group consistency.

Group converse consistency: Consider a game such that and , and consider such that for each , . To show: .

First, pick a player , and consider the reduced game . By assumption , which implies that . By definition of , this is equivalent to .

Second, in case , consider the subset of cooperators who play in Γ and the reduced game . Note that implies . Since for each , , we have , and so

which is equivalent to

by construction of function in . This proves that as desired.

[Uniqueness] Consider any solution ϕ which satisfies the three axioms on . To show: . Let be any game in . We distinguish two cases. In a first case, suppose that . Then all players in I are members of the group. Because ϕ satisfies group rationality, we have .

In a second case, suppose that . Firstly, we prove that for each . So let Γ be any game in . If then the inclusion is trivially satisfied. Otherwise, choose any . Pick any and consider the reduced game . By group consistency of ϕ, we have . In the reduced game , group rationality yields for each , which is equivalent to for each . Next, consider the group of cooperators involved in game Γ. Once again, group consistency implies . In this reduced game, group rationality yields or equivalently for each . We proved .

Secondly, we prove that . The proof is by induction on the number of players. So, consider any game such that . Denote by i the unique element of I. By group rationality, it holds that , and it does not matter whether or not. Now assume that for any game such that and . Next, consider a game such that and . Pick any . By group consistency of PCE, for each , . Since , the induction hypothesis implies that . Since ϕ satisfies group converse consistency, . Hence, . This completes the proof.

5. Existence in Supermodular Games

Before we formally define supermodular games, we start with the following result, which proves the existence of optimistic and pessimistic partial cooperative Stackelberg equilibria under standard assumptions of continuity of the payoff functions and compactness of the strategy sets, provided we can guarantee that the reduced game admits at least one Nash equilibrium for every . This lemma will be used later on for proving the existence of an optimistic and a pessimistic partial cooperative Stackelberg equilibrium.

Lemma 1 Consider a game , where and . For each , suppose that is a compact subset of a Euclidean space and that is continuous. If the correspondence is non-empty valued, then both an optimistic and a pessimistic partial cooperative equilibrium of exist.

The proof of Lemma 1 is quite involved, so we have relegated it to the Appendix.

5.1. Supermodular Games

Consider a set W partially ordered by the binary relation ⪯ and let V be a subset of W. If w is in W and $v \preceq w$ ($w \preceq v$) for each v in V, then we say w is an upper bound (lower bound) of V with respect to W. An upper bound (lower bound) of a set that belongs to the set itself is a maximum (minimum). If the set of upper bounds (lower bounds) of V with respect to W has a minimum (maximum), we say it is the least upper bound or supremum (greatest lower bound or infimum) of V with respect to W and is denoted by $\sup_W V$ ($\inf_W V$). For two elements, v and w of W, if their supremum (infimum) lies in W, it is their join (meet) and denoted by $v \vee w$ ($v \wedge w$).

A partially ordered set that contains the join and meet of each pair of its elements is a lattice. An example of a lattice is a Euclidean space where the relevant binary relation is simply the usual vector ordering relation ≤. Namely, for $v = (v_1, \dots, v_m)$ and $w = (w_1, \dots, w_m)$, $v \leq w$ if $v_k \leq w_k$ for all $k \in \{1, \dots, m\}$. The join and meet of a pair of elements v and w are simply the coordinate-wise maximum and minimum, namely,

$$v \vee w = (\max\{v_1, w_1\}, \dots, \max\{v_m, w_m\})$$

and

$$v \wedge w = (\min\{v_1, w_1\}, \dots, \min\{v_m, w_m\}).$$

For a lattice W, let $V \subseteq W$ be non-empty. If both $\sup_W V$ and $\inf_W V$ belong to W for all such V, then we say that W is a complete lattice. By putting $V = W$, it follows that a complete lattice has a greatest and a least element. A lattice W for which either $v \preceq w$ or $w \preceq v$ for all $v, w \in W$ is called a chain.

If V is a subset of a lattice W and V contains the join and meet (with respect to W) of each pair of elements of V, then V is a sublattice of W.

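Let V be some sublattice of $\mathbb{R}^m$. A function $f : V \to \mathbb{R}$ is said to be supermodular on V (sometimes, we say supermodular in v) if for all $v, v' \in V$, it is the case that

$$f(v \vee v') + f(v \wedge v') \geq f(v) + f(v').$$

Let W and Θ be subsets of a Euclidean space partially ordered by ≥. A function $f : W \times \Theta \to \mathbb{R}$ is said to satisfy increasing differences in $(w, \theta)$ if for all $w, w' \in W$ such that $w \geq w'$ and all $\theta, \theta' \in \Theta$ such that $\theta \geq \theta'$, it is the case that

$$f(w, \theta) - f(w', \theta) \geq f(w, \theta') - f(w', \theta').$$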

Next, we present a lemma linking these notions.

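Lemma 2 Suppose $f : W \times \Theta \to \mathbb{R}$ is supermodular in $(w, \theta)$. Then,

- (i) f is supermodular in w for each fixed θ, i.e., for any fixed $\theta \in \Theta$, and for any w and $w'$ in W, we have $f(w \vee w', \theta) + f(w \wedge w', \theta) \geq f(w, \theta) + f(w', \theta)$;

- (ii) f satisfies increasing differences in $(w, \theta)$.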

For a proof, see Sundaram ([29], p. 257). In fact an equivalence can be derived by a slightly stronger notion of increasing differences. To see this, let . A function satisfies increasing differences on V if for all distinct i and j in , all such that and all such that , it is the case that

Then, we can show the following.

Lemma 3 Let . A function is supermodular on V if and only if f has increasing differences on V.

For a proof, see Sundaram ([29], p. 264). Finally, the game $\Gamma$ is supermodular if for each $i \in I$:

- $X_i$ is a sublattice of some Euclidean space;

- $u_i$ is supermodular on $X_i$ for each $x_{-i} \in X_{-i}$;

- $u_i$ has increasing differences in $(x_i, x_{-i})$.
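On a finite grid, supermodularity and increasing differences can be verified directly from their defining inequalities. The following Python sketch is a minimal illustration with hypothetical function names; it assumes functions of two scalar arguments on a small integer grid, with coordinate-wise maximum and minimum as join and meet.

```python
from itertools import product


def join(v, w):
    """Coordinate-wise maximum of two vectors."""
    return tuple(max(a, b) for a, b in zip(v, w))


def meet(v, w):
    """Coordinate-wise minimum of two vectors."""
    return tuple(min(a, b) for a, b in zip(v, w))


def is_supermodular(f, grid):
    """Check f(v join w) + f(v meet w) >= f(v) + f(w) for all pairs in grid."""
    return all(f(join(v, w)) + f(meet(v, w)) >= f(v) + f(w)
               for v in grid for w in grid)


def has_increasing_differences(f, W, Theta):
    """Check that f(w, t) - f(w2, t) is nondecreasing in t whenever w >= w2."""
    return all(f((w, t)) - f((w2, t)) >= f((w, t2)) - f((w2, t2))
               for w in W for w2 in W if w >= w2
               for t in Theta for t2 in Theta if t >= t2)


levels = range(3)
grid = list(product(levels, repeat=2))  # the lattice {0, 1, 2} x {0, 1, 2}


def complements(v):
    return v[0] * v[1]      # actions reinforce each other


def substitutes(v):
    return -v[0] * v[1]     # actions offset each other


checks = {
    "complements": (is_supermodular(complements, grid),
                    has_increasing_differences(complements, levels, levels)),
    "substitutes": (is_supermodular(substitutes, grid),
                    has_increasing_differences(substitutes, levels, levels)),
}
```

The product function passes both tests and its negative fails both, which is consistent with the link between supermodularity and increasing differences stated in Lemmas 2 and 3.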

Next, we present the following result.

Theorem 2 (Zhou, 1994) Consider a supermodular game . If is compact and is upper semi-continuous on for each fixed and each , then the set of Nash equilibria constitutes a non-empty complete lattice, and hence has a maximum and a minimum.

5.2. The Partial Cooperative Stackelberg Equilibrium

First, we prove the existence of an optimistic and a pessimistic partial cooperative Stackelberg equilibrium for supermodular games. The theorem follows from Lemma 1 and Theorem 2.

Theorem 3 Consider a supermodular game . For each , assume that is compact and is continuous. Then, for any , both an optimistic and a pessimistic partial cooperative Stackelberg equilibrium of exist.

Consider any . Given Lemma 1, all that needs to be proven is that the correspondence is non-empty valued. Consider an arbitrary and the reduced game . Clearly is a supermodular game and satisfies all the assumptions of Theorem 2. Hence, admits a Nash equilibrium for every making non-empty valued.

We can weaken continuity of to upper semi-continuity of on and get existence of an optimistic and a pessimistic partial cooperative Stackelberg equilibrium, but this requires additional assumptions regarding the nature of strategy sets and/or payoff functions. We summarize them in the form of the following proposition.

Proposition 2 Consider a supermodular game . For each , let be compact and be upper semi-continuous on for each fixed . Then, for any , both an optimistic and a pessimistic partial cooperative Stackelberg equilibrium of exist if either of the following conditions hold:

- (a)

- X is finite.

- (b)

- is finite and, for all , is monotone increasing (or monotone decreasing) in , i.e., implies (or

Firstly, for any given , consider the reduced game . It is a supermodular game and satisfies all the conditions of Theorem 2. Therefore, the set of Nash equilibria of is a non-empty complete lattice and admits a greatest and a least element.

(a) For every , being a nonempty subset of a finite set , is finite. Since any finite set of real numbers has a maximum and minimum, and are non-empty. So, and are well-defined functions. Now, consider the sets of real numbers and . Given that is finite, these are finite sets of real numbers as well and hence, admit a maximum.

(b) If is monotone increasing in , then contains the least element and contains the greatest element of the complete lattice . So, and are well-defined functions. Once again, finiteness of implies that and admit a maximum. The proof when is monotone decreasing is similar and is omitted.

5.3. The Partial Cooperative Cournot-Nash Equilibrium

For a supermodular game to admit a partial cooperative Cournot-Nash equilibrium, we require the strategy sets to be compact, and the payoff functions to be upper semi-continuous on the entire domain of all strategy tuples. To prove this, we begin by creating an artificial game and showing that the Nash equilibrium of this game corresponds to the partial cooperative Cournot-Nash equilibrium in the original game. To this end, consider the non-cooperative game corresponding to the game with partial cooperation defined in Section 2 where

Lemma 4 Given , a Nash equilibrium of is a partial cooperative Cournot-Nash equilibrium of .

Fix any . Towards a contradiction, consider a Nash equilibrium of that is not a partial cooperative Cournot-Nash equilibrium of . Then, either for some and or for some . Both cases contradict that x is a Nash equilibrium of .

Lemma 4 directly leads us to our existence result by showing that under appropriate restrictions satisfies the conditions of Theorem 2 and hence admits a Nash equilibrium.

Theorem 4 Consider a supermodular game . For each , suppose that is compact and is upper semi-continuous and supermodular on X. For any , a partial cooperative Cournot-Nash equilibrium of exists.

Consider the non-cooperative game constructed from and . We will prove that satisfies all the conditions of Theorem 2. The Cartesian product of sublattices is a sublattice, which makes a sublattice of some Euclidean space. The sum of supermodular functions on a lattice is also supermodular. Since is supermodular on X for each , it follows that F is supermodular on X. Hence, by Lemma 2, is supermodular on for all . Further, the fact that F is supermodular on X implies by Lemma 2 that F has increasing differences in . A finite sum of upper semi-continuous functions is upper semi-continuous, so that F is upper semi-continuous on X and is upper semi-continuous on for all . The game thus satisfies all the conditions of Theorem 2, and hence admits a Nash equilibrium. Therefore, by Lemma 4, admits a partial cooperative Cournot-Nash equilibrium.
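The construction behind Lemma 4 and Theorem 4 can be checked on a small finite example. The following is a minimal sketch under assumptions that are not in the paper: a three-player game with strategy set {0, 1, 2}, a hypothetical payoff function `payoff` that is supermodular on the product of chains, and the coalition `COALITION = (0, 1)`. The coalition is treated as a single player maximizing the sum of its members' payoffs, and the remaining player maximizes his own payoff.

```python
from itertools import product

STRATEGIES = (0, 1, 2)   # hypothetical finite strategy set
PLAYERS = (0, 1, 2)
COALITION = (0, 1)       # cooperators acting as one player in the artificial game


def payoff(i, x):
    """Hypothetical supermodular payoff: complementarities minus a convex cost."""
    others = sum(x) - x[i]
    return x[i] * (1 + others) - 1.5 * x[i] ** 2


def coalition_payoff(x):
    """Objective of the coalition: the sum of its members' payoffs."""
    return sum(payoff(i, x) for i in COALITION)


def deviate(x, players, values):
    """Profile obtained from x when `players` switch to `values`."""
    y = list(x)
    for p, v in zip(players, values):
        y[p] = v
    return tuple(y)


def is_pccn(x):
    """True if no joint coalition deviation and no individual deviation pays."""
    base = coalition_payoff(x)
    for joint in product(STRATEGIES, repeat=len(COALITION)):
        if coalition_payoff(deviate(x, COALITION, joint)) > base:
            return False
    for j in PLAYERS:
        if j in COALITION:
            continue
        for s in STRATEGIES:
            if payoff(j, deviate(x, (j,), (s,))) > payoff(j, x):
                return False
    return True


def is_nash(x):
    """Ordinary Nash equilibrium of the original game, for comparison."""
    return all(
        payoff(i, x) >= payoff(i, deviate(x, (i,), (s,)))
        for i in PLAYERS
        for s in STRATEGIES
    )


profiles = list(product(STRATEGIES, repeat=len(PLAYERS)))
pccn_equilibria = [x for x in profiles if is_pccn(x)]
nash_equilibria = [x for x in profiles if is_nash(x)]
```

In this toy game the original game has three Nash equilibria, (0, 0, 0), (1, 1, 1) and (2, 2, 2), while only (2, 2, 2) survives as a partial cooperative Cournot-Nash equilibrium, because the coalition can jointly escape the lower two profiles.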

6. Conclusions

We would like to end by briefly considering some topics of further research. First, consider the partial cooperative Cournot-Nash equilibrium. We are looking at the second stage of a two-stage process, the first stage being a coalition formation game. A complete analysis would therefore make the formation of the group endogenous and look at stable coalitions. However, this kind of analysis requires specifying the model in considerable detail, for instance the nature of the payoff function. Such an analysis has been carried out in Carraro and Siniscalco [12,13].

The other direction in which to take this kind of analysis is to consider other coalition structures. Here, we have analyzed the case of one non-singleton coalition because this is the dominant theme in several articles. But one can also look at multiple non-singleton coalitions. For instance, see Beaudry et al. [11]. Our results can be generalized to these settings without significant hurdles.

With regard to the partial cooperative Stackelberg equilibrium, one can look at settings where such an equilibrium would normally arise. For instance, see D’Aspremont et al. [16] and Barrett [15] for an analysis of stable coalitions in this setting. One can also look for an axiomatization of these equilibria analogous to our axiomatization of the partial cooperative Cournot-Nash equilibria, an issue that we have tried to tackle without much success.

Acknowledgments

The authors thank an associate editor and two referees for valuable suggestions. Financial support by the French National Agency for Research (ANR)—research program “Models of Influence and Network Theory” ANR.09.BLANC-0321.03—is gratefully acknowledged. The third author thanks the funding of the OTKA (Hungarian Fund for Scientific Research) for the project “The Strong the Weak and the Cunning: Power and Strategy in Voting Games” (NF-72610).

References

- Mallozzi, L.; Tijs, S. Conflict and Cooperation in Symmetric Potential Games. Int. Game Theor. Rev. 2008a, 10, 245–256.

- Mallozzi, L.; Tijs, S. Partial Cooperation and Non-Signatories Multiple Decision. AUCO Czech Econ. Rev. 2008b, 2, 21–27.

- Mallozzi, L.; Tijs, S. Stackelberg vs. Nash assumption in IEA Models. J. Opt. Appl. (Forthcoming).

- Ray, D.; Vohra, R. Equilibrium Binding Agreements. J. Econ. Theory 1997, 73, 30–78.

- Ray, D. A Game Theoretic Perspective of Coalition Formation; Oxford University Press: New York, NY, USA, 2007.

- Chakrabarti, S.; Gilles, R.P.; Lazarova, E. Partial Cooperation in Symmetric Games; School of Management and Economics, Queen’s University Belfast: Belfast, UK, 2009; (Working Paper).

- Chander, P.; Tulkens, H. A Core of an Economy with Multilateral Environmental Externalities. Int. J. Game Theory 1997, 26, 379–401.

- Deneckere, R.; Davidson, C. Incentives to Form Coalitions with Bertrand Competition. RAND J. Econ. 1985, 16, 473–486.

- Duggan, J. Non-Cooperative Games among Groups; Department of Political Science and Department of Economics, University of Rochester: Rochester, NY, USA, 2001; (Working Paper).

- Salant, S.W.; Switzer, S.; Reynolds, R.J. Losses from Horizontal Merger: The Effects of Exogenous Change in the Industry Structure on Cournot-Nash Equilibrium. Quart. J. Econ. 1983, 98, 184–199.

- Beaudry, P.; Cahuc, P.; Kempf, H. Is it Harmful to Allow Partial Cooperation. Scand. J. Econ. 2000, 102, 1–21.

- Carraro, C.; Siniscalco, D. The International Dimension of Environmental Policy. Eur. Econ. Rev. 1992, 36, 379–387.

- Carraro, C.; Siniscalco, D. Strategies for International Protection of the Environment. J. Public Econ. 1993, 52, 309–328.

- Funaki, Y.; Yamato, T. The Core of an Economy with a Common Pool Resource: A Partition Function Form Approach. Int. J. Game Theory 1999, 28, 157–171.

- Barrett, S. Self-Enforcing International Environmental Agreements. Oxford Econ. Pap. 1994, 46, 804–878.

- D’Aspremont, C.; Jacquemin, A.; Gabszewicz, J.J.; Weymark, J.A. On the Stability of Collusive Price Leadership. Can. J. Econ. 1983, 16, 17–25.

- Diamantoudi, E.; Sartzetakis, E.S. Stable International Environmental Agreements: An Analytical Approach. J. Public Econ. Theory 2006, 8, 247–263.

- Peleg, B.; Tijs, S. The Consistency Principle for Games in Strategic Form. Int. J. Game Theory 1996, 25, 13–34.

- Jackson, M.O.; Wilkie, S. Endogenous Games and Mechanisms: Side Payments Among Players. Rev. Econ. Stud. 2005, 72, 543–566.

- Kalai, A.D.; Kalai, E. Engineering Cooperation in Two-Player Strategic Games; Microsoft Research and Kellogg School of Management, Northwestern University: Evanston, IL, USA, 2010; (Working Paper).

- Topkis, D.M. Equilibrium Points in Nonzero-sum Submodular Games. SIAM J. Contr. Optimizat. 1979, 17, 773–787.

- Milgrom, P.; Roberts, J. Rationalizability, Learning and Equilibrium in Games with Strategic Complementarities. Econometrica 1990, 58, 1255–1277.

- Vives, X. Nash Equilibrium with Strategic Complementarities. J. Math. Econ. 1990, 19, 305–329.

- Zhou, L. The Set of Nash Equilibria of a Supermodular Game is a Complete Lattice. Game. Econ. Behav. 1994, 7, 295–300.

- Nash, J.F. Equilibrium Points in N-person Games. Proc. Nat. Acad. Sci. 1950, 36, 48–49.

- Myerson, R.B. Game Theory: Analysis of Conflict; Harvard University Press: Cambridge, MA, USA, 1991.

- Jackson, M.O.; Wolinsky, A. A Strategic Model of Social and Economic Networks. J. Econ. Theory 1996, 71, 44–74.

- Béal, S.; Quérou, N. Bounded Rationality in Repeated Network Formation. Math. Soc. Sci. 2007, 54, 71–89.

- Sundaram, R.K. A First Course in Optimization Theory; Cambridge University Press: New York, NY, USA, 1996.

- Berge, C. Topological Spaces: Including a Treatment of Multi-Valued Functions, Vector Spaces and Convexity, 1963; reprinted by Dover Publication, Inc.: Mineola, NY, USA, 1997.

- Moore, J.C. Mathematical Methods for Economic Theory 2; Springer-Verlag: Heidelberg, Germany, 1999.

Appendix

Proof of Proposition 1

The graph is the subgraph of induced by S. Two players i and j are connected in if or if there exists a path from i to j. A coalition T of I is a component of a graph if the subgraph is maximally connected, i.e., if the subgraph is connected and for each , the subgraph is not connected. Note that the collection of components of forms a partition of I. A tree is a graph such that every pair of players is connected by a unique path. Note that a tree on I has exactly links. A line is a tree with exactly two leaves. A forest is a graph such that each subgraph induced by a component T is a tree.
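The graph-theoretic notions just defined (component, tree, line, forest) can be made concrete with a short sketch. The function names and the representation of a network as a list of unordered pairs are assumptions made for illustration only; links are assumed to be distinct and without self-loops. The sketch relies on the standard fact that a graph is a forest exactly when its number of links equals the number of players minus the number of components.

```python
def components(players, links):
    """Partition of the players into maximally connected coalitions."""
    neighbours = {p: set() for p in players}
    for i, j in links:
        neighbours[i].add(j)
        neighbours[j].add(i)
    seen, result = set(), []
    for p in players:
        if p in seen:
            continue
        stack, component = [p], set()
        while stack:  # depth-first search from p
            q = stack.pop()
            if q in component:
                continue
            component.add(q)
            stack.extend(neighbours[q] - component)
        seen |= component
        result.append(component)
    return result


def is_forest(players, links):
    """Every component induces a tree: #links = #players - #components."""
    return len(links) == len(players) - len(components(players, links))


def is_tree(players, links):
    """Connected forest, i.e. every pair is joined by a unique path."""
    return len(components(players, links)) == 1 and is_forest(players, links)


def is_line(players, links):
    """Tree with exactly two leaves (players with a single link)."""
    degree = {p: 0 for p in players}
    for i, j in links:
        degree[i] += 1
        degree[j] += 1
    leaves = sum(1 for d in degree.values() if d == 1)
    return is_tree(players, links) and leaves == 2


players = [1, 2, 3, 4]
line = [(1, 2), (2, 3), (3, 4)]
star = [(1, 2), (1, 3), (1, 4)]
two_pairs = [(1, 2), (3, 4)]
triangle = [(1, 2), (2, 3), (3, 1)]
```

With four players, both `line` and `star` are trees with three links, but only `line` has exactly two leaves; `two_pairs` is a forest with two components, and `triangle` leaves player 4 isolated and contains a cycle, so it is not a forest.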

[Proposition 1] For a proof of point (i), see Proposition 1 in Béal and Quérou [28]. For the proof of point (ii), we start by proving that under condition , a network is efficient if and only if it is a tree network. Firstly, a tree network is more efficient than the empty network if and this inequality holds if . Secondly, any network that is not a forest cannot be efficient since some links can be deleted without altering the value of the network. Therefore, the set of efficient networks is included in the set of nonempty forests. Thirdly, we show that a tree is more efficient than any forest with at least two components. So consider any nonempty forest with K components, . We denote by the number of players in component , . The total payoff of forest is

Because for each , we obtain

Moreover,

so that

which proves efficiency of tree networks. Next, let be any group of size at least . For any , denotes the largest integer smaller than or equal to k. Three cases have to be distinguished.

Case (a). If , the result is immediate since the line network is an efficient network by assumption .

Case (b). If , let if is odd or if is even. Consider the strategy profile x such that

and for each ,

Note that is a line network described by the path . Let us prove that x is a partial cooperative Cournot-Nash equilibrium of . A player cannot add a link by himself to since for each . Similarly, it is not in the interest of the group to add more links since the extra links would not bring more value to the network (see footnote 3). Therefore, it is sufficient to test whether the group and players in have an incentive to cut some of their links. It is easy to check that the assumption implies for each and thus , which means that neither the group nor any player in would benefit from cutting all their links. Next, observe that for each while deleting their unique link entails a zero payoff. It remains to check deviations for the group of cooperators and for players in . Firstly, consider the case of a player . If player i cuts one of his two links, he will lose at least connections while paying for one fewer link. Such a deviation is deterred if

Since , this condition is met whenever . Secondly, consider the case of the group. The best deviation that the group can consider consists in cutting link 12 or link . Cutting one such link implies a loss of one connection for each member of the group and a cost reduction of c. The deviation is deterred if . Since both for each and , this condition is satisfied whenever the condition in the statement of the proposition holds. Once again, the previous inequality also deters deviations in which the group deletes links 12 and .

Case (c). If , the proof is similar except that one of the two leaves is added to the group.

This proves that x is a partial cooperative Cournot-Nash equilibrium of that induces the formation of an efficient nonempty network.

Proof of Lemma 1

In this section, we consider games for which it is assumed that the correspondence is non-empty valued for each and each . First, we prove that the correspondence is closed and compact-valued.

Proposition 3 Consider a non-cooperative game such that, for each , is a compact subset of a Euclidean space and is continuous. For any , if the correspondence is upper hemi-continuous and compact-valued.

Consider any satisfying the conditions above and any . First, we show that the correspondence is closed. Consider two convergent sequences, and such that for each . We shall prove that . By the definition of a Nash equilibrium,

for each and any arbitrary . Given is continuous and the fact that and , it holds that for any ,

This proves that . Hence, is a closed correspondence. Next, because a closed correspondence is closed-valued, is closed-valued and hence is a closed set for all . Given that is compact, being a closed subset of a compact set is compact as well, making compact-valued. Finally, since a closed and compact-valued correspondence is upper hemi-continuous, we conclude that is upper hemi-continuous.

Next, we show that functions and are well-defined.

Proposition 4 Consider a non-cooperative game such that, for each , is a compact subset of a Euclidean space and is continuous. For any , functions and are well-defined.

Consider any satisfying the conditions above and any . For any , consider the function defined as for each . From the continuity of , is continuous. In addition, we would like to maximize on the subset , which is a compact set by the proof of Proposition 3. As a consequence, a maximum and a minimum of on exist for each . Thus and are non-empty for every , which implies that functions and are well-defined.

The proof of Lemma 1 uses the following theorem of Berge in order to show that the two correspondences and are upper hemi-continuous. Let and .

Theorem 5 (Berge, 1963) Let be an upper hemi-continuous and non-empty valued correspondence and an upper semi-continuous function. Then the function defined as

is upper semi-continuous.

For a proof, see Theorem 2, p. 116 in Berge [30] or Proposition 12.3, p. 278 in Moore [31].
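For orientation, the standard form of this maximum theorem can be written as follows. The symbols $A$, $B$, $\varphi$, $f$ and $M$ are placeholders chosen here and are not necessarily the notation of the original statement; the display is a sketch of the usual textbook formulation rather than a reconstruction of the authors' own formula.

```latex
% Assumed notation: A and B are subsets of Euclidean spaces,
% \varphi : A \rightrightarrows B is an upper hemi-continuous correspondence
% with non-empty compact values, and f : A \times B \to \mathbb{R} is
% upper semi-continuous.
\[
  M(a) \;=\; \max_{b \,\in\, \varphi(a)} f(a, b), \qquad a \in A .
\]
% Conclusion of the theorem: M is upper semi-continuous on A.
% Consequence used in the existence proof: if A is compact, then M attains
% its maximum, i.e. there exists a^{*} \in A with
\[
  M(a^{*}) \;\ge\; M(a) \quad \text{for all } a \in A .
\]
```

The maximum in the first display is attained because an upper semi-continuous function attains its maximum on a non-empty compact set, which is why compact-valuedness of the correspondence (Proposition 3) matters in the application.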

[Proof of Lemma 1] We only detail the proof of existence of an optimistic partial cooperative equilibrium since the proof of existence of a pessimistic partial cooperative equilibrium is similar. Consider any satisfying the conditions above and any . From Proposition 3, is upper hemi-continuous and compact-valued. Then, upper semi-continuity of follows from an application of Theorem 5 to correspondence , function and . Since the upper semi-continuous function is defined on the compact set , it admits a maximum. Hence, an optimistic partial cooperative equilibrium exists.

- 1. See also Ray and Vohra [4].

- 2. Note that .

- 3. For completeness, note that the group can simultaneously delete and add links between its members. However, any such change in the network configuration cannot improve the value of the network for the group.

© 2010 by the authors; licensee MDPI, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/3.0/).