Relevance and Evolution of Benchmarking in Computer Systems: A Comprehensive Historical and Conceptual Review

Abstract

1. Introduction

2. Scope and Approach

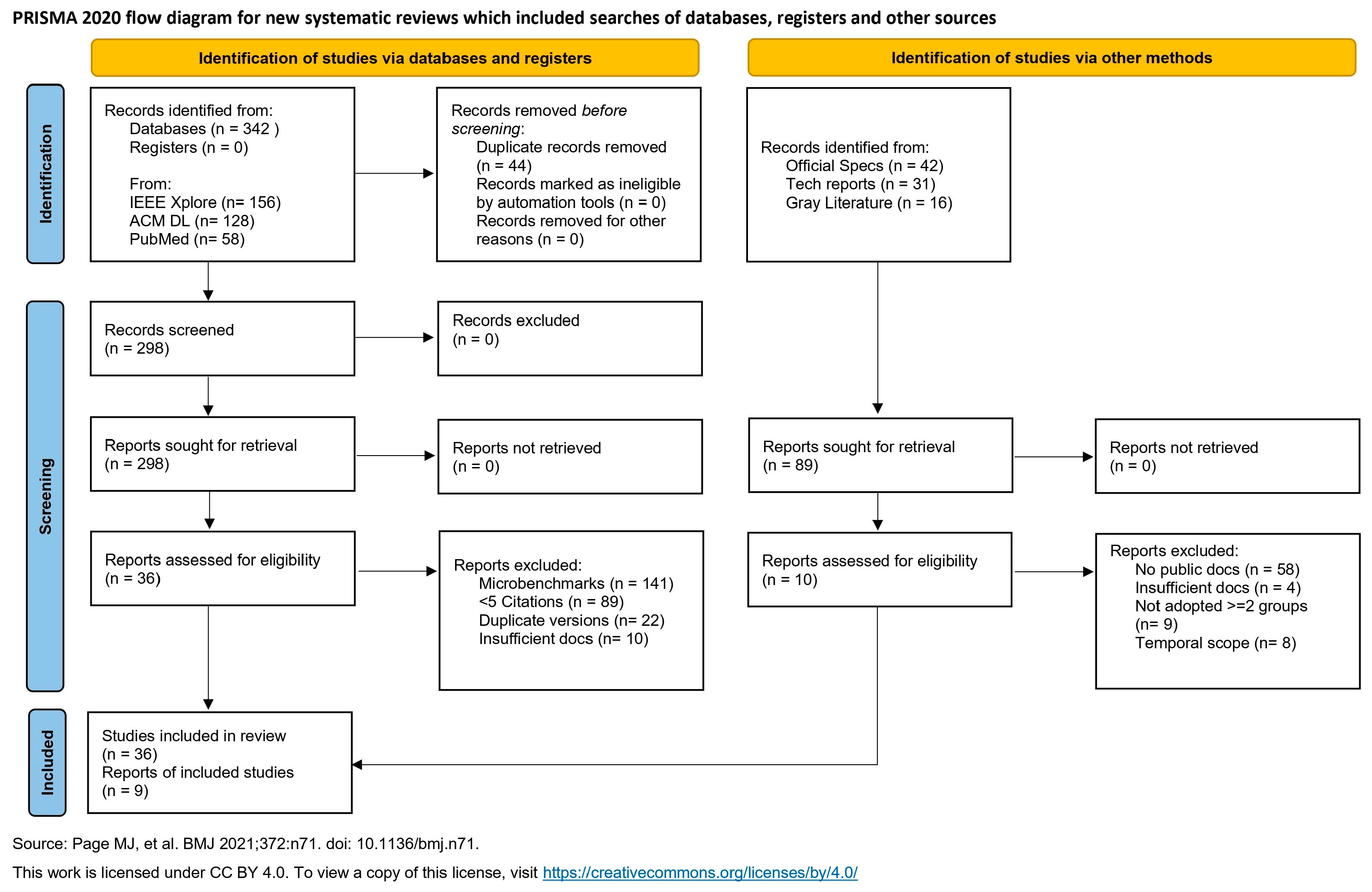

2.1. Review Methodology

- Selection criteria

- a.

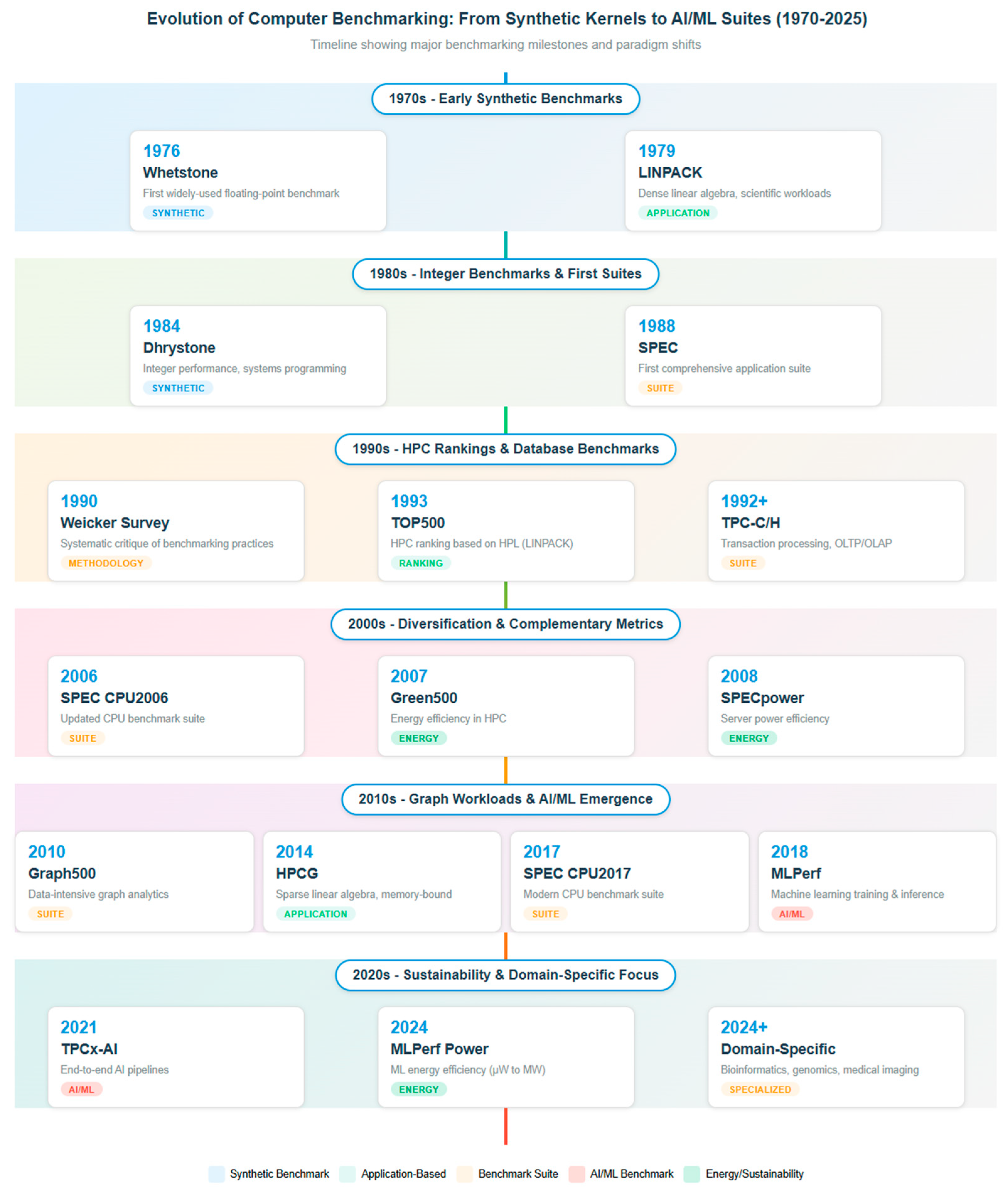

- Temporal Scope: Benchmarks published during 1970−2025, with emphasis on post-1990 developments building on Weicker’s survey [1].

- b.

- Inclusion Criteria: (i) Benchmarks with formal specifications or standardized implementations; (ii) adoption by ≥2 independent research groups or industry consortia; and (iii) availability of published performance results in peer-reviewed venues or official repositories.

- c.

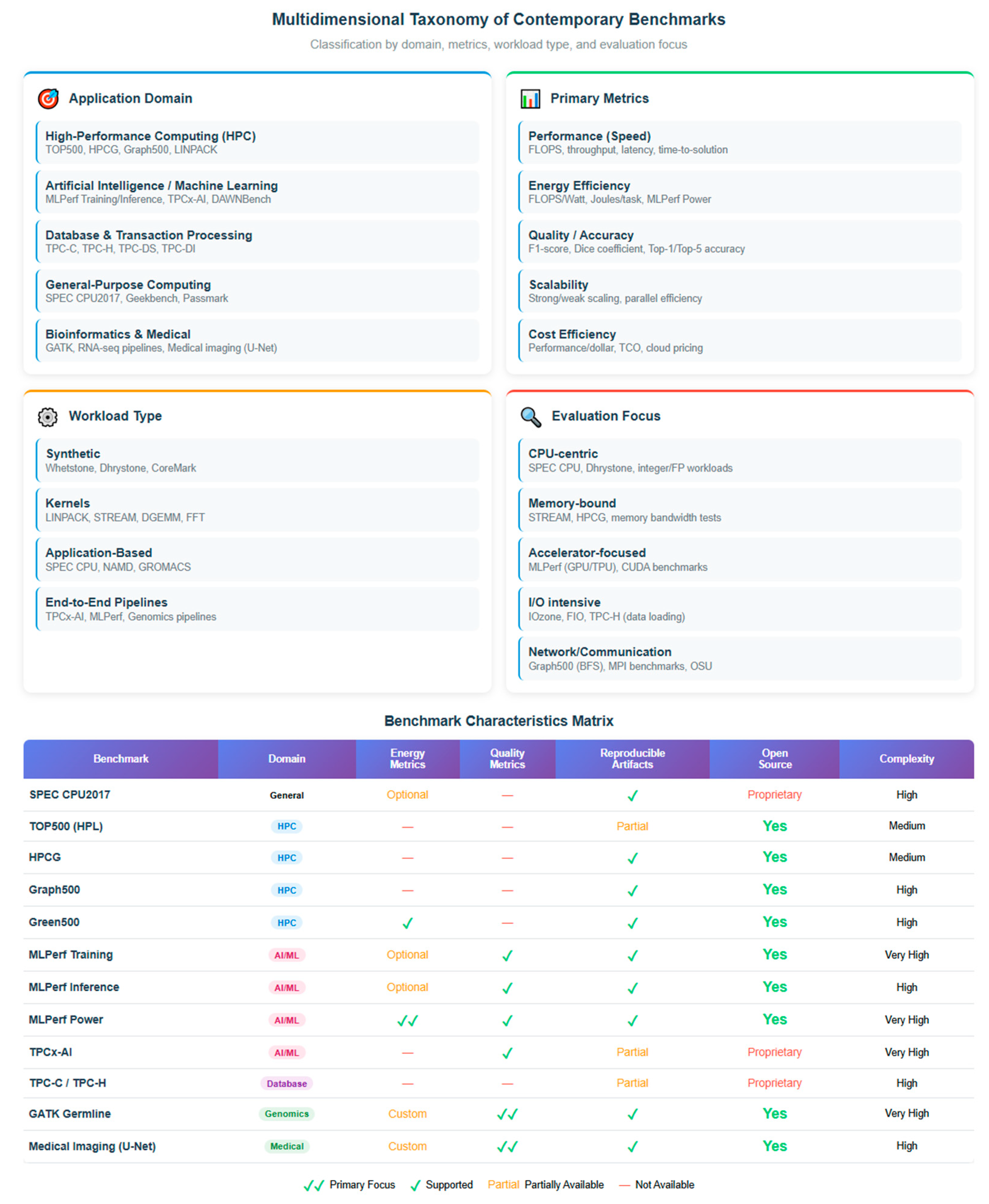

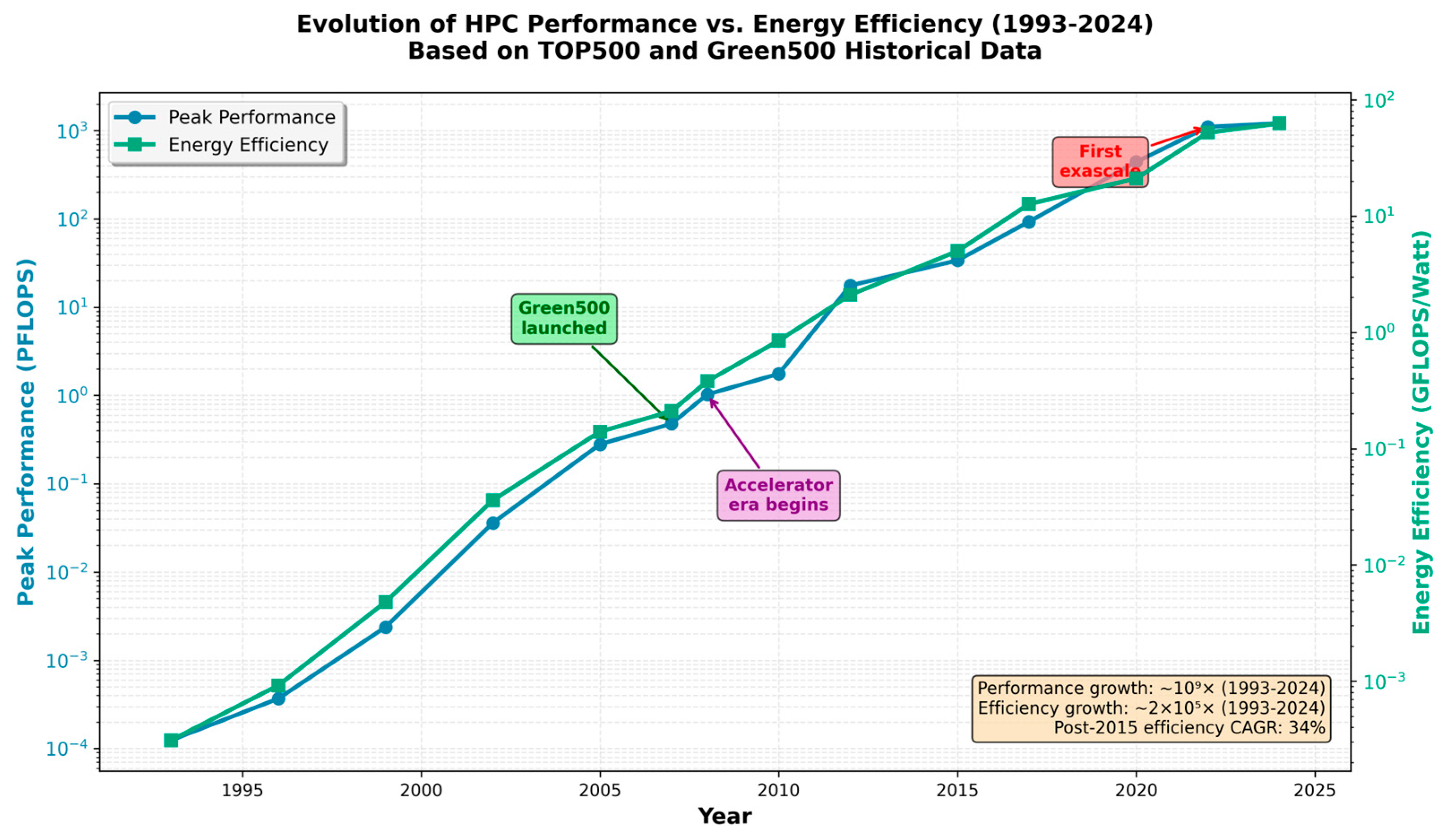

- Domain Focus: General-purpose computing (SPEC CPU), HPC (TOP500/HPCG/Graph500), AI/ML (MLPerf, TPCx-AI), and biomedical sciences (genomics, imaging).

- d.

- Exclusion Criteria: Proprietary benchmarks without public documentation; microbenchmarks targeting single hardware features; and benchmarks cited in <5 publications.

- Information sources

  - a. Primary Sources: Official benchmark specifications from SPEC, TPC, MLCommons, and Graph500 Steering Committee.

  - b. Academic Databases: IEEE Xplore, ACM Digital Library, PubMed (search terms: “benchmark* AND (performance OR evaluation OR reproducibility)”).

  - c. Gray Literature: Technical reports from national laboratories (LLNL, ANL) and manufacturer white papers with traceable data.

- Data extraction: For each benchmark, we systematically documented:

  - a. Design methodology (synthetic vs. application-based);

  - b. Measured metrics (speed, energy, accuracy);

  - c. Reproducibility provisions (run rules, artifact availability);

  - d. Domain representativeness claims and validation evidence.

- Synthesis approach: No meta-analytic statistics were computed due to heterogeneity in reporting formats. Instead, we provide a qualitative synthesis organized by historical periods (pre-1990, 1990–2010, 2010–2025) and a thematic analysis of recurring methodological challenges identified by Weicker [1].
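The selection criteria above can be read as a simple predicate over candidate benchmarks. The following is only an illustrative sketch: the `Candidate` record, its field names, and the three sample entries are hypothetical and are not taken from the review's actual screening data.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    """Hypothetical record describing one candidate benchmark."""
    name: str
    year: int
    has_formal_spec: bool        # formal specification or standardized implementation
    independent_adopters: int    # independent research groups or industry consortia
    has_published_results: bool  # peer-reviewed venue or official repository
    publicly_documented: bool    # False for proprietary benchmarks without public docs
    single_feature_micro: bool   # microbenchmark targeting a single hardware feature
    citing_publications: int


def is_eligible(c: Candidate) -> bool:
    """Apply the temporal scope, inclusion criteria, and exclusion criteria."""
    in_scope = 1970 <= c.year <= 2025
    included = (c.has_formal_spec
                and c.independent_adopters >= 2
                and c.has_published_results)
    excluded = (not c.publicly_documented
                or c.single_feature_micro
                or c.citing_publications < 5)
    return in_scope and included and not excluded


# Made-up candidates, for illustration only.
candidates = [
    Candidate("suite-x", 1995, True, 4, True, True, False, 120),
    Candidate("vendor-y", 2012, True, 3, True, False, False, 40),
    Candidate("micro-z", 2018, True, 2, True, True, True, 9),
]
eligible = [c.name for c in candidates if is_eligible(c)]
print(eligible)
```

Only `suite-x` passes here: `vendor-y` lacks public documentation and `micro-z` is a single-feature microbenchmark.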

2.2. Limitations

3. Weicker: Contributions and Enduring Pitfalls

3.1. From Synthetic Kernels to Suites and Rankings

3.2. AI/ML Benchmarks and End-to-End Pipelines

- Bias in Hardware: The reference implementations and quality targets of MLPerf frequently prioritize architectures characterized by high memory bandwidth and tensor-processing units (TPUs), which are specialized AI accelerators. Systems designed for conventional HPC workloads may exhibit relatively inferior performance, despite their ability to execute production ML workloads effectively. This architectural bias may skew procurement decisions if not explicitly recognized.

- Representativeness and Dataset Variability: MLPerf uses fixed datasets (ImageNet for vision, SQuAD for NLP (Natural Language Processing)); however, production deployments encounter shifting data distributions, domain shift, and adversarial inputs that the benchmarks do not capture. In medical imaging and scientific computing, model performance on benchmark datasets may not translate to real-world conditions.

- Issues with Reproducibility: Even with run rules, several factors reduce reproducibility: TensorFlow and PyTorch versions evolve quickly; GPU/TPU execution can be non-deterministic; compiler and library optimizations differ across platforms; and hyperparameter search procedures are not always disclosed. Exact replication often requires vendor-specific customization even when configurations are published.

- Metric and Model Churn: Benchmark tasks and quality criteria become obsolete as ML research develops. MLPerf periodically retires tasks and introduces new ones, complicating longitudinal comparisons. Standard metrics such as top-1 accuracy may overlook fairness, robustness, calibration, and other production-critical attributes.

- Tools for Vendor Optimization: Echoing Weicker’s [1] warning about ‘teaching to the test,’ vendors may over-optimize for benchmark-specific patterns, producing performance gains that do not transfer to diverse workloads. Examples include fused operations tailored to benchmark models, caching schemes that rely on fixed input sizes, and numerical-precision choices that degrade accuracy on non-benchmark data.

3.3. Energy, Sustainability, and Multi-Objective Metrics

- Instrumentation: Sensor type (e.g., RAPL (Running Average Power Limit) counters for CPUs, NVML (NVIDIA Management Library) for GPUs, external power analyzers), calibration certificates, and measurement boundaries (chip-level, board-level, or system-level, including cooling).

- Sampling: Polling frequency (e.g., RAPL’s ~1 ms resolution vs. external meters at 1–10 kHz), synchronization with workload phases, and treatment of idle/baseline power.

- Uncertainty quantification: Sensor accuracy specifications (typically ±1–5% for RAPL [35]), propagation through integration (joules = ∫power × dt), and statistical variance across runs (MLPerf requires ≥ 3 replicates).

- Reporting: MLPerf Power mandates separate “performance run” (latency/throughput) and “power run” (energy under load) to prevent Heisenberg-like measurement interference where instrumentation overhead distorts results [15].
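The integration step (joules = ∫power × dt) and the treatment of idle power can be sketched as follows. This is a minimal example under stated assumptions: the sample values are invented, the trapezoidal rule is one reasonable choice of integrator rather than a method prescribed by any benchmark, and the error bound assumes a single systematic relative sensor error shared by all samples.

```python
def integrate_energy_joules(timestamps_s, power_w, idle_w=0.0):
    """Trapezoidal integration of (power - idle) over time, in joules."""
    if len(timestamps_s) != len(power_w) or len(timestamps_s) < 2:
        raise ValueError("need at least two matching (time, power) samples")
    energy = 0.0
    for i in range(1, len(timestamps_s)):
        dt = timestamps_s[i] - timestamps_s[i - 1]
        if dt <= 0:
            raise ValueError("timestamps must be strictly increasing")
        p0 = power_w[i - 1] - idle_w
        p1 = power_w[i] - idle_w
        energy += 0.5 * (p0 + p1) * dt
    return energy


def energy_bounds(energy_j, relative_accuracy):
    """Worst-case bounds assuming one shared relative sensor error (assumption)."""
    return energy_j * (1 - relative_accuracy), energy_j * (1 + relative_accuracy)


# Hypothetical power trace sampled once per second (watts).
t = [0.0, 1.0, 2.0, 3.0, 4.0]
p = [100.0, 150.0, 150.0, 150.0, 100.0]

total_j = integrate_energy_joules(t, p)            # energy including baseline
net_j = integrate_energy_joules(t, p, idle_w=50)   # energy above a 50 W idle baseline
low_j, high_j = energy_bounds(total_j, 0.05)       # +/-5% sensor accuracy
print(total_j, net_j, low_j, high_j)
```

Reporting both the total and the baseline-subtracted figure makes the chosen measurement boundary explicit; run-to-run variance would still need to be reported separately across replicates.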

3.4. Reproducibility and Artifact Evaluation

- Immutable environments (containers with digests; pinned compilers/libraries; exact kernel/driver versions);

- Hardware disclosures (CPU/GPU models, microcode, memory topology, interconnect);

- Run rules and configs (threads, affinities, input sizes, batch sizes, warm-ups, seeds);

- Open artifacts (configs, scripts, manifests) deposited in a long-lived repository.
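As a small illustration of the items above, a run manifest can record the interpreter, platform, and a digest of the run configuration using only the Python standard library. The field names and configuration values are hypothetical, and this sketch does not capture container digests, driver versions, or microcode, which require platform-specific tooling.

```python
import hashlib
import json
import platform
import sys


def config_digest(config: dict) -> str:
    """SHA-256 over a canonical JSON encoding, so key order does not matter."""
    canonical = json.dumps(config, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def build_manifest(config: dict) -> dict:
    """Collect a minimal, machine-readable description of one run."""
    return {
        "python": sys.version.split()[0],
        "platform": platform.platform(),
        "machine": platform.machine(),
        "config": config,
        "config_sha256": config_digest(config),
    }


# Hypothetical run configuration.
run_config = {"threads": 8, "batch_size": 32, "warmup_runs": 2, "seed": 1234}
manifest = build_manifest(run_config)
print(json.dumps(manifest, indent=2, sort_keys=True))
```

Depositing such a manifest next to the raw logs lets a reader check whether two reported runs really used the same configuration.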

3.5. Domain-Specific Benchmarks: Biomedical and Bioinformatics

- Genomics (Germline Variant Calling): Evaluate on Genome in a Bottle (GIAB) truth sets with stratification by genomic context. Metrics: F1 for SNPs (Single Nucleotide Polymorphisms) and indels (insertions and deletions), precision–recall curves by region, confidence regions, and error analyses by category. Report the pipeline (mapping → duplicate marking → recalibration → calling → filtering), software versions (GATK (Genome Analysis Toolkit), BWA (Burrows–Wheeler Aligner), or others), random seeds, and accuracy–performance tradeoffs (time, energy in joules, and cost per sample) [16,17].

- Medical Imaging (Segmentation): U-Net/3D-U-Net architectures for organ/lesion segmentation. Quality metrics: Dice, Hausdorff distance, calibration; system metrics: P50/P99 latency, throughput (images/s), energy per inference, and reproducibility (versioned weights and inference scripts). Account for domain shift across sites and scanners [4].

- Domain-specific Good Practices. (i) Datasets with appropriate licensing and consent; (ii) anonymization and ethical review; (iii) model cards and data sheets; (iv) joint reporting of accuracy–cost–energy; and (v) stress tests (batch size, image resolution, noise) and hyperparameter sensitivity [5].
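The quality and system metrics named above have compact definitions. The sketch below uses the standard formulas for F1 and Dice and a nearest-rank percentile for latency; the counts, voxel sets, and latencies are made-up inputs, and a production evaluation would typically rely on dedicated comparison tools rather than hand-rolled code.

```python
import math


def f1_score(tp: int, fp: int, fn: int) -> float:
    """Harmonic mean of precision and recall from confusion counts."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def dice(pred: set, truth: set) -> float:
    """Dice coefficient between two sets of labelled voxel indices."""
    if not pred and not truth:
        return 1.0
    return 2 * len(pred & truth) / (len(pred) + len(truth))


def percentile_nearest_rank(values, q: int):
    """Nearest-rank percentile (q in 1..100), e.g. q=50 for P50, q=99 for P99."""
    ordered = sorted(values)
    rank = max(1, math.ceil(q * len(ordered) / 100))
    return ordered[rank - 1]


# Hypothetical inputs.
variant_f1 = f1_score(tp=90, fp=10, fn=30)
overlap = dice({1, 2, 3, 4}, {3, 4, 5, 6})
latencies_ms = list(range(1, 101))
p50 = percentile_nearest_rank(latencies_ms, 50)
p99 = percentile_nearest_rank(latencies_ms, 99)
print(round(variant_f1, 4), overlap, p50, p99)
```

Reporting P99 alongside P50 matters because tail latency can differ sharply between systems with similar medians.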

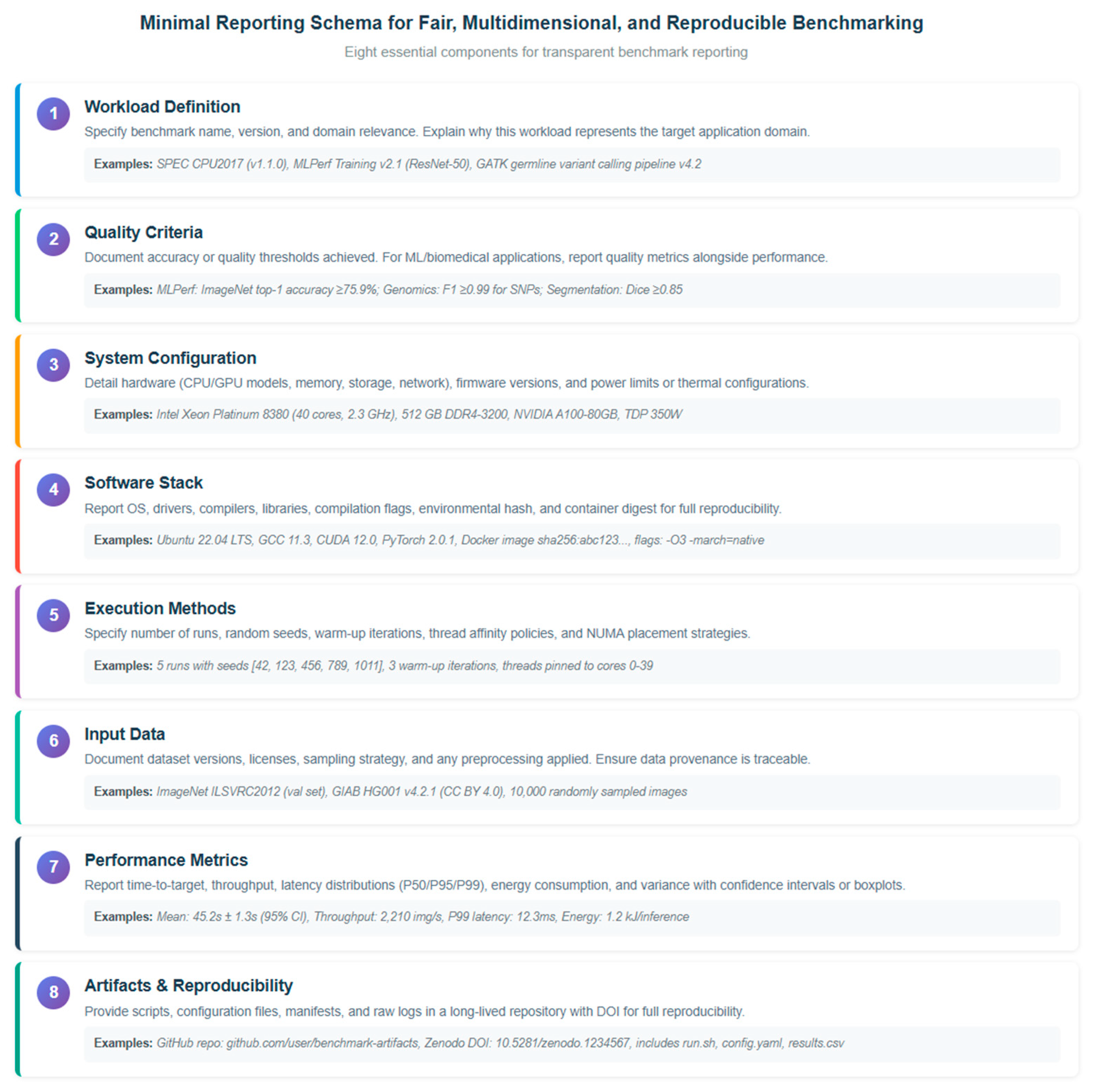

3.6. A Minimal Reporting Schema

- Workload definition: name, version, and domain relevance.

- Quality criteria: accuracy/quality thresholds met (if applicable).

- System: hardware (CPU/GPU, memory, storage, network), firmware, and power limits.

- Software: OS (operating system), drivers, compilers, libraries, flags; environment hash; and container digest.

- Methods: runs, seeds, warmups, affinity policies, and NUMA (Non-Uniform Memory Access) placement.

- Inputs: dataset versions and licenses; sampling strategy.

- Metrics: time-to-target, throughput, latency distributions, energy, and variance (CI/boxplots).

- Artifacts: scripts/configs and raw logs; repository link and DOI.

- ACM Artifact Review Badges [38]: Components 4 (Software Stack) and 8 (Artifacts) directly support “Artifacts Available” and “Results Reproduced” badges through documented environments and persistent repositories.

- FAIR Principles [39]: Component 6 (Input Data) requires dataset licensing and versioning, enabling findability and accessibility; Component 8 mandates DOI assignment (interoperability/reusability).

- IEEE Standard 2675-2021 [40]: Our schema extends DevOps (Development and Operations)-oriented practices to performance benchmarking by mandating container digests (Component 4) and statistical variance reporting (Component 7).

- Reproducibility Hierarchy [6]: The schema targets Level 3 (computational reproducibility) by requiring immutable execution environments but enables Level 4 (empirical reproducibility) when quality criteria (Component 2) include uncertainty quantification.
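The eight schema components listed above lend themselves to a machine-readable report with an automatic completeness check. The key names and example values below are hypothetical; the sketch only shows one way such a check might look, not a format defined by the article.

```python
# Hypothetical keys, one per schema component (1-8).
REQUIRED_COMPONENTS = (
    "workload", "quality", "system", "software",
    "methods", "inputs", "metrics", "artifacts",
)


def missing_components(report: dict) -> list:
    """Return the required components that are absent or empty."""
    return [key for key in REQUIRED_COMPONENTS if not report.get(key)]


# Example report with placeholder values; the artifacts section is left empty.
report = {
    "workload": {"name": "example-workload", "version": "1.0"},
    "quality": {"metric": "F1", "threshold": 0.99},
    "system": {"cpu": "example-cpu", "memory_gb": 64},
    "software": {"os": "example-os", "container_digest": "sha256:<digest>"},
    "methods": {"runs": 5, "seeds": [1, 2, 3, 4, 5], "warmups": 1},
    "inputs": {"dataset": "example-dataset", "version": "1", "license": "CC BY 4.0"},
    "metrics": {"wall_time_s": [10.2, 10.4, 10.1, 10.3, 10.5]},
    "artifacts": {},
}
print(missing_components(report))
```

A check like this flags only missing sections; whether a section is "Complete" or "Partial" in the sense of the case-study table still needs human review.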

3.7. Case Study: Applying the Reporting Schema to Genomic Variant Calling

4. Results

Illustrative Example: Benchmark-Dependent Rankings

- System A: High-frequency CPU with large L3 cache, optimized for single-thread performance.

- System B: Multi-core processor with moderate clock speed, optimized for parallel throughput.

- System C: Accelerator-equipped system with GPU and specialized AI cores.
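The workload dependence of rankings can be made concrete with the illustrative ranks this article assigns to Systems A, B, and C in its benchmark-dependent ranking table. The sketch below computes each system's mean rank and the benchmarks it wins; averaging ranks is used here purely to show what a single aggregate hides, not as a recommended metric.

```python
# Illustrative ranks from the article's example table (1 = best).
RANKS = {
    "Dhrystone": {"A": 1, "B": 3, "C": 2},
    "LINPACK/HPL": {"A": 3, "B": 1, "C": 2},
    "SPEC CPU2017": {"A": 1, "B": 2, "C": 3},
    "Graph500": {"A": 2, "B": 1, "C": 3},
    "MLPerf Training": {"A": 3, "B": 2, "C": 1},
    "TPC-C": {"A": 2, "B": 1, "C": 3},
}


def mean_rank(system: str) -> float:
    """Average rank of one system across all benchmarks."""
    return sum(r[system] for r in RANKS.values()) / len(RANKS)


def wins(system: str) -> list:
    """Benchmarks on which the system is ranked first."""
    return [bench for bench, r in RANKS.items() if r[system] == 1]


for system in ("A", "B", "C"):
    print(system, round(mean_rank(system), 2), wins(system))
```

System B has the best mean rank, yet System A leads on Dhrystone and SPEC CPU2017 and System C leads on MLPerf Training, so the aggregate alone would mislead a buyer with a single-threaded or AI-heavy workload.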

5. Discussion

6. Conclusions

6.1. Practical Implications of the Proposed Framework

- For researchers and benchmark designers:

  - Before publication: Ensure full disclosure of system configuration (hardware, firmware, software stack with version hashes), execution methodology (runs, seeds, affinity), and raw data artifacts (logs, configs) deposited in permanent repositories with DOIs.

  - For reproducibility: Use immutable environments (containers with cryptographic digests), document all non-deterministic operations, and provide tools to automate re-execution.

  - For fairness: Adopt standardized run rules (as in SPEC, MLPerf) that prohibit benchmark-specific tuning not applicable to general workloads. Disclose any deviations and their rationale.

- For system evaluators and practitioners:

  - In procurement: Demand multi-benchmark evaluation across workloads representative of actual use cases. Single-number metrics (MIPS, FLOPS, even aggregated scores like SPECmark) are insufficient for informed decisions.

  - In reporting: Publish per-benchmark results with variance measures (confidence intervals, boxplots) rather than only aggregated means. This exposes performance variability and helps identify outliers.

  - In interpretation: Recognize that benchmark rankings are workload-dependent (as illustrated in the section “Illustrative Example: Benchmark-Dependent Rankings”). Match benchmark characteristics to actual deployment scenarios rather than seeking universal ‘best’ systems.

- For domain-specific applications (e.g., biomedicine):

  - Beyond runtime: Report quality metrics (F1, Dice, calibration) alongside execution time and energy. Fast but inaccurate models provide no clinical value.

  - Data provenance: Document dataset versions, licensing, patient consent, and institutional review approval. Ensure reproducibility extends to data preparation pipelines, not just model training.

  - Sensitivity analysis: Test performance across batch sizes, input resolutions, noise levels, and data distributions to assess robustness and generalization.

- For journal editors and conference organizers:

  - Artifact review policies: Mandate the availability of code, configurations, and documentation as a condition for publication of performance claims. Follow models from systems conferences (ACM badges for reproducibility).

  - Energy disclosure: Require energy measurements for computationally intensive workloads (training large models, long-running simulations) using standardized instrumentation (e.g., as in MLPerf Power, SPECpower).

  - Transparency in vendor collaborations: Require disclosure of vendor-supplied tuning, hardware access, or co-authorship to identify potential conflicts of interest.
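The recommendation to publish variance measures rather than only means can be illustrated with a 95% confidence interval over a handful of replicate runs. The timings below are invented, and the sketch assumes roughly normally distributed run times; the Student t critical values are the standard two-sided 95% table entries for small samples.

```python
import math
import statistics

# Two-sided 95% Student t critical values, indexed by degrees of freedom.
T_CRIT_95 = {1: 12.706, 2: 4.303, 3: 3.182, 4: 2.776, 5: 2.571,
             6: 2.447, 7: 2.365, 8: 2.306, 9: 2.262}


def mean_ci95(samples):
    """Return (mean, half_width) of a 95% CI for 2 to 10 replicate runs."""
    df = len(samples) - 1
    if df not in T_CRIT_95:
        raise ValueError("this sketch supports 2 to 10 samples only")
    mean = statistics.mean(samples)
    std_err = statistics.stdev(samples) / math.sqrt(len(samples))
    return mean, T_CRIT_95[df] * std_err


# Hypothetical wall-clock times (seconds) from five replicates.
runs_s = [10.2, 10.4, 10.1, 10.3, 10.5]
mean_s, half_width_s = mean_ci95(runs_s)
print(f"{mean_s:.2f} s +/- {half_width_s:.2f} s (95% CI, n={len(runs_s)})")
```

With only a few replicates the t critical value is much larger than the familiar 1.96, which is why stating the number of runs alongside the interval is important.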

6.2. Open Challenges and Future Directions

- Balancing standardization and innovation: Rigid benchmark specifications promote comparability but may stifle architectural innovation that does not fit prescribed patterns. Future benchmarks should allow flexibility for novel approaches while maintaining measurement rigor.

- Addressing emerging workloads: Benchmarks lag rapidly evolving applications (large language models, quantum–classical hybrid computing, edge AI). Community processes must accelerate benchmark development and retirement cycles.

- Multi-objective optimization: As energy, cost, and quality become co-equal with performance, we need principled methods for Pareto-optimal reporting rather than reducing to scalar metrics. Visualization and interactive exploration tools can help stakeholders navigate trade-off spaces.

- Cross-domain integration: Current benchmarks remain siloed by domain. Real-world systems increasingly serve mixed workloads (AI inference alongside database queries, HPC simulations with in situ analytics). Benchmarks capturing co-scheduled, heterogeneous workloads are an open research area.
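Pareto-optimal reporting, mentioned above as an alternative to scalar metrics, can be sketched in a few lines. The configurations and their (time, energy, error) values are hypothetical, and all three objectives are assumed to be minimized.

```python
def dominates(a, b) -> bool:
    """True if a is no worse than b in every objective and better in at least one."""
    return (all(x <= y for x, y in zip(a, b))
            and any(x < y for x, y in zip(a, b)))


def pareto_front(points: dict) -> list:
    """Names of the configurations that no other configuration dominates."""
    return sorted(
        name for name, p in points.items()
        if not any(dominates(q, p) for other, q in points.items() if other != name)
    )


# Hypothetical results: (time in s, energy in J, error rate), all minimized.
configs = {
    "cfg1": (10.0, 500.0, 0.02),
    "cfg2": (12.0, 400.0, 0.02),
    "cfg3": (12.0, 520.0, 0.03),
    "cfg4": (8.0, 700.0, 0.05),
}
front = pareto_front(configs)
print(front)
```

Here `cfg3` drops out because `cfg1` is at least as good on every objective, while the remaining three represent genuine trade-offs that a single score would collapse.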

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| AI | Artificial Intelligence |

| BFS | Breadth-First Search |

| BWA | Burrows–Wheeler Aligner |

| CAGR | Compound Annual Growth Rate |

| CI | Confidence Interval |

| CO2-eq | Carbon Dioxide Equivalent |

| DevOps | Development and Operations |

| DMIPS | Dhrystone Million Instructions Per Second |

| DOI | Digital Object Identifier |

| GATK | Genome Analysis Toolkit |

| GB | Gigabyte (memory unit) |

| GIAB | Genome in a Bottle (consortium for high-confidence reference genomes) |

| GM | Geometric Mean |

| GPU | Graphics Processing Unit |

| HPC | High-Performance Computing |

| HPCG | High-Performance Conjugate Gradient |

| HPL | High-Performance LINPACK |

| indels | Insertions and deletions |

| I/O | Input/Output |

| KAN | Kolmogorov-Arnold Networks |

| M | Millions |

| min | Minute (time unit) |

| MFLOPS | Mega Floating-Point Operations Per Second |

| MIPS | Millions of Instructions Per Second |

| ML | Machine Learning |

| NLP | Natural Language Processing |

| NUMA | Non-Uniform Memory Access |

| NVML | NVIDIA Management Library |

| OS | Operating System |

| RAPL | Running Average Power Limit |

| RNA-seq | Ribonucleic Acid sequencing |

| SNP | Single Nucleotide Polymorphism |

| SPEC | Standard Performance Evaluation Corporation |

| SSSP | Single-Source Shortest Path |

| TDP | Thermal Design Power |

| TPC | Transaction Processing Performance Council |

| TPU | Tensor Processing Unit |

| W | Watt (unit of power) |

| Wh | Watt-hour (unit of energy) |

References

- Weicker, R.P. An Overview of Common Benchmarks. Computer 1990, 23, 65–75.

- Eeckhout, L. Computer Architecture Performance Evaluation Methods, Synthesis Lectures on Computer Architecture; Springer: Cham, Switzerland, 2010.

- Quinonero-Candela, J.; Sugiyama, M.; Schwaighofer, A.; Lawrence, N.D. Dataset Shift in Machine Learning; MIT Press: Cambridge, MA, USA, 2009.

- Dobin, A.; Davis, C.A.; Schlesinger, F.; Drenkow, J.; Zaleski, C.; Jha, S.; Batut, P.; Chaisson, M.; Gingeras, T.R. STAR: Ultrafast Universal RNA-seq Aligner. Bioinformatics 2013, 29, 15–21.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In MICCAI 2015; LNCS 9351; Springer: Cham, Switzerland, 2015; pp. 234–241.

- Peng, R.D. Reproducible Research in Computational Science. Science 2011, 334, 1226–1227.

- Barroso, L.A.; Hölzle, U. The Case for Energy-Proportional Computing. Computer 2007, 40, 33–37.

- Dixit, K.M. The SPEC Benchmarks. Parallel Comput. 1991, 17, 1195–1209.

- Weicker, R.P. Dhrystone: A Synthetic Systems Programming Benchmark. Commun. ACM 1984, 27, 1013–1030.

- Curnow, H.J.; Wichmann, B.A. A Synthetic Benchmark. Comput. J. 1976, 19, 43–49.

- Dongarra, J.; Luszczek, P.; Petitet, A. The LINPACK Benchmark: Past, Present, and Future. Concurr. Comput. Pract. Exp. 2003, 15, 803–820.

- Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. BMJ 2021, 372, n71.

- Dongarra, J.J. Performance of Various Computers Using Standard Linear Equations Software. ACM SIGARCH Comput. Archit. News 1992, 20, 22–44.

- Graph500 Steering Committee. Graph500 Benchmark Specification. Available online: https://graph500.org/ (accessed on 27 June 2025).

- MLCommons Power Working Group. MLPerf Power: Benchmarking the Energy Efficiency of Machine Learning Systems from µW to MW. arXiv 2024, arXiv:2410.12032.

- McKenna, A.; Hanna, M.; Banks, E.; Sivachenko, A.; Cibulskis, K.; Kernytsky, A.; Garimella, K.; Altshuler, D.; Gabriel, S.; Daly, M.; et al. The Genome Analysis Toolkit: A MapReduce Framework for Analyzing Next-Generation DNA Sequencing Data. Genome Res. 2010, 20, 1297–1303.

- DePristo, M.A.; Banks, E.; Poplin, R.; Garimella, K.V.; Maguire, J.R.; Hartl, C.; Philippakis, A.A.; Angel, G.; Rivas, M.A.; Hanna, M.; et al. A Framework for Variation Discovery and Genotyping Using Next-Generation DNA Sequencing Data. Nat. Genet. 2011, 43, 491–498.

- Zook, J.M.; Catoe, D.; McDaniel, J.; Vang, L.; Spies, N.; Sidow, A.; Weng, Z.; Liu, Y.; Mason, C.E.; Alexander, N.; et al. An Open Resource for Accurately Benchmarking Small Variant and Reference Calls. Nat. Biotechnol. 2019, 37, 561–566.

- Krusche, P.; Trigg, L.; Boutros, P.C.; Mason, C.E.; De La Vega, F.M.; Moore, B.L.; Gonzalez-Porta, M.; Eberle, M.A.; Tezak, Z.; Lababidi, S.; et al. Best Practices for Benchmarking Germline Small-Variant Calls in Human Genomes. Nat. Biotechnol. 2019, 37, 555–560.

- Mattson, P.; Reddi, V.J.; Cheng, C.; Coleman, C.; Diamos, G.; Kanter, D.; Micikevicius, P.; Patterson, D.; Schmuelling, G.; Tang, H.; et al. MLPerf: An Industry Standard Benchmark Suite for Machine Learning Performance. IEEE Micro 2020, 40, 8–16.

- Bucek, J.; Lange, K.-D.; von Kistowski, J. SPEC CPU2017: Next-Generation Compute Benchmark. In ICPE Companion; ACM: New York, NY, USA, 2018; pp. 41–42.

- TOP500. TOP500 Supercomputer Sites. Available online: https://www.top500.org/ (accessed on 27 June 2025).

- Williams, S.; Waterman, A.; Patterson, D. Roofline: An Insightful Visual Performance Model for Multicore Architectures. Commun. ACM 2009, 52, 65–76.

- Dongarra, J.; Heroux, M.; Luszczek, P. High-Performance Conjugate-Gradient (HPCG) Benchmark: A New Metric for Ranking High-Performance Computing Systems. Int. J. High Perform. Comput. Appl. 2016, 30, 3–10.

- SPEC. SPECpower_ssj2008. Available online: https://www.spec.org/power_ssj2008/ (accessed on 27 June 2025).

- Navarro-Torres, A.; Alastruey-Benedé, J.; Ibáñez-Marín, P.; Viñals-Yúfera, V. Memory Hierarchy Characterization of SPEC CPU2006 and SPEC CPU2017 on Intel Xeon Skylake-SP. PLoS ONE 2019, 14, e0220135.

- Fleming, P.J.; Wallace, J.J. How not to lie with statistics: The correct way to summarize benchmark results. Commun. ACM 1986, 29, 218–221.

- Smith, J.E. Characterizing Computer Performance with a Single Number. Commun. ACM 1988, 31, 1202–1206.

- Dongarra, J.; Strohmaier, E. TOP500 Supercomputer Sites: Historical Data Archive. Available online: https://www.top500.org/statistics/perfdevel/ (accessed on 15 October 2025).

- Poess, M.; Nambiar, R.O. TPC Benchmarking for Database Systems. SIGMOD Rec. 2008, 36, 101–106.

- Reddi, V.J.; Cheng, C.; Kanter, D.; Mattson, P.; Schmuelling, G.; Wu, C.J.; Anderson, B.; Breughe, M.; Charlebois, M.; Chou, W.; et al. MLPerf Inference Benchmark. In Proceedings of the 2020 ACM/IEEE 47th Annual International Symposium on Computer Architecture (ISCA), Valencia, Spain, 30 May–3 June 2020; pp. 446–459.

- Brücke, C.; Härtling, P.; Palacios, R.D.E.; Patel, H.; Rabl, T. TPCx-AI—An Industry Standard Benchmark for Artificial Intelligence. Proc. VLDB Endow. 2023, 16, 3649–3662.

- Standard Performance Evaluation Corporation (SPEC). SPEC CPU2017. Available online: https://www.spec.org/cpu2017/ (accessed on 27 June 2025).

- Gschwandtner, P.; Fahringer, T.; Prodan, R. Performance Analysis and Benchmarking of HPC Systems. In High Performance Computing; Springer: Berlin/Heidelberg, Germany, 2013; pp. 145–162.

- Hähnel, M.; Döbel, B.; Völp, M.; Härtig, H. Measuring Energy Consumption for Short Code Paths Using RAPL. SIGMETRICS Perform. Eval. Rev. 2012, 40, 13–14.

- Baruzzo, G.; Hayer, K.E.; Kim, E.J.; Di Camillo, B.; FitzGerald, G.A.; Grant, G.R. Simulation-based comprehensive benchmarking of RNA-seq aligners. Nat. Methods 2017, 14, 135–139.

- Houtgast, E.J.; Sima, V.M.; Bertels, K.; Al-Ars, Z. Hardware acceleration of BWA-MEM genomic short read mapping for longer read lengths. Comput. Biol. Chem. 2018, 75, 54–64.

- Association for Computing Machinery. Artifact Review and Badging Version 1.1. Available online: https://www.acm.org/publications/policies/artifact-review-and-badging-current (accessed on 20 October 2025).

- Wilkinson, M.D.; Dumontier, M.; Aalbersberg, I.J.; Appleton, G.; Axton, M.; Baak, A.; Blomberg, N.; Boiten, J.W.; da Silva Santos, L.B.; Bourne, P.E.; et al. The FAIR Guiding Principles for scientific data management and stewardship. Sci. Data 2016, 3, 160018.

- IEEE 2675-2021; IEEE Standard for DevOps: Building Reliable and Secure Systems Including Application Build, Package, and Deployment. IEEE Standards Association: Piscataway, NJ, USA, 2021. Available online: https://standards.ieee.org/ieee/2675/6830/ (accessed on 1 November 2025).

- Patro, R.; Duggal, G.; Love, M.I.; Irizarry, R.A.; Kingsford, C. Salmon provides fast and bias-aware quantification of transcript expression. Nat. Methods 2017, 14, 417–419.

- Menon, V.; Farhat, N.; Maciejewski, A.A. Benchmarking Bioinformatics Applications on High-Performance Computing Architectures. J. Bioinform. Comput. Biol. 2012, 10, 1250023.

- Tröpgen, H.; Schöne, R.; Ilsche, T.; Hackenberg, D. 16 Years of SPECpower: An Analysis of x86 Energy Efficiency Trends. In Proceedings of the 2024 IEEE International Conference on Cluster Computing Workshops (CLUSTER Workshops), Kobe, Japan, 24–27 September 2024.

- Che, S.; Boyer, M.; Meng, J.; Tarjan, D.; Sheaffer, J.W.; Lee, S.-H.; Skadron, K. Rodinia: A Benchmark Suite for Heterogeneous Computing. In Proceedings of the 2009 IEEE International Symposium on Workload Characterization (IISWC), Austin, TX, USA, 4–6 October 2009; pp. 44–54.

- Peng, Y.; Wang, Y.; Hu, F.; He, M.; Mao, Z.; Huang, X.; Ding, J. Predictive Modelling of Flexible EHD Pumps Using Kolmogorov–Arnold Networks. Biomimetics Intell. Robot. 2024, 4, 100184.

- Mytkowicz, T.; Diwan, A.; Hauswirth, M.; Sweeney, P.F. Producing Wrong Data Without Doing Anything Obviously Wrong! In Proceedings of the 14th International Conference on Architectural Support for Programming Languages and Operating Systems (ASPLOS’09), Washington, DC, USA, 7–11 March 2009; pp. 265–276.

- Reddi, V.J.; Kanter, D.; Mattson, P. MLPerf: Advancing Machine Learning Benchmarking. IEEE Micro 2024, 44, 56–64.

| Benchmark | Year | Primary Purpose | Strengths | Limitations |

|---|---|---|---|---|

| Whetstone | 1976 | Measure floating-point performance | First widely used synthetic benchmark; reproducible | Poor representativeness; compiler dependent |

| Dhrystone | 1984 | Measure integer performance | Simplicity; portability; massive popularity (DMIPS) | Easily optimized; not reflective of real workloads |

| LINPACK | 1979 | Solve dense systems of linear equations | Representative of scientific workloads; precursor of TOP500 | Very specific to linear algebra; limited generality |

| SPEC | 1988 | Suite of real-world applications (C, Fortran) | Greater realism; industrial standardization | Costly to execute; administratively complex |

| No. | Schema Component | GATK Best Practices Example | Coverage Assessment |

|---|---|---|---|

| 1 | Workload Definition | GATK 4.2.0.0 germline short variant discovery pipeline (HaplotypeCaller) on whole-genome sequencing (WGS) data; representative of clinical diagnostic workflows | Complete |

| 2 | Quality Criteria | F1-score = 0.9990 for SNPs (>0.999 required), F1-score = 0.9972 for indels on GIAB HG002 truth set v4.2.1; stratified by high-confidence regions | Complete |

| 3 | System Configuration | Dual Intel Xeon Platinum 8360Y (36 cores each, 2.4 GHz base, 3.5 GHz boost); 512 GB DDR4-3200 ECC RAM; 2 × 960 GB NVMe SSD (RAID 0); no GPU; TDP: 250 W per socket | Complete |

| 4 | Software Stack | Ubuntu 20.04.4 LTS (kernel 5.4.0-125); OpenJDK 11.0.16; GATK 4.2.0.0; BWA-MEM 0.7.17; Picard 2.26.10; SAMtools 1.15.1; compilation flags: default JVM heap 32 GB; Docker image: broadinstitute/gatk:4.2.0.0 (sha256:7a5e… [truncated]) | Complete |

| 5 | Execution Methods | 5 independent replicates per sample; random seeds not documented for GATK (affects downsampling reproducibility); thread affinity not specified; NUMA placement: default OS scheduler; no explicit warm-up runs reported | Partial |

| 6 | Input Data | Genome in a Bottle (GIAB) HG002 (son of the Ashkenazi Jewish trio), 30× WGS Illumina NovaSeq 6000 (2 × 150 bp); FASTQ files: NCBI SRA accession SRR7890936; reference: GRCh38 with decoys (1000G DHS); license: CC BY 4.0; data size: ~300 GB raw FASTQ | Complete |

| 7 | Performance Metrics | Mean wall-clock time: 4.2 h ± 0.3 h (95% CI) for complete pipeline (alignment through VCF); peak memory: 47 GB; throughput: N/A (single sample); energy consumption: not reported. Latency breakdown: alignment (1.8 h), marking duplicates (0.4 h), BQSR (0.6 h), HaplotypeCaller (1.4 h) | Partial |

| 8 | Artifacts and Reproducibility | GitHub repository: sample configuration files (JSON) and Cromwell WDL workflow available at https://github.com/gatk-workflows/gatk4-germline-snps-indels commit abc123f; raw output VCFs NOT deposited; no persistent DOI for computational artifacts; execution logs: summarized in Supplementary Materials but not machine-readable format | Partial |

| Domain | Main Benchmark(s) | Metrics Evaluated | Current Challenges |

|---|---|---|---|

| High-Performance Computing (HPC) | TOP500 (LINPACK), HPCG, Graph500 | FLOPS, efficiency in linear algebra, and graph workloads | Overemphasis on LINPACK; poor representativeness of heterogeneous workloads |

| Artificial Intelligence | MLPerf | Training and inference performance of deep learning models | Hardware-specific bias; reproducibility complexity |

| Databases and Cloud Computing | TPC (TPC-C, TPC-H, etc.) | Throughput, latency, scalability | Rapid evolution of distributed architecture; cost-efficiency metrics |

| Biomedical Sciences | Genomics and medical imaging benchmarks | Processing time, accuracy, reproducibility | Clinical relevance; diversity of workloads |

| Benchmark Type | System A Rank | System B Rank | System C Rank | Why? |

|---|---|---|---|---|

| Dhrystone (integer) | 1 | 3 | 2 | Favors high clock frequency, small code footprint |

| LINPACK/HPL (dense FP) | 3 | 1 | 2 | Rewards parallelism, memory bandwidth |

| SPEC CPU2017 (mixed) | 1 | 2 | 3 | Application mix emphasizes single-core performance |

| Graph500 (sparse, irregular) | 2 | 1 | 3 | Memory latency and irregular memory access patterns dominate |

| MLPerf Training (AI) | 3 | 2 | 1 | Specialized tensor operations and high memory bandwidth |

| TPC-C (OLTP database) | 2 | 1 | 3 | I/O latency and multi-threaded concurrency |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zablah, I.; Sosa-Díaz, L.; Garcia-Loureiro, A. Relevance and Evolution of Benchmarking in Computer Systems: A Comprehensive Historical and Conceptual Review. Computers 2025, 14, 516. https://doi.org/10.3390/computers14120516
