Abstract

In this paper, we explore a framework for assessing the implementation of IT strategic planning, grounded in the combination and iteration of different methods. It is part of an Action Design Research exercise being carried out at a leading European online university. The assessment combines three main dimensions (strategy, performance and governance), drawn from the professional and academic literature. Its application to this context through a varied scaffolding of methods, tools and techniques seems robust and helpful for working with senior business and IT stakeholders. It allows quick deployment, even in a complex institutional environment.

1. Introduction

IT strategy formulation, long known as Strategic Information Systems Planning (SISP), is experiencing a period of far-reaching renewal that affects both the content and the processes of strategy making [1]. This is due to two reasons of a different nature: the convergence of business and IT strategies in what has been called Digital Transformation [2] and, from a research standpoint, the reinforcement of the “Strategy as Practice” school [3,4]. Strategy is now considered an ongoing social process, and the literature has been drawn to “the realities of strategy formation” [5] (p. 372), with an increased focus on incremental planning, program implementation and organizational learning. However, throughout this evolution, little attention has been paid to IS strategy implementation itself, let alone to the assessment of the implementation process and outcomes [6,7].

For our part, following Earl [8] and Peppard [9], we assume that evaluating the implementation of IT strategic plans on a periodic basis is key: (a) to attain the results of the intended strategies; (b) to adapt and update them to emerging threats and opportunities; (c) to facilitate common understanding and ownership of the information projects between the business and the IT leaders; and (d) to ensure organizational learning and transformation. The latter has been considered one of the most challenging issues facing academic institutions [10]. This kind of exercise needs to be carefully adapted to each individual context, taking into account the design criteria of the original Plan and the conditions of execution over time, and must be properly conducted, executed and communicated.

This article is part of long-range, practice-oriented research on the process of IT strategy making at the Universitat Oberta de Catalunya (UOC), a leading European online university. We participated in the preparation of its SISP (named Information Systems Master Plan, ISMP) [11] in 2014, the evaluation of its execution, conducted through the summer of 2017, and its update in 2018. The first author of this paper (hereafter, “the researcher”) was a member of the lead team of the project, working in a cooperative Action Design Research mode [12]. With this approach, we intend both to conduct research while being involved in a specific customer practice (“action”) and to create artifacts that may prove useful for practitioners and academicians (“design”). The piece we present here collects the process, methods and results of the evaluation or assessment phase and the major consequences for the update and further development of the Plan.

Our aim is to validate existing models for assessing the implementation of IT strategies in complex organizations and to explore a novel framework that may prove useful both for practitioners and researchers. Therefore, our goal is not to provide an external oversight or audit of the institutional practices, or to compare the results of the assessment with an existing construct or with other results, but to analyze the assessment process itself, in order to improve the existing methods and explore avenues for generalizing the proposed scheme to different contexts.

For that purpose, following the suggestion of “cycles and choices” by Salmela and Spil [13], we designed a tentative analytical model made up of three dimensions and two related concepts for each dimension. These categories are: (1) Strategy (meaning alignment and ability to respond); (2) Performance (that includes benefits realization and program execution); and (3) Governance (covering satisfaction of the major stakeholders and the mechanisms of program governance). We interpreted each dimension in the light of the relevant literature, both professional and academic, from very different fields. We found that, when dealing with execution, the researcher is drawn beyond strategic planning to studies on impact, success, satisfaction, architecture, and program management, among others.

For the “alignment” dimension, we mainly used the canonical SAM model by Henderson and Venkatraman [14] and some of its updates. For “intended vs. realized strategies”, we took the conceptual approach of Chan et al. [15] and its methodological applications by Kopmann et al. [16] and Salmela and Spil [13]. For “benefits realization”, we chose standard benefits libraries, such as the one provided by Hunter et al. [17]. For “program management”, we considered those authors who show a more strategic orientation, e.g., Dye and Pennypacker [18] and Thiry [19]. For “satisfaction” of stakeholders, we followed the guidelines from Galliers [20] and some classical references on impact and success of IS. For “governance”, we chose professional sources, such as ISACA’s COBIT [21], among others. Section 2.1 presents a complete depiction of our main references.

This manuscript is an extension of an EMCIS 2018 paper [22]. We provide a more in-depth analysis of the state of the art, a detailed layout of the methods that make up the analytical framework and a further discussion of the case. This includes additional materials about its planning, budget, execution and a new section on the consequences for the updating of the Plan and its governance. We have also incorporated some quotations showing the point of view of the leading participants and our own observations.

In the remainder of this paper, Section 2 summarizes relevant research in the field of IT strategy, with a special focus on the evaluation of IT strategy execution; it also presents and discusses the main references that ground our proposed analytical model. Section 3 provides basic information on the context and the setting of the research. Section 4 describes the research approach, methods and tools, and the project organization and planning. Section 5 highlights the main results of the evaluation process, presents some samples of the artifacts and outcomes of the exercise and summarizes the consequences and further developments that are being applied by the institution after the evaluation project. Finally, Section 6 concludes with a discussion and proposals for academicians and practitioners.

2. Related Research

The study of Strategic Information Systems Planning (SISP) as a formal exercise “to provide an organization with a comprehensive portfolio of IT assets to support its major business processes and to enable its transformation, achieving durable competitive advantage” [23] has been the object of substantial scholarly attention since the 1980s. The works by Earl [8], refined over time, and Lederer [23] with different co-authors, remain the reference of the classical school of IT strategic planning. In Earl’s view, plans should be checked and updated every year. Given the reported lack of implementation or severe implementation issues, the focus was put on the quality of the design and formulation of the IT strategy, and a long series of articles was published to identify critical success factors (CSFs) and prescriptions for better strategy planning [23,24,25,26,27,28,29,30,31]. The major empirical basis on CSFs for better planning is the extensive research by Doherty et al. [29], after Earl’s model.

Nevertheless, much less interest has been shown in IS strategy implementation, let alone the evaluation of the implementation process and results, which is the focus of this work [6,8,15,20,32,33,34,35,36,37]. In 2008, Teubner and Mocker [6] reviewed a sample of 434 papers published in major MIS journals between 1977 and 2001. Of those, only 21 were related to implementation. Using a different methodology, in 2013, Amrollahi et al. [7] found nine papers on implementation and eight on evaluation, out of 102 papers on SISP published between 2000 and 2009. After reviewing those papers and some more recent ones, we chose the comprehensive approach to continuous planning proposed by Salmela and Spil [13], who put forward a framework of “cycles” and “choices” of planning that can be flexibly adapted to the needs, context and maturity of each organization and can be improved and refined over time.

Nowadays, the prevalent school in the field of IT strategy (as had been the case shortly before for business strategy) advocates considering strategic planning as a contingent [38] and ongoing social process in organizations [39,40], aimed at building up new capabilities and blurring the dichotomy between the MIS and business domains [41]. This has been called strategizing [1] or strategy as practice [42]. Subsequently, a more comprehensive “practice turn” in strategy research has been proposed [3,4,13,43,44]. The literature has shifted towards “the realities of strategy formation” [5], such as incremental planning and cultural and organizational transformation. “Agile” strategic planning [45] or even, in some cases, the lack of an explicit and formal IT strategy plan have been praised as predictors of better IT strategy execution [46]. A summary of these trends is shown in Table 1.

Table 1.

SISP vs. SAP: Main features.

As regards higher education, SISP and IT strategy formulation as an instrument for industry transformation and the acquisition of competitive advantage are not rare in the literature [10,47,48,49,50,51,52]. This has been the object of single or multiple case studies in different contexts [53,54,55,56,57,58,59,60,61]. Sabherwal [48] and Clarke [56] analyzed the combination of different methods in strategy planning. Kirinic [52] introduced the concept of maturity levels. Effective use and adoption of IT and social and organizational issues, such as governance, management of stakeholders, technology adoption and change management (e.g., “strategies for winning faculty support for teaching with Technology” [49]), have been the major topics of interest [10,62,63,64,65,66,67,68,69].

2.1. Main References

Following Salmela and Spil’s [13] approach, we selected from the analysis of the academic and professional literature, and discussed with the customer (in this context, “customer” is the usual term in Action Research [70]), a model of assessment aimed at examining the main achievements and pitfalls in the execution of the Plan. In our conceptual research and practical intervention, we found that IT strategy execution is a space where strategy planning, IT governance, enterprise architecture, project portfolio management and the “success” of information systems, among other disciplines, necessarily meet.

From these suggestions and others of a practical nature (information availability, coordination costs, and time-frame), we designed a scheme based on three major conceptual dimensions of analysis and two categories of key concepts for each dimension (Table 2): (a) the contribution of the IS strategy to the business strategy; (b) the effectiveness of the strategy implementation; and (c) the quality of governance. Next, we reflect on the main sources used for each analytical dimension.

Table 2.

Main references.

- Strategy represents strategic alignment over time. We borrow first the Strategic Alignment Model (SAM) as proposed by Henderson and Venkatraman [14], taking into account some more recent discussions, e.g., those of Chan and Reich [71], Coltman et al. [72] and Juiz and Toomey [73]. As for the ability to cope with new threats and opportunities, we took the classical approach by Mintzberg and Waters [74] for business strategy and Chan et al. [15] for IT strategy. Kopmann et al. [16] and Salmela and Spil [13] provide further practical considerations for the method of application.

- Performance is related to the effectiveness of IS strategy application, either from an external point of view (the acquisition of business benefits) or an internal one (the quality and efficiency of program execution). For benefits realization, we took the repertoire of methods and libraries of examples provided by different academic and professional authors, mainly Parker et al. [76], Ashurst et al. [77], Ward and Daniel [78] and Hunter et al. [17] from Gartner. As to program execution, we chose authors who provide a more strategically driven approach to project portfolio management, such as Dye and Pennypacker [18], Thiry [19], Meskendahl [79] and again Kopmann et al. [16].

- Under Governance, we include both the feedback provided by key participants about the implementation of the strategy and those issues related to program management and governance mechanisms. As to the first part, we took advantage of the guidelines provided by Galliers [20]; we also drew on some classical references of the literature on impact, as in Gable et al. [82], and on the success of IS, as in the works by DeLone and McLean [80,81] and Petter et al. [83]. Program governance and management, from a strategic standpoint, is well represented in the books by Dye and Pennypacker (eds.) [18] and Thiry [19] and in the article by Bartenschlager et al. [84]. Finally, ISACA’s COBIT is a professional standard that is attracting increased interest from academia, especially in the higher education industry.

3. Research Context

The UOC is the oldest fully online university in the world. Founded in 1995, it now enrolls 70,000 students, has 400 full-time professors and 4300 associate part-time professors, provides 77 official graduate programs and runs a budget of 108.7 M€ (millions of euros). It operates within a public–private funding and governance regime, in a highly regulated environment. The current governing body, appointed in 2013, designed an ambitious growth and transformation strategy [85] of which the ISMP for the period 2014–2018 was an instrumental part. The annual budget allocated to the Plan is about 3 M€, out of a total IT budget of 8.7 M€. The IS department (led by an IT Director or “CIO”, who reports to the Chief Operations Officer) has 53 internal and 85 external full-time employees.

The IT expenditure vs. revenue ratio and the weight of the strategic or “transformational” projects within the portfolio of IT assets are substantial and could be compared with the figures of digital industries [86], such as software and Internet services. Being a pure digital player makes the effective exploitation of information technologies paramount for the UOC in the global and rapidly evolving market of higher education and life-long learning [87,88,89,90,91,92].

The ISMP was structured in ten “strategic initiatives” (meaning groups of programs and projects aimed at a single business objective) and 42 individual projects to be deployed over a period of four years (2015–2018). Table 3 shows the major strategic initiatives that form the ISMP.

Table 3.

Structure of the information systems master plan.

The themes of the original ISMP were oriented to the transformation of business processes and IT infrastructure. It is worth noting the weight of those cross-organizational programs covering the core processes of the value chain (CRM, SIS, LMS, and BI), which amount to 29.3% of the total, and those considered “infrastructural” (IT Architecture and Infrastructure, Mobile, UX, Security), representing 43.1% of the planned budget. Also noticeable is the small proportion allocated to the Learning Management System, as compared to other management infrastructure processes. When discussing this issue with the former COO and main sponsor of the ISMP, he cited the lack of a clear view on how the LMS should evolve, the difficulty of articulating strong leadership within the academy and the absence of robust, mature technology solutions in the marketplace as the main reasons for this outcome.

Since its original conception, the ISMP was designed as: (a) a top-down transformation program; (b) aimed at renewing the core business applications and the technology infrastructure base; (c) ruled by the top management; (d) led and executed by the CIO (Chief Information Officer); and (e) supported by a program office [11].

As to the assessment, which is the object of this paper, it is the initial part of a broader exercise to update the ISMP, that is: (a) to re-align the Plan with the Strategic assumptions of the business (as expressed in the update of the institution Strategic Plan); (b) to identify new IT strategic initiatives for the years to come; (c) to get new funding for them; and (d) to set up new governance arrangements. In the words of the current COO and former CIO:

“We learned that Strategic Planning was the proper way to focus and preserve transformation and that transformation was not still complete... and maybe never will.”

The whole project of updating the Plan was executed from June 2017 to March 2018. The assessment phase, which is the object of this article, took place between June and September 2017.

4. Research Model: Methods and Arrangements

4.1. Research Methods

The overall approach of this research has been Action Design Research [12]. A toolkit has been proposed for the deployment of the assessment. It is grounded on the analytical dimensions that we presented in the related research section and combines different methods, tools and techniques. For instance, a case study approach was adopted to understand the original ISMP and the changes produced over time. An independent quantitative and qualitative survey was commissioned to capture the satisfaction and feedback of the major stakeholders. These different work-streams are correlated and the process works through a number of iterations. The timing, the content and the setting of individual and group interactions were critical, as was their preparation through previous analysis of the bulk of materials produced by the program office and the project leaders. A summary of this toolkit is presented in Table 4. The assessment was completed in ten weeks. Forty-two people of different ranks (mainly top and middle managers) took part, with an estimated effort of 800 person-hours (for a better understanding of the different participants and their roles, please see Section 4.2).

Table 4.

Research methods.

To complete our research purposes, an additional round of in-depth reflective interviews with members of the project team, the sponsors and the Steering Committee was conducted between October and December 2017. Some of their insights are reflected in this paper.

4.2. Project Organization

To conduct this effort and to prepare a proposal for the Executive Board of the University, a Steering Committee (SC) and a project team (PT) were set up. The researcher was commissioned by the university as the project co-leader. He took part in most of the workshops and meetings and personally carried out individual interviews with prominent members of the management and the faculty. This commission was made explicit, both as a support to the management and as an Action Research exercise. A Memorandum of Understanding between the institution and the researcher was signed. The researcher could work with scientific rigor, freedom of action and independence, but his proposals regarding the method had to be adapted to the available information and the organizational context, within a demanding time-frame. He also took part in the preparation and discussion of the conclusions with the PT. An organization chart of the project is shown in Table 5.

Table 5.

Project organization.

Let us consider the governance arrangements for the assessment project. First, it is worth recalling that the main object of the assessment was to build a case for the updating and renewal of the ISMP. This explains the leading role of the CIO, who reports to the COO, and why both took the status of sponsors.

During the execution of the ISMP over the past years, the governance focus had been put on monitoring and controlling the program and approving budgets and major changes. A small committee formed by the CEO, two deputy Managing Directors (COO and CFO) and the CIO was in charge. In contrast, for the evaluation, the Steering Committee was extended with the involvement of the Vice-Chancellor of Teaching and the dean of one of the schools. In effect, this sent an early signal to the academy that the power of decision and resource allocation would be re-balanced and that the weight of those projects related to learning and research would increase. Finally, this new scheme facilitated the involvement of a large number of participants in the evaluation process.

The project team included not only the members of the Program Management Office in charge of the ISMP, but also the IT Demand Manager and her teams, i.e., the group of IT managers with the best knowledge of, and closeness to, the leaders of the business and the academy and their needs. This combination should also allow better reflection on the value provided by the Plan to the business outcomes and, to some extent, break the flawed customer–provider relationship between IT and users.

In our view, those arrangements were vital not only for the success of the project but also for the further governance of the whole program, i.e., the updated ISMP. The assessment acted as a trial and a preview of subsequent schemes of planning, organization and governance.

4.3. Project Plan

A more detailed outline of the project plan is shown in Table 6. Four work-streams were set up, based on the conceptual dimensions of analysis and adapted to the practical working context: (1) Strategic Alignment; (2) Program Execution; (3) Benefits Realization; and (4) Program Management. The work did not proceed in a strictly sequential manner. The working plan was as follows. First, the IT teams put together the internal information and elaborated their own estimate. Later, the Project Management Office, also constituted as the project office of the assessment exercise, normalized these inputs. Finally, the project team, with the support of the Head of the IT Business Partnership group, prepared the sessions with the business units.

Table 6.

Project planning.

The latter was an unusual event for both parties: working out program execution or business benefits from IT projects (work-stream 2), deriving success metrics (work-stream 3) and taking a common stance was not that easy. Often, the business units’ approach was to focus on pending demands and to take advantage of the event to formulate new claims. Sometimes the IT leaders took a defensive stance. However, in most cases, the exercise was perceived as positive and established a common ground for the phases to come: building up a shared view of IT initiatives in terms of business benefits and a joint commitment to determine new demands for the updated program. It was also an opportunity for growth for the IT teams, more accustomed to executing well-defined projects with contractors than to reflecting on business value and interacting with business leaders and managers. The researcher participated in most of these workshops.

For work-streams 1 (strategic alignment) and 4 (program management), the approach was quite different. The project office prepared draft documents to compare the initial outline, the actual results and the alignment with the recently updated Strategic Plan of the UOC. The researcher, acting as project co-director, held individual discussions with the top management. These differences in approach are worth noting. When dealing with business strategy issues, the project leaders needed to carefully evaluate, for every section of the assignment, which parties should be involved and how to work with them.

Finally, it is worth mentioning the importance of providing ongoing feedback to the sponsors and of preparing “professional” (not academic) materials and presentations for the SC.

5. Results

Next, we show the main results of the assessment process, arranged according to the selected dimensions (Table 2) and work streams (Table 4). We also show some samples of the analysis and comment on them. Not surprisingly, the different dimensions are strongly related and should be interpreted together as a coherent whole.

5.1. Strategy

5.1.1. Strategic Alignment

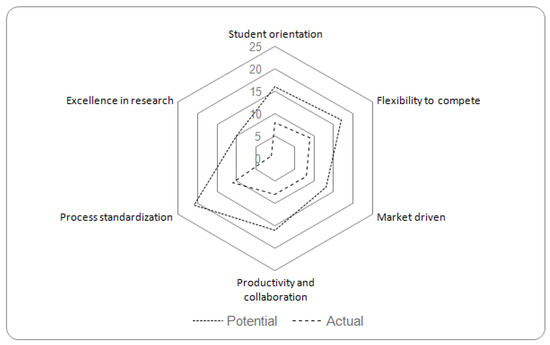

The main business objectives were grouped into six categories, and each IT strategic initiative was rated on five levels of accomplishment, according to its potential vs. actual impact on each category. Those categories were based upon the strategic axes of the Strategic Plan of the UOC 2015–2018 that had been used for the preparation of the ISMP: (1) Student and UOC community orientation; (2) Flexibility and scalability to globally compete and cooperate; (3) Market orientation and business competitiveness; (4) Communication, collaboration and networking; (5) Process standardization and personalization; and (6) Excellence in research and space for innovation.

An impact matrix was prepared and discussed with the project team and the results were presented in a radar chart. The most successful initiatives were related to “process standardization”, “productivity and collaboration” and “flexibility to compete”, as compared to lower results in “excellence in research” and “student orientation” (Figure 1). In fact, those projects linked to the academic (learning and research) and academic support units show a lower level of execution and higher deviations than the rest.

Figure 1.

Strategic alignment.
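
As an illustration of how an impact matrix of this kind can be reduced to the values plotted in a radar chart, the following minimal Python sketch averages the ratings per category. It is not the tool used in the project: the function name `radar_values`, the use of a single 1 to 5 rating per cell (instead of separate potential and actual impact) and the sample ratings are assumptions made for the example.

```python
from statistics import mean

# The six strategic categories named in the text (labels shortened).
CATEGORIES = [
    "Student and UOC community orientation",
    "Flexibility and scalability",
    "Market orientation and competitiveness",
    "Communication, collaboration and networking",
    "Process standardization and personalization",
    "Excellence in research and innovation",
]


def radar_values(impact_matrix):
    """Average the 1-5 ratings per category across initiatives.

    impact_matrix maps an initiative name to {category: rating}.
    An initiative with no rating for a category does not contribute to it;
    a category with no ratings at all yields None.
    """
    values = {}
    for category in CATEGORIES:
        ratings = [row[category] for row in impact_matrix.values() if category in row]
        values[category] = round(mean(ratings), 2) if ratings else None
    return values


# Hypothetical ratings, for illustration only (not the figures of the case).
example = {
    "CRM": {CATEGORIES[0]: 3, CATEGORIES[2]: 5, CATEGORIES[4]: 4},
    "IT infrastructure": {CATEGORIES[1]: 5, CATEGORIES[4]: 4},
    "LMS": {CATEGORIES[0]: 2, CATEGORIES[5]: 2},
}

scores = radar_values(example)
```

Each value in `scores` would correspond to one axis of the radar chart. Averaging is only one possible aggregation; a weighted scheme (for instance, by budget) would be an equally plausible choice.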

It may be said that the most relevant contribution of IT over this period has been to enable growth and provide scale advantages, by delivering technical infrastructure and business process support to serve more than double the number of students enrolled and almost triple the program offering, while keeping the operational fixed costs constant. This seemed more than satisfactory in the opinion of the SC. For one of the members of the SC:

“It is simple: priorities were stability and growth; so, infrastructure, sales and control were put on top of the list”.

5.1.2. Intended and Realized Strategies

This dimension is related to the difference between the projects included in the plan and those that were effectively executed. The difference amounts to 2.1 M€ in a list of nine large projects, out of a total expenditure of 8.3 M€ in 23 large projects. Two of those unplanned schemes are related to major business shifts, such as the change of the branding concept and image and the new multimedia format of learning materials. Those decisions were made by the Board of Executive Directors. Some other changes were related to mandatory legal issues or to the management style and preferences of newly arrived top executives. It may be said that the organization showed flexibility to adapt to major strategic changes, at the expense of a budgetary deviation and a lower execution of some planned projects. In our observation, it also shows the political skills of the IT leaders in coping with and adapting to supervening circumstances.

This observation received mixed judgments among the members of the SC. According to the CIO:

“After the approval of the Master Plan, blunt execution was the focus; this stance privileged those mature well-defined projects with a clear and strong leadership against other with more potential strategic impact. When new demands arrive, we could make room for them without losing the focus.”

For the former COO and main sponsor of the ISMP:

“The good news is that those demands were not managed as a free fighting among business units or departments, but as well discussed and approved priorities at the top level of the institution.”

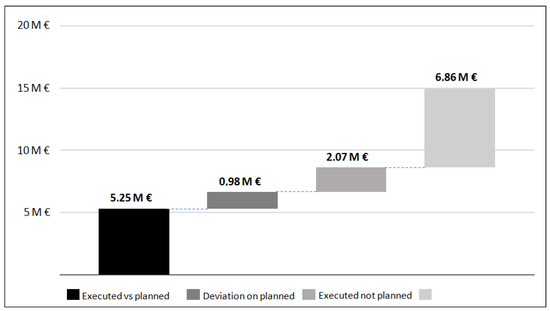

Figure 2 gives a complete picture of the execution results at the moment of the assessment. The initial outline (“intended”) and its deviation in execution are shown in Columns 1 and 2. Column 3 represents the new (“emergent” or “unintended”) projects approved and executed over the period. Column 4 shows the work in progress. These figures also explain by themselves the need to update the plan and obtain additional funding to cope with current demands (those still pending from the initial plan) and future ones.

Figure 2.

Budget breakdown as of June 2017.
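
To put the weight of the emergent projects in perspective, the figures reported in this subsection can be turned into simple ratios. The short Python sketch below does so under one assumption: that the nine projects amounting to 2.1 M€ are the unplanned ones, as the text suggests. The function name `emergent_share` is ours and purely illustrative.

```python
def emergent_share(emergent, total):
    """Fraction of a total that corresponds to unplanned (emergent) projects."""
    if total <= 0:
        raise ValueError("total must be positive")
    return emergent / total


# Figures reported in the text: expenditure in millions of euros, and project counts.
spend_share = emergent_share(2.1, 8.3)   # about 0.253
count_share = emergent_share(9, 23)      # about 0.391

print(f"Emergent projects: {spend_share:.1%} of spend, {count_share:.1%} of large projects")
```

Under that reading, roughly a quarter of the expenditure on large projects went to initiatives that were not in the original plan.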

5.2. Performance

5.2.1. Program Execution

We applied here the conventional “iron triangle” that compares the baselines of scope, time and cost with the realized outcomes. It explains the deviations within each planned project, not the emergence of new projects, which is discussed in the previous subsection. For the scope dimension, we broke down each major program into individual projects and each project into separate phases and milestones. The results show an execution level of 89.0% in scope. The deviation in budget was 14.2%. A detailed account of the execution by strategic initiative is shown in Table 7.

Table 7.

Project execution indicators.

Please note that the figures depicted in the table compare the plan with the parts completed as of June 2017 (when the assessment took place). Thus, the completion of scope is 56% against 62.5% as planned, and so on. The conclusion of the team was that scope and budget were fundamentally on plan. Another interesting observation is the small number of projects that needed to be reviewed in scope, apparently meaning that the initial plan was actionable without major adjustments. On the other hand, it is worth pointing out the difference between completion of scope and budget in some cases, explained by the characteristics of public procurement.
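
For readers who wish to reproduce this kind of comparison, the following Python sketch shows one way to compute scope and budget indicators against a baseline. It is a simplified illustration and not the calculation behind Table 7: the `InitiativeStatus` structure, the two ratio definitions and the sample values are assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class InitiativeStatus:
    name: str
    planned_scope: float  # share of milestones planned to date (0-1)
    actual_scope: float   # share of milestones completed to date (0-1)
    planned_cost: float   # budget planned to date
    actual_cost: float    # expenditure to date


def scope_execution(status):
    """Completed scope relative to the scope planned to date."""
    return status.actual_scope / status.planned_scope


def budget_deviation(status):
    """Relative over-spend (positive) or under-spend (negative) against plan."""
    return (status.actual_cost - status.planned_cost) / status.planned_cost


# Hypothetical initiative, for illustration only.
sample = InitiativeStatus(
    name="Sample initiative",
    planned_scope=0.625,
    actual_scope=0.50,
    planned_cost=1000.0,
    actual_cost=1100.0,
)

print(f"{sample.name}: scope {scope_execution(sample):.0%}, "
      f"budget deviation {budget_deviation(sample):+.0%}")
```

Reading the two indicators side by side makes visible the cases where spending runs ahead of, or behind, delivered scope.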

Major factors affecting execution were discussed within the team and with the project leaders. The results, shown in Table 8, are quite consistent with some of the former considerations. Where there was a clear project definition and a prospect of solution (for instance, a standard ERP), or where robust business and IT leadership existed and the governance was straightforward, execution excelled.

Table 8.

Factors affecting execution.

The project team also noted deficiencies in project measurement and monitoring. The researcher observed some difficulties in securing reliable data on the status and evolution of some projects, and the lack of more sophisticated project performance metrics, such as earned value or estimates to complete, let alone a numeric analysis of forecasting errors. This seems striking for an organization that is quite sophisticated in the fields of strategic planning and IT governance. For example, some days after the completion of the assessment, an unexpected significant deviation in scope, time and cost in the student information system initiative was declared by the contractor. In any case, the execution of the planned projects of the ISMP seemed satisfactory to the SC, which nevertheless noted the need for more professional program and project management arrangements. In the words of the CEO:

“The IT department has shown strong abilities to execute, but we should improve our professional project management capabilities.”

We reflect on this in the following section.
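
As a pointer to the kind of metrics mentioned above, the Python sketch below computes the standard earned value indicators (planned value, earned value, cost and schedule performance indexes, estimate at completion and estimate to complete). These metrics were not part of the assessment; the function name `earned_value_metrics`, the choice of the common EAC = BAC / CPI formula and the sample figures are assumptions made for illustration.

```python
def earned_value_metrics(bac, planned_pct, earned_pct, actual_cost):
    """Standard earned value indicators for a single project.

    bac          budget at completion
    planned_pct  share of the work scheduled to be done by now (0-1)
    earned_pct   share of the work actually done by now (0-1)
    actual_cost  cost incurred so far
    """
    if min(bac, planned_pct, earned_pct, actual_cost) <= 0:
        raise ValueError("all inputs must be positive")
    pv = bac * planned_pct          # planned value
    ev = bac * earned_pct           # earned value
    cpi = ev / actual_cost          # cost performance index (< 1 means over budget)
    spi = ev / pv                   # schedule performance index (< 1 means behind plan)
    eac = bac / cpi                 # estimate at completion, assuming CPI persists
    etc = eac - actual_cost         # estimate to complete
    return {"PV": pv, "EV": ev, "CPI": cpi, "SPI": spi, "EAC": eac, "ETC": etc}


# Hypothetical project, for illustration only.
metrics = earned_value_metrics(bac=1000.0, planned_pct=0.5, earned_pct=0.4, actual_cost=500.0)
for key, value in metrics.items():
    print(f"{key}: {value:.2f}")
```

In this hypothetical case both indexes fall below 1, which would flag a project that is simultaneously late and over budget well before the final deviation is declared.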

5.2.2. Benefits Realization

Of all the dimensions of analysis, this one was the least familiar and most difficult to deal with for the IT and business teams. It was also the most promising to share with mid-level managers, since it allows improving the quality and effectiveness of the dialogue between IT and business, in order to set or change priorities.

To prepare it, we first took several libraries of standard benefits, extracted from professional and academic sources (see Table 1), then shortlisted some of them for each major project and asked the IT project leaders to carry out a first review. Later, we went to the administrative and faculty management teams to give them feedback on the analysis and open a discussion on the realization of benefits, the specific performance impact and its measurement. In some cases, it was easy to identify key value indicators, obtain figures and find a direct or indirect relationship with program effectiveness; in others, it was not.

Unsurprisingly, the ability to obtain fresh and useful information on business success was again greater in those projects that dealt with sales effectiveness and technology services. In the first case, the implementation of a popular standard solution with minor integration with legacy and operational systems allowed massive automation of manual procedures, improved conversion, increased the effectiveness of sales staff and supported a significant rise in sales. In the second, the renewal of the technical infrastructure improved system uptime and response time and sharply reduced the cost to serve.

Table 9 provides some sample indicators. We show separately those metrics that are suitable and measure value (left) and those that only measure effort or activity (right). In our view, the interest of the exercise does not lie in how accurate or elegant the results are, but in the effort to set up new conversations between IT and business leaders. This experience was good enough to work out new demands, reflect on return on investment and set up new prioritization mechanisms when the time came to prepare the updated ISMP, some months later. In the words of the current CIO:

“When dealing with demand, the major challenge is to introduce ‘value’ into the equation. Many users, even business leaders, jump to ask for a solution, not so much to reflect on business needs and benefits. Similarly, when dealing with IT staff, the challenge is to move from how many things we do to how much value we provide.”

Table 9.

Suitability of the definition of Key Performance Indicators (samples).

5.3. Governance

5.3.1. Key Stakeholder’s Satisfaction

In February 2017, the customer commissioned a quantitative and qualitative survey from an external supplier, as a proxy for understanding the awareness, acceptance and commitment of executives, managers and key users (the senior IT contacts in every functional area) regarding the ISMP and the performance of the IT services. This survey was used as an input for discussion in the various forums of the project. The main results are presented in Table 10.

Table 10.

Key stakeholders’ satisfaction with the ISMP.

It is worth noting the high response rate. The results are rather positive. Interestingly, respondents, mainly from the faculty, show a relatively low level of awareness of the ISMP. Contribution to the corporate strategy achieves better scores than the response to individual needs. The major complaints from mid-level management related to a lack of information and to the response to demands for incremental improvements (evolutionary maintenance) of the current legacy systems.

As one middle manager put it:

“When we go and ask for a minor improvement, they say that it will be solved with the Master Plan.”

The satisfaction survey and individual interviews also voiced complaints about poor information on the priority-setting mechanisms and the overall progress of the Plan. In our observation, the greater the distance from decision making or the lower the involvement in key projects, the greater the disconnection or even disenchantment. As a second, conflicting but logical, outcome, the closer the relationship with projects perceived as failed or failing, the greater the frustration.

In our interviews, top business and IT management accepted these results as “basically expected”, since the focus of the ISMP was precisely on renovating the core of the enterprise IT and better responding to the corporate business strategy, and not as much on individual user demands. Nevertheless, the leader of the Office of the Plan accepted the lack of an active communication plan:

“We were so involved in execution that we forgot to explain what we were doing.”

However, the SC still acknowledged the risk of losing adherence to the ISMP among users, mainly academics.

5.3.2. Program Governance and Management

The execution of the ISMP had been governed by a small Steering Committee, chaired by the Managing Director of the UOC. The Vice-Chancellor of Teaching occasionally participated. Ordinary management had been entrusted to a Program Office of two people and ten project leaders from the IT department, with an uneven business counterpart for each project. The original governance model envisioned a broader picture with stronger involvement of the faculty. It was also planned to set up governing committees for each strategic initiative. Nevertheless, during the implementation, straight execution was preferred to greater participation. In the words of one of the members of the project team:

“To some extent, program governance was replaced by project execution.”

On the other hand, one executive of the SC put it this way:

“The organisation as a whole (be IT, administrative management and academy) was not mature for a more participatory scheme.”

During the “lessons learned” exercises, the IT project leaders highlighted lack of resources, lack of business involvement and resistance to change as the major pain points. Table 11 shows the main outcomes of this exercise. When discussing project management issues with top business managers, some expressed concerns about the quality of the project control mechanisms and proposed selecting and developing project managers for their leadership and managerial skills, rather than for their technical capabilities.

Table 11.

Lessons learned according to the IT project leaders.

As to the lower involvement of the faculty, it was perceived as a reflection of the difficulty of articulating a common view and governance at the institutional level. In the words of a member of the SC:

“What is happening with IT is no different from what happens with other issues.”

5.4. Overall Balance

After this review and the discussions with the different involved groups, the following conclusions were drawn as to the perception of the main stakeholders:

- The ISMP was regarded as a valuable tool for setting priorities to transform the IT base and to increase the IT effectiveness, ensuring alignment and providing value, particularly as regards business growth and stability.

- The level of execution and the agility to adapt the Plan to new business priorities were also considered satisfactory overall and had allowed the institution to support its objectives of growth.

- The ability to raise new demands or major change requests to an executive board was also noted.

- It was widely felt that the focus on the ISMP had come at the expense of the day-to-day demands for improvement of the existing legacy applications and tools.

- Better execution results were shown in those projects where there was a clear project definition, where the technology solution was simple and well identified, and where leadership was stronger and coordination costs were lower.

- The improvement of the corporate governance of IT was perceived as essential, with greater involvement of the faculty management leaders.

- Better prioritization mechanisms, communication policies and project management processes were called for, to ensure the shared commitment of the different constituencies.

The overall balance was regarded by the SC as very positive. It is worth noting that some of the negative perceptions were considered predictable results and unavoidable “collateral effects” of the intended primary strategy as designed in the original ISMP. As put by the COO and main sponsor of the ISMP:

“Strategy making is a matter of yeses and nos. The plan was designed as a top-down strategy to renew the technology basis of a rapidly transforming organisation in a short time-frame. Focus was on transformation and execution. Other considerations were left behind, to some extent”.

5.5. Further Developments

This feedback has been taken into consideration for the update of the Plan and its governance mechanisms, which are currently being worked upon. Our research still includes the support of the governing and management teams and new results will be submitted for publication shortly.

Table 12 shows the kind of actions being deployed in response to the results of the execution assessment. It is worth noting how the institution has reflected on the results of the assessment and the very practical value it has attached to the research.

Table 12.

Major consequences of the assessment.

Having a strategic information systems plan is still considered a key lever for institutional transformation. It has been reinforced with a corporate IT governance body and a stable funding framework. The revised ISMP for the period 2018–2020 gives greater weight to quality issues and to learning and research projects, as compared to the growth and stability priorities of the previous years. A new strategic initiative on research has been set up. To secure both the agility to respond to new business priorities and the evolution of the existing IT and information assets, the institution is moving to a scheme of comprehensive management of the IT portfolio, which is reviewed every 18 months. It includes mechanisms to involve middle managers from the administration and academia in priority setting and project delivery, all based on agile principles, under what has been labeled the “Agile Transformation Plan”. A new Program Portfolio Management Office is being put in place both to govern the existing programs and to facilitate the transformation.

In the words of one of the leaders of the project team:

“If the first Master Plan was the one of an actionable strategy, the Plan as recently reviewed is for better governance and improved execution.”

6. Conclusions

6.1. Discussion

Although under new forms and labels, IT strategy making is still a major concern for IT and business executives and managers. The current paradigm advocates an ongoing, contingent social process of strategy formation, or “strategy as practice”. This paper adheres to this stance. However, academic and professional literature has paid less attention to the evaluation of the implementation of IT strategies, notwithstanding the reported lack of implementation or the failures and pitfalls in implementation attempts. In our view, plans should be assessed on a periodic basis to secure continuous business alignment, review priorities, reinforce organizational commitment to major IT plans, improve the conversation between business and IT and capture the benefits of investments. This article, after an extended review of the state of the art and an Action Design Research exercise, aims to contribute towards a dynamic framework for the assessment of the execution of IT strategic plans.

For this purpose, we have suggested three main dimensions of analysis: (1) Strategy (which observes strategic alignment and the response to emergent business strategies); (2) Performance (in terms of benefits realization and program execution); and (3) Governance (including the perception of major stakeholders and the mechanisms of decision making). It is worth mentioning that, when studying execution, this work goes beyond the field of classical Strategic Planning into other domains, such as IT Governance, Project Portfolio Management, Enterprise Architecture and the concepts of impact and success of IS. No dimension can be considered in isolation: each is related to the rest and forms part of a comprehensive view. Of all these, the most difficult to grasp and to work on with the teams is benefits realization, i.e., the way that the selection, management and execution of IT investments make a difference and create value for the business. In our view, this is also the most critical one, since it improves the quality and effectiveness of the dialogue and collaborative work between IT and business, helping to set priorities and to secure the return on IT investments.

With respect to the assessment model we have proposed, the selection of variables and metrics and their measurement should be reviewed through further research and actual implementation. We also suggest that a specific dimension related to organizational learning and business transformation should be developed and integrated within the model. Moreover, the variables related to benefits realization need to be refined within each specific context. To create better options for analysis and intervention, we recommend a thorough examination of various contexts of application and the creation of improved maturity models.

According to the process and its results, the proposed method seems to be a quick, effective and efficient approach, in agreement with our initial working hypothesis and the literature. It also seems to be a practical tool to renew the conversation and take actions for improvement among the IT leaders, top management and major constituencies.

Regarding future work, we plan to repeat the exercise periodically, to validate and improve this approach. We will also compare the specific results of our case with those of other reported cases.

6.2. Practical Implications

The assessment occurs in a short time-frame through document analysis and intensive individual and group interactions. The governance, preparation, content, setting and selection of participants are all crucial. Decisions about who participates, in which role and how actively affect not only the effectiveness and quality of the exercise, but also the involvement of the different stakeholders, the visibility and credibility of the effort and, even more, the implications and further developments for the organization. The exercise is complemented with a number of reflective interviews to better understand the process, results and consequences for research purposes.

It may be said that the process is part of the product: the overall outcome seems to be an improved understanding and commitment (a buy-in) of the top and middle managers regarding the Plan. The exercise is not an audit, but an effective lever for the understanding and improvement of the organization’s performance. Planning and execution occur in a specific and evolving context. Thus, the methods need to be customized and the conclusions need to be interpreted under these conditions. A deep involvement of the researcher in the project team is important, provided that it does not diminish the researcher’s independence and academic space.

As to the results of the assessment, in general terms, it may be stated that the closer the results are to the design criteria of the Plan (or to the changes formally accepted over its execution), the more successful the final results may be considered. Consequently, the “success” of the implementation of a strategic information systems plan will be mainly measured by its ability to achieve the objectives the organization set for it, as perceived by its major stakeholders. This perception might be mistaken or biased in the eyes of the researcher for different reasons. The objective of the assessment is not to find the truth, but to confront those perceptions with evidence, create a helpful space for discussion and assist the management in acting on the results. On the other hand, execution appears to be mainly related to the ability of IT and business to “mature” strategic initiatives as defined in the SISP into actionable projects, the certainty of the technical solutions at hand and the strength of leadership. A tension appears between strategic impact and blunt execution. Finally, strategy implementation seems closely linked with project portfolio management, and the results show the need to develop further capabilities in this area.

The results of the assessment have been taken into consideration for the update of the Plan and its governance mechanisms, which are currently being worked upon. Our research includes the support of the governing and management teams and new results will be submitted for publication shortly.

Author Contributions

Conceptualization, J.-R.R., R.C. and J.-M.M.-S.; data curation, J.-R.R.; investigation, J.-R.R.; methodology, J.-R.R., R.C. and J.-M.M.-S.; writing—original draft, J.-R.R., R.C. and J.-M.M.-S.; and writing—review and editing, J.-R.R., R.C. and J.-M.M.-S.

Funding

This research received no external funding.

Acknowledgments

The authors would like to thank Daniel Caballé, Clara Beleña and Eva Gil, UOC project team members, for their relentless commitment; and Rafael Macau (COO) and Emili Rubió (CIO) for their active sponsorship of the project.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Sutherland, A.R.; Galliers, R.D. The evolving information systems strategy information systems management and strategy formulation: Applying and extending the ‘stages of growth’ concept. In Strategic Information Management: Challenges and Strategies in Managing Information Systems; Routledge: Abingdon, UK, 2014; pp. 47–77. [Google Scholar]

- Bharadwaj, A.; El Sawy, O.A.; Pavlou, P.A.; Venkatraman, N.V. Digital Business Strategy: Toward a Next Generation of Insights. MIS Q. 2013, 37, 471–482. [Google Scholar] [CrossRef]

- Peppard, J.; Galliers, R.; Thorogood, A. Information systems strategy as practice: Micro strategy and strategizing for IS. J. Strateg. Inf. Syst. 2014, 23, 1–10. [Google Scholar] [CrossRef]

- Whittington, R. Completing the practice turn in strategy research. Organ. Stud. 2006, 27, 613–634. [Google Scholar] [CrossRef]

- Johnson, G.; Whittington, R.; Scholes, K.; Angwin, D.; Regnér, P. Exploring Strategy: Text and Cases, 11st ed.; Pearson Education: London, UK, 2016. [Google Scholar]

- Teubner, R.A.; Mocker, M. A literature Overview on Strategic Information Systems Planning. Available online: http://dx.doi.org/10.2139/ssrn.1959494 (accessed on 13 September 2019).

- Amrollahi, A.; Ghapanchi, A.H.; Talaei-Khoei, A. A systematic literature review on strategic information systems planning: Insights from the past decade. Pac. Asia J. Assoc. Inf. Syst. 2013, 5, 4-1–4-28. [Google Scholar] [CrossRef]

- Earl, M.J. (Ed.) Information Management: The Organizational Dimension; Oxford University Press: Oxford, UK, 1996. [Google Scholar]

- Peppard, J.; Ward, J. The Strategic Management of Information Systems: Building a Digital Strategy; John Wiley Sons: Hoboken, NJ, USA, 2016. [Google Scholar]

- Bates, A.W.; Bates, T.; Sangrá, A. Managing Technology in Higher Education: Strategies for Transforming Teaching and Learning; John Wiley Sons: Hoboken, NJ, USA, 2011. [Google Scholar]

- Rodríguez, J.-R. El Máster Plan (Plan Director) de Sistemas de Información de la UOC. Caso Práctico. Available online: http://openaccess.uoc.edu/webapps/o2/bitstream/10609/78267/6/Direcci%C3%B3n%20de%20sistemas%20de%20informaci%C3%B3n%20%28Executive%29_M%C3%B3dulo%205_Planificaci%C3%B3n%20estrat%C3%A9gica%20de%20sistemas%20de%20informaci%C3%B3n.pdf (accessed on 13 September 2019).

- Sein, M.K.; Henfridsson, O.; Purao, S.; Rossi, M.; Lindgren, R. Action design research. MIS Q. 2011, 35, 37–56. [Google Scholar] [CrossRef]

- Salmela, H.; Spil, T.A. Dynamic and emergent information systems strategy formulation and implementation. Int. J. Inf. Manag. 2002, 22, 441–460. [Google Scholar] [CrossRef]

- Henderson, J.C.; Venkatraman, H. Strategic alignment: Leveraging information technology for transforming organizations. IBM Syst. J. 1993, 32, 472–484. [Google Scholar] [CrossRef]

- Chan, Y.E.; Huff, S.L.; Copeland, D.G. Assessing realized information systems strategy. J. Strateg. Inf. Syst. 1997, 6, 273–298. [Google Scholar] [CrossRef]

- Kopmann, J.; Kock, A.; Killen, C.P.; Gemünden, H.G. The role of project portfolio management in fostering both deliberate and emergent strategy. Int. J. Proj. Manag. 2017, 35, 557–570. [Google Scholar] [CrossRef]

- Hunter, R.; Apfel, A.; McGee, K.; Handler, R.; Dreyfuss, C.; Smith, M.; Maurer, W. A Simple Framework to Translate IT Benefits Into Business Value Impact. Available online: https://www.gartner.com/en/documents/672507/a-simple-framework-to-translate-it-benefits-into-business (accessed on 13 September 2019).

- Pennypacker, J.S.; Dye, L. Project Portfolio Management and Managing Multiple Projects: Two Sides of the Same Coin; Marcel Dekker: New York, NY, USA, 2002. [Google Scholar]

- Thiry, M. Program Management; Gower: Farnham, UK, 2010. [Google Scholar]

- Galliers, R. Information Systems Planning in the United Kingdom and Australia: A Comparison of Current Practice; Oxford University Press: Oxford, UK, 1987. [Google Scholar]

- Isaca, C. Cobit 5: A Business Framework for the Governance and Management of Enterprise IT; ISACA: Rolling Meadows, IL, USA, 2014; ISBN 1963669381. [Google Scholar]

- Rodriguez, J.-R.; Clariso, R.; Marco-Simo, J. Strategy in the making: Assessing the execution of a strategic information systems plan. In European, Mediterranean, and Middle Eastern Conference on Information Systems; Springer: Cham, Switzerland, 2018; pp. 475–488. [Google Scholar]

- Lederer, A.; Sethi, V. The Implementation of Strategic Information Systems Planning Methodologies. MIS Q. 1988, 12, 445–461. [Google Scholar] [CrossRef]

- Galliers, R.D. Strategic information systems planning: Myths, reality and guide-lines for successful implementation. Eur. J. Inf. Syst. 1991, 1, 55–64. [Google Scholar] [CrossRef]

- Premkumar, G.; King, W. Assessing strategic information systems planning. Long Range Plan. 1991, 24, 41–58. [Google Scholar] [CrossRef]

- Lederer, A.L.; Sethi, V. Key prescriptions for strategic information systems planning. J. Manag. Inf. Syst. 1996, 13, 35–62. [Google Scholar] [CrossRef]

- Mentzas, G. Implementing an IS strategy—A team approach. Long Range Plan. 1997, 30, 84–95. [Google Scholar] [CrossRef]

- Segars, A.H.; Grover, V. Strategic information systems planning success: An investigation of the construct and its measurement. MIS Q. 1998, 22, 139–163. [Google Scholar] [CrossRef]

- Doherty, N.F.; Marples, C.G.; Suhaimi, A. The relative success of alternative approaches to strategic information systems planning: An empirical analysis. J. Strateg. Inf. Syst. 1999, 8, 263–283. [Google Scholar] [CrossRef]

- Teo, T.S.; Ang, J.S. An examination of major IS planning problems. Int. J. Inf. Manag. 2001, 21, 457–470. [Google Scholar] [CrossRef]

- Hartono, E.; Lederer, A.L.; Sethi, V.; Zhuang, Y. Key predictors of the implementation of strategic information systems plans. ACM SIGMIS Database DATABASE Adv. Inf. Syst. 2003, 34, 41–53. [Google Scholar] [CrossRef]

- Vitale, M.R.; Ives, B.; Beath, C.M. Linking information technology and corporate strategy: An organizational view. In Proceedings of the International Conference on Information Systems (ICIS), San Diego, CA, USA, 15–17 December 1986; p. 30. [Google Scholar]

- Sambamurthy, V.; Venkataraman, S.; DeSanctis, G. The design of information technology planning systems for varying organizational contexts. Eur. J. Inf. Syst. 1993, 2, 23–35. [Google Scholar] [CrossRef]

- Gottschalk, P. Strategic information systems planning: The IT strategy implementation matrix. Eur. J. Inf. Syst. 1999, 8, 107–118. [Google Scholar] [CrossRef]

- Da Cunha, P.R.; de Figueiredo, A.D. Information systems development as flowing wholeness. In Realigning Research and Practice in Information Systems Development; Springer: Boston, MA, USA, 2001; pp. 29–48. [Google Scholar]

- Brown, I.T. Testing and extending theory in strategic information systems plan-ning through literature analysis. Inf. Resour. Manag. J. 2004, 17, 20. [Google Scholar] [CrossRef]

- Chen, D.Q.; Mocker, M.; Preston, D.S.; Teubner, A. Information systems strategy: Reconceptualization, measurement, and implications. MIS Q. 2010, 34, 233–259. [Google Scholar] [CrossRef]

- Mikalef, P.; Pateli, A.; Batenburg, R.S.; Wetering, R.V.D. Purchasing alignment under multiple contingencies: A configuration theory approach. Ind. Manag. Data Syst. 2015, 115, 625–645. [Google Scholar] [CrossRef]

- Arvidsson, V.; Holmström, J.; Lyytinen, K. Information systems use as strategy practice: A multi-dimensional view of strategic information system implementation and use. J. Strateg. Inf. Syst. 2014, 23, 45–61. [Google Scholar] [CrossRef]

- Kamariotou, M.; Kitsios, F. Information systems phases and firm performance: A conceptual framework. In Strategic Innovative Marketing; Springer: Cham, Switzerland, 2017; pp. 553–560. [Google Scholar]

- Drnevich, P.; Croson, D. Information technology and business-level strategy: toward an integrated theoretical perspective. MIS Q. 2013, 483–509. [Google Scholar] [CrossRef]

- Vaara, E.; Whittington, R. Strategy-as-practice: Taking social practices seriously. Acad. Manag. Ann. 2012, 6, 285–336. [Google Scholar] [CrossRef]

- Jarzabkowski, P.; Kaplan, S.; Seidl, D.; Whittington, R. On the risk of studying practices in isolation: Linking what, who, and how in strategy research. Strateg. Organ. 2016, 14, 248–259. [Google Scholar] [CrossRef]

- Zelenkov, Y. Critical regular components of IT strategy: Decision making model and efficiency measurement. J. Manag. Anal. 2015, 2, 95–110. [Google Scholar] [CrossRef]

- Suomalainen, T.; Kuusela, R.; Tihinen, M. Continuous planning: An important aspect of agile and lean development. Int. J. Agile Syst. Manag. 2015, 8, 132–162. [Google Scholar] [CrossRef]

- Mirchandani, D.; Lederer, A. “Less is more”: Information systems planning in an uncertain environment. Inf. Syst. Manag. 2012, 29, 13–25. [Google Scholar] [CrossRef]

- Tellis, W.M. Application of a case study methodology. Qual. Rep. 1997, 3, 1–19. [Google Scholar]

- Sabherwal, R. The relationship between information system planning sophistication and information system success: An empirical assessment. Decis. Sci. 1999, 30, 137–167. [Google Scholar] [CrossRef]

- Bates, A. Managing technological change: Strategies for college and university leaders. In The Jossey-Bass Higher and Adult Education Series; Jossey-Bass Publishers: San Francisco, CA, USA, 2000. [Google Scholar]

- Bulchand, J.; Rodríguez, J. Information and communication technologies and information systems planning in higher education. Informatica (Ljubljana) 2003, 27, 275–284. [Google Scholar]

- Nguyen, F.; Frazee, J. Strategic technology planning in higher education. Perform. Improv. 2009, 48, 31–40. [Google Scholar] [CrossRef]

- Kirinic, V.; Kozina, M. Maturity assessment of strategy implementation in higher education institution. In Central European Conference on Information and Intelligent Systems; Faculty of Organization and Informatics Varazdin: Varaždin, Croatia, 2016; p. 169. [Google Scholar]

- Kobulnicky, P. Critical Factors in Information Technology Planning for the Academy. Cause/Effect 1999, 22, 19–26. [Google Scholar]

- Titthasiri, W. Information technology strategic planning process for institutions of higher education in Thailand. NECTEC Tech. J. 2000, 3, 153–164. [Google Scholar]

- Ishak, I.; Alias, R. Designing a Strategic Information System Planning Methodology for Malaysian Institutes of Higher Learning (ISP-IPTA); Universiti Teknologi Malaysia: Skudai, Malaysia, 2005. [Google Scholar]

- Clarke, S.; Lehaney, B. Mixing methodologies for information systems development and strategy: A higher education case study. J. Oper. Res. Soc. 2000, 51, 542–556. [Google Scholar] [CrossRef]

- Jaffer, S.; Ng’ambi, D.; Czerniewicz, L. The role of ICTs in higher education in South Africa: One strategy for addressing teaching and learning challenges. Int. J. Educ. Dev. Using ICT 2007, 3, 131–142. [Google Scholar]

- Goncalves, N.; Sapateiro, C. Aspects for Information Systems Implementation: Challenges and impacts. A higher education institution experience. Tékhne-Revista de Estudos Politécnicos 2008, 9, 225–241. [Google Scholar]

- Luic, L.; Boras, D. Strategic planning of the integrated business and information system-A precondition for successful higher education management. In Proceedings of the 32nd International Conference on Information Technology Interfaces (ITI 2010), Cavtat, Croatia, 21–24 June 2010. [Google Scholar]

- Barn, B.S.; Clark, T.; Hearne, G. Business and ICT alignment in higher education: A case study in measuring maturity. In Building Sustainable Information Systems; Linger, H., Fisher, J., Barnden, A., Barry, C., Lang, M., Schneider, C., Eds.; Springer: Boston, MA, USA, 2013. [Google Scholar]

- Soares, S.; Setyohady, D.B. Enterprise architecture modeling for oriental university in Timor Leste to support the strategic plan of integrated information system. In Proceedings of the 5th International Conference on Cyber and IT Service Management (CITSM), Denpasar, Indonesia, 8–10 August 2017; pp. 1–6. [Google Scholar]

- Rice, M.; Miller, M. Faculty involvement in planning for the use and integration of instructional and administrative technologies. J. Res. Comput. Educ. 2001, 33, 328–336. [Google Scholar] [CrossRef]

- Dempster, J.; Deepwell, F. Experiences of national projects in embedding learning technology into institutional practices in UK higher education. In Learning Technology in Transition: From Individual Enthusiasm to Institutional Implementation; Taylor & Francis: London, UK, 2003; pp. 45–62. [Google Scholar]

- Abel, R. Achieving Success in Internet-Supported Learning in Higher Education: Case Studies Illuminate Success Factors, Challenges, and Future Directions; Alliance for Higher Education Competitiveness: Lake Mary, FL, USA, 2005. [Google Scholar]

- Sharpe, R.; Benfield, G.; Francis, R. Implementing a university e-learning strategy: Levers for change within academic schools. Res. Learn. Technol. 2006, 14, 135–151.

- Stensaker, B.; Maassen, P.; Borgan, M.; Oftebro, M.; Karseth, B. Use, updating and integration of ICT in higher education: Linking purpose, people and pedagogy. High. Educ. 2007, 54, 417–433.

- Goolnik, G. Change Management Strategies When Undertaking eLearning Initiatives in Higher Education. J. Organ. Learn. Leadersh. 2012, 10, 16–28.

- Bianchi, I.; Rui, D. IT Governance mechanisms in higher education. Procedia Comput. Sci. 2016, 100, 941–946.

- Khouja, M.; Rodriguez, I.; Halima, Y.; Moalla, S. IT Governance in Higher education Institutions: A systematic Literature review. Int. J. Hum. Cap. Inf. Technol. Prof. (IJHCITP) 2018, 9, 52–67.

- Baskerville, R.; Wood-Harper, A.T. A Taxonomy of Action Research Methods; Institut for Informatik og Økonomistyring: Handelshøjskolen i København, Denmark, 1996.

- Chan, Y.E.; Reich, B.H. IT alignment: What have we learned? J. Inf. Technol. 2007, 22, 297–315.

- Coltman, T.; Tallon, P.; Sharma, R.; Queiroz, M. Strategic IT alignment: Twenty-five years on. J. Inf. Technol. 2015, 30, 91–100.

- Juiz, C.; Toomey, M. To govern IT, or not to govern IT? Commun. ACM 2015, 58, 58–64.

- Mintzberg, H.; Waters, J.A. Of strategies, deliberate and emergent. Strateg. Manag. J. 1985, 6, 257–272.

- Ambrosini, V.; Johnson, G.; Scholes, K. Exploring Techniques of Analysis and Evaluation in Strategic Management; Prentice Hall Europe: Hemel Hempstead, UK, 1998.

- Parker, M.M.; Benson, R.J.; Trainor, H.E. Information Economics: Linking Business Performance to Information Technology; Prentice-Hall: Upper Saddle River, NJ, USA, 1988.

- Ashurst, C.; Doherty, N.F.; Peppard, J. Improving the impact of IT development projects: The benefits realization capability model. Eur. J. Inf. Syst. 2008, 17, 352–370.

- Ward, J.; Daniel, E. Benefits Management: How to Increase the Business Value of Your IT Projects; John Wiley & Sons: Hoboken, NJ, USA, 2012.

- Meskendahl, S. The influence of business strategy on project portfolio management and its success: A conceptual framework. Int. J. Proj. Manag. 2010, 28, 807–817.

- DeLone, W.H.; McLean, E.R. Information systems success: The quest for the dependent variable. Inf. Syst. Res. 1992, 3, 60–95.

- DeLone, W.H.; McLean, E.R. The DeLone and McLean model of information systems success: A ten-year update. J. Manag. Inf. Syst. 2003, 19, 9–30.

- Gable, G.G.; Sedera, D.; Chan, T. Re-conceptualizing information system success: The IS-impact measurement model. J. Assoc. Inf. Syst. 2008, 9, 377.

- Petter, S.; DeLone, W.; McLean, E. Measuring information systems success: Models, dimensions, measures, and interrelationships. Eur. J. Inf. Syst. 2008, 17, 236–263.

- Bartenschlager, J.; Goeken, M. IT strategy implementation framework: Bridging enterprise architecture and IT governance. In Proceedings of the 16th Americas Conference on Information Systems (AMCIS 2010), Lima, Peru, 12–15 August 2010; p. 400.

- Universitat Oberta de Catalunya: Strategic Plan 2014–2020. Available online: https://www.uoc.edu/portal/_resources/EN/documents/la_universitat/uoc-strategic-plan-2014-2020.pdf (accessed on 13 September 2019).

- Hall, L.; Stegman, E.; Futela, S.; Badlani, D. IT Key Metrics Data 2018: Key Industry Measures: Software Publishing and Internet Services Analysis: Current Year. Available online: https://www.gartner.com/en/documents/3832775/it-key-metrics-data-2018-key-industry-measures-software- (accessed on 13 September 2019).

- Sarkar, S. The role of information and communication technology (ICT) in higher education for the 21st century. Science 2012, 1, 30–41.

- Barber, M.; Donnelly, K.; Rizvi, S.; Summers, L. An Avalanche Is Coming: Higher Education and the Revolution Ahead; Institute for Public Policy Research: London, UK, 2013; Volume 73.

- Altbach, P.G. Global Perspectives on Higher Education; JHU Press: Baltimore, MD, USA, 2016.

- Pucciarelli, F.; Kaplan, A. Competition and strategy in higher education: Managing complexity and uncertainty. Bus. Horiz. 2016, 59, 311–320.

- Lowendahl, J.M.; Thayer, T.B.; Morgan, G.; Yanckello, R.A. Top 10 Business Trends Impacting Higher Education in 2018. Available online: https://www.gartner.com/en/documents/3843385/top-10-business-trends-impacting-higher-education-in-2010 (accessed on 13 September 2019).

- Grajek, S. Top 10 IT Issues, 2018: The Remaking of Higher Education. Available online: https://er.educause.edu/articles/2018/1/top-10-it-issues-2018-the-remaking-of-higher-education (accessed on 13 September 2019).

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).