Inter-Vehicle Communication Protocol Design for a Yielding Decision at an Unsignalized Intersection and Evaluation of the Protocol Using Radio Control Cars Equipped with Raspberry Pi

Abstract

1. Introduction

2. Related Studies

3. Yielding Method at an Unsignalized Intersection

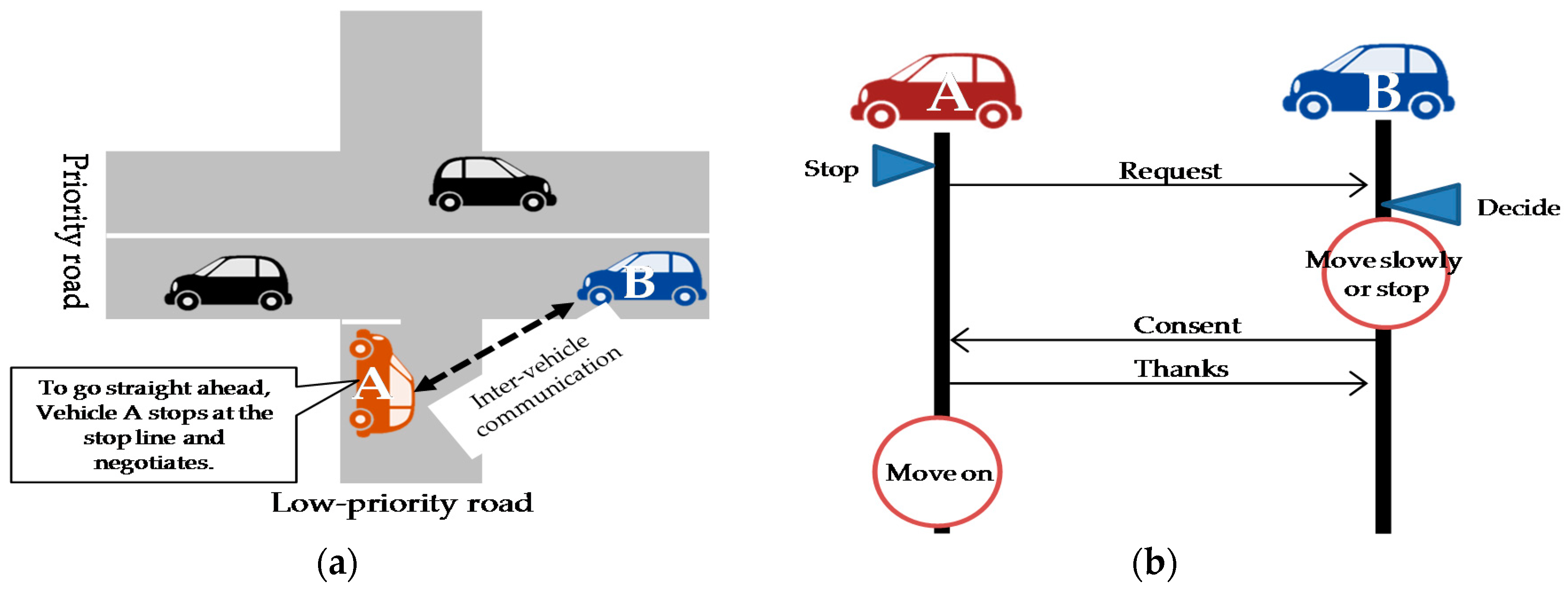

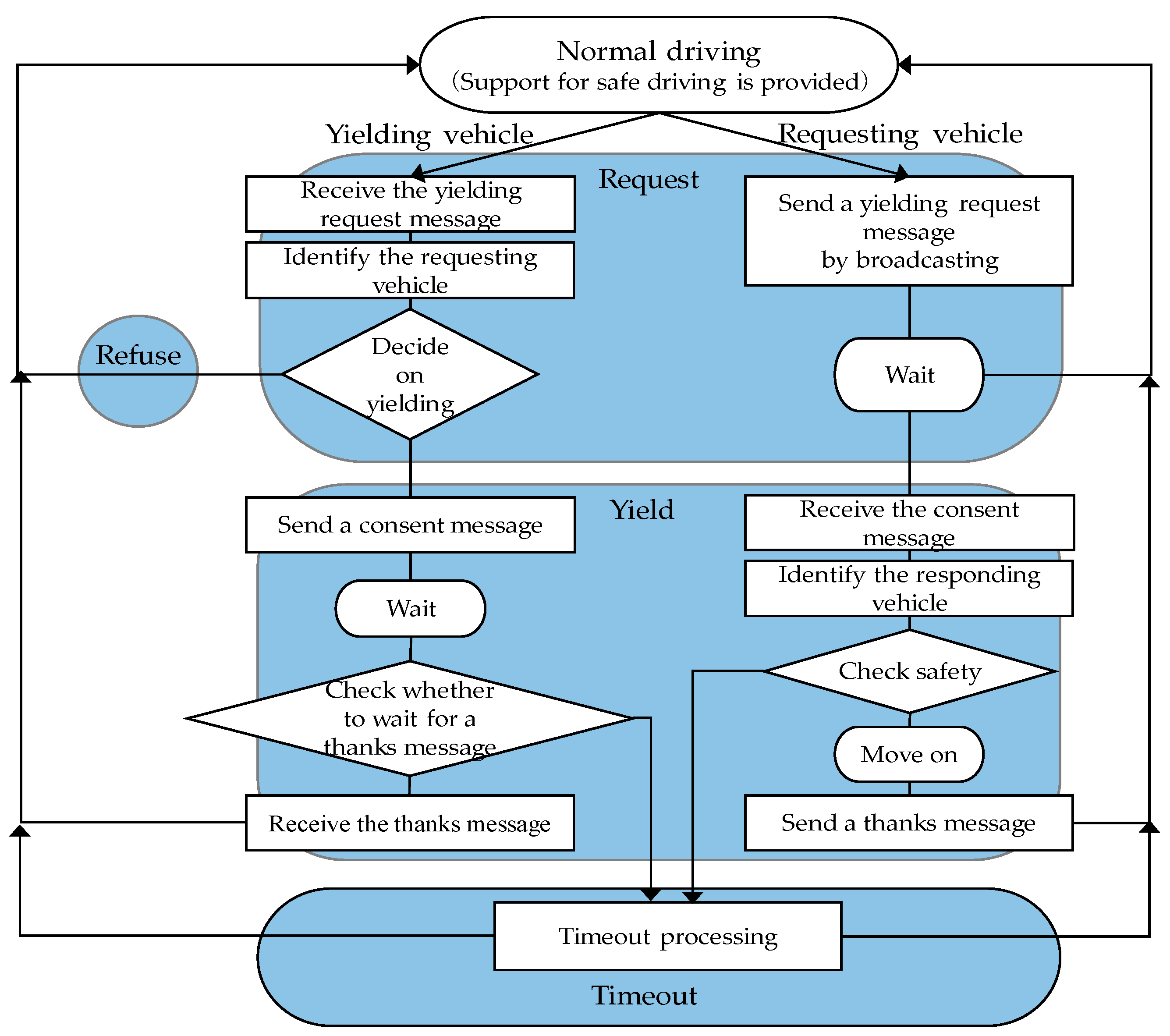

3.1. Yielding Based on Message Exchanges and the Related State Transition Diagram

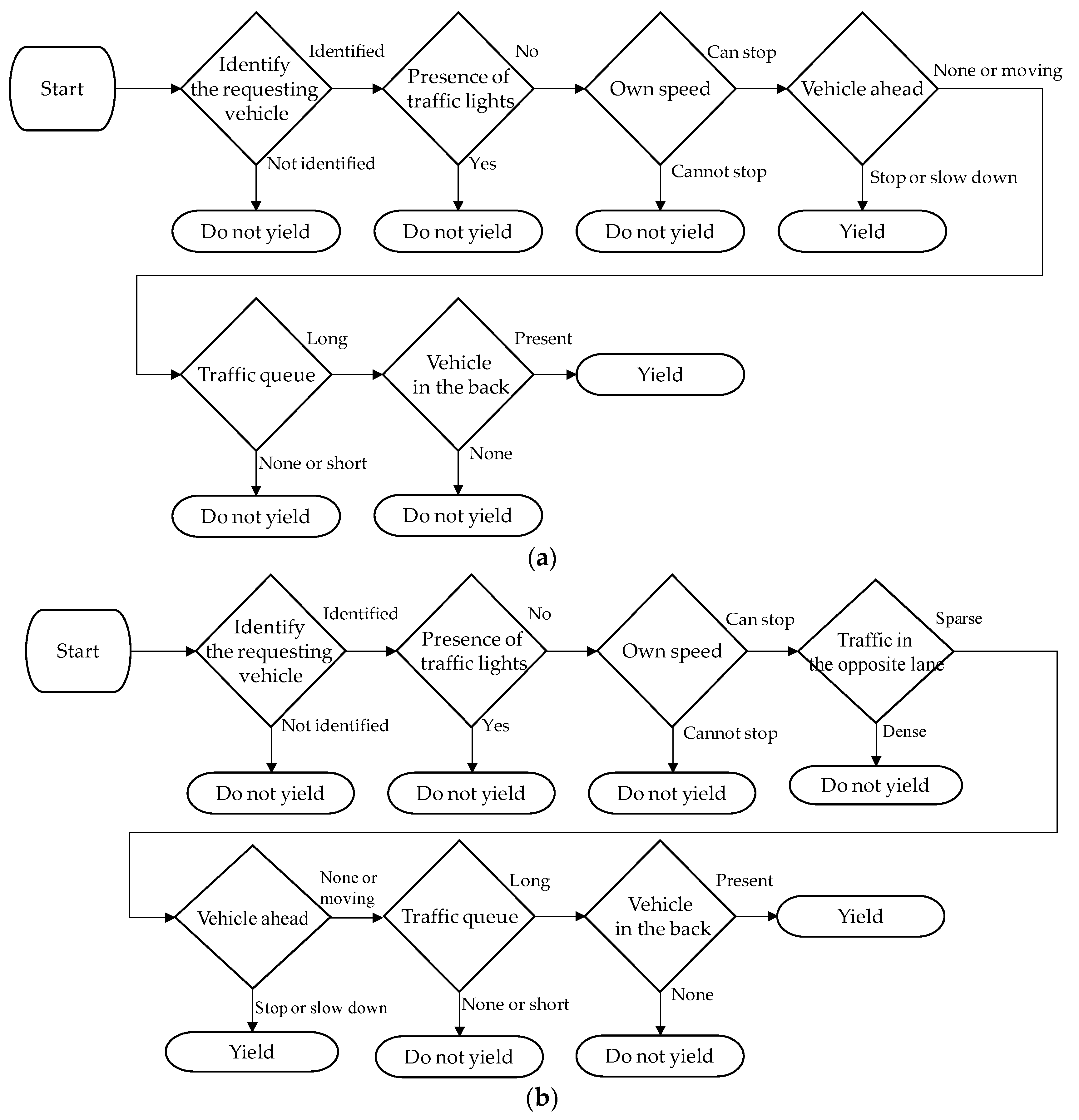

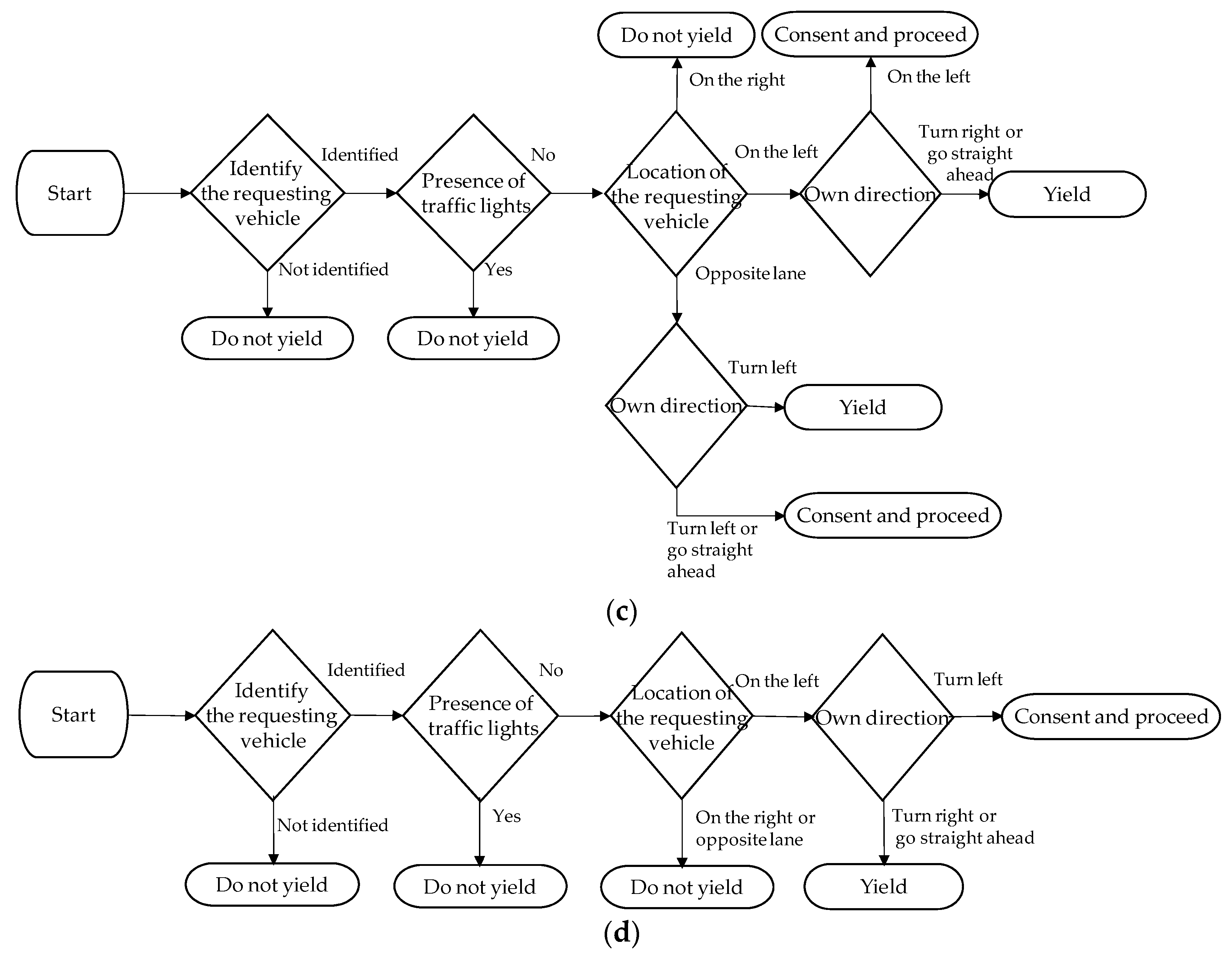

3.2. Yielding Patterns

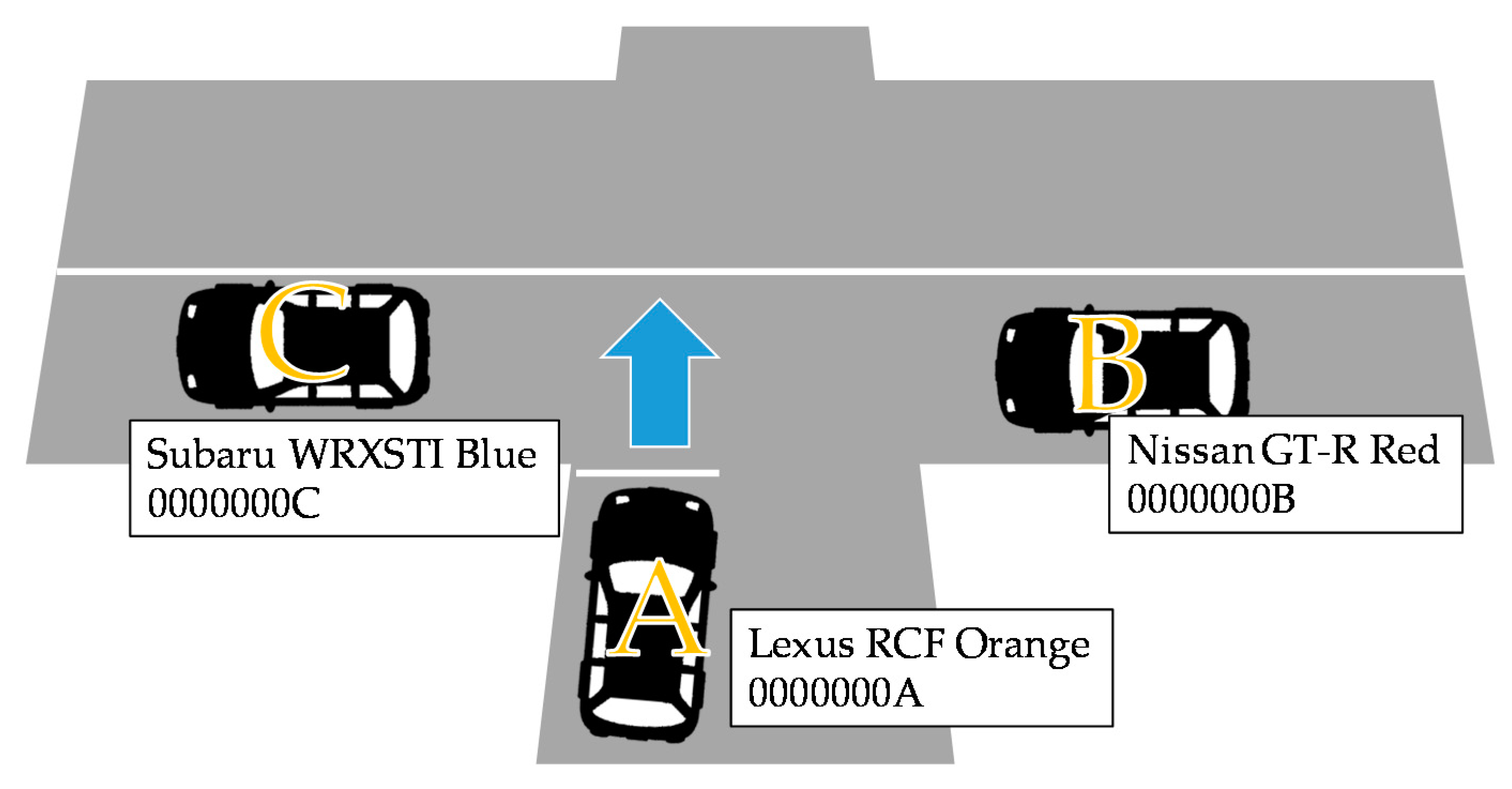

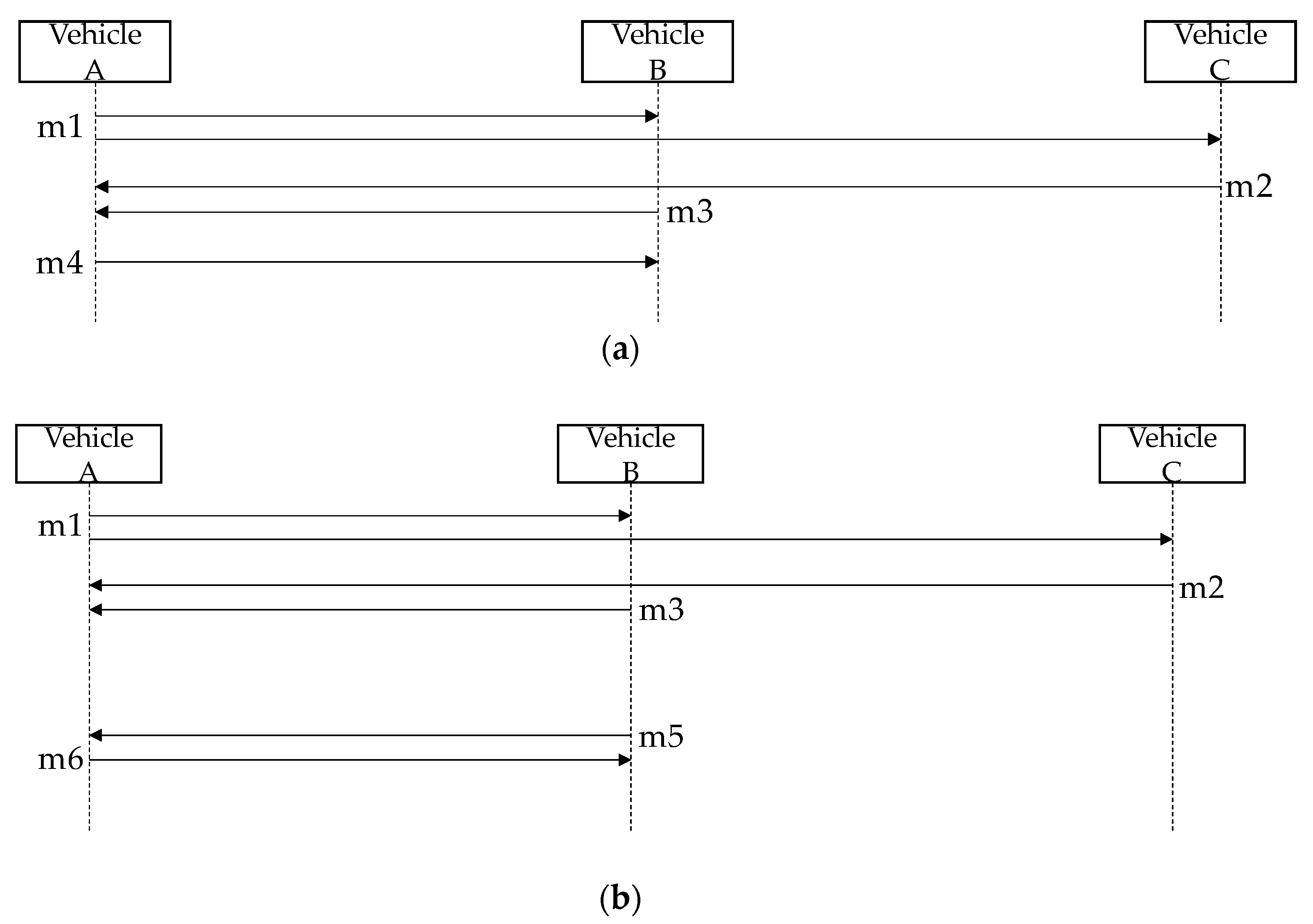

3.3. Design of the Inter-Vehicle Communication Protocol Used for Yielding Control

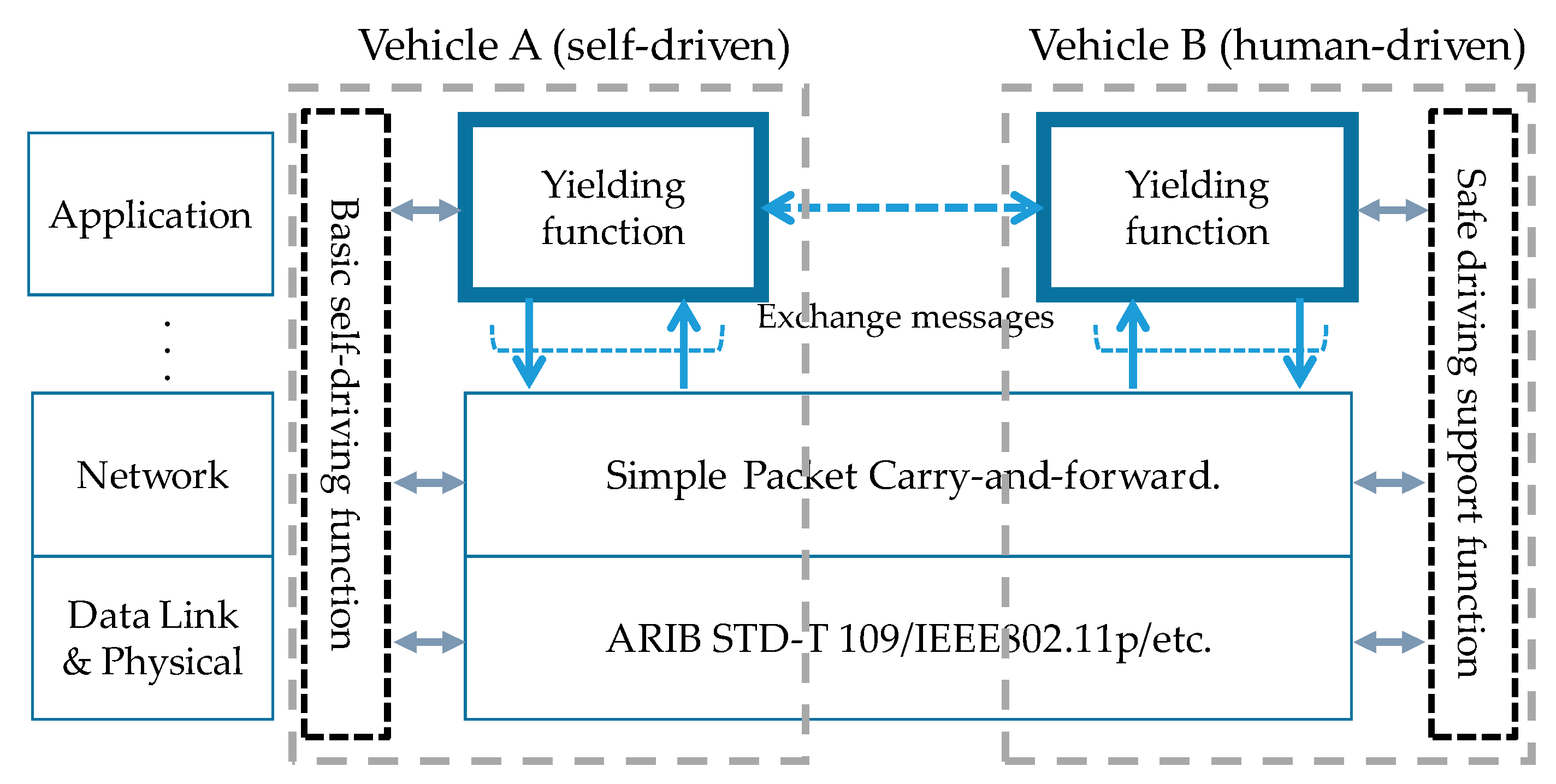

3.3.1. Assumed Inter-Vehicle Communication Protocol Stack

3.3.2. Message Elements and Format

4. Experimental System

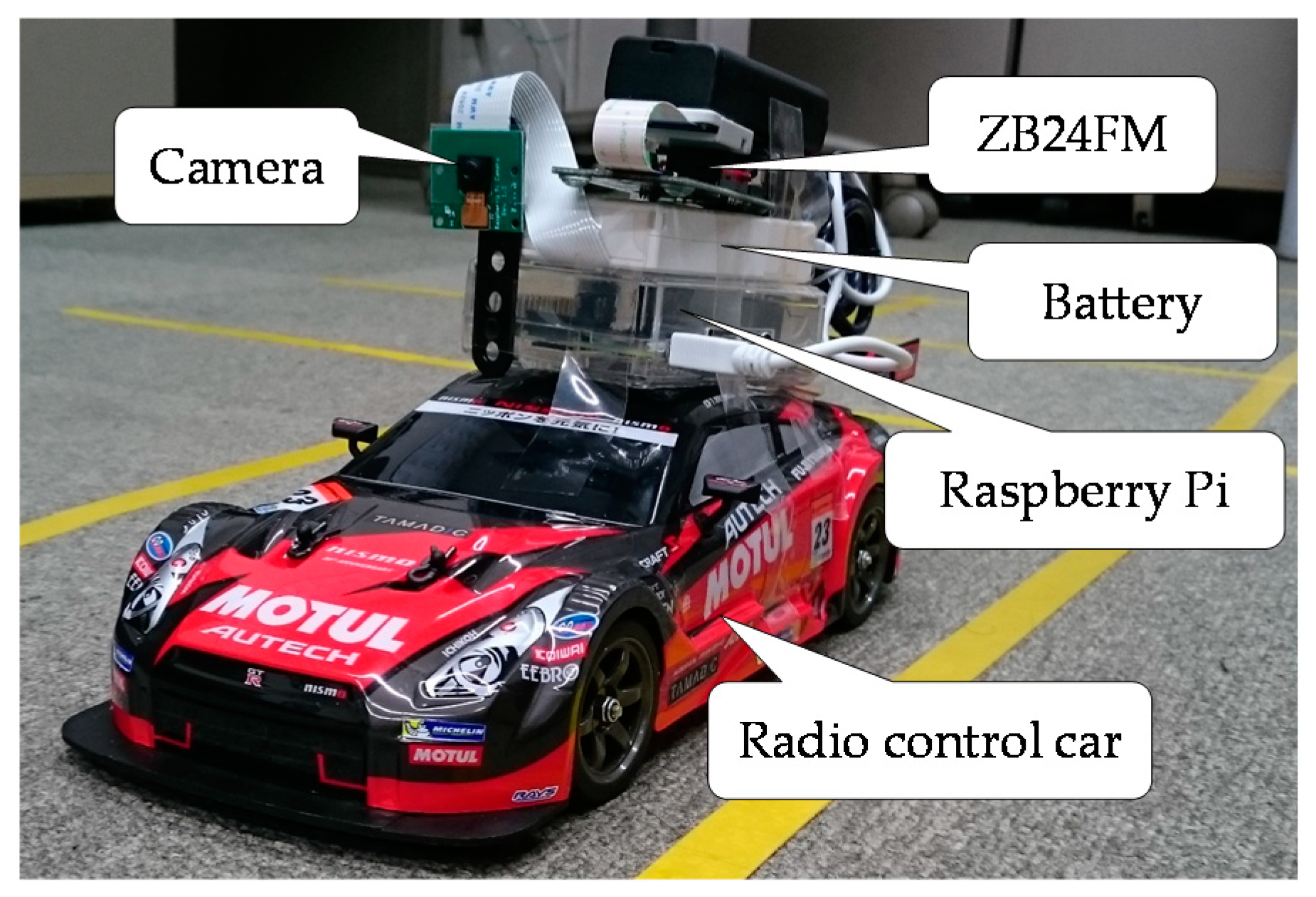

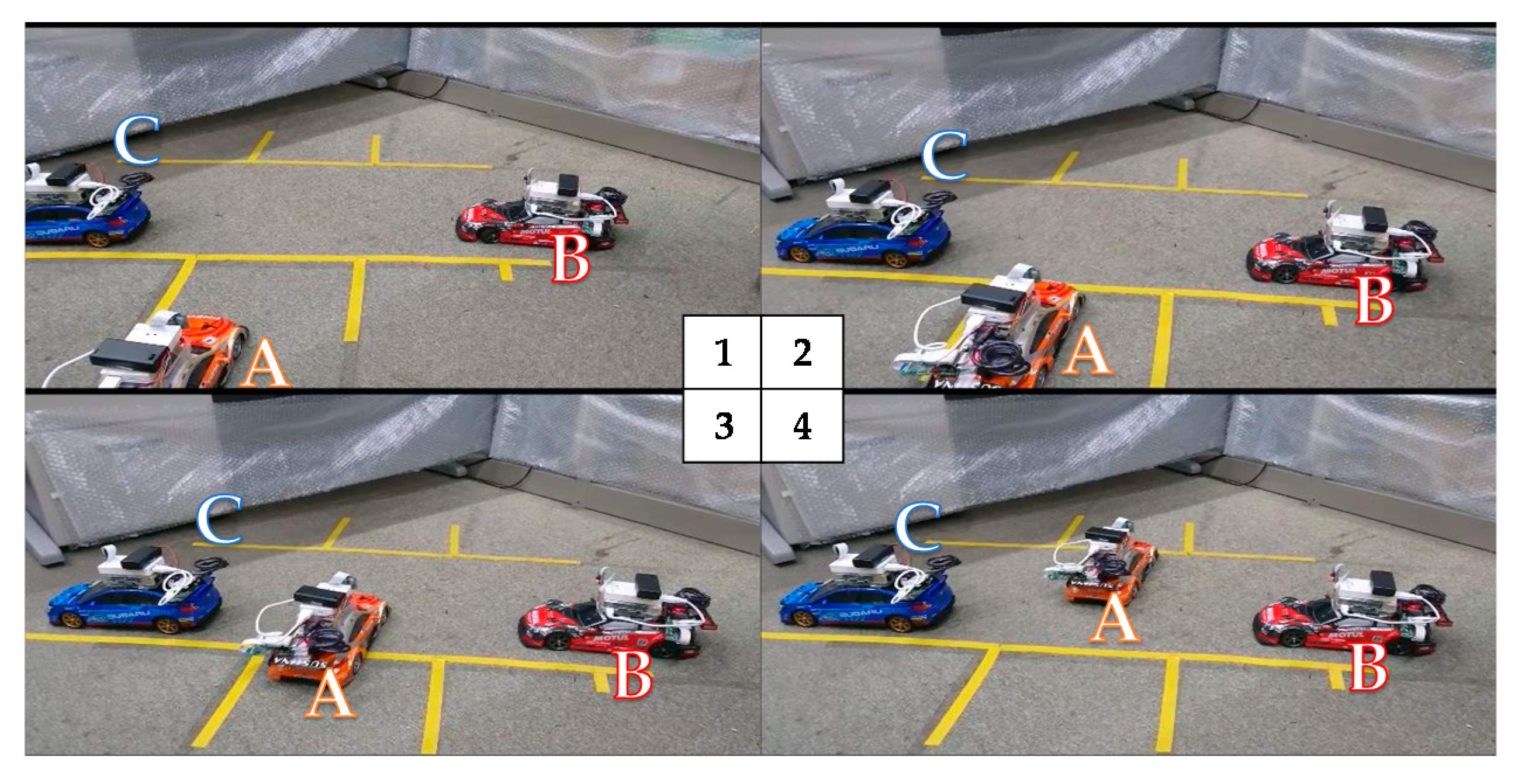

4.1. Experimental System Consisting of Radio Control Cars and Raspberry Pi Computers

4.2. Recognition of Surrounding Vehicles and the Road Shape at an Intersection

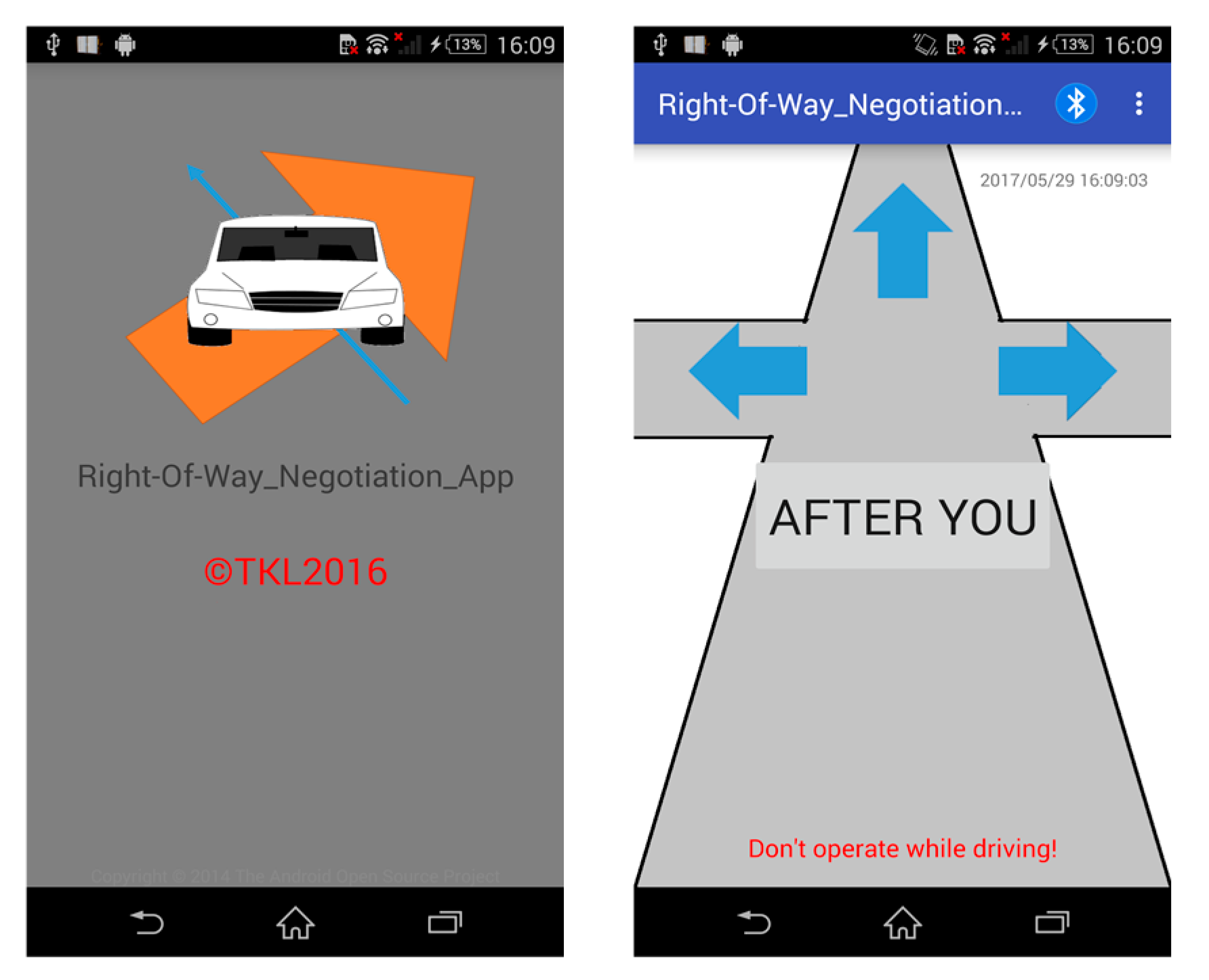

4.3. Human-Machine Interface

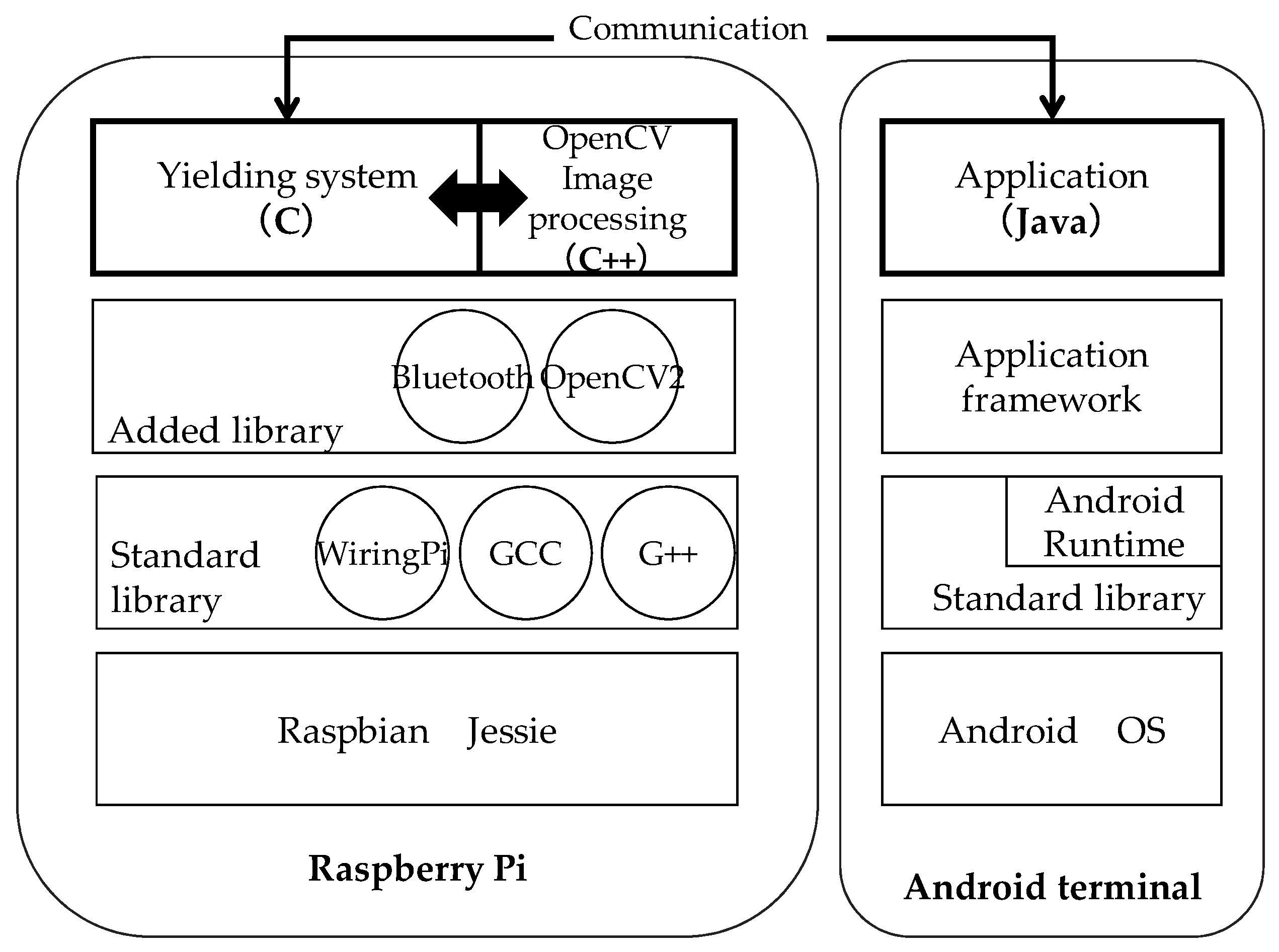

4.4. Software Configuration of the Experimental System

5. Evaluation

5.1. Evaluation Environment

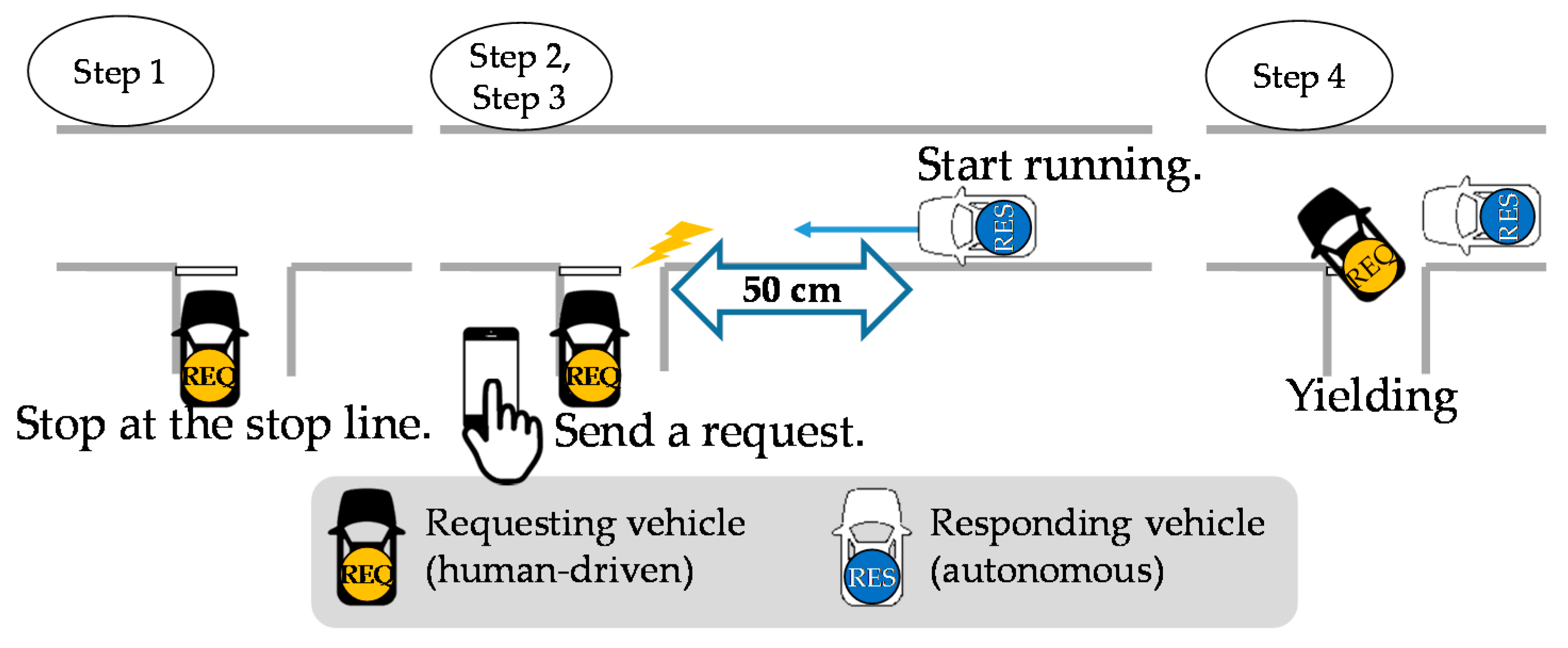

5.2. Evaluation Items and Experiment Methods

- Step 1. The requesting vehicle stops at the stop line of the intersection.

- Step 2. The responding vehicle starts running.

- Step 3. When the responding vehicle reaches a point about 50 cm from the intersection, it receives a yielding request message on the app.

- Step 4. The responding vehicle autonomously yields to the requesting vehicle.

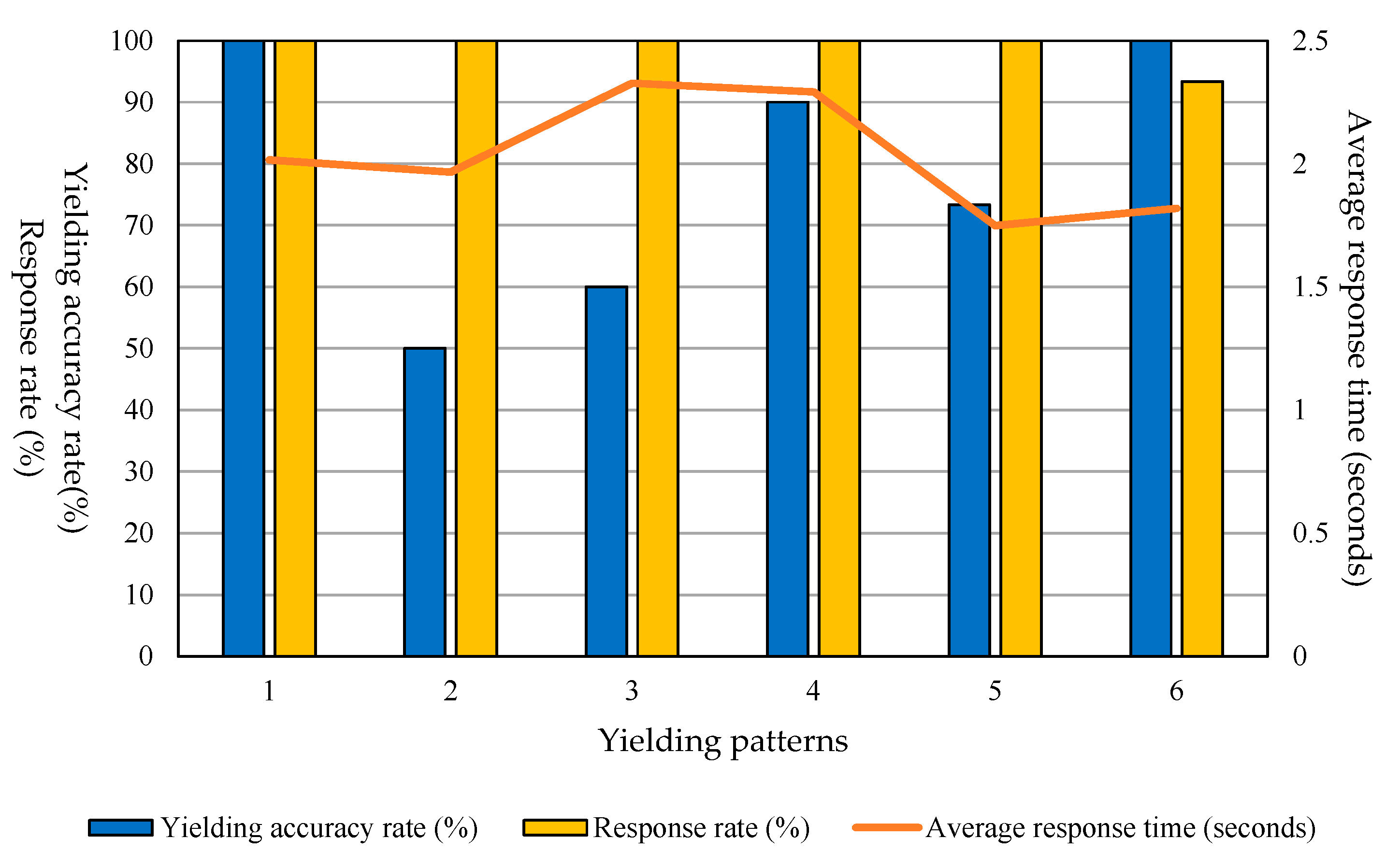

5.3. Experiment Results

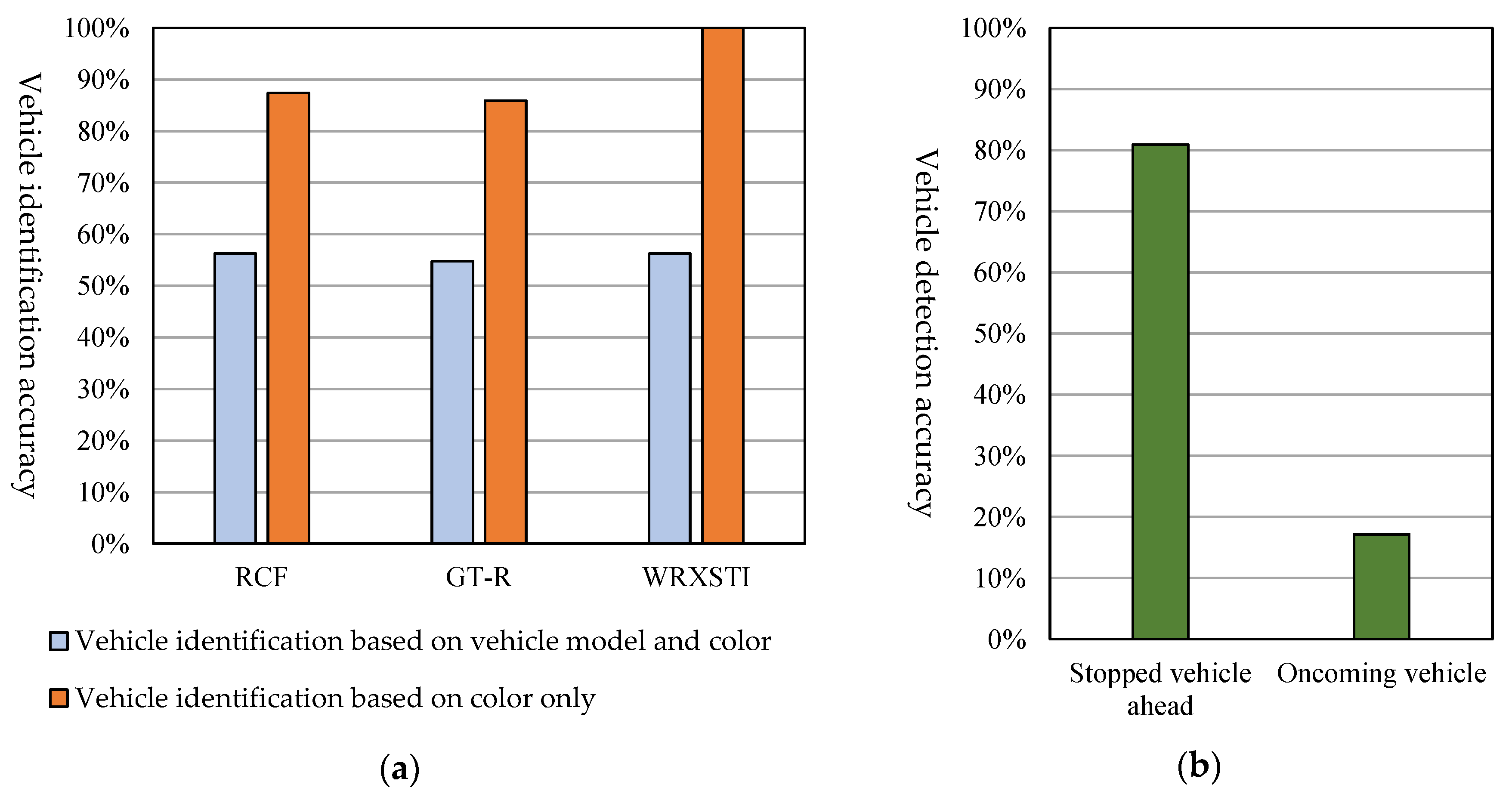

5.4. Vehicle Identification Accuracy and Vehicle Detection Accuracy

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Cross-Ministerial Strategic Innovation Promotion Program (SIP)—Innovation of Automated Driving for Universal Services, Research & Development Plan, Cabinet Office, Government of Japan (Online). Available online: http://www8.cao.go.jp/cstp/gaiyo/sip/keikaku/6_jidousoukou.pdf (accessed on 5 September 2017).

- Xu, B.; Ban, X.; Bian, Y.; Wang, J.; Li, K. V2I based cooperation between traffic signal and approaching automated vehicles. In Proceedings of the Intelligent Vehicles Symposium, Los Angeles, CA, USA, 11–14 June 2017; pp. 1658–1664.

- Tran, H.K.; Ideguchi, T.; Okuda, T.; Xuejun, T. A consideration for consensus formation on t-crossing utilizing inter-vehicle communication. In Proceedings of the Multimedia, Distributed, Corporative, and Mobile Symposium (DICOMO), Beppu-shi, Japan, 8–10 July 2009; pp. 252–257.

- Ozaki, H.; Nagao, K. A Traffic accident prevention system using autonomous driving control at intersection area. In Proceedings of the 70th National Convention of IPSJ, Tukuba-shi, Japan, 13–15 March 2008; pp. 311–312.

- Hausknecht, M.; Au, T.; Stone, P. Autonomous intersection management: multi-intersection optimization. In Proceedings of the International Conference on Intelligent Robots and Systems Conference, San Francisco, CA, USA, 25–30 September 2011; pp. 4581–4586.

- Yang, B.; Zheng, R.; Shimono, K.; Kaizuka, T.; Nakano, K. Evaluation of the effects of in-vehicle traffic lights on driving performances for unsignalized intersections. IET Intell. Transp. Syst. 2017, 11, 76–83.

- Yang, B.; Zheng, R.; Kaizuka, T.; Nakano, K. Influences of waiting time on driver behaviors while implementing in-vehicle traffic light for priority-controlled unsignalized intersections. J. Adv. Transport. 2017, 7871561.

- IEEE 802.11p-2010. Available online: https://standards.ieee.org/standard/802_11p-2010.html (accessed on 11 April 2017).

- Dedicated Short-Range Communication System (ARIB). Available online: http://www.arib.or.jp/english/html/overview/doc/5-STD-T75v1_0-E2.pdf (accessed on 11 April 2017).

- 700 MHz Band Intelligent Transport System (ARIB). Available online: http://www.arib.or.jp/english/html/overview/doc/5-STD-T109v1_2-E1.pdf (accessed on 11 April 2017).

- 700 MHz Band Intelligent Transport Systems - Experimental Guideline for Inter-Vehicle Communication Messages (ITS Info-Communications Forum). Available online: http://www.itsforum.gr.jp/Public/J7Database/p48/ITS_FORUM_RC-013_v10.pdf (accessed on 6 December 2016).

- Raspberry Pi 2 Model B (The Raspberry Pi Foundation). Available online: https://www.raspberrypi.org/products/raspberry-pi-2-model-b (accessed on 18 January 2016).

- Proprietary Protocol—Serial Communication Module (ZB24FM-E2022-01) (NEC Corporation). Available online: http://jpn.nec.com/engsl/pro/wireless/serial_module.html (accessed on 13 April 2016).

- Self Driving RC Car. Available online: https://zhengludwig.wordpress.com/projects/self-driving-rc-car/ (accessed on 25 May 2016).

- Gao, Y.; Lee, H.J. Local tiled deep networks for recognition of vehicle make and model. Sensors 2016, 16, 226.

- Zhao, S.; Zhu, X.L.; Li, Y.B.; Zhang, L. Research on the recognition of car models based on deep-learning networks. In Proceedings of the 2017 Asia-Pacific Engineering and Technology Conference, Lancaster, PA, USA, 25–26 May 2017; pp. 1770–1775.

- Dehghan, A.; Masood, S.Z.; Shu, G.; Ortiz, E.G. View independent vehicle make, model and color recognition using convolutional neural network. arXiv, 2017; arXiv:1702.01721.

- OpenCV. Available online: http://opencv.org/about.html (accessed on 2 November 2016).

- Android BluetoothChat Sample (Google, GitHub). Available online: https://github.com/googlesamples/android-BluetoothChat (accessed on 30 August 2016).

- Open JTalk. Available online: http://open-jtalk.sourceforge.net/ (accessed on 7 October 2016).

- Jouppi, N.P.; Young, C.; Patil, N.; Patterson, D.; Agrawal, G.; Bajwa, R.; Bates, S.; Bhatia, S.; Boden, N.; Borchers, A.; et al. In-datacenter performance analysis of a tensor processing unit. In Proceedings of the 2017 ACM/IEEE 44th Annual International Symposium on Computer Architecture (ISCA), Toronto, ON, Canada, 24–28 June 2017; pp. 1–12.

| Method | Traffic Light | Roadside Unit | Intersection Shape | A Mix of Self-Driven and Human-Driven Vehicles |
|---|---|---|---|---|
| Optimization of signal change timing [2] | Present | Present | Crossroads with no priority given to any roads and with separate lanes for different directions | Self-driven vehicles only |
| Yielding control based on request and consent [3] | Not present | Not present | T-shaped intersection where a priority road and a low-priority road meet | Human-driven vehicles only |
| Adjustment of vehicle speeds [4] | Not present | Present | Crossroads with no priority given to any roads | Self-driven vehicles only |
| Intersection passing order control [5] | Not present | Present | Crossroads with no priority given to any roads | Self-driven vehicles only |
| Display of virtual traffic lights based on distances between vehicles [6,7] | Not present | Not present | Crossroads of a priority road and a low-priority road | Human-driven vehicles only |
| Proposed method | Not present | Not present | Crossroads of a priority road and a low-priority road and crossroads with no priority given to any roads | Mixed |

| Pattern Number | Description | Schematic View (The Lanes Are Drawn in Accordance with the Japanese Traffic Rules) |
|---|---|---|
| 1 | A vehicle wants to turn left from a low-priority road to a priority road | |
| 2 | A vehicle wants to turn right from a low-priority road to a priority road | |
| 3 | A vehicle wants to cross a priority road from a low-priority road | |
| 4 | A vehicle wants to turn right from a priority road to a low-priority road | |
| 5 | A vehicle wants to go straight at an intersection of two roads of the same width (no priority assigned) | |
| 6 | A vehicle wants to turn right at an intersection of two roads of the same width (no priority assigned) | |

| Header/Body | Element | Symbol | Data Example |
|---|---|---|---|
| Message header | System ID (4 bytes) | h1 | 0x01595257 |
| Message header | Message length (1 byte) | h2 | 0x11 |
| Message header | Destination ID (4 bytes) | h3 | 0x01234567 |
| Message header | Sender ID (4 bytes) | h4 | 0x89ABCDEF |
| Message header | Vehicle information: maker (1 byte) | h5 | 0x01 |
| Message header | Vehicle information: vehicle model (1 byte) | h6 | 0x03 |
| Message header | Vehicle information: colour (1 byte) | h7 | 0x0b |
| Message header | Location information (GPS): latitude (4 bytes) | h8 | 0x420EC0B8 |
| Message header | Location information (GPS): longitude (4 bytes) | h9 | 0x430B545E |
| Message body | Yielding pattern number (4 bits) | b1 | 0001 |
| Message body | Requester confirmation bit (1 bit) | b2 | 1 |
| Message body | Message type (3 bits) | b3 | 000 |
| Message body | Spare (0 to 7 bytes) | b4 | |
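
The message format table above can be illustrated with a minimal Python sketch that packs and parses the listed fields. Several details here are assumptions that the table does not state: big-endian byte order, latitude and longitude stored as IEEE 754 single-precision floats (under that reading the example values decode to roughly 35.69 and 139.33), and the body bits laid out in table order with b1 in the upper four bits. The names `YieldMessage`, `encode`, and `decode` are hypothetical, and the message length field h2 is carried through unchanged because its exact meaning is not assumed.

```python
import struct
from dataclasses import dataclass

# Assumed wire layout: big-endian, header fields h1..h9 in table order (24 bytes),
# then one body byte (b1 | b2 | b3) and 0 to 7 spare bytes (b4).
HEADER = struct.Struct(">IBIIBBBff")


@dataclass
class YieldMessage:
    system_id: int      # h1
    length: int         # h2, copied as-is
    dest_id: int        # h3
    sender_id: int      # h4
    maker: int          # h5
    model: int          # h6
    colour: int         # h7
    latitude: float     # h8, assumed float32
    longitude: float    # h9, assumed float32
    pattern: int        # b1, 4 bits
    requester_bit: int  # b2, 1 bit
    msg_type: int       # b3, 3 bits
    spare: bytes = b""  # b4, 0 to 7 bytes


def encode(msg: YieldMessage) -> bytes:
    """Serialize a message under the assumed layout."""
    if not (0 <= msg.pattern <= 0xF and msg.requester_bit in (0, 1)
            and 0 <= msg.msg_type <= 0x7 and len(msg.spare) <= 7):
        raise ValueError("body field out of range")
    header = HEADER.pack(msg.system_id, msg.length, msg.dest_id, msg.sender_id,
                         msg.maker, msg.model, msg.colour,
                         msg.latitude, msg.longitude)
    body = (msg.pattern << 4) | (msg.requester_bit << 3) | msg.msg_type
    return header + bytes([body]) + msg.spare


def decode(data: bytes) -> YieldMessage:
    """Parse a message under the assumed layout."""
    if not HEADER.size + 1 <= len(data) <= HEADER.size + 8:
        raise ValueError("unexpected message size")
    fields = HEADER.unpack_from(data)
    body = data[HEADER.size]
    return YieldMessage(*fields, pattern=body >> 4,
                        requester_bit=(body >> 3) & 1, msg_type=body & 0x7,
                        spare=data[HEADER.size + 1:])


# Example bytes built from the "Data Example" column of the table.
raw = bytes.fromhex("01595257" "11" "01234567" "89ABCDEF" "01" "03" "0b"
                    "420EC0B8" "430B545E" "18")
msg = decode(raw)
```

Packing the three body fields into a single byte matches the 4 + 1 + 3 bit widths in the table; a different bit order would only change the shifts in `encode` and `decode`.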

| No. | Message Direction | Message Element | Data | Meaning |
|---|---|---|---|---|
| m1 | A -> All vehicles: Request for going straight ahead | h3 | 0xffffffff | Broadcast |
| | | h4 | 0x0000000a | 0000000A |
| | | h5, h6, h7 | 0x08, 0x08, 0x03 | Lexus RCF Orange |
| | | b1 | 0011 | Pattern 3 |
| | | b2 | 1 | Yielded-to side |
| | | b3 | 010 | Request for going straight ahead |
| m2 | C -> A: Refusal | h3 | 0x0000000a | 0000000A |
| | | h4 | 0x0000000c | 0000000C |
| | | h5, h6, h7 | 0x04, 0x03, 0x08 | Subaru WRXSTI Blue |
| | | b1 | 0011 | Pattern 3 |
| | | b2 | 0 | Yielding side |
| | | b3 | 100 | Refusal |
| m3 | B -> A: Consent | h3 | 0x0000000a | 0000000A |
| | | h4 | 0x0000000b | 0000000B |
| | | h5, h6, h7 | 0x01, 0x06, 0x01 | Nissan GT-R Red |
| | | b1 | 0011 | Pattern 3 |
| | | b2 | 0 | Yielding side |
| | | b3 | 011 | Consent |
| | | b4 | 0x01 | No oncoming vehicle |
| m4 | A -> B: Thanks | h3 | 0x0000000b | 0000000B |
| | | h4 | 0x0000000a | 0000000A |
| | | h5, h6, h7 | 0x08, 0x08, 0x03 | Lexus RCF Orange |
| | | b1 | 0011 | Pattern 3 |
| | | b2 | 1 | Yielded-to side |
| | | b3 | 101 | Thanks |
| m5 | B -> A: Timeout | h3 | 0x0000000a | 0000000A |
| | | h4 | 0x0000000b | 0000000B |
| | | h5, h6, h7 | 0x01, 0x06, 0x01 | Nissan GT-R Red |
| | | b1 | 0011 | Pattern 3 |
| | | b2 | 0 | Yielding side |
| | | b3 | 110 | Timeout |
| m6 | A -> B: Timeout | h3 | 0x0000000b | 0000000B |
| | | h4 | 0x0000000a | 0000000A |
| | | h5, h6, h7 | 0x08, 0x08, 0x03 | Lexus RCF Orange |
| | | b1 | 0011 | Pattern 3 |
| | | b2 | 1 | Yielded-to side |
| | | b3 | 110 | Timeout |
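
The exchange in the table above (broadcast request m1, refusal m2, consent m3, thanks m4) can be sketched in Python as follows. Only the message type codes and the broadcast address that appear in the table are used; the names `MessageType`, `is_addressed_to`, and `pick_thanks_target` are hypothetical, and choosing the first consenting vehicle as the target of the thanks message is an assumption for illustration, not necessarily the rule the protocol applies.

```python
from enum import IntEnum
from typing import Iterable, Optional, Tuple

BROADCAST_ID = 0xFFFFFFFF  # h3 value used for m1 in the table


class MessageType(IntEnum):
    """b3 codes that appear in the message example table."""
    REQUEST_STRAIGHT = 0b010
    CONSENT = 0b011
    REFUSAL = 0b100
    THANKS = 0b101
    TIMEOUT = 0b110


def is_addressed_to(dest_id: int, my_id: int) -> bool:
    """A vehicle processes a message sent to it directly or broadcast."""
    return dest_id in (BROADCAST_ID, my_id)


def pick_thanks_target(requester_id: int,
                       replies: Iterable[Tuple[int, int, int]]) -> Optional[int]:
    """Return the sender ID of the first consent addressed to the requester.

    Each reply is (sender_id h4, destination_id h3, message type b3).
    Picking the first consent is an assumption made for this sketch.
    """
    for sender_id, dest_id, msg_type in replies:
        if dest_id == requester_id and msg_type == MessageType.CONSENT:
            return sender_id
    return None


# Vehicles A, B, C use the IDs from the table: 0x0a, 0x0b, 0x0c.
replies = [
    (0x0000000C, 0x0000000A, MessageType.REFUSAL),  # m2: C -> A
    (0x0000000B, 0x0000000A, MessageType.CONSENT),  # m3: B -> A
]
target = pick_thanks_target(0x0000000A, replies)    # vehicle A then sends m4 to B
```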

| Item | Conditions |
|---|---|
| Place | Indoor |
| Number of vehicles | 3 |
| Vehicle type | Self-driven vehicle (yielding side); human-driven vehicle (yielded-to side) |
| Moving direction (yielding side) | Always moving straight ahead |
| Running speed | Speed at which it is easy to stop |
| Congestion in the opposite lane | None |
| Checking of the following vehicle | None |
| Obstacles | None |
| Identification of the road shape | Manual input |
| Distance scale | 1/16 |
| Yielding patterns used | 1 to 6 |
| Value for the request message retransmission timer | 10 s |
| Value for the thanks message waiting timer | 10 s |
| Number of experiments | 5 (for each condition in Table 6) |

| Yielding Pattern Number | Condition Symbol | Condition Description | Result Assumed by the Proposed System |
|---|---|---|---|
| 1 | a | There is a stopped vehicle ahead. | Yield |
| 1 | b | There is no stopped vehicle ahead. | Not yield |
| 2 | a | There is a stopped vehicle ahead. | Yield |
| 2 | b | There is no stopped vehicle ahead. | Not yield |
| 3 | a | There is a stopped vehicle ahead. | Yield |
| 3 | b | There is no stopped vehicle ahead. | Not yield |
| 4 | a | There is a stopped vehicle ahead. | Yield |
| 4 | b | There is no stopped vehicle ahead. | Not yield |
| 5 | a | The requesting vehicle is on the left. | Yield |
| 5 | b | The requesting vehicle is on the right. | Not yield |
| 5 | c | The requesting vehicle is in the opposite lane. | Consent (move on) |
| 6 | a | The requesting vehicle is on the left. | Yield |
| 6 | b | The requesting vehicle is on the right. | Not yield |
| 6 | c | The requesting vehicle is in the opposite lane. | Not yield |
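
The expected outcomes in the table above can be written as a small lookup, shown here as a Python sketch. It only transcribes the table rows; the function name `expected_result` and the argument names and string values (`"left"`, `"right"`, `"opposite"`) are hypothetical and are not taken from the experimental software.

```python
from typing import Optional


def expected_result(pattern: int,
                    stopped_vehicle_ahead: Optional[bool] = None,
                    requester_position: Optional[str] = None) -> str:
    """Return the result the table assumes for one evaluation condition."""
    if pattern in (1, 2, 3, 4):
        # Patterns 1 to 4 depend only on whether a stopped vehicle is ahead.
        if stopped_vehicle_ahead is None:
            raise ValueError("stopped_vehicle_ahead is required for patterns 1-4")
        return "Yield" if stopped_vehicle_ahead else "Not yield"
    if pattern in (5, 6):
        # Patterns 5 and 6 depend on where the requesting vehicle is.
        by_position = {
            "left": "Yield",
            "right": "Not yield",
            "opposite": "Consent (move on)" if pattern == 5 else "Not yield",
        }
        if requester_position not in by_position:
            raise ValueError("requester_position must be left, right or opposite")
        return by_position[requester_position]
    raise ValueError("pattern must be between 1 and 6")


# Condition 5-c from the table.
result_5c = expected_result(5, requester_position="opposite")
```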

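| Item No. | Experiment Results: R | Experiment Results: A | Experiment Results: RT | Cause | Item No. | Experiment Results: R | Experiment Results: A | Experiment Results: RT | Cause |
|---|---|---|---|---|---|---|---|---|---|
| 1-a | G | G | 2.202 | | 4-b | G | G | 2.311 | |
| 1-a | G | G | 2.220 | | 4-b | G | G | 1.831 | |
| 1-a | G | G | 2.099 | | 4-b | G | G | 1.876 | |
| 1-a | G | G | 2.322 | | 4-b | G | G | 2.426 | |
| 1-a | G | G | 2.045 | | 4-b | G | B | 2.435 | The vehicle ahead incorrectly detected. |
| 1-b | G | G | 1.631 | | 5-a | G | G | 2.014 | |
| 1-b | G | G | 1.726 | | 5-a | G | G | 1.416 | |
| 1-b | G | G | MF | | 5-a | G | G | 1.934 | |
| 1-b | G | G | 1.944 | | 5-a | G | G | 1.467 | |
| 1-b | G | G | 1.958 | | 5-a | G | G | 1.995 | |
| 2-a | G | B | 2.004 | The vehicle ahead incorrectly recognized as an oncoming vehicle. | 5-b | G | G | 1.767 | |
| 2-a | G | B | 2.127 | The vehicle ahead incorrectly recognized as an oncoming vehicle. | 5-b | G | G | 1.402 | |
| 2-a | G | B | 1.807 | The vehicle ahead incorrectly recognized as an oncoming vehicle. | 5-b | G | G | 2.046 | |
| 2-a | G | B | 1.931 | The vehicle ahead incorrectly recognized as an oncoming vehicle. | 5-b | G | G | 1.678 | |
| 2-a | G | B | MF | Vehicles cannot be identified. | 5-b | G | G | 1.827 | |
| 2-b | G | G | MF | | 5-c | G | G | 1.977 | |
| 2-b | G | G | 1.611 | | 5-c | G | B | 1.383 | The responding vehicle incorrectly recognized as being on the right side. |
| 2-b | G | G | 2.636 | | 5-c | G | B | 1.997 | The responding vehicle incorrectly recognized as being on the right side. |
| 2-b | G | G | 1.645 | | 5-c | G | B | 1.615 | The responding vehicle incorrectly recognized as being on the right side. |
| 2-b | G | G | MF | | 5-c | G | B | 1.726 | The responding vehicle incorrectly recognized as being on the right side. |
| 3-a | G | G | 2.530 | | 6-a | G | G | MF | |
| 3-a | G | B | 2.293 | The vehicle ahead incorrectly recognized as an oncoming vehicle. | 6-a | G | G | 1.525 | |
| 3-a | G | B | 2.380 | The vehicle ahead incorrectly recognized as an oncoming vehicle. | 6-a | G | G | 1.927 | |
| 3-a | G | B | 2.405 | The vehicle ahead incorrectly recognized as an oncoming vehicle. | 6-a | G | G | 1.697 | |
| 3-a | G | B | 2.438 | The vehicle ahead incorrectly recognized as an oncoming vehicle. | 6-a | G | G | 1.902 | |
| 3-b | G | G | 2.991 | | 6-b | G | G | 2.149 | |
| 3-b | G | G | 2.009 | | 6-b | G | G | 1.801 | |
| 3-b | G | G | 2.048 | | 6-b | G | G | 1.428 | |
| 3-b | G | G | 2.083 | | 6-b | B | | | Unknown (possibly a failure in synchronization with the communication module) |
| 3-b | G | G | 2.099 | | 6-b | G | G | 2.065 | |
| 4-a | G | G | MF | | 6-c | G | G | 1.987 | |
| 4-a | G | G | 2.098 | | 6-c | G | G | 2.117 | |
| 4-a | G | G | 2.971 | | 6-c | G | G | 1.735 | |
| 4-a | G | G | 2.025 | | 6-c | G | G | 1.851 | |
| 4-a | G | G | 2.662 | | 6-c | G | G | 1.474 | |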
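
As a worked example of summarizing the RT column above, the following Python sketch averages the RT values of one item while skipping cells marked MF. Treating MF as a missing measurement that should be left out of the average is an assumption, since the table itself does not define the marker; the helper names `parse_rt` and `mean_rt` are hypothetical. The input values are copied from items 1-a and 1-b.

```python
from statistics import mean
from typing import Iterable, Optional


def parse_rt(cell: str) -> Optional[float]:
    """Convert one RT cell to a number, or None when it is marked MF."""
    cell = cell.strip()
    return None if cell == "MF" else float(cell)


def mean_rt(cells: Iterable[str]) -> Optional[float]:
    """Average the RT cells of one item, ignoring MF entries (assumed missing)."""
    values = [v for v in map(parse_rt, cells) if v is not None]
    return mean(values) if values else None


rt_1a = ["2.202", "2.220", "2.099", "2.322", "2.045"]  # item 1-a
rt_1b = ["1.631", "1.726", "MF", "1.944", "1.958"]     # item 1-b, one MF cell

mean_1a = mean_rt(rt_1a)  # average over five trials
mean_1b = mean_rt(rt_1b)  # average over the four trials that have a value
```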

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Yajima, H.; Takami, K. Inter-Vehicle Communication Protocol Design for a Yielding Decision at an Unsignalized Intersection and Evaluation of the Protocol Using Radio Control Cars Equipped with Raspberry Pi. Computers 2019, 8, 16. https://doi.org/10.3390/computers8010016
