1. Introduction

Autism Spectrum Disorder (ASD) is a pervasive and prominent disorder that has been well documented and extensively studied in the past decade [1]. ASD is difficult to categorise and diagnose in its early stages because of its characteristics [2]. Currently, approximately 2.94% of UK children between the ages of 10 and 14 are diagnosed with ASD [3], a substantial figure considering the population. The DSM-5-TR, the latest revision published in 2022, categorises the disorder as a broad spectrum rather than a single condition [4]. It identifies different levels of severity, but one common characteristic is the impairment of social-emotional skills, which might consequently affect the individual’s quality of life in everyday social interactions.

Tools such as artificial intelligence (AI) are becoming more popular, and their introduction to the industry represents an important milestone identified as the Fourth Industrial Revolution [5]. AI tools are becoming an increasingly prominent element in our lives [6], especially in the education field, where they help teachers and educators. Specifically, facial-recognition technologies have a wide range of applications spanning from security to education [7,8]. Machines can be trained to recognise facial expressions, identities, and emotions.

Serious games are stimulating and interactive games, which usually allow the players to improve a specific set of skills. They accomplish this by presenting the player with pleasant activities that can teach skills through playing. These games are known to be useful in helping both ASD and neurotypical children develop skills, especially for their multisensory approach [9]. Specifically, they can help create ways to simulate natural environments and can be used to develop specific activities aimed at focused training. Several serious games are developed specifically for children with ASD [10]. Serious games offer a new perspective on how these tools can be used to support vulnerable children’s development. AI tools can be integrated into such games to offer innovative ways to support children’s development, and mobile applications can be the perfect medium to offer educators or parents the support children with ASD might need.

ASD is often misrepresented in the media, reinforcing misconceptions about the challenges that autism might pose [11]. Usually, social communication abilities and interactions with peers are impacted by the disorder [12]. Individuals with ASD tend to struggle with social interactions and especially with expressing and identifying emotions. They also tend to have a poorly developed sense of self, which often prevents them from elaborating on their internal states of mind. Specifically, a component of the Theory of Mind (ToM), defined as the ability to understand and attribute mental states to the self and others [13], is affected. A deficit in this area is defined as “mind-blindness”, usually implying difficulties in “seeing others’ minds”, intentions, and motives. This is also demonstrated by the “Sally-Anne test” [14,15]. Consequently, children with ASD struggle to recognise emotions, especially from facial cues [16], which are one of the most important ways for children to communicate in early stages [17].

These deficits can induce anxiety and depression, especially when individuals use constant “masking” behaviours as a result [18]. However, tools designed to help teach emotion recognition techniques have proved to have a positive impact [19]. Specifically, games using new technologies and robots can help with the challenges posed by ASD [20]. In particular, children with ASD might find it hard to work with people face to face due to their social-emotional deficits, and the use of tablets and smartphones can help [21].

The integration of “affective computing” technologies can help learners develop their skills [22]. New methodologies can be applied to education and teaching by enabling computers to recognise emotions. Using Convolutional Neural Network (CNN) models, which learn by identifying patterns in the data provided [23], is currently one of the most popular approaches to teaching AI to recognise emotions from facial expressions [24,25,26]. Several Application Programming Interfaces (APIs) use such approaches for their pre-trained models. The Google Cloud Vision API is one of the most popular, and it enables an application to recognise emotions with 80% accuracy [27].

These technologies can be utilised to support children with special needs in their development. For example, specific training can be implemented to train facial recognition skills [28], effectively translating behavioural or developmental therapy into digital practices. Other serious games that are proven to be effective with ASD individuals provide customised activities in a multisensory virtual environment. This approach could result in higher engagement and improved results in developing an aspect of the ToM, namely, the skills which involve emotion awareness [29].

Serious games designed for children with ASD can allow them to be more engaged with entertaining activities while improving their socio-emotional skills. This methodology is highly effective, as individuals with ASD tend to be visual learners, preferring mobile technologies [30]. For this reason, several applications have been developed to aid children with ASD. One example is Ying, a mobile Android application integrating Applied Behaviour Analysis (ABA) techniques in therapeutic settings [31].

Other applications exist to help such individuals develop their skills. Examples are Emoface [32], which offers several activities to allow children to better develop deficient skills, and EmoTen [33], which also includes emotion-aware technologies. The latter is developed using the Affectiva Software Development Kit (SDK) and offers a few activities focused on improving certain skills. The application was tested and evaluated with a small cohort of children with ASD, supported by a team of developmental psychologists. Although the application suffered from certain limitations, the results of the evaluation were positive, confirming the existing literature on the effectiveness of such an approach.

The main contribution of this paper is to propose a new framework that can have an impact on future works. The developed framework aims to improve existing applications by introducing facial-recognition technology to help create engaging and motivating activities. Two key features are presented and developed, each aimed at improving quality of life for children with ASD. This is achieved with the introduction of two functionalities that can help users to learn how to recognise emotions and express them through a serious game. Furthermore, it uses a sandbox environment, which automatically categorises emotions and offers an emotion diary to be consulted by users. The application, developed as a result of the designed framework, takes advantage of the power of the cloud-based API offered by Google Cloud Vision, which gives an example of how such cutting-edge technology can be applied and used by professionals in the developmental psychology field.

The paper is structured in the following way:

Section 2 presents the developed new framework and how it can enhance the social emotional skills of children with ASD. It also highlights the gaps in the current literature and sets the basics for further implementation and applications.

Section 3 illustrates how the framework is applied to create an experimental Android application. It highlights its design, the actual implementation, and the preparation of a custom AI model.

Section 4 describes the results obtained from the development and testing of the application EmoPal.

In Section 5, the results are discussed, highlighting the benefits of the presented approach, and the paper concludes with recommendations for further research and investigations.

2. AI Framework—Improve Socio-Emotional Skills Through AI Technologies

The proposed framework to help children with ASD takes inspiration from existing socio-emotional therapy, which also involves play therapy and emotion recognition techniques. The main focus of the framework is to take advantage of the advancements in AI technology, computer vision, and emotion-aware technology to create a new methodology to help children, educators, teachers, and legal guardians to overcome the challenges posed by such a condition. The field of study regarding ASD is well documented and rich in literature, especially in regard to play therapies and how emotional therapy can enhance socio-emotional skills in children with ASD. One of the characteristics of ASD is alexithymia, also known as emotional blindness. These individuals usually struggle to express and recognise emotions in themselves and others. This leads to masking behaviours and increased levels of stress that might lead to anxiety and depression.

The core feature of the framework is the translation of existing therapies and techniques into a digital format that is powered by AI technologies.

Play therapy has proven to be an effective way of improving emotion regulation in children with Special Educational Needs (SEN) [34]. This type of therapy involves using several techniques, such as interaction with play activities, that encourage children to express feelings. This helps children to navigate their complex range of emotions without strictly needing to use words, which can be challenging when verbal language is not enough. An example is found in The Stories of Ciro and Beba [35], a book which contains a set of short stories specifically designed to help children to learn and expand their emotional skills by building up different socio-emotional constructs, such as emotional expression, understanding, and regulation.

The idea of these games is to integrate the playful element of a game with emotional conversation, encouraging the user not only to express emotions but also to understand them better. As a mobile application, the games are highly portable and can be used anywhere, for example during Floortime activities, one of the models used in play therapy, which focuses on improving emotional growth. Furthermore, the game presents the user with images depicting emotions, promoting their recognition and mimicking, and encouraging emotional talk between parents and children.

Advancements in AI, especially in face recognition, are allowing models to be built and tuned to recognise facial expressions like emotions. These models can be used to develop tools that can effectively help these individuals to develop the required skills.

The idea behind the framework is to have an AI companion that can recognise emotions and use recognition to give feedback to the user. This methodology enables the development of invaluable strategies. New technologies such as AI can be used to facilitate therapy and training by offering a valid and easy-to-use aid. This topic is currently not well documented in the literature, and the present framework is thought to fill the gap and highlight the importance of these tools.

AI models can be leveraged to enhance learning opportunities and are adaptable for use in virtually any environment. Current socio-emotional therapies involve play-therapy techniques, which can be digitally translated and combined with AI-driven emotion aware technologies. The presented framework aims to use socio-emotional therapy techniques and play therapy through serious games, combined with AI facial-recognition models that can recognise several emotions.

Figure 1 shows the designed framework, unifying socio-emotional therapy and play therapy, with AI tools like emotion-aware technology and existing or customised AI models.

The core engine of the framework is an AI tool which is used to recognise expressed emotions and give users feedback whilst playing. The main objective of this approach is to help the user learn how to recognise and express emotions. This is meant to reinforce their socio-emotional skills with the goal of supporting and developing them further through engaging and stimulating activities. The feedback provided from AI models and the outcome of successfully progressing through a game can be used to reinforce the learning process, especially if used repeatedly through pleasant activities that can be engaged more than once in different settings.

This methodology allows children with ASD to learn while using new engaging technologies that can act as an effective companion and, at the same time, as a valid method for parents or therapists to help the user. Such reinforcement and training are expected to have a cascade effect on an individual’s quality of life. By increasing their competence in identifying and expressing emotions, the likelihood of developing anxiety symptoms and depression, including social anxiety and suicidal thoughts in later stages of life, decreases [36].

The proposed framework focuses on AI technology as an aid and not a complete substitute for the human factor, which is still needed. However, this approach would greatly help the target audience to internalise the meanings of emotions, as well as to understand them and express their internal states. Training such deficient skills could help them reduce stress, anxiety, and depression, especially when caused by not recognising social cues, or by the uncertainty of social interaction. Furthermore, it would give them the right tools to understand their internal states and be more aware of felt emotions. The framework is applicable to new tools and applications in order to promote an innovative way of supporting young individuals.

As a result of the study and design of the framework, a native Android application named EmoPal has been developed to implement the framework’s principles and serve as an experimental tool, demonstrating the discussed features and implementations while highlighting their strengths and limitations.

3. Framework Implementation—Materials and Methods

The EmoPal application is developed primarily for the Android platform due to its open-source nature and its status, based on reviewed data, as one of the most widely used mobile operating systems [37]. Android is also developed by Google, which offers support for developers to implement compatible services and AI features. The application and its framework are developed for mobile devices running Android 11.0 (API 30, “R”). This decision allows the application to run on most devices available in the market. According to the Android documentation, this API level is currently used by 67.6% of its users [38], as also shown in Figure 2.

Table 1 summarises the technologies used to develop EmoPal. Such technologies allow for fine control of the development of the UI in a scalable way.

The user interface is designed to be both effective and easy to use. An intuitive and easily understandable design is essential, especially for the target user. The design is inspired by the user-centred design paradigm, as it allows for focusing primarily on the user’s needs, and it has previously been used to re-adapt mobile applications for children with ASD [39]. This approach would help reduce the user’s cognitive fatigue while using the application and reduce cluttering and background stimuli [40].

The Jetpack Compose framework is chosen as the tool for designing and developing the UI. This framework supports the new features from Material 3 themes and replaces the traditional XML approach. This is slowly becoming the new industry standard, as it gives developers full control over UI development [41]. Furthermore, it introduces the concept of composables, which are reusable UI elements that help to decouple code and allow the creation of multipurpose components that can be reused. An example is shown in Figure 3. The methodology used allows the framework to be reliable, scalable, and reusable.

Emotion-aware technologies are the core of the serious games developed to apply the overarching framework principles described previously in Section 2. Furthermore, the produced AI model sets the base for a new way to use such a technology and expand it with further work. The games are designed to be entertaining and able to interact with the user by enabling emotion recognition through AI technology. This enables an active interaction between users and intelligent technology.

3.1. EmoPal Technical Design

EmoPal is designed with the Model-View-ViewModel (MVVM) design pattern in mind, as illustrated in Figure 4. This pattern is common in mobile development and widely used in the industry. It allows the application to be easily scalable and flexible [42]. This approach allows the use of different ViewModels to populate specific Views with data, which results in more loosely coupled code that is easier to maintain and develop [43]. The goal is to apply a flexible solution that can help other developers to expand the use cases and adapt the framework in several possible ways.

The application is structured in three distinct parts. The View is what the user sees, which is populated by the ViewModel, where the business logic is applied. Finally, the Model is where the data is stored.

In conjunction with this design pattern, the Dependency Injection (DI) pattern is also heavily used through the Dagger Hilt library. This allows developers to decouple code, making the structure modular, and reduces memory usage by creating each dependency once [44]. It also enables the injection of ViewModels in different parts of the code, allowing high flexibility and reusability of components.
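The separation described above can be sketched in a few lines. This is only an illustration in Python rather than the Kotlin used by EmoPal, and every class and function name in it (`EmotionRepository`, `DiaryViewModel`, `make_view`) is hypothetical. The repository is passed to the ViewModel through its constructor, which is the step a DI library such as Hilt would automate on Android.

```python
class EmotionRepository:
    """Model layer: owns the data (an in-memory list, for illustration only)."""

    def __init__(self):
        self._entries = []

    def save(self, emotion):
        self._entries.append(emotion)

    def all(self):
        return list(self._entries)


class DiaryViewModel:
    """ViewModel: holds presentation logic and exposes state to the View."""

    def __init__(self, repository):
        # The dependency is handed in rather than created here; on Android
        # a DI container would supply it.
        self._repository = repository
        self._observers = []

    def observe(self, callback):
        """Register a View callback to be notified of new state."""
        self._observers.append(callback)

    def record(self, emotion):
        """Store an emotion, then push the updated list to every observer."""
        self._repository.save(emotion)
        state = self._repository.all()
        for notify in self._observers:
            notify(state)


def make_view(rendered):
    """View: only renders whatever state the ViewModel pushes to it."""
    def render(entries):
        rendered.append(", ".join(entries))
    return render
```

Because both ViewModels in a usage such as `DiaryViewModel(repo)` can share one `repo` instance, the dependency is created once and reused, which mirrors the memory argument made in the text.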

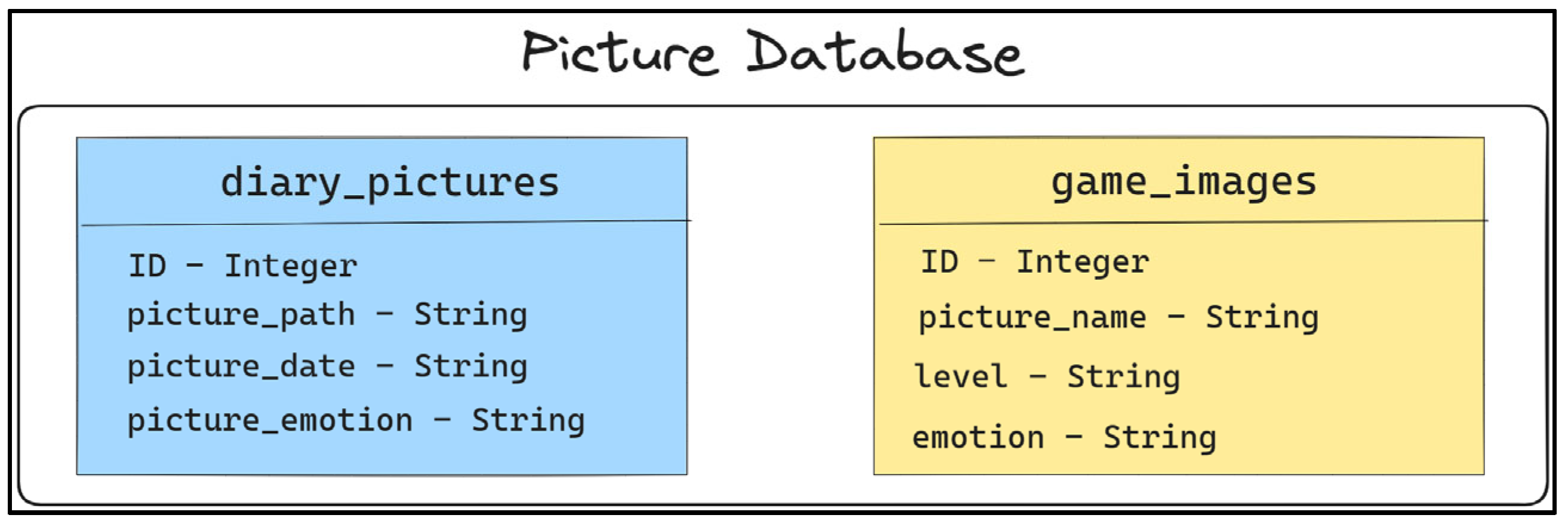

EmoPal uses an internal SQLite database accessed through the Room ORM. It stores data used for the game and references to the pictures taken with the diary feature of the application. The tables store the path of each picture instead of storing the pictures as BLOBs. This design allows the user to take several pictures without running into the size limitations of the SQLite database, which might cause issues when saving them through a TypeConverter. Furthermore, it allows the application to store other types of data for future advancements.

Figure 5 shows the schema of the database and the tables’ structure.
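The choice to store file paths rather than image bytes can be illustrated with a minimal sketch. It uses Python's built-in `sqlite3` module as a stand-in for Room, and the table name `diary_entry` and its columns are hypothetical; they are not taken from EmoPal's actual schema.

```python
import sqlite3
import time

# Hypothetical schema: the picture itself lives on disk; the row only holds its path.
SCHEMA = """
CREATE TABLE IF NOT EXISTS diary_entry (
    id         INTEGER PRIMARY KEY AUTOINCREMENT,
    image_path TEXT    NOT NULL,   -- path/URI of the saved picture, not a BLOB
    emotion    TEXT    NOT NULL,   -- label returned by the recognition step
    created_at INTEGER NOT NULL    -- Unix timestamp in seconds
)
"""


def open_diary(db_path=":memory:"):
    """Open (or create) the diary database and make sure the table exists."""
    conn = sqlite3.connect(db_path)
    conn.execute(SCHEMA)
    return conn


def add_entry(conn, image_path, emotion, created_at=None):
    """Insert one diary row and return its generated id."""
    if created_at is None:
        created_at = int(time.time())
    cur = conn.execute(
        "INSERT INTO diary_entry (image_path, emotion, created_at) VALUES (?, ?, ?)",
        (image_path, emotion, created_at),
    )
    conn.commit()
    return cur.lastrowid


def entries_for(conn, emotion):
    """Return the stored picture paths for one emotion, oldest first."""
    rows = conn.execute(
        "SELECT image_path FROM diary_entry WHERE emotion = ? ORDER BY created_at, id",
        (emotion,),
    )
    return [row[0] for row in rows]
```

Each row stays small regardless of picture resolution, so listing the diary only reads short strings and the images are loaded from storage on demand.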

EmoPal connects through a RESTful API to Google Cloud Vision, which processes and interprets the transferred image. Google’s AI model is used in the first iteration of the experimental application to facilitate the development and test the model’s capabilities. Its design is illustrated in Figure 6.
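As a rough illustration of what such a request can look like, the sketch below builds the JSON body for a face-detection call to the Cloud Vision `images:annotate` REST method. It is written in Python for brevity and is not EmoPal's code; the helper name `build_face_request` is hypothetical, and authentication and the HTTP call itself are deliberately left out because the text does not describe how the application handles them.

```python
import base64
import json

# Public REST endpoint of Cloud Vision's image annotation method.
VISION_ENDPOINT = "https://vision.googleapis.com/v1/images:annotate"


def build_face_request(image_bytes, max_results=1):
    """Build the JSON body for a FACE_DETECTION request on one image."""
    return {
        "requests": [
            {
                # The REST API expects the image as a base64-encoded string.
                "image": {"content": base64.b64encode(image_bytes).decode("ascii")},
                "features": [{"type": "FACE_DETECTION", "maxResults": max_results}],
            }
        ]
    }


def to_json(body):
    """Serialise the request body for sending over HTTP."""
    return json.dumps(body)
```

The body would be sent with an HTTP POST to `VISION_ENDPOINT`, and the service answers with one entry per request in a `responses` array.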

EmoPal and its framework aim to fill the gap left by recent publications and similar applications currently present in the market, but also to define a standard in the application of such technologies designed to help individuals with ASD. Two key features are implemented to allow EmoPal to supplement and enrich the current literature with this reusable framework. The main purpose is to improve the minigame proposed in EmoTen and the diary functionality in Emoface by introducing an automated tool to make the learning process more interactive and innovative. Specifically, the minigame proposed works as illustrated in Figure 7.

The game is structured to present a prompt to the user. The user is then asked to take a picture of themselves so that the application can compare their expression to the prompt. If the emotion is matched, the game continues with the next prompt. Otherwise, a message reports the expression that was detected and invites the user to try again. Even when the emotion is not matched, it is important to state which emotion the user expressed. This supports the learning aspect of the framework and its reinforcement goals. It also allows the user to reflect on how they expressed their emotion, try again to match the current prompt, and learn from errors.
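The round logic described above can be sketched as follows. This is a Python illustration under stated assumptions rather than EmoPal's implementation: the names `GameState` and `play_round` and the feedback wording are hypothetical, while the default target of 10 correct answers follows the victory condition reported in Section 4.

```python
from dataclasses import dataclass


@dataclass
class GameState:
    """Progress of one mimicking session (field names are illustrative)."""
    correct: int = 0
    target: int = 10   # correct answers needed before the victory message


def play_round(state, prompted, detected):
    """Compare the detected emotion with the prompt and return feedback text."""
    if detected is None:
        return "No emotion was recognised. Please try again."
    if detected == prompted:
        state.correct += 1
        if state.correct >= state.target:
            return "Well done, you completed the game!"
        return f"Correct, that was {prompted}!"
    # On a mismatch, name the emotion that was actually shown before retrying.
    return f"That looked like {detected}. Try again to show {prompted}."
```

The mismatch branch always names the detected emotion, which is the reinforcement step the paragraph emphasises.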

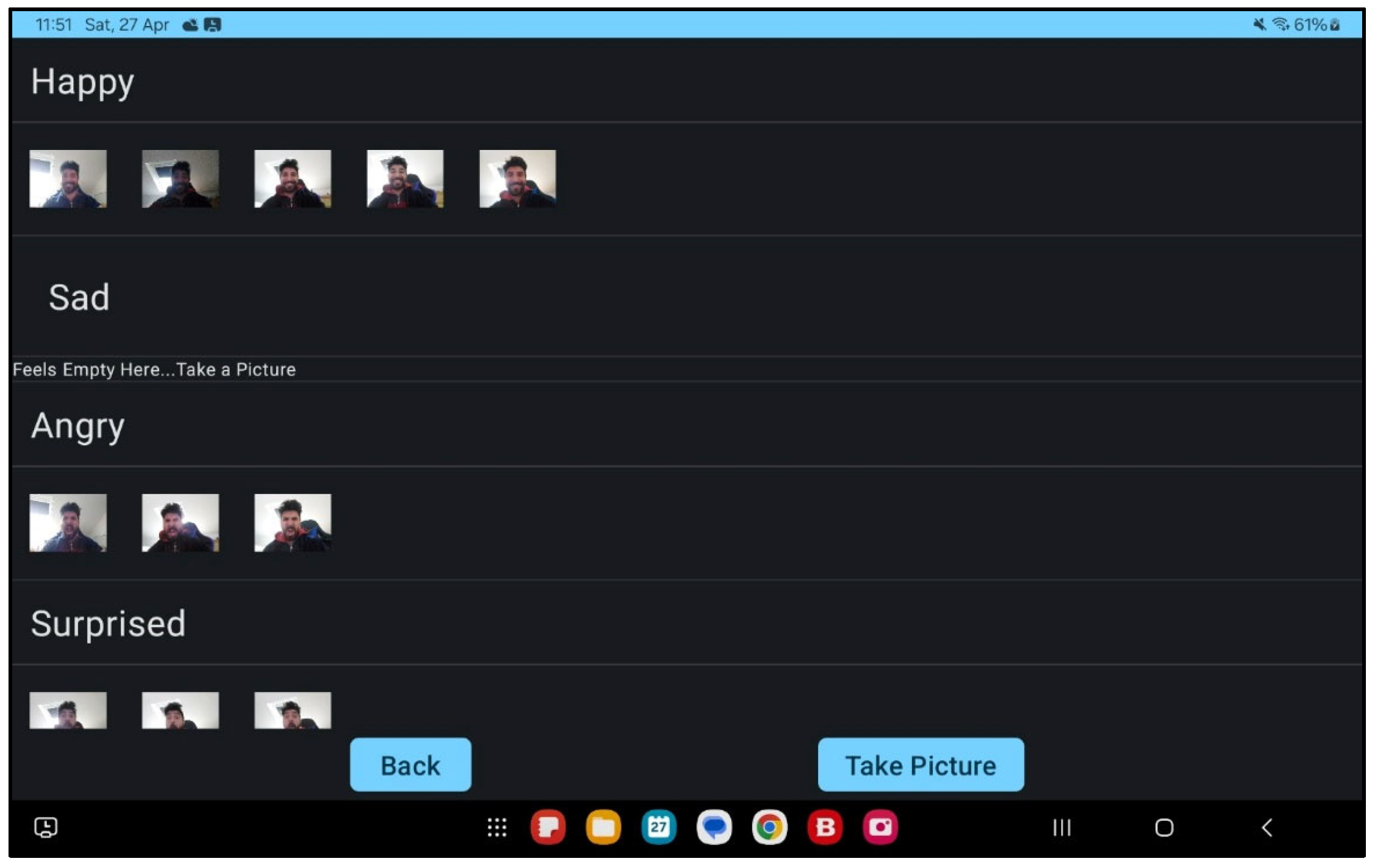

The emotion diary feature allows users to have a sandbox environment where they can freely experiment with their emotions by taking pictures of themselves. This functionality offers a good way for children to play with their expressions and to check which one is associated with the relative emotion (Figure 8).

A similar functionality is seen in another application called Emoface, sponsored by the National Centre for Scientific Research in France (CNRS). However, such an application does not use emotion-aware technology, as the user would manually add their pictures to the list.

EmoPal recognises the emotion and categorises it. The Google Cloud Vision API is used for this specific operation. The CameraX library is also used with Jetpack Compose, as it provides a consistent API which enables the use of the camera. It is also compatible with ML Kit for future developments with image analysis capabilities [45]. The camera allows the user to have immediate feedback, and once the picture is taken, it is then stored in the internal memory of the application, together with its unique URI in the Room database configured.

The picture is sent to the API, which analyses the facial features and sends back a response in JSON format, which is then interpreted by the customised parser. At the current stage, only four emotions are supported: happiness, anger, sadness, and surprise.
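A parser for such a response might look like the sketch below. It is a Python illustration, not the application's customised parser: it relies on the `faceAnnotations` likelihood fields that Cloud Vision returns for face detection, while the function name `parse_emotion`, the mapping to the four emotion names, and the `min_rank` threshold are assumptions made for the example.

```python
# Likelihood values used by Cloud Vision face annotations, weakest to strongest.
LIKELIHOOD_RANK = {
    "UNKNOWN": 0,
    "VERY_UNLIKELY": 1,
    "UNLIKELY": 2,
    "POSSIBLE": 3,
    "LIKELY": 4,
    "VERY_LIKELY": 5,
}

# Response field -> emotion name used in the text.
FIELD_TO_EMOTION = {
    "joyLikelihood": "happiness",
    "angerLikelihood": "anger",
    "sorrowLikelihood": "sadness",
    "surpriseLikelihood": "surprise",
}


def parse_emotion(response, min_rank=3):
    """Return the strongest emotion of the first detected face, or None.

    min_rank is an assumed threshold: by default anything below POSSIBLE
    is treated as "no emotion recognised".
    """
    responses = response.get("responses", [])
    if not responses:
        return None
    faces = responses[0].get("faceAnnotations", [])
    if not faces:
        return None
    face = faces[0]
    best_emotion, best_rank = None, 0
    for field, emotion in FIELD_TO_EMOTION.items():
        rank = LIKELIHOOD_RANK.get(face.get(field, "UNKNOWN"), 0)
        if rank > best_rank:   # ties keep the emotion listed first
            best_emotion, best_rank = emotion, rank
    return best_emotion if best_rank >= min_rank else None
```

Because the API reports coarse likelihood buckets rather than probabilities, two emotions can tie; this sketch resolves ties by dictionary order, which is an arbitrary choice.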

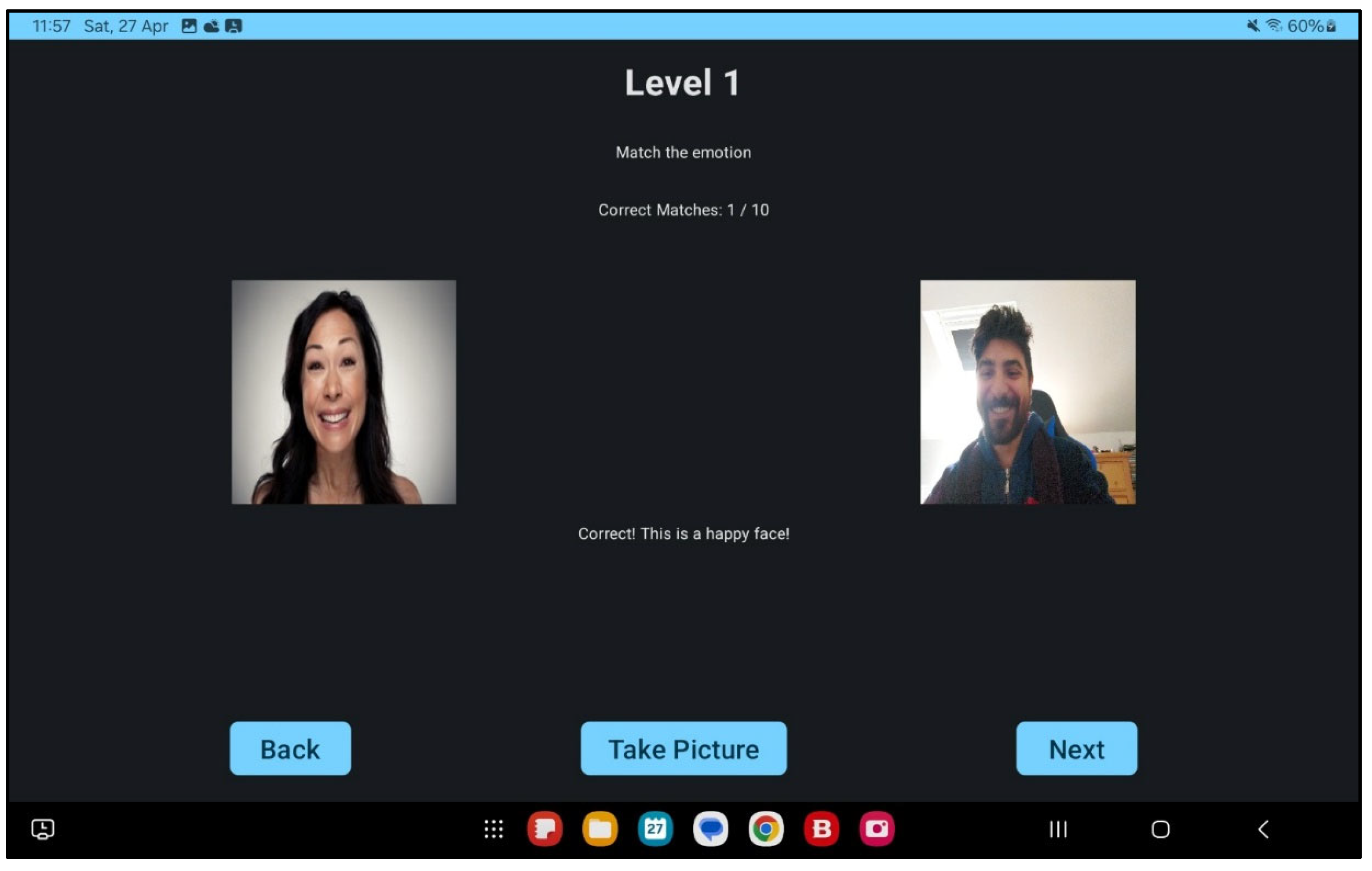

The second core feature is the emotion mimicking game. The game presents picture prompts expressing a specific facial expression. The goal of the user is to mimic the expression by taking a picture of themselves and progressing through the game. A response is displayed to give feedback to the user if the expressed emotion is correct or not. Also, if the expressed emotion is wrong, the user will be given a prompt with the emotion they expressed. The game has three levels, each of which focuses on specific training outcomes.

3.2. Implementation

The first level is mainly focused on developing the ability to recognise facial expressions. The pictures shown display high-intensity emotions, which are more easily recognised by ASD individuals [46]. Prompts are presented in random order, which is a crucial aspect, as it prevents the user from anticipating a repeated sequence of pictures. This improves the effectiveness of the training, as ASD individuals tend to adopt mechanical learning strategies. Furthermore, the pictures presented depict real people, in contrast with other applications reviewed in the literature (Figure 9).
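One simple way to draw random prompts is sketched below in Python. The function name `next_prompt` is hypothetical, and the rule of avoiding an immediate repeat is an added assumption for the example; the text only states that prompts are random.

```python
import random


def next_prompt(prompts, previous=None, rng=random):
    """Pick a random prompt, avoiding an immediate repeat when possible."""
    candidates = [p for p in prompts if p != previous]
    if not candidates:          # only one distinct prompt is available
        candidates = list(prompts)
    return rng.choice(candidates)
```

Passing a seeded `random.Random` instance as `rng` makes a session reproducible, which can be convenient when testing the game flow.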

Each level of the minigame focuses on a specific learning goal. The first level focuses only on facial expressions and emotions. The pictures are clear and set in a neutral environment. The background is usually white, which reduces distractions and users’ hyper-focussing on elements that are not part of the scope of the game. This problem has been illustrated in research using eye-tracking technologies [47].

The second level increases the difficulty by proposing pictures that include a background and other parts of the body. The aim is to help the user train their ability to recognise emotions through facial expressions and body language, an important medium of communication during social interactions.

The images are still focused on facial expressions; however, more complexity is added to the background, along with more subtle expressions and more complex emotions. This adds another layer of difficulty, which can be challenging for children with ASD. The aim of the second level is to present clear pictures with more distracting elements. The sample includes pictures depicting surprise and the complex emotion of disgust. These are created according to Ekman’s categorisation of emotions [48].

Lastly, the third level presents contextual situations where the user needs to infer the general emotion expressed by analysing the environmental elements. This is inspired by EmoTen [33], which presents a social situation as an animated scene with cartoon characters. This third level is improved by providing pictures with real people in several scenarios. The scenes are tailored to represent the range of emotions taken into consideration for this proof of concept. For example, to represent happiness, an image will display people cheering and laughing together, a scene in which the focus is the social situation rather than a single person’s expression. This is important to teach the user how to abstract social situations and think about the kinds of emotions expressed in a more social context, facilitating abstraction and reasoning, as also illustrated in the literature [49]. The pictures displayed are part of a freely accessible repository and serve the proof of concept. For further development, different pictures or even short videos should be taken and prepared for the purposes of the game.

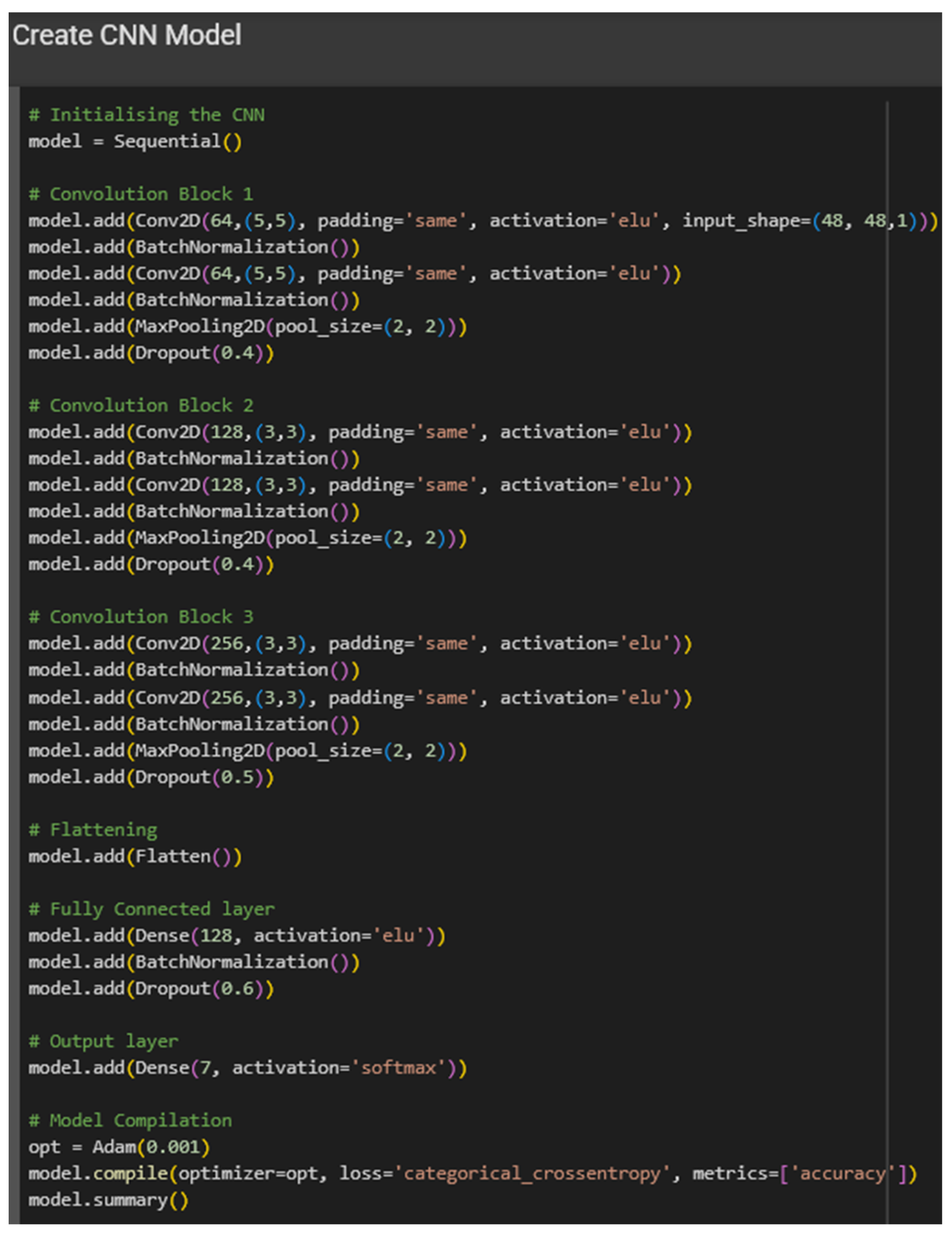

3.3. CNN Model

The application depends on network access, as it uses the Google Cloud Vision API. This limits its capabilities: it requires constant internet access, picture processing is slowed down, and it is bound to the Google AI model. An experimental model is therefore introduced to overcome these limitations and to support future experiments and developments.

This model is trained using the FER-2013 dataset, freely available on Kaggle. The Keras API is used to train a CNN for 96 epochs. A Python (v3.13) script, written in Google Colab, uses the provided dataset to train the model. A code snippet is shown in Figure 10.

Because of the data structure, the training is performed using a CNN as opposed to an NNM algorithm or Support Vector Machine (SVM).

Bogush et al. [50] illustrated how a CNN achieved one of the highest levels of accuracy, at 90.9%, when trained to recognise facial expressions. This exceeds other algorithms such as NNMs, which have achieved at most 82% in emotion recognition. Their systematic review shows the distribution of the various algorithms taken into consideration (Figure 11).

It is also shown how accuracy is influenced by the quality and quantity of the data collected. Therefore, the effectiveness of the model is directly impacted by such variables.

For the present implementation, the produced custom model is then converted into a TensorFlow Lite model. This format is compatible with ML Kit and CameraX, which suits the future scope of the project, such as integrating the model into the application and further developments to achieve the highest possible level of accuracy.

4. Results

The developed application builds on comparable applications present in the literature, while also aiming to set a standard for how such frameworks should be designed and built. According to the literature, few applications with the mentioned capabilities are available on the Google Play Store. Also, most of the applications reviewed do not use facial-recognition technology, which in this case is used through the Google Cloud Vision API and also through a custom AI model.

The framework and application are evaluated against the features present in similar applications reviewed in the literature. EmoPal enhances the features implemented in EmoTen by using randomised pictures of real people. This is to improve the learning outcome. Also, including different levels with different training outcomes helps users to learn from different contexts. The minigame concept is designed to be engaging, including a level progression and a victory message once 10 prompts are guessed correctly.

The decision to use real people instead of emojis or drawn pictures is to ensure that the user would better recognise such emotions in different people. Individuals express emotions differently, especially when considering facial features. The feature improves the effectiveness of the application during the game. However, the implementation is not addressed in the reviewed and evaluated work.

The diary feature is compared and evaluated with the one present in Emoface. This activity is designed for the user to have a free sandbox environment to experiment with emotions. The diary helps keep track of how the user feels at a specific moment and automates the process of recognising their expressions. The implementation of such a feature is intended to prove how this technology can be used to promote self-directed and independent learning by using computer vision and AI, and applying the framework’s core concept of using AI technology with social-emotional therapy techniques.

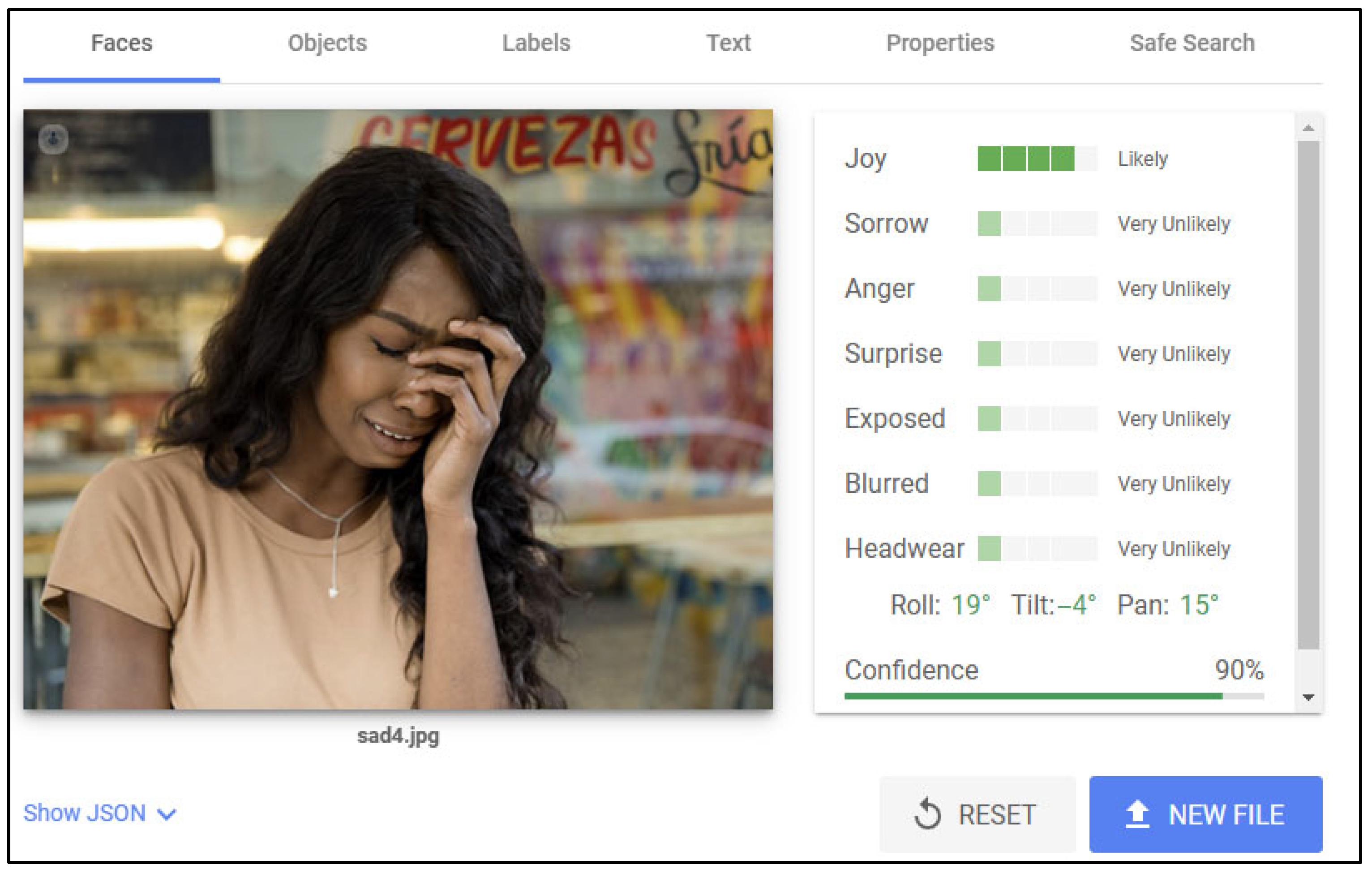

Both features use the same API to analyse the picture. The feasibility test was conducted with non-ASD control users to confirm the program was working to its specifications. During the test phase, it was noticed that the Google Cloud Vision API fails to recognise sadness in most cases, as shown in Figure 12.

Several factors could explain this, including light exposure, the quality of the camera, and the coordinates of the detected facial elements. Google advertises the Emotion Recognition feature with 80% accuracy, which appears to apply mainly to the other emotions. The emotions recognised are also limited to four.

Both the diary and the mimicking games rely on the Google Cloud Vision API. Although the feasibility test shows that the game works well, effectively digitalising a training activity from Cognitive Behavioural Therapy (CBT), it fails to recognise emotions accurately in some cases. This has an impact on the effectiveness of the application, as it needs a more accurate model. Also, the REST API used requires an internet connection. This limits the use of the application to situations where a network connection is available, which led to the early development of a model to be directly integrated into the application. The 80% accuracy advertised by Google is impressive, and it is expected to improve with further adaptation and training of models. The application developed should be considered a feasibility study, and its results are going to be used to improve further iterations and developments by using customised models with potentially higher accuracy. The use of the application is also encouraged with the guidance of an adult, who can help elaborate on complex emotions and give accurate feedback to the young user.

Future expansions should aim to test the application on students with ASD to analyse the effectiveness of the treatment and significant effects based on users’ age and ASD severity.

Custom CNN Model Results and Discussion

The custom model is trained with the purpose of mitigating the limitations of the implementation. Therefore, it is trained with the FER-2013 dataset containing a large number of facial expressions categorised by the expressed emotion. The current categories include seven emotions: happiness, disgust, fear, surprise, anger, neutral, and sadness.
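To make the seven-class output concrete, the sketch below maps a vector of class probabilities, such as the softmax output of a classifier, to an emotion name. It is a plain-Python illustration with a hypothetical helper `top_emotion`. The label order is an assumption (the index order of the original FER-2013 CSV release); a model trained from class folders would typically use alphabetical order instead, so the list must match the order used at training time.

```python
# Assumed label order (that of the original FER-2013 CSV release).
FER_LABELS = ["anger", "disgust", "fear", "happiness", "sadness", "surprise", "neutral"]


def top_emotion(probabilities, labels=FER_LABELS):
    """Return (label, probability) for the highest-scoring class."""
    if len(probabilities) != len(labels):
        raise ValueError("expected one probability per label")
    index = max(range(len(labels)), key=lambda i: probabilities[i])
    return labels[index], probabilities[index]
```

Returning the probability alongside the label lets the caller decide whether a low-confidence prediction should be shown to the user at all.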

Test results indicate that with only 96 epochs and one hour of training, the model reaches an overall accuracy of ~70%, as shown in Figure 13.

This model is also trained to recognise more complex emotions, such as fear and disgust. However, its accuracy in detecting disgust is expected to be lower because the dataset includes only 436 training images representing this emotion. A detailed breakdown of the dataset by emotion is presented in Figure 14.

For ethical reasons, the model is not implemented and tested on actual ASD subjects. The research did not have a clinical focus, which would have required control subjects and a large sample. The pictures of real people used to train the custom AI model are from a public dataset. Using real humans in the pictures increases the accuracy of the model in recognising human expressions using CNN techniques, as well as giving the user a way to recognise themselves in the depicted facial expressions.

The reported accuracy is computed by the training script on the test dataset provided during model training. To achieve higher accuracy, the dataset can be expanded with more pictures, and the number of epochs and the training time can be increased. Overall, the product improves on features already seen in similar applications by expanding them with a new approach that covers gaps in previous research. However, it still lacks high accuracy, which affects the efficiency of the developed framework.
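As an illustration of how an overall accuracy figure relates to per-emotion performance, the following minimal sketch computes both from paired lists of true and predicted labels. The function name and the toy labels are hypothetical and are not taken from the training script used in this work.

```python
from collections import Counter


def accuracy_report(true_labels, predicted_labels):
    """Return (overall_accuracy, per_class_accuracy) for paired label lists."""
    if len(true_labels) != len(predicted_labels):
        raise ValueError("label lists must have the same length")
    if not true_labels:
        raise ValueError("at least one sample is required")

    # Number of test samples per true class.
    totals = Counter(true_labels)
    # Number of correctly classified samples per true class.
    correct = Counter(t for t, p in zip(true_labels, predicted_labels) if t == p)

    overall = sum(correct.values()) / len(true_labels)
    per_class = {label: correct[label] / n for label, n in totals.items()}
    return overall, per_class


if __name__ == "__main__":
    # Toy data for illustration only; not results from the paper.
    y_true = ["happiness", "happiness", "disgust", "fear"]
    y_pred = ["happiness", "happiness", "fear", "fear"]
    overall, per_class = accuracy_report(y_true, y_pred)
    print(f"overall accuracy: {overall:.2f}")
    for label, value in per_class.items():
        print(f"{label:<10} {value:.2f}")
```

A report of this kind makes it visible when a good overall accuracy hides weak performance on an under-represented emotion.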

5. Discussion

The results of this study are promising, as an increasing number of technologies today are leveraging AI to support the assessment and intervention processes for individuals with ASD [51]. Nevertheless, there remains a strong need for a structured and flexible framework. EmoPal serves as a valuable prototype that demonstrates the potential of applying a novel AI-based framework. Such a tool can be effectively utilised by psychologists and educators to collaboratively support children with ASD and their families. This is particularly significant given the current scarcity of applications on the market that utilise AI-driven emotion recognition.

Figure 15 presents an overview of available applications that apply AI-driven technologies in mobile environments.

The proposed framework aims to ease future work and implementations by providing a new methodology to refer to, one that can also be dynamically expanded in the future. Its core functionalities are designed for use in a domestic environment and on commonly used devices such as smartphones and tablets. This increases the accessibility of the application and allows the methodology to be applied on a larger scale. Furthermore, an accessible UI makes the requested features easy to reach. The minigame has an easy-to-understand implementation that takes into account the challenges children with ASD can face. This is an example of how these activities can be digitalised using AI technology and made highly interactive and easier to use.

The game represents an enhanced version of those described in the literature, offering greater potential for further development through the integration of new elements and improved human–computer interaction. The concepts behind the minigame levels serve as the base for developing more functionalities that make EmoPal more engaging and reinforce its use. An achievement system that rewards streaks and point gathering can be implemented in future versions to further strengthen the reinforcement principle of the framework. The diary implementation is a great way for users to keep track of their emotions in a sandbox environment. It helps users recognise and name the emotions they express themselves. The association between facial expressions and emotions is made clear to facilitate their identification, which helps children with ASD give a name to an emotional state and keep track of it. Educators or legal guardians can use this functionality to discuss emotions and hold training sessions to elaborate further on them.

Serious games are a very effective way to teach children with ASD, especially when they use new technologies. The current state of the application allows users to play only one type of minigame, which can be easily expanded with new features in the future. However, the implementation, although still a prototype, can prove effective in helping children develop social-emotional skills through play, as illustrated in the reviewed literature. As also stated in the literature review, serious games can help such individuals recognise and express emotions effectively by experimenting in a playful and engaging environment.

The Google Cloud Vision API can also be replaced with the offline model produced. However, more testing and research are needed to reach a satisfactory level of accuracy. Such a replacement would greatly improve the effectiveness of EmoPal, as it would remove the need for an active internet connection. By working offline, the model would also allow the provided image to be analysed for free and in less time. The current average time recorded for an image to be processed is ~300 ms, which is expected to decrease if the offline model is used.
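A comparison between the online API and the offline model could be made with a simple timing harness such as the minimal sketch below. The `classify` callable stands for either the API call or the offline model and is an assumption, as are the function names; the stub classifier only simulates work and does not reproduce the ~300 ms figure reported above.

```python
import time
from statistics import mean


def mean_latency_ms(classify, images, repeats=3):
    """Average wall-clock time per call to `classify`, in milliseconds."""
    if not images:
        raise ValueError("at least one image is required")
    timings = []
    for image in images:
        for _ in range(repeats):
            start = time.perf_counter()
            classify(image)
            timings.append((time.perf_counter() - start) * 1000.0)
    return mean(timings)


def fake_offline_classifier(image):
    """Hypothetical stand-in for a real classifier; sleeps to simulate work."""
    time.sleep(0.01)
    return "happiness"


if __name__ == "__main__":
    sample_images = [b"image-1", b"image-2"]  # placeholder payloads
    latency = mean_latency_ms(fake_offline_classifier, sample_images)
    print(f"mean latency: {latency:.1f} ms")
```

Running the same harness against both classifiers on the same set of images would give directly comparable averages.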

The application’s architecture and its underlying framework are designed to be expanded and improved over time. The libraries used support TensorFlow Lite models, which means that the application can use custom-trained AI models to further improve its accuracy. Further research can expand on the current work by producing a more accurate model to be used offline, as well as by testing the application in collaboration with a professional developmental psychologist and assessing children with ASD, as seen in previous work.

6. Conclusions

In this paper, a new framework is designed and thoroughly studied to support the development of social-emotional skills in children with ASD. EmoPal and its framework allow for the development of an engaging application specifically tailored for children with ASD, who can play it to improve their social-emotional skills as well as their emotion awareness. The adopted methodologies help stimulate creativity and abstraction, teaching the user how to recognise patterns seen in facial expressions and social contexts by leveraging AI capabilities.

New emotions can be implemented in further versions of the application, along with more complex emotions, which might have a new game designed specifically for them. The current state of the application serves as a good prototype for further developments in a complex and interdisciplinary subject. Further studies could extend the research with human tests involving students with ASD, which was not possible during the present iterations.

The limitations highlighted from testing the AI model and the API are valuable insights for future work and developments. Future work will use a more accurate custom AI model and a more refined interface. Further research is needed to analyse how the produced prototype would impact children with ASD, taking into account variables such as the severity of ASD and the age of subjects.

Developmental psychology can greatly benefit from computer science solutions in such a thriving and ever-changing world. The findings aim to offer a novel approach to both subjects, showing how the quality of life of children with ASD can be improved by using emerging technologies such as computer vision and AI to their fullest. The proposed framework also aims to set standards that allow different disciplines to collaborate on innovative solutions through an interdisciplinary approach.