A Lightweight Intrusion Detection System for IoT and UAV Using Deep Neural Networks with Knowledge Distillation

Abstract

1. Introduction

2. Related Work

2.1. Knowledge Distillation-Based IDS

2.2. Federated Learning with Knowledge Distillation

2.3. Hybrid and GAN-Based IDS with KD Approaches

2.4. Cyber-Physical and Feature Fusion Models

2.5. Self-Knowledge Distillation and Optimization-Based Distillation

3. Methodology

3.1. Dataset Introduction

3.1.1. NSL-KDD

3.1.2. UNSW-NB15

3.1.3. CIC-IDS2017

3.1.4. IoTID20

3.1.5. UAV IDS

3.2. Data Preprocessing

3.3. Algorithms

3.3.1. Deep Neural Networks (DNN)

- Input layer: accepts the input feature vector $x = (x_1, x_2, \ldots, x_n)$, where $n$ is the number of features.

- Hidden layers: perform transformations through neurons. Each neuron applies a linear transformation followed by a non-linear activation function (e.g., ReLU, sigmoid).

- Output layer: produces the predictions $\hat{y}$.

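Each hidden layer $l$ computes

$$h^{(l)} = \sigma\left(W^{(l)} h^{(l-1)} + b^{(l)}\right), \qquad h^{(0)} = x,$$

where:

- $W^{(l)}$ is the weight matrix of layer $l$,

- $b^{(l)}$ is the bias vector of layer $l$, and

- $\sigma$ is a non-linear activation function (ReLU).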

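The network is trained by minimizing the binary cross-entropy loss

$$\mathcal{L} = -\frac{1}{m}\sum_{i=1}^{m}\left[y_i \log \hat{y}_i + (1 - y_i)\log\left(1 - \hat{y}_i\right)\right],$$

where:

- $m$ is the number of samples,

- $y_i$ is the true label (0 for normal, 1 for anomaly), and

- $\hat{y}_i$ is the predicted probability of an anomaly.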
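The layer computation and loss described in this subsection can be made concrete with a minimal pure-Python sketch. It covers a fully connected forward pass with ReLU hidden layers, a single sigmoid output unit giving the anomaly probability, and the mean binary cross-entropy. It is an illustration under stated assumptions, not the authors' implementation. The function names (`dense`, `forward`, `binary_cross_entropy`), the list-of-rows weight layout, the sigmoid output unit, and the clipping constant `eps` are all choices made here.

```python
import math


def relu(v):
    """Element-wise ReLU activation."""
    return [max(0.0, a) for a in v]


def sigmoid(z):
    """Numerically stable logistic sigmoid for a scalar z."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def dense(W, b, x):
    """Affine map W x + b, with W stored as a list of rows (one row per neuron)."""
    return [sum(w * xj for w, xj in zip(row, x)) + bi for row, bi in zip(W, b)]


def forward(layers, x):
    """Apply h = ReLU(W h + b) for each hidden layer, then a sigmoid output unit.

    `layers` is a list of (W, b) pairs; the last pair must have exactly one row,
    so the network returns a single anomaly probability (hypothetical layout).
    """
    h = x
    for W, b in layers[:-1]:
        h = relu(dense(W, b, h))
    W_out, b_out = layers[-1]
    return sigmoid(dense(W_out, b_out, h)[0])


def binary_cross_entropy(y_true, y_prob, eps=1e-12):
    """Mean binary cross-entropy over m samples; probabilities are clipped to avoid log(0)."""
    m = len(y_true)
    total = 0.0
    for y, p in zip(y_true, y_prob):
        p = min(max(p, eps), 1.0 - eps)
        total += y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return -total / m


if __name__ == "__main__":
    # Toy network: 2 inputs, one hidden layer with 2 ReLU units, one sigmoid output.
    layers = [
        ([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0]),
        ([[1.0, 1.0]], [-1.0]),
    ]
    p = forward(layers, [2.0, 1.0])
    print(round(p, 4), round(binary_cross_entropy([1, 0], [p, 0.5]), 4))
```

The sigmoid is written in a branch-based form so that very negative logits do not overflow `math.exp`. A production model would use a framework such as Keras or PyTorch rather than Python lists.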

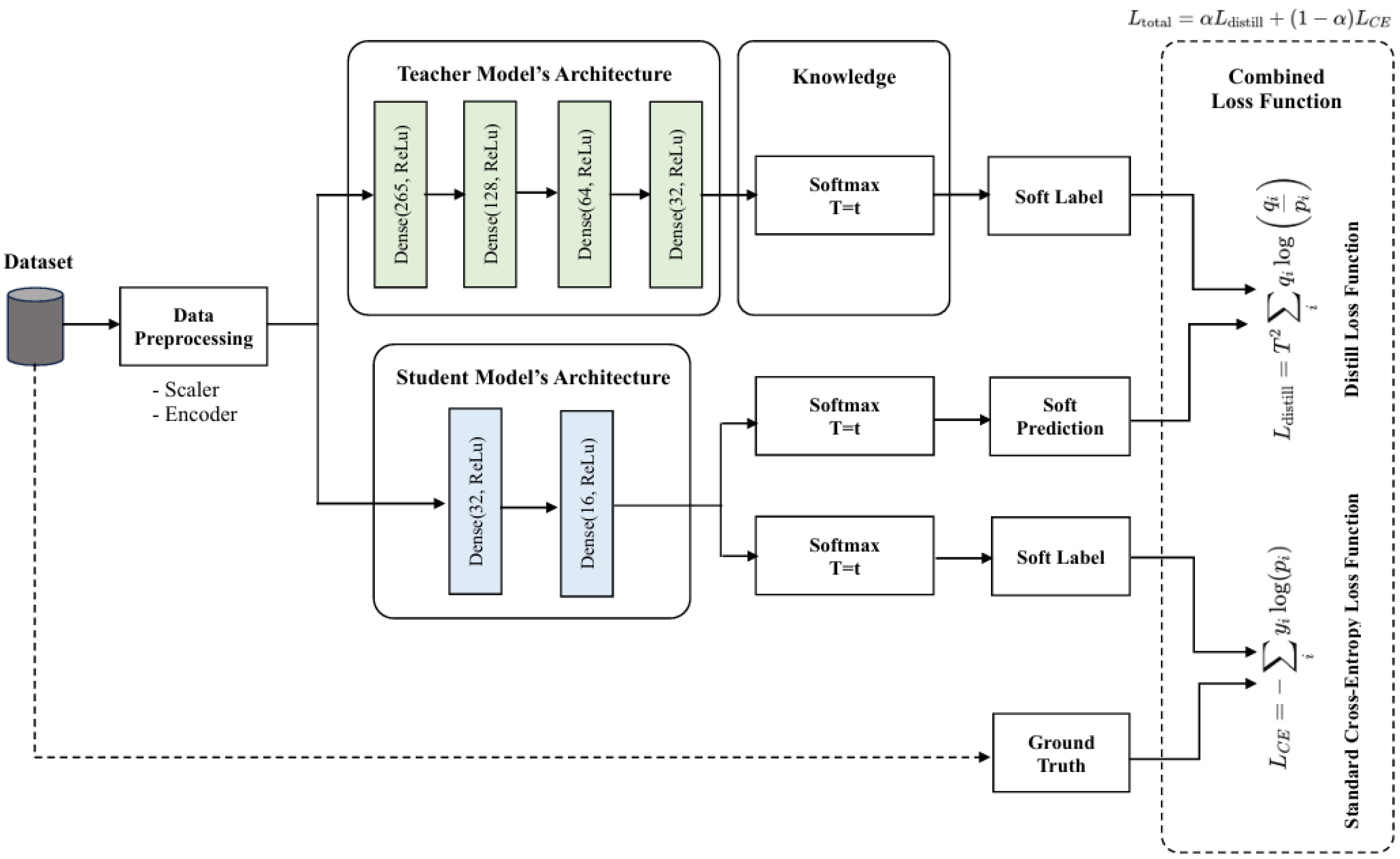

3.3.2. Knowledge Distillation (KD)

- $T$: the temperature parameter that controls the softness of the probability distribution.

- $p_i^{t}$: the soft probability output by the teacher model for class $i$.

- $p_i^{s}$: the soft probability output by the student model for class $i$.

- $\alpha$: a weighting factor (hyperparameter) that balances the distillation loss and the cross-entropy loss.

- is defined as follows:
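The distillation objective built from the temperature $T$, the teacher and student soft probabilities, and the weight $\alpha$ can be sketched as follows. The sketch assumes the standard formulation of Hinton et al. [10]: temperature-scaled softmax outputs for teacher and student, a KL-divergence term multiplied by $T^2$, and a hard-label cross-entropy term for the student. How $\alpha$ is split between the two terms is an assumption here ($\alpha$ on the cross-entropy term and $1 - \alpha$ on the distillation term, one common convention). The defaults $\alpha = 0.4$ and $T = 3$ are the values listed for this work in the comparison table. All function names are illustrative.

```python
import math


def softmax_with_temperature(logits, T=1.0):
    """Softmax of logits / T; a larger T gives a softer distribution."""
    scaled = [z / T for z in logits]
    shift = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - shift) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p, q, eps=1e-12):
    """KL(p || q) for two discrete distributions over the same classes."""
    return sum(pi * math.log(pi / max(qi, eps)) for pi, qi in zip(p, q) if pi > 0)


def hard_label_cross_entropy(student_logits, label):
    """Ordinary cross-entropy of the student (T = 1) against the true class index."""
    probs = softmax_with_temperature(student_logits, 1.0)
    return -math.log(max(probs[label], 1e-12))


def distillation_loss(student_logits, teacher_logits, label, alpha=0.4, T=3.0):
    """alpha * CE(y, student) + (1 - alpha) * T^2 * KL(p_teacher || p_student) at temperature T.

    The assignment of alpha to the hard-label term is an assumed convention.
    """
    p_teacher = softmax_with_temperature(teacher_logits, T)
    p_student = softmax_with_temperature(student_logits, T)
    soft_term = (T ** 2) * kl_divergence(p_teacher, p_student)
    hard_term = hard_label_cross_entropy(student_logits, label)
    return alpha * hard_term + (1.0 - alpha) * soft_term
```

When the student reproduces the teacher's logits exactly, the KL term vanishes and only the weighted hard-label loss remains. Hinton et al. motivate the $T^2$ factor by noting that soft-target gradients shrink as $1/T^2$. Rescaling by $T^2$ keeps the two terms on a comparable scale when the temperature is changed.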

4. Results

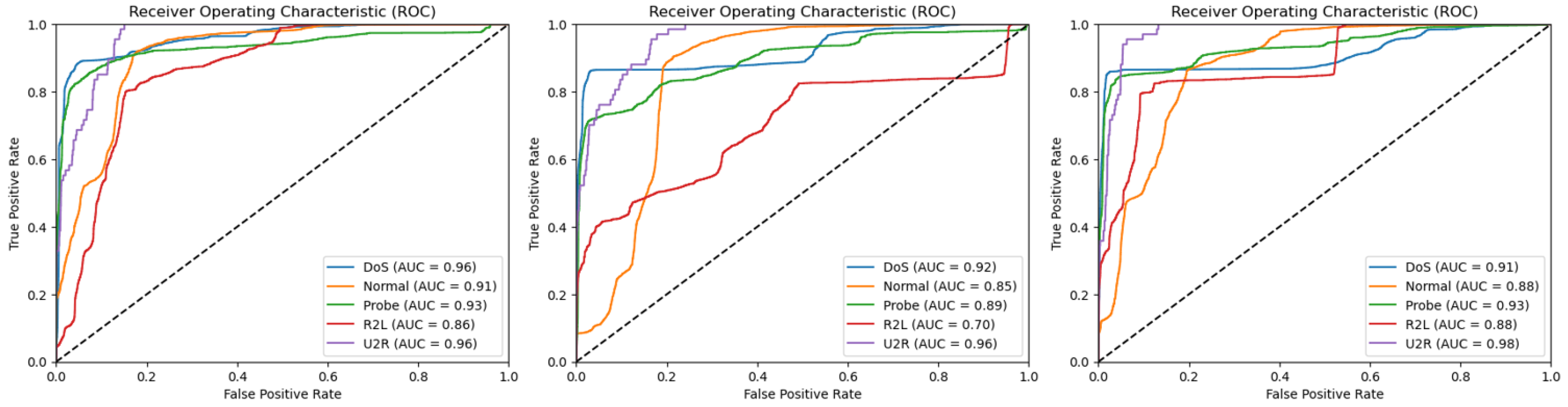

4.1. NSL-KDD

4.2. UNSW-NB15

4.3. CIC-IDS2017

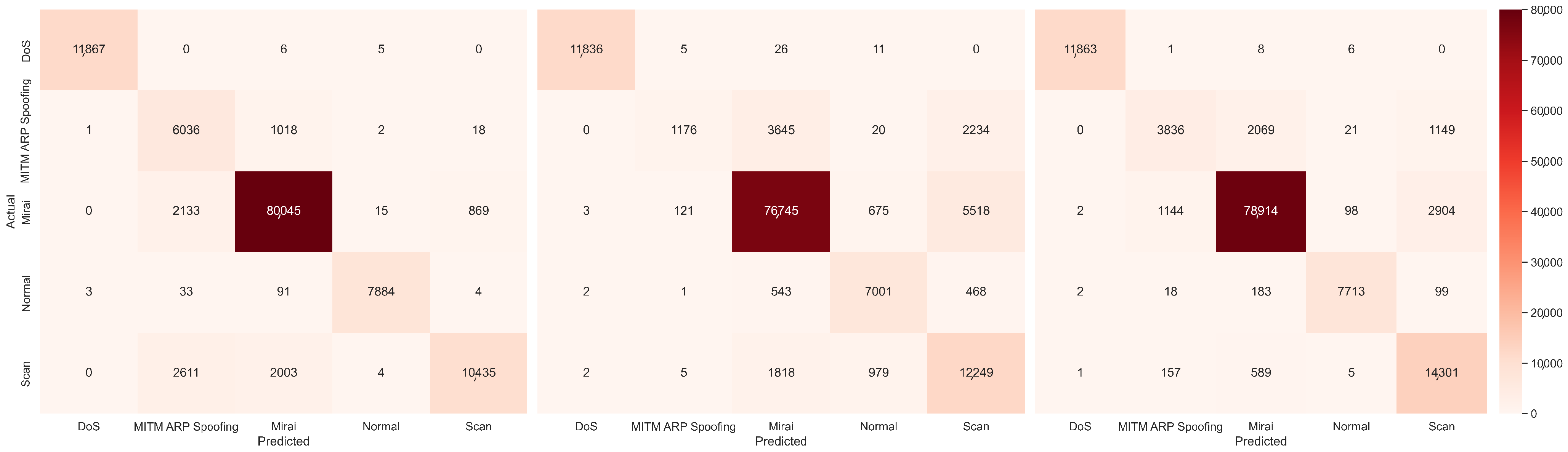

4.4. IoTID20

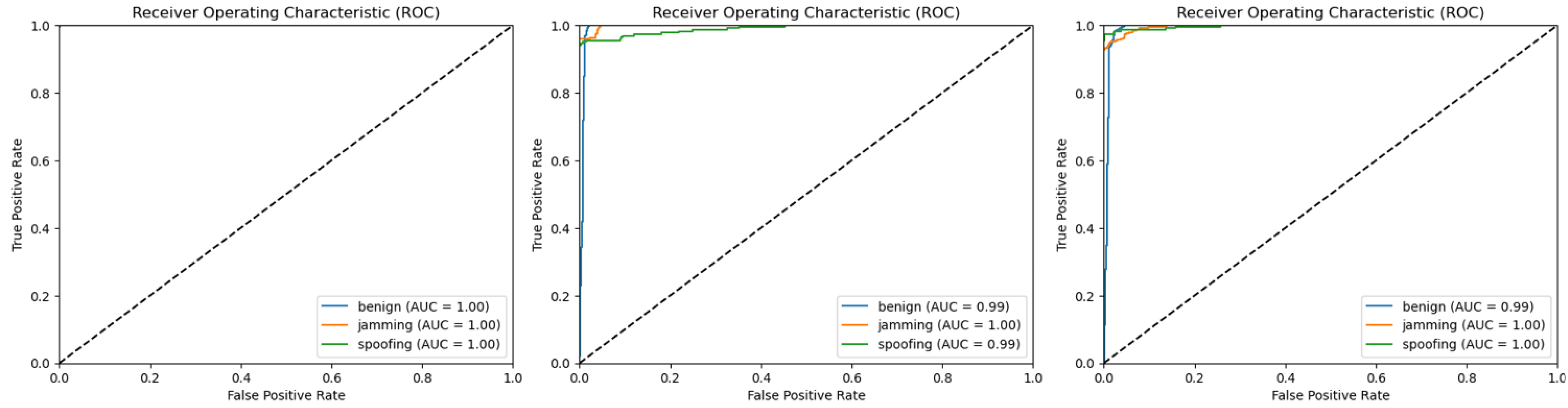

4.5. UAV IDS

4.6. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AB-TRAP | Attack Bonafide Train RealizAtion and Performance |
| AUC | Area Under the Curve |
| CNN | Convolutional Neural Network |
| CPS | Cyber Physical System |
| DNN | Deep Neural Network |
| FCN | Fully-Connected Network |
| FFCNN | Feedforward Convolutional Neural Network |
| GAN | Generative Adversarial Network |
| GPS | Global Positioning System |
| IDS | Intrusion Detection System |
| IMU | Inertial Measurement Unit |
| IoT | Internet of Things |
| KD | Knowledge Distillation |
| KDDT | Knowledge Distillation-Empowered Digital Twin for Anomaly Detection |
| LNet-SKD | Lightweight Intrusion Detection Approach based on Self-Knowledge Distillation |
| NFQUEUE | Netfilter QUEUE |
| RNN | Recurrent Neural Network |
| ROC | Receiver Operating Characteristic |
| RSSI | Received Signal Strength Indicator |
| SSFL | Semi-Supervised Federated Learning |
| TBCLNN | Tied Block Convolution Lightweight Deep Neural Network |
| UAV | Unmanned Aerial Vehicle |

References

1. Khraisat, A.; Gondal, I.; Vamplew, P.; Kamruzzaman, J. Survey of intrusion detection systems: Techniques, datasets and challenges. Cybersecurity 2019, 2, 1–22.

2. Scarfone, K.; Mell, P. Guide to intrusion detection and prevention systems (IDPS). NIST Spec. Publ. 2007, 800, 94.

3. Hussain, A.; Sharif, H.; Rehman, F.; Kirn, H.; Sadiq, A.; Khan, M.S.; Riaz, A.; Ali, C.N.; Chandio, A.H. A systematic review of intrusion detection systems in internet of things using ML and DL. In Proceedings of the 2023 4th International Conference on Computing, Mathematics and Engineering Technologies (iCoMET), Sukkur, Pakistan, 17–18 March 2023; pp. 1–5.

4. Sommer, R.; Paxson, V. Outside the closed world: On using machine learning for network intrusion detection. In Proceedings of the 2010 IEEE Symposium on Security and Privacy, Oakland, CA, USA, 16–19 May 2010; pp. 305–316.

5. Fernandes, G.; Rodrigues, J.J.; Carvalho, L.F.; Al-Muhtadi, J.F.; Proença, M.L. A comprehensive survey on network anomaly detection. Telecommun. Syst. 2019, 70, 447–489.

6. LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

7. Schmidhuber, J. Deep learning in neural networks: An overview. Neural Netw. 2015, 61, 85–117.

8. Shone, N.; Ngoc, T.N.; Phai, V.D.; Shi, Q. A deep learning approach to network intrusion detection. IEEE Trans. Emerg. Top. Comput. Intell. 2018, 2, 41–50.

9. Sharafaldin, I.; Lashkari, A.H.; Ghorbani, A.A. Toward generating a new intrusion detection dataset and intrusion traffic characterization. ICISSP 2018, 1, 108–116.

10. Hinton, G.; Vinyals, O.; Dean, J. Distilling the knowledge in a neural network. arXiv 2015, arXiv:1503.02531.

11. Gou, J.; Yu, B.; Maybank, S.J.; Tao, D. Knowledge distillation: A survey. Int. J. Comput. Vis. 2021, 129, 1789–1819.

12. Cheng, Y.; Wang, D.; Zhou, P.; Zhang, T. Model compression and acceleration for deep neural networks: The principles, progress, and challenges. IEEE Signal Process. Mag. 2018, 35, 126–136.

13. Teerapittayanon, S.; McDanel, B.; Kung, H.T. Distributed deep neural networks over the cloud, the edge and end devices. In Proceedings of the 2017 IEEE 37th International Conference on Distributed Computing Systems (ICDCS), Atlanta, GA, USA, 5–8 June 2017; pp. 328–339.

14. Wang, Z.; Li, Z.; He, D.; Chan, S. A lightweight approach for network intrusion detection in industrial cyber-physical systems based on knowledge distillation and deep metric learning. Expert Syst. Appl. 2022, 206, 117671.

15. Li, Z.; Yao, W. A two stage lightweight approach for intrusion detection in Internet of Things. Expert Syst. Appl. 2024, 257, 124965.

16. Wang, L.H.; Dai, Q.; Du, T.; Chen, L.F. Lightweight intrusion detection model based on CNN and knowledge distillation. Appl. Soft Comput. 2024, 165, 112118.

17. Zhao, R.; Chen, Y.; Wang, Y.; Shi, Y.; Xue, Z. An efficient and lightweight approach for intrusion detection based on knowledge distillation. In Proceedings of the ICC 2021 – IEEE International Conference on Communications, Montreal, QC, Canada, 14–23 June 2021; pp. 1–6.

18. Chen, J.; Guo, Q.; Fu, Z.; Shang, Q.; Ma, H.; Wang, N. Semi-supervised Campus Network Intrusion Detection Based on Knowledge Distillation. In Proceedings of the 2023 International Joint Conference on Neural Networks (IJCNN), Gold Coast, Australia, 18–23 June 2023; pp. 1–7.

19. Zhu, S.; Xu, X.; Zhao, J.; Xiao, F. LKD-STNN: A lightweight malicious traffic detection method for internet of things based on knowledge distillation. IEEE Internet Things J. 2023, 11, 6438–6453.

20. Ren, Y.; Restivo, R.D.; Tan, W.; Wang, J.; Liu, Y.; Jiang, B.; Wang, H.; Song, H. Knowledge distillation-based GPS spoofing detection for small UAV. Future Internet 2023, 15, 389.

21. Abbasi, M.; Shahraki, A.; Prieto, J.; Arrieta, A.G.; Corchado, J.M. Unleashing the potential of knowledge distillation for IoT traffic classification. IEEE Trans. Mach. Learn. Commun. Netw. 2024, 2, 221–239.

22. Zhao, R.; Yang, L.; Wang, Y.; Xue, Z.; Gui, G.; Ohtsuki, T. A semi-supervised federated learning scheme via knowledge distillation for intrusion detection. In Proceedings of the ICC 2022 – IEEE International Conference on Communications, Seoul, Republic of Korea, 16–20 May 2022; pp. 2688–2693.

23. Shen, J.; Yang, W.; Chu, Z.; Fan, J.; Niyato, D.; Lam, K.Y. Effective intrusion detection in heterogeneous Internet-of-Things networks via ensemble knowledge distillation-based federated learning. In Proceedings of the ICC 2024 – IEEE International Conference on Communications, Denver, CO, USA, 9–13 June 2024; pp. 2034–2039.

24. Quyen, N.H.; Duy, P.T.; Nguyen, N.T.; Khoa, N.H.; Pham, V.H. FedKD-IDS: A robust intrusion detection system using knowledge distillation-based semi-supervised federated learning and anti-poisoning attack mechanism. Inf. Fusion 2025, 117, 102807.

25. Ravipati, R.D.; Abualkibash, M. Intrusion detection system classification using different machine learning algorithms on KDD-99 and NSL-KDD datasets-a review paper. Int. J. Comput. Sci. Inf. Technol. (IJCSIT) 2019, 11, 65–80.

26. Ali, T.; Eleyan, A.; Bejaoui, T.; Al-Khalidi, M. Lightweight Intrusion Detection System with GAN-Based Knowledge Distillation. In Proceedings of the 2024 International Conference on Smart Applications, Communications and Networking (SmartNets), Harrisonburg, VA, USA, 28–30 May 2024; pp. 1–7.

27. Bertoli, G.D.C.; Júnior, L.A.P.; Saotome, O.; Dos Santos, A.L.; Verri, F.A.N.; Marcondes, C.A.C.; Barbieri, S.; Rodrigues, M.S.; De Oliveira, J.M.P. An end-to-end framework for machine learning-based network intrusion detection system. IEEE Access 2021, 9, 106790–106805.

28. Hassler, S.C.; Mughal, U.A.; Ismail, M. Cyber-physical intrusion detection system for unmanned aerial vehicles. IEEE Trans. Intell. Transp. Syst. 2023, 25, 6106–6117.

29. Hadi, H.J.; Cao, Y.; Li, S.; Hu, Y.; Wang, J.; Wang, S. Real-time collaborative intrusion detection system in UAV networks using deep learning. IEEE Internet Things J. 2024, 11, 33371–33391.

30. Yang, S.; Zheng, X.; Xu, Z.; Wang, X. A lightweight approach for network intrusion detection based on self-knowledge distillation. In Proceedings of the ICC 2023 – IEEE International Conference on Communications, Rome, Italy, 28 May–1 June 2023; pp. 3000–3005.

31. Wang, Z.; Zhou, R.; Yang, S.; He, D.; Chan, S. A Novel Lightweight IoT Intrusion Detection Model Based on Self-knowledge Distillation. IEEE Internet Things J. 2025, 12, 16912–16930.

32. Tavallaee, M.; Bagheri, E.; Lu, W.; Ghorbani, A.A. A detailed analysis of the KDD CUP 99 data set. In Proceedings of the 2009 IEEE Symposium on Computational Intelligence for Security and Defense Applications, Ottawa, ON, Canada, 8–10 July 2009; pp. 1–6.

33. Wisanwanichthan, T.; Thammawichai, M. A double-layered hybrid approach for network intrusion detection system using combined naive Bayes and SVM. IEEE Access 2021, 9, 138432–138450.

34. Moustafa, N.; Slay, J. UNSW-NB15: A comprehensive data set for network intrusion detection systems (UNSW-NB15 network data set). In Proceedings of the 2015 Military Communications and Information Systems Conference (MilCIS), Canberra, ACT, Australia, 10–12 November 2015; pp. 1–6.

35. Kumar, A.; Guleria, K.; Chauhan, R.; Upadhyay, D. Advancing Intrusion Detection with Machine Learning: Insights from the UNSW-NB15 Dataset. In Proceedings of the 2024 IEEE International Conference on Information Technology, Electronics and Intelligent Communication Systems (ICITEICS), Bangalore, India, 28–29 June 2024; pp. 1–5.

36. Arun, C.B.; Anusha, M.; Rao, T.; Rohini, B.; Gargi, N.; Kodipalli, A. Enhancing Network Intrusion Detection using Artificial Neural Networks: An Analysis of the UNSW-NB15 Dataset. In Proceedings of the 2024 International Conference on Integrated Intelligence and Communication Systems (ICIICS), Kalaburagi, India, 22–23 November 2024; pp. 1–7.

37. Ullah, I.; Mahmoud, Q.H. A scheme for generating a dataset for anomalous activity detection in IoT networks. In Proceedings of the Canadian Conference on Artificial Intelligence, Kingston, ON, Canada, 28–31 May 2020; pp. 508–520.

38. Elrawy, M.F.; Awad, A.I.; Hamed, H.F. Intrusion detection systems for IoT-based smart environments: A survey. J. Cloud Comput. 2018, 7, 1–20.

39. Bebortta, S.; Senapati, D. Empirical characterization of network traffic for reliable communication in IoT devices. In Security in Cyber-Physical Systems; Springer: Berlin/Heidelberg, Germany, 2021; pp. 67–90.

40. Whelan, J.; Sangarapillai, T.; Minawi, O.; Almehmadi, A.; El-Khatib, K. UAV attack dataset. IEEE Dataport 2020, 167, 1561–1573.

41. Choudhary, G.; Sharma, V.; You, I.; Yim, K.; Chen, R.; Cho, J.H. Intrusion detection systems for networked unmanned aerial vehicles: A survey. In Proceedings of the 2018 14th International Wireless Communications & Mobile Computing Conference (IWCMC), Limassol, Cyprus, 25–29 June 2018; pp. 560–565.

42. Krishna, C.L.; Murphy, R.R. A review on cybersecurity vulnerabilities for unmanned aerial vehicles. In Proceedings of the 2017 IEEE International Symposium on Safety, Security and Rescue Robotics (SSRR), Shanghai, China, 11–13 October 2017; pp. 194–199.

43. Attack, W.; Attack, I.; Attack, B.F. Ensemble of feature augmented convolutional neural network and deep autoencoder for efficient detection of network attacks. Sci. Rep. 2025, 15, 4267.

44. Fu, Y.; Du, Y.; Cao, Z.; Li, Q.; Xiang, W. A deep learning model for network intrusion detection with imbalanced data. Electronics 2022, 11, 898.

45. Goodfellow, I.; Bengio, Y.; Courville, A. Deep Learning; MIT Press: Cambridge, MA, USA, 2016; Volume 1.

46. Drewek-Ossowicka, A.; Pietrołaj, M.; Rumiński, J. A survey of neural networks usage for intrusion detection systems. J. Ambient. Intell. Humaniz. Comput. 2021, 12, 497–514.

47. Khalaf, L.I.; Alhamadani, B.; Ismael, O.A.; Radhi, A.A.; Ahmed, S.R.; Algburi, S. Deep learning-based anomaly detection in network traffic for cyber threat identification. In Proceedings of the Cognitive Models and Artificial Intelligence Conference, Prague, Czech Republic, 13–14 June 2024; pp. 303–309.

48. Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

49. Jeong, H.J.; Lee, H.J.; Kim, G.N.; Choi, S.H. Self-MCKD: Enhancing the Effectiveness and Efficiency of Knowledge Transfer in Malware Classification. Electronics 2025, 14, 1077.

50. Wu, Y.; Zang, Z.; Zou, X.; Luo, W.; Bai, N.; Xiang, Y.; Li, W.; Dong, W. Graph attention and Kolmogorov–Arnold network based smart grids intrusion detection. Sci. Rep. 2025, 15, 8648.

51. Goldblum, M.; Fowl, L.; Feizi, S.; Goldstein, T. Adversarially robust distillation. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 3996–4003.

52. Sheatsley, R.; Papernot, N.; Weisman, M.J.; Verma, G.; McDaniel, P. Adversarial examples for network intrusion detection systems. J. Comput. Secur. 2022, 30, 727–752.

| Study | Datasets Used | Methods | Key Contributions |
|---|---|---|---|
| Wang et al. (2022) [14] | NSL-KDD, CIC-IDS2017 | Triplet-CNN teacher to small CNN student via KD | Reduced model size by 86% with only 0.4% accuracy loss; outperformed SOTA on both benchmarks. |
| Chen et al. (2023) [18] | Campus network traffic | Semi-supervised KD with labeled and unlabeled data | Achieved a 98.49% parameter reduction and a 98.52% decrease in FLOPs for student models, with only a 1–2% drop in accuracy. |
| Li & Yao (2024) [15] | KDD CUP99, NSL-KDD, UNSW-NB15, BoT-IoT, CIC-IDS2017 | CL-SKD two-stage detection: contrastive self-supervision, self-KD, and depth-wise separable convolution | Achieved up to 99.95% binary and 99.93% multi-class accuracy on KDD CUP99, with strong generalization across multiple IDS datasets. |
| Ali et al. (2024) [26] | NSL-KDD, CIC-IDS2017, IoT-23 | Generative adversarial networks with KD | Demonstrated >95% accuracy on NSL-KDD and CIC-IDS2017 and 89% on IoT-23, although performance drops with adversarial training. |
| Wang et al. (2025) [31] | CIC-IDS2017, BoT-IoT, TON-IoT | Self-KD (TBCLNN) with binary Harris hawk optimization for feature selection and tied block convolution | Obtained 99% multi-class accuracy across all three benchmarks while remaining computationally efficient. |
| This Work | NSL-KDD, UNSW-NB15, CIC-IDS2017, IoTID20, UAV IDS | Four-layer FCN teacher to two-layer FCN student via KD (α = 0.4, T = 3) | First to apply vanilla KD uniformly across five diverse IDS domains, achieving >90% parameter reduction and 7–11% faster inference than the teacher. The distilled student improves accuracy over the same student trained without KD and stays within 1.3 percentage points of the teacher's accuracy. |

| Class | Description | Training Samples | Testing Samples |
|---|---|---|---|
| Denial of Service (DoS) | Attacks that make the service unavailable by flooding connections. | 45,927 | 7460 |
| Normal | Normal traffic. | 67,343 | 9711 |
| Probe | Scans or probes for vulnerabilities or important data (e.g., port scanning). | 11,656 | 2421 |
| Remote to Local (R2L) | An outsider gains unauthorized local access. | 995 | 2885 |
| User to Root (U2R) | A local user account is exploited to gain root or administrative privileges. | 52 | 67 |

| Class | Description | Training Samples | Testing Samples |
|---|---|---|---|
| Analysis | Evaluating system behavior or patterns to identify vulnerabilities or threats. | 2000 | 677 |
| Backdoor | A hidden entry point used to access a system without authorization. | 1746 | 583 |
| DoS | Flooding a system or resource to deny service to legitimate users. | 12,264 | 4089 |
| Exploits | Attacks that take advantage of vulnerabilities in software or systems. | 33,393 | 11,132 |
| Fuzzers | Tools or methods that send random data to applications to uncover weaknesses. | 18,184 | 6062 |
| Generic | Attacks that are not specific to a particular system or application. | 40,000 | 18,871 |
| Normal | Normal traffic. | 56,000 | 37,000 |
| Reconnaissance | Gathering information about a target to prepare for an attack. | 10,491 | 3496 |
| Shellcode | Malicious code used to control or exploit a compromised system. | 1133 | 378 |
| Worms | Self-replicating malware that spreads without requiring user interaction. | 130 | 44 |

| Class | Description | Training Samples | Testing Samples |
|---|---|---|---|
| Bot | An attack that performs automated tasks, often used in malicious activities like botnets. | 1565 | 391 |
| BruteForce | An attack that systematically tries all possible combinations of passwords or keys. | 11,066 | 2766 |
| DoS | Overwhelming a system with traffic to make it unavailable. | 102,420 | 25,605 |
| DDoS | A DoS attack launched from multiple devices, often part of a botnet. | 201,369 | 50,343 |
| Normal | Normal traffic. | 1,817,056 | 454,264 |
| PortScan | Scanning a system’s ports to find open or vulnerable entry points. | 127,043 | 31,761 |
| Web Attack | Exploiting vulnerabilities in web applications, such as through SQL injection or XSS. | 1744 | 436 |

| Class | Description | Training Samples | Testing Samples |
|---|---|---|---|
| DoS | An attack that floods a system to make it unavailable to users. | 47,513 | 11,878 |
| Mirai | Malware that infects IoT devices, creating botnets for launching large-scale DDoS attacks. | 28,302 | 7075 |
| MITM ARP Spoofing | Intercepting communication by tricking devices into sending data through the attacker’s system. | 332,247 | 83,062 |
| Normal | Normal traffic. | 32,058 | 8015 |
| Scan | Probing a network or system to identify open ports, services, or vulnerabilities. | 60,212 | 15,053 |

| Class | Description | Training Samples | Testing Samples |
|---|---|---|---|
| Benign | Normal traffic. | 4459 | 3650 |
| Jamming | Disrupting communication signals by overwhelming them with interference or noise. | 803 | 657 |
| Spoofing | Falsifying data or signals to impersonate a legitimate source and deceive a target. | 274 | 224 |

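| Metric | Formula | Description |
|---|---|---|
| Accuracy | $\frac{1}{N}\sum_{i=1}^{N}\mathbb{1}[\hat{y}_i = y_i]$ | Proportion of all predictions, across all classes, that are correct. |
| Precision (weighted-averaged) | $\sum_{c}\frac{n_c}{N}\cdot\frac{TP_c}{TP_c + FP_c}$ | Proportion of positive predictions that are actually correct, averaged over classes and weighted by class support. |
| Recall (weighted-averaged) | $\sum_{c}\frac{n_c}{N}\cdot\frac{TP_c}{TP_c + FN_c}$ | Proportion of actual positives that the model correctly identifies, averaged over classes and weighted by class support. |
| F1 Score (weighted-averaged) | $\sum_{c}\frac{n_c}{N}\cdot\frac{2 P_c R_c}{P_c + R_c}$ | Harmonic mean of precision and recall per class; the weighted average gives more influence to classes with more true instances. |
| True positive rate (TPR) | $\frac{TP}{TP + FN}$ | Fraction of positives correctly identified. |
| False positive rate (FPR) | $\frac{FP}{FP + TN}$ | Fraction of negatives incorrectly identified as positives. |
| AUC (Area Under Curve) | – | Area under the ROC curve (TPR plotted against FPR); a higher AUC means better performance. |
| Inference Speed | – | How fast the model processes each instance. |

Here $N$ is the number of test samples and $n_c$ the number of samples of class $c$. $TP_c$, $FP_c$ and $FN_c$ are the per-class true positives, false positives and false negatives. $P_c$ and $R_c$ are the per-class precision and recall.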
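The weighted-averaged metrics and the one-vs-rest TPR, FPR and AUC in the table can be computed from raw labels, predictions and scores as in the following minimal sketch. It assumes hashable class labels of a single type and a chosen positive class for TPR/FPR/AUC, and it counts score ties as half a win in the AUC. The function names are illustrative, and this is not the evaluation code used in the paper. In practice, scikit-learn's `precision_recall_fscore_support(..., average="weighted")` and `roc_auc_score` compute the same quantities.

```python
from collections import Counter


def weighted_metrics(y_true, y_pred):
    """Accuracy plus support-weighted precision, recall and F1 over all classes."""
    n = len(y_true)
    support = Counter(y_true)
    labels = sorted(set(y_true) | set(y_pred))
    accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / n
    precision_w = recall_w = f1_w = 0.0
    for c in labels:
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == c and p == c)
        fp = sum(1 for t, p in zip(y_true, y_pred) if t != c and p == c)
        fn = sum(1 for t, p in zip(y_true, y_pred) if t == c and p != c)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        weight = support[c] / n  # classes absent from y_true get weight 0
        precision_w += weight * precision
        recall_w += weight * recall
        f1_w += weight * f1
    return {"accuracy": accuracy, "precision": precision_w, "recall": recall_w, "f1": f1_w}


def tpr_fpr(y_true, y_pred, positive):
    """One-vs-rest TPR and FPR; assumes both positives and negatives are present."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p == positive)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p != positive)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t != positive and p == positive)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t != positive and p != positive)
    return tp / (tp + fn), fp / (fp + tn)


def roc_auc(y_true, scores, positive=1):
    """AUC as the probability that a random positive outscores a random negative."""
    pos = [s for t, s in zip(y_true, scores) if t == positive]
    neg = [s for t, s in zip(y_true, scores) if t != positive]
    wins = sum(1.0 if p > q else 0.5 if p == q else 0.0 for p in pos for q in neg)
    return wins / (len(pos) * len(neg))
```

Weighting each class's recall by its share of the test set makes weighted-averaged recall identical to accuracy, because $\sum_c \frac{n_c}{N}\cdot\frac{TP_c}{n_c} = \frac{\sum_c TP_c}{N}$. The sketch reflects this directly. The pairwise AUC loop is quadratic in the number of samples, so a sort-based implementation is preferable for test sets of the size used here.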

| Model Architecture | No. of Parameters | Accuracy (%) | Precision (%) | F1 Score (%) | Inference Time |
|---|---|---|---|---|---|
| Deep Neural Network (Teacher) | 224,657 | 77.22 | 82.16 | 73.05 | 2.935 |
| Shallow Neural Network (Student without KD) | 13,649 | 75.96 | 82.06 | 70.97 | 2.630 |
| Shallow Neural Network (Student with KD) | 13,649 (−93.92%) | 76.73 (+0.77%) | 82.62 (+0.56%) | 71.83 (+0.86%) | 2.599 (−11.45%) |
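The parenthetical values in the results tables use two different bases. Recomputing them from the NSL-KDD row shows the bases differ by column. Parameter counts and inference times are relative changes with respect to the teacher. The accuracy, precision and F1 entries are differences in percentage points with respect to the student trained without KD. The two helpers below are a small sketch of that arithmetic; their names are illustrative.

```python
def relative_change_pct(value, reference):
    """Relative change of `value` with respect to `reference`, in percent."""
    return 100.0 * (value - reference) / reference


def point_difference(value, reference):
    """Absolute difference between two percentages, in percentage points."""
    return value - reference


if __name__ == "__main__":
    # NSL-KDD row: KD student vs. teacher (inference time),
    # and KD student vs. non-KD student (accuracy).
    print(round(relative_change_pct(2.599, 2.935), 2))
    print(round(point_difference(76.73, 75.96), 2))
```

The two calls return about −11.45 and 0.77, matching the inference-time and accuracy entries for the KD student on NSL-KDD. Keeping the two bases apart matters when reading the tables. For example, a "+6.12%" accuracy gain on UNSW-NB15 is 6.12 percentage points over the non-KD student, not a 6.12% relative improvement.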

| Model Architecture | No. of Parameters | Accuracy (%) | Precision (%) | F1 Score (%) | Inference Time |
|---|---|---|---|---|---|
| Deep Neural Network (Teacher) | 280,448 | 75.45 | 81.12 | 76.40 | 2.722 |
| Shallow Neural Network (Student without KD) | 20,816 | 69.66 | 78.12 | 71.10 | 2.502 |
| Shallow Neural Network (Student with KD) | 20,816 (−92.58%) | 75.78 (+6.12%) | 80.98 (+2.86%) | 76.10 (+5.00%) | 2.451 (−9.96%) |

| Model Architecture | No. of Parameters | Accuracy (%) | Precision (%) | F1 Score (%) | Inference Time |
|---|---|---|---|---|---|
| Deep Neural Network (Teacher) | 191,063 | 98.22 | 98.30 | 98.20 | 2.602 |
| Shallow Neural Network (Student without KD) | 9527 | 97.81 | 97.97 | 97.79 | 2.382 |
| Shallow Neural Network (Student with KD) | 9527 (−95.01%) | 97.88 (+0.07%) | 97.95 (−0.02%) | 97.85 (+0.06%) | 2.398 (−7.84%) |

| Model Architecture | No. of Parameters | Accuracy (%) | Precision (%) | F1 Score (%) | Inference Time |
|---|---|---|---|---|---|
| Deep Neural Network (Teacher) | 191,633 | 92.95 | 94.04 | 93.13 | 2.651 |
| Shallow Neural Network (Student without KD) | 9521 | 87.15 | 88.51 | 86.20 | 2.386 |
| Shallow Neural Network (Student with KD) | 9521 (−95.03%) | 93.24 (+6.09%) | 93.42 (+4.91%) | 93.13 (+6.93%) | 2.462 (−7.13%) |

| Model Architecture | No. of Parameters | Accuracy (%) | Precision (%) | F1 Score (%) | Inference Time |
|---|---|---|---|---|---|
| Deep Neural Network (Teacher) | 194,507 | 99.93 | 99.93 | 99.93 | 2.650 |
| Shallow Neural Network (Student without KD) | 9803 | 97.97 | 98.02 | 97.86 | 2.386 |
| Shallow Neural Network (Student with KD) | 9803 (−94.96%) | 98.63 (+0.66%) | 98.65 (+0.63%) | 98.59 (+0.73%) | 2.462 (−7.09%) |
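For fully connected networks such as the teacher and student models compared here, the "No. of Parameters" column follows directly from the layer widths. Each dense layer with $n_{\text{in}}$ inputs and $n_{\text{out}}$ units contributes $n_{\text{in}} n_{\text{out}}$ weights plus $n_{\text{out}}$ biases. The layer widths are not given in this section, so the sketch below uses made-up widths (40 input features, 5 output classes) purely for illustration. It does not reproduce the architectures behind the reported counts, and the function name is hypothetical.

```python
def dense_param_count(layer_widths):
    """Trainable parameters (weights + biases) of a stack of dense layers.

    layer_widths = [n_0, n_1, ..., n_L], where n_0 is the input dimension
    and n_L is the number of output units.
    """
    return sum(n_in * n_out + n_out for n_in, n_out in zip(layer_widths, layer_widths[1:]))


if __name__ == "__main__":
    # Hypothetical widths: a four-layer teacher and a two-layer student.
    teacher = dense_param_count([40, 256, 128, 64, 5])
    student = dense_param_count([40, 32, 5])
    print(teacher, student)
```

Because the count is dominated by the products of adjacent widths, shrinking the hidden layers removes parameters roughly quadratically. This is why a shallower, narrower student can shed over 90% of the teacher's parameters.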

| Dataset | # Test Samples | Model | Accuracy (%) | 95% CI Lower (%) | 95% CI Upper (%) |
|---|---|---|---|---|---|
| NSL-KDD | 22,544 | Teacher | 77.22 | 76.67 | 77.77 |
| | | Student without KD | 75.96 | 75.40 | 76.52 |
| | | Student with KD | 76.73 | 76.18 | 77.28 |
| UNSW-NB15 | 82,332 | Teacher | 75.45 | 75.16 | 75.74 |
| | | Student without KD | 69.66 | 69.35 | 69.97 |
| | | Student with KD | 75.78 | 75.49 | 76.07 |
| CIC-IDS2017 | 565,566 | Teacher | 98.22 | 98.19 | 98.25 |
| | | Student without KD | 97.81 | 97.77 | 97.85 |
| | | Student with KD | 97.88 | 97.84 | 97.92 |
| IoTID20 | 125,083 | Teacher | 92.95 | 92.81 | 93.09 |
| | | Student without KD | 87.15 | 86.96 | 87.34 |
| | | Student with KD | 93.24 | 93.10 | 93.38 |
| UAV IDS | 4531 | Teacher | 99.93 | 99.86 | 100.00 |
| | | Student without KD | 97.97 | 97.56 | 98.38 |
| | | Student with KD | 98.63 | 98.29 | 98.97 |
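The table does not state how the confidence intervals were obtained. They are consistent with a normal-approximation (Wald) interval for a proportion, $\hat{p} \pm 1.96\sqrt{\hat{p}(1-\hat{p})/n}$. For example, this reproduces the NSL-KDD teacher bounds of 76.67% and 77.77% from an accuracy of 77.22% on 22,544 test samples. The sketch below assumes that method and clips the bounds to $[0, 1]$; the function name is illustrative.

```python
import math


def wald_ci(accuracy, n, z=1.96):
    """Normal-approximation (Wald) confidence interval for a proportion.

    `accuracy` is a fraction in [0, 1] and `n` the number of test samples;
    z = 1.96 gives a two-sided 95% interval. Bounds are clipped to [0, 1].
    """
    se = math.sqrt(accuracy * (1.0 - accuracy) / n)
    return max(0.0, accuracy - z * se), min(1.0, accuracy + z * se)


if __name__ == "__main__":
    lower, upper = wald_ci(0.7722, 22544)  # NSL-KDD teacher row
    print(f"{100 * lower:.2f} to {100 * upper:.2f}")
```

The half-width shrinks as $1/\sqrt{n}$. This is why the CIC-IDS2017 intervals (565,566 test samples) are far narrower than the UAV IDS ones (4531 test samples). Near 100% accuracy the Wald interval becomes unreliable, and a Wilson or Clopper–Pearson interval would be a more conservative choice for the UAV IDS teacher.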

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wisanwanichthan, T.; Thammawichai, M. A Lightweight Intrusion Detection System for IoT and UAV Using Deep Neural Networks with Knowledge Distillation. Computers 2025, 14, 291. https://doi.org/10.3390/computers14070291

Wisanwanichthan T, Thammawichai M. A Lightweight Intrusion Detection System for IoT and UAV Using Deep Neural Networks with Knowledge Distillation. Computers. 2025; 14(7):291. https://doi.org/10.3390/computers14070291

Chicago/Turabian Style: Wisanwanichthan, Treepop, and Mason Thammawichai. 2025. "A Lightweight Intrusion Detection System for IoT and UAV Using Deep Neural Networks with Knowledge Distillation" Computers 14, no. 7: 291. https://doi.org/10.3390/computers14070291

APA Style: Wisanwanichthan, T., & Thammawichai, M. (2025). A Lightweight Intrusion Detection System for IoT and UAV Using Deep Neural Networks with Knowledge Distillation. Computers, 14(7), 291. https://doi.org/10.3390/computers14070291