AHA: Design and Evaluation of Compute-Intensive Hardware Accelerators for AMD-Xilinx Zynq SoCs Using HLS IP Flow

Abstract

1. Introduction

- A suite of five AHA IP-cores for rapid evaluation and benchmarking of Zynq-based heterogeneous SoCs. These IP-cores accelerate a group of customized, compute-intensive algorithms: (i) matrix multiplication, (ii) fast Fourier transform (FFT), (iii) advanced encryption standard (AES), (iv) back-propagation neural network (BPNN), and (v) artificial neural network (ANN).

- Performance evaluation and optimization of hardware and software implementations of these algorithms, using various IP-core configurations in Zynq FPGA-based SoCs.

- Development of custom Tool Command Language (Tcl) scripts for the Vivado Design Suite to automate the generation of hardware accelerator IP-cores and Zynq FPGA-based SoCs, reducing the non-recurring engineering (NRE) cost for less experienced designers.

- Documented and streamlined design flow for developing heterogeneous SoCs and hardware accelerator IP-cores in Zynq FPGAs using Vivado HLS.

- A concise survey of the state of the art in HLS, summarizing the prominent current tools and the principal HLS concepts.

2. HLS Tools and Related Work

2.1. Relevant High-Level Synthesis Tools

2.2. Studies That Evaluate or Develop HLS Tools

2.3. AMD-Xilinx HLS Tools

2.3.1. Vivado HLS

2.3.2. Vitis Unified Software Platform (USP)

2.3.3. Vitis HLS and Development Flows

2.3.4. Vitis Libraries

2.3.5. Vivado HLS Examples

2.4. Benchmarking IP Cores for Zynq-Based SoCs

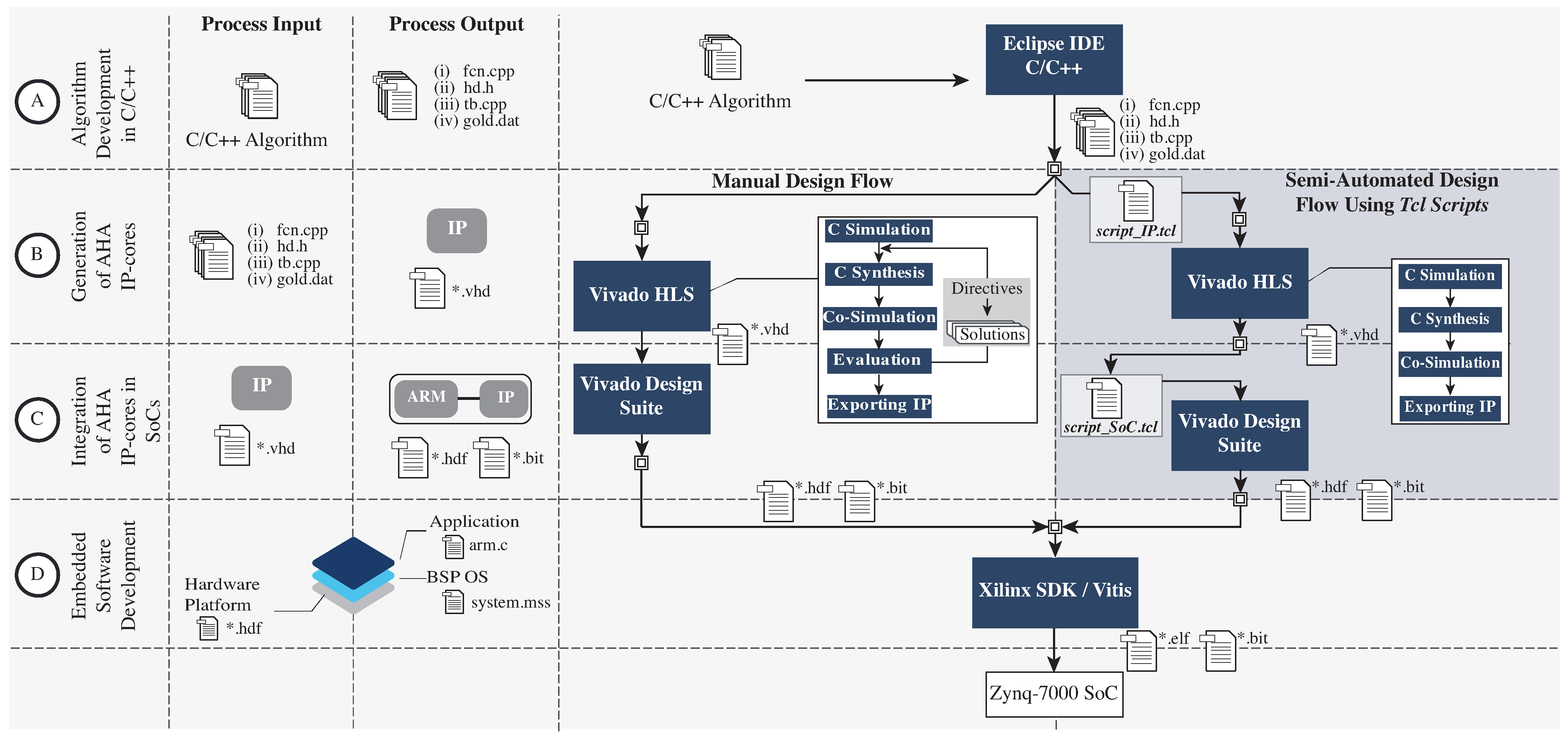

3. AHA Design Methodology

3.1. Process A: Algorithm Development in C/C++

3.2. Process B: Generation of AHA IP Cores

3.2.1. Importing the C Specification

3.2.2. C Simulation

3.2.3. C Synthesis

3.2.4. C/RTL Co-Simulation

3.2.5. Evaluation and Optimization

3.2.6. Exporting the IP-Core

3.3. Process C: Integration of AHA IP-Cores in SoCs

3.4. Process D: Embedded Software Development

3.4.1. Hardware Platform

3.4.2. Board Support Package (BSP)

3.4.3. Application

4. Rapid Generation of AHA IP-Cores + SoC

4.1. Advantages

4.2. Semi-Automated Design Flow

4.2.1. Creation of IP-Cores (Script_IP.tcl)

4.2.2. SoC Creation (Script_SoC.tcl)

4.3. Requirements of the C Specifications for Creating New IP-Cores and SoCs Using Tcl Scripts

- The C specification and the golden results of the test bench must first be verified in software.

- The C specification must not contain operating-system calls (e.g., dynamic memory allocation or file handling) that cannot be synthesized into hardware.

- The IP-core inputs and outputs must be defined as arguments of the top-level function.

- The C specification must include a test bench that invokes the top-level function and verifies its results. The test bench must return zero when the results are correct and a nonzero value otherwise.

- AXI interfaces must be specified for the top-level function using the #pragma HLS INTERFACE directive so that the generated IP-core can subsequently be integrated into the SoC.
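A minimal sketch of a C specification that meets these requirements, assuming a hypothetical element-wise vector addition (the names `vadd_top`, `vadd_testbench`, `VEC_LEN`, and the bundle name `CTRL` are illustrative and not part of the AHA suite). The `INTERFACE` pragmas follow the Vivado HLS `s_axilite` syntax and map the small arrays onto the AXI4-Lite register space; larger buffers would more typically use `m_axi` or `axis` interfaces. A standard C compiler ignores the pragmas, so the same file can be verified in software first. In an actual Vivado HLS project, the test bench usually lives in a separate file whose `main()` simply returns `vadd_testbench()`.

```c
#include <stdio.h>

#define VEC_LEN 4 /* hypothetical vector length */

/* Top-level function: all IP-core inputs and outputs are arguments. */
void vadd_top(const int a[VEC_LEN], const int b[VEC_LEN], int c[VEC_LEN]) {
/* AXI4-Lite slave interfaces (assumed bundle name CTRL); a standard C
   compiler ignores these pragmas, so the code also runs in software. */
#pragma HLS INTERFACE s_axilite port=a bundle=CTRL
#pragma HLS INTERFACE s_axilite port=b bundle=CTRL
#pragma HLS INTERFACE s_axilite port=c bundle=CTRL
#pragma HLS INTERFACE s_axilite port=return bundle=CTRL
    for (int i = 0; i < VEC_LEN; i++) {
        c[i] = a[i] + b[i];
    }
}

/* Test bench body: calls the top-level function, compares the outputs with
   golden results, and returns 0 only if all of them match. */
int vadd_testbench(void) {
    const int a[VEC_LEN] = {1, 2, 3, 4};
    const int b[VEC_LEN] = {10, 20, 30, 40};
    const int golden[VEC_LEN] = {11, 22, 33, 44};
    int c[VEC_LEN];
    int errors = 0;

    vadd_top(a, b, c);
    for (int i = 0; i < VEC_LEN; i++) {
        if (c[i] != golden[i]) {
            printf("Mismatch at %d: got %d, expected %d\n", i, c[i], golden[i]);
            errors++;
        }
    }
    return errors; /* 0 = PASS, nonzero = FAIL */
}
```

Returning an error count rather than a single flag keeps the zero-on-success convention while still reporting how many outputs differed.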

4.4. Limitations of Semi-Automated Design Using Tcl Scripts

- While the scripts can automate the creation of a single solution for an IP-core implementation, performance and resource analysis must be conducted manually. The designer must iteratively examine the synthesis report files, add appropriate optimization directives directly to the C specification files, and then rerun the scripts to generate a new solution.

- The scripts generate SoCs with a fixed architecture that integrates the IP-core generated by Vivado HLS into a Zynq SoC through AXI4-Lite interfaces (Figure 4). Any modification to this architecture requires manual intervention in the Vivado IP Integrator GUI.

5. AHA IP-Core Suite

5.1. Matrix Multiplication

5.2. FFT (Fast Fourier Transform)

5.3. AES (Advanced Encryption Standard)

5.4. BPNN (Back-Propagation Neural Network)

5.5. ANN (Artificial Neural Network)

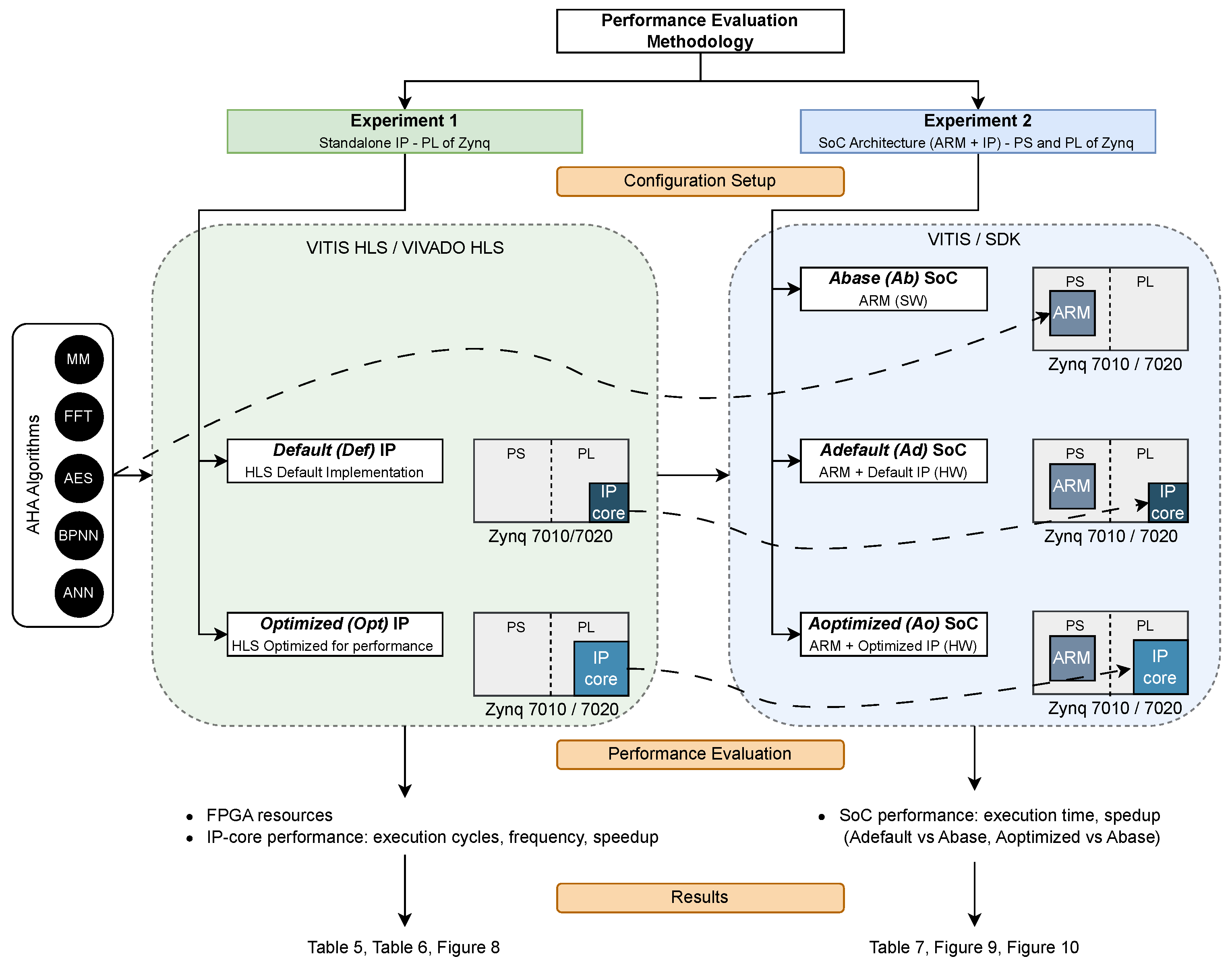

6. Performance Evaluation

6.1. Experiments Setup

6.1.1. Experiment 1

6.1.2. Experiment 2

6.2. Configuration of SoC Architectures for Experiment 2

6.2.1. Ab (ARM Cortex-A9)

6.2.2. Ad and Ao (ARM Cortex-A9 + AHA IP-Core)

6.3. Speedup Factor

7. Results

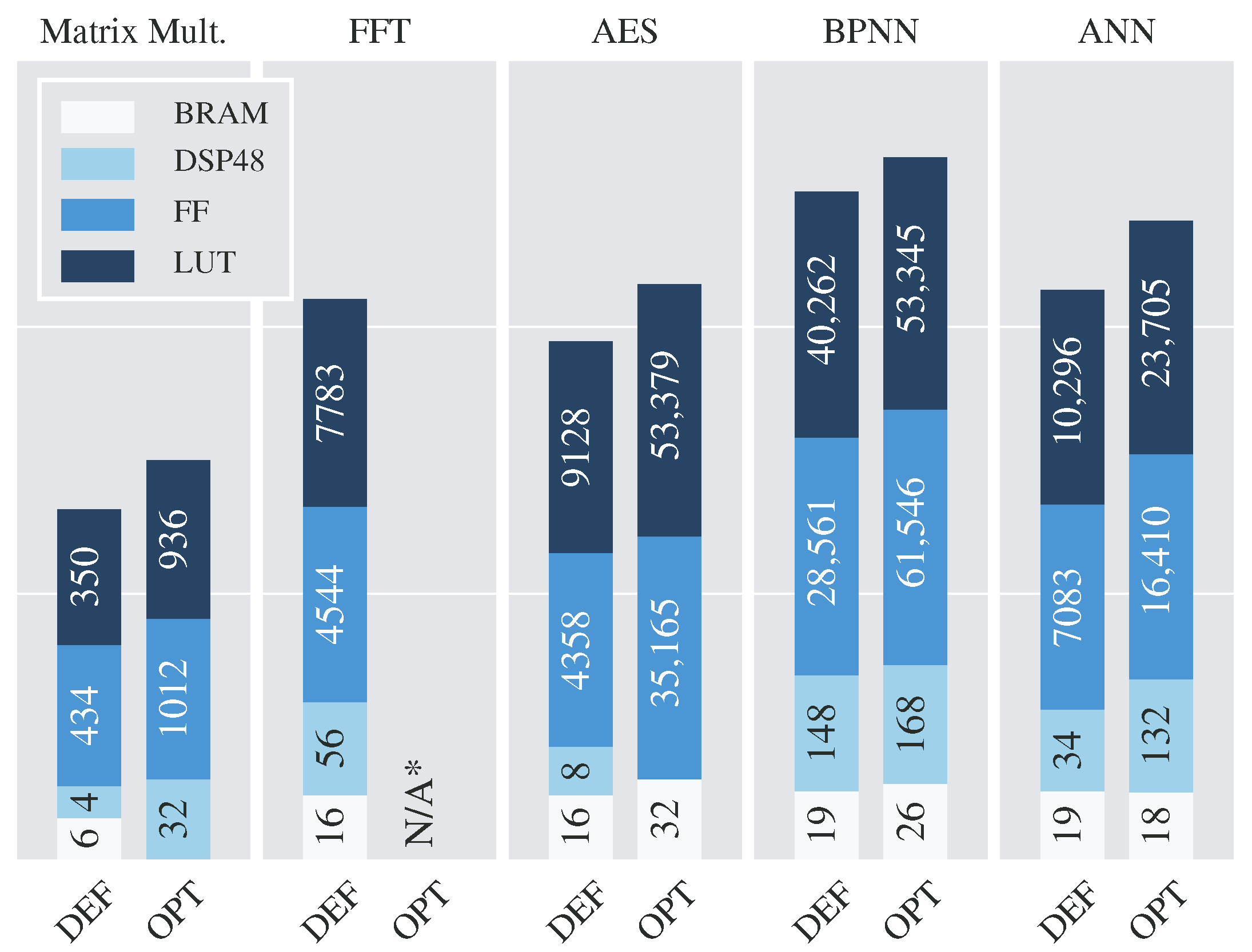

7.1. Experiment 1

7.2. Experiment 2

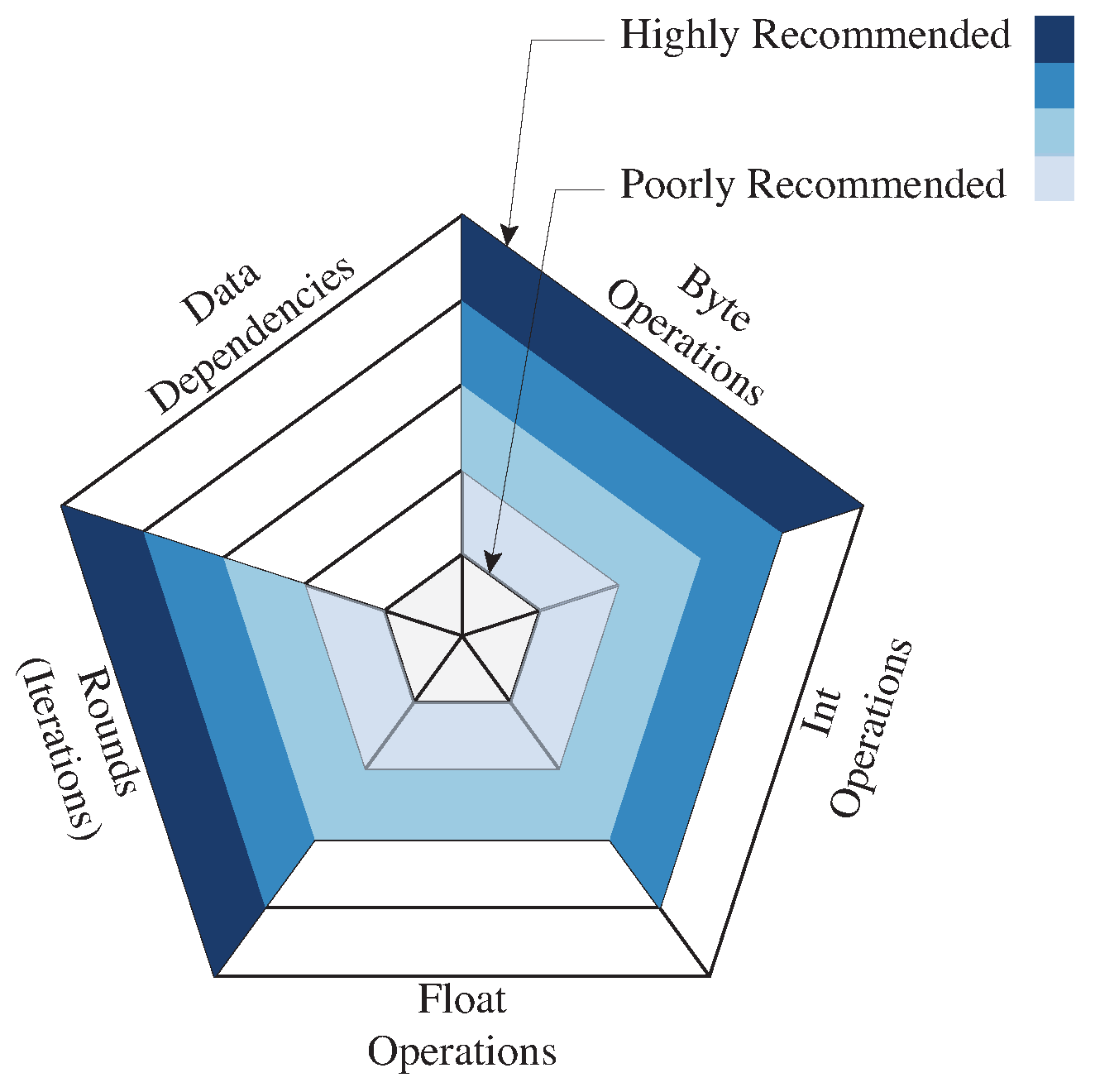

8. Discussion

- Data type: Algorithms that perform byte-level or integer operations (e.g., matrix multiplication and AES) are preferable, whereas algorithms that perform floating-point operations may require additional resources. Arbitrary-precision data types produce more compact and faster hardware operators [16], which suit applications that can tolerate reduced precision without adversely affecting the results. This approach can potentially yield higher speedup factors in applications such as deep neural networks and image processing.

- Data dependencies: Strong data dependencies force sequential execution (e.g., in FFT), which limits the parallelism an FPGA can exploit and makes such algorithms poorly suited to hardware implementation. These dependencies must be mitigated through manual code transformations, and loops with variable bounds cannot be parallelized efficiently with conventional transformations. Variable loop bounds also prevent Vivado HLS from accurately estimating latency (in cycles) and resource utilization. To address these issues in FFT and AES, a combination of source-code transformations and conventional synthesis directives was employed. Choi and Cong [39] present a framework for optimizing applications with variable loop bounds.

- Multiple iterations or rounds: Algorithms that repeatedly execute compute-intensive operations benefit from hardware acceleration, particularly when data dependencies are minimal and deep pipelines can be built (e.g., AES, BPNN, and ANN), whereas sequential algorithms with few or no iterations do not show significant performance improvements.

- Enabling the caches in both the hardware and software implementations can improve system performance, particularly when external memory is used; however, the benefit is limited in systems whose data reside in on-chip BRAM.

- The AXI HP (high-performance) slave interfaces of the PS give PL masters, such as DMA engines or memory-mapped PL-based accelerators, high-bandwidth access to the OCM and DDR memories. Because these ports are not cache-coherent, coherency must be managed in software (e.g., by flushing or invalidating the relevant cache lines).

- The AXI accelerator coherency port (ACP) has an interface structure similar to that of the AXI HP ports but offers the highest throughput of any single interface. Because the ACP connects to the snoop control unit (SCU) within the PS, ACP transactions are hardware-coherent with the L1 and L2 caches, which can reduce the latency between the PS and PL-based accelerators. The HP and ACP ports are compared in [78,79], and refs. [70,80] compare various techniques for linking programmable logic to the processing system, focusing on data-movement tasks such as direct memory access (DMA).

- Other data-movement methods, which are less efficient than PL DMA over AXI HP or AXI ACP, include CPU-controlled transfers, the PS DMA controller, and PL DMA through a general-purpose (GP) AXI slave port [70].
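One conventional source transformation for variable loop bounds can be sketched as follows, using a hypothetical reduction (`sum_variable`, `sum_fixed_bound`, and `MAX_N` are illustrative; this is not the transformation applied to the AHA FFT or AES cores). The run-time trip count is replaced by a constant worst-case bound plus a guard, so the loop has a static trip count that the tool can schedule and pipeline. The `LOOP_TRIPCOUNT` pragma on the original form only informs the Vivado HLS latency report; it does not change the generated hardware.

```c
#include <assert.h>

#define MAX_N 64 /* hypothetical worst-case bound known at design time */

/* Original form: the trip count depends on the run-time value n, so the
   tool cannot derive a fixed latency. LOOP_TRIPCOUNT only tells the
   latency report which range of n to assume; it adds no hardware. */
int sum_variable(const int x[MAX_N], int n) {
    int acc = 0;
    for (int i = 0; i < n; i++) {
#pragma HLS LOOP_TRIPCOUNT min=1 max=64
        acc += x[i];
    }
    return acc;
}

/* Transformed form: a constant bound plus a guard gives a static trip
   count that can be pipelined (or unrolled) like any fixed loop. */
int sum_fixed_bound(const int x[MAX_N], int n) {
    assert(n >= 0 && n <= MAX_N); /* precondition, checked in C simulation */
    int acc = 0;
    for (int i = 0; i < MAX_N; i++) {
#pragma HLS PIPELINE II=1
        if (i < n) {
            acc += x[i];
        }
    }
    return acc;
}
```

The fixed-bound form always executes `MAX_N` iterations, trading extra latency for small `n` against a predictable schedule and an exact latency estimate.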

9. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Brodtkorb, A.R.; Dyken, C.; Hagen, T.R.; Hjelmervik, J.M.; Storaasli, O.O. State-of-the-art in heterogeneous computing. Sci. Program. 2010, 18, 1–33.

- Hao, C.; Zhang, X.; Li, Y.; Huang, S.; Xiong, J.; Rupnow, K.; Hwu, W.M.; Chen, D. FPGA/DNN co-design: An efficient design methodology for IoT intelligence on the edge. In Proceedings of the 56th Annual Design Automation Conference 2019, DAC ’19, New York, NY, USA, 2 June 2019.

- Talib, M.A.; Majzoub, S.; Nasir, Q.; Jamal, D. A systematic literature review on hardware implementation of artificial intelligence algorithms. J. Supercomput. 2021, 77, 1897–1938.

- Nechi, A.; Groth, L.; Mulhem, S.; Merchant, F.; Buchty, R.; Berekovic, M. FPGA-based Deep Learning Inference Accelerators: Where Are We Standing? ACM Trans. Reconfigurable Technol. Syst. 2023, 16, 1–32.

- Intel. Virtual RAN (vRAN) with Hardware Acceleration. Available online: https://builders.intel.com/solutionslibrary/virtual-ran-vran-with-hardware-acceleration (accessed on 31 March 2025).

- Azariah, W.; Bimo, F.A.; Lin, C.W.; Cheng, R.G.; Nikaein, N.; Jana, R. A Survey on Open Radio Access Networks: Challenges, Research Directions, and Open Source Approaches. Sensors 2024, 24, 1038.

- Kundu, L.; Lin, X.; Agostini, E.; Ditya, V.; Martin, T. Hardware Acceleration for Open Radio Access Networks: A Contemporary Overview. IEEE Commun. Mag. 2024, 62, 160–167.

- Coussy, P.; Gajski, D.D.; Meredith, M.; Takach, A. An introduction to high-level synthesis. IEEE Des. Test Comput. 2009, 26, 8–17.

- Cong, J.; Liu, B.; Neuendorffer, S.; Noguera, J.; Vissers, K.; Zhang, Z. High-level synthesis for FPGAs: From prototyping to deployment. IEEE Trans. Comput.-Aided Des. Integr. Circuits Syst. 2011, 30, 473–491.

- Meeus, W.; Van Beeck, K.; Goedemé, T.; Meel, J.; Stroobandt, D. An overview of today’s high-level synthesis tools. Des. Autom. Embedded Syst. 2012, 16, 31–51.

- Crockett, L.H.; Elliot, R.A.; Enderwitz, M.A.; Stewart, R.W. The Zynq Book: Embedded Processing with the Arm Cortex-A9 on the Xilinx Zynq-7000 All Programmable Soc; Strathclyde Academic Media: Glasgow, UK, 2014.

- Gort, M.; Anderson, J. Design re-use for compile time reduction in FPGA high-level synthesis flows. In Proceedings of the 2014 International Conference on Field-Programmable Technology (FPT), Shanghai, China, 10–12 December 2014; pp. 4–11.

- Koch, D.; Hannig, F.; Ziener, D. (Eds.) FPGAs for Software Programmers; Springer International Publishing: Cham, Switzerland, 2016.

- Zhu, J.; Sander, I.; Jantsch, A. HetMoC: Heterogeneous Modelling in SystemC. In Proceedings of the 2010 Forum on Specification & Design Languages (FDL 2010), Southampton, UK, 14–16 September 2010; pp. 1–6.

- Berrazueta, M.D. A Study and Application of High-Level Synthesis for the Design of High-Performance FPGA-Based System-on-Chip with Custom IP-Cores. Bachelor’s Thesis, Universidad de las Fuerzas Armadas ESPE, Sangolquí, Ecuador, 2019.

- AMD-Xilinx. Vivado Design Suite User Guide: High-Level Synthesis (UG902). Available online: https://docs.amd.com/v/u/2017.4-English/ug902-vivado-high-level-synthesis (accessed on 31 March 2025).

- Numan, M.W.; Phillips, B.J.; Puddy, G.S.; Falkner, K. Towards Automatic High-Level Code Deployment on Reconfigurable Platforms: A Survey of High-Level Synthesis Tools and Toolchains. IEEE Access 2020, 8, 174692–174722.

- Cong, J.; Lau, J.; Liu, G.; Neuendorffer, S.; Pan, P.; Vissers, K.; Zhang, Z. FPGA HLS Today: Successes, Challenges, and Opportunities. ACM Trans. Reconfigurable Technol. Syst. 2022, 15, 1–42.

- Li, J.; Chi, Y.; Cong, J. HeteroHalide: From Image Processing DSL to Efficient FPGA Acceleration. In Proceedings of the 2020 ACM/SIGDA International Symposium on Field-Programmable Gate Arrays (FPGA ’20), New York, NY, USA, 23–25 February 2020; Association for Computing Machinery: New York, NY, USA, 2020; pp. 51–57.

- Ishikawa, A.; Fukushima, N.; Maruoka, A.; Iizuka, T. Halide and GENESIS for Generating Domain-Specific Architecture of Guided Image Filtering. In Proceedings of the 2019 IEEE International Symposium on Circuits and Systems (ISCAS), Sapporo, Japan, 26–29 May 2019; pp. 1–5. Available online: https://ieeexplore.ieee.org/document/8702260 (accessed on 31 March 2025).

- Hegarty, J.; Daly, R.; DeVito, Z.; Ragan-Kelley, J.; Horowitz, M.; Hanrahan, P. Rigel: Flexible multi-rate image processing hardware. ACM Trans. Graph. 2016, 35, 85:1–85:11.

- Sujeeth, A.K.; Lee, H.; Brown, K.J.; Chafi, H.; Wu, M.; Atreya, A.R.; Olukotun, K.; Rompf, T.; Odersky, M. OptiML: An Implicitly Parallel Domain-Specific Language for Machine Learning. In Proceedings of the 28th International Conference on Machine Learning (ICML’11), Madison, WI, USA, 28 June–2 July 2011; Omnipress: Madison, WI, USA, 2011; pp. 609–616.

- Lai, Y.-H.; Chi, Y.; Hu, Y.; Wang, J.; Yu, C.H.; Zhou, Y.; Cong, J.; Zhang, Z. HeteroCL: A Multi-Paradigm Programming Infrastructure for Software-Defined Reconfigurable Computing. In Proceedings of the 2019 ACM/SIGDA International Symposium on Field-Programmable Gate Arrays (FPGA ’19), New York, NY, USA, 24–26 February 2019; Association for Computing Machinery: New York, NY, USA, 2019; pp. 242–251.

- Nane, R.; Sima, V.; Pilato, C.; Choi, J.; Fort, B.; Canis, A.; Chen, Y.T.; Hsiao, H.; Brown, S.; Ferrandi, F.; et al. A survey and evaluation of FPGA high-level synthesis tools. IEEE Trans. Comput.-Aided Des. Integr. Circuits Syst. 2016, 35, 1591–1604.

- Josipović, L.; Guerrieri, A.; Ienne, P. Invited Tutorial: Dynamatic: From C/C++ to Dynamically Scheduled Circuits. In Proceedings of the 2020 ACM/SIGDA International Symposium on Field-Programmable Gate Arrays (FPGA ’20), New York, NY, USA, 23–25 February 2020; Association for Computing Machinery: New York, NY, USA, 2020; pp. 1–10.

- Ye, H.; Jun, H.; Jeong, H.; Neuendorffer, S.; Chen, D. ScaleHLS: A Scalable High-Level Synthesis Framework with Multi-Level Transformations and Optimizations. In Proceedings of the 59th ACM/IEEE Design Automation Conference (DAC ’22), New York, NY, USA, 5–9 August 2022; Association for Computing Machinery: New York, NY, USA, 2022; pp. 1355–1358.

- Lattner, C.; Amini, M.; Bondhugula, U.; Cohen, A.; Davis, A.; Pienaar, J.; Riddle, R.; Shpeisman, T.; Vasilache, N.; Zinenko, O. MLIR: Scaling Compiler Infrastructure for Domain Specific Computation. In Proceedings of the 2021 IEEE/ACM International Symposium on Code Generation and Optimization (CGO), Virtual Event, 27 February–3 March 2021; pp. 2–14.

- Qin, S.; Berekovic, M. A comparison of high-level design tools for SoC-FPGA on disparity map calculation example. arXiv 2015, arXiv:1509.00036.

- Navas, B.; Oberg, J.; Sander, I. Towards the Generic Reconfigurable Accelerator: Algorithm Development, Core Design, and Performance Analysis. In Proceedings of the 2013 International Conference on Reconfigurable Computing and FPGAs (ReConFig), Cancun, Mexico, 9–11 December 2013; pp. 1–6. Available online: https://ieeexplore.ieee.org/document/6732334 (accessed on 31 March 2025).

- Sozzo, E.D.; Conficconi, D.; Zeni, A.; Salaris, M.; Sciuto, D.; Santambrogio, M.D. Pushing the Level of Abstraction of Digital System Design: A Survey on How to Program FPGAs. ACM Comput. Surv. 2022, 55, 106:1–106:48.

- Martin, G.; Smith, G. High-level synthesis: Past, present, and future. IEEE Des. Test Comput. 2009, 26, 18–25.

- de Fine Licht, J.; Besta, M.; Meierhans, S.; Hoefler, T. Transformations of High-Level Synthesis Codes for High-Performance Computing. IEEE Trans. Parallel Distrib. Syst. 2021, 32, 1014–1029.

- Schafer, B.C.; Wang, Z. High-Level Synthesis Design Space Exploration: Past, Present, and Future. IEEE Trans. Comput.-Aided Des. Integr. Circuits Syst. 2020, 39, 2628–2639.

- Molina, R.S.; Gil-Costa, V.; Crespo, M.L.; Ramponi, G. High-Level Synthesis Hardware Design for FPGA-Based Accelerators: Models, Methodologies, and Frameworks. IEEE Access 2022, 10, 90429–90455.

- Cong, J.; Wei, P.; Yu, C.H.; Zhang, P. Automated Accelerator Generation and Optimization with Composable, Parallel and Pipeline Architecture. In Proceedings of the 55th Annual Design Automation Conference (DAC ’18), San Francisco, CA, USA, 24–28 June 2018; Association for Computing Machinery: New York, NY, USA, 2018; p. 154.

- Wang, Z.; Chen, J.; Schafer, B.C. Efficient and Robust High-Level Synthesis Design Space Exploration through Offline Micro-Kernels Pre-Characterization. In Proceedings of the 2020 Design, Automation & Test in Europe Conference & Exhibition (DATE), Grenoble, France, 9–13 March 2020; pp. 145–150. Available online: https://ieeexplore.ieee.org/document/9116309 (accessed on 31 March 2025).

- Liu, S.; Lau, F.C.M.; Schafer, B.C. Accelerating FPGA Prototyping through Predictive Model-Based HLS Design Space Exploration. In Proceedings of the 56th Annual Design Automation Conference (DAC ’19), Las Vegas, NV, USA, 2–6 June 2019; Association for Computing Machinery: New York, NY, USA, 2019; p. 97.

- Sun, Q.; Chen, T.; Liu, S.; Miao, J.; Chen, J.; Yu, H.; Yu, B. Correlated Multi-Objective Multi-Fidelity Optimization for HLS Directives Design. In Proceedings of the 2021 Design, Automation & Test in Europe Conference & Exhibition (DATE), Virtual Event, 1–5 February 2021; pp. 46–51. Available online: https://ieeexplore.ieee.org/document/9474241 (accessed on 31 March 2025).

- Choi, Y.; Cong, J. HLS-Based Optimization and Design Space Exploration for Applications with Variable Loop Bounds. In Proceedings of the 2018 IEEE/ACM International Conference on Computer-Aided Design (ICCAD), San Diego, CA, USA, 5–8 November 2018; IEEE Press: Piscataway, NJ, USA, 2018; pp. 1–8.

- Özkan, M.A.; Pérard-Gayot, A.; Membarth, R.; Slusallek, P.; Leißa, R.; Hack, S.; Teich, J.; Hannig, F. AnyHLS: High-level synthesis with partial evaluation. IEEE Trans. Comput.-Aided Des. Integr. Circuits Syst. 2020, 39, 3202–3214.

- Piccolboni, L.; Di Guglielmo, G.; Carloni, L.P. KAIROS: Incremental Verification in High-Level Synthesis through Latency-Insensitive Design. In Proceedings of the 2019 Formal Methods in Computer Aided Design (FMCAD), San Jose, CA, USA, 22–25 October 2019; pp. 105–109. Available online: https://ieeexplore.ieee.org/document/8894295 (accessed on 31 March 2025).

- Singh, E.; Lonsing, F.; Chattopadhyay, S.; Strange, M.; Wei, P.; Zhang, X.; Zhou, Y.; Chen, D.; Cong, J.; Raina, P.; et al. A-QED Verification of Hardware Accelerators. In Proceedings of the 2020 57th ACM/IEEE Design Automation Conference (DAC), Virtual Event, 20–24 July 2020; pp. 1–6. Available online: https://ieeexplore.ieee.org/document/9218715 (accessed on 31 March 2025).

- Chen, J.; Schafer, B.C. Watermarking of Behavioral IPs: A Practical Approach. In Proceedings of the 2021 Design, Automation & Test in Europe Conference & Exhibition (DATE), Grenoble, France, 1–5 February 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 1266–1271. Available online: https://ieeexplore.ieee.org/document/9474071/ (accessed on 31 March 2025).

- Badier, H.; Pilato, C.; Le Lann, J.-C.; Coussy, P.; Gogniat, G. Opportunistic IP Birthmarking Using Side Effects of Code Transformations on High-Level Synthesis. In Proceedings of the 2021 Design, Automation & Test in Europe Conference & Exhibition (DATE), Grenoble, France, 1–5 February 2021; pp. 52–55. Available online: https://ieeexplore.ieee.org/document/9474200 (accessed on 31 March 2025).

- Mo, L.; Zhou, Q.; Kritikakou, A.; Liu, J. Energy Efficient, Real-Time and Reliable Task Deployment on NoC-Based Multicores with DVFS. In Proceedings of the 2022 Design, Automation & Test in Europe Conference & Exhibition (DATE), Antwerp, Belgium, 14–23 March 2022; pp. 1347–1352. Available online: https://ieeexplore.ieee.org/document/9774667 (accessed on 31 March 2025).

- Mehrabi, A.; Manocha, A.; Lee, B.C.; Sorin, D.J. Bayesian Optimization for Efficient Accelerator Synthesis. ACM Trans. Archit. Code Optim. 2021, 18, 1–25.

- Zhao, J.; Feng, L.; Sinha, S.; Zhang, W.; Liang, Y.; He, B. COMBA: A Comprehensive Model-Based Analysis Framework for High Level Synthesis of Real Applications. In Proceedings of the 2017 IEEE/ACM International Conference on Computer-Aided Design (ICCAD), Irvine, CA, USA, 13–16 November 2017; pp. 430–437. Available online: https://ieeexplore.ieee.org/document/8203809 (accessed on 31 March 2025).

- Wu, N.; Xie, Y.; Hao, C. IronMan-Pro: Multiobjective Design Space Exploration in HLS via Reinforcement Learning and Graph Neural Network-Based Modeling. IEEE Trans. Comput.-Aided Des. Integr. Circuits Syst. 2023, 42, 900–913.

- Gautier, Q.; Althoff, A.; Crutchfield, C.L.; Kastner, R. Sherlock: A Multi-Objective Design Space Exploration Framework. ACM Trans. Des. Autom. Electron. Syst. 2022, 27, 1–20.

- Zou, Z.; Tang, C.; Gong, L.; Wang, C.; Zhou, X. FlexWalker: An Efficient Multi-Objective Design Space Exploration Framework for HLS Design. In Proceedings of the 2024 34th International Conference on Field-Programmable Logic and Applications (FPL), Gothenburg, Sweden, 2–6 September 2024; pp. 126–132. Available online: https://ieeexplore.ieee.org/document/10705443 (accessed on 31 March 2025).

- Rashid, M.I.; Schaefer, B.C. VeriPy: A Python-Powered Framework for Parsing Verilog HDL and High-Level Behavioral Analysis of Hardware. In Proceedings of the 2024 IEEE 17th Dallas Circuits and Systems Conference (DCAS), Dallas, TX, USA, 25–26 April 2024; pp. 1–6. Available online: https://ieeexplore.ieee.org/document/10539889 (accessed on 31 March 2025).

- Abi-Karam, S.; Sarkar, R.; Seigler, A.; Lowe, S.; Wei, Z.; Chen, H.; Rao, N.; John, L.; Arora, A.; Hao, C. HLSFactory: A Framework Empowering High-Level Synthesis Datasets for Machine Learning and Beyond. In Proceedings of the 2024 ACM/IEEE 6th Symposium on Machine Learning for CAD (MLCAD), Austin, TX, USA, 18–19 September 2024; pp. 1–9. Available online: https://ieeexplore.ieee.org/document/10740213 (accessed on 31 March 2025).

- Ferikoglou, A.; Kakolyris, A.; Masouros, D.; Soudris, D.; Xydis, S. CollectiveHLS: A Collaborative Approach to High-Level Synthesis Design Optimization. ACM Trans. Reconfigurable Technol. Syst. 2024, 18, 11:1–11:32.

- Lahti, S.; Sjövall, P.; Vanne, J.; Hämäläinen, T.D. Are we there yet? A study on the state of high-level synthesis. IEEE Trans. Comput.-Aided Des. Integr. Circuits Syst. 2019, 38, 898–911.

- Fort, B.; Canis, A.; Choi, J.; Calagar, N.; Lian, R.; Hadjis, S.; Chen, Y.T.; Hall, M.; Syrowik, B.; Czajkowski, T.; et al. Automating the Design of Processor/Accelerator Embedded Systems with LegUp High-Level Synthesis. In Proceedings of the 2014 12th IEEE International Conference on Embedded and Ubiquitous Computing, Milan, Italy, 26–28 August 2014; pp. 120–129. Available online: https://ieeexplore.ieee.org/document/6962276 (accessed on 31 March 2025).

- Nane, R.; Sima, V.M.; Pham Quoc, C.; Goncalves, F.; Bertels, K. High-Level Synthesis in the Delft Workbench Hardware/Software Co-Design Tool-Chain. In Proceedings of the 2014 12th IEEE International Conference on Embedded and Ubiquitous Computing, Milan, Italy, 26–28 August 2014; pp. 138–145. Available online: https://ieeexplore.ieee.org/document/6962278 (accessed on 31 March 2025).

- AMD-Xilinx. SDSoC Development Environment Design Hub. Available online: https://docs.amd.com/v/u/en-US/dh0057-sdsoc-hub (accessed on 31 March 2025).

- AMD-Xilinx. SDAccel Development Environment Design Hub. Available online: https://docs.amd.com/v/u/en-US/dh0058-sdaccel-hub (accessed on 31 March 2025).

- AMD-Xilinx. AMD Vitis™ Unified Software Platform. Available online: https://www.amd.com/en/products/software/adaptive-socs-and-fpgas/vitis.html (accessed on 31 March 2025).

- AMD-Xilinx. Vitis High-Level Synthesis User Guide (UG1399). Available online: https://docs.amd.com/r/2023.1-English/ug1399-vitis-hls (accessed on 31 March 2025).

- AMD-Xilinx. AMD Vitis™ HLS. Available online: https://www.amd.com/en/products/software/adaptive-socs-and-fpgas/vitis/vitis-hls.html (accessed on 31 March 2025).

- AMD-Xilinx. Xilinx/Vitis-HLS-Introductory-Examples (GitHub). Available online: https://github.com/Xilinx/Vitis-HLS-Introductory-Examples (accessed on 31 March 2025).

- AMD-Xilinx. Xilinx/Vitis_Libraries (GitHub). Available online: https://github.com/Xilinx/Vitis_Libraries (accessed on 31 March 2025).

- Vitali, E.; Gadioli, D.; Ferrandi, F.; Palermo, G. Parametric Throughput Oriented Large Integer Multipliers for High Level Synthesis. In Proceedings of the 2021 Design, Automation & Test in Europe Conference & Exhibition (DATE), Grenoble, France, 1–5 February 2021; pp. 38–41. Available online: https://ieeexplore.ieee.org/document/9473908 (accessed on 31 March 2025).

- Wu, N.; Yang, H.; Xie, Y.; Li, P.; Hao, C. High-Level Synthesis Performance Prediction Using GNNs: Benchmarking, Modeling, and Advancing. In Proceedings of the 59th ACM/IEEE Design Automation Conference (DAC), San Francisco, CA, USA, 10–14 July 2022; Association for Computing Machinery: New York, NY, USA, 2022; pp. 49–54.

- Hara, Y.; Tomiyama, H.; Honda, S.; Takada, H. Proposal and quantitative analysis of the CHStone benchmark program suite for practical C-based high-level synthesis. J. Inf. Process. 2009, 17, 242–254.

- Ndu, G.; Navaridas, J.; Luján, M. CHO: Towards a Benchmark Suite for OpenCL FPGA Accelerators. In Proceedings of the 3rd International Workshop on OpenCL (IWOCL ’15), Oxford, UK, 12–13 May 2015; Association for Computing Machinery: New York, NY, USA, 2015; pp. 1–10.

- Reagen, B.; Adolf, R.; Shao, Y.S.; Wei, G.Y.; Brooks, D. MachSuite: Benchmarks for Accelerator Design and Customized Architectures. In Proceedings of the 2014 IEEE International Symposium on Workload Characterization (IISWC), Raleigh, NC, USA, 26–28 October 2014; pp. 110–119. Available online: https://ieeexplore.ieee.org/document/6983050 (accessed on 31 March 2025).

- Zhou, Y.; Gupta, U.; Dai, S.; Zhao, R.; Srivastava, N.; Jin, H.; Featherston, J.; Lai, Y.-H.; Liu, G.; Velasquez, G.A.; et al. Rosetta: A Realistic High-Level Synthesis Benchmark Suite for Software Programmable FPGAs. In Proceedings of the 2018 ACM/SIGDA International Symposium on Field-Programmable Gate Arrays (FPGA ’18), Monterey, CA, USA, 25–27 February 2018; Association for Computing Machinery: New York, NY, USA, 2018; pp. 269–278.

- AMD-Xilinx. Vivado Design Suite: AXI Reference Guide (UG1037). Available online: https://docs.amd.com/api/khub/documents/1N~rJgeEMU28Fyv7kWcW6Q/content?Ft-Calling-App=ft%2Fturnkey-portal&Ft-Calling-App-Version=5.0.70# (accessed on 31 March 2025).

- AMD-Xilinx. Generating Basic Software Platforms: Reference Guide (UG1138). Available online: https://docs.amd.com/v/u/en-US/ug1138-generating-basic-software-platforms (accessed on 31 March 2025).

- AMD-Xilinx. OS and Libraries Document Collection (UG643). Available online: https://docs.amd.com/r/2020.2-English/oslib_rm (accessed on 31 March 2025).

- LeCun, Y.; Cortes, C. The MNIST Database of Handwritten Digits. Available online: http://yann.lecun.com/exdb/mnist/ (accessed on 31 March 2025).

- Zhang, X.; Ramachandran, A.; Zhuge, C.; He, D.; Zuo, W.; Cheng, Z.; Rupnow, K.; Chen, D. Machine Learning on FPGAs to Face the IoT Revolution. In Proceedings of the 2017 IEEE/ACM International Conference on Computer-Aided Design (ICCAD), Irvine, CA, USA, 13–16 November 2017; pp. 819–826. Available online: https://ieeexplore.ieee.org/document/8203862 (accessed on 31 March 2025).

- Neshatpour, K.; Makrani, H.M.; Sasan, A.; Ghasemzadeh, H.; Rafatirad, S.; Homayoun, H. Design Space Exploration for Hardware Acceleration of Machine Learning Applications in MapReduce. In Proceedings of the 2018 IEEE 26th Annual International Symposium on Field-Programmable Custom Computing Machines (FCCM), Boulder, CO, USA, 29 April–2 May 2018; p. 221. Available online: https://ieeexplore.ieee.org/document/8457670 (accessed on 31 March 2025).

- Leipnitz, M.T.; Nazar, G.L. High-Level Synthesis of Resource-Oriented Approximate Designs for FPGAs. In Proceedings of the 56th Annual Design Automation Conference (DAC ’19), Las Vegas, NV, USA, 2–6 June 2019; Association for Computing Machinery: New York, NY, USA, 2019; pp. 1–6.

- AMD-Xilinx. SmartConnect v1.0 LogiCORE IP Product Guide (PG247). Available online: https://docs.amd.com/r/en-US/pg247-smartconnect/SmartConnect-v1.0-LogiCORE-IP-Product-Guide (accessed on 31 March 2025).

- Nayak, R.J.; Chavda, J.B. Proficient Design Space Exploration of ZYNQ SoC Using VIVADO Design Suite: Custom Design of High Performance AXI Interface for High Speed Data Transfer between PL and DDR Memory Using Hardware-Software Co-Design. Int. J. Appl. Eng. Res. 2018, 13, 8991–8997.

- Sklyarov, V.; Skliarova, I. Exploration of High-Performance Ports in Zynq-7000 Devices with Different Traffic Conditions. In Proceedings of the 2017 5th International Conference on Electrical Engineering—Boumerdes (ICEE-B), Boumerdes, Algeria, 29–31 October 2017; pp. 1–4. Available online: https://ieeexplore.ieee.org/document/8192204 (accessed on 31 March 2025).

- AMD-Xilinx. Zynq 7000 SoC Technical Reference Manual (UG585). Available online: https://docs.amd.com/r/en-US/ug585-zynq-7000-SoC-TRM (accessed on 31 March 2025).

- AMD-Xilinx. Fast Fourier Transform LogiCORE IP Product Guide (PG109). Available online: https://docs.amd.com/r/en-US/pg109-xfft/Introduction (accessed on 31 March 2025).

| Tool | Owner | License: A | License: C | Release Year * | Target: ASIC | Target: FPGA | Target: Vendor-Specific | Input: C | Input: C++ | Input: SystemC | Input: Other | Output: Verilog | Output: VHDL | Output: SystemC |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Catapult HLS | Mentor Graphics (Wilsonville, OR, USA) | ✓ | 2004 | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ||||

| Bluespec | Bluespec, Inc. (Framingham, MA, USA) | ✓ | 2004 | ✓ | ✓ | BSV | ✓ | ✓ | ||||||

| Synphony HLS | Synopsys, Inc. (Sunnyvale, CA, USA) | ✓ | 2009 | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | |||||

| ROCCC | UC Riverside | ✓ | 2010 | ✓ | ✓ | ✓ | ||||||||

| LegUp | University of Toronto/Microchip Technology | ✓ | 2011 | ✓ | Microchip (Chandler, AZ, USA) | ✓ | ✓ | |||||||

| PandA-Bambu | Polytechnic University of Milan | ✓ | 2012 | ✓ | ✓ | ✓ | ||||||||

| Vivado HLS | AMD-Xilinx (San Jose, CA, USA) | ✓ | 2012 | ✓ | AMD-Xilinx (San Jose, CA, USA) | ✓ | ✓ | ✓ | ✓ | ✓ | ||||

| HDL Coder | The MathWorks, Inc. (Natick, MA, USA) | ✓ | 2012 | ✓ | ✓ | Xilinx (San Jose, CA, USA), Altera (San Jose, CA, USA), and Microsemi (Aliso Viejo, CA, USA) | Matlab, Simulink | ✓ | ✓ | |||||

| Stratus HLS | Cadence Design Systems (San Jose, CA, USA) | ✓ | 2014 | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | |||||

| Intel HLS Compiler | Intel (Altera) Corporation (San Jose, CA, USA) | ✓ | 2017 | ✓ | Intel (Santa Clara, CA, USA) | ✓ | ✓ | OpenCL † | ✓ | |||||

| Kiwi | University of Cambridge | ✓ | 2017 | ✓ | Xilinx (San Jose, CA, USA), and Altera (San Jose, CA, USA) | C# (.NET bytecode) | ✓ | ✓ | ||||||

| Vitis HLS | AMD-Xilinx (San Jose, CA, USA) | ✓ | 2019 | ✓ | AMD-Xilinx (San Jose, CA, USA) | ✓ | ✓ | ✓ | OpenCL ‡ | ✓ | ✓ | |||

| Directives | Optimization Goals | Results | |||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|

| AHA IP-Core | PIPELINE | UNROLL | Array Opt. | INLINE | Perf. Opt. | Area Opt. | Challenges | Cycle Reduction | Final II ¹ | Resources | Outcome |

| Matrix Multiplication | Yes | Yes | PARTITION (Full) | No | High | Medium | Trade-off between initiation interval and resources | High | 1 | Mod. | Full loop pipelining and matrix partitioning enabled maximum throughput |

| FFT | Yes | No | PARTITION (Partial) ² | No | Medium | Low | Unrolling limited by variable loop bounds | Moderate | N/A | Low | Performance constrained by loop structure |

| AES | Yes | Yes | PARTITION (S-box) ³ | Yes | High | Medium | Controlled reuse of sub-functions | High | 1 | Mod. | Round-level pipelining and parallel S-box access improved throughput |

| Backpropagation | Limited ⁴ | Failed ⁵ | RESHAPE | No | Low | Medium | Synthesis failed with full unroll due to complexity | Low | N/A | Low | Simplified structure with partial reshape allowed synthesis |

| ANN | Yes | Yes | PARTITION (Weights) ⁶ | No | High | Medium | Layered structure enabled pipelining across layers | High | 1 | Mod. | Efficient layer-wise acceleration through pipelining and unrolling |
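
The Matrix Multiplication row combines PIPELINE, UNROLL, and full array partitioning. The following is a minimal sketch, not the authors' source, of how those directives could be written as Vivado HLS pragmas on a 2 × 2 product of 32-bit integers; the function, loop, and macro names are hypothetical. A host C compiler ignores the `#pragma HLS` lines, so the same file also works as a C-simulation model, and the hypothetical `matmul_2x2_selfcheck` plays the role of a small testbench.

```c
#include <assert.h>
#include <stdint.h>

#define MAT_N 2 /* order of the matrices handled by the Matrix Multiplication core */

/* Hypothetical HLS top-level function (illustrative names, not the authors' code).
 * Vivado HLS interprets the #pragma HLS lines; a host compiler ignores them. */
void matmul_2x2(int32_t a[MAT_N][MAT_N], int32_t b[MAT_N][MAT_N],
                int32_t c[MAT_N][MAT_N])
{
    /* Complete partitioning of every dimension (dim=0) gives each element its
     * own register/port, so all operands of a dot product can be read at once. */
#pragma HLS ARRAY_PARTITION variable=a complete dim=0
#pragma HLS ARRAY_PARTITION variable=b complete dim=0
#pragma HLS ARRAY_PARTITION variable=c complete dim=0
row:
    for (int i = 0; i < MAT_N; i++) {
col:
        for (int j = 0; j < MAT_N; j++) {
#pragma HLS PIPELINE II=1
            /* Request one new (i, j) output per clock cycle (II = 1). */
            int32_t acc = 0;
prod:
            for (int k = 0; k < MAT_N; k++) {
#pragma HLS UNROLL
                /* Fully unrolled dot product; PIPELINE on the enclosing loop
                 * would also unroll this inner loop automatically. */
                acc += a[i][k] * b[k][j];
            }
            c[i][j] = acc;
        }
    }
}

/* Host-side check in the spirit of a C-simulation testbench. */
void matmul_2x2_selfcheck(void)
{
    int32_t a[MAT_N][MAT_N] = {{1, 2}, {3, 4}};
    int32_t b[MAT_N][MAT_N] = {{5, 6}, {7, 8}};
    int32_t c[MAT_N][MAT_N];
    matmul_2x2(a, b, c);
    assert(c[0][0] == 19 && c[0][1] == 22);
    assert(c[1][0] == 43 && c[1][1] == 50);
}
```

Placing PIPELINE on the `col` loop rather than on the function keeps the sketch close to the "full loop pipelining" described in the table; whether a given placement reaches II = 1 depends on the synthesis report, not on the pragma alone.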

| AHA IP-Core | Source | Description | Data Type | Lines | Functions | Variables | Statements | Hardware Characteristics of IP-Cores | ||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Array | Scalar | For | If | Switch | Goto | Resource Utilization | Data Dependencies | Multiple Iterations | ||||||

| Matrix multiplication | Custom coded | Multiplication of matrices of order 2 × 2 | 2D array of 32-bit integers | 23 | 1 | 3 | 3 | 3 | ●○○○ | ●○○○ | ○○○○ | |||

| Fast Fourier Transform (FFT) | MachSuite | 1024-point FFT by the Cooley-Tukey method | Array of 64-bit doubles | 35 | 1 | 4 | 7 | 2 | ●●○○ | ●●●● | ○○○○ | |||

| Advanced Encryption Standard (AES) | CHStone | Encryption and decryption of a 128-bit vector with a 128-bit key | Array of 32-bit integers | 716 | 11 | 11 | 345 | 24 | 26 | 10 | 37 | ●●●○ | ●●○○ | ●●●○ |

| Back-Propagation Neural Network (BPNN) | MachSuite | Training a two-layer artificial neural network with ten neurons each | Array of 64-bit doubles | 314 | 14 | 72 | 19 | 41 | 1 | ●●●● | ●●○○ | ●●●○ | ||

| Artificial Neural Network (ANN) | Custom coded | Classification of handwritten numbers in images of 20 × 20 pixels | Array of 64-bit doubles | 35 | 1 | 10 | 4 | 4 | ●●●○ | ●●○○ | ●○○○ | |||

| SoC Architecture | ||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Configuration | Development Board: Digilent Zybo | Development Board: Xilinx ZC702 Eval. Kit | Zynq Device: Z-7010 | Zynq Device: Z-7020 | CPU: ARM Cortex-A9 * | Freq (MHz): 650 | Freq (MHz): 667 | AHA IP-Core: None | AHA IP-Core: Default | AHA IP-Core: Optimized | OS: BSP | App. Code: C | Compiler: GCC GNU 4.4 |

| ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | |||||||

| ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | |||||||

| ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | |||||||

| ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | |||||||

| ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | |||||||

| IP Cores | |||||

|---|---|---|---|---|---|

| Benchmark | Default (DEF) | | Optimized (OPT) | | Speedup |

| | Cycles † | Freq (MHz) | Cycles † | Freq (MHz) | |

| Matrix Mult. | 85 | 125 | 7 | 125 | 12.14× |

| FFT | 122,901 | 100 | N/A * | N/A * | N/A * |

| AES | 4118 | 100 | 928 | 100 | 4.44× |

| BPNN | 464,387,001 | 100 | 82,890,001 | 100 | 5.60× |

| ANN | 92,193 | 100 | 32,298 | 100 | 2.85× |
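
The Speedup column is the ratio of default to optimized cycle counts. That ratio equals a time ratio here because each benchmark keeps the same clock frequency in both versions, and dividing a cycle count by the clock in MHz gives the core's latency in microseconds. A small C sketch of both calculations follows; the helper names are hypothetical.

```c
#include <assert.h>
#include <stdint.h>

/* Speedup of the optimized (OPT) core over the default (DEF) core, both given
 * in clock cycles. Valid as a time ratio only when both run at the same clock. */
double cycle_speedup(uint64_t def_cycles, uint64_t opt_cycles)
{
    assert(opt_cycles > 0);
    return (double)def_cycles / (double)opt_cycles;
}

/* Estimated core latency in microseconds: cycles / f, with f in MHz
 * (1 MHz = 1 cycle per microsecond). Counts only the core's own cycles. */
double latency_us(uint64_t cycles, double freq_mhz)
{
    assert(freq_mhz > 0.0);
    return (double)cycles / freq_mhz;
}
```

For example, `cycle_speedup(85, 7)` gives about 12.14, matching the Matrix Mult. row, and `latency_us(464387001, 100.0)` gives about 4.64 × 10⁶ µs for the default BPNN core. This estimate excludes data transfers and driver overhead on the processing system, so it is a lower bound on the end-to-end time of a call.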

| AHA IP-Cores | ||||||||

|---|---|---|---|---|---|---|---|---|

| Benchmark | Default (DEF) | | | | Optimized (OPT) | | | |

| | BRAM | DSP48 | FF | LUT | BRAM | DSP48 | FF | LUT |

| Matrix Mult. | 6 | 4 | 434 | 350 | 0 | 32 | 1012 | 936 |

| FFT | 16 | 56 | 4544 | 7783 | N/A * | N/A * | N/A * | N/A * |

| AES | 16 | 8 | 4358 | 9128 | 32 | 0 | 35,165 | 53,379 |

| BPNN | 19 | 148 | 28,561 | 40,262 | 26 | 168 | 61,546 | 53,345 |

| ANN | 19 | 34 | 7083 | 10,296 | 18 | 132 | 16,410 | 23,705 |
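
To relate the resource counts above to a target device, each count can be divided by the device capacity. The sketch below assumes the programmable-logic capacities listed in AMD-Xilinx's Zynq-7000 product tables for the Z-7010 (17,600 LUTs, 35,200 FFs, 80 DSP48 slices) and the Z-7020 (53,200 LUTs, 106,400 FFs, 220 DSP48 slices). BRAM is left out because the table does not state whether it counts 18 Kb or 36 Kb blocks. The type, constant, and function names are hypothetical.

```c
#include <assert.h>

/* Programmable-logic capacity of a Zynq-7000 device (assumed values from the
 * AMD-Xilinx Zynq-7000 product tables). BRAM is deliberately omitted. */
typedef struct {
    const char *name;
    unsigned luts;
    unsigned ffs;
    unsigned dsps; /* DSP48E1 slices */
} zynq_device;

const zynq_device Z7010 = {"Z-7010", 17600u, 35200u, 80u};
const zynq_device Z7020 = {"Z-7020", 53200u, 106400u, 220u};

/* Percentage of one device resource consumed by an IP-core. */
double utilization_pct(unsigned used, unsigned available)
{
    assert(available > 0u);
    return 100.0 * (double)used / (double)available;
}
```

For instance, the optimized ANN core's 23,705 LUTs correspond to about 44.6% of a Z-7020; a result above 100% would mean the reported count exceeds that device's capacity.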

| Zynq SoC Architectures, with AHA IP Cores | |||||||||

|---|---|---|---|---|---|---|---|---|---|

| Benchmark | |||||||||

| | t (µs) | t (µs) | S | t (µs) | S | t (µs) | S | t (µs) | S |

| Matrix Mult. | 1.52 × 10¹ | 2.80 × 10⁰ | 5.43× | 2.63 × 10⁰ | 5.78× | 1.97 × 10⁰ | 7.72× | 1.95 × 10⁰ | 7.79× |

| FFT | 2.04 × 10⁴ | 1.23 × 10³ | 16.57× | 1.23 × 10³ | 16.57× | N/A * | N/A * | N/A * | N/A * |

| AES | 2.14 × 10³ | 4.46 × 10¹ | 47.98× | 4.35 × 10¹ | 49.18× | N/A † | N/A † | 1.26 × 10¹ | 169.84× |

| BPNN | 2.00 × 10⁷ | N/A † | N/A † | 4.64 × 10⁶ | 4.31× | N/A † | N/A † | 8.29 × 10⁵ | 24.13× |

| ANN | 8.45 × 10³ | 9.24 × 10² | 9.14× | 9.24 × 10² | 9.14× | N/A † | N/A † | 3.26 × 10² | 25.92× |
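
Each S value in the table above is the software-only time divided by the time of the corresponding accelerated configuration. The sketch below shows one way to collect such times and compute S. It uses POSIX `clock_gettime` so that it runs on a host; a bare-metal Zynq application would instead read a hardware timer (for example through `XTime_GetTime` in the AMD-Xilinx standalone BSP). The helper names and the workload callback are hypothetical.

```c
#define _POSIX_C_SOURCE 199309L /* expose clock_gettime() in <time.h> */
#include <assert.h>
#include <time.h>

/* Microseconds elapsed between two timestamps. */
double elapsed_us(struct timespec start, struct timespec end)
{
    return (double)(end.tv_sec - start.tv_sec) * 1e6 +
           (double)(end.tv_nsec - start.tv_nsec) / 1e3;
}

/* Time a single call of a workload (hypothetical callback, e.g. a software
 * routine, or a driver call that starts an IP-core and waits for it). */
double time_call_us(void (*work)(void))
{
    struct timespec t0, t1;
    clock_gettime(CLOCK_MONOTONIC, &t0);
    work();
    clock_gettime(CLOCK_MONOTONIC, &t1);
    return elapsed_us(t0, t1);
}

/* Speedup S of an accelerated configuration over the software-only baseline. */
double speedup(double t_sw_us, double t_hw_us)
{
    assert(t_hw_us > 0.0);
    return t_sw_us / t_hw_us;
}
```

With the table's values, `speedup(15.2, 1.95)` is about 7.79 and `speedup(2140.0, 12.6)` about 169.84, matching the Matrix Mult. and AES entries of the last configuration.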


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Berrazueta-Mena, D.; Navas, B. AHA: Design and Evaluation of Compute-Intensive Hardware Accelerators for AMD-Xilinx Zynq SoCs Using HLS IP Flow. Computers 2025, 14, 189. https://doi.org/10.3390/computers14050189
