Generation of Natural-Language Explanations for Static-Analysis Warnings Using Single- and Multi-Objective Optimization

Abstract

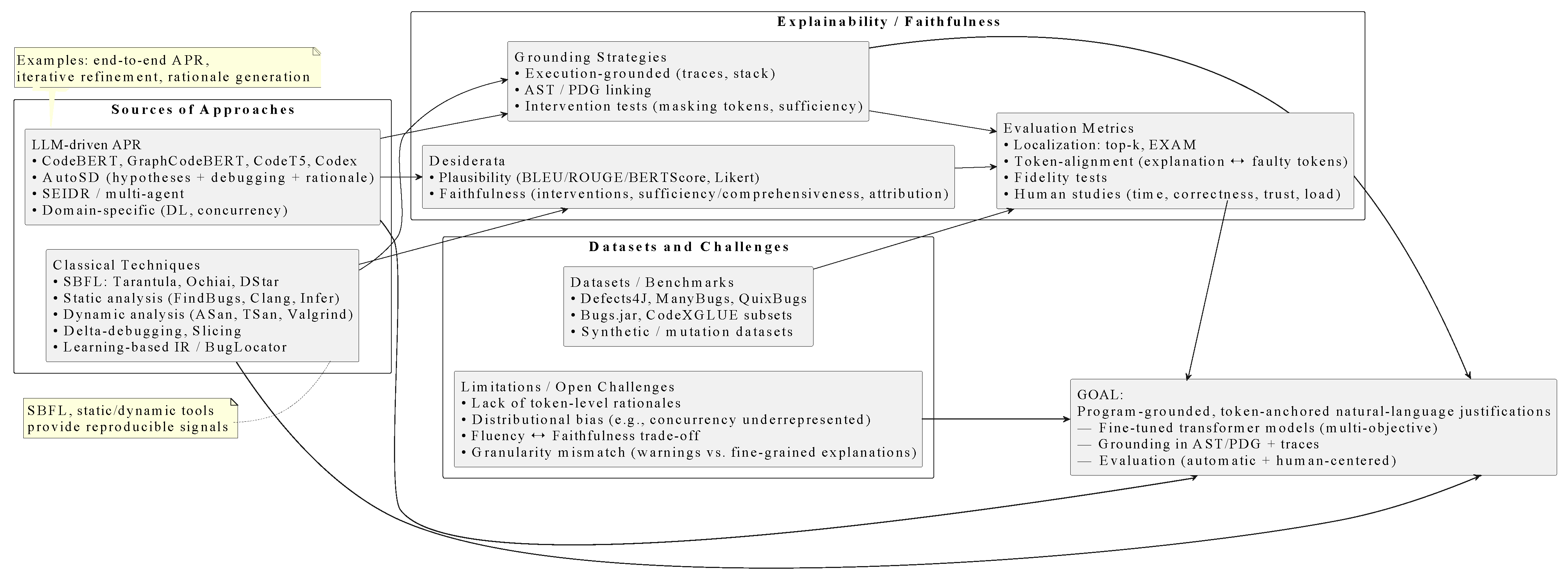

1. Introduction

- A framework that integrates multi-source diagnostic signals with conditioned language-model generation to produce natural-language justifications for code warnings.

- A taxonomy of explanation types for localization results, derived from developer-oriented requirements and empirical observation.

- An empirical assessment of the framework demonstrating its effects on localization metrics and on developer validation performance.

2. Related Work

3. Approach

Example 1—Faithfulness. A null-pointer dereference warning was issued for the statement if (user.getName().length() > 3). The generated explanation indicated that “getName() may return null”. When this phrase was removed, the model’s severity prediction dropped from high to low, demonstrating a causal link between the explanation and the prediction. The confidence reduction (18%) resulted in a faithfulness score of 0.82.

Example 2—Causal-Probe Sensitivity. For a resource-leak warning, the explanation stated that “the file stream is not closed”. When only the phrase “not closed” was masked, the prediction weakened by 41% (sensitivity score 0.41). Masking an unrelated phrase (“during execution”) produced no significant change, showing that the metric selectively responds to semantically relevant tokens.
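The arithmetic behind the two examples can be illustrated with a small masking probe. This is a minimal sketch rather than the paper's implementation: it assumes the score is the relative drop in model confidence after a phrase is masked, and the class name, the `[MASK]` token, and the confidence values are hypothetical. The reported faithfulness of 0.82 in Example 1 equals one minus the 18% drop numerically, but the exact definition is not given in this excerpt.

```java
/** Illustrative masking probe; names and values are hypothetical. */
final class MaskingProbeSketch {

    /** Relative drop in model confidence after a phrase is masked or removed. */
    static double relativeDrop(double confidenceFull, double confidenceMasked) {
        if (confidenceFull <= 0.0) {
            throw new IllegalArgumentException("confidenceFull must be positive");
        }
        return (confidenceFull - confidenceMasked) / confidenceFull;
    }

    /** Replaces one phrase of the explanation with a placeholder token. */
    static String mask(String explanation, String phrase) {
        return explanation.replace(phrase, "[MASK]");
    }

    public static void main(String[] args) {
        // Assumed confidences, chosen so the drops match the figures quoted above.
        double example1Drop = relativeDrop(1.00, 0.82); // about 0.18
        double example2Drop = relativeDrop(1.00, 0.59); // about 0.41
        System.out.println(example1Drop);
        System.out.println(example2Drop);
        System.out.println(mask("the file stream is not closed", "not closed"));
    }
}
```

A real probe would obtain the two confidences by running the severity model on the original and the masked explanation; here they are supplied by hand.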

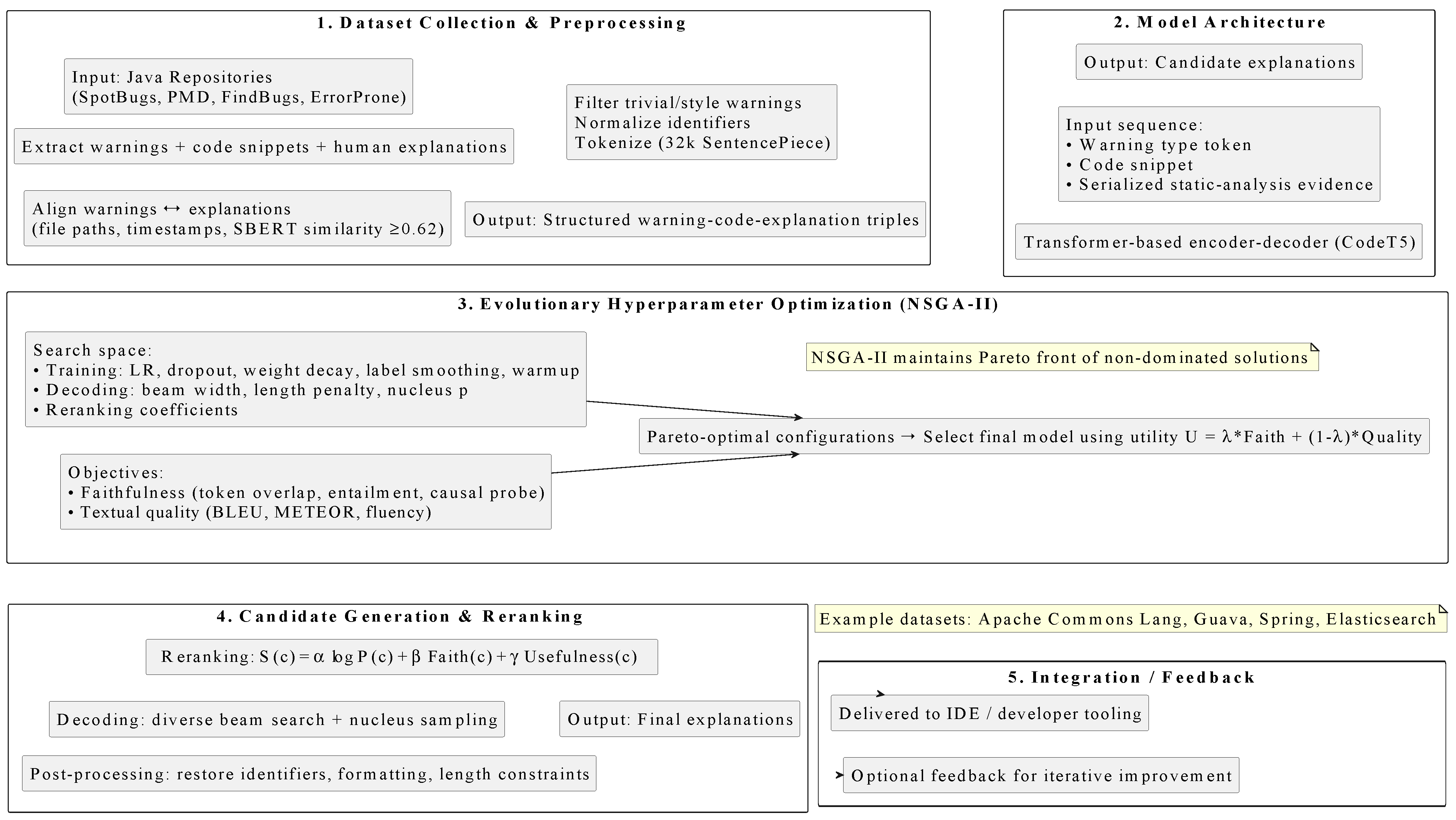

3.1. Dataset Collection and Preprocessing

3.2. Model Architecture and Single-Objective Optimization

3.3. Model Architecture and Multi-Objective Optimization

3.4. Candidate Generation and Reranking

4. Experimental Setup

5. Results

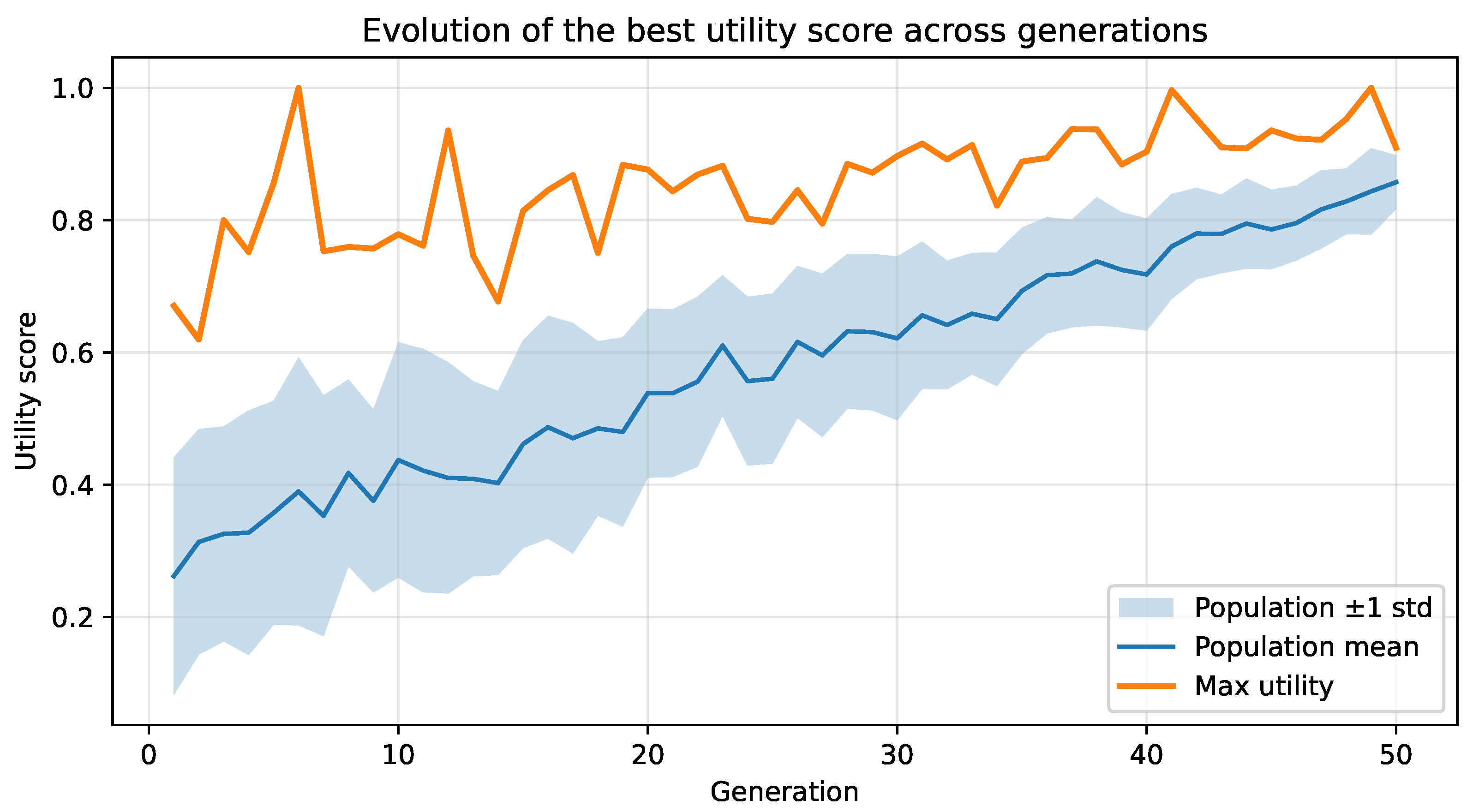

5.1. Single-Objective Results

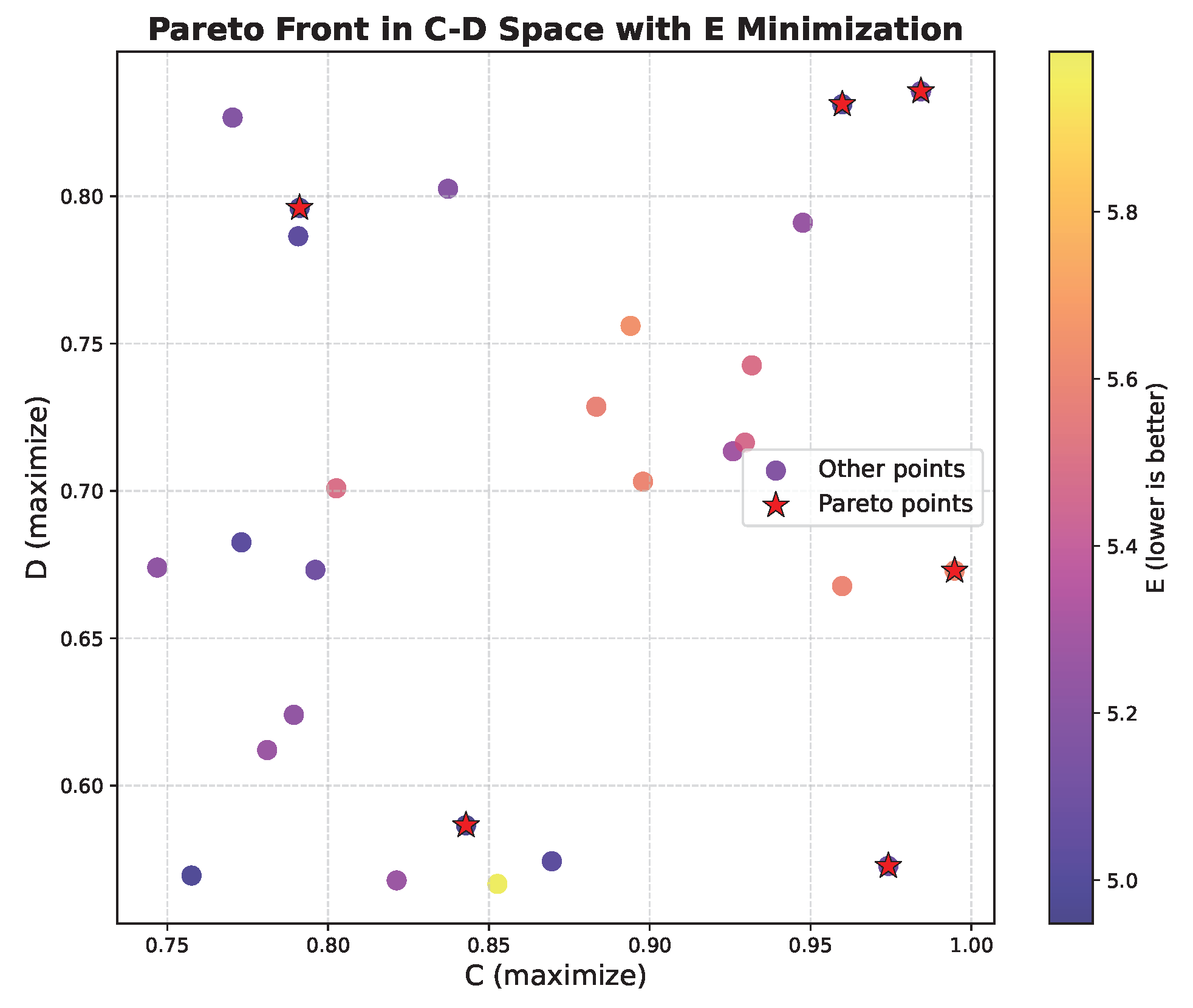

5.2. Multi-Objective Results

6. Discussion

7. Conclusions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A. Representative Examples

Example 1—Null Dereference (SpotBugs ID: NP_NULL_ON_SOME_PATH).

Warning: Possible NullPointerException in method getUserName().

Code snippet:

String name = user.getName();

return name.toUpperCase();

Final explanation (dataset entry): “The call to getName() may return null, and toUpperCase() is invoked without checking it, which can trigger a NullPointerException.”

This warning passed the filtering stage because the static analyzer provided a deterministic issue ID and a clear data-flow path pointing to the risky call site.
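For readers who want to see what a fix for this warning might look like, the following sketch guards the dereference. It is not taken from the dataset: the `User` class is a hypothetical stand-in, and returning an empty string for a missing name is an assumption made only for illustration.

```java
/** Illustrative null guard for the snippet above; not part of the dataset. */
final class NullGuardSketch {

    /** Hypothetical stand-in for the User type used in the snippet. */
    static final class User {
        private final String name;

        User(String name) {
            this.name = name;
        }

        String getName() {
            return name; // may be null
        }
    }

    /** Guarded variant: checks the result of getName() before calling toUpperCase(). */
    static String getUserName(User user) {
        String name = user.getName();
        if (name == null) {
            return ""; // assumption: an empty string is an acceptable fallback
        }
        return name.toUpperCase();
    }

    public static void main(String[] args) {
        System.out.println(getUserName(new User("ada")));
        System.out.println(getUserName(new User(null)).isEmpty());
    }
}
```

Whether a fallback value, an exception, or an `Optional` return type is appropriate depends on the calling code, which the excerpt does not show.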

Example 2—Resource Leak (SpotBugs ID: OS_OPEN_STREAM).

Warning: Stream opened in method loadFile() is not closed.

Code snippet:

InputStream in = new FileInputStream(path);

data = read(in);

return data;

Final explanation (dataset entry): “The input stream opened in loadFile() is not closed after reading, which may result in a resource leak.”

The warning survived filtering because it refers to a statically traceable resource-management pattern and can be linked to a specific program location.
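One common way to address this kind of warning is try-with-resources, which closes the stream on both the normal and the exceptional path. The sketch below is illustrative only: the class name is hypothetical, and `readAllBytes()` (Java 9 and later) stands in for the unspecified `read(in)` helper from the snippet.

```java
import java.io.FileInputStream;
import java.io.IOException;
import java.io.InputStream;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;

/** Illustrative fix for the resource-leak snippet; not part of the dataset. */
final class StreamCloseSketch {

    /** Reads the whole file; the stream is closed when the try block exits. */
    static byte[] loadFile(String path) throws IOException {
        try (InputStream in = new FileInputStream(path)) {
            return in.readAllBytes(); // stand-in for read(in)
        }
    }

    public static void main(String[] args) throws IOException {
        Path tmp = Files.createTempFile("load-file-sketch", ".txt");
        Files.write(tmp, "abc".getBytes(StandardCharsets.UTF_8));
        System.out.println(loadFile(tmp.toString()).length); // 3
        Files.delete(tmp);
    }
}
```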

| Warning Type | Extracted Explanation (from PR/Commit) | Code Snippet (Centered, Shortened) |
|---|---|---|
| Null dereference | “Null pointer when ‘config.getConnector()’ may return null; add null-check or guard.” | Connector c = config.getConnector(); c.start(); // NPE if null |
| Resource leak | “InputStream not closed on exception path in ‘readConfig’; close in finally or use try-with-resources.” | InputStream in = new FileInputStream(f); // missing close() on error path |
| API misuse | “Using ‘List.remove(Object)’ with index value; likely intended ‘removeAt(index)’; causes unexpected element removal.” | list.remove(i); // i is int index; ambiguous call leads to wrong element removal |
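The API-misuse row turns on overload resolution in `java.util.List`, which declares both `remove(int index)` and `remove(Object o)`. The standard interface has no `removeAt` method; that name appears only in the quoted explanation. The sketch below, with hypothetical class and method names, shows how the two overloads differ on a `List<Integer>`.

```java
import java.util.ArrayList;
import java.util.List;

/** Illustrates the two List.remove overloads; names are hypothetical. */
final class ListRemoveSketch {

    /** Calls List.remove(int): removes the element at the given position. */
    static List<Integer> removeAtIndex(List<Integer> values, int index) {
        List<Integer> copy = new ArrayList<>(values);
        copy.remove(index);
        return copy;
    }

    /** Calls List.remove(Object): removes the first element equal to the value. */
    static List<Integer> removeValue(List<Integer> values, int value) {
        List<Integer> copy = new ArrayList<>(values);
        copy.remove(Integer.valueOf(value)); // boxing explicitly selects the Object overload
        return copy;
    }

    public static void main(String[] args) {
        List<Integer> values = List.of(10, 20, 30, 1);
        System.out.println(removeAtIndex(values, 1)); // [10, 30, 1]
        System.out.println(removeValue(values, 1));   // [10, 20, 30]
    }
}
```

With an `int` argument the compiler picks `remove(int)`, so the caller's intent has to be made explicit whenever the list holds integers.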

| Code Snippet (10 Lines) | Explanation | Labels |
|---|---|---|
| 1:public void processData(Data input) 2:List<String> results = loadData(input); 3:for(String item : results) 4:System.out.println(item.length()); 5: 6: | “The variable results may be null if loadData() returns null; a null check is required before iterating.” | Relevant lines: 2–4; Type: Suspected Root Cause + Remediation Hint; Correctness: Correct |
| 1:private Map<String, Integer> cacheMap; 2:public void updateCache(String key, int val) 3:cacheMap.put(key, val); 4: | “Concurrent access to cacheMap is unsynchronized; use ConcurrentHashMap or synchronize access.” | Relevant lines: 1–3; Type: Suspected Root Cause + Remediation Hint; Correctness: Correct |
| 1:FileInputStream fis = new FileInputStream(file); 2:// process file 3:// missing close | “FileInputStream is not closed; this may cause resource leaks.” | Relevant lines: 1–3; Type: Symptom + Remediation Hint; Correctness: Correct |
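The remediation hint in the second row (use `ConcurrentHashMap`) can be sketched as follows. This is an illustration under assumptions, not the labelled project's code: the class name, the `size()` accessor, and the two-thread driver are hypothetical additions around the `cacheMap` and `updateCache` names from the table.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

/** Illustrative thread-safe variant of the cache snippet; driver code is hypothetical. */
final class CacheSketch {

    // ConcurrentHashMap makes individual put/get calls safe without external locking.
    private final Map<String, Integer> cacheMap = new ConcurrentHashMap<>();

    void updateCache(String key, int val) {
        cacheMap.put(key, val);
    }

    int size() {
        return cacheMap.size();
    }

    /** Two threads insert disjoint keys; returns the final number of entries. */
    static int fillFromTwoThreads(int perThread) {
        CacheSketch cache = new CacheSketch();
        Thread a = new Thread(() -> {
            for (int i = 0; i < perThread; i++) cache.updateCache("a" + i, i);
        });
        Thread b = new Thread(() -> {
            for (int i = 0; i < perThread; i++) cache.updateCache("b" + i, i);
        });
        a.start();
        b.start();
        try {
            a.join();
            b.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while waiting for writers", e);
        }
        return cache.size();
    }

    public static void main(String[] args) {
        System.out.println(fillFromTwoThreads(1000)); // 2000: no update is lost
    }
}
```

Compound operations such as check-then-put still need `computeIfAbsent` or similar atomic methods; a concurrent map only guarantees the safety of each single call.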

| Batch size | Decoding | Epochs | Learning rate | Max tokens | Temperature | Top-k | Top-p | Utility U |
|---|---|---|---|---|---|---|---|---|
| 12 | nucleus | 3 | 0.000392451 | 42 | 0.4731 | 182 | 0.8654 | 0.941 |
| 12 | nucleus | 4 | 0.000389308 | 47 | 0.3845 | 178 | 0.9562 | 0.929 |
| 14 | sampling | 3 | 0.000451932 | 70 | 0.5187 | 169 | 0.1542 | 0.922 |
| 14 | sampling | 3 | 0.000446128 | 72 | 0.5449 | 171 | 0.0935 | 0.931 |
| 29 | sampling | 3 | 0.000405613 | 138 | 0.1316 | 45 | 1.0000 | 0.955 |
| 29 | sampling | 3 | 0.000405613 | 138 | 0.1316 | 45 | 0.9841 | 0.963 |
| 13 | nucleus | 4 | 0.000372406 | 41 | 0.4515 | 183 | 0.9440 | 0.884 |
| 19 | nucleus | 4 | 0.000483574 | 214 | 0.4296 | 22 | 0.0721 | 0.865 |
| 22 | sampling | 4 | 0.000462301 | 34 | 0.2614 | 65 | 0.0587 | 0.872 |
| 23 | nucleus | 5 | 0.000289793 | 231 | 0.2948 | 139 | 0.5365 | 0.951 |
| 28 | sampling | 5 | 0.0000154021 | 69 | 0.6224 | 98 | 0.6483 | 0.947 |

| Batch size | Decoding | Epochs | Learning rate | Max tokens | Temperature | Top-k | Top-p | C | D | E |
|---|---|---|---|---|---|---|---|---|---|---|
| 10 | nucleus | 3 | 0.000376507 | 36 | 0.4463 | 189 | 0.8823 | 0.9495 | 0.6387 | 4.9691 |
| 10 | nucleus | 4 | 0.000376819 | 36 | 0.3700 | 189 | 0.9711 | 0.9096 | 0.6179 | 5.2911 |
| 11 | sampling | 3 | 0.000438291 | 65 | 0.5340 | 164 | 0.1369 | 0.9316 | 0.7554 | 5.2562 |
| 11 | sampling | 3 | 0.000431242 | 65 | 0.5364 | 164 | 0.0763 | 0.9173 | 0.8286 | 5.2693 |
| 31 | sampling | 3 | 0.000417897 | 145 | 0.1185 | 38 | 1.0 | 0.8977 | 0.6589 | 5.4920 |
| 31 | sampling | 3 | 0.000417897 | 145 | 0.1185 | 38 | 0.9699 | 0.9850 | 0.7346 | 5.5802 |
| 10 | nucleus | 4 | 0.000368677 | 36 | 0.4371 | 189 | 0.9507 | 0.7949 | 0.6954 | 5.1831 |
| 18 | nucleus | 4 | 0.000465528 | 205 | 0.4560 | 15 | 0.0479 | 0.9052 | 0.7241 | 5.0292 |
| 18 | nucleus | 4 | 0.000487121 | 205 | 0.5178 | 15 | 0.0494 | 0.9503 | 0.5617 | 5.1944 |
| 18 | nucleus | 4 | 0.000485128 | 205 | 0.4639 | 15 | 0.0748 | 0.7825 | 0.5551 | 5.2367 |
| 10 | nucleus | 4 | 0.000374806 | 36 | 0.3741 | 189 | 0.9992 | 0.8917 | 0.8030 | 4.9812 |
| 11 | sampling | 3 | 0.000438272 | 65 | 0.5364 | 164 | 0.0763 | 0.7898 | 0.7869 | 5.0842 |
| 10 | nucleus | 4 | 0.000376507 | 36 | 0.4463 | 189 | 0.9992 | 0.7674 | 0.6642 | 5.0241 |
| 11 | sampling | 3 | 0.000438272 | 65 | 0.5364 | 164 | 0.0763 | 0.7885 | 0.6505 | 4.9947 |
| 10 | sampling | 3 | 0.000329176 | 203 | 0.3780 | 111 | 0.0965 | 0.7810 | 0.7174 | 5.5986 |
| 27 | sampling | 5 | 0.000434426 | 119 | 0.2404 | 100 | 0.0581 | 0.8806 | 0.6128 | 5.9915 |
| 29 | sampling | 3 | 0.000417897 | 145 | 0.1185 | 38 | 0.9699 | 0.8751 | 0.8043 | 5.2680 |
| 21 | sampling | 4 | 0.000447549 | 29 | 0.2511 | 60 | 0.0465 | 0.8693 | 0.5743 | 5.4706 |
| 10 | sampling | 3 | 0.00035257 | 203 | 0.3765 | 111 | 0.3641 | 0.9158 | 0.6141 | 5.4920 |
| 21 | nucleus | 4 | 0.000277888 | 236 | 0.3805 | 54 | 0.5201 | 0.7602 | 0.7503 | 5.6160 |
| 11 | sampling | 3 | 0.000455567 | 65 | 0.5457 | 164 | 0.0344 | 0.7592 | 0.6364 | 5.1011 |
| 10 | sampling | 3 | 0.000345274 | 203 | 0.3742 | 111 | 0.0977 | 0.8622 | 0.8452 | 5.0360 |
| 21 | sampling | 4 | 0.000474954 | 29 | 0.2535 | 60 | 0.0651 | 0.9130 | 0.6195 | 5.1061 |
| 22 | nucleus | 5 | 0.000300283 | 244 | 0.2797 | 131 | 0.5142 | 0.7739 | 0.6069 | 5.6472 |
| 18 | nucleus | 4 | 0.00048714 | 205 | 0.4599 | 15 | 0.0467 | 0.7688 | 0.7526 | 4.9480 |
| 30 | sampling | 5 | 0.0000134625 | 64 | 0.6557 | 89 | 0.6117 | 0.8582 | 0.5745 | 5.6032 |
| 18 | nucleus | 4 | 0.0005 | 205 | 0.4603 | 15 | 0.0232 | 0.8778 | 0.8149 | 5.2272 |

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Malashin, I. Generation of Natural-Language Explanations for Static-Analysis Warnings Using Single- and Multi-Objective Optimization. Computers 2025, 14, 534. https://doi.org/10.3390/computers14120534