1. Financial Market Crises

Financial crises, speculative bubbles, and market crashes have repeatedly disrupted both advanced and emerging economies. These events, though varied in form, commonly involve sharp asset price swings, financial intermediation breakdowns, and credit contractions, often leading to prolonged recessions and significant declines in consumption, investment, and employment [1].

The origins of such crises are debated. Exogenous theories attribute fluctuations to external forces, including monetary policy shifts and technological change. Slutzky [2] modeled cycles as the result of random shocks forming regular patterns. Friedman [3] argued that monetary expansion fuels inflation and asset bubbles, while contractionary policy triggers downturns. Kydland and Prescott [4] identified technological shocks as dominant macroeconomic drivers.

Endogenous theories, in contrast, locate the roots of crises within financial systems. Minsky’s Financial Instability Hypothesis [5] suggests that stability breeds risk-taking, ultimately leading to fragility. Shiller [6] emphasized behavioral factors, such as cognitive biases and herd dynamics, that distort investor judgment and fuel speculative excess.

The dot-com bubble exemplified these dynamics. Investor euphoria over internet companies led to extreme valuations, with firms adding ’.com’ to their names experiencing price surges of over 50% despite no substantive changes [7]. Shiller [6] characterized this as ’irrational exuberance’ driven by media amplification and social contagion. When expectations failed to materialize, the bubble collapsed.

The 2007–2008 crisis originated in the US subprime mortgage market amid low interest rates and capital inflows, creating a housing bubble [8]. The collapses of Bear Stearns and Lehman Brothers triggered systemic panic, prompting unprecedented interventions by the Federal Reserve. Parallel failures at AIG and Washington Mutual highlighted systemic fragilities.

In Europe, the sovereign debt crisis (2009–2012) exposed institutional weaknesses in the eurozone. Lane [9] noted that the lack of fiscal integration and exchange rate flexibility limited member states’ ability to absorb shocks, in contrast to more integrated monetary unions like the United States.

The COVID-19 pandemic caused a sharp market crash in early 2020, driven by lockdowns, business closures, and global uncertainty. Mazur et al. [10] showed that losses were unevenly distributed: energy and hospitality firms suffered most, while healthcare and software sectors gained. Extreme volatility and divergent corporate responses characterized the crisis.

Recognizing the systemic risks, the G20 in 2008 mandated the IMF to strengthen surveillance and develop early warning systems [11]. Sornette [12] argued that crises are not entirely random but display detectable precursors, introducing the concept of “Dragon-Kings”: extreme, self-reinforcing events outside normal fluctuations.

Numerous predictive models have emerged. Claessens and Kose [1] analyzed common pre-crisis signals, while Beutel et al. [13] compared traditional statistical models and machine learning (ML). Despite capturing nonlinear patterns, ML methods often lack interpretability. Classical logit models remain competitive in forecasting performance due to their transparency and robustness.

This paper proposes a framework that integrates Topological Data Analysis (TDA) with a strictly causal detection pipeline to detect early warning signals (EWS) of financial crises. The methodology can support machine-learning-based monitoring systems, but here we focus on the detection pipeline and its empirical evaluation.

Specifically, it introduces $L^1$- and $L^2$-norm indicators of persistence landscapes as informative features, and demonstrates their predictive power across several major crises using multiple ML classifiers. The methodology is empirically validated across multiple historical financial crises, including the 2008 global financial crisis and the 2020 COVID-19 crash, using daily stock index data. The results demonstrate that topological indicators significantly improve predictive performance compared to baseline models (see, for example, [14,15,16,17]), offering a promising and interpretable tool for early crisis detection.

Furthermore, this work (as per Figure 1) focuses on the following research question: can topological features extracted from financial time series via persistent homology act as interpretable early warning signals of financial crises, and support machine learning-based monitoring systems?

The work is structured as follows. After this introduction, Section 2 presents the theoretical background of TDA. Section 3 reviews the scientific literature, while Section 4 explains the experiments conducted on a specific dataset to detect early signals of a financial crisis. Finally, Section 5 discusses the results, while Section 6 provides the conclusions and outlines directions for future work.

2. Background

A topology [18] on a set $X$ is a collection $\mathcal{T}$ of subsets (open sets) satisfying: (i) $\emptyset$ and $X$ belong to $\mathcal{T}$, (ii) finite intersections and (iii) arbitrary unions of open sets remain open. The pair $(X, \mathcal{T})$ defines a topological space.

A $k$-simplex is the convex hull of $k+1$ affinely independent points in $\mathbb{R}^d$. Simplices include vertices ($k=0$), edges ($k=1$), triangles ($k=2$), and tetrahedra ($k=3$). A simplicial complex is a finite set of simplices closed under taking faces and such that the intersection of any two is either empty or a shared face.

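Given a point cloud $S$ and scale $r > 0$, the Vietoris–Rips complex $\mathrm{VR}_r(S)$ includes all simplices of diameter at most $r$:

$$\mathrm{VR}_r(S) = \{ \sigma \subseteq S : \operatorname{diam}(\sigma) \le r \}.$$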
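As an illustration, a Vietoris–Rips complex can be enumerated directly for a small point cloud by keeping every vertex subset whose pairwise distances are all at most the scale. The following is a minimal sketch under that convention; the function name `vietoris_rips` and the toy square are assumptions made for the example, not part of the pipeline described in this paper.

```python
from itertools import combinations
from math import dist

def vietoris_rips(points, r, max_dim=2):
    """Return simplices (as sorted index tuples) of diameter <= r, up to max_dim."""
    n = len(points)
    # Pairwise distances decide which pairs of vertices may share a simplex.
    close = [[dist(points[i], points[j]) <= r for j in range(n)] for i in range(n)]
    simplices = [(i,) for i in range(n)]
    for k in range(2, max_dim + 2):           # k vertices span a (k-1)-simplex
        for combo in combinations(range(n), k):
            if all(close[i][j] for i, j in combinations(combo, 2)):
                simplices.append(combo)
    return simplices

# Hypothetical toy cloud: the corners of a unit square.
square = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
small = vietoris_rips(square, r=1.0)   # sides only: the diagonals are too long
large = vietoris_rips(square, r=1.5)   # diagonals and all four triangles appear
```

At the smaller scale the complex is a hollow square (one loop); at the larger scale the triangles fill it in. This brute-force enumeration is exponential in the number of points and is meant only to make the definition concrete.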

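Homology encodes topological features via algebraic structures. For a simplicial complex $K$ and integer $p$, a $p$-chain is a formal sum of $p$-simplices with coefficients (typically in $\mathbb{Z}_2$). The set of $p$-chains forms an abelian group $C_p(K)$.

The boundary operator $\partial_p : C_p(K) \to C_{p-1}(K)$ maps each $p$-simplex to its oriented $(p-1)$-faces:

$$\partial_p [v_0, \dots, v_p] = \sum_{i=0}^{p} (-1)^i \, [v_0, \dots, \hat{v}_i, \dots, v_p],$$

where $\hat{v}_i$ denotes omission of the vertex $v_i$.

A $p$-cycle is a $p$-chain with zero boundary; the $p$-cycles form the kernel $Z_p = \ker \partial_p$. A $p$-boundary is a $p$-chain in the image of $\partial_{p+1}$; the $p$-boundaries form $B_p = \operatorname{im} \partial_{p+1}$. The Fundamental Lemma of Homology states:

$$\partial_p \circ \partial_{p+1} = 0.$$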

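The $p$-th homology group is defined as the quotient:

$$H_p = Z_p / B_p,$$

whose rank $\beta_p$ (the $p$-th Betti number) counts $p$-dimensional topological features: $\beta_0$ (connected components), $\beta_1$ (loops), $\beta_2$ (voids).

Homology is functorial: a continuous map $f : X \to Y$ induces $f_* : H_p(X) \to H_p(Y)$. It is also homotopy invariant: if $X \simeq Y$, then $H_p(X) \cong H_p(Y)$ and $\beta_p(X) = \beta_p(Y)$ for all $p$ [19].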
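To make the Betti numbers concrete, they can be computed for a small complex as the number of $p$-simplices minus the ranks of the two adjacent boundary maps. The sketch below assumes coefficients in $\mathbb{Z}_2$ (so orientation signs vanish) and a complex given as sorted vertex tuples that is closed under taking faces; the helper names `rank_gf2` and `betti_numbers` are hypothetical.

```python
from itertools import combinations

def rank_gf2(rows):
    """Rank over GF(2) of a matrix whose rows are Python ints used as bit vectors."""
    rank = 0
    rows = list(rows)
    while rows:
        pivot = rows.pop()
        if pivot == 0:
            continue
        rank += 1
        low = pivot & -pivot                  # lowest set bit of the pivot row
        rows = [row ^ pivot if row & low else row for row in rows]
    return rank

def betti_numbers(simplices, max_dim):
    """Betti numbers beta_0..beta_max_dim with Z_2 coefficients."""
    by_dim = {p: sorted(s for s in simplices if len(s) == p + 1)
              for p in range(max_dim + 2)}
    index = {p: {s: i for i, s in enumerate(by_dim[p])} for p in by_dim}

    def boundary_rank(p):
        if p == 0 or not by_dim[p]:
            return 0
        rows = []
        for s in by_dim[p]:
            bits = 0
            for face in combinations(s, p):   # the (p-1)-faces of s
                bits |= 1 << index[p - 1][face]
            rows.append(bits)
        return rank_gf2(rows)

    # beta_p = dim C_p - rank(boundary_p) - rank(boundary_{p+1})
    return [len(by_dim[p]) - boundary_rank(p) - boundary_rank(p + 1)
            for p in range(max_dim + 1)]

hollow = [(0,), (1,), (2,), (0, 1), (0, 2), (1, 2)]
filled = hollow + [(0, 1, 2)]
hollow_betti = betti_numbers(hollow, max_dim=1)
filled_betti = betti_numbers(filled, max_dim=1)
```

The hollow triangle has one component and one loop; adding the 2-simplex turns the loop into a boundary, so it no longer contributes to $\beta_1$.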

2.1. Persistent Homology

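Let $K$ be a simplicial complex and $f : K \to \mathbb{R}$ a monotonic function (i.e., $f(\sigma) \le f(\tau)$ for $\sigma \subseteq \tau$). The sublevel sets $K_i = f^{-1}(-\infty, a_i]$, with $a_0 < a_1 < \dots < a_n$ the values at which the complex changes, form a filtration:

$$\emptyset = K_0 \subseteq K_1 \subseteq \dots \subseteq K_n = K.$$

Each inclusion induces homomorphisms between homology groups:

$$f_p^{i,j} : H_p(K_i) \to H_p(K_j), \qquad 0 \le i \le j \le n.$$

Persistent homology tracks homology classes across the filtration. A class born at $K_i$ and dying at $K_j$ defines a persistence

$$\mathrm{pers} = a_j - a_i.$$

The persistent Betti number is

$$\beta_p^{i,j} = \operatorname{rank} \operatorname{im} f_p^{i,j}.$$

The persistence diagram $\mathrm{Dgm}_p(f)$ is a multiset of points $(a_i, a_j)$ in $\bar{\mathbb{R}}^2$, where each point’s multiplicity is

$$\mu_p^{i,j} = \left( \beta_p^{i,j-1} - \beta_p^{i,j} \right) - \left( \beta_p^{i-1,j-1} - \beta_p^{i-1,j} \right).$$

The Fundamental Lemma of Persistent Homology states:

$$\beta_p^{k,l} = \sum_{i \le k} \sum_{j > l} \mu_p^{i,j}.$$

The Wasserstein distance $W_q$ between two diagrams $D_1, D_2$ quantifies their dissimilarity:

$$W_q(D_1, D_2) = \left( \inf_{\eta : D_1 \to D_2} \sum_{x \in D_1} \| x - \eta(x) \|_\infty^q \right)^{1/q},$$

where $\eta$ ranges over bijections between the diagrams (with diagonal points added as needed).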
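For dimension 0, persistence pairs of a Vietoris–Rips filtration can be obtained without any matrix reduction: every point is born at scale 0, and a component dies at the length of the edge that merges it into another one. The following sketch uses a union-find structure for this; it assumes the convention that an edge enters the filtration at its Euclidean length, and the name `h0_persistence` is hypothetical.

```python
from itertools import combinations
from math import dist

def h0_persistence(points):
    """(birth, death) pairs of connected components in a Vietoris-Rips filtration."""
    parent = list(range(len(points)))

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]     # path halving
            i = parent[i]
        return i

    edges = sorted((dist(points[i], points[j]), i, j)
                   for i, j in combinations(range(len(points)), 2))
    pairs = []
    for length, i, j in edges:
        ri, rj = find(i), find(j)
        if ri != rj:                          # two components merge: one class dies
            parent[ri] = rj
            pairs.append((0.0, length))
    pairs.append((0.0, float("inf")))         # the last component never dies
    return pairs

# Hypothetical toy cloud: two tight pairs of points separated by a gap.
cloud = [(0.0, 0.0), (1.0, 0.0), (5.0, 0.0), (6.0, 0.0)]
diagram = h0_persistence(cloud)
```

The two short-lived classes (death at 1.0) correspond to points merging within each pair, while the class dying at 4.0 records the gap between the two clusters; this is the kind of long-lived feature that sits far from the diagonal of a persistence diagram.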

2.2. Persistence Landscapes

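Bubenik [20] introduced persistence landscapes as functional summaries of persistence diagrams. Given a persistence module $M$, the landscape $\lambda : \mathbb{N} \times \mathbb{R} \to \bar{\mathbb{R}}$ is defined as:

$$\lambda_k(t) = \sup \{ m \ge 0 : \beta^{t-m,\, t+m} \ge k \}.$$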

This function captures the persistence of the k-th most prominent feature at location t. It satisfies: (i) non-negativity, (ii) monotonicity in k, and (iii) 1-Lipschitz continuity in t.

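Given a persistence diagram $\{(b_i, d_i)\}_{i=1}^{n}$, the landscape is computed as:

$$\lambda_k(t) = k\text{-}\max \{ \min(t - b_i,\, d_i - t)_+ \}_{i=1}^{n},$$

where $c_+ = \max(c, 0)$ and $k$-max denotes the $k$-th largest value.

The $L^p$-norm of $\lambda$ is defined as:

$$\|\lambda\|_p = \left( \sum_{k=1}^{\infty} \int_{\mathbb{R}} |\lambda_k(t)|^p \, dt \right)^{1/p}.$$

In a statistical setting, persistence landscapes can be viewed as random variables in $L^p(\mathbb{N} \times \mathbb{R})$. Given i.i.d. samples $X_1, \dots, X_n$ with landscapes $\lambda^{(1)}, \dots, \lambda^{(n)}$, the empirical mean landscape is:

$$\bar{\lambda}_k(t) = \frac{1}{n} \sum_{i=1}^{n} \lambda^{(i)}_k(t).$$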
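As a worked illustration, the landscape of a finite diagram can be evaluated pointwise by sorting the "tent" functions $\min(t - b, d - t)_+$ of its points, and its $L^p$ norm approximated by a Riemann sum on a grid. The sketch below assumes all deaths are finite; the function names and the midpoint-rule discretisation are illustrative choices, not the implementation used in the experiments.

```python
def landscape(diagram, k, t):
    """Value of the k-th landscape layer (k = 1, 2, ...) at filtration value t."""
    tents = sorted((max(0.0, min(t - b, d - t)) for b, d in diagram), reverse=True)
    return tents[k - 1] if k <= len(tents) else 0.0

def landscape_norm(diagram, p, n_layers=5, n_bins=100):
    """Midpoint-rule approximation of the L^p norm over the first n_layers layers."""
    lo = min(b for b, _ in diagram)
    hi = max(d for _, d in diagram)
    step = (hi - lo) / n_bins
    grid = [lo + (i + 0.5) * step for i in range(n_bins)]
    total = sum(landscape(diagram, k, t) ** p * step
                for k in range(1, n_layers + 1) for t in grid)
    return total ** (1.0 / p)

# Hypothetical diagram with one dominant and one weaker feature.
diagram = [(0.0, 2.0), (1.0, 2.0)]
l1 = landscape_norm(diagram, p=1)
l2 = landscape_norm(diagram, p=2)
```

For this diagram the $L^1$ norm equals the total area under the two tents, $1 + 0.25 = 1.25$; the $L^2$ norm weights tall peaks more heavily, which is why the two norms can react differently to the same change in the diagram.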

2.3. Why TDA-Based Features Can Anticipate Crises

Traditional econometric indicators, such as volatility, autocorrelation, or macroeconomic ratios, are inherently low-dimensional and often assume linear or parametric relationships in financial data. In contrast, TDA captures high-dimensional geometric and structural information embedded in multivariate time series without requiring distributional assumptions or model specification. Persistent homology, in particular, is sensitive to both local and global deformations in the data manifold, enabling the detection of subtle structural transitions—such as fragmentation of market regimes or emergence of co-movement patterns—that may not be visible through traditional indicators.

Mathematically, let $\{x_t\}$ denote a multivariate financial time series embedded as point clouds via sliding windows. The associated Vietoris–Rips complex captures simplicial connectivity at scale $r$, and the resulting persistence diagram summarizes the birth and death of topological features across scales. The persistence landscape $\lambda$, which maps this multiscale information into a functional representation, allows the use of $L^1$ and $L^2$ norms as summary statistics. These norms react to early topological instabilities, such as increased loops (connected clusters of assets) or persistent voids, before such effects manifest as elevated volatility.

Thus, the rationale is that financial crises emerge from the build-up of complex systemic interdependencies. TDA captures the underlying topological evolution of the market structure itself, not just its first- or second-order statistical moments. This allows early detection of regime shifts or bifurcations in the data geometry that may precede observable crises, providing a theoretically grounded advantage over classical econometric tools.

4. Experiments

This section presents a comprehensive evaluation of the proposed framework for detecting EWS of financial crises using persistent homology and topological machine learning. In contrast to earlier formulations, the revised methodology implements a strictly causal detection pipeline, thereby eliminating any reliance on future information, and validates results against both historically defined crisis periods and independent volatility benchmarks. The objective is to assess whether topological signals derived from multivariate market data can provide reliable and anticipatory indicators of systemic risk.

4.1. Dataset and Preprocessing

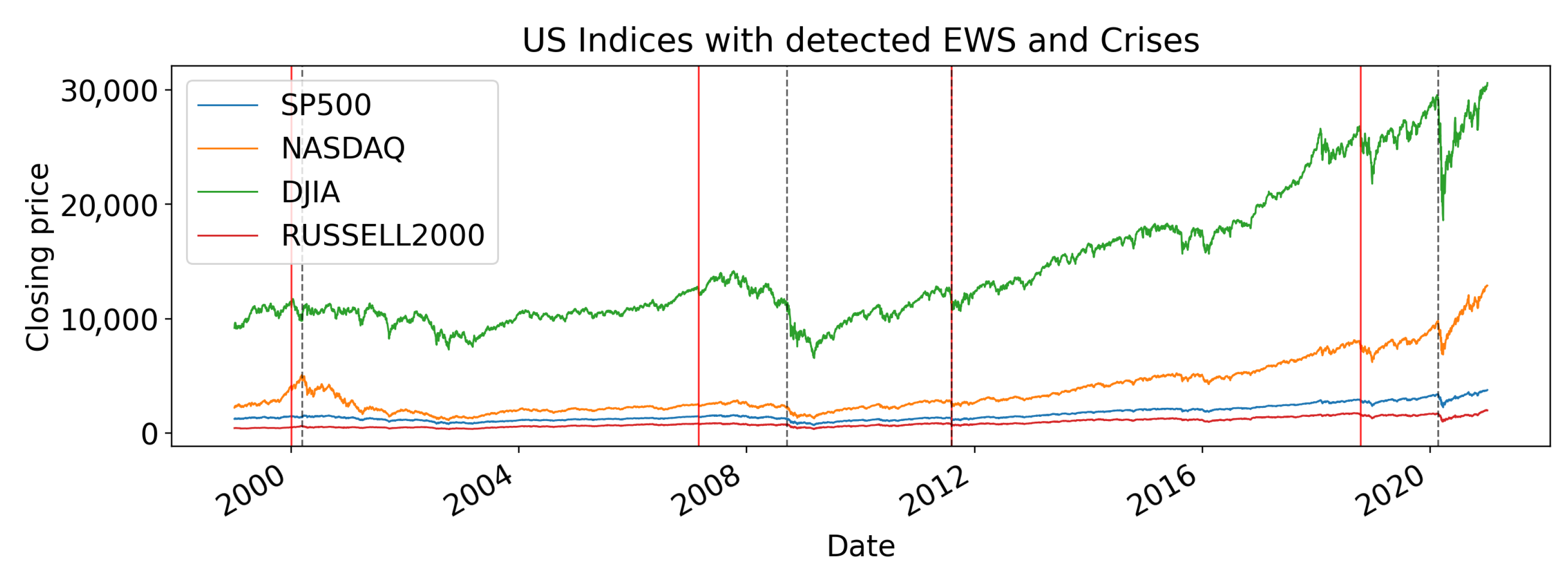

The empirical analysis is based on four major US equity indices: the S&P 500, the NASDAQ Composite, the Dow Jones Industrial Average, and the Russell 2000. Together, these indices capture large-cap, small-cap, technology-oriented, and blue-chip segments of the US market, offering a representative view of systemic dynamics. The dataset spans 4 January 1999 to 1 January 2021 (5535 trading days) and was retrieved from Yahoo Finance at daily frequency.

Daily log-returns are computed as

$$r_t = \ln \left( \frac{P_t}{P_{t-1}} \right),$$

where $P_t$ is the index closing price on day $t$. This transformation enforces scale invariance and stabilizes variance, which is essential for comparing across indices with different levels and volatilities.
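The log-return transformation is a one-line computation on a series of closing prices. A minimal sketch follows, with a hypothetical three-day price series; the function name `log_returns` is an assumption for the example.

```python
from math import log

def log_returns(prices):
    """Daily log-returns ln(P_t / P_{t-1}); one fewer value than prices."""
    return [log(curr / prev) for prev, curr in zip(prices, prices[1:])]

closes = [100.0, 110.0, 99.0]                    # hypothetical closing prices
returns = log_returns(closes)
rescaled = log_returns([10 * p for p in closes])  # same index quoted at 10x the level
```

Two properties are visible here: rescaling the price level leaves the returns unchanged (scale invariance), and log-returns add up over time, so their sum equals the log-return over the whole period.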

Using a sliding window of

trading days, we generate 5506 overlapping point clouds in

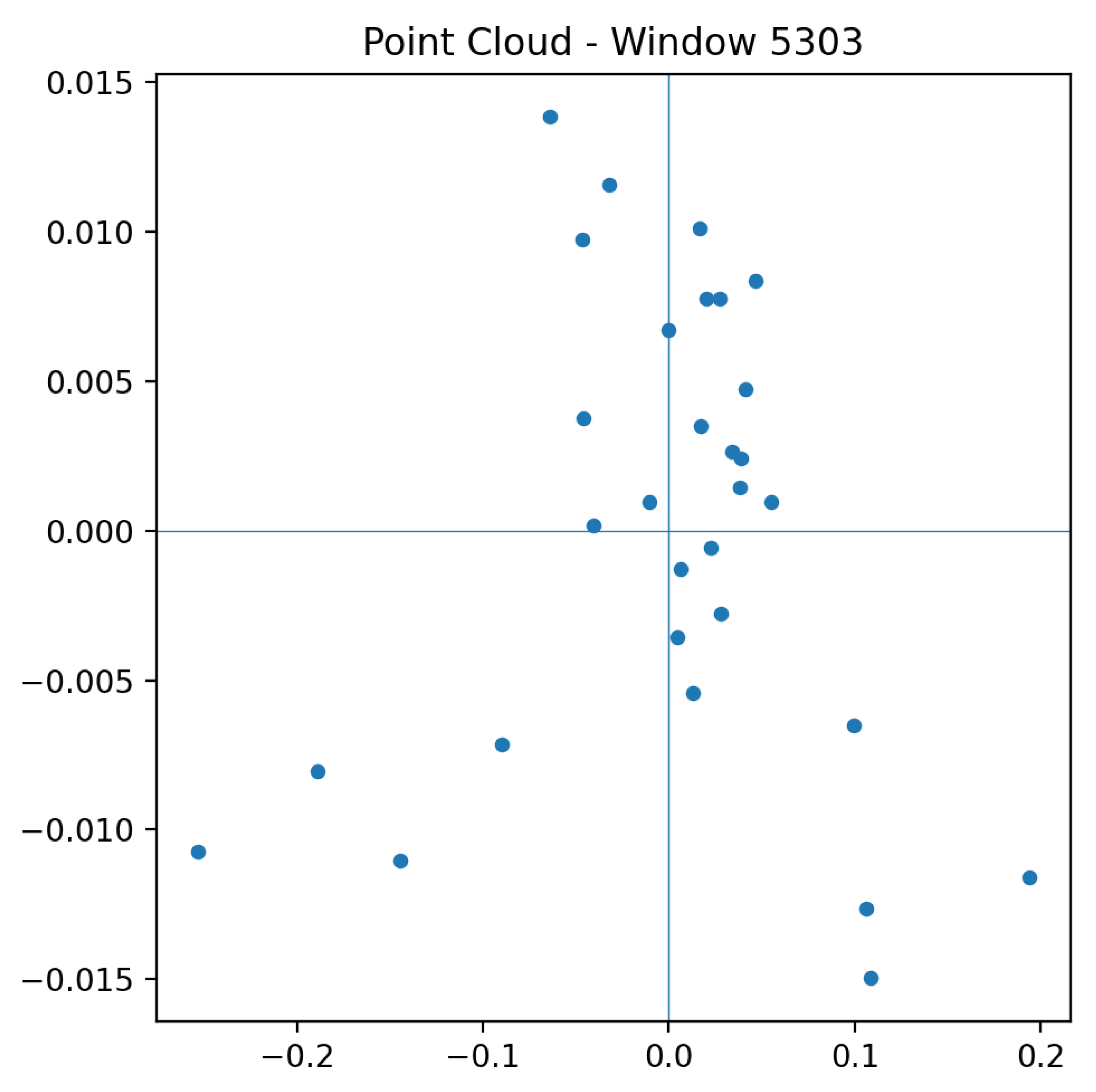

. Each cloud encodes the joint behaviour of the indices during a short horizon. For visualization purposes, clouds may be projected into lower dimensions using Principal Component Analysis (PCA), though persistent homology is computed in the full four-dimensional space.

Figure 2 and Figure 3 show representative point clouds for both stable and turbulent market periods.
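The windowing step can be sketched as follows: each window of consecutive daily return vectors is treated as one point cloud, and a series of $n$ days yields $n - w + 1$ overlapping clouds for window length $w$. The toy data and the name `sliding_point_clouds` are hypothetical.

```python
def sliding_point_clouds(rows, w):
    """Overlapping windows of w consecutive rows; each window is one point cloud."""
    return [rows[i:i + w] for i in range(len(rows) - w + 1)]

# Hypothetical toy data: 6 days of returns for 4 indices (one tuple per day).
rows = [(0.01 * d, -0.01 * d, 0.02 * d, 0.0) for d in range(6)]
clouds = sliding_point_clouds(rows, w=3)
```

Each cloud here contains 3 points in four dimensions, and consecutive clouds share all but one point, which is what makes the resulting topological indicators evolve smoothly from day to day.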

4.2. Crisis Labeling and Ground Truth

Ground truth crisis periods are defined using a hybrid strategy. First, four canonical systemic events are identified: the dot-com bubble (2000), the global financial crisis (2008), the US sovereign debt downgrade (2011), and the COVID-19 crash (2020). These are well-established crises in financial history and serve as reference benchmarks.

Second, to reduce reliance on purely historical annotation, we add a quantitative rule: periods are labeled as crises if realized volatility exceeds the 95th percentile of its historical rolling distribution. This dual approach balances interpretability with reproducibility and helps capture periods of exceptional stress that may not fit neatly into the four canonical events.
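The quantitative labelling rule can be sketched as a rolling-percentile test on realized volatility. The version below is one possible reading, assuming realized volatility is a rolling standard deviation of returns and that the percentile is taken over a trailing window of past volatility values only; the window lengths and function names are hypothetical.

```python
from statistics import pstdev, quantiles

def realized_volatility(returns, w):
    """Rolling standard deviation of the last w returns (None until w values exist)."""
    return [pstdev(returns[i - w + 1:i + 1]) if i >= w - 1 else None
            for i in range(len(returns))]

def crisis_flags(vol, history, q=95):
    """Flag day i when vol[i] exceeds the q-th percentile of the previous `history` values."""
    flags = []
    for i, v in enumerate(vol):
        past = [x for x in vol[max(0, i - history):i] if x is not None]
        if v is None or len(past) < 2:
            flags.append(False)               # not enough history to form a percentile
            continue
        threshold = quantiles(past, n=100, method="inclusive")[q - 1]
        flags.append(v > threshold)
    return flags

# Hypothetical returns: a calm regime followed by a few very large moves.
returns = [0.01, -0.01] * 20 + [0.1, -0.1, 0.1, -0.1]
flags = crisis_flags(realized_volatility(returns, w=5), history=30)
```

Because the threshold is recomputed from trailing data at every step, the label adapts to the prevailing volatility regime rather than to a single global cut-off.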

4.3. Topological Feature Extraction

Each point cloud is transformed into a Vietoris–Rips complex using the giotto-tda library, with a prescribed maximum filtration value and Euclidean distance as the metric. Homology groups $H_0$ (connected components) and $H_1$ (loops) are computed.

Persistence diagrams are obtained with the VietorisRipsPersistence transformer. They encode the birth and death of topological features as the filtration radius grows. Points close to the diagonal correspond to short-lived, noisy features, while points far from the diagonal reflect persistent structures.

Figure 4 and Figure 5 illustrate persistence diagrams for a stable and a turbulent period, highlighting how crises yield longer-lived features.

Persistence barcodes offer a complementary visualization by representing feature lifetimes as horizontal line segments. Longer bars correspond to persistent components or loops, while shorter bars represent transient features likely attributable to noise.

Figure 6 and Figure 7 show barcodes for the same windows as above, confirming the structural differences between stable and crisis periods.

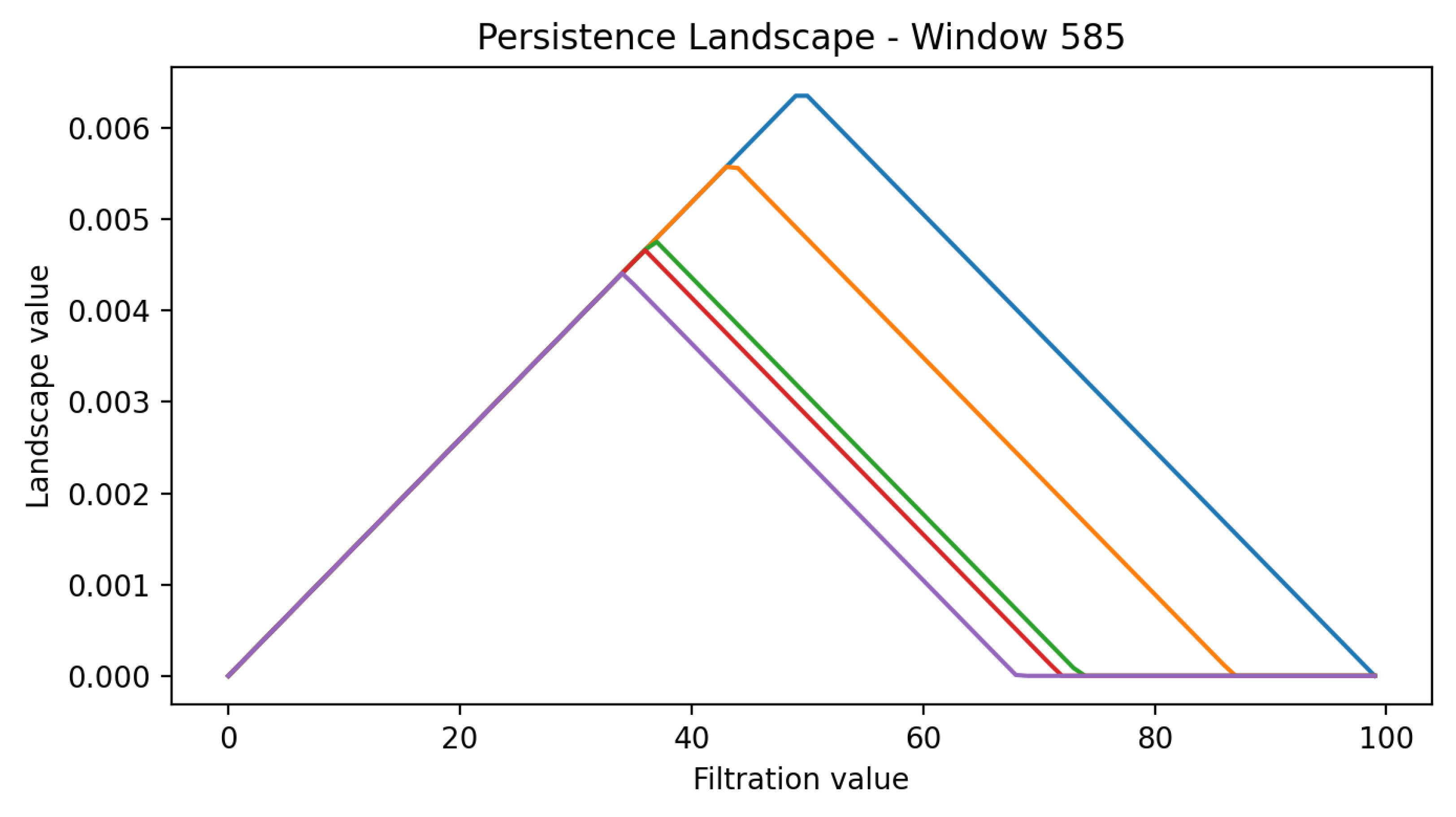

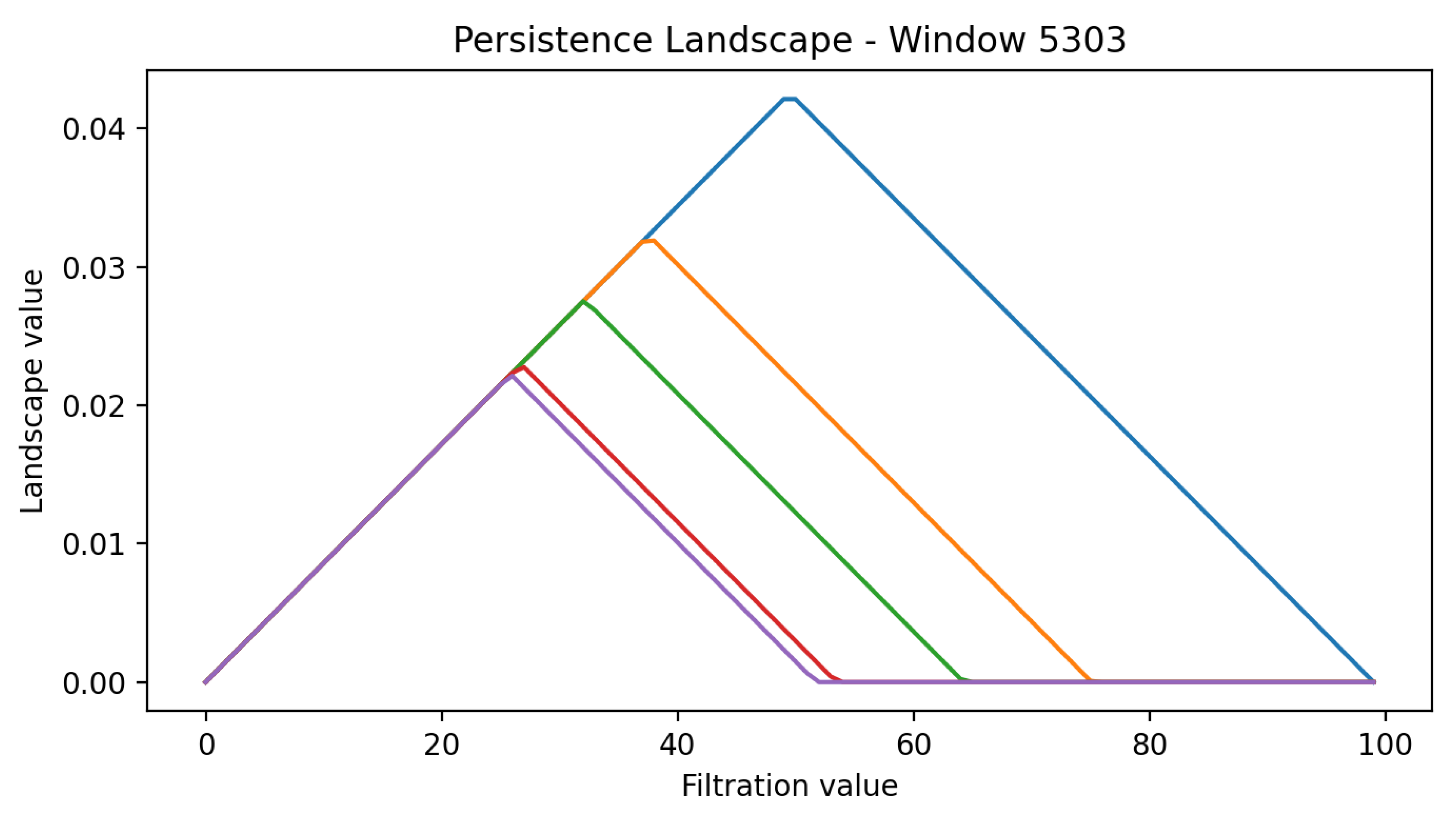

For statistical analysis, persistence diagrams are mapped into persistence landscapes using the PersistenceLandscape transformer, with five layers and 100 bins. Landscapes convert discrete diagram points into functional summaries of persistence. Scalar indicators are derived by computing $L^1$ and $L^2$ norms:

$$\|\lambda\|_1 = \sum_{k} \int |\lambda_k(t)| \, dt, \qquad \|\lambda\|_2 = \left( \sum_{k} \int \lambda_k(t)^2 \, dt \right)^{1/2}.$$

In Figure 8 and Figure 9, the coloured curves are the first layers of the persistence landscape computed on the shown rolling window. Given a persistence diagram, $\lambda_k(t)$ encodes, at filtration value $t$, the magnitude of the $k$-th most persistent homology class: lower layers (small $k$) capture dominant, long-lived features, while higher layers represent progressively weaker features. The $x$-axis reports the filtration value and the $y$-axis the landscape amplitude. We plot the first five layers to highlight regime differences: windows preceding crises exhibit taller and broader peaks across multiple layers, whereas tranquil windows display flatter profiles.

4.4. Causal Detection of Early Warning Signals

The norm time series extracted from persistence landscapes is processed through a strictly causal detection rule, ensuring that only past information informs decisions. At each time $j$, three conditions are applied:

- (i) Sudden local increase: the norm at time $j$ rises relative to its immediately preceding value,

- (ii) Short-term deviation: the norm exceeds a threshold defined relative to the mean of the past $s$ values,

- (iii) Sustained elevation: the norm exceeds a threshold defined relative to the mean of the past $t$ values.

If all conditions are satisfied, j is flagged as the onset of an early warning signal. Consecutive detections are collapsed into single bursts to avoid redundancy.
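The structure of such a detector can be sketched in a few lines. The exact inequalities are not fixed by the description above, so the sketch assumes simple forms: a strict day-on-day increase for (i) and multiplicative thresholds against trailing means for (ii) and (iii). The parameter names `mu` and `nu`, these inequality forms, and the toy series are all illustrative assumptions rather than the paper's specification.

```python
from statistics import fmean

def causal_signals(x, mu, nu, s, t):
    """Indices j where all three conditions hold, using only values up to time j."""
    flagged = []
    for j in range(max(s, t), len(x)):
        jump = x[j] > x[j - 1]                       # (i) assumed form
        short = x[j] > mu * fmean(x[j - s:j])        # (ii) assumed form
        sustained = x[j] > nu * fmean(x[j - t:j])    # (iii) assumed form
        if jump and short and sustained:
            flagged.append(j)
    return flagged

def collapse_bursts(indices):
    """Keep the first index of every run of consecutive detections."""
    return [j for k, j in enumerate(indices) if k == 0 or j != indices[k - 1] + 1]

# Hypothetical norm series: flat, then a three-day build-up, then flat again.
series = [1.0] * 10 + [2.0, 4.0, 8.0] + [1.0] * 5
raw = causal_signals(series, mu=1.5, nu=1.5, s=5, t=3)
onsets = collapse_bursts(raw)
```

Every comparison at time `j` reads only `x[j]` and earlier values, which is what makes the rule usable in real time; burst collapsing then reports the three consecutive detections as a single warning at the first day of the build-up.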

Detected signals are compared with ground truth crises under the following rules:

True positive (TP): signal within a 180-day lead window before a crisis,

Late detection: signal within a 30-day lag window after a crisis,

False positive (FP): signal outside lead/lag windows,

False negative (FN): crisis with no associated signal.

From these, we compute Precision, Recall, and F1. Additional indicators include the false alarm rate (FAR), mean lead time, and mean delay time.
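The matching rules above translate into a short event-level scoring routine. The sketch below makes several assumptions that the text leaves open: each crisis is scored once (TP if any signal falls in its lead window, otherwise Late if any falls in its lag window, otherwise FN), Late detections count against recall, and ratios with an empty denominator are set to zero so that all indicators stay finite. Signals and onsets are given as hypothetical day indices.

```python
def score_signals(signals, onsets, lead=180, lag=30):
    """Event-level counts and metrics; signals and onsets are day indices."""
    tp = late = fn = 0
    matched = set()
    for onset in onsets:
        early = [s for s in signals if onset - lead <= s < onset]
        after = [s for s in signals if onset <= s <= onset + lag]
        matched.update(early, after)
        if early:
            tp += 1
        elif after:
            late += 1
        else:
            fn += 1
    fp = sum(1 for s in signals if s not in matched)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / len(onsets) if onsets else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"TP": tp, "Late": late, "FN": fn, "FP": fp,
            "precision": precision, "recall": recall, "F1": f1}

# Hypothetical toy example, not the paper's data.
result = score_signals(signals=[500, 900, 2010, 2900, 3500],
                       onsets=[1000, 2000, 3000, 4000])
```

In this toy case two crises are anticipated, one is detected late, one is missed, and two signals match no crisis, so precision, recall, and F1 all equal 0.5.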

The contribution of each component of the pipeline is quantified through an ablation study. Holding the dataset, sliding window, and detector parameters fixed, we vary one factor at a time: the norm ($L^1$ vs. $L^2$), the homology content ($H_0$ vs. $H_1$), the topological summary (landscape vs. raw diagram sum vs. persistence image), and each causal condition (local jump, short-term deviation, sustained elevation), with/without burst collapsing. Signals are matched to canonical crisis onsets using a 180-day lead window and a 30-day late window. Event counts (TP/Late/FN/FP), total signals, precision, recall, F1, false-alarm rate, and mean lead/late times are reported (Table 2). All indicators are finite (no NaN/Inf).

As per Table 2, the full pipeline (landscape + $L^2$, causal conditions i–iii with burst collapsing) balances anticipation and selectivity (F1 = 0.50, mean lead ≈ 34 days). Removing the local jump condition (i) reduces precision (more spurious day-to-day spikes pass through), while removing sustained elevation (iii) yields the largest precision drop, confirming both filters are important. The short-term deviation (ii) is comparatively less influential on this dataset. Regarding topological content, $H_0$-only nearly matches the full pipeline, whereas $H_1$-only generates many false positives, indicating connectivity features dominate loop structure for these point clouds. As negative controls, the raw diagram sum and the persistence image produce no warnings under the same strict causal detector, underscoring the stability of persistence landscapes for this task.

4.4.1. Baselines

Realized-volatility thresholds (global and rolling) and a simple TDA+critical-slowing-down hybrid do not outperform the causal TDA pipeline (Table 3). A non-causal toponorm threshold attains some recall but with worse precision and a higher false-alarm rate. These comparisons highlight that (i) the landscape normalization and (ii) the causal detection logic are key drivers of performance.

4.4.2. Qualitative Evidence

Figure 10 and Figure 11 provide the underlying series and log-returns. Figure 12, Figure 13 and Figure 14 show that warnings occur near major build-ups in the landscape norms, while Figure 15 situates the selected early warnings on the price trajectories alongside crisis onsets.

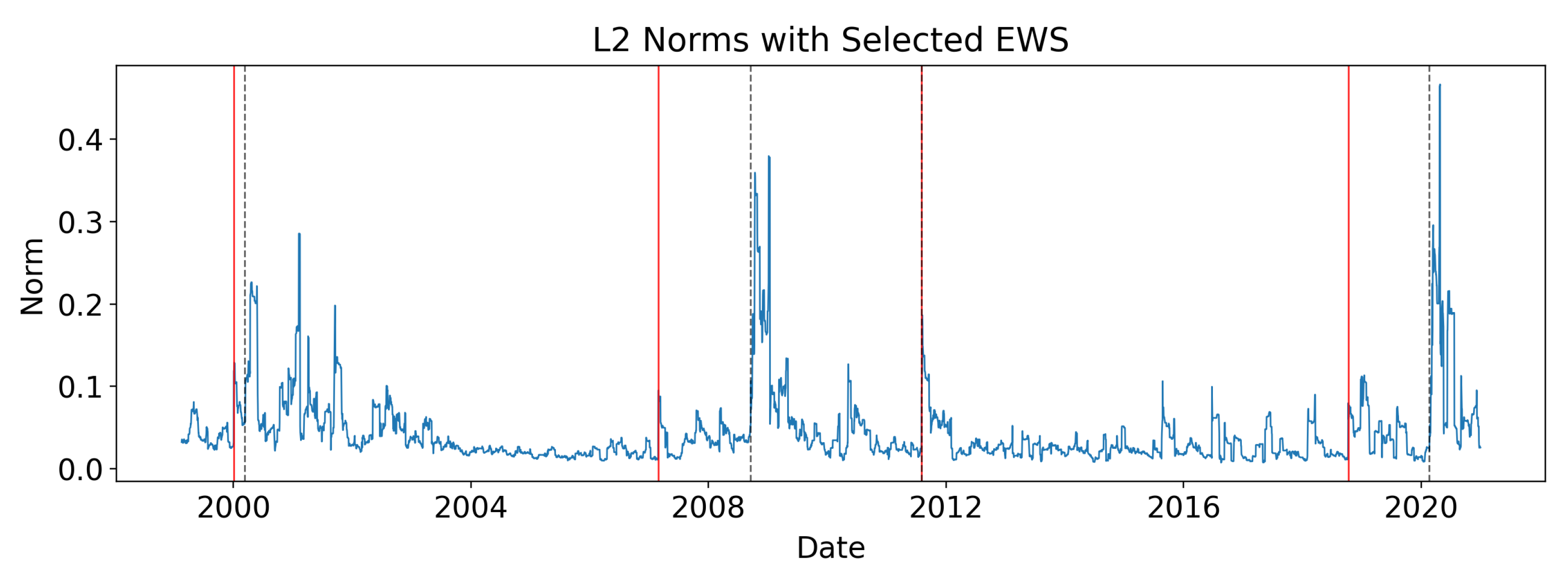

5. Discussion

Figure 16 shows the landscape norm series with detected signals. Spikes in the topological indicators cluster around known crises and often appear months in advance, suggesting that persistent homology captures structural changes in market co-movements before conventional stress measures. This anticipatory ability is crucial for systemic risk monitoring, as it provides decision-makers with valuable lead time for intervention.

At the strictly causal operating point used throughout (both detection thresholds equal to 3.1, $s = 57$, $t = 16$), the aggregate performance is F1 = 0.50 with a mean lead time of about 34 days (Table 2). This reflects a conservative decision rule that balances anticipation and selectivity under identical scoring across all variants.

5.1. Sensitivity Analysis

To probe robustness, we consider two complementary views. (i) For the strictly causal detector, varying

traces the usual precision–recall trade-off (

Figure 17), with recall higher at permissive settings and precision improving as thresholds tighten. (ii) As a permissive baseline, we also examine a simple spike detector controlled by a single

z-score threshold

;

Table 4 reports its behaviour as

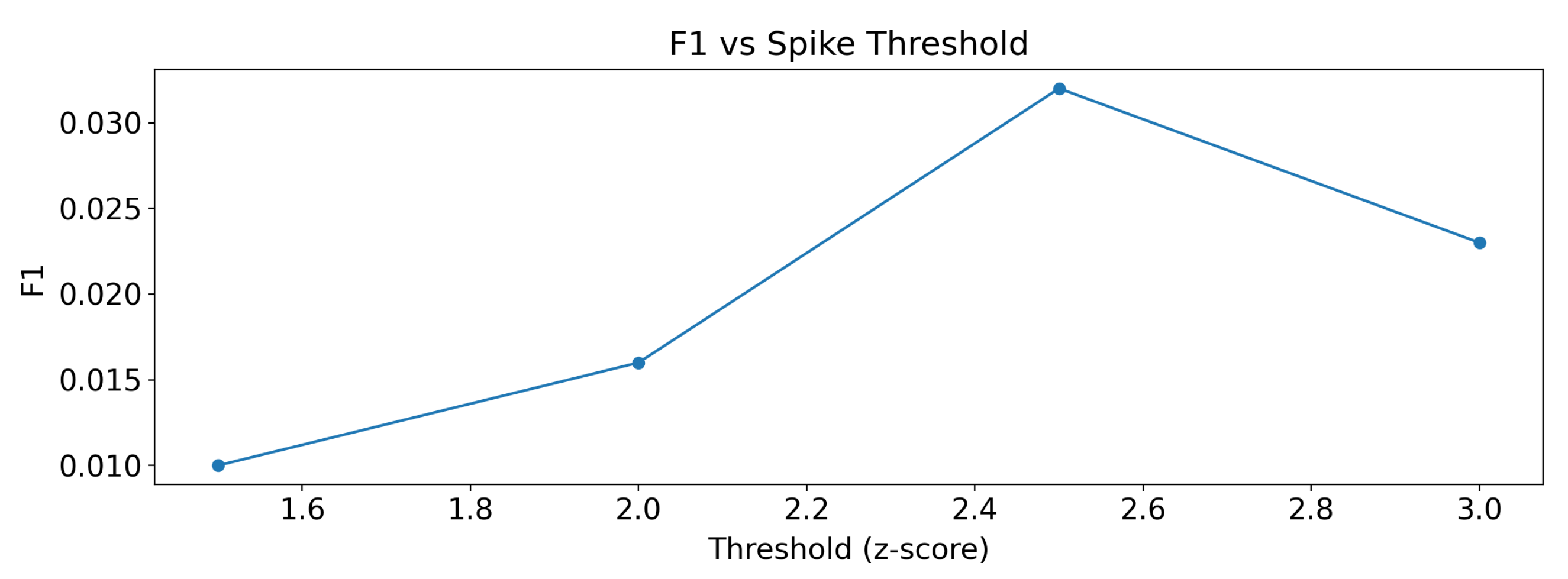

varies (1.5–3.0), showing the expected reduction in false alarms at the cost of lower recall at stricter thresholds. These results are methodologically distinct from the causal detector and are reported for context.

Figure 17 (spike baseline) shows that recall remains high at low-to-intermediate thresholds, while precision increases as the $z$-score threshold tightens. This illustrates the central trade-off: permissive settings capture most events but issue many false alarms, whereas stricter settings suppress noise but risk missing events.
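A spike baseline of this kind can be sketched as a trailing $z$-score test: a day is flagged when the indicator lies more than a chosen number of trailing standard deviations above its trailing mean. The window length, function name, and toy series below are hypothetical.

```python
from statistics import fmean, pstdev

def zscore_spikes(x, z, window):
    """Indices whose value is more than z trailing std devs above the trailing mean."""
    spikes = []
    for j in range(window, len(x)):
        past = x[j - window:j]
        sd = pstdev(past)
        if sd > 0 and (x[j] - fmean(past)) / sd > z:
            spikes.append(j)
    return spikes

# Hypothetical indicator series with one moderate and one large excursion.
series = [1.0, 1.2, 0.8, 1.1, 0.9, 1.0, 1.3, 0.7, 1.4, 1.0, 3.0, 1.0]
permissive = zscore_spikes(series, z=1.5, window=8)
strict = zscore_spikes(series, z=3.0, window=8)
```

With the permissive threshold both excursions are flagged; with the strict threshold only the large one survives. This is the mechanism behind the trade-off described above: raising the threshold removes marginal alarms first.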

Figure 18 complements this view by plotting the F1 score for the spike baseline. The curve exhibits a modest maximum within the scanned threshold range; however, absolute F1 values remain small, consistent with the large number of false alarms at permissive settings.

5.2. Validation Against External Indicators

Validation against independent benchmarks is crucial. Using the VIX index with spikes above 43 as markers of extreme volatility, four stress episodes are identified (2001, 2008, 2011, 2020). Figure 19 and Figure 20 show that all episodes are anticipated by the topological signals, with no false positives or late detections.

Figure 19 demonstrates close alignment with VIX spikes: every extreme volatility episode is preceded by a topological signal, confirming that the method detects systemic stress in advance. Figure 20 provides a more permissive alignment, showing that even beyond the strict spike threshold, topological warnings consistently precede VIX surges. This highlights the anticipatory character of the signals relative to a widely used benchmark of market stress.

5.3. Comparison with Literature

We re-implemented the core ideas that recur in prior work and evaluated them under the same strictly causal protocol (180-day lead, 30-day late window, identical crisis calendar):

- (a) TDA landscape norms as early signals (e.g., pre-crash rises in norms): already the backbone of our method; we keep the landscape summary but evaluate it with a formal, causal event detector.

- (b) TDA + critical slowing down (CSD) trend-based ideas (e.g., high AR(1)/variance together with a topological signal): implemented as a hybrid baseline (high realized volatility ∧ high AR(1) ∧ high toponorm).

- (c) Conventional financial EWS used in the crisis literature: (i) realized-volatility exceedance at a global 95th percentile and (ii) a rolling 95th-percentile realized-volatility rule.

- (d) Controls within TDA to probe representation choice: a non-causal toponorm threshold (95th percentile) and a persistence-image + causal variant.
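The critical-slowing-down ingredient of baseline (b) relies on the lag-1 autocorrelation, AR(1), of a rolling window, which rises when a series becomes more persistent. A minimal sketch of that statistic and of the conjunction used by the hybrid rule follows; the function names and the boolean inputs are hypothetical, and how each indicator is declared "high" is left to the caller.

```python
from statistics import fmean

def lag1_autocorrelation(x):
    """Sample lag-1 autocorrelation of a sequence (0.0 if the sequence is constant)."""
    m = fmean(x)
    denom = sum((v - m) ** 2 for v in x)
    if denom == 0:
        return 0.0
    return sum((a - m) * (b - m) for a, b in zip(x, x[1:])) / denom

def hybrid_flag(vol_high, ar1_high, toponorm_high):
    """Hybrid baseline: all three indicators must be high at the same time."""
    return vol_high and ar1_high and toponorm_high

trending = lag1_autocorrelation([1.0, 2.0, 3.0, 4.0, 5.0, 6.0])       # persistent series
alternating = lag1_autocorrelation([1.0, -1.0, 1.0, -1.0, 1.0, -1.0])  # mean-reverting
```

Because the hybrid requires three conditions to coincide, it is by construction at least as selective as any single one of them, which is consistent with it triggering rarely under strict causal scoring.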

Under identical settings, the proposed pipeline (landscape + causal conditions with burst collapsing) achieves a balanced precision–recall with F1 = 0.50 and a mean lead of ≈34 days. In contrast:

Realized-volatility thresholds (global and rolling) and the TDA+CSD hybrid do not anticipate crises under the strict causal scoring on our dataset.

The non-causal toponorm threshold recovers some recall but at the cost of many false alarms (Table 3).

The persistence-image + causal control rarely triggers, reinforcing that persistence landscapes are the more effective summary here.

The comparison therefore highlights the following contributions:

Causal event detection and accounting. Prior TDA work often reports pre-crash trends; we formalize a strictly causal detector (local jump, short-term deviation, sustained elevation, and burst collapsing) and report TP/Late/FN/FP with per-crisis matches (CSV provided), enabling transparent benchmarking rather than relying on visual inspection alone.

Representation choice matters. Persistence landscapes yield selective, auditable warnings; persistence images and raw diagram sums act as negative controls under the same detector.

Module-level evidence. Results show that $H_0$ features and conditions (i) and (iii) contribute most to precision on this dataset.

Finally, regarding the limitations of this work, the number of canonical crises is small, and results depend on dating conventions and the lead/late windows (fixed ex-ante for all methods). On these US equity indices, $H_1$ carries less predictive value than $H_0$; we caution against over-generalizing that finding to other markets.

6. Conclusions

This work introduced a strictly causal early-warning framework for financial crises that extracts topological signals from multivariate return streams. Sliding windows of daily log-returns are embedded as point clouds, Vietoris–Rips persistent homology is computed, and persistence landscapes provide an interpretable summary whose norm acts as a single scalar indicator. A simple, transparent decision rule, parameterised by two detection thresholds and the run-length controls $s$ and $t$, suppresses isolated spikes and collapses bursts into time-stamped warnings, yielding a pipeline that is auditable end-to-end.

On four major U.S. equity indices (S&P 500, NASDAQ, DJIA, Russell 2000) over 1999–2021, the method attains, at a fixed strictly causal operating point (both detection thresholds equal to 3.1, $s = 57$, $t = 16$), a balanced precision–recall (F1 = 0.50) with an average lead time of about 34 days. It anticipates two of the four canonical crises and issues a contemporaneous signal for the 2008 global financial crisis. Sensitivity analyses show that varying the detection thresholds traces the expected precision–recall trade-off without altering the qualitative behaviour of the detector, while an external alignment against VIX spikes corroborates the anticipatory content of the signals.

Ablation studies clarify the contribution of each stage. The full pipeline (landscape summary with $L^2$ norm, causal conditions (i–iii), and burst collapsing) dominates simplified variants. Removing individual causal conditions increases false alarms; using $L^1$ instead of $L^2$ reduces discrimination; restricting to a single homology dimension ($H_0$ or $H_1$ only) degrades performance, with $H_1$ alone producing many spurious detections. Raw diagram aggregates and persistence-image baselines are weaker under the same scoring. Against non-topological comparators (e.g., realised-volatility thresholds or permissive spike rules), the causal topological pipeline achieves substantially fewer false alarms at comparable recall.

The approach is interpretable: landscape layers visualise the emergence and growth of topological features within a window, while the norm condenses this multi-layer structure into a transparent time series of geometric stress. The resulting warnings are therefore traceable both to specific windows and to visible changes in the shape of the data.

The present study is confined to U.S. equity indices at daily frequency and a fixed window length; results may vary across assets, horizons, and market microstructures. Crisis labels carry inherent dating uncertainty, and the small number of major events limits statistical power. The detector is threshold-based rather than probabilistic and is not evaluated as a trading strategy. Future work will extend the analysis to cross-asset and cross-market settings, explore multiscale and adaptive windowing, investigate alternative filtrations and distances, and integrate calibrated learners (e.g., conformal or Bayesian post-processing) for probabilistic warning scores. Formal uncertainty quantification for topological summaries and tighter couplings with macro-financial covariates are also promising directions.