1. Introduction

Learning Disabilities (LDs) are the most prevalent developmental challenges among school-aged children, affecting approximately 6–9.9% in Thailand and around 10% globally [1,2]. The three main subtypes, dyslexia, dysgraphia, and dyscalculia, are linked to specific cognitive impairments, with dyslexia accounting for roughly 80% of cases [3,4]. A key deficit in dyslexia is directional processing, which impairs spatial awareness and symbol discrimination [4,5,6,7]. Children often confuse letters, for example, “b” and “d” or “p” and “q”, and about 80% of severe cases show signs of directional confusion. These spatial difficulties frequently co-occur with phonological deficits, contributing to slow or inaccurate reading and spelling [8].

Existing computer-based tools show promise but remain limited [9,10,11,12,13,14] by expert requirements [2,3,4,15], long procedures [3,16,17], and language dependence [2,3,4,18,19]. In many regions, access to clinical diagnosis is also constrained by shortages of specialists, waiting times, and costs [1,16,17,20,21]. Game-based approaches offer engagement and allow performance tracking, but most remain outcome-focused rather than process-sensitive.

Recent studies have applied machine learning to preliminary dyslexia risk assessment using both language-dependent and language-independent features, along with multimodal data such as speech, handwriting, and eye movements [9,10,22,23]. Algorithms such as Support Vector Machines (SVM), Random Forest (RF), and K-Nearest Neighbors (KNN) have been widely explored [22,24,25,26,27,28,29,30,31]. Studies utilizing these models report varying success; for instance, some ensemble models (Extra Trees) achieved moderate accuracy (0.67–0.75) [25], while others combining multiple features reached high accuracy (90–92.22%) [23,32], highlighting the potential of robust classifiers for this task.

Mouse tracking has become a practical process-tracing method for capturing moment-to-moment cognitive states [33]. Kinematic features such as trajectory deviation, velocity, and pauses co-vary with uncertainty, cognitive load, and shifts in attention [34]. Prior work has linked higher cognitive loads to slower cursor speeds, more direction changes, and longer pauses, which resemble eye fixations as markers of processing effort [35,36,37]. In the context of dyslexia, processing difficulties are often reflected in prolonged and frequent fixations during reading [35,36,37]. Building on this, we hypothesize that directional confusion, a potential marker of dyslexia risk, may manifest in longer pause durations, reduced click acceleration, and increased hesitation during mouse activity.

Despite this potential, few studies have directly examined directional perception through interactive responses [38]. For instance, MusVis [25] applies machine learning but mainly targets general visual patterns (symmetries) or musical cues, rather than kinematic signals of directional processing. Likewise, other tools rely on static symmetrical or mirrored stimuli [16,20,24,25,28,39,40,41,42] (Table A1), overlooking real-time behavioral markers that reveal how children manage, or fail to manage, directional deficits. Because directional difficulties can precede academic deficits, such tasks are promising for early, preliminary risk assessment [23,32,43]. From existing studies, few tools appear to combine three key elements: (i) fine-grained kinematic behavioral analysis (click acceleration, fixation duration), (ii) a game-based format to maintain engagement, and (iii) explicit assessment of directional perception, an area particularly challenging to capture for dyslexia risk evaluation.

To address this gap, we introduce the Direction Game, a game-based task that uses mouse-tracking to capture behavioral signals related to directional confusion. The present study is designed as a preliminary feasibility study aimed at establishing the ecological validity of the proposed measurement methods within a real-world educational setting, where standardized school screening is the established ground truth. It is not presented as a definitive clinical validation study but rather as an effort to surface subtle behavioral indicators that are less visible in conventional assessments.

Specifically, this study addresses three research questions:

- (1) To what extent can a computer-based game utilizing only directional cues identify behavioral markers associated with dyslexia risk in young children?

- (2) Which additional mouse-tracking and gameplay variables provide significant predictive power beyond those used in prior studies?

- (3) Which classification approach, baseline models or ensemble models, yields superior performance for preliminary dyslexia risk assessment under a fivefold cross-validation framework?

The paper is structured as follows. Section 2 introduces the exploratory stage, including the pilot study and the development of the Direction Game. Section 3 presents the confirmatory stage, covering the dataset, feature extraction, and machine-learning validation. Section 4 reports the experimental results, Section 5 discusses findings and limitations, Section 6 provides recommendations, and Section 7 concludes the paper.

2. Pilot Study and Direction Game Design

Phase 1 consisted of an exploratory paper-based pilot designed to test the prototype game and evaluate the feasibility of direction-based tasks for young children. This phase aimed to gather feedback on task appropriateness and the User Interface (UI) and User Experience (UX) design of the game.

The special education experts provided guidance on the game design for screening directional confusion in young students at risk of dyslexia. The key recommendations included:

The effectiveness of tasks for measuring directional confusion.

The types of tasks required to accurately distinguish children with dyslexia.

The application of a graduated task-based approach (via progressive difficulty) to differentiate typically developing children from those with persistent difficulties.

Consequently, the paper prototype was created with three mini-games reflecting sub-indicators of directional understanding. The order of these mini-games was structured according to the experts’ recommendations.

2.1. Pilot Study

Participants were recruited from two public schools in Mueang District, Lampang Province: Banpongsanook (BPN) and Suksasongkraojitaree (SKJ). BPN provided 18 second-grade students (aged 7–9), categorized as at-risk, high-performing, or average-performing. SKJ contributed six third-grade students (aged 8–10), all identified as at risk (as they were the only students in the school who met the inclusion criteria at the time). Schools were selected for variation in size, willingness to participate, and administrative support, ensuring contextual diversity.

The purposive sampling approach, guided by special education experts, was selected given the practical limitations in accessing formally diagnosed populations in Thailand. Due to the limited access to formal clinical services, such as those provided by clinical psychologists or special education assessment professionals, a common and acknowledged challenge in rural Thai schools, this approach was necessary to ensure the inclusion of at-risk students identified through school-based screening.

During administration, children were given clear explanations and group-based verbal instructions (“Draw a line from the fruit to the plate”). These tasks required sequential directional responses, which allowed the researchers to observe how students followed stepwise instructions.

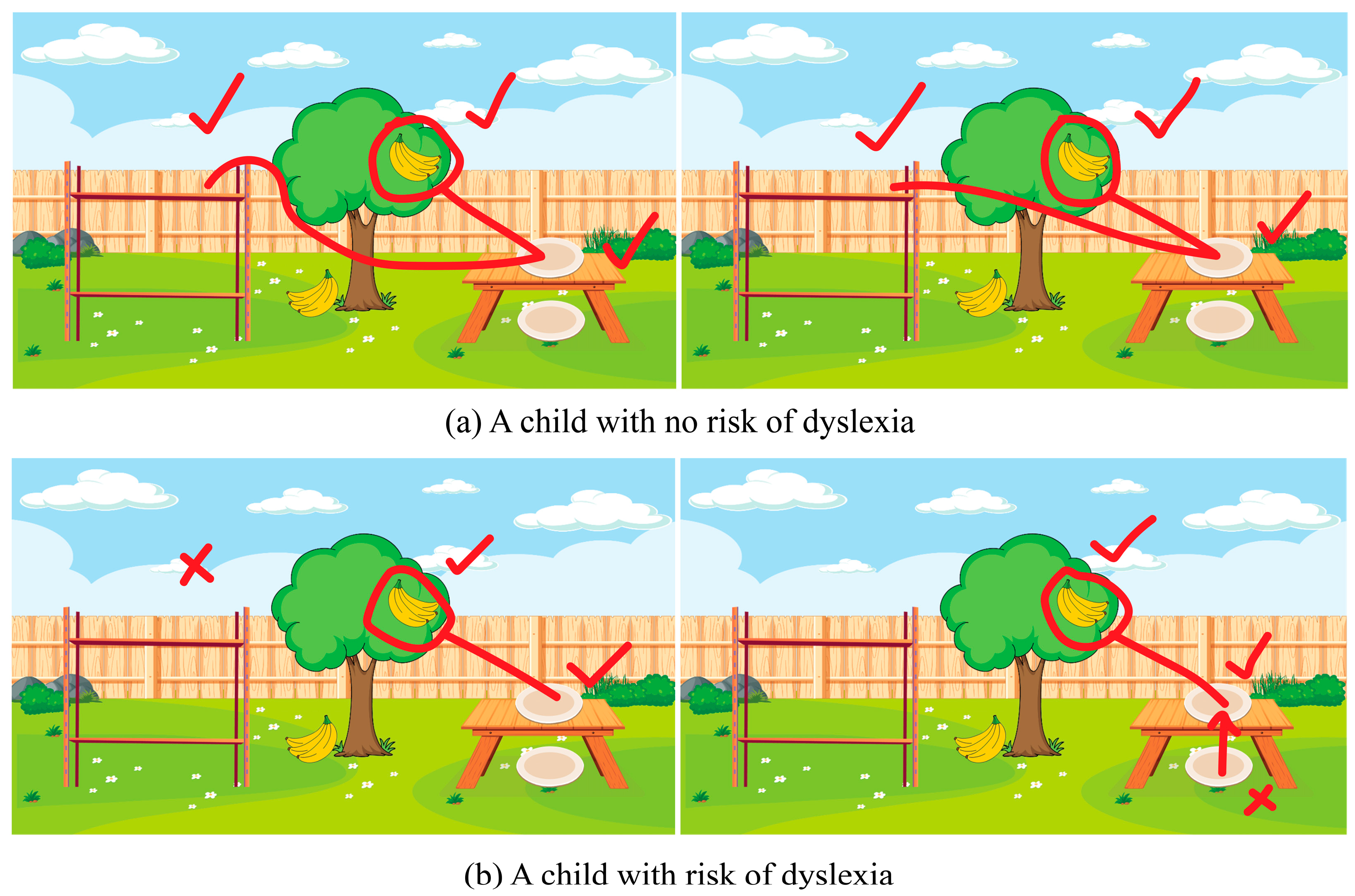

The observations indicated that some at-risk students struggled with spatial tasks (drawing a line from a plate to a shelf, see Figure 1), reflecting directional confusion consistent with prior findings [7,38,44]. However, the paper-based format captured only outcomes rather than underlying processes such as hesitation or error correction.

2.2. Game Design

Following the pilot study, the Direction Game was implemented in Unity3D (version 2021.3.20f1), incorporating the revised task structure and interface design to create a preliminary risk assessment tool for dyslexia in children. The game was designed with simple visuals, short text, and optional Thai audio to maximize accessibility for young users. Furthermore, the directional tasks are inherently language-independent and can be easily adapted to any language once the core commands are translated, thus supporting wider use across different linguistic populations.

The observed difficulty in drawing a line from one location to another, which may indicate possible directional confusion, emphasizes the need for data collection across a variety of cognitive and motor tasks. In addition to performance and accuracy-based outcomes, the game also needs to record mouse trajectories, providing supplementary motor-related data (extra movements, pauses) for later analysis [38,45]. The following section introduces the mini-games that implement these concepts.

2.3. Mini-Games

The Direction Game consists of three mini-games arranged in ascending difficulty, each addressing a distinct type of directional confusion: vertical (Up–Down), lateral (Left–Right), and cardinal orientation (North, South, East, and West). Each mini-game incorporated three levels of difficulty, easy, medium, and hard, structured across 10 rounds (3 easy, 4 medium, 3 hard). Before gameplay, children were given instructions to ensure they understood the mechanics. Examples of the three mini-games at different difficulty levels are shown in Figure 2. (The English translations of commands provided here are for publication purposes only.)

Mini-game #1: Fruit Picking (Vertical Perception): This task assessed vertical confusion (up–down). Participants clicked on fruit positioned at the top or bottom of a tree (“Click on the fruit at the top of the tree”) to test their interpretation of vertical spatial cues.

Mini-game #2: Fruit Passing (Lateral Perception): This task assessed left–right confusion. Participants passed fruit to the avatar’s left or right hand, with the avatar facing either toward or away from the player (“Pass the fruit to the left hand”).

Mini-game #3: Chicken Walking (Cardinal Orientation): This task assessed cardinal orientation. Participants guided a chicken character along a map using symbolic arrows representing North, South, East, and West (“Make the chicken walk up”).

Instructions were displayed both as on-screen text and as optional audio, which can be replayed. At the end, a summary screen showed the participant’s name with options to replay or exit.

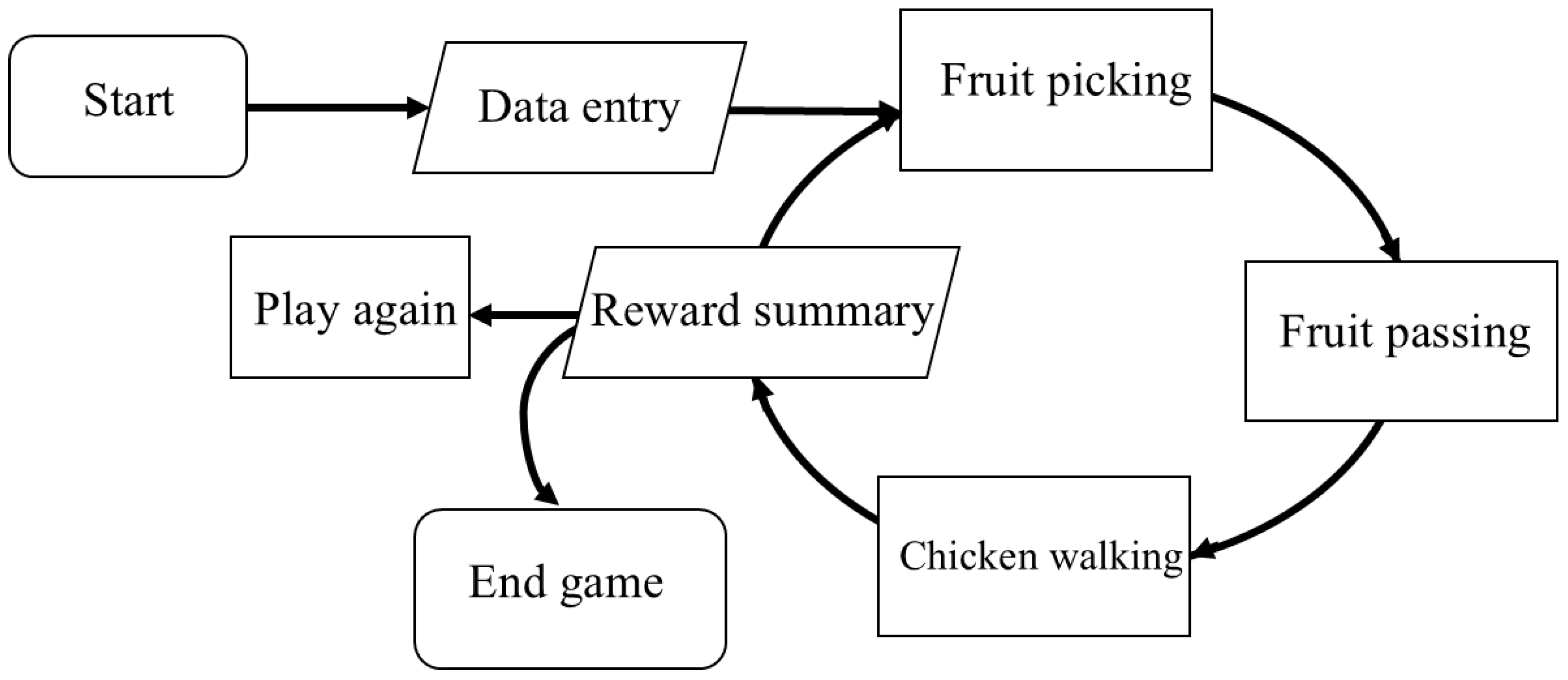

Figure 3 illustrates the overall flow of gameplay in the Direction Game.

2.4. Behavioral Assessment

The pilot study motivated a shift in the Direction Game from measuring performance outcomes alone to measuring, under controlled conditions, the process behind the player’s behavior. The core challenge is separating directional confusion from other variables that also affect mouse trajectories. To address this, this section outlines the task conditions used to control confounding factors and the behavioral features extracted for analysis.

2.4.1. Task Conditions

The Direction Game was designed to differentiate directional confusion from general task difficulty by varying cognitive and motor demands. Task conditions were categorized by Task Difficulty (TD), Cognitive Load (CL), and Motor Load (ML). Response Latency (RL, delay before the first response), Movement Time (MT, duration of cursor movement), and Movement Jerk (variability in movement acceleration) were observed.

- (1) Baseline (Low load): This condition (Low TD, Low CL, and Low ML) was implemented in Mini-game #1: Fruit Picking. This task uses fixed, absolute spatial references with minimal cognitive or motor demand, serving to establish each child’s typical, non-confused kinematic profile (characterized by fast RL, fast MT, and low jerk).

- (2) Motor Load Control (High motor load): This condition is a variant of Fruit Picking designed to isolate motor execution demands rather than cognitive processing. By reducing the clickable target size, the task imposes high motor load but minimal cognitive load (High TD, Low CL, High ML). The expected behavioral pattern is a fast decision (low RL) paired with a longer MT featuring a deliberate and steady trajectory. This pattern reflects physical precision effort rather than hesitation arising from cognitive confusion.

- (3) Cognitive Load (Directional Confusion): Two mini-games were designed to impose high cognitive load while maintaining moderate motor demands (High TD, High CL, and Medium ML), each testing distinct forms of directional confusion:

Mini-game #2: Fruit Passing imposed higher cognitive demand through perspective-switching (avatar facing toward and away).

Mini-game #3: Chicken Walking required map-based navigation using cardinal directions, eliciting more complex planning demands.

Controlling baseline, motor load, and cognitive-load tasks provided a framework for distinguishing directional confusion from general motor or task demands. Together, these mini-games turned abstract spatial concepts into simple, engaging activities that were intended to enable child-friendly detection of directional difficulties.
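As an illustration only, the three kinematic quantities observed across these conditions (RL, MT, and Movement Jerk) could be derived from a timestamped cursor stream roughly as follows. This is a minimal sketch and not the study's implementation: the sample layout `(t_ms, x, y)`, the 5-pixel movement-onset threshold, and the function name `kinematic_summary` are assumptions, and jerk is approximated as the standard deviation of speed-based acceleration, following the description of Movement Jerk as variability in movement acceleration.

```python
from math import hypot
from statistics import pstdev


def kinematic_summary(samples, onset_ms=0.0, move_threshold_px=5.0):
    """Summarize one round of cursor data.

    samples: list of (t_ms, x, y) tuples ordered by time (assumed layout).
    onset_ms: time at which the instruction or stimulus appeared (assumed).
    Returns response latency (RL), movement time (MT), and a jerk proxy.
    """
    _, x0, y0 = samples[0]
    # Movement onset: first sample farther than the threshold from the start.
    start_idx = next(
        (i for i, (_, x, y) in enumerate(samples)
         if hypot(x - x0, y - y0) > move_threshold_px),
        None,
    )
    if start_idx is None:  # the cursor never left its starting position
        return {"rl_ms": None, "mt_ms": 0.0, "jerk": 0.0}

    rl_ms = samples[start_idx][0] - onset_ms
    mt_ms = samples[-1][0] - samples[start_idx][0]

    # Speed (px/ms) between consecutive samples, stamped with the later time.
    speeds = []
    for (t_a, x_a, y_a), (t_b, x_b, y_b) in zip(samples, samples[1:]):
        dt = t_b - t_a
        if dt > 0:
            speeds.append((t_b, hypot(x_b - x_a, y_b - y_a) / dt))

    # Acceleration (px/ms^2) between consecutive speed estimates.
    accels = [(v_b - v_a) / (t_b - t_a)
              for (t_a, v_a), (t_b, v_b) in zip(speeds, speeds[1:])]

    # Jerk proxy: variability of acceleration over the round.
    jerk = pstdev(accels) if len(accels) > 1 else 0.0
    return {"rl_ms": rl_ms, "mt_ms": mt_ms, "jerk": jerk}
```

Under this sketch, a baseline round would be expected to yield small `rl_ms`, small `mt_ms`, and low `jerk`, whereas the motor-load control would mainly lengthen `mt_ms` while leaving `rl_ms` short.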

2.4.2. Behavioral Features

Raw gameplay logs and mouse-tracking streams were processed to capture behavioral variables. Informed by prior studies [22,24,25,26,27,28,29,30,31], these variables were organized into three construct-driven domains: Organizational Skills (OS) [46,47], Concentration (CONC) [47,48], and Performance (PF) [49,50], which reflect task efficiency, attentional control, and outcome performance, respectively.

The detailed operationalization of these constructs, mapping them from raw data measures into 20 behavioral variables, is presented in Table A2. The complete feature extraction and engineering process is described in Section 3.4.

3. Validation Study Using Machine Learning Models

Phase 2 is a validation study designed to collect gameplay and mouse-tracking data from 102 participants for machine learning analysis.

3.1. Experimental Design

Research variables and control of confounding factors: The independent variable was group classification (at-risk and non-at-risk), while the dependent variables were behavioral features, including response time, movement dynamics, mouse clicks, and accuracy. All tasks used mouse clicks only, minimizing fine-motor demands.

Study procedure and data collection flow: Children received standardized instructions before gameplay and then completed the three mini-games targeting vertical, lateral, and cardinal directions. Data were collected in a controlled environment using notebook computers (1920 × 1080). Mouse trajectories were recorded in real time with Ogama (version 5.1, 2021), a behavioral data logging software. Motor behaviors such as extra movements or pauses were not task outcomes but were captured as supplementary data.

3.2. Participants, Process, and Tools

The participants were 102 students (ages 7–12; mean = 8.5, SD = 1.1; Grades 2–4) from Ban Pong Sanuk School, Lampang, Thailand. The school’s special-education teacher classified students as at-risk (n = 58) or non-at-risk (n = 44). All students had undergone routine school-based screening for reading and learning difficulties prior to data collection.

Standardized school screening process: In Thai primary schools, learning-difficulty screening follows a multi-week, curriculum-embedded process rather than a single assessment. In this study, all participants had undergone at least one academic term (≈4 months) of preliminary screening, including behavioral observation records, curriculum-based assessments, and Tier-1 risk-screening instruments administered by trained special-education teachers.

Screening and group classification: Classification was based on classroom observations and two standardized Tier-1 tools mandated in Thai public schools: (1) the Office of the Basic Education Commission (OBEC) Checklist for classifying the types of disabilities, a standard instrument mandated by the Ministerial Regulation B.E. 2550 (2007) requiring initial screening for eligibility to special education services, and (2) the Kasetsart University Screening Inventory (KUS-SI), developed by Kasetsart University Laboratory School (KUS) and the Faculty of Medicine Siriraj Hospital (SI). The KUS-SI has shown strong correspondence with clinical assessments (>94% diagnostic agreement; ≥90% scoring accuracy) [51,52,53,54], supporting its suitability for school-based risk identification. Although not equivalent to clinical diagnosis, these instruments represent the most feasible evidence-based approach in Thai primary schools, where access to psychologists and diagnostic services, especially in rural areas, is limited.

3.3. Data Collection and Preparation

For the classification experiments, the dataset was organized into five systematically defined feature configurations to compare predictive performance and interpretability. Here, n refers to the number of features included in each configuration.

- (1) Game Element Features (n = 8): In-game telemetry variables (misses, hits, score, play time).

- (2) Mouse-Tracking Features (n = 90): Cursor dynamics including movement, fixation duration, clicks, velocity (horizontal velocity (Vx) and vertical velocity (Vy)), and acceleration (a) along X–Y axes.

- (3) All Features (n = 98): Combined game-related and mouse-tracking variables.

- (4) Behavioral Features (n = 20): Construct-driven indicators mapped from raw features into three domains: OS, CONC, and PF, as defined in Section 3.4.

- (5) Selected Features (n = 18): A compact subset carried forward as the main basis for classification and subsequent analysis of interaction patterns (see Section 3.6).

To ensure validity and avoid data leakage, all preprocessing was performed within each training fold prior to model evaluation.

3.4. Feature Extraction and Engineering

Raw gameplay logs and mouse-tracking streams were processed into all quantifiable variables. To improve interpretability, we map these measures onto our behavioral framework (introduced in Section 2.4.2). These behavioral variables are grouped into our three construct-driven domains:

- (1) OS: Indicators of task efficiency and error control from game telemetry. Subcategories:

Speed and Latency (SL) (Time to first click, Total play time), commonly used as indicators of task efficiency and information-acquisition timing in mouse-based attention research [47].

Accuracy and Error Control (AEC) (Misses), consistent with accuracy deficits observed in dyslexia [46].

- (2) CONC: Indicators of attentional and visuo-motor control. Subcategories:

Attentional Control (AC), which includes Fixation Behavior (FB) (Fixation duration, defined as pauses ≥ 100 ms within a 20-pixel radius), Hesitation and Re-checking (HRC) (Number of duplicate clicks, Time difference in clicks), and Exploration and Focus (EVF) (Trajectory length). These measures have been used in prior mouse-tracking and visuomotor-decision studies as indicators of hesitation, impulsive re-clicking, and cognitive load [47,48,55].

Visuo-motor Planning and Dynamics (VMPD), which includes Movement Amplitude (MA) (Mouse movement (count)) and Kinematics (KIN) (of mouse movement (Std)). Duplicate clicks (points ≥ 2 within ≤20 pixels) were coded as repeated attempts and interpreted as corrective visuo-motor adjustments [47,48,56,57,58].

- (3) PF: Outcome-based indicators of accuracy and throughput. Subcategories:

Accuracy and Error Control (AEC) (Score/success rate), a standard outcome measure in reading- and visuomotor-related performance assessments [49,50].

Throughput (THR) (Speed (clicks/second)), commonly used as an indicator of processing efficiency and motor fluency in child–computer interaction studies [49,50].
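The two threshold-based definitions above (fixations as pauses of at least 100 ms within a 20-pixel radius, and duplicate clicks as repeated points within 20 pixels) can be illustrated with a short sketch. It is not the study's extraction code: the `(t_ms, x, y)` sample layout, the anchor-based grouping of samples into a fixation, and the rule that a click is a duplicate when it lands within the radius of the immediately preceding click are all assumptions made for illustration.

```python
from math import hypot


def fixation_durations(samples, radius_px=20.0, min_ms=100.0):
    """Return durations (ms) of pauses that qualify as fixations.

    samples: list of (t_ms, x, y) cursor samples ordered by time (assumed).
    A pause is a run of samples staying within radius_px of the run's first
    sample; it counts as a fixation if it lasts at least min_ms.
    """
    durations = []
    i, n = 0, len(samples)
    while i < n:
        t0, x0, y0 = samples[i]
        j = i + 1
        # Extend the run while the cursor stays near the anchor sample.
        while j < n and hypot(samples[j][1] - x0, samples[j][2] - y0) <= radius_px:
            j += 1
        duration = samples[j - 1][0] - t0
        if duration >= min_ms:
            durations.append(duration)
        i = j  # continue from the first sample that left the radius
    return durations


def count_duplicate_clicks(clicks, radius_px=20.0):
    """Count clicks landing within radius_px of the preceding click.

    clicks: list of (x, y) click positions in temporal order (assumed).
    """
    return sum(
        1
        for (x_a, y_a), (x_b, y_b) in zip(clicks, clicks[1:])
        if hypot(x_b - x_a, y_b - y_a) <= radius_px
    )
```

Per-round summaries such as total or mean fixation duration and the duplicate-click count could then be aggregated per child before being mapped to the FB and HRC subcategories.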

The 20 behavioral variables (Table A2) were derived from construct-to-behavior mapping informed by prior studies on mouse-tracking and reading-related behaviors [2,3,46,47,59,60,61]. A hybrid feature-selection procedure then reduced these to the Selected-18 set (Table A3). Because many of these engineered variables reflect correlated aspects of the same visuomotor processes, six representative indicators, covering the core dimensions of OS, CONC, and PF, were chosen to summarize broader behavioral patterns. The remaining variables were retained for modeling but not emphasized due to redundancy or weaker discriminative value.

3.5. Modeling

To compare model performance for dyslexia risk classification, we tested models of varying complexity. Baseline algorithms included Gaussian Naive Bayes, Decision Tree, Support Vector Machine (SVM), AdaBoost, and Random Forest (RF). In addition, two ensemble strategies combining RF and SVM were implemented: RF–SVM Voting and RF–SVM Stacking.

Models were selected for interpretability, efficiency, and suitability for small-sample contexts, with priority given to simpler classifiers. All experiments followed a two-stage framework: (i) feature set specification and (ii) comparative model evaluation. Models were trained and validated using stratified 5-fold cross-validation with a fixed random seed. To address class imbalance, the Synthetic Minority Oversampling Technique (SMOTE) was applied within each training fold to prevent data leakage.
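The leakage-avoidance logic described here, in which oversampling touches only the training portion of each fold, can be sketched in plain Python. The code below is an illustrative stand-in rather than the study's pipeline: `stratified_folds`, `smote_like`, `nearest_centroid`, and `cross_validate` are hypothetical helper names, the oversampler is a simplified SMOTE-style interpolation toward the single nearest same-class neighbor (standard SMOTE samples among several nearest neighbors), and the nearest-centroid classifier is only a placeholder for the models listed above.

```python
import random


def stratified_folds(labels, k=5, seed=42):
    """Split indices into k folds, preserving class proportions."""
    rng = random.Random(seed)
    folds = [[] for _ in range(k)]
    for cls in sorted(set(labels)):
        idx = [i for i, y in enumerate(labels) if y == cls]
        rng.shuffle(idx)
        for pos, i in enumerate(idx):
            folds[pos % k].append(i)
    return folds


def smote_like(X, y, seed=42):
    """Balance classes by interpolating between minority samples.

    Simplified: each synthetic point lies between a random minority sample
    and its nearest minority neighbor.
    """
    rng = random.Random(seed)
    counts = {c: y.count(c) for c in sorted(set(y))}
    minority = min(counts, key=counts.get)
    pool = [x for x, c in zip(X, y) if c == minority]
    X_new, y_new = list(X), list(y)
    if len(pool) < 2:
        return X_new, y_new
    for _ in range(max(counts.values()) - len(pool)):
        a = rng.choice(pool)
        b = min((p for p in pool if p is not a),
                key=lambda p: sum((u - v) ** 2 for u, v in zip(a, p)))
        lam = rng.random()
        X_new.append([u + lam * (v - u) for u, v in zip(a, b)])
        y_new.append(minority)
    return X_new, y_new


def nearest_centroid(X_tr, y_tr, X_te):
    """Placeholder classifier: assign each test row to the closest class mean."""
    cents = {}
    for c in set(y_tr):
        rows = [x for x, yy in zip(X_tr, y_tr) if yy == c]
        cents[c] = [sum(col) / len(rows) for col in zip(*rows)]
    return [min(cents, key=lambda c: sum((u - v) ** 2 for u, v in zip(x, cents[c])))
            for x in X_te]


def cross_validate(X, y, fit_predict, k=5, seed=42):
    """Per-fold accuracy; oversampling is applied to training data only."""
    accuracies = []
    for test_idx in stratified_folds(y, k, seed):
        held_out = set(test_idx)
        train_idx = [i for i in range(len(y)) if i not in held_out]
        X_tr, y_tr = smote_like([X[i] for i in train_idx],
                                [y[i] for i in train_idx], seed)
        preds = fit_predict(X_tr, y_tr, [X[i] for i in test_idx])
        hits = sum(p == y[i] for p, i in zip(preds, test_idx))
        accuracies.append(hits / len(test_idx))
    return accuracies
```

The key design point is that the held-out fold is never passed to `smote_like`, so no synthetic sample is derived from a child whose data is used for evaluation in that fold.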

3.6. Data Analysis

Before classification, descriptive and inferential analyses were performed on the 20 behavioral variables to characterize group differences (at-risk and non-at-risk). For each variable, group means and standard deviations were computed and compared using Welch’s t-test (α = 0.05). Effect sizes were calculated as Cohen’s d with 95% confidence intervals, with positive values indicating higher scores in the at-risk group. The results are summarized in Table A4, which reports statistical significance (p-values) and effect sizes for each variable. These analyses were used for feature characterization; the classification results are reported in Section 4.
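For readers who wish to reproduce this kind of comparison, the Welch statistic and Cohen's d can be computed as in the following sketch. It uses the standard textbook formulas (Welch–Satterthwaite degrees of freedom; pooled-standard-deviation d) and is not taken from the study's analysis scripts; the function names are hypothetical, and the p-value and confidence interval are omitted because they require a t-distribution routine from a statistics library.

```python
from math import sqrt
from statistics import mean, variance


def welch_t(at_risk, non_at_risk):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom."""
    n_a, n_b = len(at_risk), len(non_at_risk)
    se_a = variance(at_risk) / n_a        # squared standard error, group A
    se_b = variance(non_at_risk) / n_b    # squared standard error, group B
    t = (mean(at_risk) - mean(non_at_risk)) / sqrt(se_a + se_b)
    df = (se_a + se_b) ** 2 / (se_a ** 2 / (n_a - 1) + se_b ** 2 / (n_b - 1))
    return t, df


def cohens_d(at_risk, non_at_risk):
    """Cohen's d with a pooled SD; positive means higher in the at-risk group."""
    n_a, n_b = len(at_risk), len(non_at_risk)
    pooled_sd = sqrt(((n_a - 1) * variance(at_risk)
                      + (n_b - 1) * variance(non_at_risk)) / (n_a + n_b - 2))
    return (mean(at_risk) - mean(non_at_risk)) / pooled_sd
```

Passing the at-risk group as the first argument keeps the sign convention stated above, so that a positive d indicates higher scores among at-risk children.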

Model performance was evaluated using complementary metrics. The primary metric was the Area Under the Receiver Operating Characteristic Curve (ROC-AUC). In addition, the Area Under the Precision–Recall Curve (AUPRC) was reported to emphasize correct identification of the at-risk class under class imbalance. Accuracy, Sensitivity (Recall), Specificity, and Cohen’s κ were calculated to provide threshold-dependent measures and agreement beyond chance.
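A minimal sketch of how several of these metrics follow from predictions is given below. It uses the standard definitions and assumes binary labels with the at-risk class coded as 1 and both classes present in the evaluated data; the function names are hypothetical, AUPRC is omitted for brevity, and ROC-AUC is computed through its rank interpretation (the probability that a randomly chosen at-risk case receives a higher score than a randomly chosen non-at-risk case, with ties counted as one half).

```python
def confusion_metrics(y_true, y_pred, positive=1):
    """Sensitivity, specificity, accuracy, and Cohen's kappa for binary labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    tn = sum(t != positive and p != positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    n = len(pairs)
    accuracy = (tp + tn) / n
    # Agreement expected by chance, from the marginal totals.
    p_chance = ((tp + fp) * (tp + fn) + (tn + fn) * (tn + fp)) / n ** 2
    return {
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
        "accuracy": accuracy,
        "kappa": (accuracy - p_chance) / (1 - p_chance),
    }


def roc_auc(y_true, scores, positive=1):
    """ROC-AUC as the fraction of (positive, negative) pairs ranked correctly."""
    pos = [s for t, s in zip(y_true, scores) if t == positive]
    neg = [s for t, s in zip(y_true, scores) if t != positive]
    wins = sum(1.0 if p > q else 0.5 if p == q else 0.0
               for p in pos for q in neg)
    return wins / (len(pos) * len(neg))
```

Because ROC-AUC depends only on the ranking of scores, it is unaffected by the decision threshold, whereas sensitivity, specificity, accuracy, and κ change with it.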

To characterize broader behavioral tendencies, six representative indicators, duplicate clicks (HRC), fixation duration (FB), accuracy (PF-AEC), misses (OS-AEC), time difference between clicks (HRC), and trajectory length (EVF), were selected to summarize performance across the three behavioral domains (OS, CONC, PF) [33,34,36,38,46,47,55,57,58]. Five exploratory profiles were hypothesized and later examined against empirical clustering results (Section 4.4). The profiles were defined as follows:

Profile 1—Hesitation: High duplicate clicks, long fixation durations, extended click interval, and increased trajectory length.

Profile 2—Impulsivity: High duplicate clicks, short fixation durations, short click intervals (rapid corrective movements).

Profile 3—Deliberate processing: Low-to-moderate duplicates, long fixation durations, extended click interval, and high accuracy.

Profile 4—Fluent performance: Low duplicates, short fixation durations, high accuracy, and minimal misses.

Profile 5—Disengagement: Overall low engagement across fixation and duplicates, coupled with low accuracy and elevated misses.

5. Discussion

We evaluated the Direction Game, designed to distinguish directional confusion from motor difficulty. Framed by three behavioral domains: OS, CONC, and PF, the most informative signals in this dataset were found within the CONC domain, especially AC and VMPD, while PF contributed little. These results are consistent with the experimental rationale and indicate that mouse-tracking can capture behavioral markers relevant to early screening.

5.1. Interpretation of Key Findings

As shown in Table 1, the Selected-18 consistently offered the best balance between predictive performance and interpretability. This outcome highlights the value of combining construct-driven mapping with statistical filtering when deriving behavioral indicators.

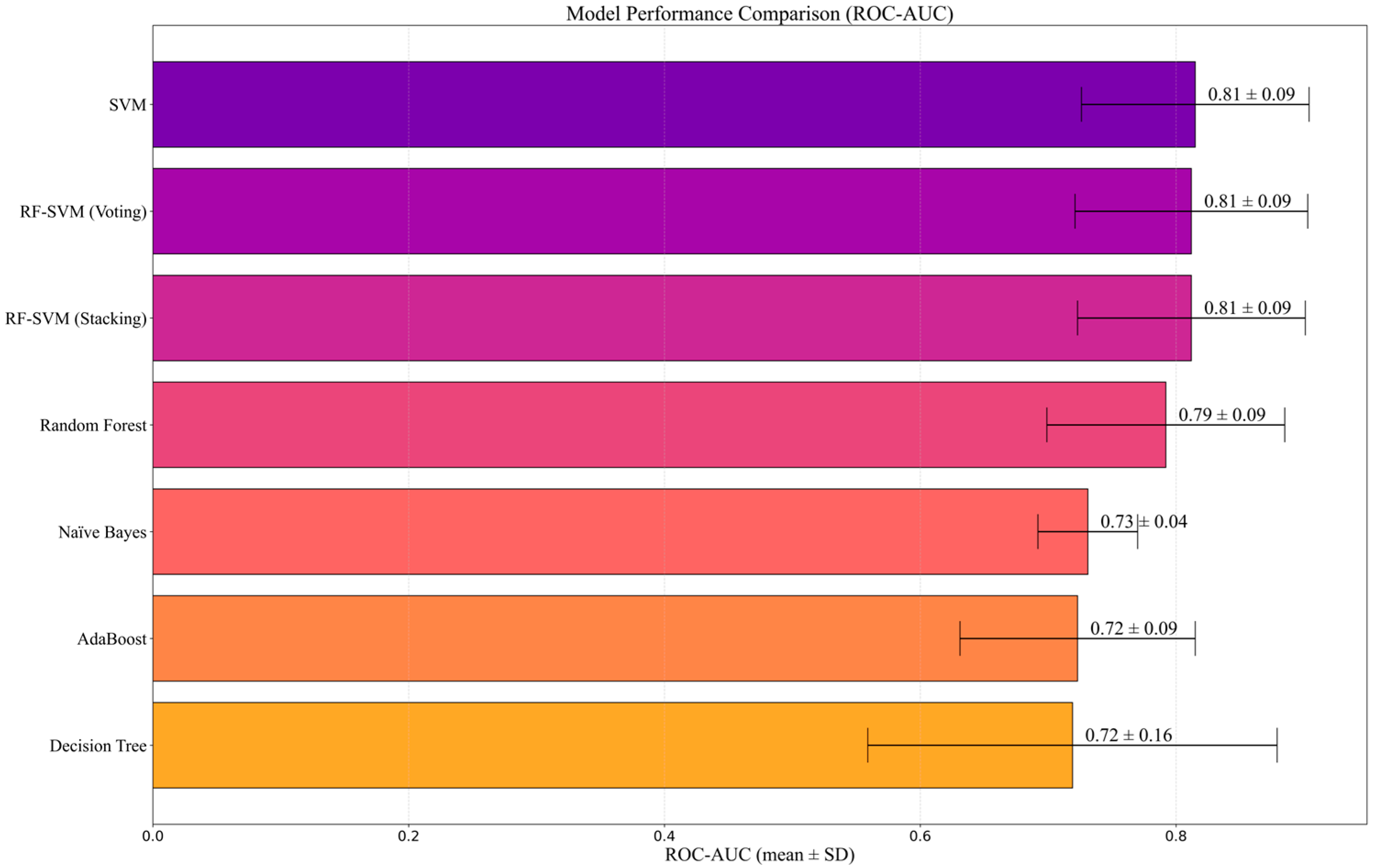

Model selection. While the top three models (SVM, RF–SVM Voting, and RF–SVM Stacking, as shown in Table 2 and Figure 4) showed no statistically significant differences, SVM was retained as the reference model. It offered simplicity, lower computational cost, and relative robustness under modest sample conditions, which is preferable at the feasibility stage where marginal gains from ensembles may not justify added complexity. In contrast, Naive Bayes produced the highest specificity but substantially lower sensitivity, indicating a tendency to under-identify at-risk students, an unfavorable trade-off for Tier-1 screening contexts where capturing potential risk cases is prioritized.

Behavioral and outcome features. Differences between at-risk and non-at-risk children were more apparent in fine-grained mouse-interaction measures than in overall task accuracy. This was expected by design: the task difficulty was intentionally kept low so that both groups could achieve similar accuracy, allowing process-level behaviors, such as extended fixations, higher duplicate clicks, or longer trajectories (Table A3 and Table A4), to become more observable. Within the Concentration domain, these patterns were consistent with behaviors observed during gameplay, including slower decision transitions and repeated clicking during movement delays. These observations reflect interaction tendencies within the task environment and are not interpreted as indicators of underlying cognitive mechanisms.

Behavioral interaction profiles. Exploratory clustering of six behavioral variables revealed patterns resembling hesitation, impulsivity, deliberate processing, fluent performance, and disengagement (Figure 5, Table A5). Hesitation (Cluster 3) showed prolonged fixations and extended cursor trajectories, consistent with decision uncertainty [33,36,47,55]. The Impulsivity pattern (Cluster 0) exhibited rapid duplicate clicks, short click intervals, and many misses [57,58]; however, these behaviors may also reflect frustration, low confidence, motor difficulties, or misunderstanding of instructions, as supported by previous research [57,58,62]. Disengagement was less distinct, with low engagement but relatively high accuracy, suggesting task demands were insufficient to elicit typical performance drops. At-risk children were more often in clusters with longer trajectories, prolonged fixations, higher duplicate clicks, short intervals, and more misses, whereas non-at-risk children showed shorter trajectories, fewer fixations, lower duplicate clicks, and fewer misses. These raw behavioral indicators serve as preliminary signals for early risk assessment rather than diagnostic evidence.
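To make the exploratory clustering step concrete, the following sketch shows a plain K-means procedure applied to standardized indicators. It is an assumption-laden illustration rather than the analysis code used in this study: the z-score standardization, random initialization from data rows, fixed seed, and helper names `zscore_columns` and `kmeans` are all hypothetical choices, and in practice a library implementation with multiple restarts would normally be preferred.

```python
import random
from statistics import mean, pstdev


def zscore_columns(rows):
    """Standardize each column so no indicator dominates by scale."""
    cols = list(zip(*rows))
    mus = [mean(c) for c in cols]
    sds = [pstdev(c) or 1.0 for c in cols]  # guard against constant columns
    return [[(v - m) / s for v, m, s in zip(r, mus, sds)] for r in rows]


def kmeans(rows, k, iters=50, seed=0):
    """Lloyd's algorithm: alternate nearest-center assignment and mean update."""
    rng = random.Random(seed)
    centers = [list(r) for r in rng.sample(rows, k)]
    labels = [0] * len(rows)
    for _ in range(iters):
        new_labels = [
            min(range(k),
                key=lambda c: sum((a - b) ** 2 for a, b in zip(r, centers[c])))
            for r in rows
        ]
        for c in range(k):
            members = [r for r, lab in zip(rows, new_labels) if lab == c]
            if members:  # keep the old center if a cluster becomes empty
                centers[c] = [mean(col) for col in zip(*members)]
        if new_labels == labels:
            break  # assignments stopped changing
        labels = new_labels
    return labels, centers
```

With six indicators per child, `rows` would hold one six-element list per participant and `k=5` would correspond to the five hypothesized profiles; cluster numbering is arbitrary and carries no ordering.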

Evaluation trade-offs. Complementary metrics (ROC–AUC, AUPRC, sensitivity, specificity, accuracy, and Cohen’s κ) helped characterize model behavior under class imbalance. These metrics revealed expected trade-offs between identifying more at-risk cases and minimizing false-positives. For Tier-1 screening, prioritizing sensitivity may be acceptable as long as the false-positive rate remains manageable.

Overall, the findings indicate that the Direction Game can capture interaction-level signals beyond outcome accuracy, indicate suitable model options for small-sample feasibility testing, and reveal observable interaction styles. Collectively, these results offer preliminary evidence that process-level behavioral analysis may support early risk identification in school-based Tier-1 settings.

5.2. Benchmarking Against Existing Dyslexia Screening Tools

Existing non-linguistic tools, such as MusVis or Whac-A-Mole variants, typically emphasize outcome-level measures (accuracy or response time) and rely on static visual or symbolic cues [25,30]. In contrast, the Direction Game captured process-level interaction signals through mouse tracking, which may reflect aspects of attentional control and planning effort, as suggested by prior research (see Table A3 and Table A4). This extends prior work linking mouse dynamics to cognitive states [33,34,35,36,37] while retaining a simple, low-resource format suitable for Tier-1 school contexts.

Other tools remain constrained by language dependence or specific age ranges (GraphoLearn, Lucid Rapid, Askisi-Lexia, Galexia, Fluffy) [20,22,40,42,63,64]. By comparison, the Direction Game requires only minimal linguistic mediation, making it potentially more adaptable in multilingual classrooms.

Finally, systems such as Beat by Beat demonstrate the potential of multimodal sensing but require costly hardware and setup [19]. The present approach offers a low-overhead alternative (PC + mouse) that may later be integrated into broader multimodal frameworks. Comparative performance claims are beyond the scope of this study.

5.3. Limitations

This feasibility study has several limitations. First, the sample was purposive and drawn from a single school, with an at-risk proportion higher than the typical prevalence. This limits generalizability and introduces potential selection bias. The class distribution was also inverted relative to real-world screening (more at-risk than non-at-risk). Although SMOTE was applied during training, such rebalancing may influence threshold-dependent metrics.

Second, model performance was based solely on internal cross-validation. External validation, clinical benchmarking, and longitudinal follow-up were not conducted. The modest sample size further constrains reliability, and test–retest stability was not examined.

Third, the measurement context imposes constraints. Mouse-tracking was analyzed without concurrent neuropsychological validation, and unmeasured confounders, such as motor difficulties, attention problems, or device familiarity, may have influenced the results. The sessions were brief and conducted on standard PCs with minimal supervision. Post-task interviews or detailed behavioral observations were not included, limiting confirmation of whether specific patterns (rapid duplicate clicks) reflected cognitive impulsivity or situational factors such as frustration or misunderstanding.

Fourth, participant classification relied on school-based OBEC and KUS-SI screening rather than clinical diagnosis. Although this approach does not include biological or clinical confirmation, it adheres to standardized educational screening protocols. Therefore, predictive performance should be interpreted within the context of these educational standards rather than as a substitute for clinically validated diagnoses.

Fifth, behavioral features used in clustering were derived from the same raw interaction data and may be correlated, challenging the independence assumption of K-means. Clusters should be interpreted as exploratory profiles of response styles rather than distinct latent classes.

Finally, multiple univariate comparisons were performed; although effect sizes were reported, this may not fully account for Type I error. Predictive performance should be interpreted relative to the school-based screening labels (OBEC, KUS-SI) used for classification and not as equivalent to confirmed clinical diagnoses. These findings represent preliminary feasibility evidence rather than definitive diagnostic accuracy.

7. Conclusions

This study examined the preliminary feasibility of the Direction Game, a game-based task using mouse tracking for the school-based screening of at-risk students. Phase 1 focused on developing directional tasks, while Phase 2 applied machine learning models to evaluate behavioral features.

The findings offered initial insights into the Research Questions (RQ):

RQ1: Process-level interaction measures showed clearer group differences than outcome-level scores, suggesting their potential relevance for identifying interaction patterns associated with risk status, though further validation is required.

RQ2: A compact statistically filtered subset (Selected-18), which emphasized interaction measures, yielded more consistent improvements than larger feature sets, supporting the value of reducing complexity while retaining predictive power.

RQ3: Among the leading models, overall performance was broadly comparable. SVM was used as a practical reference because of its simplicity and suitability for small-sample contexts, while ensemble methods remain viable when added complexity is acceptable.

Exploratory analysis also indicated tentative interaction profiles (impulsivity-like or hesitation patterns). However, as noted in the limitations, these should be interpreted as behavioral response styles rather than distinct clinical subtypes and require further validation with larger, clinically assessed samples.

The behavioral indicators identified here provide preliminary signals that may support the design of game-based tools to complement teacher-led questionnaire screenings in Tier-1 assessments. By adding objective behavioral data to subjective evaluations, such tools could strengthen early identification in schools, though further validation is still required.