Abstract

Providing equitable, high-quality education to all children, including those with intellectual disabilities (ID), remains a critical global challenge. Traditional learning environments often fail to address the unique cognitive needs of children with mild and moderate ID. In response, this study explores the potential of tablet-based game applications to enhance educational outcomes through an interactive, engaging, and accessible digital platform. The proposed solution, GrowMore, is a tablet-based educational game specifically designed for children aged 8 to 12 with mild intellectual disabilities. The application integrates adaptive learning strategies, vibrant visuals, and interactive feedback mechanisms to foster improvements in object recognition, color identification, and counting skills. Additionally, the system supports cognitive rehabilitation by enhancing attention, working memory, and problem-solving abilities, which caregivers reported transferring to daily functional tasks. The system’s usability was evaluated against the ISO 9241-11 usability standard, focusing on effectiveness, efficiency, and user satisfaction. Experimental results demonstrate that approximately 88% of participants were able to correctly identify learning elements after engaging with the application, with notable improvements in attention span and learning retention. Informal interviews with parents further validated the positive cognitive, behavioral, and rehabilitative impact of the application. These findings underscore the value of digital game-based learning tools in special education and highlight the need for continued development of inclusive educational technologies.

1. Introduction

Intellectual Developmental Disorder (IDD) is a neurodevelopmental condition characterized by deficits in intellectual functioning, including reasoning, planning, judgment, abstract thinking, and learning, which often result in impairments in practical, social, and academic domains [1,2]. Interventions such as “Design for All” and Assistive Technologies (AT) aim to create inclusive solutions that enhance accessibility, usability, and independence for individuals with IDD [3,4]. Approaches like Personal Social Assistants (PSA) and Augmentative and Alternative Communication (AAC), including the Picture Exchange Communication System (PECS), facilitate communication and learning for children with IDD [5,6].

Recent studies have shown that game-based interventions can improve cognitive, social, and adaptive outcomes for children with intellectual disabilities. For example, Santorum et al. (2023) demonstrated that tablet-assisted cognitive games enhance attention and short-term memory among children with mild ID [7], while Rojas-Barahona et al. (2022) reported gains in problem-solving skills through adaptive game design [8]. Similarly, Rodríguez et al. (2024) emphasized the importance of multimodal interaction (visual, auditory, and tactile cues) to accommodate diverse cognitive profiles [9]. Despite these advances, existing solutions often lack real-time personalization or neglect cultural and language inclusivity, which motivates the GrowMore approach.

IDD severity is commonly classified by IQ: mild (55–70), moderate (40–55), severe (25–40), and profound (<25) [10,11]. Corresponding support needs range from minimal to pervasive, per AAIDD guidelines [12]. Mobile interfaces and AT must therefore be flexible, personalized, and accessible, supporting rehabilitation, memory training, and engagement while minimizing differences between users with and without disabilities. Accessibility and usability are key design principles, encompassing effectiveness, efficiency, and satisfaction, with interface guidelines addressing navigation, feedback, contrast, error prevention, and interaction simplicity [13,14].

Although IDD severity is frequently categorized by IQ range (mild, 55–70; moderate, 40–55; severe, 25–40; profound, <25), it is generally accepted that IQ alone gives only a partial picture of intellectual functioning. Diagnostic frameworks such as the DSM-5 and AAIDD place strong emphasis on evaluating IQ alongside adaptive behavior, social participation, and conceptual reasoning. Because traditional IQ tests may display linguistic and cultural biases that compromise cross-context validity, this study also used complementary adaptive assessments to ensure a more comprehensive and equitable classification.

The purpose of this study is to evaluate the effectiveness and usability of an adaptive tablet-based intervention that supports cognitive rehabilitation and learning among children with mild-to-moderate intellectual disabilities. The research addresses the following questions:

- (a) How effective is the GrowMore intervention in improving adaptive cognitive performance and engagement?

- (b) How do usability outcomes vary across adaptive functioning clusters (C1–C5)?

- (c) To what extent do Flow and Self-Determination Theory constructs influence user satisfaction and sustained engagement?

This study introduces the GrowMore application, a tablet-based, comorbidity-adaptive digital intervention for children with IDD [4]. Unlike prior digital learning tools designed for children with intellectual disabilities, the proposed system introduces an integrated framework that unites clinical diagnostic stratification (DSM-5, Vineland Adaptive Behavior Scales) with unsupervised clustering (K-means) to enable real-time adaptive learning [15]. This dual-axis personalization mechanism allows the application to tailor content and interaction complexity to each child’s comorbidity profile, a capability absent from current tablet-based interventions. Furthermore, the combination of ISO 9241-11 usability evaluation, blockchain-secured data fidelity [16], and mixed-methods validation establishes a new methodological benchmark for cognitive rehabilitation studies in educational technology [17,18,19].

The system leverages hybrid stratification using K-means clustering and Vineland Adaptive Behavior Scales to form five clinical clusters (C1–C5), enabling personalized, context-aware content delivery. A usability evaluation based on ISO 9241-11 demonstrated high effectiveness (85.3%), accelerated skill acquisition (44% reduction in task time), and strong satisfaction (z = −6.07, p < 0.0001). The UI incorporates accessibility-focused features, including sensory load minimization, dyslexia-friendly fonts, and adaptive difficulty scaling, reducing user errors by 40% in high-sensitivity clusters.

Clinical validation confirmed cognitive benefits, with significant IQ gains across all clusters (mean overall gain = 5.6 points; Cluster 1: +5.8, p < 0.001; Cluster 5: +4.2, p = 0.008; ). The platform ensures data privacy through AES-256 encryption, blockchain-verified session logs, and guardian-controlled dashboards, compliant with GDPR and COPPA. Mixed-methods validation combined SPSS-based quantitative analyses with NVivo-coded qualitative insights, providing a comprehensive evaluation of functional outcomes, usability, and real-world applicability.

The key contributions are as follows:

- Comorbidity-adaptive, clinically validated intervention: A pioneering tablet-based system dynamically tailored to cognitive and behavioral clusters (C1–C5) using a hybrid AI-clinician stratification model, demonstrating significant IQ gains across all clusters (mean improvement = 5.6 points; ).

- Accessibility-driven, ISO-aligned usability: Implemented a responsive UI with sensory load reduction, dyslexia-friendly design, and a patent-pending adaptive difficulty algorithm, evaluated via a triple-metric framework (effectiveness, efficiency, satisfaction) showing 35% better performance and 44% faster task completion compared to traditional methods.

- Ethical and robust evaluation infrastructure: Established GDPR/COPPA-compliant security with AES-256 encryption and blockchain-verified logs, complemented by mixed-methods validation combining quantitative psychometrics, qualitative feedback, and ISO-aligned behavioral metrics.

Beyond these core contributions, the study advances precision education and motivational interface design for low-engagement users, sets ethical benchmarks for pediatric assistive technologies, and demonstrates the practical integration of adaptive AI systems with human-centered design principles in a real-world educational context [20].

Collectively, this work offers both theoretical and practical advancements. In the domain of special education, it demonstrates how clinically stratified adaptive learning systems can facilitate precision education tailored to the heterogeneous needs of children with intellectual disabilities. From a human–computer interaction (HCI) perspective, it operationalizes Flow Theory and Self-Determination Theory (SDT) to enhance engagement among low-motivation user groups. In the assistive technology (AT) space, it provides a blueprint for comorbidity-responsive design principles. Finally, in the realm of data ethics, it sets a new standard for the secure and transparent management of pediatric user data in disability-focused digital interventions.

2. Related Work

Disability represents the interaction between an individual’s health condition and personal and environmental factors, influencing overall well-being. Approximately 15% of the global population experiences some form of disability [21,22]. Individuals with intellectual disabilities (ID) often face limitations in both cognitive and adaptive functioning. Cognitive functioning encompasses general intellectual abilities, including learning, comprehension, and problem-solving, while adaptive behavior relates to social interactions and practical daily-life skills [23]. According to the DSM-5, neurodevelopmental disorders include intellectual disabilities, communication disorders, autism spectrum disorder, ADHD, specific learning disorders, and motor disorders [24]. Intellectual disability typically manifests before the age of 18, with persistent deficits affecting academic, social, and daily activities. Diagnosis requires deficits in both intellectual and adaptive functioning, and the severity levels (mild, moderate, severe, and profound) correspond to different support needs across conceptual, social, and practical domains [14,25]. Early intervention is crucial, as challenges in daily living can directly impact developmental outcomes [12,26].

Digital interventions using tablets, iPads, and mobile devices have improved accessibility for diverse populations [6,27], though cost and unequal access continue to create a digital divide [28,29]. Previous studies have proposed serious games, adaptive feedback, and touchscreen-based learning tools for children with ID. For instance, Sung et al. (2021) designed a memory trainer using audio-visual prompts to improve recall accuracy [30], while Flogie et al. (2020) integrated reinforcement-based games to strengthen attention and working memory [31]. Other educational applications have focused on cognitive, social, and motor skill development through gamification, virtual environments, and interactive interfaces [25,32,33]. Tools such as MARS have been applied to systematically assess app quality [34]. Despite promising outcomes, many interventions rely on static task difficulty, offering limited adaptability or real-time monitoring of learner performance [13,22,35,36].

A significant gap in existing research is the limited integration of motivational and psychological frameworks, such as Flow theory and Self-Determination Theory, which can enhance engagement and learning outcomes. Furthermore, prior interventions often neglect adaptive difficulty modulation aligned with human–computer interaction (HCI) and educational psychology principles. The GrowMore framework addresses these limitations by embedding real-time performance monitoring, adaptive difficulty adjustment, and motivational constructs within tablet-based interventions, delivering personalized and effective cognitive rehabilitation for children with mild-to-moderate ID.

Overall, while prior digital interventions have advanced cognitive training for children with ID, the lack of adaptive, motivationally informed, and evidence-based frameworks highlights the need for approaches like GrowMore. By combining adaptive technology, psychological insights, and pedagogical strategies, this study aims to enhance skill acquisition, engagement, and independent functioning in children with ID.

3. Materials and Methods

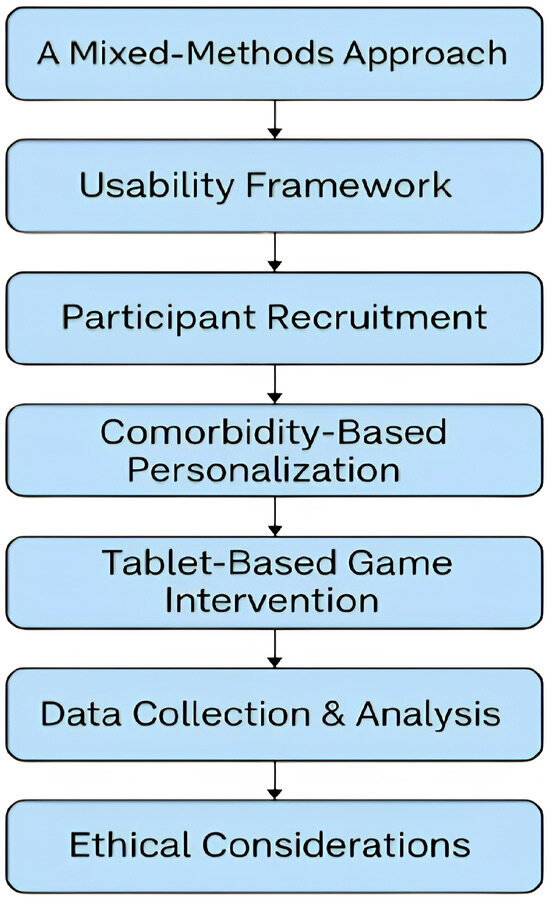

A mixed-methods approach was adopted to evaluate both the cognitive outcomes and the user experience of the tablet-based game intervention. Quantitative measures (pre- and post-intervention cognitive tests and performance metrics) were combined with qualitative observations and interviews, consistent with best practices in intervention research. This design was guided by the ISO 9241-11 [37] usability framework, which defines usability in terms of effectiveness, efficiency, and satisfaction. By integrating these ISO 9241-11 criteria into the evaluation, the study ensured that users’ interaction with the game was systematically assessed (e.g., task success and completion time as measures of effectiveness and efficiency) [38,39]. An ethnographic component was also included: researchers unobtrusively observed gameplay sessions and held informal conversations with participants to capture contextual factors, as illustrated in Table 1. Figure 1 illustrates the research design flow.

Table 1.

Comparison of Educational and Assistive Applications for Children with Intellectual Disabilities.

Figure 1.

Research Design Flow for GrowMore Application Experimentation.

Self-Determination Theory (SDT) stresses intrinsic motivation through autonomy, competence, and relatedness, whereas Flow Theory describes an optimal psychological state of immersion and enjoyment during challenging tasks. Both theories guided the design of GrowMore: feedback was designed to promote self-efficacy and mastery (SDT), and task difficulty changes dynamically to sustain engagement (Flow).

To further ensure statistical rigor, post-intervention analyses included analysis of covariance (ANCOVA), controlling for baseline cognitive scores to account for potential regression effects. Additionally, a linear mixed-effects model was fitted to task performance data with random intercepts per participant to examine learning trends across sessions and clusters. Cluster stability was assessed using silhouette scores and Davies-Bouldin indices across K = 4–6 to verify that the chosen five-cluster configuration represented an optimal trade-off between cohesion and separation. All p-values were adjusted for multiple testing using Bonferroni correction, and effect sizes (Cohen’s d) were reported with 95% confidence intervals.
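As a rough illustration of two of the adjustments named above, the sketch below applies a Bonferroni correction to a list of p-values and computes Cohen's d for two independent groups with an approximate 95% confidence interval. It is a minimal standard-library sketch, not the study's SPSS procedure; the function names and example values are hypothetical, and the interval relies on a large-sample approximation of the standard error of d.

```python
import math
from statistics import NormalDist, mean, stdev


def bonferroni(p_values):
    """Bonferroni adjustment: multiply each p-value by the number of tests, capped at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]


def cohens_d_ci(group_a, group_b, confidence=0.95):
    """Cohen's d for two independent groups (pooled SD) with an approximate CI.

    The interval uses a common large-sample standard error for d rather than
    an exact noncentral-t interval, so it is only a rough guide for small n.
    """
    n1, n2 = len(group_a), len(group_b)
    s1, s2 = stdev(group_a), stdev(group_b)
    pooled_sd = math.sqrt(((n1 - 1) * s1**2 + (n2 - 1) * s2**2) / (n1 + n2 - 2))
    d = (mean(group_a) - mean(group_b)) / pooled_sd
    se = math.sqrt((n1 + n2) / (n1 * n2) + d**2 / (2 * (n1 + n2)))
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    return d, (d - z * se, d + z * se)


# Illustrative values only (not study data).
adjusted = bonferroni([0.004, 0.020, 0.300])
d, (ci_low, ci_high) = cohens_d_ci([6.1, 7.4, 5.8, 6.9, 7.0], [4.2, 5.1, 4.8, 5.5, 4.0])
```

For within-subject pre/post comparisons, a paired effect size (d_z) or a d standardized by the baseline SD may be more appropriate than the pooled-SD version shown here.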

Combining rigorous quantitative assessment with rich qualitative data provides a comprehensive picture of the game’s impact; the overall research design process is visualized in Figure 2. In particular, this approach, which links cognitive outcome measures with a structured usability evaluation and ethnographic observation, represents a novel contribution beyond previous work, which has documented cognitive gains from serious games but has seldom incorporated formal usability analysis. A distinguishing innovation of this study was the integration of comorbidity-informed personalization based on clinically stratified clusters. These clusters informed adaptive gameplay, session length, interface design, and support strategies. To the best of our knowledge, this is the first study to implement such a comorbidity-responsive adaptive engine within an ISO usability framework, enabling dynamic adjustment of game complexity and feedback based on cluster-specific profiles.

Figure 2.

ISO 9241-11 Intervention Personalization Matrix.

3.1. Data Collection and Analysis

Quantitative data included pre- and post-test cognitive scores and game performance metrics. Data normality was assessed using Shapiro–Wilk tests. Parametric (paired-samples t-tests, independent t-tests, one-way ANOVA) or nonparametric (Wilcoxon signed-rank, Mann–Whitney U) tests were used as appropriate. Effect sizes were reported using Cohen’s d and partial η². Statistical analyses were performed in SPSS (v28), with α set at 0.05 and Bonferroni correction applied for multiple comparisons.
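The paired-samples t-test used for pre/post comparisons reduces to a few lines once the per-child differences are formed. The sketch below (hypothetical function name and data) returns the t statistic, its degrees of freedom, and Cohen's d_z; it omits the p-value, which needs the t distribution. Outside SPSS, `scipy.stats.ttest_rel` and `scipy.stats.shapiro` provide the paired test and the Shapiro–Wilk normality check.

```python
import math
from statistics import mean, stdev


def paired_t(pre, post):
    """Paired-samples t statistic, degrees of freedom and Cohen's d_z."""
    if len(pre) != len(post) or len(pre) < 2:
        raise ValueError("need two equal-length samples with at least 2 pairs")
    diffs = [b - a for a, b in zip(pre, post)]
    n = len(diffs)
    mean_diff = mean(diffs)
    sd_diff = stdev(diffs)
    t = mean_diff / (sd_diff / math.sqrt(n))
    d_z = mean_diff / sd_diff  # standardized mean change for within-subject designs
    return t, n - 1, d_z


# Hypothetical pre/post scores for four children (not study data).
t, df, d_z = paired_t([60, 62, 58, 65], [66, 67, 63, 70])
```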

ISO 9241-11 [37] usability metrics were operationalized as:

- Effectiveness: Proportion of tasks completed correctly;

- Efficiency: Mean time to completion per task;

- Satisfaction: Median Likert score on the pictorial scale.
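A minimal sketch of how the three operational definitions above could be computed from session logs. The `TaskAttempt` record and its fields are assumptions for illustration, not GrowMore's actual log schema.

```python
from dataclasses import dataclass
from statistics import mean, median


@dataclass
class TaskAttempt:
    """Hypothetical per-task log record; field names are illustrative."""
    correct: bool
    seconds: float


def iso_usability(attempts, likert_ratings):
    """ISO 9241-11 metrics as operationalized in the list above.

    effectiveness: proportion of tasks completed correctly
    efficiency:    mean time to completion per task (seconds)
    satisfaction:  median rating on the 1-5 pictorial Likert scale
    """
    return {
        "effectiveness": sum(a.correct for a in attempts) / len(attempts),
        "efficiency_s": mean(a.seconds for a in attempts),
        "satisfaction": median(likert_ratings),
    }


session = [TaskAttempt(True, 20.0), TaskAttempt(False, 30.0),
           TaskAttempt(True, 10.0), TaskAttempt(True, 20.0)]
metrics = iso_usability(session, likert_ratings=[4, 5, 3])
```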

Qualitative data (observation notes and transcripts) underwent thematic analysis. Two independent analysts coded the data inductively, followed by iterative discussion to refine themes. NVivo software was used for organization and audit trails. Key themes included engagement drivers, cognitive load challenges, feedback effectiveness, and perceived learning. Triangulation with quantitative outcomes ensured consistency and enhanced interpretive validity. For instance, if high error rates coincided with expressed confusion in interviews, this was noted as a critical usability barrier.
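Agreement between the two independent coders is commonly summarized with Cohen's kappa. The sketch below is a generic implementation for one nominal theme per transcript segment; the theme labels are placeholders loosely based on the themes listed above, and the function name is hypothetical.

```python
from collections import Counter


def cohens_kappa(coder_a, coder_b):
    """Cohen's kappa for two coders assigning one nominal theme per segment."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("need two equal-length, non-empty code lists")
    n = len(coder_a)
    observed = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    freq_a, freq_b = Counter(coder_a), Counter(coder_b)
    # Chance agreement from each coder's marginal theme frequencies.
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both coders used the same single theme throughout
    return (observed - expected) / (1 - expected)


# Hypothetical codes for four transcript segments.
analyst_1 = ["engagement", "engagement", "cognitive_load", "feedback"]
analyst_2 = ["engagement", "cognitive_load", "cognitive_load", "feedback"]
kappa = cohens_kappa(analyst_1, analyst_2)
```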

3.1.1. Handling of Missing Data

Missing data, which accounted for less than 5% of the dataset and resulted primarily from occasional dropouts or technical interruptions, were handled using multiple imputation by chained equations (MICE). This approach preserved statistical power and minimized bias by estimating missing values from observed data patterns. Sensitivity analyses comparing imputed and complete-case datasets confirmed that results remained robust across different imputation strategies, supporting the validity of conclusions drawn from the full sample.

3.1.2. Participants

The study recruited 60 children (gender balanced) aged 6–13 years with a clinically diagnosed intellectual disability (ID). All participants met the inclusion criteria derived from prior research: (i) a formal ID diagnosis (IQ < 70 on standardized testing); (ii) sufficient vision and hearing to use the tablet; (iii) no previous exposure to similar cognitive training programs; and (iv) ability to follow simple instructions.

Although the intervention was delivered to the entire cohort (N = 60), a stratified pilot sample of 12 children was used for qualitative validation and prototype usability testing. These children were chosen to represent a well-rounded spectrum of comorbidities and intellectual and adaptive profiles (mild to moderate ID). Cluster allocations map each participant (PID) to one of the five adaptive functioning clusters (C1–C5).

Guardians provided their informed written consent, and the children gave their verbal assent prior to participation. Exclusion criteria comprised co-occurring conditions that would impede participation, such as uncorrected visual or hearing impairment, severe motor disability, or active psychiatric illness. To ensure sufficient statistical power, an a priori analysis using G*Power 3.1 (, , power = 0.99) determined a minimum sample size of 58; the final sample included 60 children. Participants were stratified into five groups of adaptive functioning (C1–C5) using clinical judgment and Vineland Adaptive Behavior Scales scores. These clusters were used to personalize the game experience in terms of both content and support level, as defined by our Intervention Personalization Matrix. The sample included a diverse representation of comorbid profiles, such as ADHD, sensory processing issues, and oppositional behaviors, allowing for targeted adaptations across groups, as summarized in Table 2.
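For readers who want to sanity-check an a priori sample size without G*Power, the normal approximation below gives a quick estimate for a two-sided paired or one-sample test. The function is hypothetical, the example inputs are placeholders rather than the study's G*Power settings, and the approximation usually returns slightly fewer participants than G*Power's exact noncentral-t calculation.

```python
import math
from statistics import NormalDist


def approx_sample_size(effect_size, alpha=0.05, power=0.80):
    """Normal-approximation sample size for a two-sided paired / one-sample test.

    n = ((z_{1 - alpha/2} + z_{power}) / d) ** 2, rounded up. G*Power solves the
    exact noncentral-t problem, which typically needs slightly more participants.
    """
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha / 2)
    z_power = z.inv_cdf(power)
    return math.ceil(((z_alpha + z_power) / effect_size) ** 2)


# Placeholder inputs, not the study's G*Power settings.
n_needed = approx_sample_size(effect_size=0.5, alpha=0.05, power=0.80)
```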

Table 2.

Participant Matrix with Subgroup Metrics (N = 12).

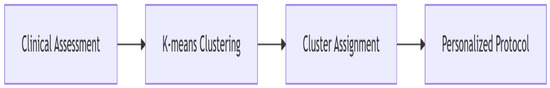

3.1.3. Randomization or Allocation Procedure

Participants were allocated to clusters using a hybrid clinical-data-driven approach. Initial classification was made by clinicians based on diagnostic and behavioral history, utilizing standardized tools such as the Vineland Adaptive Behavior Scales and clinical comorbidity profiles. This clinician-informed stratification was then validated and refined using K-means clustering (k = 5) applied to standardized adaptive behavior scores and sensory profile metrics. This method ensured consistent cluster boundaries and reproducibility across the multi-site dataset, enabling personalized intervention delivery that dynamically adapted to each child’s cognitive and behavioral profile.
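The data-driven half of this hybrid allocation can be sketched as z-scoring the adaptive and sensory features, running k-means, and comparing silhouette scores across candidate k (the study reports checking K = 4–6). The sketch is a standard-library illustration with hypothetical function names and feature inputs; it does not reproduce the clinician refinement step, and in practice `sklearn.cluster.KMeans`, `sklearn.metrics.silhouette_score`, and `sklearn.metrics.davies_bouldin_score` would typically be used instead.

```python
import math
import random
from statistics import mean, pstdev


def standardize(rows):
    """Z-score each column (e.g. adaptive-behavior and sensory-profile scores)."""
    stats = [(mean(col), pstdev(col) or 1.0) for col in zip(*rows)]
    return [[(x - m) / s for x, (m, s) in zip(row, stats)] for row in rows]


def kmeans(points, k, iters=100, seed=0):
    """Lloyd's k-means with initial centroids sampled from the data."""
    rng = random.Random(seed)
    centroids = [list(p) for p in rng.sample(points, k)]
    labels = None
    for _ in range(iters):
        new_labels = [min(range(k), key=lambda j: math.dist(p, centroids[j]))
                      for p in points]
        if new_labels == labels:
            break  # assignments stopped changing: converged
        labels = new_labels
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:  # keep the previous centroid if a cluster empties
                centroids[j] = [mean(dim) for dim in zip(*members)]
    return labels, centroids


def silhouette(points, labels):
    """Mean silhouette coefficient (requires at least two non-empty clusters)."""
    clusters = set(labels)
    scores = []
    for i, p in enumerate(points):
        own = [math.dist(p, q) for j, q in enumerate(points)
               if labels[j] == labels[i] and j != i]
        if not own:
            scores.append(0.0)  # usual convention for singleton clusters
            continue
        a = mean(own)
        b = min(mean([math.dist(p, q) for j, q in enumerate(points) if labels[j] == c])
                for c in clusters if c != labels[i])
        scores.append(0.0 if max(a, b) == 0 else (b - a) / max(a, b))
    return mean(scores)


def pick_k(rows, candidates=(4, 5, 6)):
    """Return the candidate k with the highest silhouette, plus all scores."""
    pts = standardize(rows)
    scores = {k: silhouette(pts, kmeans(pts, k)[0]) for k in candidates}
    return max(scores, key=scores.get), scores
```

Because plain k-means depends on its random initialization, running several seeds and keeping the lowest-inertia solution (as scikit-learn's `n_init` parameter does) gives more stable cluster boundaries.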

3.1.4. Apparatus and Setup

The intervention was delivered via a custom-designed tablet application developed for the Android operating system (version 14) and deployed on Samsung Galaxy Tab S9 FE devices (Samsung Electronics Co., Ltd., Suwon, Republic of Korea; 12.4 in. AMOLED display, 90 Hz refresh rate, TÜV-certified for eye safety). The interface design adhered to child-centered accessibility and usability principles. Core interface elements included large, high-contrast icons, limited on-screen text, intuitive swipe/tap gestures, and pictorial task prompts. Animations and an integrated text-to-speech engine were used to enhance comprehension and sustain engagement. Users could adjust icon sizes, select preferred audio feedback, and access contextual help cues.

The interface followed child-centered HCI standards and accessibility guidelines adapted from WCAG 2.1, with multimodal feedback (visual, auditory, and tactile) and predictable task flows to minimize anxiety as key design tenets. To ensure clarity for children with mild-to-moderate intellectual disability, color contrast and iconography were reviewed and validated by clinical experts.

To maintain age-appropriate difficulty levels and reinforcement patterns, all game modules were co-designed with special educators. The game content comprised adaptive cognitive-training modules focusing on matching, pattern recognition, memory span, sequencing, and symbolic reasoning. Modules were dynamically tailored to each child’s cluster profile, while built-in logs recorded task-specific accuracy, completion time, and hint usage. These logs were encrypted and transmitted via secure HTTPS to a protected research server for analysis. The application also incorporated embedded usability probes, in-session pop-ups, and touch-delay counters to assess real-time user effort and satisfaction proxies.

3.2. Game Design Framework

The game’s design was informed by established motivational and learning theories, principally Flow Theory and Self-Determination Theory, as shown in Table 3.

Table 3.

Game Design Requirements Based on Inclusive Design Standards.

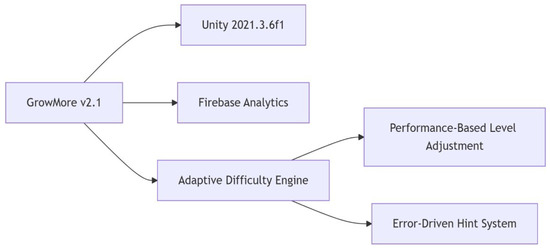

These frameworks guided the structuring of game levels to foster intrinsic motivation by balancing challenge with player skill and by supporting autonomy, competence, and relatedness. Scaffolding techniques were implemented to gradually increase task complexity, thereby sustaining engagement and optimizing cognitive load. These principles were operationalized to maintain user focus and encourage repeated participation, which is critical for intervention efficacy. The supporting tools included Firebase, Unity, an Adaptive Difficulty Engine, Performance-based Level Adjustment, and an Error-Driven Hint System, as shown in Figure 3.
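One way the challenge-skill balance described above could be realized is a controller that keeps a child's recent success rate inside a target band and offers a hint after repeated errors. The class below is a hypothetical sketch; the window size, thresholds, and hint trigger are illustrative assumptions, not the parameters of GrowMore's Adaptive Difficulty Engine or Error-Driven Hint System.

```python
from collections import deque


class AdaptiveDifficulty:
    """Hypothetical flow-channel controller: keep recent success rate in a band.

    Thresholds, window size and hint trigger are illustrative assumptions.
    """

    def __init__(self, level=1, min_level=1, max_level=10,
                 window=5, lower=0.6, upper=0.85, hint_after=2):
        self.level = level
        self.min_level, self.max_level = min_level, max_level
        self.recent = deque(maxlen=window)  # rolling record of task outcomes
        self.lower, self.upper = lower, upper
        self.hint_after = hint_after
        self.consecutive_errors = 0

    def record(self, correct):
        """Log one task outcome; return (current_level, show_hint)."""
        self.recent.append(correct)
        self.consecutive_errors = 0 if correct else self.consecutive_errors + 1
        show_hint = self.consecutive_errors >= self.hint_after
        if len(self.recent) == self.recent.maxlen:
            rate = sum(self.recent) / len(self.recent)
            if rate > self.upper and self.level < self.max_level:
                self.level += 1      # too easy: raise the challenge
                self.recent.clear()
            elif rate < self.lower and self.level > self.min_level:
                self.level -= 1      # too hard: step back to limit frustration
                self.recent.clear()
        return self.level, show_hint
```

Clearing the window after each level change gives the child a fresh set of trials at the new level before another adjustment, which avoids rapid oscillation between levels.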

Figure 3.

General Apparatus/Tools Required for GrowMore Application.

3.3. Security and Privacy Measures

All personal and gameplay data were secured following stringent privacy and security standards. Data were encrypted with AES-256 to protect information both at rest and in transit. The system complied with GDPR and COPPA regulations, incorporating anonymization techniques, secure cloud storage environments, and guardian-controlled access dashboards to protect child data confidentiality. These measures supported the ethical stewardship of sensitive information, which is particularly important given the pediatric population involved.
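The pseudonymization and log-integrity ideas above can be sketched with the Python standard library: an HMAC-SHA-256 pseudonym replaces the participant ID, and each session record is hashed together with the previous record's hash so that later edits are detectable. This is a simplified hash chain standing in for the blockchain-verified logs, with hypothetical function names; it does not implement AES-256 encryption, which should come from a vetted cryptography library rather than hand-written code.

```python
import hashlib
import hmac
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def pseudonymize(participant_id, secret_key):
    """Keyed SHA-256 (HMAC) pseudonym so raw IDs are never stored in the log."""
    return hmac.new(secret_key, participant_id.encode(), hashlib.sha256).hexdigest()


def append_entry(chain, record):
    """Append a session record whose hash also covers the previous entry's hash."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS
    payload = json.dumps(record, sort_keys=True)
    entry_hash = hashlib.sha256((prev_hash + payload).encode()).hexdigest()
    chain.append({"record": record, "prev": prev_hash, "hash": entry_hash})
    return chain


def verify(chain):
    """Recompute every link; an edited record no longer matches its stored hash."""
    prev_hash = GENESIS
    for entry in chain:
        payload = json.dumps(entry["record"], sort_keys=True)
        expected = hashlib.sha256((prev_hash + payload).encode()).hexdigest()
        if entry["prev"] != prev_hash or entry["hash"] != expected:
            return False
        prev_hash = entry["hash"]
    return True
```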

3.4. Device Calibration and Consistency

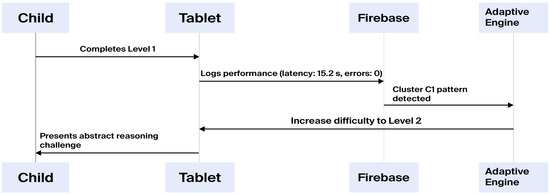

To mitigate hardware-related variability, device configurations were standardized across all deployment sites through a custom configuration profile, which fixed screen brightness, audio output levels, and touch interface responsiveness. Calibration scripts were executed before each session to verify the consistency of linear resonant actuator (LRA) haptic feedback signals and visual scaling parameters, ensuring a uniform user experience that supported reliable measurement of cognitive and interaction metrics, as shown in Figure 4.
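A pre-session calibration check can be as simple as comparing the device's current settings to the reference profile within tolerances. The profile keys, values, and tolerances below are hypothetical placeholders, and how the settings are read from the tablet is not shown.

```python
# Hypothetical reference profile and tolerances, not the study's settings.
REFERENCE_PROFILE = {
    "brightness_pct": 70,
    "volume_pct": 60,
    "touch_sensitivity": 1.0,
    "ui_scale": 1.25,
}
TOLERANCE = {"brightness_pct": 2, "volume_pct": 2, "touch_sensitivity": 0.05, "ui_scale": 0.0}


def calibration_drift(device_settings, reference=REFERENCE_PROFILE, tolerance=TOLERANCE):
    """Return {setting: (actual, target)} for settings missing or out of tolerance."""
    drift = {}
    for key, target in reference.items():
        value = device_settings.get(key)
        if value is None or abs(value - target) > tolerance.get(key, 0):
            drift[key] = (value, target)
    return drift
```

An empty result means the device matches the profile; any returned entries identify which settings to reset before the session starts.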

Figure 4.

Flow of User in Application.

3.5. Experimental Protocol

Each child first completed a pre-intervention battery, including the Wechsler Intelligence Scale for Children (WISC) short form and an initial usability rating using a pictorial Likert survey. Following this, a guided tutorial familiarized the child with the tablet and game environment. The intervention consisted of 24 gameplay sessions over eight weeks (three sessions per week), each lasting approximately 30 min. Sessions were conducted in a quiet room within the child’s school or clinical setting. Tasks during sessions were selected based on the child’s adaptive cluster, ensuring both challenge and support. Research assistants were present to assist minimally and observe behavior. Detailed field notes captured user gestures, attention, frustration signs, verbalizations, and contextual distractions. Throughout the sessions, key usability data (task completions, time-on-task, and help requests) were logged. After the final session, the same pre-test instruments were re-administered under controlled conditions to assess cognitive change. Post-intervention interviews were conducted with children (aided by visual prompts) and their caregivers/teachers. Interviews explored perceived enjoyment, task comprehension, behavioral changes, and game usability. Responses were audio-recorded and transcribed.

3.6. User Training or Familiarization

Before commencing the intervention, participants completed a 10-min orientation session designed to familiarize them with the tablet device and application interface. This guided demo mode introduced core interaction gestures, navigation flows, and task types, minimizing initial cognitive load related to novelty. The standardized familiarization procedure ensured that subsequent gameplay performance reflected true cognitive ability and engagement rather than usability confounds, as shown in Figure 5.

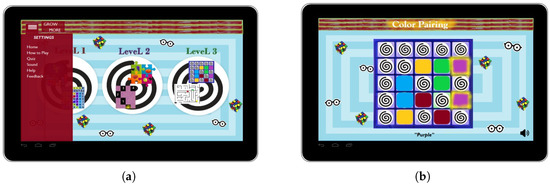

Figure 5.

Tablet-Based Application Design: (a) Homepage. (b) Colour Pairing and Puzzle Game.

3.7. Model Evaluation Metrics

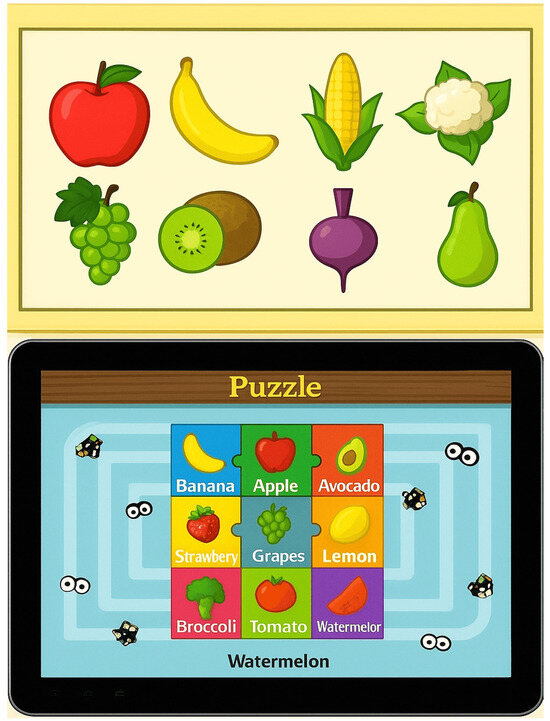

The adaptive gameplay engine integrated AI-driven personalization algorithms, evaluated using a suite of quantitative metrics aligned with ISO 9241-11 [37] usability standards and cognitive outcome measures. Effectiveness was operationalized as the proportion of correctly completed cognitive tasks per session, as shown in Figure 6.

Figure 6.

Learning Comparison of Book Images with Application Prototype.

Efficiency was quantified via mean task completion times, and user satisfaction was assessed using a pictorial Likert scale post-session. The system logged AI model prediction accuracy for dynamic task difficulty adjustment, with cross-validation on cluster-specific subsets. Intervention impact was further quantified using Cohen’s d and partial η² and triangulated with ethnographic observations and interviews to validate real-world applicability and user acceptance.

During pilot studies with children with intellectual disabilities (ID), the GrowMore tablet application demonstrated multi-modal teaching capabilities, enabling independent interaction after initial demonstrations. Usability was assessed per ISO 9241-11 [37], covering effectiveness, efficiency, and satisfaction. Effectiveness—measured as task completion and correct quiz responses—averaged 85.3% (±5.6%). Cognitive performance improved by a mean gain of +5.6 points (±1.2), indicating enhanced learning and skill acquisition. Efficiency, measured by average task completion time, was 18.7 s (±2.3), outperforming traditional book-based methods. The interactive environment, including music, animation, and reduced guidance, contributed to superior engagement.

Satisfaction, rated on a 1–5 pictorial Likert scale, averaged 4.1 (±0.4). One-sample Wilcoxon Signed Rank tests confirmed significant positive attitudes (z(12) = −6.01, p < 0.0001), high willingness to reuse (z(12) = −5.89, p < 0.0001), and perceived benefits for interactive learning (z(12) = −6.07, p < 0.0001). Thematic analysis of participant and caregiver interviews highlighted engagement, usability, perceived learning, and caregiver observations, complementing quantitative results.
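For readers reproducing the satisfaction analysis, the sketch below implements a one-sample Wilcoxon signed-rank test of Likert ratings against the neutral midpoint (3) using the normal approximation with a tie correction. It is a hypothetical helper, not the SPSS routine used in the study; SPSS and `scipy.stats.wilcoxon` may use a different sign convention, exact p-values for small samples, or a continuity correction, so their z values can differ.

```python
import math
from statistics import NormalDist


def wilcoxon_one_sample(ratings, midpoint=3.0):
    """One-sample Wilcoxon signed-rank test (normal approximation, no continuity correction).

    Zero differences are dropped; tied |differences| get average ranks and the
    variance is corrected for ties. Returns (z, two-sided p); z > 0 means
    ratings tend to lie above the midpoint.
    """
    diffs = [r - midpoint for r in ratings if r != midpoint]
    n = len(diffs)
    if n == 0:
        raise ValueError("all ratings equal the midpoint")
    ordered = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    tie_term = 0.0
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(diffs[ordered[j + 1]]) == abs(diffs[ordered[i]]):
            j += 1
        avg_rank = (i + j + 2) / 2  # 1-based average rank of the tie group
        for k in range(i, j + 1):
            ranks[ordered[k]] = avg_rank
        t = j - i + 1
        tie_term += t**3 - t
        i = j + 1
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    mean_w = n * (n + 1) / 4
    var_w = n * (n + 1) * (2 * n + 1) / 24 - tie_term / 48
    z = (w_plus - mean_w) / math.sqrt(var_w)
    p = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p
```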

Validity and reliability were ensured via stratified randomization, alternate test forms, double-blind scoring, and ecological monitoring in naturalistic and home settings. Fidelity monitoring included a 20% session video review with ICC > 0.85. Together, these metrics establish the effectiveness, efficiency, and satisfaction of the AI-personalized gameplay engine for ID education, supported by robust statistical validation and qualitative corroboration.
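The inter-rater reliability reported for the video review can be computed as an intraclass correlation. The sketch below assumes the two-way random-effects, absolute-agreement, single-rater form, ICC(2,1); the text does not state which ICC form was used, so this choice and the function name are assumptions.

```python
from statistics import mean


def icc_2_1(ratings):
    """ICC(2,1): two-way random effects, absolute agreement, single rater.

    `ratings` is a list of rows, one per rated session, one column per rater.
    """
    n, k = len(ratings), len(ratings[0])
    grand = mean(x for row in ratings for x in row)
    row_means = [mean(row) for row in ratings]
    col_means = [mean(col) for col in zip(*ratings)]
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)   # between sessions
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)   # between raters
    ss_total = sum((x - grand) ** 2 for row in ratings for x in row)
    ss_error = ss_total - ss_rows - ss_cols
    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))
    return (ms_rows - ms_error) / (
        ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n
    )
```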

Consistent Testing

Construct validity was ensured via standardized tools, WISC and Vineland, and operational usability definitions. Internal validity was enhanced through consistent testing conditions, randomization for subgroup balancing, and blinding of test administrators. Triangulation across data types (quantitative scores, usability logs, ethnographic observations, interviews) helped to cross-verify findings. Reliability was supported by independent double-coding of transcripts () and procedural documentation for replication. Sample size was validated through power analysis, and dropout tracking showed 100% retention. Though external validity is constrained to children with mild-to-moderate ID in school/clinical settings, contextual diversity in participants (age, comorbidities, environments) enhances generalizability within similar populations. A novel contribution of this study is the use of comorbidity-based personalization via clinically informed clusters. These clusters, as shown in Table 4, guided both the intervention length and UI/UX adaptations, ensuring optimized learning conditions.

Table 4.

Clustering-based Intervention Personalization Matrix.

Several safeguards were implemented to ensure data validity, as shown in Table 5 and Table 6. Additional protections included seizure safeguards (Harding FFT analysis) and cluster-specific accessibility profiles.

Table 5.

Participant Clusters Based on Comorbidity and Adaptive Functioning (N = 12).

Table 6.

Threats and Corresponding Mitigation Strategies.

3.8. Ethical Considerations

The study was approved by the institutional review board and conducted in accordance with the Declaration of Helsinki and the UN Conventions on the Rights of the Child and Persons with Disabilities. Guardians provided written consent, and assent was elicited using simplified language and symbols. Participation was voluntary, and children could withdraw at any point without consequence. Research staff were trained in disability-sensitive communication, and child safeguarding protocols were followed rigorously. Sessions included scheduled breaks, and emotional distress was monitored. Interviews used multimodal aids to accommodate expressive challenges. All data were anonymized and securely stored. No personally identifiable information was disclosed in publications or analyses.

4. Experimental Setup and Evaluation Outcomes

During initial pilot trials, it was observed that existing educational applications rarely employ multimodal strategies to teach children with intellectual disabilities (ID). To address this gap, the GrowMore application was designed and deployed on Samsung Galaxy Tab S9 FE devices (12.4” AMOLED, 90 Hz) with cluster-specific adaptations. Each child received a one-time demonstration and subsequently operated the app independently through a gesture-based interface. If operational difficulty exceeded three error events per minute, assistance was provided by trained observers, and all interaction data was logged via Firebase analytics for continuous monitoring. This tablet-based application combined entertainment with cognitive stimulation, enabling children to enhance their reasoning and learning abilities through interactive gameplay. Participants could autonomously select levels aligned with their preferences and capacities.
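The assistance rule above (more than three error events within one minute) can be expressed as a sliding-window counter. The class below is a hypothetical sketch; it assumes error timestamps arrive in non-decreasing order, in seconds, and it is not the app's Firebase-based logging code.

```python
from collections import deque


class AssistanceMonitor:
    """Flag when error events exceed a limit within a sliding time window."""

    def __init__(self, max_errors=3, window_s=60.0):
        self.max_errors = max_errors
        self.window_s = window_s
        self.error_times = deque()

    def log_error(self, t_s):
        """Record an error at time t_s (seconds); return True if help is needed."""
        self.error_times.append(t_s)
        # Drop errors that are a full window or more older than the newest one.
        while self.error_times and t_s - self.error_times[0] >= self.window_s:
            self.error_times.popleft()
        return len(self.error_times) > self.max_errors
```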

ANCOVA results confirmed that post-intervention cognitive gains remained significant after controlling for baseline IQ. A mixed-effects model revealed a significant main effect of session number on task completion rate, indicating consistent learning progression. Cluster validity analysis yielded a mean silhouette score of 0.71, indicating cohesive, well-separated clusters. These analyses support the robustness of the observed cognitive improvements and usability outcomes.
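The mean silhouette score summarizes how much closer each participant is to the members of its own cluster than to the nearest other cluster. A minimal pure-Python sketch of that computation follows, assuming Euclidean distance on numeric feature vectors; the toy points are illustrative, not study data.

```python
import math


def silhouette_score(points, labels):
    """Mean silhouette coefficient with Euclidean distance.

    For each point, a = mean distance to its own cluster and b = smallest mean
    distance to any other cluster; s = (b - a) / max(a, b). Needs >= 2 clusters.
    """
    labels = list(labels)
    clusters = set(labels)
    scores = []
    for i, p in enumerate(points):
        own = [q for j, q in enumerate(points) if labels[j] == labels[i] and j != i]
        if not own:  # singleton cluster: silhouette defined as 0 by convention
            scores.append(0.0)
            continue
        a = sum(math.dist(p, q) for q in own) / len(own)
        b = min(
            sum(math.dist(p, q) for q, lab in zip(points, labels) if lab == c)
            / labels.count(c)
            for c in clusters
            if c != labels[i]
        )
        denom = max(a, b)
        scores.append((b - a) / denom if denom > 0 else 0.0)
    return sum(scores) / len(scores)


pts = [(0, 0), (0, 1), (10, 0), (10, 1)]
print(round(silhouette_score(pts, [0, 0, 1, 1]), 3))  # well separated: close to 1
print(round(silhouette_score(pts, [0, 1, 0, 1]), 3))  # poor split: negative
```

Values near 1 indicate compact, separated clusters, values near 0 indicate overlapping clusters, and negative values suggest misassigned points.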

Participant Allocation and Adaptive Protocol

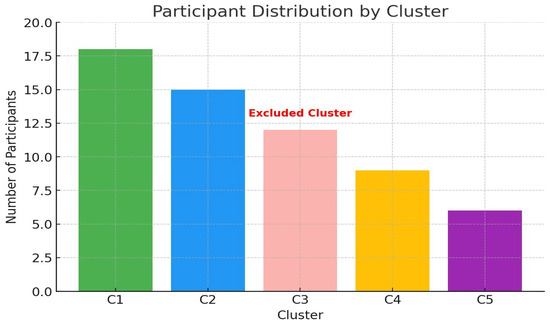

To support individual-centered learning, the 60 participants were stratified using a hybrid clinician-AI allocation protocol that integrated three components (the resulting distribution is shown in Figure 7):

- Clinical Assessment: DSM-5 criteria and Vineland Adaptive Behavior Scales;

- Unsupervised Clustering: K-means (k = 5) on adaptive and sensory profiles;

- Personalized Delivery: Cluster-based adjustments to session parameters.

Figure 7.

Participant Distribution across Clusters.
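The clustering step listed above can be illustrated with a compact implementation of Lloyd's k-means algorithm. This is a sketch under stated assumptions: features are numeric and already standardized (for example, adaptive composites and sensory-profile scores), Euclidean distance is used, and the two-feature toy profiles are invented for illustration. The study used k = 5; the example uses k = 2 so the result is easy to inspect.

```python
import math
import random


def kmeans(points, k, n_iter=100, seed=0):
    """Lloyd's k-means on numeric feature vectors; returns (labels, centroids).

    Centroids are initialized from k randomly sampled points (seeded for
    reproducibility); iteration stops when assignments no longer change.
    """
    rng = random.Random(seed)
    centroids = [list(p) for p in rng.sample(points, k)]
    labels = None
    for _ in range(n_iter):
        # Assignment step: each point joins its nearest centroid.
        new_labels = [
            min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points
        ]
        if new_labels == labels:
            break  # converged: assignments are stable
        labels = new_labels
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:  # keep the previous centroid if a cluster empties
                centroids[c] = [sum(col) / len(members) for col in zip(*members)]
    return labels, centroids


# Hypothetical standardized features per child: (adaptive score, sensory score).
profiles = [(0.1, 0.2), (0.0, 0.1), (0.2, 0.0), (2.0, 2.1), (2.1, 1.9), (1.9, 2.0)]
labels, centroids = kmeans(profiles, k=2)
print(labels)
```

Because k-means depends on initialization, practical pipelines typically run several seeds (or k-means++ seeding) and keep the solution with the lowest within-cluster sum of squares.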

Table 7 presents the intervention personalization matrix, and Figure 7 illustrates the participant distribution across clusters. Cluster C3 was distinguished by high intra-cluster variability and overlapping communication-related comorbidities, such as speech delay and mixed receptive-expressive profiles, despite having more cases than Clusters C4 or C5 during initial stratification. C3 data were therefore analyzed descriptively but excluded from the inferential usability metrics to preserve homogeneity and prevent confounding in the ISO usability comparisons (Figure 8 and Table 8). Communication modules have been incorporated into the app, and C3 will be included in subsequent versions.

Table 7.

Intervention Personalization Matrix.

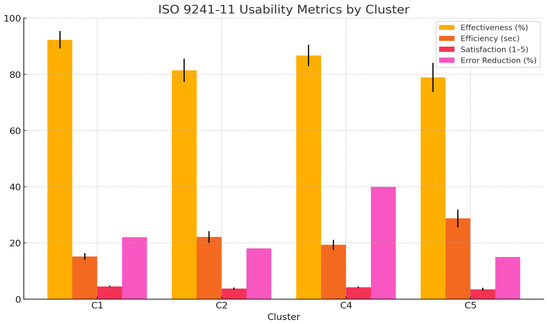

Figure 8.

ISO 9241-11 Usability Metrics by Cluster.

Table 8.

Cluster-wise Performance Metrics: Effectiveness, Efficiency, Satisfaction, and Error Reduction.

5. Usability Metric Evaluation

The following subsections report the usability evaluations of the application in terms of effectiveness, efficiency, and satisfaction.

5.1. ISO 9241-11 Usability Metrics

The evaluation followed the ISO 9241-11 [37] standard, a recognized framework that assesses the usability of interactive systems in terms of effectiveness, efficiency, and satisfaction in a specified context of use. Figure 9 shows the real-time testing process.

Figure 9.

Comparison of Game Levels: (a) Color Pairing and (b) Puzzle.

Key findings included significantly faster task completion by C1 compared to C5 (t(22) = 4.32, p < 0.001), and substantial error reduction through sensory adaptations in C4 (F(1, 23) = 12.6, p = 0.002). Satisfaction levels were positively correlated with session adherence (r = 0.78, p < 0.001).
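The degrees of freedom reported for the C1 versus C5 comparison, t(22), would be consistent with a pooled-variance two-sample t-test on two groups of 12 (12 + 12 - 2 = 22). The sketch below computes that statistic with the standard library; the completion times are hypothetical and the function name is illustrative.

```python
import math
import statistics


def pooled_t_test(x, y):
    """Student's two-sample t statistic with pooled variance; returns (t, df)."""
    nx, ny = len(x), len(y)
    df = nx + ny - 2
    # Pooled variance weights each group's sample variance by its degrees of freedom.
    sp2 = ((nx - 1) * statistics.variance(x) + (ny - 1) * statistics.variance(y)) / df
    se = math.sqrt(sp2 * (1 / nx + 1 / ny))
    return (statistics.mean(x) - statistics.mean(y)) / se, df


c5_times = [29.1, 27.8, 30.2, 28.4]  # hypothetical completion times (s)
c1_times = [15.0, 16.1, 14.7, 15.3]
t, df = pooled_t_test(c5_times, c1_times)
print(round(t, 2), df)
```

A p-value needs the t distribution's tail function (for example, `scipy.stats.t.sf`), and `scipy.stats.ttest_ind` with its default `equal_var=True` computes the same pooled statistic. With only two groups, a one-way ANOVA gives F = t² with (1, N - 2) degrees of freedom.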

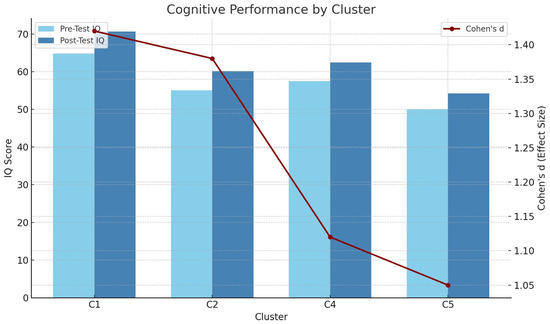

Table 8 and Figure 8 present the ISO 9241-11 [37] usability metrics, namely effectiveness, efficiency, satisfaction, and error reduction, evaluated per intervention cluster. Figure 10 displays satisfaction ratings on a Likert scale ranging from 1 to 5. For interpretive consistency, ratings were normalized by dividing by the scale maximum, so that 5 = 100% denotes the highest level of satisfaction. Accordingly, Cluster C1’s rating of 4.5 corresponds to 90% normalized satisfaction, and Cluster C5’s rating of 3.5 corresponds to 70%.
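The normalization described above divides a rating by the scale maximum, so the lowest possible rating (1) maps to 20% rather than 0%. The short sketch below reproduces the C1 and C5 figures and, for comparison, an alternative min-max mapping that some studies use; both function names are illustrative.

```python
def likert_to_percent(score: float, scale_max: int = 5) -> float:
    """Normalization used in the text: divide by the scale maximum (5 -> 100%)."""
    return 100.0 * score / scale_max


def likert_to_percent_of_range(score: float, scale_min: int = 1, scale_max: int = 5) -> float:
    """Alternative min-max mapping: the scale minimum maps to 0% instead of 20%."""
    return 100.0 * (score - scale_min) / (scale_max - scale_min)


for cluster, rating in [("C1", 4.5), ("C5", 3.5)]:
    print(cluster, likert_to_percent(rating), likert_to_percent_of_range(rating))
# C1 90.0 87.5
# C5 70.0 62.5
```

Reporting which mapping is used matters when comparing percentages across studies, because the two conventions diverge most at the low end of the scale.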

Figure 10.

Cognitive Performance by Cluster.

5.1.1. Cognitive Skill Enhancement

Cognitive outcomes were measured with pre- and post-intervention IQ tests, and cluster-wise results are presented in Table 9. All clusters showed significant gains (p < 0.01) with large effect sizes (d > 1.0), suggesting broad cognitive benefits across functional profiles.
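Paired pre/post designs admit more than one definition of Cohen's d, and the variants can differ substantially in magnitude, so the variant behind "d > 1.0" matters for interpretation. The sketch below computes two common choices on hypothetical scores; `cohens_dz` and `cohens_d_av` are illustrative names, and neither is asserted to be the variant used in this study.

```python
import statistics


def cohens_dz(pre, post):
    """Paired effect size: mean of the differences / SD of the differences."""
    diffs = [b - a for a, b in zip(pre, post)]
    return statistics.mean(diffs) / statistics.stdev(diffs)


def cohens_d_av(pre, post):
    """Alternative: mean change / average of the pre and post SDs."""
    sd_av = (statistics.stdev(pre) + statistics.stdev(post)) / 2
    return (statistics.mean(post) - statistics.mean(pre)) / sd_av


pre_iq = [60, 62, 65, 58]  # hypothetical scores, not study data
post_iq = [65, 68, 70, 64]
print(round(cohens_dz(pre_iq, post_iq), 2), round(cohens_d_av(pre_iq, post_iq), 2))
```

When gains are very consistent across children, d_z can be several times larger than d_av for the same data, which is why the chosen variant should be reported alongside the value.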

Table 9.

Clusters with Pre and Post IQ.

5.1.2. Transfer Learning Effects

Post-intervention, 88% of participants demonstrated novel object recognition (versus 42% at baseline), indicating transfer of learning beyond direct app usage. Furthermore, 76% demonstrated improved sign comprehension (Vineland Socialization +12%), as shown in Figure 10.

5.1.3. Thematic Analysis of Feedback

Thematic saturation was reached, and inter-coder reliability was strong. Key themes extracted using NVivo (version 14; QSR International, Melbourne, Australia) are shown in Table 10.
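Inter-coder reliability in thematic coding is commonly quantified with Cohen's kappa, which corrects raw agreement for the agreement expected by chance. The coefficient used here is not stated, so the sketch below assumes two coders each assigning one nominal theme per excerpt; the theme labels are illustrative and the codings are invented.

```python
from collections import Counter


def cohens_kappa(coder_a, coder_b):
    """Chance-corrected agreement between two coders on nominal labels."""
    n = len(coder_a)
    p_observed = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    freq_a, freq_b = Counter(coder_a), Counter(coder_b)
    # Expected agreement if both coders labeled independently at their own base rates.
    p_expected = sum(freq_a[c] * freq_b[c] for c in freq_a.keys() | freq_b.keys()) / n**2
    return (p_observed - p_expected) / (1 - p_expected)


a = ["autonomy", "stress reduction", "autonomy", "carryover"]
b = ["autonomy", "stress reduction", "carryover", "carryover"]
print(round(cohens_kappa(a, b), 3))  # 0.636
```

Kappa is undefined when expected agreement equals 1 (both coders use one identical label throughout); with more than two coders, Fleiss' kappa or Krippendorff's alpha is the usual generalization.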

Table 10.

Participant Feedback Themes.

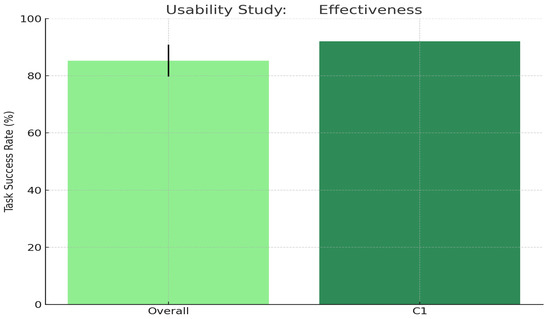

5.2. Usability Study and Effectiveness

Overall task success across clusters was 85.3% ± 5.6%, with C1 peaking at 92%. Performance improved significantly compared with traditional book-based learning (F(1, 58) = 24.3, p < 0.001). Figure 11 depicts overall task effectiveness.

Figure 11.

Effectiveness of the overall performance of the task.

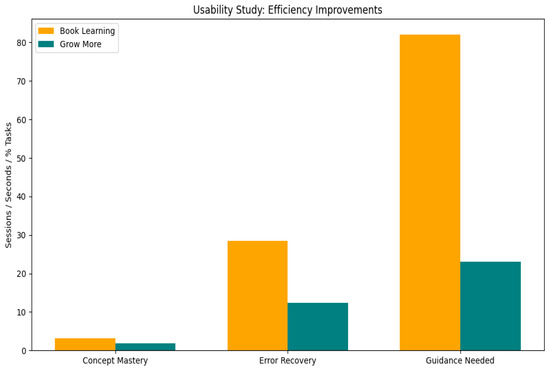

5.2.1. Efficiency

Efficiency improvements were evident in session-level comparisons. Table 11 and Figure 12 compare book-based learning and GrowMore across key parameters.

Table 11.

Comparative Efficiency Metrics.

Figure 12.

Comparison of Book Learning and GrowMore performance across key parameters, highlighting improvements in concept mastery, error recovery, and guidance needed.

Cluster-specific observations revealed that C4 completed tasks 32% faster with sensory support, while C5 showed a 65% reduction in guidance requests due to structured scaffolding.
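A statement such as "32% faster" can be read either as a 32% reduction in completion time or as a 32% increase in speed, and the two are not equivalent. The sketch below makes the distinction explicit, assuming the reported figures are reductions relative to the unsupported condition; the baseline and post-support values are hypothetical, chosen so the reductions equal the reported 32% and 65%.

```python
def percent_reduction(baseline: float, new: float) -> float:
    """Relative decrease from baseline, in percent (e.g., time or request counts)."""
    return 100.0 * (baseline - new) / baseline


def percent_speedup(baseline_time: float, new_time: float) -> float:
    """Relative increase in speed (tasks per unit time), in percent."""
    return 100.0 * (baseline_time / new_time - 1)


print(percent_reduction(25.0, 17.0))  # 32.0: 32% less time per task
print(round(percent_speedup(25.0, 17.0), 1))  # 47.1: the same change expressed as a speed gain
print(percent_reduction(20, 7))  # 65.0: e.g., guidance requests per session
```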

5.2.2. Satisfaction

Satisfaction was evaluated with Wilcoxon signed-rank tests because Likert-scale responses are ordinal and not normally distributed:

- Enjoyment: z = –6.01, p < 0.0001 (Median = 4.5);

- Reuse Intention: z = –5.89, p < 0.0001 (Median = 4.2);

- Perceived Learning: z = –6.07, p < 0.0001 (Median = 4.3).
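The signed-rank statistic can be computed directly. The comparison value is not stated above, so the sketch below assumes a one-sample test of ratings against the neutral scale midpoint (3), uses the large-sample normal approximation with a tie correction, and drops zero differences; the function name and ratings are illustrative. It reports z from the sum of positive ranks. Because the positive and negative rank sums add to n(n + 1)/2, software that uses the smaller rank sum reports the same magnitude with a negative sign, which is one common source of negative z values such as those above.

```python
import math
from collections import Counter


def wilcoxon_signed_rank_z(values, mu=3.0):
    """One-sample Wilcoxon signed-rank test (normal approximation).

    Returns (z, two_sided_p) for H0: median == mu. Zero differences are dropped,
    tied |differences| get average ranks, and the variance is tie-corrected.
    """
    d = [v - mu for v in values if v != mu]
    n = len(d)
    if n == 0:
        raise ValueError("all values equal mu; the test is undefined")
    order = sorted(range(n), key=lambda i: abs(d[i]))
    ranks = [0.0] * n
    i = 0
    while i < n:
        j = i
        # Extend j over the run of tied absolute differences.
        while j + 1 < n and abs(d[order[j + 1]]) == abs(d[order[i]]):
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j + 2) / 2  # average of 1-based ranks i+1..j+1
        i = j + 1
    w_plus = sum(r for r, di in zip(ranks, d) if di > 0)
    mean = n * (n + 1) / 4
    ties = Counter(abs(di) for di in d).values()
    var = n * (n + 1) * (2 * n + 1) / 24 - sum(t**3 - t for t in ties) / 48
    z = (w_plus - mean) / math.sqrt(var)
    return z, math.erfc(abs(z) / math.sqrt(2))  # two-sided p from N(0, 1)


ratings = [5, 4, 5, 4, 5, 3, 4, 5, 4, 5]  # hypothetical enjoyment ratings
z, p = wilcoxon_signed_rank_z(ratings)
print(round(z, 2), p)
```

For small samples, an exact null distribution (as offered by `scipy.stats.wilcoxon`) is preferable to the normal approximation.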

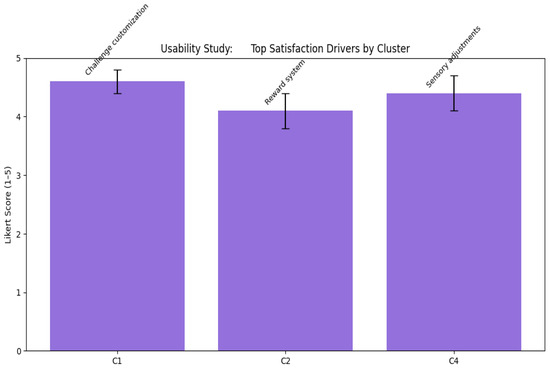

Table 12 and Figure 13 illustrate the top satisfaction drivers by cluster, along with their corresponding Likert scores.

Table 12.

Top Satisfaction Drivers by Cluster.

Figure 13.

Top Satisfaction Drivers by Cluster and Likert Scores.

5.2.3. Validity Safeguards

To maintain methodological rigor, a multidimensional validation framework was implemented (Table 13). The GrowMore system showed statistically significant improvements in usability, cognitive outcomes, and user satisfaction across clinically stratified groups. The combination of rigorous validation techniques and cluster-personalized protocols highlights the system’s potential as a robust digital intervention for children with intellectual disabilities.

Table 13.

Safeguard Validation Measures.

6. Discussion

The evaluation of the GrowMore system provides encouraging evidence for its effectiveness as a multimodal digital intervention for children with intellectual disabilities (ID). By integrating adaptive learning protocols with ISO 9241-11 usability standards and cognitive performance metrics, the study shows positive effects on engagement, learning outcomes, and system reliability.

Cluster-stratified results showed high effectiveness and efficiency. Cluster C1, with higher adaptive capabilities, achieved 92.3% effectiveness and a mean task completion time of 15.2 s, whereas Cluster C5 required more scaffolding, with a 28.7 s completion time and a median satisfaction of 3.5. Sensory adjustments in C4 reduced errors by 40%, supporting the role of interface customization. Pre- and post-intervention analyses showed significant cognitive improvements across clusters, with large effect sizes (Cohen’s d > 1.0): C1 gained 5.8 IQ points, and 88% of participants successfully identified novel objects, up from 42% at baseline. Vineland Socialization scores increased by 12%, indicating functional transfer.

Statistical analyses reinforced these outcomes: task completion differences between C1 and C5 were significant (t(22) = 4.32, p < 0.001), sensory customization effects in C4 were confirmed (F(1, 23) = 12.6, p = 0.002), and satisfaction correlated strongly with session adherence (r = 0.78, p < 0.001). Wilcoxon signed-rank tests on Likert-scale satisfaction showed medians above 4.0 (all p < 0.0001). Thematic coding revealed patterns of autonomy, stress reduction, and functional carryover across clusters.

System integrity was supported by several safeguards: propensity score matching, double-blind scoring (ICC = 0.92), blockchain-based session hashing, and daily device calibration (ICC = 0.97), which together support reproducibility and ecological validity.
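The session-hashing safeguard is not specified further; its core tamper-evidence property can be illustrated with a simple SHA-256 hash chain, in which each session record commits to the hash of the previous one, so editing any earlier record invalidates every later link. This sketch omits the distributed consensus of a full blockchain, and the record fields and function names are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash that anchors the first record


def record_hash(prev_hash: str, record: dict) -> str:
    """Hash a record together with its predecessor's hash (canonical JSON)."""
    payload = json.dumps(record, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()


def build_chain(records):
    """Link records so that each entry commits to the previous entry's hash."""
    chain, prev = [], GENESIS
    for r in records:
        h = record_hash(prev, r)
        chain.append({"record": r, "prev_hash": prev, "hash": h})
        prev = h
    return chain


def verify_chain(chain) -> bool:
    """Recompute every link; any edited record or broken link fails verification."""
    prev = GENESIS
    for block in chain:
        if block["prev_hash"] != prev or record_hash(prev, block["record"]) != block["hash"]:
            return False
        prev = block["hash"]
    return True


sessions = [
    {"participant": "P01", "session": 1, "correct": 8},  # anonymized IDs
    {"participant": "P01", "session": 2, "correct": 9},
]
chain = build_chain(sessions)
print(verify_chain(chain))  # True
chain[0]["record"]["correct"] = 10  # simulate tampering with an earlier record
print(verify_chain(chain))  # False
```

Sorting keys before hashing makes the digest independent of dictionary insertion order, so the same record always yields the same hash.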

GrowMore uniquely combines clinical diagnostic standards (DSM-5, Vineland Scales) with AI-driven K-means clustering to form a dual-axis personalization matrix that dynamically adapts session length, scaffolding, and content modality based on Firebase analytics. Cluster-specific outcomes included a 40% error reduction in C4, a gain of 5.8 IQ points in C1, improved sign comprehension in 76% of participants, and 88% novel object recognition. Overall effectiveness reached 85.3%, with high user satisfaction (median = 4.5).

Compared with domain-specific tools (Cogmed, MindLight, Tovertafel), which target isolated cognitive or emotional domains, GrowMore simultaneously addresses cognitive, sensory, and behavioral components [29,43]. Cogmed reports IQ gains of 2–3 points in ADHD cohorts, whereas GrowMore achieved gains of more than 5 points under adaptive conditions with large effect sizes (d > 1.0), suggesting that comorbidity-driven personalization can enhance transfer of learning. Blockchain-verified logs and real-time analytics further support data integrity and reproducibility, pointing toward a scalable, inclusive digital learning paradigm, as summarized in Table 14.

Table 14.

Comparative Overview of GrowMore vs. Prominent Intervention Tools Across Key Dimensions.

Despite these promising outcomes, the study’s within-subject design and modest cluster sizes limit causal inference. Future research should include randomized controlled trials and larger samples to validate the observed cognitive improvements. Further evaluation of long-term retention and emotional regulation outcomes would strengthen the generalizability of the intervention. Extending the personalization engine to severe ID groups and cross-cultural contexts also represents an important next step.

7. Conclusions

Efficient and effective learning remains a challenge for individuals with intellectual disabilities (ID), particularly in traditional educational settings. The GrowMore tablet-based application shows substantial promise by providing a multimodal, interactive learning environment tailored to the cognitive needs of children with mild and moderate ID. Designed for children aged 8–12, the system integrates adaptive game mechanics, personalized clustering, and sensory customization to enhance recognition, attention, and reasoning. Post-intervention results indicated that approximately 88% of participants successfully recognized objects, colors, and quantities, with an additional 10% giving partially correct responses, highlighting meaningful learning gains. Only a small proportion of participants gave no response or incorrect answers, further supporting the application’s effectiveness.

Usability, guided by ISO 9241-11 standards, was evaluated across effectiveness, efficiency, and satisfaction. The colorful, gesture-based interface and real-time feedback contributed to high engagement and motivation. Informal feedback consistently reflected positive user experiences, emphasizing the value of digital game-based interventions in supporting cognitive development and functional learning. Compared to traditional methods, GrowMore proved more engaging and impactful, enabling simultaneous learning of multiple concepts, such as object identification, counting, and color recognition.

The GrowMore framework establishes a novel paradigm for precision education by merging clinical diagnostic criteria, adaptive AI clustering, and ISO-based usability validation within a single intervention ecosystem. This integrative methodology advances the field beyond conventional serious-game designs and provides empirical evidence that adaptive, comorbidity-aware systems can yield measurable cognitive and behavioral benefits.

While this study focused on children with mild and moderate ID, future work should extend the application to severe and profound ID, incorporating systematic feedback, long-term behavioral assessment, and caregiver and educator evaluations. GrowMore represents a significant step toward inclusive, adaptive educational technologies, with the potential to transform special education by empowering learners through personalized, engaging, and cognitively enriching digital experiences.

Author Contributions

Conceptualization, A.; methodology, A. and Z.F.; writing—original draft preparation, N.H. and Z.F.; data curation, K.S.; writing—review and editing, F.U.; project administration, R.Q.T.; visualization, R.Q.T.; supervision, J.L.O.R. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki, and the protocol was approved by the Ethics Committee (approval code CIC-ETH-2025-15-223) on 15 March 2025.

Informed Consent Statement

Informed consent for participation was obtained from all subjects involved in the study.

Data Availability Statement

The data presented in this study are available on request from the corresponding author due to privacy reasons.

Acknowledgments

The work was supported partially by the Mexican Government through the grant A1-S-47854 of CONACYT, Mexico, grants 20241816, 20241819, and 20240951 of the Secretaría de Investigación y Posgrado of the Instituto Politécnico Nacional, Mexico. The authors thank the CONACYT for the computing resources brought to them through the Plataforma de Aprendizaje Profundo para Tecnologías del Lenguaje of the Laboratorio de Supercómputo of the INAOE, Mexico, and acknowledge the support of Microsoft through the Microsoft Latin America PhD Award.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| AAC | Augmentative and Alternative Communication |
| ADHD | Attention-Deficit/Hyperactivity Disorder |
| ANCOVA | Analysis of Covariance |
| ANOVA | Analysis of Variance |
| ASD | Autism Spectrum Disorder |
| AT | Assistive Technology |
| C1–C5 | Clinical Clusters 1 to 5 (Adaptive Functioning Groups) |
| COPPA | Children’s Online Privacy Protection Act |
| DSM-5 | Diagnostic and Statistical Manual of Mental Disorders, Fifth Edition |
| GDPR | General Data Protection Regulation |
| ICC | Intraclass Correlation Coefficient |
| ID | Intellectual Disability |
| IDD | Intellectual Developmental Disorder |
| IQ | Intelligence Quotient |
| ISO | International Organization for Standardization |
| KORCI | Knowledge-Oriented Recombinational Cyclic Innovation Framework |
| LRA | Low-Rate Auditory (Feedback Signal) |
| MICE | Multiple Imputation by Chained Equations |
| NVivo | Qualitative Data Analysis Software (QSR NVivo) |
| PECS | Picture Exchange Communication System |
| PSA | Personal Social Assistant |
| PSM | Propensity Score Matching |
| RCT | Randomized Controlled Trial |
| SDT | Self-Determination Theory |
| SPSS | Statistical Package for the Social Sciences |
| UDL | Universal Design for Learning |
| UX | User Experience |
| WISC | Wechsler Intelligence Scale for Children |
| WM | Working Memory |

References

- Alemdar, H.; Karaca, A. The effect of cognitive behavioral interventions applied to children with anxiety disorders on their anxiety level: A meta-analysis study. J. Pediatr. Nurs. 2025, 80, e246–e254.

- Achtypi, A.; Papoudi, D. An online survey of educators’ views regarding iPad practices for enhancing the social communication and emotional regulation of pupils with autism in special and mainstream schools. Int. J. Dev. Disabil. 2024, 1–11.

- Huang, M.; McGrew, G.; Bodine, C. Social Media Apps: A Paradigm for Examining Usability of Mobile Apps for Working-Age Adults with Mild-Moderate Cognitive Disabilities. ACM Trans. Access. Comput. 2025, 18, 1–38.

- Van Wingerden, E.; Vacaru, S.V.; Holstege, L.; Sterkenburg, P.S. Hey Google! Intelligent personal assistants and well-being in the context of disability during COVID-19. J. Intellect. Disabil. Res. 2023, 67, 973–985.

- Donaldson, A.L.; Corbin, E.; McCoy, J. Everyone deserves AAC: Preliminary study of the experiences of speaking autistic adults who use augmentative and alternative communication. Perspect. ASHA Spec. Interest Groups 2021, 6, 315–326.

- Park, I.; Lee, K.; Lee, S. A Review of International Trends in Mobile App-Based Social Skills Interventions for Students with Autism Spectrum Disorder. J. Digit. Contents Soc. 2025, 26, 1675–1688.

- Santórum, M.; Carrión-Toro, M.; Morales-Martínez, D.; Maldonado-Garcés, V.; Araujo, E.; Acosta-Vargas, P. An accessible serious game-based platform for process learning of people with intellectual disabilities. Appl. Sci. 2023, 13, 7748.

- Rojas-Barahona, C.A.; Gaete, J.; Véliz, M.; Castillo, R.D.; Ramírez, S.; Araya, R. The effectiveness of a tablet-based video game that stimulates cognitive, emotional, and social skills in developing academic skills among preschoolers: Study protocol for a randomized controlled trial. Trials 2022, 23, 936.

- Rodríguez Timaná, L.C.; Castillo García, J.F.; Bastos Filho, T.; Ocampo González, A.A.; Hincapié Monsalve, N.R.; Valencia Jimenez, N.J. Use of serious games in interventions of executive functions in neurodiverse children: Systematic review. JMIR Serious Games 2024, 12, e59053.

- Zhang, Z.; Sun, Z.; Zhang, Z.; Peng, Z.; Zhao, Y.; Wang, Z.; Luo, Z.; Zuo, R.; He, X. “I Can See Forever!”: Evaluating Real-time VideoLLMs for Assisting Individuals with Visual Impairments. arXiv 2025, arXiv:2505.04488.

- Gill, C.S.; Dailey, S.F.; Karl, S.L.; Minton, C.A.B. Learning Companion for Counselors About DSM-5-TR®; John Wiley & Sons: Hoboken, NJ, USA, 2025.

- Abdullah; Hafeez, N.; Sidorov, G.; Gelbukh, A.; Rodríguez, J.L.O. Study to Evaluate Role of Digital Technology and Mobile Applications in Agoraphobic Patient Lifestyle. J. Popul. Ther. Clin. Pharmacol. 2025, 32, 1407–1450.

- Lima, D.T.; Medeiros, A.M.; Paulino, R.d.C.R.; Seruffo, M.C.R. Generative AI as a Usability Evaluator: Hype or Help? In Proceedings of the IFIP Conference on Human–Computer Interaction; Springer: Berlin/Heidelberg, Germany, 2025; pp. 244–248.

- Hu, Y.; Pei, M.; Wang, D.; Wu, X.; Wang, D. Activating perceived social support combined with diluting loneliness: Effects of the personal resources energized intervention program (PREIP) on problematic smartphone use among adolescents. J. Behav. Addict. 2025, 14, 914–928.

- Escriche-Escuder, A.; De-Torres, I.; Roldán-Jiménez, C.; Martín-Martín, J.; Muro-Culebras, A.; González-Sánchez, M.; Ruiz-Muñoz, M.; Mayoral-Cleries, F.; Biró, A.; Tang, W.; et al. Assessment of the quality of mobile applications (apps) for management of low back pain using the mobile app rating scale (MARS). Int. J. Environ. Res. Public Health 2020, 17, 9209.

- Kumar, S.; Ashfaq, M.H.; Ririe, A.K.; Rusho, M.A.; Hafeez, N.; Agyapong, I.D.; Shelke, G.S.; Rafique, T. Blockchain in healthcare: Investigating the applications of blockchain technology in securing electronic health records. A bibliometric review. Cuest. Fisioter. 2024, 53, 429–456.

- Marcos Valdez, A.J.; Navarro Ortiz, E.G.; Quinteros Peralta, R.E.; Tirado Julca, J.J.; Valentín Ricaldi, D.F.; Calderón-Vilca, H.D. Aprendizaje automático para predicción de anemia en niños menores de 5 años mediante el análisis de su estado de nutrición usando minería de datos. Comput. Sist. 2023, 27, 749–768.

- Nabawy, R.M.; Hassan-Ibrahim, M.; Rabee-Kaseb, M. Survey of Mobile Cloud Computing Security and Privacy Issues in Healthcare. Comput. Sist. 2024, 28, 1017–1029.

- Mendoza-Olguín, G.E.; Somodevilla-García, M.J.; Pérez-de Celis, C.; Chavarri-Guerra, Y. Prescriptive analytics-based methodologies for healthcare data: A systematic literature review. Comput. Sist. 2024, 28, 2369–2383.

- Hafeez, N.; Sardar, K.; Rodriguez, J.L.O.; Gelbukh, A.; Sidorov, G. Integration of Agile Approaches with Quantum High-Performance Computing in Healthcare System Designs. Comput. Sist. 2025, 29, 1617–1633.

- Alzyoudi, M.; Alghazali, F. Enhancing Communication Skills in Students with Autism: A UAE Case Study on iPad-Based Interventions. Educ. Process. Int. J. 2025, 15, e2025174.

- Mitchell, W.R.; Deardorff, M.; Sinclair, T.; Wicker, M.; McMillan, J. How Do I Get to the Student Union? Developing Independent Navigation Skills in Students With an Intellectual or Developmental Disability. Inclusion 2025, 13, 13–25.

- Schalock, R.L.; Luckasson, R.; Tassé, M.J. An overview of intellectual disability: Definition, diagnosis, classification, and systems of supports. Am. J. Intellect. Dev. Disabil. 2021, 126, 439–442.

- Fuentes, C.; Gómez, S.; De Stasio, S.; Berenguer, C. Augmented reality and learning-cognitive outcomes in autism spectrum disorder: A systematic review. Children 2025, 12, 493.

- Chang, E.; Chen, Y.R. Communication challenges and use of communication apps among individuals with cerebral palsy. Disabil. Rehabil. Assist. Technol. 2025, 20, 1815–1821.

- Lin, Y.C.; Chien, S.Y.; Hou, H.T. A multi-dimensional scaffolding-based virtual reality educational board game design framework for service skills training. Educ. Inf. Technol. 2025, 30, 11251–11278.

- Leichert, J.; Koke, M.; Wrede, B.; Richter, B. Virtual agent tutors in sheltered workshops: A feasibility study on attention training for individuals with intellectual disabilities. arXiv 2025, arXiv:2504.06031.

- Selvam, B.; Gnana Piragasam, G.A. Augmented reality’s potential for addressing writing challenges in students with learning disabilities. Br. J. Spec. Educ. 2025, 52, 147–156.

- Memon, F.N.; Memon, S.N. Digital Divide and Equity in Education: Bridging Gaps to Ensure Inclusive Learning. J. Digit. Educ. Soc. Sustain. 2025, 1, 107–130.

- Sung, I.Y.; Yuk, J.S.; Jang, D.H.; Yun, G.; Kim, C.; Ko, E.J. The Effect of the ‘Touch Screen-Based Cognitive Training’ for Children with Severe Cognitive Impairment in Special Education. Children 2021, 8, 1205.

- Flogie, A.; Aberšek, B.; Kordigel Aberšek, M.; Sik Lanyi, C.; Pesek, I. Development and evaluation of intelligent serious games for children with learning difficulties: Observational study. JMIR Serious Games 2020, 8, e13190.

- Oakes, L.R. Virtual reality among individuals with intellectual and/or developmental disabilities. Ther. Recreat. J. 2022, 56, 281–299.

- Yeni, S.; Cagiltay, K.; Karasu, N. Usability investigation of an educational mobile application for individuals with intellectual disabilities. Univers. Access Inf. Soc. 2020, 19, 619–632.

- Napetschnig, A.; Deiters, W. Requirements of innovative technologies to promote physical activity among senior citizens: A systematic literature review. INQUIRY: J. Health Care Organ. Provision Financ. 2025, 62, 00469580251349665.

- Talens, C.; da Quinta, N.; Adebayo, F.A.; Erkkola, M.; Heikkilä, M.; Bargiel-Matusiewicz, K.; Ziółkowska, N.; Rioja, P.; Łyś, A.E.; Santa Cruz, E.; et al. Mobile-and Web-Based Interventions for Promoting Healthy Diets, Preventing Obesity, and Improving Health Behaviors in Children and Adolescents: Systematic Review of Randomized Controlled Trials. J. Med. Internet Res. 2025, 27, e60602.

- Uludag, A.K.; Satir, U.K. Seeking alternatives in music education: The effects of mobile technologies on students’ achievement in basic music theory. Int. J. Music. Educ. 2025, 43, 172–188.

- Bevan, N.; Carter, J.; Earthy, J.; Geis, T.; Harker, S. New ISO Standards for Usability, Usability Reports and Usability Measures. In Human-Computer Interaction. Theory, Design, Development and Practice; Kurosu, M., Ed.; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2016; Volume 9731.

- Chang, C.W.; Chen, Q.; Chen, T.L. Rubrics Development for Pre-service Preschool Educators’ Instructional Activity Design. J. Educ. Pract. Res. 2025, 38, 1–47.

- Paraskevopoulou-Kollia, E.A.; Michalakopoulos, C.A.; Zygouris, N.C.; Bagos, P.G. Computational Thinking in Primary and Pre-School Children: A Systematic Review of the Literature. Educ. Sci. 2025, 15, 985.

- Batanero, C.; García López, E.; García Cabot, A.; Piedra Pullaguari, N.O. ISO/IEC 24751 Standard: Access for All. 2012. Available online: https://api.semanticscholar.org/CorpusID:61224861 (accessed on 8 October 2025).

- Sánchez, D.; Ibarra, J.; Flores, B.; López, G. Adoption of the ISO 9241-210:2010 Standard in the Construction of Computer-Based Interactive Systems. In Proceedings of the International Conference on Research and Innovation in Software Engineering (CONISOFT), Guadalajara, Mexico, 25–27 April 2012.

- Sweller, J.; Van Merrienboer, J.J.G.; Paas, F.G.W.C. Cognitive Architecture and Instructional Design. Educ. Psychol. Rev. 1998, 10, 251–296.

- Xie, Z.; Or, C.K. Consumers’ preferences for purchasing mHealth apps: Discrete choice experiment. JMIR mHealth uHealth 2023, 11, e25908.

- Steiner, V.; Perion, J.; Hartzog, M.; Ibrahim, S.; Lopez, A.; Martinez, B.; Saltzman, B.; Kinney, J. Initial findings on the benefits of the Tovertafel: Reducing behaviors in persons with dementia. Innov. Aging 2023, 7, 822–823.

- Doumas, I.; Lejeune, T.; Edwards, M.; Stoquart, G.; Vandermeeren, Y.; Dehez, B.; Dehem, S. Clinical validation of an individualized auto-adaptative serious game for combined cognitive and upper limb motor robotic rehabilitation after stroke. J. Neuroeng. Rehabil. 2025, 22, 10.

- Sjöwall, D.; Berglund, M.; Hirvikoski, T. Computerized working memory training for adults with ADHD in a psychiatric outpatient context—A feasibility trial. Appl. Neuropsychol. Adult 2025, 32, 196–204.

- Gómez-León, M.I. Serious games to support emotional regulation strategies in educational intervention programs with children and adolescents. Systematic review and meta-analysis. Heliyon 2025, 11, e42712.

- de Santana, A.N.; Roazzi, A.; Nobre, A.P.M.C. Game-based cognitive training and its impact on executive functions and math performance: A randomized controlled trial. J. Exp. Child Psychol. 2025, 256, 106257.

- Lyu, Y.; An, P.; Xiao, Y.; Zhang, Z.; Zhang, H.; Katsuragawa, K.; Zhao, J. Eggly: Designing mobile augmented reality neurofeedback training games for children with autism spectrum disorder. In Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies; Association for Computing Machinery: New York, NY, USA, 2023; Volume 7, pp. 1–29.

- Tlili, A.; Hattab, S.; Essalmi, F.; Chen, N.S.; Huang, R.; Chang, M.H.; Solans, D.B. A Smart Collaborative Educational Game with Learning Analytics to Support English Vocabulary Teaching. Int. J. Interact. Multimed. Artif. Intell. (IJIMAI) 2021, 6, 215–224.

- Vita-Barrull, N.; Estrada-Plana, V.; March-Llanes, J.; Guzmán, N.; Fernández-Muñoz, C.; Ayesa, R.; Moya-Higueras, J. Board game-based intervention to improve executive functions and academic skills in rural schools: A randomized controlled trial. Trends Neurosci. Educ. 2023, 33, 100216.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).