Image-Based Threat Detection and Explainability Investigation Using Incremental Learning and Grad-CAM with YOLOv8

Abstract

1. Introduction

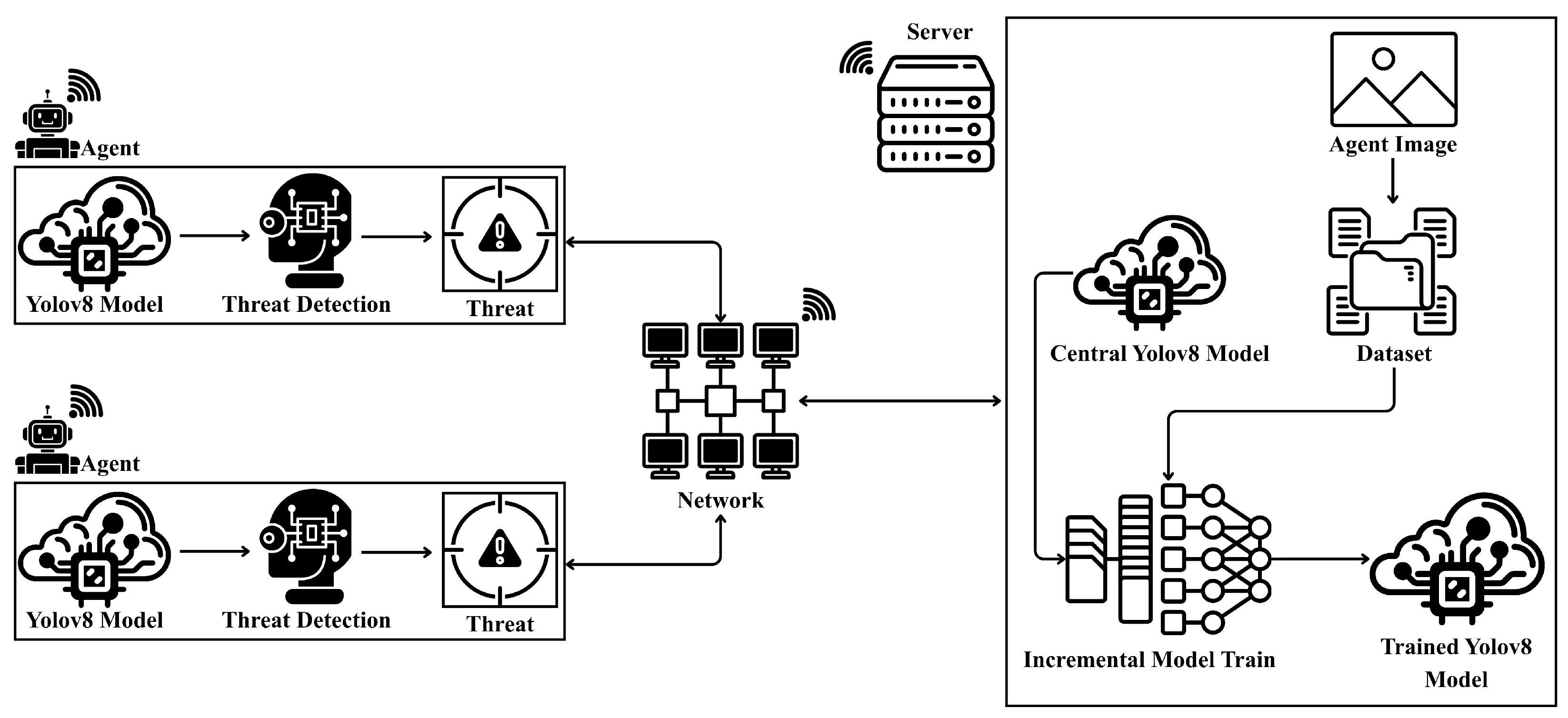

- A distributed agent-based edge–cloud framework for automated threat sample collection and progressive model updates;

- A memory-efficient incremental learning protocol that progressively enriches detection capabilities through sequential fine-tuning while resolving class imbalance as new samples are added;

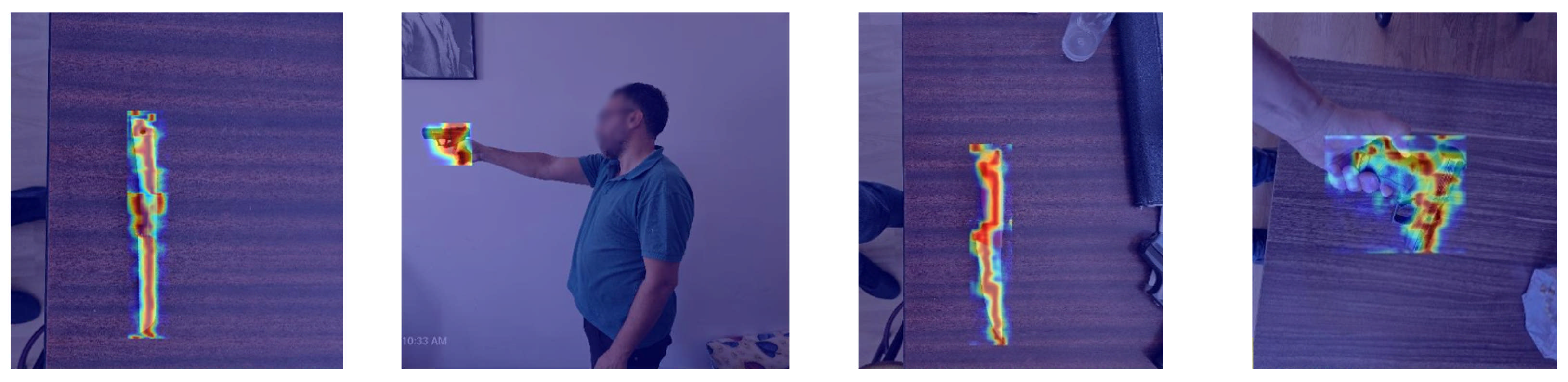

- Systematic Grad-CAM integration across incremental learning rounds for visual interpretability and trust assessment;

- Empirical validation showing mAP@0.5 increasing from 0.518 to 0.886 across five training rounds, demonstrating effective continual learning without full rehearsal.

2. Related Works

2.1. Deep Learning Architectures for Threat Detection

2.2. Incremental Learning for Dynamic Environments

2.3. Explainable AI for Model Transparency

2.4. Synthesis and Research Positioning

- A distributed edge–cloud framework in which edge agents perform inference with YOLOv8 and transmit detection results to a central server for quality filtering (class verification plus IoU ≥ 0.5 localization assessment), with verified samples progressively incorporated across five incremental rounds [2,17].

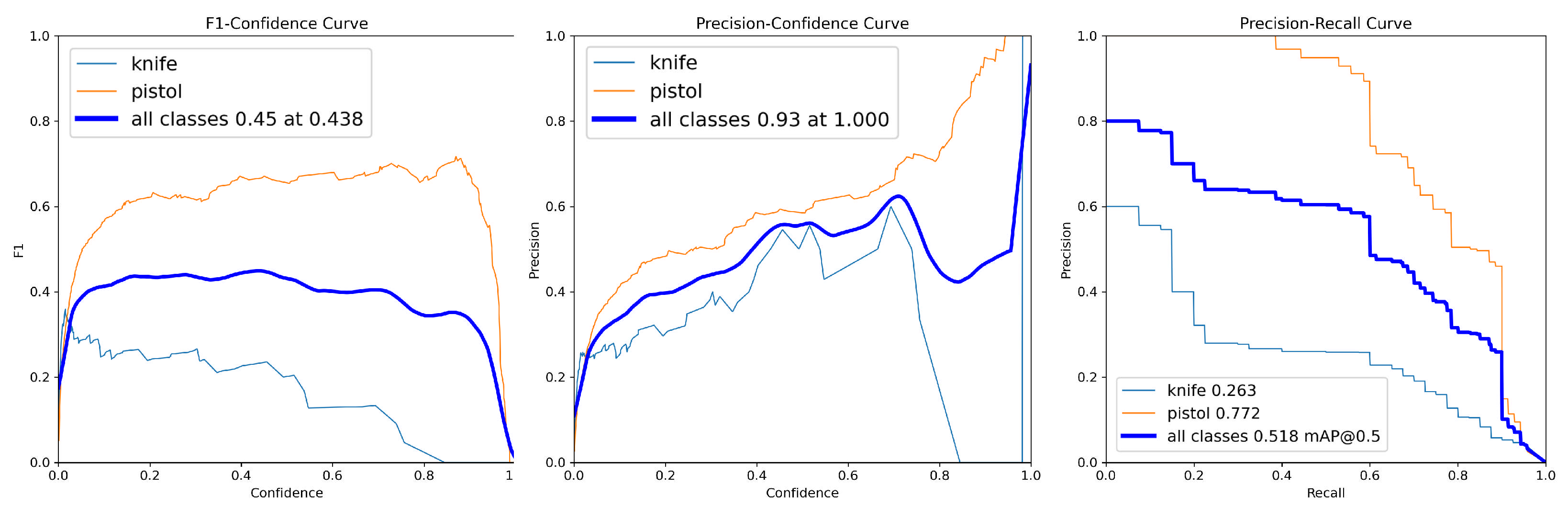

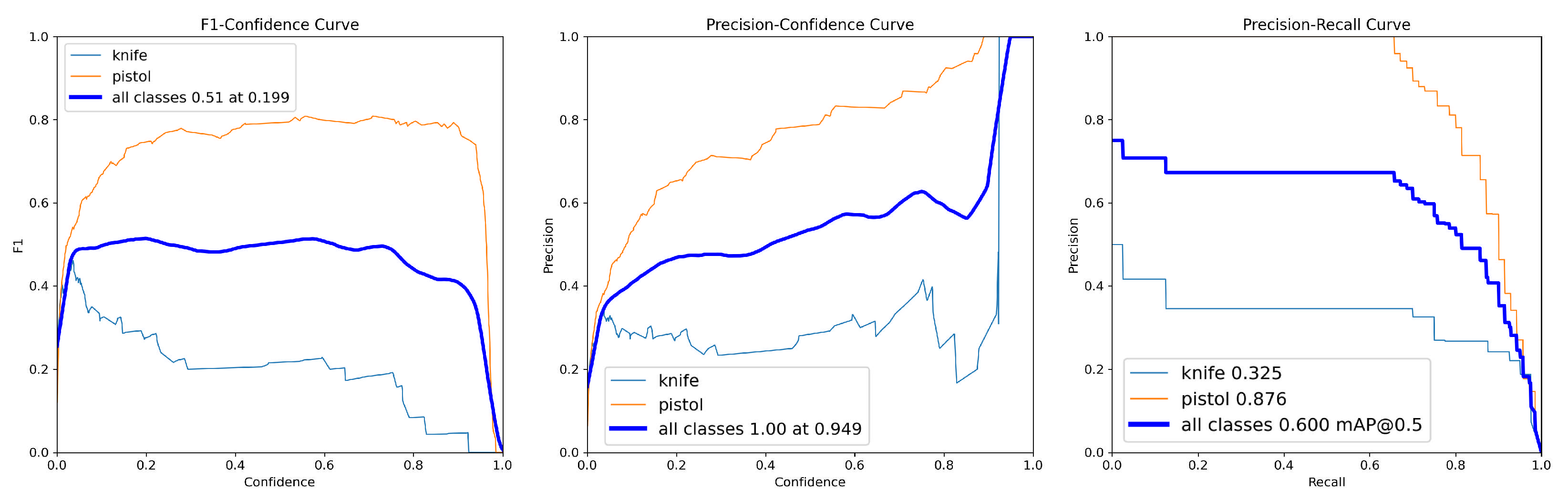

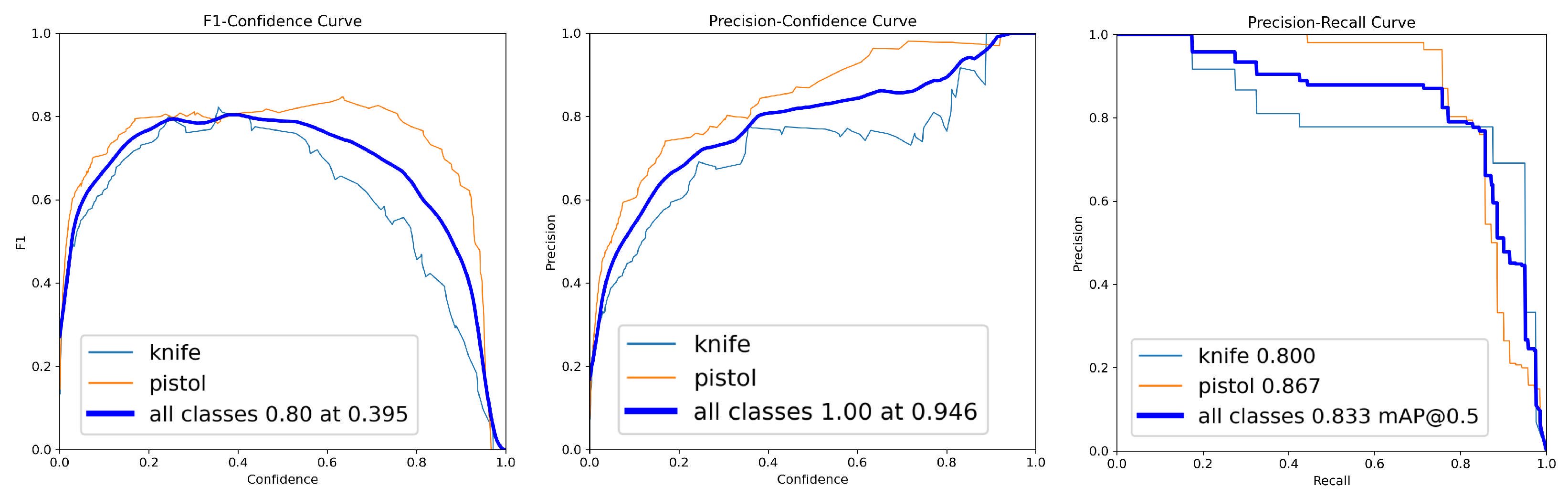

- Comprehensive validation on a real-world weapon detection dataset [15] (5064 images, two threat classes), demonstrating a macro-averaged F1-score increase from 0.45 to 0.83 and mAP@0.5 improvement from 0.518 to 0.886 across incremental learning rounds.

3. Materials and Methods

3.1. Dataset

3.2. Hardware, Software and Hyperparameters

3.3. Evaluation Metrics

3.4. Proposed Methodology

- Stage 1—Class Label Validation: The predicted class label is compared against the ground-truth label. Only detections with exact class matches proceed to geometric validation.

- Stage 2—Localization Quality Assessment: For class-validated detections, the Intersection over Union (IoU) between the predicted bounding box and the ground-truth bounding box is computed. Detections achieving IoU ≥ 0.5 are accepted as correctly localized, following the COCO evaluation protocol [36].
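The two validation stages above can be sketched as a small filter. This is a minimal illustration, assuming axis-aligned boxes in `(x_min, y_min, x_max, y_max)` form; the `Detection` type and the function names are hypothetical and are not taken from the authors' implementation.

```python
from dataclasses import dataclass

# Assumed box layout: (x_min, y_min, x_max, y_max) in pixels.
Box = tuple[float, float, float, float]


@dataclass(frozen=True)
class Detection:
    """Hypothetical container for one labelled bounding box."""
    label: str
    box: Box


def iou(a: Box, b: Box) -> float:
    """Intersection over Union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def is_verified(pred: Detection, truth: Detection, iou_threshold: float = 0.5) -> bool:
    """Stage 1: exact class match. Stage 2: IoU at or above the threshold."""
    if pred.label != truth.label:
        return False
    return iou(pred.box, truth.box) >= iou_threshold
```

For example, a predicted `pistol` box `(0, 0, 10, 8)` against a ground-truth `pistol` box `(0, 0, 10, 10)` has IoU 0.8 and would be accepted, while the same box labelled `knife` would be rejected at Stage 1.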

Algorithm 1. Pseudocode of the agent-based incremental learning framework.

3.5. Algorithm Design

4. Experimental Results

4.1. Obtained Results

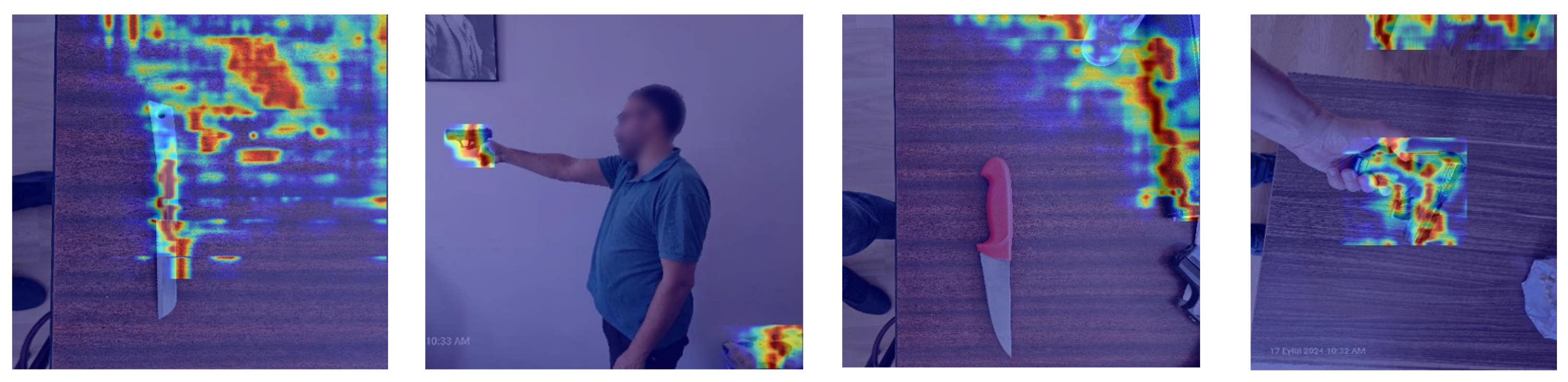

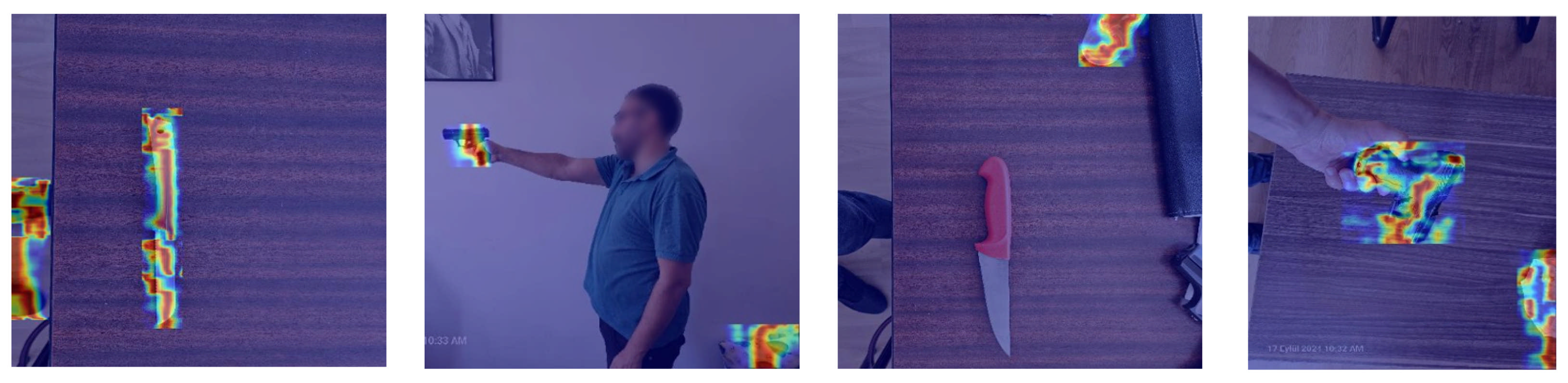

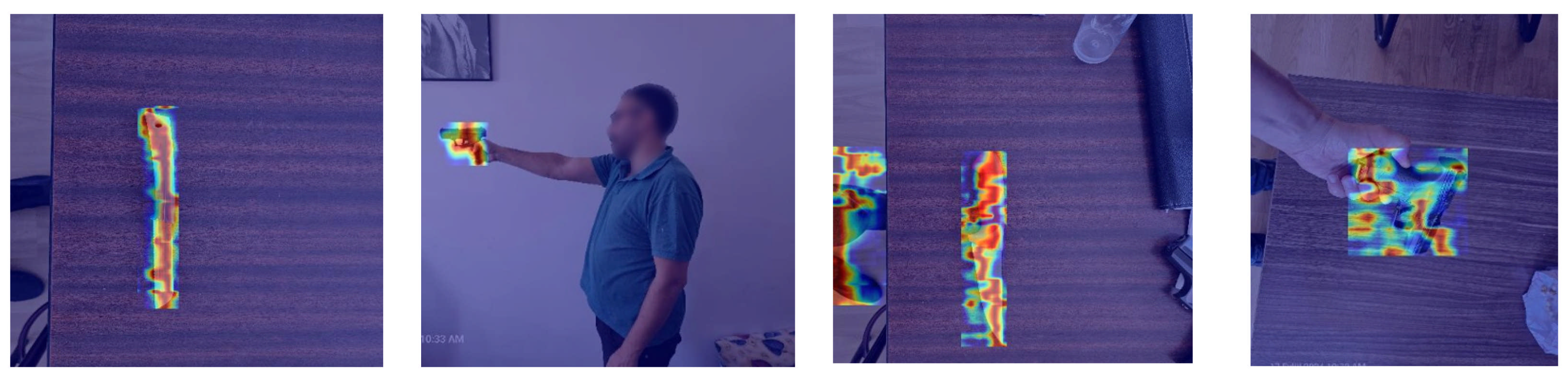

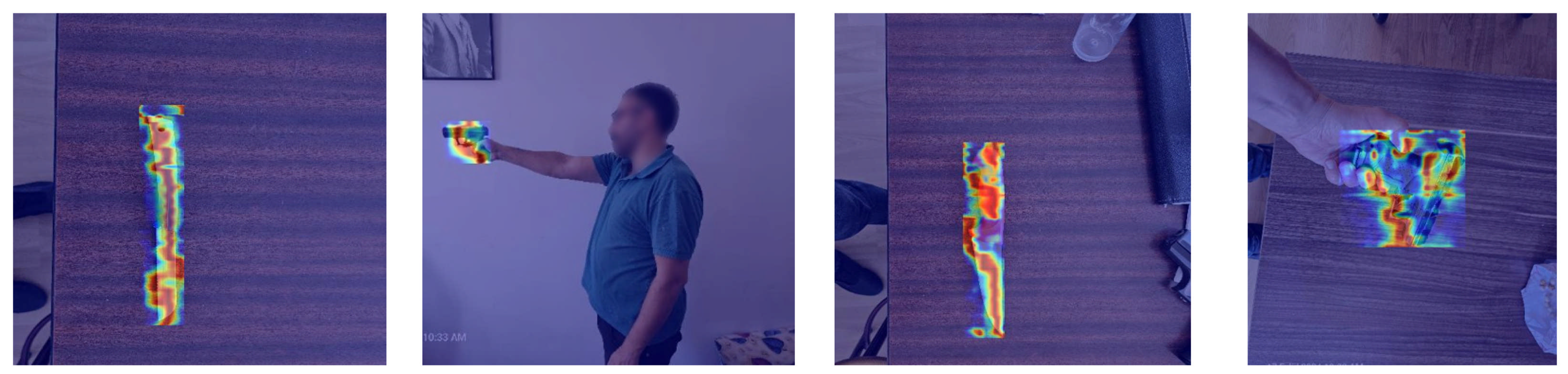

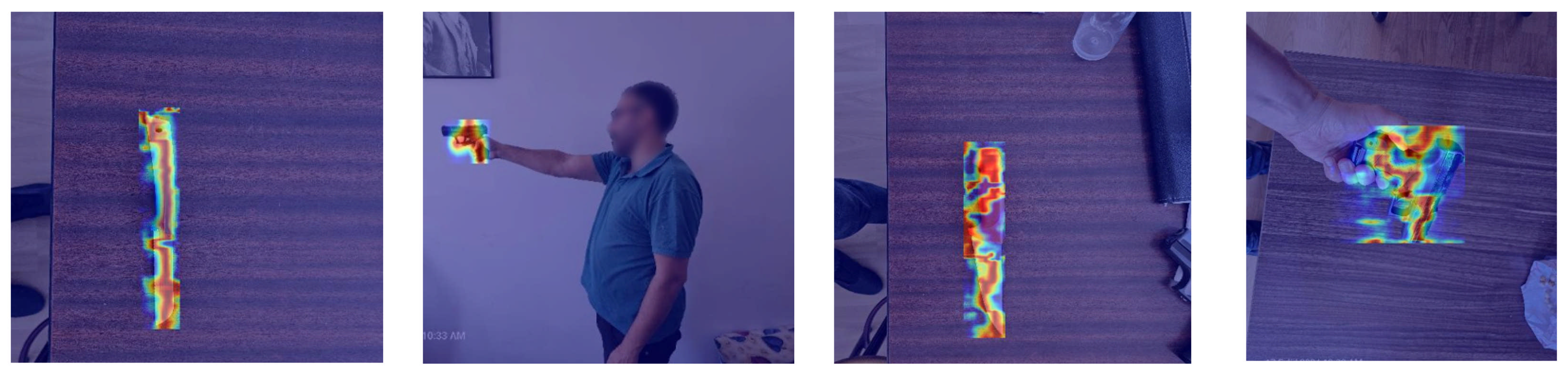

4.2. Validating the Training Using XAI

- In intermediate rounds, heatmaps for both classes displayed more focused attention on the weapons, albeit with minor spillover.

- By the final round, attention was sharply concentrated on the true object regions for both classes, with minimal noise.
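For readers unfamiliar with how such heatmaps are produced, the core Grad-CAM computation [10] can be sketched as follows: each feature map is weighted by the spatial average of its gradient, the weighted maps are summed, and a ReLU keeps only positive evidence. This is a dependency-free sketch on nested lists; which YOLOv8 layer and which target score the authors used is not stated here, so the inputs are treated as given, and the final peak normalization is a common convention assumed for display.

```python
def grad_cam(activations, gradients):
    """Compute a Grad-CAM map from K feature maps and their gradients.

    Both arguments are lists of K maps, each an H x W list of lists.
    Returns an H x W map scaled so that its maximum is 1 (if any value is positive).
    """
    height, width = len(activations[0]), len(activations[0][0])
    # alpha_k: global average of the gradient over all spatial positions.
    weights = [sum(map(sum, grad)) / (height * width) for grad in gradients]
    # ReLU(sum_k alpha_k * A_k) evaluated at every pixel.
    cam = [
        [
            max(0.0, sum(w * fmap[y][x] for w, fmap in zip(weights, activations)))
            for x in range(width)
        ]
        for y in range(height)
    ]
    peak = max(map(max, cam))
    if peak > 0:
        cam = [[value / peak for value in row] for row in cam]
    return cam
```

A sharply concentrated heatmap, as described for the final round, corresponds to a map in which most of the non-zero values fall inside the object region.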

4.3. Comparative Analysis

4.4. Performance Analysis

4.5. Framework Advantages

4.6. Baseline Comparison

4.7. Discussion

5. Limitations and Future Directions

5.1. Limitations

- Human-in-the-Loop Validation: Security personnel review flagged detections, but this introduces inter-annotator variability (typically 85–95% agreement [37]) and scalability constraints.

- Confidence-Based Filtering: High-confidence detections (e.g., >0.9) are auto-approved, but this may introduce confirmation bias and miss edge cases [38].

- Teacher–Student Models: A larger pre-trained model validates detections from lightweight edge models, but teacher errors propagate as label noise [38].
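To make the confidence-based option concrete, the sketch below routes a detection by its confidence score, using the 0.9 example threshold from the list above. Sending everything below the threshold to human review is an assumption made for illustration; the source presents these strategies as separate alternatives, and the function name and return labels are hypothetical.

```python
def route_detection(confidence: float, auto_approve_above: float = 0.9) -> str:
    """Return the validation path for one detection.

    Detections strictly above the threshold are auto-approved; the rest are
    queued for human review (an assumed fallback, not the authors' pipeline).
    """
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must lie in [0, 1]")
    return "auto_approve" if confidence > auto_approve_above else "human_review"
```

The confirmation-bias risk noted above is visible in this rule: only samples the model already scores highly are added without scrutiny, so hard cases are systematically deferred or lost.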

5.2. Future Directions

- Expand class coverage in the dataset.

- Investigate newer YOLO versions to identify possible accuracy and latency improvements in edge–cloud threat detection.

- Employ more comprehensive, domain-agnostic evaluation strategies.

- Actively simulate or collect uncontrolled real-world data (e.g., via collaborations with security agencies).

- Optimize for computational efficiency.

- Integrate advanced XAI methods, ultimately enabling the deployment of reliable, interpretable and adaptable threat detection systems in real-world operational environments.

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Terven, J.; Córdova-Esparza, D.M.; Romero-González, J.A. A comprehensive review of YOLO architectures in computer vision: From YOLOv1 to YOLOv8 and YOLO-NAS. Mach. Learn. Knowl. Extr. 2023, 5, 1680–1716.

2. Shieh, J.L.; Haq, Q.M.u.; Haq, M.A.; Karam, S.; Chondro, P.; Gao, D.Q.; Ruan, S.J. Continual learning strategy in one-stage object detection framework based on experience replay for autonomous driving vehicle. Sensors 2020, 20, 6777.

3. Ashar, A.A.K.; Abrar, A.; Liu, J. A survey on object detection and recognition for blurred and low-quality images: Handling, deblurring, and reconstruction. In Proceedings of the 2024 8th International Conference on Information System and Data Mining, Los Angeles, CA, USA, 24–26 June 2024; pp. 95–100.

4. LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

5. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. arXiv 2015, arXiv:1506.01497.

6. Rodriguez-Rodriguez, J.A.; López-Rubio, E.; Ángel-Ruiz, J.A.; Molina-Cabello, M.A. The impact of noise and brightness on object detection methods. Sensors 2024, 24, 821.

7. Ali, S.; Abuhmed, T.; El-Sappagh, S.; Muhammad, K.; Alonso-Moral, J.M.; Confalonieri, R.; Guidotti, R.; Del Ser, J.; Díaz-Rodríguez, N.; Herrera, F. Explainable Artificial Intelligence (XAI): What we know and what is left to attain Trustworthy Artificial Intelligence. Inf. Fusion 2023, 99, 101805.

8. Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. YOLOv4: Optimal speed and accuracy of object detection. arXiv 2020, arXiv:2004.10934.

9. Arrieta, A.B.; Díaz-Rodríguez, N.; Del Ser, J.; Bennetot, A.; Tabik, S.; Barbado, A.; García, S.; Gil-López, S.; Molina, D.; Benjamins, R.; et al. Explainable Artificial Intelligence (XAI): Concepts, taxonomies, opportunities and challenges toward responsible AI. Inf. Fusion 2020, 58, 82–115.

10. Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-CAM: Visual explanations from deep networks via gradient-based localization. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 618–626.

11. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

12. Bakır, H.; Bakır, R. Evaluating the robustness of YOLO object detection algorithm in terms of detecting objects in noisy environment. J. Sci. Rep.-A 2023, 054, 1–25.

13. Zhao, Z.; Alzubaidi, L.; Zhang, J.; Duan, Y.; Gu, Y. A comparison review of transfer learning and self-supervised learning: Definitions, applications, advantages and limitations. Expert Syst. Appl. 2024, 242, 122807.

14. Aguiar, G.; Krawczyk, B.; Cano, A. A survey on learning from imbalanced data streams: Taxonomy, challenges, empirical study, and reproducible experimental framework. Mach. Learn. 2024, 113, 4165–4243.

15. Joshi, P. Weapon Detection Computer Vision Model. 2023. Available online: https://universe.roboflow.com/parthav-joshi/weapon_detection-xbxnv (accessed on 18 November 2025).

16. Parisi, G.I.; Kemker, R.; Part, J.L.; Kanan, C.; Wermter, S. Continual Lifelong Learning with Neural Networks: A Review. Neural Netw. 2019, 113, 54–71.

17. Shaheen, K.; Hanif, M.A.; Hasan, O.; Shafique, M. Continual learning for real-world autonomous systems: Algorithms, challenges and frameworks. J. Intell. Robot. Syst. 2022, 105, 9.

18. Rebuffi, S.A.; Kolesnikov, A.; Sperl, G.; Lampert, C.H. iCaRL: Incremental Classifier and Representation Learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

19. Kemker, R.; Kanan, C. FearNet: Brain-Inspired Model for Incremental Learning. In Proceedings of the International Conference on Learning Representations (ICLR), Vancouver, BC, Canada, 30 April–3 May 2018; pp. 1–14.

20. Feng, T.; Wang, M.; Yuan, H. Overcoming Catastrophic Forgetting in Incremental Object Detection via Elastic Response Distillation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 9427–9436.

21. Hassan, T.; Hassan, B.; Owais, M.; Velayudhan, D.; Dias, J.; Ghazal, M.; Werghi, N. Incremental Convolutional Transformer for Baggage Threat Detection. Pattern Recognit. 2024, 153, 110493.

22. Aljundi, R.; Lin, M.; Goujaud, B.; Bengio, Y. Gradient based sample selection for online continual learning. In Proceedings of the Advances in Neural Information Processing Systems (NeurIPS 2019), Vancouver, BC, Canada, 8–14 December 2019; Curran Associates, Inc.: Red Hook, NY, USA, 2019; Volume 32, pp. 11816–11825.

23. Dong, Q.; Gong, S.; Zhu, X. Imbalanced Deep Learning by Minority Class Incremental Rectification. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 41, 1367–1381.

24. Doshi-Velez, F.; Kim, B. Towards A Rigorous Science of Interpretable Machine Learning. arXiv 2017, arXiv:1702.08608.

25. Adebayo, J.; Gilmer, J.; Muelly, M.; Goodfellow, I.; Hardt, M.; Kim, B. Sanity Checks for Saliency Maps. In Proceedings of the Advances in Neural Information Processing Systems (NeurIPS 2018), Montréal, QC, Canada, 3–8 December 2018.

26. Balasubramaniam, N.; Kauppinen, M.; Rannisto, A.; Hiekkanen, K.; Kujala, S. Transparency and Explainability of AI Systems: From Ethical Guidelines to Requirements. Inf. Softw. Technol. 2023, 159, 107197.

27. Gunning, D.; Stefik, M.; Choi, J.; Miller, T.; Stumpf, S.; Yang, G.Z. XAI—Explainable Artificial Intelligence. Sci. Robot. 2019, 4, eaay7120.

28. Bhati, D.; Neha, F.; Amiruzzaman, M. A Survey on Explainable Artificial Intelligence (XAI) Techniques for Visualizing Deep Learning Models in Medical Imaging. J. Imaging 2024, 10, 239.

29. Ultralytics. Hyperparameter Tuning Guide—YOLOv8. 2025. Available online: https://docs.ultralytics.com/guides/hyperparameter-tuning/ (accessed on 18 November 2025).

30. Loshchilov, I.; Hutter, F. Decoupled Weight Decay Regularization. arXiv 2019, arXiv:1711.05101.

31. Mulajkar, R.; Yede, S. YOLO Version v1 to v8 Comprehensive Review. In Proceedings of the 2024 International Conference on Inventive Computation Technologies (ICICT), Lalitpur, Nepal, 24–26 April 2024; pp. 472–478.

32. Ali, M.L.; Zhang, Z. The YOLO Framework: A Comprehensive Review of Evolution, Applications, and Benchmarks in Object Detection. Computers 2024, 13, 336.

33. Ramos, L.T.; Sappa, A.D. A Decade of You Only Look Once (YOLO) for Object Detection: A Review. IEEE Access 2025, 13, 192747–192794.

34. Hussain, M. YOLO-v1 to YOLO-v8, the rise of YOLO and its complementary nature toward digital manufacturing and industrial defect detection. Machines 2023, 11, 677.

35. Powers, D.M.W. Evaluation: From Precision, Recall and F-Measure to ROC, Informedness, Markedness and Correlation. J. Mach. Learn. Technol. 2011, 2, 37–63.

36. Lin, T.Y.; Maire, M.; Belongie, S.; Bourdev, L.; Girshick, R.; Hays, J.; Perona, P.; Ramanan, D.; Zitnick, C.L.; Dollár, P. Microsoft COCO: Common Objects in Context. arXiv 2014, arXiv:1405.0312.

37. Papadopoulos, D.P.; Uijlings, J.R.R.; Keller, F.; Ferrari, V. Extreme Clicking for Efficient Object Annotation. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 4940–4949.

38. Arazo, E.; Ortego, D.; Albert, P.; O’Connor, N.E.; McGuinness, K. Pseudo-Labeling and Confirmation Bias in Deep Semi-Supervised Learning. In Proceedings of the 2020 International Joint Conference on Neural Networks (IJCNN), Glasgow, UK, 19–24 July 2020; pp. 1–8.

39. Richardson, L.; Ruby, S. RESTful Web Services; O’Reilly Media, Inc.: Sebastopol, CA, USA, 2007.

40. Pimentel, V.; Nickerson, B.G. Communicating and Displaying Real-Time Data with WebSocket. IEEE Internet Comput. 2012, 16, 45–53.

41. Farhan, A.; Shafi, M.A.; Gul, M.; Fayyaz, S.; Bangash, K.U.; Rehman, B.U.; Shahid, H.; Kashif, M. Deep Learning-based Weapon Detection using YOLOv8. Int. J. Innov. Sci. Technol. 2025, 7, 1269–1280.

42. Shanthi, P.; Manjula, V. Weapon detection with FMR-CNN and YOLOv8 for enhanced crime prevention and security. Sci. Rep. 2025, 15, 26766.

43. Northcutt, C.G.; Jiang, L.; Chuang, I.L. Confident Learning: Estimating Uncertainty in Dataset Labels. J. Artif. Intell. Res. 2021, 70, 1373–1411.

44. Han, B.; Yao, Q.; Yu, X.; Niu, G.; Xu, M.; Hu, W.; Tsang, I.W.; Sugiyama, M. Co-teaching: Robust Training of Deep Neural Networks with Extremely Noisy Labels. In Proceedings of the Advances in Neural Information Processing Systems (NeurIPS 2018), Montréal, QC, Canada, 3–8 December 2018; Curran Associates, Inc.: Red Hook, NY, USA, 2018; Volume 31, pp. 8527–8537.

45. Sapkota, R.; Karkee, M. Ultralytics YOLO Evolution: An Overview of YOLO26, YOLO11, YOLOv8 and YOLOv5 Object Detectors for Computer Vision and Pattern Recognition. arXiv 2025, arXiv:2510.09653.

46. Hassija, V.; Chamola, V.; Mahapatra, A.; Singal, A.; Goel, D.; Huang, K.; Scardapane, S.; Spinelli, I.; Mahmud, M.; Hussain, A. Interpreting Black-Box Models: A Review on Explainable Artificial Intelligence. Cogn. Comput. 2024, 16, 45–74.

| Category | Parameter | Value | Justification |
|---|---|---|---|
| Hardware | GPU | NVIDIA RTX 3090 (24 GB) | High-throughput training |
| Hardware | CPU | Intel Core i9-11900K | Parallel data pre-processing |
| Hardware | RAM | 64 GB DDR4 | Large-batch caching |
| Software | Framework | PyTorch 2.0.1 + CUDA 11.8 | GPU acceleration |
| Software | YOLOv8 Version | Ultralytics 8.0.196 [29] | Stable API compatibility |
| Model | Architecture | YOLOv8-nano (YOLOv8n) | Efficiency–accuracy trade-off |
| Model | Parameters | 3.2 M | Edge deployment suitability |
| Model | Input Resolution | 640 × 640 pixels | Standard YOLO input size |
| Training | Batch Size | 16 | Memory–performance balance |
| Training | Optimizer | AdamW | Decoupled weight decay [30] |
| Training | Initial Learning Rate | 0.001 | Default YOLOv8 setting [29] |
| Training | LR Schedule | Step decay (×0.1 every 5 epochs) | Gradual convergence |
| Training | Epochs per Round | 10 | Sufficient for convergence |
| Training | Loss Function | CIoU + BCE (classification + objectness) | YOLOv8 default [29] |
| Training | Augmentation | Mosaic, scaling, HSV jitter [29] | Built-in YOLOv8 pipeline |
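The step-decay schedule in the table (initial rate 0.001, multiplied by 0.1 every 5 epochs, 10 epochs per round) can be written out as plain arithmetic. The sketch assumes zero-indexed epochs and that the first drop takes effect at epoch 5; it does not call or mirror any Ultralytics scheduler API, and the function name is hypothetical.

```python
def step_decay_lr(epoch: int, initial_lr: float = 0.001,
                  factor: float = 0.1, step: int = 5) -> float:
    """Learning rate at a zero-indexed epoch under step decay."""
    if epoch < 0:
        raise ValueError("epoch must be non-negative")
    return initial_lr * factor ** (epoch // step)


# Schedule for one 10-epoch training round.
schedule = [step_decay_lr(epoch) for epoch in range(10)]
```

Under these assumptions, epochs 0 to 4 train at 0.001 and epochs 5 to 9 at roughly 0.0001, so each round sees exactly one decay step.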

| Round | Dataset Size | New Samples | Epochs | Training Time (Minutes) |
|---|---|---|---|---|
| 1 (initial model) | 709 | - | 10 | 18 |
| 2 | 1342 | 633 | 10 | 20 |
| 3 | 2343 | 1001 | 10 | 25 |
| 4 | 2859 | 516 | 10 | 22 |
| 5 | 3543 | 684 | 10 | 24 |
| Total Incremental | 3543 | - | 50 | 109 |
| One-Shot Training | 3543 | - | 50 | 420 |
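The training-time comparison in the table reduces to a short calculation. The snippet below only re-derives figures from the reported per-round minutes and the one-shot total; the variable names are illustrative.

```python
# Reported training time per incremental round, in minutes.
round_minutes = {1: 18, 2: 20, 3: 25, 4: 22, 5: 24}
one_shot_minutes = 420

incremental_total = sum(round_minutes.values())
speedup = one_shot_minutes / incremental_total
time_saved_fraction = 1 - incremental_total / one_shot_minutes

print(f"incremental total: {incremental_total} min")
print(f"speedup over one-shot: {speedup:.2f}x")
print(f"time saved: {time_saved_fraction:.0%}")
```

The five rounds sum to the 109 minutes reported in the table, which is about 3.85 times faster than the 420-minute one-shot run, or roughly 74% less training time.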

| Round | Unlabeled Pool | Total Detections | Verified Samples (Added to Training) | Rejected Detections | Undetected Samples | Cumulative Training Set | Remaining Pool |
|---|---|---|---|---|---|---|---|
| 1 | — | — | 709 (initial) | — | — | 709 | 2834 |
| 2 | 2834 | 795 | 633 | 162 | 2039 | 1342 | 2201 |
| 3 | 2201 | 1251 | 1001 | 250 | 950 | 2343 | 1200 |
| 4 | 1200 | 642 | 516 | 126 | 558 | 2859 | 684 |
| 5 | 684 | 684 | 684 | 0 | 0 | 3543 | 0 |
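The columns of this table are linked by simple bookkeeping: rejected detections are total detections minus verified samples, undetected samples are the pool minus total detections, and the remaining pool is the pool minus verified samples. The sketch below replays rounds 2 to 5 from the reported pool, detection, and verified counts; the function names are hypothetical and this is a consistency check, not the authors' pipeline code.

```python
INITIAL_TRAINING_SET = 709

# (round, unlabeled_pool, total_detections, verified_samples) as reported above.
ROUNDS = [
    (2, 2834, 795, 633),
    (3, 2201, 1251, 1001),
    (4, 1200, 642, 516),
    (5, 684, 684, 684),
]


def account_round(pool: int, detections: int, verified: int) -> dict:
    """Derive the dependent columns for one round."""
    return {
        "rejected": detections - verified,
        "undetected": pool - detections,
        "remaining_pool": pool - verified,
    }


def replay(initial: int, rounds: list) -> list:
    """Accumulate the training set across rounds and attach derived columns."""
    cumulative = initial
    history = []
    for number, pool, detections, verified in rounds:
        cumulative += verified
        row = {"round": number, "cumulative_training_set": cumulative}
        row.update(account_round(pool, detections, verified))
        history.append(row)
    return history


history = replay(INITIAL_TRAINING_SET, ROUNDS)
```

Each round's remaining pool becomes the next round's unlabeled pool, and the training set ends at 3543 samples with the pool exhausted.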

| Round | F1-Score (All Classes) | Precision (All Classes) | mAP@0.5 (All Classes) |

|---|---|---|---|

| 1 (initial model) | 0.45 at conf. 0.438 | 0.93 at conf. 1.000 | 0.518 |

| 2 | 0.51 at conf. 0.199 | 1.00 at conf. 0.949 | 0.600 |

| 3 | 0.80 at conf. 0.395 | 1.00 at conf. 0.946 | 0.833 |

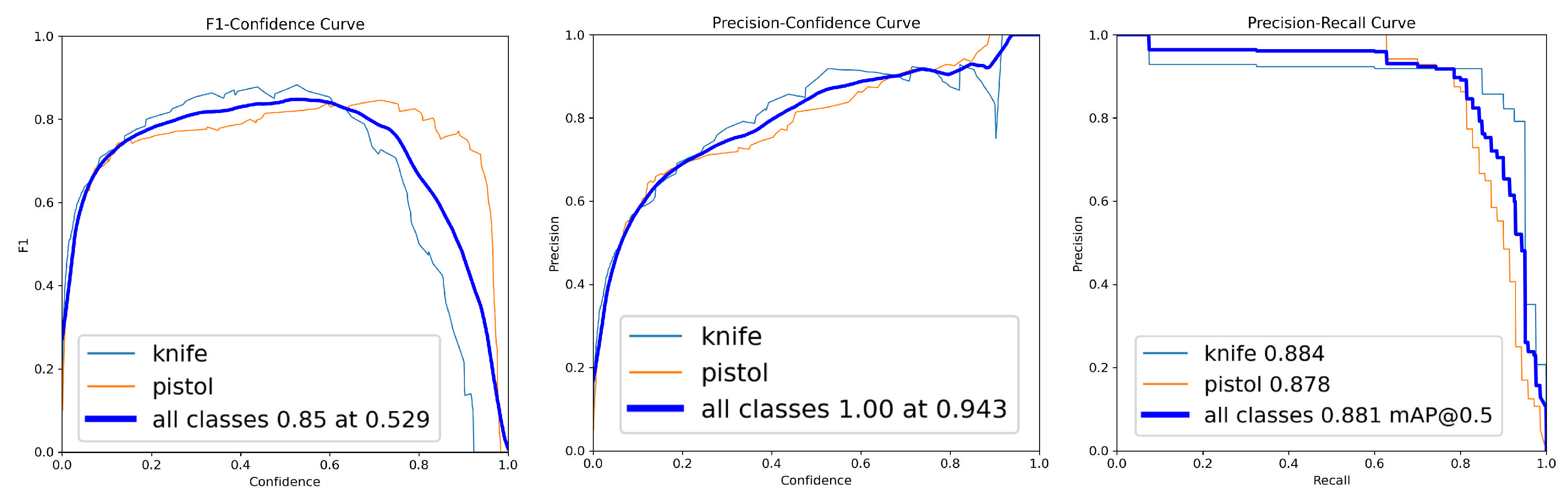

| 4 | 0.85 at conf. 0.529 | 1.00 at conf. 0.943 | 0.881 |

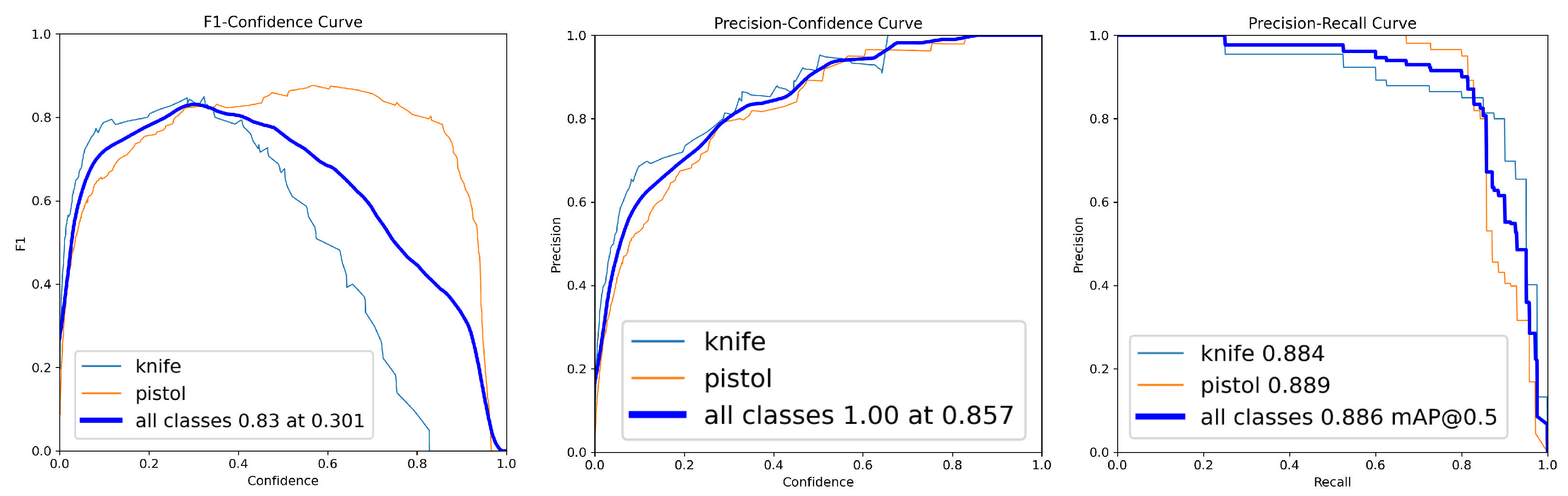

| 5 | 0.83 at conf. 0.301 | 1.00 at conf. 0.857 | 0.886 |

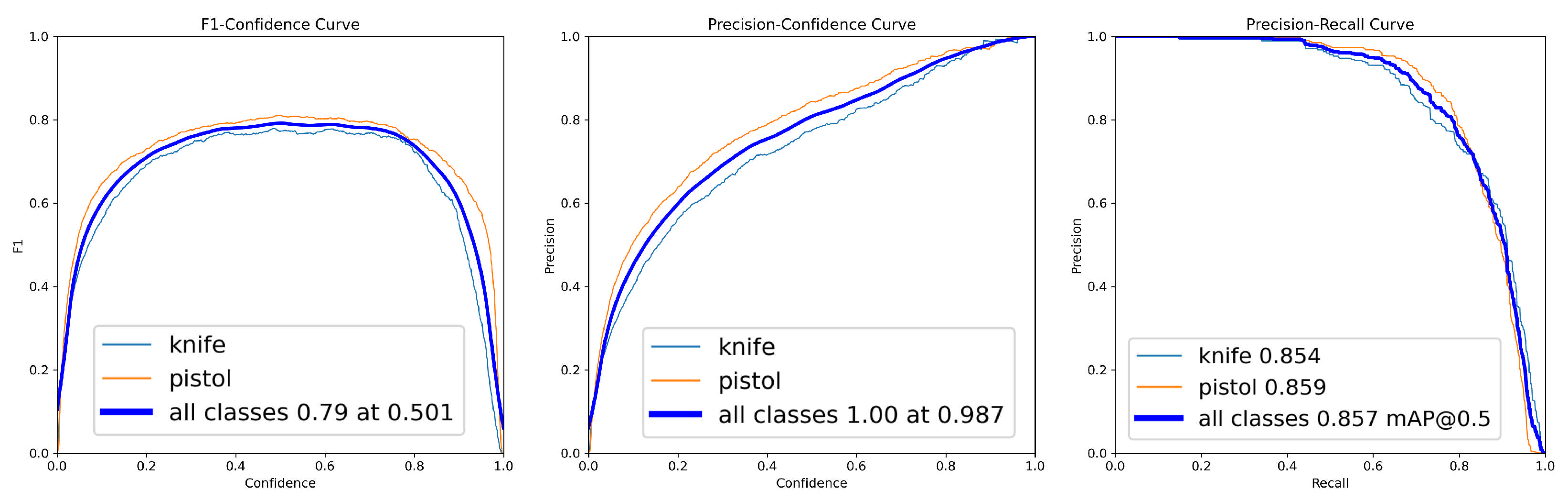

| One-Shot Training | 0.79 at conf. 0.501 | 1.00 at conf. 0.987 | 0.857 |

| Round | Class | F1-Score (Peak) | Precision @ F1-Score-Peak | mAP@0.5 |
|---|---|---|---|---|
| 1 (initial model) | Knife | 0.28 | 0.45 | 0.263 |
| 1 (initial model) | Pistol | 0.63 | 0.75 | 0.772 |
| 2 | Knife | 0.30 | 0.45 | 0.325 |
| 2 | Pistol | 0.75 | 0.85 | 0.876 |
| 3 | Knife | 0.78 | 0.88 | 0.800 |
| 3 | Pistol | 0.84 | 0.93 | 0.867 |
| 4 | Knife | 0.86 | 0.90 | 0.884 |
| 4 | Pistol | 0.83 | 0.92 | 0.878 |
| 5 | Knife | 0.83 | 0.91 | 0.884 |
| 5 | Pistol | 0.84 | 0.94 | 0.889 |
| One-Shot Training | Knife | 0.79 | 1.00 | 0.854 |
| One-Shot Training | Pistol | 0.79 | 1.00 | 0.859 |
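The all-class mAP@0.5 is the mean of the per-class AP@0.5 values, so the per-class table can be cross-checked against the all-class results. The sketch below does this with the reported figures; because the published values are rounded to three decimals, agreement is only expected to within about 0.001. The dictionary and function names are illustrative.

```python
from statistics import mean

# Per-class AP@0.5 as reported in the per-class table.
PER_CLASS_AP = {
    "1": {"Knife": 0.263, "Pistol": 0.772},
    "2": {"Knife": 0.325, "Pistol": 0.876},
    "3": {"Knife": 0.800, "Pistol": 0.867},
    "4": {"Knife": 0.884, "Pistol": 0.878},
    "5": {"Knife": 0.884, "Pistol": 0.889},
    "one-shot": {"Knife": 0.854, "Pistol": 0.859},
}

# All-class mAP@0.5 as reported in the all-class table.
REPORTED_MAP = {
    "1": 0.518, "2": 0.600, "3": 0.833,
    "4": 0.881, "5": 0.886, "one-shot": 0.857,
}


def mean_average_precision(per_class_ap: dict) -> float:
    """mAP as the unweighted mean of per-class average precision."""
    return mean(per_class_ap.values())


for name, per_class in PER_CLASS_AP.items():
    computed = mean_average_precision(per_class)
    print(f"{name}: computed {computed:.4f}, reported {REPORTED_MAP[name]:.3f}")
```

The per-class view also shows where the gain comes from: the Knife class rises from 0.263 to 0.884 across rounds, while Pistol starts at 0.772 and ends at 0.889.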

| Method | Architecture | mAP@0.5 | Model Size (M parameters) | Incremental Learning | XAI |
|---|---|---|---|---|---|
| Farhan et al. [41] | YOLOv8m | 0.852 | 25.9 | × | × |
| Shanthi & Manjula [42] | FMR-CNN + YOLOv8 | 0.901 * | ∼28 | × | × |
| Hassan et al. [21] | Incremental Transformer | 0.790 | — | ✓ | × |
| Proposed Method | YOLOv8n | 0.886 | 3.2 | ✓ | ✓ |

| Method Variant | F1-Score (All Classes) | mAP@0.5 (All Classes) | Heatmap Focus (%) |
|---|---|---|---|
| Proposed Framework (Full) | 0.83 | 0.886 | 92.5 |
| Baseline (One-shot Training) | 0.79 | 0.857 | 90.2 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Kutlu, Z.; Emiroğlu, B.G. Image-Based Threat Detection and Explainability Investigation Using Incremental Learning and Grad-CAM with YOLOv8. Computers 2025, 14, 511. https://doi.org/10.3390/computers14120511
