1. Introduction

Special components in agricultural products encompass a diverse array of chemical, biological, and physical constituents that critically influence product safety, quality, and physiological integrity. These components are distinguished by their significant impact on human health, market value, and agricultural sustainability, yet they often occur at trace levels or exhibit complex interactions within biological matrices, rendering their detection analytically challenging. Broadly categorized, special components include (1) safety-critical hazards such as pesticide residues (e.g., chlorpyrifos in oils [1]), heavy metals (e.g., cadmium in lettuce [2], lead in oilseed rape [3,4]), mycotoxins (e.g., aflatoxin B1 in maize [5]), and polycyclic aromatic hydrocarbons [6] (e.g., benzofluoranthene in seafood), which pose direct risks to consumers; (2) quality-defining attributes like soluble solids content in fruits [7], tea polyphenols [8], starch functional properties [9], gel strength in processed foods [10], and texture parameters (e.g., resilience in tofu [11]), which determine sensory acceptability and economic value; and (3) physiological markers, including chlorophyll (Chl) content [12], anthocyanin levels [13], moisture dynamics [14], and nutrient status (e.g., nitrogen in crops), which reflect plant health, stress responses, and post-harvest physiological changes.

The inherent complexity of agricultural systems, characterized by heterogeneous matrices, environmental variability, and dynamic biological processes, imposes stringent demands on detection methodologies. Traditional methods for detecting special components in agricultural products, including chromatography (e.g., high-pressure liquid chromatography [15], gas chromatography–mass spectrometry) [16,17,18,19,20], atomic spectroscopy (e.g., inductively coupled plasma mass spectrometry (ICP-MS) [21,22]), and immunoassays [23,24,25,26], face significant constraints that impede efficient quality and safety monitoring. These techniques are inherently destructive, requiring extensive sample preparation and chemical reagents, which prolongs the analysis time (often hours per sample) and increases operational costs. Moreover, their reliance on laboratory infrastructure and skilled personnel limits field deployment, hindering real-time decision making in agricultural supply chains. For spectral-based methods such as near-infrared (NIR) spectroscopy or hyperspectral imaging (HSI) [27,28,29], challenges persist in handling high-dimensional data with noise interference, baseline drift, and redundant variables, which degrade model robustness and generalizability across various crop matrices. Consequently, the precise identification and quantification of these special components require innovative approaches that transcend conventional analytical paradigms.

Deep learning (DL) techniques address these limitations by automating feature extraction and enabling end-to-end modeling [6,14,30,31,32,33]. Convolutional neural networks (CNNs) transform raw spectral data into discriminative spatial features, bypassing manual preprocessing steps like Savitzky–Golay smoothing or multiplicative scatter correction [34,35]. Recurrent architectures (e.g., long short-term memory (LSTM) networks [36]) capture temporal dependencies in sequential spectral data, enhancing prediction stability for dynamic processes such as fermentation or drying. Attention mechanisms [37] (e.g., squeeze-and-excitation networks (SENet) [38,39], residual attention modules) further refine feature selection by adaptively weighting informative wavelengths or channels, mitigating interference from complex backgrounds. These capabilities allow DL models to achieve state-of-the-art accuracy in quantifying trace contaminants (e.g., aflatoxins at 0.03 mg/kg) and physiological markers (e.g., Chl with

> 0.92) while reducing false positives under field conditions.

The integration of DL with edge computing [40,41] and lightweight architectures (e.g., 1D-CNNs [42,43], MobileNet [44,45,46]) facilitates real-time, non-destructive monitoring. For instance, portable systems combining miniaturized sensors (e.g., Raman spectrometers [10], microwave detectors [47]) with optimized DL algorithms can deliver results within seconds, significantly outperforming traditional methods in speed and cost efficiency. This paradigm shift not only enhances detection precision but also democratizes access to advanced analytics for resource-limited agricultural settings, ultimately strengthening food safety protocols and enabling proactive quality control across the production lifecycle.

Many studies have reviewed the application of DL in agriculture [33,48,49,50,51,52,53,54,55,56], but very few have specifically focused on the performance of special component detection. This review addresses the urgent need to synthesize emerging strategies for the detection of special components, with a focus on DL-driven solutions that enhance accuracy, efficiency, and adaptability in agricultural analytics. By defining “special components” through the tripartite lens of safety, quality, and physiology, we establish a foundation to evaluate how DL techniques overcome the limitations of traditional methods, ultimately enabling real-time and non-destructive monitoring across the agricultural value chain. The contributions of our review are summarized below:

According to the impact of the special components in agricultural products, we divide the DL applications into three categories: DL for contaminant detection, DL for quality and nutritional component analysis, and DL for structural/textural and biotic stress assessment.

We introduce and analyze the above three categories of work. Specifically, we summarize and compare them mainly from the aspects of targets, samples, techniques, efficiency, accuracy and cost.

We summarize the challenges and propose future research directions.

Figure 1 shows a schematic diagram of the review methodology, covering the screening and review processes. In the screening process, relevant articles were selected over several rounds of searching. Articles were initially retrieved from MDPI, the IEEE Digital Library, Bing, Google Scholar, and similar sources, using keywords such as “Deep learning”, “Agriculture”, “Contaminant”, “Nutritional”, and “Biotic”. This initial search yielded 139 articles for review. In the second stage, we narrowed the set to 98 articles based on the paper title, abstract, and conclusion. Finally, after an in-depth reading, we selected 85 articles published in recent journals, conferences, and websites based on impact factors, citations, relevance, and quality. We read and scrutinized these papers thoroughly to collect valuable information, and then analyzed and discussed the challenges and future research work. The results of the review are organized in four stages. First, the background of DL applications in detecting special components of agricultural products is summarized. Second, the existing work is divided into three categories. Third, each category is explored and analyzed in terms of targets, samples, techniques, efficiency, accuracy, and cost. Fourth, the challenges and future directions associated with model explainability, standardized datasets and protocols, and field application are summarized.

This paper is organized into six sections, as shown graphically in Figure 2. Section 2 reviews the application of DL techniques for contaminant detection. Section 3 reviews the application of DL techniques for quality and nutritional component analysis. Section 4 reviews the application of DL techniques for structural/textural and biotic stress assessment. Section 5 presents the challenges and future directions. Finally, Section 6 concludes the review paper.

2. DL for Contaminant Detection

DL techniques have revolutionized the detection of contaminants in agricultural products by addressing critical limitations of traditional methods. For heavy metal detection, multimodal sensor fusion strategies demonstrate significant advantages. Han et al. [57] pioneered a low-cost optical electronic tongue system using colorimetric sensor arrays (CSA) with nine functionalized dyes immobilized on cellulose membranes, enabling visual recognition of Pb, Cd, and Hg in fish through specific metal–ligand coordination. By integrating extreme learning machines (ELM) optimized with principal component analysis (PCA), the system achieved prediction-set correlation coefficients of 0.854 (Pb), 0.83 (Cd), and 0.845 (Hg), with corresponding root mean square errors of prediction (RMSEP) of 0.102 mg/kg (Pb), 0.026 mg/kg (Cd), and 0.016 mg/kg (Hg). Moreover, compared with conventional ICP-MS methods, which require expensive instrumentation and longer analysis times, this colorimetric electronic tongue enables rapid, simultaneous detection of multiple heavy metals at low cost and with the portability needed for field analysis. This approach highlights the potential of combining low-cost, rapid (5 min) sensing hardware with lightweight neural networks for field-deployable solutions.
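The two figures of merit quoted above, the prediction-set correlation coefficient and the root mean square error of prediction, can be computed as in the following minimal sketch. The function names and the sample values are hypothetical and are not taken from Han et al. [57].

```python
import math


def pearson_r(y_true, y_pred):
    """Pearson correlation coefficient between reference and predicted values."""
    n = len(y_true)
    mean_t = sum(y_true) / n
    mean_p = sum(y_pred) / n
    cov = sum((t - mean_t) * (p - mean_p) for t, p in zip(y_true, y_pred))
    var_t = sum((t - mean_t) ** 2 for t in y_true)
    var_p = sum((p - mean_p) ** 2 for p in y_pred)
    return cov / math.sqrt(var_t * var_p)


def rmsep(y_true, y_pred):
    """Root mean square error of prediction, in the units of the reference values."""
    n = len(y_true)
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / n)


# Hypothetical reference and predicted Pb concentrations (mg/kg) for a prediction set.
reference = [0.10, 0.25, 0.40, 0.55, 0.70]
predicted = [0.14, 0.21, 0.46, 0.50, 0.74]
print(f"r = {pearson_r(reference, predicted):.3f}, "
      f"RMSEP = {rmsep(reference, predicted):.3f} mg/kg")
```

Because RMSEP carries the units of the analyte, values for different metals are only comparable relative to their respective concentration ranges.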

HSI coupled with deep feature extraction has emerged as a powerful paradigm for crop contamination analysis. Sun et al. [

2,

27] developed a particle swarm optimization (PSO)-enhanced deep belief network (DBN) to quantify Cd in lettuce, addressing spectral nonlinearity across 618 bands. Their PSO-DBN framework employed dynamic inertia weight tuning (

) to escape local optima during pretraining, achieving unprecedented accuracy (

= 0.9234,

= 3.5894) that surpassed practical applicability thresholds. Similarly, advanced architectures like wavelet transform-stacked convolutional autoencoders (WT-SCAE) have been designed to resolve spectral interference from compound heavy metals [

58]. By decomposing hyperspectral data into multi-frequency components via db5 wavelets and processing them through deep SCAE blocks, researchers attained

> 0.93, for both Cd and Pb in lettuce while capturing metal interaction mechanisms such as Pb-enhanced Cd uptake. These approaches bypass manual feature selection and directly learn from raw spectral inputs, significantly enhancing biological plausibility.
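As an illustration of how an inertia weight that changes during the search affects particle swarm optimization, the sketch below minimizes a toy objective with a linearly decreasing weight. It is not the PSO-DBN implementation of Sun et al. [2,27]: the schedule (0.9 to 0.4), the acceleration constants, and the sphere objective standing in for a network training loss are all assumptions chosen for illustration.

```python
import random


def pso_minimize(objective, dim, bounds, n_particles=20, n_iter=100,
                 w_start=0.9, w_end=0.4, c1=1.5, c2=1.5, seed=0):
    """Minimize `objective` with PSO using a linearly decreasing inertia weight."""
    rng = random.Random(seed)
    lo, hi = bounds
    pos = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    pbest = [p[:] for p in pos]
    pbest_val = [objective(p) for p in pos]
    g = min(range(n_particles), key=lambda i: pbest_val[i])
    gbest, gbest_val = pbest[g][:], pbest_val[g]

    for it in range(n_iter):
        # A large weight early favors exploration; a small weight late favors refinement.
        w = w_start - (w_start - w_end) * it / max(1, n_iter - 1)
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                vel[i][d] = (w * vel[i][d]
                             + c1 * r1 * (pbest[i][d] - pos[i][d])
                             + c2 * r2 * (gbest[d] - pos[i][d]))
                # Keep particles inside the search bounds.
                pos[i][d] = min(hi, max(lo, pos[i][d] + vel[i][d]))
            val = objective(pos[i])
            if val < pbest_val[i]:
                pbest[i], pbest_val[i] = pos[i][:], val
                if val < gbest_val:
                    gbest, gbest_val = pos[i][:], val
    return gbest, gbest_val


def sphere(p):
    """Toy objective standing in for a model's validation loss."""
    return sum(x * x for x in p)


best_position, best_value = pso_minimize(sphere, dim=2, bounds=(-5.0, 5.0))
```

In a hyperparameter-tuning setting, each particle position would encode quantities such as layer sizes or learning rates, and the objective would train and score a model.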

Innovative data representation methods further expand DL’s capabilities in trace contaminant detection. Wang et al. [

59] introduced Markov transition fields (MTF) to convert 1D near-infrared spectra of maize into 2D spatial images, preserving inter-wavelength dependencies through Markov transition probabilities. Coupled with a CNN, this MTF-CNN framework reduced prediction errors for aflatoxin B

1 by 75% (

= 1.36

g/kg) compared to 1D-CNN, while achieving

= 14.94. It demonstrates how topological feature encoding enhances model sensitivity for ultratrace analytes. In pesticide detection, Wu et al. [

1] integrated DL into surface-enhanced Raman spectroscopy (SERS) substrates to overcome spectral noise challenges. Li et al. [

60] engineered Au-Ag octahedral hollow cages (OHCs) with electromagnetic field enhancement factors of

, enabling 1D-CNN models to quantify thiram and pymetrozine in tea at parts-per-billion levels (limit of detection = 0.286 ppb for thiram), about two orders of magnitude below European Union maximum residue limits. The CNN architecture’s inherent noise resistance eliminated complex pre-processing steps while maintaining high robustness (relative standard deviation = 5.23% over 15 days).
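The Markov transition field encoding mentioned above for Wang et al. [59] can be sketched as follows. This is the generic MTF construction, not the authors' code: the quantile binning, the number of bins, and the toy spectrum are assumptions.

```python
def markov_transition_field(signal, n_bins=4):
    """Encode a 1D signal of length n as an n x n Markov transition field."""
    n = len(signal)

    # 1. Quantile binning: rank each point and assign it to one of n_bins states.
    order = sorted(range(n), key=lambda i: signal[i])
    states = [0] * n
    for rank, idx in enumerate(order):
        states[idx] = min(n_bins - 1, rank * n_bins // n)

    # 2. Count first-order transitions between consecutive points.
    counts = [[0.0] * n_bins for _ in range(n_bins)]
    for a, b in zip(states[:-1], states[1:]):
        counts[a][b] += 1.0

    # 3. Row-normalize the counts into transition probabilities.
    trans = []
    for row in counts:
        total = sum(row)
        trans.append([c / total if total else 0.0 for c in row])

    # 4. Entry (i, j) is the probability of moving from the state of point i
    #    to the state of point j, so the image keeps positional information.
    return [[trans[states[i]][states[j]] for j in range(n)] for i in range(n)]


# Toy "spectrum" with eight wavelengths (hypothetical absorbance values).
spectrum = [0.1, 0.5, 0.9, 0.4, 0.2, 0.8, 0.7, 0.3]
mtf_image = markov_transition_field(spectrum, n_bins=2)
```

The resulting square matrix can be fed to a 2D CNN like any single-channel image; for long spectra it is usually downsampled first to keep the image size manageable.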

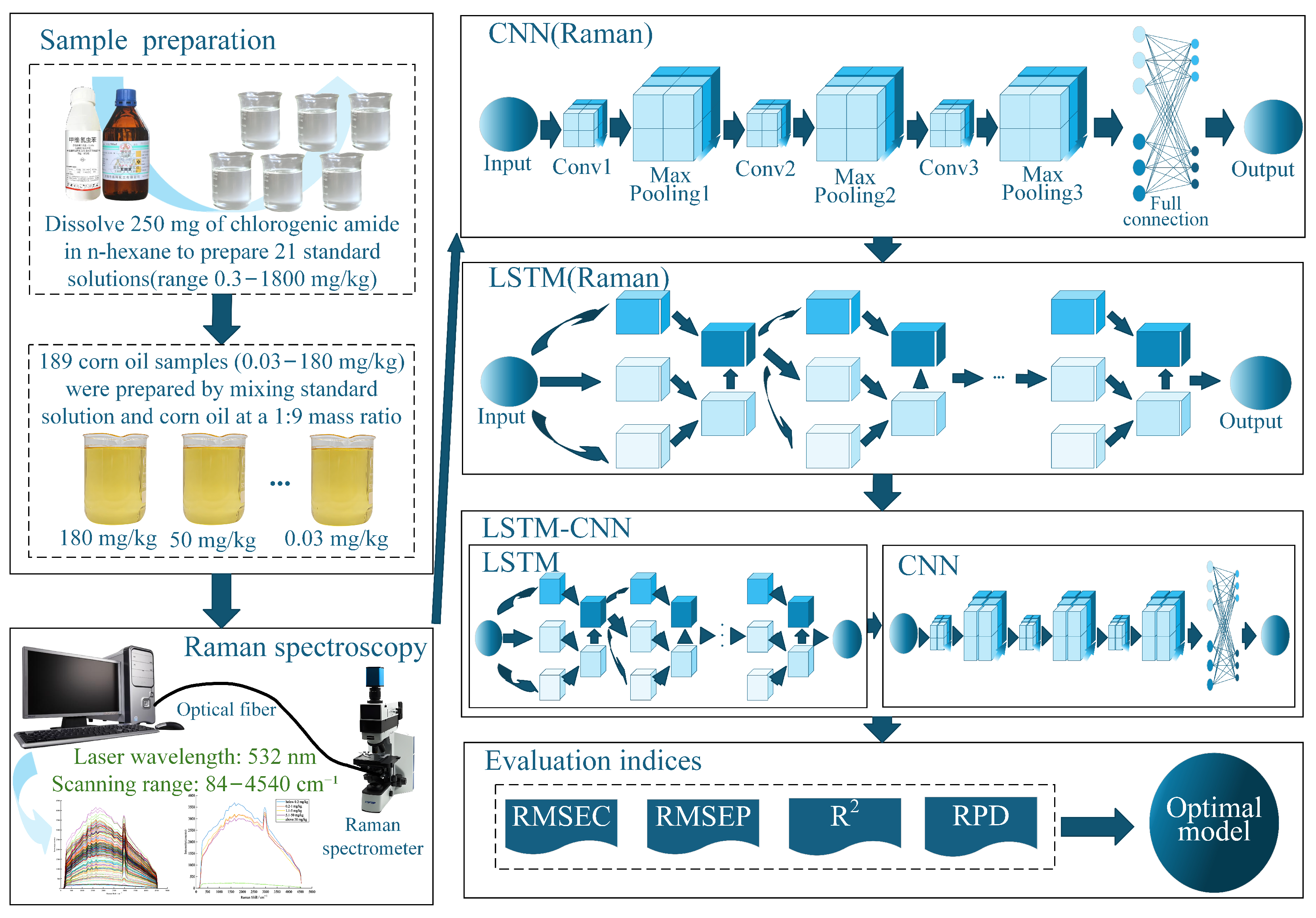

Hybrid DL models represent the frontier for processing complex spectral signatures. Xue et al. [

61] devised an LSTM-CNN fusion network for chlorpyrifos detection in corn oil, where LSTM layers extracted sequential features from raw Raman spectra (84–4540

) and CNN blocks calibrated spatial dependencies. This end-to-end approach achieved superior performance (

= 12.3 mg/kg,

= 3.2) over standalone CNN or LSTM models by simultaneously capturing temporal-spectral correlations and spatial patterns without preprocessing. For clarity, its schematic diagram is presented in Figure 3 and its core algorithm in Appendix A.1, as a representative example of DL research for contaminant detection. Despite these advances, computational efficiency and field adaptability remain critical challenges. Future efforts should prioritize lightweight architectures like MobileNetV3 for edge deployment, attention mechanisms for interpretable feature weighting, and multi-residue detection frameworks to address real-world contamination scenarios.

Plastic shopping bag contamination in cotton fields is an equally serious problem. If the bags are not removed before harvest, they severely degrade fiber quality and disrupt ginning operations. Yadav et al. [62] employed unmanned aerial vehicle (UAV)-captured RGB imagery and four variants of the YOLOv5 deep learning model

to detect and locate plastic bags in real time, significantly improving upon traditional methods’ limited accuracy (64%) and processing delays. By manually placing 180 bags (90 white, 90 brown) at three heights on cotton plants and evaluating model performance through a desirability function, they demonstrated that YOLOv5 achieved 92% accuracy for white bags and 78% for brown bags (mAP@50: 88%), with color and height profoundly impacting detection—white bags outperformed brown due to contrast (

), while detection rates plummeted from 94.25% (top) to 5% (bottom) because of occlusion (

). The YOLOv5 variant emerged as optimal (95% desirability, 86 FPS), balancing speed and accuracy, though larger models (

) underperformed due to insufficient training convergence. The framework enables near-real-time field deployment, with future work targeting edge-device implementation for robotic removal.
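The mAP@50 figure reported above counts a detection as correct when its bounding box overlaps a ground-truth box with an intersection over union (IoU) of at least 0.5. The sketch below shows that matching step only, under the assumption of axis-aligned boxes given as (x1, y1, x2, y2); the box coordinates are hypothetical, and the full average-precision computation is omitted.

```python
def iou(box_a, box_b):
    """Intersection over union of two axis-aligned boxes (x1, y1, x2, y2)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0


def count_true_positives(predictions, ground_truth, threshold=0.5):
    """Greedily match predictions (box, score) to ground-truth boxes by IoU."""
    matched = set()
    true_positives = 0
    # Higher-confidence predictions get first choice of ground-truth boxes.
    for box, _score in sorted(predictions, key=lambda p: -p[1]):
        best_j, best_iou = None, 0.0
        for j, gt in enumerate(ground_truth):
            if j in matched:
                continue
            overlap = iou(box, gt)
            if overlap > best_iou:
                best_j, best_iou = j, overlap
        if best_j is not None and best_iou >= threshold:
            matched.add(best_j)
            true_positives += 1
    return true_positives


# Hypothetical detections of plastic bags in one UAV frame (pixel coordinates).
detections = [((0, 0, 10, 10), 0.9), ((50, 50, 60, 60), 0.8)]
bags = [(1, 1, 10, 10), (100, 100, 110, 110)]
tp = count_true_positives(detections, bags)
precision = tp / len(detections)
recall = tp / len(bags)
```

Occluded bags low on the plant lower recall because no prediction reaches the IoU threshold for them, which is consistent with the height effect described above.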

Finally, recent research [63,64] also demonstrates the versatility of DL in detecting diverse physical contaminants within agricultural products, utilizing advanced sensing modalities beyond conventional imaging. Alsaid et al. [63] explored the application of electrical impedance tomography (EIT) combined with CNNs for detecting hidden physical contaminants (plastic, stones, foreign food objects) embedded within fresh food products like chicken breast. Their approach leverages EIT’s ability to capture internal conductivity variations, overcoming limitations of surface-only inspection techniques when dealing with irregular shapes and sizes. Four dedicated CNNs were trained for binary classification (contaminated vs. clean), achieving promising accuracies (78–92.9%) for specific contaminant types and mixtures, showcasing EIT’s unique capability for internal anomaly detection, albeit with longer measurement times (15–20 min/sample). Complementing this, Lee et al. [64] addressed the critical challenge of detecting unknown or rare contaminants in food inspection lines using HSI. They proposed the Partial and Aggregate Autoencoder (PA2E), a novel anomaly detection architecture specifically optimized for HSI spectral data. PA2E overcomes limitations of standard autoencoders (lacking locality) and convolutional layers (suffering from translation invariance) by employing masked fully connected layers to learn local spectral features without invariance, coupled with efficient global feature aggregation. Crucially, PA2E is engineered for real-time inference (0.53 ms/sample) through techniques like ReLU culling and layer fusion, significantly outperforming state-of-the-art anomaly detection methods (detecting 29 vs. an average of 23 out of 42 contaminants) on datasets like almonds, pistachios, and garlic stems. This makes PA2E particularly suitable for industrial settings requiring high-speed detection of unforeseen contaminants where labeled anomaly data is scarce.
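The idea of a masked fully connected layer, as described for PA2E, is that each hidden unit connects only to a contiguous window of spectral bands while keeping its own weights, so features are local but not shared across positions. The sketch below illustrates that idea only; it is not the PA2E architecture of Lee et al. [64], and the window layout, the ReLU activation, and all sizes and names are assumptions.

```python
def local_band_mask(n_bands, n_hidden, window):
    """Binary mask: hidden unit h sees `window` contiguous bands, evenly spaced."""
    mask = []
    for h in range(n_hidden):
        start = round(h * (n_bands - window) / max(1, n_hidden - 1))
        mask.append([1.0 if start <= b < start + window else 0.0
                     for b in range(n_bands)])
    return mask


def masked_linear(spectrum, weights, mask, bias):
    """Fully connected layer whose weights are zeroed outside each unit's window."""
    outputs = []
    for w_row, m_row, b in zip(weights, mask, bias):
        pre_activation = sum(w * m * x for w, m, x in zip(w_row, m_row, spectrum)) + b
        outputs.append(max(0.0, pre_activation))  # ReLU
    return outputs


# Hypothetical 6-band spectrum mapped to 3 local features.
n_bands, n_hidden, window = 6, 3, 2
mask = local_band_mask(n_bands, n_hidden, window)
weights = [[1.0] * n_bands for _ in range(n_hidden)]  # unshared weights per unit
bias = [0.0] * n_hidden
features = masked_linear([1.0, 2.0, 3.0, 4.0, 5.0, 6.0], weights, mask, bias)
```

Unlike a 1D convolution, each window here has independent weights, so a response learned for one wavelength region does not transfer to another; this is the property the text refers to as learning local features without invariance.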

Table 1 and Table 2 summarize these DL studies for contaminant detection in terms of contaminant, sample, technique, efficiency, accuracy, and cost. These studies demonstrate that DL can achieve rapid, non-destructive identification of heavy metals (Pb, Cd, Hg) and pesticides with exceptional accuracy (

up to 0.9955). DL is a transformative tool for detecting diverse agricultural contaminants, leveraging techniques like HSI [2,27,58,64], CSA [57], Raman spectroscopy [61], and SERS [60]. For physical contaminants, UAV-based YOLOv5 models enable real-time plastic detection in fields (81–86 FPS [62]), while AI-enhanced EIT identifies subsurface materials like plastic with 92.9% accuracy [63]. Innovative architectures, including MTF-CNN for aflatoxins [59], LSTM-CNN hybrids [61], and efficiency-optimized autoencoders (PA2E [64]), significantly outperform traditional lab methods in speed and cost efficiency after initial hardware investments.

However, critical challenges persist, including computational complexity limiting field deployment for some advanced models (WT-SCAE, MTF-CNN, EIT), variable performance with contaminant properties (e.g., color/height for plastics, material type for EIT), and the need for optimization towards edge computing and broader generalizability. Moreover, the “black-box” nature of complex models (e.g., WT-SCAE, PSO-DBN) hampers regulatory trust due to limited model explainability, with few studies incorporating explainable artificial intelligence (XAI) techniques (e.g., attention mechanisms) to clarify feature contributions or decision logic. Furthermore, reproducibility is hindered by a pervasive lack of standardized datasets and protocols, as studies rely on proprietary or context-specific data with inconsistent contamination levels, sensor parameters (e.g., HSI wavelength ranges), and preprocessing methods, obstructing direct benchmarking and scalability. Future progress necessitates integrating XAI frameworks alongside collaborative efforts to establish unified data curation and evaluation standards, bridging the gap between laboratory efficacy and field deployment.

3. DL for Quality and Nutritional Component Analysis

Recent advances in DL have revolutionized the quality and nutritional component analysis in agricultural products. A prominent study by Zhang et al. [9] established a high-efficiency paradigm for geographical origin tracing of Radix puerariae starch using Fourier transform near-infrared (FT-NIR) spectroscopy. Their three-stage framework, which incorporated interval selection via synergy interval partial least squares (siPLS) and an innovative iteratively retaining informative variables (IRIV) algorithm, achieved 100% classification accuracy with only 10 characteristic wavelengths. This approach demonstrated the critical role of feature engineering in DL pipelines, where IRIV’s variable interaction mechanism could enhance deep feature extraction layers. The extremely lightweight model (utilizing ELM) validated a “less-but-better” philosophy, though computational costs and untapped DL alternatives like CNNs warrant further exploration.

Similarly, in green tea polyphenol quantification [65], a cost-effective colorimetric sensor combined with ant colony optimization (ACO)-ELM addressed limitations of destructive chemical methods [8]. By optimizing 7–10 key RGB features from a 3 × 3 porphyrin array, the model achieved a prediction correlation coefficient of 0.8035. This “simple hardware + intelligent algorithm” framework democratized rapid screening (<10 min/sample), yet its moderate accuracy compared to NIR spectroscopy highlighted opportunities for integrating multi-sensor data or replacing handcrafted features with CNN-based image analysis.

For fruit quality assessment, Xu et al. [66] pioneered the fusion of deep spectral features and biophysical parameters in Kyoho grapes. An unsupervised stacked autoencoder (SAE) extracted pixel-level features from HSI data, while physical size compensation significantly improved predictions for total soluble solids (TSS) and titratable acidity (TA). This study underscored DL’s capacity to overcome spatial interference in HSI but revealed GPU dependency and single-variety limitations. Future edge computing adaptations could enable field deployment.

Physiological trait monitoring in crops has also benefited from multimodal integration of DL. Elsherbiny et al. [

67] introduced a meta-learning framework for IoT-enabled aeroponic lettuce, fusing novel 3D spectral indices (e.g.,

), thermal indicators, and environmental parameters. Gradient boosting machine (GBM)-back-propagation neural networks (BPNN) stacking achieved robust recognition of leaf relative humidity (LRH,

= 0.875), Chl (

= 0.886) and Nitrogen levels (N,

= 0.930), leveraging 3D indices’ superiority over conventional 2D counterparts. Despite hardware cost barriers, the work demonstrated meta-learning’s potential for rapid cross-crop adaptation.

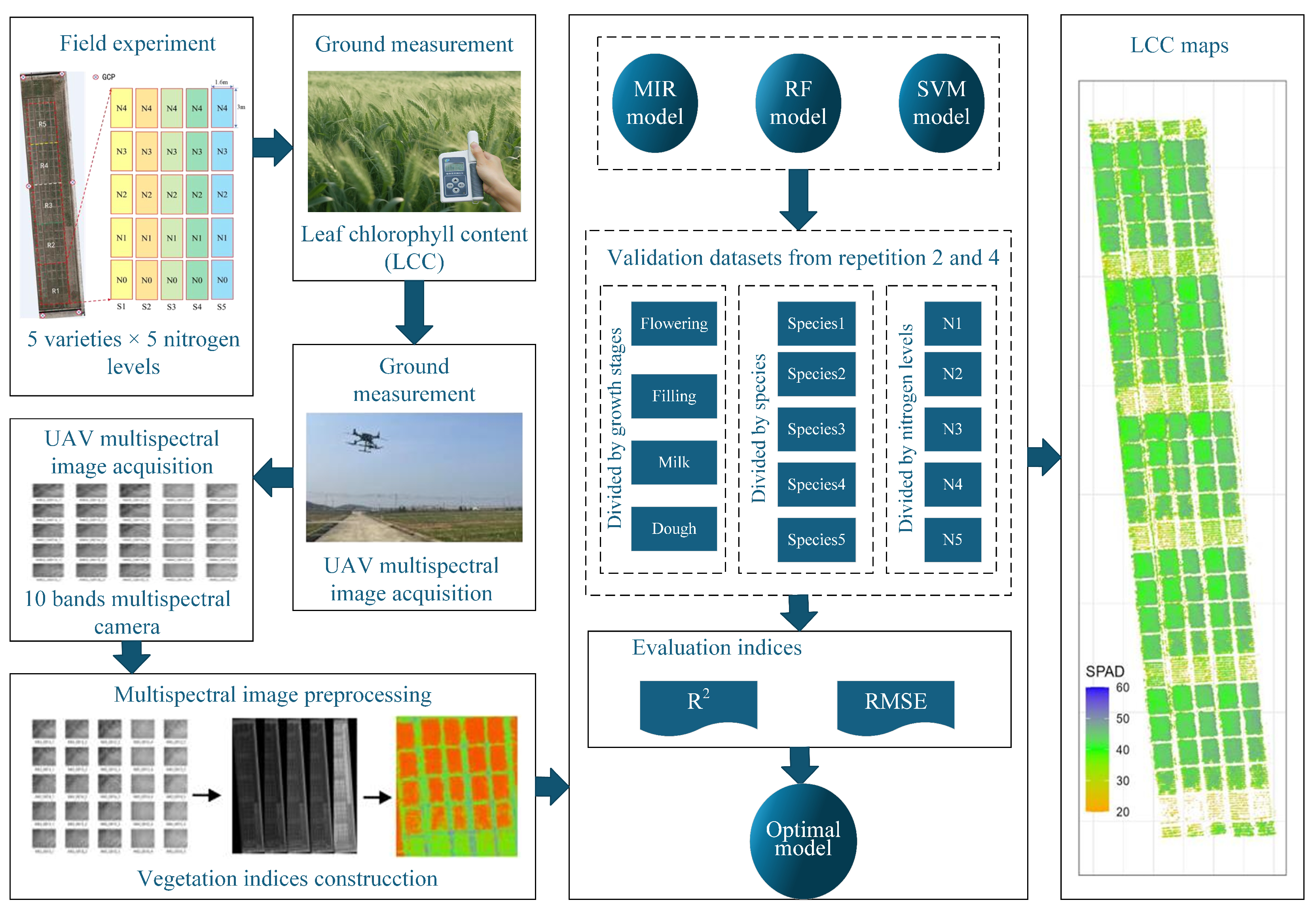

In Chl content estimation of winter wheat, Zhang et al. [

12] systematically validated support vector machine (SVM) robustness across cultivars, nitrogen stresses, and growth stages using UAV multispectral data. SVM outperformed random forest (RF) and multiple linear regression (MLR), especially at dough stage (

= 0.60 vs. 0.49 for RF), though water stress interference and underutilized red-edge bands remained challenges. For clarity, its schematic diagram is presented in Figure 4 and its core algorithm in Appendix A.2. This study identified growth stage as the primary generalization bottleneck, advocating stage-specific models and multi-source data fusion.

Anthocyanin prediction in purple-leaf lettuce by Liu et al. [

13] showcased optimized ELM enhanced by bio-inspired algorithms. A UVE-competitive adaptive reweighted sampling (CARS) feature selection pipeline compressed hyperspectral data to 12 key bands, while dung beetle optimizer (DBO) elevated ELM performance (validation

= 0.8617). Three-band vegetation indices (e.g., Enhanced vegetation index (EVI)) surpassed two-band indices by 12.3% in

, emphasizing multispectral synergy in biochemical inversion. Computational load and field applicability limitations call for portable spectrometers and UAV integration.
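To make the distinction between two-band and three-band vegetation indices concrete, the sketch below computes the normalized difference vegetation index (NDVI, two bands) and the enhanced vegetation index (EVI, three bands) from reflectance values. The formulas are the standard definitions with the commonly used EVI coefficients; the reflectance values are hypothetical, and the specific indices evaluated by Liu et al. [13] may differ.

```python
def ndvi(nir, red):
    """Two-band index: normalized difference of near-infrared and red reflectance."""
    return (nir - red) / (nir + red)


def evi(nir, red, blue, gain=2.5, c1=6.0, c2=7.5, soil=1.0):
    """Three-band index: the blue band corrects the red band for aerosol effects."""
    return gain * (nir - red) / (nir + c1 * red - c2 * blue + soil)


# Hypothetical leaf reflectance values (unitless, between 0 and 1).
nir, red, blue = 0.50, 0.10, 0.05
print(f"NDVI = {ndvi(nir, red):.4f}")
print(f"EVI  = {evi(nir, red, blue):.4f}")
```

The third band adds an independent source of information, which is one plausible reason three-band indices can track pigment content more closely than two-band ones.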

Ahsan et al. [68] explored the application of DL for nutrient concentration assessment in hydroponic lettuce, achieving high accuracy (87.5–100%) in classifying nitrogen levels (0–300 ppm) across four cultivars using RGB images and transfer learning with VGG16/VGG19 architectures. This established the feasibility of DL for rapid monitoring of nutrients. Extending beyond direct RGB analysis, Ahmed et al. [69] addressed the limitations of HSI for real-time use by developing a DL-based reconstruction approach. Their hyperspectral convolutional neural network-dense (HSCNN-D) model successfully reconstructed hyperspectral images from standard RGB inputs for sweet potatoes, enabling accurate prediction of soluble solid content (SSC) via partial least squares regression (PLSR) models that outperformed those using full-spectrum HSI data. This highlights DL’s potential to democratize high-fidelity spectral analysis. For complex morphological phenotyping linked to yield quality, van Vliet et al. [70] tackled the data bottleneck in pod valve segmentation for Brassica napus. Their “DeepCanola” pipeline combined semi-synthetic data generation (using real pod annotations with programmatic augmentation and Thompson-inspired shape transformations) and active learning with human-in-the-loop validation. This drastically reduced annotation effort (1000× faster than manual labeling) while producing a robust Mask R-CNN model capable of accurately measuring yield-relevant valve length (

= 0.99) in both ordered and disordered scenes, even generalizing to related species. These studies showcase DL’s evolution from direct image classification to sophisticated data synthesis/reconstruction for efficient, non-destructive quality and nutritional trait analysis across diverse products.

Collectively, these recent advances demonstrate the transformative role of DL and integrated sensing technologies in enabling efficient, accurate, and increasingly accessible analysis of key quality and nutritional components in agricultural products, which are summarized in Table 3 and Table 4. Studies leveraging DL with diverse data sources (HSI for grape TSS/TA [66], UAV multispectral for wheat Chl [12], RGB images for lettuce nutrients [68], and even reconstructed HSI from RGB for sweet potato SSC [69]) consistently report high accuracy (often

> 0.90 or accuracy > 87.5%) and efficiency gains over traditional methods. These approaches automate complex feature extraction (e.g., SAE for HSI pixels), enable rapid large-scale monitoring (e.g., via UAVs), and reduce long-term operational costs through minimized labor and targeted interventions. Meta-learning [67] and hybrid strategies combining DL with active learning/semi-synthetic data [70] further enhance robustness and reduce annotation burdens.

However, critical challenges remain. First, the inherent “black-box” nature of complex DL models like SAEs, CNNs (VGG16/19), and Mask R-CNN raises significant concerns regarding model explainability. Understanding why these models make specific predictions about component concentration or quality traits is crucial for building trust, diagnosing errors (e.g., accuracy drops during wheat dough stage), and enabling actionable insights for growers and regulators; yet, explicit discussion and application of XAI techniques within these agricultural DL studies are notably lacking. Second, the field suffers from a pronounced lack of standardized datasets and protocols. Research utilizes vastly different data types (pixel spectra, UAV images, RGB photos), acquisition settings, preprocessing methods, cultivar selections, and growth stage definitions. This heterogeneity severely hampers reproducibility, fair benchmarking of model performance, and the development of universally applicable solutions, limiting the broader adoption and scalability of these otherwise promising DL technologies. Third, other challenges remain, including initial equipment costs (FT-NIR, HSI), model robustness across diverse conditions (growth stages, occlusion), and accuracy optimization for some sensor-based methods. Future directions should focus on building refined yet interpretable model architectures and on establishing strict, community-agreed standards for data collection and annotation. There is also a need to enhance accessibility through portable devices, refine algorithms (e.g., exploring transformers for occlusion), and optimize sensor materials. Extending these frameworks to a wider set of components and crop types will help make scalable and intelligent precision agriculture achievable.

4. DL for Structural/Textural and Biotic Stress Assessment

DL techniques have demonstrated significant efficacy in the non-destructive assessment of agricultural product textures. For deep-fried tofu, Xuan et al. [11] proposed a novel framework that integrated discrete wavelet transform (DWT) denoising with Light Gradient Boosting Machine (LightGBM) regression and achieved high-precision texture prediction, yielding determination coefficients of 0.969 for resilience and 0.956 for cohesion. This approach leveraged ultrasonic echoes processed through PCA for dimensionality reduction, outperforming XGBoost and RF while visualizing spatial heterogeneity in porous structures.
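The DWT denoising step described above can be illustrated with a one-level Haar transform and soft thresholding of the detail coefficients. This is a minimal sketch, not the pipeline of Xuan et al. [11]: the Haar wavelet, the single decomposition level, the threshold value, and the toy echo are assumptions, and the input length must be even.

```python
import math

_S = 1.0 / math.sqrt(2.0)


def haar_dwt(signal):
    """One-level Haar transform: pairwise scaled sums (approx) and differences (detail)."""
    approx = [(signal[i] + signal[i + 1]) * _S for i in range(0, len(signal), 2)]
    detail = [(signal[i] - signal[i + 1]) * _S for i in range(0, len(signal), 2)]
    return approx, detail


def haar_idwt(approx, detail):
    """Inverse of haar_dwt; reconstructs the signal exactly if coefficients are unchanged."""
    out = []
    for a, d in zip(approx, detail):
        out.extend([(a + d) * _S, (a - d) * _S])
    return out


def soft_threshold(coeffs, t):
    """Shrink coefficients toward zero by t; small (noise-like) ones become zero."""
    return [math.copysign(max(abs(c) - t, 0.0), c) for c in coeffs]


def denoise(signal, t):
    """Denoise by thresholding the detail coefficients and reconstructing."""
    approx, detail = haar_dwt(signal)
    return haar_idwt(approx, soft_threshold(detail, t))


# Toy ultrasonic echo: a slow trend plus small alternating noise (hypothetical values).
echo = [1.0, 1.2, 2.0, 1.8, 3.0, 3.2, 4.0, 3.8]
smoothed = denoise(echo, t=0.2)
```

With the threshold set to zero the signal is reconstructed unchanged, which is a convenient sanity check before tuning the threshold on real echoes.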

Similarly, in minced chicken gel systems, Nunekpeku et al. [10] combined multimodal fusion of NIR and Raman spectroscopy with LSTM networks to decode complex gelation dynamics. Ultrasonic pretreatment optimized to 30 min enhanced protein β-sheet formation, and low-level data fusion enabled the LSTM model to attain unprecedented accuracy (

=0.9882,

=9.2091), capturing nonlinear relationships between spectral sequences and gel strength. Both studies highlight the critical role of signal preprocessing and sensor fusion in overcoming noise interference inherent to heterogeneous agricultural matrices. For clarity, its schematic diagram is presented in Figure 5 and its core algorithm in Appendix A.3. This can serve as a representative example of DL research for structural/textural and biotic stress assessment.

For plant disease and weed management, DL models address key challenges in real-time field deployment. In strawberry cultivation, Liu et al. [71] designed a VGG16-based variable-rate spraying system to achieve targeted weed control with 93% coverage accuracy at speeds ≤ 3 km/h, reducing agrochemical usage by over 30% through low-cost cameras and solenoid valves. However, performance declined sharply beyond 3 km/h due to motion blur, revealing a fundamental speed–accuracy trade-off in dynamic environments.

To enhance disease diagnosis efficiency, Peng et al. [72] extracted fused deep features from pretrained CNNs (e.g., ResNet50 + ResNet101) and combined them with an SVM, accelerating grape leaf disease classification to under 1 s per inference while maintaining a 99.81% F1-score, a 1000× speedup over end-to-end CNNs.

For tomato leaf diseases, Zhao et al. [73] proposed an SE-ResNet50 model embedding channel attention mechanisms (SENet), which improved small-lesion recognition to 96.81% accuracy by amplifying discriminative features and suppressing background noise, outperforming vanilla ResNet50 by 4.25%. This model also exhibited exceptional cross-crop generalizability, attaining 99.24% accuracy on grape leaf datasets.
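The channel attention used in SE-style models can be sketched as a squeeze step (global average pooling per channel), an excitation step (a small bottleneck network ending in a sigmoid), and a rescaling of each channel by its gate. The code below is a plain-Python illustration of that mechanism, not the SE-ResNet50 of Zhao et al. [73]; the tiny feature maps and fixed weights are hypothetical, and biases are omitted.

```python
import math


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def squeeze_excite(feature_maps, w_reduce, w_expand):
    """Apply squeeze-and-excitation channel gating to C feature maps of size H x W."""
    # Squeeze: one scalar per channel via global average pooling.
    squeezed = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0]))
                for ch in feature_maps]
    # Excitation: bottleneck (ReLU) followed by expansion (sigmoid) gives gates in (0, 1).
    hidden = [max(0.0, h) for h in matvec(w_reduce, squeezed)]
    gates = [1.0 / (1.0 + math.exp(-z)) for z in matvec(w_expand, hidden)]
    # Scale: multiply every value in a channel by that channel's gate.
    scaled = [[[g * x for x in row] for row in ch]
              for ch, g in zip(feature_maps, gates)]
    return scaled, gates


# Two hypothetical 2 x 2 channels: one active (lesion-like response), one silent.
maps = [[[1.0, 1.0], [1.0, 1.0]],
        [[0.0, 0.0], [0.0, 0.0]]]
w_reduce = [[1.0, 1.0]]        # 2 channels -> 1 hidden unit
w_expand = [[1.0], [-1.0]]     # 1 hidden unit -> 2 channel gates
scaled_maps, channel_gates = squeeze_excite(maps, w_reduce, w_expand)
```

In a trained network the two weight matrices are learned, so channels that respond to discriminative lesion patterns receive gates near one while background-dominated channels are suppressed.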

For cucumber leaf diseases, Khan et al. [74] presented an automated framework that recognizes six diseases (angular leaf spot, anthracnose, blight, downy mildew, powdery mildew, cucumber mosaic) using DL and feature selection. To address data imbalance and limited samples, the authors applied augmentation techniques (horizontal/vertical flips, 45°/60° rotations) to expand the dataset. Four pre-trained models (VGG16, ResNet50, ResNet101, DenseNet201) were fine-tuned, with DenseNet201 achieving the highest accuracy. A novel Entropy–ELM feature selection method was proposed to eliminate redundant features, followed by a parallel fusion approach to combine optimal features from individual and fused models. The framework achieved 98.48% accuracy using Cubic SVM, outperforming existing methods while reducing computational time.

For wheat leaf disease identification, Xu et al. [75] proposed an integrated DL framework combining parallel CNNs, residual channel attention blocks (RCAB), feedback blocks (FB), and elliptic metric learning (EML). The model achieved 99.95% accuracy on a proprietary dataset (7239 images across 5 classes) and maintained >98% accuracy on public datasets (CGIAR, Plant Diseases, LWDCD 2020). Key innovations include dual-path feature extraction for healthy/diseased leaves, channel-aware feature optimization via RCAB, iterative feature refinement through FB, and redundancy reduction using EML for precise classification. While demonstrating state-of-the-art performance over models like VGG19 and EfficientNet-B7, the authors note limitations in generalizing across ecological variations and wheat cultivars, suggesting future integration of hyperspectral data for enhanced robustness.

Complementing these approaches, Zhu et al. [76] integrated AlexNet, VGG, and ResNet features to propose a Multi-Model Fusion Network (MMFN) via transfer learning, which achieved 98.68% accuracy for citrus disease classification, leveraging complementary feature representations to distinguish subtle inter-class variations such as canker and greasy spot. Shafik et al. [77] introduced AgarwoodNet, a lightweight DL model designed for multi-plant biotic stress classification and detection to support sustainable agriculture. Addressing the limitations of heavy DL models (e.g., high computational costs and memory constraints), the authors developed a resource-efficient CNN using depth-wise separable convolutions and residual connections. The model was trained on two novel datasets: the agarwood pest and disease dataset (APDD) with 5472 images (14 classes) from Brunei, and the Turkey plant pests and diseases (TPPD) dataset with 4447 images (15 classes). AgarwoodNet achieved high accuracy (96.66–98.59% on APDD; 95.85–96.84% on TPPD) while maintaining a compact size of 37 MB, enabling deployment on low-memory edge devices for real-time field applications.
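The parameter savings that make depth-wise separable convolutions attractive for edge deployment follow from simple arithmetic, sketched below. The layer sizes are hypothetical and are not taken from AgarwoodNet [77]; bias terms are ignored.

```python
def standard_conv_params(kernel, c_in, c_out):
    """Weights in a standard convolution: one k x k x c_in filter per output channel."""
    return kernel * kernel * c_in * c_out


def depthwise_separable_params(kernel, c_in, c_out):
    """Weights in a depth-wise (k x k per input channel) plus point-wise (1 x 1) pair."""
    depthwise = kernel * kernel * c_in
    pointwise = c_in * c_out
    return depthwise + pointwise


# Hypothetical layer: 3 x 3 kernel, 64 input channels, 128 output channels.
k, c_in, c_out = 3, 64, 128
standard = standard_conv_params(k, c_in, c_out)
separable = depthwise_separable_params(k, c_in, c_out)
print(f"standard: {standard}, separable: {separable}, "
      f"reduction: {standard / separable:.1f}x")
```

For a 3 × 3 kernel the reduction approaches a factor of nine as the number of output channels grows, which is why stacking such layers keeps model size small.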

These recent advances in DL demonstrate significant potential for assessing structural/textural properties and biotic stress in agricultural products, which are summarized in

Table 5 and

Table 6. Studies highlight the efficiency, cost-effectiveness, and high accuracy (often exceeding 95–99%) of DL models such as VGG16, SE-ResNet50, MMFN, and lightweight architectures (e.g., AgarwoodNet) in tasks such as targeted weed/spray control, disease diagnosis across crops (strawberry, grape, tomato, citrus, wheat, cucumber), and texture prediction (e.g., deep-fried tofu, chicken gel). Innovations like attention mechanisms (e.g., SENet [

38,

39]), fused deep features (e.g., direct concatenation [

78], CCA [

79,

80]) and ensemble methods enhance feature extraction, reduce computational costs, and enable near-real-time processing, facilitating field deployment.
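
As a rough illustration of feature fusion by direct concatenation, the sketch below joins feature vectors from several backbones into a single descriptor. The per-vector L2 normalization is an assumption added here so that no backbone dominates by scale; it is not stated in the reviewed studies, and the function names are hypothetical.

```python
import math

def l2_normalize(vector):
    """Scale a vector to unit length; an all-zero vector is returned unchanged."""
    norm = math.sqrt(sum(x * x for x in vector))
    return [x / norm for x in vector] if norm > 0 else list(vector)

def fuse_by_concatenation(feature_vectors):
    """Concatenate normalized feature vectors from several backbones."""
    fused = []
    for vector in feature_vectors:
        fused.extend(l2_normalize(vector))
    return fused

# Hypothetical features from two backbones with different dimensionality.
backbone_a = [3.0, 4.0]
backbone_b = [0.0, 2.0, 0.0]
fused = fuse_by_concatenation([backbone_a, backbone_b])
print(len(fused))  # dimensionality is the sum of the input dimensions
```

The fused vector would then feed a shared classifier, which is where the complementary information from the individual backbones is combined.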

However, critical limitations persist. First, model explainability remains largely unaddressed. While attention modules (e.g., in SE-ResNet50) offer basic interpretability by highlighting relevant features, deeper exploration of XAI techniques (e.g., Shapley additive explanations (SHAP), local interpretable model-agnostic explanations (LIME)) is essential to build trust, understand decision logic, and refine models for complex agricultural environments. Second, the lack of standardized datasets and protocols hampers reproducibility and benchmarking. Performance variations across plant varieties (e.g., wheat), ecological conditions, and datasets (e.g., cross-domain drops in AgarwoodNet), coupled with challenges from data imbalance (e.g., minority disease classes) and dynamic field conditions (lighting, occlusion), underscore the need for large-scale, diverse, and consistently annotated benchmark datasets. Third, it remains difficult to balance speed–accuracy trade-offs, generalize models across diverse environments, and adapt DL systems to resource-constrained edge devices. Future work must prioritize XAI integration and collaborative efforts toward standardized data collection and evaluation frameworks to ensure robustness and scalability. Lightweight model optimization, multimodal data integration, and cross-species adaptability are equally critical to advancing scalable precision agriculture.
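
To indicate what Shapley-based explanations compute, the sketch below evaluates exact Shapley values for a toy model by averaging each feature's marginal contribution over all feature orderings. This brute-force procedure is only tractable for a handful of features, and SHAP libraries rely on approximations of it; the three-feature linear model and the all-zero baseline are hypothetical and serve purely as an illustration.

```python
from itertools import permutations

def shapley_values(predict, x, baseline):
    """Exact Shapley values of predict(x) relative to predict(baseline)."""
    n = len(x)
    phi = [0.0] * n
    orderings = list(permutations(range(n)))
    for order in orderings:
        current = list(baseline)
        previous = predict(current)
        for i in order:
            # Switch feature i from its baseline value to its observed value
            # and credit the resulting change in the prediction to feature i.
            current[i] = x[i]
            updated = predict(current)
            phi[i] += updated - previous
            previous = updated
    return [p / len(orderings) for p in phi]

# Hypothetical model over three spectral features.
def toy_model(z):
    return 2.0 * z[0] + 3.0 * z[1] - 1.0 * z[2]

phi = shapley_values(toy_model, x=[1.0, 1.0, 1.0], baseline=[0.0, 0.0, 0.0])
print(phi)
```

The attributions sum to the difference between the prediction for the sample and the prediction for the baseline, which is the property that makes such values useful for checking whether a model relies on physically meaningful inputs.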

5. Challenges and Future Directions

This review categorizes DL applications in detecting special components of agricultural products into three primary domains. First, DL for contaminant detection focuses on identifying foreign materials and hazardous substances, utilizing advanced image analysis and spectral data processing to enhance food safety inspection. Second, DL for quality and nutritional component analysis leverages non-invasive techniques like HSI and computer vision to rapidly and accurately quantify internal quality attributes, ripeness, and nutritional value, overcoming limitations of traditional destructive methods. Finally, DL for structural/textural and biotic stress assessment employs high-throughput image analysis to evaluate surface features, texture, and physical damage, while also enabling early and precise detection of pest infestations, diseases, and other biotic stresses affecting crop health and marketability. Collectively, these DL approaches offer transformative capabilities for automated, objective, accurate, and efficient assessment across the agricultural product value chain.

Despite significant advancements in DL for agricultural component detection, several persistent challenges impede widespread deployment. Foremost, the pervasive “black-box” nature of complex models (e.g., WT-SCAE, DBN-PSO, SAEs, Mask R-CNN, SE-ResNet50) severely limits explainability and regulatory acceptance: critical spectral features identified by networks (e.g., key wavelengths for heavy metal detection) often lack clear biophysical justification, which hinders scientific validation and user trust. Few studies adopt XAI techniques (e.g., attention, SHAP, LIME) to clarify decision logic or feature contributions. Second, reproducibility and scalability are undermined by a pronounced lack of standardized datasets, protocols, and benchmarking frameworks. Studies rely on fragmented, context-specific data with inconsistencies in contamination levels, sensor parameters (HSI wavelengths, FT-NIR), acquisition settings, preprocessing methods, crop varieties, growth stages, and annotation criteria. Most DL frameworks exhibit substantial performance degradation when confronted with variations in crop cultivars, growth stages, or environmental conditions, as evidenced by [

12] on wheat Chl prediction, where model accuracy dropped by over 60% during the dough stage compared to flowering. This fragility stems from insufficient training data representing agricultural heterogeneity and the inherent complexity of biotic/abiotic interactions in field environments. Third, computational inefficiency further constrains real-time applications, particularly for resource-intensive architectures like 3D-CNNs and hybrid models (e.g., CNN-LSTM), which require specialized hardware (e.g., GPUs) and struggle to meet the latency requirements of field-deployable systems. Challenges also remain in balancing speed–accuracy trade-offs, achieving robustness across variable field conditions (occlusion, lighting, biotic stresses), generalizing models across environments/species, and adapting advanced architectures to resource-constrained edge devices. Additional hurdles include high equipment costs, data imbalance (minority disease classes), and performance sensitivity to contaminant properties or component variability. In particular, reliance on controlled laboratory settings for data acquisition, where samples are meticulously prepared under optimal conditions, fails to capture the sensor noise, occlusion, and lighting variability endemic to real-world agricultural operations.

Future progress necessitates integrated strategies addressing core limitations. First, the systematic incorporation of XAI frameworks (e.g., attention mechanisms, SHAP, LIME) is essential to demystify model reasoning, build trust, and enable actionable diagnostics. Embedding domain-specific physical knowledge into DL architectures will enhance robustness and interpretability. Techniques like physics-guided feature weighting (e.g., prioritizing chlorophyll-sensitive bands in Cd-stressed crops) or coupling mechanistic models (e.g., protein denaturation kinetics in thermal processing) with neural networks could bridge data-driven predictions and agricultural principles [

81]. Second, establishing large-scale benchmark datasets and standardized validation protocols for cross-crop, cross-environment model testing is essential for reproducibility and fair benchmarking. Transfer learning that leverages agricultural foundation models, alongside federated learning frameworks for distributed farm data, will accelerate the transition from lab-validated prototypes to field-ready solutions capable of addressing global food safety and quality challenges. Third, developing lightweight architectures (e.g., knowledge-distilled CNNs, attention-optimized MobileNetV3) compatible with edge devices will enable real-time field deployment, as demonstrated in Deng et al.’s miniaturized microwave sensor for lead detection in edible oils [

5]. Multimodal data fusion represents another critical pathway, in which complementary sensing technologies, such as HSI combined with IoT environmental parameters or Raman spectroscopy [

82] paired with ultrasonic metrics, can compensate for individual modality limitations. Osama et al.’s meta-learning framework for lettuce physiology monitoring exemplifies this approach [

67], synergizing 3D spectral indices with microclimate data to achieve

for Chl and nitrogen prediction. Extending validated frameworks to diverse components and crop types, alongside sensor material innovation, will be critical for scalable, intelligent precision agriculture.
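
As an illustration of how knowledge distillation can transfer behavior from a large model to a lightweight one, the following sketch computes the standard soft-target loss: the Kullback–Leibler divergence between temperature-softened teacher and student outputs, scaled by the squared temperature. The logits and the temperature of 4.0 are hypothetical values chosen for illustration and do not come from any study reviewed here.

```python
import math

def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax, shifted by the maximum for numerical stability."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(student_logits, teacher_logits, temperature=4.0):
    """KL(teacher || student) on softened distributions, scaled by temperature squared."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    kl = sum(
        pt * math.log(pt / ps)
        for pt, ps in zip(p_teacher, p_student)
        if pt > 0
    )
    return temperature ** 2 * kl

# Hypothetical logits for a three-class disease classifier.
teacher = [4.0, 1.0, -2.0]
student = [2.5, 1.5, -1.0]
print(distillation_loss(student, teacher))
```

In practice this term is usually combined with the ordinary cross-entropy on the ground-truth labels, so that the compact student learns both from the annotations and from the teacher's softened class similarities.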