Abstract

This paper presents an Explainable Artificial Intelligence (XAI) framework for the automated generation of lesson plans aligned with Bloom’s Taxonomy. The proposed system addresses the dual challenges of accurate cognitive classification and pedagogical transparency by integrating a multi-task transformer-based classifier with a taxonomy-conditioned content generation module. Drawing from a locally curated dataset of 3000 lesson plans with annotated learning objectives, the model predicts both cognitive process levels and knowledge dimensions using attention-enhanced representations, while offering token-level explanations via SHAP to support interpretability. A GPT-based generator leverages these predictions to produce instructional activities and assessments tailored to the taxonomy level, enabling educators to scaffold learning effectively. Empirical evaluations demonstrate strong classification performance (F1-score of 91.8%), high pedagogical alignment in generated content (mean expert rating: 4.43/5), and robust user trust in the system’s explanatory outputs. The framework is designed with a feedback loop for continuous fine-tuning and incorporates an educator-facing interface conceptually developed for practical deployment. This study advances the integration of trustworthy AI into curriculum design by promoting instructional quality and human-in-the-loop explainability within a theoretically grounded implementation.

1. Introduction

The increasing adoption of artificial intelligence (AI) in education has catalyzed the emergence of intelligent systems aimed at supporting a wide range of pedagogical tasks, including instructional design, assessment automation, and personalized learning pathways [1]. Among these innovations, automated lesson planning systems have gained notable traction for their potential to reduce the administrative burden on educators, promote consistency in curriculum delivery, and align learning activities with predefined educational standards [2]. By leveraging advances in natural language processing, machine learning, and knowledge representation, such systems aim to generate structured, goal-oriented lesson content that can adapt to diverse learner profiles and institutional contexts [3]. This shift reflects a broader trend toward data-driven education, where AI not only facilitates efficiency but also opens new possibilities for scalable, equitable, and evidence-based teaching practices [4]. However, while existing AI-driven solutions generate learning content efficiently, they often lack transparency in decision-making, raising concerns about pedagogical validity and trustworthiness [5]. To address this, eXplainable AI (XAI) offers a promising avenue by enabling stakeholders, including teachers, curriculum developers, and educational administrators, to understand, interpret, and validate AI-generated instructional outputs [6].

A critical benchmark for evaluating the pedagogical soundness of lesson plans is Bloom’s Taxonomy, a hierarchical model that classifies educational objectives into cognitive levels: remembering, understanding, applying, analyzing, evaluating, and creating [7]. Alignment of lesson plans with this taxonomy ensures that learning outcomes are well-structured and cognitively diverse. Yet, manual alignment is often subjective, inconsistent, and time-consuming, especially in large-scale or dynamic learning environments [8].

This paper introduces a novel XAI framework for automated lesson plan generation and semantic alignment with Bloom’s Taxonomy. The proposed system combines natural language processing, ontology-driven reasoning, and interpretable machine learning to generate topic-appropriate lesson content while explicitly mapping objectives to Bloom’s cognitive levels. Unlike black-box models, our approach emphasizes traceability and educator-centered explanations to foster trust, transparency, and curriculum compliance [9].

By bridging instructional design with XAI, this framework contributes to the growing field of AI-enhanced education while addressing critical gaps in transparency, pedagogical coherence, and teacher agency. The results demonstrate the potential of XAI to support educators in co-designing robust and accountable lesson plans that meet curriculum standards and support diverse learner needs.

2. Literature

2.1. Bloom’s Taxonomy and AI Integration in Education

Bloom’s Taxonomy has long served as a foundational framework for designing and evaluating educational objectives. In the context of AI-enhanced education, researchers have explored how artificial intelligence can operationalize this taxonomy for instructional design and assessment. Hachoumi and Eddabbah [10] argue that the integration of Bloom’s taxonomy into AI systems can significantly improve both teaching strategies and learning outcomes, particularly in health sciences education. Their study emphasizes how AI tools, when aligned with cognitive taxonomies, support differentiated instruction, learner autonomy, and knowledge progression.

Hmoud and Ali [11] extend this discussion by proposing an “AI-Ed Bloom’s Taxonomy”—a model that redefines the original taxonomy for the AI era. Their work presents a multilayered framework that considers both human and AI roles in learning, offering an adaptive mechanism that aligns instructional tasks with evolving cognitive competencies. These contributions underscore the pedagogical value of aligning AI-generated instructional materials with cognitive taxonomies to ensure developmental coherence.

2.2. AI-Driven Assessment and Cognitive Alignment

Recent advances in education technology have focused on aligning AI-generated questions with Bloom’s levels to ensure cognitive rigor. Hwang et al. [12] proposed an automatic MCQ generation and grading system that is explicitly aligned with Bloom’s taxonomy. Their system improves question generation efficiency and also promotes cognitive diversity by producing questions at varied levels of the taxonomy. However, their evaluation was largely limited to small-scale classroom pilots, raising questions about scalability in heterogeneous learning settings.

Similarly, Yaacoub, Da-Rugna, and Assaghir [13] conducted an evaluation of AI-generated learning questions and their alignment with dominant cognitive models. While their findings indicate that large language models (LLMs) can produce valid instructional content, they also highlight internal inconsistency in how outputs map to expected cognitive levels. This inconsistency not only erodes trust in the automated process but reflects a broader challenge in AI-driven assessment—namely, the opaqueness of alignment determinations. Further, both studies offer little in the way of discussion of bias and fairness, as most models implicitly inherit cultural or linguistic bias from training data, potentially penalizing underrepresented cohorts of learners.

These limitations (limited scalability, susceptibility to bias, and weak pedagogical grounding) explain why current solutions remain predominantly descriptive or experimental rather than mature, real-world deployments. Our proposed framework addresses these gaps by including explainability capabilities that allow instructors to interpret and adjust alignment decisions, so that AI-generated assessments remain cognitively challenging and educationally equitable.

2.3. Student–Teacher–AI Collaboration in Instructional Design

Beyond automation, there is growing recognition of the need for collaborative, human-centered AI systems in education. Kwan et al. [14] examine how Bloom’s taxonomy can mediate interactions between students, teachers, and generative AI tools in flipped learning environments. Their study finds that Bloom-aligned GenAI prompts can scaffold critical thinking and enhance student engagement when transparently deployed as part of instructional planning. This aligns with the XAI perspective, where human teachers remain actively involved in shaping and validating AI-generated content.

Makina [15] also advocates for frameworks that promote critical thinking in AI-mediated assessments. Her work calls for the development of systems that not only automate task generation but also foster higher-order reasoning, argumentation, and reflection—key components of Bloom’s upper taxonomy levels. This reinforces the necessity of cognitive transparency in AI-generated lesson plans and assessments.

2.4. Multimodal and Adaptive AI in Future Curriculum Development

Looking to the future, Maoz [16] explores how multimodal AI systems (e.g., combining text, voice, and visual cues) can be leveraged to enhance skill acquisition in engineering education. He highlights the importance of adaptation and alignment with cognitive learning goals, suggesting that curriculum design tools must incorporate both explainability and personalization. These findings are relevant for expanding lesson planning frameworks to support diverse learning modalities while ensuring taxonomic coherence.

While Hachoumi and Eddabbah [10] and Hmoud and Ali [11] emphasize the need to align AI with Bloom’s taxonomy, their contributions remain largely theoretical and offer no concrete mechanisms for explainability. Similarly, Hwang et al. [12] and Yaacoub et al. [13] address automated question generation but do not achieve sustained alignment across the levels of the taxonomy, particularly for higher-order thinking items. Kwan et al. [14] and Makina [15] highlight human-AI collaboration and critical thinking but stop short of proposing systematic means of auditing AI-generated content. Finally, Maoz [16] introduces multimodal adaptability but addresses explainability and teacher-directed interpretability only briefly. Taken together, these studies advance either automation or alignment but do not combine both with transparent explanations. Our proposed framework addresses these shortcomings by offering a unified solution that combines cognitive rigor, automatic content generation, and human-in-the-loop interpretability.

Overall, the reviewed literature illustrates a strong consensus on the value of Bloom’s taxonomy in guiding AI-generated educational materials. However, most existing systems focus on either automation [12,13] or cognitive alignment [10,11,14], but rarely both in an explainable manner. Few works address how educators can audit, interpret, and refine AI outputs in lesson design, especially in complex learning contexts.

This paper addresses the identified scarcity of explainable AI techniques in lesson plan generation by presenting a practical XAI framework that ensures semantic alignment with Bloom’s taxonomy and enhances pedagogical transparency. By connecting semantic-aware AI techniques to well-documented educational taxonomies, our framework closes the gap between black-box generative models and the need for understandable, pedagogically informed lesson planning tools.

3. Materials and Methods

This section presents the conceptual basis for aligning AI-generated lesson plans with pedagogically sound frameworks, focusing on Bloom’s Revised Taxonomy and the foundational principles of XAI. The integration of these theories provides the backbone for generating educational content that is both instructionally valid and interpretably aligned with human teaching standards.

3.1. Bloom’s Revised Taxonomy: A Hierarchical Model of Learning Cognition

Bloom’s Revised Taxonomy, proposed by Anderson and Krathwohl (2001) [5], restructured the original 1956 framework to better reflect the cognitive processes involved in learning [17]. It introduces a two-dimensional classification:

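1. Cognitive Process Dimension (CPD): Encompasses six hierarchical levels: $\mathcal{C} = \{\text{Remember}, \text{Understand}, \text{Apply}, \text{Analyze}, \text{Evaluate}, \text{Create}\}$.

2. Knowledge Dimension (KD): Covers four categories: $\mathcal{K} = \{\text{Factual}, \text{Conceptual}, \text{Procedural}, \text{Metacognitive}\}$.

Each learning objective can be modeled as a tuple $(c, k)$, where $c \in \mathcal{C}$ and $k \in \mathcal{K}$. This two-dimensional structure enables fine-grained categorization and supports both lesson objective classification and task generation in our proposed AI framework.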

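Example:

For the learning objective “Analyze the causes of the Cold War”, the action verb places the objective at the Analyze level of the cognitive process dimension, and it is paired with the knowledge category that best describes the content being analyzed.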

The hierarchical ordering ensures that the system can dynamically scaffold content to match learner proficiency.
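The two-dimensional labeling and its hierarchical ordering can be sketched in a few lines of Python. This is a minimal illustration rather than the authors’ implementation: the class names `CognitiveLevel`, `KnowledgeType`, and `TaxonomyLabel`, and the helper `levels_below`, are hypothetical names introduced here.

```python
from dataclasses import dataclass
from enum import Enum, IntEnum


class CognitiveLevel(IntEnum):
    """Six cognitive process levels, ordered from lowest to highest."""
    REMEMBER = 1
    UNDERSTAND = 2
    APPLY = 3
    ANALYZE = 4
    EVALUATE = 5
    CREATE = 6


class KnowledgeType(Enum):
    """Four knowledge categories (unordered)."""
    FACTUAL = "Factual"
    CONCEPTUAL = "Conceptual"
    PROCEDURAL = "Procedural"
    METACOGNITIVE = "Metacognitive"


@dataclass(frozen=True)
class TaxonomyLabel:
    """A (cognitive level, knowledge type) pair attached to one objective."""
    cognitive: CognitiveLevel
    knowledge: KnowledgeType


def levels_below(level: CognitiveLevel) -> list[CognitiveLevel]:
    """Return the levels that precede `level` in the hierarchy.

    A scaffolding component could use these as prerequisite stages.
    """
    return [lower for lower in CognitiveLevel if lower < level]


# "Identify the components of a plant cell" is annotated (Remember, Factual).
plant_cell_label = TaxonomyLabel(CognitiveLevel.REMEMBER, KnowledgeType.FACTUAL)
```

Using an `IntEnum` for the cognitive dimension makes the ordering explicit, so comparisons such as `CognitiveLevel.ANALYZE > CognitiveLevel.APPLY` work directly, while the knowledge dimension stays a plain `Enum` because the text does not rely on an ordering for it.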

3.2. Alignment Function and Taxonomic Embedding

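To enable computational alignment, we define a taxonomic alignment function $\mathcal{A}$, which maps a textual learning objective $x$ to an $n$-dimensional semantic representation. The goal is to learn a function:

$$\mathcal{A}(x) = g\big(E(x)\big) \in \mathbb{R}^{n}$$

where $E$ is the text encoder and $g$ is a task-specific transformation layer (e.g., feedforward neural net), and the output is subsequently passed to a classifier that predicts:

$$(\hat{c}, \hat{k}) = \mathrm{Classifier}\big(\mathcal{A}(x)\big)$$

In training, the model minimizes a composite loss:

$$\mathcal{L}_{\mathrm{cls}} = \lambda_1 \mathcal{L}_{\mathrm{CPD}} + \lambda_2 \mathcal{L}_{\mathrm{KD}}$$

where $\mathcal{L}_{\mathrm{CPD}}$ and $\mathcal{L}_{\mathrm{KD}}$ are categorical cross-entropy losses for the cognitive and knowledge dimension predictions, respectively, and $\lambda_1$, $\lambda_2$ are task weights.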

3.3. eXplainable AI (XAI) for Pedagogical Transparency

Explainability is a central concern in educational AI systems, as educators require justifiable insights into AI-generated lesson elements [18]. In this framework, we integrate both post hoc and intrinsic explainability techniques [19].

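Let the model prediction for a lesson objective $x$ be $\hat{y} = f(x)$. A post hoc explainer (e.g., SHAP, LIME) generates an attribution vector:

$$\boldsymbol{\phi} = (\phi_1, \phi_2, \dots, \phi_T)$$

where $\phi_j$ represents the contribution of the $j$-th input token or phrase toward the predicted Bloom level. These attributions are visualized as heatmaps or saliency overlays to support educator verification and revision.

Additionally, attention-based interpretability is embedded within the transformer backbone:

$$\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\!\left(\frac{QK^{\top}}{\sqrt{d_k}}\right)V$$

where $Q$, $K$, and $V$ are the query, key, and value matrices, $d_k$ is the key dimension, and the attention weights $\mathrm{softmax}(QK^{\top}/\sqrt{d_k})$ serve as intrinsic indicators of semantic relevance in the learning objective.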

3.4. Pedagogical Justification of AI Decisions

To satisfy pedagogical rigor, the model’s predictions are assessed along three axes:

- Cognitive Fidelity: Does the AI-generated level match expert Bloom classification?

- Explanatory Soundness: Do the highlighted phrases align with human-understood cues?

- Instructional Coherence: Is the generated lesson activity logically suitable for the predicted Bloom level?

These criteria are used not only for evaluation, but also as training signals in fine-tuning the alignment model using feedback from educational experts.

This theoretical foundation ensures that the proposed system can not only automate lesson planning, but also maintain instructional validity, transparency, and educator trust, which are essential for adoption in real-world educational environments.

3.5. Dataset and Annotation Scheme

This section describes the lesson plan dataset used in developing the proposed XAI framework, including the structure of the data, the preprocessing methods employed, the Bloom’s Taxonomy-based annotation process, and statistical characteristics. The dataset comprises locally sourced educational materials that reflect authentic teaching practices, forming a robust foundation for both modeling and evaluation.

3.5.1. Dataset Description

The dataset consists of 3000 structured lesson plans obtained from primary and secondary school teachers across multiple educational districts. Each lesson plan is treated as a structured record with fields such as lesson_id, subject, class_level, topic, learning_objectives, instructional_activities, assessment_items, and instructional_materials. These fields collectively capture the pedagogical intent, instructional process, and assessment strategy associated with each lesson. For example, a lesson in Grade 8 Biology titled “Plant Cell Structure” may include learning objectives like “Identify the components of a plant cell,” paired with instructional activities such as labeling diagrams and assessments involving short-answer questions.

3.5.2. Preprocessing and Normalization

To prepare the dataset for machine learning and taxonomic classification, a standardized preprocessing pipeline was implemented. First, learning objectives were extracted and segmented into discrete lines using rule-based NLP techniques. Following extraction, all textual data were cleaned to remove non-instructional tokens, punctuation artifacts, and formatting symbols. To ensure model compatibility, tokenization was applied using a pre-trained BERT tokenizer. Furthermore, curricular terms were normalized to ensure consistency across different regions; for instance, “SS1” and “Grade 10” were harmonized under a common class level taxonomy. These preprocessing steps were crucial for reducing noise and promoting semantic consistency in downstream modeling.
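The rule-based parts of this pipeline (objective segmentation, cleaning, and class-level normalization) can be sketched as follows. This is an illustrative sketch under stated assumptions, not the pipeline used in the study: the function names and the `CLASS_LEVEL_ALIASES` table are hypothetical, only the “SS1” to “Grade 10” pair comes from the text, and the BERT tokenization step is omitted.

```python
import re

# Hypothetical lookup table: only the "SS1" -> "Grade 10" pair comes from the text.
CLASS_LEVEL_ALIASES = {"ss1": "Grade 10"}

# Leading list markers such as "1.", "2)", "-", "*", or a bullet character.
_LIST_MARKER = re.compile(r"^\s*(?:\d+[.)]|[-*\u2022])\s*")


def normalize_class_level(raw: str) -> str:
    """Map regional class labels onto a common 'Grade N' form."""
    key = re.sub(r"\s+", "", raw).lower()
    if key in CLASS_LEVEL_ALIASES:
        return CLASS_LEVEL_ALIASES[key]
    match = re.fullmatch(r"grade(\d+)", key)
    if match:
        return f"Grade {int(match.group(1))}"
    return raw.strip()  # unknown labels pass through unchanged


def split_objectives(block: str) -> list[str]:
    """Split a raw objectives field into one cleaned objective per entry."""
    objectives = []
    for piece in re.split(r"[\n;]+", block):
        text = _LIST_MARKER.sub("", piece)           # drop list numbering
        text = re.sub(r"\s+", " ", text).strip(" .")  # collapse spaces, trim
        if text:
            objectives.append(text)
    return objectives
```

For example, `normalize_class_level("SS1")` and `normalize_class_level("grade 10")` both map to the same class-level string, so records from different regions can be grouped together.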

3.5.3. Annotation Scheme for Bloom’s Taxonomy

To facilitate alignment with Bloom’s Revised Taxonomy, each learning objective was annotated with two key dimensions: the Cognitive Process Dimension (CPD) and the Knowledge Dimension (KD). The cognitive levels are defined as $\mathcal{C} = \{\text{Remember}, \text{Understand}, \text{Apply}, \text{Analyze}, \text{Evaluate}, \text{Create}\}$, while the knowledge types are represented as $\mathcal{K} = \{\text{Factual}, \text{Conceptual}, \text{Procedural}, \text{Metacognitive}\}$. Each learning objective is thus represented as a tuple $(c, k)$, where $c \in \mathcal{C}$ and $k \in \mathcal{K}$.

As an illustrative example, the learning objective “Identify the components of a plant cell” was annotated as $(\text{Remember}, \text{Factual})$, reflecting the task’s requirement to recall specific biological terms. The annotation schema was operationalized in JSON format for ease of parsing, as exemplified below:

    {
      "objective": "Identify the components of a plant cell",
      "bloom_level": "Remember",
      "knowledge_type": "Factual"
    }
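A record in this schema can be parsed and checked against the two label sets before it enters training. The sketch below is an assumption about how such validation might look; the function name `parse_annotation` is hypothetical, while the field names and label values follow the example record.

```python
import json

COGNITIVE_LEVELS = ("Remember", "Understand", "Apply",
                    "Analyze", "Evaluate", "Create")
KNOWLEDGE_TYPES = ("Factual", "Conceptual", "Procedural", "Metacognitive")
REQUIRED_FIELDS = ("objective", "bloom_level", "knowledge_type")


def parse_annotation(raw: str) -> tuple[str, str, str]:
    """Parse one JSON annotation and validate its taxonomy labels."""
    record = json.loads(raw)
    missing = [name for name in REQUIRED_FIELDS if name not in record]
    if missing:
        raise ValueError(f"missing fields: {missing}")
    if record["bloom_level"] not in COGNITIVE_LEVELS:
        raise ValueError(f"unknown bloom_level: {record['bloom_level']!r}")
    if record["knowledge_type"] not in KNOWLEDGE_TYPES:
        raise ValueError(f"unknown knowledge_type: {record['knowledge_type']!r}")
    return record["objective"], record["bloom_level"], record["knowledge_type"]


example = """
{
  "objective": "Identify the components of a plant cell",
  "bloom_level": "Remember",
  "knowledge_type": "Factual"
}
"""
objective, level, knowledge = parse_annotation(example)
```

Rejecting unknown labels at load time keeps misspelled or out-of-scheme annotations from silently becoming extra classes.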

3.5.4. Annotation Workflow and Quality Assurance

The annotation process was carried out by two education specialists with formal training in curriculum development and assessment. Prior to annotation, a calibration exercise was conducted using 100 curated examples that spanned all Bloom levels and knowledge types. Each learning objective was annotated independently by the two raters, and inter-rater reliability was measured using Cohen’s Kappa coefficient [20], defined as:

$$\kappa = \frac{p_o - p_e}{1 - p_e}$$

where $p_o$ represents the observed agreement and $p_e$ denotes the probability of agreement by chance. The annotation process achieved a Cohen’s Kappa score indicating strong inter-rater agreement. Disagreements, which constituted approximately 12% of the annotated cases, were resolved through joint adjudication sessions and final verification by a domain expert. This ensured a high-quality, pedagogically valid annotation set suitable for supervised learning.
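For two raters, Cohen’s Kappa can be computed directly from the two label sequences: the observed agreement is the fraction of matching labels, and the chance agreement is the sum over labels of the product of each rater’s marginal label frequencies. The sketch below uses made-up toy labels, not the study’s annotations, and the function name is introduced here for illustration.

```python
from collections import Counter


def cohens_kappa(rater_a: list[str], rater_b: list[str]) -> float:
    """Cohen's Kappa for two raters labeling the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must label the same non-empty item set")
    n = len(rater_a)

    # Observed agreement: fraction of items with identical labels.
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n

    # Chance agreement: product of the raters' marginal label frequencies.
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    p_e = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)

    if p_e == 1.0:
        # Degenerate case: both raters used a single identical label.
        # Kappa is undefined here; returning 1.0 is a convention chosen
        # for this sketch.
        return 1.0
    return (p_o - p_e) / (1.0 - p_e)


# Toy labels for illustration only.
toy_a = ["Remember", "Remember", "Understand", "Understand"]
toy_b = ["Remember", "Remember", "Understand", "Remember"]
toy_kappa = cohens_kappa(toy_a, toy_b)
```

In the toy case the raters agree on three of four items ($p_o = 0.75$) and the chance agreement is $p_e = 0.5$, so the coefficient is $0.5$.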

3.5.5. Dataset Statistics

The annotated dataset exhibits robust coverage across subjects, class levels, and cognitive skill types. A summary of dataset statistics is presented in Table 1.

Table 1.

Summary of Annotated Dataset.

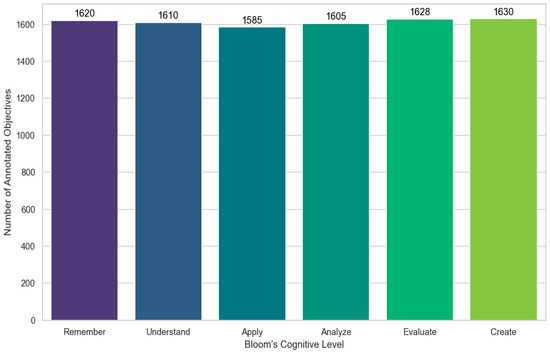

The dataset includes subjects spanning both science and humanities, ensuring that the AI model is exposed to a wide spectrum of instructional phrasing and complexity levels. Figure 1 (shown below) visualizes the distribution of annotated objectives across the six cognitive process levels of Bloom’s Taxonomy.

Figure 1.

Distribution of Learning Objectives Across Bloom’s Cognitive Levels.

This distributional balance was critical for training a classifier that avoids bias toward lower-order or higher-order thinking skills.

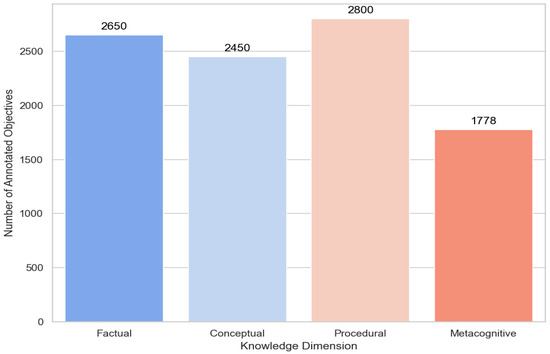

3.5.6. Distribution Across Knowledge Dimensions

In addition to cognitive level classification, each learning objective was also annotated according to Bloom’s four knowledge dimensions: Factual, Conceptual, Procedural, and Metacognitive. The frequency distribution across these dimensions is summarized in Figure 2. The distribution reveals a relatively balanced representation across the first three dimensions, with Procedural knowledge being most prevalent (2800 objectives), followed by Factual (2650) and Conceptual (2450). The Metacognitive dimension, which reflects awareness of one’s own learning strategies, is comparatively underrepresented (1778 objectives), consistent with the nature of traditional lesson plans, which often emphasize surface-level and procedural skills.

Figure 2.

Distribution of Learning Objectives Across Knowledge Dimensions.

This pattern suggests a need for improved instructional focus on reflective and strategic thinking in curriculum development. A tabular summary of the distribution is presented in Table 2.

Table 2.

Knowledge Dimension Statistics.

3.6. Proposed XAI-Based Framework

This section outlines the architectural design and functional components of the proposed XAI framework for automated lesson plan generation and alignment with Bloom’s Taxonomy. The framework is designed to not only classify learning objectives along cognitive and knowledge dimensions but also generate pedagogically coherent instructional content while offering interpretable outputs that justify the AI’s decisions to educators.

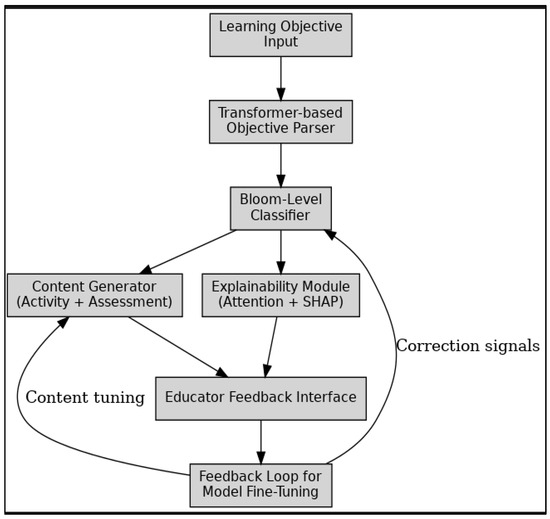

3.6.1. System Architecture Overview

The proposed framework is composed of five major modules operating in a pipeline: (1) Objective Parser, (2) Bloom-Level Classifier, (3) Content Generator, (4) Explainability Module, and (5) Evaluation & Feedback Loop.

A high-level schematic of the framework is presented in Figure 3, which illustrates the flow of data and decision logic across components.

Figure 3.

Architecture of the eXplainable AI Framework for Lesson Plan Generation and Bloom Alignment.

3.6.2. Learning Objective Parsing and Encoding

The first module is a Learning Objective Parser, responsible for syntactic and semantic analysis of raw learning objectives. Each objective $x$ is passed through a pretrained transformer encoder $E_\theta$, such that:

$$\mathbf{h} = E_\theta(x), \quad \mathbf{h} \in \mathbb{R}^{d}$$

where $\mathbf{h}$ is a high-dimensional embedding representing the semantic content of the input objective. The transformer backbone used is a fine-tuned version of DistilBERT [21], chosen for its balance between performance and efficiency.

3.6.3. Bloom-Level Classification Module

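The encoded vector $\mathbf{h}$ is fed into a multi-head classification network that simultaneously predicts the cognitive level $\hat{c}$ and knowledge type $\hat{k}$. Formally, the model learns two mappings:

$$f_{c}: \mathbb{R}^{d} \to \mathcal{C}, \qquad f_{k}: \mathbb{R}^{d} \to \mathcal{K}$$

where $\mathcal{C}$ and $\mathcal{K}$ denote the sets of cognitive levels and knowledge types.

The training objective is to minimize a joint cross-entropy loss over both predictions:

$$\mathcal{L}_{\mathrm{cls}} = \lambda_1 \mathcal{L}_{\mathrm{CPD}} + \lambda_2 \mathcal{L}_{\mathrm{KD}}$$

where $\mathcal{L}_{\mathrm{CPD}}$ and $\mathcal{L}_{\mathrm{KD}}$ are the categorical cross-entropy losses for the predicted Bloom cognitive and knowledge dimensions, respectively, and $\lambda_1$, $\lambda_2$ are hyperparameters balancing the task weights. In our experiments, we set $\lambda_1 = \lambda_2 = 0.5$ to encourage equal emphasis on both dimensions.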

3.6.4. Lesson Content Generator

Once Bloom levels are predicted, the Content Generator module produces instructional materials aligned with the predicted taxonomy level. These include suggested instructional activities tailored to the cognitive level (e.g., “define” for Remember, “design a model” for Create) and assessment prompts consistent with the knowledge type (e.g., “list factual data”, “critique conceptual frameworks”).

Content generation is implemented via a rule-guided template engine augmented with a GPT-2 language model fine-tuned on educational content. GPT-2 was intentionally selected over larger models such as GPT-3/4 to ensure local deployability, reduced computational overhead, and strict compliance with educational data governance policies. While newer models may offer superior linguistic fluency, GPT-2 provides greater controllability and traceability, attributes crucial for classroom transparency and ethical oversight. The input to the generator is a structured prompt:

$$P = \mathrm{Prompt}(x, \hat{c}, \hat{k})$$

which combines the objective $x$ with the predicted cognitive level $\hat{c}$ and knowledge type $\hat{k}$.

The output is generated as:

$$G = \mathrm{GPT\text{-}2}_{\psi}(P)$$

This hybrid generation approach ensures both taxonomy-aligned scaffolding and contextually rich generation.
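The prompt-construction step of this hybrid approach can be illustrated with a short sketch. The prompt layout, the function names, and the `ACTIVITY_STEMS` table are assumptions made for illustration; the text does not specify the actual prompt format, and only the two verb stems shown are taken from its examples. The call to the fine-tuned GPT-2 model is left out.

```python
from typing import Optional

# Rule-guided verb stems per cognitive level. Only these two entries appear
# as examples in the text; a full table would cover all six levels.
ACTIVITY_STEMS = {
    "Remember": "define",
    "Create": "design a model",
}


def activity_stem(bloom_level: str) -> Optional[str]:
    """Return a template verb stem for the level, if one is defined."""
    return ACTIVITY_STEMS.get(bloom_level)


def build_prompt(objective: str, bloom_level: str, knowledge_type: str) -> str:
    """Assemble a structured prompt for the generator (hypothetical layout)."""
    lines = [
        f"Objective: {objective}",
        f"Bloom level: {bloom_level}",
        f"Knowledge type: {knowledge_type}",
    ]
    stem = activity_stem(bloom_level)
    if stem is not None:
        lines.append(f"Suggested verb: {stem}")
    lines.append("Instructional activity:")
    return "\n".join(lines)


prompt = build_prompt(
    "Identify the components of a plant cell", "Remember", "Factual"
)
```

Keeping the taxonomy labels as explicit fields in the prompt means the conditioning signal stays visible and auditable, which fits the traceability argument made for choosing GPT-2.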

3.6.5. Explainability Module

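To promote trust and transparency, the framework integrates a dual-layered explainability engine. First, intrinsic interpretability is achieved through the self-attention mechanisms of the transformer encoder. The attention weights highlight tokens that contributed most to the predicted Bloom level. For a given input objective $x$, the attention distribution is computed as:

$$A = \mathrm{softmax}\!\left(\frac{QK^{\top}}{\sqrt{d_k}}\right)$$

where $Q$ and $K$ are the query and key matrices computed from the tokens of $x$ and $d_k$ is the key dimension.

Second, post hoc explanations are generated using SHAP (SHapley Additive exPlanations), which produces a set of feature attributions for each token in the input. The explanation vector is:

$$\boldsymbol{\phi} = (\phi_1, \phi_2, \dots, \phi_T)$$

where $\phi_j$ is the attribution of the $j$-th of $T$ input tokens.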

These explanations are visualized via heatmaps, which are presented alongside the generated lesson content in the educator-facing interface, allowing users to see why a particular Bloom level was assigned to a given objective.

3.7. Evaluation and Feedback Integration

The framework is designed with a feedback loop that incorporates human-in-the-loop correction. Educators can accept, edit, or reject AI-suggested Bloom levels and content. These edits are logged and used to fine-tune the classifier and decoder modules over time, enabling continuous pedagogical alignment and adaptive learning.

The feedback data is stored in a correction buffer and periodically used to update the model weights via supervised fine-tuning, with the training loss recomputed on the educator-corrected labels.
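A correction buffer of this kind can be sketched as a small container that records educator decisions and releases a batch once enough have accumulated. The class names, the capacity threshold, and the choice to discard rejected suggestions are all assumptions made for illustration; the fine-tuning step itself is not shown.

```python
from dataclasses import dataclass, field


@dataclass
class Correction:
    objective: str
    predicted_level: str
    final_level: str  # label confirmed or supplied by the educator


@dataclass
class CorrectionBuffer:
    capacity: int = 100  # hypothetical batch size that triggers fine-tuning
    items: list = field(default_factory=list)

    def log(self, objective, predicted_level, action, edited_level=None):
        """Record one educator decision: 'accept', 'edit', or 'reject'."""
        if action == "accept":
            final = predicted_level
        elif action == "edit":
            if edited_level is None:
                raise ValueError("an edit needs the educator's corrected level")
            final = edited_level
        elif action == "reject":
            return  # no trusted label to learn from, so nothing is stored
        else:
            raise ValueError(f"unknown action: {action}")
        self.items.append(Correction(objective, predicted_level, final))

    def ready(self) -> bool:
        """True once enough corrections exist for a fine-tuning pass."""
        return len(self.items) >= self.capacity

    def drain(self) -> list:
        """Hand over the stored corrections and empty the buffer."""
        batch, self.items = self.items, []
        return batch
```

Accepted predictions are kept alongside edited ones so that a fine-tuning batch reinforces correct behavior as well as fixing mistakes; whether the original system does the same is not stated.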

3.8. Mathematical Formulation and Model Design

This section provides the formal mathematical underpinning of the proposed XAI framework for lesson plan generation and Bloom-level alignment. It defines the input-output mappings, multi-task objective structure, model architecture, and mechanisms for embedding interpretability within both classification and content generation processes.

3.8.1. Problem Formulation

Given a corpus of annotated lesson objectives $\mathcal{D} = \{(x_i, c_i, k_i)\}_{i=1}^{N}$, where each $x_i$ is a learning objective paired with its associated Bloom cognitive level $c_i \in \mathcal{C}$ and knowledge type $k_i \in \mathcal{K}$, the goal is twofold:

- Predict the correct Bloom-level classification $(\hat{c}_i, \hat{k}_i)$ from raw text $x_i$.

- Generate content $G_i$ (instructional activities and assessments) that is pedagogically aligned with the predicted taxonomy levels.

The model must also output interpretable explanations $\boldsymbol{\phi}_i \in \mathbb{R}^{T}$, where $T$ is the number of input tokens, to justify its predictions.

3.8.2. Input Representation and Embedding Layer

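Each learning objective $x$ is tokenized and embedded using a pretrained language model $E_\theta$, such as DistilBERT. The encoded representation is denoted:

$$H = E_\theta(x) = [\mathbf{h}_1, \mathbf{h}_2, \dots, \mathbf{h}_T], \quad \mathbf{h}_j \in \mathbb{R}^{d}$$

To obtain a fixed-size representation, we apply a weighted average of token embeddings using attention-based pooling:

$$\mathbf{z} = \sum_{j=1}^{T} \alpha_j \mathbf{h}_j$$

and

$$\alpha_j = \frac{\exp(e_j)}{\sum_{t=1}^{T} \exp(e_t)}$$

where $e_j$ is a learned relevance score for token $j$. This results in an interpretable sentence vector $\mathbf{z}$, which preserves attention scores for each input token.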
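Attention-based pooling amounts to a softmax over per-token relevance scores followed by a weighted average of the token embeddings. The plain-Python sketch below assumes the scores are already given (in a trained model they would come from a learned scoring layer) and uses tiny made-up vectors; the function names are introduced here for illustration.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_pool(token_embeddings: list[list[float]],
                   scores: list[float]) -> tuple[list[float], list[float]]:
    """Return the pooled sentence vector and the per-token weights."""
    if len(token_embeddings) != len(scores):
        raise ValueError("need exactly one score per token embedding")
    weights = softmax(scores)
    dim = len(token_embeddings[0])
    pooled = [
        sum(w * emb[d] for w, emb in zip(weights, token_embeddings))
        for d in range(dim)
    ]
    return pooled, weights


# Two toy 2-dimensional token embeddings with equal relevance scores.
pooled_vec, token_weights = attention_pool([[1.0, 0.0], [0.0, 1.0]], [0.0, 0.0])
```

Returning the weights together with the pooled vector is what makes the representation inspectable: the same numbers that form the sentence vector can be shown to the educator as token-level highlights.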

3.8.3. Multi-Task Bloom-Level Classification

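The pooled vector $\mathbf{z}$ is passed through two separate classification heads for cognitive process $c$ and knowledge type $k$:

$$\hat{\mathbf{p}}^{c} = \mathrm{softmax}(W_c \mathbf{z} + \mathbf{b}_c), \qquad \hat{\mathbf{p}}^{k} = \mathrm{softmax}(W_k \mathbf{z} + \mathbf{b}_k)$$

We train the model using a joint loss function combining the classification losses for both tasks:

$$\mathcal{L}_{\mathrm{cls}} = \lambda_1 \mathcal{L}_{\mathrm{CPD}} + \lambda_2 \mathcal{L}_{\mathrm{KD}}$$

with

$$\mathcal{L}_{\mathrm{CPD}} = -\frac{1}{N} \sum_{i=1}^{N} \log \hat{p}^{c}_{i, c_i}$$

where $\hat{p}^{c}_{i, c_i}$ is the predicted probability for the true cognitive class of the $i$-th of $N$ training objectives, and $\mathcal{L}_{\mathrm{KD}}$ is defined analogously for the knowledge type. Hyperparameters $\lambda_1$ and $\lambda_2$ control task importance and are empirically set to 0.5.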
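As a worked illustration of the joint objective, the snippet below evaluates the weighted sum of the two cross-entropy terms for a single objective, with both task weights at 0.5 as stated. The probability vectors are made-up numbers and the function names are hypothetical; a real training loop would average this quantity over a batch.

```python
import math


def cross_entropy(probs: list[float], true_index: int) -> float:
    """Categorical cross-entropy for one example: -log p(true class)."""
    return -math.log(probs[true_index])


def joint_loss(cpd_probs: list[float], cpd_true: int,
               kd_probs: list[float], kd_true: int,
               lam1: float = 0.5, lam2: float = 0.5) -> float:
    """Weighted sum of the cognitive-level and knowledge-type losses."""
    return (lam1 * cross_entropy(cpd_probs, cpd_true)
            + lam2 * cross_entropy(kd_probs, kd_true))


# Toy predictions: six cognitive levels, four knowledge types.
toy_cpd = [0.5, 0.1, 0.1, 0.1, 0.1, 0.1]   # true class at index 0
toy_kd = [0.25, 0.25, 0.25, 0.25]          # true class at index 1
toy_loss = joint_loss(toy_cpd, 0, toy_kd, 1)
```

Here the two terms are $-\log 0.5$ and $-\log 0.25$, so the joint loss equals $0.5 \log 2 + 0.5 \log 4 = 1.5 \log 2$.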

3.8.4. XAI Integration via SHAP and Attention

Explainability is embedded into the system via two complementary strategies:

Intrinsic Interpretability: Attention weights from the pooling mechanism directly quantify each token’s influence on the prediction. These scores are visualized as part of the model’s real-time explanation interface.

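Post Hoc SHAP Explanations: Given a model prediction $\hat{y} = f(x)$, SHAP values estimate the marginal contribution of each token:

$$f(x) = \phi_0 + \sum_{j=1}^{T} \phi_j$$

where $\phi_0$ is the baseline (expected) model output and $\phi_j$ is the attribution of the $j$-th of $T$ input tokens.

This additive model satisfies desirable properties such as local accuracy and consistency and provides token-level rationales for user-facing explanations.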
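The local accuracy property can be checked on a toy example by computing exact Shapley values over all token subsets. Note that this is a brute-force calculation written for illustration, not the SHAP library’s estimator, and `toy_evaluate_score` is a made-up scoring function standing in for the classifier’s output for the Evaluate level.

```python
from itertools import combinations
from math import factorial


def shapley_values(tokens, value_fn):
    """Exact Shapley value of each token under `value_fn`.

    `value_fn` takes the list of tokens that are present and returns a
    score. The cost is exponential in len(tokens), so this only suits
    very short inputs.
    """
    n = len(tokens)
    phis = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        phi = 0.0
        for size in range(n):
            # Shapley weight for coalitions of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                with_i = value_fn([tokens[j] for j in sorted(subset + (i,))])
                without_i = value_fn([tokens[j] for j in subset])
                phi += weight * (with_i - without_i)
        phis.append(phi)
    return phis


def toy_evaluate_score(present):
    """Made-up score: 'critique' matters most, 'policy' adds to it."""
    score = 0.1  # baseline with no informative tokens
    if "critique" in present:
        score += 0.6
        if "policy" in present:
            score += 0.2
    return score


toy_tokens = ["critique", "the", "policy"]
toy_phis = shapley_values(toy_tokens, toy_evaluate_score)
toy_baseline = toy_evaluate_score([])
```

In this toy setting the attributions sum, together with the baseline, to the score of the full input, which is the local accuracy property; the interaction between “critique” and “policy” is split evenly between the two tokens, and the uninformative token receives zero.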

3.8.5. Pedagogical Content Generation Model

Given the predicted taxonomy tuple $(\hat{c}, \hat{k})$, a structured prompt is constructed:

$$P = \mathrm{Prompt}(x, \hat{c}, \hat{k})$$

The prompt is fed into a fine-tuned GPT-2 decoder, generating aligned instructional content:

$$G = \mathrm{GPT\text{-}2}_{\psi}(P)$$

This decoder is trained with teacher-curated pairs of Bloom-aligned inputs and outputs using language modeling loss $\mathcal{L}_{\mathrm{gen}}$, allowing it to produce scaffolded, curriculum-aligned content.

3.8.6. End-to-End Training Objective

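The complete loss function for end-to-end optimization is:

$$\mathcal{L}_{\mathrm{total}} = \mathcal{L}_{\mathrm{cls}} + \lambda_3 \mathcal{L}_{\mathrm{gen}} + \lambda_4 \mathcal{L}_{\mathrm{XAI}}$$

where $\mathcal{L}_{\mathrm{cls}}$ is the joint classification loss, $\mathcal{L}_{\mathrm{gen}}$ is the language modeling loss of the generator, and $\mathcal{L}_{\mathrm{XAI}}$ is a regularization term enforcing sparsity or fidelity in attention explanations (e.g., entropy loss or alignment with SHAP). The weights $\lambda_3$ and $\lambda_4$ were set empirically.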

The mathematical design ensures that the system jointly optimizes for taxonomic accuracy, generative quality, and explainability, which is a requirement for trust, interpretability, and pedagogical utility in classroom applications.

3.8.7. Experimental Settings

The proposed framework was developed using Python 3.10, leveraging PyTorch 2.2.0, Hugging Face Transformers, and SHAP to build and explain a multi-task model for Bloom’s Taxonomy-based lesson planning. It uses DistilBERT for classification and GPT-2 for generating instructional content, all within a Dockerized pipeline. Training was performed on a high-performance computing setup featuring an NVIDIA RTX 4090 GPU, with hyperparameters tuned for both classification and generation tasks. The pipeline includes modular stages for data preprocessing, model training, interpretability visualization, and human-in-the-loop feedback integration, ensuring accurate, transparent, and domain-adaptive lesson plan automation.

4. Results and Discussion

This section details the technical setup, development tools, model configurations, and deployment workflow used to implement and evaluate the proposed XAI framework. The focus is on ensuring reproducibility, efficiency, and real-world applicability of the system in educational settings.

4.1. Experimental Results and Evaluation

This section presents the empirical evaluation of the proposed XAI framework on the annotated lesson objective dataset described earlier. The evaluation is structured around three core objectives: (1) assessing the accuracy and robustness of Bloom-level classification, (2) evaluating the quality and pedagogical alignment of generated instructional content, and (3) validating the effectiveness of interpretability mechanisms in supporting human understanding and trust. All results reported in this section are based on the 3000-record lesson plan dataset with stratified 80–10–10 splits for training, validation, and testing, respectively.
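A stratified 80–10–10 split keeps the label proportions roughly equal across the three partitions by splitting each class separately. The sketch below is one simple way to do this in plain Python; the function name, the seed, and the rounding rule are assumptions, and the study’s actual splitting code is not described.

```python
import random
from collections import defaultdict


def stratified_split(labels, fractions=(0.8, 0.1, 0.1), seed=0):
    """Split item indices into train/val/test while preserving label ratios.

    Each label group is shuffled and cut separately; whatever remains after
    the train and validation cuts goes to the test partition.
    """
    by_label = defaultdict(list)
    for index, label in enumerate(labels):
        by_label[label].append(index)

    rng = random.Random(seed)
    train, val, test = [], [], []
    for label in sorted(by_label):
        indices = by_label[label]
        rng.shuffle(indices)
        n_train = round(fractions[0] * len(indices))
        n_val = round(fractions[1] * len(indices))
        train += indices[:n_train]
        val += indices[n_train:n_train + n_val]
        test += indices[n_train + n_val:]
    return train, val, test


# Toy labels: 50 items in each of two classes.
toy_labels = ["Remember"] * 50 + ["Create"] * 50
train_idx, val_idx, test_idx = stratified_split(toy_labels)
```

Splitting per class matters most for the rarer labels: a purely random split could leave the validation or test partition with very few examples of an underrepresented category.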

4.1.1. Bloom-Level Classification Accuracy

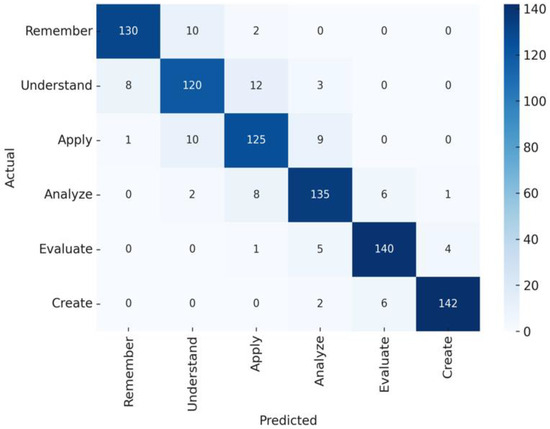

The classification model was evaluated using standard metrics: accuracy, precision, recall, and F1-score for both the cognitive level and knowledge type predictions. On the test set, the model achieved a macro-averaged F1-score of 91.8% for cognitive level classification and 89.6% for knowledge type classification. These results reflect the model’s ability to generalize across all six cognitive process categories and four knowledge types, even in the presence of linguistic variation in the formulation of learning objectives. The confusion matrix shown in Figure 4 reveals that the most common misclassifications occur between adjacent Bloom levels such as Understand and Apply, which are inherently semantically close. Nevertheless, the classifier demonstrates strong discrimination across all classes, indicating successful multi-task training and balanced representation in the dataset.
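The macro-averaged F1-score used here is the unweighted mean of the per-class F1-scores, so every Bloom level counts equally regardless of how many objectives it has. A minimal plain-Python sketch with toy labels (not the study’s predictions) is shown below; the function name is introduced for illustration.

```python
def macro_f1(y_true: list[str], y_pred: list[str]) -> float:
    """Unweighted mean of per-class F1 over all labels that occur."""
    labels = sorted(set(y_true) | set(y_pred))
    scores = []
    for label in labels:
        pairs = list(zip(y_true, y_pred))
        tp = sum(t == label and p == label for t, p in pairs)
        fp = sum(t != label and p == label for t, p in pairs)
        fn = sum(t == label and p != label for t, p in pairs)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        if precision + recall:
            scores.append(2 * precision * recall / (precision + recall))
        else:
            scores.append(0.0)
    return sum(scores) / len(scores)


# Toy example with one Understand objective misread as Apply.
toy_true = ["Understand", "Understand", "Apply", "Apply"]
toy_pred = ["Understand", "Apply", "Apply", "Apply"]
toy_macro_f1 = macro_f1(toy_true, toy_pred)
```

In the toy case the Understand class has precision 1 and recall 0.5 (F1 of 2/3), the Apply class has precision 2/3 and recall 1 (F1 of 0.8), and the macro average is their mean.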

Figure 4.

Confusion Matrix for Cognitive Process Level Predictions.

A detailed breakdown of per-class performance is shown in Figure 4. The Create and Evaluate levels showed the lowest misclassification rates, reflecting their distinct linguistic patterns and lower frequency, while the Understand level had the most overlap with neighboring classes.

Although the framework achieved an F1-score of 91.8% for cognitive level classification, the knowledge dimension classification performance was slightly lower at 89.6%. This discrepancy likely arises from semantic overlaps among knowledge categories, particularly between Conceptual and Procedural knowledge, as well as from linguistic variability in how teachers phrase learning objectives. For example, objectives such as “Explain the steps of photosynthesis” may ambiguously align with either conceptual understanding or procedural demonstration. Future work will explore hierarchical modeling and contrastive loss functions to better disentangle these semantically proximate categories.

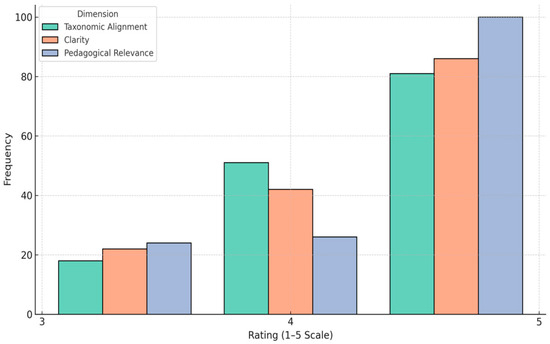

4.1.2. Instructional Content Quality

The quality of the generated content was evaluated by three curriculum specialists, who rated 150 randomly selected AI-generated instructional activity–assessment pairs. Each sample was scored on three dimensions using a 5-point Likert scale: Taxonomic Alignment, Clarity, and Pedagogical Relevance. The average ratings were 4.5, 4.3, and 4.4, respectively, indicating that the content generator reliably produces outputs that are aligned with the intended Bloom levels and educational intent. Examples of AI-generated content showed strong conformity to instructional expectations—for instance, a Procedural–Apply objective such as “Demonstrate how to solve a quadratic equation” was paired with activities involving step-by-step calculations and assessments involving practice problems with varied coefficients. Educators noted that the content was not only structurally correct but also adaptable for use in real classroom scenarios.

Table 3 summarizes the expert evaluation scores, and Figure 5 provides a histogram of rating distributions.

Table 3.

Expert Evaluation of AI-Generated Instructional Content (n = 150).

Figure 5.

Distribution of Human Ratings for Generated Content.

These results affirm that the content generation module, when guided by Bloom-level predictions, can produce instructionally coherent and pedagogically meaningful materials.
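One way such guidance could be implemented is by prefixing the generator input with the predicted taxonomy labels. The sketch below is illustrative only: the control-tag format, the `BloomPrediction` type, and `build_conditioned_prompt` are assumptions introduced here, not the prompt template used in this study.

```python
from dataclasses import dataclass

# Label sets from the revised Bloom's Taxonomy.
COGNITIVE_LEVELS = ("Remember", "Understand", "Apply", "Analyze", "Evaluate", "Create")
KNOWLEDGE_TYPES = ("Factual", "Conceptual", "Procedural", "Metacognitive")


@dataclass(frozen=True)
class BloomPrediction:
    """Classifier output for one learning objective (hypothetical container)."""
    cognitive: str
    knowledge: str


def build_conditioned_prompt(objective: str, pred: BloomPrediction) -> str:
    """Prefix the objective with taxonomy control tags (assumed format)."""
    if pred.cognitive not in COGNITIVE_LEVELS:
        raise ValueError(f"Unknown cognitive level: {pred.cognitive!r}")
    if pred.knowledge not in KNOWLEDGE_TYPES:
        raise ValueError(f"Unknown knowledge type: {pred.knowledge!r}")
    return (
        f"<cognitive={pred.cognitive}> <knowledge={pred.knowledge}>\n"
        f"Objective: {objective.strip()}\n"
        "Activity:"
    )


if __name__ == "__main__":
    prompt = build_conditioned_prompt(
        "Demonstrate how to solve a quadratic equation",
        BloomPrediction(cognitive="Apply", knowledge="Procedural"),
    )
    print(prompt)
```

Validating the labels before building the prompt keeps a misrouted prediction from silently producing an unconditioned generation.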

4.2. Explainability and Human Trust

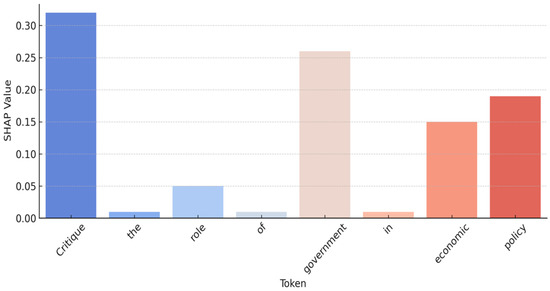

To assess the utility of the explainability components, a human evaluation study was conducted involving ten educators who were asked to rate the helpfulness of the explanations provided for 50 model predictions. Explanations were presented using both attention heatmaps and SHAP visualizations, highlighting the words and phrases most influential in the classification process. Participants reported that 82% of the time, the highlighted tokens matched their own intuition regarding which parts of the objective signaled its Bloom level. For example, in the objective “Critique the role of government in economic policy,” terms like critique and economic policy received high attention and SHAP scores, indicating strong model alignment with expert judgment.

Figure 6 illustrates a sample SHAP explanation overlay, demonstrating token-level attribution for an Evaluate–Conceptual objective. Educators rated the explanations as “clear and convincing” in 84% of cases and reported that they would be more likely to trust and adopt such a tool in their lesson planning workflow. The effectiveness of SHAP in reinforcing model transparency supports its value as a complementary interpretability mechanism to the built-in attention layers.

Figure 6.

SHAP Visualization of Bloom-Level Attribution for a Sample Objective.
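The idea behind token-level attribution can be illustrated with an exact Shapley computation on a toy scoring function. This sketch does not use the SHAP library or the trained classifier: `toy_evaluate_score` and its cue weights are hypothetical stand-ins for a model's "Evaluate" score, and brute-force enumeration is only feasible for very short inputs.

```python
from itertools import combinations
from math import factorial
from typing import Callable, FrozenSet, List


def shapley_values(n_tokens: int, value: Callable[[FrozenSet[int]], float]) -> List[float]:
    """Exact Shapley value of each token index for the set function `value`."""
    phi = [0.0] * n_tokens
    for i in range(n_tokens):
        others = [j for j in range(n_tokens) if j != i]
        for size in range(n_tokens):
            # Shapley weight for coalitions of this size that exclude token i.
            weight = factorial(size) * factorial(n_tokens - size - 1) / factorial(n_tokens)
            for subset in combinations(others, size):
                coalition = frozenset(subset)
                # Marginal contribution of token i when added to the coalition.
                phi[i] += weight * (value(coalition | {i}) - value(coalition))
    return phi


TOKENS = "Critique the role of government in economic policy".split()

# HYPOTHETICAL cue weights; a real system would query the trained classifier.
CUE_WEIGHTS = {"critique": 0.6, "economic": 0.1, "policy": 0.1}


def toy_evaluate_score(present: FrozenSet[int]) -> float:
    """Toy 'Evaluate' score computed from the tokens that are kept (unmasked)."""
    words = {TOKENS[i].lower() for i in present}
    score = sum(CUE_WEIGHTS.get(word, 0.0) for word in words)
    if {"economic", "policy"} <= words:
        score += 0.1  # small interaction bonus when the phrase appears intact
    return score


attributions = shapley_values(len(TOKENS), toy_evaluate_score)

if __name__ == "__main__":
    for token, phi in zip(TOKENS, attributions):
        print(f"{token:>10s}  {phi:+.3f}")
```

By construction the attributions sum to the difference between the score of the full objective and the score of the empty input, which is the additivity property that makes Shapley-based explanations straightforward to read.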

In addition to transparency, ethical and fairness considerations form an integral component of the framework. The dataset was intentionally balanced across subjects, class levels, and Bloom categories to minimize representational bias. However, latent biases may still emerge due to variations in regional curricula, linguistic phrasing, or socio-cultural contexts. To mitigate these risks, fairness-aware sampling and bias detection routines were incorporated during model training. The feedback loop further enables teachers to correct systematic misclassifications, ensuring continuous improvement in both equity and pedagogical fidelity. Future iterations will include fairness auditing metrics to quantitatively assess demographic parity and model impartiality across different educational contexts.
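As an indication of what such an audit might compute, the sketch below measures a demographic parity gap: the largest difference between groups in the rate at which a given Bloom level is predicted. The grouping attribute (for example, region or school type) and the function names are hypothetical, and this is one of several possible fairness metrics rather than the audit planned for the framework.

```python
from collections import defaultdict
from typing import Dict, Hashable, Sequence


def prediction_rates(
    groups: Sequence[Hashable], predictions: Sequence[str], target: str
) -> Dict[Hashable, float]:
    """Per-group share of samples whose predicted label equals `target`."""
    if len(groups) != len(predictions):
        raise ValueError("groups and predictions must have the same length")
    hits: Dict[Hashable, int] = defaultdict(int)
    totals: Dict[Hashable, int] = defaultdict(int)
    for group, label in zip(groups, predictions):
        totals[group] += 1
        if label == target:
            hits[group] += 1
    return {group: hits[group] / totals[group] for group in totals}


def demographic_parity_difference(
    groups: Sequence[Hashable], predictions: Sequence[str], target: str
) -> float:
    """Largest gap between groups in the rate of predicting `target`."""
    rates = prediction_rates(groups, predictions, target)
    if not rates:
        raise ValueError("at least one sample is required")
    return max(rates.values()) - min(rates.values())


if __name__ == "__main__":
    # HYPOTHETICAL toy data: two regions, four objectives.
    regions = ["A", "A", "B", "B"]
    predicted = ["Create", "Apply", "Create", "Create"]
    print(demographic_parity_difference(regions, predicted, target="Create"))
```

A gap near zero indicates that the level is predicted at similar rates across groups. A large gap is a prompt for inspection rather than proof of bias, since curricula can legitimately differ between contexts.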

4.3. Ablation and Robustness Study

To further evaluate the contribution of key components, an ablation study was performed. Removing the attention pooling layer led to a 6.7% drop in F1-score, confirming its importance in capturing token relevance. Similarly, removing taxonomy conditioning from the generator reduced human-rated content quality by an average of 1.2 points, underscoring the significance of Bloom-level awareness during content generation. These results validate the integrated design of the framework and its reliance on both structural modeling and pedagogical signal conditioning, as summarized in Table 4.

Table 4.

Ablation Study: Impact of Component Removal on Model Performance.

Table 4 summarizes the ablation analysis and the contribution of each major module to the framework’s overall performance. Removing the attention pooling layer reduced the F1-score by 6.7%, underscoring the importance of token-level saliency in cognitive classification. Excluding taxonomy conditioning from the generator lowered the average content quality rating from 4.43 to 3.21, confirming that taxonomy awareness is critical for generating pedagogically coherent materials. By contrast, removing SHAP explanations produced only a marginal change in accuracy but slightly reduced educator trust ratings, reflecting the qualitative value of interpretability even when it does not directly influence predictive performance. Taken together, these results support the architectural design: each module (attention, taxonomy conditioning, SHAP explainability, and feedback fine-tuning) contributes to the framework’s accuracy, transparency, and educational utility.
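For readers unfamiliar with the ablated component, the sketch below contrasts attention pooling with plain mean pooling over token representations. It is a generic, dependency-free illustration: the use of a single learned query vector and dot-product scoring is an assumption, since the exact parameterization of the pooling layer is not restated in this section.

```python
import math
from typing import List, Tuple

Vector = List[float]


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def mean_pool(hidden: List[Vector]) -> Vector:
    """Baseline: every token contributes equally to the sentence vector."""
    dim = len(hidden[0])
    return [sum(h[d] for h in hidden) / len(hidden) for d in range(dim)]


def attention_pool(hidden: List[Vector], query: Vector) -> Tuple[Vector, List[float]]:
    """Weight each token by softmax(h_t . query), then sum the weighted tokens.

    `query` stands in for a learned parameter vector (assumed form).
    """
    scores = [sum(h_d * q_d for h_d, q_d in zip(h, query)) for h in hidden]
    weights = softmax(scores)
    dim = len(hidden[0])
    pooled = [sum(w * h[d] for w, h in zip(weights, hidden)) for d in range(dim)]
    return pooled, weights


if __name__ == "__main__":
    # HYPOTHETICAL 2-dimensional token states for a three-token objective.
    states = [[2.0, 0.0], [0.1, 0.1], [0.0, 0.5]]
    pooled, weights = attention_pool(states, query=[1.0, 0.0])
    print("attention weights:", [round(w, 3) for w in weights])
    print("attention pooled: ", [round(x, 3) for x in pooled])
    print("mean pooled:      ", [round(x, 3) for x in mean_pool(states)])
```

The attention weights double as a saliency signal, so the same quantities that drive the pooled representation can be rendered as the heatmaps shown to educators.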

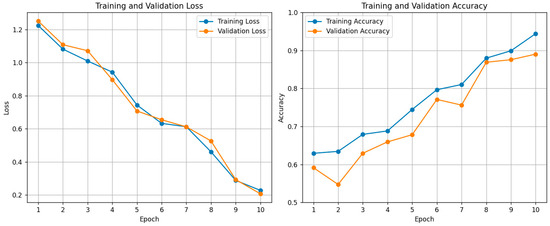

4.4. Training Dynamics of the Proposed Model

The training process of the multi-task transformer classifier was monitored through epoch-wise tracking of loss and accuracy on both training and validation datasets. Figure 7 illustrates these dynamics, displaying curves for the combined classification loss and the macro-averaged accuracy metrics corresponding to cognitive process and knowledge type predictions.

Figure 7.

Model Training Dynamics.

Initially, the training loss decreases sharply within the first three epochs, indicating rapid learning of foundational language patterns. Validation loss follows a similar downward trend, stabilizing around epoch seven, suggesting effective generalization. The training accuracy steadily increases, reaching approximately 95% by epoch ten, while validation accuracy plateaus near 91.8%, consistent with test set performance. No significant divergence between training and validation metrics was observed, implying minimal overfitting, likely due to the incorporation of dropout regularization, early stopping, and balanced dataset splits. These training dynamics affirm the model’s capacity to learn discriminative features from the lesson objectives while maintaining robustness. The generative module exhibited analogous training behavior, with perplexity decreasing steadily over 10 epochs, reflecting improved predictive fluency in producing taxonomy-aligned instructional content.
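The early stopping criterion mentioned above can be sketched as a patience rule on validation loss. The patience value, the `min_delta` threshold, and the loss trace below are hypothetical; the configuration actually used in training is not restated here.

```python
from typing import Sequence, Tuple


def early_stopping_epoch(
    val_losses: Sequence[float], patience: int = 3, min_delta: float = 0.0
) -> Tuple[int, int]:
    """Return (stop_epoch, best_epoch), both 1-indexed.

    Training stops once validation loss has failed to improve by more than
    `min_delta` for `patience` consecutive epochs. If that never happens,
    stop_epoch is the last epoch. For an empty trace the result is (0, -1).
    """
    best_loss = float("inf")
    best_epoch = -1
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best_loss - min_delta:
            best_loss = loss
            best_epoch = epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch, best_epoch
    return len(val_losses), best_epoch


if __name__ == "__main__":
    # HYPOTHETICAL validation losses that flatten out around epoch seven.
    trace = [1.00, 0.70, 0.50, 0.45, 0.42, 0.41, 0.40, 0.41, 0.40, 0.42]
    stop, best = early_stopping_epoch(trace, patience=3)
    print(f"stop at epoch {stop}, restore checkpoint from epoch {best}")
```

In practice the checkpoint from `best_epoch` would be restored, so the reported validation metrics correspond to the best epoch rather than the last one.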

The proposed framework demonstrates strong performance in Bloom-level classification and content generation, achieving high accuracy and expert-aligned outputs. It integrates interpretability as a core feature through attention-based heatmaps and SHAP explanations, allowing educators to understand and trust the model’s decisions. Ethical considerations are addressed through bias-aware data handling, teacher feedback mechanisms, and transparent interface design that promotes human oversight. The system upholds educator agency, safeguards pedagogical integrity, and supports curriculum transparency, offering a responsible XAI solution for lesson planning in educational settings.

4.5. Comparative Analysis with Existing Literature

Table 5 compares the proposed framework with related studies, highlighting its comprehensive implementation of taxonomy alignment, content generation, explainability, and educator-centered features that are either absent or only partially addressed in existing literature. The proposed framework advances prior work by offering a fully implemented XAI system that generates taxonomy-aligned lesson plans. Unlike earlier studies that remain conceptual or focus narrowly on MCQ generation, this work integrates accurate cognitive classification, content generation, and token-level interpretability using SHAP. It also includes an educator feedback loop and achieves strong empirical results (F1-score: 91.8%, expert rating: 4.43/5). While past studies recognize the importance of Bloom’s taxonomy and AI in education, they lack implementation depth and transparency. This framework addresses those gaps, empowering teachers with control, trust, and insight into AI-assisted lesson planning.

Table 5.

Comparative Overview of Related Literature and the Proposed Framework.

In summary, this study builds meaningfully on prior work by delivering a fully functional and empirically validated framework that combines cognitive classification, content generation, and interpretability. Unlike existing approaches, which typically focus on only one or two components (e.g., question generation or taxonomy mapping), our system provides a holistic solution that enables educators to co-design AI-generated lesson plans with clarity, accuracy, and trust.

5. Conclusions and Future Work

This paper presented an XAI framework for the automated generation of lesson plans aligned with Bloom’s Taxonomy. By combining multi-task classification of learning objectives with taxonomy-aware content generation and integrated interpretability mechanisms, the system addresses a critical gap at the intersection of artificial intelligence and instructional design. The framework demonstrated strong empirical performance across classification accuracy, pedagogical coherence of generated content, and human-centered explainability.

Key contributions of this work include the design of a dual-headed transformer-based classifier capable of predicting both the cognitive process level and knowledge type from free-form learning objectives. This classifier was enhanced with attention-based pooling to preserve semantic salience, and its decisions were made transparent through token-level SHAP explanations. A downstream GPT-based content generation module used the predicted Bloom levels to synthesize instructional activities and assessments with strong alignment to pedagogical intent. Empirical evaluations involving over 3000 annotated records and expert judgment revealed high accuracy and strong educator trust in both the model’s outputs and its justifications. The framework also integrated human-in-the-loop feedback mechanisms, enabling continuous refinement based on user corrections and preferences. This not only improved system robustness but also safeguarded educator agency, ensuring that automation enhanced rather than diminished professional decision-making.

While the proposed framework demonstrates high classification accuracy, strong pedagogical alignment, and robust explainability, several limitations warrant acknowledgment. First, the dataset, although diverse across subjects, remains geographically localized, potentially limiting generalization to different educational systems or cultural contexts. Second, the use of GPT-2, while ethically and computationally appropriate, constrains linguistic richness compared to larger transformer models. Third, although SHAP and attention provide transparency, the interpretability framework could be enhanced through rule-based or counterfactual explanation mechanisms, offering educators “why-not” reasoning for alternative Bloom level predictions. Future research will therefore explore these explainers alongside cross-cultural dataset comparisons, enabling more globally representative and context-aware validation. Expanding the framework with multimodal data (e.g., video-based teaching cues) and reinforcement learning from human feedback (RLHF), with teachers as the feedback source, are also promising directions.

By addressing these limitations, subsequent iterations of the system aim to deliver a fully adaptive, ethically grounded, and explainable AI assistant for curriculum design, bridging pedagogical expertise with trustworthy machine intelligence in education.

Author Contributions

Conceptualization, J.O. and D.O.; methodology, J.O.; software, J.O.; validation, J.O. and D.O.; formal analysis, J.O.; investigation, D.O. and I.C.O.; resources, J.O., I.C.O. and A.K.T.; data curation, J.O.; writing—original draft preparation, J.O. and D.O.; writing—review and editing, D.O., A.K.T. and I.C.O.; visualization, J.O.; supervision, D.O. and I.C.O.; project administration, D.O. and A.K.T.; funding acquisition, A.K.T. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The data presented in this study are available on request from the corresponding author. The data are not publicly available due to privacy and ethical restrictions.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Holmes, W.; Bialik, M.; Fadel, C. Artificial Intelligence in Education: Promises and Implications for Teaching and Learning; Center for Curriculum Redesign: Boston, MA, USA, 2019; Available online: https://discovery.ucl.ac.uk/id/eprint/10139722 (accessed on 17 September 2025).

- Chari, S.; Gruen, D.M.; Seneviratne, O.; McGuinness, D.L. Foundations of explainable knowledge-enabled systems. Semantics 2020, 1, 1–30. Available online: https://arxiv.org/abs/2003.07520 (accessed on 17 September 2025).

- Chen, X.; Xie, H.; Zou, D.; Hwang, G.J. Application and theory gaps during the rise of artificial intelligence in education. Comput. Educ. Artif. Intell. 2020, 1, 100002.

- Adadi, A.; Berrada, M. Peeking inside the black-box: A survey on explainable artificial intelligence (XAI). IEEE Access 2018, 6, 52138–52160.

- Anderson, L.W.; Krathwohl, D.R. (Eds.) A Taxonomy for Learning, Teaching, and Assessing: A Revision of Bloom’s Taxonomy of Educational Objectives; Longman: Wilmington, UK, 2001; Available online: http://eduq.info/xmlui/handle/11515/18824 (accessed on 17 September 2025).

- Popenici, S.A.D.; Kerr, S. Exploring the impact of artificial intelligence on teaching and learning in higher education. Res. Pract. Technol. Enhanc. Learn. 2017, 12, 12.

- Luan, H.; Geczy, P.; Lai, H.; Gobert, J.; Yang, S.J.; Ogata, H.; Baltes, J.; Guerra, R.; Li, P.; Tsai, C.C. Challenges and future directions of big data and artificial intelligence in education. Front. Psychol. 2020, 11, 580820.

- Lu, H.; Li, Y.; Chen, M.; Kim, H.; Serikawa, S. Brain intelligence: Go beyond artificial intelligence. Mob. Netw. Appl. 2018, 23, 368–375.

- Angelov, P.P.; Soares, E.A.; Jiang, R.; Arnold, N.I.; Atkinson, P.M. Explainable artificial intelligence: An analytical review. Wiley Interdiscip. Rev. Data Min. Knowl. Discov. 2021, 11, e1424.

- Hachoumi, N.; Eddabbah, M. Enhancing teaching and learning in health sciences education through the integration of Bloom’s taxonomy and artificial intelligence. Inform. Health 2025, 2, 130–136.

- Hmoud, M.; Ali, S. AIEd Bloom’s taxonomy: A proposed model for enhancing educational efficiency and effectiveness in the artificial intelligence era. Int. J. Technol. Learn. 2024, 31, 111.

- Hwang, K.; Wang, K.; Alomair, M.; Choa, F.S.; Chen, L.K. Towards automated multiple choice question generation and evaluation: Aligning with Bloom’s taxonomy. In International Conference on Artificial Intelligence in Education; Springer: Berlin/Heidelberg, Germany, 2024; pp. 389–396.

- Yaacoub, A.; Da-Rugna, J.; Assaghir, Z. Assessing AI-Generated Questions’ Alignment with Cognitive Frameworks in Educational Assessment. arXiv 2025, arXiv:2504.14232.

- Kwan, P.; Kadel, R.; Memon, T.D.; Hashmi, S.S. Reimagining Flipped Learning via Bloom’s Taxonomy and Student–Teacher–GenAI Interactions. Educ. Sci. 2025, 15, 465.

- Makina, A. Developing a Framework for Enhancing Critical Thinking in Student Assessment in the Era of Artificial Intelligence. Kagisano 2024, 125. Available online: https://www.che.ac.za/news-and-announcements/kagisano-15-artificial-intelligence-and-higher-education-south-africa (accessed on 17 September 2025).

- Maoz, H. Transforming Education: Integrating AI-Driven Adaptation and Multimodal Approaches for Advanced Engineering Skills. In Proceedings of the 5th Asia Pacific Conference on Industrial Engineering and Operations Management, Tokyo, Japan, 10–12 September 2024.

- Reddy, D.; Chugh, K.L.; Subair, R. Automated tool for Bloom’s Taxonomy. Technology 2017, 8, 544–555. Available online: http://www.iaeme.com/ijciet/issues.asp?JType=IJCIET&VType=8&IType=7 (accessed on 17 September 2025).

- Shafik, W. Towards trustworthy and explainable AI educational systems. In Explainable AI for Education: Recent Trends and Challenges; Springer Nature: Cham, Switzerland, 2024; pp. 17–41.

- Bhati, D.; Amiruzzaman, M.; Zhao, Y.; Guercio, A.; Le, T. A Survey of Post-Hoc XAI Methods from a Visualization Perspective: Challenges and Opportunities. IEEE Access 2025, 13, 120785–120806.

- Więckowska, B.; Kubiak, K.B.; Jóźwiak, P.; Moryson, W.; Stawińska-Witoszyńska, B. Cohen’s kappa coefficient as a measure to assess classification improvement following the addition of a new marker to a regression model. Int. J. Environ. Res. Public Health 2022, 19, 10213.

- Sanh, V.; Debut, L.; Chaumond, J.; Wolf, T. DistilBERT, a distilled version of BERT: Smaller, faster, cheaper and lighter. arXiv 2019, arXiv:1910.01108.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).