Feature-Optimized Machine Learning Approaches for Enhanced DDoS Attack Detection and Mitigation

Abstract

1. Introduction

2. Literature Review

3. Proposed Framework

4. Methodology

4.1. Detection Methodology

4.2. DDoS Attack Detection Approach

4.2.1. Data Collection

- Portmap and NetBIOS: Exploiting RPC services and legacy systems to reflect and amplify traffic.

- LDAP and MSSQL: Abusing directory (LDAP) and database (MSSQL) services for large-scale amplification.

- UDP and UDP-Lag: Exploiting the connectionless UDP transport protocol for high-throughput flooding.

- SYN: Targeting the TCP three-way handshake, leaving half-open connections that exhaust server resources.

- NTP, DNS, and SNMP: Exploiting common time synchronization, name resolution, and network management services for reflection and amplification.
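Most of the attack families above rely on reflection and amplification. A reflector sends the victim a response that is larger than the spoofed request the attacker sent. One common way to quantify this is the bandwidth amplification factor: response bytes divided by request bytes.

The minimal sketch below computes that ratio and the resulting traffic at the victim. The function names and byte counts are hypothetical illustrations, not measurements from CICDDoS2019.

```python
def amplification_factor(request_bytes: int, response_bytes: int) -> float:
    """Bandwidth amplification factor: reflected response size / spoofed request size."""
    if request_bytes <= 0:
        raise ValueError("request_bytes must be positive")
    return response_bytes / request_bytes


def victim_traffic_bps(attacker_bps: float, factor: float) -> float:
    """Traffic reaching the victim if every spoofed request is reflected with `factor`."""
    return attacker_bps * factor


# Hypothetical example: a 60-byte spoofed query that triggers a 3,000-byte reply.
baf = amplification_factor(60, 3000)
print(baf)                              # 50.0
print(victim_traffic_bps(10e6, baf))    # 500000000.0 (10 Mbit/s sent, 500 Mbit/s delivered)
```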

4.2.2. Data Scaling

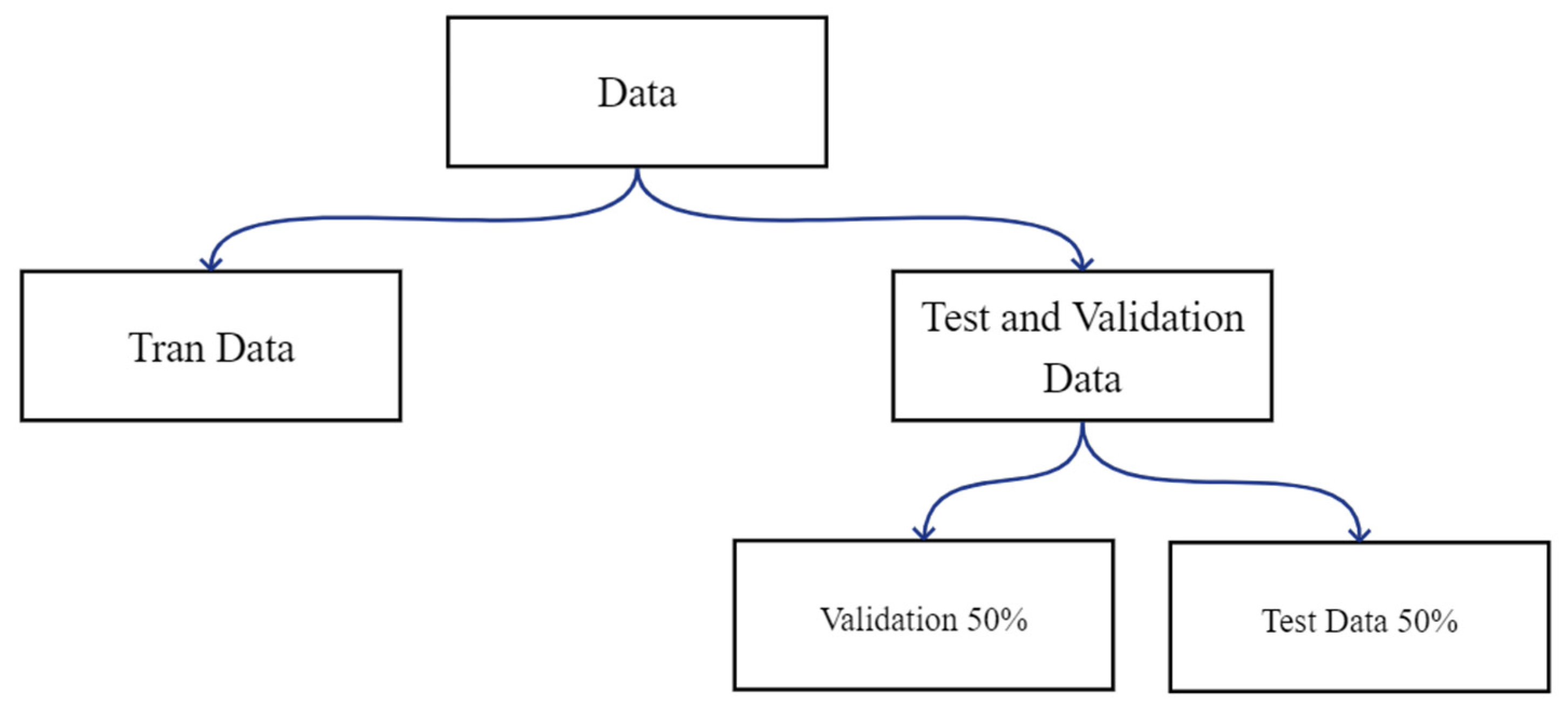
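The scaler is not named in this section. The sketch below assumes min-max normalization to [0, 1] as one common choice. It learns the per-feature range on the training split only and then applies that range unchanged to validation and test rows, so that no test statistics leak into training. Function names and values are illustrative.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def fit_min_max(train: Matrix) -> Tuple[List[float], List[float]]:
    """Learn per-feature minima and maxima from the training rows only."""
    mins = [min(col) for col in zip(*train)]
    maxs = [max(col) for col in zip(*train)]
    return mins, maxs


def transform_min_max(rows: Matrix, mins: List[float], maxs: List[float]) -> Matrix:
    """Rescale each feature with the training range; constant features map to 0.0."""
    return [
        [(x - lo) / (hi - lo) if hi > lo else 0.0 for x, lo, hi in zip(row, mins, maxs)]
        for row in rows
    ]


# Toy flows with two features, e.g. packets/s and mean packet length (hypothetical values).
train = [[10.0, 60.0], [30.0, 1500.0], [20.0, 780.0]]
mins, maxs = fit_min_max(train)
print(transform_min_max(train, mins, maxs))           # [[0.0, 0.0], [1.0, 1.0], [0.5, 0.5]]
print(transform_min_max([[40.0, 60.0]], mins, maxs))  # [[1.5, 0.0]]
```

The second call shows one consequence of fitting on training data only. An unseen value above the training maximum lands outside [0, 1]. This is usually preferable to refitting the scaler on test data.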

4.2.3. Data Splitting
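The results tables report train, validation, and test scores, so the data are divided three ways. The ratios are not stated in this section. The sketch below assumes a 70/15/15 split that is stratified by label and uses a fixed seed. The function name, ratios, and label strings are assumptions for illustration.

```python
import random
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple


def stratified_split(labels: Sequence[str], train_pct: int = 70, valid_pct: int = 15,
                     seed: int = 42) -> Tuple[List[int], List[int], List[int]]:
    """Return (train, valid, test) row indices, splitting each class separately.

    Percentages are integers so per-class counts are exact; whatever remains
    after the train and validation slices goes to test.
    """
    by_class: Dict[str, List[int]] = defaultdict(list)
    for i, y in enumerate(labels):
        by_class[y].append(i)
    rng = random.Random(seed)             # fixed seed -> reproducible split
    train: List[int] = []
    valid: List[int] = []
    test: List[int] = []
    for cls in sorted(by_class):          # deterministic class order
        idx = by_class[cls]
        rng.shuffle(idx)
        n_train = len(idx) * train_pct // 100
        n_valid = len(idx) * valid_pct // 100
        train += idx[:n_train]
        valid += idx[n_train:n_train + n_valid]
        test += idx[n_train + n_valid:]
    return train, valid, test


labels = ["Benign"] * 20 + ["Syn"] * 80   # hypothetical label column
tr, va, te = stratified_split(labels)
print(len(tr), len(va), len(te))          # 70 15 15
```

Stratifying keeps the benign-to-attack ratio the same in every subset. This matters when one class is much rarer than the other.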

4.2.4. Machine Learning Algorithms

- Logistic Regression (LR)

- Decision Tree (DT)

- Random Forest (RF)

- Gradient Boosting (GB)

- CatBoost (CB)
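Of the five classifiers, logistic regression is the simplest to write out in full. The sketch below is a plain batch-gradient-descent version for binary labels, assuming 1 = attack and 0 = benign. It only illustrates the mechanics: a weighted sum of scaled features is passed through the sigmoid, and the weights move against the gradient of the mean log-loss.

This section does not name the library implementation the study used, and the sketch does not stand in for it. The tree-based models (DT, RF, GB, CB) are not reproduced here.

```python
import math
from typing import List, Sequence


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_logreg(X: Sequence[Sequence[float]], y: Sequence[int],
                 lr: float = 0.5, epochs: int = 2000) -> List[float]:
    """Fit [bias, w1, ..., wd] by batch gradient descent on the mean log-loss."""
    d, n = len(X[0]), len(X)
    w = [0.0] * (d + 1)
    for _ in range(epochs):
        grad = [0.0] * (d + 1)
        for xi, yi in zip(X, y):
            p = sigmoid(w[0] + sum(wj * xj for wj, xj in zip(w[1:], xi)))
            err = p - yi                  # d(log-loss)/d(logit) for one sample
            grad[0] += err
            for j, xj in enumerate(xi):
                grad[j + 1] += err * xj
        w = [wj - lr * g / n for wj, g in zip(w, grad)]
    return w


def predict(w: Sequence[float], x: Sequence[float], threshold: float = 0.5) -> int:
    """Return 1 (attack) if the predicted probability reaches the threshold, else 0."""
    return int(sigmoid(w[0] + sum(wj * xj for wj, xj in zip(w[1:], x))) >= threshold)


# Hypothetical min-max-scaled flows: [packet rate, mean packet size].
X = [[0.9, 0.1], [0.8, 0.2], [0.95, 0.05], [0.1, 0.7], [0.2, 0.9], [0.05, 0.6]]
y = [1, 1, 1, 0, 0, 0]
w = train_logreg(X, y)
print([predict(w, x) for x in X])
```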

4.2.5. Model Performance Evaluation Metrics
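The reported metrics follow the standard definitions:

- accuracy = correct / total
- precision = TP / (TP + FP)
- recall = TP / (TP + FN)
- F1 = 2PR / (P + R)

This section does not state how per-class scores are averaged. The sketch below assumes support-weighted averaging, the convention behind scikit-learn's `average="weighted"`. Under that convention, weighted recall is algebraically equal to accuracy: the sum over classes of (n_c / N)(TP_c / n_c) is the sum of TP_c divided by N. That would be consistent with the Accuracy and Recall columns agreeing in nearly every row of the results tables. The labels in the example are hypothetical.

```python
from typing import Dict, Sequence, Tuple


def per_class_prf(y_true: Sequence[str], y_pred: Sequence[str]) -> Dict[str, Tuple[float, float, float, int]]:
    """Precision, recall, F1 and support for each class, treating it as the positive class."""
    out = {}
    for c in sorted(set(y_true) | set(y_pred)):
        tp = sum(t == c and p == c for t, p in zip(y_true, y_pred))
        fp = sum(t != c and p == c for t, p in zip(y_true, y_pred))
        fn = sum(t == c and p != c for t, p in zip(y_true, y_pred))
        prec = tp / (tp + fp) if tp + fp else 0.0   # undefined -> 0.0 by convention
        rec = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * prec * rec / (prec + rec) if prec + rec else 0.0
        out[c] = (prec, rec, f1, tp + fn)           # support = true samples of class c
    return out


def weighted_report(y_true: Sequence[str], y_pred: Sequence[str]) -> Dict[str, float]:
    """Accuracy plus support-weighted precision, recall and F1."""
    n = len(y_true)
    stats = per_class_prf(y_true, y_pred)
    weight = {c: s[3] / n for c, s in stats.items()}
    return {
        "accuracy": sum(t == p for t, p in zip(y_true, y_pred)) / n,
        "precision": sum(weight[c] * s[0] for c, s in stats.items()),
        "recall": sum(weight[c] * s[1] for c, s in stats.items()),
        "f1": sum(weight[c] * s[2] for c, s in stats.items()),
    }


y_true = ["attack"] * 6 + ["benign"] * 4   # hypothetical ground truth
y_pred = ["attack"] * 5 + ["benign"] * 5   # one attack missed
print(weighted_report(y_true, y_pred))
```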

4.2.6. Dimensionality Reduction
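Section 5.1 and Appendix A describe reducing dimensionality by discarding non-contributing features rather than by a projection such as PCA. The comparison table reports 10 features for the proposed models. The ranking criterion is not given in this section.

The sketch below assumes one importance score per feature has already been computed (for example, a tree model's impurity-based importances) and keeps the top k. Feature names and scores in the example are hypothetical placeholders, not the study's values.

```python
from typing import Dict, List, Tuple


def select_top_k(importances: Dict[str, float], k: int) -> Tuple[List[str], List[str]]:
    """Split features into (kept, dropped) by descending importance; ties broken by name."""
    ranked = sorted(importances.items(), key=lambda kv: (-kv[1], kv[0]))
    kept = [name for name, _ in ranked[:k]]
    dropped = [name for name, _ in ranked[k:]]
    return kept, dropped


def project(rows: List[Dict[str, float]], kept: List[str]) -> List[List[float]]:
    """Reduce each flow record to the kept features, in a fixed column order."""
    return [[row[name] for name in kept] for row in rows]


# Hypothetical importance scores for five placeholder features.
scores = {"feat_a": 0.40, "feat_b": 0.05, "feat_c": 0.30, "feat_d": 0.20, "feat_e": 0.05}
kept, dropped = select_top_k(scores, k=3)
print(kept)      # ['feat_a', 'feat_c', 'feat_d']
print(dropped)   # ['feat_b', 'feat_e']
print(project([{"feat_a": 1.0, "feat_b": 2.0, "feat_c": 3.0, "feat_d": 4.0, "feat_e": 5.0}], kept))
# [[1.0, 3.0, 4.0]]
```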

4.3. Adaptive Mitigation Scenario

Mapping FRL to Enforceable Policy (Token Bucket)
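A token bucket enforces a committed rate while tolerating short bursts:

- Tokens accrue at the CIR, up to a bucket depth equal to the burst size.
- Each packet spends one token.
- A packet that finds the bucket empty is non-conforming and is dropped or marked.

The sketch below is a packet-based meter whose two parameters correspond to the CIR_pps and Burst columns of the FRL mapping table. It does not reproduce how FRL values are converted into a concrete CIR and burst, so the numbers in the example are hypothetical. The class name is illustrative, and time is passed in explicitly to keep the example deterministic.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class TokenBucket:
    """Packet-based token bucket: refills at rate_pps tokens/s, holds at most `burst` tokens."""
    rate_pps: float                  # committed rate (CIR) in packets per second
    burst: float                     # bucket depth in packets
    tokens: Optional[float] = None   # current fill; None means "start full"
    last_t: float = 0.0              # time of the previous refill, in seconds

    def __post_init__(self) -> None:
        if self.tokens is None:
            self.tokens = self.burst

    def allow(self, now: float) -> bool:
        """Refill for the elapsed time, then admit the packet if a whole token is available."""
        elapsed = max(0.0, now - self.last_t)
        self.tokens = min(self.burst, self.tokens + elapsed * self.rate_pps)
        self.last_t = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False                 # non-conforming: drop (or mark) the packet


# Hypothetical policy: 100 packets/s sustained, bursts of up to 5 packets.
tb = TokenBucket(rate_pps=100.0, burst=5.0)
results = [tb.allow(0.0) for _ in range(8)]
print(results)          # first 5 admitted, remaining 3 rejected
print(tb.allow(0.02))   # 20 ms later two tokens have accrued -> True
```

The burst parameter absorbs legitimate short spikes, while the CIR caps sustained flooding. A stricter policy for a suspicious flow therefore shrinks both values.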

5. Results and Discussion

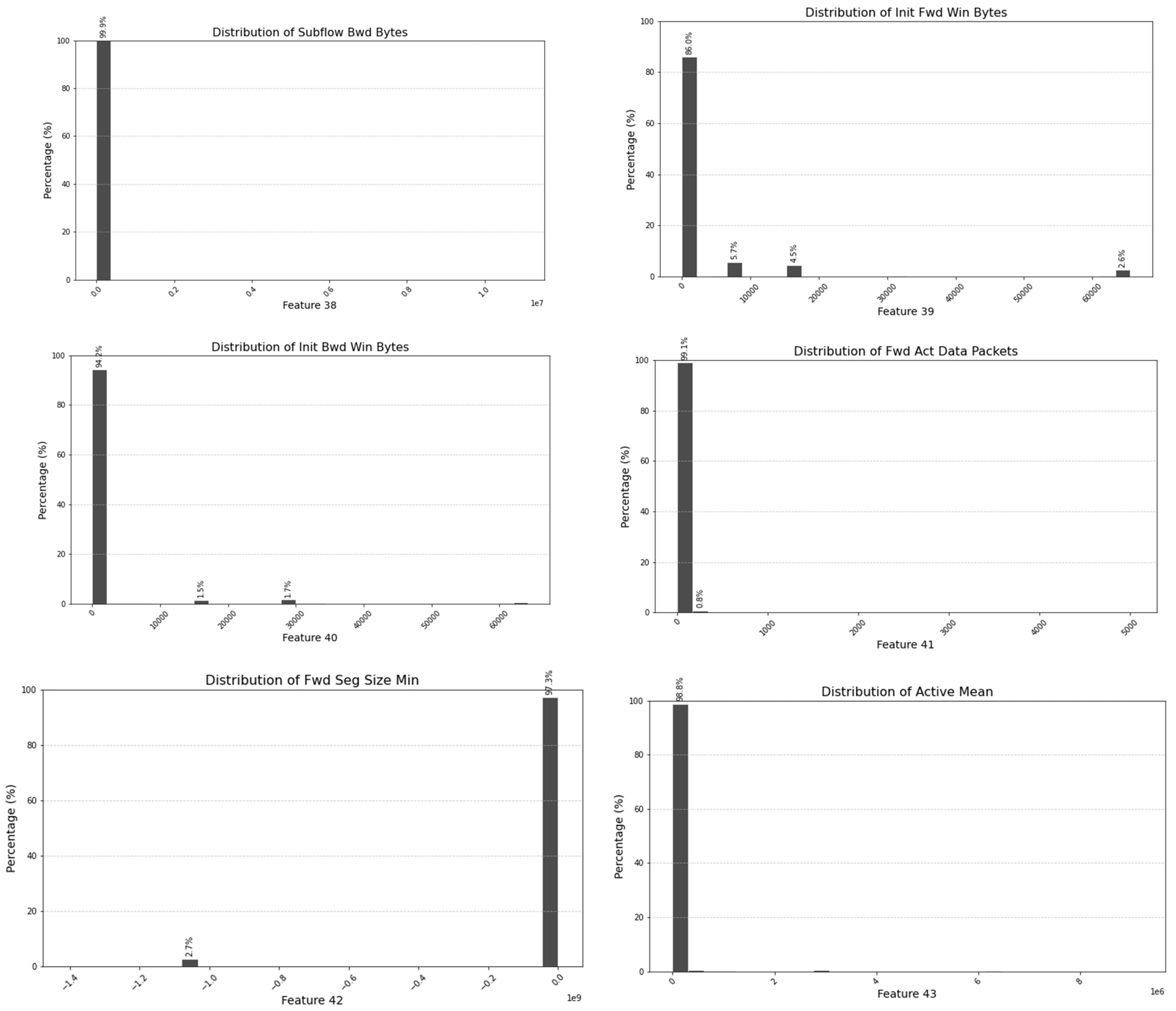

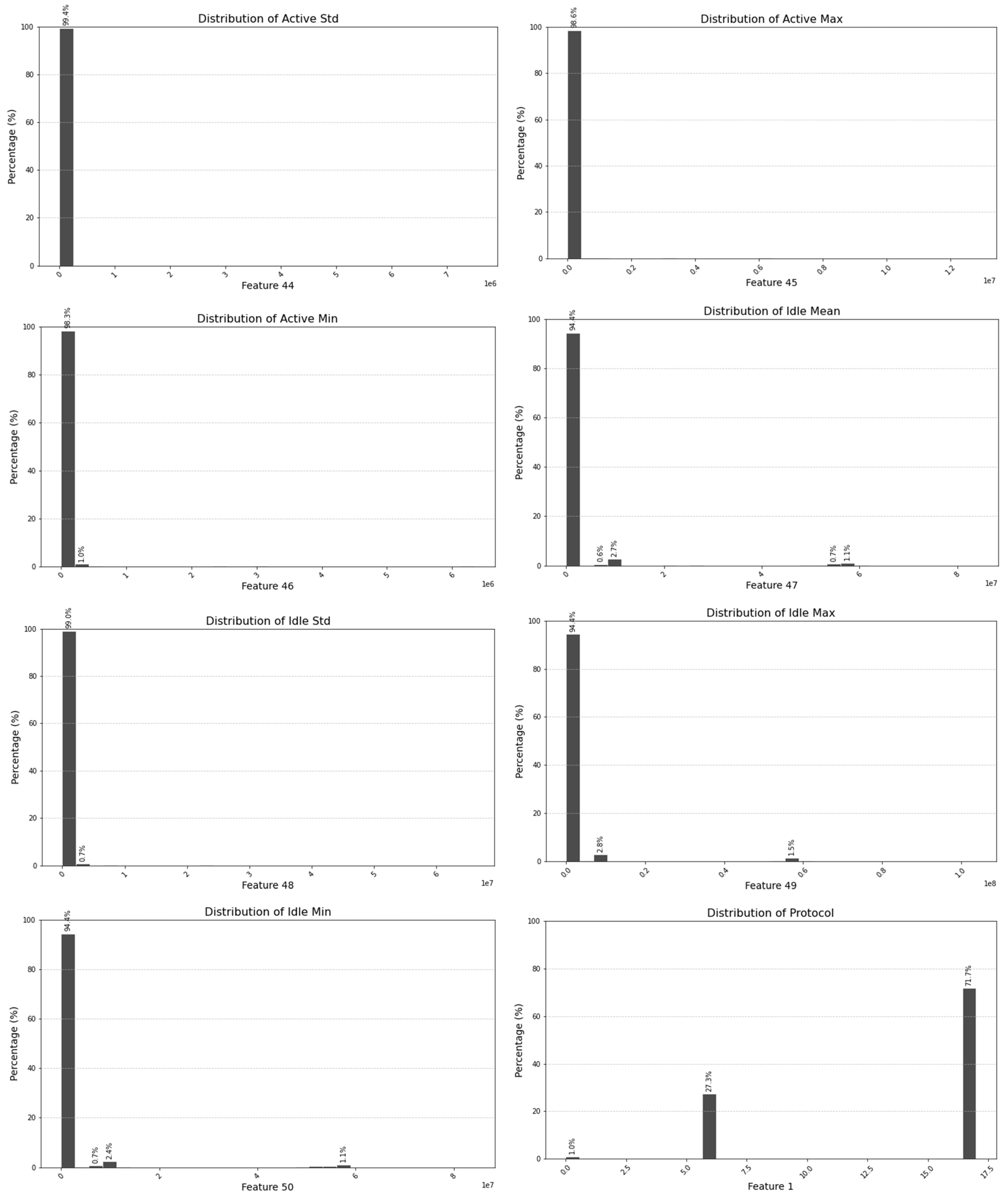

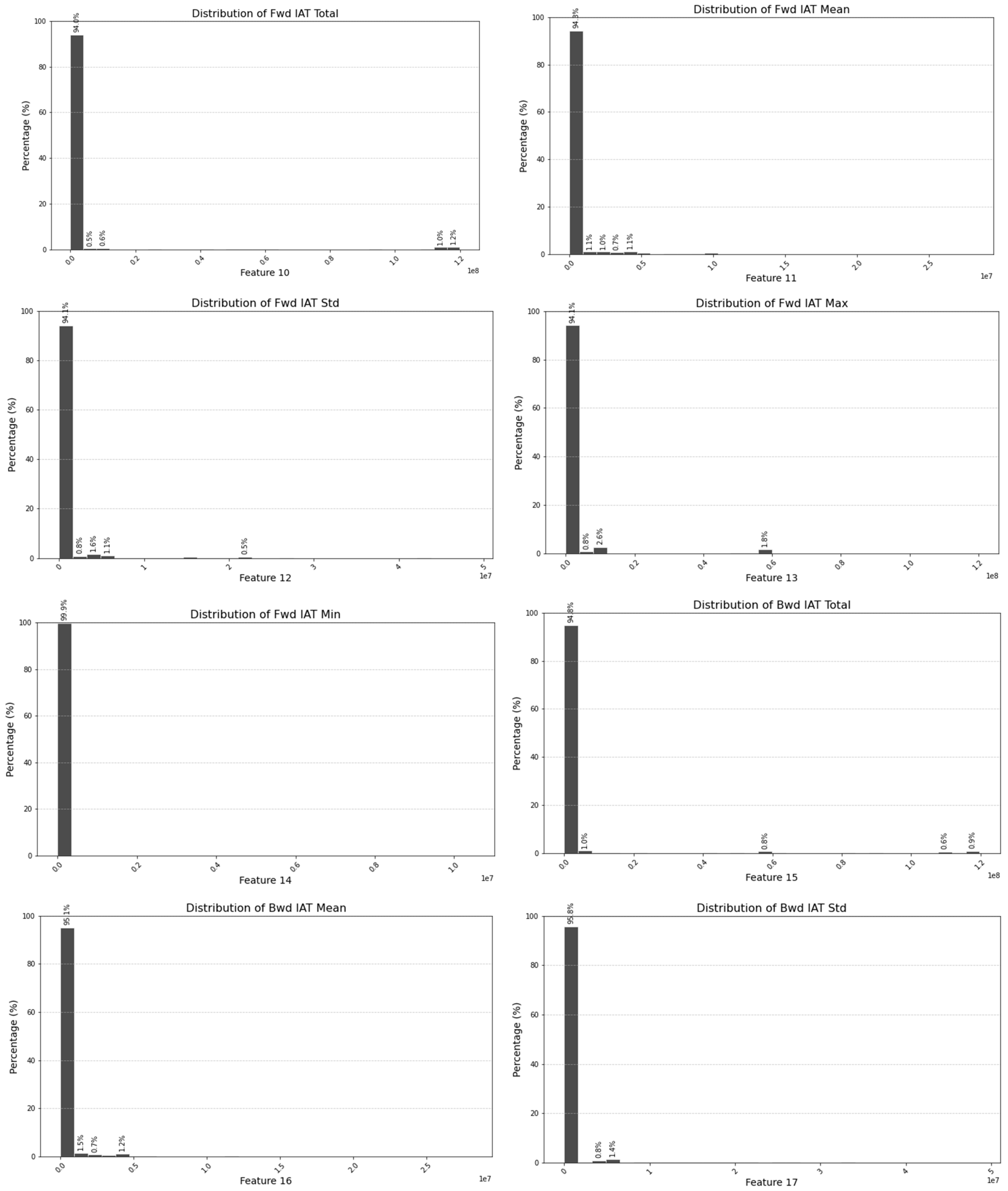

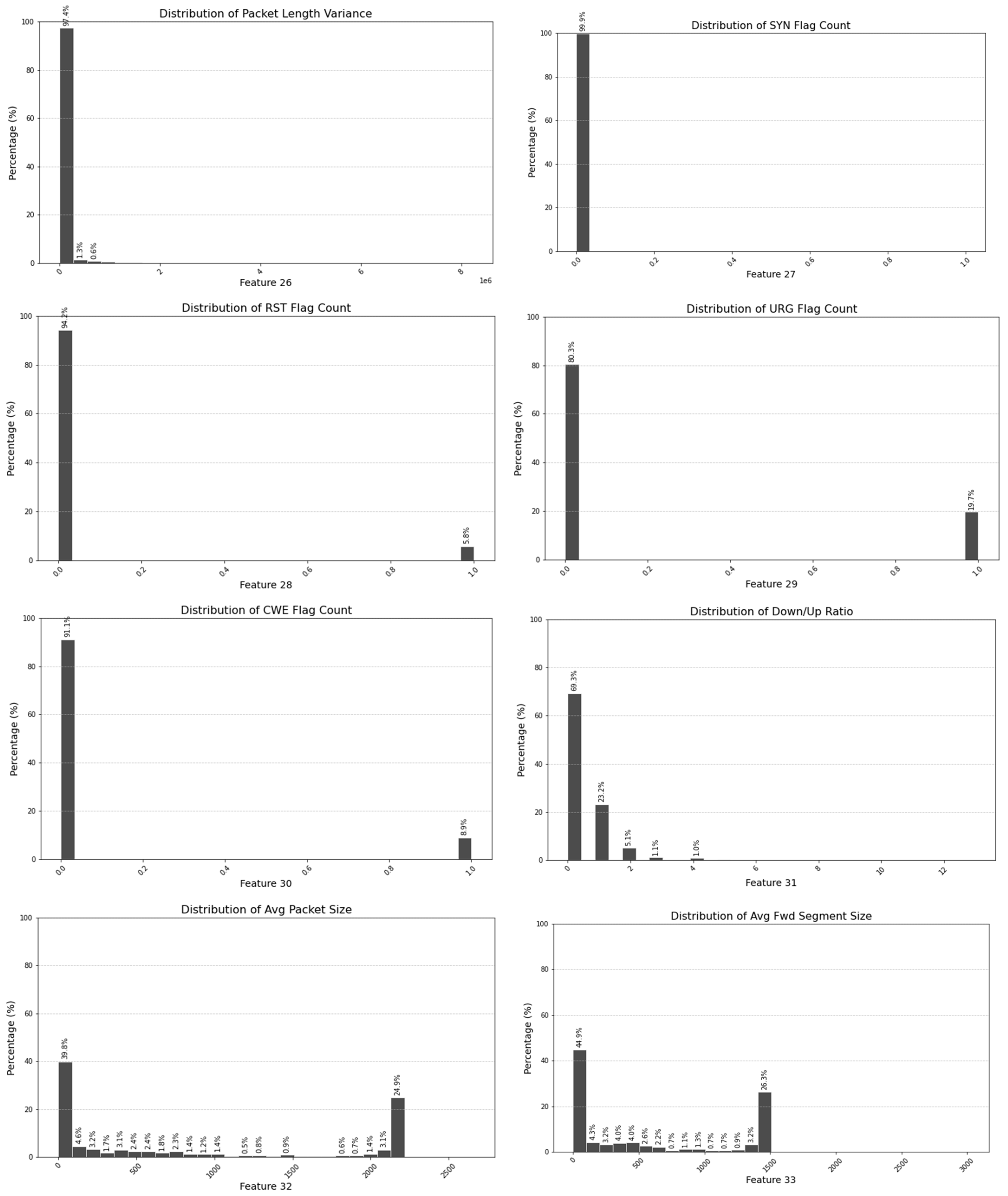

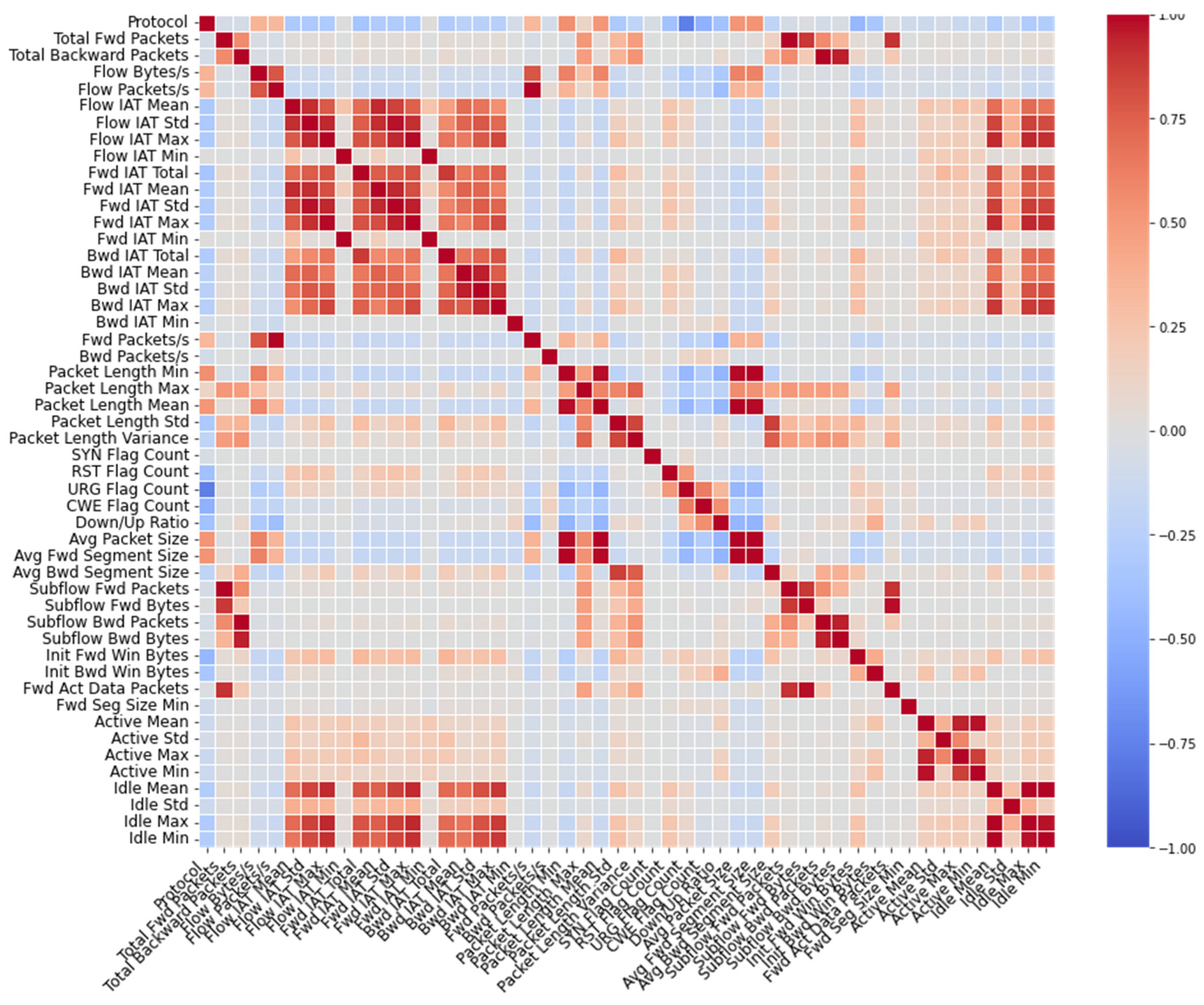

5.1. Exclusion of Non-Contributing Features

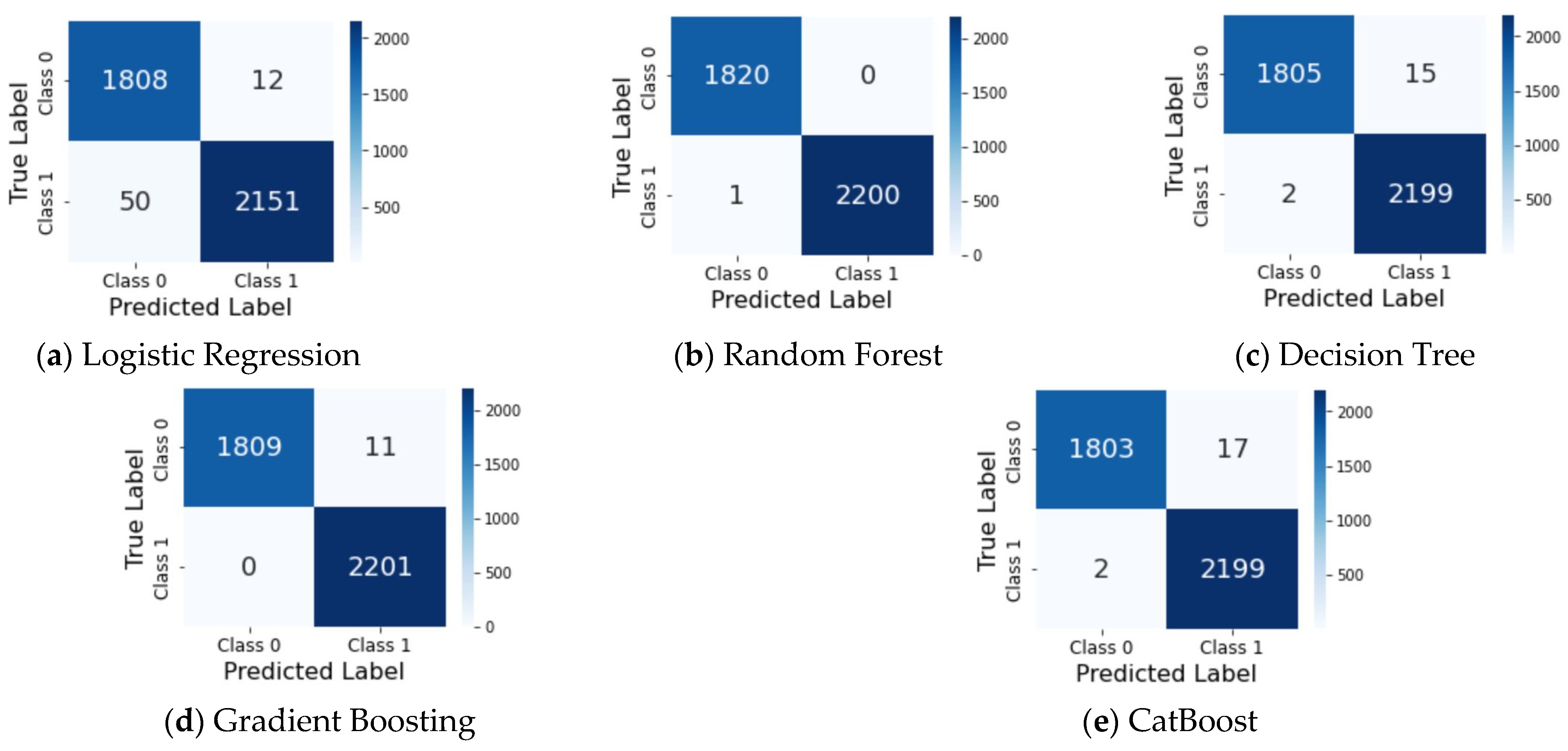

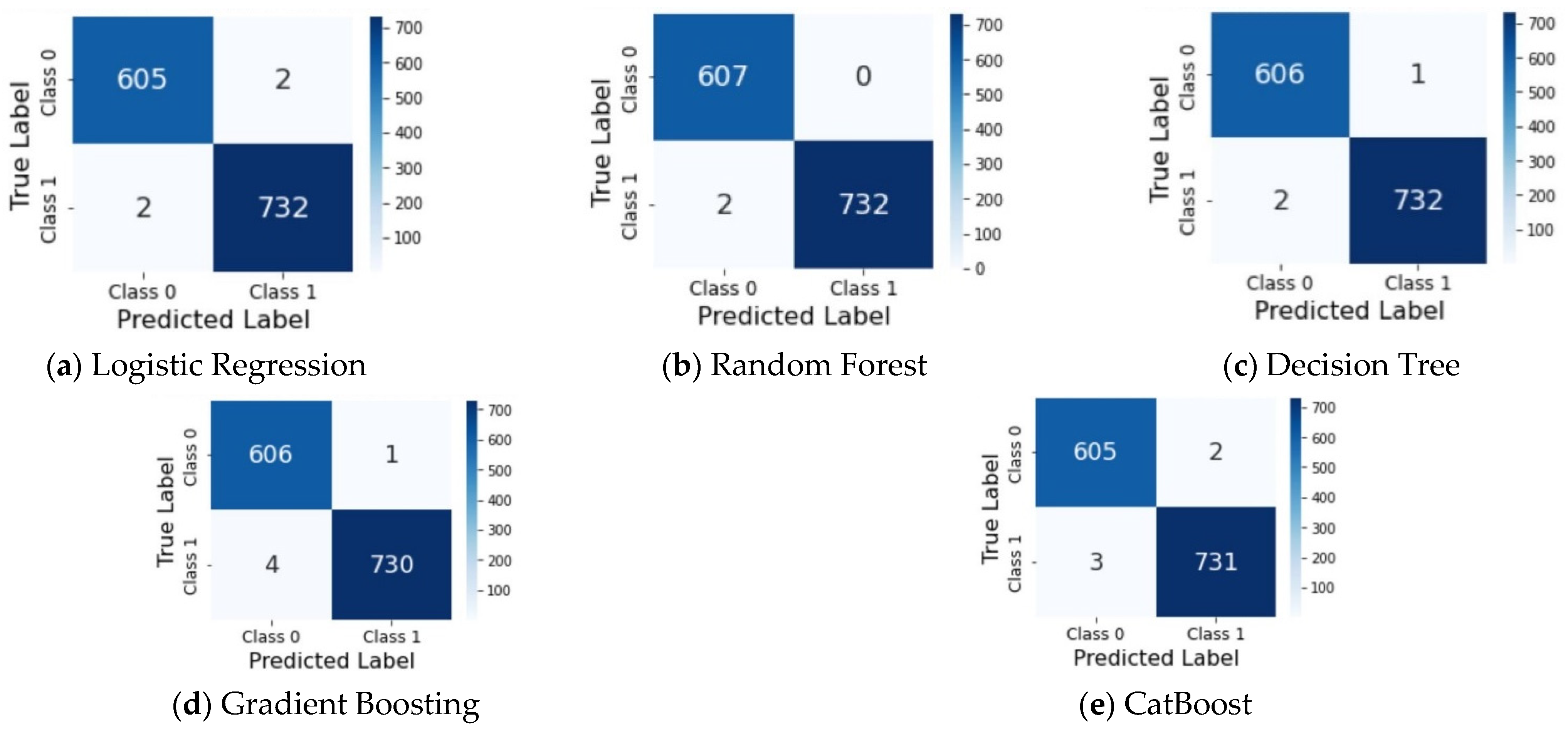

5.2. Performance of Machine Learning Models

5.3. Evaluation of the Proposed DDoS Mitigation Strategy

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A

| Selected Features (Important Parameters) | Unimportant Parameters |
|---|---|
| Packet Length Min | Flow Duration |
| Total Backward Packets | Fwd Packets Length Total |
| Avg Fwd Segment Size | Bwd Packets Length Total |
| Flow Bytes/s | Fwd Packet Length Max |
| Avg Packet Size | Fwd Packet Length Min |
| Protocol | Fwd Packet Length Mean |
| Flow IAT Std | Fwd Packet Length Std |
| Subflow Bwd Packets | Bwd Packet Length Max |
| Flow IAT Mean | Bwd Packet Length Min |
| Packet Length Mean | Bwd Packet Length Mean |
| | Bwd Packet Length Std |
| | Fwd PSH Flags |
| | Bwd PSH Flags |
| | Fwd URG Flags |
| | Bwd URG Flags |
| | Fwd Header Length |
| | Bwd Header Length |
| | FIN Flag Count |
| | PSH Flag Count |
| | ACK Flag Count |
| | ECE Flag Count |
| | Fwd Avg Bytes/Bulk |
| | Fwd Avg Packets/Bulk |
| | Fwd Avg Bulk Rate |
| | Bwd Avg Bytes/Bulk |
| | Bwd Avg Packets/Bulk |
| | Bwd Avg Bulk Rate |
| | Flow IAT Max |
| | Flow IAT Min |
| | Fwd IAT Total |
| | Fwd IAT Mean |
| | Fwd IAT Std |
| | Fwd IAT Max |
| | Fwd IAT Min |
| | Bwd IAT Total |
| | Bwd IAT Mean |
| | Bwd IAT Std |
| | Bwd IAT Max |
| | Bwd IAT Min |
| | Fwd Packets/s |
| | Bwd Packets/s |
| | Packet Length Max |
| | Packet Length Std |
| | Packet Length Variance |
| | SYN Flag Count |
| | RST Flag Count |
| | URG Flag Count |
| | CWE Flag Count |
| | Down/Up Ratio |
| | Subflow Bwd Bytes |
| | Init Fwd Win Bytes |
| | Init Bwd Win Bytes |
| | Fwd Act Data Packets |
| | Fwd Seg Size Min |
| | Active Mean |
| | Active Std |
| | Active Max |
| | Active Min |
| | Idle Mean |
| | Idle Std |
| | Idle Max |
| | Idle Min |
| | Label |
| | Avg Bwd Segment Size |
| | Subflow Fwd Packets |
| | Subflow Fwd Bytes |
| | Total Fwd Packets |
| | Flow Packets/s |

References

1. Amrish, R.; Bavapriyan, K.; Gopinaath, V.; Jawahar, A.; Kumar, C.V. DDoS Detection using Machine Learning Techniques. J. IoT Soc. Mob. Anal. Cloud 2022, 4, 24–32.
2. Karaca, O.; Sokullu, R.; Prasad, N.R.; Prasad, R. Application Oriented Multi Criteria Optimization in WSNs Using on AHP. Wirel. Pers. Commun. 2012, 65, 689–712.
3. Ahmad, Z.; Khan, A.S.; Shiang, C.W.; Abdullah, J.; Ahmad, F. Network intrusion detection system: A systematic study of machine learning and deep learning approaches. Trans. Emerg. Telecommun. Technol. 2021, 32, e4150.
4. Bhayo, J.; Shah, S.A.; Hameed, S.; Ahmed, A.; Nasir, J.; Draheim, D. Towards a machine learning-based framework for DDOS attack detection in software-defined IoT (SD-IoT) networks. Eng. Appl. Artif. Intell. 2023, 123, 106432.
5. Pranggono, B.; Arabo, A. COVID-19 pandemic cybersecurity issues. Internet Technol. Lett. 2021, 4, e247.
6. Mittal, M.; Kumar, K.; Behal, S. Deep learning approaches for detecting DDoS attacks: A systematic review. Soft Comput. 2023, 27, 13039–13075.
7. Saluja, K.; Bagchi, S.; Solanki, V.; Khan, M.N.A.; Dhamija, E.; Debnath, S.K. Exploring Robust DDoS Detection: A Machine Learning Analysis with the CICDDoS2019 Dataset. In Proceedings of the 2024 IEEE 5th India Council International Subsections Conference (INDISCON), Chandigarh, India, 22–24 August 2024; pp. 1–6.
8. Siddiqui, S.; Hameed, S.; Shah, S.A.; Ahmad, I.; Aneiba, A.; Draheim, D.; Dustdar, S. Toward Software-Defined Networking-Based IoT Frameworks: A Systematic Literature Review, Taxonomy, Open Challenges and Prospects. IEEE Access 2022, 10, 70850–70901.
9. Agrawal, S.; Sarkar, S.; Alazab, M.; Maddikunta, P.K.R.; Gadekallu, T.R.; Pham, Q.-V. Genetic CFL: Hyperparameter Optimization in Clustered Federated Learning. Comput. Intell. Neurosci. 2021, 2021, 7156420.
10. Prima, F.; Dylan, L.; Gunawan, A.A.S. Comparison of Machine Learning Models for Classification of DDoS Attacks. In Proceedings of the 2023 5th International Conference on Cybernetics and Intelligent System (ICORIS), Pangkapinang, Indonesia, 6–7 October 2023; pp. 1–6.
11. Parfenov, D.; Kuznetsova, L.; Yanishevskaya, N.; Bolodurina, I.; Zhigalov, A.; Legashev, L. Research Application of Ensemble Machine Learning Methods to the Problem of Multiclass Classification of DDoS Attacks Identification. In Proceedings of the 2020 International Conference Engineering and Telecommunication (En&T), Dolgoprudny, Russia, 25–26 November 2020; pp. 1–7.
12. Mohmand, M.I.; Hussain, H.; Khan, A.A.; Ullah, U.; Zakarya, M.; Ahmed, A.; Raza, M.; Rahman, I.U.; Haleem, M. A Machine Learning-Based Classification and Prediction Technique for DDoS Attacks. IEEE Access 2022, 10, 21443–21454.
13. Nagpal, B.; Sharma, P.; Chauhan, N.; Panesar, A. DDoS tools: Classification, analysis and comparison. In Proceedings of the 2015 2nd International Conference on Computing for Sustainable Global Development (INDIACom), New Delhi, India, 11–13 March 2015; pp. 342–346. Available online: https://ieeexplore.ieee.org/document/7100270 (accessed on 25 October 2024).
14. Dasari, S.; Kaluri, R. An Effective Classification of DDoS Attacks in a Distributed Network by Adopting Hierarchical Machine Learning and Hyperparameters Optimization Techniques. IEEE Access 2024, 12, 10834–10845.
15. Salmi, S.; Oughdir, L. Performance evaluation of deep learning techniques for DoS attacks detection in wireless sensor network. J. Big Data 2023, 10, 17.
16. Chovanec, M.; Hasin, M.; Havrilla, M.; Chovancová, E. Detection of HTTP DDoS Attacks Using NFStream and TensorFlow. Appl. Sci. 2023, 13, 6671.
17. Mishra, A.; Gupta, B.B.; Perakovic, D.; Penalvo, F.J.G.; Hsu, C.-H. Classification Based Machine Learning for Detection of DDoS attack in Cloud Computing. In Proceedings of the 2021 IEEE International Conference on Consumer Electronics (ICCE), Las Vegas, NV, USA, 10–12 January 2021; pp. 1–4.
18. Tan, L.; Pan, Y.; Wu, J.; Zhou, J.; Jiang, H.; Deng, Y. A New Framework for DDoS Attack Detection and Defense in SDN Environment. IEEE Access 2020, 8, 161908–161919. Available online: https://ieeexplore.ieee.org/abstract/document/9186014 (accessed on 25 October 2024).
19. Sangodoyin, A.O.; Akinsolu, M.O.; Pillai, P.; Grout, V. Detection and Classification of DDoS Flooding Attacks on Software-Defined Networks: A Case Study for the Application of Machine Learning. IEEE Access 2021, 9, 122495–122508.
20. Peng, S.; Tian, J.; Zheng, X.; Chen, S.; Shu, Z. DDoS Defense Strategy Based on Blockchain and Unsupervised Learning Techniques in SDN. Future Internet 2025, 17, 367.
21. Karpowicz, M.P. Adaptive tuning of network traffic policing mechanisms for DDoS attack mitigation systems. Eur. J. Control 2021, 61, 101–118.
22. Swami, R.; Dave, M.; Ranga, V. IQR-based approach for DDoS detection and mitigation in SDN. Def. Technol. 2023, 25, 76–87.
23. Alashhab, A.A.; Zahid, M.S.; Isyaku, B.; Elnour, A.A.; Nagmeldin, W.; Abdelmaboud, A.; Abdullah, T.A.A.; Maiwada, U.D. Enhancing DDoS Attack Detection and Mitigation in SDN Using an Ensemble Online Machine Learning Model. IEEE Access 2024, 12, 51630–51649.
24. Hamarshe, A.; Ashqar, H.I.; Hamarsheh, M. Detection of DDoS Attacks in Software Defined Networking Using Machine Learning Models. arXiv 2023, arXiv:2303.06513.
25. Elsayed, M.S.; Le-Khac, N.-A.; Dev, S.; Jurcut, A.D. DDoSNet: A Deep-Learning Model for Detecting Network Attacks. arXiv 2020, arXiv:2006.13981.
26. Khan, A.A.R.; Nisha, S.S. Efficient hybrid optimization based feature selection and classification on high dimensional dataset. Multimed. Tools Appl. 2023, 83, 58689–58727.
27. NSF NHERI DesignSafe|DesignSafe-CI. Available online: https://www.designsafe-ci.org/ (accessed on 26 October 2024).
28. DDoS 2019|Datasets|Research|Canadian Institute for Cybersecurity|UNB. Available online: https://www.unb.ca/cic/datasets/ddos-2019.html (accessed on 25 October 2024).
29. Kamaldeep; Malik, M.; Dutta, M. Feature Engineering and Machine Learning Framework for DDoS Attack Detection in the Standardized Internet of Things. IEEE Internet Things J. 2023, 10, 8658–8669.
30. Raju, V.N.G.; Lakshmi, K.P.; Jain, V.M.; Kalidindi, A.; Padma, V. Study the Influence of Normalization/Transformation process on the Accuracy of Supervised Classification. In Proceedings of the 2020 Third International Conference on Smart Systems and Inventive Technology (ICSSIT), Tirunelveli, India, 20–22 August 2020; pp. 729–735.
31. Ullah, F.; Babar, M.A. On the scalability of Big Data Cyber Security Analytics systems. J. Netw. Comput. Appl. 2022, 198, 103294.
32. Medar, R.; Rajpurohit, V.S.; Rashmi, B. Impact of Training and Testing Data Splits on Accuracy of Time Series Forecasting in Machine Learning. In Proceedings of the 2017 International Conference on Computing, Communication, Control and Automation (ICCUBEA), Pune, India, 7–18 August 2017; pp. 1–6.
33. Zou, X.; Hu, Y.; Tian, Z.; Shen, K. Logistic Regression Model Optimization and Case Analysis. In Proceedings of the 2019 IEEE 7th International Conference on Computer Science and Network Technology (ICCSNT), Dalian, China, 19–20 October 2019; pp. 135–139.
34. Kim, M.; Song, Y.; Wang, S.; Xia, Y.; Jiang, X. Secure Logistic Regression Based on Homomorphic Encryption: Design and Evaluation. JMIR Med. Inform. 2018, 6, e19.
35. Hai, T.; Zhou, J.; Adetiloye, O.A.; Zadeh, S.A.; Yin, Y.; Iwendi, C. DDoS Attack Prediction Using Decision Tree and Random Forest Algorithms. In Proceedings of ICACTCE’23—The International Conference on Advances in Communication Technology and Computer Engineering, Bolton, UK, 24–25 February 2023; Iwendi, C., Boulouard, Z., Kryvinska, N., Eds.; Springer Nature: Cham, Switzerland, 2023; pp. 37–46.
36. Breiman, L. Random Forest. January 2001. Available online: https://www.stat.berkeley.edu/~breiman/randomforest2001.pdf (accessed on 1 January 2025).
37. Sharma, P.; Singh, P.; Kumar, C.N.S.V. Web Guardian: Harnessing Web Mining to Combat Online Terrorism. In Proceedings of the 2024 International Conference on Signal Processing, Computation, Electronics, Power and Telecommunication (IConSCEPT), Karaikal, India, 4–5 July 2024; pp. 1–5.
38. Hajjouz, A.; Avksentieva, E. Evaluating the Effectiveness of the CatBoost Classifier in Distinguishing Benign Traffic, FTP BruteForce and SSH BruteForce Traffic. In Proceedings of the 2024 9th International Conference on Signal and Image Processing (ICSIP), Nanjing, China, 12–14 July 2024; pp. 351–358.
39. Saleem, M.; Azam, M.; Mubeen, Z.; Mumtaz, G. Machine Learning for Improved Threat Detection: LightGBM vs. CatBoost. J. Comput. Biomed. Inform. Jun 2024, 7, 571–580.
40. Samat, A.; Li, E.; Du, P.; Liu, S.; Xia, J. GPU-Accelerated CatBoost-Forest for Hyperspectral Image Classification Via Parallelized mRMR Ensemble Subspace Feature Selection. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 3200–3214.
41. Ramzan, M.; Shoaib, M.; Altaf, A.; Arshad, S.; Iqbal, F.; Castilla, Á.K.; Ashraf, I. Distributed Denial of Service Attack Detection in Network Traffic Using Deep Learning Algorithm. Sensors 2023, 23, 8642.
42. Chaira, M.; Belhenniche, A.; Chertovskih, R. Enhancing DDoS Attacks Mitigation Using Machine Learning and Blockchain-Based Mobile Edge Computing in IoT. Computation 2025, 13, 158.
43. Raza, M.S.; Sheikh, M.N.A.; Hwang, I.-S.; Ab-Rahman, M.S. Feature-Selection-Based DDoS Attack Detection Using AI Algorithms. Telecom 2024, 5, 333–346.
44. Chu, T.S.; Si, W.; Simoff, S.; Nguyen, Q.V. A Machine Learning Classification Model Using Random Forest for Detecting DDoS Attacks. In Proceedings of the 2022 International Symposium on Networks, Computers and Communications (ISNCC), Shenzhen, China, 19–22 July 2022; pp. 1–7.

| Flow_ID (Behavioral Key) | FRL | CIR_pps | Burst | CIR_Mbps | Burst_Mbps |
|---|---|---|---|---|---|
| 17 | (−0.001, 412.04] | (29,883.18, 5,353,271.0] | (38,203.44, 15,491,469.0] | 3 | 0.0 |
| 17 | (1.0824 × 10⁹, 2.656 × 10⁹] | (0.999, 2.0] | (−0.001, 59.507] | 3 | 0.0 |
| 17 | (1.350 × 10⁷, 6.264 × 10⁷] | (2.0, 7.0] | (−0.001, 59.507] | 3 | 0.0 |
| 17 | (2.552 × 10⁴, 1.350 × 10⁷] | (182.567, 6877.667] | (59.507, 11,895.958] | 3 | 0.0 |

| Model | Accuracy Train (%) | Accuracy Valid (%) | Accuracy Test (%) | Precision Train (%) | Precision Valid (%) | Precision Test (%) | Recall Train (%) | Recall Valid (%) | Recall Test (%) | F1-Score Train (%) | F1-Score Valid (%) | F1-Score Test (%) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| LR | 99.73 | 99.70 | 99.70 | 99.73 | 99.70 | 99.70 | 99.73 | 99.70 | 99.70 | 99.73 | 99.70 | 99.70 |
| RF | 99.98 | 99.70 | 99.85 | 99.98 | 99.70 | 99.85 | 99.98 | 99.70 | 99.85 | 99.98 | 99.70 | 99.85 |
| DT | 100 | 99.78 | 99.78 | 100 | 99.78 | 99.77 | 100 | 99.78 | 99.77 | 100 | 99.78 | 99.77 |
| GB | 99.88 | 99.78 | 99.63 | 99.88 | 99.78 | 99.62 | 99.88 | 99.78 | 99.62 | 99.88 | 99.78 | 99.62 |
| CB | 99.75 | 99.70 | 99.63 | 99.75 | 99.70 | 99.62 | 99.75 | 99.70 | 99.62 | 99.75 | 99.70 | 99.62 |

| Model | Accuracy Train (%) | Accuracy Valid (%) | Accuracy Test (%) | Precision Train (%) | Precision Valid (%) | Precision Test (%) | Recall Train (%) | Recall Valid (%) | Recall Test (%) | F1-Score Train (%) | F1-Score Valid (%) | F1-Score Test (%) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| LR | 97.65 | 97.93 | 97.39 | 97.71 | 97.97 | 97.48 | 97.65 | 97.93 | 97.39 | 97.65 | 97.93 | 97.39 |
| RF | 100 | 99.89 | 99.78 | 100 | 99.89 | 99.78 | 100 | 99.89 | 99.78 | 100 | 99.89 | 99.78 |
| DT | 100 | 99.72 | 99.85 | 100 | 99.72 | 99.85 | 100 | 99.72 | 99.85 | 100 | 99.72 | 99.85 |
| GB | 100 | 99.89 | 99.70 | 100 | 99.89 | 99.70 | 100 | 99.89 | 99.70 | 100 | 99.89 | 99.70 |
| CB | 99.97 | 99.94 | 99.63 | 99.97 | 99.94 | 99.63 | 99.97 | 99.94 | 99.63 | 99.97 | 99.94 | 99.63 |

| Algorithm | Accuracy (%) | Precision (%) | Recall (%) | F1-Score (%) | Notes |
|---|---|---|---|---|---|
| RF [7] | 99.11 | 99 | 99.23 | 99.11 | 24 Features |
| RF [41] | 86 | 78 | 70 | 73 | - |
| RF [42] | 99.62 | 99.34 | 98.11 | 98.72 | 13 Features |
| RF [43] | 99 | 99 | 97 | 99 | 16 Features |
| Our proposed RF | 99.85 | 99.85 | 99.85 | 99.85 | 10 Features |
| DT [7] | 98.25 | 97.55 | 98.25 | 97.89 | 24 Features |
| DT [41] | 77 | 92 | 60 | 40 | - |
| DT [42] | 96.80 | 95.10 | 99.67 | 97.33 | 13 Features |
| DT [43] | 91 | 91 | 91 | 94 | 16 Features |
| Our proposed DT | 99.78 | 99.77 | 99.77 | 99.77 | 10 Features |
| CB [11] | - | 96.7 | 97 | 96.8 | 24 Features |
| Our proposed CB | 99.63 | 99.62 | 99.62 | 99.62 | 10 Features |
| LR [41] | 95 | 86 | 11 | 19 | - |
| Our proposed LR | 99.70 | 99.70 | 99.70 | 99.70 | 10 Features |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ibrahim, A.J.; Répás, S.R.; Bektaş, N. Feature-Optimized Machine Learning Approaches for Enhanced DDoS Attack Detection and Mitigation. Computers 2025, 14, 472. https://doi.org/10.3390/computers14110472

Ibrahim AJ, Répás SR, Bektaş N. Feature-Optimized Machine Learning Approaches for Enhanced DDoS Attack Detection and Mitigation. Computers. 2025; 14(11):472. https://doi.org/10.3390/computers14110472

Chicago/Turabian Style: Ibrahim, Ahmed Jamal, Sándor R. Répás, and Nurullah Bektaş. 2025. "Feature-Optimized Machine Learning Approaches for Enhanced DDoS Attack Detection and Mitigation" Computers 14, no. 11: 472. https://doi.org/10.3390/computers14110472

APA Style: Ibrahim, A. J., Répás, S. R., & Bektaş, N. (2025). Feature-Optimized Machine Learning Approaches for Enhanced DDoS Attack Detection and Mitigation. Computers, 14(11), 472. https://doi.org/10.3390/computers14110472