4.1. Results

This section presents and describes the results achieved by the proposed model.

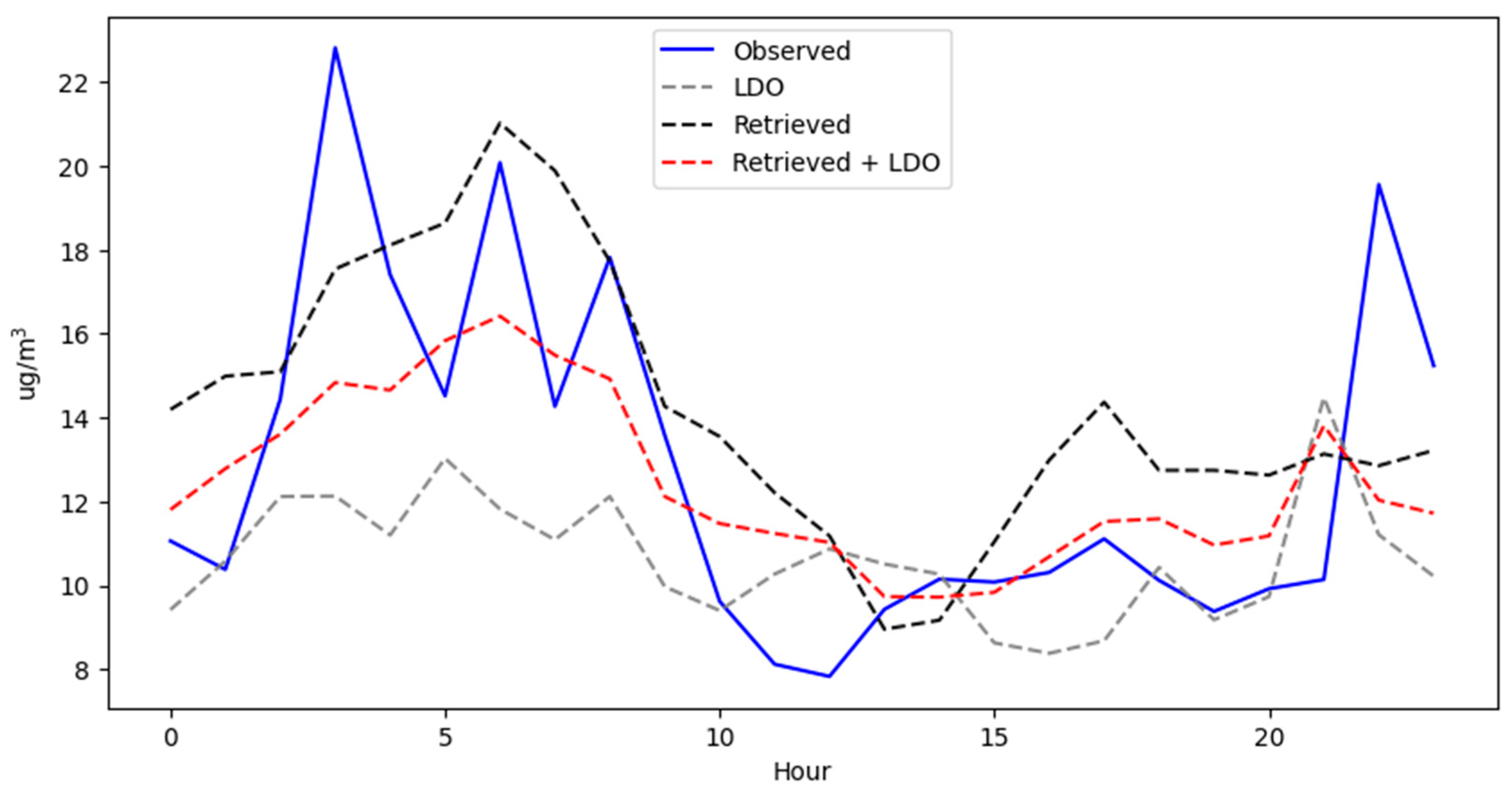

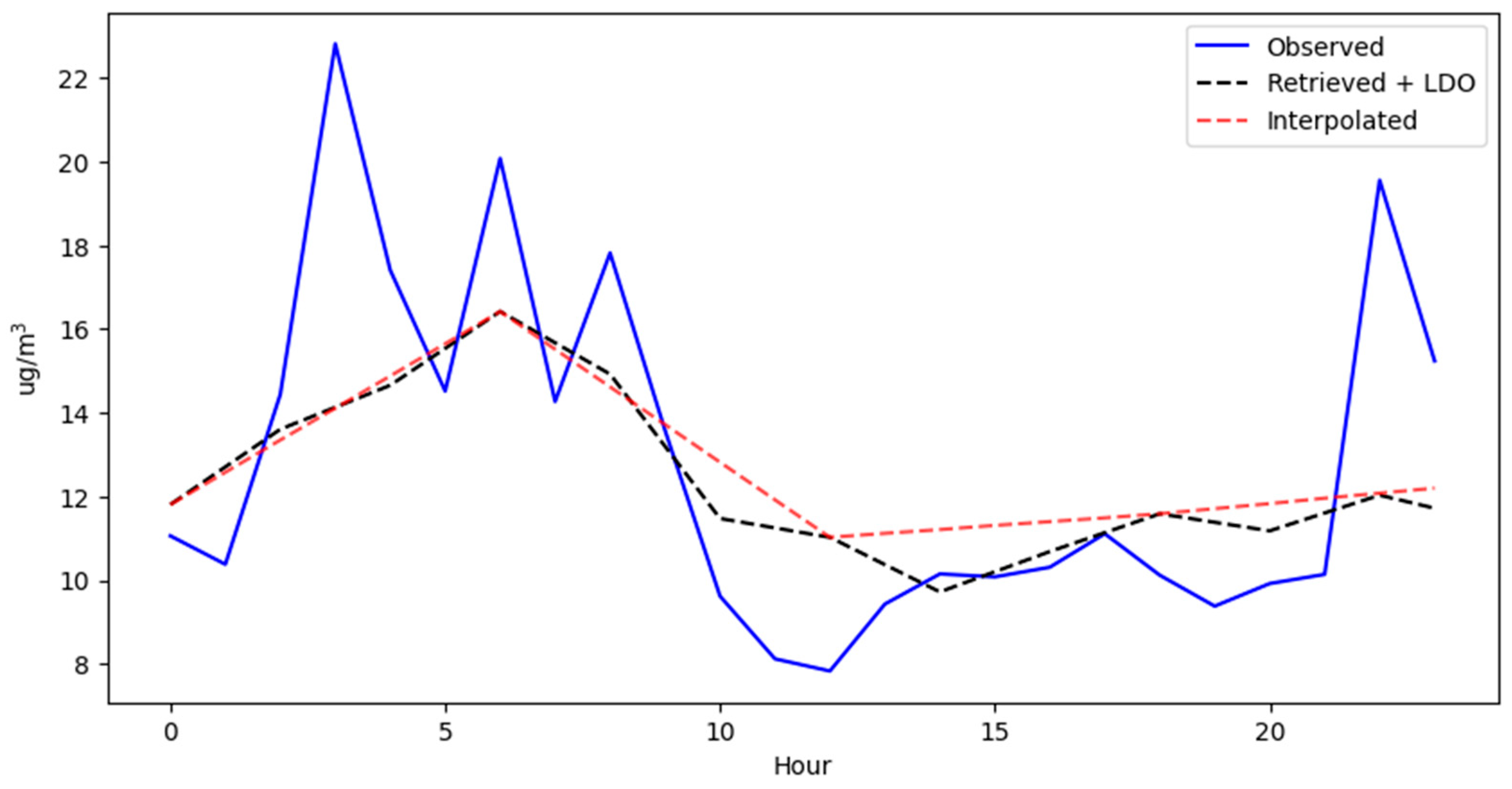

According to Table 5, each element of the model contributes to improving the predictions at most monitoring stations. The exception is the Espinar station, where the retrieved element increases the RMSE instead of reducing it. The optimal linear interpolation was 6 for Tala and 5 for both Uchumayo and Espinar.

According to Table 6, the elements of the model do not all contribute equally to improving the MAPE. For the Tala station, the elements that do not improve the MAPE are the retrieved element, to a greater extent, and linear interpolation, to a lesser extent. For the Uchumayo station, all elements contribute to the improvement. For the Espinar station, linear interpolation does not improve the MAPE.

According to Table 7, all the elements of the model increase the R correlation at the stations, except for linear interpolation at the Espinar station, which decreases the correlation of LDO + retrieved from 0.5470 to 0.5332.
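For reference, the three metrics used in Tables 5 to 7 are commonly defined as shown below. These are the standard textbook definitions and are not taken from the source; $y_i$ denotes an observed PM2.5 value, $\hat{y}_i$ the corresponding prediction, $\bar{y}$ and $\bar{\hat{y}}$ their means, and $n$ the number of test points. The MAPE is written as a fraction rather than a percentage, which is an assumption based on the magnitude of the values reported in this section.

```latex
\mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2}
\qquad
\mathrm{MAPE} = \frac{1}{n}\sum_{i=1}^{n}\left|\frac{y_i - \hat{y}_i}{y_i}\right|
\qquad
R = \frac{\sum_{i=1}^{n}\left(y_i-\bar{y}\right)\left(\hat{y}_i-\bar{\hat{y}}\right)}
         {\sqrt{\sum_{i=1}^{n}\left(y_i-\bar{y}\right)^2}\,
          \sqrt{\sum_{i=1}^{n}\left(\hat{y}_i-\bar{\hat{y}}\right)^2}}
```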

4.2. Discussions

4.2.1. Comparison with Benchmark Models

The benchmark models implemented in this study include LSTM, BiLSTM, GRU, and BiGRU.

LSTM is a type of recurrent neural network that works with sequential or time series data, designed to solve the vanishing gradient problem through a memory cell that allows remembering or forgetting information, controlled by three main gates: the forget gate, input gate, and output gate.
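The gate mechanism described above can be written compactly in the usual textbook form. The symbols are notation introduced here for illustration and do not come from the source: $x_t$ is the input at time $t$, $h_t$ the hidden state, $c_t$ the memory cell, $\sigma$ the logistic sigmoid, $\odot$ the element-wise product, and $W$, $U$, $b$ the learned weights and biases.

```latex
\begin{aligned}
f_t &= \sigma\left(W_f x_t + U_f h_{t-1} + b_f\right) && \text{(forget gate)}\\
i_t &= \sigma\left(W_i x_t + U_i h_{t-1} + b_i\right) && \text{(input gate)}\\
o_t &= \sigma\left(W_o x_t + U_o h_{t-1} + b_o\right) && \text{(output gate)}\\
\tilde{c}_t &= \tanh\left(W_c x_t + U_c h_{t-1} + b_c\right) && \text{(candidate memory)}\\
c_t &= f_t \odot c_{t-1} + i_t \odot \tilde{c}_t && \text{(memory cell update)}\\
h_t &= o_t \odot \tanh\left(c_t\right) && \text{(hidden state)}
\end{aligned}
```

The additive update of $c_t$ is what lets gradients flow across many time steps without vanishing as quickly as in a plain recurrent network.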

BiLSTM is a variant of LSTM that processes information in two directions. One LSTM network reads the sequence forward, while another LSTM network reads it backward, and in the end, both representations are combined.

GRU is a recurrent neural network very similar to LSTM, but with a simpler and lighter structure. GRU has only two gates: a reset gate and an update gate.
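For comparison with LSTM, the two GRU gates can be sketched in a common textbook form. The notation is introduced here for illustration and is not from the source: $x_t$ is the input, $h_t$ the hidden state, $\sigma$ the logistic sigmoid, $\odot$ the element-wise product, and $W$, $U$, $b$ the learned parameters. Some libraries swap the roles of $z_t$ and $1 - z_t$ in the last line; the two conventions are equivalent.

```latex
\begin{aligned}
z_t &= \sigma\left(W_z x_t + U_z h_{t-1} + b_z\right) && \text{(update gate)}\\
r_t &= \sigma\left(W_r x_t + U_r h_{t-1} + b_r\right) && \text{(reset gate)}\\
\tilde{h}_t &= \tanh\left(W_h x_t + U_h \left(r_t \odot h_{t-1}\right) + b_h\right) && \text{(candidate state)}\\
h_t &= \left(1 - z_t\right) \odot h_{t-1} + z_t \odot \tilde{h}_t && \text{(hidden state)}
\end{aligned}
```

There is no separate memory cell, so a GRU layer has fewer parameters than an LSTM layer of the same size.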

BiGRU, similar to BiLSTM, is an extension of GRU that processes the sequence in two directions, forward and backward.

The hyperparameters used to implement the benchmark models are detailed in Table 8.

All the implemented models use the same number of layers and the same number of neurons in each layer. They were implemented in Jupyter using the TensorFlow 2.18.0 library and Python 3.11.7. The lookback for each model is 48 h. The models were compiled with ‘mse’ as the loss function and ‘adam’ as the optimizer, and trained with a batch size of 50 for 50 epochs. The results achieved are shown and described below.
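The setup described above can be sketched roughly as follows. This is a minimal illustration, not the authors' code: the function names `make_windows` and `build_benchmark`, the single recurrent layer, the value `units=64`, and the one-step `horizon` are all assumptions, since the actual layer and neuron counts are given in Table 8. Only the 48 h lookback, the ‘mse’ loss, and the ‘adam’ optimizer come from the text.

```python
def make_windows(series, lookback=48, horizon=1):
    """Split a 1-D series into (input window, target) pairs."""
    X, y = [], []
    for start in range(len(series) - lookback - horizon + 1):
        X.append(series[start:start + lookback])
        y.append(series[start + lookback:start + lookback + horizon])
    return X, y


def build_benchmark(kind="LSTM", units=64, lookback=48, horizon=1):
    """Build and compile one benchmark network (requires TensorFlow).

    kind is one of "LSTM", "BiLSTM", "GRU", "BiGRU".
    units and horizon are hypothetical values, not taken from Table 8.
    """
    import tensorflow as tf  # imported lazily so make_windows works without it

    cells = {"LSTM": tf.keras.layers.LSTM, "GRU": tf.keras.layers.GRU}
    bidirectional = kind.startswith("Bi")
    cell = cells[kind[2:] if bidirectional else kind](units)
    recurrent = tf.keras.layers.Bidirectional(cell) if bidirectional else cell

    model = tf.keras.Sequential([
        tf.keras.Input(shape=(lookback, 1)),  # 48 hourly values, one feature
        recurrent,
        tf.keras.layers.Dense(horizon),
    ])
    model.compile(loss="mse", optimizer="adam")
    return model
```

Training would then call `model.fit(X, y, batch_size=50, epochs=50)` after reshaping the windows to `(samples, 48, 1)`.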

According to Table 9, in terms of the RMSE, for the Tala station, GRU shows the lowest RMSE, equal to 4.4382 µg/m³, while the proposed model ranks last. For the Uchumayo station, BiGRU presents the lowest RMSE, equal to 4.3567 µg/m³, with the proposed model ranking second with an RMSE of 4.5296 µg/m³. Finally, for the Espinar station, the proposed model is the best, achieving the lowest RMSE, equal to 11.4006 µg/m³.

According to Table 10, in terms of the MAPE, for the Tala station, BiLSTM shows the lowest MAPE at 0.4355. For the Uchumayo station, BiGRU shows the lowest MAPE at 0.4725. And, for the Espinar station, BiLSTM shows the lowest MAPE at 0.7302. The proposed model shows the highest MAPE across all stations.

According to Table 11, in terms of the R, for the Tala station, GRU shows the highest correlation at 0.5353, while the proposed model ranks last with 0.4282. For the Uchumayo station, BiGRU shows the highest correlation at 0.3529, while the proposed model ranks second with 0.2943. For the Espinar station, the proposed model shows the highest correlation at 0.5332.

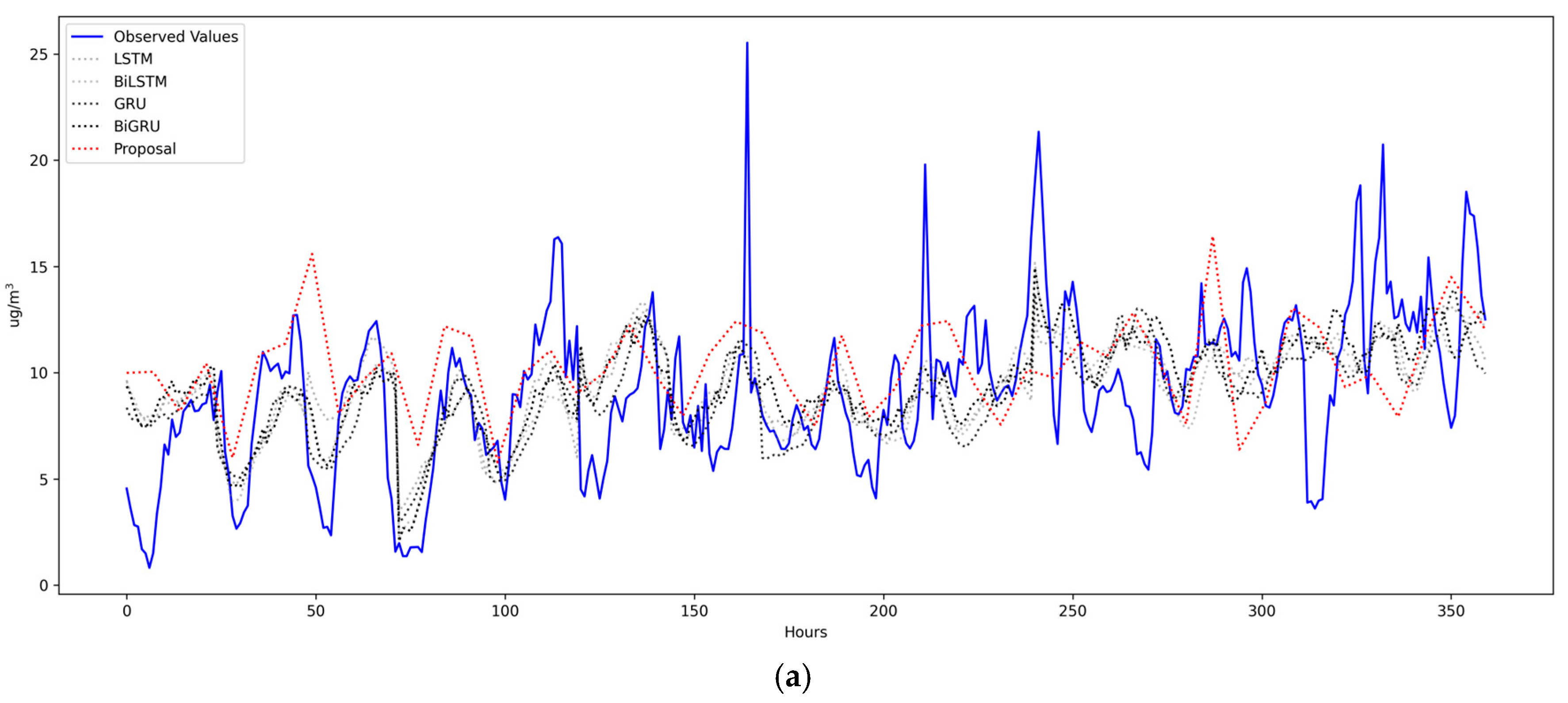

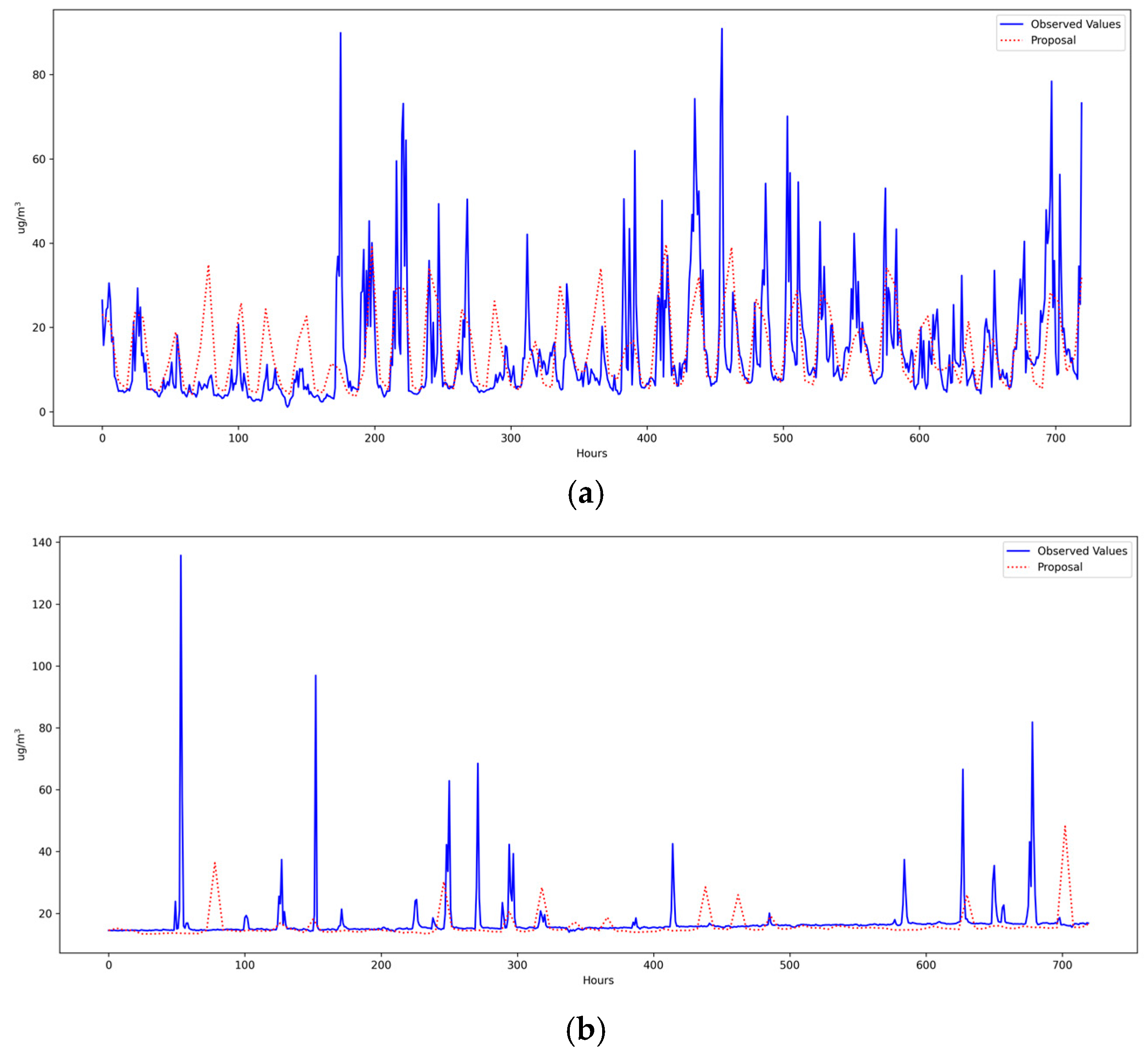

According to Figure 6, the proposed model performs better than the benchmark models at the stations with greater data variability (Uchumayo and Espinar). However, at the Tala station, where data variability is lower, all the benchmark models outperform the proposed model.

In summary, each metric identifies different models as the best performers. This is due to the way each metric is calculated: the RMSE heavily penalizes large errors since it squares them, while the MAPE penalizes relative errors much more when the actual value is small.
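A small numeric illustration makes this concrete. The values below are invented toy data, not results from the study, and the MAPE is computed as a fraction, which is an assumption based on the magnitudes reported in the tables.

```python
import math


def rmse(actual, predicted):
    """Root mean squared error: large errors dominate because they are squared."""
    return math.sqrt(sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual))


def mape(actual, predicted):
    """Mean absolute percentage error as a fraction: errors are divided by the actual value."""
    return sum(abs(a - p) / abs(a) for a, p in zip(actual, predicted)) / len(actual)


# Toy PM2.5 series with two low and two high readings (hypothetical values).
actual = [2.0, 2.0, 50.0, 50.0]
model_a = [4.0, 4.0, 50.0, 50.0]  # misses by 2 on the low readings
model_b = [2.0, 2.0, 44.0, 44.0]  # misses by 6 on the high readings

print(rmse(actual, model_a), mape(actual, model_a))
print(rmse(actual, model_b), mape(actual, model_b))
```

Model A has the lower RMSE because its absolute errors are small, while model B has the lower MAPE because its errors are small relative to the actual values. The two metrics therefore rank the same pair of models in opposite order.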

4.2.2. Analysis of Prediction Errors

To analyze the errors in the predictions of the implemented models, the corresponding heatmaps were generated.
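A heatmap of this kind is built from a matrix with one row of absolute errors per model and one column per test hour. The sketch below shows one possible way to assemble it; the function names and the toy values are hypothetical and are not taken from the study.

```python
def absolute_error_matrix(actual, predictions):
    """Return one row of |actual - predicted| per model, keyed by model name."""
    return {name: [abs(a - p) for a, p in zip(actual, pred)]
            for name, pred in predictions.items()}


def worst_hour(errors):
    """Index of the largest absolute error for each model."""
    return {name: max(range(len(row)), key=row.__getitem__)
            for name, row in errors.items()}


# Toy data: four test hours and two models (hypothetical values).
actual = [10, 12, 40, 11]
predictions = {
    "LSTM": [11, 12, 25, 10],
    "proposed": [10, 13, 38, 20],
}

errors = absolute_error_matrix(actual, predictions)
print(errors)
print(worst_hour(errors))
```

The rows of `errors` can be passed as a 2-D array to a plotting function such as matplotlib's `imshow` to draw the heatmap, with the hour index on the horizontal axis.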

According to Figure 7a, for the Tala station, the absolute errors range between 0 and 80. All models show similar errors, concentrated around hour 2340 of the test data. However, the proposed model shows additional clusters of high errors between hours 2700 and 2760, which degraded the model’s performance at this station.

In contrast, for the Uchumayo station, according to Figure 7b, the absolute errors are smaller than those in the previous station, ranging between 0 and 25. Likewise, greater variability can be observed in the locations of the errors, but overall, all models show a similar number of errors, which is reflected in the RMSE, according to Table 6 shown earlier.

Finally, for the Espinar station, according to Figure 7c, the absolute errors are larger than those in the previous stations, ranging between 0 and 120. The distribution of the errors is similar for all models, with the highest concentrations between hours 2772 and 3234. However, it can be seen that the proposed model shows a lower concentration of errors compared to the benchmark models, which is reflected in the model’s performance.

At this point, it is important to highlight the difference between the predictions of the benchmark models, which are based on recurrent neural networks (RNNs), and the predictions of the proposed model. The benchmark models tend to produce higher errors at stations with greater variability, such as the Tala and Espinar stations, due to the nature of their architectures. During training, they minimize the average MSE, which leads the models to avoid predicting extreme values, peaks, or abrupt drops, resulting in smoother curves. In contrast, the proposed model applies smoothing based on linear interpolation; however, this is not necessarily applied to the extreme elements but rather to a set of predetermined values, allowing it to predict extreme values, as shown in Figure 6, thus producing better estimates for stations with greater variability.

4.2.3. Statistical Analysis

To determine whether the predictions of the proposed model differ significantly from those of the benchmark models, the two-sample Kolmogorov–Smirnov (KS) test is applied in this section.

According to Table 12, all p-values are less than 0.05; therefore, the null hypothesis is rejected in favor of the alternative. This indicates that there is indeed a significant difference between the proposed model and the benchmark models across all monitoring stations.

As observed in Figure 5, the predictions of the recurrent neural network-based models are similar to one another but not to those of the proposed model. The KS test confirms this, indicating a significant difference between the proposed model and the benchmark models. This difference does not imply that the proposed model is superior to the benchmark models, or vice versa.
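The two-sample KS test used in this subsection compares the empirical distribution functions of two samples and takes the largest gap between them as its statistic. The sketch below is a pure-Python illustration under two assumptions: that the samples are the prediction series of two models, and that the large-sample critical value is an acceptable approximation. The source does not state which implementation was used; in practice, `scipy.stats.ks_2samp` returns both the statistic and a p-value.

```python
import math
from bisect import bisect_right


def ks_statistic(sample_a, sample_b):
    """Largest absolute gap between the two empirical CDFs."""
    a, b = sorted(sample_a), sorted(sample_b)
    d = 0.0
    for x in a + b:  # the maximum gap is always reached at an observed value
        cdf_a = bisect_right(a, x) / len(a)
        cdf_b = bisect_right(b, x) / len(b)
        d = max(d, abs(cdf_a - cdf_b))
    return d


def ks_critical_value(n, m, alpha=0.05):
    """Large-sample critical value for sample sizes n and m (approximation)."""
    c_alpha = math.sqrt(-0.5 * math.log(alpha / 2))
    return c_alpha * math.sqrt((n + m) / (n * m))


def distributions_differ(sample_a, sample_b, alpha=0.05):
    """True if the null hypothesis of a common distribution is rejected."""
    d = ks_statistic(sample_a, sample_b)
    return d > ks_critical_value(len(sample_a), len(sample_b), alpha)
```

A rejection only says that the two prediction distributions differ. It carries no information about which model is closer to the observed values, which is why the error metrics are still needed.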

4.2.4. Computational Cost

Table 13 shows the computational cost required to train each of the models implemented in this study, including the proposed model, on a computer with a Core i7-13700H processor at 2.40 GHz, 16 GB of RAM, running in a Windows 11 environment.

According to Table 13, the average training times of the benchmark models range between 300.96 and 470.27 s, with LSTM being the least costly and BiGRU the most costly. In contrast, the proposed model requires a much lower computational cost, averaging 0.4140 s, which represents 0.14% of the time required by LSTM and 0.09% of the time required by BiGRU.
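The percentages quoted above follow directly from the average training times. The short check below reproduces them and also expresses the same comparison as a speed-up factor; the variable and function names are illustrative only.

```python
# Average training times in seconds, as reported in the text.
lstm_seconds = 300.96
bigru_seconds = 470.27
proposed_seconds = 0.4140


def share_percent(part, whole):
    """Express part as a percentage of whole."""
    return 100.0 * part / whole


print(round(share_percent(proposed_seconds, lstm_seconds), 2))   # share of LSTM time
print(round(share_percent(proposed_seconds, bigru_seconds), 2))  # share of BiGRU time
print(round(lstm_seconds / proposed_seconds))   # speed-up factor versus LSTM
print(round(bigru_seconds / proposed_seconds))  # speed-up factor versus BiGRU
```

In other words, the proposed model trains roughly 727 times faster than LSTM and roughly 1136 times faster than BiGRU on the reported hardware.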

Rapid and low-cost air quality forecasts, especially in mining areas, have direct and significant implications for public health and environmental management. First, having accessible predictive systems makes it possible to anticipate critical episodes of particulate matter pollution, facilitating the early issuance of health alerts to the exposed population. This can reduce the incidence of respiratory and cardiovascular diseases, particularly among vulnerable groups such as children, the elderly, and mining workers.

From an environmental perspective, these models contribute to the more efficient management of mining impacts, as they provide real-time information to adjust or temporarily halt operations during high-pollution events. Moreover, they promote transparency and citizen participation by allowing communities to access data and forecasts about their environment.

Likewise, it is important to highlight that the main weakness of complex deep learning models is the high computational cost required for their training. The proposed model greatly reduces this gap, making it a very good alternative for settings with limited computational resources.

4.2.5. Additional Tests

To improve the reliability of the results, additional tests were conducted using two new PM2.5 level datasets that do not correspond to mining areas but rather to urban areas, namely, the Pacocha and Pardo stations located in the city of Ilo, in southern Peru.

According to Table 14, it can be observed that the model’s performance is similar to that obtained for the three mining area datasets, with a noteworthy performance at the Pardo station, showing an RMSE of 10.2005 µg/m³ and a MAPE of 0.3152, which is higher than those obtained previously. This demonstrates that the model can be used not only for PM2.5 datasets in mining areas but also in other contexts.

Figure 8 shows 720 predicted days for both datasets.

4.2.6. Limitations of the Study

This study has several limitations that could be addressed in future work to improve the results and to extend the model to other contexts. As can be seen from the results obtained, the prediction errors at all stations are still high, which indicates that there is still considerable room for improvement.

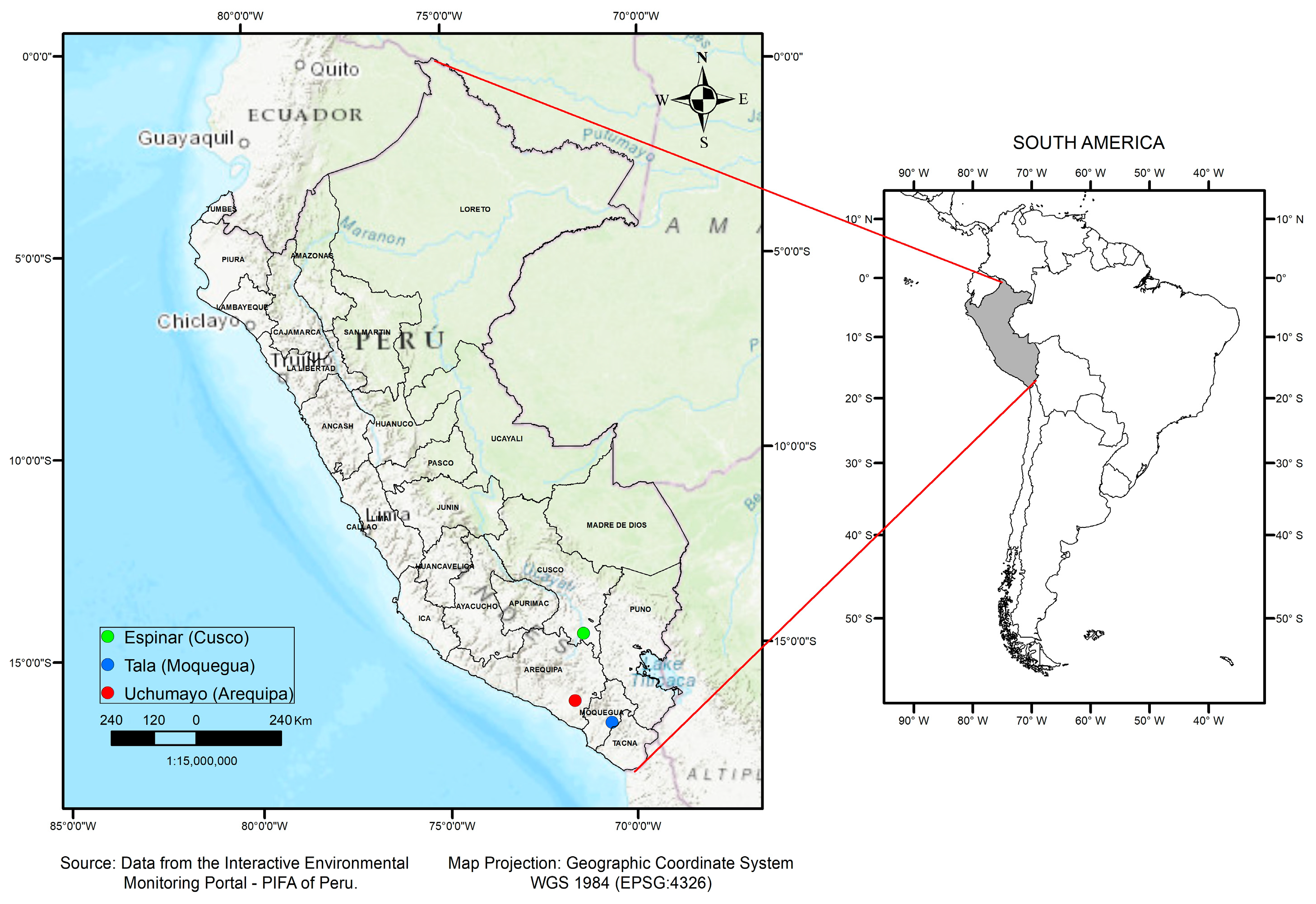

The first limitation lies in the number of datasets used, which is only three. In future work, datasets from other mines in Peru or other countries could be considered. Likewise, the monitoring stations are not located exactly at the mines but in the nearest cities.

Second, instead of using only two days for the retrieved data phase, a larger number of days could be considered. Similarly, instead of an arithmetic mean, a weighted mean based on the correlation levels could be implemented. Likewise, instead of considering only 20 or 30 days of historical data when searching for similar series, the search could be extended to a larger number of days.
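The weighted mean suggested in the second limitation could take a form like the sketch below. It is a hypothetical illustration of the idea, not part of the proposed model: the function names, the use of correlations as raw weights, and the clipping of negative correlations to zero are all assumptions.

```python
def arithmetic_block(blocks):
    """Plain hour-by-hour mean of the retrieved blocks."""
    n = len(blocks)
    return [sum(values) / n for values in zip(*blocks)]


def weighted_block(blocks, correlations):
    """Hour-by-hour mean weighted by each block's correlation with the query.

    Negative correlations are clipped to zero (an assumption), and the
    function falls back to the arithmetic mean if every weight is zero.
    """
    weights = [max(c, 0.0) for c in correlations]
    total = sum(weights)
    if total == 0:
        return arithmetic_block(blocks)
    return [sum(w * v for w, v in zip(weights, values)) / total
            for values in zip(*blocks)]


# Two retrieved blocks of two hours each (toy values).
blocks = [[10.0, 20.0], [30.0, 40.0]]
print(arithmetic_block(blocks))
print(weighted_block(blocks, [0.75, 0.25]))
```

With equal correlations the two functions agree, so the weighted version only changes the forecast when some retrieved days match the recent series better than others.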

Third, instead of linear interpolation, other types of interpolation could be considered, including Lagrange, Stineman, Spline, Kriging, Inverse Distance Weighting, or others.

Fourth, the proposed model is univariate, as it only considers PM2.5 levels; for future work, a multivariate model could be implemented by including other variables, such as wind speed, wind direction, humidity, precipitation, solar radiation, altitude, and vegetation cover. It is also important to highlight that a multivariate model will require a larger amount of data, as well as variables that are highly correlated with the PM2.5 levels to be predicted.

Fifth, the proposed model is implemented for hourly data and predicts 24 h blocks. For daily or monthly data, or for sampling intervals shorter than one hour, other adaptations would be needed, such as organizing the data by months, years, or similar.