Teaching Machine Learning to Undergraduate Electrical Engineering Students †

Abstract

1. Introduction

- Human Factors Pedagogical Framework: The teaching methodology is framed within a human factors perspective; human factors is an area of industrial engineering that optimizes human performance in systems [12]. Although human factors can be applied to optimize pedagogy and the associated process for teaching ML and computational math, it is rarely applied in the literature.

- Implementation Details: We provide detailed descriptions of both successful and unsuccessful teaching strategies. In addition to previously published strategies [9], we also describe novel strategies, including the resubmit grading policy, oral midterms, specific software requirements, and hands-on visualization activities for understanding the histogram and convolution. Our goal is to provide actionable guidance for other educators.

- Curriculum Integration: This paper shows how key concepts and skills can be taught and reinforced across multiple courses from the first to the final year. We have not seen curriculum integration addressed in the existing ML and computational math education literature.

- Responsible Use of Generative AI: We present strategies to encourage students to use generative AI properly, with attention to issues such as citation, reliability, and using these tools to aid, rather than replace, engineers in report writing and coding.

- Student Perspective: This paper incorporates perspectives from the student coauthors (W.Z., J.R., and C.B.), representing both Gen Z and Millennial students, who evaluated the assignment and lecture materials for the Computing for Electrical Engineers course.

- RQ1: What ML and computational math skills and concepts do EEs need to learn in their sophomore year to be successful in (1) EE internships after their sophomore year and (2) EE positions after graduation?

- RQ2: What are the most effective approaches for teaching these skills and concepts given our demographics? This question is further divided into the following:

- RQ2a: What are the best general strategies? (E.g., optimal mix of lecture and hands-on activities; methods for working with Gen Z students; grading mechanisms; approaches for alleviating stress about making errors; etc.)

- RQ2b: What are the best specific strategies for traditional EE computational methods? (E.g., time/frequency/time-frequency domain analysis, filtering & convolution, Fourier transform, time-frequency spectrogram, sampling, etc.)

- RQ2c: What are the best specific strategies for teaching recent EE computational methods in ML? (E.g., using ML algorithms properly, recognizing underfit/overfit, visualizing data, etc.)

- RQ2d: What are the best specific strategies for teaching programming skills for ML and computational math? (I.e., writing reusable, clean, well-documented code, and learning new programming languages and development environments.)

- RQ2e: What strategies are best for teaching engineering report writing skills? (I.e., initially how to structure a report and how to write clearly; more recently, how to cite properly, how to use generative AI appropriately, and how to use report templates.)

2. Background and Literature Review

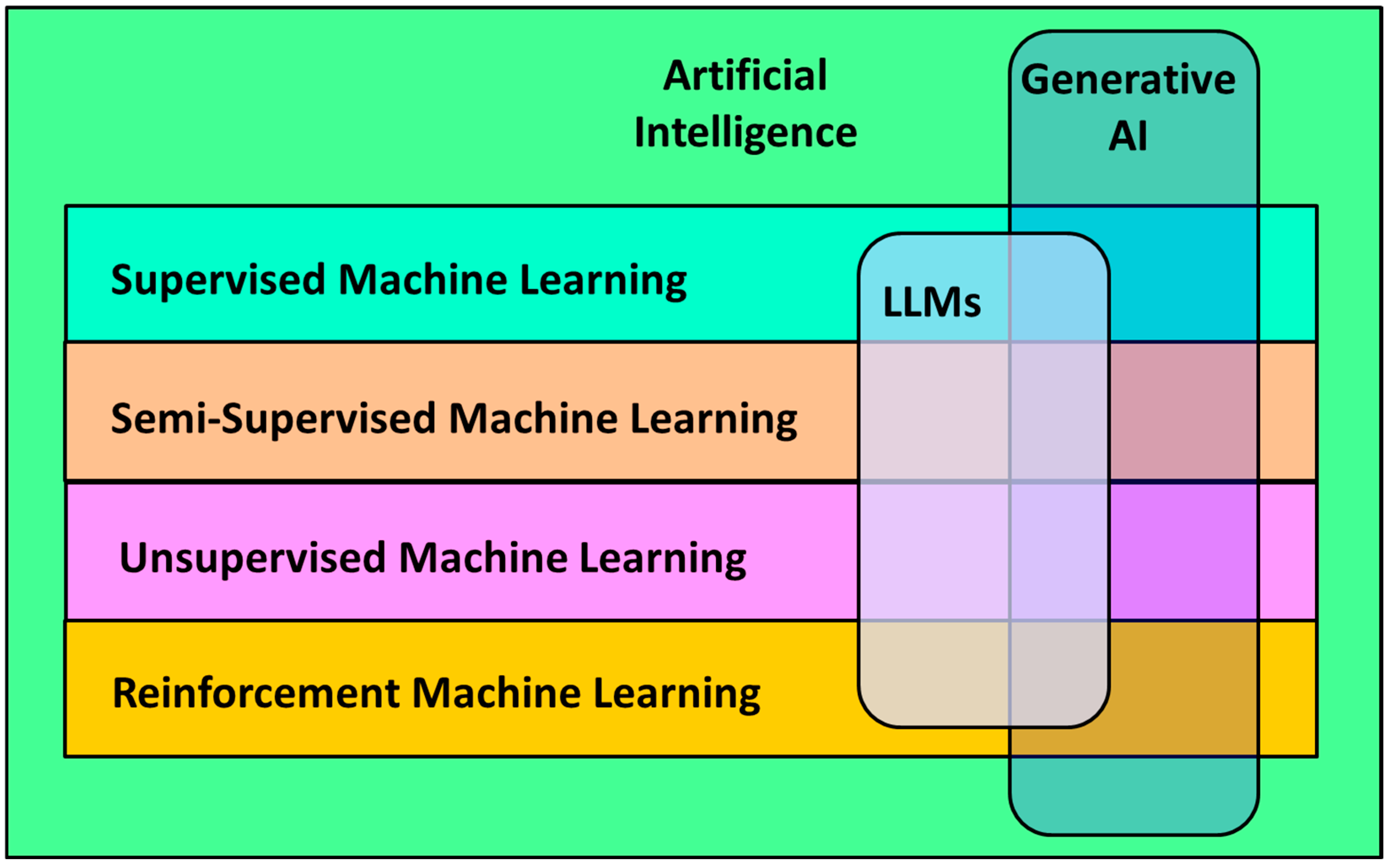

2.1. Machine Learning and AI

2.2. Required Skills and Knowledge for Electrical Engineers Working in Machine Learning

| Skill/Concept | Discussion |

|---|---|

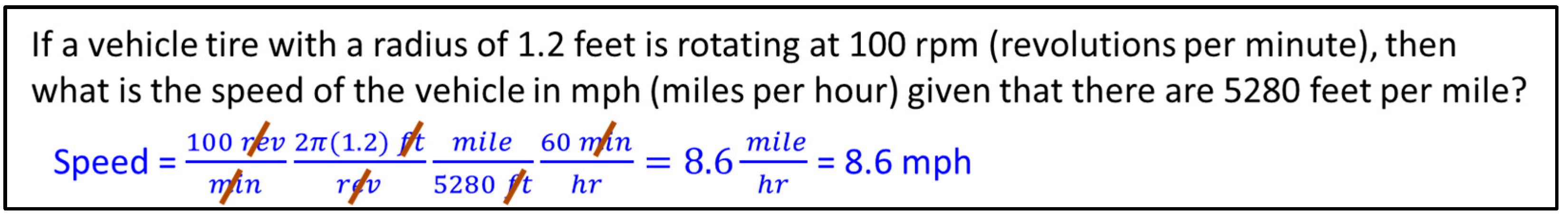

| Unit conversion skills | Engineering, including ML, often requires unit conversions to support scale changes, plotting, and data visualization. For example, EEs often need to convert from sample rate to frequency. |

| Estimation skills | Modern computational tools, while sophisticated, can be difficult to use properly, and, even if used properly, will generate erroneous results if the input data is incorrect. Thus, engineers need to be able to estimate to provide a sanity check when using sophisticated computational math tools. |

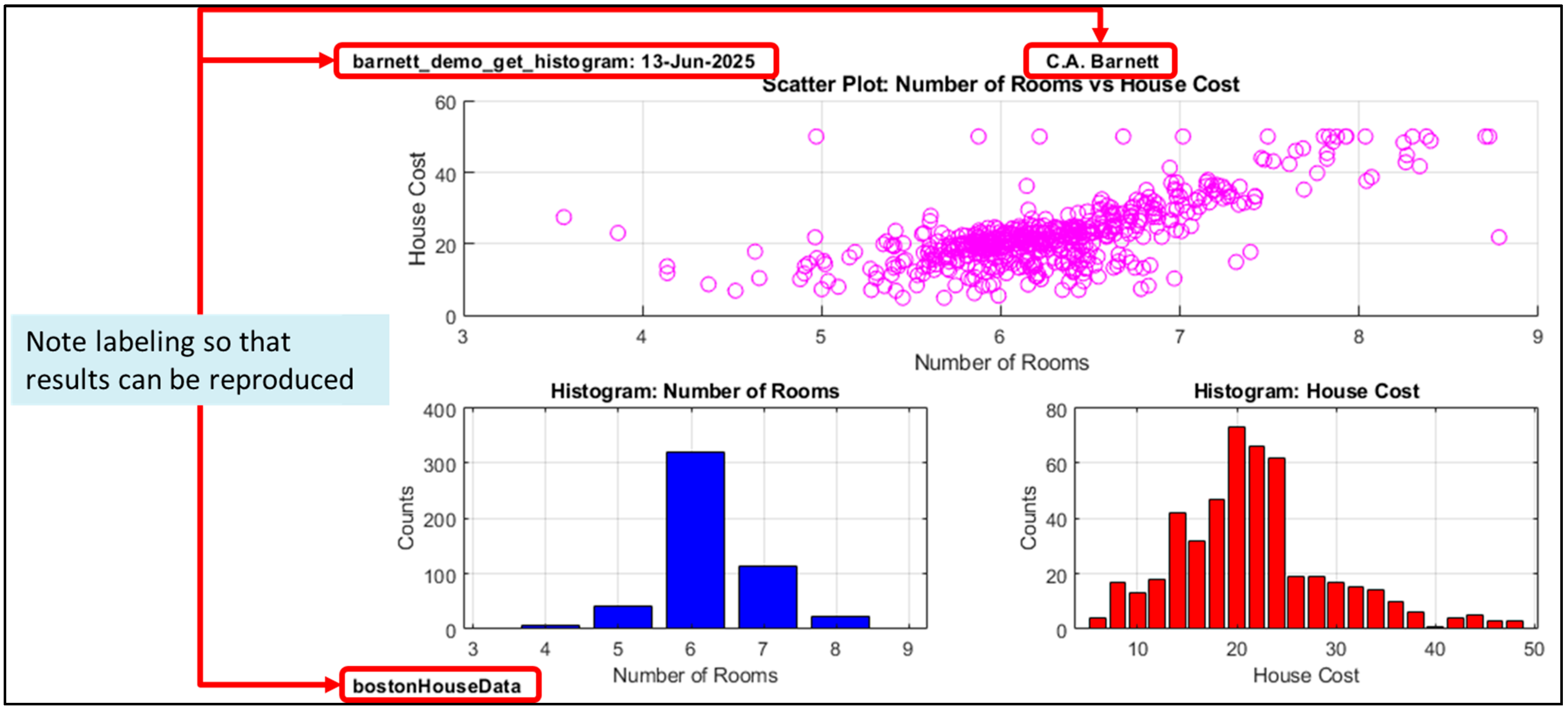

| Documentation skills | Based on industry experience, engineers often struggle with developing proper documentation, including reports, code documentation, and proper plot labeling. Proper documentation during development is critical for reproducibility, team collaboration, and transition to production. |

| Noise, probability, & statistics | ML is essentially applied probability and statistics using automated computational tools. Thus, a solid foundation in probability and statistics is required for ML. Furthermore, given that real-world data is noisy, engineers need to understand how to effectively handle noise in signals and data. |

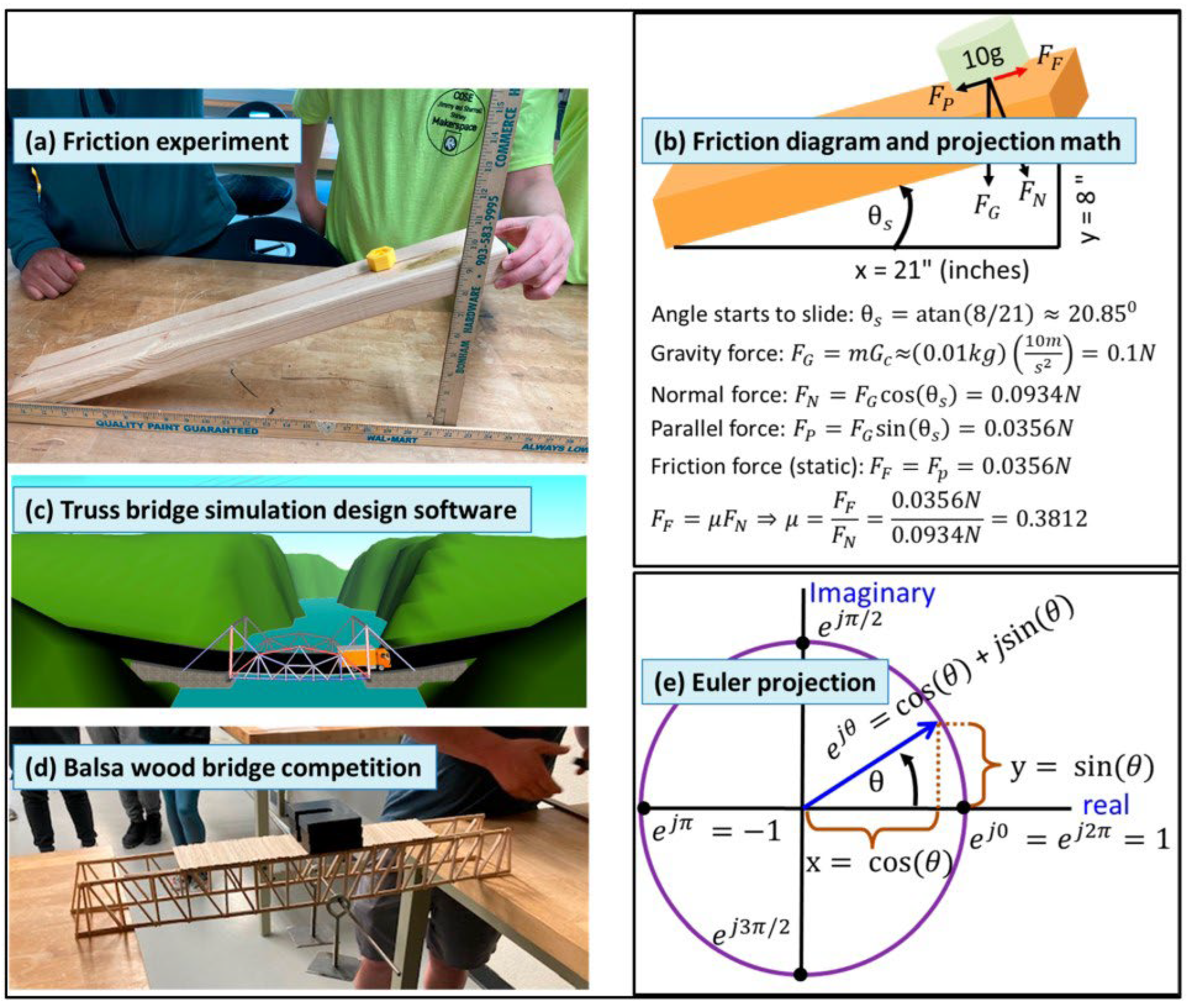

| Trigonometry & projections | Both linear and non-linear projections are widely used in ML to reduce the data dimensionality, perform feature extraction, and support visualization. Prior to understanding non-linear projection techniques, engineers must understand linear projections, including trigonometric sine/cosine projections commonly used in engineering, as well as projection via PCA and the DFT as discussed below. |

| Generative AI skills | Engineers need to know how to properly use generative AI to support coding and documentation, while maintaining critical thinking skills, and while identifying generative AI hallucinations. |

| Data visualization | Visualization is critical in the data exploration phase of ML, and plays a key role in interpreting and explaining the results and methodology to colleagues, managers, and customers. |

| ML theory & practice | Engineers need to understand and apply key ML concepts when developing ML code, including supervised vs. unsupervised training, overfit vs. underfit, classification vs. regression vs. clustering, data scrubbing and other preprocessing steps, the curse of dimensionality, performance metrics, and training vs. test. |

| Software coding skills | Engineers need to be able to develop algorithms to solve problems. Proficiency is needed in both object-oriented and structured coding methods used in languages such as Python and MATLAB. In addition, proficiency in using visual block-diagram tools may be needed since many tools are visual (e.g., Simulink). Engineers also need skills to learn new languages given the variety of ML and computational math platforms. Finally, given typical corporate coding standards, engineers also need to understand software requirements regarding test methodology, style, documentation, naming conventions, etc. |

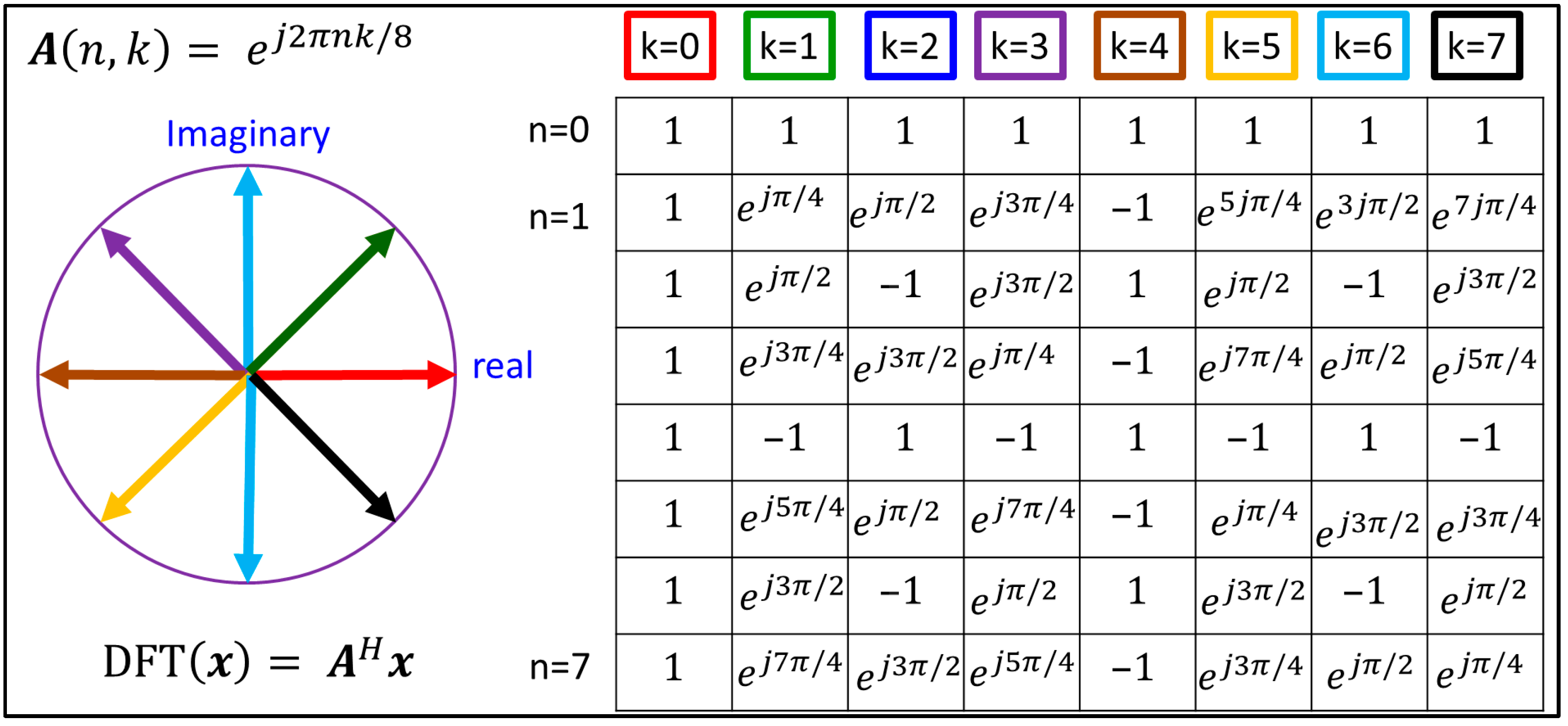

| Discrete Fourier Transform (DFT) | The DFT is the standard tool to analyze digitized periodic signals, and is used in ML applications involving periodic signals for feature extraction, dimensionality reduction, and data visualization. The DFT can also be used to implement fast convolution used in ML algorithms such as the convolutional neural network. Understanding the DFT, in turn, requires an understanding of trigonometric projections, Euler’s formula, complex phasors, and inner products. |

| Convolution & filtering | Convolution is used in a variety of applications, including convolutional neural networks, computing probability distribution functions, and finite impulse response filters. Filtering is used to smooth data and reduce noise (low pass), support edge detection (high pass), and to isolate signals (bandpass/reject). |

| Matrix math & linear algebra | These skills and concepts are needed to develop algorithms, and to understand and interpret algorithms such as PCA or other dimensionality reduction techniques. Engineers need to understand concepts such as linear independence and matrix rank to properly use tools that solve systems of linear equations. |

| Sampling theory & practice | ML practitioners need to know when the sampling granularity is sufficient. In addition, an understanding of statistical sampling is critical in ML to avoid biased results caused by poor training data. |

| Principal Component Analysis (PCA) | PCA is widely used in ML to reduce the data dimensionality prior to training in order to reduce the processing time and, in some cases, to improve the ML classifier performance. PCA is based on eigenvector decomposition of the feature data covariance matrix, followed by replacing the original features with the eigenvectors associated with the largest eigenvalues. Thus, an understanding of eigenvectors and eigenvalues is critical to effective use of PCA in ML. |

| Optimization theory & practice | ML algorithms rely on optimization for training and hyperparameter optimization. Thus, a basic understanding of optimization theory, and concepts such as step size and learning rate, is required. |

| Digital logic | Binary number systems, Boolean logic, state machines, and sequential logic flow crop up in numerous areas of ML and computational math, including hypothesis testing and detection, sequential algorithm flow, hidden Markov models, large language models, digital signal processing, etc. |

| Embedded implementation skills | Due to computational complexity, many ML and computational math algorithms are implemented using graphics processor units (GPUs), field programmable gate arrays (FPGAs), etc., in order to support effective real-world deployment. Engineers with skills in these areas will have more career opportunities. |
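As an illustration of the unit conversion and estimation skills listed above, the following minimal Python/NumPy sketch (not taken from the course materials) converts a DFT bin index k into a frequency in hertz using f = k·fs/N, where fs is the sample rate and N is the DFT length. The sample rate, record length, and 50 Hz test tone are assumed values chosen for illustration, and `bin_to_hz` is a hypothetical helper.

```python
import numpy as np

def bin_to_hz(k, fs, n_fft):
    """Hypothetical helper: convert DFT bin index k to Hz via f = k * fs / N."""
    return k * fs / n_fft

fs = 1000.0                        # assumed sample rate in samples/s (Hz)
n = 1000                           # assumed record length (1 s of data)
t = np.arange(n) / fs              # sample index -> time in seconds
x = np.sin(2 * np.pi * 50.0 * t)   # synthetic 50 Hz test tone

spectrum = np.abs(np.fft.rfft(x))      # one-sided magnitude spectrum
peak_bin = int(np.argmax(spectrum))    # bin index of the strongest component
peak_hz = bin_to_hz(peak_bin, fs, n)   # convert the bin index to Hz

# Estimation sanity check: bin spacing is fs/N = 1 Hz, so the peak
# should land at (or within one bin of) the known 50 Hz tone.
print(peak_bin, peak_hz)
```

Because the bin spacing here is fs/N = 1 Hz, a quick hand estimate says the peak should appear at bin 50; checking the computed result against such an estimate is the kind of sanity check the estimation row describes.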
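The sampling row can be made concrete with aliasing. This sketch assumes a 1000 Hz sample rate (Nyquist frequency 500 Hz); a 900 Hz cosine then produces the same samples as a 100 Hz cosine, so its spectral peak is reported at 100 Hz. `dominant_freq` is a hypothetical helper written only for this example.

```python
import numpy as np

fs = 1000.0            # assumed sample rate (Hz); Nyquist frequency is fs/2 = 500 Hz
n = 1000
t = np.arange(n) / fs

def dominant_freq(x, fs):
    """Hypothetical helper: frequency (Hz) of the largest one-sided DFT peak."""
    spec = np.abs(np.fft.rfft(x))
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)  # bin index -> Hz
    return freqs[np.argmax(spec)]

# 100 Hz is below Nyquist, so it is measured correctly.
f_ok = dominant_freq(np.cos(2 * np.pi * 100.0 * t), fs)
# 900 Hz is above Nyquist and aliases to |900 - 1000| = 100 Hz.
f_alias = dominant_freq(np.cos(2 * np.pi * 900.0 * t), fs)
print(f_ok, f_alias)
```

Both tones should report a dominant frequency of 100 Hz; nothing in the sampled data reveals that the second tone was above the Nyquist frequency, which is why sampling granularity has to be checked before the data are used.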
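The DFT row notes that the DFT rests on trigonometric projections, Euler’s formula, and inner products. A minimal sketch, assuming NumPy: each DFT coefficient is computed explicitly as the inner product of the signal with a complex exponential, and the result is compared with `np.fft.fft`. `dft_by_projection` is a hypothetical teaching helper; it costs O(N²) operations, whereas the FFT computes the same values in O(N log N).

```python
import numpy as np

def dft_by_projection(x):
    """Hypothetical helper: X[k] = sum_n x[n] * exp(-j*2*pi*k*n/N).

    Row k of W is the conjugate of the basis vector exp(j*2*pi*k*n/N),
    which by Euler's formula equals cos(2*pi*k*n/N) + j*sin(2*pi*k*n/N),
    so each output is an inner product (projection) of x onto one basis vector.
    """
    x = np.asarray(x, dtype=complex)
    N = len(x)
    n = np.arange(N)
    k = n.reshape(-1, 1)                  # column of frequency indices
    W = np.exp(-2j * np.pi * k * n / N)   # N x N DFT matrix via broadcasting
    return W @ x

rng = np.random.default_rng(0)
x = rng.standard_normal(64)               # arbitrary real test signal
X_proj = dft_by_projection(x)
X_fft = np.fft.fft(x)                     # NumPy uses the same exp(-j...) sign convention
print(np.allclose(X_proj, X_fft))
```

By Euler’s formula, the real part of each coefficient is the projection onto a cosine and the imaginary part is the negative of the projection onto a sine.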
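For the convolution and filtering row, the sketch below (assuming NumPy) smooths a noisy sinusoid with a 9-tap moving-average filter, a simple low-pass FIR filter, and shows that convolution computed through the DFT matches direct convolution once both sequences are zero-padded to length len(x) + len(h) - 1. The signal, noise level, and filter length are illustrative assumptions, and `fast_convolve` is a hypothetical helper.

```python
import numpy as np

def fast_convolve(x, h):
    """Hypothetical helper: linear convolution via the DFT (convolution theorem).

    Zero-padding both sequences to len(x) + len(h) - 1 makes the circular
    convolution implied by the DFT equal to ordinary linear convolution.
    """
    L = len(x) + len(h) - 1
    X = np.fft.rfft(x, n=L)
    H = np.fft.rfft(h, n=L)
    return np.fft.irfft(X * H, n=L)

rng = np.random.default_rng(1)
t = np.arange(200)
clean = np.sin(2 * np.pi * t / 50)                # slowly varying signal
noisy = clean + 0.3 * rng.standard_normal(t.size)
h = np.ones(9) / 9                                # 9-tap moving-average (low-pass FIR)

y_direct = np.convolve(noisy, h)                  # direct "full" linear convolution
y_fast = fast_convolve(noisy, h)                  # same result via the DFT

delay = (len(h) - 1) // 2                         # symmetric filter delays output by 4 samples
smoothed = y_direct[delay:delay + noisy.size]     # realign output with the input
print(np.allclose(y_direct, y_fast))
```

The moving average passes the slow sinusoid with little attenuation while averaging down the noise, so the realigned output should sit noticeably closer to the clean signal than the noisy input does.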
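The PCA row can be sketched in a few lines of NumPy, assuming samples are stored as rows and features as columns: center the data, form the feature covariance matrix, take its eigendecomposition, and project the centered data onto the eigenvectors with the largest eigenvalues. The synthetic data and the `pca` helper are illustrative assumptions; library implementations such as scikit-learn’s `PCA` typically use the singular value decomposition instead, which yields the same principal axes (up to sign).

```python
import numpy as np

def pca(X, n_components):
    """Hypothetical helper: PCA via eigendecomposition of the covariance matrix.

    X has shape (n_samples, n_features). Returns the projected data (scores),
    the principal axes (eigenvectors as columns), and their eigenvalues.
    """
    Xc = X - X.mean(axis=0)                  # center each feature
    C = np.cov(Xc, rowvar=False)             # (n_features, n_features), N-1 normalization
    eigvals, eigvecs = np.linalg.eigh(C)     # symmetric matrix; eigenvalues ascending
    order = np.argsort(eigvals)[::-1]        # reorder to descending eigenvalues
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]
    W = eigvecs[:, :n_components]            # eigenvectors with the largest eigenvalues
    return Xc @ W, W, eigvals[:n_components] # projections replace the original features

rng = np.random.default_rng(2)
# Synthetic 3-D data lying mostly along the direction (1, 2, -1); illustrative only.
z = rng.standard_normal((500, 1))
X = np.hstack([z, 2 * z, -z]) + 0.1 * rng.standard_normal((500, 3))
scores, W, lam = pca(X, n_components=1)
print(scores.shape, W.ravel(), lam)
```

The variance of each column of projected data equals the corresponding eigenvalue, which is why the eigenvalues indicate how much of the data’s variance each retained component explains.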
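To illustrate the role of the learning rate mentioned in the optimization row, the following sketch (assuming NumPy) fits a straight line by batch gradient descent on the mean squared error and compares the result with the closed-form least-squares solution from `np.linalg.lstsq`. The data, learning rate, iteration count, and the `gradient_descent_linreg` helper are illustrative assumptions.

```python
import numpy as np

def gradient_descent_linreg(X, y, learning_rate=0.1, n_iters=500):
    """Hypothetical helper: fit y ~ X @ w by batch gradient descent on the MSE."""
    n, d = X.shape
    w = np.zeros(d)
    for _ in range(n_iters):
        residual = X @ w - y
        grad = (2.0 / n) * X.T @ residual   # gradient of the MSE with respect to w
        w -= learning_rate * grad           # step length = learning_rate * |grad|
    return w

rng = np.random.default_rng(3)
x = rng.uniform(-1, 1, size=200)
X = np.column_stack([np.ones_like(x), x])   # bias column plus one feature
y = 0.5 + 2.0 * x + 0.05 * rng.standard_normal(200)

w_gd = gradient_descent_linreg(X, y, learning_rate=0.1)
w_ls, *_ = np.linalg.lstsq(X, y, rcond=None)   # closed-form least-squares reference
print(w_gd, w_ls)
```

For this data, the iteration is stable only when the learning rate is below roughly 2 divided by the largest eigenvalue of the MSE Hessian (about 1.0 here); rerunning with a learning rate such as 1.5 should make the weights diverge rather than converge.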
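The underfit/overfit concept in the ML theory row can be demonstrated with polynomial regression: fit polynomials of increasing degree to a small noisy training set and compare training and test error. In this NumPy sketch the target function, noise level, sample sizes, and degrees are all assumed for illustration.

```python
import numpy as np

rng = np.random.default_rng(4)

def make_data(n):
    """Noisy samples of a nonlinear target (illustrative assumption)."""
    x = np.sort(rng.uniform(-1, 1, n))
    y = np.sin(np.pi * x) + 0.2 * rng.standard_normal(n)
    return x, y

x_train, y_train = make_data(15)   # small training set makes overfitting easy to see
x_test, y_test = make_data(200)    # held-out test set

def mse(y_true, y_pred):
    return float(np.mean((y_true - y_pred) ** 2))

results = {}
for degree in (1, 3, 12):
    coeffs = np.polyfit(x_train, y_train, degree)   # least-squares polynomial fit
    train_err = mse(y_train, np.polyval(coeffs, x_train))
    test_err = mse(y_test, np.polyval(coeffs, x_test))
    results[degree] = (train_err, test_err)
    print(f"degree {degree:2d}: train MSE {train_err:.3f}, test MSE {test_err:.3f}")
```

Training error can only decrease as the degree grows, because each lower-degree model is a special case of the higher-degree one. Test error, however, is typically high for degree 1 (underfit), lower near degree 3, and high again for degree 12 (overfit), where the polynomial nearly interpolates the 15 noisy training points.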

2.3. Student Demographics

- Lack of adequate financial support [20,21]. Many of our students need to work part or full time, and some even need to support their families or cope with incarcerated family members [19]. While employment can help develop responsible habits, it also creates problems, including reduced study time, lack of sleep, and coordination challenges on team projects.

- Need to commute [21]. Many of our students commute an hour or more, reducing available study time, and leading to missed classes due to traffic accidents, flat tires, and even closed roads (some students live in rural locations that are inaccessible by road after heavy rains).

2.4. ETAMU Electrical Engineering Curriculum

| Year 1 Fall | Year 1 Spring | Year 2 Fall | Year 2 Spring | Year 3 Fall | Year 3 Spring | Year 4 Fall | Year 4 Spring |
|---|---|---|---|---|---|---|---|
| Calculus I | Calculus II | Differential Equations | Linear Algebra | Calculus III | Digital Systems & Embedded Controls | Capstone I | Capstone II |
| Chemistry I | Intro to CS & Programming | Computing for Electrical Engineers ** | Circuit Theory I | Circuit Theory II | Continuous Signals & Systems | Electric Machinery | Digital Signal Processing |
| Intro to Engineering & Technology * | Product Design & Development | Digital Circuits * | Engineering Probability & Statistics ** | Electronics I | Electronics II | Technical Electives 1–2 | Control Systems |
| Physics I | Physics II | Engineering Economic Analysis | Electromagnetics | Technical Elective 3 | Technical Electives 4–5 ** | | |

Notes regarding EE Technical Electives

\* Course discussed in this paper. \*\* Course also includes significant ML content.

2.5. Human Factors Framework for Teaching Machine Learning and Computational Math

2.6. Teaching ML and Computational Math

3. Methods

3.1. Teaching Methodology

3.2. Research Methodology and Course Effectiveness Assessment

- Instructor observations
  - During in-class hands-on exercises as students work through problems;
  - During in-class lectures and office hours as students ask questions.
- Student feedback
  - End-of-semester student evaluations;
  - Informal student feedback during and after the semester;
  - Detailed student analysis by student coauthors.
- Industry feedback
- Assignments
  - Homework;
  - End-of-semester projects.
- Exams
  - Written and oral midterms;
  - Written finals.

4. Strategies and Results

4.1. General Strategies for Teaching ML and Computational Math

4.2. Intro to Engineering & Technology

4.2.1. Unit Conversion Process

4.2.2. Estimation

4.2.3. Trigonometry and Projections

4.2.4. Reports and Generative AI

4.3. Computing for Electrical Engineers

4.3.1. Course Overview

4.3.2. Common Assignment Strategies

- PBL: The homework assignments are structured as mini-projects so that students learn problem-solving skills rather than just syntax. This approach has been successful: once the first cohort (Fall 2021) reached their senior year, we began seeing more capstone projects tackle designs with significant ML and computational math components.

- Sequenced learning: The assignments build on each other and become progressively more sophisticated, so later assignments reinforce concepts from earlier ones. One drawback is that students who fall behind early find it difficult to catch up; hence, intervention must be employed early in the semester for students who are struggling. Another problem that we are still working to solve is that, as noted earlier, the assignment complexity ramps up too quickly over the first month.

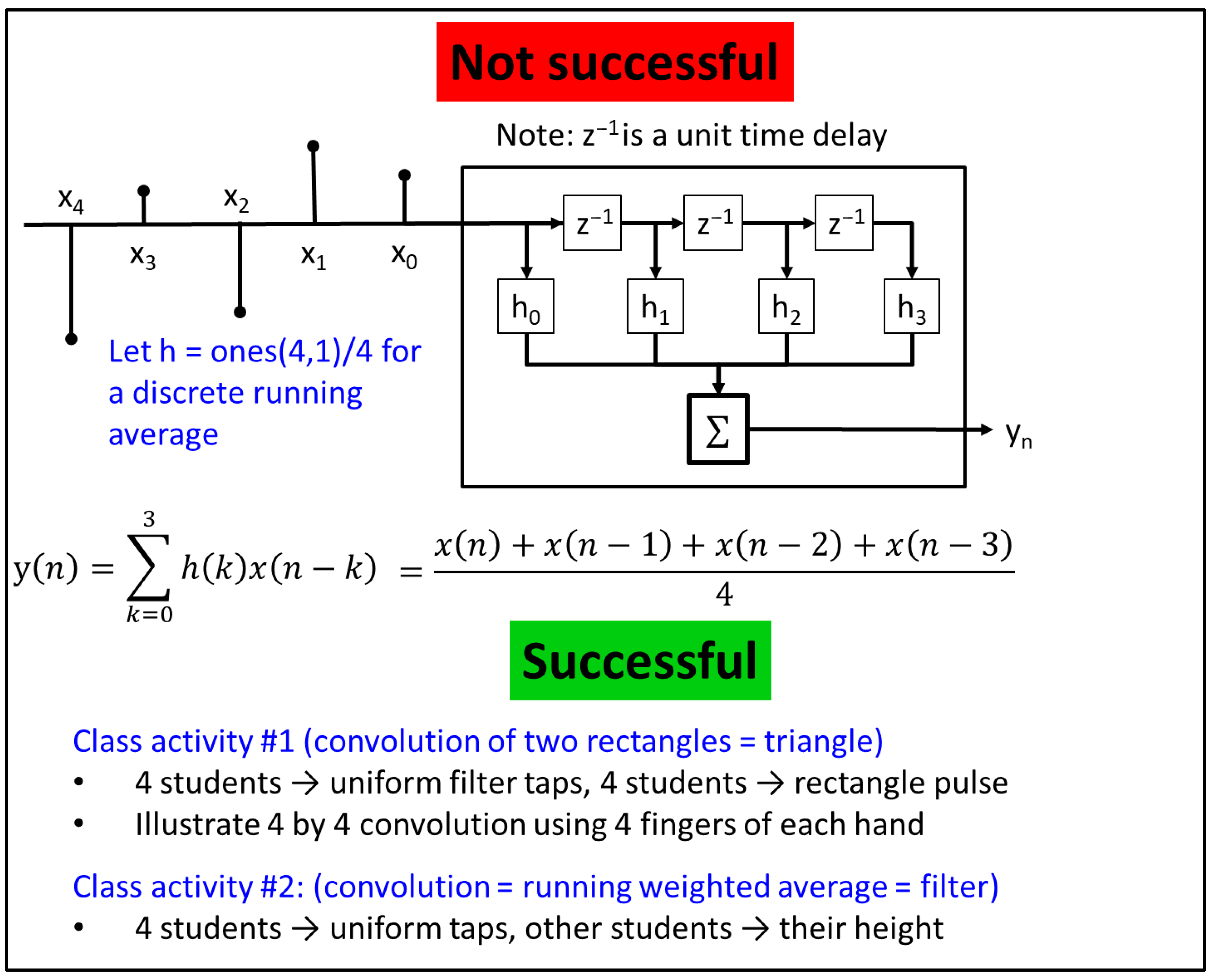

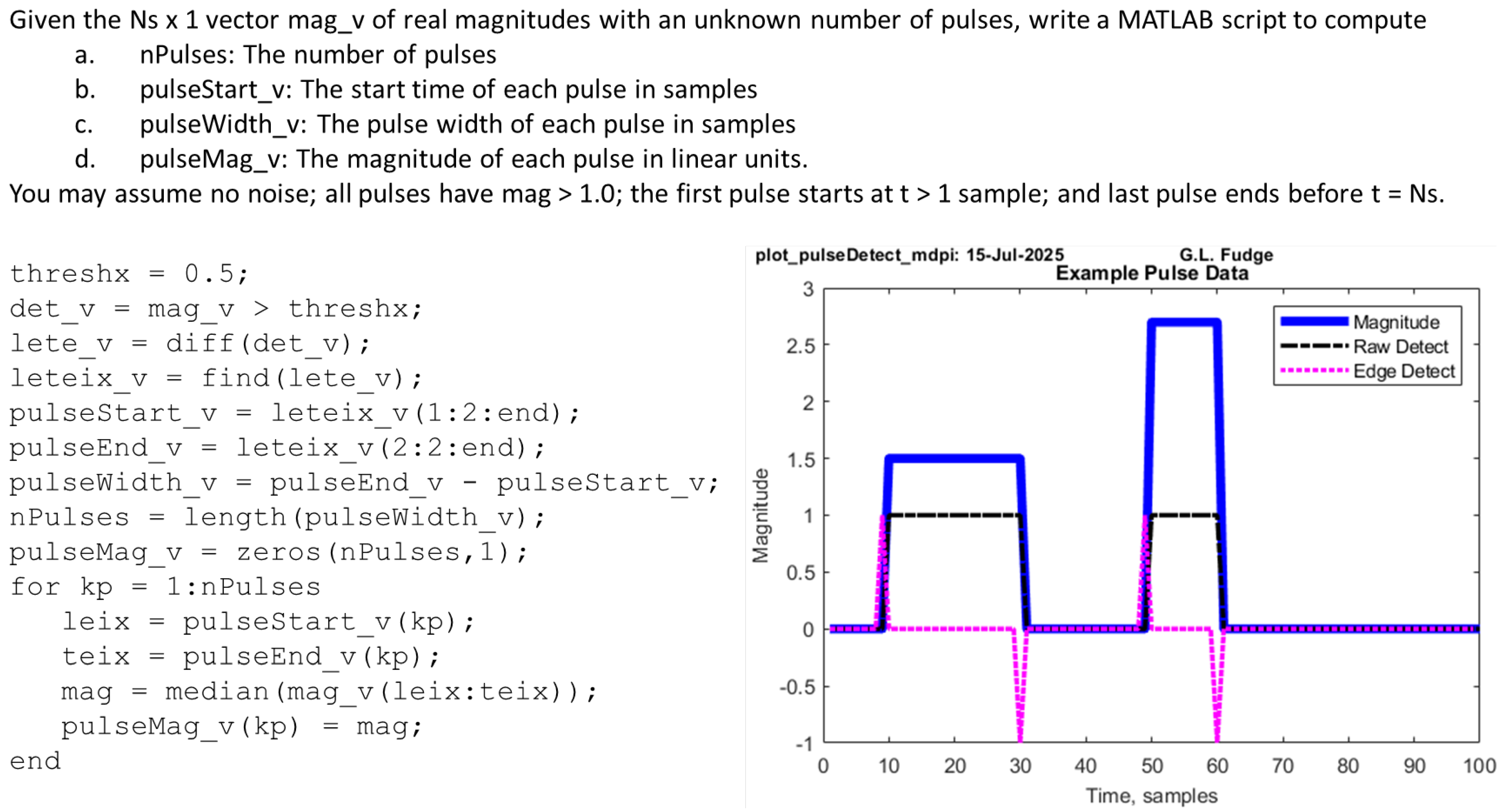

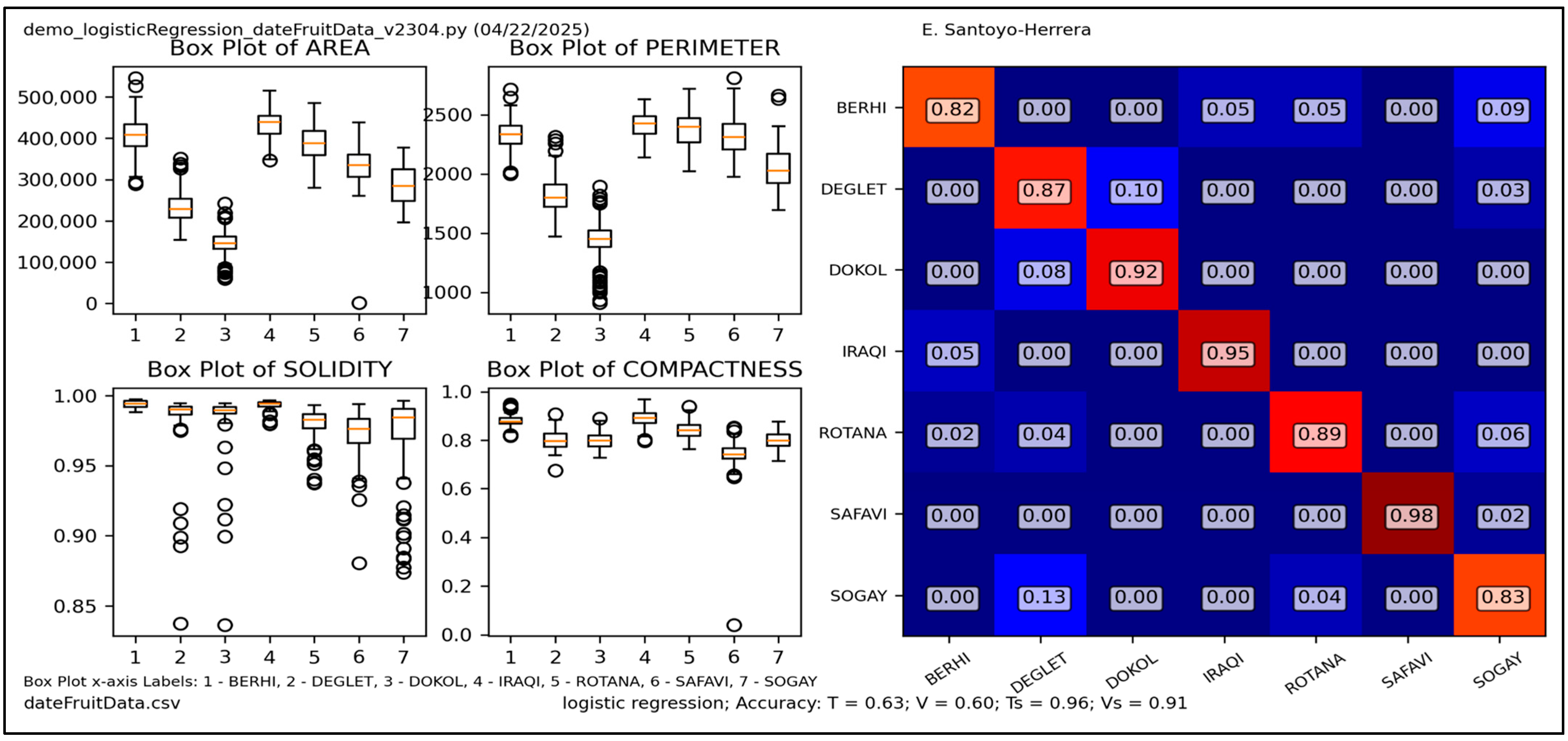

- Data visualization: Each assignment includes visualization plots, which students report are helpful for learning concepts. An example can be seen in Figure 6.

- The “Use-modify-create” plus “save-as” strategy: Students are taught to develop new code by first saving a copy of existing code that is similar to the desired new code (“save-as”), then modifying the copy while maintaining the software requirements discussed below. This technique not only helps students but also improves productivity in industry.

- Peer learning via peer review, the LA model, and classmate collaboration: The peer pressure involved in walkthroughs of submitted code is one of the more effective strategies for motivating students to pay attention to details. Similarly, the LA model has proven effective for providing supplemental tutoring. However, we have observed mixed results with collaboration because some students tend to rely too heavily on their classmates. Thus, while partially successful, the collaboration strategy needs improvement.

- Software requirements and figure labeling: This specific strategy has not been described in the literature previously, so we describe it in more detail below.

4.3.3. Grading Rubric

4.3.4. Assignments and Classroom Teaching Strategies

4.3.5. Generative AI in Coding

4.3.6. Project Strategy

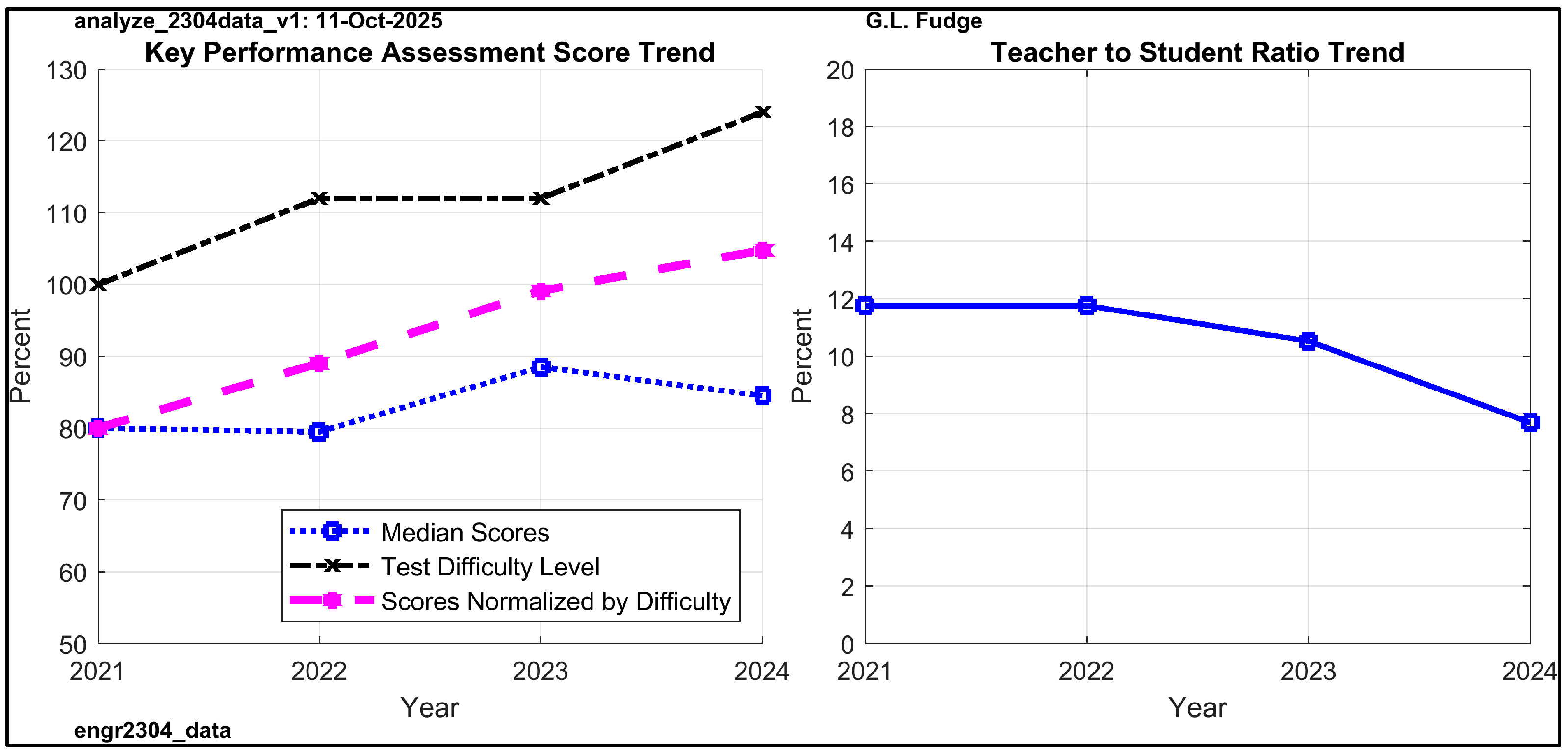

4.3.7. Empirical Results

4.4. Engineering Probability and Statistics

4.5. Digital Circuits

4.6. Applied Machine Learning

5. Discussion

5.1. Successful Teaching Strategies

5.2. Limitations and Future Directions

- We will replace most of the syntax lecture time with in-class hands-on activities to practice creation of functions, and to practice matrix math computations using both hand calculations and MATLAB. In addition, for the Fall 2025 semester, we will provide a YouTube link to a short lecture introducing MATLAB.

- Students will be taught how to use generative AI effectively so that they can improve their productivity while maintaining critical thinking. We plan to add an exercise in which each student (1) develops their own version of a built-in MATLAB tool, (2) develops a generative-AI-based version, and (3) compares the three versions (original built-in, hand-coded, and generative-AI-coded). Later in the semester, we will show students how to develop and test more complex code obtained from generative AI (e.g., a connected components algorithm). The goal is to get students to emulate the same type of process that engineers have used for decades when using open-source or other externally provided commodity tools, such as the Numerical Recipes series of books that originated in the 1980s [52]. Our contention is that there is no fundamental difference between the appropriate use of generative AI and of traditional commodity tools: in either case, the appropriate algorithm module meeting the design requirements must be identified, tested and verified, cited appropriately, and documented (including any IP issues). However, engineers (and instructors of engineers) need to understand key differences between generative AI and traditional commodity tools, including that (1) generative AI solutions are not obtained from a source-controlled, tested library and thus require more evaluation and testing to verify; (2) generative AI is not constrained to low-level algorithm modules, but will confidently generate solutions, whether correct or incorrect, to complex problems that might normally require dozens of lower-level algorithm modules; (3) generative AI does not come with external algorithm documentation; and (4) based on the first author’s personal experience with ChatGPT, the quality and content of generative AI solutions can vary wildly depending on the exact prompt and prompt sequence.

- We plan to modify the team-based project to make it a competition with a common dataset and common goals (visualization, regression, and classification), but with the choice of algorithms and methods left to the students. Projects will be evaluated using student peer-review scores in conjunction with a rubric scoring sheet.

- We plan to add a time-frequency exercise in which the students collect and analyze audio data of interest (musical instruments, human speech, birds, etc.). The goal of this exercise is to stimulate curiosity.
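The planned exercise of comparing a hand-coded version of a built-in tool with the original could look like the following in Python, assuming NumPy’s `np.histogram` stands in for the built-in MATLAB function. The histogram is chosen here only because it is one of the hands-on topics mentioned in the Introduction; `my_histogram` is a hypothetical student implementation, and a generative-AI version would be checked against the built-in in the same way.

```python
import numpy as np

def my_histogram(data, n_bins, lo, hi):
    """Hypothetical hand-coded histogram with n_bins equal-width bins on [lo, hi].

    Follows NumPy's convention: bins are half-open [left, right), except the
    last bin, which also includes the right edge hi. Values outside [lo, hi]
    are ignored.
    """
    edges = np.linspace(lo, hi, n_bins + 1)
    counts = np.zeros(n_bins, dtype=int)
    width = (hi - lo) / n_bins
    for v in data:
        if v < lo or v > hi:
            continue                 # out-of-range values are not counted
        idx = int((v - lo) / width)  # which equal-width bin v falls in
        if idx == n_bins:            # v == hi belongs to the last bin
            idx = n_bins - 1
        counts[idx] += 1
    return counts, edges

rng = np.random.default_rng(5)
data = rng.standard_normal(1000)
mine, my_edges = my_histogram(data, 20, -4.0, 4.0)
ref, ref_edges = np.histogram(data, bins=20, range=(-4.0, 4.0))  # "built-in" reference
print(np.array_equal(mine, ref), np.allclose(my_edges, ref_edges))
```

Matching NumPy’s bin-edge convention (half-open bins, except that the last bin also includes the right edge) is what makes an exact element-by-element comparison with the built-in possible.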
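For the planned time-frequency exercise, a basic spectrogram can be computed with a short-time Fourier transform: split the signal into overlapping windowed frames and take the DFT of each frame. This NumPy sketch uses a synthetic two-tone signal as a stand-in for recorded audio; the 8 kHz sample rate, 256-sample Hann window, and 128-sample hop are assumptions, and `stft_magnitude` is a hypothetical helper (SciPy’s `scipy.signal.spectrogram` and MATLAB’s `spectrogram` provide full-featured versions).

```python
import numpy as np

def stft_magnitude(x, fs, frame_len=256, hop=128):
    """Hypothetical helper: short-time Fourier transform magnitude (basic spectrogram).

    Returns (times_s, freqs_hz, S), where S[f, i] is the magnitude of the
    Hann-windowed DFT of the frame starting at sample i * hop.
    """
    window = np.hanning(frame_len)
    n_frames = 1 + (len(x) - frame_len) // hop
    frames = np.stack([x[i * hop:i * hop + frame_len] * window for i in range(n_frames)])
    S = np.abs(np.fft.rfft(frames, axis=1)).T                 # (n_freqs, n_frames)
    freqs = np.fft.rfftfreq(frame_len, d=1.0 / fs)             # bin index -> Hz
    times = (np.arange(n_frames) * hop + frame_len / 2) / fs   # frame centers in seconds
    return times, freqs, S

fs = 8000.0                    # assumed audio sample rate (Hz)
t = np.arange(int(fs)) / fs    # 1 s of samples
# Synthetic stand-in for recorded audio: 440 Hz for 0.5 s, then 1000 Hz.
x = np.where(t < 0.5, np.sin(2 * np.pi * 440 * t), np.sin(2 * np.pi * 1000 * t))
times, freqs, S = stft_magnitude(x, fs)
dominant = freqs[np.argmax(S, axis=0)]   # strongest frequency in each frame
print(dominant[0], dominant[-1])
```

With a frequency resolution of fs/256 = 31.25 Hz, the 440 Hz tone falls between bins and is reported at the nearest bin center, 437.5 Hz, while the 1000 Hz tone lands exactly on a bin. Shorter frames improve time resolution at the cost of frequency resolution.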

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A. Online Resources

| Category (Host) | Site and Discussion |
|---|---|
| Code (Anaconda) | https://www.anaconda.com/download Includes the latest Python, the Spyder IDE, NumPy, Matplotlib, Scikit-learn ML algorithms, and many other computational math algorithms for ML, data visualization, statistical analysis, etc. |
| Code, toy data (Scikit-learn) | https://scikit-learn.org/stable/ Includes many easy-to-use ML algorithms with a common interface, including data visualization, clustering, regression, and classification. Also includes various toy datasets for ML beginners. |
| Data (Kaggle) | https://www.kaggle.com/ Popular site for ML, with a large variety of datasets with descriptions. Also hosts ML competitions. Account required (free). |
| Data (UC Irvine) | https://archive.ics.uci.edu/datasets Hundreds of datasets useful for ML. Well organized, with suggested types of ML prediction (classification, regression). |
| Data, code (OpenML) | https://www.openml.org/ Includes a variety of documented datasets. Users can also share code and compare results with benchmark information posted by other users. |
| Book (Raschka) | https://github.com/rasbt/machine-learning-book GitHub site for [7] that includes figures, Jupyter Notebooks associated with the chapters (including Python code examples), and some datasets. |
| Data, papers (Figshare) | https://figshare.com/browse Contains a large variety of datasets and papers. Can be difficult to navigate to find good data to practice on, but potentially useful for more advanced students. |
| Video series (Linear Algebra) (3Blue1Brown) | https://www.3blue1brown.com/topics/linear-algebra Intuitive explanations of key concepts in linear algebra with outstanding graphics. Total of 16 videos, most of which are about 10 min long. |
| Video series (Deep Learning) (3Blue1Brown) | https://www.3blue1brown.com/topics/neural-networks Intuitive explanations of key concepts in deep learning. Total of 7 videos covering neural networks (including gradient descent and backpropagation for training), LLMs, transformers, and attention methods. |
| Video (Fourier Transform) (3Blue1Brown) | https://www.3blue1brown.com/lessons/fourier-transforms Intuitive explanation of the Fourier Transform with animated graphics that many students and engineers find useful. About 20 min long. |
| Video (MATLAB) (freeCodeCamp) | https://www.youtube.com/watch?v=7f50sQYjNRA MATLAB Crash Course for Beginners. Useful introduction to basic MATLAB, including the IDE, plots, and equations. About 30 min. |
| LinkedIn Learning | Machine Learning with Scikit-Learn (43 min) Outstanding introduction to ML, including Jupyter Notebook examples to work through with the lecture. |
| LinkedIn Learning | MATLAB Essential Training (2.5 h) Good introduction to MATLAB that students have found to be very useful. |
| LinkedIn Learning | Learning FPGA Development (1.15 h) Outstanding introduction to FPGA programming, including Verilog and VHDL. |

References

- Do, H.D.; Tsai, K.T.; Wen, J.M.; Huang, S.K. Hard skill gap between university education and the robotic industry. J. Comput. Inf. Syst. 2023, 63, 24–36. [Google Scholar] [CrossRef]

- Verma, A.; Lamsal, K.; Verma, P. An investigation of skill requirements in artificial intelligence and machine learning job advertisements. Ind. High. Educ. 2022, 36, 63–73. [Google Scholar] [CrossRef]

- Aikins, G.; Berdanier, C.G.P.; Nguyen, K.-D. Data proficiency in MAE education: Insights from student perspectives and experiences. Int. J. Mech. Eng. Educ. 2024. [Google Scholar] [CrossRef]

- Saroja, S.; Jinwal, S. Employment dynamics: Exploring job roles, skills, and companies. IEEE Potentials 2025, 44, 23–29. [Google Scholar] [CrossRef]

- Alsharif, A.M. Exploring Engineering Employment Trends: A Decade-Long Deep Dive into Skills and Competences Included in Job Advertisements. Ph.D. Thesis, Virginia Polytechnic Institute and State University, Blacksburg, VA, USA, 24 August 2025. [Google Scholar]

- Beke, E. Engineering competencies expected in the digital working places. In Proceedings of the 2023 IEEE 21st World Symposium on Applied Machine Intelligence and Informatics (SAMI), Herl’any, Slovakia, 19–21 January 2023; pp. 000241–000244. [Google Scholar]

- Raschka, S.; Liu, Y.; Mirjalili, V.; Dzhulgakov, D. Machine Learning with PyTorch and Scikit-Learn; Packt: Birmingham, UK, 2022. [Google Scholar]

- Prentzas, J.; Sidiropoulou, M. Assessing the use of open AI chat-GPT in a University Department of Education. In Proceedings of the 2023 14th International Conference on Information, Intelligence, Systems & Applications (IISA), Volos, Greece, 12 July 2023; pp. 1–4. [Google Scholar]

- Fudge, G.L. Teaching Computational Math and Introducing Machine Learning to Electrical Engineering Students at an Emerging Hispanic Serving Institution. In Proceedings of the IEEE SoutheastCon, Atlanta, GA, USA, 18 February 2024; pp. 353–359. [Google Scholar]

- Van Sickle, J.; Schuler, K.R.; Holcomb, J.P.; Carver, S.D.; Resnick, A.; Quinn, C.; Jackson, D.K.; Duffy, S.F.; Sridhar, N. Closing the achievement gap for underrepresented minority students in STEM: A deep look at a comprehensive intervention. J. STEM Educ. Innov. Res. 2020, 21, 2. [Google Scholar]

- Atindama, E.; Ramsdell, M.; Wick, D.P.; Mondal, S.; Athavale, P. Impact of targeted interventions on success of high-risk engineering students: A focus on historically underrepresented students in STEM. Front. Educ. 2025, 10, 1435279. [Google Scholar] [CrossRef]

- Czaja, S.J.; Nair, S.N. Human factors engineering and systems design. In Handbook of Human Factors and Ergonomics; John Wiley & Sons, Inc.: Hoboken, NJ, USA, 2012; pp. 38–56. [Google Scholar]

- Kaswan, K.S.; Dhatterwal, J.S.; Malik, K.; Baliyan, A. Generative AI: A review on models and applications. In Proceedings of the 2023 International Conference on Communication, Security and Artificial Intelligence (ICCSAI), Greater Noida, India, 23–25 November 2023; pp. 699–704. [Google Scholar]

- Bender, E.M.; Gebru, T.; McMillan-Major, A.; Shmitchell, S. On the dangers of stochastic parrots: Can language models be too big? In Proceedings of the 2021 ACM Conference on Fairness, Accountability, and Transparency, Virtual, 3–10 March 2021; pp. 610–623. [Google Scholar]

- Gaitantzi, A.; Kazanidis, I. The Role of Artificial Intelligence in Computer Science Education: A Systematic Review with a Focus on Database Instruction. Appl. Sci. 2025, 15, 3960. [Google Scholar] [CrossRef]

- Munir, B. Hallucinations in Legal Practice: A Comparative Case Law Analysis. Int. J. Law Ethics Technol. 2025, 2025, 2653508. [Google Scholar] [CrossRef]

- Jamieson, P.; Ricco, G.D.; Swanson, B.A.; Van Scoy, B. BOARD# 134: Results and Evaluation of an Early LLM Benchmarking of our ECE Undergraduate Curriculums. In Proceedings of the 2025 ASEE Annual Conference & Exposition, Montreal, QC, Canada, 22–25 June 2025. [Google Scholar]

- Hernandez, N.V.; Fuentes, A.; Crown, S. Effectively transforming students through first year engineering student experiences. In Proceedings of the 2018 IEEE Frontiers in Education Conference (FIE), San Jose, CA, USA, 3–6 October 2018; pp. 1–5. [Google Scholar]

- Lundy-Wagner, V.C.; Salzman, N.; Ohland, M.W. Reimagining engineering diversity: A study of academic advisors’ perspectives on socioeconomic status. In Proceedings of the 2013 ASEE Annual Conference & Exposition, Atlanta, GA, USA, 23–26 June 2013; pp. 23–1031. [Google Scholar]

- Lee, W.C.; Lutz, B.; Hermundstad Nave, A.L. Learning from practitioners that support underrepresented students in engineering. J. Prof. Issues Eng. Educ. Pract. 2018, 144, 04017016. [Google Scholar] [CrossRef]

- Estrada, M.; Burnett, M.; Campbell, A.G.; Campbell, P.B.; Denetclaw, W.F.; Gutiérrez, C.G.; Hurtado, S.; John, G.H.; Matsui, J.; McGee, R.; et al. Improving underrepresented minority student persistence in STEM. CBE-Life Sci. Educ. 2016, 15, es5. [Google Scholar] [CrossRef]

- Barrasso, A.P.; Spilios, K.E. A scoping review of literature assessing the impact of the learning assistant model. Int. J. STEM Educ. 2021, 8, 12. [Google Scholar] [CrossRef]

- Tapia, R.A. Losing the Precious Few: How America Fails to Educate Its Minorities in Science and Engineering; Arte Público Press: Houston, TX, USA, 2020. [Google Scholar]

- Hammad, H.S. Teaching the Digital Natives: Examining the Learning Needs and Preferences of Gen Z Learners in Higher Education. Transcult. J. Humanit. Soc. Sci. 2025, 6, 214–242. [Google Scholar] [CrossRef]

- Quallen, S.M.; Crepeau, J.; Willis, B.; Beyerlein, S.W.; Petersen, J.J. Transforming introductory engineering courses to match genZ learning styles. In Proceedings of the 2021 ASEE Virtual Annual Conference Content Access, virtual, 26–29 July 2021. [Google Scholar]

- Kim, K.J. Medical student needs for e-learning: Perspectives of the generation Z. Korean J. Med. Educ. 2024, 36, 389. [Google Scholar] [CrossRef]

- Hollender, N.; Hofmann, C.; Deneke, M.; Schmitz, B. Integrating Cognitive Load Theory and Concepts of Human-Computer Interaction. Comput. Hum. Behav. 2010, 26, 1278–1288. [Google Scholar] [CrossRef]

- Sweller, J.; van Merriënboer, J.J.G.; Paas, F. Cognitive Architecture and Instructional Design: 20 Years Later. Educ. Psychol. Rev. 2019, 31, 261–292. [Google Scholar] [CrossRef]

- Sweller, J.; van Merriënboer, J.J.G.; Paas, F.G.W.C. Cognitive Architecture and Instructional Design. Educ. Psychol. Rev. 1998, 10, 251–296. [Google Scholar] [CrossRef]

- Bannert, M. Managing Cognitive Load—Recent Trends in Cognitive Load Theory. Learn. Instr. 2002, 12, 139–146. [Google Scholar] [CrossRef]

- Kalyuga, S. Prior Knowledge Principle in Multimedia Learning. In The Cambridge Handbook of Multimedia Learning; Cambridge University Press: Cambridge, UK, 2012. [Google Scholar]

- Oviatt, S. Human-Centered Design Meets Cognitive Load Theory: Designing Interfaces That Help People Think. In Proceedings of the 14th Annual ACM International Conference on Multimedia, Santa Barbara, CA, USA, 23–27 October 2006. [Google Scholar]

- Oviatt, S.; Arthur, A.; Cohen, J. Quiet Interfaces That Help Students Think. In Proceedings of the 19th Annual ACM Symposium on User Interface Software and Technology (UIST 2006), Montreux, Switzerland, 15–18 October 2006. [Google Scholar]

- Altay, B. User-Centered Design through Learner-Centered Instruction. Teach. High. Educ. 2014, 19. [Google Scholar] [CrossRef]

- Aditomo, A.; Goodyear, P.; Bliuc, A.M.; Ellis, R.A. Inquiry-Based Learning in Higher Education: Principal Forms, Educational Objectives, and Disciplinary Variations. Stud. High. Educ. 2013, 38, 1239–1258. [Google Scholar] [CrossRef]

- Bonwell, C.C.; Sutherland, T.E. The Active Learning Continuum: Choosing Activities to Engage Students in the Classroom. New Dir. Teach. Learn. 1996, 1996, 3–16. [Google Scholar] [CrossRef]

- Hussain, A.A.; El-Nakla, S.; Khan, A.H.; Nayfeh, J.; Ifurong, A.; Tayem, N. Project-Based Learning Approach for Undergraduate Electrical Engineering Laboratories: A Case Study. In Proceedings of the 2024 6th International Symposium on Advanced Electrical and Communication Technologies, ISAECT 2024, Alkhobar, Saudi Arabia, 3–5 December 2024. [Google Scholar]

- Margulieux, L.E.; Prather, J.; Rahimi, M.; Uzun, G.C.; Cooper, R.; Jordan, K. Leverage Biology to Learn Rapidly from Mistakes Without Feeling Like a Failure. Comput. Sci. Eng. 2023, 25, 44–49. [Google Scholar] [CrossRef]

- Lytle, N.; Cateté, V.; Boulden, D.; Dong, Y.; Houchins, J.; Milliken, A.; Isvik, A.; Bounajim, D.; Wiebe, E.; Barnes, T. Use, modify, create: Comparing computational thinking lesson progressions for stem classes. In Proceedings of the 2019 ACM Conference on Innovation and Technology in Computer Science Education, Aberdeen, UK, 15–19 July 2019; pp. 395–401. [Google Scholar]

- Martins, R.M.; Gresse Von Wangenheim, C. Findings on teaching machine learning in high school: A ten-year systematic literature review. Inform. Educ. 2023, 22, 421–440. [Google Scholar] [CrossRef]

- Solórzano, J.G.L.; Ángel Rueda, C.J.; Vergara Villegas, O.O. Measuring Undergraduates’ Motivation Levels When Learning to Program in Virtual Worlds. Computers 2024, 13, 188. [Google Scholar] [CrossRef]

- Shin, D.; Shin, E.Y. Data’s impact on algorithmic bias. Computer 2023, 56, 90–94. [Google Scholar] [CrossRef]

- Tick, A. Exploring ChatGPT’s Potential and Concerns in Higher Education. In Proceedings of the 2024 IEEE 22nd Jubilee International Symposium on Intelligent Systems and Informatics (SISY), Pula, Croatia, 19–21 September 2024; pp. 000447–000454. [Google Scholar]

- De Barros, V.A.M.; Paiva, H.M.; Hayashi, V.T. Using PBL and agile to teach artificial intelligence to undergraduate computing students. IEEE Access 2023, 11, 77737–77749. [Google Scholar] [CrossRef]

- Vargas, M.; Nunez, T.; Alfaro, M.; Fuertes, G.; Gutierrez, S.; Ternero, R.; Sabattin, J.; Banguera, L.; Duran, C.; Peralta, M.A. A project based learning approach for teaching artificial intelligence to undergraduate students. Int. J. Eng. Educ. 2020, 36, 1773–1782. [Google Scholar]

- ABET. Criteria for Accrediting Engineering Programs. 2024. Available online: https://www.abet.org/accreditation/accreditation-criteria/criteria-for-accrediting-engineering-programs-2025-2026 (accessed on 3 October 2025).

- Theobald, O. Machine Learning for Absolute Beginners: A Plain English Introduction, 3rd ed.; Independently published: Chicago, IL, USA, 2021; ISBN 979-8558098426. [Google Scholar]

- Lee, H.P.; Sarkar, A.; Tankelevitch, L.; Drosos, I.; Rintel, S.; Banks, R.; Wilson, N. The impact of generative AI on critical thinking: Self-reported reductions in cognitive effort and confidence effects from a survey of knowledge workers. In Proceedings of the 2025 CHI Conference on Human Factors in Computing Systems, Yokohama, Japan, 26 April–1 May 2025; pp. 1–22. [Google Scholar]

- Herrero-de Lucas, L.C.; Martínez-Rodrigo, F.; De Pablo, S.; Ramirez-Prieto, D.; Rey-Boué, A.B. Procedure for the determination of the student workload and the learning environment created in the power electronics course taught through project-based learning. IEEE Trans. Educ. 2021, 65, 428–439. [Google Scholar] [CrossRef]

- Koklu, M.; Kursun, R.; Taspinar, Y.S.; Cinar, I. Classification of Date Fruits into Genetic Varieties Using Image Analysis. Math. Probl. Eng. 2021, 2021, 4793293. [Google Scholar] [CrossRef]

- Gradinaru, S.J.; Harrington, C.L.; Clinton, J.T.; Jackson, S.; Gilbert, G.; Fudge, G.L. An Integrated Time-Frequency Detector and Radar Pulse Modulation CNN Classifier. In Proceedings of the IEEE SoutheastCon 2025, virtual, 16–17 March 2025; pp. 575–582. [Google Scholar]

- Planitz, M. Numerical recipes—The art of scientific computing, by W. H. Press, B. P. Flannery, S. A. Teukolsky and W. T. Vetterling. Pp 818. £25. 1986. ISBN 0-521-30811-9 (Cambridge University Press). Math. Gaz. 1987, 71, 245–246. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Fudge, G.; Rimu, A.; Zorn, W.; Ringle, J.; Barnett, C. Teaching Machine Learning to Undergraduate Electrical Engineering Students. Computers 2025, 14, 465. https://doi.org/10.3390/computers14110465

Fudge G, Rimu A, Zorn W, Ringle J, Barnett C. Teaching Machine Learning to Undergraduate Electrical Engineering Students. Computers. 2025; 14(11):465. https://doi.org/10.3390/computers14110465

Chicago/Turabian Style: Fudge, Gerald, Anika Rimu, William Zorn, July Ringle, and Cody Barnett. 2025. "Teaching Machine Learning to Undergraduate Electrical Engineering Students" Computers 14, no. 11: 465. https://doi.org/10.3390/computers14110465

APA Style: Fudge, G., Rimu, A., Zorn, W., Ringle, J., & Barnett, C. (2025). Teaching Machine Learning to Undergraduate Electrical Engineering Students. Computers, 14(11), 465. https://doi.org/10.3390/computers14110465