Abstract

The emergence of generative artificial intelligence (GAI) has significantly transformed higher education. As a linguistic assistant, GAI can promote equity and reduce barriers in academic writing. However, its widespread availability also raises ethical dilemmas about integrity, fairness, and skill development. Despite the growing debate, empirical evidence on how students’ ethical evaluations influence their predicted use of GAI in academic tasks remains scarce. This study analyzes the ethical determinants of students’ determination to use GAI as a linguistic assistant in essay writing. Based on the Multidimensional Ethics Scale (MES), the model incorporates four ethical criteria: moral equity, moral relativism, consequentialism, and deontology. Data were collected from a sample of 151 university students. For the analysis, we used a mix of partial least squares structural equation modeling (PLS-SEM), aimed at testing sufficiency relationships, and necessary condition analysis (NCA), to identify minimum acceptance thresholds or necessary conditions. The PLS-SEM results show that only consequentialism is statistically relevant in explaining the predicted use. Moreover, the NCA reveals that reaching a minimum degree in the evaluations of all ethical constructs is necessary for use to occur. While the necessary condition effect size of moral equity and consequentialism is high, that of relativism and deontology is moderate. Thus, although acceptance of GAI use in the analyzed context increases only when its consequences are perceived as more favorable, for such use to occur it must be considered acceptable, which requires surpassing certain thresholds in all the ethical factors proposed as explanatory.

1. Introduction

In recent years, artificial intelligence (AI) and, in particular, generative artificial intelligence (GAI), has become established as a key technology across various domains, including higher education [1]. GAI tools such as ChatGPT, Gemini, and Copilot are transforming teaching–learning processes by offering everything from personalized tutoring to support in academic writing [2,3]. GAI can enhance the effectiveness of teaching processes through key factors such as content availability, learning engagement, motivation, and learner involvement [4]. Thus, its impact redefines both the student’s and the teacher’s role, expanding access to knowledge and serving as a potential source of improvement in educational efficiency [5,6].

This advancement also raises important ethical and pedagogical challenges [2]. Inappropriate use of GAI may discourage the development of essential skills such as writing, critical thinking, or creativity, fostering passive attitudes toward learning [7]. Likewise, the presence of biases in algorithmic models risks reproducing stereotypes and limiting diversity of perspectives, which is particularly problematic in university education [8]. Integrating GAI in education means balancing its benefits with safeguards that protect equity and intellectual autonomy, while minimizing negative externalities [9]. In other words, it cannot be concluded that the use of GAI in an academic environment is intrinsically “good” or “bad,” since the consequences of its use (positive or negative) depend on the context and the specific task at hand [10,11].

Academic essay writing occupies a central place in higher education, as it constitutes not only a means of communicating knowledge but also an epistemic tool for learning and the development of critical thinking [12]. Assessment of writing usually follows an integrative approach, considering cognitive, linguistic, and pragmatic dimensions beyond formal aspects [13]. Academic writing in advanced contexts poses a challenge for both native and non-native students, as it simultaneously demands specialized communication, knowledge creation, and mastery of specific discourse conventions [14]. Thus, the scientific essay becomes a key pedagogical resource, allowing students to express, argue, and structure ideas with clarity and coherence, integrating theory and practice. In addition to fostering critical reflection, essay writing demands a high level of language proficiency, as the quality of argumentation depends on the conceptual rigor, precision, and expressive richness of the discourse [15].

This fact motivates the present study, which analyzes how students’ ethical evaluations influence their determination to use GAI as an assistant in essay text editing. The evaluated scenario implies that GAI is employed exclusively as an assistant to perform linguistic corrections and minor edits on a text previously written independently by the student. This is a use that, in research practice, is widely accepted [8], and which current word processors already partially provide, but which could also be admissible in evaluative essays in higher education [9].

Although empirical studies have shown that students distinguish between the ethical implications of using GAI as a linguistic assistant and employing it intensively for essay writing—consistently evaluating the former more favorably [10]—this use is not without risks. First, since higher education students are still in training, resorting to GAI may discourage personal effort to improve their writing skills, such as linguistic accuracy, discourse structuring, syntax, and spelling [12], leading to excessive dependence on technology and a loss of autonomy [7]. This dependence fits within the broader risks of GAI dependence in multiple domains [7]. Second, even in an “appropriate” scenario of GAI use, such as the one analyzed here, there is a possibility that students may end up using it to produce substantial parts of the essay. This phenomenon has two explanations. The law of the instrument suggests that the availability of a tool encourages its overuse, even in inappropriate contexts [16]. In parallel, ego depletion theory in psychology argues that self-control is a limited resource that weakens over time, making students more likely to yield to temptation and misuse GAI [17].

Decision-making based solely on ethical vs. unethical criteria is simplistic, as moral philosophy offers diverse normative approaches [18]. For example, using GAI, from a moral equity point of view, could be considered unfair and unequal if not all students had the same access to these tools. From a deontological standpoint, however, it could be deemed legitimate as long as university regulations do not explicitly prohibit its use for text correction.

Within this framework, the present study adopts the Multidimensional Ethics Scale (MES) [18,19] as a reference. This scale has been employed in research on the acceptance of ethically controversial technologies such as body insertables [20,21], as well as in the analysis of questionable academic behaviors related to information technologies, such as plagiarism, privacy violations, various forms of falsification, or piracy [22,23,24,25]. It has also been used to systematize students’ views on their academic behavior in specific scenarios of GAI use [10].

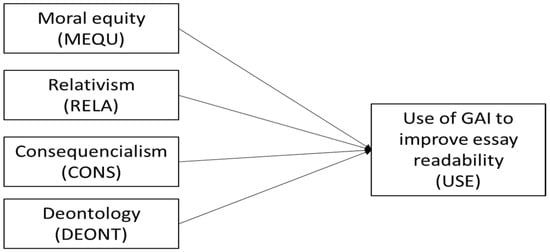

The analytical framework employed is presented in Figure 1 and pursues two research objectives (ROs):

Figure 1.

Theoretical groundwork based in Mutidimensional Ethics Scale.

RO1: To assess the ability of the conceptual framework presented in Figure 1 to explain the use of GAI in essay correction. This objective involves evaluating both the fit and the predictive capability of the MES-based framework. From the perspective of ethical judgments, this RO implies analyzing whether a more favorable evaluation in a given moral dimension (e.g., moral equity) translates into a greater predicted use of GAI. Logically, this approach corresponds to the classical framework of sufficiency studies in applied ethics: “if the ethical evaluation in dimension X is more positive, it is more likely that the student will use GAI” [26].

RO2: To determine whether explanatory ethical judgments also act as necessary conditions for reaching higher levels of adoption. This objective is framed within the logic of necessity and implies assessing whether it is essential to reach a minimum threshold Xa in moral dimension X for GAI use in essay linguistic correction to occur [26].
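The two logics behind RO1 and RO2 can be written compactly. The notation here is an illustrative assumption rather than the authors' own: X is the score on a given ethical dimension, Y is the predicted use (USE), X_a is the minimum threshold mentioned in RO2, and Y_a is a hypothetical target level of use.

```latex
% Sufficiency logic (RO1): more favorable evaluations go with higher expected use
\text{RO1:}\quad x_1 < x_2 \;\Rightarrow\; \mathbb{E}[Y \mid X = x_1] \le \mathbb{E}[Y \mid X = x_2]

% Necessity logic (RO2): a target level of use cannot be reached below the threshold
\text{RO2:}\quad Y \ge Y_a \;\Rightarrow\; X \ge X_a
\qquad\text{or, equivalently,}\qquad
X < X_a \;\Rightarrow\; Y < Y_a
```

Under this reading, a construct can fail the first statement (no significant average effect) and still satisfy the second (a floor below which use does not occur).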

To address these objectives, a quantitative approach is employed that combines partial least squares structural equation modeling (PLS-SEM) to evaluate RO1, with necessary condition analysis (NCA) to evaluate RO2, following the methodological proposal of Sarstedt et al. [27]. We consider that the findings derived from this mixed approach will provide valuable insights into both the drivers and the necessary conditions in a specific yet highly common higher education context, namely the writing of thematic essays as a form of assessment.

The structure of the paper is as follows: Section 2 develops the analytical framework proposed in the figure; Section 3 describes the materials and methods used in the study; Section 4 presents the estimation and validation of the model along with the main findings; and finally, Section 5 concludes with a summary of the results, a reflection on their practical implications, and a proposal for future lines of research.

2. Theoretical Ground

2.1. Overview of the Groundwork

In this section, we develop the theoretical model presented in Figure 1, reflecting on how the different ethical theories considered as explanatory factors have the potential to influence the acceptance of GAI use in the analyzed educational scenario. The model is based on the MES in [18,19], slightly modified in [28]. Specifically, the predicted use of GAI in the evaluated scenario (USE) is explained by four ethical judgments: moral equity, relativism, consequentialism (which includes the sub-theories of moral egoism and utilitarianism), and, finally, deontology.

The reflections conducted lead us to propose hypotheses regarding the direction of judgments in the four ethical constructs in terms of sufficiency, according to which a more favorable evaluation of a given ethical construct will imply a greater predisposition to use GAI. These hypotheses can be empirically tested through statistical tools such as regression.

Commonly, behavioral intention in studies on technology use, such as those based on the Technology Acceptance Model (TAM) [29] or the Unified Theory of Acceptance and Use of Technology (UTAUT) [30], relies on two items: the stated intention to use and the prediction of actual use. If the assessed technology is not widely available, these two dimensions may diverge. An example within the MES approach is [21], which examines the acceptability of technological implants. In such cases, intention may not coincide with prediction due to perceived access limitations.

We are exclusively concerned with the decision to act, rather than with intention alone. Access to GAI among university students is practically universal, as most already use it to complete many of their academic tasks [31]. Hence, decision-making is not affected by the novelty of the technology. We therefore focus solely on what students believe they will actually do when writing an essay—that is, the predicted action. Likewise, the use of the MES with single-item constructs for the outcome variable, directly asking about the decision or action to be taken, is a well-established practice in the literature [18,19,24,25,32].

The MES approach is not contradictory to TAM or UTAUT, which are the most widely used theories in the study of GAI use and acceptance (see, e.g., [33,34,35]), but rather shares certain elements while emphasizing different aspects. The central elements of TAM and UTAUT are constructs related to technology use based on arguments of performance and ease of use [29]. In contrast, MES connects with consequentialist theory, which holds that an action is ethically admissible if its consequences are positive for the individual performing it or for the society in which they live, in terms of improved well-being and cost/benefit [18].

Furthermore, the subjective norm or social influence in TAM and UTAUT has a clear connection to the theory of moral relativism. While technology acceptance theories define social influence as the perception that close others and the decision-maker’s environment expect them to adopt a certain technology [30], moral relativism posits that a behavior is ethically correct if it is perceived as appropriate by the social and cultural environment of the decision-maker [18].

Extensions of TAM, such as TAM 3 [36], build on the addition of new variables influencing the core constructs (usefulness and ease of use), while UTAUT extensions, such as UTAUT 2 [37], incorporate additional variables that nuance the basics, such as hedonic motivation or price–value perception. Unlike these extensions, MES places emphasis on ethical evaluations, going beyond convenience and perceived social approval, by incorporating perspectives such as moral equity—the innate perception of what is fair or unfair, equal or unequal—and deontology, which asserts that morally correct actions involve respecting the contracts individuals hold with the society in which they live [18].

Moreover, establishing the direction of the relationship between variables in the hypotheses is particularly useful for necessary condition analysis (NCA). Since a positive relationship is expected—in other words, that a higher evaluation of an ethical construct will be associated with greater acceptance of GAI—this relationship must be interpreted in terms of the possible existence of a minimum threshold in the ethical variable required for the determination to use GAI [38]. Therefore, the development of hypotheses on the direction of the relationships will allow us to guide the necessary condition analysis, which is exploratory in nature, in order to establish which thresholds associated with ethical judgments act as floors (minimum requirements) rather than ceilings (maximum tolerable levels).

2.2. Moral Equity

Moral Equity (MEQU), also understood as justice, refers to a person’s ability to evaluate what is fair or unfair, as well as the broader principles related to right and wrong [39]. This ethical approach entails an impartial commitment to promoting fairness, defending equality and fundamental rights, and actively criticizing situations of inequality and injustice [40]. From a capabilities perspective, justice cannot be reduced to the mere availability of resources or the existence of formal freedoms; what matters is what people are actually able to achieve and who they are able to become [41]. Within this view, moral equity involves ensuring that all individuals have the minimum conditions that allow them to lead a full life, which requires support sensitive to their specific circumstances.

From a moral equity point of view, the use of GAI to refine academic essays may be considered positive insofar as it promotes greater equality of opportunities among students. In particular, it provides support to those who do not fully master writing skills, such as international students or those with specific learning difficulties, by addressing disadvantages unrelated to their intellectual ability [42]. In this way, essay assessment focuses on students’ knowledge and arguments on the subject matter rather than on linguistic factors that could introduce bias into evaluation.

Nevertheless, significant concerns remain regarding equal access to GAI among students. First, its use requires a stable internet connection [2] and adequate devices [43], resources that not all students possess [2]. Second, differences in access to paid versions—which typically offer substantially higher performance than free ones—may exacerbate pre-existing gaps among students [42,44]. Under this premise, the principle of moral equity calls into question not only the legitimacy of using such technologies but also the conditions of access and their fair distribution within the academic community.

Additionally, at formative stages such as postgraduate studies, essays play an essential role in strengthening linguistic skills—syntax, structuring of ideas, and construction of arguments. Excessive reliance on GAI could therefore inhibit the development of these competencies, fostering an overdependence on the tool for academic writing [12].

The literature has shown that moral equity constitutes a key construct in explaining certain academic behaviors with ethical implications, such as plagiarism [23,24,25,32], piracy [22,23], or falsification in ambiguous contexts [25]. Regarding the academic use of GAI, this significance has been observed both in teachers’ decisions to recommend its use to students [45] and in its inclusion as a construct within an extended model of the UTAUT applied to the analysis of GAI use in a sample of Chinese students [46]. Accordingly, we propose:

Hypothesis 1 (H1).

A favorable perception of the use of GAI for refining academic texts from the perspective of moral equity facilitates the willingness to employ it.

2.3. Moral Relativism

Moral Relativism (RELA) is based on the premise that judgments about what is right or wrong are not universal, but are conditioned by the cultural, historical, and social context in which they are formulated [18]. From this perspective, morality lacks an absolute standard: what may be considered ethically acceptable in one community may be regarded as questionable or inappropriate in another [47].

In the educational domain, this framework implies that students’ disposition toward specific academic practices is strongly shaped by the opinions and attitudes of their immediate environment. Norms conveyed by peers, instructors, and institutions guide behavior, either directly or through subtle cues [48]. In this regard, both peer influence [49] and educator influence [50] become key determinants in fostering or discouraging the use of GAI, regardless of the specific type of application.

Moreover, higher education increasingly emphasizes the inculcation of values related to social responsibility and sustainability [7]. This commitment adds an additional dimension to relativism, since the intensive use of GAI models has been pointed out as less environmentally friendly compared to lower-impact technological alternatives such as traditional online applications [51]. In institutions with a strong focus on sustainability, the systematic use of GAI for academic tasks could therefore be perceived as a practice misaligned with group values, limiting its acceptance.

The importance of these social perceptions in technology adoption has been widely documented within acceptance frameworks such as TAM and UTAUT. Indeed, multiple studies have shown that attitudes toward GAI are closely linked to social influence in Asian and North African countries, such as South Korea [52], Saudi Arabia [33,53], Egypt [34], and Indonesia [35], as well as in European and Western contexts, including Poland [49], Norway [54], and Spain [55].

Hypothesis 2 (H2).

A favorable perception of the use of GAI for the linguistic correction of academic texts from the perspective of moral relativism increases students’ willingness to employ it.

2.4. Consequentialism

Consequentialism (CONS) posits that an action is morally valid when it produces a net positive balance in terms of utility or well-being [56]. This school of thought is divided into two main approaches: moral egoism and utilitarianism. The former holds that a behavior is ethical if it promotes the interests of the agent, whether through immediate or long-term benefits [57]. In contrast, utilitarianism—developed from the ideas of Jeremy Bentham and John Stuart Mill—considers morally right whatever maximizes collective well-being [58].

From an egoistic perspective, the use of GAI to improve evaluable essays may translate into higher grades by enhancing clarity, coherence, and text structure [3], thereby increasing opportunities for scholarships, postgraduate study, or employment. From a utilitarian angle, GAI use can optimize learning processes, facilitate study organization, and accelerate the resolution of doubts [59], thereby increasing overall academic productivity. Empirical evidence supports this view, since [60] found that after training in ChatGPT, students significantly improved their analytical, synthesis, information management, and autonomous learning skills.

By reducing the time spent on editing, students can redirect effort to higher-value tasks or to personal activities—such as leisure or socialization—that also improve academic performance [61]. Essay writing is also often associated with anxiety and lack of self-confidence, which hinder motivation and skills development [62]. In this respect, GAI may serve as an emotional buffer, reducing stress and fostering mental health [63]. Whether from the standpoint of moral egoism—by seeking individual well-being [64]—or from utilitarianism—by reducing overall suffering and increasing collective well-being [65]—these justifications are coherent.

Nevertheless, a consequentialist approach also requires consideration of negative externalities. Intensive use of GAI may erode intellectual autonomy, discourage active learning, and weaken the appreciation of academic merit [12,66]. It may also hinder the development of writing skills in less proficient students or lead to deterioration in those who already possess them [67]. Additional risks involve privacy and the handling of data shared with GAI, which may be subject to misuse or fraudulent practices [8,68].

In TAM- and UTAUT-based models, usefulness, efficiency, and convenience are central predictors of technology acceptance [29,30]. This also holds when these models are adapted to AI acceptance [69]. These factors have been documented in diverse contexts such as Turkey [70], South Korea [52], Saudi Arabia [33,53,71], Indonesia [72], Norway [54], and Poland [49]. In addition, recent studies have shown the positive influence of hedonic and well-being motives on predicted use [49,55] and their negative relationship with academic anxiety [70,73].

Within the MES framework, both egoistic and utilitarian criteria have proven relevant for explaining ethically controversial student behaviors such as plagiarism [23,25], document falsification [24,25], and even teachers’ recommendations to use GAI [45].

Hypothesis 3 (H3).

A favorable perception of GAI use for the linguistic correction of academic texts from the perspective of consequentialism increases students’ willingness to employ it.

2.5. Deontology or Contractualism

From the perspective of contractualism or deontological ethics (DEONT), actions are not evaluated by their consequences but by their conformity with pre-established ethical norms, principles, and duties [74]. Applied to the university context, this implies that student conduct should be judged according to the normative commitments established among students, educational institutions, and the community, beyond immediate personal benefits. Within this framework, the focus lies on respecting a social contract, formal or tacit, that delineates the limits of ethically permissible conduct within the academic sphere [18].

The tacit commitments of a student to their university, family, and society encompass, on the one hand, fundamental duties such as avoiding dishonest behavior and engaging with the acquisition of knowledge and skills [75]. On the other hand, they include the responsibility to make full use of the training received, particularly in public institutions supported by collective funds. Thus, academic success transcends the individual, also constituting a form of moral reciprocity toward the society that finances the educational process [76,77]. In this context, the use of GAI for the linguistic correction of essays can be beneficial by relieving students of the burden of formal aspects, allowing them to devote more time to higher cognitive and creative tasks [9]. However, it also carries risks, since excessive dependence could erode essential writing skills required for academic and professional performance [12].

Student behavior is likewise mediated by family, social, and institutional expectations that shape motivation and performance [78,79]. Families, for example, may reinforce commitment to studies through positive encouragement, increasing perceived responsibility not only toward personal achievements but also toward collective goals [79,80].

A higher expectation of academic performance within the social environment may create the perception that achieving good grades, the primary indicator of success, constitutes an implicit contract with that environment, reinforcing their relevance as both a personal and social obligation. If the use of GAI contributes to enhanced academic performance, its evaluation from this perspective could be considered ethically admissible from a deontological standpoint. This positive assessment would be further reinforced if GAI also frees up time for higher value-added activities, thereby aligning efficiency gains with ethical justifiability.

Nevertheless, institutional authorization of GAI use for linguistic correction may open the door to misuse. A rule legitimizing its use for minimal editing could be interpreted by students as tacit justification for generating substantial parts of an essay, bordering on plagiarism and explicitly violating academic regulations [12]. This risk is particularly critical, given that detecting AI-generated text is more difficult than identifying traditional plagiarism [81], and it could even lead to unintentional violations of copyright and publishing agreements [82].

This phenomenon can be understood through the lens of moral hazard, according to which the availability of a resource alters behavior and increases the probability of misuse [83]. It also corresponds to the logic of the law of the instrument, which describes the tendency to use a tool simply because it is available, even when inappropriate [16]. The immediacy and privacy of interaction with GAI further reinforce the online disinhibition effect, reducing psychological barriers against ethical transgression [84]. Even when students recognize that they should not use GAI to write an entire essay, they may succumb to temptation due to weakness of will. This vulnerability increases under academic pressure, when ego depletion reduces the ability to resist temptation [17].

The relevance of deontological judgments for explaining academic behaviors is well documented. They have been used to understand phenomena such as plagiarism [24,25,32], improper collaboration in individual exams [24], software piracy [23], and authorship-related fraud in research [25].

Hypothesis 4 (H4).

A favorable perception of GAI use from the perspective of deontology positively influences students’ determination to employ it for the linguistic correction of academic texts.

3. Materials and Data Analysis

3.1. Sampling and Sample

This study was conducted with students from two Spanish universities enrolled in social sciences programs, specifically in degrees such as Economics, Business Administration, and Social Work. These curricula are characterized by the demand for communicative skills, both oral and written, as well as an understanding of the humanistic aspects of human behavior. The academic essay is a widely used teaching and assessment method in higher education within the social sciences [85]. By focusing specifically on students in the social sciences, the study avoids dispersion and addresses a context where essay writing is a central academic task; however, this also means that the findings may not directly transfer to other domains, such as engineering, where assignments are more often based on technical projects rather than written essays.

The study employed purposive sampling and was conducted during May, June, and July of 2025. Data were collected through an anonymous, voluntary, and self-administered electronic questionnaire. Confidential and voluntary participation was intended to foster more deliberate and authentic responses, likely motivated by internal rather than external factors, while also reducing socially desirable responding. The items were written in Spanish, and access to the questionnaire was provided through a digital link. Responses were restricted to one per IP address.

The electronic questionnaire was made available in the Moodle virtual classrooms of both universities, specifically within courses in business, economics, and social work, covering all academic years. At the first university, located in a medium-sized metropolitan area (approximately 500,000 inhabitants), the universe of potential respondents was about 400 students. At the second university, situated in a metropolitan area of around 7 million inhabitants, approximately 1200 students were eligible to participate. To complete the survey, students were required not only to provide explicit informed consent but also to acknowledge that they had previously used GAI in their studies, regardless of the specific purpose. We understand that this second requirement was easily achievable, considering results such as those reported in [31], where 95% of students acknowledged using GAI for academic purposes.

The final sample consisted of 151 participants, representing an approximate response rate of 9.5%. Although this rate is low, several considerations must be taken into account. First, online surveys typically achieve lower response rates than telephone or face-to-face surveys [86]. Second, participation was not incentivized, whereas monetary or other forms of compensation could have increased the response rate [32]. Finally, the survey did not address a behavior with few or no ethical implications, but rather one with potential moral implications, which likely discouraged some students from responding.
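The reported rate can be checked against the approximate population sizes given above. The short sketch below assumes the rounded figures of 400 and 1200 eligible students; because those figures are approximations, the result (about 9.4%) is consistent with, but not identical to, the approximate 9.5% stated in the text.

```python
# Approximate eligible populations and final sample size, as reported in the text.
eligible_first_university = 400
eligible_second_university = 1200
respondents = 151

eligible_total = eligible_first_university + eligible_second_university
response_rate = respondents / eligible_total

print(f"Eligible students (approx.): {eligible_total}")
print(f"Response rate (approx.): {response_rate:.1%}")
```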

Regarding gender, 53 (35%) were men and 98 (65%) women. With respect to age, 50 participants (33%) were 20 years old or younger, 33 (22%) were 21, 27 (18%) were 22, and 40 (26%) were 23 years old or older, while 1 participant (1%) did not provide this information. Concerning perceived academic performance, 107 (71%) considered their performance average or below average, while 44 (29%) rated it as above average. Finally, regarding employment status, 58 participants (38%) were employed full time, while 93 (62%) worked part time or were unemployed.

3.2. Measurement Instrument

The survey included an initial description of the scenario that participants were asked to evaluate:

“The deadline for an essay you have been working on for weeks is approaching. You have researched, structured your ideas, and carefully written each paragraph. However, you know that artificial intelligence tools such as ChatGPT, Gemini, or Claude could help you refine your writing, improve the fluency of the text, and give it a more professional touch. Based on this scenario, please take a moment to consider how generative artificial intelligence is used in the coursework and assignments of your degree program.”

The outcome variable was measured with a single item, which asked whether the student would be willing to use GAI in essay production. This item was measured on the same 11-point scale (0 to 10) used for the ethical constructs.

The questions employed to capture the ethical perceptions developed in Section 2, which are the explanatory variables, are detailed in Table 1. The items were assessed using an 11-point Likert scale (0 to 10), ranging from “strongly disagree” to “strongly agree”. This scale and the use of eleven response points have been employed in different MES applications, such as the evaluation of cyborg technology [21,87], assessment of immunity passports [88], or the use of novel sports technologies [28].

Table 1.

Descriptive statistics and scale reliability measures of items used in this study.

3.3. Data Analysis

The data analysis follows a sequence aimed at successively answering RO1 and RO2. First, the validity of the scales (internal consistency, convergent validity, and discriminant validity) is evaluated and factor scores are obtained. This step is common to both PLS-SEM [89] and NCA [27]. At this stage, we also applied Student’s t-test and Kendall’s τ correlation to assess the discriminant capacity of the variable gender on the response USE, given its potential relevance in explaining differences in contexts with ethical implications [23,25,32] and the different predispositions toward the use of GAI in academic settings [90].

The inner model presented in Figure 1 is fitted with PLS-SEM. The results obtained provide an answer to RO1. This step includes the evaluation of sample fit, the statistical significance of the variables—which allows testing the proposed hypotheses—and their predictive power. The protocol proposed in [89] is followed, and predictive capacity is assessed with the Cross-Validated Predictive Ability Test [91,92].

The second phase of the analysis consists of conducting NCA, following the guidelines in [27,93]. The factor scores obtained in the PLS-SEM estimation are used as values for the latent variables. The analysis is carried out using the ceiling envelopment technique based on the free disposal hull (FDH) approach. The FDH defines the XY-plane region in which realizations of the dependent variable are not possible given a specific level of the conditional (input) variable. The main NCA indicators are:

Necessity effect size (d). This can be classified as small (0 < d < 0.1), medium (0.1 ≤ d < 0.3), large (0.3 ≤ d < 0.5), or very large (d ≥ 0.5), along with its statistical significance. Following [93], these effect sizes are considered practically relevant when d > 0.1 and p < 0.05.

Bottleneck analysis based on bottleneck tables. These tables facilitate the evaluation of necessity relationships. When the relationship between variables is positive, as in the case of ethical variables, the interpretation is: “To achieve a given USE level, it is necessary to reach at least level Xa of ethical construct X.”
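The two indicators above can be illustrated with a minimal Python sketch of the CE-FDH logic. It is not the procedure or software used in this study: the function names and the toy data are hypothetical, and the scope is assumed to be the empirical range of the observations, whereas dedicated NCA software offers further options such as theoretical scope limits and permutation-based significance tests.

```python
def ce_fdh_ceiling(x, y):
    """Return the CE-FDH step ceiling as a list of (x_value, ceiling_y) pairs.

    The ceiling at x is the largest y observed among cases with x_i <= x,
    so it is a non-decreasing step function.
    """
    steps = []
    running_max = float("-inf")
    for xi, yi in sorted(zip(x, y)):
        running_max = max(running_max, yi)
        if steps and steps[-1][0] == xi:
            steps[-1] = (xi, running_max)  # same x: keep the highest y so far
        else:
            steps.append((xi, running_max))
    return steps


def effect_size(x, y):
    """Necessity effect size d = empty area above the ceiling / empirical scope."""
    steps = ce_fdh_ceiling(x, y)
    x_min, x_max = steps[0][0], steps[-1][0]
    y_min, y_max = min(y), max(y)
    scope = (x_max - x_min) * (y_max - y_min)
    if scope == 0:
        return 0.0
    # Sum the rectangles between each step of the ceiling and the top of the scope.
    empty = sum((x1 - x0) * (y_max - c0)
                for (x0, c0), (x1, _) in zip(steps, steps[1:]))
    return empty / scope


def bottleneck(x, y, y_target):
    """Smallest observed x whose ceiling allows an outcome of at least y_target."""
    for xi, ceiling in ce_fdh_ceiling(x, y):
        if ceiling >= y_target:
            return xi
    return None  # y_target lies above every observed outcome


# Hypothetical toy data on a 0-10 scale (NOT the study's data).
toy_x = [0, 2, 4, 6, 8, 10]
toy_y = [1, 3, 3, 7, 6, 10]
print(round(effect_size(toy_x, toy_y), 3))
print(bottleneck(toy_x, toy_y, 5))
```

In this sketch, `bottleneck` reads one row of a bottleneck table: for a desired outcome level it returns the lowest observed level of the condition below which that outcome was never observed.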

3.4. Sample Size Adequacy

The adequacy of the sample size must be evaluated in relation to quantitative studies similar to ours and to the techniques employed for data analysis, which in our study are PLS-SEM and NCA.

We must acknowledge that the sample size is relatively modest, and indeed there are studies with larger samples (e.g., Prashar et al. [38] with 298 observations). Nevertheless, it is important to emphasize that recruiting participants willing to disclose, even anonymously, potentially questionable behaviors is inherently challenging. Within the MES literature, such sample sizes are not unusual. For example, studies in professional contexts [94,95] relied on 67 and 123 participants, respectively. In academic settings, prior studies have similarly worked with modest samples, such as 90 participants [24] and between 100 and 120 students [19,23].

For the PLS-SEM analysis, as shown in Figure 1, the model considers four predictor variables. According to the “ten times rule” [89], the minimum required sample size would therefore be 40 cases. Additionally, a power analysis conducted with G*Power 3.1.0 [96] confirmed that with a sample of 151 participants, it is possible to achieve a statistical power of 80% using a significance level of 5% for a minimum effect size of 0.08 (equivalent to a coefficient of determination of 7.05%, considered small). When statistical power is instead assessed for the significance of individual coefficients, the same 80% power and 5% significance level correspond to a minimum effect size of f2 = 0.0525.
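The arithmetic behind these figures can be checked with a short sketch. It assumes Cohen's standard relationship between the effect size and the coefficient of determination, f2 = R2 / (1 − R2), and the function names are hypothetical. The power calculation itself relies on the noncentral F distribution and is not reproduced here.

```python
def f2_from_r2(r2):
    """Cohen's f-squared implied by a coefficient of determination."""
    return r2 / (1.0 - r2)


def r2_from_f2(f2):
    """Coefficient of determination implied by Cohen's f-squared."""
    return f2 / (1.0 + f2)


def ten_times_rule(n_predictors):
    """Minimum sample size under the 'ten times rule' heuristic:
    ten cases per predictor pointing at the most complex construct."""
    return 10 * n_predictors


# Values quoted in the text: four predictors and an R2 threshold of 7.05%.
print(ten_times_rule(4))
print(round(f2_from_r2(0.0705), 2))
```

Under this assumption, an R2 of 7.05% corresponds to an f2 of roughly 0.076, which matches the reported 0.08 after rounding to two decimals.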

Regarding NCA, following [97], a statistical power of 100% is achieved using the ceiling-envelopment free disposal hull, assuming a 5% significance level for medium effect sizes (d = 0.1) and a uniform distribution of data under the ceiling envelopment.

4. Results

4.1. Descriptive Statistics and Measurement Model Assessment

Table 1 presents the means and standard deviations of the evaluated items. The assessment of use is high (practically 7). The evaluations of the ethical constructs are, in all cases, clearly above 5. For moral equity, values range between 7.04 and 7.71; for relativism, between 6.33 and 7.83; and for consequentialism, between 6.60 and 8.40. The lowest scores correspond to deontology, ranging between 5.82 and 6.17. The Cramér–von Mises statistic rejects the null hypothesis of normality for the items.

The results in Table 1 suggest that the scales possess internal consistency. For almost all constructs, both Cronbach’s alpha and the two measures of composite reliability exceed the recommended threshold of 0.70. Furthermore, convergent validity is confirmed: as shown in Table 2, factor loadings exceed the value of 0.70, and the average variance extracted (AVE) values are greater than 0.50.
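As an illustration of how two of these indicators follow from standardized factor loadings, the sketch below computes the AVE and one common composite reliability coefficient (Jöreskog's rho_c). The loadings are hypothetical, not the values in Table 1 or Table 2, and the sketch does not cover the second composite reliability measure reported in this study.

```python
def average_variance_extracted(loadings):
    """AVE: mean of the squared standardized loadings of a construct."""
    return sum(l * l for l in loadings) / len(loadings)


def composite_reliability(loadings):
    """Joreskog's rho_c for standardized loadings:
    (sum of loadings)^2 / ((sum of loadings)^2 + sum of error variances)."""
    total = sum(loadings)
    error_variance = sum(1.0 - l * l for l in loadings)
    return total ** 2 / (total ** 2 + error_variance)


# Hypothetical loadings for a three-item construct (NOT taken from the study).
example_loadings = [0.8, 0.8, 0.8]
ave = average_variance_extracted(example_loadings)
rho_c = composite_reliability(example_loadings)
print(ave > 0.50, rho_c > 0.70)
```

With all three loadings at 0.8, the AVE is 0.64 and rho_c is about 0.84, so both recommended thresholds would be met.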

Table 2.

Pearson’s correlations and measures of discriminant capability of scales.

Table 2 also demonstrates that the scales exhibit adequate discriminant validity. The square roots of AVEs are above the correlations between constructs. Likewise, the heterotrait–monotrait ratios (HTMT) generally do not exceed the desirable threshold of 0.85, and in the case where it is exceeded (the relationship between consequentialism and moral equity), the value does not reach the critical limit of 0.90 [89].

It should also be noted that our data did not reveal any gender-based differences in GAI use within this context. Women reported a slightly higher mean score than men, with a gender difference of 0.57; however, this difference was not statistically significant (t-ratio = −1.13, p = 0.262). Likewise, Kendall’s τ was 0.079 (p = 0.299), so the independence between gender and USE cannot be rejected. This contrasts with the much stronger and significant correlations observed between USE and the ethical constructs MEQU, RELA, CONS, and DEONT, all close to or above 0.5 (p < 0.001 in all cases).

4.2. PLS-SEM Estimation (Research Objective 1)

Table 3 displays the results of the structural model estimation developed in Section 2 using PLS-SEM. The coefficient of determination (R2) reaches 51.5%, which indicates a moderate level of explanatory power [89]. This value is well above the 7.05% threshold required to ensure a statistical power of 80% when testing the significance of the overall model. The standardized root mean squared residual is below 0.1, which implies a satisfactory model fit.

Table 3.

Results of the estimation of path coefficients with PLS-SEM of the model proposed in Section 2.

The same table also shows that there are no multicollinearity issues, as the variance inflation factor for all variables is below 3.3. With respect to the significance of the hypothesized relationships presented in Section 2, only the influence of consequentialism is statistically significant, with a path coefficient (β) = 0.603, a p-value < 0.001, and an effect size of f2 = 0.244.

Table 4 presents the predictive power metrics of the model. The Stone–Geisser Q2 value is 45.9% (>0), indicating that the model exhibits predictive capability [89]. The CVPAT results indicate that the proposed model improves on both the indicator average, with an average loss difference (ALD) = −3.60 (p < 0.001), and the parsimonious linear model (ALD = −0.19, p = 0.433). Nevertheless, the improvement over the parsimonious linear model is not statistically significant.

Table 4.

Measures of the PLS-SEM model predictive power.

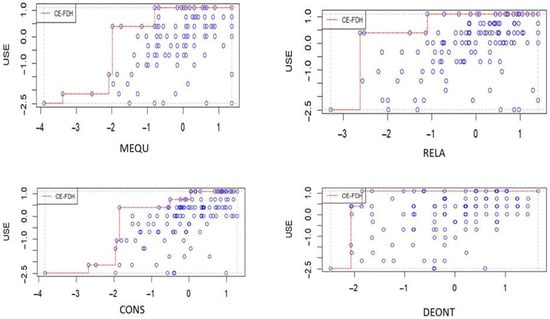

4.3. Results of Necessary Condition Analysis (Research Objective 2)

Table 5 reports the effect size of each variable on USE along with the bottleneck table, while Figure 2 illustrates the ceiling envelopment analyses of the relationships between USE and the ethical constructs. The effect sizes for moral equity (d = 0.380) and consequentialism (d = 0.429) are classified as large, whereas relativism (d = 0.207) and deontology (d = 0.109) fall within the medium range according to [38]. In all cases, the effects are statistically significant (p < 0.001).
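The classification of these values can be reproduced by applying the thresholds stated in Section 3.3. The sketch below is only illustrative: the function name is hypothetical, and the d values are those reported in the paragraph above.

```python
def classify_effect_size(d):
    """Label a necessity effect size using the thresholds from Section 3.3."""
    if d <= 0:
        return "none"
    if d < 0.1:
        return "small"
    if d < 0.3:
        return "medium"
    if d < 0.5:
        return "large"
    return "very large"


# Effect sizes reported in the text for each ethical construct.
reported_d = {"MEQU": 0.380, "CONS": 0.429, "RELA": 0.207, "DEONT": 0.109}
labels = {name: classify_effect_size(d) for name, d in reported_d.items()}
print(labels)
```

Deontology sits only just above the 0.1 boundary between small and medium, which is consistent with its comparatively low bottleneck thresholds.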

Table 5.

Effect sizes of NCA of explanatory factors and bottleneck table of USE (expressed as quantiles).

Figure 2.

Ceiling envelopment with free disposal hull (CE-FDH) for the ethical constructs.

Table 5 further shows that even very limited use (10th percentile) requires reaching the 34.5th percentile in moral equity and the 36.6th percentile in consequentialism. Notably, the thresholds for achieving both a median level of acceptance (50th percentile) and a high level of acceptance (80th percentile) remain practically unchanged, with the only difference being that the consequentialism threshold increases slightly from the 36.6th to the 38.8th percentile. Finally, the highest level of GAI use (100th percentile) requires surpassing the median values for both moral equity (59.0th) and consequentialism (75.7th), while the requirements for relativism (46.7th) and deontology (15th) remain below the median, indicating comparatively less stringent thresholds.

These results highlight that moral equity and consequentialism represent critical necessary conditions for the acceptance of GAI use, whereas relativism and deontology, despite also being necessary conditions, play a secondary role, imposing comparatively less stringent requirements.

5. Discussion

5.1. General Considerations

This paper set out to analyze the acceptance of generative artificial intelligence (GAI) use in the linguistic correction of academic essays from the perspective of moral judgments. The predicted use was explained through four ethical constructs derived from the multidimensional ethics scale (MES) initially proposed by Reidenbach and Robin [18] and subsequently revised in Shawver and Sennetti [19] and Andrés-Sánchez [28]: moral equity (MEQU), relativism (RELA), consequentialism (CONS), and deontology (DEONT). The study was guided by two research objectives (RO).

The first objective (RO1) was to assess the statistical adequacy of the MES in explaining the use of GAI, applying PLS-SEM. This approach made it possible to analyze, from the logic of sufficiency, the extent to which ethical constructs predict students’ predisposition to use GAI in the proposed academic scenario (USE) [26]. This is the common framework in empirical studies applying the MES to decision-making, such as the use of controversial technologies [21,28], business and managerial decisions [39,98], or academic behaviors [23,25,32,45].

The second objective (RO2) sought to determine whether the use of GAI requires reaching a minimum level in each ethical construct. In other words, it aimed to identify whether, for a given level of acceptance (USE) to occur, students must evaluate the ethical dimension X at least above a minimum threshold (Xa). This approach follows the logic of necessity [26].

With regard to RO1, the model showed adequate explanatory capacity, accounting for more than 50% of the variance of USE, along with strong predictive power (Stone–Geisser Q2 close to 50%). It also outperformed the benchmarks of the Cross-Validated Predictive Ability Test [92], namely the indicator average and the parsimonious linear model, although the improvement over the latter was not statistically significant. All constructs showed correlations close to or above 0.5 with the decision to use GAI, but only consequentialism reached statistical significance in the regression. This finding confirms the relevance of consequentialism for understanding ethical decisions in academic contexts, such as plagiarism [23,25], document falsification [24,25], or teachers’ decisions to encourage GAI use [45]. It is also consistent with the technology acceptance literature, where perceived usefulness, efficiency, and the absence of risks emerge as key determinants across cultural contexts: Islamic nations [53,70], Asian countries [46,52,72], and Europe [49,54]. Furthermore, it aligns with the relevance of hedonic and well-being motivations highlighted in the literature [49,55,73].

The lack of significance of MEQU, although unexpected, has been documented in previous MES-based studies of academic decisions, such as fraud in placement essays or the creation of chat rooms during individual tests [24]. Similarly, the nonsignificance of relativism is a recurring result [23,24,25,45]. As for deontology, other works have shown its limited relevance in behaviors related to plagiarism [23,24] or teachers’ use of GAI [45].

The results of RO1 do not imply that only consequentialism matters for understanding GAI acceptance, but rather highlight the weight of instrumental criteria in students’ decisions. The consequentialist dimension relates to tangible benefits such as improved textual clarity, better grades, reduced writing anxiety, or increased time availability for higher value-added activities. However, the analysis of RO2 qualifies this result: although MEQU, RELA, and DEONT are not sufficient on their own to explain use, they are necessary. In other words, for a student to use GAI, a minimally acceptable level in these ethical dimensions must be present. Yet, surpassing that threshold does not increase the tendency to use it: students simply deem the use admissible, and their final decision depends primarily on perceived usefulness.

Taken together, the findings suggest that the acceptance of GAI in essay correction is not explained solely by utilitarian logic nor by a normative, justice-oriented, or socially acceptable framework. Students appear to combine both dimensions: on the one hand, they seek concrete benefits derived from the technology’s use, while on the other, they need assurance that such use does not transgress a minimum threshold of justice, equity, social acceptance, and normative conformity.

5.2. Theoretical Implications

This study contributes to the field of applied ethics and the adoption of emerging technologies in higher education through several relevant insights. First, it extends the Multidimensional Ethics Scale (MES) [18,19] to the specific context of GAI use in the linguistic editing of academic essays. While the MES has been widely applied to the analysis of ethically controversial academic behaviors such as plagiarism, falsification, or cheating [24,25,32], its application to GAI allows for the exploration of a novel domain where the boundaries between legitimate and improper use remain unclear.

The research demonstrates the complementarity of sufficiency- and necessity-based approaches in understanding technology acceptance in ethically sensitive scenarios. The sequential use of PLS-SEM and NCA revealed that, although consequentialism is the main predictor of use predisposition, the other ethical criteria still operate as indispensable prerequisites. This finding highlights that ethical judgements do not operate in isolation but rather in a hierarchical manner: some dimensions explain variance in behavior, while others establish minimum thresholds without which the behavior does not occur.

The results further refine and nuance prior findings on GAI acceptance derived from technology adoption models such as TAM [29] and UTAUT [30]. Although these frameworks traditionally emphasize perceived usefulness and social influence as the main predictors of behavioral intention, our results reveal a more differentiated pattern. In the present context, perceived usefulness—conceptually aligned with consequentialism—remains a significant determinant, whereas the influence of the social environment—associated with moral relativism—appears nonsignificant when analyzed through sufficiency logic. This contrast suggests that in individual and private decisions, such as essay writing, social approval may lose relevance, while personal ethical evaluations and self-regulated moral reasoning gain greater prominence.

Finally, we understand that the outcomes contribute to the literature on ethics and technology by showing that GAI acceptance cannot be assessed solely in terms of efficiency or effectiveness but must also incorporate normative frameworks that address both equity and academic integrity. This integrative perspective opens new avenues for research into how different moral frameworks interact to guide the responsible adoption of disruptive technologies in education.

5.3. Practical Implications

From an applied perspective, we believe that the findings offer valuable guidance for instructors, institutional leaders, and educational policy designers. First, the results underscore the importance of ensuring equitable access to GAI. Since the perception of moral equity is a necessary condition for acceptance, it is crucial that universities guarantee that all students have similar opportunities to use these tools. This not only implies providing free or institutional access to high-quality versions but also reducing gaps stemming from differences in connectivity or devices.

In our opinion, the results suggest that higher education institutions must establish clear and transparent rules regarding the use of GAI in academic writing. Although the scenario analyzed focuses on use limited to linguistic correction, the risk that students employ the tool to generate substantive content is real. Explicit policies that delineate what is permitted and what is prohibited, accompanied by awareness-raising strategies, may help reduce ambiguity and reinforce the commitment to academic integrity. One possible measure in this regard could be oral presentations of the essay, in which students demonstrate that they have consciously developed their work and can reflect on the ideas and arguments it presents.

The high USE scores associated with using GAI for writing enhancement may facilitate a transition toward ethically more questionable practices. Therefore, it is necessary to promote a balanced pedagogical approach that leverages the advantages of GAI while preserving the development of fundamental academic skills. Instructors should encourage activities in which GAI use is limited to editing or refinement tasks, while simultaneously requiring students to demonstrate their own abilities in writing, argumentation, and critical thinking. In this way, GAI becomes a supportive tool rather than a substitute for the educational process. A complementary measure could involve developing software capable not only of detecting AI-generated text, but also of distinguishing between content that, although partially produced with GAI assistance, clearly reflects the author’s own contribution and that which has been entirely generated by the tool.

The findings also provide evidence to inform the design of emotional and motivational support strategies. Since GAI can help reduce writing-related anxiety and free up time for higher value-added tasks, its regulated use may enhance student well-being. Nevertheless, we believe that this benefit must be balanced with training programs that strengthen self-regulation and prevent technological dependency.

Finally, from a public policy perspective, the results underscore the importance of integrating the ethical dimension into the digitalization of higher education. In our view, the findings suggest that access to technologies such as GAI should be evaluated not only in terms of innovation or efficiency, but also in relation to distributive justice, academic integrity, and sustainability. This implies incorporating ethical criteria into institutional digital transformation plans as well as into national and international regulatory frameworks. A concrete measure could be the implementation of ethics-based digital literacy programs that train both students and faculty to use GAI responsibly and equitably.

6. Conclusions

6.1. Main Findings

This study has explored the acceptance of GAI in academic essay writing by higher education students, focusing on its limited use as an assistant for linguistic correction. By combining PLS-SEM and NCA, findings were obtained that advance both theoretical and practical understanding.

First, the results show that ethical theories provide a solid framework for understanding students’ willingness to use GAI in academic contexts. While consequentialism emerges as the main predictor of use—highlighting practical benefits and perceived efficiency—the other ethical constructs (moral equity, relativism, and deontology) are equally relevant for GAI use to be deemed acceptable. This finding confirms that GAI acceptance depends on perceived usefulness, as suggested by classical technology adoption models, but also requires acceptability of its use from diverse ethical perspectives.

6.2. Limitations and Further Research

Although this study provides relevant insights into the acceptance of GAI in higher education, several limitations must be acknowledged when interpreting the results. At the same time, these limitations open avenues for future research.

First, the research focused on a single use scenario: the linguistic correction of previously drafted essays. While this framework allows for a clearer isolation of the ethical analysis of GAI use, it excludes other widespread practices such as summary generation, bibliographic assistance, or even the creation of complete drafts. Future studies should broaden the range of scenarios to examine how ethical judgments vary depending on the degree of the tool’s involvement in the creative process.

The sample of the study presents limitations in terms of representativeness. With 151 students from two Spanish universities, the size and cultural homogeneity restrict the generalizability of the findings to the broader student population. Variables such as academic discipline, level of study, cultural background, or prior familiarity with digital tools may condition students’ willingness to use GAI. Nonetheless, from a methodological standpoint, the sample is sufficient for the combined use of PLS-SEM and NCA, as supported by statistical power analyses. Beyond this adequacy, the contribution of the study lies in offering empirical evidence situated within the field of social sciences in the Spanish cultural context. We believe that such context-specific research, while limited in scope, provides a valuable reference point for subsequent comparative research and meta-analyses across countries and disciplines. Accordingly, we view our work as an initial step that should be replicated and extended with larger, multicultural, and cross-institutional samples.

Certain variables that may influence GAI use, such as gender and prior experience with the technology, were partially controlled. In the first case, the correlation between gender and GAI use was not significant, while in the second, all participants acknowledged having used GAI for academic purposes at least once. However, the influence of gender may rest less on its direct effect on the outcome than on its potential moderating role over the explanatory variables, as observed in UTAUT [30].

Ultimately, gender might not determine the use of GAI per se but rather shape which ethical judgments become more relevant in explaining such use [10]. Similarly, GAI experience, if measured in degrees rather than as a binary variable, could also serve as an explanatory or moderating factor. These aspects undoubtedly warrant further research.

Moreover, the level of academic pressure has been considered an intrinsic circumstance of the student that shapes their deontological judgment regarding the appropriateness of using GAI, particularly when good grades are perceived as an implicit contract with their social environment. The use of academic stress scales, such as that in [99], to measure this pressure and assess its explanatory or moderating influence on ethical constructs represents a promising avenue for future research.

The study adopts a cross-sectional design, which limits the ability to capture the evolution of attitudes toward GAI over time. Given the rapid pace of technological advancement and the ongoing development of institutional and regulatory frameworks, students’ perceptions, ethical evaluations, and behavioral patterns may shift within relatively short periods. Longitudinal studies would therefore be valuable to trace how these attitudes and moral judgments consolidate, fluctuate, or even polarize as GAI becomes progressively embedded in academic practices. Such designs could also help distinguish between transient reactions to novelty and more stable patterns of ethical adaptation.

Although the linked use of PLS-SEM and NCA provides a novel and robust approach, the model does not incorporate additional contextual variables such as institutional regulatory pressure, disparities in access to paid versions, or perceived risks surrounding data privacy. Future research could enrich the model by integrating these dimensions, as well as by testing potential moderators such as gender, academic experience, or socioeconomic background.

This research focused exclusively on the perspective of students, leaving out other key stakeholders such as instructors, institutional leaders, and educational policy designers. Understanding how these groups perceive the legitimacy, benefits, and risks of GAI use in academia would provide a more holistic and interdisciplinary view of the phenomenon. Faculty members, for instance, may frame GAI through pedagogical and evaluative concerns, while institutional leaders might prioritize governance, integrity, and resource allocation issues. Likewise, policymakers could interpret GAI adoption within broader debates on educational equity, data ethics, and the digital divide. Incorporating these complementary viewpoints in future research would enrich both the theoretical understanding and the practical management of GAI integration in higher education.

The literature review reveals a scarcity of qualitative studies on GAI acceptance, and none framed from the perspective of the MES. Existing research has predominantly examined generalist uses of GAI [59] or has focused on students’ perspectives [2], using TAM and UTAUT to contextualize the advantages and disadvantages that respondents associate with the use of GAI. Other contributions analyze teachers’ perspectives [50] or students’ views [100] by systematizing perceptions in educational rubrics, but without considering their ethical dimension.

For example, drawbacks such as the existence of premium GAI services or the requirement of reliable connectivity [2] go beyond a reduction in perceived usefulness. They also raise ethical concerns from the standpoint of moral equity, since such conditions may generate inequalities among students. Similarly, qualitative findings in [100] include statements about the advantages of GAI in education such as “Harnessing AI for Enhanced Academic Performance” and “Improving Written Communication”. While these can be interpreted within TAM as indicators of greater productivity and ease of use, they also invite ethical considerations. These benefits can be analyzed from a consequentialist perspective. However, they also depend on having good connectivity, which may reinforce inequality among students. Moreover, the improvement of written communication through GAI does not necessarily entail an enhancement of students’ own ability to write independently [12]. This raises concerns not only from the perspective of moral equity, given the potential loss of student autonomy in text production, but also from a deontological perspective, insofar as both students and academic institutions are expected to safeguard comprehensive education, which necessarily includes the development of written communication skills.

The absence of a qualitative perspective that incorporates both students and teachers from an MES standpoint—not only in this paper but also in the broader literature—highlights a clear opportunity for future qualitative research to adopt the MES framework as a way to deepen and enrich the study of GAI acceptance in education.

Taken together, future research should move toward a broader and more dynamic approach that integrates diverse use scenarios, educational stakeholders, and sociocultural contexts. Such efforts will contribute to a more comprehensive understanding of how ethics, equity, and regulation shape the responsible adoption of generative artificial intelligence in higher education.

Author Contributions

Conceptualization, A.P.-P. and M.A.-O.; methodology, A.P.-P. and G.P.-C.; software, J.d.A.-S.; validation, M.A.-O.; formal analysis, A.P.-P.; investigation, A.P.-P. and M.A.-O.; resources, G.P.-C.; data curation, J.d.A.-S.; writing—original draft preparation, A.P.-P. and J.d.A.-S.; writing—review and editing, G.P.-C.; visualization, J.d.A.-S.; supervision, G.P.-C.; project administration, M.A.-O. and G.P.-C.; funding acquisition, J.d.A.-S. and M.A.-O. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by Telefonica and the Telefonica Chair on Smart Cities of the Universitat Rovira i Virgili and Universitat de Barcelona (project number: 42. DB.00.18.00).

Institutional Review Board Statement

(1) All participants received detailed written information about the study and procedure; (2) no data directly or indirectly related to the health of the subjects were collected, and therefore the Declaration of Helsinki was not mentioned when informing the subjects; (3) the anonymity of the collected data was ensured at all times; (4) the research received a favorable evaluation from the Ethics Committee of a researchers’ institution (CE_20250710_10_SOC).

Informed Consent Statement

Informed consent was obtained from all the subjects involved in the study.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors on request.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
| --- | --- |
| CONS | Consequentialism |
| DEONT | Deontology |
| GAI | Generative artificial intelligence |
| MEQU | Moral equity |
| MES | Multidimensional ethics scale |
| NCA | Necessary condition analysis |
| PLS-SEM | Partial least squares-structural equation modelling |
| RELA | Relativism |

References

- Madsen, D.Ø.; Toston, D.M. ChatGPT and Digital Transformation: A Narrative Review of Its Role in Health, Education, and the Economy. Digital 2025, 5, 24.

- Almassaad, A.; Alajlan, H.; Alebaikan, R. Student Perceptions of Generative Artificial Intelligence: Investigating Utilization, Benefits, and Challenges in Higher Education. Systems 2024, 12, 385.

- Naznin, K.; Al Mahmud, A.; Nguyen, M.T.; Chua, C. ChatGPT Integration in Higher Education for Personalized Learning, Academic Writing, and Coding Tasks: A Systematic Review. Computers 2025, 14, 53.

- Salem, M.A. Bridging or Burning? Digital Sustainability and PY Students’ Intentions to Adopt AI-NLP in Educational Contexts. Computers 2025, 14, 265.

- Schmidt, D.A.; Alboloushi, B.; Thomas, A.; Magalhaes, R. Integrating Artificial Intelligence in Higher Education: Perceptions, Challenges, and Strategies for Academic Innovation. Comput. Educ. Open 2025, 9, 100274.

- Shahzad, M.F.; Xu, S.; Liu, H.; Zahid, H. Generative Artificial Intelligence (ChatGPT-4) and Social Media Impact on Academic Performance and Psychological Well-Being in China’s Higher Education. Eur. J. Educ. 2025, 60, e12835.

- de Fine Licht, K. Generative artificial intelligence in higher education: Why the ‘banning approach’ to student use is sometimes morally justified. Philos. Technol. 2024, 37, 113.

- Dwivedi, Y.K.; Kshetri, N.; Hughes, L.; Slade, E.L.; Jeyaraj, A.; Kar, A.K.; Baabdullah, A.M.; Koohang, A.; Raghavan, V.; Ahuja, M.; et al. Opinion paper: “So what if ChatGPT wrote it?” Multidisciplinary perspectives on opportunities, challenges and implications of generative conversational AI for research, practice and policy. Int. J. Inf. Manag. 2023, 71, 102642.

- Gorrieri, L. Should I use ChatGPT as an academic aid? A response to Aylsworth and Castro. Philos. Technol. 2025, 38, 8.

- Pérez-Portabella, A.; Arias-Oliva, M.; Padilla-Castillo, G.; de Andrés-Sánchez, J. Passing with ChatGPT? Ethical Evaluations of Generative AI Use in Higher Education. Digital 2025, 5, 33.

- Parviz, M. AI in education: Comparative perspectives from STEM and Non-STEM instructors. Comput. Educ. Open 2024, 6, 100190.

- Aylsworth, T.; Castro, C. Should I use ChatGPT to write my papers? Philos. Technol. 2024, 37, 117.

- Escorcia, D.; Campo, K.; Navarro, G.; Ros, C. Assessing writing practices in higher education: Characterizing self-reported practices and identifying their determinants. Assess. Writ. 2025, 66, 100976.

- Callies, M.; Zaytseva, E.; Present-Thomas, R.L. Writing assessment in higher education: Making the framework work. Dutch J. Appl. Linguist. 2013, 2, 1–15.

- Dahl, B.M.; Vasset, F.; Frilund, M. Students’ approaches to scientific essay writing as an educational method in higher education: A mixed methods study. Soc. Sci. Humanit. Open 2023, 7, 100389.

- Kaplan, A. The Conduct of Inquiry: Methodology for Behavioral Science; Transaction Publishers: Piscataway, NJ, USA, 1964.

- Baumeister, R.F.; Bratslavsky, E.; Muraven, M.; Tice, D.M. Ego depletion: Is the active self a limited resource? J. Pers. Soc. Psychol. 1998, 74, 1252–1265.

- Reidenbach, R.E.; Robin, D.P. Toward the development of a multidimensional scale for improving evaluations of business ethics. J. Bus. Ethics 1990, 9, 639–653.

- Shawver, T.J.; Sennetti, J.T. Measuring ethical sensitivity and evaluation. J. Bus. Ethics 2009, 88, 663–678.

- Andrés-Sánchez, J.d.; Arias-Oliva, M.; Pelegrín-Borondo, J.; Almahameed, A.A.M. The influence of ethical judgements on acceptance and non-acceptance of wearables and insideables: Fuzzy set qualitative comparative analysis. Technol. Soc. 2021, 67, 101689.

- Pelegrín-Borondo, J.; Arias-Oliva, M.; Murata, K.; Souto-Romero, M. Does ethical judgment determine the decision to become a cyborg? J. Bus. Ethics 2020, 161, 5–17.

- Arli, D.; Tjiptono, F.; Porto, R. The impact of moral equity, relativism and attitude on individuals’ digital piracy behaviour in a developing country. Mark. Intell. Plan. 2015, 33, 348–365.

- Jung, I. Ethical judgments and behaviors: Applying a multidimensional ethics scale to measuring ICT ethics of college students. Comput. Educ. 2009, 53, 940–949.

- Leonard, L.N.; Riemenschneider, C.K.; Manly, T.S. Ethical behavioral intention in an academic setting: Models and predictors. J. Acad. Ethics 2017, 15, 141–166.

- Yang, S.C. Ethical academic judgments and behaviors: Applying a multidimensional ethics scale to measure the ethical academic behavior of graduate students. Ethics Behav. 2012, 22, 281–296.

- Dul, J. A different causal perspective with necessary condition analysis. J. Bus. Res. 2024, 177, 114618.

- Sarstedt, M.; Richter, N.F.; Hauff, S.; Ringle, C.M. Combined importance–performance map analysis (cIPMA) in partial least squares structural equation modeling (PLS–SEM): A SmartPLS 4 tutorial. J. Mark. Anal. 2024, 12, 746–760.

- Andrés-Sánchez, J.d. The role of ethical perceptions in the acceptance of innovative sport technologies for competition: Analysis of carbon plate running shoes. Cult. Cienc. Y Deporte 2025. Accepted for publication.

- Davis, F.D. Perceived Usefulness, Perceived Ease of Use, and User Acceptance of Information Technology. MIS Q. 1989, 13, 319–340.

- Venkatesh, V.; Morris, M.G.; Davis, G.B.; Davis, F.D. User Acceptance of Information Technology: Toward a Unified View. MIS Q. 2003, 27, 425–478.

- Ahmed, Z.; Shanto, S.S.; Rime, M.H.K.; Morol, M.K.; Fahad, N.; Hossen, M.J.; Abdullah-Al-Jubair, M. The Generative AI Landscape in Education: Mapping the Terrain of Opportunities, Challenges, and Student Perception. IEEE Access 2024, 12, 147023–147050.

- Prashar, A.; Gupta, P.; Dwivedi, Y.K. Plagiarism awareness efforts, students’ ethical judgment and behaviors: A longitudinal experiment study on ethical nuances of plagiarism in higher education. Stud. High. Educ. 2024, 49, 929–955.

- Elshaer, I.A.; Hasanein, A.M.; Sobaih, A.E.E. The Moderating Effects of Gender and Study Discipline in the Relationship between University Students’ Acceptance and Use of ChatGPT. Eur. J. Investig. Health Psychol. Educ. 2024, 14, 1981–1995.

- Strzelecki, A.; ElArabawy, S. Investigation of the moderation effect of gender and study level on the acceptance and use of generative AI by higher education students: Comparative evidence from Poland and Egypt. Br. J. Educ. Technol. 2024, 55, 1209–1230.

- Mustofa, R.H.; Kuncoro, T.G.; Atmono, D.; Hermawan, H.D.; Sukirman. Extending the technology acceptance model: The role of subjective norms, ethics, and trust in AI tool adoption among students. Comput. Educ. Artif. Intell. 2025, 8, 100379.

- Venkatesh, V.; Bala, H. Technology Acceptance Model 3 and a Research Agenda on Interventions. Decis. Sci. 2008, 39, 273–315.

- Venkatesh, V.; Thong, J.Y.L.; Xu, X. Consumer Acceptance and Use of Information Technology: Extending the Unified Theory of Acceptance and Use of Technology. MIS Q. 2012, 36, 157–178.

- Dul, J. Necessary condition analysis (NCA): Logic and methodology of “necessary but not sufficient” causality. Organ. Res. Methods 2016, 19, 10–52.

- Leonard, L.N.; Jones, K. Ethical awareness of seller’s behavior in consumer-to-consumer electronic commerce: Applying the multidimensional ethics scale. J. Internet Commer. 2017, 16, 202–218.

- Killen, M. The origins of morality: Social equality, fairness, and justice. Philos. Psychol. 2018, 31, 767–803.

- Nussbaum, M.; Sen, A. The Quality of Life; Clarendon Press: Oxford, UK, 1993.

- Kasneci, E.; Sessler, K.; Küchemann, S.; Bannert, M.; Dementieva, D.; Fischer, F.; Gasser, U.; Groh, G.; Günnemann, S.; Hüllermeier, E.; et al. ChatGPT for good? On opportunities and challenges of large language models for education. Learn. Individ. Differ. 2023, 103, 102274.

- Toh, Y.; Looi, C.K. Transcending the dualities in digital education: A case study of Singapore. Front. Digit. Educ. 2024, 1, 121–131.

- Biloš, A.; Budimir, B. Understanding the Adoption Dynamics of ChatGPT among Generation Z: Insights from a Modified UTAUT2 Model. J. Theor. Appl. Electron. Commer. Res. 2024, 19, 863–879.

- Pelegrín-Borondo, J.P.; Pascual, C.O.; Pascual, L.B.; Milon, A.G. Impact of ethical judgment on university professors encouraging students to use AI in academic tasks. In The Leading Role of Smart Ethics in the Digital World; Universidad de La Rioja: Logroño, Spain, 2024; pp. 53–61.

- Lai, C.Y.; Cheung, K.Y.; Chan, C.S.; Law, K.K. Integrating the adapted UTAUT model with moral obligation, trust and perceived risk to predict ChatGPT adoption for assessment support: A survey with students. Comput. Educ. Artif. Intell. 2024, 6, 100246.

- Baghramian, M.; Carter, J.A. Relativism. In The Stanford Encyclopedia of Philosophy; Spring 2025 Edition; Zalta, E.N., Nodelman, U., Eds.; The Metaphysics Research Lab, Department of Philosophy, Stanford University: Stanford, CA, USA, 2025; Available online: https://plato.stanford.edu/entries/relativism/ (accessed on 21 July 2025).

- Andersen, S.; Hjortskov, M. The unnoticed influence of peers on educational preferences. Behav. Public Policy 2019, 6, 530–553.

- Strzelecki, A. To use or not to use ChatGPT in higher education? A study of students’ acceptance and use of technology. Interact. Learn. Environ. 2024, 32, 5142–5155.

- Tlais, S.; Alkhatib, A.; Hamdan, R.; HajjHussein, H.; Hallal, K.; Malti, W.E. Artificial intelligence in higher education: Early perspectives from Lebanese STEM faculty. TechTrends 2025, 69, 598–606.

- Vanderbauwhede, W. Estimating the increase in emissions caused by AI-augmented search. arXiv 2024, arXiv:2407.16894.

- Chung, J.; Kwon, H. Privacy fatigue and its effects on ChatGPT acceptance among undergraduate students: Is privacy dead? Educ. Inf. Technol. 2025, 30, 12321–12343.

- Alshammari, S.H.; Alshammari, M.H. Factors Affecting the Adoption and Use of ChatGPT in Higher Education. Int. J. Inf. Commun. Technol. Educ. (IJICTE) 2024, 20, 1–16.

- Grassini, S.; Aasen, M.L.; Møgelvang, A. Understanding University Students’ Acceptance of ChatGPT: Insights from the UTAUT2 Model. Appl. Artif. Intell. 2024, 38, 2371168.

- Cambra-Fierro, J.J.; Blasco, M.F.; López-Pérez, M.-E.E.; Trifu, A. ChatGPT adoption and its influence on faculty well-being: An empirical research in higher education. Educ. Inf. Technol. 2025, 30, 1517–1538.

- Sinnott-Armstrong, W. Consequentialism. In The Stanford Encyclopedia of Philosophy; Zalta, E.N., Ed.; The Metaphysics Research Lab, Department of Philosophy, Stanford University: Stanford, CA, USA, 2023; Available online: https://plato.stanford.edu/entries/consequentialism/ (accessed on 21 July 2025).

- Deigh, J. Egoism. In An Introduction to Ethics; Cambridge University Press: Cambridge, UK, 2010; pp. 25–55.

- Rae, S. Moral Choices: An Introduction to Ethics; Zondervan Academic: Grand Rapids, MI, USA, 2018.

- Menon, D.; Shilpa, K. “Chatting with ChatGPT”: Analyzing the factors influencing users’ intention to use the Open AI’s ChatGPT using the UTAUT model. Heliyon 2023, 9, e20962.

- Cebrián Cifuentes, S.; Guerrero Valverde, E.; Checa Caballero, S. The Vision of University Students from the Educational Field in the Integration of ChatGPT. Digital 2024, 4, 648–659.

- Bücker, S.; Nuraydin, S.; Simonsmeier, B.A.; Schneider, M.; Luhmann, M. Subjective well-being and academic achievement: A meta-analysis. J. Res. Pers. 2018, 74, 83–94.

- Martinez, C.T.; Kock, N.; Cass, J. Pain and Pleasure in Short Essay Writing: Factors Predicting University Students’ Writing Anxiety and Writing Self-Efficacy. J. Adolesc. Adult Lit. 2011, 54, 351–360.

- Slimmen, S.; Timmermans, O.; Mikolajczak-Degrauwe, K.; Oenema, A. How stress-related factors affect mental wellbeing of university students: A cross-sectional study to explore the associations between stressors, perceived stress, and mental wellbeing. PLoS ONE 2022, 17, e0275925.

- Shaver, R. Egoism. In The Stanford Encyclopedia of Philosophy; Spring 2023 Edition; Zalta, E.N., Nodelman, U., Eds.; The Metaphysics Research Lab, Department of Philosophy, Stanford University: Stanford, CA, USA, 2023; Available online: https://plato.stanford.edu/archives/spr2023/entries/egoism/ (accessed on 21 July 2025).

- Culp, J. Ethics of education. In Encyclopedia of the Philosophy of Law and Social Philosophy; Springer: Dordrecht, The Netherlands, 2023; pp. 1–7.

- Alier, M.; García-Peñalvo, F.; Camba, J.D. Generative artificial intelligence in education: From deceptive to disruptive. Int. J. Interact. Multimed. Artif. Intell. 2024, 8, 5–14.

- Meyer, J.G.; Urbanowicz, R.J.; Martin, P.C.; O’Connor, K.; Li, R.; Peng, P.C.; Bright, T.J.; Tatonetti, N.; Won, K.J.; Gonzalez-Hernandez, G.; et al. ChatGPT and large language models in academia: Opportunities and challenges. BioData Min. 2023, 16, 20.

- Zhou, J.; Müller, H.; Holzinger, A.; Chen, F. Ethical ChatGPT: Concerns, challenges, and commandments. Electronics 2024, 13, 3417.

- Venkatesh, V. Adoption and Use of AI Tools: A Research Agenda Grounded in UTAUT. Ann. Oper. Res. 2022, 308, 641–652.

- Yildiz Durak, H.; Onan, A. Predicting the use of chatbot systems in education: A comparative approach using PLS-SEM and machine learning algorithms. Curr. Psychol. 2024, 43, 23656–23674.

- Sobaih, A.E.E.; Elshaer, I.A.; Hasanein, A.M. Examining students’ acceptance and use of ChatGPT in Saudi Arabian higher education. Eur. J. Investig. Health Psychol. Educ. 2024, 14, 709–721.

- Panggabean, E.-M.; Silalahi, A.D.K. How Do ChatGPT’s Benefit–Risk-coping paradoxes impact higher education in Taiwan and Indonesia? Comput. Educ. Artif. Intell. 2025, 8, 100412.

- Abdaljaleel, M.; Barakat, M.; Alsanafi, M.; Salim, N.A.; Abazid, H.; Malaeb, D.; Mohammed, A.H.; Hassan, B.A.R.; Wayyes, A.M.; Farhan, S.S.; et al. A multinational study on the factors influencing university students’ attitudes and usage of ChatGPT. Sci. Rep. 2024, 14, 1983.

- Alexander, L.; Moore, M. Deontological ethics. In The Stanford Encyclopedia of Philosophy; Winter 2024 Edition; Zalta, E.N., Nodelman, U., Eds.; The Metaphysics Research Lab, Department of Philosophy, Stanford University: Stanford, CA, USA, 2024; Available online: https://plato.stanford.edu/entries/ethics-deontological/ (accessed on 21 July 2025).