4.1. Implementation Details

We evaluate the proposed Soft Knowledge Distillation (SKD) strategy on eight datasets covering three image restoration tasks: deraining, deblurring, and dehazing. These tasks are critical for real-time traffic applications, where image quality must be restored under challenging environmental conditions.

For deraining, we use three datasets: the synthetic Rain1400 [52] and Test1200 [53], and the real-world SPA [29]. For deblurring, we use the synthetic GoPro [54] and HIDE [55], and the real-world BLUR-J [56]. Finally, for dehazing, we adopt the synthetic OTS subset and the real-world RTTS subset of the RESIDE dataset [57].

To quantitatively assess restoration quality, we use both full-reference and no-reference evaluation metrics. For the synthetic datasets, we employ the Peak Signal-to-Noise Ratio (PSNR) [58] (in dB) and the Structural Similarity Index (SSIM) [59], which measure pixel-level fidelity and structural similarity, respectively. For the real-world datasets, where ground-truth references are unavailable, we use two no-reference metrics: the Blind/Referenceless Image Spatial Quality Evaluator (BRISQUE) [60] and the Perception-based Image Quality Evaluator (PIQE) [61], which assess image quality based on perceptual and spatial features. For both BRISQUE and PIQE, lower scores indicate better perceived quality.
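For reference, PSNR is defined as 10 · log10(MAX² / MSE), where MAX is the largest possible pixel value and MSE is the mean squared error against the ground truth. The minimal sketch below uses flattened pixel lists. It assumes PSNR is computed over all values passed in. Many restoration papers instead compute it on the Y channel, and this section does not say which convention was used.

```python
import math
from typing import Sequence


def psnr(restored: Sequence[float], reference: Sequence[float], max_val: float = 1.0) -> float:
    """PSNR in dB between two equally sized, flattened images.

    max_val is the largest possible pixel value (1.0 for [0, 1] images, 255 for 8-bit).
    """
    if len(restored) != len(reference) or len(restored) == 0:
        raise ValueError("images must be non-empty and have the same number of pixels")
    # Mean squared error over every pixel (and channel, if flattened together).
    mse = sum((r - t) ** 2 for r, t in zip(restored, reference)) / len(restored)
    if mse == 0:
        return math.inf  # identical images: PSNR is unbounded
    return 10.0 * math.log10(max_val ** 2 / mse)


# Two pixels, each off by 0.1 in [0, 1]: MSE = 0.01, so PSNR = 10 * log10(100) = 20 dB.
print(psnr([0.5, 0.5], [0.6, 0.4]))
```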

In addition to image quality, we evaluate model complexity in terms of floating-point operations (FLOPs) and per-image inference time. These metrics indicate whether the SKD strategy is feasible in resource-constrained environments such as autonomous vehicles, where computational efficiency is paramount. In all tables, the best results are highlighted in bold and the second-best results are underlined.
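The timing protocol (hardware, warm-up, batch size) is not stated here. The following is therefore only a minimal sketch of one common way to measure per-image inference time. It assumes a callable model, batch size 1, and a few untimed warm-up runs. In PyTorch, one would typically run the model under `torch.no_grad()` and pass `torch.cuda.synchronize` as `sync` on a GPU, so that queued kernels finish before the clock is read.

```python
import statistics
import time
from typing import Callable, Sequence, TypeVar

T = TypeVar("T")


def mean_inference_time(
    model: Callable[[T], object],
    images: Sequence[T],
    warmup: int = 3,
    sync: Callable[[], None] = lambda: None,
) -> float:
    """Average wall-clock seconds per image (batch size 1 is assumed)."""
    if len(images) == 0:
        raise ValueError("need at least one image")
    # Untimed warm-up runs absorb one-off costs such as lazy initialization.
    for img in images[:warmup]:
        model(img)
    sync()
    timings = []
    for img in images:
        start = time.perf_counter()
        model(img)
        sync()  # e.g. torch.cuda.synchronize on GPU; a no-op on CPU
        timings.append(time.perf_counter() - start)
    return statistics.mean(timings)
```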

The entire SKD framework is implemented in PyTorch 1.10. We use the Adam optimizer and set the temperature parameter to 1 × 10⁻⁶. The SKD loss function uses three trade-off weights, whose selection is analyzed in Section 4.5. The student models are trained for 100 epochs with a batch size of 8. The learning rate starts at 2 × 10⁻⁴ and is gradually reduced to 1 × 10⁻⁶ by cosine annealing [62] to ensure stable convergence. During training, all input images are randomly cropped into patches, and pixel values are normalized to the range [−1, 1]. Random cropping acts as data augmentation, which improves generalization and reduces the risk of overfitting. This is especially important when training on datasets as diverse and complex as those used in this study.
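The schedule and preprocessing above can be sketched as follows. This is a hedged sketch under stated assumptions: the learning rate is updated once per epoch, images are single-channel 8-bit grids for brevity, and the patch size (not recoverable from this section) is passed in as an argument. In PyTorch, `torch.optim.lr_scheduler.CosineAnnealingLR(optimizer, T_max=100, eta_min=1e-6)` yields the same closed-form curve when stepped once per epoch.

```python
import math
import random
from typing import List

Image = List[List[float]]  # H x W grid of 8-bit intensities (single channel for brevity)


def cosine_lr(epoch: int, total_epochs: int = 100, lr_max: float = 2e-4, lr_min: float = 1e-6) -> float:
    """Cosine annealing: lr_max at epoch 0, decaying smoothly to lr_min at total_epochs."""
    progress = min(epoch, total_epochs) / total_epochs
    return lr_min + 0.5 * (lr_max - lr_min) * (1.0 + math.cos(math.pi * progress))


def random_crop_normalized(img: Image, patch: int, rng: random.Random) -> Image:
    """Crop a patch x patch window at a random offset and map [0, 255] to [-1, 1]."""
    h, w = len(img), len(img[0])
    if patch > h or patch > w:
        raise ValueError("patch is larger than the image")
    top = rng.randint(0, h - patch)  # randint includes both endpoints
    left = rng.randint(0, w - patch)
    return [[v / 127.5 - 1.0 for v in row[left:left + patch]] for row in img[top:top + patch]]


rng = random.Random(0)
patch = random_crop_normalized([[0.0, 255.0, 64.0], [255.0, 0.0, 32.0]], patch=2, rng=rng)
print(cosine_lr(0), cosine_lr(50), cosine_lr(100), patch)
```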

For the teacher networks, we select three state-of-the-art transformer-based image restoration models: Restormer [2], Uformer [3], and DRSformer [4]. These models were chosen for their ability to capture long-range dependencies and complex features during restoration. Their architectures differ in depth and feature dimension:

Restormer: 4, 6, 6, 8 layers per encoder–decoder level, with a feature dimension of 48.

Uformer: 1, 2, 8, 8 layers, with a feature dimension of 32.

DRSformer: 4, 4, 6, 6, 8 layers, with a feature dimension of 48.

The corresponding student models, Res-SKD, Ufor-SKD, and DRS-SKD, reduce these hyper-parameters to more resource-efficient configurations:

Res-SKD: 1, 2, 2, 4 layers, with a feature dimension of 32.

Ufor-SKD: 1, 2, 4, 4 layers, with a feature dimension of 16.

DRS-SKD: 2, 2, 2, 2, 4 layers, with a feature dimension of 32.

To ensure reproducibility, we note that each student model keeps the same fundamental architectural blueprint as its teacher (e.g., the same type and sequence of transformer blocks); compression is achieved solely by reducing the number of layers at each level and the dimensionality of the feature channels. This compression reduces FLOPs and parameters by an average of 85.4% and 85.8%, respectively. Despite the reduced complexity, the student models retain much of the performance of their teachers, as demonstrated in the following experimental results. Although our experiments use transformer-based models to construct a strong benchmark, the proposed SKD framework is not bound to any specific network family: the distillation mechanism operates on feature maps and output images, so it is readily applicable to other architectures such as CNNs. Exploring its efficacy with CNN-based teachers is a valuable direction for future work.
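To make the teacher and student configurations above concrete, the sketch below records them as data and computes a deliberately crude FLOPs proxy. The proxy assumes every block costs roughly the same amount, scaling with the feature dimension squared. It ignores stems, heads, refinement stages, and attention details. The proxy and its name are illustrative assumptions, not the profiler used for Table 7.

```python
from dataclasses import dataclass
from typing import Dict, Tuple


@dataclass(frozen=True)
class NetConfig:
    blocks: Tuple[int, ...]  # transformer blocks per encoder-decoder level, as listed above
    dim: int                 # base feature dimension


CONFIGS: Dict[str, NetConfig] = {
    "Restormer": NetConfig((4, 6, 6, 8), 48),
    "Res-SKD": NetConfig((1, 2, 2, 4), 32),
    "Uformer": NetConfig((1, 2, 8, 8), 32),
    "Ufor-SKD": NetConfig((1, 2, 4, 4), 16),
    "DRSformer": NetConfig((4, 4, 6, 6, 8), 48),
    "DRS-SKD": NetConfig((2, 2, 2, 2, 4), 32),
}


def flops_proxy(cfg: NetConfig) -> int:
    """Hypothetical proxy: total block count times dim**2.

    ASSUMPTION: projection layers dominate, and halving resolution while doubling
    channels at each level keeps per-block cost roughly constant across levels.
    """
    return sum(cfg.blocks) * cfg.dim ** 2


PAIRS = [("Restormer", "Res-SKD"), ("Uformer", "Ufor-SKD"), ("DRSformer", "DRS-SKD")]
for teacher, student in PAIRS:
    saving = 1 - flops_proxy(CONFIGS[student]) / flops_proxy(CONFIGS[teacher])
    print(f"{student}: proxy FLOPs reduction vs. {teacher} = {saving:.1%}")
```

Under this assumption, the proxy gives reductions of roughly 81% to 86% per teacher-student pair. This is the same order as the reported 85.4% average, but the proxy is no substitute for measuring FLOPs on the actual networks.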

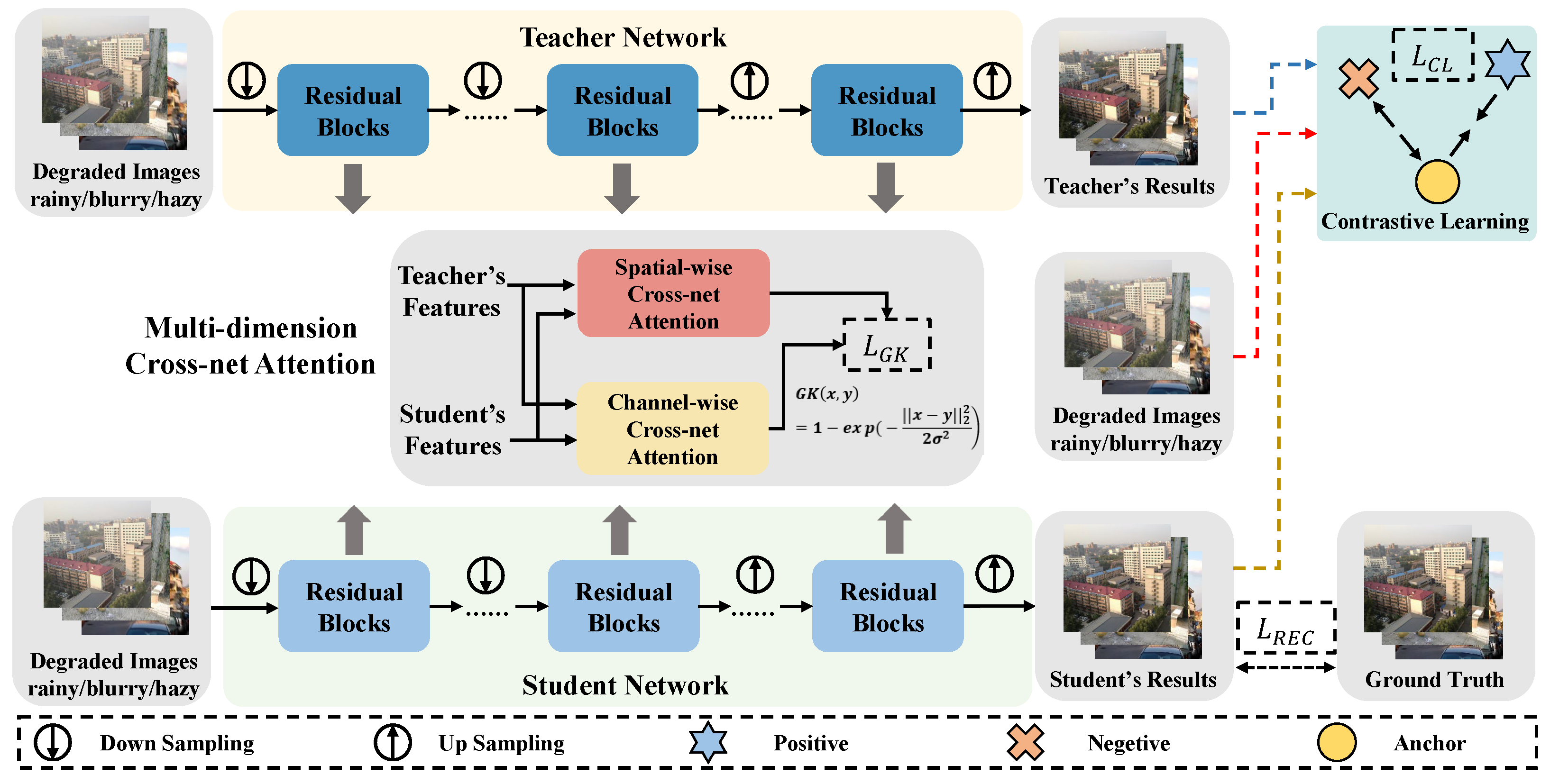

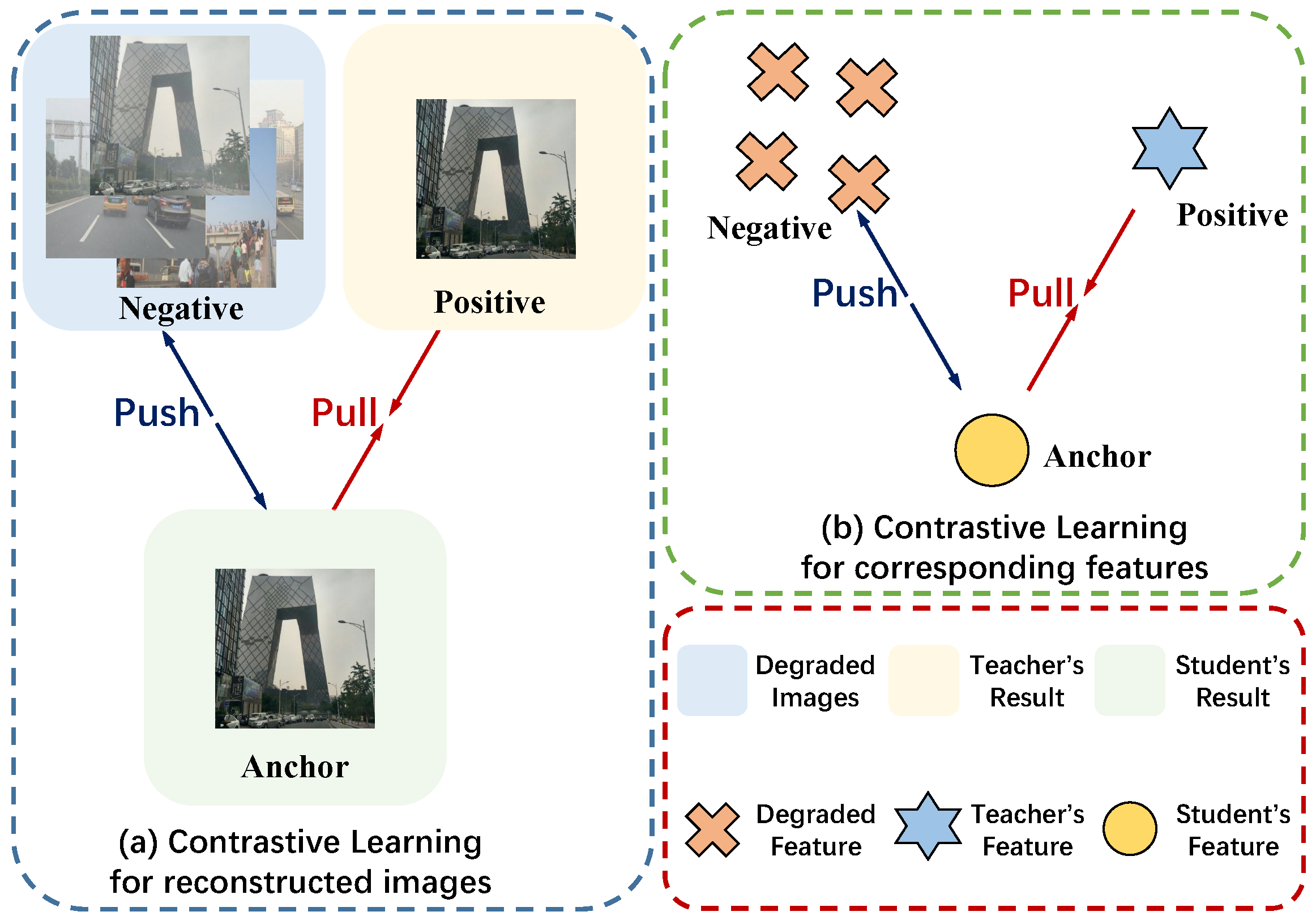

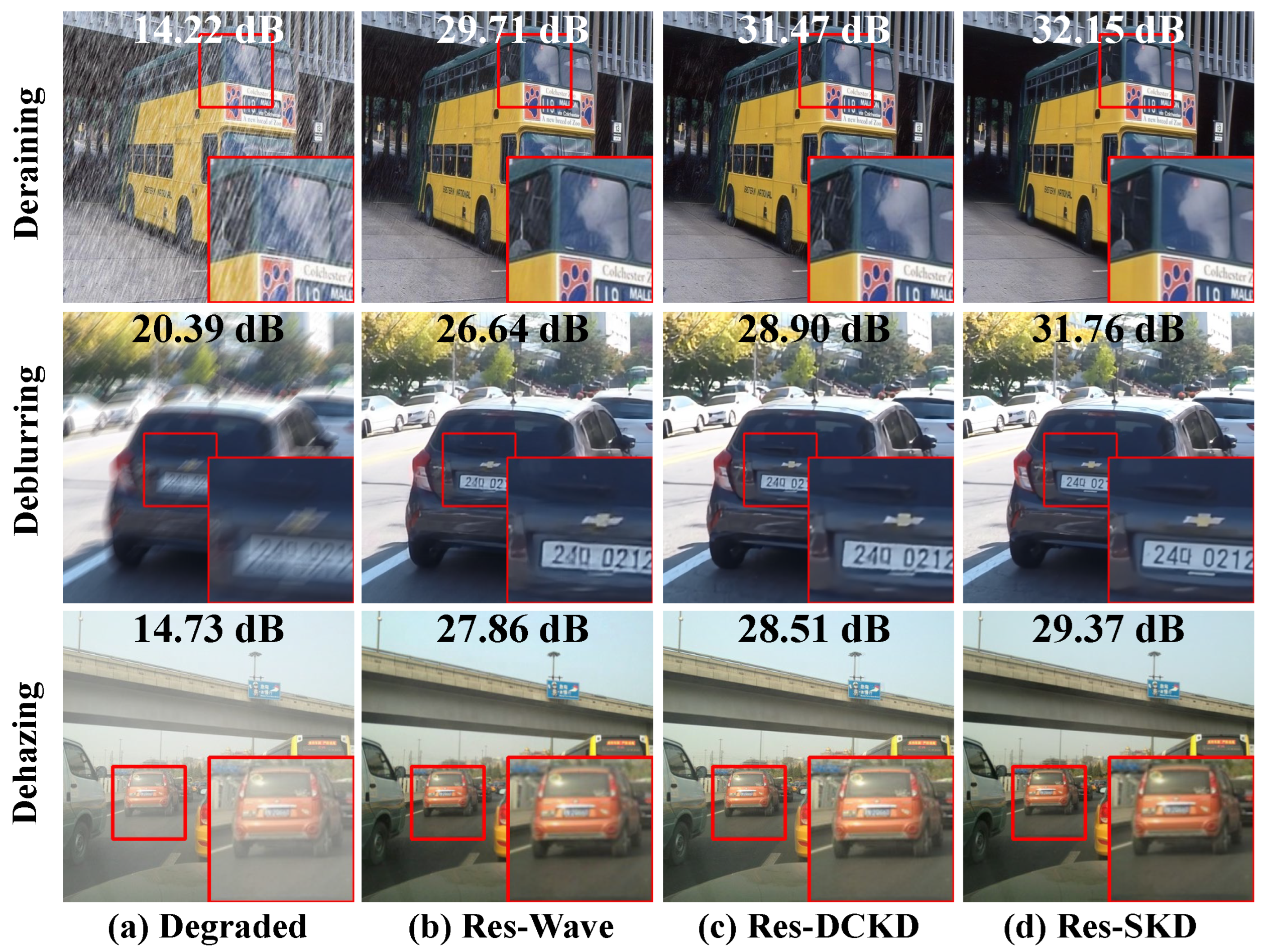

4.2. Comparisons with State-of-the-Art Methods

Comparison with knowledge distillation methods. We first compare our Soft Knowledge Distillation (SKD) strategy with two state-of-the-art (SOTA) image-to-image transfer knowledge distillation methods: Wave [16] and DCKD [13]. Both methods are popular in image restoration, particularly for distilling complex teacher models into simpler, more efficient student models. Qualitative and quantitative comparisons are presented in Figure 6 and Table 1.

For deraining, results are averaged over the Rain1400 [52] and Test1200 [53] datasets; for deblurring, they are averaged over the GoPro [54] and HIDE [55] datasets. For dehazing, we evaluate on the SOTS subset of the RESIDE dataset [57]. Our SKD-based model shows a clear advantage over the other two SOTA methods in both visual quality and full-reference metrics (PSNR and SSIM). In particular, images restored with SKD exhibit fewer artifacts, sharper edges, and more realistic details in both synthetic and real-world degradation scenarios.

Comparison with image restoration methods. In addition to knowledge distillation methods, we benchmark our SKD strategy against seven image restoration models: two for deraining (PReNet [63] and RCDNet [64]), two for deblurring (DMPHN [65] and MT-RNN [66]), two for dehazing (MSBDN [28] and PSD [27]), and one for general-purpose restoration (MPRNet [34]).

As shown in Figure 7 and Table 2, our SKD-based student models require substantially less computation than the baselines while maintaining competitive image quality. Despite being more lightweight, they restore images with a level of detail and clarity similar to that of much more complex models such as MPRNet, which is known for robust performance across various restoration tasks. This demonstrates that SKD can achieve high-quality image restoration with reduced resource consumption, making it particularly suitable for deployment in resource-constrained environments.

Comparison on real degraded images. In real-world applications, a model must perform well not only on synthetically degraded images but also on images captured under real conditions. We therefore extend our evaluation to real degraded images, as shown in Figure 8 and Table 3.

Although the models are trained primarily on synthetic datasets, our SKD-based Res-SKD student model handles multiple degradations in real-world images well. Figure 8 shows that Res-SKD effectively mitigates rain, blur, and haze artifacts, producing visually pleasing restorations that retain important fine-grained details. Furthermore, the no-reference metrics in Table 3 (BRISQUE and PIQE) confirm that the SKD-based student models achieve satisfactory image quality, supporting their applicability in practical scenarios where ground-truth references are unavailable.

Our findings highlight the versatility and robustness of the proposed SKD strategy, which excels not only on synthetic data but also when applied to real-world challenges. This is a key strength for the deployment of image restoration models in real-time autonomous vehicle applications and other resource-limited environments.

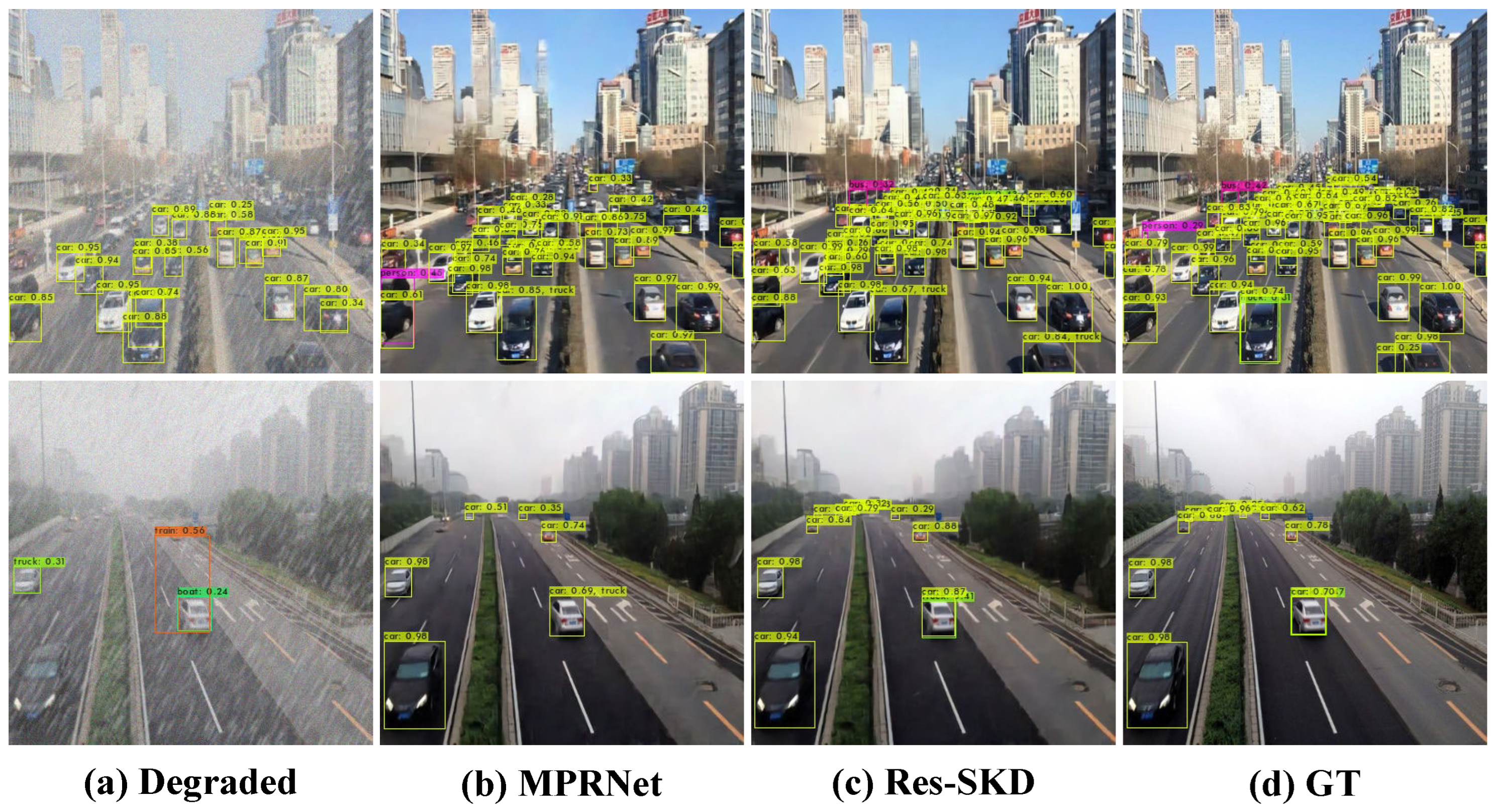

4.3. Object Detection Results

Object detection is a critical high-level computer vision task for traffic systems. However, its accuracy depends heavily on the quality of the captured images. In complex outdoor environments, various factors, including adverse weather, significantly degrade image quality and thereby reduce object detection performance.

To evaluate the impact of image restoration on object detection, we use the lightweight object detector YOLOv4 [67] as the downstream model and assess performance with the mean Average Precision (mAP) metric. We test on the synthetic RMTD-test dataset [68] and the real-world RMTD-real dataset [68], both of which contain images with multiple coexisting degradation factors.
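The mAP protocol (IoU threshold, interpolation scheme) is not specified in this section. As a minimal sketch, assume detections have already been matched to ground-truth boxes at some IoU threshold. Per-class average precision can then be computed as the area under the monotone precision-recall envelope (all-point interpolation, in the style of PASCAL VOC 2010 and later), and mAP as its unweighted mean over classes. This is not necessarily the exact evaluator used for Table 4.

```python
from typing import Dict, List, Tuple

Detection = Tuple[float, bool]  # (confidence score, is_true_positive after IoU matching)


def average_precision(dets: List[Detection], num_gt: int) -> float:
    """All-point interpolated AP for one class."""
    if num_gt <= 0:
        raise ValueError("class has no ground-truth objects")
    ranked = sorted(dets, key=lambda d: d[0], reverse=True)  # most confident first
    recalls, precisions = [], []
    tp = fp = 0
    for _, is_tp in ranked:
        tp += int(is_tp)
        fp += int(not is_tp)
        recalls.append(tp / num_gt)
        precisions.append(tp / (tp + fp))
    # Precision envelope: make it non-increasing when read from right to left.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    # Sum precision times the recall increment at each ranked detection.
    ap, prev_recall = 0.0, 0.0
    for r, p in zip(recalls, precisions):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


def mean_average_precision(per_class: Dict[str, Tuple[List[Detection], int]]) -> float:
    """mAP as the unweighted mean of per-class AP values."""
    aps = [average_precision(dets, n) for dets, n in per_class.values()]
    return sum(aps) / len(aps)
```

Detections on degraded images typically miss objects or rank false positives highly. Both effects lower the precision-recall envelope, which is why restoration before detection can raise mAP.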

The visualizations in Figure 9 show the benefit of restoring images before detection. Detection on images restored by our SKD-based Res-SKD model achieves substantially higher recall and precision than detection on the degraded input images. Moreover, detection on Res-SKD-restored images outperforms detection on images restored by MPRNet [34] and approaches the accuracy achieved on high-quality images.

Quantitative results in Table 4 corroborate these observations, showing a significant improvement in mAP for images restored by Res-SKD. These results highlight the practical utility of our SKD strategy for downstream tasks such as object detection, particularly in autonomous vehicle applications where degraded image quality is a common challenge.

4.4. Ablation Studies

Ablation studies were conducted on the GoPro [54] dataset for the deblurring task to validate the effectiveness of our proposed distillation strategy and to analyze the contribution of its individual components. A critical baseline is obtained by training the student architecture solely with the reconstruction loss, without any knowledge distillation; this isolates the impact of our SKD framework. The results, summarized in Table 5 and Figure 10, reveal the following:

Student with the reconstruction loss only: This setup reveals the native capacity of the compact student architecture. Its markedly lower performance compared with all subsequent configurations, which use the teacher network, shows that the gains of the distilled students stem from the proposed distillation method rather than from the compact architecture itself.

Channel-wise attention: Adding this mechanism improves the student model’s learning capacity, yielding a 0.41 dB gain in PSNR.

Spatial-wise attention: This mechanism contributes an additional 0.51 dB gain in PSNR.

Full Multi-Dimensional Cross-Net Attention (MCA): When both channel-wise and spatial-wise attention mechanisms are combined, the model achieves a 0.79 dB increase in PSNR and a 0.009 improvement in SSIM over the baseline model.

Contrastive learning loss: This loss further enhances the model's performance, adding a 0.25 dB gain in PSNR and a 0.004 improvement in SSIM.

The qualitative results in Figure 10 further validate these findings, showing that the MCA mechanism and contrastive learning noticeably improve the visual quality of restored images. Together, these results demonstrate the effectiveness of the proposed components in improving the distilled model's performance.

To validate the choice of the Gaussian kernel for feature-level distillation, we conducted a controlled experiment comparing different similarity measures while keeping all other components of our SKD framework identical. As shown in Table 6, the Gaussian kernel achieves the best performance, outperforming Euclidean distance and cosine similarity by 0.32 dB and 0.26 dB in PSNR, respectively.

The superior performance can be attributed to the Gaussian kernel's unique properties: (1) Unlike Euclidean distance, which is sensitive to absolute feature magnitudes and can cause gradient instability, the Gaussian kernel operates in a bounded similarity space (values in (0, 1]) that is more robust to feature-scale variations. (2) Compared to cosine similarity, which only considers angular alignment and ignores feature magnitude information, the Gaussian kernel incorporates both directional and magnitude relationships through the Euclidean distance in its exponent. (3) The exponential decay characteristic of the Gaussian kernel provides a soft-thresholding effect, focusing the distillation on semantically meaningful feature relationships while being tolerant to minor variations.

Empirically, we also observed that training with the Gaussian kernel exhibited smoother convergence and lower loss variance, confirming its stabilization effect on the distillation process.
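The three similarity measures compared in Table 6 can be contrasted on toy feature vectors. This is a minimal sketch. The bandwidth `sigma` is an assumed free parameter, and the exact feature-level distillation loss built on the kernel is defined in the method section, not here. The example illustrates properties (1) to (3): cosine similarity is blind to a pure scale difference, the Gaussian kernel detects it while staying bounded, and the squared Euclidean distance grows without bound as features are scaled up.

```python
import math
from typing import Sequence

Vector = Sequence[float]


def euclidean_sq(x: Vector, y: Vector) -> float:
    """Squared Euclidean distance: unbounded and grows with feature magnitude."""
    return sum((a - b) ** 2 for a, b in zip(x, y))


def cosine_similarity(x: Vector, y: Vector) -> float:
    """Angle-only similarity in [-1, 1]; ignores vector length."""
    norm = math.sqrt(sum(a * a for a in x)) * math.sqrt(sum(b * b for b in y))
    if norm == 0:
        raise ValueError("cosine similarity is undefined for zero vectors")
    return sum(a * b for a, b in zip(x, y)) / norm


def gaussian_kernel(x: Vector, y: Vector, sigma: float = 1.0) -> float:
    """exp(-||x - y||^2 / (2 sigma^2)): 1 for identical inputs, decaying smoothly toward 0.

    Built on the Euclidean distance, so it reflects both direction and magnitude;
    the exponential saturates for far-apart features (a soft-thresholding effect).
    """
    return math.exp(-euclidean_sq(x, y) / (2.0 * sigma ** 2))


f_teacher = [1.0, 2.0]
f_student = [2.0, 4.0]  # same direction, twice the magnitude
print(cosine_similarity(f_teacher, f_student))  # ≈ 1: cosine misses the scale gap
print(gaussian_kernel(f_teacher, f_student))     # < 1: the kernel registers it
print(euclidean_sq([10 * v for v in f_teacher], [10 * v for v in f_student]))  # scales as factor**2
```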

4.5. Model Complexity and Hyper-Parameter Analysis

We evaluate computational complexity by comparing the FLOPs and inference time of our distilled student models with those of the teacher models and other SOTA image restoration models. The results are shown in Table 7.

Our experiments show that the student models distilled with the SKD strategy require substantially less computation than their teachers, with average reductions of 85.4% in FLOPs and 85.8% in parameters. Moreover, our SKD-based model outperforms other SOTA models, including the relatively lightweight MPRNet [34], in both computational efficiency and restoration quality. This confirms that SKD achieves high-performance image restoration at significantly reduced complexity, making it suitable for deployment on resource-constrained platforms such as autonomous vehicles.

Additionally, we analyze the key hyper-parameters of the SKD framework. Table 8 reports experimental settings (a) to (e), which evaluate the impact of the three trade-off weights used in the loss function; comparing these settings directly illustrates how each weight was selected. We compare the following settings:

The first weight is determined by comparing setting (a) with setting (e) while keeping the other two weights fixed at 0.2. The superior performance of (e) shows that a higher value of this weight strikes the best balance, providing more effective guidance from the teacher model.

The other two weights are analyzed by comparing settings (b), (c), (d), and (e). Their optimal values, reported in Table 8, ensure that the model gives appropriate weight to both the MCA mechanism and the contrastive learning loss.

These experiments highlight the importance of hyper-parameter tuning in optimizing the performance of the SKD strategy, ensuring that it achieves the best trade-off between image restoration quality, model complexity, and computational efficiency.
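To show how the trade-off weights enter the objective and how a one-at-a-time comparison like (a) versus (e) is organized, here is a minimal sketch. It assumes the SKD objective is the reconstruction loss plus a weighted sum of three auxiliary terms. The term names (`term_1`, `term_2`, `term_3`) and both helper functions are hypothetical, and the exact objective is defined in the method section.

```python
from typing import Dict, List, Mapping, Sequence


def skd_total_loss(losses: Mapping[str, float], weights: Mapping[str, float]) -> float:
    """Hypothetical objective: unit-weight reconstruction loss plus weighted auxiliary terms."""
    total = losses["rec"]
    for name, w in weights.items():
        total += w * losses[name]  # each trade-off weight scales one auxiliary term
    return total


def one_at_a_time_grid(
    names: Sequence[str], varied: str, candidates: Sequence[float], fixed: float = 0.2
) -> List[Dict[str, float]]:
    """Weight settings that vary one weight while holding the others at `fixed`."""
    return [{n: (c if n == varied else fixed) for n in names} for c in candidates]


NAMES = ["term_1", "term_2", "term_3"]  # hypothetical names for the three weighted terms
for setting in one_at_a_time_grid(NAMES, "term_1", [0.5, 1.0]):
    print(setting)  # each setting would be trained and scored by PSNR on the validation data
```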