Sparse Keyword Data Analysis Using Bayesian Pattern Mining

Abstract

1. Introduction

2. Research Background

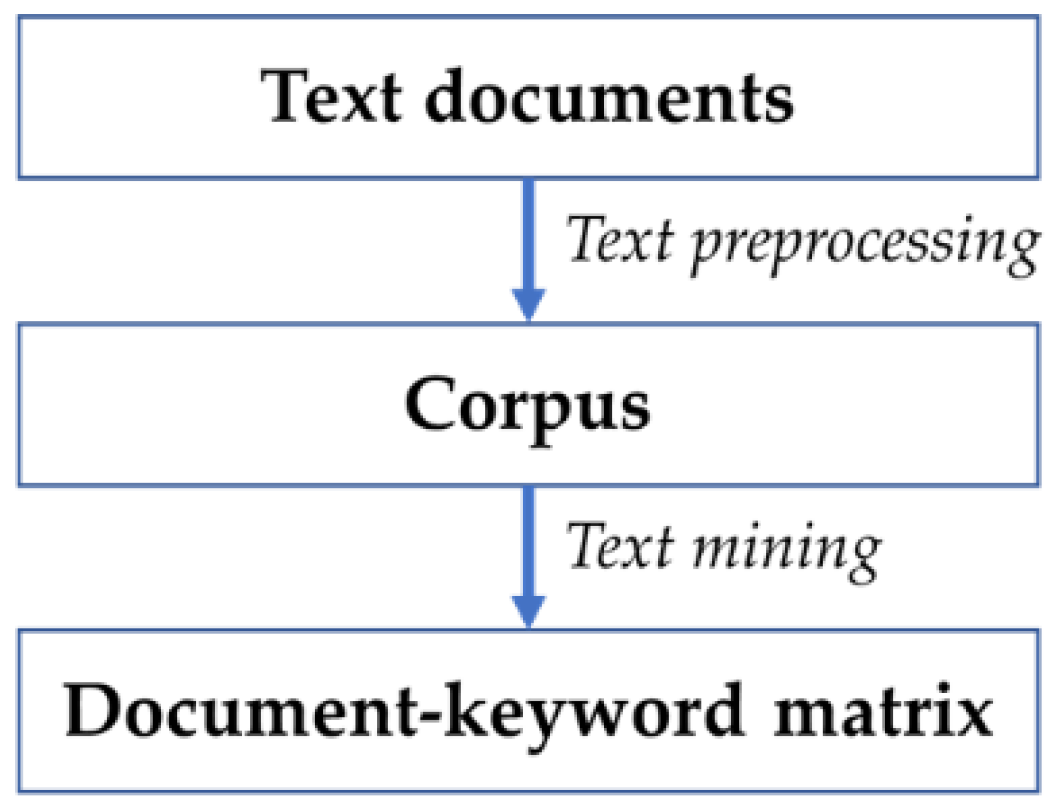

2.1. Keyword Analysis

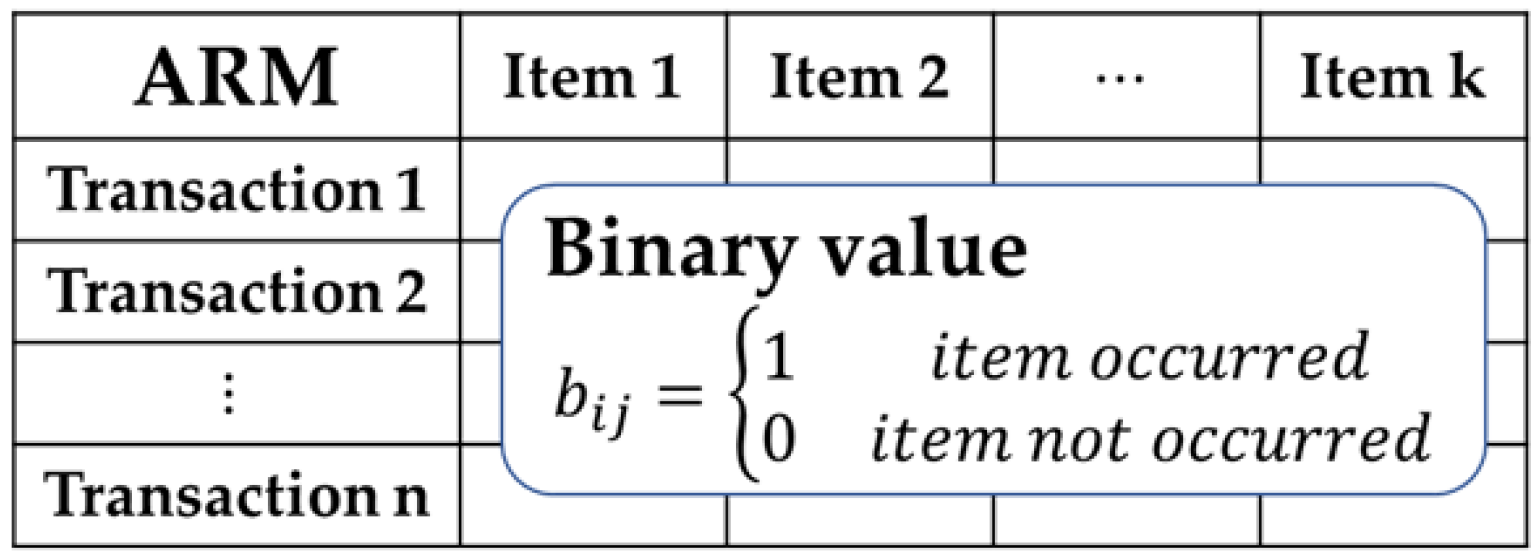

2.2. Association Rule Mining

3. Bayesian Pattern Mining for Sparse Keyword Data Analysis

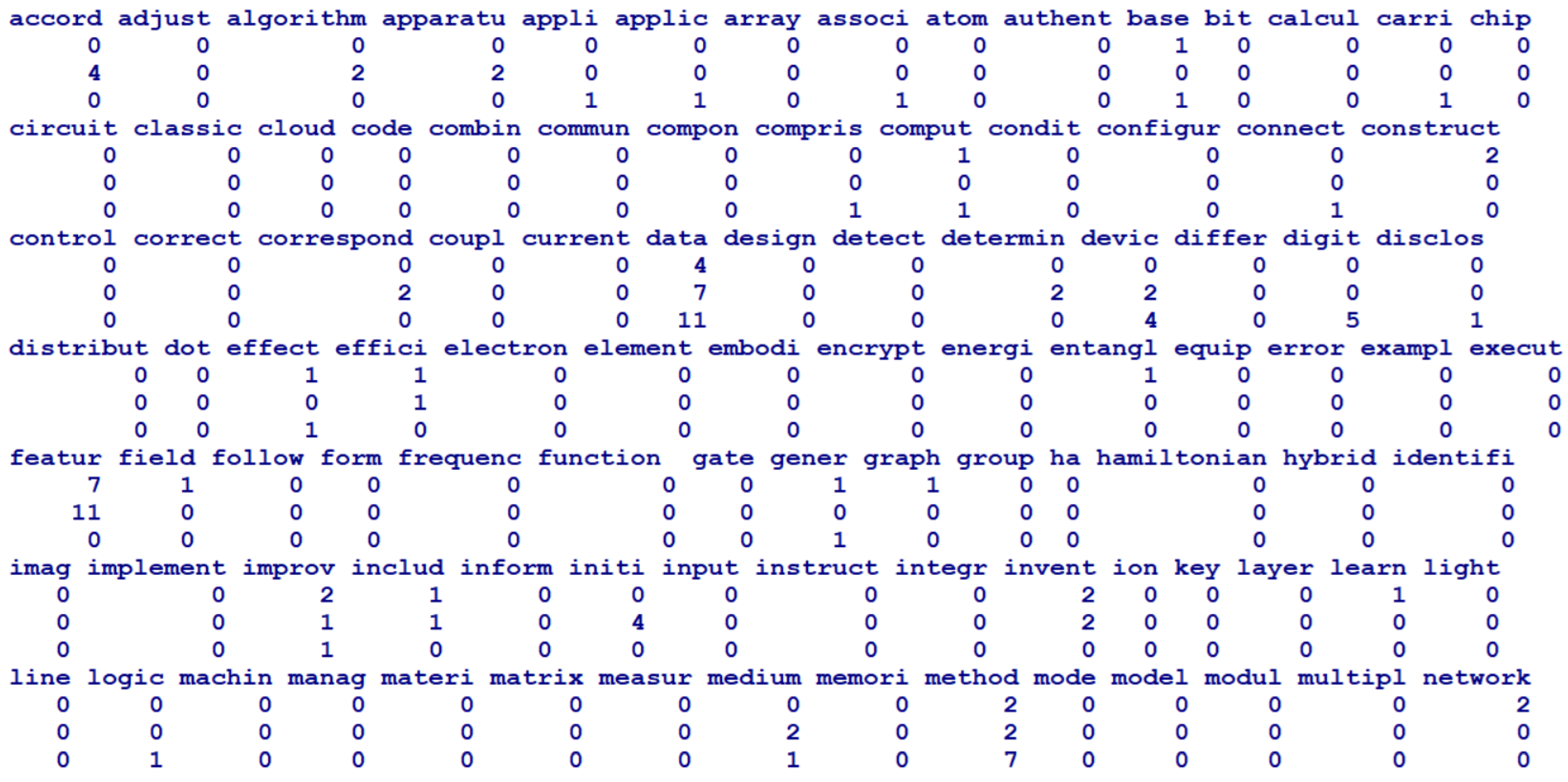

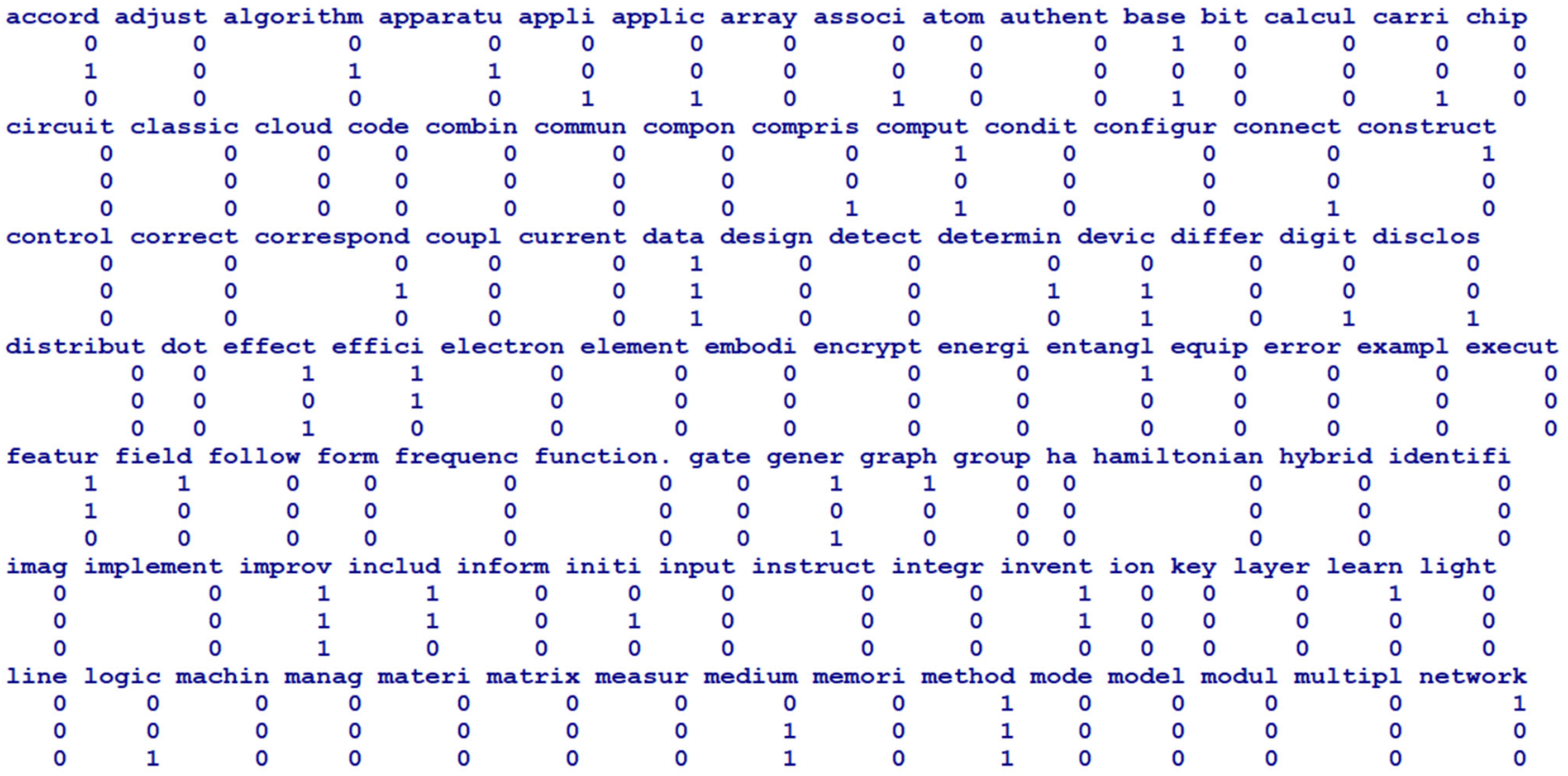

Algorithm 1 Building Document–Keyword Matrix

Input:
- Document data
- Unique string separator

Output:
- Extracted keywords
- Document–keyword matrix

1. Collecting text data
   - documents, papers, patents, reports, news articles, legal documents
   - social media and chat comments, online comments
2. Checking the data schema
   - removing duplicated documents
   - imputing missing values
3. Normalizing text data
   - removing extra spaces, numbers, symbols, etc.
   - lowercasing and removing stopwords using a user dictionary
4. Lemmatization and stemming
5. Parsing
   - corpus, text database
6. Constructing the document–keyword matrix
   - sparse matrix, keyword extraction
   - each element is the frequency with which a keyword occurs in a document
7. Changing element values from counts to binary
   - binary document–keyword matrix
   - using the binary matrix as transaction data for association rule mining
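A minimal Python sketch of Algorithm 1 follows, using only the standard library. It is an illustration under stated assumptions, not the author's code: the stopword set, the suffix-stripping `crude_stem` (a stand-in for real lemmatization and stemming), and the function names are hypothetical, and missing documents are simply dropped rather than imputed. The result keeps both the per-document keyword counts (step 6) and the binary matrix used as transaction data (step 7).

```python
import re
from collections import Counter

# Hypothetical stopword dictionary; the article uses a user-defined one.
STOPWORDS = {"a", "an", "and", "for", "in", "is", "of", "on", "the", "to", "with"}


def normalize(text):
    """Step 3: lowercase, replace digits/symbols with spaces, split, drop stopwords."""
    text = re.sub(r"[^a-z\s]", " ", text.lower())
    return [w for w in text.split() if w not in STOPWORDS]


def crude_stem(word):
    """Step 4 stand-in: naive suffix stripping (NOT a real lemmatizer or Porter stemmer)."""
    for suffix in ("ation", "ing", "ed", "es", "s"):
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word


def build_doc_keyword_matrix(documents):
    """Return (sorted keywords, per-document Counters, binary 0/1 matrix)."""
    # Step 2: skip missing entries and exact duplicates (a simplification:
    # Algorithm 1 imputes missing values instead of dropping them).
    seen, docs = set(), []
    for doc in documents:
        if doc and doc not in seen:
            seen.add(doc)
            docs.append(doc)
    # Steps 3-5: normalize, stem, and parse each document into tokens.
    parsed = [[crude_stem(w) for w in normalize(doc)] for doc in docs]
    # Step 6: sparse counts, one Counter (keyword -> frequency) per document.
    counts = [Counter(tokens) for tokens in parsed]
    keywords = sorted(set().union(*counts))
    # Step 7: binary document-keyword matrix (1 if the keyword occurs at all).
    binary = [[1 if c[k] > 0 else 0 for k in keywords] for c in counts]
    return keywords, counts, binary


docs = ["Quantum computing with qubits.", "Quantum computing with qubits.",
        "Encryption and security keys", None]
keywords, counts, binary = build_doc_keyword_matrix(docs)
print(keywords)
print(binary)
```

Keeping the binary rows as plain 0/1 lists keeps the sketch dependency-free; for a large corpus a sparse matrix type would be the natural replacement.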

Algorithm 2 Bayesian Pattern Mining

Input:
- Sparse document–keyword matrix with binary data

Output:
- Means and credible intervals of association rules

1. Constructing a 2 × 2 contingency table
   - X: antecedent item (keyword)
   - Y: consequent item (keyword)
2. Building the posterior distribution
   - prior:
   - likelihood:
   - posterior:
3. Obtaining interestingness measures
   - support: P(X ∩ Y)
   - confidence: P(Y | X)
   - lift: P(Y | X) / P(Y)
4. Estimating the mean and credible interval
   - drawing samples from the posterior distribution
   - estimating probability values, expectations, and credible intervals
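Steps 1 and 3 of Algorithm 2 rest on the 2 × 2 contingency table of an antecedent X and a consequent Y. The sketch below counts the four cells from a binary document–keyword matrix and computes the classical (non-Bayesian) point estimates of support, confidence, and lift. The cell names `n11`, `n10`, `n01`, `n00`, the function names, and the toy data are illustrative assumptions, not the article's notation.

```python
def contingency_table(binary, keywords, x, y):
    """Count documents by presence/absence of antecedent x and consequent y.

    binary: list of 0/1 rows (one per document) aligned with `keywords`.
    Returns (n11, n10, n01, n00) = (X and Y, X only, Y only, neither).
    """
    ix, iy = keywords.index(x), keywords.index(y)
    n11 = n10 = n01 = n00 = 0
    for row in binary:
        has_x, has_y = row[ix] == 1, row[iy] == 1
        if has_x and has_y:
            n11 += 1
        elif has_x:
            n10 += 1
        elif has_y:
            n01 += 1
        else:
            n00 += 1
    return n11, n10, n01, n00


def rule_measures(n11, n10, n01, n00):
    """Classical point estimates for X -> Y (assumes X occurs in at least one document)."""
    n = n11 + n10 + n01 + n00
    support = n11 / n                       # P(X and Y)
    confidence = n11 / (n11 + n10)          # P(Y | X)
    lift = confidence / ((n11 + n01) / n)   # P(Y | X) / P(Y)
    return support, confidence, lift


keywords = ["qubit", "quantum"]             # hypothetical toy vocabulary
binary = [[1, 1], [1, 1], [1, 0], [0, 1], [0, 0]]
table = contingency_table(binary, keywords, "qubit", "quantum")
print(table, rule_measures(*table))
```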

Algorithm 3 Bayesian Inference for Association Rules

Input:
- elements of the contingency table
- prior, likelihood (M samples), posterior
- threshold of confidence

Output:
- summary statistics of confidence and lift
- credible intervals of confidence and lift
- probability values of … and …

1. Obtaining parameters for the posterior distribution
   - for all elements
2. Drawing M samples from the posterior distribution
   - posterior:
3. Computing interestingness measures per sample
   - support, confidence, and lift
4. Estimating summary statistics
   - calculating posterior means and 95% credible intervals
   - estimating probability values of …
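Algorithm 3 can be sketched only under an explicit assumption, because it leaves the prior, likelihood, and posterior unspecified here. The sketch below assumes a Dirichlet prior on the four cell probabilities (uniform by default) and a multinomial likelihood for the cell counts, so the posterior is again Dirichlet. This conjugate choice is common for contingency tables but may differ from the article's model. It draws M samples, computes confidence and lift per draw, and reports posterior means, equal-tailed 95% intervals, and the probabilities that confidence exceeds a threshold and that lift exceeds 1; reading Algorithm 3's "probability values" as these two quantities is also an assumption. The counts in the usage line are hypothetical.

```python
import random
import statistics


def dirichlet_sample(alphas, rng):
    """One Dirichlet(alphas) draw via independent Gamma(alpha, 1) variates, normalized."""
    draws = [rng.gammavariate(a, 1.0) for a in alphas]
    total = sum(draws)
    return [d / total for d in draws]


def summarize(samples):
    """Posterior mean and an equal-tailed 95% interval from empirical quantiles."""
    s = sorted(samples)
    m = len(s)
    return statistics.fmean(s), s[int(0.025 * m)], s[int(0.975 * m) - 1]


def bayesian_rule_summary(counts, prior=(1.0, 1.0, 1.0, 1.0), m=5000,
                          conf_threshold=0.5, seed=0):
    """Posterior summaries for the rule X -> Y.

    counts = (n11, n10, n01, n00): documents with X and Y, X only, Y only, neither.
    ASSUMPTION: Dirichlet prior + multinomial likelihood -> Dirichlet(prior + counts).
    """
    rng = random.Random(seed)
    posterior = [a + n for a, n in zip(prior, counts)]   # step 1: posterior parameters
    conf, lift = [], []
    for _ in range(m):                                    # step 2: M posterior draws
        p11, p10, p01, _p00 = dirichlet_sample(posterior, rng)
        c = p11 / (p11 + p10)                             # step 3: confidence P(Y | X)
        conf.append(c)
        lift.append(c / (p11 + p01))                      # lift P(Y | X) / P(Y)
    return {                                              # step 4: summary statistics
        "confidence": summarize(conf),
        "lift": summarize(lift),
        "p_conf_above_threshold": sum(c > conf_threshold for c in conf) / m,
        "p_lift_above_1": sum(v > 1 for v in lift) / m,
    }


print(bayesian_rule_summary((200, 10, 50, 740)))
```

Sampling from the posterior, rather than using closed-form moments, makes it straightforward to get intervals for nonlinear measures such as lift, which is a ratio of cell probabilities.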

4. Experimental Results

5. Discussion and Implications

5.1. Theoretical Implications

5.2. Practical Implications

5.3. Differentiation from Existing Work

6. Conclusions

Funding

Data Availability Statement

Conflicts of Interest


| Item | Y | not Y | Row Sum |

|---|---|---|---|

| X | | | |

| not X | | | |

| Column sum | | | Total sum = N |

| Technology Category | Representative Keywords |

|---|---|

| Core | algorithm, bit, calcul, circuit, comput, entangl, error, gate, Hamiltonian, ion, measur, photon, quantum, qubit, sequenc, signal, simul, state, superconduct, timetrap |

| Software | algorithm, authent, circuit, cloud, code, connect, data, electron, encrypt, execut, function, gate, graph, implement, includ, inform, key, logic, model, modul, network, optim, perform, processor, program, random, sampl, secret, secur, simul, structur, technolog |

| Hardware | chip, electron, energi, ion, memori, optic, optical, photon, reson, storage, substrat, superconduct, trap |

| LHS | RHS | Confidence | Lift | Support |

|---|---|---|---|---|

| bit | quantum | 0.9809 | 1.0777 | 0.1271 |

| photon | quantum | 0.9806 | 1.0773 | 0.0379 |

| chip | quantum | 0.9766 | 1.0730 | 0.0761 |

| atom | quantum | 0.9714 | 1.0673 | 0.0255 |

| superconduct | quantum | 0.9659 | 1.0612 | 0.0669 |

| processor | quantum | 0.9596 | 1.0543 | 0.0995 |

| qubit | quantum | 0.9494 | 1.0431 | 0.2134 |

| logic | quantum | 0.9493 | 1.0430 | 0.0643 |

| program | quantum | 0.9489 | 1.0425 | 0.0717 |

| hybrid | quantum | 0.9424 | 1.0354 | 0.0491 |

| computing | quantum | 0.9402 | 1.0329 | 0.7622 |

| electron | quantum | 0.9330 | 1.0251 | 0.0792 |

| code | quantum | 0.9266 | 1.0181 | 0.0583 |

| hybrid | computing | 0.9239 | 1.1396 | 0.0482 |

| connect | quantum | 0.9237 | 1.0148 | 0.0870 |

| chip | computing | 0.9118 | 1.1248 | 0.0710 |

| memory | quantum | 0.9000 | 0.9888 | 0.0454 |

| inform | quantum | 0.8997 | 0.9885 | 0.1493 |

| network | quantum | 0.8986 | 0.9872 | 0.1150 |

| program | computing | 0.8949 | 1.1038 | 0.0676 |

| calculation | quantum | 0.8919 | 0.9800 | 0.1603 |

| cloud | computing | 0.8857 | 1.0925 | 0.0299 |

| vector | quantum | 0.8834 | 0.9706 | 0.0325 |

| cloud | quantum | 0.8762 | 0.9627 | 0.0296 |

| atom | computing | 0.8735 | 1.0774 | 0.0230 |

| superconduct | computing | 0.8698 | 1.0728 | 0.0602 |

| data | quantum | 0.8683 | 0.9540 | 0.2066 |

| processor | computing | 0.8654 | 1.0675 | 0.0897 |

| electron | computing | 0.8546 | 1.0542 | 0.0725 |

| qubit | computing | 0.8535 | 1.0527 | 0.1919 |
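Because lift(X → Y) = confidence(X → Y) / P(Y), dividing confidence by lift recovers the share of documents that contain the consequent. Applied to rows of the table above (values copied verbatim), every rule with RHS quantum implies P(quantum) ≈ 0.910, and every rule with RHS computing implies P(computing) ≈ 0.811. This explains why a rule with confidence near 0.9 can still have lift below 1: memory → quantum has confidence 0.9000, below the 0.910 base rate, so its lift is 0.9888. The helper name below is illustrative.

```python
# (LHS, RHS, confidence, lift) rows copied from the table above
ROWS = [
    ("bit", "quantum", 0.9809, 1.0777),
    ("photon", "quantum", 0.9806, 1.0773),
    ("qubit", "quantum", 0.9494, 1.0431),
    ("memory", "quantum", 0.9000, 0.9888),
    ("data", "quantum", 0.8683, 0.9540),
    ("hybrid", "computing", 0.9239, 1.1396),
    ("qubit", "computing", 0.8535, 1.0527),
]


def implied_base_rates(rows):
    """Since lift = confidence / P(RHS), each row implies P(RHS) = confidence / lift."""
    rates = {}
    for _lhs, rhs, conf, lift in rows:
        rates.setdefault(rhs, []).append(conf / lift)
    return rates


for rhs, values in implied_base_rates(ROWS).items():
    print(rhs, [round(v, 4) for v in values])
```

The support of computing → quantum gives an independent check: 0.7622 / 0.9402 ≈ 0.811, the same P(computing).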

| LHS | RHS | Lift | Confidence | Support |

|---|---|---|---|---|

| encryption | security | 5.9356 | 0.5567 | 0.0411 |

| security | encryption | 5.9356 | 0.4382 | 0.0411 |

| random | encryption | 4.9903 | 0.3684 | 0.0203 |

| encryption | random | 4.9903 | 0.2747 | 0.0203 |

| processor | memory | 4.9056 | 0.2474 | 0.0256 |

| memory | processor | 4.9056 | 0.5085 | 0.0256 |

| atom | photon | 4.3320 | 0.1673 | 0.0044 |

| photon | atom | 4.3320 | 0.1139 | 0.0044 |

| superconduct | chip | 4.1792 | 0.3256 | 0.0225 |

| chip | superconduct | 4.1792 | 0.2893 | 0.0225 |

| encryption | cloud | 3.6980 | 0.1250 | 0.0092 |

| cloud | encryption | 3.6980 | 0.2730 | 0.0092 |

| random | security | 3.6373 | 0.3411 | 0.0188 |

| security | random | 3.6373 | 0.2002 | 0.0188 |

| cloud | random | 3.0565 | 0.1683 | 0.0057 |

| random | cloud | 3.0565 | 0.1033 | 0.0057 |

| memory | logic | 2.8909 | 0.1957 | 0.0099 |

| logic | memory | 2.8909 | 0.1458 | 0.0099 |

| chip | connect | 2.8801 | 0.2713 | 0.0211 |

| connect | chip | 2.8801 | 0.2244 | 0.0211 |

| security | cloud | 2.8772 | 0.0973 | 0.0091 |

| cloud | security | 2.8772 | 0.2698 | 0.0091 |

| chip | bit | 2.7757 | 0.3595 | 0.0280 |

| bit | chip | 2.7757 | 0.2162 | 0.0280 |

| processor | hybrid | 2.6400 | 0.1377 | 0.0143 |

| hybrid | processor | 2.6400 | 0.2737 | 0.0143 |

| superconduct | connect | 2.6000 | 0.2450 | 0.0170 |

| connect | superconduct | 2.6000 | 0.1800 | 0.0170 |

| memory | program | 2.3940 | 0.1809 | 0.0091 |

| program | memory | 2.3940 | 0.1207 | 0.0091 |
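Lift is symmetric in X and Y, which is why each pair in the table above appears twice with identical lift and support but different confidences. Since confidence(X → Y) = support / P(X) and confidence(Y → X) = support / P(Y), lift = support / (P(X) P(Y)) = confidence(X → Y) × confidence(Y → X) / support. The check below recomputes lift for a few pairs from their two confidences and shared support (copied verbatim); the small gaps come from the four-decimal rounding of the inputs. The function name is illustrative.

```python
import math

# (X, Y, confidence X->Y, confidence Y->X, support, reported lift), copied from the table above
PAIRS = [
    ("encryption", "security", 0.5567, 0.4382, 0.0411, 5.9356),
    ("processor", "memory", 0.2474, 0.5085, 0.0256, 4.9056),
    ("atom", "photon", 0.1673, 0.1139, 0.0044, 4.3320),
    ("superconduct", "chip", 0.3256, 0.2893, 0.0225, 4.1792),
    ("chip", "bit", 0.3595, 0.2162, 0.0280, 2.7757),
]


def lift_from_confidences(conf_xy, conf_yx, support):
    """lift = P(X,Y) / (P(X) P(Y)), with P(X) = support / conf_xy and P(Y) = support / conf_yx."""
    p_x = support / conf_xy
    p_y = support / conf_yx
    return support / (p_x * p_y)


for x, y, c_xy, c_yx, s, reported in PAIRS:
    recomputed = lift_from_confidences(c_xy, c_yx, s)
    ok = math.isclose(recomputed, reported, rel_tol=0.01)
    print(f"{x} <-> {y}: recomputed {recomputed:.3f}, reported {reported:.4f}, within 1%: {ok}")
```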

| LHS | RHS | Confidence Mean | Confidence Credible Interval Lower | Confidence Credible Interval Upper | Lift Mean | Support Mean |

|---|---|---|---|---|---|---|

| bit | quantum | 0.9804 | 0.9719 | 0.9882 | 1.0773 | 0.1271 |

| photon | quantum | 0.9793 | 0.9631 | 0.9910 | 1.0760 | 0.0379 |

| chip | quantum | 0.9760 | 0.9636 | 0.9855 | 1.0724 | 0.0761 |

| atom | quantum | 0.9696 | 0.9443 | 0.9879 | 1.0654 | 0.0255 |

| superconduct | quantum | 0.9652 | 0.9496 | 0.9778 | 1.0605 | 0.0669 |

| processor | quantum | 0.9590 | 0.9459 | 0.9701 | 1.0538 | 0.0995 |

| qubit | quantum | 0.9493 | 0.9392 | 0.9584 | 1.0430 | 0.2134 |

| logic | quantum | 0.9487 | 0.9312 | 0.9647 | 1.0425 | 0.0643 |

| program | quantum | 0.9480 | 0.9303 | 0.9633 | 1.0417 | 0.0717 |

| hybrid | quantum | 0.9416 | 0.9192 | 0.9602 | 1.0345 | 0.0491 |

| computing | quantum | 0.9401 | 0.9347 | 0.9455 | 1.0330 | 0.7622 |

| electron | quantum | 0.9327 | 0.9159 | 0.9492 | 1.0250 | 0.0792 |

| code | quantum | 0.9261 | 0.9053 | 0.9443 | 1.0175 | 0.0583 |

| hybrid | computing | 0.9231 | 0.8977 | 0.9454 | 1.1388 | 0.0482 |

| connect | quantum | 0.9230 | 0.9047 | 0.9399 | 1.0142 | 0.0870 |

| chip | computing | 0.9117 | 0.8904 | 0.9312 | 1.1246 | 0.0710 |

| inform | quantum | 0.8993 | 0.8836 | 0.9137 | 0.9883 | 0.1493 |

| memory | quantum | 0.8992 | 0.8710 | 0.9243 | 0.9879 | 0.0454 |

| network | quantum | 0.8983 | 0.8814 | 0.9152 | 0.9870 | 0.1150 |

| program | computing | 0.8947 | 0.8716 | 0.9155 | 1.1036 | 0.0676 |

| calculation | quantum | 0.8917 | 0.8764 | 0.9065 | 0.9799 | 0.1603 |

| cloud | computing | 0.8839 | 0.8466 | 0.9171 | 1.0903 | 0.0299 |

| vector | quantum | 0.8825 | 0.8450 | 0.9145 | 0.9696 | 0.0325 |

| cloud | quantum | 0.8745 | 0.8362 | 0.9075 | 0.9608 | 0.0296 |

| atom | computing | 0.8721 | 0.8282 | 0.9104 | 1.0757 | 0.0230 |

| superconduct | computing | 0.8693 | 0.8413 | 0.8944 | 1.0721 | 0.0602 |

| data | quantum | 0.8680 | 0.8540 | 0.8819 | 0.9539 | 0.2066 |

| processor | computing | 0.8652 | 0.8416 | 0.8863 | 1.0674 | 0.0897 |

| electron | computing | 0.8538 | 0.8282 | 0.8780 | 1.0531 | 0.0725 |

| qubit | computing | 0.8532 | 0.8373 | 0.8679 | 1.0526 | 0.1919 |
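The credible intervals above are wider for rules whose antecedent is rare. If the posterior of a rule's confidence c is close to a Beta distribution (as it would be under a Dirichlet–multinomial model, which is an assumption here), a normal approximation gives a 95% interval width of roughly 3.92 × sqrt(c(1 − c) / (N · P(X))), where P(X) = support / confidence and N is the number of documents. The ratio width / sqrt(c(1 − c) / P(X)) should then be about the same for every rule. For six rows of the table (values copied verbatim) it falls between about 0.040 and 0.042, consistent with that reading; atom → computing, with P(atom) ≈ 0.026, has an interval about 7.6 times wider than computing → quantum. The function name is illustrative.

```python
import math

# (LHS, RHS, mean confidence, lower bound, upper bound, mean support), copied from the table above
ROWS = [
    ("computing", "quantum", 0.9401, 0.9347, 0.9455, 0.7622),
    ("qubit", "computing", 0.8532, 0.8373, 0.8679, 0.1919),
    ("bit", "quantum", 0.9804, 0.9719, 0.9882, 0.1271),
    ("cloud", "quantum", 0.8745, 0.8362, 0.9075, 0.0296),
    ("atom", "quantum", 0.9696, 0.9443, 0.9879, 0.0255),
    ("atom", "computing", 0.8721, 0.8282, 0.9104, 0.0230),
]


def width_ratio(conf, lower, upper, support):
    """Interval width / sqrt(c(1-c)/P(X)); roughly constant if width ~ 1/sqrt(N * P(X))."""
    p_x = support / conf                      # share of documents containing the antecedent
    return (upper - lower) / math.sqrt(conf * (1 - conf) / p_x)


for lhs, rhs, c, lo, hi, s in ROWS:
    print(f"{lhs} -> {rhs}: P(X)={s / c:.4f}, width={hi - lo:.4f}, "
          f"ratio={width_ratio(c, lo, hi, s):.4f}")
```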

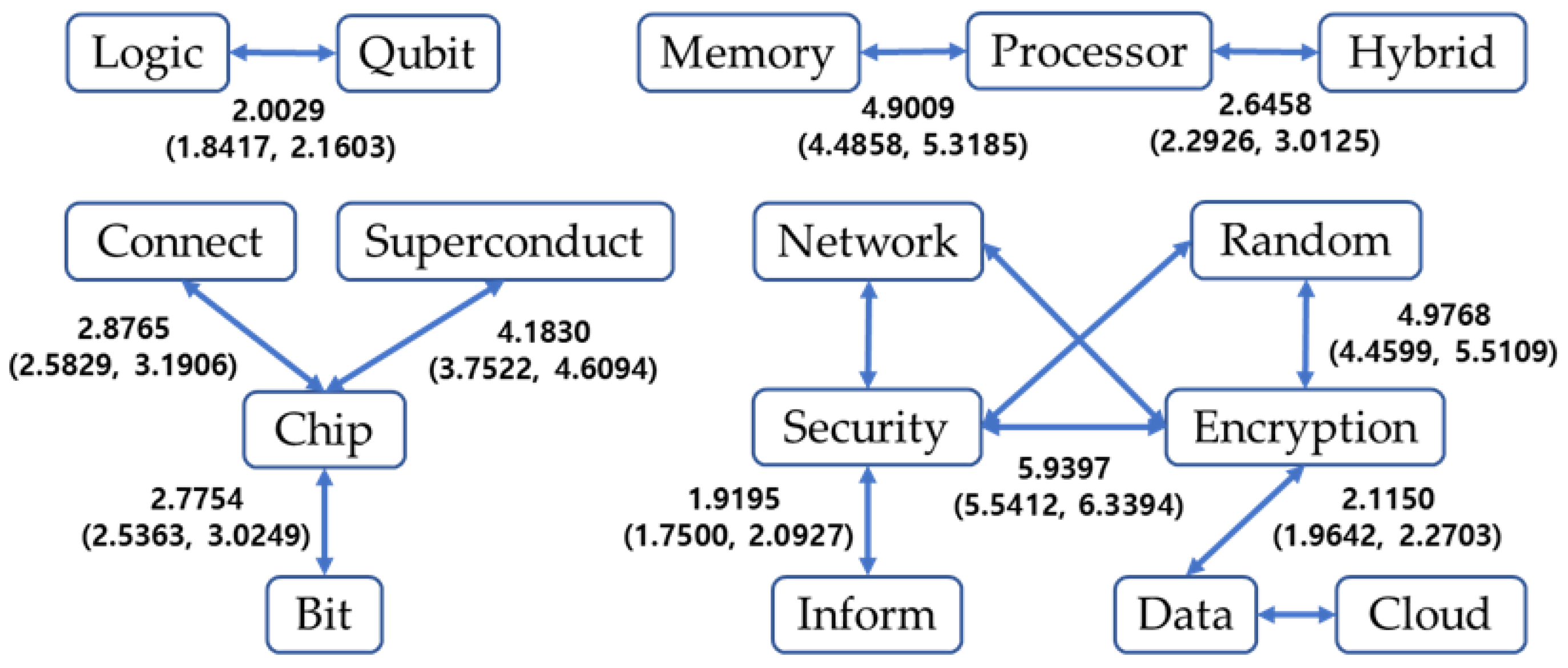

| LHS | RHS | Lift Mean | Lift Credible Interval Lower | Lift Credible Interval Upper | Confidence Mean | Support Mean |

|---|---|---|---|---|---|---|

| security | encryption | 5.9397 | 5.5412 | 6.3394 | 0.4380 | 0.0411 |

| encryption | security | 5.9312 | 5.5399 | 6.3563 | 0.5568 | 0.0411 |

| encryption | random | 4.9807 | 4.4713 | 5.5169 | 0.2744 | 0.0203 |

| random | encryption | 4.9768 | 4.4599 | 5.5109 | 0.3680 | 0.0203 |

| processor | memory | 4.9091 | 4.4942 | 5.3458 | 0.2478 | 0.0256 |

| memory | processor | 4.9009 | 4.4858 | 5.3185 | 0.5086 | 0.0256 |

| chip | superconduct | 4.1867 | 3.7600 | 4.6149 | 0.2902 | 0.0225 |

| superconduct | chip | 4.1830 | 3.7522 | 4.6094 | 0.3263 | 0.0225 |

| security | random | 3.6474 | 3.2506 | 4.0597 | 0.2012 | 0.0188 |

| random | security | 3.6309 | 3.2322 | 4.0481 | 0.3406 | 0.0188 |

| connect | chip | 2.8765 | 2.5829 | 3.1906 | 0.2244 | 0.0211 |

| chip | connect | 2.8743 | 2.5706 | 3.2114 | 0.2708 | 0.0211 |

| bit | chip | 2.7764 | 2.5242 | 3.0326 | 0.2164 | 0.0280 |

| chip | bit | 2.7754 | 2.5363 | 3.0249 | 0.3598 | 0.0280 |

| processor | hybrid | 2.6458 | 2.2926 | 3.0125 | 0.1382 | 0.0143 |

| hybrid | processor | 2.6424 | 2.2901 | 2.9987 | 0.2741 | 0.0143 |

| superconduct | connect | 2.6076 | 2.3019 | 2.9425 | 0.2460 | 0.0170 |

| connect | superconduct | 2.6054 | 2.2929 | 2.9412 | 0.1806 | 0.0170 |

| data | cloud | 2.3239 | 2.0822 | 2.5532 | 0.0788 | 0.0187 |

| cloud | data | 2.3211 | 2.0941 | 2.5495 | 0.5520 | 0.0187 |

| network | security | 2.2942 | 2.0781 | 2.5122 | 0.2154 | 0.0275 |

| security | network | 2.2903 | 2.0867 | 2.5047 | 0.2930 | 0.0275 |

| encryption | data | 2.1150 | 1.9642 | 2.2703 | 0.5033 | 0.0371 |

| data | encryption | 2.1129 | 1.9585 | 2.2696 | 0.1561 | 0.0371 |

| logic | qubit | 2.0029 | 1.8417 | 2.1603 | 0.4504 | 0.0305 |

| qubit | logic | 2.0005 | 1.8366 | 2.1706 | 0.1357 | 0.0305 |

| security | inform | 1.9195 | 1.7500 | 2.0927 | 0.3184 | 0.0298 |

| inform | security | 1.9175 | 1.7436 | 2.0918 | 0.1800 | 0.0298 |

| encryption | network | 1.9101 | 1.6762 | 2.1574 | 0.2445 | 0.0180 |

| network | encryption | 1.9085 | 1.6822 | 2.1442 | 0.1412 | 0.0180 |
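The Bayesian lift table above does not list exactly the same keyword pairs as the classical lift table ranked by lift. Treating each pair as unordered, the comparison below (supports copied verbatim from the two tables) finds nine shared pairs; the six pairs that appear only in the classical table all have support below 0.01 (for example, atom–photon at 0.0044), while the six that appear only in the Bayesian table all have support above 0.01. The tables themselves do not say why the lists differ; a minimum-support filter is one possible explanation, but that is an assumption. The helper name is illustrative.

```python
# (X, Y, support) for each unordered pair, copied from the two lift tables
CLASSICAL = [
    ("encryption", "security", 0.0411), ("random", "encryption", 0.0203),
    ("processor", "memory", 0.0256), ("atom", "photon", 0.0044),
    ("superconduct", "chip", 0.0225), ("encryption", "cloud", 0.0092),
    ("random", "security", 0.0188), ("cloud", "random", 0.0057),
    ("memory", "logic", 0.0099), ("chip", "connect", 0.0211),
    ("security", "cloud", 0.0091), ("chip", "bit", 0.0280),
    ("processor", "hybrid", 0.0143), ("superconduct", "connect", 0.0170),
    ("memory", "program", 0.0091),
]
BAYESIAN = [
    ("security", "encryption", 0.0411), ("encryption", "random", 0.0203),
    ("processor", "memory", 0.0256), ("chip", "superconduct", 0.0225),
    ("security", "random", 0.0188), ("connect", "chip", 0.0211),
    ("bit", "chip", 0.0280), ("processor", "hybrid", 0.0143),
    ("superconduct", "connect", 0.0170), ("data", "cloud", 0.0187),
    ("network", "security", 0.0275), ("encryption", "data", 0.0371),
    ("logic", "qubit", 0.0305), ("security", "inform", 0.0298),
    ("encryption", "network", 0.0180),
]


def by_pair(rows):
    """Map each unordered keyword pair to its support, ignoring rule direction."""
    return {frozenset((x, y)): s for x, y, s in rows}


classical, bayesian = by_pair(CLASSICAL), by_pair(BAYESIAN)
only_classical = {tuple(sorted(p)): classical[p] for p in classical.keys() - bayesian.keys()}
only_bayesian = {tuple(sorted(p)): bayesian[p] for p in bayesian.keys() - classical.keys()}
print("only in classical table:", sorted(only_classical.items(), key=lambda kv: kv[1]))
print("only in Bayesian table:", sorted(only_bayesian.items(), key=lambda kv: kv[1]))
```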

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Jun, S. Sparse Keyword Data Analysis Using Bayesian Pattern Mining. Computers 2025, 14, 436. https://doi.org/10.3390/computers14100436
