1. Introduction

Vegan and vegetarian diets have been on the rise in popularity and availability over the years, as well as the number of online reviews within the food industry. Notably, The Vegan Society reported in 2019 that the number of vegans in Great Britain has quadrupled between 2014 and 2019 [

1]. It is not just the number of vegans that is increasing but also the number of people who occasionally swap meat or dairy with plant-based alternatives. In this regard, a survey conducted by Ipsos in 2022 showed that 58% of British people aged 16–75 already use plant-based alternatives in their diets [

2]. The same year, The Grocer reported that food sales of vegan products in a popular supermarket were 500% higher in January than the year before [

3]. With sales of vegan products increasing, so is the offer of vegan options in restaurants; the Vegan Society reported that 2020 was the first year that every one of the top ten UK restaurants at the time offered a vegan dish. One of the reasons people turn to vegan and vegetarian diets more often could be their many health benefits, as presented in [

4]. Another reason to go vegan or vegetarian might be the lower environmental impact plant-based diets have compared to omnivorous diets. A review paper regarding the different environmental impacts of different diets has shown that vegan diets had the lowest CO

2 production and the lowest land and water use when compared to omnivorous diets [

5]. Even though the rise of veganism is present, Oh et al. explain that research involved in vegan and vegan-friendly restaurants is lacking [

6].

In the hospitality and tourism sectors, deep learning and machine learning are still in the early stages of research [

7,

8]. This raises interest and motivation to further investigate and research topics within those sectors, including the culinary world of restaurants and, more specifically, vegan restaurants for this study.

This paper aims to find a connection between a written review of a vegan or vegetarian restaurant and the numerical rating, seeking to develop a predictive model that can accurately determine the numerical rating based solely on the content of the written reviews. Understanding the value that vegan restaurants represent to vegan and vegetarian customers is vital for the improvement of the existing restaurants and the future of vegan culinary innovation and sustainability. By comparing the performance of various machine learning models, we can determine which one is most effective for sentiment analysis and rating prediction in the context of vegan restaurant reviews.

Therefore, the paper aims to answer the following research questions: (RQ1) What are the elements of perceived quality in vegan and vegetarian restaurants? (RQ2) How do different machine learning models compare in sentiment analysis and rating prediction of vegan restaurant reviews?

It is important to point out that the study was performed in Croatia, where vegan and vegetarian food options are currently very limited. Due to a lack of past data, it was important to create a new dataset that covers the experiences of a small but rising sector of the population. Compared to regions in the EU with a developed vegan/vegetarian market, the Croatian setting brings unique problems and findings.

Furthermore, the presented study focuses on reviews published in English, which, as previously mentioned, is the chosen language for such reviews due to the international visibility of restaurants, especially on global online platforms such as Google Maps and others. This is critical, given the large number of foreign guests who prefer these types of restaurants. This choice broadens the scope of our results and ensures that the created models can be applied globally.

Although established sentiment analysis algorithms such as BERT, TF-IDF, and GloVe have been used for the experiment, in this study, they are explicitly adapted to the Croatian context, which has not yet been analyzed using this particular approach. The use of such methods is justified as they provide a basis for comparison with newer algorithms and methods, and their application to a new cultural and linguistic setting (i.e., in Croatia) represents an innovative extension of previous research.

The remainder of this paper is organized as follows:

Section 2 gives an overview of related work in the fields of text mining and sentiment analysis, which are based on customers’ reviews.

Section 3 describes the methodology used in this research.

Section 4 describes the results obtained during the research, and

Section 5 discusses those results.

2. Related Work

This section presents similar work related to predicting sentiment and numerical ratings based on textual data, more specifically—customer reviews. Other important steps and methods within the sentiment analysis realm are also presented and explained. To better understand the association between customer reviews and a product’s numerical rating, many researchers have investigated whether textual reviews can be predictive of their corresponding numerical ratings.

Research by Ghose & Ipeirotis highlights the significance of various feature categories, such as reviewer-related features, review subjectivity features, and review readability features, in predicting the helpfulness and economic impact of product reviews [

9]. Poushneh and Rajabi utilized Latent Dirichlet Allocation (LDA) to demonstrate that affective cues and words referencing specific product features can enhance the association between textual reviews and numerical ratings [

10]. Liu et al. propose an alternative perspective considering multi-aspect textual reviews and their effects on business revenue, emphasizing the importance of understanding the relationships between textual content and numerical ratings [

11].

The association between textual reviews and numerical ratings is further explored in the study by Fazzolari et al. [

12], where artificial intelligence tools are employed to comprehend the relationships between the textual content of reviews and the corresponding numerical scores. The study of Li et al. on restaurant recommendation models highlighted the significance of incorporating various textual information to accurately estimate consumer preferences, indicating that textual reviews play a fundamental role in predicting consumer behavior and preferences. By considering both the content and sentiment of textual reviews, businesses can enhance their understanding of customer preferences and improve their decision-making processes [

13]. Nicolò et al. emphasize the need for intelligent systems to utilize both textual and numerical reviews to enhance the understanding of tourist experiences and derive actionable insights for improvement [

14]. Puh and Bagić Babac show that there is a correlation between news headlines and stock price prediction [

15]. Horvat et al. propose a novel NP model as an original approach to sentiment analysis, with a focus on understanding emotional responses during major disasters or conflicts [

16], based on the n-gram language model derived from public usage of an online spellchecker service [

17].

In the realm of online reviews, Deng investigates the effects of textual reviews from consumers and critics on movie sales, highlighting how unstructured information in textual reviews can provide detailed insights into consumers’ product experiences and the reasons behind associated ratings [

18]. Xu combined customer ratings with textual reviews in the travel industry to predict customer ratings using Latent Semantic Analysis (LSA) and text regression, emphasizing the predictive power of textual content in determining numerical ratings [

19]. The study by Kulshrestha et al. demonstrates that utilizing textual features in deep learning models leads to higher rating prediction accuracy, which is particularly beneficial for domains like healthcare [

20].

The integration of textual reviews with numerical ratings provides valuable insights into customer behavior and preferences, enabling businesses to make informed decisions regarding product development, marketing strategies, and customer satisfaction initiatives. By leveraging advanced techniques such as LDA, LSA, and text regression, researchers have made significant strides in unraveling the complex interplay between textual reviews and numerical ratings, paving the way for more accurate predictive models and enhanced consumer insights.

2.1. Preprocessing and Normalization

Preprocessing and normalization are important steps in many natural language processing methods because they improve the prediction accuracy and lower the computational time of a classifier [

21]. A review study on preprocessing found conflicting preprocessing recommendations in different studies and reported that the preprocessing steps are often poorly explained [

22]. Normalization is comprised of stemming, lemmatization, stop word and punctuation removal, and spelling correction. Choosing the appropriate preprocessing steps depends on the problem and the analysis that is being conducted, but researchers recommend always lowering the words and handling negation since the meaning changes polarities instantly with negation [

22]. Since preprocessing steps are often unreported and subjective, it can be close to impossible to reproduce certain research findings [

22]. One of the steps in preprocessing is to remove stop words, for example, words like “the”, “a”, and “such”. Authors claim that the signal-to-noise ratio can be increased by removing stop words in unstructured text, and the removal of stop words can also increase the statistical significance of task-specific words [

23]. One of the characteristics regarding stop words is their term frequency, as it tends to be high. Whether to keep or remove stop words is dependent on the type of problem and analysis used in an article about preprocessing methods. The authors point out that stop words should be removed for topic modeling and cloud representation, but they should be kept for methods that use word vectors since stop words are crucial for understanding semantic relationships within sentences [

24]. By finding domain-specific stop words, authors were able to improve the accuracy of a LSTM model on a corpus of technical patent texts [

23]. To the best of our knowledge, there are no-stop word lists that are specific to the restaurant and vegan/vegetarian domain.

2.2. Topic Modelling

In a restaurant reviews rating prediction and subtopic generation study [

25], it was suggested that a restaurant experience can be divided into four aspects: service, food, place, and experience. Since the restaurant and hospitality industries share similarities in their focus on customer satisfaction, it is useful to look at research performed in the hospitality sector as well. To better understand the aspects of a successful wine tour, authors used multidimensional scaling and cluster analysis to find characteristic elements of wine tours. They identified five clusters focusing on service issues, wine and food associations, and the landscape and cultural context of the tours [

26]. It is important to identify all the aspects of a review or an experience for the customer so that certain problem areas can be improved.

2.3. Sentiment Analysis and Rating Prediction

Sentiment analysis within the hospitality and tourism sector has been explored in numerous studies. Asghar [

27] conducted an extensive analysis using a large dataset of reviews sourced from Yelp.com. Sixteen different models for predicting a numerical star rating were compared, and logistic regression with the adoption of unigrams and bigrams yielded the best results. Recent research has shown that logistic regression outperforms other machine learning approaches like decision trees, k-nearest neighbors (KNN), or support vector machines (SVM) when it comes to predicting the sentiments of textual reviews about insurance products [

28]. Other studies show different results on similar problems; a study on Twitter reviews about virtual home assistants shows that SVM performed best with an accuracy of 90.3%. The authors claim that it could be due to the unstructured nature of tweets [

21]. Ensemble-based deep learning models and methods yield better results in predicting product sentiments than more conventional and traditional methods that use machine learning [

29]. In [

30], Rafay et al. compared a naïve Bayes model and a C-LSTM neural network and showed that a C-LSTM neural network outperforms a naïve Bayes model when given the task of binary or multiclass classification of written Yelp reviews. A different study that examined the performance of Long Short Term Memory (LSTM) architecture found it to be good at predicting sentiment [

31]. Specifically, the accuracy achieved ranged between 80% and 90% when applied to predict sentiment in tourist reviews about Bali beaches on TripAdvisor. The authors found that positive reviews about Bali beaches greatly outnumbered the negative reviews, and they emphasized the importance of balancing the dataset by undersampling positive reviews; otherwise, the dataset could be biased towards positive reviews. Another look into online reviews reveals a prevailing trend of predominantly positive sentiments, where positive reviews significantly outnumber their negative counterparts. Moreover, a study on hotel reviews found that most prevalent reviews exhibit deep and strong positive sentiments, followed by ordinary positive sentiments [

32].

When compared to the convolutional neural networks, LSTM architecture was found to perform better in a study by Martin et al. on the tourist review sentiment of Booking and TripAdvisor [

33]. The authors found that the highest accuracies were obtained with a LSTM with a vector length of 300. The authors also found no positive effect of combining convolutional layers with recurrent LSTM layers. Another study [

25] that compared the bidirectional LSTM algorithm and Simple embedding + average pooling on restaurant reviews found the former method to perform better in sentiment prediction and generating subtopics and the latter to be superior in online review rating prediction tasks. In the study, authors Luo and Xu also elaborate that deep learning algorithms outperform machine learning models due to a large dataset.

Even though deep learning models often significantly outperform machine learning approaches in typical NLP tasks with large datasets, they lack explainability [

34]. Another study on deep learning techniques regarding sentiment analysis and rating prediction criticized these models for being a black box model with unknown and untraceable predictions [

35].

Large Language Models (LLMs) have also recently been used in the context of sentiment analysis. A study performed by Zhang et al. [

36] offers several insights into the effectiveness of Large Language Models (LLMs) like GPT-3, PaLM, and ChatGPT in multiple sentiment analysis tasks. The study reveals that LLMs perform well in simple sentiment analysis tasks, such as binary sentiment classification, even in zero-shot settings.

Another study by Zhang et al. [

37] looked at different sentiment analysis tasks, and LLMs found that big LLM-s show state-of-the-art performance, particularly in zero-shot and few-shot learning scenarios, outperforming sLLMs in datasets with limited training data and imbalanced distributions.

Rodriguez Inserte et al. [

38] explored the use of smaller large language models (LLMs) on financial sentiment analysis and demonstrated that smaller models, when fine-tuned with financial documents and specific instructional datasets, can achieve performance comparable to or even surpass larger models like BloombergGPT in financial sentiment tasks.

Falatouri et al. [

39] evaluate the performance of large language models (LLMs) like ChatGPT 3.5 and Claude 3 in service quality assessment using customer reviews. The study demonstrates that LLMs perform better than traditional NLP methods in both sentiment analysis and extracting service quality dimensions. ChatGPT achieved an accuracy of 76%, and Claude 3 reached 68% in classifying service quality dimensions from reviews. In sentiment analysis, ChatGPT gave similar answers as human raters, outperforming traditional models like VADER and TextBlob. While LLMs offer improvements in efficiency, there remain challenges, such as misclassifications, highlighting the need for human oversight. The paper concludes that LLMs enhance service quality assessment but require further refinement for optimal performance [

39].

A novel methodology was introduced by Miah et al. [

40] to perform sentiment analysis across multiple languages by translating texts into English. The study employs two translation systems, LibreTranslate, and Google Translate, and uses a set of sentiment analysis models, including Twitter-Roberta-Base-Sentiment, BERTweet-Base, and GPT-3. The proposed ensemble model achieved an accuracy of up to 86.71% and an F1 score of approximately 0.8106 when using Google Translate. The research findings underscore the feasibility of using translation as a bridge for sentiment analysis in languages lacking extensive labeled datasets, making significant contributions to cross-lingual natural language processing.

3. Methodology

To obtain the answers to the previously mentioned research questions, the research was divided into five distinct stages.

The first stage consisted of gathering data from TripAdvisor [

41]. Only written reviews and numerical ratings from vegan and vegetarian restaurants were scraped using Python 3.9.0.

After gathering the data, the next step was to preprocess it by removing certain words and punctuation. During preprocessing, two datasets were created, one consisting of reviews and a binary value for sentiment, 1 being positive and 0 negative, and another dataset consisting of reviews and their original numerical rating from 1 to 5 stars.

The next step was to apply clustering methods to the corpus of reviews to understand important aspects of the vegan culinary experience.

When data was clean and ready to be used in training, multiple machine learning models were trained and tested.

The last stage was a search for answers and insights within the obtained results.

3.1. Data Collection and Preprocessing

Our research data was scraped from the TripAdvisor site in the span of three months, from November 2022 to January 2023. Each restaurant that we scraped data from was checked via The Happy Cow [

42]; the community focused on veganism and vegetarianism and a restaurant guide to ensure that it is a vegan or a vegetarian restaurant. A total of 33,439 reviews in English and respective ratings were collected from different vegan and vegetarian restaurants, as well as from restaurants that offer a vegan or vegetarian menu.

To ensure data consistency and quality, all non-English words, numbers, URLs, and special characters were removed during preprocessing. Reviews that did not scrape correctly or were incorrectly saved in the CSV file were also excluded from the analysis. Due to the volume of available data, not all restaurants were scraped to avoid overwhelming the site; instead, a smaller number of restaurants with a high number of reviews were chosen.

The data has been anonymized to protect individual privacy and ensure that no personally identifiable information is used in the analysis.

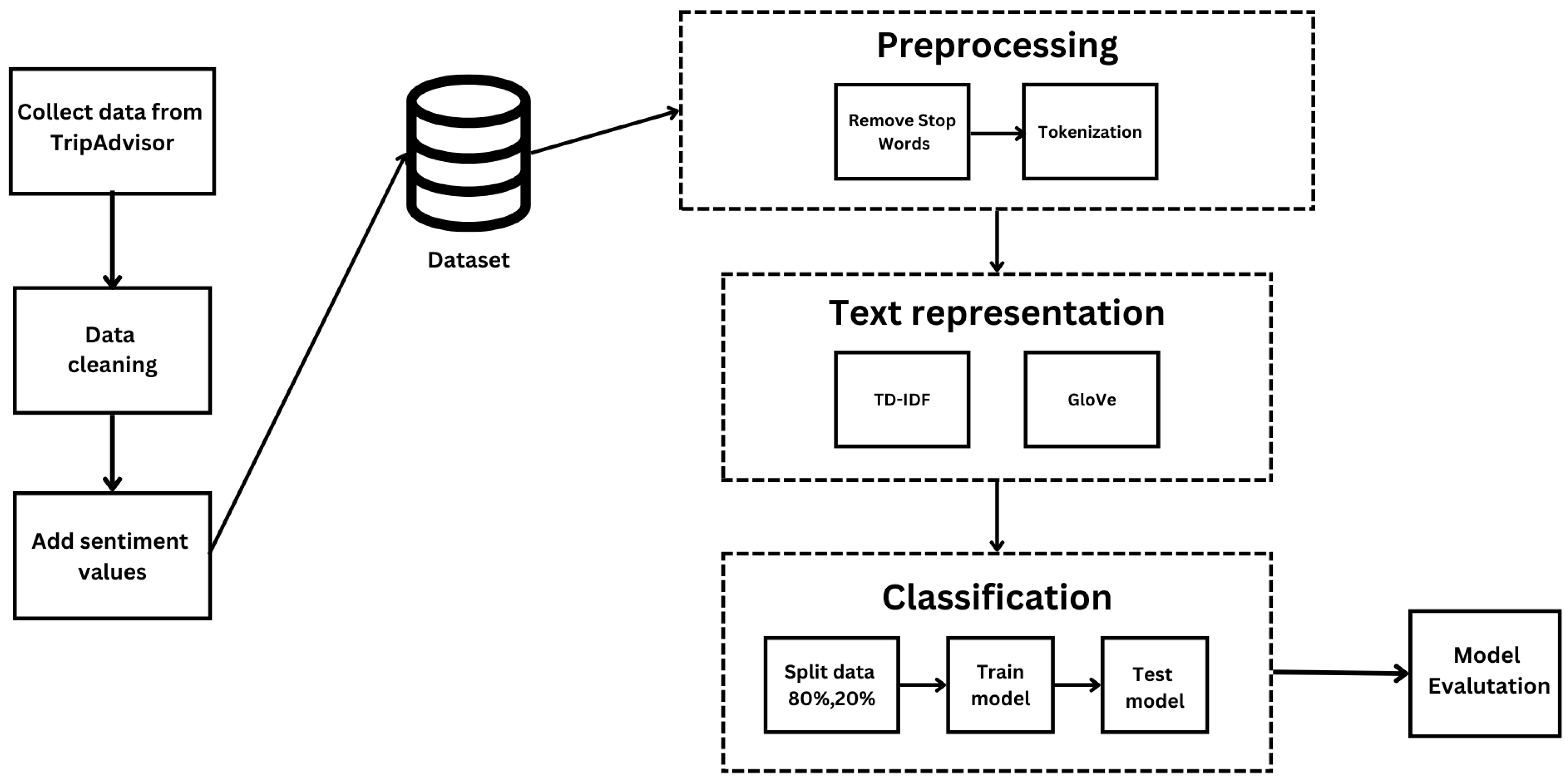

Figure 1 depicts how data collection and preprocessing steps and model training were performed.

The restaurants that were chosen for scraping were always situated in big or capital cities because vegan cuisine is often sparse in rural areas and small cities. Only textual reviews and numerical ratings were collected because the focus of this paper is to identify characteristics of vegan and vegetarian culinary experiences and test the ability of machine learning models to predict the ratings and sentiments of reviews (

Table 1).

Preprocessing the data consisted of two steps.

The first step was to remove stop words and non-English words, lemmatize the reviews, and remove any punctuation. This is a common step in preprocessing since it removes words like “the”, “a”, “I”, “on”, etc. Lemmatization is the step necessary for reducing the word to its base or canonical form.

The second step was adding the sentiment value to the reviews, thus expanding the current dataset with a semantical value, zero for negative sentiment and one for positive sentiment.

The WKWSCI Sentiment Lexicon [

43] was used to determine the sentiment of the review by summing each word’s sentiment given by the lexicon and averaging it with the number of words in the review. Positive results yielded a positive sentiment, and similarly negative results yielded a negative sentiment. Sentiment was determined by combining a numerical rating from the TripAdvisor site and the WKWSCI Sentiment Lexicon. In this lexicon, scores for sentiment are integer values that span from −3 to 3. Since the original dataset consisted of a written review and a numerical rating ranging from one to five, with five being the most positive score, sentiment analysis took those numerical ratings into consideration as well. This combination was used to gain better insight into the reviews rated with three stars and to spot any contradicting reviews where the numerical rating does not match the written review. The following rules were used to determine the sentiment of the review (

Table 2):

rating of 5 or 4 and a sentiment score higher than “−3” → positive sentiment 1,

rating of 3 and a positive sentiment score → positive sentiment 1,

rating of 2 or 1 and a sentiment score of “3” → positive sentiment 1,

rating of 5 or 4 and a sentiment score of “−3” → negative sentiment −1,

rating of 3 and a non-positive score → negative sentiment −1,

rating of 2 or 1 and a sentiment score lower than “3” → negative sentiment −1.

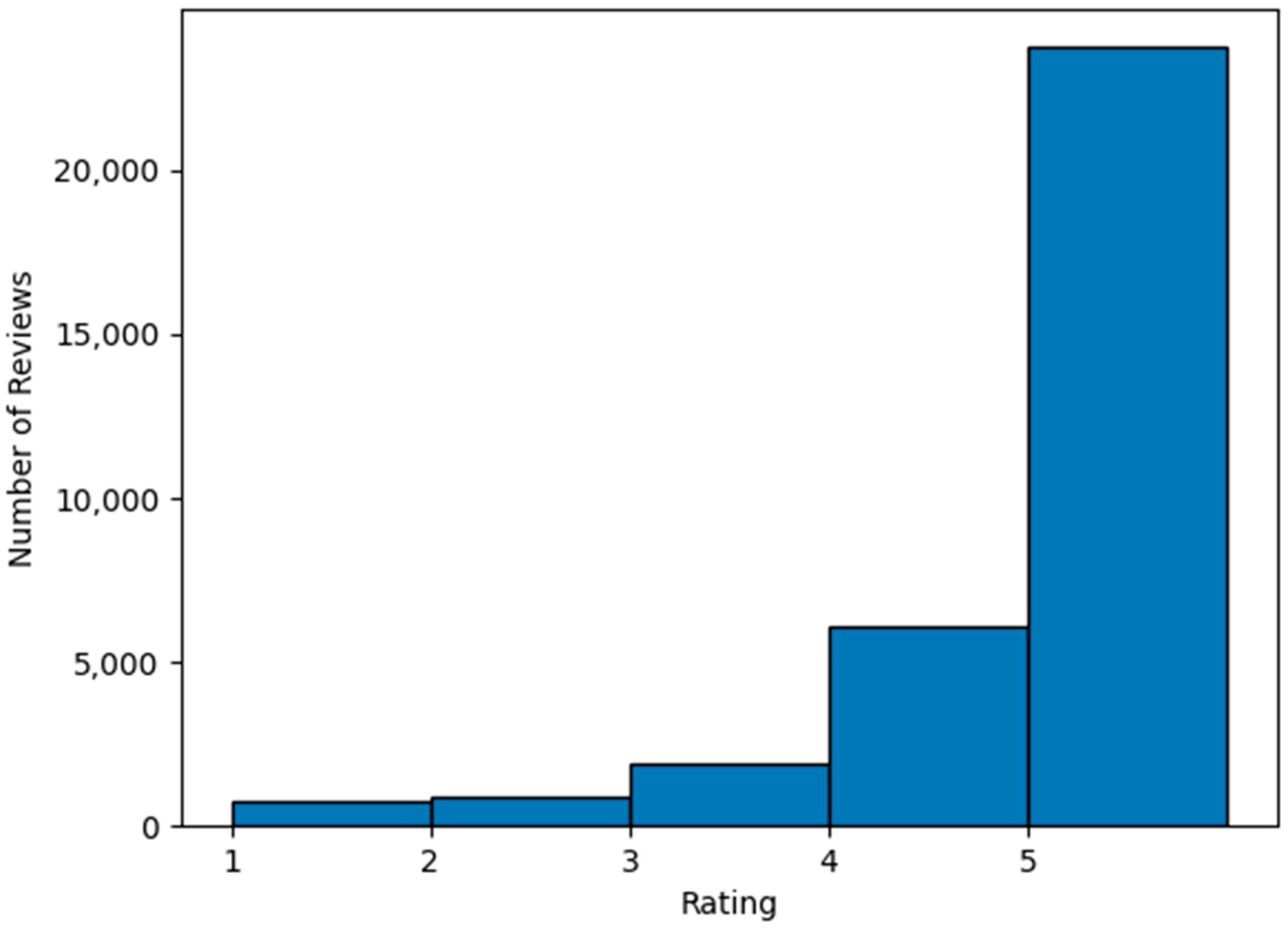

In the dataset, more than 70% of the reviews are 5-star reviews, and an additional 18% are 4-star reviews. The remaining 12% of the dataset comprises 3-star, 2-star, and 1-star reviews combined. Unfortunately, this indicates that the dataset is highly imbalanced, with a significant skew towards positive reviews. Understanding this imbalance is crucial for interpreting the model’s performance and ensuring that any evaluation metrics accurately reflect the model’s ability to handle less frequent classes.

Figure 2 gives the distribution of the star-ratings in the developed dataset.

3.2. Text Representation Model

Word representation is a means of converting words into numerical values that can then be understood by computers. Methods of word representation can be as simple as using word occurrence in a document or document frequency and as complex as using multidimensional vectors and deep learning to calculate the co-occurrence of each word with another in a document. Word embeddings, are real-valued representations of words produced by distributional semantic models (DSMs), and they are a nessecity tool in NLP, but Bakarov points out that they are often limited not well understood [

44]. More specifically word embedding can be represented as a mapping:

that maps a word w from a vocabulary

V into a real-valued vector

in an embedding space of dimensionality

D [

45]. Common word representation methods that are also used in this paper are term frequency inverse document frequency (TF-IDF) and global vectors (GloVe). TF-IDF is often used in NLP tasks because of its simplicity. It uses the relevance of the word in an entire document and scales it over all documents. Term frequency is the number of times a word or term

t appears in a document, divided by the total number of words in a document.

Inverse document frequency does an almost opposite calculation, it represents how important a word is in the entire set of words. For rare words in the corpus, IDF will be high. It is calculated by dividing the total number of documents or reviews, in this case, by the number of documents that contain a specific word—document frequency.

The TF-IDF value is then calculated as a product of the two values.

GloVe is a global log-bilinear regression model for the unsupervised learning of word representations [

46]. The researchers at Stanford trained the GloVe model on aggregated global word-word co-occurrence statistics from a corpus, and the resulting representations are multidimensional vectors with numerical values. The importance of this kind of representation is that words are represented numerically without losing their semantic meanings. This is best shown in a popular example from the GloVe research: the words king and queen are opposites when taking the gender of the word into account, just like the words man and woman.

3.3. Clustering and Visualization

To graphically represent the written reviews, we used the Multidimensional Scaling Method (MDS), which represents consumer opinions on a Cartesian plane. It is a statistical technique aimed at visually representing similar data points in a lower-dimensional space. The intent is to identify similarities in the set of terms that correspond to the dimensions of vegan restaurants. MDS is based on a distance model and can be used on existing word vectors, or a new document-term matrix can be calculated for a given dataset. The ability to calculate distances allows us to graphically interpret neighboring words. As presented in a paper on wine tours [

26], a document matrix is a matrix that describes the frequency of terms occurring in a collection of documents. Document-matrix can be transformed into a co-occurrence matrix in which the number of times two words occur together in the same review is presented. In the end, the cosine similarity index is used to calculate the distances between the terms.

In this paper, MDS is used to represent the word vectors from reviews. The word vectors used are obtained from GloVe. MDS was used to reduce the high-dimensional GloVe vectors into a two-dimensional space.

After MDS, the vectors are represented in two dimensions, and we can perform clustering to determine different topics and themes in the reviews.

A simple clustering method to categorize the embedded points is K-means. It is an unsupervised learning algorithm for partitioning data into k clusters, where each word vector belongs to the cluster with the nearest mean. It operates by initializing k centroids randomly, then it iteratively assigns each data point to the nearest centroid and recalculates the centroids based on the current cluster memberships. The process repeats until the centroids stabilize and the cluster assignments no longer change significantly.

Here the represents the centroid for each cluster i and is a set of data points assigned to the cluster, and x are the individual data points in the cluster. In this case, x is one of the top 200 words occurring in the reviews. The main downfall of K-means as an unsupervised learning algorithm is the need to know the number of centroids or clusters before training.

Since the actual number of topics is not known in advance, two common methods for determining

k are presented: the elbow method and the silhouette method. These methods have been successfully applied in various studies, such as sentiment analysis of tweets [

47] or the development of machine learning models for treatment prediction [

48].

The elbow method is a heuristic used in determining the optimal number of clusters for K-means clustering. It involves running the K-means algorithm on the dataset multiple times for a range of k values and calculating the sum of squared distances from each data point to its assigned centroid. When all of these distances are plotted against the number of clusters, the “elbow” point can be seen on a graph, where the rate of decrease sharply changes, signifying the natural division of clusters.

The other method is the silhouette method. It measures the quality of clustering by calculating the silhouette coefficient for each data point or word. This value ranges from −1 to 1, where a low value indicates that the data point is poorly matched to its own cluster and well matched to the neighboring clusters. The optimal number of clusters k is usually the one that maximizes the average silhouette coefficient across all data points and the one that has the silhouette widths approximately the same.

3.4. Machine Learning Methods Used in the Experiment

Since the problem presented in this paper is a binary and a multiclass classification problem, several machine-learning methods were explored and tested. The five methods used were Naïve Bayes, support vector machines (SVM), random forest, a neural network, and Bidirectional Encoder Representation from Transformers. These methods can be used for binary classification (sentiment analysis) and multiclass classification (rating prediction).

The selection of algorithms for the experiment was primarily guided by the existing literature in the field of sentiment analysis for vegan and vegetarian restaurants. The review of previous studies, as described in

Section 2, revealed that the selected algorithms are the most suitable due to their effectiveness in processing textual data and their ability to extract meaningful patterns relevant to consumer behavior and sentiment.

3.4.1. Naïve Bayes

A simple yet powerful machine learning method is the Naïve Bayes classifier. Bayes’ Theorem is at the center of the Naïve Bayes classifier with the naïve assumption that features are independent given the class label. The probability that the Bayes theorem calculates is the probability of an event based on prior knowledge. Bayes theorem is presented in the equation below where

P(y|x) is the posterior probability of a class

y given the data point

x, i.e., the probability of the data point

x belonging to the class

y.

P(x|y) is the likelihood, which is the probability of observing the data point

x, given that it belongs to class

y.

P(y) is the prior probability of the class

y before observing the data.

P(x) is the marginal likelihood, which is the probability of observing the data

x under all possible classes.

The task of multiclass classification with Naïve Bayes classifier is similar to the binary one, but the computation extends across more than two classes. The predicted class is one with the maximum posterior probability P(y|x).

3.4.2. Support Vector Machines

Support vector machines (SVMs) find the optimal hyperplane that maximizes the margin between classes. In higher dimensions, this involves constructing a hyperplane or a set of hyperplanes in a high-dimensional space. For a sentimental analysis task, the SVM algorithm seeks the hyperplane with the largest margin between the two classes, positive and negative, where the margin is defined as the distance between the hyperplane and the nearest points from either class, known as support vectors. The SVM optimization problem has defined constraints regarding the support vectors and is therefore solved as a dual Lagrange function. It aims to maximize the margin width between the support vectors and the hyperplane while using only the closest data points to the margin. This optimization problem with constraints can be modeled as follows:

Subject to constraints:

The optimal solution found represents the hyperplane that separates the two classes. For a rating prediction, the SVM method extends binary SVM through methods like “one-vs-one” (OVO) or “one-vs-rest” (OVR), where multiple binary classifiers are trained, and a voting system decides the final classification, making it a collection of binary classifiers.

3.4.3. Random Forests

Random forests accumulate the predictions of multiple decision trees to improve classification accuracy. It operates by constructing a multitude of decision trees during the training phase and outputting the class, that is, the mode of the classes for classification or mean prediction for regression of the individual trees. For a binary classification, each tree in the forest makes a binary decision, and the class with the majority vote across all trees is chosen as the final classification. Since sentiment analysis datasets can often be unbalanced, the use of random forest can be useful because the random forest is naturally equipped to handle imbalanced data through its ensemble approach. For a multiclass classification, the process is similar to binary classification, but each tree’s decision contributes to a vote for one of multiple classes. The class receiving the highest number of votes is selected. The training algorithm for random forests selects a random sample with the replacement of the training set and fits the trees to these samples. After training, predictions for unseen sample

are made by finding the average of predictions given from all the individual trees on the unseen sample shown as:

where B is the total number of trees used and

(

x) is the prediction from each of the trees.

3.4.4. Neural Networks

Neural Networks consist of layers of connected neurons, where each connection represents a weighted dependency. Activation functions determine the output at each node, allowing the network to model complex non-linear relationships. In a binary classification neural network, the output layer typically has a single neuron with a sigmoid activation function, yielding a probability score. The decision boundary is usually set at 0.5, with scores above this threshold classified as one class and below as the other.

For multiclass classification, the output layer contains a neuron for each class with a softmax. Softmax is a mathematical function that converts a vector of numerical values into a vector of probabilities, where the probabilities of each value are proportional to the exponential of the original values. Here, it is used in the last layer of a neural network for a rating prediction, and the output values sum up to 1, which can be interpreted as class probabilities. The class with the highest probability is the predicted class. The Formula (8) shows the way a neural network calculates the softmax value for each node that represents each of the multiple classes.

3.4.5. Bidirectional Encoder Representation from Transformers

Bidirectional Encoder Representation from Transformers is a language model based on transformer architecture. Transformers are a class of deep learning models that utilize self-attention mechanisms to weigh the importance of different parts of the input data differently, enabling the model to capture complex dependencies and relationships in text data. In a survey regarding BERT-related papers, it was concluded that in 2020, BERT became the baseline for NLP tasks [

49]. BERT consists of three modules: embedding, a stack of encoders, and un-embedding. The embedding module is used to convert an array of one-hot encoded tokens into an array of vectors. The second module performs transformations over the array of vectors from the first module. The last module is the un-embedding module, and it translates the final representation of the vector back into one-hot encoded tokens.

Using BERT consists of two main steps: pre-training and fine-tuning. Pre-training consists of many different tasks regarding training objectives and how to approach them. Pre-training can consist of masking words or experimenting with span replacement. Fine-tuning is crucial to teach the model to focus more on the task at hand.

3.5. Model Architectures

The initial phase of the research involved a thorough analysis of the dataset using the K-Nearest Neighbours (KNN) algorithm, varying the value of k from 2 to 15 to determine its impact on model performance. MDS was used to represent the data in three clusters to visualize the dataset’s intrinsic structure, providing a foundational understanding of the dataset’s characteristics. The investigation was structured around two primary tasks: sentiment analysis and rating prediction. The sentiment analysis aimed to categorize textual data into sentiment classes, while the rating prediction task was approached as a multiclass classification problem, predicting ratings on a scale from 1 to 5. Two methods were utilized to represent the text: term frequency-inverse document frequency (TF-IDF) vectors and GloVe (Global Vectors for Word Representation) embeddings.

The first task involved sentiment analysis using TF-IDF and later GloVe vectors to transform text data into a numerical format conducive to machine learning models. We applied NB, SVM, Random Forests, NN, and BERT to this representation, analyzing the effectiveness of each model in discerning sentiment from textual data. The second task focused on predicting ratings using TF-IDF vectors and GloVe vectors. This multiclass classification challenge required the models to predict a rating scale from 1 to 5, assessing the capability of NB, SVM, Random Forests, NN, and BERT to handle small distinctions in textual sentiment and user ratings.

3.6. Model Implementations

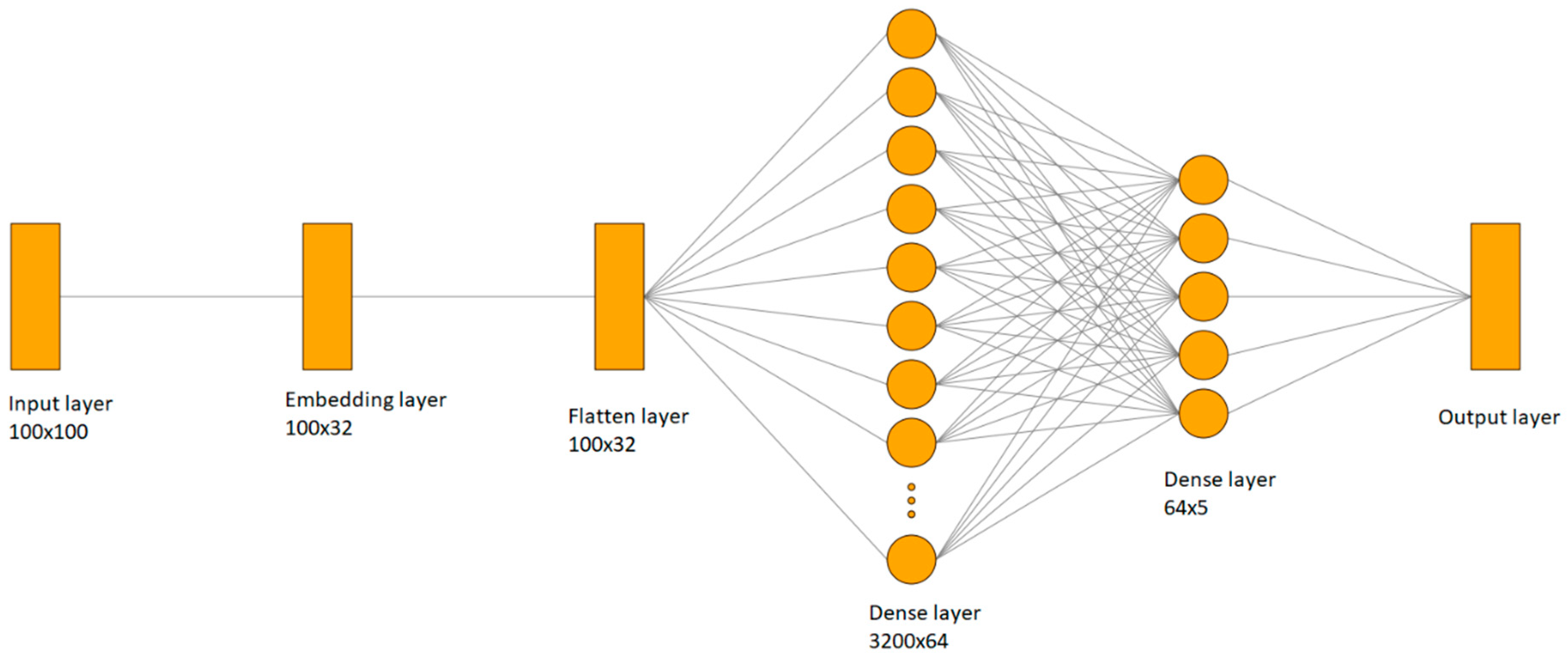

The neural network model is constructed using the Sequential API from Keras 2.7.0 and includes an Embedding layer that converts input sequences into dense vectors of fixed size, with a vocabulary limited to 5000 words. Following the Embedding layer, a flattened layer transforms the 2D matrix output into a 1D vector for input into a Dense layer. The Dense layer has 64 units with a ReLU activation function to capture patterns in the data. The final output layer is a Dense layer with five units using a softmax activation function for multi-class rating classification by producing a probability distribution over the classes. The model is compiled with the Adam optimizer and sparse categorical cross entropy as the loss function, which is suitable for multi-class classification problems where target labels are integers. The visualization of the neural network is shown on

Figure 3, with the corresponding layers.

The SVM model used has a linear kernel, which is effective for handling linearly separable data, particularly when transformed into high-dimensional space by TF-IDF. The regularization parameter is set to 1.0, with a balanced approach between achieving a wide margin and minimizing misclassification errors. The text features are extracted using a TF-IDF vectorizer, and English stop words are removed to improve the relevance of the features.

The Random Forest model was configured with 100 decision trees, which helps to improve model robustness and accuracy by aggregating the predictions from multiple trees. The model uses a random seed to ensure that the results are reproducible and consistent across different runs. The TF-IDF vectorizer is used to transform the text reviews into numerical features, with common English stop words removed.

The Multinomial Naive Bayes classifier is used with its default parameters, which include alpha = 1.0 for smoothing, helping to handle zero frequencies in the data, and fit_prior = True, allowing the model to learn class priors from the training data. The TfidfVectorizer is set to limit the vocabulary to the top 5000 features, which helps manage computational efficiency and focuses on the most significant terms.

After the initial setup of the models, it is crucial to separate the training and testing data. The dataset for all models was initially split into 80% training data and 20% testing data. Some models, like neural networks and BERT, use an additional validation set. During the training of the neural networks, a further 10% of training data was reserved for validation, resulting in 8% of the original dataset being used for validation. This leaves 72% of the original dataset for training. The final 20% of the original dataset was utilized for testing the model’s performance. This approach ensures that we have a robust evaluation of the model’s ability to generalize to unseen data, with a clear separation of training, validation, and testing phases.

4. Results

In this section, the results of the paper are presented. Firstly, the visualization and clustering that used multidimensional scaling and KNN clustering are shown. The purpose of this was to better understand the characteristics of the dataset. Secondly, the results of sentiment analysis and rating prediction with various machine learning models are shown. All machine learning methods were tested once with a TF-IDF word representation and again with a GloVe word representation. As presented in the previous section, the task of determining the number of clusters in a dataset can be complex.

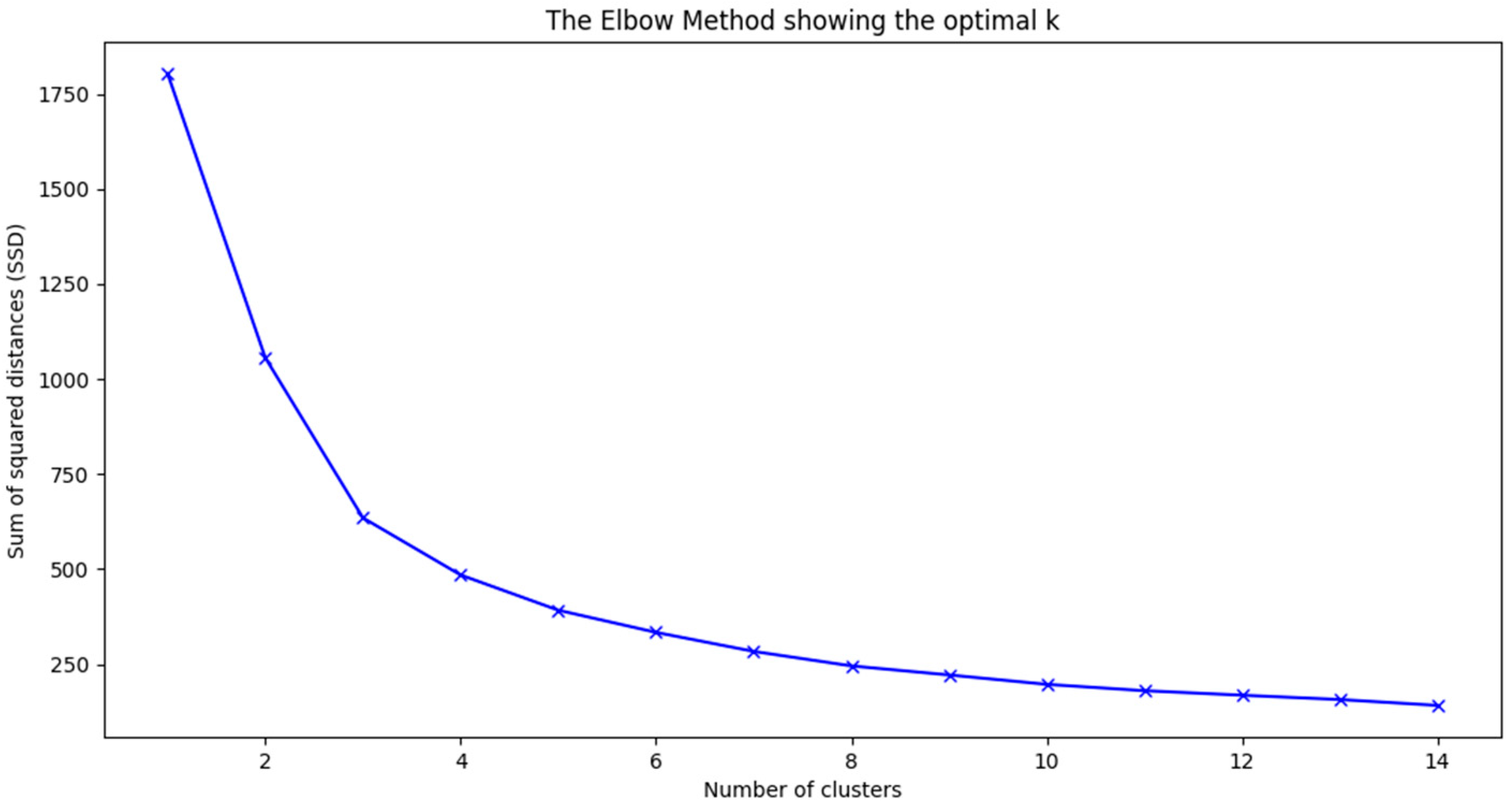

Figure 4 shows the results of the elbow method. Since there is a significant change in the decrease rate at

k = 3, we can take that into consideration when determining the optimal number of clusters.

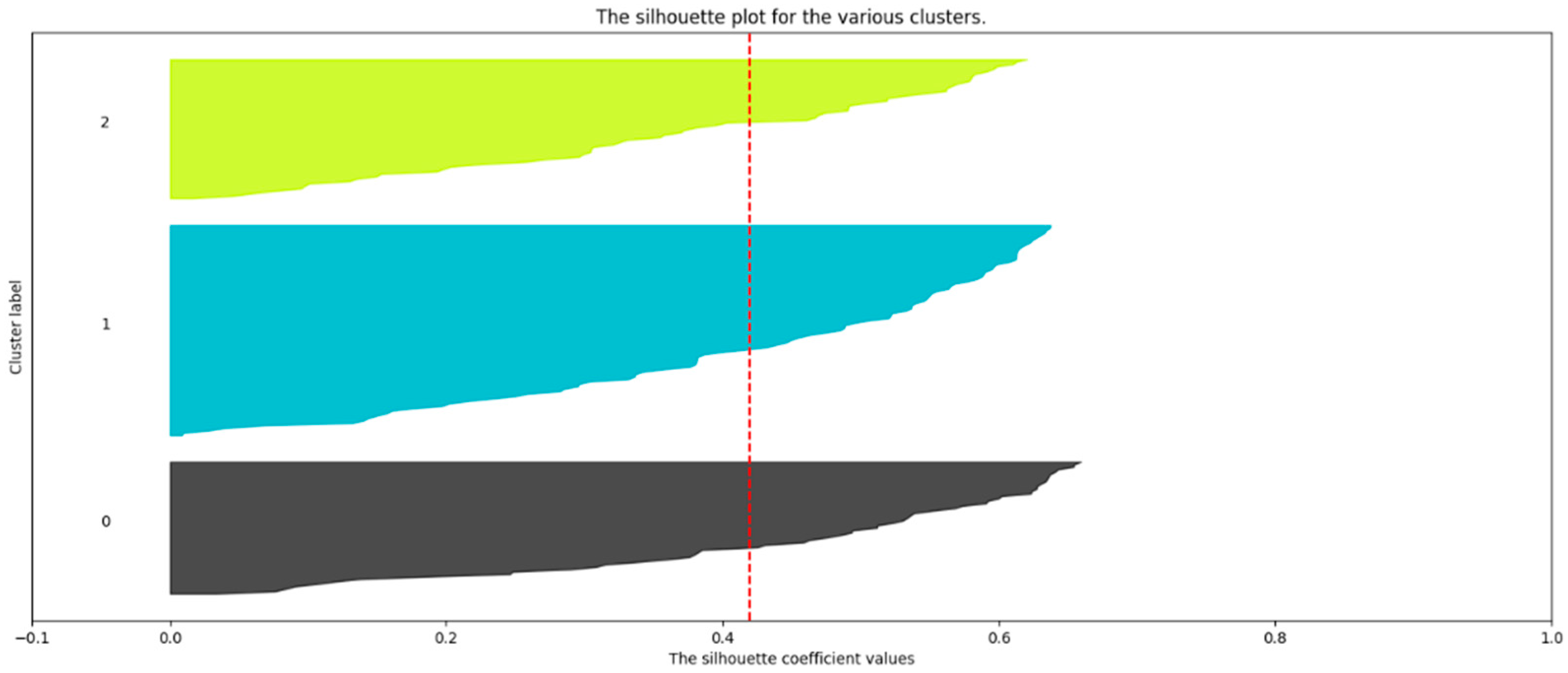

Figure 5 shows the silhouettes for

k = 3 clusters. All three silhouettes exceed the average silhouette coefficient represented by the red stripped line, and they do not vary too greatly in silhouette width.

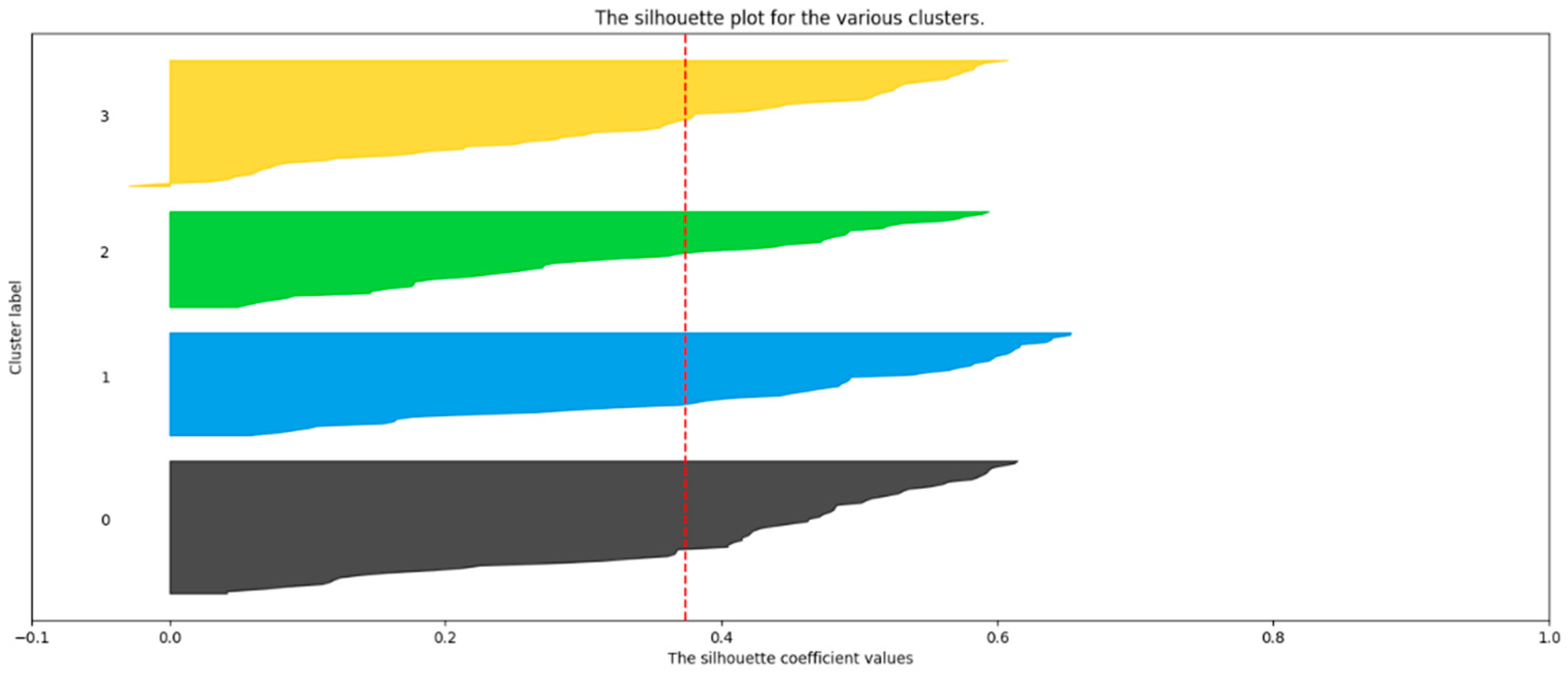

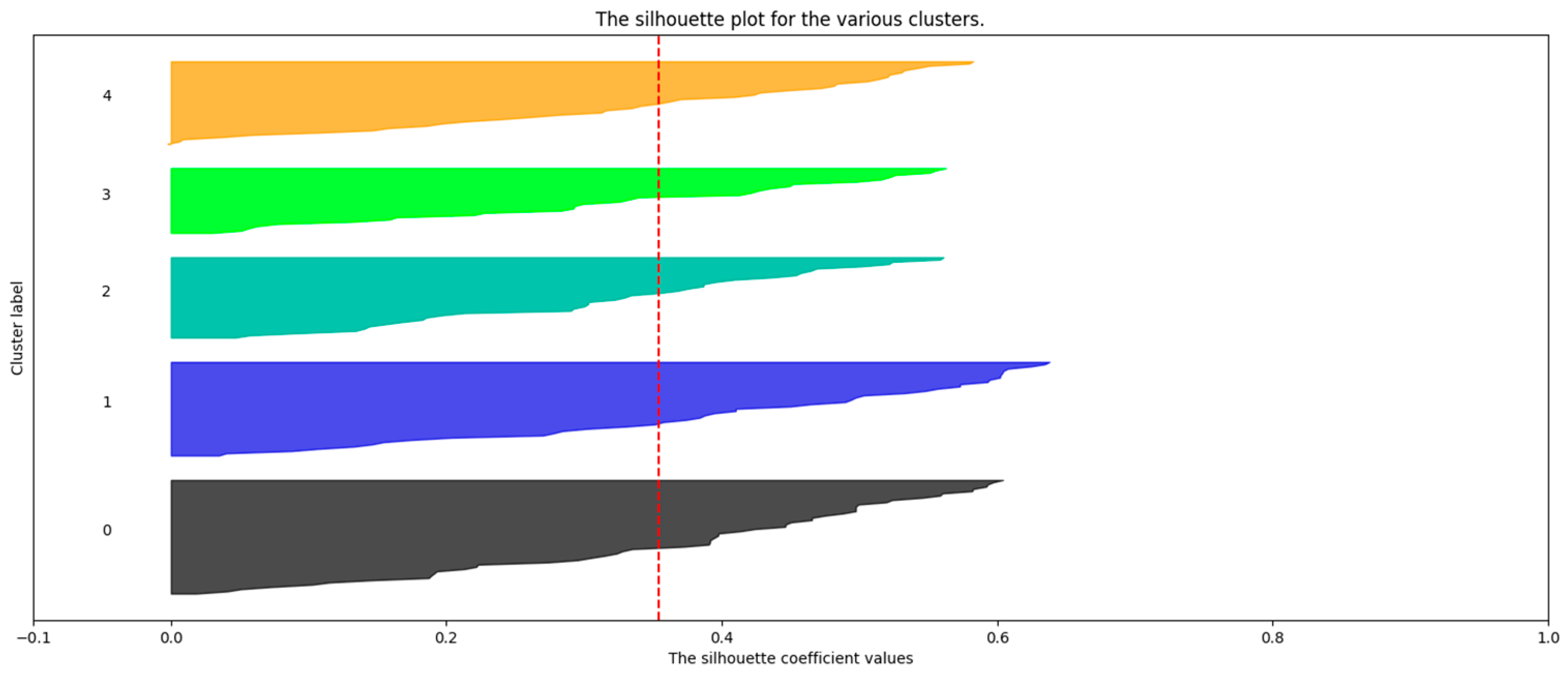

Figure 6 and

Figure 7 show silhouette methods for

k = 4 and

k = 5, respectively, and even though all silhouettes exceed the average silhouette score, some of them are too narrow, which indicates that data points are poorly matched to their assigned clusters.

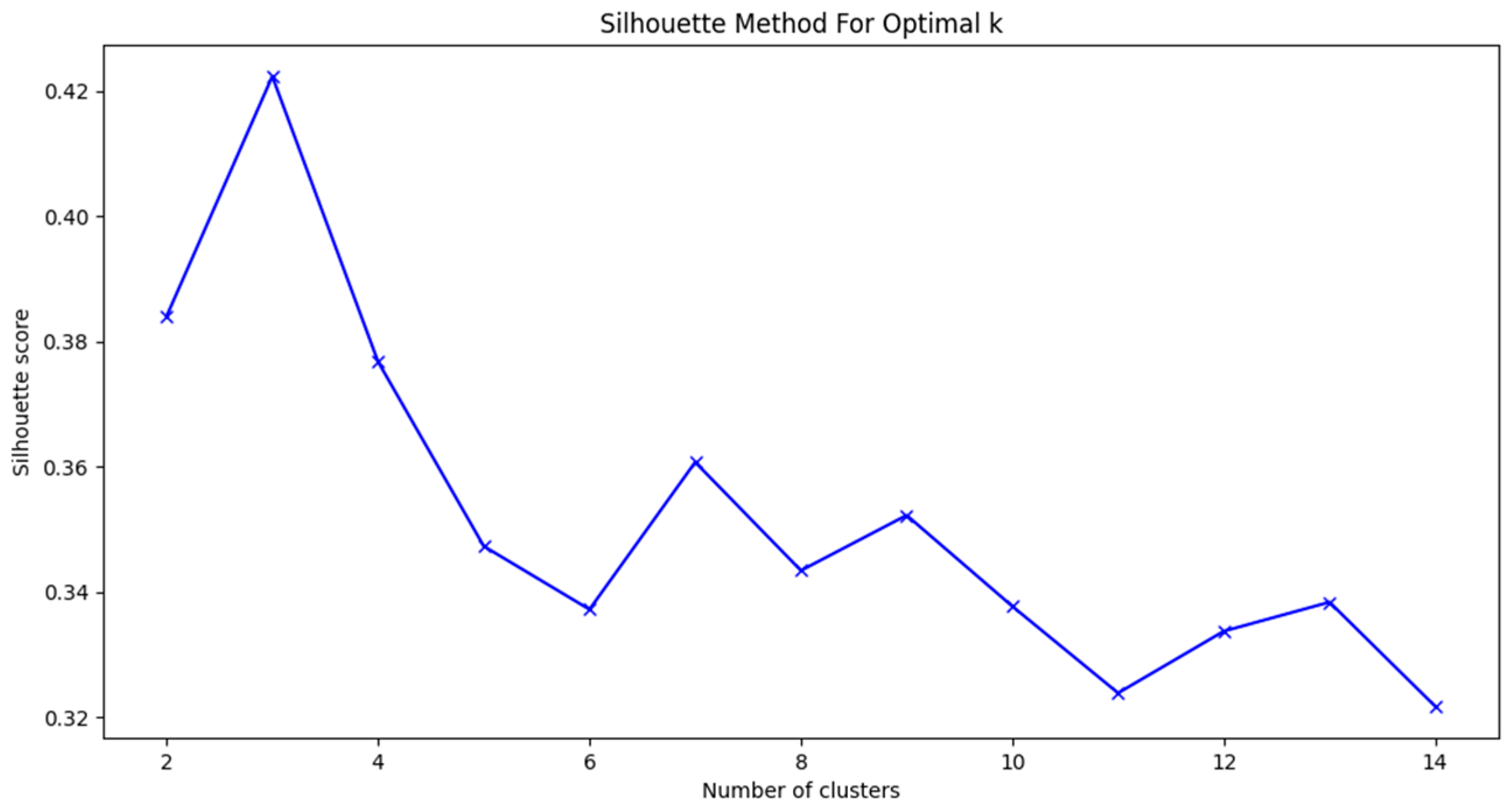

Figure 8 shows the change of the silhouette score based on the number of clusters k. This method prefers the values of k that maximize the silhouette score.

Based on the elbow method and the silhouette methods, the chosen number of clusters for the top 200 words in the dataset was k = 3.

According to the silhouette method (

Figure 5,

Figure 6,

Figure 7 and

Figure 8) and the elbow method (

Figure 4), the number of clusters was selected to be

k = 3 because the silhouettes were uniform for this value of

k, which is desirable when using this method for cluster selection. The silhouette method for a different number of clusters showed a greater variation in silhouette strength, which is not desirable. As can be seen in

Figure 4, the elbow method suggests k as the value at which the gradient stabilizes. Based on this figure, both the values

k = 3 and

k = 4 could be chosen, but after applying the silhouette method, the value

k = 3 was finally selected.

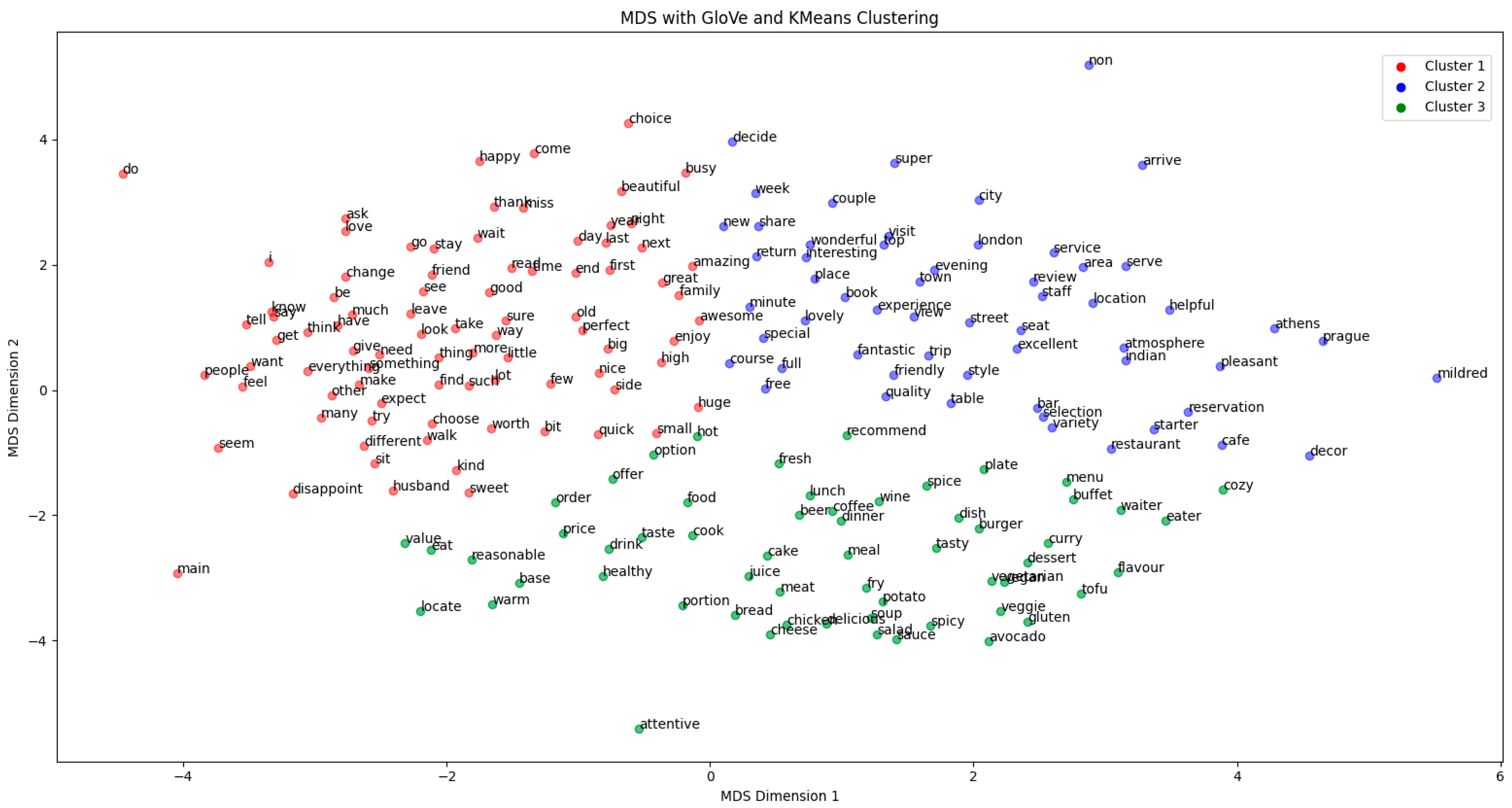

After these results were obtained, it was possible to visually represent and determine different topics of the vegan restaurant reviews, as depicted in

Figure 9.

Three primary clusters were identified:

the first highlights the restaurant’s setting and ambiance,

the second focuses on the personal experiences and emotional responses of customers,

the third emphasizes the quality, variety, and specific dietary options of the food served.

In

Table 3, the most common words from each cluster are shown. The physical environment and accessibility, including decor, location, and convenience features like parking, play a significant role in the dining experience, as reflected in words such as “new”, “street”, and “atmosphere”. Personal experiences are characterized by emotional engagement and service quality, with words like “happy”, “enjoy”, and “love”, while the food is described through its taste, presentation, and dietary inclusivity, with words like “wine”, “vegan”, and “flavour”.

4.1. Predicting Sentiment and Rating

The results of the two main tasks, sentiment analysis and rating prediction, are shown here. The sentiment analysis is used to classify reviews into positive or negative, and the rating prediction task is a multiclass classification problem, predicting ratings on a scale from 1 to 5. Two methods were utilized to represent the reviews: TF-IDF vectors and GloVe embeddings. Machine learning methods that were used are naive Bayes (NB), support vector machines (SVM), random forests, neural networks (NN), and BERT.

BERT was trained in 4 epochs with a batch size of 16. All machine learning models apart from BERT were tested with TF-IDF and GloVe vectorization and compared; only BERT preprocesses the text in its first step and uses the data from pretraining for word embeddings. The neural network that was used for rating prediction consists of four layers with the activation function ReLu. The input layer is the embedding layer, and it has 100 nodes. The first hidden layer is used for flattening the data, and it consists of 10,000 nodes and no parameters since its goal is only to transform data and not to learn any patterns. The second hidden layer is used to find patterns within the data, and it has 64 nodes. The output layer is the softmax function that has 5 nodes for each class of rating.

4.1.1. Sentiment Analyis

The sentiment analysis task had to classify reviews into positive (+1) and negative (−1) classes. The results, summarized in

Table 4 for models with TF-IDF and in

Table 5 for models with GloVe, show the performance of neural networks, random forest, support vector machines (SVM), naïve Bayes (NB) models, and BERT. The measures that are presented are overall accuracy, where models exhibited accuracy scores ranging from 0.94 to 0.96 for TF-IDF and 0.94 to 0.96 for GloVe representations. Other values presented are F1 scores for a positive and a negative class. The F1 scores for the positive class were notably high across all models and word representations, indicating robust model performance in identifying positive sentiments. The F1 scores for the negative class were significantly lower than those for the positive class, especially for models trained with GloVe vectors.

Our results demonstrate a higher accuracy value over the studies regarding customer reviews presented by Hossain [

50] on MNB with an accuracy score of 0.7619 and SVM accuracy of 0.7142, Martin [

33] using an LSTM neural network with an accuracy of 0.89, and Wadhe [

51] who obtained accuracy results of 0.86 with TF-IDF vectorization and random forests. This could be due to a variety of factors, including differences in datasets, feature representation methods (use of GloVe or TF-IDF), or model architecture and tuning.

Our results obtained from the neural network results are comparable to Hossain’s [

52], with some of our models even surpassing the accuracy of a combined CNN-LSTM architecture. This could indicate that simpler models, when properly tuned and coupled with effective feature representation methods, can achieve competitive or superior performance.

The BERT model in our analysis achieves the highest accuracy, which is consistent with current trends in natural language processing, where pre-trained transformer models like BERT show superior performance on a variety of tasks due to their deep understanding of language context. The differences in accuracy can also be attributed to methodological variations, such as the preprocessing steps, the size and nature of the dataset, the choice of feature extraction techniques, and the specific configurations of the models used.

4.1.2. Rating Prediction

The rating prediction task focused on classifying written reviews into one of five ratings (1 to 5) based on a preprocessed written review. Since reviews and rating datasets are known to be unbalanced, with the examples of positive classes or high-rating classes outnumbering the negative or lower-rating classes, it is important to consider class balancing. In our case, the five-star restaurant reviews greatly outnumber the other lower-rated reviews, as presented in

Figure 2.

This kind of imbalance can skew sentiment analysis and clustering results. While this bias is a well-documented issue in review data, our study aimed to explore these biases explicitly to understand how they affect sentiment analysis outcomes. By doing so, we sought to contribute to the field by providing insights into the limitations and reliability of sentiment analysis in real-world applications.

We considered approaches to mitigate the skewness in the data. The results in

Table 6 and

Table 7 show the differences between neural networks without class balancing and with it. Class balancing was implemented by using class weights, where less frequent classes have a higher class weight. The calculated class weights are inversely proportional to the class frequencies, and those values are passed to the neural network model during training.

Table 13 details the accuracy of each rating category using TF-IDF and GloVe representations, respectively. The accuracy of the models varied, with BERT generally outperforming other models. The model has generally performed well in predicting the extreme classes (1 and 5). For other machine learning models, NN, RF, SVM, and NB, similar accuracy was obtained across both word representations, though GloVe-based models tended to have lower F1 scores for intermediate ratings than their TF-IDF counterparts. TF-IDF word representation consistently gave slightly better results across most models and rating categories.

5. Discussion

It is important to point out that the dataset used in the presented research was created based on data from public services where users voluntarily submit their ratings, which are publicly available. However, sentiment analysis and predictive models may have inherent biases due to the nature of the data, such as the over-representation of certain sentiments or the possibility of misinterpretation of different languages for public reviews by tourists from different countries visiting Croatia. Furthermore, the results are only generalizable to a limited extent, as the dataset is region-specific and focuses on a niche market in Croatia.

5.1. Elements of Perceived Quality in Vegan and Vegetarian Restaurants

The visualization and clustering of the most frequent 200 words in the reviews can give us insights into the answer to the first research question stated in the introduction: what are the elements of perceived quality in vegan and vegetarian restaurants?

In

Figure 9, the clustering of the most common 200 words is shown. Only adjectives, verbs, and nouns were considered since non-abstract dominant topics are more significant in textual analysis. The three identified clusters represent different aspects that customers value in a restaurant experience:

The first cluster (in red) is focused on customers’ emotional responses; this cluster suggests that customers highly value the emotional aspect of dining out, including how happy, welcome, and satisfied they feel. It represents the terms customers use to describe their personal experience about the restaurant experience. This includes the feelings and emotions elicited by the restaurant, the quality of customer service, and the personal engagement with the staff and the dining environment. Some of the words that are common and represent this cluster are: “happy”, “think“, “wait”, “enjoy”, “love”. The focus is on the quality of the experience, including the attentiveness of the staff and the personal connection customers have with the place and the people.

The second cluster (blue) encompasses aspects related to the physical environment and accessibility of the restaurant. It likely includes words that describe the restaurant’s location, ambiance, decor, and convenience features such as parking availability and ease of access; some of the words from this cluster are: “new”, “street”, “free”, “atmosphere”, “experience”. The presence of these words indicates that the restaurant’s setting, including its atmosphere and physical location, is a crucial factor in the dining experience. This cluster highlights the importance customers place on the overall environment, including how new, modern, or conveniently located the restaurant is.

The third cluster (green) highlights the importance of food quality, creativity in meal preparation, and the availability of a variety of options that cater to vegan dietary preferences. There is a focus on the actual meals, including the taste, presentation, and diversity of the menu, especially in terms of accommodating different dietary needs like gluten-free or vegan options. Main words that describe this cluster are: “wine”, “burger”, “vegan”, “lovely”, “flavour”. The emphasis here is on the quality and variety of the food, including specific dishes, ingredients, and diets.

5.2. Comparison of Models in Sentiment Analysis and Rating Prediction

The results presented in

Table 1,

Table 2 and

Table 3 can give us insights into the second research question: how do different machine learning models compare in sentiment analysis and rating prediction of vegan restaurant reviews? As shown in the results, there is a slight variance in different word representation methods, and it indicates that both TF-IDF and GloVe representations are effective in capturing sentiment-related features in the text data. F1 scores were high for positive classes since there is a far greater number of data points with a positive label.

Since F1 values for the positive class were slightly higher with TF-IDF compared to GloVe, this may suggest that TF-IDF’s emphasis on unique terms slightly benefits the identification of positive sentiments. To further analyze the reasons for TF-IDF representation resulting in higher accuracy values than GloVe representations, we focus on the results achieved by the neural network for both representations.

A possible reason in the context of a neural network for rating prediction is that TF-IDF measures the “importance” of a term in a review relative to the entire dataset, which can sometimes be more effective for a domain-specific language. Additionally, the context provided by GloVe vectors, in this case, is limited, as they are simply arranged in a 100 × 100 matrix (with 100 words, each represented by a vector of 100 values), where the i-th row corresponds to the GloVe vector for the i-th word in the review, meaning that a “true” embedding matrix was not created. TF-IDF may also perform better with smaller datasets because it uses word frequencies directly from the dataset in use. Furthermore, for models like SVM and Naive Bayes, which excel with high-dimensional data and sparse matrices, TF-IDF is advantageous as it provides exactly such matrices and data.

In sentiment analysis, the F1 score for negative classes was of considerably lower value. This disparity highlights the challenge of accurately classifying negative sentiments, potentially due to imbalanced datasets. The rating prediction results show that the machine learning models were less successful at determining the rating of a written review. However, out of the machine learning models that tackled this task, BERT performed best. Since no vegan or vegetarian-specific lexicon was used, BERT may have performed better due to its ability to pre-train the model on the given data and better understand it. Research on BERT pre-training showed that using unsupervised pre-training is important for understanding [

53]. Other machine learning models performed similarly to BERT and with the sentiment analysis task using TF-IDF word representation yielded better results in rating predictions than GloVe word representation. This could be due to TF-IDF’s ability to highlight unique terms that are more predictive of specific ratings. In contrast, the GloVe representation, despite capturing semantic relationships, might dilute these unique indicators due to its pre-trained nature on a vast corpus.

6. Conclusions

This study aimed to explore the elements of perceived quality in vegan and vegetarian restaurants and to evaluate the effectiveness of different machine-leaning models in sentiment analysis and customer rating prediction. Through the analysis of the most frequent words, the research method identifies three distinct clusters that represent key aspects valued by customers. The clustering methods, supported by the elbow method and silhouette method, separated the topics into three groups: the restaurant ambiance, personal feelings and emotions, and the quality of food. Looking at the comparison of machine learning models in sentiment analysis and rating prediction, results indicate slight variances between TF-IDF and GloVe word representations. Both methods proved effective at capturing the sentiment of reviews, with a slight advantage in identifying positive sentiments. This could be due to TF-IDF identifying unique terms. Even in sentiment analysis, the challenge of tacking imbalanced datasets remains present, hence the less than desirable F1-score values of the negative class. In the task of rating prediction, BERT showed the best accuracy scores, likely due to its pre-training step. Since the vegan and vegetarian communities have grown rapidly in the last few years, there is a need to understand these new customers. Vegan and vegetarian restaurants have a major dietary difference from most restaurants, and therefore, customers could have different aspects of the dining experience they value more than customers of non-vegan restaurants. Transparency of vegan and vegetarian restaurants is valued amongst their customers, and there is a need to better understand customers’ wishes and concerns.

There are no freely available restaurant or food-specific lexicons to our knowledge, and more specifically, there are no vegan and vegetarian-specific lexicons; future research could be conducted to bridge this gap. Another significant problem of this study is the imbalance of the dataset presented in

Figure 3. We can see that the number of reviews within each of the five rating classes varies greatly. Most reviews have five stars or four stars, and the other three classes are not represented in the same numbers or even in the same order of size. Many written reviews have negation, or some opinions are not literally and clearly expressed. Since the preprocessing of tasks did not tackle negation, handling the data itself can be skewed. The use of some negating or contradicting words can change the entire meaning of a sentence, and it should be properly handled in future work. This is even more so important for underrepresented reviews with a low rating. In the sentiment analysis task, there was no neutral class, which could be a poor representation of a real review since customers have the ability to choose the rating of three stars as the neutral option. Regarding the second research question, there is room for improvement when it comes to comparing machine learning models. The presented values are overall accuracy and F1 scores for different classes, but there are more ways to compare models, and in future work, a more comprehensive way of comparing the models should be considered.

There are at least three benefits of the presented research. First, the presented predictive model provides restaurant owners in Croatia with valuable insights into customer sentiment and customer preferences by analyzing review content. By identifying which aspects of their services and offerings are most strongly associated with higher ratings, restaurant owners can prioritize improvements and refine marketing strategies to better meet customer expectations, leading to higher satisfaction and potentially increased revenue. Second, for customers, the model provides a tool to make more informed restaurant decisions by predicting ratings based on review content. This allows them to quickly find restaurants that meet their specific needs, especially in niche markets such as vegan and vegetarian cuisine. This leads to a more personalized and satisfying restaurant experience. Finally, review platforms can use the model to improve the accuracy and reliability of ratings by cross-verifying numerical scores with the sentiments expressed in the written reviews, identifying and flagging inconsistent or biased entries.

Future work can be organized around three key areas: (1) model improvement, (2) feature exploration, and (3) applying the presented research in other contexts.

For model improvement, future research could explore the integration of additional machine learning models, particularly deep learning techniques that can capture more complex data patterns. The use of models such as LSTM networks or transformer-based models (e.g., BERT) could improve both the accuracy and interpretability of sentiment analysis and valuation predictions. As for feature exploration, the authors recommend extending the analysis beyond text-based sentiment to include metadata about the reviews, such as the time of the rating, the location of the rater, or the length of the rating. These additional features could provide deeper insights and potentially improve the predictive power of the models. Finally, future work on applying the research in other contexts could extend the study to other geographic regions or niche markets within the hospitality industry. For example, applying the methodology to vegan and vegetarian restaurants in areas with more established markets could provide valuable comparative insights and help assess the generalizability of the models.

The study not only improves the understanding of the niche market of vegan and vegetarian gastronomy in Croatia but also illustrates how sentiment analysis and clustering techniques can be effectively applied to similarly underrepresented areas. This research holds significant value for researchers interested in exploring sentiment analysis and customer feedback in contexts with limited data availability.