Abstract

The digital era introduces significant challenges for privacy protection, which grow constantly as technology advances. Privacy is a personal trait, and individuals may desire different levels of privacy, which is known as their “privacy concern”. To achieve privacy, the individual has to act in the digital world, taking steps that define their “privacy behavior”. It has been found that there is a gap between people’s privacy concern and their privacy behavior, a phenomenon that is called the “privacy paradox”. In this research, we investigated whether the privacy paradox is domain-specific; in other words, does it vary for an individual when that person moves between different domains, for example, when using e-Health services vs. online social networks? A unique metric was developed to estimate the paradox in a way that enables comparisons, and an empirical study was conducted in which validated participants acted in eight domains. It was found that the domain does indeed affect the magnitude of the privacy paradox. This finding has a profound significance both for understanding the privacy paradox phenomenon and for the process of developing effective means to protect privacy.

1. Introduction

In the current digital age, observers have noted that users’ increased privacy awareness influences their decisions when using the Internet and in other situations [1,2]. These decisions are significant because digital platforms, which are the foundations of the digital age, are expanding and penetrating increasingly large portions of our lives [3,4]. Users are exposed to an extensive range of privacy violation risks, on a variety of platforms. These risks occur, for example, when browsing the Internet [5], buying or selling via e-commerce [6], using wearable eHealth technologies [7], using cloud computing via 5G technologies [8], and using devices connected to the Internet of Things (IoT) [9].

Privacy concerns, which stem directly from the abovementioned risks, are one of the main drivers behind users’ decisions [10]. “Privacy concern” (also known as “privacy attitudes”) might be defined as: “concern about the safeguarding and usage of personal data provided to an entity (such as a firm)” [11]. Just as privacy risks can be observed on most digital platforms, privacy concerns are present there as well, for example, when using online social networks (OSN) [12], mobile technologies [13], and search engines [14]. These insights are correlated. The presence of privacy concerns leads end users to act in a certain way, which constitutes their privacy behavior. Privacy behavior may be defined as “an active process in which individuals limit access to personal information to attain a balance between desired and actual privacy” [15].

Given the effect of privacy concerns on privacy behavior, we might expect them to match. However, research has shown that this is only rarely the case; indeed, a significant inconsistency between people’s privacy concerns and their privacy behavior can be observed. This phenomenon is called the “privacy paradox”, a term coined by Barry Brown [16]. He noticed that the use of supermarket loyalty cards was not consistent with customers’ privacy concerns. The digital security company Norton formulated a commercial definition of the privacy paradox: “discord between our actual online activities and our attitudes about online privacy” [17]. The unexpected deviation from the privacy concern, which is revealed by the actual privacy behavior, reflects the privacy paradox.

A prominent example of the privacy paradox is the frivolity with which consumers share sensitive personal data in contradiction to their concerns about their ability to control this data [18]. It was shown that while “privacy concerns negatively affect the intention to disclose personal information”, they do not significantly affect users’ actual disclosing behaviors [19]. Another case of this phenomenon appears in the Mobile Health (mHealth) field, when the growing concern regarding this sensitive data collection is not expressed in the users’ behavior toward these applications [20]. It was also found that there is only a low correlation between the users’ stated concerns and the authorizations they grant when sharing information, e.g., when using OSN [21]. Sometimes, the term “privacy paradox” (or other similar terms) is used to express tension between two different entities, e.g., teenagers in America disclosed intimate information online while governments and commercial entities collect and use this data [22]. However, this study uses the term “privacy paradox” in its classic, prevalent definition, i.e., when tension exists within the same individual. The privacy paradox, in this sense, has been researched in specific domains, as noted above, and also as a general phenomenon. For example, Gerber et al. [23] claim that while users consider privacy a vital issue, they hardly take any significant action to protect their data.

It seems that most researchers agree that the privacy paradox does exist, and a significant number of attempts have been made to explain it. One prominent theory attributes the gap between privacy concerns and behavior to limited technological literacy [24], simply arguing that while users are concerned about their privacy, they cannot express it in action due to their limited knowledge and understanding. However, it has been shown that the privacy paradox sometimes exists even in cases when the user is equipped with adequate technological literacy [25]. Another explanation relies on the privacy calculus theory, claiming that users consider privacy loss vs. the benefits gained and therefore are ready to give up some privacy despite the existing concern [26]. Again, it was found that users tend to overweigh benefits and underestimate risks, thereby impairing the quality of their decision making [27]. Another direction for explaining the privacy paradox relates not only to the rational factors (as in the privacy calculus theory) in users’ decision making but also to emotional factors [28].

Some researchers deny the existence of the privacy paradox, e.g., Solove [29] claims that “the privacy paradox is a myth created by faulty logic” and that the behavior observed in studies of the privacy paradox does not lead to a conclusion of less regulation. Another claim of a similar nature argues that disclosing information is not proof of “anti-privacy behavior” [30]. Other approaches seek to divert the privacy paradox from the privacy concern factor to other factors, such as trust, claiming that concerns and behavior are not paradoxical [31]. For example, the major factor in the willingness to use e-government services is trust [32]. This approach actually agrees that a paradox does exist, but holds that it is not the “privacy paradox” as we define it. However, most studies do indicate that the privacy paradox exists [33,34], and even reject forecasts that it will soon disappear [35].

From the perspective of domains, the literature addresses the privacy paradox either in general, e.g., ElShahed [36] tested the privacy paradox among Egyptian youth, or in specific domains, e.g., Gouthier et al. [37] addressed the privacy paradox phenomenon in e-commerce domains, and Schubert [38] explored the privacy paradox in the Facebook OSN, specifically asking whether literacy mitigates the paradox. Lee [39] investigated the paradox within the Internet of Things (IoT) domain, and showed that it varied according to the IoT service. In some cases, the domain is narrowed to a very specific subject, e.g., Duan and Deng [40] explored the privacy paradox in the domain of contact-tracing apps (advanced tools for fighting the spread of pandemics like COVID-19).

In the present research, we ask if the privacy paradox is a domain-specific phenomenon. By “domain” we mean the digital platform or the type of activity used in a digital operation, e.g., e-commerce, online social network (OSN) or electronic health services (eHealth). It is well-known that the magnitude of the privacy paradox varies from one individual to another [27]. As mentioned above, there is a broad discussion in the literature on the privacy paradox in diverse domains, e.g., in online shopping [41] or self-identity in OSNs [42]. However, the question of whether the privacy paradox of a specific individual differs depending on the domain remains unanswered. The privacy paradox phenomenon can be described by a two-dimensional model: the first dimension is the domain, and the second dimension is the individual. For research purposes, the value of one dimension may be kept fixed in order to investigate how the other dimension (which varies) affects the phenomenon. Keeping the domain value fixed is prevalent, as described above. A private case of this approach is a general privacy paradox study in which the phenomenon is averaged across a few domains, resulting in a singular value. However, the literature lacks a case of keeping the individual dimension fixed and investigating how the domain factor affects the privacy paradox phenomenon. This lack is the gap that is addressed in this study. In other words, this study examines whether the privacy paradox observed in a given person varies significantly in different domains. This question is significant both theoretically and practically. From the theoretical point of view, the research enriches our knowledge of how the domain factor (which is the independent variable in this study) affects the privacy paradox phenomenon (the dependent variable). 
From the practical point of view, this research is applicable because despite privacy being heavily regulated, users still have to act in order to protect their privacy, and the level of privacy gained depends primarily on the effectiveness of their actions. The privacy paradox is an obstacle for privacy protection, and therefore must be addressed. Distinguishing between the magnitude of the privacy paradox in different domains may help focus proposed solutions for this problem. To answer the research question, it was necessary to develop a special metric. The prior literature deals with the privacy paradox in general, and also compares variations between different populations in a specific domain, using demographic data like age, or examining the effect of technological literacy. However, within-subject (individual) analysis across different domains is yet to be considered.

By its nature, the privacy paradox is qualitative rather than quantitative. However, the current research question requires that it be quantified. Although most of the literature does consider the privacy paradox a qualitative factor, some researchers do quantify it, e.g., by calculating the perceived benefits and risks to estimate the deviation from optimality which indicates the presence of the privacy paradox [43]. Gimpel et al. [44] defined seven requirements: “quantifiability, precision, comparability, obtainability, interpretability, usefulness, and economy”, and used them to build a metric for evaluating the privacy paradox.

The rest of this paper is organized as follows: Section 2 introduces the methodology that was developed to estimate the privacy paradox in different domains for the same individual, Section 3 presents the results of the empirical study with valid participants and eight domains, and Section 4 discusses the results, limitations and further research suggestions. Finally, Section 5 summarizes the conclusions.

2. Estimation Methodology

2.1. Estimating the Paradox—Elementary Elements

The privacy paradox is the gap between privacy concerns and privacy behavior. Therefore, evaluating the privacy paradox requires measuring both factors. Of the possible methods available, for the current empirical study, we chose a pair of samplings for each individual, in each domain: one to estimate privacy concerns, and the other to estimate privacy behavior in that domain. For example, in the e-commerce domain, privacy concern was estimated by the statement, “When purchasing items on the Internet, I always use secure sites”, while privacy behavior was estimated by introducing to the participant a scenario as depicted in Figure 1, and asking “You need to buy a new laptop. You choose the model, and the lowest price found on the Internet (as shown in the picture) is $750. The next lowest price is $800. Do you agree to make this purchase?” The questions for all domains were shuffled randomly to minimize interaction and mutual effect. Each sample was ranked on a non-binary Likert scale, and the difference between an individual’s answers for each domain yields the raw magnitude of their privacy paradox in that particular domain.

Figure 1.

An e-commerce scenario to estimate privacy behavior in this domain. This example was introduced to each participant as a simulated scenario.

2.2. Formalizing the Paradox Raw Measurement

To formalize the model, assume we have $n$ domains $d_j$, where $j \in \{1, \dots, n\}$ and $D$ is the group of all the domains ($d_j \in D$). Now, assume we have a group of $m$ individuals, indexed as $i \in \{1, \dots, m\}$. For each individual $i$, we define the level of his/her privacy concern in domain $d_j$ and their level of privacy behavior in domain $d_j$, as $C_{i,j}$ and $B_{i,j}$, respectively.

Now, the expression $C_{i,j} - B_{i,j}$ describes the difference between the concern level and the behavior level of individual $i$ regarding domain $d_j$. This value is not absolute; a positive value indicates concern that is higher than behavior and vice versa. This expression is not yet the privacy paradox, because, according to prevalent usage in the academic, industrial and popular literature, the privacy paradox is limited to situations when behavior is lower than the concern ($B_{i,j} < C_{i,j}$), i.e., the individual is not protecting his or her privacy as desired. A reverse situation reflects the behavior of an individual who is overreacting with regard to their privacy, and is not perceived of as a paradox. Therefore, we define the privacy paradox magnitude ($P_{i,j}$) to be the difference $C_{i,j} - B_{i,j}$ if the privacy concern is higher than the privacy behavior, otherwise it is $0$ (no paradox):

$$P_{i,j} = \begin{cases} C_{i,j} - B_{i,j}, & C_{i,j} > B_{i,j} \\ 0, & \text{otherwise} \end{cases} \tag{1}$$

$P$ is the matrix of the paradox’s magnitudes for all the individuals and all the domains:

$$P = \begin{pmatrix} P_{1,1} & \cdots & P_{1,n} \\ \vdots & \ddots & \vdots \\ P_{m,1} & \cdots & P_{m,n} \end{pmatrix}$$

where $P_{i,j}$ is the paradox magnitude of individual $i$ in domain $d_j$, and is given by Equation (1). Please note that $P_{i,j} \geq 0$.
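The definition of the paradox magnitude can be illustrated with a minimal Python sketch. The function name `paradox_magnitudes` and the sample answers are hypothetical and serve only as an illustration; they are not taken from the empirical study.

```python
def paradox_magnitudes(concern, behavior):
    """Return the matrix of paradox magnitudes.

    concern[i][j] and behavior[i][j] hold the Likert-scale levels of
    individual i in domain j. A magnitude is the concern level minus the
    behavior level when concern is higher, and 0 otherwise (no paradox).
    """
    return [
        [max(c - b, 0) for c, b in zip(concern_row, behavior_row)]
        for concern_row, behavior_row in zip(concern, behavior)
    ]


# Hypothetical answers: 2 individuals, 3 domains, five-level Likert scale.
concern = [[5, 4, 2], [3, 3, 5]]
behavior = [[3, 4, 4], [1, 5, 4]]

P = paradox_magnitudes(concern, behavior)
print(P)  # [[2, 0, 0], [2, 0, 1]]
```

Cases in which behavior exceeds concern are clipped to zero, so over-protective behavior is not counted as a paradox.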

2.3. Paradox Analysis

The average of the paradox magnitude across all the domains for a specific individual $i$ (the average of a single line in matrix $P$) is calculated using:

$$\bar{P}_i = \frac{1}{n} \sum_{j=1}^{n} P_{i,j}$$

where $n$ is the number of domains and $P_{i,j}$ is the paradox magnitude of individual $i$ in domain $d_j$.

The deviation of the paradox magnitude of a single domain $d_j$ from the average (both for a specific individual $i$) indicates how specific this domain is for individual $i$, and is calculated using:

$$\Delta_{i,j} = P_{i,j} - \bar{P}_i$$

The real deviation should be calculated based on the absolute value of the difference; however, by omitting the absolute operator, the sign of the result indicates the direction of deviation. The index $\Delta_{i,j}$ is a first straightforward indication of how specific this domain is, i.e., how it deviates from the central line; however, as will be shown in the empirical study, a deep analysis of the matrix $P$ provides a more accurate indication.

For example, assume that we have domains, namely: , and for individual we received the pairs of privacy concerns and privacy behavior levels for each domain, respectively: , i.e., and . According to Equation (1), the set of paradox magnitude for this individual is . The average paradox for this individual is , and the deviation of each domain, i.e., how specific each domain is for this individual, is given by: . In this theoretical example, it can be noticed that the specificity of the privacy paradox for the domains is relatively high, while for the others it is less specific.
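The same kind of calculation can be sketched in Python. The four (concern, behavior) pairs below are hypothetical values chosen only for illustration, and the function name `paradox_profile` is an assumption of this sketch.

```python
def paradox_profile(magnitudes):
    """Return (average, deviations) for one individual's paradox magnitudes.

    The deviations are signed: a positive value means the domain's paradox
    is above this individual's average, a negative value means below.
    """
    average = sum(magnitudes) / len(magnitudes)
    deviations = [p - average for p in magnitudes]
    return average, deviations


# Hypothetical (concern, behavior) pairs for one individual in four domains.
pairs = [(5, 1), (4, 4), (3, 2), (2, 5)]

# Paradox magnitude per domain: the difference when concern is higher, else 0.
magnitudes = [max(c - b, 0) for c, b in pairs]

average, deviations = paradox_profile(magnitudes)
print(magnitudes)   # [4, 0, 1, 0]
print(average)      # 1.25
print(deviations)   # [2.75, -1.25, -0.25, -1.25]
```

In this hypothetical case, the first domain deviates most from the individual's average, so it would be read as the most specific one.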

3. Empirical Study

3.1. The Empirical Study Design

In the empirical study, we examined the eight domains listed in Table 1. For each domain, participants were asked a pair of questions: one aimed to test privacy concerns by addressing their general attitude to the subject (which might be considered declarative), while the other aimed to test privacy behavior by presenting a specific scenario and eliciting the participant’s action in this scenario. The answers to these questions were recorded on a five-level Likert scale. To reduce interaction between the answers to the two questions in each pair, the questions were shuffled randomly.

Table 1.

Domains of the empirical study. This table details the list of domains that were tested in the empirical study and for each domain, the parameter that was used as an indicator.

The questionnaire was divided into six parts: (a) an explanation of the survey process, including contact details for the researchers, and the mandatory request for consent; (b) demographic questions; (c) questions testing privacy concerns vs. privacy behavior (eight pairs); (d) technological literacy evaluation; (e) general questions about privacy concerns; and (f) gratitude and reward data. The research was approved by the institutional ethics committee.

3.2. The Participants

The participants were recruited via the crowdsourcing platform Amazon Mechanical Turk (MTurk), a validated platform for research of this type. MTurk was found to be a viable alternative for data collection [45]. Platforms like MTurk were found, for example, to be a reliable tool for conducting marketing research when investigating customers’ behavior [46], a field which has similar traits to the current research; and, more closely related, this tool is adequate for psychological research [47]. By applying the MTurk qualification feature, we ensured that each participant would be assigned to one task only, so that the sample size was not inflated by repeated participation. The payment fee was $0.3, which is considered to be an above-average and fair reward [48]. Participants were required to be at least 18 years old, and to understand and express their consent to participate explicitly (by opting in). The study was anonymous, both on MTurk, which was used as the recruitment platform, and on Qualtrics, which was used as the survey platform. That way, the participants could answer sensitive questions comfortably, and their privacy was guaranteed.

In total, after disqualification, we received valid participants (each provided a result). Of all the participants, 19% were 18–25 years old, 28% were 26–30 years old, 36% were 31–40 years old, 12% were 41–50 years old, 4% were 51–60 years old, and 1% were above 60 years old; 55% were males and 45% were females; 66% had a bachelor’s degree, 16% had a master’s degree or higher, 15% had a high school diploma, and 5% had an associate degree, and fewer than 1% had not completed high school or did not get a diploma. Regarding employment, 70% were employees, 25% were self-employed, and 3% were unemployed but looking for work.

3.3. Results

3.3.1. Data Cleansing

To validate the survey’s answers, we applied two filters:

- Arbitrary answers: If 85% or above of the answers were identical, this participant’s answer was disqualified.

- Inconsistency: As described above, the survey also included a separate section where participants were asked about their general privacy concerns. This section contained eight questions, and the answers to these questions were given on an 11-level Likert scale. The average result was normalized to five levels (the same as section c, the core questionnaire). If the average of these two sections deviated by more than two levels, the participant’s answer was disqualified.

Out of total participants, 42 were disqualified according to the above criteria, leaving valid participants.
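The two filters can be sketched in Python as follows. This is only an illustrative sketch under stated assumptions: the 11-level scale is assumed to run from 0 to 10 and to be mapped linearly onto 1 to 5, the consistency check is assumed to compare against the average of the core questionnaire answers, and all function names are hypothetical.

```python
from collections import Counter


def is_arbitrary(answers, threshold=0.85):
    """True if the most frequent answer makes up at least `threshold` of all answers."""
    most_common_count = Counter(answers).most_common(1)[0][1]
    return most_common_count / len(answers) >= threshold


def normalize_to_five_levels(value, low=0, high=10):
    """Linearly map a value on the 11-level scale (assumed 0..10) onto 1..5."""
    return 1 + 4 * (value - low) / (high - low)


def is_inconsistent(core_answers, general_answers, max_gap=2):
    """True if the two section averages differ by more than `max_gap` levels."""
    core_average = sum(core_answers) / len(core_answers)
    general_average = sum(general_answers) / len(general_answers)
    return abs(core_average - normalize_to_five_levels(general_average)) > max_gap


# Hypothetical participants.
print(is_arbitrary([3] * 14 + [1, 5]))                # True  (14 of 16 identical)
print(is_arbitrary([1, 2, 3, 4, 5, 1, 2, 3]))         # False
print(is_inconsistent([5, 5, 4, 5], [1, 0, 2, 1]))    # True
print(is_inconsistent([4, 4, 3, 5], [7, 8, 6, 7]))    # False
```

A participant would be disqualified if either function returns `True`.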

3.3.2. Raw Results

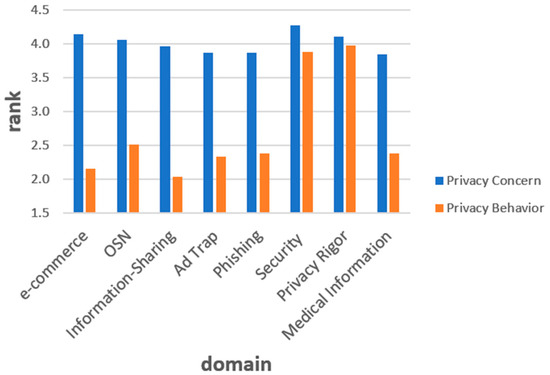

The average Privacy Concern vs. the average Privacy Behavior of all participants for each domain is depicted in Figure 2. The X-axis describes the different domains, while the Y-axis describes the levels of privacy concerns (blue bars) and privacy behavior (orange bars). For each domain, a two-tailed paired-samples t-test was conducted to evaluate whether the two levels differ. There were significant differences between privacy concern and privacy behavior in all domains: e-commerce, privacy concern (SD = 0.75) vs. privacy behavior (SD = 0.81); OSN, privacy concern (SD = 0.81) vs. privacy behavior (SD = 1.40); Information-Sharing, privacy concern (SD = 0.88) vs. privacy behavior (SD = 0.83); Ad Trap, privacy concern (SD = 0.87) vs. privacy behavior (SD = 1.17); Phishing, privacy concern (SD = 0.87) vs. privacy behavior (SD = 1.22); Security, privacy concern (SD = 0.82) vs. privacy behavior (SD = 0.92); Privacy Rigor, privacy concern (SD = 0.83) vs. privacy behavior (SD = 0.90); Medical Information, privacy concern (SD = 0.92) vs. privacy behavior (SD = 1.08).

Figure 2.

The average Privacy Concern (blue columns) vs. Privacy Behavior (orange columns) for each domain. The values are given across the entire empirical study population and provide an overall impression of these differences.

These preliminary results imply the existence of the privacy paradox in all domains (although with a visually smaller difference for the Security and Privacy Rigor domains). However, addressing the research question and determining whether the paradox exists within an individual, and not only on the statistical level, requires further analysis using the proposed metric.
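The paired comparison used for each domain can be sketched as follows. This is a minimal illustration with hypothetical answers: it computes only the t statistic and the degrees of freedom, while the two-tailed p-value would additionally require the Student's t distribution (for example, from a statistics package), which is not included here.

```python
import math
import statistics


def paired_t_statistic(concern, behavior):
    """Return (t, degrees_of_freedom) for a paired-samples t-test.

    concern[k] and behavior[k] are the two answers of the same participant
    in one domain, so the test is run on the per-participant differences.
    """
    differences = [c - b for c, b in zip(concern, behavior)]
    n = len(differences)
    mean_difference = statistics.mean(differences)
    sd_difference = statistics.stdev(differences)  # sample SD (n - 1 denominator)
    t = mean_difference / (sd_difference / math.sqrt(n))
    return t, n - 1


# Hypothetical answers of six participants in a single domain.
concern = [5, 4, 4, 5, 3, 4]
behavior = [3, 4, 2, 4, 3, 1]

t, df = paired_t_statistic(concern, behavior)
print(round(t, 3), df)
```

Because both answers come from the same participant, the test operates on the differences rather than on two independent samples.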

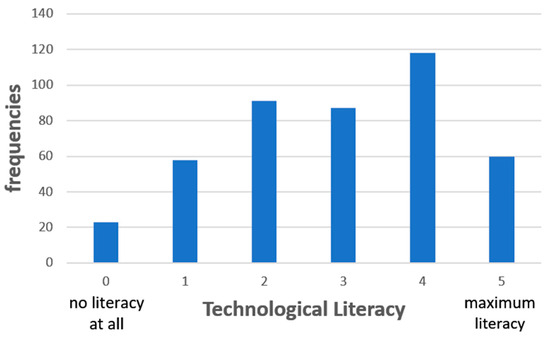

The technological literacy level was sampled with five questions that could each be answered correctly (1) or incorrectly (0), and the literacy level is the sum of these scores. Therefore, the literacy scale ranges from 0 (no literacy at all) to 5 (maximum literacy). Usually, technological literacy assessment tools are more extensive. Still, there are two major differences between those tools and the purpose of this research: (a) they are designed to answer much more complex questions and to break down the measurement into components, while in our study we are only interested in a general singular index (an example of such a breakdown is analyzing literacy on a two-dimensional scale: the cognitive dimension with the factors {knowledge, capabilities, critical thinking and decision making}, and the content area dimension with the factors {technology and society, design, products and systems, characteristics, core concepts and connections} [49]); and (b) while for a general-purpose assessment, absolute values are required, this research only requires an ordinal index of the technological literacy values in order to test the correlation with the privacy paradox [50]. Therefore, even a shift in the values of this assessment will not influence the relevant test. The literature includes some relatively small surveys where the research questions are narrowed, for example, four questions to test technological literacy in academic writing [51].

The distribution of technological literacy (SD = 1.42) is depicted in Figure 3, where the X-axis describes the technological literacy level, while the Y-axis describes the frequencies of the technological literacy level among the empirical study population.

Figure 3.

The distribution of technological literacy. This graph visualizes the distribution of this trait (which allegedly has relevancy to the privacy paradox phenomenon) across the entire empirical study population.

3.3.3. Domain-Specific Analysis

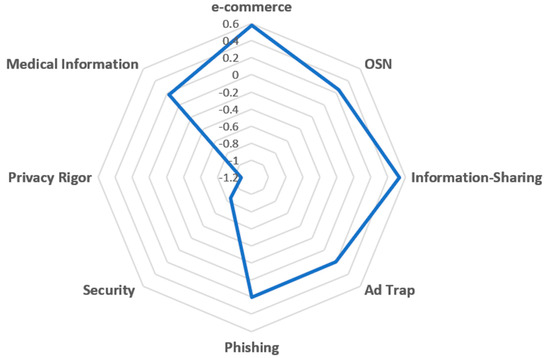

Given the raw matrix of the results (privacy concerns and privacy behavior for each domain and for each participant), the matrix $P$ (the paradox’s magnitudes for all the individuals and for all the domains) was calculated, and the index $\bar{P}_i$ (the average of the paradox magnitude across all the domains for a specific individual $i$) was determined for each participant. Now, the deviation $\Delta_{i,j} = P_{i,j} - \bar{P}_i$ of each domain $d_j$ for each participant $i$ can be calculated. The average of $\Delta_{i,j}$ across all $m$ participants is given by: $\bar{\Delta}_j = \frac{1}{m} \sum_{i=1}^{m} \Delta_{i,j}$, and depicted in Figure 4. The deviation of each domain is a measure of its uniqueness, indicating how specific it is. Therefore, this figure provides a visual illustration of the answer to the research question. The scale on the chart’s radius (the distance from the center) indicates the level of domain specificity. For example, it can be noticed that e-commerce has a high domain-specific value, Ad Trap has a middle value, and Security a low value.
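The per-domain average deviation described above can be sketched in Python. The matrix values below are hypothetical, and the function name `average_domain_deviation` is an assumption of this sketch rather than part of the study.

```python
def average_domain_deviation(P):
    """Average, over all participants, of each domain's signed deviation.

    P[i][j] is the paradox magnitude of participant i in domain j. For each
    participant, the deviation of a domain is its magnitude minus that
    participant's average magnitude across all domains.
    """
    participants = len(P)
    domains = len(P[0])
    totals = [0.0] * domains
    for row in P:
        row_average = sum(row) / domains
        for j, magnitude in enumerate(row):
            totals[j] += magnitude - row_average
    return [total / participants for total in totals]


# Hypothetical paradox magnitudes: 3 participants, 3 domains.
P = [[4, 0, 2],
     [2, 1, 0],
     [3, 0, 0]]

deviations = average_domain_deviation(P)
print([round(d, 3) for d in deviations])  # [1.667, -1.0, -0.667]
```

Since the signed deviations of each participant sum to zero across the domains, the averaged values also sum to zero; a chart of domain specificity would therefore reflect how far each value lies from zero.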

Figure 4.

The average deviation of each domain (specificity of the domain). In this radar chart, the domains are arranged on the vertices, and the more distant the graph is from the center, the more specific the domain.

While Figure 4 provides an intuitive visualization of the levels of domain specificity, to evaluate the significance of the differences and answer the question about domain specificity in an objective way, we conducted a repeated measures ANOVA (rANOVA) of the paradox magnitudes of each domain, since the results of each domain for a specific participant are paired. This analysis tests the hypothesis that the privacy paradox, in general, is domain-specific. The rANOVA test requires three assumptions: (a) Independence, which is satisfied because no participant took part more than once and the participants are not correlated with each other; (b) Normality, which can be neglected given the sample size; and (c) Sphericity, which was tested by applying Mauchly’s test and was violated in our case; therefore, the Greenhouse–Geisser correction was applied. The results of the rANOVA show that there are significant differences in the magnitudes of the privacy paradox in the different domains. To test which domains actually differ from the others, we conducted pairwise comparisons with the Bonferroni adjustment. This analysis is a statistical hypothesis test for the existence of domain-specific results between each combination of two domains (each combination is a standalone hypothesis). The results are shown in Table 2. An equal sign indicates that there is no significant difference between these two domains, i.e., these domains are not specific when related to each other. In total, there are 28 domain combinations (neglecting the order, and naturally not comparing a domain with itself), out of which 8 (~29%) are not significantly different, and the others (~71%) are significantly different. The OSN domain is significantly different from 3 other domains; Ad Trap and Medical-Information (each) are significantly different from 4 other domains; e-commerce, Security and Privacy Rigor (each) are significantly different from 6 other domains. 
The results indicate that all domains are specific to different extents.
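The core of this analysis can be sketched in Python. The sketch computes the uncorrected F statistic of a one-way repeated measures ANOVA and enumerates the pairwise comparisons; it does not implement Mauchly's test or the Greenhouse–Geisser correction. The sample matrix is hypothetical, and the overall significance level of 0.05 is an assumption of this sketch.

```python
from itertools import combinations


def repeated_measures_f(P):
    """One-way repeated measures ANOVA on P[i][j] (participant i, domain j).

    Returns (F, df_domains, df_error), uncorrected for sphericity.
    """
    m, k = len(P), len(P[0])
    grand_mean = sum(sum(row) for row in P) / (m * k)
    subject_means = [sum(row) / k for row in P]
    domain_means = [sum(row[j] for row in P) / m for j in range(k)]

    ss_total = sum((p - grand_mean) ** 2 for row in P for p in row)
    ss_subjects = k * sum((s - grand_mean) ** 2 for s in subject_means)
    ss_domains = m * sum((d - grand_mean) ** 2 for d in domain_means)
    ss_error = ss_total - ss_subjects - ss_domains

    df_domains, df_error = k - 1, (k - 1) * (m - 1)
    f_value = (ss_domains / df_domains) / (ss_error / df_error)
    return f_value, df_domains, df_error


# Hypothetical paradox magnitudes: 4 participants, 3 domains.
P = [[4, 0, 2], [2, 1, 0], [3, 0, 0], [3, 1, 1]]
f_value, df_domains, df_error = repeated_measures_f(P)
print(round(f_value, 3), df_domains, df_error)  # 13.0 2 6

# Pairwise comparisons between the eight domains of the study.
domains = ["e-commerce", "OSN", "Information-Sharing", "Ad Trap",
           "Phishing", "Security", "Privacy Rigor", "Medical Information"]
pairs = list(combinations(domains, 2))
alpha = 0.05                           # assumed overall significance level
adjusted_alpha = alpha / len(pairs)    # Bonferroni-adjusted threshold per pair
print(len(pairs))                      # 28
```

Each of the 28 pairs would then be tested with a paired comparison against the adjusted threshold.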

Table 2.

Comparison of the privacy paradox domain specificity between domains. Each domain appears both in the row headers and in the column headers. An equal sign indicates no significant difference between the intersecting domains. Pairs of domains that appear in the table without the equal sign are significantly different from each other.

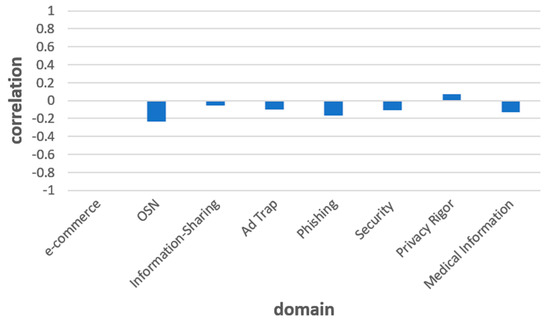

As mentioned above, the gap between privacy concerns and behavior, i.e., the privacy paradox, might be explained by limited technological literacy [24]. A true paradox exists if users, while understanding the implication, act in a direction opposite to their personal preferences. Therefore, the technological literacy factor may be accommodated in the model as a necessary condition for the existence of a paradox (rather than an explainable gap between privacy concern and privacy behavior). That said, we hypothesize that when technological literacy is low, the paradox does not really exist, because the individual cannot efficiently enact their preferences (reflecting privacy concern) by making adequate decisions and acting appropriately (reflecting privacy behavior). Consequently, the question of domain specificity has less significance. To measure the technological literacy effect, the Pearson correlation coefficient between each paradox level of a specific domain and the literacy level was calculated, as depicted in Figure 5. The X-axis describes the domain, while the Y-axis describes the correlation. As can be seen, the correlation coefficients vary from −0.23 to +0.07, which is not significant. Therefore, technological literacy cannot settle this paradox, which is proven to exist.
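The correlation between the literacy level and the paradox magnitude in one domain can be sketched in Python. The literacy answers and paradox magnitudes below are hypothetical, and the helper names are assumptions of this sketch.

```python
import math


def literacy_score(answers):
    """Sum of five answers coded 1 (correct) or 0 (incorrect); ranges 0..5."""
    return sum(answers)


def pearson(x, y):
    """Pearson correlation coefficient between two equally long sequences."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    covariance = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    variance_x = sum((a - mean_x) ** 2 for a in x)
    variance_y = sum((b - mean_y) ** 2 for b in y)
    return covariance / math.sqrt(variance_x * variance_y)


# Hypothetical data: four participants, one domain.
literacy_answers = [[1, 1, 1, 1, 1], [1, 0, 1, 0, 0], [0, 0, 0, 0, 1], [1, 1, 0, 1, 1]]
literacy = [literacy_score(a) for a in literacy_answers]  # [5, 2, 1, 4]
paradox = [0, 2, 3, 1]

r = pearson(literacy, paradox)
print(round(r, 3))
```

Repeating the calculation for each domain gives one coefficient per domain. The coefficient is unchanged by a constant shift of the literacy values, which is consistent with needing only a relative literacy index.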

Figure 5.

The correlation between the privacy paradox of each domain and technological literacy. It can be noticed that the correlation for all the domains is low and not significant; therefore, the technological literacy factor cannot explain the privacy paradox.

4. Discussion

In this research, the privacy paradox or gap between a user’s privacy concern and the same user’s privacy behavior was investigated from a unique angle. The aim of this study was to discover whether the magnitude of the privacy paradox depends on the specific domain hosting the scenario. To this end, a unique metric was developed, and an empirical study that included valid participants and eight domains was conducted. It showed that the privacy paradox indeed differs from one domain to another.

The privacy paradox has been researched extensively, and has a significant presence both in the academic and commercial/popular literature (a Google Scholar search yields 1,970,000 results, and a general Google search yields 124,000,000 results). For example, a meta-analysis of 166 studies from 34 countries showed that “privacy concerns did not predict SNS [social network sites, in this paper OSNs] use” [52]. The intense pursuit of this issue is a testimonial to its significance. In the current digital era, users find it increasingly difficult and even frustrating to protect their privacy [53]. When considering individuals’ failure to protect their privacy, the privacy paradox may act as a descriptor, a measure, and an explanation. Therefore, this phenomenon is important, and understanding the privacy paradox may pave the way for better privacy protection. Its significance may continue to increase over time, because technology in general, and artificial intelligence (AI) specifically, is forging ahead, making it more difficult for humans to protect their privacy. The rise of AI enables more efficient and sensitive data collection by organizations and, even worse, a higher level of deduction which can ‘mine’, and in that way disclose, unexpected hidden information. Therefore, AI is a threat to privacy, and when combined with the privacy paradox, a higher privacy loss may occur. For example, when IoT devices that collect data and train machines are enhanced with AI, the privacy paradox, i.e., humans’ inability to attain their desired degree of privacy protection, is pushed to the edge [54].

The privacy paradox does not suggest that privacy concerns (or privacy attitudes) and privacy behavior are not correlated. The so-called paradox is the gap between them. This gap represents the amount of privacy that a user loses, relative to the amount of privacy they would like to protect, which varies from one individual to another. Therefore, a first, essential step towards mitigation of the paradox, i.e., better privacy protection, is to quantify its magnitude. This capability is one basic contribution of this research to the issue. In this paper, both privacy concerns and privacy behavior were measured indirectly by surveying the participants. Privacy behavior, at least, may be evaluated more accurately by observations during real digital activity. This is a limitation of the current study and an opportunity for further research. Moreover, the measurement of the gap indicates the amount of undesired privacy loss. However, in order to accommodate the index in privacy protection models, further research should be carried out to translate this gap into tangible values [55].

Beyond measuring the privacy paradox, the focus is on where it occurs, or more precisely its magnitude in each of the domains, which may help in understanding the phenomenon and developing solutions. The territories in which the paradox occurs are multi-dimensional and encompass several factors. A major factor is the demographic traits of the individual, e.g., the age factor [56]. Another interesting example is when the decision maker and the information owner are not the same entity, e.g., parents who share information about their children without their consent [57]. This paper investigated the domain in which the event occurs. Evaluating the privacy paradox in each domain is essential for developing efficient protection. Means of protection are limited resources. By way of illustration, the issue is very much like securing a geographical perimeter with a limited number of guards: knowing where the weak spots are facilitates assigning resources in an optimal manner. Similarly, being aware of the more problematic domains might help focus privacy protection in those areas. Moreover, while this research focuses on domains, the different territories may interact with each other in additional ways, e.g., the distribution of the privacy paradox across domains may differ among different age groups. This multi-dimensional problem could be addressed in further research.

Technological literacy, defined as the “ability to use, manage, understand, and assess technology” [58], is a central factor of the privacy paradox. Indeed, technological literacy is a necessary condition if users are to make proper decisions to protect their privacy [59]. Technological literacy enables an efficient implementation (i.e., privacy behavior) of preferences (i.e., privacy concern). For example, it has been shown that children can be trained to improve their privacy literacy and thereby enhance their privacy [60]. Therefore, technological literacy is perceived as a possible explanation for the privacy paradox. Technological literacy also has dimensions, e.g., a person may understand the consequences of publishing information on OSN while being relatively ignorant of the consequences of using an IoT device. This observation calls for jointly studying the technological literacy factor (which has been researched extensively) and the domain factor (the subject of the current research). That said, we did address technological literacy within the scope of the current research and found no significant correlation between it and the magnitude of the privacy paradox. However, the questions regarding technological literacy were elementary and did not distinguish between literacies in different domains. This could also be a topic for further research focusing on both the privacy paradox and technological literacy, measured for the same individual and for a specific domain.

5. Conclusions

This research indicates that the privacy paradox, i.e., the gap between individuals’ privacy concerns (which reflect worries over privacy violations) and privacy behavior (which reflects the actual actions taken to protect privacy), is domain-specific and varies from one domain to another. This paradox is a significant obstacle to the protection of privacy because users are not acting in a way that reflects their privacy preferences. Bridging this gap is key to providing better privacy preservation; therefore, these findings have a profound significance for understanding the paradox and for developing effective privacy protection that is capable of providing individuals with the amount of privacy they desire. Demonstrating that the privacy paradox is domain-specific, and specifically quantifying its magnitude as a function of the domain, enables focusing on the areas that are more problematic from a privacy-protection point of view.

Funding

This research received no external funding.

Institutional Review Board Statement

The study protocol was approved by the Institutional Review Board (or Ethics Committee) of Ariel University (confirmation number: AU-ENG-RH-20230328) for research titled: “Is the Privacy Paradox Domain Specific”.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

Not applicable.

Acknowledgments

This work was supported by the Ariel Cyber Innovation Center in conjunction with the Israel National Cyber Directorate in the Prime Minister’s Office.

Conflicts of Interest

The author declares no conflict of interest.

References

1. Correia, J.; Compeau, D. Information privacy awareness (IPA): A review of the use, definition and measurement of IPA. In Proceedings of the 50th Hawaii International Conference on System Sciences, Waikoloa Village, HI, USA, 4–7 January 2017.

2. Wagner, C.; Trenz, M.; Veit, D. How do habit and privacy awareness shape privacy decisions? In Proceedings of the AMCIS 2020 Proceedings 23, Virtual, 15–17 August 2020.

3. ITU. New ITU Statistics Show More than Half the World Is Now Using the Internet; International Telecommunication Union (ITU): Geneva, Switzerland, 2018.

4. Shepherd, J. What is the digital era? In Social and Economic Transformation in the Digital Era; IGI Global: Hershey, PA, USA, 2004; pp. 1–18.

5. Ahmed, A.; Javed, A.R.; Jalil, Z.; Srivastava, G.; Gadekallu, T.R. Privacy of web browsers: A challenge in digital forensics. In Proceedings of the Genetic and Evolutionary Computing: Proceedings of the Fourteenth International Conference on Genetic and Evolutionary Computing, Jilin, China, 21–23 October 2021; Springer: Singapore, 2022.

6. Jones, B.I. Understanding Ecommerce Consumer Privacy From the Behavioral Marketers’ Viewpoint. Ph.D. Dissertation, Walden University, Minneapolis, MN, USA, 2019.

7. Bellekens, X.; Seeam, A.; Hamilton, A.W.; Seeam, P.; Nieradzinska, K. Pervasive eHealth services a security and privacy risk awareness survey. In Proceedings of the 2016 International Conference On Cyber Situational Awareness, Data Analytics And Assessment (CyberSA), London, UK, 13–14 January 2016.

8. Akman, G.; Ginzboorg, P.; Damir, M.T.; Niemi, V. Privacy-Enhanced AKMA for Multi-Access Edge Computing Mobility. Computers 2022, 12, 2.

9. Yang, Y.; Wu, L.; Yin, G.; Li, L.; Zhao, H. A Survey on Security and Privacy Issues in Internet-of-Things. IEEE Internet Things J. 2017, 4, 1250–1258.

10. Anic, I.-D.; Budak, J.; Rajh, E.; Recher, V.; Skare, V.; Skrinjaric, B. Extended model of online privacy concern: What drives consumers’ decisions? Online Inf. Rev. 2019, 43, 799–817.

11. IGI Global. What is Privacy Concern. 2021. Available online: https://www.igi-global.com/dictionary/privacy-concern/40729 (accessed on 28 June 2023).

12. Lin, S.-W.; Liu, Y.-C. The effects of motivations, trust, and privacy concern in social networking. Serv. Bus. 2012, 6, 411–424.

13. Xu, H.; Gupta, S.; Rosson, M.B.; Carroll, J.M. Measuring mobile users’ concerns for information privacy. In Proceedings of the Thirty Third International Conference on Information Systems, Orlando, FL, USA, 16–19 December 2012.

14. Aljifri, H.; Navarro, D.S. Search engines and privacy. Comput. Secur. 2004, 23, 379–388.

15. Petronio, S.; Altman, I. Boundaries of Privacy: Dialectics of Disclosure; Suny Press: Albany, NY, USA, 2002.

16. The Privacy Issue. Decoding the Privacy Paradox. 2021. Available online: https://theprivacyissue.com/privacy-and-society/decoding-privacy-paradox (accessed on 28 June 2023).

17. Stouffer, C. The Privacy Paradox: How Much Privacy Are We Willing to Give up Online? Norton. 2021. Available online: https://us.norton.com/blog/privacy/how-much-privacy-we-give-up#:~:text=First%20coined%20in%202001%2C%20the,t%20protect%20their%20information%20online (accessed on 1 May 2023).

18. Norberg, P.A.; Horne, D.R.; Horne, D.A. The privacy paradox: Personal information disclosure intentions versus behaviors. J. Consum. Aff. 2007, 41, 100–126.

19. Kim, B.; Kim, D. Understanding the Key Antecedents of Users’ Disclosing Behaviors on Social Networking Sites: The Privacy Paradox. Sustainability 2020, 12, 5163.

20. Zhu, M.; Wu, C.; Huang, S.; Zheng, K.; Young, S.D.; Yan, X.; Yuan, Q. Privacy paradox in mHealth applications: An integrated elaboration likelihood model incorporating privacy calculus and privacy fatigue. Telemat. Inform. 2021, 61, 101601.

21. Chen, L.; Huang, Y.; Ouyang, S.; Xiong, W. The Data Privacy Paradox and Digital Demand; National Bureau of Economic Research: Cambridge, MA, USA, 2021.

22. Barnes, S.B. A privacy paradox: Social networking in the United States. First Monday 2006, 11.

23. Gerber, N.; Gerber, P.; Volkamer, M. Explaining the privacy paradox: A systematic review of literature investigating privacy attitude and behavior. Comput. Secur. 2018, 77, 226–261.

24. Hargittai, E.; Marwick, A. “What can I really do?” Explaining the privacy paradox with online apathy. Int. J. Commun. 2016, 10, 21.

25. Barth, S.; de Jong, M.D.; Junger, M.; Hartel, P.H.; Roppelt, J.C. Putting the privacy paradox to the test: Online privacy and security behaviors among users with technical knowledge, privacy awareness, and financial resources. Telemat. Inform. 2019, 41, 55–69.

26. Min, J.; Kim, B. How are people enticed to disclose personal information despite privacy concerns in social network sites? The calculus between benefit and cost. J. Assoc. Inf. Sci. Technol. 2014, 66, 839–857.

27. Kokolakis, S. Privacy attitudes and privacy behaviour: A review of current research on the privacy paradox phenomenon. Comput. Secur. 2017, 64, 122–134.

28. Stones, R. Structuration Theory, Traditions in Social Theory; Macmillan International Higher Education: London, UK, 2005.

29. Solove, D.J. The myth of the privacy paradox. Geo. Wash. L. Rev. 2021, 89, 1.

30. Martin, K. Breaking the Privacy Paradox: The Value of Privacy and Associated Duty of Firms. Bus. Ethics Q. 2019, 30, 65–96.

31. Lutz, C.; Strathoff, P. Privacy Concerns and Online Behavior--Not So Paradoxical after All? Viewing the Privacy Paradox through Different Theoretical Lenses. 2014. Available online: https://ssrn.com/abstract=2425132 (accessed on 26 July 2023).

32. AlAbdali, H.; AlBadawi, M.; Sarrab, M.; AlHamadani, A. Privacy preservation instruments influencing the trustworthiness of e-government services. Computers 2021, 10, 114.

33. Cole, B.M. The Privacy Paradox Online: Exploring How Users Process Privacy Policies and the Impact on Privacy Protective Behaviors. Ph.D. Dissertation, San Diego State University, San Diego, CA, USA, 2023.

34. Willems, J.; Schmid, M.J.; Vanderelst, D.; Vogel, D.; Ebinger, F. AI-driven public services and the privacy paradox: Do citizens really care about their privacy? Public Manag. Rev. 2022, 1–19.

35. Dienlin, T.; Trepte, S. Is the privacy paradox a relic of the past? An in-depth analysis of privacy attitudes and privacy behaviors. Eur. J. Soc. Psychol. 2014, 45, 285–297.

36. ElShahed, H. Privacy Paradox Amid E-Commerce Epoch: Examining Egyptian Youth’s Practices of Digital Literacy Online. In Marketing and Advertising in the Online-to-Offline (O2O) World; IGI Global: Hershey, PA, USA, 2023; pp. 45–64.

37. Gouthier, M.H.; Nennstiel, C.; Kern, N.; Wendel, L. The more the better? Data disclosure between the conflicting priorities of privacy concerns, information sensitivity and personalization in e-commerce. J. Bus. Res. 2022, 148, 174–189.

38. Schubert, R.; Marinica, I.; Mosetti, L.; Bajka, S. Mitigating the Privacy Paradox through Higher Privacy Literacy? Insights from a Lab Experiment Based on Facebook Data. 2022. Available online: https://ssrn.com/abstract=4242866 (accessed on 26 July 2023).

39. Lee, A.-R. Investigating the Personalization–Privacy Paradox in Internet of Things (IoT) Based on Dual-Factor Theory: Moderating Effects of Type of IoT Service and User Value. Sustainability 2021, 13, 10679.

40. Duan, S.X.; Deng, H. Exploring privacy paradox in contact tracing apps adoption. Internet Res. 2022, 32, 1725–1750.

41. Bandara, R.; Fernando, M.; Akter, S. Explicating the privacy paradox: A qualitative inquiry of online shopping consumers. J. Retail. Consum. Serv. 2019, 52, 101947.

42. Wu, P.F. The privacy paradox in the context of online social networking: A self-identity perspective. J. Assoc. Inf. Sci. Technol. 2018, 70, 207–217.

43. Hou, Z.; Qingyan, F. Quantifying and Examining Privacy Paradox of Social Media Users. Data Anal. Knowl. Discov. 2021, 5, 111–125.

44. Gimpel, H.; Kleindienst, D.; Waldmann, D. The disclosure of private data: Measuring the privacy paradox in digital services. Electron. Mark. 2018, 28, 475–490.

45. Paolacci, G.; Chandler, J.; Ipeirotis, P.G. Running experiments on Amazon Mechanical Turk. Judgm. Decis. Mak. 2010, 5, 411–419.

46. Bentley, F.R.; Daskalova, N.; White, B. Comparing the Reliability of Amazon Mechanical Turk and Survey Monkey to Traditional Market Research Surveys. In Proceedings of the 2017 CHI Conference Extended Abstracts on Human Factors in Computing Systems, Denver, CO, USA, 6–11 May 2017; pp. 1092–1099.

47. Bates, J.A.; Lanza, B.A. Conducting psychology student research via the Mechanical Turk crowdsourcing service. N. Am. J. Psychol. 2013, 15, 385–394.

48. Burleigh, T. What Is Fair Payment on MTurk? 2019. Available online: https://tylerburleigh.com/blog/what-is-fair-payment-on-mturk/ (accessed on 26 July 2023).

49. Gamire, E.; Pearson, G. Tech Tally: Approaches to Assessing Technological Literacy; Island Press: Washington, DC, USA, 2006; p. 5.

50. Long, J.D.; Feng, D.; Cliff, N. Ordinal Analysis of Behavioral Data. In Handbook of Psychology; Wiley Online Library: Hoboken, NJ, USA, 2003; pp. 635–661.

51. Supriyadi, T.; Saptani, E.; Rukmana, A.; Suherman, A.; Alif, M.N.; Rahminawati, N. Students’ Technological Literacy to Improve Academic Writing and Publication Quality. Univers. J. Educ. Res. 2020, 8, 6022–6035.

52. Baruh, L.; Secinti, E.; Cemalcilar, Z. Online Privacy Concerns and Privacy Management: A Meta-Analytical Review. J. Commun. 2017, 67, 26–53.

53. Elahi, S. Privacy and consent in the digital era. Inf. Secur. Tech. Rep. 2009, 14, 113–118.

54. Hu, R. Breaking the Privacy Paradox: Pushing AI to the Edge with Provable Guarantees. 2022. Available online: https://www.proquest.com/docview/2665535602?pq-origsite=gscholar&fromopenview=true (accessed on 1 April 2023).

55. Goldfarb, A.; Que, V.F. The Economics of Digital Privacy. Annu. Rev. Econ. 2023, 15.

56. Obar, J.A.; Oeldorf-Hirsch, A. Older Adults and ‘The Biggest Lie on the Internet’: From Ignoring Social Media Policies to the Privacy Paradox. Int. J. Commun. 2022, 16, 4779–4800.

57. Bhroin, N.N.; Dinh, T.; Thiel, K.; Lampert, C.; Staksrud, E.; Ólafsson, K. The privacy paradox by proxy: Considering predictors of sharenting. Media Commun. 2022, 10, 371–383.

58. ITEEA. Standards for Technological Literacy: Content for the Study of Technology; ITEEA: Reston, VA, USA, 2000.

59. Furnell, S.; Moore, L. Security literacy: The missing link in today’s online society? Comput. Fraud. Secur. 2014, 5, 12–18.

60. Desimpelaere, L.; Hudders, L.; Van de Sompel, D. Knowledge as a strategy for privacy protection: How a privacy literacy training affects children’s online disclosure behaviour. Comput. Hum. Behav. 2020, 110, 106382.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).