Multistage Spatial Attention-Based Neural Network for Hand Gesture Recognition

Abstract

1. Introduction

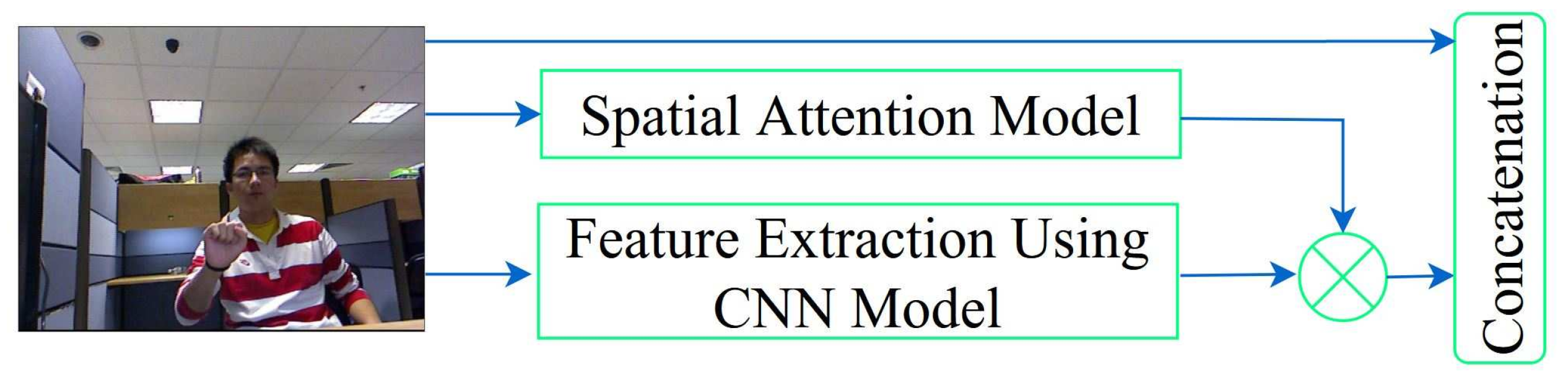

- We propose a multistage, attention-based, feature-fusion deep learning model for recognizing hand gestures. The model has three phases: the first two perform feature extraction, and the third performs classification. Each of the first two phases combines a feature extractor with a spatial attention module.

- The first phase extracts spatial features, and the second phase highlights the most effective of those features.

- Finally, we apply a new classification module adapted from CNNs. The complete model achieved state-of-the-art classification performance on three benchmark hand gesture datasets and outperformed existing models.
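The three-phase structure described in the contributions above can be illustrated with a minimal, framework-free sketch. It is not the authors' implementation: `feature_extractor` is a hypothetical stand-in for a convolutional block, the attention scoring rule (mean of the channel-wise average and maximum, passed through a sigmoid) is an assumption, and the names `spatial_attention`, `classify`, `forward`, and the `weights` matrix are introduced here only for illustration.

```python
import math


def feature_extractor(features, scale):
    """Hypothetical stand-in for a convolutional block: scale, then ReLU."""
    return [[[max(0.0, scale * v) for v in row] for row in channel]
            for channel in features]


def spatial_attention(features):
    """Reweight each spatial position by one sigmoid score shared by all channels."""
    num_channels = len(features)
    height, width = len(features[0]), len(features[0][0])
    attended = [[[0.0] * width for _ in range(height)]
                for _ in range(num_channels)]
    for i in range(height):
        for j in range(width):
            column = [features[c][i][j] for c in range(num_channels)]
            # Assumed scoring rule: mean of the channel-wise average and maximum.
            score = 0.5 * (sum(column) / num_channels + max(column))
            weight = 1.0 / (1.0 + math.exp(-score))  # sigmoid, always in (0, 1)
            for c in range(num_channels):
                attended[c][i][j] = features[c][i][j] * weight
    return attended


def classify(features, weights):
    """Global average pooling per channel, a linear layer, then softmax."""
    pooled = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0]))
              for ch in features]
    logits = [sum(w * p for w, p in zip(row, pooled)) for row in weights]
    peak = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def forward(image, weights):
    """Phase 1 and phase 2: extractor plus attention. Phase 3: classification."""
    stage1 = spatial_attention(feature_extractor(image, scale=1.0))
    stage2 = spatial_attention(feature_extractor(stage1, scale=2.0))
    return classify(stage2, weights)


# Toy input: 2 channels of 2 x 2 values, and 3 output classes.
image = [[[1.0, -2.0], [0.5, 3.0]],
         [[0.0, 1.0], [2.0, -1.0]]]
weights = [[0.2, -0.1], [0.0, 0.3], [-0.4, 0.1]]
probabilities = forward(image, weights)
```

In this sketch the attention weight is shared across channels at each spatial position, so the module changes where the network looks rather than which channel it uses. A real model would replace the placeholder extractor with learned convolutional layers and learn the attention scores.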

2. Related Work

3. Dataset

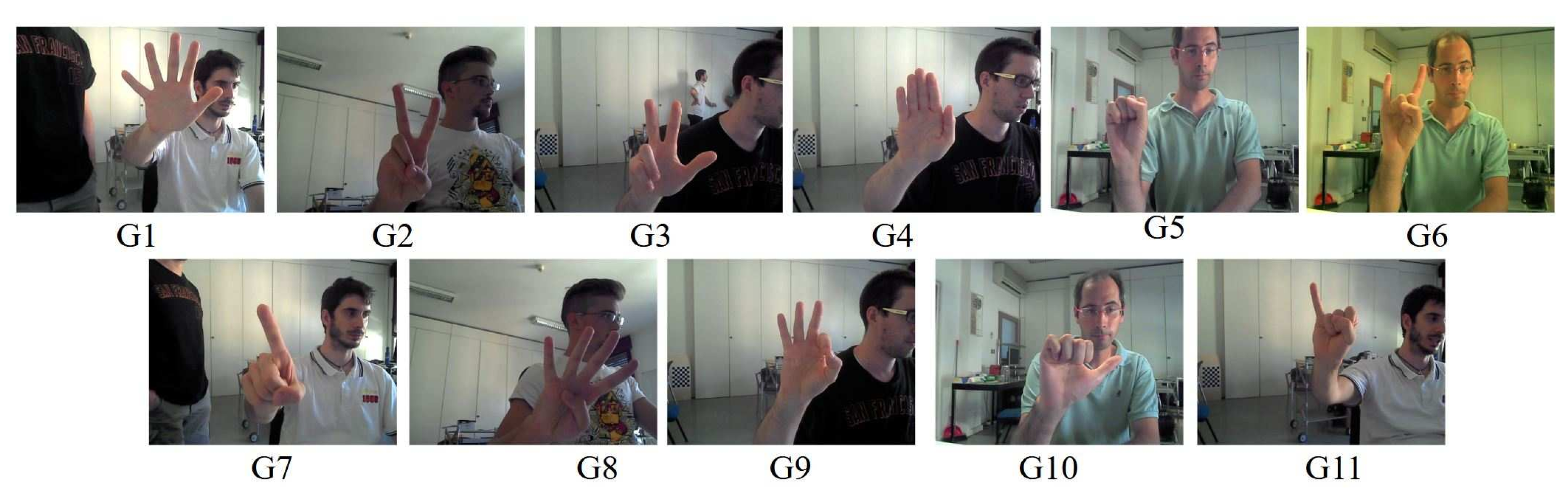

3.1. Creative Senz3D Dataset

3.2. NTU Dataset

3.3. Kinetic and Leap Motion Gestures

4. Proposed Method

4.1. First-Stage Feature Extractor

4.2. First-Stage Spatial Attention Module

4.3. Second-Stage Feature Extractor

4.4. Second-Stage Spatial Attention Module

4.5. Classification Architecture

4.6. Inference and Training

5. Experimental Results

5.1. Experimental Setting

5.2. Performance Results on the Senz3D and NTU Datasets

5.3. Performance Results of the Kinematic Dataset

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest



© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Miah, A.S.M.; Hasan, M.A.M.; Shin, J.; Okuyama, Y.; Tomioka, Y. Multistage Spatial Attention-Based Neural Network for Hand Gesture Recognition. Computers 2023, 12, 13. https://doi.org/10.3390/computers12010013
