Artificial Intelligence for Lymph Node Detection and Malignancy Prediction in Endoscopic Ultrasound: A Multicenter Study

Simple Summary

Abstract

1. Introduction

1.1. Background and Clinical Significance

1.2. Study Objectives

2. Materials and Methods

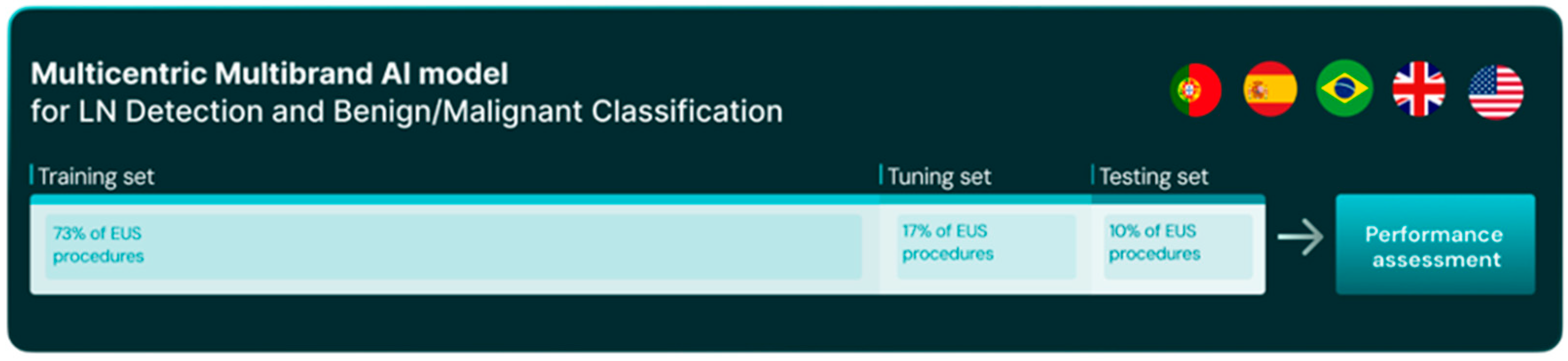

2.1. Study Design, Data Collection and Dataset Preparation

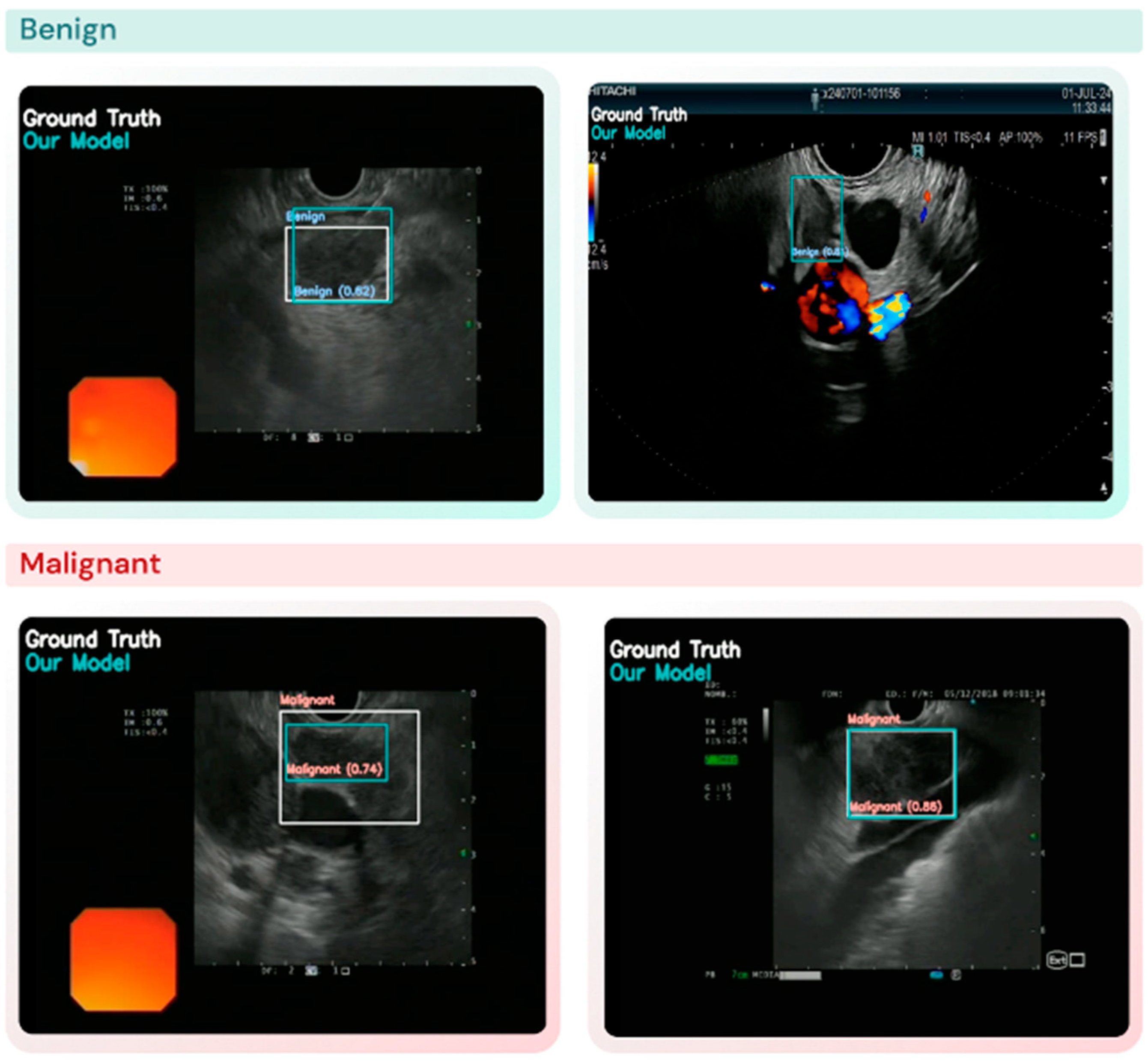

2.2. AI Model Selection and Development

2.3. Performance Evaluation: Lesion Detection and Diagnosis (Classification) Task

2.4. Statistical Analysis

3. Results

3.1. Dataset Characteristics

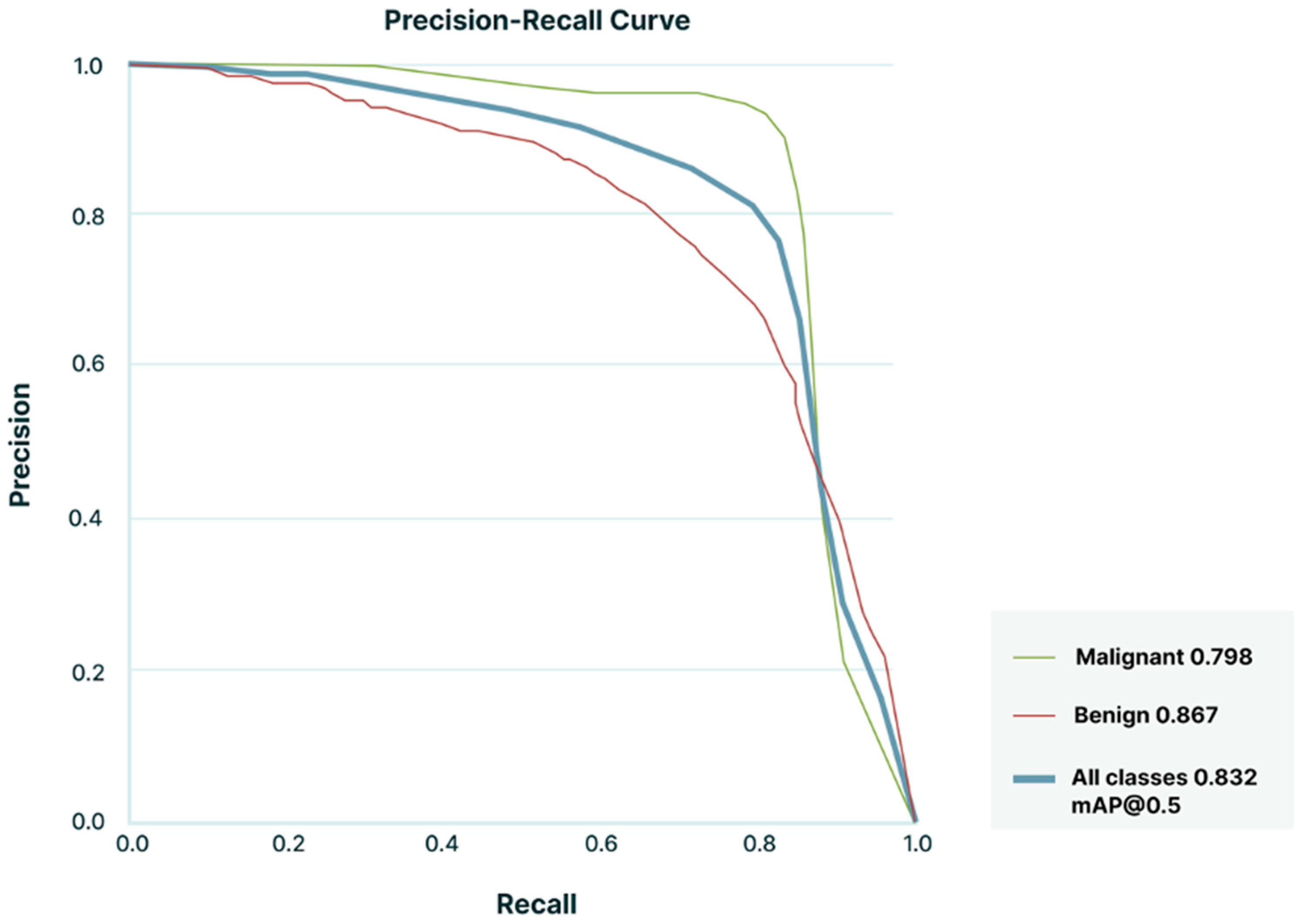

3.2. Lymph Node Detection and Classification Performance

4. Discussion

- Image Artifacts: Artifacts such as noise, shadowing, or low image resolution are known to contribute to the CNN’s errors in detecting and classifying LNs. These issues were more prevalent in images acquired under suboptimal conditions, such as during endoscope movement or with the probe in a challenging position.

- Class Imbalance: Despite the large number of patients (and LN images) included and the efforts to balance the dataset, a slight imbalance between malignant and benign LNs in certain subgroups may have introduced some performance bias.

- Annotation Challenges: Bounding-box annotation of lesions, while practical, may not capture the full morphological complexity of LNs, leaving the model with an incomplete training signal. Future iterations could address this limitation with pixel-level segmentation approaches.
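The bounding-box limitation can be made concrete with a small synthetic example. The sketch below assumes NumPy and models a lymph node as an idealized ellipse, then measures how much of the tightest enclosing box the lesion actually occupies. `ellipse_mask`, `mask_to_box`, and `box_fill_ratio` are hypothetical helpers for illustration, not part of the study's pipeline.

```python
import numpy as np


def ellipse_mask(h: int, w: int, cy: float, cx: float, ry: float, rx: float) -> np.ndarray:
    """Boolean mask of a filled, axis-aligned ellipse (a stand-in for an oval lymph node)."""
    yy, xx = np.mgrid[0:h, 0:w]
    return ((yy - cy) / ry) ** 2 + ((xx - cx) / rx) ** 2 <= 1.0


def mask_to_box(mask: np.ndarray) -> tuple[int, int, int, int]:
    """Tightest inclusive bounding box (y_min, x_min, y_max, x_max) around the mask."""
    ys, xs = np.nonzero(mask)
    return int(ys.min()), int(xs.min()), int(ys.max()), int(xs.max())


def box_fill_ratio(mask: np.ndarray) -> float:
    """Fraction of bounding-box pixels that actually belong to the lesion mask."""
    y0, x0, y1, x1 = mask_to_box(mask)
    box_area = (y1 - y0 + 1) * (x1 - x0 + 1)
    return float(mask.sum()) / box_area


if __name__ == "__main__":
    # Synthetic oval "node" (hypothetical geometry, not study data).
    node = ellipse_mask(200, 200, cy=100, cx=100, ry=40, rx=70)
    print(f"fill ratio: {box_fill_ratio(node):.3f}")
```

For an ideal ellipse the ratio tends towards π/4 (about 0.785), so a fifth or more of the box pixels are background that a box-level label still assigns to the node; less regular, non-convex nodes can fill even less of their box.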

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Set | Frames (n) | Exams (n) | Devices (n) | Benign frames (n) | Malignant frames (n) |
|---|---|---|---|---|---|
| Training | 44,745 | 60 | 5 | 20,922 | 23,823 |
| Tuning | 7,748 | 14 | 4 | 1,764 | 5,984 |
| Testing | 7,499 | 8 | 1 | 5,208 | 2,291 |
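The frame counts in the set-level table allow a quick check of class balance in each split. The sketch below copies those counts and, purely as an illustration, derives inverse-frequency class weights, a common loss-reweighting heuristic. The study does not state that it used such weights, and `SplitCounts` and `inverse_frequency_weights` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SplitCounts:
    name: str
    benign: int     # benign frames
    malignant: int  # malignant frames

    @property
    def total(self) -> int:
        return self.benign + self.malignant

    @property
    def malignant_fraction(self) -> float:
        return self.malignant / self.total


# Frame counts copied from the dataset table.
SPLITS = [
    SplitCounts("Training", 20_922, 23_823),
    SplitCounts("Tuning", 1_764, 5_984),
    SplitCounts("Testing", 5_208, 2_291),
]


def inverse_frequency_weights(split: SplitCounts) -> dict[str, float]:
    """Hypothetical per-class loss weights: total / (n_classes * class_count).
    Illustrative heuristic only; not confirmed as the study's method."""
    n_classes = 2
    return {
        "benign": split.total / (n_classes * split.benign),
        "malignant": split.total / (n_classes * split.malignant),
    }


if __name__ == "__main__":
    for s in SPLITS:
        print(f"{s.name:<9} frames={s.total:>6}  malignant={s.malignant_fraction:.1%}")
    print(inverse_frequency_weights(SPLITS[0]))
```

Applied to the table's counts, this shows that the training set is close to balanced (about 53% malignant frames), whereas the tuning set is about 77% malignant and the test set about 31% malignant, which bears on the class-imbalance limitation noted in the Discussion.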

| Center | Training exams (n) | Tuning/validation exams (n) | Testing exams (n) |
|---|---|---|---|
| Center 1 | 4 | 1 | 2 |
| Center 2 | 38 | 8 | 3 |
| Center 3 | 0 | 1 | 0 |
| Center 4 | 2 | 1 | 0 |
| Center 5 | 1 | 1 | 0 |
| Center 6 | 9 | 1 | 3 |
| Center 7 | 1 | 1 | 0 |
| Center 8 | 3 | 0 | 0 |
| Center 9 | 2 | 0 | 0 |

| Metric | Benign (%) [95% CI] | Malignant (%) [95% CI] |
|---|---|---|
| Detection rate | 69.1 [60.2–78.0] | 85.4 [80.4–90.3] |
| Sensitivity (Recall) | 96.8 [94.8–98.9] | 98.8 [98.5–99.2] |
| Specificity | 96.3 [95.1–97.6] | 99.0 [98.3–99.7] |
| PPV | 96.3 [95.0–97.6] | 99.0 [98.4–99.7] |
| NPV | 97.0 [95.0–98.9] | 98.8 [98.4–99.2] |
| Accuracy | 98.3 [97.6–99.1] | 98.3 [97.6–99.1] |
| F1-score | 96.6 [95.0–98.1] | 98.9 [98.4–99.4] |
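The proportion-type metrics in the performance table (sensitivity, specificity, PPV, NPV, accuracy) are ratios of confusion-matrix counts, and the F1-score is the harmonic mean of PPV and sensitivity. The sketch below spells out these definitions for one class treated as positive; per-class columns like those in the table are typically obtained by treating each class in turn as positive. The Wilson score interval is used here as one possible 95% CI. The source does not state in this section how its intervals were derived, and the counts in the example are hypothetical, not the study's data.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Confusion:
    """Binary confusion matrix for one class treated as 'positive'."""
    tp: int  # positives correctly predicted positive
    fp: int  # negatives wrongly predicted positive
    tn: int  # negatives correctly predicted negative
    fn: int  # positives wrongly predicted negative


def wilson_ci(successes: int, n: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a binomial proportion (~95% coverage for z = 1.96)."""
    p = successes / n
    denom = 1 + z**2 / n
    centre = (p + z**2 / (2 * n)) / denom
    half = z * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    return centre - half, centre + half


def proportion(k: int, n: int) -> tuple[float, float, float]:
    """Point estimate with Wilson bounds; NaN when the denominator is empty."""
    if n == 0:
        return math.nan, math.nan, math.nan
    lo, hi = wilson_ci(k, n)
    return k / n, lo, hi


def metrics(c: Confusion) -> dict[str, tuple[float, float, float]]:
    total = c.tp + c.fp + c.tn + c.fn
    return {
        "sensitivity": proportion(c.tp, c.tp + c.fn),
        "specificity": proportion(c.tn, c.tn + c.fp),
        "ppv": proportion(c.tp, c.tp + c.fp),
        "npv": proportion(c.tn, c.tn + c.fn),
        "accuracy": proportion(c.tp + c.tn, total),
    }


def f1_score(c: Confusion) -> float:
    """Harmonic mean of PPV (precision) and sensitivity (recall): 2TP / (2TP + FP + FN)."""
    denom = 2 * c.tp + c.fp + c.fn
    return 2 * c.tp / denom if denom else math.nan


if __name__ == "__main__":
    # Hypothetical counts for illustration only; not taken from the study.
    example = Confusion(tp=90, fp=5, tn=95, fn=10)
    for name, (est, lo, hi) in metrics(example).items():
        print(f"{name:<12} {est:.1%} [{lo:.1%}-{hi:.1%}]")
    print(f"{'f1':<12} {f1_score(example):.1%}")
```

The Wilson interval is chosen here because it stays inside [0, 1] and behaves reasonably for proportions near 100%, as most values in the table are; bootstrap intervals are a common alternative.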

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Agudo Castillo, B.; Mascarenhas Saraiva, M.; Pinto da Costa, A.M.M.; Ferreira, J.; Martins, M.; Mendes, F.; Cardoso, P.; Mota, J.; Almeida, M.J.; Afonso, J.; et al. Artificial Intelligence for Lymph Node Detection and Malignancy Prediction in Endoscopic Ultrasound: A Multicenter Study. Cancers 2025, 17, 3398. https://doi.org/10.3390/cancers17213398

Chicago/Turabian Style: Agudo Castillo, Belén, Miguel Mascarenhas Saraiva, António Miguel Martins Pinto da Costa, João Ferreira, Miguel Martins, Francisco Mendes, Pedro Cardoso, Joana Mota, Maria João Almeida, João Afonso, et al. 2025. "Artificial Intelligence for Lymph Node Detection and Malignancy Prediction in Endoscopic Ultrasound: A Multicenter Study" Cancers 17, no. 21: 3398. https://doi.org/10.3390/cancers17213398

APA Style: Agudo Castillo, B., Mascarenhas Saraiva, M., Pinto da Costa, A. M. M., Ferreira, J., Martins, M., Mendes, F., Cardoso, P., Mota, J., Almeida, M. J., Afonso, J., Ribeiro, T., Lera dos Santos, M. E., de Carvalho, M., Morís, M., García García de Paredes, A., de la Iglesia García, D., Fernández-Zarza, C. E., Pérez González, A., Kok, K.-S., ... González-Haba Ruiz, M. (2025). Artificial Intelligence for Lymph Node Detection and Malignancy Prediction in Endoscopic Ultrasound: A Multicenter Study. Cancers, 17(21), 3398. https://doi.org/10.3390/cancers17213398