Artificial Intelligence and Hysteroscopy: A Multicentric Study on Automated Classification of Pleomorphic Lesions

Simple Summary

Abstract

1. Introduction

2. Materials and Methods

2.1. Ethical Considerations

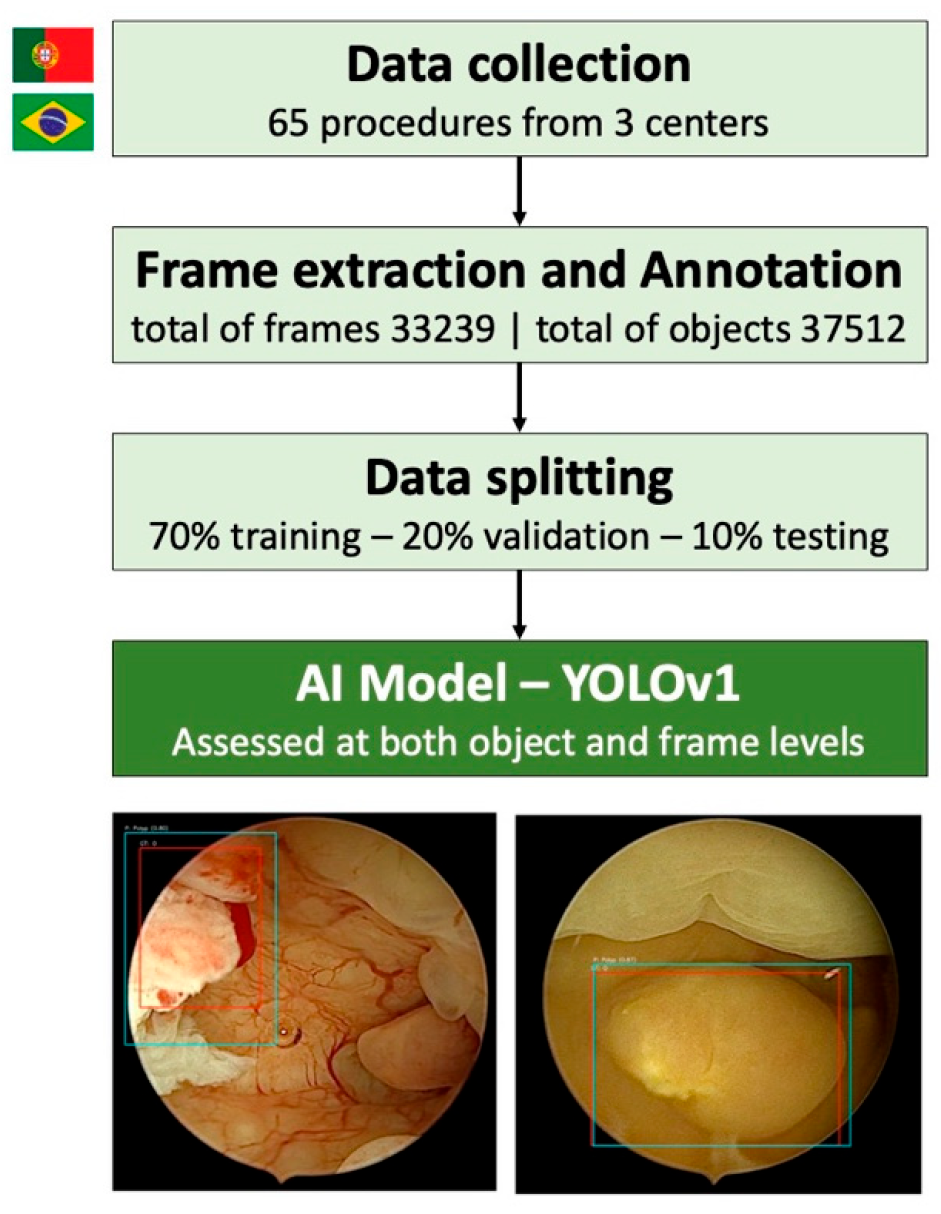

2.2. Study Design and Dataset Preparation

2.3. Model Development and Evaluation

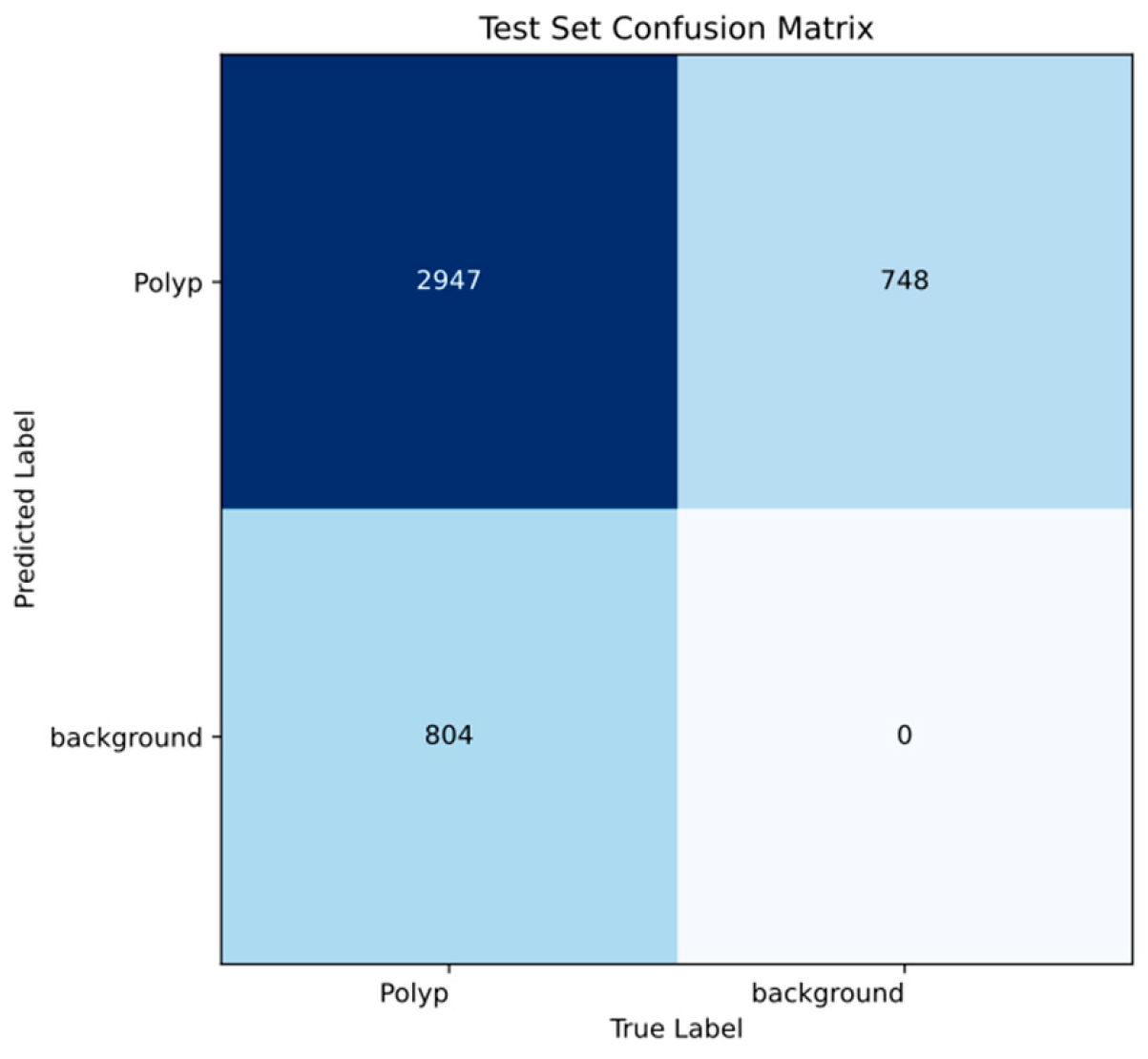

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Evaluation Level | Metric | Value |
|---|---|---|
| Object level | Recall | 0.96 |
|  | Precision | 0.95 |
|  | mAP50 | 0.98 |
|  | mAP50-95 | 0.77 |
| Frame level | Recall | 0.75 |
|  | Precision | 0.98 |
|  | Mean F1 score | 0.82 |
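The table combines object-level detection metrics (recall, precision, mAP50, mAP50-95) with frame-level metrics. The sketch below illustrates how these quantities are commonly defined; it is not the study's evaluation code. It assumes bounding boxes given as (x1, y1, x2, y2) corners and the COCO-style convention, which the metric names appear to follow: mAP50 is mean average precision at an intersection-over-union (IoU) threshold of 0.50, while mAP50-95 averages it over IoU thresholds from 0.50 to 0.95 in steps of 0.05. The helper names `iou`, `f1_from_pr`, `precision_recall_f1` and `IOU_THRESHOLDS` are hypothetical.

```python
"""Sketch: how the detection metrics in the table relate to one another.

Assumptions (not taken from the study): boxes are (x1, y1, x2, y2) corners,
and mAP50-95 averages AP over IoU thresholds 0.50, 0.55, ..., 0.95
(COCO-style convention).
"""


def iou(box_a, box_b):
    """Intersection over union of two axis-aligned boxes (x1, y1, x2, y2)."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def f1_from_pr(precision, recall):
    """F1 score: the harmonic mean of precision and recall."""
    total = precision + recall
    return 2 * precision * recall / total if total else 0.0


def precision_recall_f1(tp, fp, fn):
    """Precision, recall and F1 from true positive, false positive and false negative counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall, f1_from_pr(precision, recall)


# IoU thresholds averaged by mAP50-95; mAP50 uses only the first one.
IOU_THRESHOLDS = [round(0.50 + 0.05 * i, 2) for i in range(10)]

if __name__ == "__main__":
    # A prediction shifted by 10 px overlaps the ground truth with IoU of about 0.68,
    # so it counts as a hit at the 0.50 threshold but as a miss at 0.75 and above.
    gt, pred = (0, 0, 100, 100), (10, 10, 110, 110)
    overlap = iou(gt, pred)
    print(f"IoU = {overlap:.3f}")
    print("hit at:", [t for t in IOU_THRESHOLDS if overlap >= t])

    # Harmonic mean of the frame-level precision and recall reported in the table.
    print(f"F1(P=0.98, R=0.75) = {f1_from_pr(0.98, 0.75):.3f}")
```

Run as a script, this prints an IoU of 0.681, hits at thresholds 0.5 to 0.65, and an F1 of 0.850 for the frame-level precision and recall. A mean of per-group F1 scores (for example, per video or per lesion class) is not in general equal to the F1 of pooled precision and recall, so the table's mean F1 of 0.82 need not match this figure; this section does not state how the mean was taken.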

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Mascarenhas, M.; Peixoto, C.; Freire, R.; Cavaco Gomes, J.; Cardoso, P.; Castro, I.; Martins, M.; Mendes, F.; Mota, J.; Almeida, M.J.; et al. Artificial Intelligence and Hysteroscopy: A Multicentric Study on Automated Classification of Pleomorphic Lesions. Cancers 2025, 17, 2559. https://doi.org/10.3390/cancers17152559

Mascarenhas M, Peixoto C, Freire R, Cavaco Gomes J, Cardoso P, Castro I, Martins M, Mendes F, Mota J, Almeida MJ, et al. Artificial Intelligence and Hysteroscopy: A Multicentric Study on Automated Classification of Pleomorphic Lesions. Cancers. 2025; 17(15):2559. https://doi.org/10.3390/cancers17152559

Chicago/Turabian Style: Mascarenhas, Miguel, Carla Peixoto, Ricardo Freire, Joao Cavaco Gomes, Pedro Cardoso, Inês Castro, Miguel Martins, Francisco Mendes, Joana Mota, Maria João Almeida, et al. 2025. "Artificial Intelligence and Hysteroscopy: A Multicentric Study on Automated Classification of Pleomorphic Lesions" Cancers 17, no. 15: 2559. https://doi.org/10.3390/cancers17152559

APA Style: Mascarenhas, M., Peixoto, C., Freire, R., Cavaco Gomes, J., Cardoso, P., Castro, I., Martins, M., Mendes, F., Mota, J., Almeida, M. J., Silva, F., Gutierres, L., Mendes, B., Ferreira, J., Mascarenhas, T., & Zulmira, R. (2025). Artificial Intelligence and Hysteroscopy: A Multicentric Study on Automated Classification of Pleomorphic Lesions. Cancers, 17(15), 2559. https://doi.org/10.3390/cancers17152559