Real-Time Intraoperative Decision-Making in Head and Neck Tumor Surgery: A Histopathologically Grounded Hyperspectral Imaging and Deep Learning Approach

Simple Summary

Abstract

1. Introduction

2. Materials and Methods

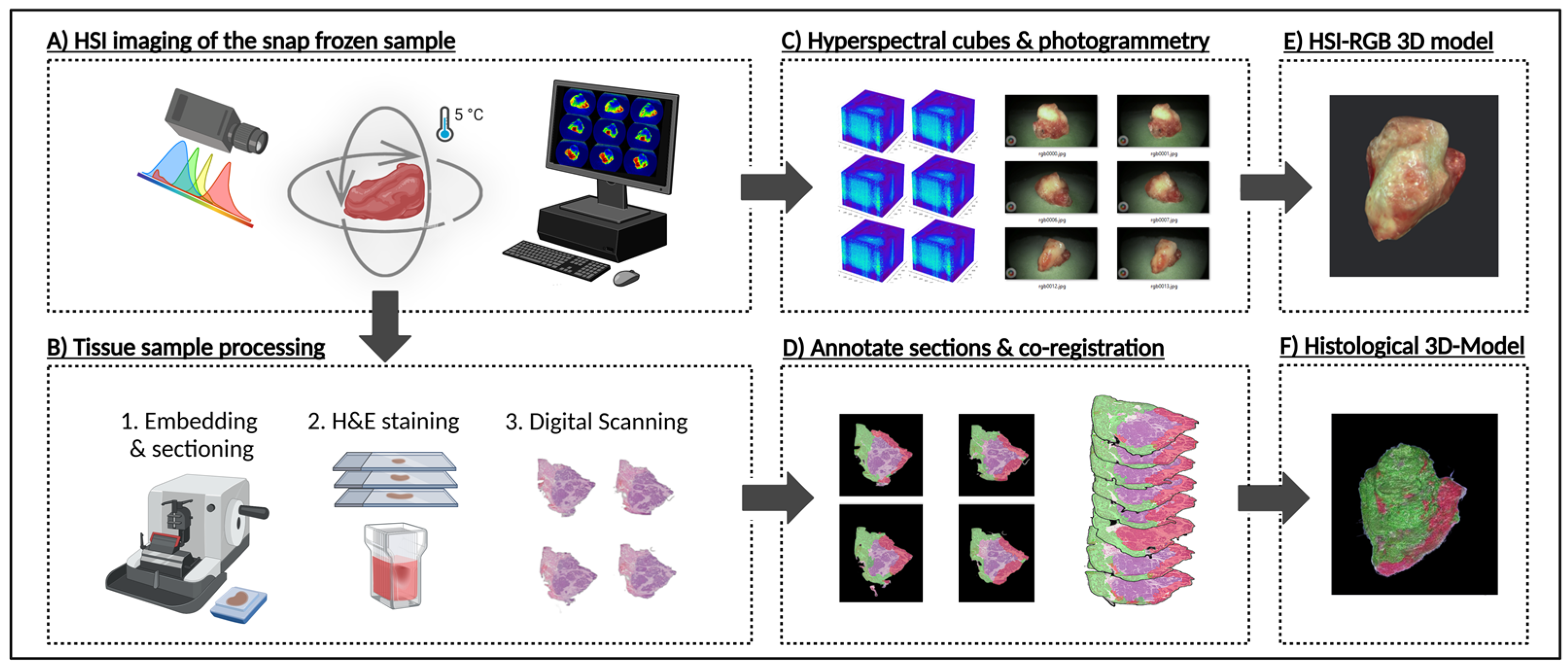

2.1. Sample Preparation for HSI 3D Modelling

2.2. Sample Preparation for 3D Histology Modelling

2.3. Deep Learning and CNN

2.4. Statistical Metrics for Evaluating Model Performance

3. Results

3.1. Performance Metrics of the Deep Learning Model

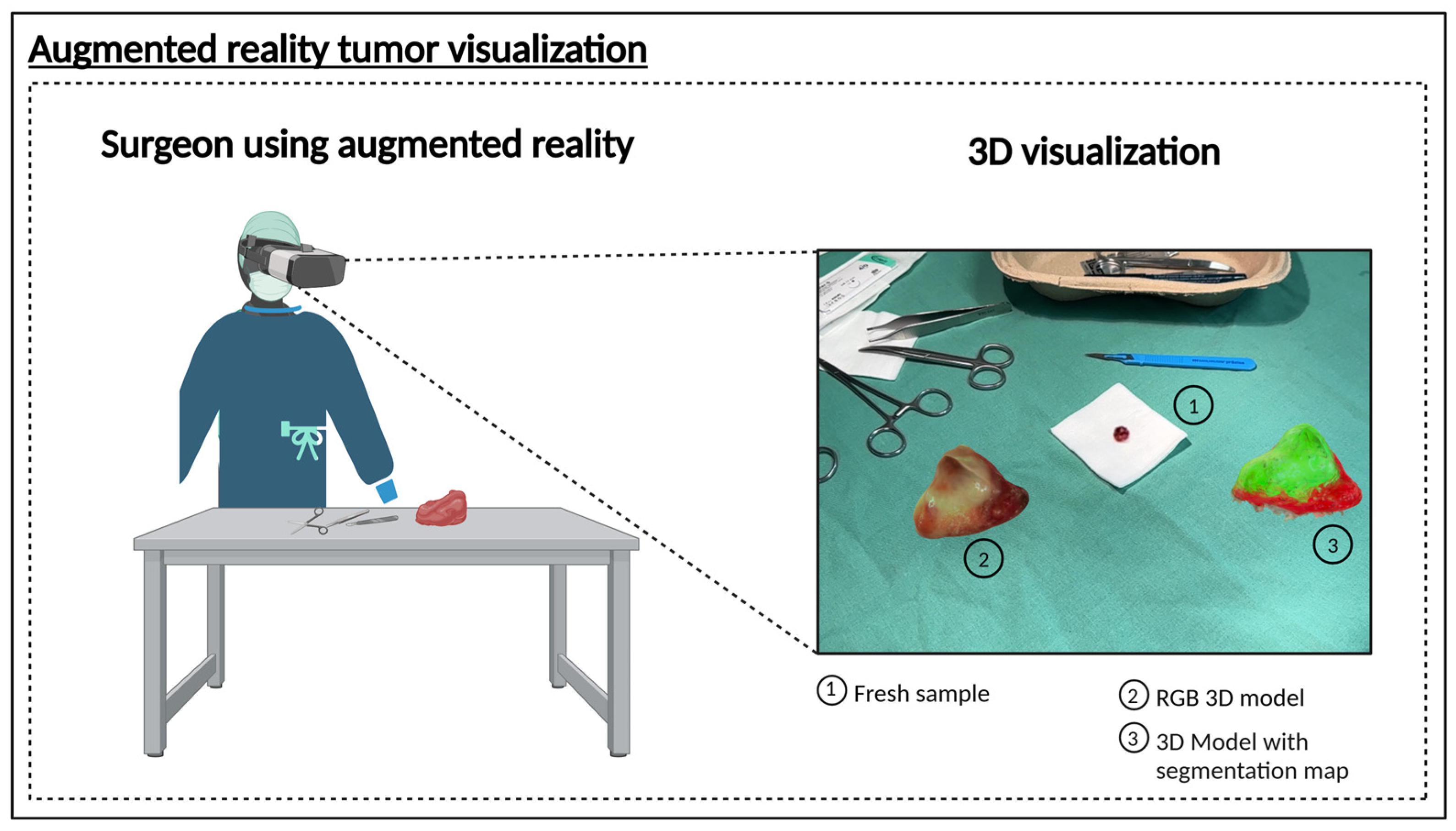

3.2. Proof of Concept 3D Hyperspectral Imaging System

4. Discussion

5. Conclusions

6. Patents

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| 3D | three-dimensional |
| AI | artificial intelligence |
| API | Application Programming Interface |
| AR | augmented reality |
| AUC | area under the curve |
| BMBF | Bundesministerium für Bildung und Forschung (Federal Ministry of Education and Research, Germany) |
| CE | Conformité Européenne (CE marking) |
| CNN | convolutional neural network |
| DFG | Deutsche Forschungsgemeinschaft (German Research Foundation) |
| DRKS | Deutsches Register Klinischer Studien (German Clinical Trials Register) |
| EU | European Union |
| FFPE | formalin-fixed paraffin-embedded |
| FN | false negative |
| FP | false positive |
| H&E | hematoxylin and eosin |
| HNSCC | head and neck squamous cell carcinoma |
| HSI | hyperspectral imaging |
| IKOSA | brand name of the automated annotation software platform |
| LED | light-emitting diode |
| MDR | Medical Device Regulation |
| MRI | magnetic resonance imaging |
| NanoZoomer | brand name of the digital microscope scanner |
| OCT | optical coherence tomography |
| PAT | photoacoustic tomography |
| RGB | red, green, blue |
| TIVITA™ | brand name of the hyperspectral imaging system |
| TN | true negative |
| TP | true positive |
| TNM | tumor-node-metastasis classification |
| U-Net | U-shaped convolutional neural network architecture |
| UwU-Net | U-within-U-Net architecture |


| ID | Localization | TNM | Histopathology |
|---|---|---|---|
| HSI-3D-1 | Oropharynx | pT4a N1 M0 | Squamous cell carcinoma |
| HSI-3D-2 | Oral cavity | pT4a N0 M0 | Squamous cell carcinoma |
| HSI-3D-3 | Hypopharynx | pT1 N2b M0 | Squamous cell carcinoma |
| HSI-3D-4 | Oropharynx | pT4a N3b M0 | Squamous cell carcinoma |
| HSI-3D-5 | Nose | pT4a N0 M0 | Squamous cell carcinoma |
| HSI-3D-6 | Oral cavity | pT3 N3b M0 | Squamous cell carcinoma |

| Model | Class | Accuracy | Recall | Precision | F1-Score |
|---|---|---|---|---|---|
| U-Net | Tumor | 0.9751 ± 0.0022 | 0.9325 ± 0.0245 | 0.9062 ± 0.0106 | 0.9062 ± 0.0106 |
| | Healthy | | 0.6857 ± 0.0820 | 0.7428 ± 0.0347 | 0.7428 ± 0.0347 |
| | Background | | 0.9976 ± 0.0005 | 0.9975 ± 0.0002 | 0.9975 ± 0.0002 |
| UwU-Net | Tumor | 0.9286 ± 0.0165 | 0.9069 ± 0.1118 | 0.8390 ± 0.0490 | 0.8390 ± 0.0490 |
| | Healthy | | 0.3678 ± 0.2585 | 0.4161 ± 0.2532 | 0.4161 ± 0.2532 |
| | Background | | 0.9921 ± 0.0049 | 0.9910 ± 0.0026 | 0.9910 ± 0.0026 |
| U-Net Transformer | Tumor | 0.9573 ± 0.0042 | 0.9141 ± 0.0345 | 0.8940 ± 0.0116 | 0.8940 ± 0.0116 |
| | Healthy | | 0.6570 ± 0.1193 | 0.6964 ± 0.0573 | 0.6964 ± 0.0573 |
| | Background | | 0.9951 ± 0.0012 | 0.9953 ± 0.0005 | 0.9953 ± 0.0005 |
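The table above reports one accuracy per model and recall, precision, and F1-score per class. As a rough illustration of how such values are conventionally derived from true-positive (TP), false-positive (FP), and false-negative (FN) pixel counts, the sketch below uses the standard textbook definitions. It is not the authors' evaluation code: the function name `per_class_metrics`, the lowercase class labels, and the toy label lists are all hypothetical, and how the reported ± values were aggregated is not stated in this section.

```python
from collections import Counter

# Class names follow the results table; the lowercase spelling is an assumption.
CLASSES = ("tumor", "healthy", "background")


def per_class_metrics(y_true, y_pred, classes=CLASSES):
    """Return overall accuracy and per-class recall, precision, and F1.

    y_true and y_pred are equal-length sequences of per-pixel class labels.
    """
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")

    pairs = Counter(zip(y_true, y_pred))  # (true, predicted) -> pixel count
    total = sum(pairs.values())
    correct = sum(n for (t, p), n in pairs.items() if t == p)

    report = {"accuracy": correct / total if total else 0.0}
    for c in classes:
        tp = pairs[(c, c)]
        fp = sum(n for (t, p), n in pairs.items() if p == c and t != c)
        fn = sum(n for (t, p), n in pairs.items() if t == c and p != c)
        recall = tp / (tp + fn) if tp + fn else 0.0
        precision = tp / (tp + fp) if tp + fp else 0.0
        # F1 is the harmonic mean of precision and recall.
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        report[c] = {"recall": recall, "precision": precision, "f1": f1}
    return report


# Toy example with made-up labels (not data from the study).
truth = ["tumor", "tumor", "tumor", "healthy", "healthy", "background"]
pred = ["tumor", "tumor", "healthy", "healthy", "tumor", "background"]
report = per_class_metrics(truth, pred)
```

Because F1 is the harmonic mean of precision and recall, it always lies between the two and equals them only when they coincide, which is a quick sanity check when reading per-class results.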

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Bali, A.; Wolter, S.; Pelzel, D.; Weyer, U.; Azevedo, T.; Lio, P.; Kouka, M.; Geißler, K.; Bitter, T.; Ernst, G.; Xylander, A.; Ziller, N.; Mühlig, A.; von Eggeling, F.; Guntinas-Lichius, O.; Pertzborn, D. Real-Time Intraoperative Decision-Making in Head and Neck Tumor Surgery: A Histopathologically Grounded Hyperspectral Imaging and Deep Learning Approach. Cancers 2025, 17, 1617. https://doi.org/10.3390/cancers17101617