Artificial Intelligence and Machine Learning in Ocular Oncology, Retinoblastoma (ArMOR): Experience with a Multiracial Cohort

Simple Summary

Abstract

1. Introduction

2. Methods

2.1. Data Collection

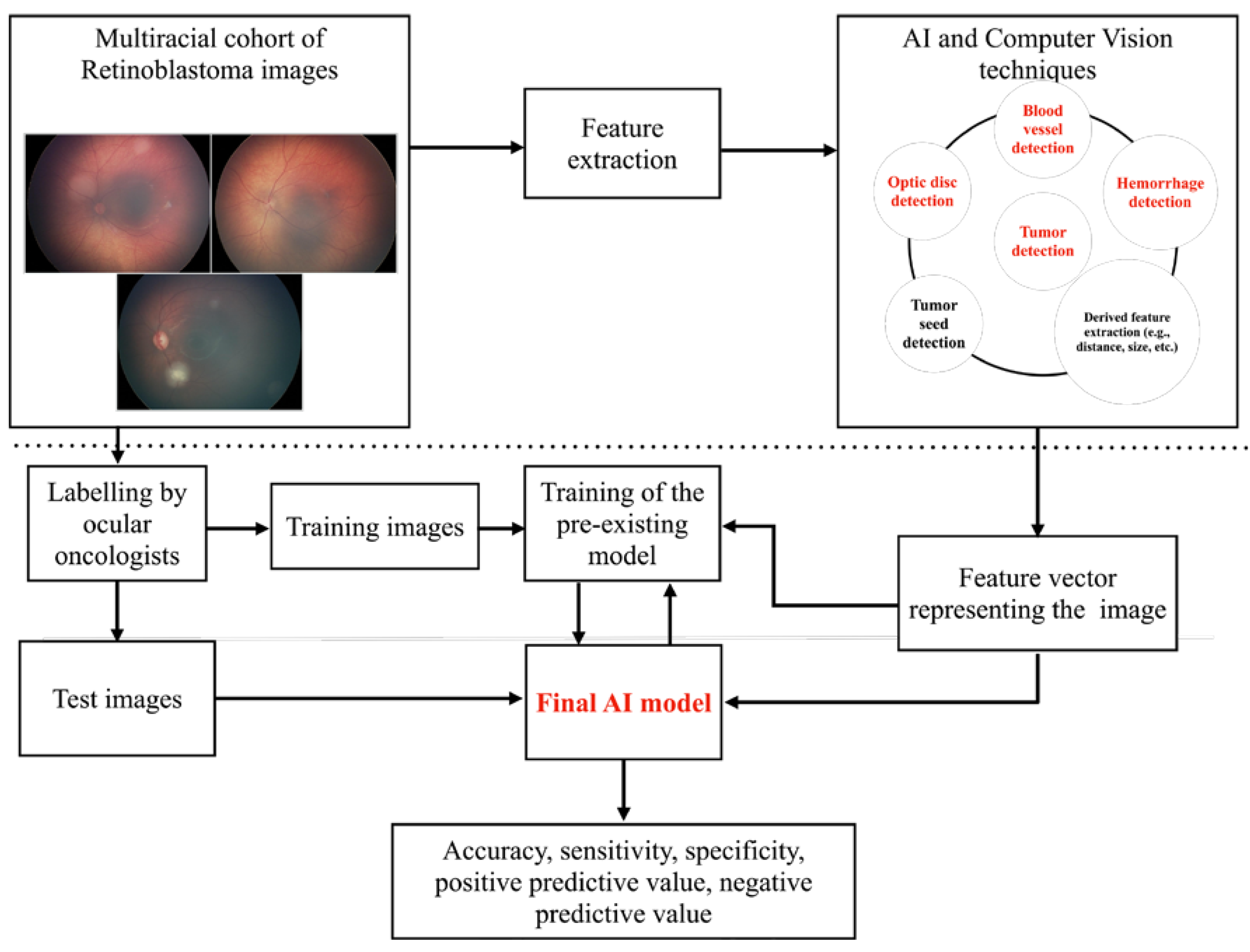

2.2. Feature Extraction

2.3. Identification of Specific Problems and Development of Solutions

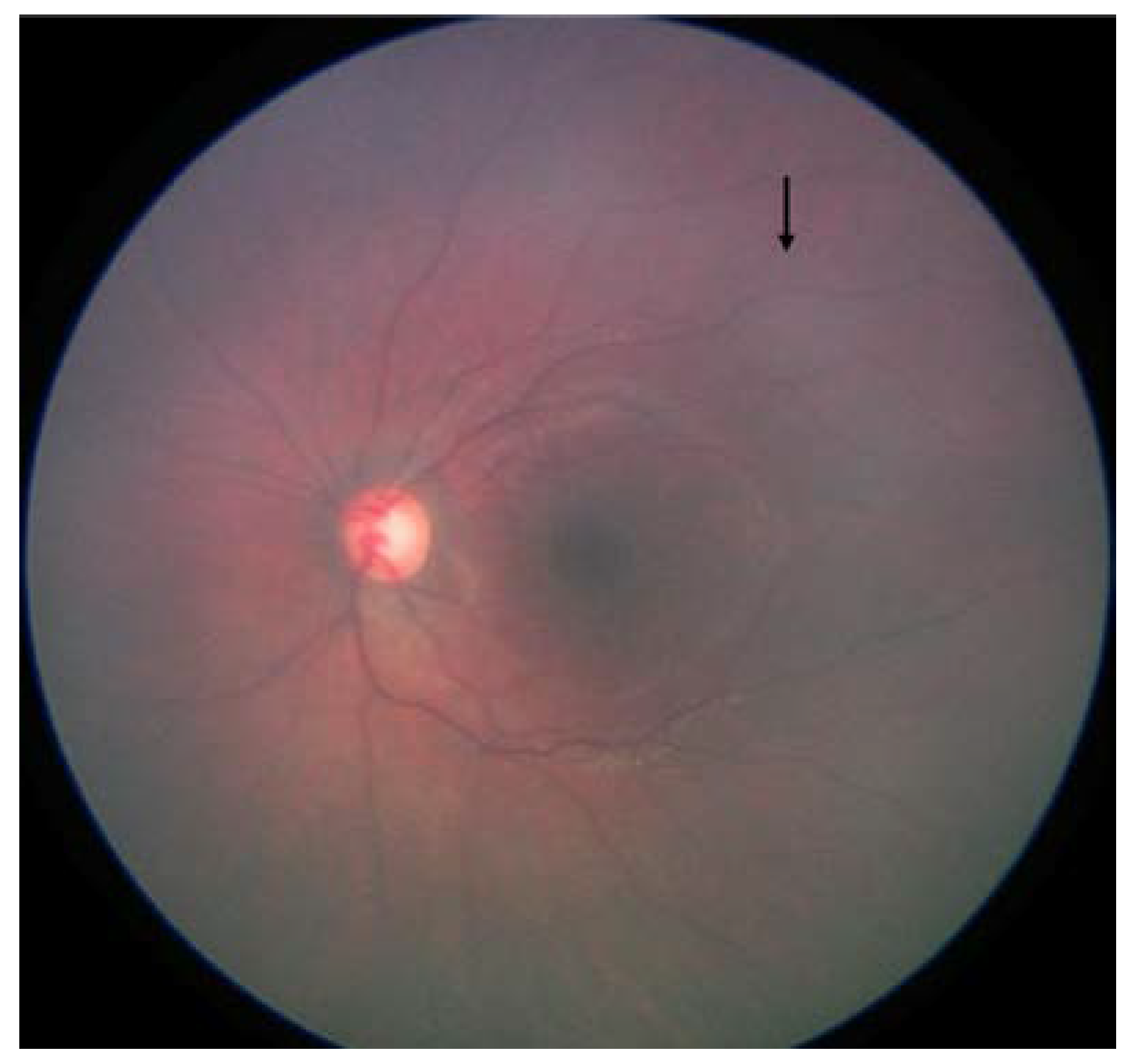

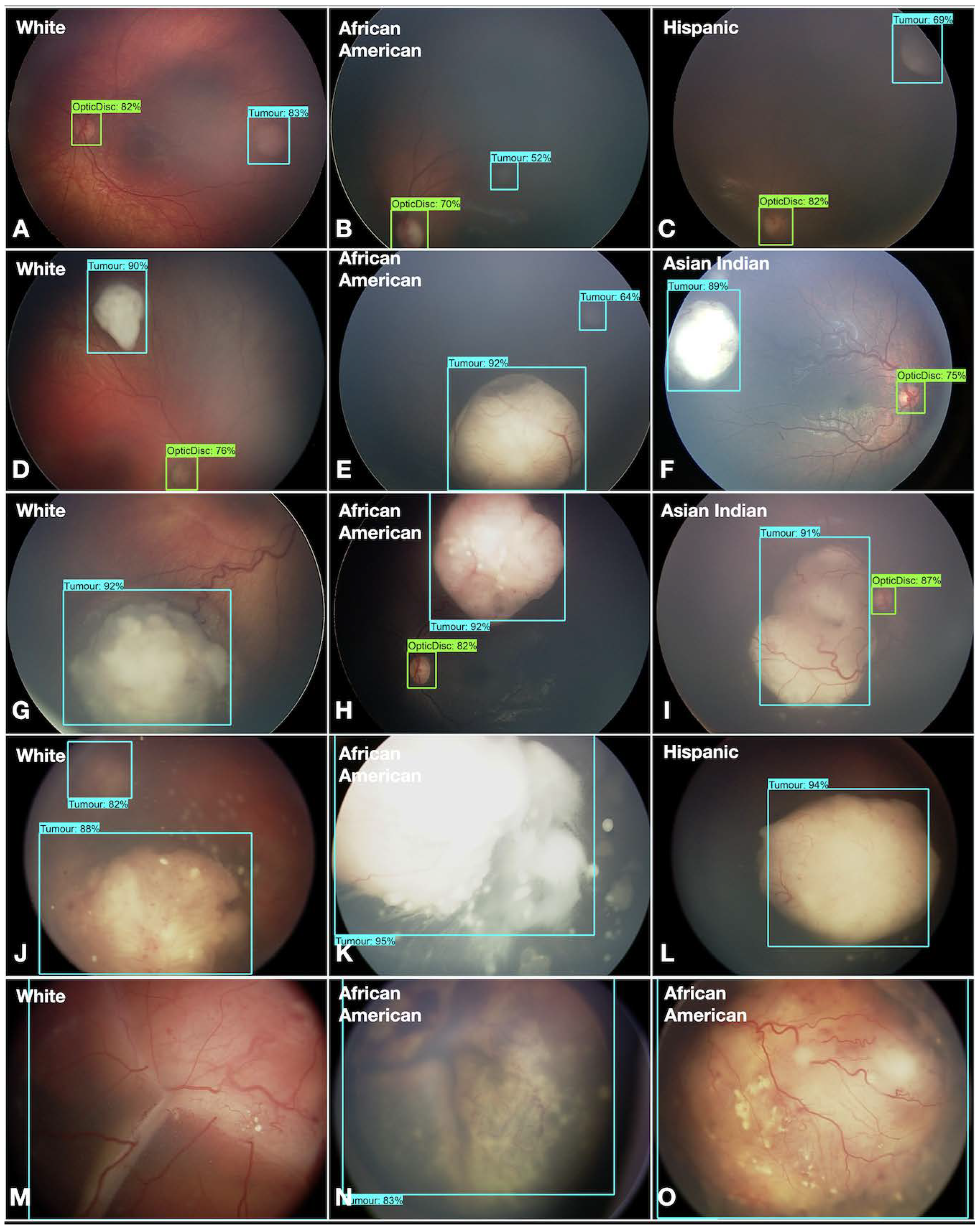

- Fundus color: Variation in fundus color across races significantly affected the AI/ML models for detecting optic discs and tumors. Larger tumors posed little difficulty, but smaller ones, especially those in groups A and B, often blended into the fundus color (Figure 2). We mitigated this problem by retraining, testing, and validating against the multiracial data, thereby avoiding data imbalance across categories.

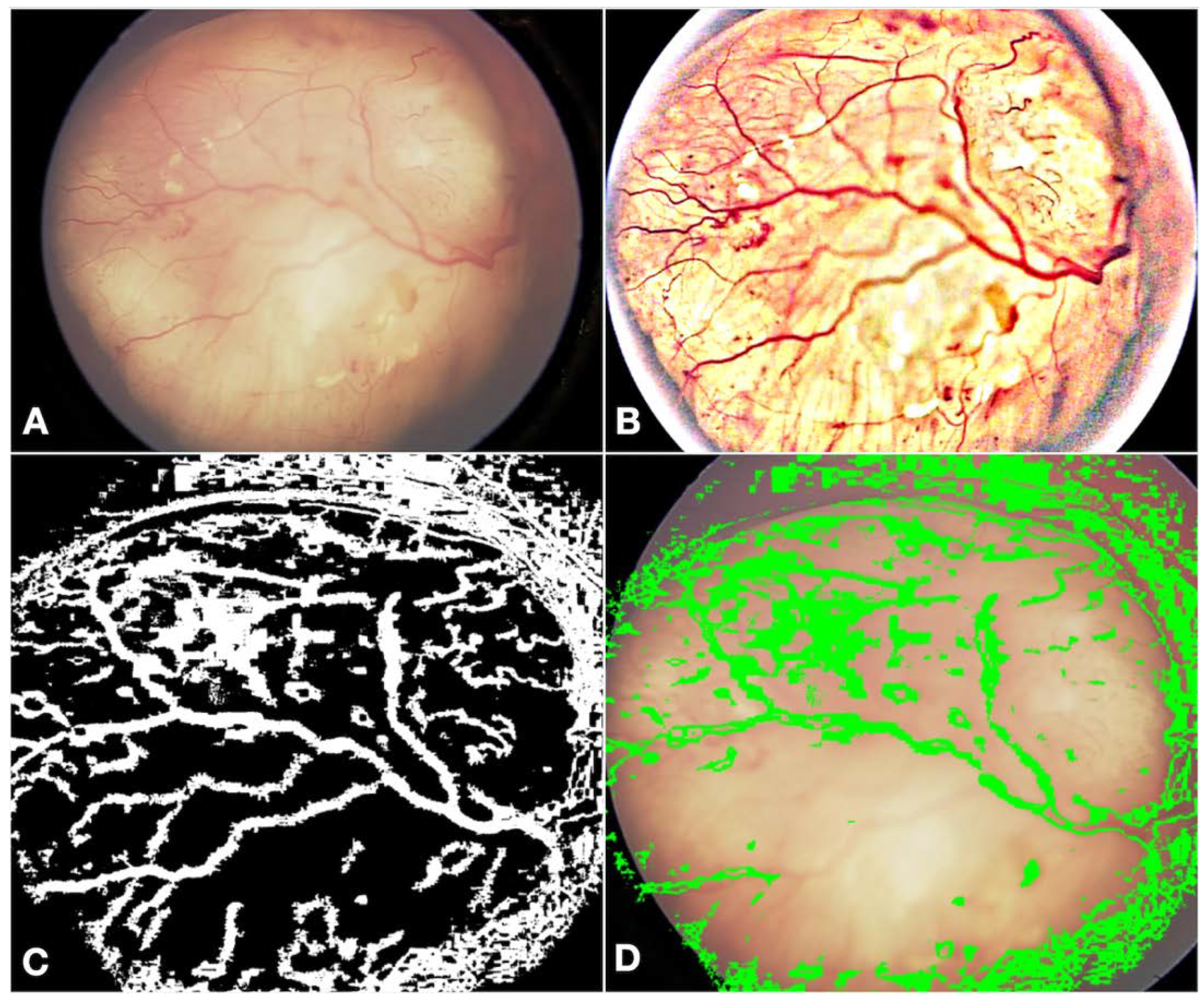

- Fundus contrast: Fundus images from Asian Indian and African American patients showed clear contrast between blood vessels, hemorrhages, and the background fundus color, whereas images from White patients did not. The default computer vision feature extraction model from the pre-existing algorithm proved too broad, resulting in incorrect assignment to groups C, D, and E; at times, the AI model labeled group A tumors as group D or E. To make the algorithm applicable to all races, we added a pre-processing step that performs color segmentation followed by a set of enhancements. Color segmentation and contrast enhancement were used only to extract features such as blood vessels and hemorrhages. For the other features, we retrained the models to capture the remaining variability, in line with standard practice described in the literature [26]. This pre-processing step transformed the multiracial fundus images into a common framework so that the blood vessel and hemorrhage detection models could be applied uniformly. Figure 3 illustrates the three-step process: pre-processing, identification of blood vessels and hemorrhages, and computation of classification features such as the area covered and whether the adjacent retina is visible.

- Retraining of the AI model: Because of the differences in fundus color and contrast, the AI model tended to assign inaccurate C, D, and E group labels in the multiracial dataset. To enable the model to learn these subtle differences from the dataset, we trained a new ML model on the features extracted by the new and modified models, as described in Figure 1 and Figure 2. A total of 14 extracted and derived features were used to build an XGBoost ML model for grouping RB.

- Assignment of a group label to an eye: Different images of the same eye vary in whether the tumor is present, in its size, and in its associated features, so the group labels assigned to individual images were expected to vary as well. Several factors were carefully considered when deriving the group label of an eye from the labels of its images. After grouping every image of an eye, we followed a two-step process, which we termed aggregation, to arrive at a patient-level diagnosis. The first step was to remove outliers. Within the large dataset there were subtle inadequacies, such as a lack of focus in only a small set of images of an eye. Instead of invalidating such eyes and limiting the analysis, the outlier step of the aggregation algorithm removed these images if (i) there were ≥5 such images in an eye and (ii) there was only one image with a group assigned ≥2 groups away from the rest of the images of that eye. The second step was to take the most frequent group label among the images of that eye, provided that its share exceeded a threshold of 65%; if there was no such clear majority, the label defaulted to the conservative choice of the highest group among the images.
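
The two-step aggregation described in the last item can be sketched in Python as follows. This is a minimal illustration and not the authors' implementation. Several points are assumptions: the severity order `Normal < A < B < C < D < E`, the reading of condition (i) as "the eye has at least 5 images", the reading of condition (ii) as "exactly one image lies at least 2 groups away from every other image", and the hypothetical function names `remove_outlier` and `aggregate_eye_label`.

```python
from collections import Counter

# Assumed severity order (not stated explicitly in the text).
GROUP_ORDER = ["Normal", "A", "B", "C", "D", "E"]
RANK = {group: i for i, group in enumerate(GROUP_ORDER)}


def remove_outlier(labels, min_images=5, min_distance=2):
    """Step 1: drop a single outlying image label, if the conditions hold.

    Assumed reading: the eye needs at least `min_images` images, and exactly
    one image must be at least `min_distance` groups away from all others.
    """
    if len(labels) < min_images:
        return list(labels)
    outliers = [
        i
        for i, label in enumerate(labels)
        if all(
            abs(RANK[label] - RANK[other]) >= min_distance
            for j, other in enumerate(labels)
            if j != i
        )
    ]
    if len(outliers) == 1:
        return [label for i, label in enumerate(labels) if i != outliers[0]]
    return list(labels)


def aggregate_eye_label(labels, threshold=0.65):
    """Step 2: majority label above the threshold, else the highest group."""
    if not labels:
        raise ValueError("an eye needs at least one labelled image")
    kept = remove_outlier(labels)
    label, count = Counter(kept).most_common(1)[0]
    if count / len(kept) > threshold:
        return label
    # No clear majority: conservative default to the highest group present.
    return max(kept, key=RANK.__getitem__)


# Hypothetical image-level labels for three eyes.
print(aggregate_eye_label(["B", "B", "B", "B", "E"]))  # outlier E is removed
print(aggregate_eye_label(["B", "B", "B", "C"]))       # 75% majority
print(aggregate_eye_label(["A", "B", "B", "D"]))       # no clear majority
```

Defaulting to the highest group when no label exceeds the threshold mirrors the conservative stance described above: when the image-level labels disagree, the eye is assigned the more advanced group.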

2.4. Performance Metrics and Statistical Analysis

3. Results

3.1. Dataset, Groups, and Distribution

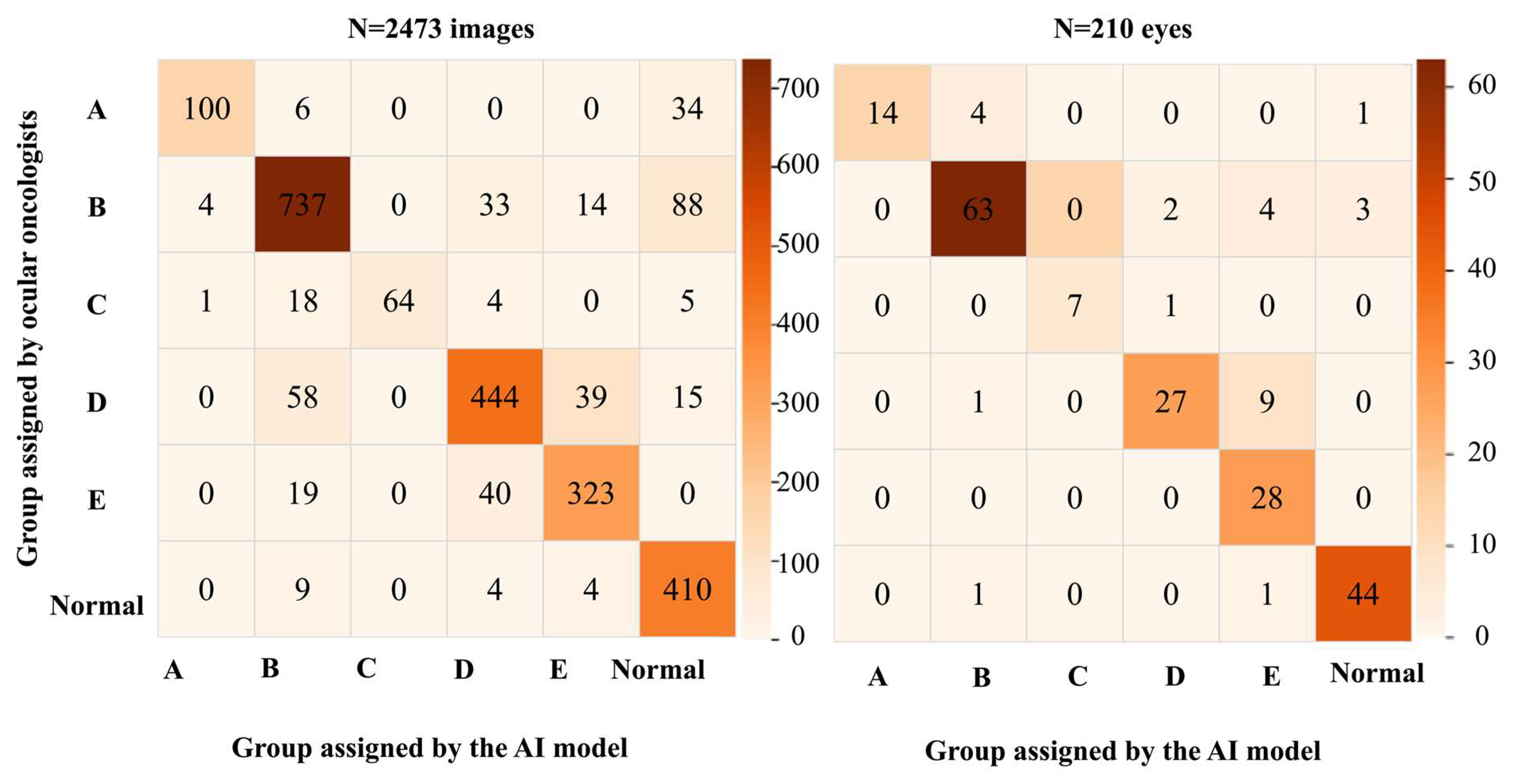

3.2. Performance Metrics

3.3. Analysis of the Sensitivity for Detecting RB by the AI Model Based on the Race and ICRB Group

4. Discussion

- We developed a generic model that can be easily deployed across a clinical practice with multiracial data, avoiding the need for multiple models. A single algorithm with one set of models will be versatile in deployment, retraining, and testing.

- Further, definitive prior information on race may not always be available for selecting a race-specific model. A common, race-agnostic model for multiracial data is therefore more versatile. We measured performance across the races and found that the proposed approach worked, which suggests that the AI/ML models learned uniformly across races.

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Ciller, C.; Ivo, S.; De Zanet, S.; Apostolopoulos, S.; Munier, F.L.; Wolf, S.; Thiran, J.P.; Cuadra, M.B.; Sznitman, R. Automatic segmentation of retinoblastoma in fundus image photography using convolutional neural networks. Investig. Ophthalmol. Vis. Sci. 2017, 58, 3332.

2. Andayani, U.; Siregar, B.; Sandri, W.E.; Muchtar, M.; Syahputra, M.; Fahmi, F.; Nasution, T. Identification of retinoblastoma using backpropagation neural network. J. Phys. Conf. Ser. 2019, 1235, 012093.

3. Jaya, I.; Andayani, U.; Siregar, B.; Febri, T.; Arisandi, D. Identification of retinoblastoma using the extreme learning machine. J. Phys. Conf. Ser. 2019, 1235, 012057.

4. Deva Durai, C.A.; Jebaseeli, T.J.; Alelyani, S.; Mubharakali, A. Early prediction and diagnosis of retinoblastoma using deep learning techniques. arXiv 2021, arXiv:2103.07622.

5. Strijbis, V.I.J.; de Bloeme, C.M.; Jansen, R.W.; Kebiri, H.; Nguyen, H.G.; de Jong, M.C.; Moll, A.C.; Bach-Cuadra, M.; de Graaf, P.; Steenwijk, M.D. Multi-view convolutional neural networks for automated ocular structure and tumor segmentation in retinoblastoma. Sci. Rep. 2021, 11, 14590.

6. Rahdar, A.; Ahmadi, M.J.; Naseripour, M.; Akhtari, A.; Sedaghat, A.; Hosseinabadi, V.Z.; Yarmohamadi, P.; Hajihasani, S.; Mirshahi, R. Semi-supervised segmentation of retinoblastoma tumors in fundus images. Sci. Rep. 2023, 13, 13010.

7. Jebaseeli, T.; David, D. Diagnosis of ophthalmic retinoblastoma tumors using 2.75 D CNN segmentation technique. In Computational Methods and Deep Learning for Ophthalmology; Academic Press: Cambridge, MA, USA, 2023; pp. 107–119.

8. Alruwais, N.; Obayya, M.; Al-Mutiri, F.; Assiri, M.; Alneil, A.A.; Mohamed, A. Advancing retinoblastoma detection based on binary arithmetic optimization and integrated features. PeerJ Comput. Sci. 2023, 22, e1681.

9. Aldughayfiq, B.; Ashfaq, F.; Jhanjhi, N.Z.; Humayun, M. Explainable AI for retinoblastoma diagnosis: Interpreting deep learning models with LIME and SHAP. Diagnostics 2023, 13, 1932.

10. Zhang, R.; Dong, L.; Li, R.; Zhang, K.; Li, Y.; Zhao, H.; Shi, J.; Ge, X.; Xu, X.; Jiang, L.; et al. Automatic retinoblastoma screening and surveillance using deep learning. Br. J. Cancer 2023, 129, 466–474.

11. Kaliki, S.; Vempuluru, V.S.; Ghose, N.; Patil, G.; Viriyala, R.; Dhara, K.K. Artificial intelligence and machine learning in ocular oncology: Retinoblastoma. Indian J. Ophthalmol. 2023, 71, 424–430.

12. Habeb, A.; Zhu, N.; Taresh, M.; Ali, A. Deep ocular tumor classification model using cuckoo search algorithm and Caputo fractional gradient descent. PeerJ Comput. Sci. 2024, 10, e1923.

13. Fabian, I.D.; Abdallah, E.; Abdullahi, S.U.; Abdulqader, R.A.; Adamou Boubacar, S.; Ademola-Popoola, D.S.; Adio, A.; Afshar, A.R.; Aggarwal, P.; Aghaji, A.E.; et al. Global retinoblastoma presentation and analysis by national income level. JAMA Oncol. 2020, 6, 685–695.

14. Kaliki, S.; Ji, X.; Zou, Y.; Sultana, S.; Taju Sherief, S.; Cassoux, N.; Diaz Coronado, R.Y.; Luis Garcia Leon, J.; López, A.M.Z.; Polyakov, V.G.; et al. Lag time between onset of first symptom and treatment of retinoblastoma: An international collaborative study of 692 patients from 10 countries. Cancers 2021, 13, 1956.

15. Shields, C.L.; Mashayekhi, A.; Au, A.K.; Czyz, C.; Leahey, A.; Meadows, A.T.; Shields, J.A. The International Classification of Retinoblastoma predicts chemoreduction success. Ophthalmology 2006, 113, 2276–2280.

16. Luca, A.R.; Ursuleanu, T.F.; Gheorghe, L.; Grigorovici, R.; Iancu, S.; Hlusneac, M.; Grigorovici, A. Impact of quality, type, and volume of data used by deep learning models in the analysis of medical images. Inform. Med. Unlocked 2022, 29, 100911.

17. Rajesh, A.E.; Olvera-Barrios, A.; Warwick, A.N.; Wu, Y.; Stuart, K.V.; Biradar, M.; Ung, C.Y.; Khawaja, A.P.; Luben, R.; Foster, P.J.; et al. EPIC Norfolk, UK Biobank Eye and Vision Consortium. Ethnicity is not biology: Retinal pigment score to evaluate biological variability from ophthalmic imaging using machine learning. medRxiv 2023.

18. Wolf-Schnurrbusch, U.E.K.; Röösli, N.; Weyermann, E.; Heldner, M.R.; Höhne, K.; Wolf, S. Ethnic differences in macular pigment density and distribution. Investig. Ophthalmol. Vis. Sci. 2007, 48, 3783–3787.

19. Li, X.; Wong, W.L.; Cheung, C.Y.; Cheng, C.; Ikram, M.K.; Li, J.; Chia, K.S.; Wong, T.Y. Racial differences in retinal vessel geometric characteristics: A multiethnic study in healthy Asians. Investig. Ophthalmol. Vis. Sci. 2013, 5, 3650–3656.

20. Seely, K.R.; McCall, M.; Ying, G.S.; Prakalapakorn, S.G.; Freedman, S.F.; Toth, C.A.; BabySTEPS Group. Ocular pigmentation impact on retinal versus choroidal optical coherence tomography imaging in preterm infants. Transl. Vis. Sci. Technol. 2023, 12, 7.

21. Davey, P.G.; Lievens, C.; Ammono-Monney, S. Differences in macular pigment optical density across four ethnicities: A comparative study. Ther. Adv. Ophthalmol. 2020, 12, 2515841420924167.

22. U.S. Office of Management and Budget’s Statistical Policy Directive No. 15: Standards for Maintaining, Collecting, and Presenting Federal Data on Race and Ethnicity. Available online: https://spd15revision.gov/ (accessed on 24 September 2024).

23. Vargas, E.A.; Scherer, L.A.; Fiske, S.T.; Barabino, G.A.; National Academies of Sciences, Engineering, and Medicine (Eds.) Population Data and Demographics in the United States. In Advancing Antiracism, Diversity, Equity, and Inclusion in STEMM Organizations: Beyond Broadening Participation; National Academies Press (US): Washington, DC, USA, 2023. Available online: https://www.ncbi.nlm.nih.gov/books/NBK593023/ (accessed on 24 September 2024).

24. Lopez, M.H.; Krogstad, J.M.; Passel, J.S. “Who Is Hispanic?”. Pew Research Center. Available online: https://www.pewresearch.org/fact-tank/2020/09/15/who-is-hispanic/ (accessed on 24 September 2024).

25. U.S. Department of Transportation. ‘Hispanic Americans’, Which Includes Persons of Mexican, Puerto Rican, Cuban, Dominican, Central or South American, or Other Spanish or Portuguese Culture or Origin, Regardless of Race. Available online: https://www.fhwa.dot.gov/hep/guidance/superseded/49cfr26.cfm (accessed on 24 September 2024).

26. Gaudio, A.; Smailagic, A.; Campilho, A. Enhancement of retinal fundus images via pixel color amplification. In Image Analysis and Recognition; Karray, F., Campilho, A., Wang, Z., Eds.; ICIAR 2020. Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2020; Volume 11663.

27. Jafarizadeh, A.; Maleki, S.F.; Pouya, P.; Sobhi, N.; Abdollahi, M.; Pedrammehr, S.; Lim, C.P.; Asadi, H.; Alizadehsani, R.; Tan, R.S.; et al. Current and future roles of artificial intelligence in retinopathy of prematurity. arXiv 2024, arXiv:2402.09975.

28. Ramanathan, A.; Athikarisamy, S.E.; Lam, G.C. Artificial intelligence for the diagnosis of retinopathy of prematurity: A systematic review of current algorithms. Eye 2023, 37, 2518–2526.

29. Heneghan, C.; Flynn, J.; O’Keefe, M.; Cahill, M. Characterization of changes in blood vessel width and tortuosity in retinopathy of prematurity using image analysis. Med. Image Anal. 2002, 6, 407–429.

30. Rao, D.P.; Savoy, F.M.; Tan, J.Z.E.; Fung, B.P.; Bopitiya, C.M.; Sivaraman, A.; Vinekar, A. Development and validation of an artificial intelligence based screening tool for detection of retinopathy of prematurity in a South Indian population. Front. Pediatr. 2023, 11, 1197237.

31. Cha, K.; Woo, H.K.; Park, D.; Chang, D.K.; Kang, M. Effects of background colors, flashes, and exposure values on the accuracy of a smartphone-based pill recognition system using a deep convolutional neural network: Deep learning and experimental approach. JMIR Med. Inform. 2021, 9, e26000.

32. Coyner, A.S.; Singh, P.; Brown, J.M.; Ostmo, S.; Chan, R.V.P.; Chiang, M.F.; Kalpathy-Cramer, J.; Campbell, J.P.; Imaging and Informatics in Retinopathy of Prematurity Consortium. Association of biomarker-based artificial intelligence with risk of racial bias in retinal images. JAMA Ophthalmol. 2023, 141, 543–552.

| Learning Model | Number of Images Labelled for Training + Validation + Test of the AI Model (n) | Number of Images Used for Training (n) | Number of Images Used for Cross-Validation (n) | Number of Images Used for Test (n) |
|---|---|---|---|---|
| Deep learning (multilabel classification: optic disc + tumor) | 784 | 501 | 126 | 157 |
| Deep learning (seeds) | 132 | 84 | 21 | 27 |
| Final AI model (XGBoost model) | 2473 | 1582 | 396 | 495 |
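
The counts in the table above are consistent with holding out 20% of the labelled images for testing and then 20% of the remainder for cross-validation, rounding up each time. The text does not state the splitting procedure, so this is an inference from the numbers and not the authors' documented method. The short Python check below, with the hypothetical helper `split_sizes`, reproduces all three rows under that assumption.

```python
def ceil_div(n, d):
    """Integer ceiling division, avoiding floating-point rounding."""
    return -(-n // d)


def split_sizes(n_labelled):
    """Assumed scheme: 20% test, then 20% of the rest for cross-validation."""
    n_test = ceil_div(n_labelled, 5)
    remaining = n_labelled - n_test
    n_cv = ceil_div(remaining, 5)
    n_train = remaining - n_cv
    return n_train, n_cv, n_test


for n in (784, 132, 2473):
    print(n, split_sizes(n))
# 784 (501, 126, 157)
# 132 (84, 21, 27)
# 2473 (1582, 396, 495)
```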

| ICRB Group | Images: W | Images: AA | Images: H | Images: AI | Images: AO | Images: NA | Images: All Races | Eyes: W | Eyes: AA | Eyes: H | Eyes: AI | Eyes: AO | Eyes: NA | Eyes: All Races |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Normal | 328 | 72 | 13 | 5 | 6 | 3 | 427 | 31 | 10 | 2 | 1 | 1 | 1 | 46 |
| A | 120 | 17 | 1 | 0 | 0 | 2 | 140 | 16 | 2 | 0 | 0 | 0 | 1 | 19 |
| B | 705 | 125 | 22 | 15 | 0 | 9 | 876 | 57 | 9 | 1 | 2 | 0 | 3 | 72 |
| C | 70 | 6 | 0 | 16 | 0 | 0 | 92 | 5 | 1 | 0 | 2 | 0 | 0 | 8 |
| D | 394 | 118 | 28 | 13 | 3 | 0 | 556 | 25 | 8 | 2 | 1 | 1 | 0 | 37 |
| E | 276 | 84 | 13 | 0 | 9 | 0 | 382 | 19 | 7 | 1 | 0 | 1 | 0 | 28 |
| All groups | 1893 | 422 | 77 | 49 | 18 | 14 | 2473 | 153 | 37 | 6 | 6 | 3 | 5 | 210 |

W: White; AA: African American; H: Hispanic; AI: Asian Indian; AO: Asian Oriental; NA: not available.

| Metric | Detection of RB: images (N = 2473) | Detection of RB: eyes (n = 210) | Normal: images (N = 427) | Normal: eyes (n = 46) | A: images (N = 140) | A: eyes (n = 19) | B: images (N = 876) | B: eyes (n = 72) | C: images (N = 92) | C: eyes (n = 8) | D: images (N = 556) | D: eyes (n = 37) | E: images (N = 382) | E: eyes (n = 28) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Ac | 94% | 97% | 94% | 97% | 98% | 98% | 90% | 93% | 99% | >99% | 92% | 94% | 95% | 93% |
| Sn | 93% | 98% | 96% | 96% | 71% | 74% | 84% | 88% | 70% | 88% | 80% | 73% | 85% | 100% |
| Sp | 96% | 96% | 93% | 98% | >99% | 100% | 93% | 96% | 100% | 100% | 96% | 98% | 97% | 92% |
| PPV | 99% | 99% | 74% | 92% | 95% | 100% | 87% | 91% | 100% | 100% | 85% | 90% | 85% | 67% |
| NPV | 74% | 92% | 99% | 99% | 98% | 97% | 91% | 94% | 99% | >99% | 94% | 94% | 97% | 100% |

Ac: accuracy; Sn: sensitivity; Sp: specificity; PPV: positive predictive value; NPV: negative predictive value.
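
For reference, the five metrics in the table above follow from the counts of a binary confusion matrix. The sketch below uses the standard definitions. It assumes that each column is evaluated as a binary problem (for example, one group versus all others), which this excerpt does not state. The function name `binary_metrics` and the counts in the usage line are hypothetical and are not taken from the study.

```python
import math


def _ratio(numerator, denominator):
    """Return numerator / denominator, or NaN when the denominator is zero."""
    return numerator / denominator if denominator else math.nan


def binary_metrics(tp, fp, tn, fn):
    """Standard binary classification metrics from confusion-matrix counts."""
    return {
        "Ac": _ratio(tp + tn, tp + fp + tn + fn),  # accuracy
        "Sn": _ratio(tp, tp + fn),                 # sensitivity (recall)
        "Sp": _ratio(tn, tn + fp),                 # specificity
        "PPV": _ratio(tp, tp + fp),                # positive predictive value
        "NPV": _ratio(tn, tn + fn),                # negative predictive value
    }


# Hypothetical counts, for illustration only.
metrics = binary_metrics(tp=90, fp=20, tn=80, fn=10)
print({name: round(value, 3) for name, value in metrics.items()})
```

PPV and NPV depend on how common the positive class is, so a small group can show a low PPV even when its sensitivity and specificity are high.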

| Race | Normal (n) | A (n) | B (n) | C (n) | D (n) | E (n) | Total (n) |
|---|---|---|---|---|---|---|---|
| White (n = 153) | 94% (31) | 75% (16) | 88% (57) | 80% (5) | 68% (25) | 100% (19) | 86% (153) |
| African American (n = 37) | 100% (10) | 50% (2) | 89% (9) | 100% (1) | 75% (8) | 100% (7) | 89% (37) |
| Asian Indian (n = 6) | 100% (1) | na | 100% (2) | 100% (2) | 100% (1) | na | 100% (6) |
| Asian Oriental (n = 3) | 100% (1) | na | na | na | 100% (1) | 100% (1) | 100% (3) |
| Hispanic (n = 6) | 100% (2) | na | 100% (1) | na | 100% (2) | 100% (1) | 100% (6) |
| Not available (n = 5) | 100% (1) | 100% (1) | 67% (3) | na | na | na | 80% (5) |
| Total (n = 210) | 96% (46) | 74% (19) | 88% (72) | 88% (8) | 73% (37) | 100% (28) | 87% (210) |
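
The Total column in the table above appears to be the eye-weighted mean of the per-group percentages in each row. This is an inference from the published numbers and not a stated method. Because the inputs are already rounded, the check below can only confirm agreement to the nearest percent. The helper name `weighted_total` is hypothetical.

```python
def weighted_total(percent_and_eyes):
    """Eye-weighted mean of (percentage, number of eyes) pairs, in whole percent.

    Groups marked "na" in the table (no eyes) are simply left out.
    """
    total_eyes = sum(n for _, n in percent_and_eyes)
    weighted = sum(p / 100 * n for p, n in percent_and_eyes)
    return round(100 * weighted / total_eyes)


# Rows copied from the table: (percentage, eyes) for Normal, A, B, C, D, E.
white = [(94, 31), (75, 16), (88, 57), (80, 5), (68, 25), (100, 19)]
african_american = [(100, 10), (50, 2), (89, 9), (100, 1), (75, 8), (100, 7)]
all_races = [(96, 46), (74, 19), (88, 72), (88, 8), (73, 37), (100, 28)]

print(weighted_total(white))             # 86
print(weighted_total(african_american))  # 89
print(weighted_total(all_races))         # 87
```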

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Vempuluru, V.S.; Viriyala, R.; Ayyagari, V.; Bakal, K.; Bhamidipati, P.; Dhara, K.K.; Ferenczy, S.R.; Shields, C.L.; Kaliki, S. Artificial Intelligence and Machine Learning in Ocular Oncology, Retinoblastoma (ArMOR): Experience with a Multiracial Cohort. Cancers 2024, 16, 3516. https://doi.org/10.3390/cancers16203516
